Abstract

By exploiting an analogy between population genetics and statistical mechanics, we study the evolution of a polygenic trait under stabilizing selection, mutation and genetic drift. This requires us to track only four macroscopic variables, instead of the distribution of all the allele frequencies that influence the trait. These macroscopic variables are the expectations of: the trait mean and its square, the genetic variance, and of a measure of heterozygosity, and are derived from a generating function that is in turn derived by maximizing an entropy measure. These four macroscopics are enough to accurately describe the dynamics of the trait mean and of its genetic variance (and in principle of any other quantity). Unlike previous approaches that were based on an infinite series of moments or cumulants, which had to be truncated arbitrarily, our calculations provide a well-defined approximation procedure. We apply the framework to abrupt and gradual changes in the optimum, as well as to changes in the strength of stabilizing selection. Our approximations are surprisingly accurate, even for systems with as few as five loci. We find that when the effects of drift are included, the expected genetic variance is hardly altered by directional selection, even though it fluctuates in any particular instance. We also find hysteresis, showing that even after averaging over the microscopic variables, the macroscopic trajectories retain a memory of the underlying genetic states.

Keywords: evolution, quantitative genetics, statistical mechanics, entropy, epistasis, genetic variance

1. Introduction

Quantitative genetics aims to explain and describe the response of a heritable trait to selection, but in the classical approach, dispensing with the genetic details that account for that heritability [1–3]. Yet, population genetics tells us that such responses cannot be predicted without knowing those genetic details [2,4,5]. Paradoxically, we can indeed make such predictions from empirical measurements of traits, but only by assuming that the genetic variance (the variance of the trait caused by genetic differences) is fixed: changes in variance owing to selection have been hard to predict (e.g. [6,7]).

Directional selection (DS) will usually allow a quick response at the cost of the depletion of genetic variance, which will ultimately be owing to mutation around a unique optimal genotype [5, ch. IV]. However, most traits are thought to be under some kind of stabilizing selection (SS), in which case the genetic variance will be reduced by fixing any one of the many genotypes that match the optimal value [8–10]. In order to understand the full stochastic model, which is a polygenic trait under SS, mutation and drift—the goal of this article—we first summarize the theory for infinite populations [8,10,11], where random fluctuations are absent, and evolution is deterministic.

For simplicity, we assume that each gene has only two alternative states (termed alleles). When mutation (μ) is much weaker than the selection (s) on each allele, the trait mean converges to the optimum, because any genetic locus can be almost fixed for either allele (i.e. allele frequencies are close to 0 or 1). Thus, there will be many stable equilibria for the whole set of loci, at which any genotype that matches the optimum is near fixation—there are degenerate genetic states for a trait mean matching the optimum. Assuming that there are enough genes (n≫1), the genetic variance is then roughly 4nμ/s [4]. When this variance is much greater than the maximum that can be contributed by any one locus [10], its increase owing to a substitution at any one locus is much smaller than the existing variance, as we expect to be the case for a trait that is influenced by many genes. In this regime, there are many sub-optimal stable equilibria, for which the genotype that is close to fixation does not quite match the optimum. For example, if the optimum is at zero, then with 100 loci, there are (100!/(50!)2) ∼ 1029 equilibria that match this precisely, but asymmetrical equilibria with 49 ‘−’ and 51 ‘+’ loci may also be stable. However, selection adjusts the frequencies of rare alleles with these asymmetrical combinations, such that the mean is still very close to the optimum, but the genetic variance is substantially higher, and the mean fitness is lower as a consequence [10].

Imagine that the population is initially at an ‘optimal’ equilibrium, which maximizes mean fitness. If the optimum now shifts, the population will stay near fixation for the original genotype, and so the genetic variance will inflate as allele frequencies adjust to keep the mean near the optimum. At some point, the equilibrium becomes unstable, one or more loci substitute, and the genetic variance drops abruptly. If all alleles have the same effect, as assumed so far, then variance will inflate at many loci, and so may become very high (figure 1). However, with unequal effects, the loci with smallest effect will substitute first, and the overall fluctuation will be much smaller.

Figure 1.

(a) Allele frequencies, (b) trait mean and (c) genetic variance plotted against time. A population is initially at equilibrium with stabilizing selection s = 0.05 towards zopt = 0 acting on an additive trait, with n = 100 loci of effect γ = 1; the mutation rate is μ = 0.002 per locus, which maintains a genetic variance of ν = 4nμγs = 16. The optimum then shifts abruptly to zopt = 20, and the mean responds almost immediately (b). The variance increases abruptly (c) as, the allele frequencies at all the ‘−’ loci increase substantially (d). However, this new state is unstable, and slight variations in the initial conditions cause some loci to shift down, and some to shift up. As a result, the genetic variance returns to its original value. The lower row shows snapshots of allele frequencies at times (d) 0, (e) 800 and (f) 3000 generations.

If the trait mean lags far behind the current optimum, we would expect to see erratic trajectories in an infinite population because of the following two points:

very rare alleles will increase, and will sweep through at an unpredictable time that depends on their initial frequency; and

the various—and phenotypically equivalent—combinations of alleles are reorganized when the phenotypic optimum takes a new value, transiently inflating the variance, as explained above.

We envisage three factors that smooth potentially erratic phenotypic paths. First, if the effects of alleles vary across loci, there is the opportunity to make smaller steps as the optimum changes, which alleviates pronounced and coincident changes in allele frequencies, and diminishes the excess variance as the phenotypic optimum moves. Second, if we average over all the possible genetic combinations that match the phenotypic optimum, then we expect that the speed and direction of the response to a change in optimum will be more regular. Third, if the population is finite, random drift will, on the one hand, homogenize the initial distribution of allele frequencies, alleviating point (i) above, and on the other, facilitate transitions between ‘adaptive peaks’, alleviating point (ii), so that the population can evolve without being trapped at local equilibria, as in Wright's [11] ‘shifting balance’. Also, note that under drift, plus weak mutation, allele frequencies p are distributed as approximately [p(1 − p)]−1, a special case in which the genetic variance stays constant under DS (since with this distribution, the increased variance owing to rare alleles that become common is balanced by the loss of variance from common alleles that become rare). Here, rare alleles replenish variance at the same rate as common alleles are fixed.

Measurable quantitative genetic variables are not sufficient to predict response to selection: the dynamics of the trait mean and of the genetic variance under selection depend on allele frequencies. We can use moments instead, but their dynamics depend on higher moments, and we end up requiring as many variables as allele frequencies [4,5,12–14]. In part, this problem arises from (i) and (ii) above. Surprisingly, including a third evolutionary process, random genetic drift, allows us to employ a different mathematical toolbox (from physics' statistical mechanics, SM) to make reliable predictions. Although we can no longer predict a single trajectory in detail, we can instead predict expectations over the ensemble of trajectories. Thus, we will focus on the second and third factors mentioned above, namely the averaging over allele frequencies that is caused by random genetic drift.

1.1. Statistical mechanics

The need to specify the detailed genetic state is avoided by the development of an analogy between SM and population genetics [15–19]. In these works, information entropy [20] was maximized under the assumption that any initial state was a priori equally probable. However, this choice of prior is not based on population genetics: we know that states are not equiprobable under random genetic drift, because in a finite population, fluctuations bias the neutral distribution towards the boundaries. Further work developed this idea in detail, tailoring an entropy measure which ensures that the equilibrium distribution matches the stationary solution to the diffusion equation [21,22]. This allows us to collapse an arbitrary number of degrees of freedom that cannot be accurately known (e.g. allele or genotype frequencies, central moments, cumulants, etc.) into a few variables, which approximate the whole stochastic system. The theory is built in two stages.

1.1.1. Maximum entropy

First, we observe that a measure of entropy is maximized at equilibrium, subject to constraints on the expectations of the macroscopic variables [21,23–26]. (Alternatively, we can define a ‘free fitness’ as the entropy divided by population size, plus the mean fitness, which is maximized at equilibrium under selection and drift; this is analogous to free energy in thermodynamics [22,27].) Although entropy is a concept alien to population genetics, it summarizes two fundamental aspects of the equilibrium distribution. On the one hand, it measures the degree of divergence of the distribution of the allele frequencies from a neutral base distribution. On the other hand, the entropy couples two sets of variables, the microscopic that in this case we take to be the allele frequencies, and the macroscopic that are the expectations of quantitative variables.

Generally speaking, the SM approach takes advantage of genetic drift in two ways. To start with, drift is expected to spread the trajectories relaxing the problem of trapping at local fitness optima, just as envisaged by Wright [28]. To follow, instead of calculating the values of metric characters themselves—these are stochastic variables—it makes predictions for their expectations.

Although we are free to apply any kind of selection, it is crucial that it acts only on the chosen macroscopic variables. That is, we cannot assume that selection will act on specific genotypes, because then, selection could favour arbitrary and improbable macroscopic states: the maximum entropy Ansatz would be contradicted, and would inevitably fail to give accurate predictions. For example, if we force the population to a specific set of allele frequencies, pi, then under DS s there would be sudden jumps at times ∼ (1/s) log(1/pi), as alleles reach high frequency.

The distribution of allele frequencies—like any statistical distribution—is formally a function of a set of parameters. In population genetics, it typically depends on the selection coefficients, mutation rate and population size, and the distribution of macroscopics can be calculated from these, using the diffusion approximation. This averaging over the microscopic variables has the fortunate consequence that the distribution of macroscopics, as well as their rates of evolution, do not explicitly depend on the allele frequencies, although their distribution is in fact estimated implicitly by maximizing the entropy. This averaging is not straightforward under SS, because there is epistasis for fitness, which fully couples the distribution of allele frequencies. However, the calculations can be greatly simplified because under the central limit theorem, the distribution of the macroscopic variables, conditioned on a particular value of the trait mean, will be approximately Gaussian. This will allow us to eliminate any explicit dependence on the allele frequencies, and gives workable expressions for the expectations.

1.1.2. Quasi-equilibrium

Second, we make a quasi-equilibrium approximation, which assumes that even during periods of change, the distribution of allele frequencies adopts a shape that takes the form of an equilibrium distribution, the parameters of which are chosen so that the distribution matches the current macroscopic variables.

In this framework, we can describe the state of the population by two complementary sets of variables: the expectations of the chosen macroscopics  , and the corresponding selective forces on these macroscopics. This is precisely analogous to the complementary set of variables (forces and variables of state) in statistical mechanics. Rather than working with the rates of evolution of the macroscopic variables, we can calculate the rates of change of the evolutionary forces. Consequently, we can find the expectations of the quantitative variables at any time, and thus know their evolutionary trajectory. If parameters change sufficiently slowly, the ensemble will be close to an equilibrium distribution, as with reversible changes to a physical system. When parameters change quickly, however, nothing ensures that the distribution will be close to some stationary distribution. Thus, the present work aims in part to verify this assumption, by comparing the results with numerical simulations. The mathematical details of this method are explained in detail by Barton & de Vladar [21].

, and the corresponding selective forces on these macroscopics. This is precisely analogous to the complementary set of variables (forces and variables of state) in statistical mechanics. Rather than working with the rates of evolution of the macroscopic variables, we can calculate the rates of change of the evolutionary forces. Consequently, we can find the expectations of the quantitative variables at any time, and thus know their evolutionary trajectory. If parameters change sufficiently slowly, the ensemble will be close to an equilibrium distribution, as with reversible changes to a physical system. When parameters change quickly, however, nothing ensures that the distribution will be close to some stationary distribution. Thus, the present work aims in part to verify this assumption, by comparing the results with numerical simulations. The mathematical details of this method are explained in detail by Barton & de Vladar [21].

A crucial advantage of the SM method is that it avoids the recursion to higher moments (or cumulants) of the traits, where higher order terms must be neglected arbitrarily [4,5,12–14,29,30]: the key is the choice of an appropriate set of macroscopics, which is determined by the set of traits that determine fitness.

Previously, we have successfully applied these ideas to the much simpler case of DS on a polygenic trait subject to mutation and drift [21]. There, our predictions are accurate for many loci of arbitrary effects. However, under DS solving the problem for a trait with n loci amounts essentially to solving the problem for a single locus, since there is no coupling between loci. Thus it is to some extent, a trivial case—though not entirely so because maximum entropy still drastically collapses the degrees of freedom from the full allele frequency distribution to only two, the trait mean, and the log-heterozygosity (equation (2.3)). A more demanding situation, and of deep biological relevance, is SS, which is the main focus of our present work. We will study whether the SM method applies to the challenging case of SS, mutation and drift. In particular, we will develop the details using Gaussian SS as a model. We analyse sharp changes in the optimum, a steadily moving optimum, and changes in selection on the genetic variance.

2. Stabilizing selection, mutation and genetic drift

2.1. Distribution of allele frequencies

We assume throughout that recombination is fast relative to selection, mutation and drift, so that we can assume linkage equilibrium, and describe the population by its allele frequencies. We also assume that population size is not small, so that we can use the diffusion approximation. The fitness of an individual with trait z is given by log[Wz] = − (s/2)(z − zo)2, which penalizes deviations from the optimum zo, since the extremes are unfit. Note that this expression can be written as

. The first term suggests that individuals which deviate from the mean of the population have less fitness. The second term is the deviation of the trait mean from the optimum. The mean log fitness is thus

. The first term suggests that individuals which deviate from the mean of the population have less fitness. The second term is the deviation of the trait mean from the optimum. The mean log fitness is thus  . Here we neglect terms 𝒪(s2), and the third term above averages to zero. For further convenience, we expand the quadratic term, and express it as

. Here we neglect terms 𝒪(s2), and the third term above averages to zero. For further convenience, we expand the quadratic term, and express it as  . This is essentially the same, but where selection is acting ‘independently’ over three quantitative variables: selection (of strength σ) against the genetic variance, ν; a directional term of strength β (=− zopts) regressing the mean trait

. This is essentially the same, but where selection is acting ‘independently’ over three quantitative variables: selection (of strength σ) against the genetic variance, ν; a directional term of strength β (=− zopts) regressing the mean trait  to the optimum and selection against deviations from the actual mean (of strength λ). Most works consider that σ = λ = −s/2, but here this constraint will be relaxed and allow σ and λ to differ, in order to improve the quasi equilibrium approximations, as will become clear later. We can think of examples, such as sexual selection or resource exploitation, where the individuals that deviate too much from the population's current mean value are unlikely to leave offspring, or selection might act directly on heterozygotes (and hence directly on ν). Of course, in some of these cases, selection might be frequency-dependent, in which case we will not have a stationary distribution that is of potential form, and the following analyses might not hold. However, we will keep these issues aside and concentrate on the simpler case of frequency independent selection, but allowing selection on the variance and on the deviation of the trait mean (factors σ and λ, respectively) to differ.

to the optimum and selection against deviations from the actual mean (of strength λ). Most works consider that σ = λ = −s/2, but here this constraint will be relaxed and allow σ and λ to differ, in order to improve the quasi equilibrium approximations, as will become clear later. We can think of examples, such as sexual selection or resource exploitation, where the individuals that deviate too much from the population's current mean value are unlikely to leave offspring, or selection might act directly on heterozygotes (and hence directly on ν). Of course, in some of these cases, selection might be frequency-dependent, in which case we will not have a stationary distribution that is of potential form, and the following analyses might not hold. However, we will keep these issues aside and concentrate on the simpler case of frequency independent selection, but allowing selection on the variance and on the deviation of the trait mean (factors σ and λ, respectively) to differ.

We will assume that selection is weak relative to recombination, so that linkage equilibrium holds. Then, if the population is moderately large, the evolution of the frequency p of a beneficial allele at each locus can be described by

|

2.1 |

where μ is the mutation rate (assumed to be reversible and equal in both directions), and ζd is the stochastic term owing to drift, modelled as a Gaussian of mean zero and variance pq/2N, where q = 1 − p, and N is the effective size of the population. Note that  depends on

depends on  and so couples the dynamics at all loci, something that does not occur under DS. In other words, fitness is essentially epistatic.

and so couples the dynamics at all loci, something that does not occur under DS. In other words, fitness is essentially epistatic.

At equilibrium, this process has the joint distribution of allele frequencies, ψ(p) [31–33, p. 442]:

|

2.2 |

which is identical to the more familiar expression  but arranged in a different way. The quantity ℤ normalizes the distribution. (From now on, we will use bold characters to denote vectors, and script capitals to denote matrices). As explained before [21– 23], we describe the effects of mutation by a potential function U, which is essentially the log of the geometric mean heterozygosity:

but arranged in a different way. The quantity ℤ normalizes the distribution. (From now on, we will use bold characters to denote vectors, and script capitals to denote matrices). As explained before [21– 23], we describe the effects of mutation by a potential function U, which is essentially the log of the geometric mean heterozygosity:

|

2.3 |

Thus, we have a set of macroscopic variables  that is associated with the parameters (forces) α = {β, λ, σ, μ}. Both together completely determine the distribution of allele frequencies. At first sight, it might seem that specifying both

that is associated with the parameters (forces) α = {β, λ, σ, μ}. Both together completely determine the distribution of allele frequencies. At first sight, it might seem that specifying both  and

and  is redundant. However, the latter introduces epistasis to the fitness function, making it non-multiplicative. But also, since it is their expectations that constrain the entropy, both terms are necessary to couple the microscopic and macroscopic variables, and thus to determine the expectations of the mean and the variance of the trait.

is redundant. However, the latter introduces epistasis to the fitness function, making it non-multiplicative. But also, since it is their expectations that constrain the entropy, both terms are necessary to couple the microscopic and macroscopic variables, and thus to determine the expectations of the mean and the variance of the trait.

2.2. Additive polygenic traits

So far, we have made no assumptions about the trait. The set of variables just defined, A, depend arbitrarily on the allele frequencies. We will assume now some elementary properties. Each trait is composed of a pair of alleles (thus we are assuming diploids) that contribute additively, each with an effect γl. The label X denotes different alleles (X = 0,1). Under this model, the trait, its mean, and its variance in the population are, respectively, as follows:

|

2.4 |

|

2.5 |

|

2.6 |

Defined this way, the trait is in the range of ±∑l γl, and the genetic variance is in [0, νmax], with  . If we assume that all loci have equal effects γ then

. If we assume that all loci have equal effects γ then  and ν = 2γ2 ∑l=1nplql. Without loss of generality, we will assume γ = 1. We can always include γ ≠ 1 by scaling β → γβ and σ → γ2 σ.

and ν = 2γ2 ∑l=1nplql. Without loss of generality, we will assume γ = 1. We can always include γ ≠ 1 by scaling β → γβ and σ → γ2 σ.

Along this article, we assume that mutation (μ) is weaker than selection (s) on each allele, that is μ < sγ2/4. But at the same time, since we require that the standing genetic variance is greater than the variance owing to a substitution, we will work in the regime of sγ2/4 < nμ [10], which ensures that there are many stable genetic equilibria.

3. Statistical Mechanics

In our previous work, we explained in detail how the distribution in equation (2.2) can be derived by maximizing a particular entropy measure [21]. In population genetics, this uncertainty measures the possible states in which a population can be. It is a functional of the distribution of allele frequencies ψ(p). We need to employ an information entropy measure which is defined relative to the distribution under drift alone [21,22]. That is, in the absence of any other evolutionary factor, random drift would drive the evolution of the population towards the distribution ϕ = ∏l=1n(pl ql)−1, which is a U-shaped distribution [31]. In fact, ϕ is not properly a distribution because it cannot be normalized. Nevertheless, under drift alone ϕ is the solution to the diffusion equation [34], and we will set entropy so that with a base distribution ϕ, it gives the correct stationary distribution with mutation and selection. Accordingly, we defined the entropy as

|

3.1 |

When S is maximized with respect to ψ we get that ψ ∝ ϕ. Although ϕ will diverge when pq → 0, imposing constraints on S will ensure that this is not a problem. This particular entropy measure was postulated to ensure that the distribution of allele frequencies corresponds to the one obtained by the diffusion methods, i.e. equation (2.2). However, the probability distribution is changed in the presence of, say, mutation and/or selection. Thus, entropy changes under any other factor that affects the evolution of a character or of fitness, like selection or mutation, because these increase or decrease the uncertainty of the outcomes, biasing the distribution ψ. To include these effects, we require that the distribution of allele frequencies must have specific values for the expectations 〈A〉. This is introduced in the form of constraints to the entropy maximization problem, and thus we introduce a Lagrange multiplier αk for each constraint 〈Ak〉 = ∫Ak ψdnp. Thus, we need to maximize the following functional (on ψ):

|

Here the third term ensures normalization of the distribution. This leads exactly to the distribution of allele frequencies in equation (2.2), and thus the Lagrange multipliers are the forces (selective coefficients, and mutation rate). The normalization condition is

|

3.2 |

where we renamed the multiplier exp[−2N α0] ≡ ℤ. This quantity is a function of the parameters αj (j ≠ 0) and it is analogous to the partition function in statistical physics. It plays a fundamental role, because it allows direct calculation of the macroscopics, as well as their covariances, since log[ℤ] is a generating function: ∂α log[ℤ] = 2N〈A〉 and ∂α,α2 log[ℤ] = 4N2C, where C = cov(Ai, Aj) is the covariance matrix of the macroscopics. These expectations and covariances are with respect to the distribution ψ, that is across possible states of a population, or alternatively with respect to an ensemble of populations, and must not be confused with the means and variances of a trait within a population. The technical effort focuses on a workable calculation for ℤ, which will lead to expressions for the rates of change of the α and the 〈A〉. In general, any vector of quantitative variables, in our case A, that is a function of the allele frequencies will have an expectation (macroscopic) that evolves as

| 3.3 |

where ℬ is a generalized matrix of genetic covariances, and V the rate of random drift;  and

and  . These formulas can be derived straightforwardly in several ways. The most compelling for a biologist is to use Wright's [35] formula, via the diffusion equation [36, pp. 136–137] or from the Fokker–Planck equation (for equation (2.1); [37, ch. 5]). Neither V, ℬ, nor 𝒞 (defined above) assume additive or equal effects. However, to obtain specific expressions we need to make some assumptions. We will now assume additivity, for which we get

. These formulas can be derived straightforwardly in several ways. The most compelling for a biologist is to use Wright's [35] formula, via the diffusion equation [36, pp. 136–137] or from the Fokker–Planck equation (for equation (2.1); [37, ch. 5]). Neither V, ℬ, nor 𝒞 (defined above) assume additive or equal effects. However, to obtain specific expressions we need to make some assumptions. We will now assume additivity, for which we get

|

3.4 |

and

|

3.5 |

These expressions are also valid under unequal effects. (The unfamiliar macroscopics involved, namely m3, m4, and H are specified in appendix A.) Note that the SM method provides a way of directly calculating the expectations of these quantities, as well as of the higher moments of the trait: they follow from mathematical manipulations of the generating function ℤ [21]. This is one of the most important improvements from the SM method with respect to other moments or cumulant methods where these higher order moments have to be computed ab initio using their particular differential equations, and approximated using specific models, like the Gaussian or House of Cards models [38], perturbation analysis [30], and/or simply by truncating the equations at a convenient level [4,5,12–14,19].

Under the quasi-equilibrium approximation, we assume that at every time point there is a set of variables α(t)* (for short, α*) which would give the observed 〈A〉 at a steady state, so that (d〈A〉/dt) = 0; then V* =−ℬ* · α*. Thus, the matrix ℬ in equation (3.3) is approximated by its equilibrium expression, but evaluated at α*, denoted ℬ*. Then, substituting into equation (3.3), we get that (d〈A〉/dt) = ℬ* · (α − α*(t)). Since the matrix ℬ* depends only on the α*, and the latter are calculated at every time point following the assumption of maximum entropy (see [21] for more details on the implementations), we are able to make predictions for the long-term response to selection of the expected trait mean and of the expected genetic variance.

3.1. Approximating the generating function

The function ℤ (equation (3.2)) is a multidimensional integral over the frequencies at the n loci. These integrals are coupled, because the function  is not separable into additive terms. Thus, in this section, we will focus on an approximation for ℤ. To begin with, we recall that Wright's formula [35, eqn. 39] implies that we can always express ψ(p) as

is not separable into additive terms. Thus, in this section, we will focus on an approximation for ℤ. To begin with, we recall that Wright's formula [35, eqn. 39] implies that we can always express ψ(p) as  , where

, where  is the distribution of the system free of selection over the trait mean. Thus we include the effects of drift, mutation (μ) and also of selection on the genetic variance (σ); these are all additive terms. Because ψsf (when normalized) is a product of per-locus distributions, the central limit theorem indicates that with many loci, ψsf will converge to a Gaussian (appendix A). Furthermore, the contribution of each locus is typically bimodal, where each peak is close to the borders. Hence, we have 2n adaptive peaks, which correspond to all the combinations of ‘+’/‘−’ alleles. In order to gain accuracy, we expand as a Gaussian not the whole distribution ψsf, but rather the distribution around each of the adaptive peaks, by dividing the range of each allele frequency into two regions. Under equal effects, there can be m and n − m loci near 0 and 1, respectively, weighted by a binomial term (appendix A). Each peak will be characterized by its expected mean trait values and variances. Because of the symmetry under equal effects, at each peak the contribution to the expected mean of each allele is equivalent in magnitude, Mo, and of opposite sign for the ‘+’ and ‘−’ peaks (the trait mean is an odd function of the allele frequencies). Thus, for a given combination (m,n − m) the expected trait mean results in 〈z〉m ≡ (n −2m)Mo. Similarly, the variance contributed by each peak is, per locus, Vo, so that for a combination (m,n − m) results in

is the distribution of the system free of selection over the trait mean. Thus we include the effects of drift, mutation (μ) and also of selection on the genetic variance (σ); these are all additive terms. Because ψsf (when normalized) is a product of per-locus distributions, the central limit theorem indicates that with many loci, ψsf will converge to a Gaussian (appendix A). Furthermore, the contribution of each locus is typically bimodal, where each peak is close to the borders. Hence, we have 2n adaptive peaks, which correspond to all the combinations of ‘+’/‘−’ alleles. In order to gain accuracy, we expand as a Gaussian not the whole distribution ψsf, but rather the distribution around each of the adaptive peaks, by dividing the range of each allele frequency into two regions. Under equal effects, there can be m and n − m loci near 0 and 1, respectively, weighted by a binomial term (appendix A). Each peak will be characterized by its expected mean trait values and variances. Because of the symmetry under equal effects, at each peak the contribution to the expected mean of each allele is equivalent in magnitude, Mo, and of opposite sign for the ‘+’ and ‘−’ peaks (the trait mean is an odd function of the allele frequencies). Thus, for a given combination (m,n − m) the expected trait mean results in 〈z〉m ≡ (n −2m)Mo. Similarly, the variance contributed by each peak is, per locus, Vo, so that for a combination (m,n − m) results in  (the variance being an even function of the allele frequencies). In the same way, we may include the expectation of other quantities (ν,U, etc., altogether denoted by a) along with their variances and covariances. Defining cm ≡ {covm (ak )}k, the covariance matrix of a at a given peak with configuration (m,n − m), we approximate ψsf as a multivariate Gaussian, namely:

(the variance being an even function of the allele frequencies). In the same way, we may include the expectation of other quantities (ν,U, etc., altogether denoted by a) along with their variances and covariances. Defining cm ≡ {covm (ak )}k, the covariance matrix of a at a given peak with configuration (m,n − m), we approximate ψsf as a multivariate Gaussian, namely:

|

3.6 |

where k is the number of variables included in the Gaussian approximation; in practice, we employed k = 5 variables, namely those required to compute ℬ, which are  . ℤo is the normalization constant of this Gaussian approximation, which for equal effects is

. ℤo is the normalization constant of this Gaussian approximation, which for equal effects is  , which in turn is the generating function for n independent single loci at an adaptive peak (appendix B):

, which in turn is the generating function for n independent single loci at an adaptive peak (appendix B):

|

3.7 |

After making this approximation, we need to multiply the resulting Gaussian distribution by the effects of selection, as specified by the mean fitness  , and integrate on

, and integrate on  , in order to get ℤ. Appendix A indicates the details and the intermediate steps in the derivation (which we work out for unequal effects, when the complexity of the calculation allows it). In short, we find that the generating function is

, in order to get ℤ. Appendix A indicates the details and the intermediate steps in the derivation (which we work out for unequal effects, when the complexity of the calculation allows it). In short, we find that the generating function is

|

3.8 |

The expectations of the macroscopics follow from the derivatives of log[ℤ] w.r.t. the parameters α as explained above, all of which can be written explicitly (see appendix A). In practice because n is large, the binomial terms  are computed by approximating them by a Gaussian, and the binomial sum by an integral, thus:

are computed by approximating them by a Gaussian, and the binomial sum by an integral, thus:

|

3.9 |

which allows computations that can be handled numerically (appendix A). In the following sections, we study the accuracy of our approximations.

4. Results

4.1. Numerical experiments

Exact analysis of the distribution of allele frequencies is in theory possible using a diffusion equation approach [33, ch. 7]. But for many coupled loci, as in this case of SS, the time-dependent analytic solution is unknown, and its numerical calculation is not feasible—short of Monte Carlo methods that would amount to simulation of the original problem. Thus, the possibilities to check our method are reduced to either simulating allele frequencies, or to employ individual-based simulations. We take the first approach, that is to draw multiple realizations of equation (2.1), each giving a stochastic path of the allele frequencies, employing in our purposes an Euler scheme with time step Δt = 0.01, and drawing deviates from a Gaussian with variance Δtpq/2N for the stochastic term modelling genetic drift (e.g. [39]). From these paths, we compute the trait mean and its genetic variance. Then, we can average over multiple realizations in order to estimate the expectations of these variables. We ignore linkage disequilibrium, but know that this is negligible for recombination rates r ≫ s [5, ch. VI.7]. Thus, we work entirely from the diffusion approximation to the evolution of allele frequencies, assuming that selection is weak (s ≪ r,1). Our statistical mechanical approach assumes that evolution starts from an initial equilibrium; it does not allow arbitrary initial distributions that would give arbitrary outcomes. Therefore, for a fair comparison, we need to start the numerical realizations also from an equilibrium, drawing an initial set of allele frequencies from the theoretical marginal distribution. Because the joint distribution of allele frequencies and the product of the marginals are not the same, we still allow the process to relax to the stationary state for a few thousands of generations (typically, approx. 105), until numerically we obtain that  . Only then we allow selection to change. From this moment, when evolution proceeds, we average and record the values to compare them later with the SM estimate.

. Only then we allow selection to change. From this moment, when evolution proceeds, we average and record the values to compare them later with the SM estimate.

In order to perform this comparison, we perform a significance test. The null hypothesis ℋ0 is that the numerical calculations are not significantly different from the SM expectations. Thus to support our theory, we would like to accept ℋ0. Because the numerical expectations are averages over many realizations, these are normally distributed. From the SM theory, we know the expectation and the variance of the macroscopics that we want to test, namely, the trait mean and the genetic variance. Summing up, we know the expected values and the variance and thus, ideally the residuals should be normally distributed. Hence, a z-test for the goodness of fit is enough for our purposes [40, ch. 3]. The z-statistic is  , where

, where  are the averages over the simulations, 〈A*〉 is a short-hand notation for 〈A〉(α*(t)) (the expectation of A evaluated at the local forces at time τ), T is the total number of time points and df = T − 1 are the degrees of freedom. We arbitrarily set the significance level to 99 per cent. In addition, we also compare the maximum observed deviation,

are the averages over the simulations, 〈A*〉 is a short-hand notation for 〈A〉(α*(t)) (the expectation of A evaluated at the local forces at time τ), T is the total number of time points and df = T − 1 are the degrees of freedom. We arbitrarily set the significance level to 99 per cent. In addition, we also compare the maximum observed deviation,  with the standard deviation from the SM at that same time point. Respectively, these two statistics quantify for the SM approximation the accuracy (i.e. how close are the predictions to the true value), and the precision (i.e. how much the observations deviate from its expected value). In turn these two measures give us a grip to judge whether our approximation deviates significantly from the ‘true’ values (from the simulations), and also whether significant deviations are biologically meaningful. As we will see in some examples, some systematic and statistically significant deviations may occur.

with the standard deviation from the SM at that same time point. Respectively, these two statistics quantify for the SM approximation the accuracy (i.e. how close are the predictions to the true value), and the precision (i.e. how much the observations deviate from its expected value). In turn these two measures give us a grip to judge whether our approximation deviates significantly from the ‘true’ values (from the simulations), and also whether significant deviations are biologically meaningful. As we will see in some examples, some systematic and statistically significant deviations may occur.

4.2. Shifting optimum

The most radical test of our approximation is when selection changes abruptly. For example, a sudden shift of the optimum would trigger a quick response of the trait, and a major reconfiguration of the genetic states. The prediction of the change of the trait mean and of the genetic variance is thus not a trivial task. In turn, our approximation allows us to estimate the change of their expectations, which gives a rather robust prediction of their evolutionary course. Figures 2 and 3 present two examples of this situation. We compare this response with intensive simulations of the Wright–Fisher model (equation (2.1)), for two distinct numbers of loci: five and a hundred. The response of the mean is quicker for traits with more loci. In this case of equal effects, the reason is clear: the expectations of the trait mean and the genetic variance are ‘extensive’, meaning that their values are proportional to the number of loci, n. The expectations are not strictly linear with n, but it is nevertheless a positive dependence. Thus, traits composed of a larger number of loci have larger genetic variance, and therefore respond faster to selection. This is expected from the formula from the Stochastic House of Cards model (SHoC; [5], p. 270, eqn (2.8)) that predicts that the expected genetic variance will be

|

4.1 |

(in this case, we set the effect to γ = 1). Thus increasing the number of loci, increases the genetic variance. The precision of our approximation does not seem to depend critically on n, except for a very low number of loci (n between 3 and 5, depending on the parameters, results not shown), where the Gaussian approximation fails. Yet, the predictions of the macroscopics are in very good agreement with the numerical expectations, even for n as low as 5.

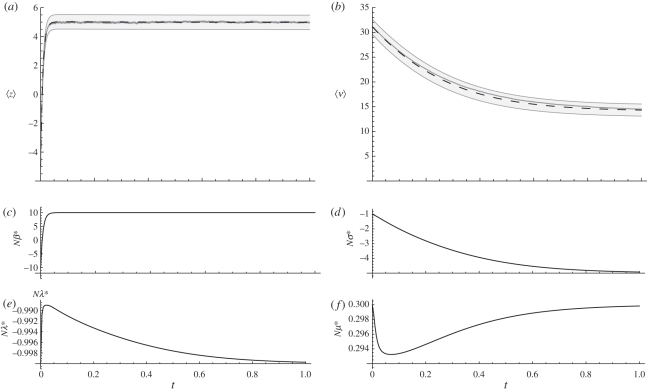

Figure 2.

Evolutionary dynamics when the optimum changes abruptly, shifted at time t = 0 from −0.75 to 0.75 (which corresponds to Nβ = −2.5 to Nβ = 2.5, see (c)). The trait consists of n = 5 loci of equal effect. Expectations (dashed lines) of (a) a polygenic trait and (b) its genetic variance. The grey regions cover ± the standard error (root mean squared deviation of the variance of the macroscopics). The change in the genetic variance is at most of 4.85% of the initial value, while its s.e. is 20.5%. The grey lines are averages of the numerical realizations (with population size of N = 100; 1000 replicas were employed). The goodness of fit (one tail chi-square with 51 d.f.) accepts the null hypothesis (the simulation points are random samples of the SM distributions) in both cases: (a) z = −0.058,p − 0.68; the maximum deviation is 12.19% of the standard error (0.47); (b) z = 0.12, p = 0.40; the maximum deviation is 18.27% of the standard error (0.31). (c–f) Evolution of the local forces. Note the short range of change on (d–f), these forces remain practically constant. Nλ = −1.0, Nσ = −5 and Nμ = 0.3.

Figure 3.

Evolutionary dynamics when the optimum changes abruptly, shifted at time t = 0 from −5 to 5 (which corresponds to Nβ = ±10, that is about 5% of the total range; see (c)). The trait consists of n = 100 loci of equal effect. Expectations (dashed lines) of (a) a polygenic trait and (b) its genetic variance. The grey regions cover ± the standard error (root mean squared deviation of the variance of the macroscopics). The change in the genetic variance is at most of 0.4% of the initial value, while its standard error is 10.3%. The grey lines are averages of the numerical realizations (with N = 100; 500 replicas were employed). The goodness of fit (151 d.f.) accepts the null hypothesis in both cases: (a) z = −1.14, p = 0.086; the maximum deviation is 26.5% of the standard error (0.50); (b) z = 0.18, p = 0.03; the maximum deviation is 22.19% of the standard error (1.41). (c–f) Evolution of the local forces. Note the short range of change on (d–f), these forces remain practically constant. Nλ = −1.0, Nσ = −4 and Nμ = 0.5. Otherwise as in figure 2.

In these examples, we find that the expected genetic variance remains practically unchanged, even though ν fluctuates wildly in any one realization. In fact, in the two cases in figures 2 and 3, 〈ν〉 is variable, but achieves at most an increase of 1 per cent. This makes it not only hard but to some extent pointless to aim for an accurate numerical or—more critically—empirical estimation of 〈ν〉 [41], in part because we would need an unrealistic number of observations. The variance of a set of independent (numerical or empirical) measurements of ν is of comparable magnitude to the mean range of response of such measurements, so any change in ν will be obfuscated by the effects of drift.

However, the nearly constant patterns of the expectations of ν should not be confused with a constancy of ν itself. The latter will of course fluctuate around its expectation because of genetic drift. Accordingly, we evaluated the deviations from the expectations by computing Var(ν) (which follows from the derivative ∂2log[ℤ]/∂4Nσ2, an element of the matrix 𝒞), and from it, the root mean square deviation (RMSD). The RMSD shows the range in which the individual paths are most likely to occur. These bounds are also shown in figures 3 and 4, exposing the fact that the effects of drift are big compared with the change in the expectations, but still in a narrow percentile range of the actual standing variation. This indicates that in practice ν can be considered constant. Such small changes in the genetic variance and its expectation justify the classical approaches like the breeder's equation, which relies on constant heritabilities for long-term predictions [1]. For these kind of situations, a complicated mathematical machinery like the one introduced here seems unjustified. Nevertheless, we can still apply our calculations in order to compute other aspects that do not arise naturally from the classical theory. For example, we can easily estimate the expected variance of the set of measurements alluded above [41]. This not only gives a rule of thumb of when to expect genetic variance to remain constant, but also provides a way to falsify the SM approach. With increasing numbers of loci (i) the range of change of ν becomes smaller (there is larger mutational load), and (ii) the range of fluctuation (i.e. the standard error) increases. Thus, we are even less able to detect changes in the expectations.

These calculations give some insight into why 〈ν〉 hardly varies in response to selection. As figures 2 and 3 suggest, drift would be the main driving force for the changes in genetic variance. Why is not the change in 〈ν〉 more pronounced?

Thinking in terms of the distribution of allele frequencies (figure 4, showing the marginal distribution at one locus), we find that the distribution is bimodal. The positions of the adaptive peaks determine the genetic variance, which depends only weakly on their height. The relative height of these peaks, on the other hand, determine the value of the trait mean, but depend weakly on their position. Thus, because these two quantities ( and ν) are practically uncoupled, it is possible to select over them more or less independently. Selection on the trait mean will cause multiple jumps among the peaks [10]. But the position of the peaks remain unchanged, because they are equidistant to the saddle, which is situated at an intermediate frequency

and ν) are practically uncoupled, it is possible to select over them more or less independently. Selection on the trait mean will cause multiple jumps among the peaks [10]. But the position of the peaks remain unchanged, because they are equidistant to the saddle, which is situated at an intermediate frequency  (this is because there is no dominance). Consequently, because the genetic variance is symmetric w.r.t. the allele frequencies, few ‘−’ alleles with frequency p (and thus ‘+’ alleles with frequency 1 − p) result in as much variance as numerous ‘−’ alleles with frequency 1 − p (and ‘+’ alleles of frequency p). So, although the alleles are jumping from one peak to the other, their frequencies always change from p to 1 − p (or vice-versa), affecting the proportions of ‘+’/‘−’ alleles at each peak, and thus the trait, but without affecting the genetic variance. However, the intermediate frequencies are transiently populated by the jumping alleles, so a slight increase in the genetic variance should be observed. This change, as a matter of fact is predicted by the SM but it is generally only a few per cent of the standard error, and often too small to be detected. Note, however, that the force related to the genetic variance (that is, N σ) shows a small change. But the scale is so narrow that it can practically be considered constant. This is, of course, supported by the SHoC expectation of the genetic variance, equation (4.1), where the expectation of the genetic variance does not depend on

(this is because there is no dominance). Consequently, because the genetic variance is symmetric w.r.t. the allele frequencies, few ‘−’ alleles with frequency p (and thus ‘+’ alleles with frequency 1 − p) result in as much variance as numerous ‘−’ alleles with frequency 1 − p (and ‘+’ alleles of frequency p). So, although the alleles are jumping from one peak to the other, their frequencies always change from p to 1 − p (or vice-versa), affecting the proportions of ‘+’/‘−’ alleles at each peak, and thus the trait, but without affecting the genetic variance. However, the intermediate frequencies are transiently populated by the jumping alleles, so a slight increase in the genetic variance should be observed. This change, as a matter of fact is predicted by the SM but it is generally only a few per cent of the standard error, and often too small to be detected. Note, however, that the force related to the genetic variance (that is, N σ) shows a small change. But the scale is so narrow that it can practically be considered constant. This is, of course, supported by the SHoC expectation of the genetic variance, equation (4.1), where the expectation of the genetic variance does not depend on  .

.

Figure 4.

Marginal distribution of frequencies of an allele. (a) Changing the intensity of selection over the trait modulates the height of the adaptive peaks (other things being equal, Nσ = −3.0) resulting in pronounced changes in the expectation of the trait mean but with weak changes (even a relatively constant value) of the expectation of the genetic variance. Solid line: Nβ = 2.5,  , 〈ν〉 = 1.12. Dashed line: Nβ = 0

, 〈ν〉 = 1.12. Dashed line: Nβ = 0  . Dotted line: Nβ = −2.5,

. Dotted line: Nβ = −2.5,  ,

,  . (b) Changing the intensity of selection over the genetic variance modulates the position of the adaptive peaks (other things being equal, Nβ = 2.5), resulting in pronounced changes in the expectation of the genetic variance, but with weak changes on the expectation of the trait mean. Dotted line: Nσ = 0,

. (b) Changing the intensity of selection over the genetic variance modulates the position of the adaptive peaks (other things being equal, Nβ = 2.5), resulting in pronounced changes in the expectation of the genetic variance, but with weak changes on the expectation of the trait mean. Dotted line: Nσ = 0,  , 〈ν〉 = 8.04. Short-dashed line: Nσ = −1,

, 〈ν〉 = 8.04. Short-dashed line: Nσ = −1,  ,

,  . Large-dashed line: Nσ =− 4,

. Large-dashed line: Nσ =− 4,  ,

,  . Solid line: Nσ = −10,

. Solid line: Nσ = −10,  ,

,  . In all cases Nμ = 0.5, Nλ = −1.0, and the trait is composed of 20 loci of equal effects.

. In all cases Nμ = 0.5, Nλ = −1.0, and the trait is composed of 20 loci of equal effects.

4.3. Effect of the number of loci on the rates of evolution

As suggested by comparing figures 2 and 3, with 5 and 100 loci, respectively, the amount of standing genetic variation is proportional to the number of loci n. This insinuates that traits composed of more loci should adapt quicker to ecological changes. But, is there any relationship between these rates of adaptation and the number of loci? As a matter of fact, if we measure time relative to the expected number of mutations (i.e. 2nμt) the time to reach an equilibrium are comparable for different number of loci. In this time scale, we find that the genetic variance is slowed down, but it also reaches higher levels for traits composed of larger numbers of loci (figure S1 in the electronic supplementary material, S1). In the limit of infinite loci, we would have (i) constant genetic variance, but (ii) its value would diverge, leading to ‘instant’ adaptation. A solution to this paradoxical limit comes from rescaling the effects of the alleles with the number of loci [42,43]. Thus in making  , the genetic variance is regularized: as the number of loci increases, its rate of change goes to zero, and it converges to a constant value, that is, the infinitesimal model [43]. These circumstances are depicted in figure S2 of the electronic supplementary material, S1.

, the genetic variance is regularized: as the number of loci increases, its rate of change goes to zero, and it converges to a constant value, that is, the infinitesimal model [43]. These circumstances are depicted in figure S2 of the electronic supplementary material, S1.

4.4. Moving optimum

Another example that was alluded in §1 and which is of general interest is when the optimum is steadily shifting its position [44–48]. Figure 5 presents a comparable situation to that of figure 3, but where the optimum is moving slowly. Again, we do not detect major changes in 〈ν〉. Here, the trait is being selected in a range that is much less than its total range of response (± n,n = 100 in this case, while the optimum shifts in a comparably short range, between ±5). However, if we consider a larger range, comparable to n (depicted in figure 6), then at the extremes we force fixation of some alleles, and the variance changes significantly. Note that during evolution, the range of change of the effective selective values (what we termed forces) can change notably (figure 6). But if we compare with other scenarios (e.g. figure 5), the forces can be virtually constant.

Figure 5.

Evolutionary dynamics when the optimum moves gradually from −5 to 5 (which corresponds to Nβ = ±10, see (c), dotted line) at a rate of 510−3 units per generation. The trait consists of n = 100 loci of equal effect. Expectations (dashed lines) of (a) a polygenic trait and (b) its genetic variance. The grey regions cover ± the standard error (root mean squared deviation of the variance of the macroscopics). The grey lines are averages of the numerical realizations (with N = 100; 500 replicas were employed). The goodness of fit (151 d.f.) rejects the null hypothesis for the trait mean, and accepts it for the genetic variance: (a) z = −0.21, p = 0.009; however, the maximum deviation is 37.31% of the standard error (0.50); (b) z = 0.067, p = 0.41; the maximum deviation is 15.94% of the standard error (1.41). (c–f) Evolution of the local forces. Note the short range of change on (d–f), these forces remain practically constant. Nλ = −1, Nσ = −4, Nμ = 0.5. Otherwise as in figure 2.

Figure 6.

Evolutionary dynamics when the optimum moves gradually from −12 to 12 (which corresponds to Nβ = ± 48, 60% of the total range; see (c), dotted line) at a rate of 5 × 10−3 units per generation. The trait consists of n = 20 loci of equal effect. Expectations (dashed lines) of (a) a polygenic trait and (b) its genetic variance. The grey regions cover ± the standard error (root mean squared deviation of the variance of the macroscopics). The grey lines are averages of the numerical realizations (with N = 100; 500 replicas were employed). The goodness of fit (101 d.f.) rejects the null hypothesis for the trait mean, and accepts it for the genetic variance: (a) z = −0.64, p< 10− 9; the maximum deviation is 171% of the standard error (0.36); (b) z = 0.06, p = 0.54; the maximum deviation is 41% of the standard error (0.69). (c–f) Evolution of the local forces. Note the short range of change on (d–f), these forces remain practically constant. Nλ = −2, Nσ = −2, Nμ = 0.5. Otherwise as in figure 2.

Despite the previous examples, the polygenic dynamics when the optimum is steadily moving can be very complicated, because as the optimum shifts the allelic combinations that match the optimum continuously change with dramatic microscopic modifications [46,48]. The result is that the path of ν is erratic in the deterministic case, because the alleles at different loci keep sweeping and their frequency keep changing, inflating and deflating the genetic variance in an almost chaotic fashion. This is all smoothed out when drift is present and we average over an ensemble. Thus, a more serious test for our theory would be to consider situations where there is considerable chance that the dynamics get stuck in local minima.

4.5. Selection on the genetic variance

In part, whether our predictions make biological sense, is a matter of time scale. In the first examples, genetic drift eliminated genetic variance at a similar rate to its production by mutation. Thus, the rate of change of 〈ν〉 was very small. We can obtain a different picture if the genetic variance is forced to change along with, or instead of, selecting over the trait. Under SS, the mean fitness is determined by two variance measures: the genetic variance, and the squared deviation of the trait mean w.r.t. the optimum. Both of them are under selection with strengths Nσ and Nλ, respectively. The canonical way to represent SS [49] will assume that Nσ = Nλ = s/2. We relax this constraint, and allow selection to act independently over ν and over the deviation from the optimum. At the moment, we focus on the first alternative.

The scenario that we study, is that when a population is initially under selection–mutation–drift equilibrium where selection acts over a quantitative trait, but with little strength on the variance. Suddenly, selection against the variance increases, and the optimum changes sign. This implies a radical reconfiguration of the adaptive landscape. In this case, will the SM approximation be accurate? An example of this scenario is demonstrated in figure 7. We find that there is a good agreement with the simulations.

Figure 7.

Evolutionary dynamics when the optimum changes abruptly, shifted at time t = 0 from −5 to 5 (which corresponds to Nβ = ±10, see (c)), and simultaneously, selection against the genetic variance is increased from Nσ = −1 to Nσ = −5. The trait consists of n = 100 loci of equal effect. Expectations (dashed lines) of (a) a polygenic trait and (b) its genetic variance. The grey regions cover ± s.e. (root mean squared deviation of the variance of the macroscopics). The change in the genetic variance is at most of 0.4% of the initial value, while its standard error is 10.3% . The grey lines are averages of the numerical realizations (with N = 100; 500 replicas were employed). The goodness of fit (151 d.f.) accepts the null hypothesis for the trait mean, but rejects it for the genetic variance: (a) z =− 0.011, p − 0.87; the maximum deviation is 17.19% of the standard error (0.50); (b) z = 0.28, p< 10−4; the maximum deviation is 39.77% of the standard error (1.37). (c–f) Evolution of the local forces. Note the short range of change on (d–f), these forces remain practically constant. Nλ = −1, Nμ = 0.3. Otherwise as in figure 2.

As explained above, selection over the trait mean and over the genetic variance are practically uncoupled. Thus, if the optimum moves without affecting the strength of selection, the trait mean can freely evolve without significant changes on the genetic variance (figures 2, 3 and 5). However, we have now studied a more general situation when both forces, the optimum and the selection strength, change. Figure 7 shows that the pattern of change of the force Nβ* is similar to the one in figure 3 where a similar situation was studied, except that the genetic variance did not change. This suggests that even in this case (figure 7) where both selection and the optimum shift, the evolution of the genetic variance and of the trait mean are uncoupled. This is unexpected because, unlike the previous example, the adaptive peaks are being relocated. This rearrangement of the microscopic space, which originally was nearly peaked close to  but which suddenly became bi-modal, partitioned the occurring alleles closer to the borders of fixation—these configurations that diminish the genetic variance. Simultaneously, a high frequency of ‘+’ alleles was produced, and at another locus a high frequency of ‘−’ alleles, in such proportions that maintain low genetic variance, while allowing the trait mean evolve to increase to its new optimum value.

but which suddenly became bi-modal, partitioned the occurring alleles closer to the borders of fixation—these configurations that diminish the genetic variance. Simultaneously, a high frequency of ‘+’ alleles was produced, and at another locus a high frequency of ‘−’ alleles, in such proportions that maintain low genetic variance, while allowing the trait mean evolve to increase to its new optimum value.

Under the quasi-equilibrium approximation, the SHoC formula (equation (4.1)) would hold for any time if we would substitute Nμ and Nσ for the effective forces Nμ* and Nσ*. Thus, the expectation of the genetic variance would only change if any of these change. If the optimum value is displaced without significantly changing Nμ* or Nσ*, there would be no reason to expect a change in 〈ν〉SHoC. A marginal issue here, is whether the predictions from equation (4.1) are in agreement with the SM expectations. For example, for the initial state depicted in figure 7, the numerical averages (from the Wright–Fisher simulations) for the genetic variance give  , the SHoC gives 〈ν〉SHoC = 30, whilst the SM gives 〈ν〉SM=31.08 ± 1.5. For the end equilibrium, we have

, the SHoC gives 〈ν〉SHoC = 30, whilst the SM gives 〈ν〉SM=31.08 ± 1.5. For the end equilibrium, we have  , and the models give 〈ν〉SHoC = 10.00, while 〈ν〉SM = 14.12 ± 1.21. There seems to be a decent agreement between both predictions. However, the agreement between the SHoC and the SM expectations does diverge as Nσ → 0 (data not shown).

, and the models give 〈ν〉SHoC = 10.00, while 〈ν〉SM = 14.12 ± 1.21. There seems to be a decent agreement between both predictions. However, the agreement between the SHoC and the SM expectations does diverge as Nσ → 0 (data not shown).

4.6. Further dynamical modes

Quantitative traits, and the genetic variance, under SS can also evolve by other means than selecting over the variance, and changing the position of the optimum. The statistical mechanics allows  and ν to vary by changes in Nλ. Recalling that this parameter penalizes the deviations of the trait mean from the optimum, varying it should not affect the value of

and ν to vary by changes in Nλ. Recalling that this parameter penalizes the deviations of the trait mean from the optimum, varying it should not affect the value of  . In fact, if we keep the optimum at a fixed place, and say, we reduce Nλ, the

. In fact, if we keep the optimum at a fixed place, and say, we reduce Nλ, the  and 〈ν〉 remain almost unchanged. Instead, there is an increase in the standard deviation of

and 〈ν〉 remain almost unchanged. Instead, there is an increase in the standard deviation of  . Thus, this parameter Nλ determines how much

. Thus, this parameter Nλ determines how much  deviates from its expected values. Surprisingly, the marginal distribution of allele frequencies remains practically unchanged. Despite that an adaptive peak is not the same as the marginal distribution of an allele, the mean and variance at each adaptive peak do not depend on Nλ (see appendix B), thus altering its value will not affect the positioning and relative heights of the adaptive peaks. However, Nλ would make the distribution more or less concentrated at such peaks. Thus although the expectations remain the same, populations with larger Nλ would show population means and variances more scattered than other population (or states) where Nλ is smaller (for numerical examples, see figures S4 and S5 of the electronic supplementary material, S1).

deviates from its expected values. Surprisingly, the marginal distribution of allele frequencies remains practically unchanged. Despite that an adaptive peak is not the same as the marginal distribution of an allele, the mean and variance at each adaptive peak do not depend on Nλ (see appendix B), thus altering its value will not affect the positioning and relative heights of the adaptive peaks. However, Nλ would make the distribution more or less concentrated at such peaks. Thus although the expectations remain the same, populations with larger Nλ would show population means and variances more scattered than other population (or states) where Nλ is smaller (for numerical examples, see figures S4 and S5 of the electronic supplementary material, S1).

Another way in which populations can evolve, is by changing the mutation rate. An increase (decrease) in the number of mutants will inflate (deflate) the expected genetic variance, but will not affect the trait mean's expectation. However, if the mutation rate approaches the μ = 1/4N, the SM method is inaccurate (this will be discussed in more detail in §4.7).

At such point, the borders of the distribution (i.e. p = 0,1) become absorbing, leading to a failure of the local equilibrium dynamics (figures S6 and S7 of the electronic supplementary material, S1). Close to the boundaries, two effects are present: (i) drift is much more powerful than selection in fixating alleles, and (ii), mutation does not produce enough alleles to keep the distribution away from fixation. Towards the centre of the distribution, selection is more effective than drift in maintaining polymorphisms. The rates at which each process occurs have different time scales: fixation towards the borders happen very fast, whereas diffusion away from the borders is much slower. The local equilibrium dynamics cannot cope with both time scales at the same time, and the SM approximation fails [21].

4.7. Some negative results: failure of the SM approximation

4.7.1. Failure at low mutation rates

In our previous paper, where we dealt with DS [21], the SM framework had to be modified for low mutation rates ( ). At these low mutation rates, there is a major qualitative change in the distribution of allele frequencies, since the fixation states suddenly become absorbing. This is also true in the present case of SS. Thus, when mutation rates are close to this critical point

). At these low mutation rates, there is a major qualitative change in the distribution of allele frequencies, since the fixation states suddenly become absorbing. This is also true in the present case of SS. Thus, when mutation rates are close to this critical point  , the local equilibrium underestimates the rates of change (e.g. the genetic variance), and the accuracy of our predictions is lost. In the electronic supplementary material, S1 (§5), we show two examples, with the optimum abruptly increased and for a moving optimum, where the predictions deviate significantly.

, the local equilibrium underestimates the rates of change (e.g. the genetic variance), and the accuracy of our predictions is lost. In the electronic supplementary material, S1 (§5), we show two examples, with the optimum abruptly increased and for a moving optimum, where the predictions deviate significantly.

When  the macroscopics that characterize the system change, in what is called a phase transition. In this case, the genetic heterozygosity U, has to be dropped, and the dynamics of the other macroscopics must be computed from the jump processes (using a master equation, instead of diffusion [21]). This works well for DS, but would lead to a different set of equations for the local variables and for the macroscopics compared with the high mutational input case. Nevertheless, the philosophy is the same: to maximize entropy under the constraint of the appropriate macroscopics, and approximate the dynamics using the local equilibrium Ansatz.

the macroscopics that characterize the system change, in what is called a phase transition. In this case, the genetic heterozygosity U, has to be dropped, and the dynamics of the other macroscopics must be computed from the jump processes (using a master equation, instead of diffusion [21]). This works well for DS, but would lead to a different set of equations for the local variables and for the macroscopics compared with the high mutational input case. Nevertheless, the philosophy is the same: to maximize entropy under the constraint of the appropriate macroscopics, and approximate the dynamics using the local equilibrium Ansatz.

4.7.2. Failure when selection on the genetic variance is strong

We also found that when selection on the genetic variance is very strong, 4|Nσ| ≫ 1, we also lose accuracy. In the electronic supplementary material, S1, we illustrate this situation for abrupt changes and gradual moves in the optimum trait value. The reason for the failure seems to be that when the valleys between the adaptive peaks are deep, the microscopic distribution approaches a stationary distribution very slowly, so that our central assumption that ψ is nearly stationary, fails. Again, a jump process seems to be a more accurate model than diffusion, which we are eager to apply for SS. We will come back to these explanations later.

4.8. Hysteresis

The quasi-equilibrium assumption will hold when the changes in the microscopic states are faster than the macroscopic changes. Because of the complexity of the adaptive landscape, a given macroscopic state can stand at different adaptive peaks. Although these peaks may be indistinguishable from the macroscopic point of view, they will have an effect on the rates of change of the latter. Because the transitions among different adaptive peaks may in general happen at different rates, then the final macroscopic state—after a peak shift—will depend on the particular microscopic configuration at the time of the jump. This is remarkable, because the stationary distribution is unique, and is determined solely by 〈A〉. An immediate implication is that the trajectory which the macroscopics take during evolution, depends on their previous history. That is, the dynamics show hysteresis. Thus, even when the microscopic variables are averaged out, there can be memory in the macroscopic dynamics.

In fact, we find that, out of equilibrium, a particular value of  does not always correspond to a unique value of 〈ν〉, which is the signature of hysteresis. Figure 8a shows an example where a trait evolves starting at the point a, following an optimum that increases towards the point b. After equilibrium has been reached, the optimum then moves back to the original value a; the other parameters are left unchanged. The trajectories in the space

does not always correspond to a unique value of 〈ν〉, which is the signature of hysteresis. Figure 8a shows an example where a trait evolves starting at the point a, following an optimum that increases towards the point b. After equilibrium has been reached, the optimum then moves back to the original value a; the other parameters are left unchanged. The trajectories in the space  are not the same for the forward and backwards processes. Although the predictions and the simulations do not agree (clearly, we are in the range where the adaptive peaks are well separated), both the actual model and the SM approximation show an equivalent qualitative response. In figure 8b the optimum moves slowly (as in figure 5) and afterwards, moves back to its original point. Because the optimum follows the same trajectory forward and backwards, the amount of hysteresis lower. Finally, in figure 8c both the optimum and selection against the genetic variance change abruptly (as in figure 7), and after equilibrium is attained, they abruptly change back to their original values. The rates of change of

are not the same for the forward and backwards processes. Although the predictions and the simulations do not agree (clearly, we are in the range where the adaptive peaks are well separated), both the actual model and the SM approximation show an equivalent qualitative response. In figure 8b the optimum moves slowly (as in figure 5) and afterwards, moves back to its original point. Because the optimum follows the same trajectory forward and backwards, the amount of hysteresis lower. Finally, in figure 8c both the optimum and selection against the genetic variance change abruptly (as in figure 7), and after equilibrium is attained, they abruptly change back to their original values. The rates of change of  and 〈ν〉 have different time scales, and hysteresis is enormous.

and 〈ν〉 have different time scales, and hysteresis is enormous.

Figure 8.

Hysteresis on the evolutionary dynamics of a trait that consists of n = 100 loci of equal effect. (a) Abrupt change in the optimum (Nβ =±12, Nσ = −15, Nλ = −1, Nμ = 0.7). (b) Moving optimum (Nβ = ±10, Nλ = −1, Nσ = −4, Nμ = 0.5; as in figure 3). (c) Abrupt change in the optimum and selection against the genetic variance (Nβ = ±12, Nσ = −1 → − 5, Nλ = −1, Nμ = 0.3; as in figure 7). The arrows indicate the direction of evolution, which start at the points s, ending at the point e, and then backwards, that is when the forces that changed are switched back. The black lines are the SM predictions, and the grey lines, averages from the simulations (population size N = 100; averages over 500 replicas). In all cases, the paths on both directions do not overlap, showing that the macroscopic states depend on the path.

The puzzling issue here is, why after averaging over the microstates, do the trajectories of the expectations show hysteresis? The question seems to be difficult to answer in detailed, mechanistic terms. But, we can understand it from the macroscopic phenomenology. As we showed and discussed above, the trait mean and the genetic variance can evolve more or less independently. Depending on the strength of the selection coefficients, which are the directional component towards the optimum—Nβ—and the strength of selection again the genetic variance—Nσ—the response will be quicker for one component or for the other (depending which one is effectively bigger). Thus the macroscopic that changes faster in one direction, will be also the one that changes faster in the other direction. For example, in figure 8a the optimum shifts. The standing genetic variance initially increases as the rare mutants become frequent and the trait mean increases. Eventually, this variance is rapidly consumed as the trait mean slows down relaxing to equilibrium. If we now consider the reverse process, shifting the optimum back to its original position, the story for the variance will be exactly the same: initial mutants will increase their representation, and the genetic variance increases. But although the genetic variance is taking the same path, the trait mean takes a mirror path: before it was characterized by a period of quick increase, followed by a slow increase. Now it takes a quick decrease followed by a slow decrease.

5. Discussion

The main question that we have addressed in this work is whether the SM approximation is valid in the challenging case of SS. In general, the maximum entropy distribution has been shown to match that of the exact model (the diffusion equation) at equilibrium, and it is straightforward to determine which macroscopics are—in principle—relevant for evolutionary change [21, appendix B]. This extension of our previous methodology has been applied to an important and classical theme in population and quantitative genetics: understanding the factors that generate quantitative variation and its evolution. In §1, we posed the problem which refers to the difficulties that appear in estimating the change of a complex trait. We also proposed three ways around to these complications, namely unequal allelic effects, averaging over the different deterministic paths and genetic drift. We have taken only the last two, for clarity, and assume equal effects to give a worst case scenario where microscopic irregularities are enhanced. In fact, an extension of the SM method to unequal effects involves a slightly different version of the generating function and per-locus equations (see appendices A,B). The approximations follow from the application of the central limit theorem, which does not require the same distribution at all loci, only independence between these loci (see appendix A).

We have been able to predict long-term changes of the expectations of the mean and variance of a trait, in the extreme situations when selection either changes abruptly, or gradually owing to a moving optimum. In each case the expected trait mean follows radically different paths, yet the expected genetic variance stays roughly constant. Unless there are changes in the selection against the genetic variance, its expectation seldom changes appreciably. Therefore, classical approaches of quantitative genetics, like the breeder's equation [1,50, ch. 20], where the constancy of the additive genetic variance is assumed, seem to describe fairly well the evolution of the trait mean, since the fluctuations owing to drift do not seem to affect critically this constancy of the expected genetic variance.