1. Introduction

Arguably, longitudinal research designs are the sine qua non for studying development and/or treatment effects among populations. Advantages include the ability to separate change over time within individuals (i.e., age effects) from differences among individuals at baseline (i.e., cohort effects) and the establishment of temporal relationships that allow for stronger causal interpretations (Diggle, Heagerty et al., 2002). While the number of longitudinal studies has increased dramatically over the last several decades, limited time and resources still inhibit the collection of these data, particularly among high-risk populations. This is especially true among urban, racial/ethnic minority youth, as adolescents in urban areas with greater socioeconomic disadvantage and residential instability may be more difficult to track and locate (Ribisl, Walton et al., 1996). This is a critical gap in the literature, as racial/ethnic minority youth disproportionately reside in metropolitan cities and are among the fastest growing segments of the U.S. population (U.S. Census Bureau, 2000; 2003).

Participant attrition is a leading threat to the validity of inference in longitudinal studies, as statistical power and the generalizability of findings depend on retaining a large, representative proportion of the original sample (Shadish, Cook et al., 2002). Unfortunately, attrition is inherent in almost every longitudinal study. However, its extent varies greatly; some studies retain as few as 44% of the original cohort while others retain more than 90% (Hansen, Tobler et al., 1990). In their seminal meta-analysis of 85 school-based substance abuse prevention cohort studies describing normative trends in attrition, Hansen and colleagues (1990) identified a clear linear relationship between participant retention and length of follow-up, reporting mean retention rates of 81% at 3 months, 78% at 6 months, 73% at 12 months, 72% at 24 months, and 68% at 36 months. They also noted substantial attrition of cohorts themselves: only 22% of cohorts were followed for as long as 2 years and 7% for 3 years. Thus, there is a clear need to retain participants early and follow them over several years.
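To make the reported trend concrete, the sketch below fits an ordinary least-squares line to the five mean retention rates quoted above. It is an illustration computed from the published group means only, not a reproduction of Hansen and colleagues' meta-analytic model, and the helper name `ols_fit` is our own.

```python
# Mean retention rates reported by Hansen et al. (1990), by months of follow-up.
months = [3, 6, 12, 24, 36]
retention = [81, 78, 73, 72, 68]  # percent of original cohort retained


def ols_fit(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


intercept, slope = ols_fit(months, retention)
print(f"retention ~ {intercept:.1f} + ({slope:.3f} * months)")
```

The fitted slope corresponds to roughly a third of a percentage point lost per month of follow-up. The interval-by-interval declines are uneven, however: retention falls 8 points between 3 and 12 months but only 5 points between 12 and 36 months, which is consistent with the emphasis on retaining participants early.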

Among treatment studies, retention rates have been somewhat more favorable. For example, Cotter and colleagues (2005) retained 90% of their young adult cohort participating in the developmental trends study over 7 years. Their sample included 177 clinic-referred boys with high rates of disruptive behavior disorders. The boys were 70% White, 41% low-income, and 53% resided in urban areas. Likewise, Haggerty and colleagues (2008) retained 86% of children whose parents were receiving methadone treatment over 2 years. These families were 78% White and 41% low-income, and all were receiving treatment in two Seattle-area methadone clinics. While such success is noteworthy, the distinction between treatment and prevention studies is important. Cohorts in treatment inherently have more frequent and/or regular contact with researchers, clinics, and the like while receiving services, despite perhaps being more physically and socially mobile (Scott, 2004). In contrast, young cohorts receiving school-based, universal preventive interventions generally provide limited personal information, have limited direct contact with researchers, and may be less invested in the project, especially as time progresses beyond the end of the intervention. Clearly, both types of studies must overcome considerable challenges to retain participants; however, important differences remain.

Ribisl and colleagues (1996) recommended eight strategies for tracking participants in longitudinal studies: (1) gather complete location information at baseline from the participant, friends, or relatives; (2) establish formal and informal relationships with public and private agencies; (3) establish a project identity; (4) emphasize the importance of tracking to project staff; (5) use the simplest and most economical tracking methods first, saving more extensive methods for participants who are difficult to find; (6) make involvement interesting and rewarding for participants; (7) expend the greatest amount of tracking effort at initial follow-up; and (8) customize tracking efforts to the individual participant’s situation and the study’s circumstances. While a number of studies have employed these strategies (e.g., Coen, Patrick et al., 1996; Cotter, Burke et al., 2002; Haggerty, Fleming et al., 2008; Sullivan, Rumptz et al., 1996), few have described their implementation alongside different data collection strategies or reported the total monetary cost associated with each.

Tracking and data collection among urban, low-income populations present unique challenges, as these populations tend to move more frequently (Lewis, 2007), have unreliable telephone numbers or email addresses (Frankel, Srinath et al., 2003; Rosston & Wimmer, 2000; Tarasuk, 2001), and may be forced out of the city by gentrification (Atkinson, 2004). Reaching adolescents within this already difficult context is even more complex. Public schools represent one of the easier avenues for tracking and collecting data from youth. However, high school dropout rates range from 50% to 80% in the largest metropolitan cities in the United States (Editorial Projects in Education, 2009). Thus, school-based efforts are likely to have significant attrition among the highest-risk youth, severely limiting the generalizability of study findings. Further, adolescents are less likely to have individual records in credit or other public databases, as many urban youth do not have a driver’s license (Preusser, Ferguson et al., 1998) and are too young to register to vote or to open the bank, cell phone, or credit accounts commonly used to track individuals. Challenges notwithstanding, cohort studies among such populations of youth are increasingly important, as a number of social, behavioral, and physical health problems cluster in urban, low-income areas (Arkes, 2007; Duncan, Duncan et al., 2002; Hill & Angel, 2005) and their unique etiology remains open to debate.

The current study reports the results of efforts to locate and survey participants in Project Northland Chicago (PNC), a longitudinal, group-randomized, controlled trial of an alcohol preventive intervention for multi-ethnic urban youth (Komro, Perry et al., 2004; Komro, Perry et al., 2008), 3 to 4 years after the end of the project’s intervention and evaluation activities. Successful follow-up of this cohort was important, as there are few longitudinal studies of urban, racial/ethnic minority youth and fewer still that include data on a full range of distal to proximal risk and protective factors (as available from the PNC study). The study also reports success and cost across three phases of survey administration: (1) U.S. mail, (2) school-based, and (3) courier service delivery. Comparisons across phases of completion are important, as they identify who in the cohort is reached only through more resource-intensive efforts. Specific contributions of this study include a presentation of several methods to locate and survey members of the PNC cohort and a description of the response bias related to selective attrition of those at highest risk and across different phases of data collection. The results also provide comparisons of cost and success across these methods. The lessons learned from this study may inform future efforts to track and collect data over time among high-risk populations of youth outside of the treatment context.

2. Methods

2.1 Sample

PNC focused on a cohort of students who were in the sixth grade in the 2002–2003 school year. These youth were enrolled in 61 Chicago public schools that were matched on ethnicity, poverty, mobility, and reading and math test scores and randomized, along with their surrounding community areas, to intervention or control conditions. Schools that participated in the study were located throughout Chicago and had demographic characteristics similar to those of students in the Chicago school district. Students continued to participate in intervention and evaluation activities (e.g., repeated annual student surveys) through the 2004–2005 school year, when they were completing the 8th grade. A complete description of the study design, intervention, evaluation and outcomes is provided elsewhere (Komro, Perry et al., 2004; Komro, Perry et al., 2008).

Repeated cross-sectional surveys, with an embedded cohort of youth, were administered in study schools during the fall of 2002, spring of 2003, spring of 2004, and spring of 2005, when the students were in 6th, 7th and 8th grades. Surveys were administered by three-person teams of trained university-based research staff interviewers using standardized protocols. Sixty-one schools and 4,259 students participated in the baseline survey (beginning of 6th grade), 59 schools and 4,240 students participated in the first follow-up survey (end of 6th grade), 60 schools and 3,778 students participated in the second follow-up survey (end of 7th grade), and 59 schools and 3,802 students participated in the third follow-up survey (end of 8th grade). The cohort follow-up rate from baseline to first follow-up was 89%, from baseline to second follow-up was 67% and from baseline to third follow-up was 61%. Loss to follow-up occurred mainly due to two schools closing and students leaving the other study schools. A total of 5,812 students completed one or more of these study surveys: 2,373 completed four surveys, 808 completed three surveys, 1,534 completed two surveys and 1,097 completed one survey.

The study sample was 50% boys, ethnically diverse (43% Black, 29% Hispanic, 13% White, and 15% other race/ethnicity), and of low socioeconomic status (72% received free or reduced-price lunch). Less than half the students lived with both of their parents (47%), and the majority (74%) reported English as the primary language spoken at home. The mean age when the students were beginning 6th grade (i.e., at baseline) was 11.85 years (SD=0.63). For the long-term follow-up of this cohort, we attempted to survey, during their anticipated 12th grade year, the 5,711 participants who had completed one or more previous PNC study surveys. This number excluded students who were deceased (N=17), incarcerated (N=81), or identified as duplicates in the cohort list (N=3). Follow-up consisted of mail-, Internet- and school-based surveys administered between October 2008 and September 2009. At the time the follow-up began, 33% of the cohort was over age 18.
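The eligible sample for the long-term follow-up can be reconstructed from the counts reported in this section. The short sketch below (variable names are illustrative) checks that the survey-completion categories sum to the 5,812 students ever surveyed and that removing the three exclusion groups leaves 5,711. It also computes the share of ever-surveyed students with three or four middle-school surveys; that share uses the 5,812 denominator because the survey counts of excluded students are not reported.

```python
# Middle-school survey completion (6th-8th grade), from Section 2.1:
# number of surveys completed -> number of students.
surveys_completed = {4: 2373, 3: 808, 2: 1534, 1: 1097}
ever_surveyed = sum(surveys_completed.values())

# Students removed before the long-term follow-up.
exclusions = {"deceased": 17, "incarcerated": 81, "duplicate record": 3}
eligible = ever_surveyed - sum(exclusions.values())

# Share of ever-surveyed students with 3 or 4 middle-school surveys.
frequent_share = (surveys_completed[4] + surveys_completed[3]) / ever_surveyed

print(ever_surveyed, eligible, f"{frequent_share:.1%}")
```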

2.2 Tracking Strategy

Upon completion of the PNC trial, we began a four-step tracking procedure in April 2006, one year after the last contact with the study cohort (8th grade survey, spring 2005). First, we provided Chicago Public Schools (CPS) with a list of the PNC student cohort and asked them for updated parent addresses. Second, postcards were mailed to the cohort and change of address information was requested from the post office; for students for whom CPS did not have updated contact information, we used the last address on file. Third, for those whose postcard was returned with no forwarding address, we contracted with the University of Minnesota’s Health Survey Research Center (HSRC) to perform intensive tracking using their subscription to Acxiom Insight (Acxiom Corporation, 2010). Searches were performed using the parent and student names and the most recent address we provided, focusing first on people with the same name in the state of Illinois and then considering the entire U.S. if the search yielded no results. Lastly, postcards were mailed to new addresses identified by HSRC to confirm the address. These procedures were repeated in April 2007 to maintain annual contact with the study cohort and keep addresses current.
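The tracking procedure is essentially a cascade that escalates only when a cheaper step fails, in line with Ribisl and colleagues' advice to use the simplest methods first. The Python sketch below expresses that logic under stated assumptions: the record fields (`cps_address`, `last_known`, `postcard_returned`, `forwarding`, `names`) are hypothetical, and `search` is a placeholder for a commercial database query. It does not represent the interface of the Acxiom product used by HSRC.

```python
from typing import Callable, Optional

# Hypothetical stand-in for a people-search query: (names, scope) -> address or None.
SearchFn = Callable[[list, str], Optional[str]]


def mailing_address(record: dict) -> str:
    """Steps 1-2: prefer the updated CPS address, else the last address on file."""
    return record.get("cps_address") or record["last_known"]


def intensive_search(names: list, search: SearchFn) -> Optional[str]:
    """Step 3: search Illinois first, widening to the whole U.S. only if needed."""
    for scope in ("IL", "US"):
        found = search(names, scope)
        if found:
            return found
    return None


def track(record: dict, search: SearchFn) -> Optional[str]:
    """Return the address to use going forward, or None if the student is unlocated."""
    if not record.get("postcard_returned"):
        return mailing_address(record)  # postcard not returned: contact assumed
    if record.get("forwarding"):
        return record["forwarding"]  # post office change-of-address information
    # Step 4 (a confirmation postcard to any new address) would follow here.
    return intensive_search(record["names"], search)
```

Keeping the expensive database search behind the cheaper checks means it runs only for the minority of students whose postcards come back undeliverable.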

2.3 Survey Methods

We considered three survey methods for our primary data collection strategy: (1) school-based, (2) telephone, and (3) mail- and Internet-based. A mail- and Internet-based strategy was selected for several important reasons: (1) a school-based survey was not practical, as the majority of the study cohort had spread out to over 130 high schools in Chicago and beyond; (2) school-based surveys suffer from nonresponse bias due to exclusion of school dropouts, absent students, and schools that refuse to participate (Johnston, O'Malley et al., 2002), and racial/ethnic minority youth have higher dropout rates (NCES, 2005); (3) typical response rates of telephone interviews with adolescents range from 49% to 65% (Klein, Rossbach et al., 2001; Klein, Havens et al., 2005; Lee, Arheart et al., 2005; Sly, Hopkins et al., 2001; Sly, Trapido et al., 2002; Thomson, Siegel et al., 2005), and low-income households are less likely to have consistent telephone service (Frankel, Srinath et al., 2003; Rosston & Wimmer, 2000; Tarasuk, 2001); (4) underreporting of drug use among adolescents and adults is greater in telephone surveys than in self-administered surveys (Aquilino, 1994; Gfroerer & Hughes, 1991; Moskowitz & Pepe, 2004), especially among ethnic minority youth (Aquilino, 1994; Aquilino & Losciuto, 1990); (5) several studies of pre-adolescents to young adults have found that reporting of sensitive behaviors does not appear to differ by Internet or paper survey mode (Mangunkusumo, Moorman et al., 2005; McCabe, 2004; McCabe, Boyd et al., 2002; McCabe, Couper et al., 2006); and (6) health questionnaires via Internet were positively evaluated by adolescents ages 13 to 17 (Mangunkusumo, Moorman et al., 2005).
Given the practical limits on recruiting more than 130 schools (and the inevitable loss of schools whose principals decline), together with the threats of nonresponse bias, low response rates, and underreporting associated with school-based and telephone surveys, we chose mail- and Internet-based administration as our primary data collection strategy. However, we incorporated school- and courier service-based efforts in subsequent phases to maximize our response rate.

In Phase 1 of the data collection (October 2008–April 2009), we implemented a mail- and Internet-based survey with postcard and telephone follow-up reminders for non-responders, using procedures based on previous Internet- and mail-based survey research with youth (McCabe, Boyd et al., 2002; Schonlau, Asch et al., 2003). Using current addresses obtained from CPS and HSRC, we mailed parents a consent letter six weeks before survey implementation began; the letter described the survey and asked them to return a postage-paid, addressed postcard or call our toll-free telephone number if they did not want their son or daughter to participate. For students whose parents did not refuse, we mailed a survey packet directly to the student, inviting him or her to participate via the Internet or mail. The packet included: (1) an assent form with instructions for the student to read before completing the survey, (2) a paper copy of the survey, (3) instructions on how to access the survey via the Internet with a unique passcode, and (4) a postage-paid, addressed return envelope. For non-respondents, we mailed additional materials, including: (1) a reminder postcard one week after the initial survey packet, (2) a reminder letter with another copy of the survey packet four weeks after the initial packet, and (3) additional reminder postcards five and eight weeks after the initial packet. Nine weeks after the initial survey packet, reminder calls were made to non-respondents with available telephone numbers. Return address service was requested from the post office on all mailings, and materials were re-sent as needed. Students whose mailings were returned with no forwarding address were included in additional HSRC tracking efforts conducted in Phase 3.
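The Phase 1 contact schedule is easier to audit when laid out on a calendar. The sketch below computes contact dates from a single packet-mailing date. The start date used here is illustrative only and is not reported in the study; in practice, each reminder after the initial packet went only to students who had not yet responded.

```python
from datetime import date, timedelta

# Week offsets relative to the initial survey packet (Phase 1 protocol).
# Contacts after week 0 were sent only to non-respondents.
SCHEDULE_WEEKS = [
    (-6, "parent consent/opt-out letter"),
    (0, "initial survey packet"),
    (1, "reminder postcard"),
    (4, "reminder letter with replacement survey packet"),
    (5, "reminder postcard"),
    (8, "reminder postcard"),
    (9, "reminder telephone call (if a number is available)"),
]


def phase1_calendar(packet_date: date) -> list:
    """Return (date, contact) pairs for one mailing wave."""
    return [(packet_date + timedelta(weeks=w), what) for w, what in SCHEDULE_WEEKS]


for when, what in phase1_calendar(date(2008, 10, 6)):  # illustrative start date
    print(when.isoformat(), what)
```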

In Phase 2 of the data collection (April–June 2009), the survey was administered or distributed to non-responders enrolled in Chicago public high schools. First, we obtained updated enrollment information for our cohort from CPS. For schools with 30 or more cohort members enrolled (N=28 schools), teams of Chicago-based research staff administered the survey at a time and location specified by the school administration. Students were directed to a single location and the survey was administered using standardized protocols. Survey packets were distributed (by our research team or school staff) to students who were absent on the day of the administration, with instructions to complete the survey at home via the Internet or mail.

For schools with fewer than 30 cohort members enrolled (N=110 schools), we shipped a package by courier to each school’s principal and requested that school staff distribute the surveys to the specified students. The package included: (1) a letter describing the purpose and importance of the project, (2) copies of the CPS and Research Review Board letters of support/approval, (3) a list of cohort students enrolled in the school, (4) survey packets for each student, (5) a courier service return envelope and addressed label, and (6) a $25 Starbucks gift card. Schools were asked to use the courier materials provided to return any surveys they were not able to distribute. Telephone calls were made to each school to confirm receipt of the package and distribution of the surveys.
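The Phase 2 allocation rule reduces to a single enrollment threshold per school. The sketch below applies that rule to a dictionary of cohort enrollment counts; the school names and counts in the example are hypothetical. Applied to the study's enrollment data, the same rule produced 28 on-site administrations and 110 courier packages, 138 schools in all.

```python
THRESHOLD = 30  # cohort members enrolled; at or above this, staff administered on site


def phase2_plan(enrollment: dict) -> dict:
    """Split schools into on-site administration vs. a courier package to the principal."""
    plan = {"on_site": [], "courier": []}
    for school, n_cohort in enrollment.items():
        plan["on_site" if n_cohort >= THRESHOLD else "courier"].append(school)
    return plan


# Hypothetical enrollment counts for three schools.
print(phase2_plan({"School A": 45, "School B": 30, "School C": 7}))
```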

Phase 3 (July–October, 2009) involved additional tracking of non-responders by HSRC and shipping the survey packets via a courier service. Students who: (1) did not have valid addresses in Phase 1, (2) were not enrolled in Chicago public schools, or (3) were in schools for which we could not confirm survey distribution were targeted in this phase. HSRC tracked each of these students and confirmed or updated their home address. Then, another copy of the survey packet was shipped to each.

In all phases, respondents were mailed $30 cash after completion of the survey. Parent consent, student assent and data collection procedures were approved by the University of Florida Institutional Review Board and the Chicago Public Schools Research Review Board. A Certificate of Confidentiality was obtained from the U.S. Department of Health and Human Services to further protect the confidentiality of the student responses.

3. Results

3.1 Tracking

In April 2006, we assumed contact with 87% (N=5,035) of the cohort via postcards mailed to the students’ homes, with assumed contact defined as a student’s postcard not being returned by the post office (hereafter described as “contact” for simplicity). HSRC performed detailed tracking for 717 students (12% of the cohort) among those not contacted and identified 231 new addresses. Postcards mailed to these addresses achieved 178 additional contacts. In April 2007, we achieved contact via mailed postcard with 90% of the cohort. HSRC performed detailed tracking for the 481 students (8%) without current addresses; no new information was found for 37 of them.

During Phases 1 and 2 of our efforts, we identified 1,715 (30.0%) students who required further tracking by HSRC. These were students who did not have valid mailing addresses (N=406), were not enrolled in school (N=1,124), were enrolled in a school that refused participation (N=43), or were enrolled in a school for which survey distribution was not confirmed (N=142). No new information was found for 145 (8%) of these, and new addresses were identified for 931 (52%). Contact was achieved with 64% (N=1,104) of those tracked. Overall, we achieved contact with 89% (N=5,100) of the cohort.
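The percentages in this paragraph use two different denominators: the eligible cohort (N=5,711) and the 1,715 students referred for tracking. The short check below, with illustrative variable names, makes each denominator explicit.

```python
eligible = 5711  # eligible long-term follow-up cohort

# Reasons students were referred to HSRC during Phases 1 and 2.
needs_tracking = {
    "no valid mailing address": 406,
    "not enrolled in school": 1124,
    "school refused participation": 43,
    "distribution not confirmed": 142,
}
tracked = sum(needs_tracking.values())
contacted_after_tracking = 1104
overall_contact = 5100

print(f"tracked: {tracked} ({tracked / eligible:.1%} of eligible cohort)")
print(f"contact among tracked: {contacted_after_tracking / tracked:.1%}")
print(f"overall contact: {overall_contact / eligible:.1%} of eligible cohort")
```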

3.2 Survey Completion

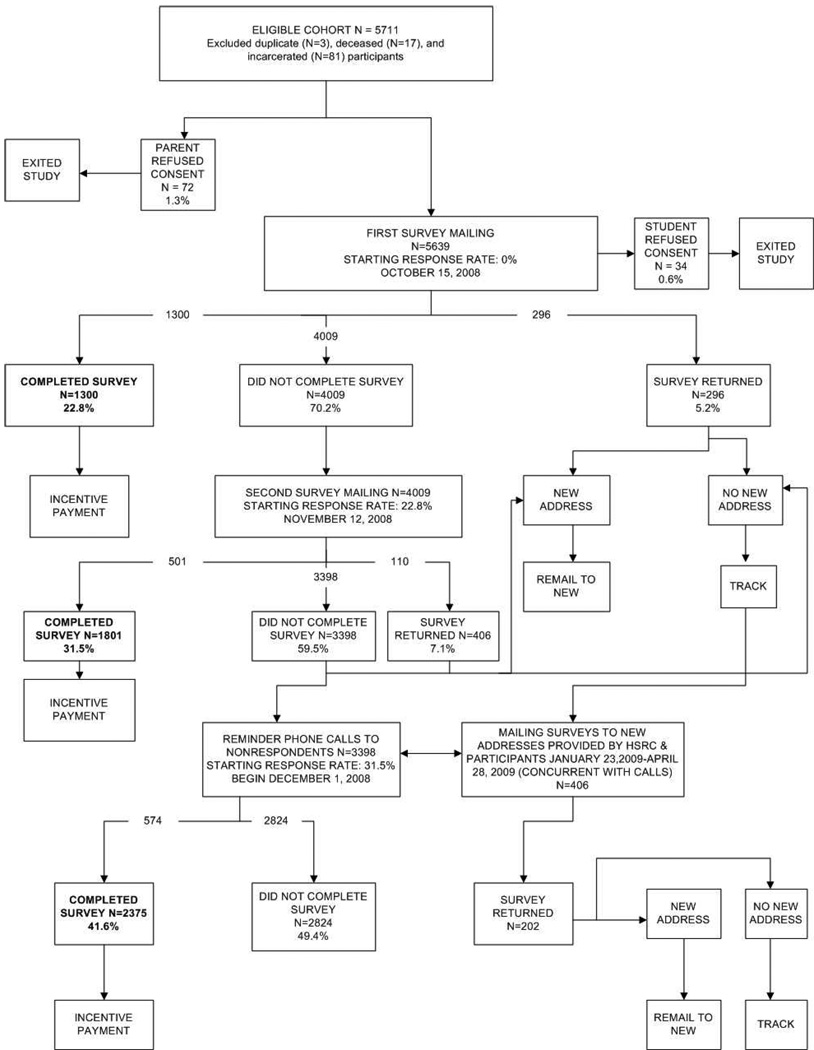

Seventy-two parents (1.3%) refused consent for their child’s participation, and 34 students (0.6%) formally declined participation. In Phase 1, 2,375 (41.6%) students completed the follow-up survey (Figure 1): 1,300 (22.7%) after the first survey mailing, 501 (8.8%) after the second survey mailing, and 574 (10.1%) after the reminder telephone calls. Four hundred and six students did not have valid addresses and required additional tracking by HSRC in Phase 3.

Figure 1. Phase 1 data collection efforts.

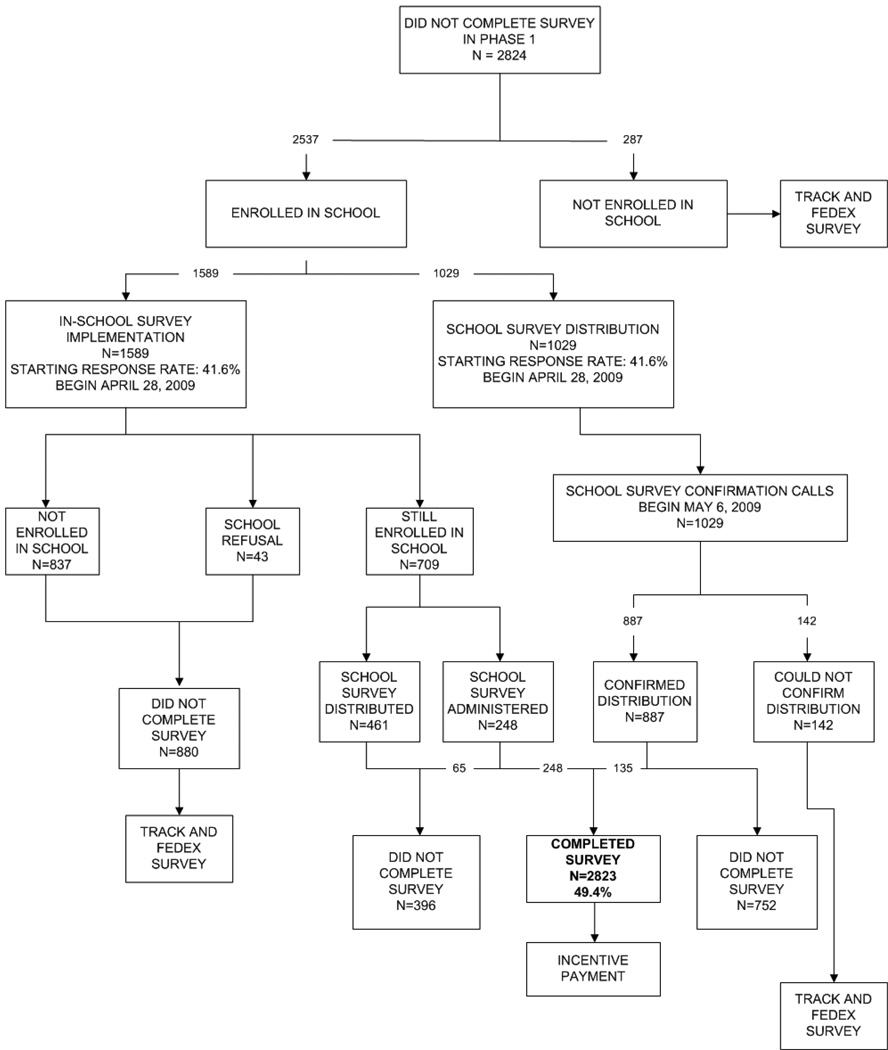

Phase 2 targeted 2,824 students who had not responded to the survey by the end of Phase 1. These school-based efforts yielded 448 (7.8%) completed surveys: 248 students (4.3%) completed the survey in school and 200 (3.5%) completed surveys distributed to them by research or school staff (Figure 2).

Figure 2. Phase 2 data collection efforts.
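The text does not spell out how the 2,824 Phase 2 targets were derived. One accounting that reproduces the figure exactly subtracts Phase 1 completers, both types of refusal, and the 406 students without valid addresses from the eligible cohort; the sketch below assumes that accounting. It also expresses the Phase 2 yield relative to the students targeted rather than to the full eligible cohort.

```python
eligible = 5711
phase1_completed = 2375
parent_refusals = 72
student_refusals = 34
no_valid_address = 406  # deferred to HSRC tracking in Phase 3 (assumed excluded)

phase2_targets = (eligible - phase1_completed - parent_refusals
                  - student_refusals - no_valid_address)
phase2_completed = 248 + 200  # in-school administration + distributed packets

print(phase2_targets, phase2_completed, f"{phase2_completed / phase2_targets:.1%}")
```

On that basis, roughly 16% of the students targeted in Phase 2 completed the survey, compared with the 7.8% reported relative to the full eligible cohort.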

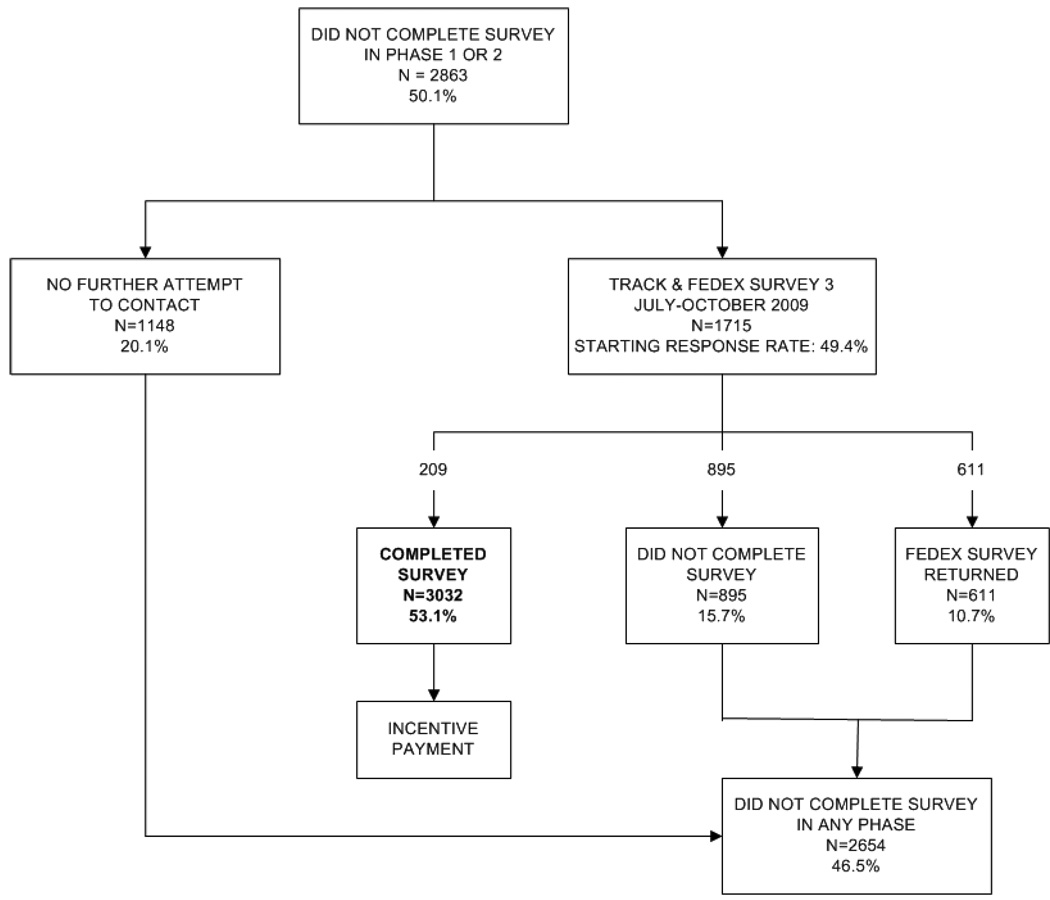

Phase 3 targeted 1,715 students who were not successfully located in Phases 1 or 2 (Figure 3). No further attempt was made to contact 1,148 non-responders who did not complete the survey after 3 previous opportunities (2 mailed to their home in Phase 1, 1 distributed at school in Phase 2). Following additional tracking by HSRC and courier service delivery, 209 students (3.7%) completed the survey. The courier was not able to deliver 611 (10.7%) of the students’ survey packets. There was no further attempt to contact these youth.

Figure 3. Phase 3 data collection efforts.

Overall, 3,032 (53.1%) of the eligible cohort completed the long-term follow-up: 2,375 (41.6%) in Phase 1, 448 (7.8%) in Phase 2, and 209 (3.7%) in Phase 3. The cost per completed survey was $118 in Phase 1, $166 in Phase 2, and $440 in Phase 3 (Table 1). There were no significant differences in phase of completion by treatment condition, socioeconomic status, family composition, race/ethnicity, or violent behavior at baseline (Table 1). However, boys were underrepresented among completers in every phase (the cohort was 50% boys), making up 31% (Phase 3) to 36% (Phase 2) of completers. Youth who completed in Phase 3 reported higher levels of alcohol use, marijuana use, and delinquent behavior at baseline, and higher alcohol and marijuana use at age 17–18, than those completing in Phases 1 and 2 (Table 1).
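All of the phase-level percentages above share the eligible cohort (N=5,711) as their denominator, so they sum to the overall response rate. The sketch below, with illustrative variable names, makes that explicit and computes the percentage-point contribution of Phases 2 and 3 together.

```python
eligible = 5711
completed = {"Phase 1": 2375, "Phase 2": 448, "Phase 3": 209}

total = sum(completed.values())
for phase, n in completed.items():
    print(f"{phase}: {n} ({n / eligible:.1%} of eligible cohort)")
print(f"Overall: {total} ({total / eligible:.1%})")

# Percentage-point gain from the school-based and courier phases.
gain = (completed["Phase 2"] + completed["Phase 3"]) / eligible
print(f"Gain from Phases 2-3: {gain * 100:.1f} percentage points")
```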

Table 1. Comparison of those completing the survey in each phase of data collection.

| | Phase 1 (n = 2,375) | | Phase 2 (n = 448) | | Phase 3 (n = 209) | | | |
|---|---|---|---|---|---|---|---|---|
| Characteristics | N | % | N | % | N | % | χ2 | P-value |
| Demographics | | | | | | | | |
| Treatment condition | 1094 | 46.1 | 198 | 44.2 | 85 | 40.7 | 3.13 | 0.209 |
| Male | 813 | 34.2 | 161 | 35.9 | 64 | 30.6 | 6.33 | 0.042 |
| Low socioeconomic status | 1265 | 53.3 | 216 | 48.2 | 98 | 46.9 | 4.56 | 0.335 |
| Two-parent household¹ | 990 | 41.7 | 163 | 36.4 | 73 | 34.9 | 0.97 | 0.616 |
| Race/Ethnicity | | | | | | | | |
| Hispanic | 601 | 25.3 | 138 | 30.8 | 49 | 23.4 | 1.36 | 0.507 |
| White | 352 | 14.8 | 52 | 11.6 | 35 | 16.7 | 4.21 | 0.122 |
| Black² | 1002 | 42.2 | 202 | 45.1 | 87 | 41.6 | - | - |
| Baseline Behaviors (6th grade; Fall 2002) | Mean | SE | Mean | SE | Mean | SE | F | P-value |
| Alcohol use³ | 5.41 | 0.04 | 5.48 | 0.09 | 5.79 | 0.13 | 4.28 | 0.014 |
| Marijuana use⁴ | 2.11 | 0.02 | 2.02 | 0.04 | 2.31 | 0.06 | 7.84 | <0.001 |
| Violent behavior⁵ | 9.68 | 0.07 | 9.72 | 0.18 | 9.92 | 0.27 | 0.42 | 0.660 |
| Delinquent behavior⁶ | 9.27 | 0.05 | 9.10 | 0.12 | 9.66 | 0.18 | 3.49 | 0.031 |
| Age 17–18 Behaviors (Fall 2008/Spring 2009) | | | | | | | | |
| Alcohol use³ | 8.07 | 0.09 | 8.44 | 0.21 | 10.01 | 0.32 | 17.57 | <0.001 |
| Marijuana use⁴ | 3.49 | 0.07 | 3.49 | 0.15 | 4.81 | 0.23 | 15.88 | <0.001 |
| Cost per completed survey | | | | | | | | |
| Printing | $22.88 | | $19.82 | | - | | | |
| Postage/Courier service | $7.18 | | $13.67 | | $90.44 | | | |
| Database tracking | $6.34 | | $12.42 | | $135.27 | | | |
| Participant incentives | $30.00 | | $30.00 | | $30.00 | | | |
| Web survey hosting | $1.05 | | $0.99 | | $8.21 | | | |
| Survey processing/scanning | $4.22 | | $4.38 | | $4.54 | | | |
| Chicago-based staff | - | | $33.79 | | - | | | |
| University personnel | $46.39 | | $50.47 | | $171.95 | | | |
| Total | $118.06 | | $165.54 | | $440.41 | | | |

¹ Referent category is all other family compositions.

² Referent category for race/ethnicity comparisons.

³ 5-item scale where higher scores indicate more alcohol use (Range 5–33).

⁴ 2-item scale where higher scores indicate more marijuana use (Range 2–14).

⁵ 6-item scale where higher scores indicate more violent behavior (Range 6–18).

⁶ 7-item scale where higher scores indicate more delinquent behavior (Range 7–21).
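Because each phase's total in Table 1 is the sum of its line items, the cost panel can be checked and extended with a few lines of Python. The sketch below recomputes the per-survey totals and then, assuming each per-survey figure is the phase's total cost divided by its number of completers, multiplies back to approximate total spending and an overall average cost per completed survey. These derived totals are our own arithmetic, not figures reported by the study.

```python
# Cost per completed survey by line item (Table 1); absent items are omitted.
costs = {
    "Phase 1": {"printing": 22.88, "postage/courier": 7.18, "database tracking": 6.34,
                "incentive": 30.00, "web hosting": 1.05, "processing": 4.22,
                "university personnel": 46.39},
    "Phase 2": {"printing": 19.82, "postage/courier": 13.67, "database tracking": 12.42,
                "incentive": 30.00, "web hosting": 0.99, "processing": 4.38,
                "Chicago-based staff": 33.79, "university personnel": 50.47},
    "Phase 3": {"postage/courier": 90.44, "database tracking": 135.27,
                "incentive": 30.00, "web hosting": 8.21, "processing": 4.54,
                "university personnel": 171.95},
}
completes = {"Phase 1": 2375, "Phase 2": 448, "Phase 3": 209}

per_survey = {phase: round(sum(items.values()), 2) for phase, items in costs.items()}
# Assumption: per-survey cost x completers approximates each phase's total spending.
total_spend = sum(per_survey[p] * completes[p] for p in completes)
overall_avg = total_spend / sum(completes.values())

print(per_survey)
print(f"approximate total: ${total_spend:,.0f}; average per complete: ${overall_avg:.2f}")
print(f"Phase 3 vs. Phase 1 cost ratio: {per_survey['Phase 3'] / per_survey['Phase 1']:.1f}x")
```

Under this assumption, completed surveys account for roughly $447,000 in total, an average of about $147 each, and each Phase 3 completion cost about 3.7 times as much as a Phase 1 completion.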

We elected to target all youth who completed at least one previous PNC survey, rather than focusing only on those with more frequent (i.e., those who completed 3 or 4 PNC surveys while in middle school) or recent (i.e., those who completed a survey in 8th grade) contact. Response rates were higher among these select groups of youth: 59% of students who completed 3 or 4 of the PNC surveys while in middle school, and 58% of those who completed a survey in 8th grade, completed the long-term follow-up, compared with 53.1% of the eligible cohort overall.

3.3 Attrition

Table 2 presents a comparison of those completing the follow-up survey and those lost to follow-up. There were no significant differences between completers and attriters with respect to experimental condition, socioeconomic status, or marijuana use at baseline. However, students who reported higher levels of alcohol use, violence, and delinquent behavior at baseline (beginning of 6th grade) were less likely to complete the survey. Girls were more likely to complete the survey than boys, students from two-parent households were more likely to complete than those from other family compositions, White students were more likely to complete than Hispanic and African American students, and African American students were more likely to complete than Hispanic students.

Table 2. Comparison of those completing the survey (n=3032) and those lost to follow-up (n=2654) at baseline (6th grade; Fall 2002).

| | Completers | | Attriters | | | |
|---|---|---|---|---|---|---|
| Characteristic | N | % | N | % | χ2 | P-value |
| Treatment condition | 1378 | 45.4 | 1208 | 45.5 | 2.34 | 0.126 |
| Male | 1039 | 34.3 | 1085 | 40.9 | 46.07 | <0.001 |
| Low socioeconomic status | 1578 | 52.0 | 1354 | 51.0 | 2.06 | 0.357 |
| Two-parent household¹ | 1226 | 40.4 | 1003 | 37.8 | 14.90 | 0.037 |
| Race/Ethnicity | | | | | | |
| Hispanic | 788 | 26.0 | 849 | 32.0 | 6.13 | 0.013 |
| White | 439 | 14.5 | 290 | 10.9 | 15.03 | <0.001 |
| Black² | 1291 | 42.6 | 1188 | 44.8 | - | - |
| Baseline behaviors | Mean | SE | Mean | SE | t | P-value |
| Alcohol use³ | 5.44 | 0.03 | 5.64 | 0.04 | 3.64 | <0.001 |
| Marijuana use⁴ | 2.11 | 0.02 | 2.15 | 0.02 | 1.65 | 0.100 |
| Violent behavior⁵ | 9.70 | 0.07 | 10.15 | 0.08 | 4.45 | <0.001 |
| Delinquent behavior⁶ | 9.28 | 0.04 | 9.73 | 0.05 | 6.69 | <0.001 |

¹ Referent category is all other family compositions.

² Referent category for race/ethnicity comparisons.

³ 5-item scale where higher scores indicate more alcohol use (Range 5–33).

⁴ 2-item scale where higher scores indicate more marijuana use (Range 2–14).

⁵ 6-item scale where higher scores indicate more violent behavior (Range 6–18).

⁶ 7-item scale where higher scores indicate more delinquent behavior (Range 7–21).

Students who were incarcerated (N=81) were more likely to be boys (χ2 (1) = 39.7, p < .001) and African American (χ2 (5) = 14.7, p = 0.012), to have higher levels of violent (t (51) = 3.85, p < 0.001) and delinquent behaviors (t (50.8) = 4.28, p < .0001) at baseline, and to live in households without both parents (χ2 (1) = 6.70, p = .01). There were no significant differences in incarceration with respect to experimental condition, socioeconomic status, or drug use. Among cohort members who were deceased, there were no significant differences across sociodemographic characteristics, drug use, or violent and delinquent behaviors, although these cases were few (N=17).

4. Discussion & Lessons Learned

Overall, we achieved annual contact with 89% of this high-risk cohort from the end of the PNC intervention and evaluation activities through the long-term follow-up efforts presented here. Fifty-three percent of the cohort responded to the survey, most of them in Phase 1 of our data collection. Additional school-based and courier-delivery efforts increased our response rate by 11.5 percentage points (from 41.6% to 53.1%). The cost per completed survey increased dramatically across phases: $118 in Phase 1, $166 in Phase 2, and $440 in Phase 3, not inclusive of personnel costs for the research team. Participants who completed in Phase 3 reported greater substance use and delinquent behaviors at baseline and more alcohol and marijuana use at age 17–18 compared to those completing in the first two phases of data collection.

This study illustrates that it is possible to locate high-risk, urban young adults, even after minimal contact in preceding years. The postcards mailed annually following the completion of the PNC intervention and evaluation efforts were a fruitful and inexpensive way to track the majority of the cohort. Approximately 10% of the cohort was lost to follow-up each year and required more intensive tracking. HSRC tracked these students using student and parent names, addresses, and telephone numbers. Our tracking might have been more successful with additional information, such as email addresses and contact information for a few close friends and/or family members (Haggerty, Fleming et al., 2008). However, this information was not available to us and represents a missed opportunity during our 8th grade PNC data collection.

The mixed-mode strategy employed in this study yielded a response rate comparable to or better than those of the alternative strategies we considered. Response rates to telephone interviews with adolescents typically range from 49% to 65% (Klein, Rossbach et al., 2001; Klein, Havens et al., 2005; Lee, Arheart et al., 2005; Sly, Hopkins et al., 2001; Sly, Trapido et al., 2002; Thomson, Siegel et al., 2005) and are becoming harder to achieve with the spread of caller identification and cell phone use (Moskowitz & Pepe, 2004). Relying on this survey mode would have been detrimental to this study, as valid, consistently active telephone numbers were available for very few (8%) members of our cohort. Response rates to mail-based surveys vary greatly, with some studies achieving rates as low as 10% (Shih & Fan, 2008) and others as high as 80% (Bachman, Johnston et al., 1996). We achieved a 42% response rate with our mail-based efforts alone. Lastly, cohort follow-up rates for in-school surveys are similar to what we achieved, even among studies that had annual in-school contact. For example, during the PNC trial, a 61% cohort response rate was observed after annual, in-school surveys from 6th to 8th grade (Komro, Perry et al., 2008). Likewise, Sloboda and colleagues (2009) observed a 54% cohort response rate after annual, in-school surveys from 7th to 11th grade among their sample of nearly 20,000 adolescents from 6 metropolitan cities. Treatment studies have had more favorable cohort retention rates (e.g., Cotter, Burke et al., 2005; Haggerty, Fleming et al., 2008). However, the nature and frequency of contacts with participants are fundamentally different in selected, treatment interventions than in universal, preventive interventions such as the one reported here.
Given an approximately 50% dropout rate in Chicago (Chicago Public Schools Office of Performance, 2010) and the experience of other researchers, it is unlikely that we would have achieved a better response rate had we attempted in-school administration alone for this follow-up or been able to administer the survey annually in schools.

Our decision to attempt to survey all students who had completed at least one PNC survey in 6th to 8th grade was methodologically sound but ambitious; a case could have been made for targeting only those students with whom we had more frequent or recent contact (e.g., those who completed 3 or 4 of the study surveys, or a survey in 8th grade). These students may have been easier to track and more likely to respond to the survey, as they may have been more invested in the project. This hypothesis was supported: nearly 60% of those who completed 3 or 4 of the prior surveys, or who completed a survey in 8th grade, completed the long-term follow-up. Future efforts with more limited resources may wish to focus on those with more frequent or recent contact; however, this should be weighed against the bias introduced by deliberately excluding participants who may be at higher risk.

The option for students to complete the survey on the Internet was provided because of previous positive evaluations of Internet-based health surveys by adolescents (Mangunkusumo, Moorman et al., 2005) and the widespread availability of computer and Internet access to students at school. However, only 17% (n=530) of the students who completed the follow-up survey did so on the Internet. Thus, while Internet-based surveys may be more convenient and considerably less expensive for researchers to implement, barriers to computer and Internet access and use remain among low-income, urban young adults (Eamon, 2004; Sun, Unger et al., 2005) and influence their preference for completing surveys on paper. Until computer and Internet access is more widespread in the homes of urban, low-income populations, survey resources may be better spent elsewhere.

This and other studies (Coen, Patrick et al., 1996; Cotter, Burke et al., 2002; Haggerty, Fleming et al., 2008; Sullivan, Rumptz et al., 1996) demonstrate that frequent, high-quality contact is necessary to overcome attrition among the highest-risk youth. Analyses in the present study revealed that those who completed the survey in later phases of administration and those lost to follow-up were higher risk, reporting greater levels of substance use, violence, and delinquency at baseline. Further, we were unable to pursue 81 students in our cohort who were known to be incarcerated. Attrition of higher-risk participants is common in longitudinal studies (Scott, 2004). However, researchers are often limited by time and funding in the extent to which they can attempt to locate the most difficult-to-find participants. Some studies suggest that more than 20 contact attempts may be needed (Cotter, Burke et al., 2005; Haggerty, Fleming et al., 2008; Hansten, Downey et al., 2000). In practice, this must be weighed against the financial costs of these more intensive efforts. For example, in a treatment study of parents who had received methadone treatment for opiate addiction and their children, Haggerty and colleagues (2008) succeeded in locating approximately 90% of their sample and having them complete a follow-up survey 10 years after the conclusion of the intervention. They used two phases of tracking: a “universal” phase, similar to that used in the present study, and a second “tailored” phase that involved collaboration with the Washington State Departments of Social and Health Services and of Corrections, methadone clinics, and other study participants, as well as home or in-person visits by research staff. While they did not provide an estimate of cost, such tracking approaches clearly entail considerable expense.
However, because those most likely to be lost to follow-up can be readily identified at the beginning of a study, two steps would support successful tracking for follow-up efforts that may not yet be planned or funded: collecting more detailed personal information (such as date of birth, address, telephone number, E-mail address, contact information for friends and other family members, Social Security number, or driver's license number) at the outset and at the known conclusion of longitudinal studies, and implementing more intensive tracking efforts among this higher-risk subset of the cohort throughout the study.

One key limitation of the present study, shared by other studies that use the U.S. Postal Service for tracking and follow-up, deserves consideration: defining a successful contact as one in which the mail piece is not returned may lead to an overestimate of success. Delivery problems were noted by our study participants and have been the subject of much concern in Chicago communities (Hope, 2007). However, we were unable to determine the extent of this problem. Standard mail service offers no way to confirm or ensure delivery; thus, relying on returned mail to judge the accuracy of contact information carries inherent risk. In Phase 3 we used a courier service to ship the survey packets; although expensive, this allowed us to confirm every delivery and to have packets returned when they were undeliverable because of incorrect or invalid addresses. Future efforts with considerable resources may wish to rely solely on courier delivery to ensure successful contact or, at the least, to consider the risk of uncertain delivery when selecting a mail-based method.

Overall, we successfully located approximately 90% of our inner-city young adults, and 53% of them responded to the follow-up survey. A few key activities provided a good return on investment of personnel time and resources, including the tracking services provided by HSRC, telephone reminders at the end of Phase 1, and in-school administration and distribution of the survey. These activities produced relatively large increases in contacts and completed surveys beyond those initially achieved. While the response rate was relatively low, it was comparable to or better than that typically achieved with competing strategies for universal preventive interventions. Costs per completed survey were high in each phase and rose further as we attempted to reach more difficult segments of the cohort. This study illustrates that it is possible to track and follow up a high-risk cohort as they progress through adolescence, even with minimal effort in the intervening years. However, given the attrition of higher-risk participants, challenges remain in locating and surveying these young adults.

Acknowledgments

This study was funded by a grant from the National Institute on Alcohol Abuse and Alcoholism, and support from the National Center on Minority Health and Health Disparities (R01 AA016549), awarded to Dr. Kelli A. Komro. We are grateful for and acknowledge the participants in the Project Northland Chicago trial, as well as the administrators in the Chicago Public Schools who participated in the data collection. Special acknowledgements go to Carolyn Kulb (Survey Coordinator), Laura Haderxhanaj (Graduate Research Assistant), Aria Jefferson (Student Assistant), Jennifer Reingle (Graduate Research Assistant), and our Chicago-based survey staff (Carol Johnson, Mirlene Dossous, Tamika Hinton, Britt Garton Pisto, Angie Slater, Lisa Austin, Gloria Riley, Gina Norton, and Debra Moore).

Biographies

Amy L. Tobler, MPH, PhD: Dr. Tobler is a social epidemiologist specializing in social and community-contextual determinants of health and prevention of substance use among adolescents. She has published in the areas of underage drinking, alcohol and drug use prevention, and evaluation of alcohol-related policies. Dr. Tobler is Co-Principal Investigator of a project examining the etiology of alcohol use among racially diverse, economically disadvantaged urban youth and the Vice-Chair of the Policy Team for a NIDA-funded project establishing the Promise Neighborhoods Research Consortium. She was also a co-investigator on projects evaluating and meta-analyzing the effects of alcohol tax policies on risky behaviors and health outcomes.

Kelli A. Komro, MPH, PhD: Dr. Komro is an epidemiologist specializing in the social determinants of health among children and adolescents. Her research focuses on designing and evaluating community-wide strategies to promote health among youth. She has been PI or Co-Investigator on multiple group-randomized controlled trials focusing on preventing drug use, violence, and HIV among youth, both in the U.S. and internationally, including trials in Chicago, rural Minnesota, Russia, India, and Tanzania. Dr. Komro’s scholarly research has been recognized both nationally and internationally, as evidenced by her publications and presentations and by her work in Tanzania, Norway, Japan, and the United Kingdom.


Contributor Information

Amy L. Tobler, Email: amytobler@ufl.edu.

Kelli A. Komro, Email: komro@ufl.edu.

References

- Acxiom Corporation. Acxiom Insight. Little Rock, AR: Acxiom Corporation; 2010.

- Aquilino WS. Interview Mode Effects in Surveys of Drug and Alcohol Use: A Field Experiment. Public Opinion Quarterly. 1994;58(2):210–240.

- Aquilino WS, Losciuto LA. Effects of Interview Mode on Self-Reported Drug Use. Public Opinion Quarterly. 1990;54(3):362–395.

- Arkes J. Does the economy affect teenage substance use? Health Economics. 2007;16:19–36. doi: 10.1002/hec.1132.

- Atkinson R. The evidence on the impact of gentrification: new lessons for the urban renaissance? International Journal of Housing Policy. 2004;4:107–131.

- Bachman JG, Johnston LD, O'Malley P. Monitoring the Future: A continuing study of the lifestyles and values of youth, 1994. Ann Arbor, MI: Inter-university Consortium for Political and Social Research, University of Michigan; 1996.

- Chicago Public Schools Office of Performance. Dropout and graduation rates, 1999–2009. Chicago, IL: Chicago Public Schools; 2010.

- Coen AS, Patrick DC, Shern DL. Minimizing attrition in longitudinal studies of special populations: An integrated management approach. Evaluation and Program Planning. 1996;19:309–319.

- Cotter RB, Burke JD, Loeber R, Navratil JL. Innovative retention methods in longitudinal research: A case study of the Developmental Trends Study. Journal of Child and Family Studies. 2002;11:485–498.

- Cotter RB, Burke JD, Stouthamer-Loeber M, Loeber R. Contacting participants for follow-up: how much effort is required to retain participants in longitudinal studies? Evaluation and Program Planning. 2005;28:15–21.

- Diggle PJ, Heagerty P, Liang K, Zeger SL. Analysis of Longitudinal Data. 2nd ed. New York: Oxford University Press; 2002.

- Duncan SC, Duncan TE, Strycker LA. A multilevel analysis of neighborhood context and youth alcohol and drug problems. Prevention Science. 2002;3(2):125–133. doi: 10.1023/a:1015483317310.

- Eamon MK. Digital divide in computer access and use between poor and nonpoor youth. Journal of Sociology and Social Welfare. 2004;31:91–113.

- Editorial Projects in Education. Broader horizons: The challenge of college readiness for all students. Diplomas Count 2009. 2009. Retrieved May 19, 2010, from http://www.edweek.org/ew/toc/2009/06/11/index.html.

- Frankel MR, Srinath KP, Hoaglin DC, Battaglia MP, Smith PJ, Wright RA, et al. Adjustments for non-telephone bias in random-digit-dialling surveys. Statistics in Medicine. 2003;22(9):1611–1626. doi: 10.1002/sim.1515.

- Gfroerer JC, Hughes AL. The Feasibility of Collecting Drug Abuse Data by Telephone. Public Health Reports. 1991;106(4):384–393.

- Haggerty KP, Fleming CB, Catalano RF, Petrie RS, Rubin RJ, Grassley MH. Ten years later: Locating and interviewing children of drug abusers. Evaluation and Program Planning. 2008;31:1–9. doi: 10.1016/j.evalprogplan.2007.10.003.

- Hansen WB, Tobler NS, Graham JW. Attrition in Substance Abuse Prevention Research: A Meta-Analysis of 85 Longitudinally Followed Cohorts. Evaluation Review. 1990;14(6):677–685.

- Hansten ML, Downey L, Rosengren DB, Donovan DM. Relationship between follow-up rates and treatment outcomes in substance abuse research: more is better but when is "enough" enough? Addiction. 2000;95:1403–1416. doi: 10.1046/j.1360-0443.2000.959140310.x.

- Hill TD, Angel RJ. Neighborhood disorder, psychological distress, and heavy drinking. Social Science & Medicine. 2005;61:965–975. doi: 10.1016/j.socscimed.2004.12.027.

- Hope L. Chicago postal officials promise to correct problems. Chicago, IL: ABC 7 News (WLS-TV); 2007.

- Johnston LD, O'Malley PM, Bachman JG. Monitoring the Future national survey results on drug use, 1975–2001. Volume 1: Secondary school students. Bethesda, MD: National Institute on Drug Abuse; 2002.

- Klein J, Rossbach C, Nijher H, Geist M, Wilson K, Cohn S, et al. Where do adolescents get their condoms? Journal of Adolescent Health. 2001;29(3):186–193. doi: 10.1016/s1054-139x(01)00257-9.

- Klein JD, Havens CG, Carlson EJ. Evaluation of an adolescent smoking-cessation media campaign: GottaQuit.com. Pediatrics. 2005;116(4):950–956. doi: 10.1542/peds.2005-0492.

- Komro KA, Perry CL, Veblen-Mortenson S, Bosma LM, Dudovitz BS, Williams CL, et al. Brief report: the adaptation of Project Northland for urban youth. Journal of Pediatric Psychology. 2004;29(6):457–466. doi: 10.1093/jpepsy/jsh049.

- Komro KA, Perry CL, Veblen-Mortenson S, Farbakhsh K, Toomey TL, Stigler MH, et al. Outcomes from a randomized controlled trial of a multi-component alcohol use preventive intervention for urban youth: Project Northland Chicago. Addiction. 2008;103:606–618. doi: 10.1111/j.1360-0443.2007.02110.x.

- Lee DJ, Arheart KL, Trapido E, Soza-Vento R, Rodriguez R. Accuracy of parental and youth reporting of secondhand smoke exposure: The Florida youth cohort study. Addictive Behaviors. 2005;30(8):1555–1562. doi: 10.1016/j.addbeh.2005.02.008.

- Lewis DA. Moving up and moving out? Economic and residential mobility of low-income Chicago families. Urban Affairs Review. 2007;43:139–170.

- Mangunkusumo RT, Moorman PW, van der Berg-de Ruiter AE, van der Lei J, De Koning HJ, Raat H. Internet-administered adolescent health questionnaires compared with a paper version in a randomized study. Journal of Adolescent Health. 2005;36(1):70.e71–70.e76. doi: 10.1016/j.jadohealth.2004.02.020.

- McCabe SE. Comparison of web and mail surveys in collecting illicit drug use data: A randomized experiment. Journal of Drug Education. 2004;34(1):61–72. doi: 10.2190/4HEY-VWXL-DVR3-HAKV.

- McCabe SE, Boyd CJ, Couper MP, Crawford S, D'Arcy H. Mode effects for collecting alcohol and other drug use data: Web and US mail. Journal of Studies on Alcohol. 2002;63(6):755–761. doi: 10.15288/jsa.2002.63.755.

- McCabe SE, Couper MP, Cranford JA, Boyd CJ. Comparison of Web and mail surveys for studying secondary consequences associated with substance use: Evidence for minimal mode effects. Addictive Behaviors. 2006;31(1):162–168. doi: 10.1016/j.addbeh.2005.04.018.

- Moskowitz CS, Pepe MS. Quantifying and comparing the predictive accuracy of continuous prognostic factors for binary outcomes. Biostatistics. 2004;5(1):113–127. doi: 10.1093/biostatistics/5.1.113.

- NCES. Status dropout rates by race/ethnicity. 2005. Retrieved January 18, 2006, from http://nces.ed.gov/programs/coe/2005/section3/indicator19.asp.

- Okamoto K, Ohsuka K, Shiraishi T, Hukazawa E, Wakasugi S, Furuta K. Comparability of epidemiological information between self- and interviewer-administered questionnaires. Journal of Clinical Epidemiology. 2002;55(5):505–511. doi: 10.1016/s0895-4356(01)00515-7.

- Preusser DF, Ferguson SA, Williams AF, Leaf WA, Farmer CM. Teenage driver licensure rates in four states. Journal of Safety Research. 1998;29:97–105.

- Ribisl KM, Walton MA, Mowbray CT, Luke DA, Davidson WS, Bootsmiller BJ. Minimizing participant attrition in panel data through the use of effective retention and tracking strategies: Review and recommendations. Evaluation and Program Planning. 1996;19:1–25.

- Rosston GL, Wimmer BS. The 'state' of universal service. Information Economics and Policy. 2000;12(3):261–283.

- Schonlau M, Asch BJ, Du C. Web surveys as part of a mixed-mode strategy for populations that cannot be contacted by e-mail. Social Science Computer Review. 2003;21(2):218–222.

- Scott CK. A replicable model for achieving over 90% follow-up rates in longitudinal studies of substance abusers. Drug and Alcohol Dependence. 2004;74:21–36. doi: 10.1016/j.drugalcdep.2003.11.007.

- Shadish WR, Cook TD, Campbell DT. Experimental and quasi-experimental designs for generalized causal inference. New York: Houghton Mifflin Company; 2002.

- Shih T, Fan X. Comparing response rates from web and mail surveys: A meta-analysis. Field Methods. 2008;20:249–271.

- Sloboda Z, Stephens RC, Stephens PC, Grey SF, Teasdale B, Hawthorne RD, et al. The adolescent substance abuse prevention study: A randomized field trial of a universal substance abuse prevention program. Drug and Alcohol Dependence. 2009;102:1–10. doi: 10.1016/j.drugalcdep.2009.01.015.

- Sly DF, Hopkins RS, Trapido E, Ray S. Influence of a counteradvertising media campaign on initiation of smoking: The Florida "truth" campaign. American Journal of Public Health. 2001;91(2):233–238. doi: 10.2105/ajph.91.2.233.

- Sly DF, Trapido E, Ray S. Evidence of the dose effects of an antitobacco counteradvertising campaign. Preventive Medicine. 2002;35(5):511–518. doi: 10.1006/pmed.2002.1100.

- Sullivan CM, Rumptz MH, Campbell R, Eby KK, Davidson WS. Retaining participants in longitudinal community research: A comprehensive protocol. Journal of Applied Behavioral Science. 1996;32:262–278.

- Sun P, Unger JB, Palmer PH, Gallaher P, Chou C, Baezconde-Garbanati L, et al. Internet accessibility and usage among urban adolescents in southern California: Implications for web-based health research. CyberPsychology & Behavior. 2005;8:441–453. doi: 10.1089/cpb.2005.8.441.

- Tarasuk VS. Household food insecurity with hunger is associated with women's food intakes, health and household circumstances. Journal of Nutrition. 2001;131(10):2670–2676. doi: 10.1093/jn/131.10.2670.

- Thomson CC, Siegel M, Winickoff J, Biener L, Rigotti NA. Household smoking bans and adolescents' perceived prevalence of smoking and social acceptability of smoking. Preventive Medicine. 2005;41(2):349–356. doi: 10.1016/j.ypmed.2004.12.003.

- U.S. Census Bureau. United States Census 2000. Washington, D.C.: U.S. Department of Commerce; 2000.

- U.S. Census Bureau. Census Bureau releases population estimates by age, sex, race and Hispanic origin. 2003. Retrieved from http://www.census.gov/PressRelease/www/2003/cb03-16.html.