Abstract

We propose a new algorithm for learning kernels for variants of the Normalized Cuts (NCuts) objective – i.e., given a set of training examples with known partitions, how should a basis set of similarity functions be combined to induce NCuts favorable distributions. Such a procedure facilitates design of good affinity matrices. It also helps assess the importance of different feature types for discrimination. Rather than formulating the learning problem in terms of the spectral relaxation, the alternative we pursue here is to work in the original discrete setting (i.e., the relaxation occurs much later). We show that this strategy is useful – while the initial specification seems rather difficult to optimize efficiently, a set of manipulations reveal a related model which permits a nice SDP relaxation. A salient feature of our model is that the eventual problem size is only a function of the number of input kernels and not the training set size. This relaxation also allows strong optimality guarantees, if certain conditions are satisfied. We show that the sub-kernel weights obtained provide a complementary approach for MKL based methods. Our experiments on Caltech101 and ADNI (a brain imaging dataset) show that the quality of solutions is competitive with the state-of-the-art.

1. Introduction

Normalized Cuts (NCuts) [1] refers to a popular graph partitioning (or clustering) technique that analyzes the eigen structure (or spectrum) of a matrix derived from the pairwise similarities of nodes, to identify clusters inherent in the data. The nodes in the graph represent individual data examples such as pixels in an image or vectors in a distribution χ. The algorithm, however, does not make use of the native space of χ, rather the space induced by the chosen measure of similarity. This works well because with a proper choice of this measure, the cohesiveness of clusters of points is nicely reflected in the so-called kernel (or affinity) matrix κ, and can be precisely characterized via stability of the eigen vectors of its Laplacian. Equipped with such a kernel, NCuts facilitates the discovery of high quality clusters by seeking to split the vertex set of the graph into k̂ disjoint partitions such that the total cost of edge weights between points in different clusters (normalized by a measure of total affinity quotient of each partition) is minimized.

Similar to kernel based methods such as Support Vector Machines, NCuts makes use of an affinity matrix κ where the entry κ(p, q) gives a notion of similarity between two examples, p, q ε χ. Clearly, for any supervised or unsupervised learning procedure to be useful, an important requirement is that the matrix κ provided by the user be informative. Unfortunately, coming up with a good similarity function can be rather difficult in practice. Even when its functional form is known (e.g., Gaussian or polynomial), a great deal of time must be devoted to tune the parameters, before a learning algorithm can be applied. In an effort to transfer the burden of kernel design from the user to the learning step, an attractive alternative has emerged – why not generate a large number of diverse kernels (based on different notions of similarity) and learn using a good weighted subset of these kernels? For example, in applications such as object categorization, while specifying a direct analytical expression for similarity may be difficult, a multitude of features if utilized in conjunction are helpful to identify different object types, given a small number of user-annotated images from each category. The class of techniques for choosing a combination of kernels in the supervised learning framework, is formalized as Multi-kernel learning.

In general, Multi-kernel learning (MKL) solves for the appropriate kernel and the classifier (e.g., in a maximum margin sense) simultaneously, where the optimization of the kernel weights (for a given set of basis kernels) and the separating hyperplane is interleaved. For a general clustering task, adopting a similar strategy would imply searching over the space of kernels such that the specific clustering objective (using the derived kernel) is optimized. Our paper looks at this kernel learning problem in the setting where given a few labeled examples we want a suitable kernel for variations of the NCuts (rather than max-margin) criterion. In other words, we seek to learn a set of weights for the collection of basis kernels {κ(1),⋯,κ(d)}, such that the cut on the resultant weighted kernel, κ*, is as good as possible.

Observe that one possible approach to address the above problem is to draw upon the relationship of the original NCuts specification (binary assignment of examples to clusters) to spectral clustering (its relaxation). If the loss function characterizes how close the eigen structure of a new similarity matrix is to the given (training) partition, the objective function is expressed with respect to functions of the eigen vectors of the to-be-found kernel matrix’s Laplacian. While technically sound, such an approach is fraught with difficulties – finding the solution numerically, running time (especially for large datasets), and upper-bounding the looseness of the approximation gap. In this paper, we explore the alternative – whether it is advantageous to look directly at the combinatorial specification of NCuts-type objectives, in terms of defining the learning problem. We see that while a direct expression of the objective is expectedly problematic, manipulations reveal an equivalent model that admits a Semidefinite Programming (SDP) relaxation. We first motivate and present our model, and then provide a detailed analysis of its interesting theoretical properties. We follow this with a set of experiments on (1) Object categorization benchmarks (Caltech101) and (2) Neuroimaging datasets (ADNI) where we obtain good empirical performance. The main contributions are:

We propose new SDP models for learning the kernel for variants of the NCuts objective. These relaxations are efficient and have nice theoretical properties.

A feature of our algorithm is that the optimization depends only on the number of kernels, and not the number of training examples. We view this as a distinct advantage (in terms of running time) in using such methods in vision applications.

The algorithm is easy to implement, and compares favorably with spectral learning methods and MKL approaches. On several datasets it gives close to the best known results in the literature.

1.1. Related Work

The underlying philosophy behind MKL methods is that the kernel should depend on the given data; so the weights (e.g., as a linear combination) for a set of basis kernels must be picked while simultaneously training the SVM. Starting with [2; 3], this research has led to several formulations for learning the kernel from training examples: via SOCPs [4], SILPs [5], gradient-descent [6], and as a two-step optimization procedure [7]. Means for combining a large number of kernels [8; 9] have also been proposed. For several diverse applications [10; 11], such methods have raised the bar by combining diverse sources of information [11; 12].

On the other hand, several novel algorithms have also been proposed for learning the kernel matrix given a set of training examples in the NCuts context (more specifically, in terms of its spectral relaxation). These include Target Similarity [13], Target Eigenvector [14], Orthogonal Projection [15], and Supervised Spectral learning [16; 17]. It is interesting to note that many of these methods have been designed with a focus on vision applications. For example, the Target Similarity algorithm [13] which optimizes the weights of a set of pairwise features to match two transition matrices, was originally developed for segmentation problems. Also, the Target Eigenvector [14] method, was proposed to detect rectangles in an image of background clutter. These approaches have nice convergence properties and work well for the intended applications, but their extension to the general learning setting is not straightforward (see [17], pg. 69). The cost function defined in [15], however, is quite general – it minimizes the difference between the given cluster indicators (for the training set) and the eigen vector projections of the learned kernel. With an appropriate regularization good convergence can be shown, but the approach is numerically unstable because derivatives of a function of the eigen-vectors w.r.t. the matrix must be numerically estimated. These issues are mitigated in the approaches in [16; 17] – which generalize and extend the previous ideas by interleaving gradient descent with a line search step. Their limitation is that the procedures are iterative, and one must specify the step size of the search properly to obtain quick convergence (see [17], pg. 85).

Our algorithm is a departure from these approaches. We will work directly with NCuts type objectives (rather than the relaxation), and formalize the problem of solving for the weights for the basis kernels (akin to the MKL formulation).

2. Problem Statement

We first summarize the objective function, and then introduce our main ideas. The input is provided in terms of a graph, 𝒢 = (ν, ε, κ), where ν is the set of vertices (denoting pixels or points), ε is the set of edges, and κpq gives the strength of similarity (i.e., for an edge) between nodes p and q. Let ν1 and ν2 denote subsets (i.e., partition) of ν such that ν1 ∪ ν2 = ν, and 𝒞(ν1, ν2) = ∑(p∈ν1,q∈ν2) κpq give the cost of splitting ν into ν1 and ν2. A variant of the NCuts objective as studied by [18; 19] is . Let 𝒟(ν1) = 𝒞(ν1, ν1), we consider the corresponding maximization problem for both clusters:

| (1) |

which encourages a partitioning of the graph such that similarity across segments is small, and similarity within the segment is large. Recall that the matrix κ = [κpq] which gives similarity in a feature space (i.e., 〈ϕ(p), ϕ(q)〉) without explicit knowledge of the map ϕ, is assumed to be positive-semidefinite (PSD).We will not make this assumption; but will nonetheless refer to such matrices as kernels to keep the presentation simple.

Objective

Our objective is to avoid assuming a specific similarity structure over the space of examples. In other words, we look at the inverse problem. Rather than optimize the criterion for the given κ, we would like to infer a κ̂ from a given set of partitions, such that we obtain a good cut using κ̂. To restrict the complexity of the space we operate in, a reasonable requirement is to express κ̂ as a weighted combination of certain basis kernels (similar to MKL methods [5]). This also provides interpretability, i.e., the relative importance of each type of feature for the criterion of interest.

We define the given basis kernels as {κ(1),⋯,κ(d)} and the desired kernel is given as

where α = [α1, α2,⋯,αd]T are the weights of individual basis kernels (i.e., sub-kernel weights). Therefore, the learning problem in this setting, assuming k̂ (instead of only two) partitions can be written as

| (2) |

| (3) |

where ν = ν1 ∪ ν2 ∪⋯∪ νk̂, and f(α) expresses the optimal value for κα. We seek to optimize f(α) (using some training examples) by solving for α.

2.1. Ratio optimization

Let us first define

| (4) |

where t = 1,⋯,k̂. We can now rewrite the optimization of f(α) in (2) in terms of (4) as

where we stipulate that all the weights are non-negative and sum to 1. Typically, the training data, χ, has multiple training examples, χ(1), χ(2),⋯,χ(N), each with its corresponding correct partition (e.g., ). To find α consistent with all N examples, we obtain the following expression for learning κ̂:

| (5) |

We will use j ∈ χ as shorthand for χ(j) ∈ χ as above when it is clear from context. The objective in (5) is non-convex in general and the problem is NP-hard [20]. When k̂ = 2, since all the matrices κ(l) are symmetric (not necessarily PSD), we have u(1, l) = u(2, l) for l = 1,⋯,d. In special cases, this reduces to the single ratio optimization problem, (equivalent to a linear problem [21]). As an illustration, given non-negative vectors û, v̂ ∈ ℝd where and û(l) = u(1, l), consider the following single ratio fractional model and its equivalent linear optimization problem

| (6) |

For |χ| = N = 1 the solution is simply the kernel with the smallest ratio, and can be determined trivially. However, given N > 1 training images/partitions, the problem is

| (7) |

Here, u(j) and v(j) are created from U and V in (4) for each training example j ∈ χ. This multiple ratio minimization is NP-hard and the simplification in (6) is no longer applicable. One possibility is to directly adapt techniques from fractional programming, but the resultant relaxations are rather weak both in theory and practice (e.g., for challenges in the context of vision applications in specific see [22]). To do better, we must look for other ways to derive a tractable form based on our model’s special structure. We describe our main ideas in the subsequent section.

3. Quadratic Optimization & SDP Relaxations

In this section, we propose a quadratic optimization model for learning the kernel matrix for NCuts type objectives, and then derive its SDP relaxation. First, let us reexamine the multi-ratio optimization problem (7) – observe that (7) can also be (equivalently) written as

| (8) |

Since (|χ| − 1) is a constant (independent of optimization), (8) and (7) have the same optimal solution. Let us create a set of training example “pairs”,

Now, (8) can be modified as

| (9) |

Recall that in the single ratio case, the optimal solution of the above problem can be recovered by minimizing a function defined by the gap, say δ, between the numerator and the denominator. We follow its natural extension by minimizing the sum of all gap functions as follows.

| (10) |

| (11) |

Using 𝒥gh = (𝒜gh − ℬgh), the model can be written as

| (12) |

where ≥ 0 implies entry-wise non-negativity. If we define 𝒥 = ∑g≠h 𝒥gh and let Q = (𝒥 + 𝒥T)/2, then we can derive the following quadratic optimization problem

| (13) |

The above problem is also called the standard quadratic programming problem (StQP in short) in optimization [23], and the problem of checking the co-positivity of a given matrix in linear algebra [24]. The problem is NP-hard [25] for Q ⋡ 0; several algorithms have been proposed [23; 26] that consider various convex relaxations for StQP. Different from [23; 26] where the authors are interested in tightening the lower bounds to improve the relaxation, we will instead pursue a SDP relaxation for the StQP [27], which will offer certain advantages.

Let Z = ααT. It follows easily that . Now, using the fact that we have Z ≽ ααT ≽ 0, we can obtain the following simple SDP.

| (14) |

Note that we have removed the constraint on vector α in the above SDP relaxation. We next present a rounding procedure to recover a feasible solution α to the StQP from a solution of the relaxed problem (14).

Algorithm

Step 1

Find an optimal solution Z* to problem (14);

Step 2

Construct a vector by the following procedure

| (15) |

Step 3

Rescale the vector α* to make it feasible for (13).

The above algorithm will not provide a globally optimal solution to problem (13) in general. However, if the vector constructed from Step 2 of the algorithm satisfies the relation , then α* is indeed a global optimal solution to problem (13). Next, we explore additional conditions under which our solution is optimal with respect to the original StQP (13). Our first result is

Theorem 1

Suppose that the optimal solution of problem (14) Z* has only positive elements. Then, the proposed algorithm will provide an optimal solution to the StQP (13).

Proof: (Sketch)

Let E denote a ones matrix of appropriate size; we consider the dual of the problem (14), which is defined as follows

| (16) |

Here X2 ≥ 0 indicates element-wise non-negativity. Since Z* is optimal for the primal problem (14), there exists a dual triple satisfying

| (17) |

Because all elements of Z* are positive and all the elements of are non-negative, the relation must hold. Therefore, we have

| (18) |

On the other hand, because Z* has only positive elements, all elements of its first eigenvector (denoted by λ*) corresponding to its largest eigenvalue must also be positive. By multiplying it with a suitable scalar if necessary, we can further assume that . Because and both are positive semidefinite, we have . From the relation (18), we obtain

| (19) |

which is precisely the first and second-order optimality conditions for problem (13). Note that the solution set of (13) stays the same if we replace the matrix Q by (Q − ρE) for any ρ ∈ R. Since λ* is a local minimum of (13), it is also a local minimum of a new problem with objective αT(Q − y*E)α subject to ∑l α = 1. From (19), (Q − y*E) ≽ 0 and (Q − y*E)λ* = 0. Using this rationale, it follows immediately that λ* is also a global optimal solution of problem (13).

We note that Thm. 1 can be extended to the case where

| (20) |

where has only positive elements (e.g., by performing permutations if needed). We can also show that

Theorem 2

Suppose that d ≤ 3. Then the optimal solution of problem (14) can be achieved at a rank one matrix Z.

Proof

To prove the theorem, we must consider two cases: (a) all elements of Z* are positive; (b) there are some elements of Z* that equal 0. Case (a) follows readily from Theorem 1. Therefore, it suffices to consider case (b).

First we note that the theorem holds trivially if d ≤ 2. If there is one diagonal element of Z* that equals 0, then we can reduce the problem to the scenario when d ≤ 2. Suppose that there are some off-diagonal elements that equal 0. It is also easy to see that if there are 4 or 6 zero-valued off-diagonal elements, then we can still reduce the problem to the case d ≤ 2. Therefore, it remains to consider only the scenario where there are only 2 off-diagonal elements equal to zero. By performing permutation if necessary, we can assume Z* takes the following form

| (21) |

| (22) |

Since PSD matrices have non-negative determinant,

| (23) |

which amounts to . Now let us divide Z* into three parts as follows

| (24) |

where all the matrices X̂1, X̂2 and X̂3 are feasible for the relaxed problem. It follows

All the three matrices X̂1, X̂2 and X̂3 have rank one, so the above relation implies that the optimal solution of problem (14) is achieved at a rank one matrix, from which one can easily construct an optimal solution to problem (13).

It may seem that Thm. 2 can be extended to d = 4 since a linear optimization problem over the cone of co-positive matrices (LOCP) can be written as a SDP for d ≤ 4. However, notice that a LOCP is only a relaxation of (13) and for d = 4, the optimal solution of the SDP relaxation of (13) may not be attained at a rank one matrix.

3.1. Relationship to max-margin classifiers

With a solution to (14) in hand, we can reconstruct a combined kernel having the desired properties, and possibly use it to train a SVM classifier (or other max-margin methods). This raises the following question: given that the kernel κ̂ has been optimized for separability in an NCuts sense, will a subsequent classification (using SVM) adequately exploit this property? Or if it is justified? It has been shown elsewhere [28; 29] that there is a connection between the NCuts objective and max-margin clustering. That is, in the most common relaxation of NCuts, the discrete cluster membership indicators is replaced by a continuous-valued weight vector, and cluster membership is later recovered from the sign or by thresholding. This relaxation is equivalent to choosing a hyperplane in feature space (under the generalized Representer theorem) which minimizes the ratio of inter-cluster to within-cluster affinity. It has the same optimum as max-margin clustering in the feature space – NCuts implicitly makes use of a weight factor (where θi is the angle between the i-th feature vector and the mean data vector). In the reverse direction, it has been shown that NCuts can be solved as max-margin clustering [28]. This equivalence (up to scaling and normalization) between NCuts and max-margin approaches suggests that our method offers a way of choosing kernel weights such that their combination can be utilized in a max-margin setting as well.

4. Experimental Results

We performed an extensive set of experiments to assess the empirical performance of the algorithm. To evaluate its strengths and weaknesses in different applications, we focus on two diverse domains: (a) Caltech-101 dataset for object classification applications; and (b) Alzheimer’s Disease Neuroimaging Initiative (ADNI) data for application to early AD diagnosis using MR images. Our purpose is to demonstrate the expected behavior of the model with respect to (i) other state-of-the art kernel learning algorithms, and analyze whether the model serves as a suitable alternative; (ii) its performance as a function of different parameters (e.g., uninformative features, increase in sample size).

4.1. Caltech 101: Object Categorization

The Caltech 101 dataset [30] is a popular benchmark dataset for object categorization with 102 categories of images (101 distinct objects and background), with 30 – 800 images per category. To facilitate presentation, we summarize the setup as follows:

Descriptors, Feature extraction, and Kernels

To generate the features for the set of images and create the kernels based on these features, we used the experimental setup as reported in [11] (graciously shared with us by the authors). Briefly, the features1 used in our experiments are PHOG Shape descriptors, several varieties of SIFT-based descriptors, Region Covariance, Local Binary Patterns (LBP) and VIS+. Also, consistent with [11], kernel matrices for these features were computed as , where d(·, ·) is the pair-wise distance between the features xi and xj and γ gives the mean of the pairwise distances (with χ2 distance for PHOG, SIFT, and LBP).

Pipeline

The number of images used were {10, 15, 20, 25, 30} for training size per category and at most 50 images per category for testing. To create the multiple training examples, we sampled the input training data into 5 groups/examples. For each run of our algorithm, we learned the sub-kernel weights using our approach by first creating the class ratios for each group (normalizing them if classes were unequal), and then solving (14) using SDPT3. The kernel weights were then used to generate a custom kernel, κ fed into a standard SVM solver with C = 10 (no model selection was performed).

Comparisons with other methods

We used the algorithm in [16] as a representative of the class of spectral learning algorithms proposed in the literature, it has been shown to be numerically stable and yield better solutions relative to other methods [17]. In addition, we also compared to 2-norm MKL [5] from SHOGUN, and two baseline methods (average of kernels, product of kernels). Finally, to evaluate algorithm’s performance for multi-class classification for all 102 classes in Caltech101, we compared the accuracy of our method with the best results known for this dataset.

Classes, Splits, and Errors

For the two-class setting, we report average error estimates over randomly chosen pairs of categories (over 300 runs) with train/test sizes set to 10/50 unless otherwise specified. For the multi-class setting, we report errors averaged over three splits (with the splits as defined in [11]). Note that the error rate was measured as the mean prediction rate per class (it has been recommended to normalize w.r.t. categories to compensate for favorable bias of easier classes with more images).

Analysis of Results

i) Noisy Kernels

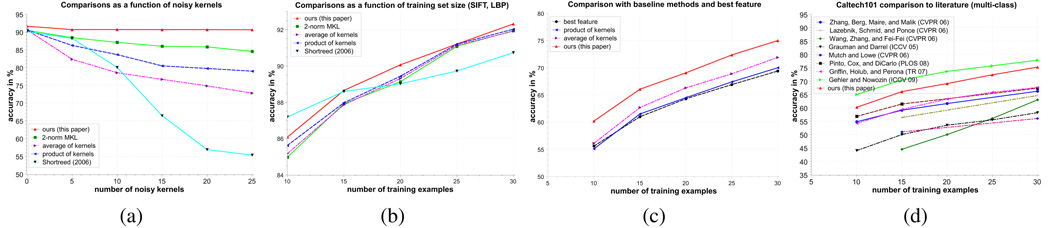

First, we assess the performance of the model w.r.t. introduction of noisy kernels in the set of basis kernels given, in the 2-class setting. We selected a set of ten kernels as our base set, and incrementally added {0, 5, 10, 15, 20, 25, 30} noisy kernels (random noise with same mean as the kernel without noise). The same setup is repeated for other algorithms that we measure our performance against. These results are summarized in Fig. 1(a). We see that our algorithm exhibits high level of robustness in the presence of noisy features, and negligible deterioration in performance even when an overwhelming majority of the basis kernel set is noisy.

Figure 1.

Empirical performance on the Caltech101 dataset for 2-class in (a), (b) and 102-class setting in (c), (d). Performance w.r.t. (a) introduction of noisy kernels, (b) different feature types, (c) baseline methods and best feature, (d) other algorithms from the literature.

ii) Performance of different feature types

Next, we evaluated the accuracy of our prediction as a function of different types of features (kernels) for 2-class classification. These results are shown in Fig. 1(b), for 4 (and 12) kernels of the LBP (and SIFT) features. We see that our algorithm outperforms the spectral learning algorithm from [16] and has comparable performance w.r.t. MKL and average of kernels. We saw a similar behavior for PHOG, LBP, and SIFT kernels individually.

iii) Performance vis-à-vis best feature

A natural question to ask is whether the weighted kernel provides an advantage over the best kernel in the set (if we select the best feature post-hoc). To evaluate this, we compare the performance of our algorithm to the best feature in the input for a number of different choices for the number of base kernels in {5, 10, 15, 20, 25, 30} in the 2-class and multi-class setting (102 classes). To prevent introducing a bias for a specific set of kernels, we select them randomly from a large set (about 150) and average over 10 possible choice of kernels for each pair of categories. Our results show that in about 90% cases, the weighted combination is better than the best feature. Accuracy shows an improvement of 3:5% over the best feature on average, and 6% – 10% (a function of kernel set size) over the average errors of all kernels. For the multi-class setting, we summarize our results in Fig. 1(c), where our model offers a significant advantage over baseline methods as well as the best feature in the set.

iv) Comparison to state of the art

We evaluated our algorithm for the multi-class classification scenario using all 102 classes in the Caltech dataset. To do this, we choose a set of 39 kernels and compared our method across different sizes of training sets (from 10 to 30). For the multi-class framework, we used a one-vs-all scheme, where each category is separated from all other classes. The results of this approach, together with the accuracy obtained by other state-of-the art algorithms in literature is shown in Fig. 1(d). We achieve an accuracy of 75.2 ± 0.67% using 30 training examples. This is better than all other algorithms (see Fig. 1(d)) except the recent paper of [11], who reported a slightly better performance using a multi-class boosting approach2. We found that the accuracy can be further improved by including 5 – 10 additional kernels; however, rather than strive for maximal performance we restrict ourselves to 39 kernels to enable meaningful comparison with other results and [11] (results with additional kernels will be available on a companion website).

Summary

Finally, we note that other than the promising accuracy estimates obtained by our model, the results are also encouraging because the training time of our method is comparable to baseline methods (average of kernels, product of kernels) – unlike other MKL methods. The time to solve the SDP is independent of the training set size, and takes ≤ 20s even for thousands of examples. The codebase/data will be publicly available after publication.

4.2. Alzheimer’s disease diagnosis (ADNI dataset)

We also evaluated the algorithm’s performance using MRI scans collected as part of the Alzheimer’s Disease Neuroimaging Initiative (ADNI) by performing 30-times repeated 10-fold cross validation experiments. ADNI is a landmark research study sponsored by the NIH, to determine the extent to which brain imaging can help predict onset and monitor progression of AD. We used brain image volumes of a population of 184 subjects (87 AD, 97 controls). Ground truth diagnosis of each subject (i.e., class labels) is available based on clinical evaluation of cognitive status. We calculated Gray Matter Probability (GMP) maps of each subject using Statistical Parametric Mapping (SPM). Gray matter probabilities at each voxel were then sorted by t-statistic for each fold, and kernels were constructed using increasing sets of features, from 5000 up to 250000 in seven steps. From each set of features, we constructed linear and polynomial kernels (degree 2, 3, 4) for a total of 28 kernels. Using kernel weights provided by our algorithm, we constructed a combined kernel, and trained an SVM for classification of AD subjects from controls.

We calculated test-set accuracy, and report on average predictive confidences over all 30 iterations to compute area under ROC-curves, a summary of the results is presented in Table 1. For comparison, we include results using 2-norm MKL, and a single SVM given the average of all 28 kernels. Briefly, we observe that our method had the best overall accuracy and AUC (84.61% / 0.9149).

Table 1.

Summary of accuracy of our method, 2-norm MKL, and average of kernels on ADNI data.

| Method | accuracy | AUC |

|---|---|---|

| ours (this paper) | 84.61% | 0.9149 |

| 2-norm MKL | 83.61% | 0.9130 |

| average of kernels | 83.44% | 0.9114 |

Interpretation of brain regions

A decision boundary in a linear kernel corresponds to a linear combination of examples (i.e., the support vectors). This allows us to reconstruct a map of discriminative brain regions used in classification, to verify whether the solutions are meaningful from a neuroscience perspective. In the case of a combined kernel, we can represent this by combining maps as reconstructed by each kernel (w.r.t. corresponding respective weights). However, this visual representation ignores the contribution of the polynomial kernels. For illustration, these results are shown in Fig. 2. Most prominently highlighted are the hippocampus and parahippocampal gyri, as well as middle temporal and lateral parietal regions, these regions are known to be affected by AD pathology.

Figure 2.

Brain regions selected when using gray matter probabilities derived from MR images. Numerical color scale corresponds to each voxel’s weight in the classifier, and has no units.

Conclusions

We present a new algorithm for learning the kernel (or affinity) matrix for variants of the NCuts objective, as a weighted combination of basis kernels. We propose convex relaxations, which can be efficiently solved using interior point methods for SDPs. Weights learnt by the algorithm are also good solutions to kernel learning problems for max-margin classification, typically addressed using MKL methods. Our method is not dependent on the training set size, this does not seem to affect the quality of the solutions (compared to approaches that do not have this feature), offers an advantage in many applications, and compares well to other multi-kernel learning methods (on Caltech101, ADNI). Finally, the solution is provably optimal in a number of special cases.

Footnotes

This work is supported in part by UW-ICTR and the following awards: NIH R21-AG034315, AFOSR FA9550-09-1-0098, NSF DMS 09-15240. Hinrichs is supported by a Wisconsin-CIBM fellowship.

A synopsis of all these features is available in [11]; for ease of reproducibility we did not change the parameters or setup used in [11].

Note that [11] discussed that accuracy reported in Varma and Ray (ICCV 07) for Caltech101 had certain errors. We found that the kernels have been corrected on the author’s websites very recently, which is why we have not included comparisons using those features in this paper.

Contributor Information

Lopamudra Mukherjee, Email: mukherjl@uww.edu.

Vikas Singh, Email: vsingh@biostat.wisc.edu.

Jiming Peng, Email: pengj@illinois.edu.

Chris Hinrichs, Email: hinrichs@cs.wisc.edu.

References

- 1.Shi J, Malik J. Normalized cuts and image segmentation. Trans. on Pattern Analysis and Machine Intell. 2000;22(8):888–905. [Google Scholar]

- 2.Cristianini N, Kandola J, Elissee A. On kernel target alignment. Neural Inf. Processing Syst. 2001 [Google Scholar]

- 3.Crammer K, Keshet J, Singer Y. Kernel design using boosting. Neural Inf. Processing Syst. 2003 [Google Scholar]

- 4.Bach FR, Lanckriet G, Jordan MI. Multiple kernel learning, conic duality, and the SMO algorithm; Intl. Conf. on Machine learning; 2004. [Google Scholar]

- 5.Sonnenburg S, Rätsch G, Schäfer C, Schölkopf B. Large scale multiple kernel learning. Journal of Machine Learning Research. 2006;7:1531–1565. [Google Scholar]

- 6.Rakotomamonjy A, Bach FR, Canu S, Grandvalet Y. SimpleMKL. Journal of Machine Learning Research. 2008;9:2491–2521. [Google Scholar]

- 7.Varma M, Babu BR. More generality in efficient multiple kernel learning; Intl. Conf. on Machine Learning; 2009. [Google Scholar]

- 8.Bach FR. Exploring large feature spaces with hierarchical multiple kernel learning. Neural Inf. Processing Syst. 2009 [Google Scholar]

- 9.Gehler PV, Nowozin S. Infinite kernel learning. Technical Report 178. Max Planck Inst. for Bio. Cyb.; 2008. [Google Scholar]

- 10.Kloft M, Brefeld U, Sonnenburg S, Zien A. Efficient and accurate ℓp-norm multiple kernel learning. Neural Inf. Processing Syst. 2009 [Google Scholar]

- 11.Gehler PV, Nowozin S. On feature combination for multiclass object classification; Intl. Conf. on Computer Vision; 2009. [Google Scholar]

- 12.Lin YY, Liu TL, Fuh CS, Sinica T. Local ensemble kernel learning for object category recognition. Computer Vision and Pattern Recog. 2007 [Google Scholar]

- 13.Meilă M, Shi J. Learning segmentation with random walks. Neural Inf. Processing Syst. 2001 [Google Scholar]

- 14.Cour T, Gogin N, Shi J. Learning spectral graph segmentation. Artificial Intel. and Statistics. 2005 [Google Scholar]

- 15.Bach FR, Jordan MI. Learning spectral clustering, with application to speech separation. Journal of Machine Learning Research. 2006;7:1963–2001. [Google Scholar]

- 16.Meilă M, Shortreed S, Xu L. Regularized Spectral Learning. Artificial Intel. and Statistics. 2005 [Google Scholar]

- 17.Shortreed S. Learning in spectral clustering. PhD thesis. University of Washington; 2006. [Google Scholar]

- 18.Hochbaum DS. Polynomial time algorithms for ratio regions and a variant of normalized cut. Trans. on Pattern Analysis and Machine Intell. 2010;32:889–898. doi: 10.1109/TPAMI.2009.80. [DOI] [PubMed] [Google Scholar]

- 19.Sharon E, Galun M, Sharon D, Basri R, Brandt A. Hierarchy and adaptivity in segmenting visual scenes. Nature. 2006;442:810–813. doi: 10.1038/nature04977. [DOI] [PubMed] [Google Scholar]

- 20.Schaible S, Shi J. Fractional programming: the sum-of-ratios case. Optim. Met. & Soft. 2003;18:219–229. [Google Scholar]

- 21.Charnes A, Cooper WW. Programming with linear fractional functionals. Naval Research Logistics Quarterly. 1962;9(3–4):181–186. [Google Scholar]

- 22.Kolev K, Cremers D. Continuous ratio optimization via convex relaxation with applications to multiview 3D reconstruction. Computer Vision and Pattern Recog. 2009 [Google Scholar]

- 23.Bomze IM, De Klerk E. Solving standard quadratic optimization problems via linear, semidefinite and copositive programming. J. Global Optim. 2002;24(2):163–185. [Google Scholar]

- 24.Kaplan W. A test for copositive matrices. Linear Algebra and its Applications. 2000;313:203–206. [Google Scholar]

- 25.Murty KG, Kabadi SN. Some NP-complete problems in quadratic and nonlinear programming. Math. Programming. 1987;39(1):117–129. [Google Scholar]

- 26.Locatelli M, Bomze IM, Tardella F. New and old bounds for standard quadratic optimization: dominance, equivalence and incomparability. Math. Programming. 2008;115(1):31–63. [Google Scholar]

- 27.Nowak I. A new semidefinite programming bound for indefinite quadratic forms over a simplex. J. Global Optim. 1999;14(4):357–364. [Google Scholar]

- 28.Valizadegan H, Jin R. Generalized maximum margin clustering and unsupervised kernel learning. Neural Inf. Processing Syst. 2006 [Google Scholar]

- 29.Rahimi A, Recht B. Clustering with normalized cuts is clustering with a hyperplane. Statistical Learning in Computer Vision. 2004 [Google Scholar]

- 30.Fei-Fei L, Fergus R, Perona P. Learning generative visual models from few training examples: an incremental bayesian approach tested on 101 object categories. Computer Vision and Pattern Recog. Workshop. 2004 [Google Scholar]