Abstract

Many decisions involve uncertainty, or imperfect knowledge about how choices lead to outcomes. Colloquial notions of uncertainty, particularly when describing a decision as ‘risky’, often carry connotations of potential danger as well. Gambling on a long shot, whether a horse at the racetrack or a foreign oil company in a hedge fund, can have negative consequences, but the impact of uncertainty on decision making extends beyond gambling. Indeed, uncertainty in some form pervades nearly all our choices in daily life. Stepping into traffic to hail a cab, braving an ice storm to be the first at work, or dating the boss’s son or daughter also offer potentially great windfalls, at the expense of surety. We continually face trade-offs between options that promise safety and others that offer an uncertain potential for jackpot or bust. When mechanisms for dealing with uncertain outcomes fail, as in mental disorders such as problem gambling or addiction, the results can be disastrous. Thus, understanding decision making—indeed, understanding behavior itself—requires knowing how the brain responds to and uses information about uncertainty.

The economics of uncertainty

‘Uncertainty’ has been defined in many ways for many audiences. Here we consider it the psychological state in which a decision maker lacks knowledge about what outcome will follow from what choice. The aspect of uncertainty most commonly considered by both economists and neuroscientists is risk, which refers to situations with a known distribution of possible outcomes. Early considerations of risk were tied to a problem of great interest to seventeenth-century intellectuals; namely, how to bet wisely in games of chance. Blaise Pascal recognized that by calculating the likelihood of the different outcomes in a gamble, an informed bettor could choose the option that provided the greatest combination of value (v) and probability (p), obtained by multiplying each outcome's value by its probability and summing across outcomes. This quantity (the sum of v × p) is now known as ‘expected value’.
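To make the definition concrete, the minimal sketch below represents a gamble as a list of (value, probability) pairs and sums value × probability over outcomes. The helper name `expected_value` is ours, not from any library; the numbers are those of the Deal or No Deal scenario discussed in the next paragraph.

```python
from math import isclose

def expected_value(gamble):
    """Return the sum of value * probability over all outcomes.

    `gamble` is a list of (value, probability) pairs whose probabilities
    must sum to 1.
    """
    total_p = sum(p for _, p in gamble)
    if not isclose(total_p, 1.0):
        raise ValueError(f"probabilities sum to {total_p}, not 1")
    return sum(v * p for v, p in gamble)

briefcase = [(500_000, 0.5), (1, 0.5)]   # unknown briefcase: $500,000 or $1
sure_offer = [(100_000, 1.0)]            # the host's sure offer

print(expected_value(briefcase))   # 250000.5
print(expected_value(sure_offer))  # 100000.0
```

By this criterion the briefcase is worth two and a half times the sure offer, which is why choosing the sure offer counts as sacrificing expected value.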

Yet expected value is often a poor predictor of choice. Suppose that you are a contestant on the popular television game show Deal or No Deal. There are two possible prizes remaining: a very large prize of $500,000 and a very small prize of $1. One of those rewards (you do not know which!) is in a briefcase next to you. The host of the game show offers you $100,000 for that briefcase, giving you the enviable yet difficult choice between a sure $100,000 and a 50% chance of $500,000. Selecting the briefcase would be risky, as either a desirable or an undesirable outcome might occur with equal likelihood. Which do you choose? Most individuals faced with real-world analogs of this scenario choose the safe option, even though it has a lower expected value (the briefcase is worth about $250,000 on average). This phenomenon, in which choosers sacrifice expected value for surety, is known as risk aversion. However, the influence of risk and reward on decision making may depend on many factors: a sure $100,000 may mean more to a pauper than to a hedge fund manager. Based on observations such as these, Daniel Bernoulli1 suggested that choice depends on the subjective value, or utility, of goods (u), which leads to models of choice based on ‘expected utility’ (that is, u × p, summed across outcomes). When outcomes will occur with 100% probability (“Should I select the steak dinner or the salad plate?”), people’s choices may be considered to reflect their relative preferences for the different outcomes2.
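Bernoulli proposed that utility grows with the logarithm of wealth. The sketch below assumes that log form, applied to total final wealth, and two hypothetical starting wealth levels ($1,000 for the pauper, $10,000,000 for the hedge fund manager); neither figure comes from the text. It compares the expected utility of the sure offer with that of the briefcase.

```python
import math

def expected_log_utility(wealth, gamble):
    """Expected utility of a gamble under Bernoulli-style log utility.

    Utility is taken as ln(final wealth), an illustrative assumption;
    `gamble` is a list of (prize, probability) pairs.
    """
    return sum(p * math.log(wealth + prize) for prize, p in gamble)

briefcase = [(500_000, 0.5), (1, 0.5)]
sure_offer = [(100_000, 1.0)]

def prefers_sure_thing(wealth):
    """True if the sure offer has higher expected log utility at this wealth."""
    return expected_log_utility(wealth, sure_offer) > expected_log_utility(wealth, briefcase)

for label, wealth in [("pauper", 1_000), ("hedge fund manager", 10_000_000)]:
    choice = "sure $100,000" if prefers_sure_thing(wealth) else "briefcase"
    print(f"{label}: {choice}")
# pauper: sure $100,000
# hedge fund manager: briefcase
```

The same gamble is declined at low wealth and accepted at high wealth, which is the wealth dependence that a concave utility function is meant to capture; the specific wealth levels are arbitrary.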

Although expected utility models provide a simple and powerful theoretical framework for choice under uncertainty, they often fail to describe real-world decision making. Across a wide range of situations—from investment choices to the allocation of effort—uncertainty leads to systematic violations of expected utility models3. In the decisions made by real Deal or No Deal contestants in several countries, contestants’ attitudes toward uncertainty were influenced by the history of their previous decisions4, not just the prizes available for the current decision. Moreover, many real-world decisions have a more complex form of uncertainty because the distribution of outcomes is itself unknown. For example, no one can know all of the consequences that will follow from enrolling at one university or another. When the outcomes of a decision cannot be specified, even probabilistically, the decision is said to be made under ambiguity, following concepts introduced by Knight5 and the terminology of Ellsberg6. In most circumstances, people are even more averse to ambiguity than to risk alone.

Economists and psychologists have studied how these different aspects of uncertainty influence decision making. This research indicates that people are generally uncertainty averse when making decisions about monetary gains, but uncertainty-seeking when faced with potential losses. However, when either probabilities or values get very small, these tendencies reverse. Thus, the same individual may buy lottery tickets in the hope of a large unlikely gain, but purchase insurance to protect against an unlikely loss. To account for these uncertainty-induced deviations from expected utility models, Tversky and Kahneman proposed prospect theory7,8, which posits separate functions for how people judge probabilities and how they convert objective value to subjective utility. Other theorists have developed models that account for the effects of ambiguity on choice, by treating ambiguity as a distribution of probabilities (and thus converting it to risk)9 or by modeling the psychological biases that ambiguity induces (such as attention to extreme outcomes)10,11.
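Prospect theory's two functions can be sketched directly. The functional forms and parameter values below (value exponent 0.88, loss-aversion coefficient 2.25, probability-weighting parameters 0.61 for gains and 0.69 for losses) are the median estimates reported by Tversky and Kahneman in 1992 (ref. 8); treat them as illustrative, since individual estimates vary widely. For a gamble with a single nonzero outcome, the original and cumulative versions of the theory give the same answer. The four test gambles are hypothetical, each paired with a sure amount of equal expected value.

```python
def value(x, alpha=0.88, beta=0.88, lam=2.25):
    """Value function: concave for gains, convex and steeper (by lam) for losses."""
    return x ** alpha if x >= 0 else -lam * (-x) ** beta

def weight(p, gamma):
    """Inverse-S weighting: overweights small p, underweights moderate and large p."""
    return p ** gamma / (p ** gamma + (1 - p) ** gamma) ** (1 / gamma)

def prospect(x, p):
    """Subjective value of 'x with probability p, otherwise nothing'."""
    gamma = 0.61 if x >= 0 else 0.69  # separate weighting parameters for gains and losses
    return weight(p, gamma) * value(x)

# (outcome, probability, sure alternative with the same expected value)
cases = {
    "lottery ticket": (5000, 0.01, 50),
    "insurance": (-5000, 0.01, -50),
    "moderate gain": (1000, 0.5, 500),
    "moderate loss": (-1000, 0.5, -500),
}
choices = {}
for name, (x, p, sure) in cases.items():
    choices[name] = "gamble" if prospect(x, p) > value(sure) else "sure thing"
    print(f"{name}: {choices[name]}")
# lottery ticket: gamble
# insurance: sure thing
# moderate gain: sure thing
# moderate loss: gamble
```

With these parameters the model reproduces the pattern described above: aversion to risky gains and seeking of risky losses at moderate probabilities, with both tendencies reversing for small probabilities (buying the lottery ticket, buying insurance).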

An important area of ongoing research is the study of individual differences in decision making under uncertainty. To address this issue, some researchers have evaluated whether risk attitudes constitute a personality trait12-14. In part, these efforts reflect the intuition, shared by the public and the scientific community, that some individuals are inherently risk-seeking, while others are consistently risk averse. Despite its plausibility, the identification of a risk-seeking phenotype has foundered on a number of difficult problems. First, risk taking seems to be highly domain specific, such that one might find very different attitudes toward risk taking in financial versus health versus social situations. For example, among 126 respondents, no person was consistently risk averse in all five content domains, and only four individuals were consistently risk-seeking14. Moreover, the wording of questions leads to systematic differences in apparent willingness to take risks. People may differ across domains more in how they perceive risk than in their willingness to trade increased risk for increased benefits. Despite these inconsistencies, some data suggest stable gender and cultural differences in attitudes toward uncertainty15,16. Women, for instance, are more averse to uncertainty in all domains except social decision making14. Because the same individual may express different attitudes toward uncertainty under different circumstances, neuroscience has a potentially important role to play: an understanding of the underlying mechanisms may provide a powerful framework for interpreting these diverse behavioral findings.

Risk sensitivity in nonhuman animals

The canonical perspective on decision making in nonhuman animals is that, like people, they are generally risk averse. Indeed, risk aversion is reported for animals as diverse as fish, birds and bumblebees17,18. However, recent studies provide a more nuanced and context-dependent picture of decision making under risk. For example, risk preferences of dark-eyed juncos—a species of small songbird—depend on physiological state19. Birds were given a choice between two trays of millet seeds: one with a fixed number of seeds and a second in which the number of seeds varied probabilistically around the same mean. When the birds were warm, they preferred the fixed option, but when they were cold, they preferred the variable option. The switch from risk aversion to risk seeking as temperature dropped makes intuitive adaptive sense given that these birds do not maintain energy stores in fat because of weight limitations for flight. At the higher temperature, the rate of gain from the fixed option was sufficient to maintain the bird on a positive energy budget, but at the lower temperature energy expenditures were elevated, and the fixed option was no longer adequate to meet the bird’s energy needs. Thus, gambling on the risky option might provide the only chance of hitting the jackpot and acquiring enough resources to survive a long, cold night. In humans, wealth effects on risk taking might reflect the operation of a similar adaptive mechanism, promoting behaviors carrying an infinitesimal, but nonzero, probability of a jackpot. However, state-dependent variables such as energy budget or wealth seem likely to influence decision making in different ways in different species, or even among individuals within the same species, depending on other contextual factors20,21.
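The energy-budget reasoning above can be sketched as a threshold problem: the bird should pick whichever option gives the higher chance of meeting its overnight requirement. All numbers below are hypothetical and are not Caraco's experimental values: ten feeding bouts, a fixed tray yielding 10 seeds per bout, and a variable tray yielding 0 or 20 seeds with equal probability (the same mean of 10).

```python
from math import comb

N_BOUTS = 10          # hypothetical number of feeding bouts before nightfall
FIXED_SEEDS = 10      # fixed tray: 10 seeds every bout
JACKPOT_SEEDS = 20    # variable tray: 20 or 0 seeds, each with p = 0.5 (mean 10)

def p_survive_fixed(requirement):
    """Probability that the fixed option meets the night's energy requirement."""
    return 1.0 if N_BOUTS * FIXED_SEEDS >= requirement else 0.0

def p_survive_variable(requirement):
    """Probability that the variable option meets the requirement.

    Total intake is JACKPOT_SEEDS * k, where k ~ Binomial(N_BOUTS, 0.5).
    """
    hits = sum(comb(N_BOUTS, k) for k in range(N_BOUTS + 1)
               if JACKPOT_SEEDS * k >= requirement)
    return hits / 2 ** N_BOUTS

for label, requirement in [("warm", 90), ("cold", 110)]:
    fixed, variable = p_survive_fixed(requirement), p_survive_variable(requirement)
    best = "fixed" if fixed > variable else "variable"
    print(f"{label}: fixed={fixed:.3f} variable={variable:.3f} -> {best}")
# warm: fixed=1.000 variable=0.623 -> fixed
# cold: fixed=0.000 variable=0.377 -> variable
```

When the requirement lies below the guaranteed intake, variance can only hurt; once it lies above, variance is the only route to survival, so the preferred option flips.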

When reward sizes are held constant, but the delay until reward is unpredictable, animals generally prefer the risky option22. This behavior may reflect the well-known effects of delay on the subjective valuation of rewards, a phenomenon known as temporal discounting23. A wealth of data from studies of interval timing behavior indicates that animals represent delays linearly, with a standard deviation proportional to the mean (the scalar property of timing)24. This internal scaling of temporal intervals effectively skews the distribution of the subjective value of delayed rewards, thereby promoting risk-seeking behavior17. Together, these observations suggest that uncertainty about when a reward might materialize and uncertainty about how much reward might be realized are naturally related. One common explanation for the generality of temporal discounting is that delayed rewards might be viewed as risky, thus leading to preference for the sooner option in intertemporal choice tasks25.
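One way to see why variable delays can be attractive is to use a hyperbolic discount function of the form V = A/(1 + kD), the form associated with Mazur's adjusting-delay procedure23. Because the hyperbola is convex in delay, averaging over a short and a long delay yields more value than the same mean delay delivered for sure. The discount rate k and the delays below are hypothetical.

```python
def hyperbolic_value(amount, delay, k=1.0):
    """Discounted value A / (1 + k*D); k (per second) is a hypothetical rate."""
    return amount / (1 + k * delay)

def expected_discounted_value(amount, delays, k=1.0):
    """Mean discounted value when each delay in `delays` is equally likely."""
    return sum(hyperbolic_value(amount, d, k) for d in delays) / len(delays)

fixed = expected_discounted_value(1.0, [10])        # always 10 s
variable = expected_discounted_value(1.0, [2, 18])  # 2 s or 18 s, mean 10 s

print(f"fixed delay:    {fixed:.3f}")    # 0.091
print(f"variable delay: {variable:.3f}") # 0.193
```

The short delay gains far more value than the long delay loses, so the variable schedule wins even though both have the same mean delay.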

The converse might also be true26,27; for example, when an animal makes repeated decisions about a risky gamble that is resolved immediately, that gamble could also be interpreted as offering virtually certain but unpredictably delayed rewards. In a test of this idea, rhesus macaques performed a gambling task with different delays between choices28. Monkeys preferred the risky option when the time between trials was between 0 and 3 s, but preference for the risky option declined systematically as the time between choices was increased beyond 45 s. One explanation is that the salience of the large reward, and the expected delay until that reward could be obtained, influenced the subjective utility of the risky option. According to this argument, monkeys prefer the risky option because they focus on the large reward and ignore bad outcomes, a possibility consistent with behavioral studies in humans and rats27. Alternatively, monkeys could have a convex utility function for reward (favoring risk) when the time between trials is short, which becomes concave (favoring safety) when the time between trials is long. In principle, these possibilities might be distinguished with neurophysiological data.
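The utility-curvature account can be illustrated with a power utility u(x) = x^ρ: ρ > 1 gives a convex function that favors the gamble, and ρ < 1 a concave one that favors the sure option. The juice volumes and exponents below are hypothetical, chosen only to mirror the task's structure (a sure medium reward versus an equal-odds large or small reward with the same mean).

```python
def power_utility(x, rho):
    """u(x) = x**rho; rho > 1 is convex (risk seeking), rho < 1 concave (risk averse)."""
    return x ** rho

def prefers_risky(rho, small=100.0, medium=150.0, large=200.0):
    """Compare a sure `medium` reward with a 50/50 gamble on `small` or `large`.

    The default volumes (hypothetical, in microliters) give both options the
    same expected value, so only the curvature of utility decides.
    """
    risky = 0.5 * power_utility(small, rho) + 0.5 * power_utility(large, rho)
    safe = power_utility(medium, rho)
    return risky > safe

print(prefers_risky(rho=1.5))  # True: convex utility, risk seeking
print(prefers_risky(rho=0.5))  # False: concave utility, risk averse
```

With ρ = 1 the two options tie, so any preference in an expected-value-matched task points to curvature (or to the attentional account described above).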

Neuroeconomics of decision making under uncertainty

Probability and value in the brain

The extensive economic research on decision making under uncertainty leaves unanswered the question of what brain mechanisms underlie these behavioral phenomena. For example, how does the brain deal with uncertainty? Are there distinct regions that process different forms of uncertainty? What are the contributions of brain systems for reward, executive control and other processes? The complexity of human decision making poses challenges for parsing its neural mechanisms. Even seemingly simple decisions may involve a host of neural processes (Fig. 1). A powerful approach has been to vary one component of uncertainty parametrically while tracking neural changes associated with that parameter, typically with neuroimaging techniques. Such research has identified potential neural substrates for probability and utility.

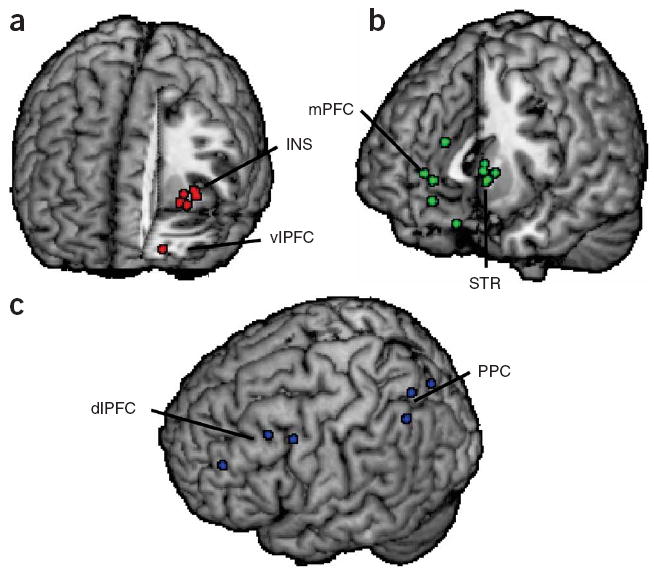

Figure 1.

Brain regions implicated in decision making under uncertainty. Shown are locations of activation from selected functional magnetic resonance imaging studies of decision making under uncertainty. (a) Aversive stimuli, whether decision options that involve increased risk or punishments themselves, have frequently been shown to activate insular cortex (INS)33,52,53,58 and ventrolateral prefrontal cortex (vlPFC)61. (b) Unexpected rewards modulate activation of the striatum (STR)43,46,53,59,76, particularly its ventral aspect, as well as the medial prefrontal cortex (mPFC)43,53,61,76. (c) Executive control processes required for evaluation of uncertain choice options are supported by dorsolateral prefrontal cortex (dlPFC)52,58 and posterior parietal cortex (PPC)33,34. Each circle indicates an activation focus from a single study. All locations are shown in the left hemisphere for ease of visualization.

If a decision maker cannot accurately learn the probabilities of potential outcomes, then decisions may be based on incomplete or erroneous information. In functional magnetic resonance imaging (fMRI) experiments29,30, subjects made a series of decisions under different degrees of uncertainty (from 60% to 100% probability that a correct decision would be rewarded). Importantly, subjects were never given explicit information about these probabilities, but learned them over time through feedback from their choices. Activation of the dorsomedial prefrontal cortex (Brodmann area 8) was significantly and negatively correlated with reward probability, an effect distinct from the activation associated with learning about probabilities. The medial prefrontal cortex has been previously implicated in other protocols in which subjects learn about uncertainty by trial and error, such as hypothesis testing31 and sequence prediction32.
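At its core, a parametric analysis of this kind regresses trial-by-trial response estimates on the manipulated parameter, and the fitted slope indexes how strongly a region tracks it. The sketch below applies ordinary least squares to synthetic, noise-free numbers constructed to decrease with reward probability over the 60% to 100% range; it illustrates the arithmetic only and is not an fMRI pipeline (real analyses build regressors convolved with a hemodynamic response model inside a general linear model).

```python
def ols_slope_intercept(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Synthetic reward probabilities and response amplitudes, constructed as
# 2.0 - 1.5 * p purely for illustration (not data from any study).
probability = [0.6, 0.7, 0.8, 0.9, 1.0]
response = [2.0 - 1.5 * p for p in probability]

slope, intercept = ols_slope_intercept(probability, response)
print(f"slope={slope:.2f}, intercept={intercept:.2f}")  # slope=-1.50, intercept=2.00
```

A negative slope is what "negatively correlated with reward probability" amounts to in such an analysis.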

Different brain regions may contribute to the selection of behavior based on estimated probability under other circumstances. In a probabilistic classification task in which decisions were based on the relative accumulation of information toward one choice or another33, activation of insular, lateral prefrontal and parietal cortices increased with increasing uncertainty, becoming maximal when there was equal evidence for each of two choices. This set of regions overlaps with those implicated in behavioral control and executive processing34-37, suggesting that information about probability may be an important input to neural control systems. Posterior parietal cortex, in particular, may be critical for many sorts of judgments about probability, value, and derivatives such as expected value because of its contributions to calculation and estimation38.
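One common way to quantify the uncertainty of a two-alternative choice is the Shannon entropy of the choice probabilities, which is maximal (1 bit) when evidence is split equally between the options. The text does not say which uncertainty measure the cited study used; entropy is shown here only as an illustrative assumption.

```python
import math

def binary_entropy(p):
    """Shannon entropy (bits) of a two-option choice with probability p for option A."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

for p in (0.5, 0.7, 0.9, 1.0):
    print(f"p={p:.1f}: {binary_entropy(p):.3f} bits")
```

The measure is symmetric around 0.5 and falls to zero when one option is certain, matching the profile of activation that peaks when evidence is balanced.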

Converging evidence from primate electrophysiology and human neuroimaging has identified brain regions associated with utility. The receipt of a rewarding stimulus (‘outcome’ or ‘experienced’ utility) evokes activation of neurons in the ventral tegmental area of the midbrain, as well as in the projection targets of those neurons in the nucleus accumbens within the ventral striatum and in the ventromedial prefrontal cortex39. Computational modeling of the response properties of dopamine neurons has led to the hypothesis that they track a reward prediction error reflecting deviations from expectation40,41. Specifically, firing rate transiently increases in response to unpredicted rewards as well as to cues that predict future rewards, remains at baseline when rewards are fully predicted and decreases transiently when an expected reward fails to occur. Similar results have been observed in human fMRI studies. For example, in a reaction-time game42,43, activation of the ventral striatum depends on the magnitude of the expected reward but is independent of the probability with which that reward is received43. A wide variety of reward types modulate ventral striatum activation, from primary rewards such as juice44,45 to more abstract rewards such as money46,47, humor48 and attractive images49. Indeed, even information carried by unobtained rewards (a fictive error signal) modulates the ventral striatum50.
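The prediction-error idea can be sketched with a simple delta rule (Rescorla–Wagner form): the error is the reward received minus the reward expected, and the expectation moves a fraction α toward each outcome. This is a simplification; temporal-difference models extend the same idea to account for responses to reward-predicting cues. The learning rate α = 0.3 is a hypothetical value.

```python
def delta_rule(rewards, alpha=0.3, initial_expectation=0.0):
    """Track expected reward with a delta rule; return per-trial errors and final expectation."""
    expectation = initial_expectation
    errors = []
    for r in rewards:
        error = r - expectation          # reward prediction error
        expectation += alpha * error     # move expectation toward the outcome
        errors.append(error)
    return errors, expectation

# 30 rewarded trials, then one omission (reward = 0).
errors, expectation = delta_rule([1.0] * 30 + [0.0])
print(f"first trial error: {errors[0]:+.2f}")   # large positive: unpredicted reward
print(f"trial 30 error:    {errors[29]:+.2f}")  # near zero: well-predicted reward
print(f"omission error:    {errors[30]:+.2f}")  # negative: expected reward missing
```

The three printed errors correspond to the three response patterns described above: a burst to unpredicted reward, no change to fully predicted reward and a dip when an expected reward is omitted.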

An important topic for future research is whether representations of value and probability share, at least in part, a common mechanism. While subjects played a gambling task, activation of the ventral striatum showed both a rapid response associated with expected value (maximal when a cue indicated a 100% chance of winning) and a sustained response associated with uncertainty (maximal when a cue indicated a 50% chance of winning)51. These results demonstrate the potential complexity of uncertainty representations in the brain, such that a single psychological state may reflect multiple overlapping mechanisms.
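For a cue that predicts a fixed reward with probability p, expected value rises linearly with p, whereas outcome variance, p(1 − p) times the squared reward, peaks at p = 0.5. This is one simple way to see how a single set of cues can dissociate a signal tracking expected value from one tracking uncertainty. The reward magnitude is an arbitrary unit.

```python
def cue_statistics(p, reward=1.0):
    """Expected value and variance of a cue predicting `reward` with probability p."""
    ev = p * reward
    variance = p * (1 - p) * reward ** 2
    return ev, variance

for p in (0.0, 0.25, 0.5, 0.75, 1.0):
    ev, var = cue_statistics(p)
    print(f"p={p:.2f}  expected value={ev:.3f}  variance={var:.4f}")
```

Expected value is highest for the 100% cue, while variance is highest for the 50% cue and zero for both certain cues.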

Uncertainty influences on neural systems mediating choice

Recent work in neuroeconomics has examined the effects of uncertainty on the neural process of decision making. These studies involve trade-offs between economic parameters, such as a choice between one outcome with higher expected value and another with lower risk. Because of the complexity of these research tasks, such studies are typically done using fMRI in human subjects, with monetary rewards.

In studies of risky choice, an intriguing and common result is increased activation in insular cortex when individuals choose higher-risk outcomes over safer outcomes. In an important early study, subjects played a ‘double-or-nothing’ game52. If subjects chose the safe option (passing), they would keep their current winnings. If subjects instead chose to gamble, they had a chance of doubling their total, at the risk of losing it all. Activation in the right anterior insula increased when the subjects chose to gamble, and the magnitude of insular activation was greatest in those individuals who scored highest on psychometric measures of neuroticism and harm avoidance. Under some circumstances, avoidance of risk may be maladaptive. For example, in a financial decision-making task that involves choices between safe ‘bonds’ and risky ‘stocks’53, when insular activation is relatively high before a decision, subjects tend to make risk-averse mistakes; that is, they choose the bonds, even though the stocks are the objectively superior choice. Insular activation is also robustly observed when decision-making impairments lead to increased risk54,55. Insular activation may reflect that region’s putative role in representing somatic states that can be used to simulate the potential negative consequences of actions56,57, as when people reject unfair offers in an economic game at substantial cost to themselves58.

Individuals tend to avoid risky options that could result in either a potential loss or a potential gain, even when the option has a positive expected value. Most people will reject such gambles until the size of the potential gain becomes approximately twice as large as the size of the potential loss; this phenomenon is known as loss aversion. Loss aversion may reflect competition between distinct systems for losses and gains or unequal responses within a single system supporting both types of outcomes. Both gains and losses evoke activation in similar regions, including the striatum, midbrain, ventral prefrontal cortex and anterior cingulate cortex, with activation increasing with potential gain but decreasing with potential loss59. Consistent with the assumptions of economic prospect theory7, activation in these regions is more sensitive to the magnitude of loss than that of gain. Whether losses and gains are encoded by the same system, as suggested by these results, or by more than one system60,61 remains an open and important question. A potential resolution is suggested by work demonstrating that prediction errors for losses and for gains are encoded in distinct regions of the ventral striatum62.
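A simple way to formalize this threshold is to weight losses by a loss-aversion coefficient λ. Assuming linear value for gains and losses and equal 50% decision weights (simplifications; prospect theory also allows curvature and probability weighting), a 50/50 gamble is accepted only when the gain exceeds λ times the loss. The value λ = 2 is a hypothetical choice matching the "approximately twice" rule of thumb above.

```python
def accepts_mixed_gamble(gain, loss, lam=2.0):
    """Accept a 50/50 gamble to win `gain` or lose `loss` (both positive amounts)
    if its loss-averse value 0.5*gain - 0.5*lam*loss is positive."""
    return 0.5 * gain - 0.5 * lam * loss > 0

for gain in (10, 15, 20, 25):
    print(gain, accepts_mixed_gamble(gain, loss=10))
# 10 False
# 15 False
# 20 False
# 25 True
```

A 50/50 gamble to win $15 or lose $10 has positive expected value, yet it is rejected here; only gains beyond twice the loss are accepted.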

Fewer studies have investigated the neural mechanisms recruited by uncertainty associated with ambiguity, or the lack of knowledge about outcome probabilities. In a study notable for its use of parallel neuroimaging and lesion methods63, subjects chose between a sure reward ($3) and an outcome with unknown probability ($10 if a red card was drawn from a deck with unknown numbers of red and blue cards). On such ambiguity trials, most subjects preferred the sure outcomes. On the control trials, subjects were faced with similar trials that involved only risk (a sure $3 versus a 50% chance of winning $10). Thus, these two types of trials were matched on all factors except whether uncertainty was due to ambiguity or to risk. Ambiguity, relative to risk, increased fMRI activation in the lateral orbitofrontal cortex and the amygdala, whereas risk-related activation was stronger in the striatum and precuneus. In the same behavioral task, subjects with orbitofrontal damage were much less averse to ambiguity (and to risk) than were control subjects with temporal lobe lesions. One potential interpretation of these converging results is that aversive processes mediated by lateral orbitofrontal cortex, which is also implicated in processes associated with punishment61, exert a greater influence under conditions of ambiguity.
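The approach of treating ambiguity as a distribution of probabilities (ref. 9) can be illustrated with this card task. If every red/blue composition of the deck is treated as equally likely, the ambiguous bet reduces to a 50% chance of $10, exactly like the risk trial. The deck size is a hypothetical assumption, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction

DECK_SIZE = 20  # hypothetical number of cards in the ambiguous deck

def ambiguous_win_probability(deck_size=DECK_SIZE):
    """P(red) when every composition (0..deck_size red cards) is equally likely."""
    compositions = range(deck_size + 1)
    return sum(Fraction(red, deck_size) for red in compositions) / len(compositions)

p_red = ambiguous_win_probability()
ev_ambiguous = p_red * 10
ev_risky = Fraction(1, 2) * 10
print(p_red, ev_ambiguous, ev_risky)  # 1/2 5 5
```

Under this reduction both trial types are worth $5 in expectation against the sure $3, so any extra preference for the sure option on ambiguity trials cannot be explained by expected value alone.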

Similar comparisons of the neural correlates of risk and ambiguity have been done by other groups64,65. An fMRI study found that subjects’ preferences for ambiguity correlate with activation in lateral prefrontal cortex, whereas preferences for risk correlate with activity in parietal cortex64. These results link individual differences in economic preferences to activation of specific brain regions. However, there were no activation differences between ambiguity and risk in the ventral frontal cortex or in the amygdala. The lack of such effects may reflect the extensive training of the fMRI subjects, who were highly practiced at the experimental task and close to ambiguity- and risk-neutral in their choices. Thus, lateral prefrontal and parietal cortices may support computational demands of evaluating uncertain gambles, whereas orbitofrontal cortex and related regions may support emotional and motivational contributions to choice. None of these brain regions specifically processes ambiguity or risk; instead, each may contribute different aspects of information processing that are recruited to support decision making under different circumstances.

When faced with uncertainty, decision makers often try to gather information to improve future choices. Yet collecting information often requires forgoing more immediate rewards. This tension between seeking new information and choosing the best option, given what is already known, is called the ‘explore-exploit’ dilemma. In a study investigating the potential neural basis for such trade-offs66, subjects chose between four virtual slot machines, each with a different, unknown, and changing payoff structure. When subjects received large rewards from their chosen machine, there was increased activation in the ventromedial prefrontal cortex, with deviations from an expected reward level represented in the amplitude of ventral striatal activation. Most intriguingly, those trials in which subjects showed the most exploratory behavior were associated with increased activation in frontopolar cortex and the intraparietal sulcus. An important topic for future research will be identifying how these latter regions (and, presumably, others) modulate reward processing and behavioral control.
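A common way to model this trade-off, offered here as an assumption rather than as the cited study's exact model, is a softmax choice rule: each machine is chosen with probability proportional to exp(value / temperature), so higher temperatures produce more exploration. A choice can then be labeled exploratory when the chosen machine is not the one with the highest current value estimate. The value estimates and temperatures are hypothetical.

```python
import math
import random

def softmax(values, temperature):
    """Choice probabilities proportional to exp(value / temperature)."""
    top = max(values)  # subtract the max for numerical stability
    weights = [math.exp((v - top) / temperature) for v in values]
    total = sum(weights)
    return [w / total for w in weights]

def choose(values, temperature, rng):
    """Sample a machine index and report whether the choice was exploratory."""
    probs = softmax(values, temperature)
    choice = rng.choices(range(len(values)), weights=probs, k=1)[0]
    exploratory = choice != values.index(max(values))
    return choice, exploratory

estimates = [52.0, 48.0, 30.0, 61.0]  # hypothetical payoff estimates for 4 machines
rng = random.Random(0)
for temperature in (2.0, 20.0):
    probs = softmax(estimates, temperature)
    explore_rate = sum(choose(estimates, temperature, rng)[1] for _ in range(10_000)) / 10_000
    print(f"T={temperature}: p(best)={probs[3]:.2f}, exploratory rate ~{explore_rate:.2f}")
```

The single temperature parameter captures the dilemma: low values exploit the best-known machine almost exclusively, whereas high values spread choices across machines and gather information about their changing payoffs.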

Neuronal correlates of outcome uncertainty and risky decision making

Neuroimaging studies have thus implicated several brain regions in uncertainty-sensitive decision making. Neurophysiological studies in animals confronted with reward uncertainty and risky decisions have only begun to explore the computations made by neurons in these areas and others. As described above, dopamine neurons fire a phasic burst of action potentials after the delivery of an unexpected reward as well as after the presentation of cues that predict rewards. This same system may contribute to the evaluation of reward uncertainty as well. When monkeys are presented with cues that probabilistically predict rewards, dopaminergic midbrain neurons respond with a tonic increase in activity after cue presentation that reflects reward uncertainty (Fig. 2a)67. Neuronal activity peaks for cues that predict rewards with 50% likelihood and declines as rewards become more or less likely. These results thus suggest that dopamine neurons may convey information about reward uncertainty. However, neuronal activity correlated with uncertain rewards may instead reflect, or even contribute to, the subjective utility of risky options, which was not measured in that study67, or the trial-wise back-propagation of reward prediction errors68 generated by dopamine neurons during learning (but see ref. 69). Nevertheless, the observation that lesions of the nucleus accumbens, a principal target of midbrain dopamine neurons, enhance risk aversion in rats70, as well as the increased incidence of compulsive gambling in patients with Parkinson’s disease taking dopamine agonists71,72, provides some functional support for dopaminergic involvement in risky decision making.

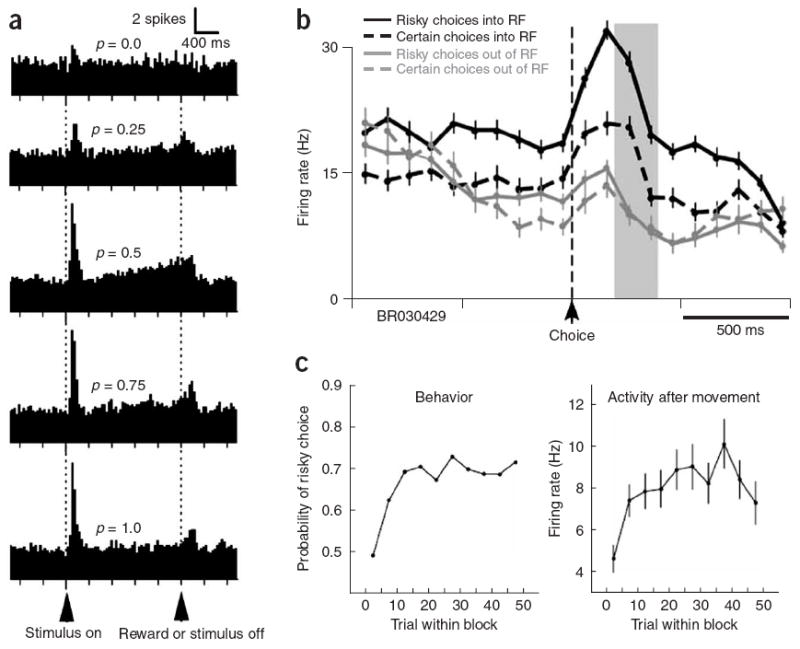

Figure 2.

Neuronal correlates of risky rewards. (a) Midbrain dopamine neurons in monkeys increase firing in anticipation of probabilistically delivered juice rewards (after C.D. Fiorillo et al., 2003)69. (b) Neurons in posterior cingulate cortex preferentially signal uncertain rewards in a visual gambling task. RF, receptive field target. (c) Changes in neuronal activity following a change in the identity of the uncertain target mirrored the development of preferences for that target (b,c after A.N. McCoy and M.L. Platt, 2005)75.

Regardless of which brain system provides initial signals about the uncertainty of impending rewards, an important question for neurobiologists is how such information about risk influences preferences and how these preferences are mapped onto the actions that express decisions. Neurons in posterior cingulate cortex (CGp) may be involved in risky decision making73. Based on anatomy, CGp is well situated to translate subjective valuation signals into choice because it makes connections with brain areas implicated in processing reward, attention and action74. Moreover, this area is activated during decision making when rewards are uncertain in either amount75 or time76, and the magnitude of activation depends on the subjective appeal of proffered rewards77. Finally, neurophysiology shows that CGp neurons respond to salient visual stimuli78, after visual orienting movements78,79 and after rewards80, and that all these responses scale with reward size and predictability80. Together, these data suggest that CGp has an evaluative role in guiding behavior79,80.

In a gambling task to assess whether reward-related modulation of neuronal activity in CGp reflects subjective utility or the objective properties of available rewards73, monkeys were given a choice between two options on a computer monitor that were matched for their expected value. Choosing the safe option always resulted in a medium-sized squirt of juice. Choosing the risky option resulted in a 50% chance of a large squirt of juice and a 50% chance of a small squirt of juice. In this task, monkeys favored the risky option, even in a second experiment when it paid less, on average, than the safe one. Neurons in CGp closely mirrored this behavioral bias. The studied neurons responded more strongly after risky choices (Fig. 2b), and these responses correlated with monkeys’ overall preference for the risky option rather than with the option’s objective value (Fig. 2c). These data are consistent with the hypothesis that CGp contributes to decision making by evaluating external events and actions with respect to subjective psychological state. One concern might be whether modulation of neuronal activity in CGp associated with choosing risky options reflects arousal, which might be elevated when making a risky choice81. Heart rate, a somatic correlate of physiological arousal, did not vary between high-risk and low-risk blocks of trials, thus indicating that elevated arousal cannot completely account for these results. However, the responses of CGp neurons to uncertain gambles may reflect reward uncertainty per se rather than subjective preference for the risky option, as these variables were not dissociated in that study. Further studies that systematically dissociate risk and preference will be needed to address these questions.

Conclusions

Given the pervasiveness of uncertainty, it is hardly surprising that research from a wide range of disciplines (psychology, neuroscience, psychiatry and finance, among many others) has already addressed some key questions: How should investors deal with financial risk, and how do they actually deal with it? What brain systems estimate outcome uncertainty? What is different about those systems in people who make bad choices? These are complex but tractable questions, and scientists have made significant progress toward their solution. We now understand that uncertainty strongly biases choice, that these biases vary across individuals and that specific brain systems contribute to biased decision making under uncertainty. These findings represent real advances, the importance of which should not be minimized.

Yet deep questions remain unanswered and even unaddressed. We contend that fundamental advances in the study of decision making will only arise from consilient integration of expertise and techniques across traditionally independent fields. Neuroscience data can constrain behavioral studies; individual differences in genetic biomarkers can inform economic models; developmental changes in both behavior and brain can guide studies of decision making in adults. Only through explicit interdisciplinary, multimethodological and theoretically integrative research will the current plethora of perspectives coalesce into a single descriptive, predictive theory of risk-sensitive decision making under uncertainty.

Acknowledgments

The authors wish to thank B. Hayden for comments on the manuscript and D. Smith for assistance with figure construction. The Center for Neuroeconomic Studies at Duke University is supported by the Office of the Provost and by the Duke Institute for Brain Sciences. The authors are also supported by MH-070685 (S.A.H.), EY-13496 (M.L.P.) and MH-71817 (M.L.P.).

References

- 1. Bernoulli D. Specimen theoriae novae de mensura sortis. Commentarii Academiae Scientiarum Imperialis Petropolitanae. 1738;5:175–192.

- 2. Samuelson PA. Consumption theory in terms of revealed preference. Economica. 1948;15:243–253.

- 3. Camerer CF. Prospect theory in the wild: evidence from the field. In: Kahneman D, Tversky A, editors. Choices, Values, and Frames. Cambridge Univ. Press; Cambridge, UK: 2000. pp. 288–300.

- 4.Post T, van den Assem M, Baltussen G, Thaler RH. Deal or no deal? Decision making under risk in a large-payoff game show. Am Econ Rev. in the press. [Google Scholar]

- 5.Knight FH. Risk, Uncertainty, and Profit. Houghton Mifflin; New York: 1921. [Google Scholar]

- 6.Ellsberg D. Risk, ambiguity, and the Savage axioms. Q J Econ. 1961;75:643–669. [Google Scholar]

- 7.Kahneman D, Tversky A. Prospect theory: an analysis of decision under risk. Econometrica. 1979;47:263–291. [Google Scholar]

- 8.Tversky A, Kahneman D. Advances in prospect theory: cumulative representation of uncertainty. J Risk Uncertain. 1992;5:297–323. [Google Scholar]

9. Camerer C, Weber M. Recent developments in modeling preferences: uncertainty and ambiguity. J Risk Uncertain. 1992;5:325–370.

10. Ghirardato P, Maccheroni F, Marinacci M. Differentiating ambiguity and ambiguity attitude. J Econ Theory. 2004;118:133–173.

11. Tversky A, Fox CR. Weighing risk and uncertainty. Psychol Rev. 1995;102:269–283.

12. MacCrimmon KR, Wehrung DA. Taking Risks: The Management of Uncertainty. Free Press; New York: 1986.

13. Slovic P. Assessment of risk taking behavior. Psychol Bull. 1964;61:220–233. doi: 10.1037/h0043608.

14. Weber EU, Blais AR, Betz E. A domain specific risk-attitude scale: measuring risk perceptions and risk behaviors. J Behav Decis Making. 2002;15:263–290.

15. Hsee CK, Weber EU. Cross-national differences in risk preference and lay predictions. J Behav Decis Making. 1999;12:165–179.

16. Bontempo RN, Bottom WP, Weber EU. Cross-cultural differences in risk perception: a model-based approach. Risk Anal. 1997;17:479–488.

17. Kacelnik A, Bateson M. Risky theories—the effects of variance on foraging decisions. Am Zool. 1996;36:402–434.

18. Stephens DW, Krebs JR. Foraging Theory. Princeton Univ. Press; Princeton, New Jersey, USA: 1986.

19. Caraco T. Energy budgets, risk and foraging preferences in dark-eyed juncos (Junco hyemalis). Behav Ecol Sociobiol. 1981;8:213–217.

20. Gilby IC, Wrangham RW. Risk-prone hunting by chimpanzees (Pan troglodytes schweinfurthii) increases during periods of high diet quality. Behav Ecol Sociobiol. 2007;61:1771–1779.

21. Kacelnik A. Normative and descriptive models of decision making: time discounting and risk sensitivity. Ciba Found Symp. 1997;208:51–67; discussion 67–70. doi: 10.1002/9780470515372.ch5.

22. Bateson M, Kacelnik A. Starlings’ preferences for predictable and unpredictable delays to food. Anim Behav. 1997;53:1129–1142. doi: 10.1006/anbe.1996.0388.

23. Mazur JE. An adjusting procedure for studying delayed reinforcement. In: Commons M, Mazur J, Nevin J, Rachlin H, editors. The Effect of Delay and of Intervening Events on Reinforcement Value. Erlbaum; Hillsdale, New Jersey, USA: 1987. pp. 55–73.

24. Gibbon J. Scalar expectancy theory and Weber’s law in animal timing. Psychol Rev. 1977;84:279–325.

25. McNamara JM, Houston AI. The common currency for behavioral decisions. Am Nat. 1986;127:358–378.

26. Kalenscher T. Decision making: don’t risk a delay. Curr Biol. 2007;17:R58–R61. doi: 10.1016/j.cub.2006.12.016.

27. Rachlin H. The Science of Self-Control. Harvard Univ. Press; Cambridge, Massachusetts, USA: 2000.

28. Hayden BY, Platt ML. Temporal discounting predicts risk sensitivity in rhesus macaques. Curr Biol. 2007;17:49–53. doi: 10.1016/j.cub.2006.10.055.

29. Volz KG, Schubotz RI, von Cramon DY. Predicting events of varying probability: uncertainty investigated by fMRI. Neuroimage. 2003;19:271–280. doi: 10.1016/s1053-8119(03)00122-8.

30. Volz KG, Schubotz RI, von Cramon DY. Why am I unsure? Internal and external attributions of uncertainty dissociated by fMRI. Neuroimage. 2004;21:848–857. doi: 10.1016/j.neuroimage.2003.10.028.

31. Elliott R, Dolan RJ. Activation of different anterior cingulate foci in association with hypothesis testing and response selection. Neuroimage. 1998;8:17–29. doi: 10.1006/nimg.1998.0344.

32. Schubotz RI, von Cramon DY. A blueprint for target motion: fMRI reveals perceived sequential complexity to modulate premotor cortex. Neuroimage. 2002;16:920–935. doi: 10.1006/nimg.2002.1183.

33. Huettel SA, Song AW, McCarthy G. Decisions under uncertainty: probabilistic context influences activity of prefrontal and parietal cortices. J Neurosci. 2005;25:3304–3311. doi: 10.1523/JNEUROSCI.5070-04.2005.

34. Paulus MP, et al. Prefrontal, parietal, and temporal cortex networks underlie decision-making in the presence of uncertainty. Neuroimage. 2001;13:91–100. doi: 10.1006/nimg.2000.0667.

35. Koechlin E, Ody C, Kouneiher F. The architecture of cognitive control in the human prefrontal cortex. Science. 2003;302:1181–1185. doi: 10.1126/science.1088545.

36. Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

37. Bunge SA, Hazeltine E, Scanlon MD, Rosen AC, Gabrieli JD. Dissociable contributions of prefrontal and parietal cortices to response selection. Neuroimage. 2002;17:1562–1571. doi: 10.1006/nimg.2002.1252.

38. Dehaene S, Piazza M, Pinel P, Cohen L. Three parietal circuits for number processing. Cogn Neuropsychol. 2003;20:487–506. doi: 10.1080/02643290244000239.

39. Schultz W, Dickinson A. Neuronal coding of prediction errors. Annu Rev Neurosci. 2000;23:473–500. doi: 10.1146/annurev.neuro.23.1.473.

40. Montague PR, Dayan P, Sejnowski TJ. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J Neurosci. 1996;16:1936–1947. doi: 10.1523/JNEUROSCI.16-05-01936.1996.

41. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

42. Knutson B, Fong GW, Adams CM, Varner JL, Hommer D. Dissociation of reward anticipation and outcome with event-related fMRI. Neuroreport. 2001;12:3683–3687. doi: 10.1097/00001756-200112040-00016.

43. Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. J Neurosci. 2005;25:4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005.

44. Berns GS, McClure SM, Pagnoni G, Montague PR. Predictability modulates human brain response to reward. J Neurosci. 2001;21:2793–2798. doi: 10.1523/JNEUROSCI.21-08-02793.2001.

45. McClure SM, Berns GS, Montague PR. Temporal prediction errors in a passive learning task activate human striatum. Neuron. 2003;38:339–346. doi: 10.1016/s0896-6273(03)00154-5.

46. Delgado MR, Nystrom LE, Fissell C, Noll DC, Fiez JA. Tracking the hemodynamic responses to reward and punishment in the striatum. J Neurophysiol. 2000;84:3072–3077. doi: 10.1152/jn.2000.84.6.3072.

47. Breiter HC, Aharon I, Kahneman D, Dale A, Shizgal P. Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron. 2001;30:619–639. doi: 10.1016/s0896-6273(01)00303-8.

48. Azim E, Mobbs D, Jo B, Menon V, Reiss AL. Sex differences in brain activation elicited by humor. Proc Natl Acad Sci USA. 2005;102:16496–16501. doi: 10.1073/pnas.0408456102.

49. Aharon I, et al. Beautiful faces have variable reward value: fMRI and behavioral evidence. Neuron. 2001;32:537–551. doi: 10.1016/s0896-6273(01)00491-3.

50. Lohrenz T, McCabe K, Camerer CF, Montague PR. Neural signature of fictive learning signals in a sequential investment task. Proc Natl Acad Sci USA. 2007;104:9493–9498. doi: 10.1073/pnas.0608842104.

51. Preuschoff K, Bossaerts P, Quartz SR. Neural differentiation of expected reward and risk in human subcortical structures. Neuron. 2006;51:381–390. doi: 10.1016/j.neuron.2006.06.024.

52. Paulus MP, Rogalsky C, Simmons A, Feinstein JS, Stein MB. Increased activation in the right insula during risk-taking decision making is related to harm avoidance and neuroticism. Neuroimage. 2003;19:1439–1448. doi: 10.1016/s1053-8119(03)00251-9.

53. Kuhnen CM, Knutson B. The neural basis of financial risk taking. Neuron. 2005;47:763–770. doi: 10.1016/j.neuron.2005.08.008.

54. Paulus MP, Lovero KL, Wittmann M, Leland DS. Reduced behavioral and neural activation in stimulant users to different error rates during decision making. Biol Psychiatry. Published online 23 October 2007. doi: 10.1016/j.biopsych.2007.09.007.

55. Venkatraman V, Chuah YM, Huettel SA, Chee MW. Sleep deprivation elevates expectation of gains and attenuates response to losses following risky decisions. Sleep. 2007;30:603–609. doi: 10.1093/sleep/30.5.603.

56. Damasio AR. The somatic marker hypothesis and the possible functions of the prefrontal cortex. Phil Trans R Soc Lond B. 1996;351:1413–1420. doi: 10.1098/rstb.1996.0125.

57. Craig AD. How do you feel? Interoception: the sense of the physiological condition of the body. Nat Rev Neurosci. 2002;3:655–666. doi: 10.1038/nrn894.

58. Sanfey AG, Rilling JK, Aronson JA, Nystrom LE, Cohen JD. The neural basis of economic decision-making in the Ultimatum Game. Science. 2003;300:1755–1758. doi: 10.1126/science.1082976.

59. Tom SM, Fox CR, Trepel C, Poldrack RA. The neural basis of loss aversion in decision-making under risk. Science. 2007;315:515–518. doi: 10.1126/science.1134239.

60. Kringelbach ML. The human orbitofrontal cortex: linking reward to hedonic experience. Nat Rev Neurosci. 2005;6:691–702. doi: 10.1038/nrn1747.

61. O’Doherty J, Kringelbach ML, Rolls ET, Hornak J, Andrews C. Abstract reward and punishment representations in the human orbitofrontal cortex. Nat Neurosci. 2001;4:95–102. doi: 10.1038/82959.

62. Seymour B, Daw N, Dayan P, Singer T, Dolan R. Differential encoding of losses and gains in the human striatum. J Neurosci. 2007;27:4826–4831. doi: 10.1523/JNEUROSCI.0400-07.2007.

63. Hsu M, Bhatt M, Adolphs R, Tranel D, Camerer CF. Neural systems responding to degrees of uncertainty in human decision-making. Science. 2005;310:1680–1683. doi: 10.1126/science.1115327.

64. Huettel SA, Stowe CJ, Gordon EM, Warner BT, Platt ML. Neural signatures of economic preferences for risk and ambiguity. Neuron. 2006;49:765–775. doi: 10.1016/j.neuron.2006.01.024.

65. Rustichini A, Dickhaut J, Ghirardato P, Smith K, Pardo JV. A brain imaging study of the choice procedure. Games Econ Behav. 2005;52:257–282.

66. Daw ND, O’Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766.

67. Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299:1898–1902. doi: 10.1126/science.1077349.

68. Niv Y, Duff MO, Dayan P. Dopamine, uncertainty and TD learning. Behav Brain Funct. 2005;1:6. doi: 10.1186/1744-9081-1-6.

69. Fiorillo CD, Tobler PN, Schultz W. Evidence that the delay-period activity of dopamine neurons corresponds to reward uncertainty rather than backpropagating TD errors. Behav Brain Funct. 2005;1:7. doi: 10.1186/1744-9081-1-7.

70. Cardinal RN, Howes NJ. Effects of lesions of the nucleus accumbens core on choice between small certain rewards and large uncertain rewards in rats. BMC Neurosci. 2005;6:37. doi: 10.1186/1471-2202-6-37.

71. Dodd ML, et al. Pathological gambling caused by drugs used to treat Parkinson disease. Arch Neurol. 2005;62:1377–1381. doi: 10.1001/archneur.62.9.noc50009.

72. Driver-Dunckley E, Samanta J, Stacy M. Pathological gambling associated with dopamine agonist therapy in Parkinson’s disease. Neurology. 2003;61:422–423. doi: 10.1212/01.wnl.0000076478.45005.ec.

73. McCoy AN, Platt ML. Risk-sensitive neurons in macaque posterior cingulate cortex. Nat Neurosci. 2005;8:1220–1227. doi: 10.1038/nn1523.

74. Vogt BA, Finch DM, Olson CR. Functional heterogeneity in cingulate cortex: the anterior executive and posterior evaluative regions. Cereb Cortex. 1992;2:435–443. doi: 10.1093/cercor/2.6.435-a.

75. Smith K, Dickhaut J, McCabe K, Pardo JV. Neuronal substrates for choice under ambiguity, risk, gains, and losses. Manage Sci. 2002;48:711–718.

76. Kable JW, Glimcher PW. The neural correlates of subjective value during inter-temporal choice. Nat Neurosci. 2007;10:1625–1633. doi: 10.1038/nn2007.

77. Small DM, Zatorre RJ, Dagher A, Evans AC, Jones-Gotman M. Changes in brain activity related to eating chocolate: from pleasure to aversion. Brain. 2001;124:1720–1733. doi: 10.1093/brain/124.9.1720.

78. Dean HL, Crowley JC, Platt ML. Visual and saccade-related activity in macaque posterior cingulate cortex. J Neurophysiol. 2004;92:3056–3068. doi: 10.1152/jn.00691.2003.

79. Olson CR, Musil SY, Goldberg ME. Single neurons in posterior cingulate cortex of behaving macaque: eye movement signals. J Neurophysiol. 1996;76:3285–3300. doi: 10.1152/jn.1996.76.5.3285.

80. McCoy AN, Crowley JC, Haghighian G, Dean HL, Platt ML. Saccade reward signals in posterior cingulate cortex. Neuron. 2003;40:1031–1040. doi: 10.1016/s0896-6273(03)00719-0.

81. Meyer G, et al. Casino gambling increases heart rate and salivary cortisol in regular gamblers. Biol Psychiatry. 2000;48:948–953. doi: 10.1016/s0006-3223(00)00888-x.