Abstract

The brain uses gaze orientation to organize myriad spatial tasks including hand movements. However, the neural correlates of gaze signals and their interaction with brain systems for arm movement control remain unresolved. Many studies have shown that gaze orientation modifies neuronal spike discharge in monkeys and activation in humans related to reaching and finger movements in parietal and frontal areas. To continue earlier studies that addressed the interaction of horizontal gaze and hand movements in humans (Baker et al. 1999), we assessed how horizontal and vertical gaze deviations modified finger-related activation, hypothesizing that areas throughout the brain would exhibit movement-related activation that depended on gaze angle. The results indicated finger movement-related activation related to combinations of horizontal, vertical, and diagonal gaze deviations. We extended our prior findings by observing these gaze-dependent effects in visual cortex, parietal cortex, primary motor cortex, supplementary motor area, putamen, and cerebellum. Most significantly, we found a modulation bias for increased activation toward rightward, upper-right and vertically upward gaze deviations. Our results indicate that gaze modulation of finger movement-related regions in the human brain is spatially organized and could subserve sensorimotor transformations.

Keywords: Finger movement, Functional MRI, Gaze position, Human

Introduction

Humans commonly use their hands to reach, grasp, and manipulate objects, often using visual guidance. To perform these movements, the brain processes the location and physical properties of an object via visual information coded relative to gaze orientation, that is, in a gaze-centered frame of reference (Buneo and Andersen 2006). Psychophysics studies have provided substantial evidence that gaze orientation influences accuracy of hand movements (Bock et al. 1986; Enright 1995; McIntyre et al. 1997; Desmurget et al. 1998; Henriques et al. 1998), thereby demonstrating the importance of gaze signals for planning and controlling hand movements. These results suggest that hand movements are planned in a gaze-centered frame of reference (McIntyre et al. 1997; Henriques et al. 1998; Buneo and Andersen 2006). In addition, gaze orientation can influence identification of a sound source (Lewald 1997) and spatial navigation (Hollands et al. 2002). Therefore, gaze orientation represents a significant input signal that the brain computes when planning hand movements as well as other tasks.

Despite the importance of gaze in controlling everyday behavior, the precise manner in which gaze orientation affects brain processing remains elusive. Neural recording studies with monkeys and neuroimaging work with humans have revealed that gaze orientation modifies neuronal spike counts and MRI signal in various parts of the brain. In monkeys, neuronal spike counts during a reaching movement are modulated as a function of gaze orientation in the parietal cortex (MIP, area 5, V6A; Andersen and Mountcastle 1983; Andersen et al. 1985; Batista et al. 1999; Battaglia-Mayer et al. 2000; 2003; Buneo et al. 2002; Cisek and Kalaska 2002) and in the ventral and dorsal parts of the premotor cortex (PMv and PMd; Mushiake et al. 1997; Boussaoud et al. 1998; Jouffrais and Boussaoud 1999; Cisek and Kalaska 2002) but not in the primary motor cortex (M1; Mushiake et al. 1997). Neurons in the visual cortex also exhibit gaze-related modulation of spiking during visual information processing (Trotter and Celebrini 1999; Rosenbluth and Allman 2002).

Human brain activation studies have also indicated that gaze angle can modulate information processing in a variety of brain areas involved in planning and preparing skeletal movements (Baker et al. 1999; DeSouza et al. 2000; 2002; Medendorp et al. 2003; Andersson et al. 2007). These gaze effects occur for tasks that required gaze alignment with finger pointing (DeSouza et al. 2000; Medendorp et al. 2003) and simply for arm position (Baker et al. 1999). Therefore, gaze effects on movement-related activation occur for dynamic pointing and for postural maintenance.

In the current work, we aimed to achieve a broader understanding of the spatial organization of gaze effects on brain representations for human voluntary movements, beyond the earlier descriptions of how horizontal gaze modulated hand movement-related activation (Baker et al. 1999; DeSouza et al. 2000; Medendorp et al. 2003). Revealing how vertical gaze deviations influence finger-related activation in humans also has importance since the lower visual field seems over-represented in visual and parietal areas compared to the upper visual field (Galletti et al. 1999; Dougherty et al. 2003). This over-representation might mediate the observed lower visual field advantage for controlling visually-guided movements (Danckert and Goodale 2001; Khan and Lawrence 2005). Prior studies focused upon frontal and parietal structures, although other brain structures, such as the cerebellum, have clear roles in oculomotor and arm motor control (Desmurget et al. 2001; Robinson and Fuchs 2001; Miall and Reckess 2002). Therefore, we undertook a more comprehensive evaluation of gaze effects on movement related activation in the entire human brain by asking participants to perform a visually-cued finger-tapping task and to maintain gaze on various visible targets arranged vertically and horizontally.

We hypothesized that visual, parietal, and frontal motor areas would exhibit horizontal gaze effects and that movement-related activation would increase as gaze deviated towards the effector. We also expected that sub-cortical regions such as the cerebellum and basal ganglia would show gaze effects since these regions are involved in generating hand movements and have reciprocal connections with cerebral cortex. We also hypothesized that deviating gaze vertically would modulate movement-related activation; here we reasoned that, since behavioral and functional imaging work indicates that the lower visual field is over-represented, activation would increase as gaze deviated downward in the visual, parietal, and frontal motor areas.

Materials and methods

Participants

Fifteen healthy adults (aged 19–34 years; 10 female, 5 male, 13 right-handed) recruited from the Brown University community participated in this project. They had no history of neurological, sensory or motor disorder. All participants provided written informed consent according to established Institutional Review Board guidelines for human participation in experimental procedures at Brown University and Memorial Hospital of Rhode Island (the site of the MR imaging). Participants received modest monetary compensation for their participation.

Tasks and apparatus

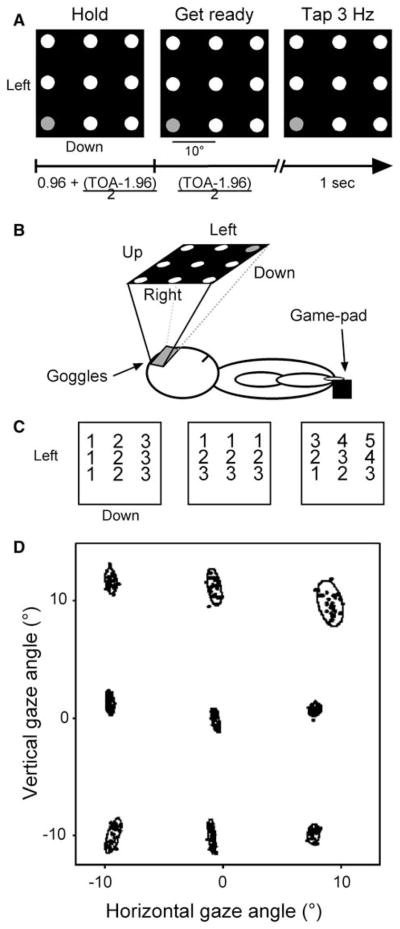

Participants performed a repetitive finger-tapping task, using the right index finger while statically directing gaze to one of nine visually defined locations (Fig. 1a). Given the simplicity of the task, the use of the non-dominant index finger by two participants likely did not introduce nuisance effects. After receiving task instructions, participants practiced the procedures for a few minutes before being positioned in the MRI in the standard supine body position with the right arm lying fully extended and pronated beside their right side (Fig. 1b). An MRI compatible game pad equipped with push-buttons (Resonance Technology, Inc., Northridge, CA) sensed the finger movements, which were registered and stored on a Macintosh G3 Powerbook. Participants wore a set of headphones, for ear protection and communication with the experimenter, and a pair of MRI compatible LCD-based goggles for delivery of visual stimuli and eye movement monitoring via an embedded infrared camera (Resonance Technology, Inc.; 800 × 600 pixels resolution; accuracy of ±1 degree; Viewpoint software, Arrington Research, Scottsdale, AZ). A set of nine targets (circles, 10 mm diameter) in a 3 × 3 array (deviation of 10° of visual angle) was presented to the participant (Fig. 1a, b). This arrangement resembles experimental designs employed by others (e.g., Andersen and Mountcastle 1983; Boussaoud et al. 1998; Bremmer et al. 1999). All nine targets appeared as white dots on a black background, and they remained visible throughout the experiment. We used PsychToolbox for Matlab 5.2 (Mathworks, Natick, MA, http://www.psychtoolbox.org/; Brainard 1997; Pelli 1997) running on a Macintosh Powerbook 3400c to generate visual stimuli.

Fig. 1.

a Task schematic. Participants viewed nine targets and fixed gaze at one target for 16 consecutive trials. In each trial the target’s color changed from red to yellow to green (left-middle-right panel for red-yellow-green, respectively) at which point the participant tapped three times with the right index finger. Trial-onset-asynchronies were 3.84, 4.8, 5.76, 6.72, or 7.68 s (random presentation). b Experimental set-up. Participants wore a pair of MRI compatible goggles and tapped with their right index finger. c Mapping of regression models for the horizontal (left), vertical (middle), and diagonal (right) dimensions. d Average gaze positions for one participant for all trials as the target appeared green. See text for additional details

Each of six successive periods of functional MRI data acquisition consisted of three sets of 16 trials each, for 18 sets in total. For each set of 16 trials, participants fixed gaze upon one of the nine targets, which was indicated by its changing from white to another color on each trial: first red, then yellow, then green blinking at 3 Hz (Fig. 1a). The blinking green target signaled participants to make three synchronous taps using the right index finger. The flashing sequence (red-yellow-blinking green) was repeated 16 times for a particular target; then the same sequence occurred for another target until all nine targets had been sampled, after which all nine targets were sampled once again, thereby yielding two sets of 16 trials of functional MRI data for each of the nine targets. The sequence of trial events yielded functional MRI signals for 288 finger-movement events (9 targets × 2 presentations × 16 trials). After 16 trials of gaze directed to a particular target, all targets turned yellow for 500 ms to indicate that the flashing sequence would occur for a target at a different location. Participants were instructed to maintain gaze fixated on a single target until the set of 16 trials finished. The presentation order of the first and second set of nine targets followed a Latin-square design to eliminate potential order effects and was randomized across participants. Eye position was monitored on-line and recorded via an infrared camera mounted inside the goggles (sampling rate 30 Hz), and participants were informed after each scan (i.e., three sets of 16 trials) if their gaze had moved away from a particular target. Participants succeeded in maintaining gaze at the requested location for the duration of each set of 16 trials, so no trials were rejected for non-compliance with the gaze fixation requirements.

Trials occurred with randomly presented trial-onset-asynchronies ranging from 3.84 to 7.68 s in 0.96 s increments, so as to facilitate the subsequent event-related functional MRI data analysis procedures. Each trial had the following time-course (x refers to one of the trial-onset-asynchronies): red target, 0.96 s + (x − 1.96 s)/2; yellow target, (x − 1.96 s)/2; green target flashing, 1 s.
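The time-course above can be checked with a short calculation. The following is a minimal sketch, not part of the original stimulus code; the function name `trial_phases` is introduced here for illustration only. It computes the three phase durations for each trial-onset-asynchrony and confirms that they sum to the asynchrony itself.

```python
# Trial-onset-asynchronies in seconds, as listed in the text.
TOAS = [3.84, 4.80, 5.76, 6.72, 7.68]

def trial_phases(x):
    """Return (red, yellow, green) durations in seconds for asynchrony x.

    Follows the time-course stated in the text:
    red = 0.96 + (x - 1.96)/2, yellow = (x - 1.96)/2, green = 1.
    """
    variable_part = (x - 1.96) / 2
    red = 0.96 + variable_part
    yellow = variable_part
    green = 1.0
    return red, yellow, green

for x in TOAS:
    red, yellow, green = trial_phases(x)
    # The three phases should exactly fill the trial.
    assert abs((red + yellow + green) - x) < 1e-9
    print(f"x = {x:.2f} s: red {red:.2f} s, yellow {yellow:.2f} s, green {green:.2f} s")
```

For the shortest asynchrony (3.84 s) this gives 1.90 s of red, 0.94 s of yellow, and 1 s of blinking green.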

MRI procedures

We used a 1.5 T Symphony MRI Magnetom MR system equipped with Quantum gradients (Siemens Medical Solutions, Erlangen, Germany) to acquire anatomical and functional MR images. Participants lay supine inside the magnet bore with the head resting inside a circularly polarized receive-only head coil used for radio frequency reception; the body coil transmitted radio frequency signals. Head movements were reduced by cushioning and mild restraint. After shimming the standing magnetic field, we acquired a high-resolution three-dimensional anatomical image consisting of 160 1 mm parasagittal slices (magnetization prepared rapid acquisition gradient echo sequence, MPRAGE; repetition time (TR) = 1,900 ms, echo time (TE) = 4.15 ms, inversion time = 20 ms, 1 mm isotropic voxels, 256 mm field of view). We then acquired T2*-weighted gradient echo images using the blood oxygenation level-dependent (BOLD) mechanism (Kwong et al. 1992; Ogawa et al. 1992). Forty-eight slices were acquired covering the whole brain (TE = 38 ms, TR = 3.84 s, field of view = 192 mm, image matrix = 64 × 64, 3 mm slice thickness for 3 mm isotropic voxels). The MRI system acquired the slices in an ascending interleaved manner. Images were acquired during 81 volumes (75 volumes for three participants) in each of six periods of three 16-trial sets (five periods for one participant, see “Tasks and apparatus” above). The experimental procedures lasted 31.1 min, with another ~9 min for the high-resolution T1-weighted MR image.
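The acquisition figures above are mutually consistent, as the following back-of-the-envelope sketch shows. It uses only values given in the text; the function names are introduced here for illustration.

```python
TR_S = 3.84            # repetition time of the functional scans, in seconds
VOLUMES_PER_PERIOD = 81
PERIODS = 6
FOV_MM = 192.0         # field of view
MATRIX = 64            # image matrix is 64 x 64
SLICE_MM = 3.0         # slice thickness

def session_minutes(volumes=VOLUMES_PER_PERIOD, periods=PERIODS, tr=TR_S):
    """Total functional scanning time in minutes."""
    return volumes * periods * tr / 60.0

def voxel_volume_ul(fov=FOV_MM, matrix=MATRIX, slice_mm=SLICE_MM):
    """Voxel volume in microliters (1 mm^3 equals 1 microliter)."""
    in_plane = fov / matrix
    return in_plane * in_plane * slice_mm

print(round(session_minutes(), 1))  # total functional time in minutes
print(voxel_volume_ul())            # volume of one functional voxel
```

The first value reproduces the stated 31.1 min of functional scanning, and the second gives the 27 μl voxel volume used when reporting cluster sizes.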

MRI signal processing and analysis

Images were processed, analyzed and visualized using the AFNI (analysis of functional neuroimages; Medical College of Wisconsin; National Institutes of Health: http://afni.nimh.nih.gov/afni; Cox 1996; Cox and Hyde 1997) and FSL (FMRIB software library, http://www.fmrib.ox.ac.uk/fsl/) software packages. The first two volumes in each scan were discarded from further consideration due to T1 saturation effects. The anatomical and functional datasets were co-registered and normalized with FSL to the MNI template (Mazziotta et al. 1995) using linear (affine) registration (Jenkinson and Smith 2001). The BOLD dataset for each participant was motion corrected using six motion parameters (x, y and z translations and roll, pitch and yaw rotations), linear trends were removed, and the data were spatially smoothed with a 6 × 6 × 6 mm Gaussian kernel using AFNI tools.

The occurrence of each tapping cue in each of the nine conditions (i.e., the nine target positions) was convolved with a gamma variate function (AFNI, waver tool; Cohen 1997) to yield an impulse response function. We then used these nine reference functions and the six motion correction parameters as inputs to a multiple regression analysis (AFNI, 3dDeconvolve tool) to estimate the β weight of each condition on a voxel-wise basis. AFNI automatically computes a baseline activation level with this model. Finally, we normalized the estimated β weights into percentage signal change by dividing them by the mean signal of the entire time-series.
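The regression described above can be illustrated with a small NumPy sketch. This is not the AFNI pipeline; it is a simplified stand-in under stated assumptions: the gamma variate has the form h(t) = t^b exp(−t/c) with b = 8.6 and c = 0.547 (assumed parameter values, not given in the text), only two conditions are modeled instead of nine, the six motion regressors are omitted, and the onsets and signal are synthetic. The 79 volumes correspond to 81 acquired volumes minus the two discarded ones.

```python
import numpy as np

TR = 3.84  # repetition time in seconds (from the MRI procedures)

def gamma_variate(t, b=8.6, c=0.547):
    """Gamma variate h(t) = t**b * exp(-t/c), scaled to unit peak.
    b and c are ASSUMED values, not taken from the text."""
    t = np.clip(np.asarray(t, dtype=float), 0.0, None)
    h = t**b * np.exp(-t / c)
    return h / h.max()

def make_regressor(onsets_s, n_vols, dt=0.01):
    """Convolve stick functions at the cue onsets with the gamma variate,
    then sample the result once per volume."""
    step = int(round(TR / dt))  # fine-grid samples per volume
    sticks = np.zeros(n_vols * step)
    sticks[np.round(np.asarray(onsets_s) / dt).astype(int)] = 1.0
    kernel = gamma_variate(np.arange(0.0, 20.0, dt))
    return np.convolve(sticks, kernel)[: sticks.size][::step]

def fit_glm(y, regressors):
    """Least-squares fit with a constant baseline. Returns the condition
    betas and the same betas expressed as percent of the mean signal."""
    X = np.column_stack([np.ones(len(y)), *regressors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta[1:], 100.0 * beta[1:] / np.mean(y)

# Synthetic, noise-free voxel with two conditions (hypothetical onsets).
n_vols = 79
r1 = make_regressor([10.0, 60.0, 120.0], n_vols)
r2 = make_regressor([35.0, 90.0, 150.0], n_vols)
y = 1000.0 + 5.0 * r1 + 10.0 * r2
betas, pct = fit_glm(y, [r1, r2])
print(betas, pct)
```

Because the synthetic signal is noise-free, the fit recovers the two amplitudes used to build it; with real data the betas would be estimates and the motion parameters would enter as additional columns of the design matrix.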

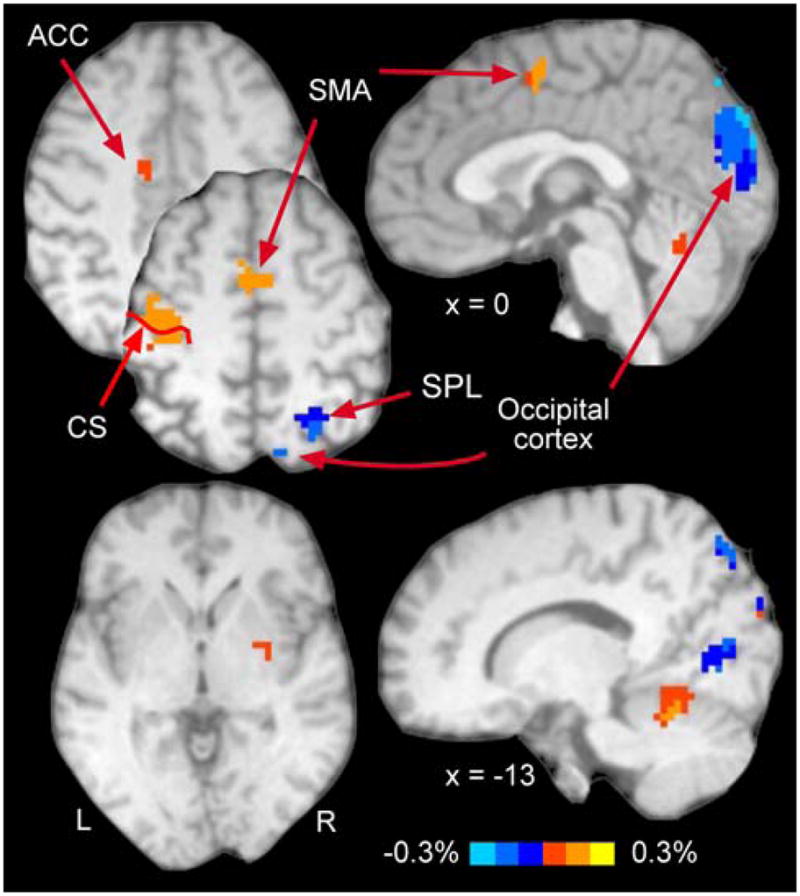

We first determined those voxels that exhibited significant finger movement-related activation by testing the null hypothesis that finger movement did not elicit activation using a t test for each target separately and retaining voxels that passed a threshold of p ≤ 0.001 for at least one target. We then corrected the resulting voxel-level analysis for multiple comparisons by setting a cluster threshold of p ≤ 0.05 corresponding to 12 adjacently clustered voxels. The cluster-level analysis used the Monte Carlo sampling procedure (AFNI, AlphaSim tool) and yielded seven clusters deemed to have finger movement-related activation (Fig. 2; Table 1).

Fig. 2.

Finger-related activation. Activation presented here results from data pooled across all targets. Note strong activation in classically defined motor areas, such as contralateral M1, SMA, anterior cingulate gyrus, and ipsilateral anterior cerebellum. See Table 1 for all activated areas. CS central sulcus

Table 1.

Cluster report for the finger-related activation

| Brain regions (BA) | Volume | Intensity | x | y | z | Horizontal (% of voxels) | Vertical (% of voxels) |
|---|---|---|---|---|---|---|---|
| R Occipital cortex, lingual gyrus, Cuneus, SPL (18–19, 7) | 7,857 | −0.11 | −9 | 77 | 14 | 73.5* | 5.5* |
| L Primary motor cortex (4) | 3,915 | 0.16 | 40 | 20 | 56 | 77.2* | 48.9 |
| R Cerebellum (CR-IV-V) | 2,619 | 0.08 | −12 | 55 | −17 | 80.4* | 22.7* |
| L Anterior cingulate gyrus (24) | 1,296 | 0.13 | 2 | −1 | 54 | 87.5* | 8.3* |
| R SPL (7) | 945 | −0.10 | −25 | 63 | 58 | 100* | 25.8* |
| L SMA (6) | 405 | 0.06 | 12 | 5 | 46 | 86.7* | 7.1* |
| R Putamen | 324 | 0.06 | −29 | 47 | 2 | 83.4 | 100* |

Cluster volume in μl with one voxel = 27 μl. Intensity represents the average activation across all targets. Coordinates represent the center of mass of an activation cluster, with +x indicating left hemisphere. Percent of voxels shows proportion of voxels within a cluster with a positive slope for the horizontal (left < center < right) and vertical dimensions (up < middle < low) with * indicating that the distribution of voxels is significantly different than chance
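As a reading aid for Table 1, cluster volumes can be converted to voxel counts using the stated 27 μl per voxel. The sketch below is an illustration only; the short cluster labels and the function name are introduced here, and the counts of positive-slope voxels are derived by rounding the tabulated horizontal percentages rather than taken from the original data.

```python
VOXEL_UL = 27  # one voxel = 27 microliters, as stated in the table note

# (volume in microliters, percent of voxels with positive horizontal slope)
CLUSTERS = {
    "R occipital/SPL": (7857, 73.5),
    "L M1": (3915, 77.2),
    "R cerebellum": (2619, 80.4),
    "L ACC": (1296, 87.5),
    "R SPL": (945, 100.0),
    "L SMA": (405, 86.7),
    "R putamen": (324, 83.4),
}

def voxel_count(volume_ul):
    """Convert a cluster volume to a whole number of voxels."""
    count, remainder = divmod(volume_ul, VOXEL_UL)
    if remainder:
        raise ValueError(f"{volume_ul} is not a multiple of {VOXEL_UL}")
    return count

for name, (volume_ul, pct_positive) in CLUSTERS.items():
    n = voxel_count(volume_ul)
    n_positive = round(n * pct_positive / 100)  # derived, not reported
    print(f"{name}: {n} voxels, about {n_positive} with positive slope")
```

The smallest cluster (putamen, 324 μl) corresponds to 12 voxels, which matches the cluster-size threshold of 12 adjacent voxels.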

Note that the two left-handed participants showed the expected increased activation in the left hemisphere when tapping; this activation was similar to that observed in the right-handed participants. To check whether inclusion of these two individuals in the group of otherwise right-handed participants might have unduly influenced the results, we conducted a subsidiary analysis without these participants. The results of this sub-analysis indicated the same clusters of activation for the group of right-handed participants and the same gaze-dependent effects on movement-related activation. Therefore, we retained the two left-handed participants in the data analyses reported here.

We then tested the null hypothesis that gaze position did not modify finger movement-related activation in these seven clusters by computing the slope of observed activation across gaze conditions for each voxel within these clusters for the horizontal, vertical, and diagonal components (tested independently). We set the regressors to identify voxels showing increased activation as gaze deviated rightward (horizontal) or downward (vertical); opposite effects would be detected as negative slopes (AFNI, 3dRegAna tool). For the diagonal regression, we tested for an increase of activation for gaze deviations from the upper left target towards the lower right one (UL-LR) and from the lower left target towards the upper right one (LL-UR). In the horizontal dimension, we also used non-linear regression with weights of [1 2 2] for the left, center and right columns of targets to try to replicate the results of Baker et al. (1999), who found a higher number of activated voxels for both straight ahead and rightward gaze deviations than for leftward gaze. We then used a t test across voxels in each cluster to test whether the slopes differed significantly from zero.
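The slope analysis can be sketched as an ordinary least-squares fit of activation against a weight assigned to each of the nine targets. The following is a minimal illustration, not the 3dRegAna implementation. The horizontal, vertical, and [1 2 2] weights follow the text; the diagonal weights (column weight plus or minus row weight) are an assumption made here, since the exact mapping shown in Fig. 1c is not spelled out in the text. The activation values are synthetic.

```python
import numpy as np

ROW_W = np.array([1, 2, 3])  # up, middle, low (positive slope = downward)
COL_W = np.array([1, 2, 3])  # left, center, right (positive slope = rightward)

def gaze_models():
    """Weights for the 3 x 3 target array (rows: up to low, cols: left to right)."""
    rows, cols = np.meshgrid(ROW_W, COL_W, indexing="ij")
    return {
        "horizontal": cols,
        "horizontal_122": np.minimum(cols, 2),  # [1 2 2] across columns
        "vertical": rows,
        "LL-UR": cols + (4 - rows),  # ASSUMED mapping: lower-left to upper-right
        "UL-LR": cols + rows,        # ASSUMED mapping: upper-left to lower-right
    }

def slope(activation, model):
    """Least-squares slope of activation on the model weights."""
    x = model.ravel().astype(float)
    y = np.asarray(activation, dtype=float).ravel()
    x_c = x - x.mean()
    return float(x_c @ (y - y.mean()) / (x_c @ x_c))

# Synthetic voxel whose activation rises by 0.02 per column to the right.
models = gaze_models()
activation = 0.10 + 0.02 * models["horizontal"]
for name, model in models.items():
    print(f"{name}: slope = {slope(activation, model):+.3f}")
```

For this synthetic voxel the horizontal slope is positive and the vertical slope is zero; a voxel preferring leftward or upward gaze would yield negative slopes under the same models.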

We also determined whether gaze position influenced voxels that did not exhibit finger movement-related activation. For this analysis, we created a masked dataset using those voxels identified in the previous analysis as having finger movement-related activation and ran linear regression on the horizontal, vertical, and diagonal dimensions separately. In the horizontal dimension, we also used non-linear regression with weights of [1 2 2] for the left, center and right columns of targets as in the previous analysis. For the horizontal and vertical regressions, we tested for an increase of activation as gaze deviated rightward and downward, respectively. For the diagonal regression, we tested for an increase of activation for gaze deviations from the upper left target towards the lower right one (UL-LR) and from the lower left target towards the upper right one (LL-UR). Figure 1c illustrates the mapping of the horizontal, vertical and diagonal LL-UR linear regression models onto the targets. For all regressions, we also tested for the opposite fit to a model. We used a voxel threshold of p = 0.001 and a cluster threshold of p = 0.05 corresponding to 11 adjacent voxels (smaller than in the previous analysis because of the masking procedure).

We analyzed functional MRI data for 15 participants but behavioral data for 14 participants since one behavioral data-set was corrupted. As noted, we monitored eye position during the MRI procedures to ascertain that gaze remained fixed on a single target during each block of trials. Non-compliance with the instructions occurred rarely; therefore, we had no need to reject trial data for any participant due to errant gaze positioning. Figure 1d illustrates gaze positions for each of the nine targets for a single representative participant. Each dot represents the average of all samples that were within two standard deviations of the mean for a trial during the TAP event (1 s) and each ellipse represents the area covering 95% of the average positions for each trial. We eliminated eye-position data beyond two SDs, since they typically represented instability in the eye-tracking hardware.

We used the brain atlas of Duvernoy (1991), the cerebellum atlas of Schmahmann et al. (1999), and a navigable web-based human brain atlas (http://www.msu.edu/~brains/brains/human/index.html) to localize activation to brain areas and to assign Brodmann areas.

Results

Behavior

We addressed whether a particular gaze deviation affected behavioral aspects of finger movements by computing the reaction time (RT) of finger movements (elapsed time between the green cue appearing and the first tap, see Fig. 1a) and testing the null hypothesis of no difference in RT across targets, using a one-way ANOVA. This analysis revealed no difference in RT across the nine targets, F(8, 117) = 0.35, with the mean (±SD) RT = 328 ± 30 ms (averaged across all nine targets; range: 290–370 ms). We also considered whether participants, contrary to the instructions, performed an unequal number of taps for different targets. The one-way ANOVA failed to reject the null hypothesis that tap quantity did not differ across the nine gaze positions, with a mean number of finger taps per target of 3.05 ± 0.05 (averaged across targets; F(8, 117) = 1.11). These results indicate that behavior was similar across the various gaze locations.
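The degrees of freedom reported for these ANOVAs follow from nine targets and 14 behavioral data-sets, if one assumes a single value per participant and target (an assumption made here; the text does not state how observations were aggregated). The sketch below shows that bookkeeping along with a plain one-way F computation on small made-up numbers; the function names are introduced for illustration.

```python
import numpy as np

def one_way_anova_df(n_groups, n_per_group):
    """Between- and within-group degrees of freedom for a balanced design."""
    total = n_groups * n_per_group
    return n_groups - 1, total - n_groups

def f_statistic(groups):
    """One-way ANOVA F statistic with its degrees of freedom."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    grand_mean = np.concatenate(groups).mean()
    ss_between = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    df_between = len(groups) - 1
    df_within = sum(len(g) for g in groups) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within

# Nine targets, 14 participants with usable behavioral data.
print(one_way_anova_df(9, 14))

# Made-up toy data (three groups of three), only to exercise the function.
print(f_statistic([[1, 2, 3], [2, 3, 4], [3, 4, 5]]))
```

With nine groups of 14 observations the degrees of freedom are 8 and 117, matching the values reported above.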

Finger movement-dependent gaze effects

We identified seven clusters that exhibited finger movement-related activation; their locations appear in Fig. 2, and Table 1 provides additional details about these activation clusters. The largest of these finger movement-related clusters appeared in the occipital lobe and encompassed portions of the visual cortex, lingual gyrus (BA18, BA19), cuneus bilaterally, and right SPL (BA7); its location was consistent with prior work in our laboratory showing involvement of occipital cortical areas in visually paced tapping (Kim et al. 2005). We also found clusters in more classical motor regions, including the left M1 (BA4), right cerebellum (CR-IV-V), left ACC (BA24), right SPL (BA7), left SMA (BA6), and right putamen.

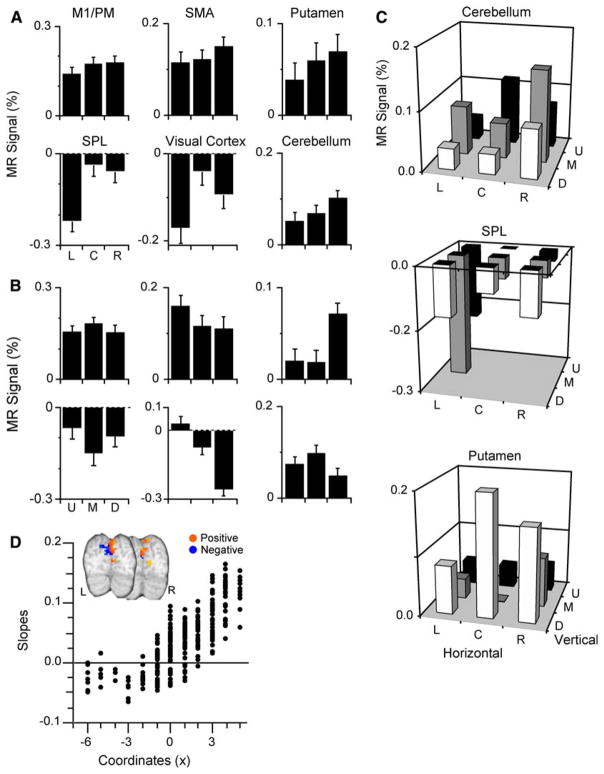

Figure 3a depicts the percent signal change for each cluster as a function of gaze position in the horizontal dimension; the data revealed that all clusters exhibited increased activation as gaze deviated rightward. Our analyses did not reveal any cluster with the greatest movement-related activation when participants gazed leftward. All seven clusters had slopes significantly different from zero (all p < 0.0005) as gaze deviated increasingly rightward, indicating significant gaze effects on finger movement-related activation. Note that for the clusters in SPL and visual cortex the functional MRI signal was deactivated for all gaze positions; thus, gaze deviations toward the right yielded less deactivation, in other words, a relative increase in activation as gaze position moved from the left to the right. The regression analysis using the non-linear [1 2 2] model also indicated that all clusters had slopes significantly different from zero. However, we found that not all of the gaze-related patterns from individual voxels within any particular cluster exhibited a positive slope (Table 1). To evaluate the distribution of gaze-related pattern types within a cluster, we tabulated the percentage of voxels that exhibited a gaze-related pattern with a positive slope and ascertained whether this percentage occurred by chance. For all clusters except the putamen, the percentage of positive slopes differed significantly from that expected by chance (χ² > 3.84, df = 1, p < 0.05). In the putamen, activation for 10/12 voxels exhibited a gaze-related positive slope. The lack of significance likely reflects limited statistical power, since 11/12 voxels with a positive slope would have differed significantly from chance.

Fig. 3.

Gaze effects on the finger movement-related activation for the horizontal and vertical dimensions. a Activation for horizontal gaze shifts (pooled across vertical dimension). Movement-related activation increases as gaze deviates towards the right for all clusters. b Activation related to vertical gaze shifts (pooled across horizontal dimension). Movement-related activation increased as gaze deviated upward for every cluster except the putamen, in which activation increased as gaze deviated downward, and the primary motor cortex, which did not exhibit vertical gaze effects. c Finger-movement-related activation for all gaze positions for the cerebellum, SPL, and putamen. d Slopes of individual voxels in the visual cortex cluster. Slopes show a spatial organization with negative slopes (more activation for leftward than rightward gaze deviation) appearing in the left hemisphere and positive slopes (more activation for rightward than for leftward gaze deviations) in the right hemisphere

For vertical gaze, all but one of the clusters with movement-related activation exhibited slopes significantly different from zero. The clusters in the SMA, ACC, SPL, cerebellum, and visual cortex showed increased activation as gaze deviated upward, whereas the putamen had increased activation as gaze deviated downward (all p < 0.002). The activation in M1 did not exhibit a vertical gaze effect, i.e., slopes were not significantly different from zero (p = 0.66). We also tabulated the percentage of voxels within each cluster that exhibited activation having a positive slope as gaze increasingly deviated downward. This analysis revealed that all clusters except M1 and the putamen had more negative slopes than expected by chance; the putamen had more positive slopes than expected by chance (Table 1).

We found that all seven clusters with finger movement-related activation had slopes significantly different from zero for the LL-UR model (all p < 0.005), and all clusters but the one located in the putamen showed increased activation for gaze deviating toward the upper-right; the putamen exhibited increased activation for gaze deviating towards the lower-left. For the UL-LR model, all clusters but the ACC and SMA (p > 0.01) had slopes significantly different from zero, and these showed increased activation as gaze deviated towards the lower-right. Figure 3c illustrates the finger movement-related activation for all gaze positions for the cerebellum, SPL, and putamen.

We also note that participants experienced asymmetric visual input to the two hemispheres when they looked to the left or to the right targets. Since the targets remained visible throughout the procedures, it is possible that some of the observed activation patterns related to asymmetries in visual input, especially regarding lateralization of possible activation patterns. To address this potential confound, we examined the spatial distribution of the positive and negative slopes within the activation clusters relative to gaze deviating from left to right. We found that the cluster located in the region of the visual cortex exhibited a clear spatial dependence on visual input such that voxels in the left hemisphere had negative activation slopes, that is, less activation as gaze deviated increasingly rightward, whereas voxels in the right hemisphere had positive slopes, that is, more activation as gaze deviated increasingly rightward. No other activation cluster showed similar hemispheric effects related to deviations of gaze angle. We found no spatial effects for gaze in the vertical dimension.

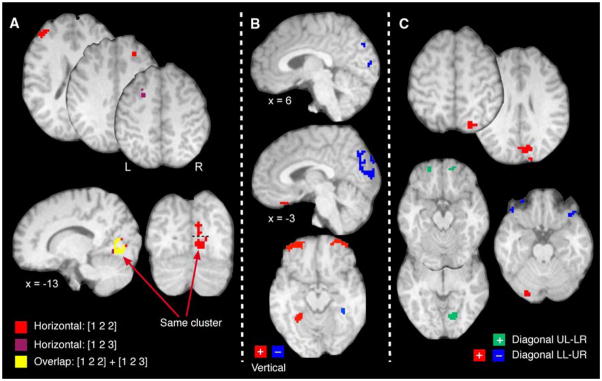

Finger-movement independent gaze effects

We next identified brain regions that exhibited systematic gaze-related modulation of activation independent of finger-movement activation. For the horizontal regression [1 2 3] model, we identified only a single cluster that exhibited increasing activation as gaze deviated from left to right; the cluster was located in the occipital cortex (104 voxels; x = −13, y = 7, z = −1; max R² = 0.24). This cluster largely overlapped (81%; of the remaining voxels, 10 formed a small cluster and 9 were scattered; not shown) with a cluster in the occipital gyrus/lingual gyrus (BA18) activated according to the horizontal [1 2 2] model (Table 2). We also found 12 voxels in the left medial frontal gyrus (MFG) that exhibited increasing activation as gaze deviated rightward. However, these voxels did not form a reliable and contiguous cluster since the activation of a single voxel in this region fell slightly short (p = 0.0015) of the rigorous statistical threshold (dark pink label in axial slices in Fig. 4a). Four clusters had an activation pattern that conformed to the [1 2 2] horizontal model (Table 2, Fig. 4a). Two of these clusters were located in an expanse of right visual cortex (Fig. 4a, red label; on the coronal view they are separated by the dashed line) involving BA17, BA18, and BA19, the lingual and fusiform gyrus, and the right cuneus (most superior cluster). The analysis also revealed a cluster in the left pre-frontal cortex (BA46) and one in the right MFG (BA8; axial slices). Figure 4a also illustrates the locations of these clusters and the corresponding overlap with those clusters showing activation conforming to the horizontal [1 2 3] model (red and yellow labels, respectively).

Table 2.

Cluster report for the horizontal regression analysis: [1 2 2] model

| Brain regions (BA) | Volume | R² | x | y | z |
|---|---|---|---|---|---|
| R Occipital gyrus, lingual gyrus (18) | 2,646 | 0.22 | −13 | 72 | 0 |
| L Pre-frontal cortex (46) | 513 | 0.13 | 43 | −44 | 22 |
| R Cuneus (18) | 486 | 0.16 | −10 | 79 | 18 |
| R MFG (8) | 351 | 0.12 | −22 | −38 | 35 |

All clusters showed an increase of finger-related activation as gaze deviated rightward. R² corresponds to the maximum amount of explained variance (%)

Fig. 4.

Horizontal, vertical, and diagonal gaze effects. a Horizontal gaze effects: brain responses principally for horizontal gaze shifts showing activation in the frontal gyrus bilaterally and occipital cortex (see Table 2 for all activated areas). Numbers refer to areas discussed in text. b Vertical gaze effects: activation related to vertical gaze shifts showing labeling in the occipital cortex, parahippocampal gyrus and orbito-frontal cortex bilaterally (see Table 3 for all activated areas). c Diagonal gaze effects: activation related to two diagonal regression models, lower-left to upper-right (LL-UR) and upper-left to lower-right (UL-LR). For the LL-UR regression, areas with gaze effects included a continuous cluster covering parts of the visual cortex, the superior parietal area and the cuneus, as well as the cerebellum. For the UL-LR regression, gaze effects occurred in the right lingual gyrus, left superior temporal gyrus, and orbito-frontal gyrus bilaterally. Complete details in Tables 2, 3, and 4

Deviations in vertical gaze yielded systematic variation in activation independent of finger movement for 11 clusters: 6 that exhibited increased activation as gaze deviated increasingly downward and 5 that showed more activation as gaze deviated increasingly upward (in Fig. 4b, red and blue labels, respectively; Table 3). Of the five clusters with upward gaze effects, three were adjacent clusters in the occipital cortex, with one cluster located in the occipital gyrus extending superiorly to the pre-cuneus and SPL (BA18, 7; inferior sagittal image) and two clusters in the left cuneus (BA18; most superior sagittal image); they showed increasing activation as gaze shifted from the lower to the upper targets. We found two other clusters with this effect in the left IFG (BA10; not shown) and parahippocampal gyrus (BA37; axial image). The six activated clusters best related to downward gaze appeared in the parahippocampal formation (BA37; axial image) bilaterally, in the anterior orbito-frontal gyrus bilaterally (BA11; axial image) and medially (BA11; sagittal image), and in the right fusiform gyrus (BA37; not shown).

Table 3.

Cluster report for the vertical regression analysis: [1 2 3] model

| Brain regions (BA) | Volume | R2 | x coordinate | y coordinate | z coordinate |
|---|---|---|---|---|---|
| R Occipital gyrus (18–19), SPL (7) | 5,184 | 0.25* | −4 | 83 | 25 |
| L Anterior orbito-frontal cortex (11) | 2,754 | 0.20 | 30 | −57 | −12 |
| R Anterior orbito-frontal cortex (11) | 2,133 | 0.18 | −29 | −55 | −17 |
| L Parahippocampal gyrus/lingual gyrus (18) | 1,539 | 0.18 | 27 | 46 | −11 |
| L IFG (10) | 432 | 0.21* | 28 | −30 | −26 |
| L Cuneus (18) | 405 | 0.16* | 10 | 86 | 31 |
| L Anterior orbito-frontal cortex (11) | 351 | 0.18 | 1 | −30 | −27 |
| R fusiform gyrus (37) | 351 | 0.14 | −36 | 37 | −22 |
| R Parahippocampal gyrus/hippocampus | 351 | 0.14 | −29 | 43 | −7 |
| L Cuneus (18) | 324 | 0.13* | 4 | 93 | 11 |
| R Parahippocampal gyrus | 297 | 0.12* | −35 | 39 | −13 |

R2 values marked with an asterisk (*) indicate that finger-related activation increased as gaze deviated upward; unmarked values indicate that finger-related activation increased as gaze deviated downward.
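The logic of a linear gaze regression such as the [1 2 3] model can be illustrated with a minimal sketch. It assumes, for illustration only, that the weights 1, 2, and 3 code the lower, middle, and upper gaze rows and that the regression is an ordinary least-squares fit of activation on those weights; the activation values are invented, and the function name `linear_fit` is hypothetical rather than part of the analysis software used in the study. The sign of the slope corresponds to the asterisk convention in Table 3, and R2 is the proportion of variance explained.

```python
from statistics import mean

def linear_fit(weights, activation):
    """Least-squares fit of activation = intercept + slope * weight.

    Returns (slope, r_squared).
    """
    w_bar, a_bar = mean(weights), mean(activation)
    sxx = sum((w - w_bar) ** 2 for w in weights)
    sxy = sum((w - w_bar) * (a - a_bar) for w, a in zip(weights, activation))
    syy = sum((a - a_bar) ** 2 for a in activation)
    slope = sxy / sxx
    # R2 of a simple regression equals the squared correlation coefficient.
    r_squared = (sxy ** 2) / (sxx * syy) if syy > 0 else 0.0
    return slope, r_squared

# Assumed coding: 1 = lower, 2 = middle, 3 = upper gaze row (hypothetical).
weights = [1, 2, 3, 1, 2, 3, 1, 2, 3]
# Invented signal changes (%) for one cluster, one value per gaze position.
activation = [0.2, 0.5, 0.6, 0.1, 0.4, 0.8, 0.3, 0.3, 0.7]

slope, r2 = linear_fit(weights, activation)
direction = "upward" if slope > 0 else "downward"
print(f"slope={slope:.3f}, R2={r2:.2f}, activation increases with {direction} gaze")
```

Under these assumptions, a positive slope would correspond to an asterisked entry (more activation with upward gaze) and a negative slope to an unmarked entry.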

We also found activation that tracked linear gaze shifts along the diagonal of the target array. Application of the LL-UR regression model (Fig. 4c; Table 4) revealed seven clusters with two of these clusters exhibiting increased activation as gaze deviated progressively from the lower-left toward the upper-right portions of the visual workspace (Fig. 4c, red label). We found a large activation cluster posteriorly and principally in the right hemisphere that involved the lingual gyrus, the cuneus, and the visual cortex (BA17, BA18, and BA19) and extended superiorly to the right SPL (BA7). There was also a cluster in the left cerebellum (CR-I) that showed the same effect. Clusters that exhibited increasing activation as gaze deviated from the upper-right toward the lower-left of the target array (Fig. 4c, blue label) occurred bilaterally in the orbito-frontal gyrus (BA11; note that we found two clusters in each hemisphere) and the left parahippocampal gyrus (BA37). Finally, the UL-LR regression revealed four clusters (green labels) located in the orbito-frontal gyrus bilaterally (BA11), left superior temporal gyrus (BA38; not shown), and right lingual gyrus (BA18).

Table 4.

Cluster report for the LL-UR and UL-LR diagonal regression analyses

| Brain regions (BA) | Volume | R2 | x coordinate | y coordinate | z coordinate |
|---|---|---|---|---|---|
| LL-UR | | | | | |
| R Occipital gyrus, cuneus, SPL (17,18,19,7) | 6,615 | 0.22 | −10 | 78 | 30 |
| L Orbito-frontal cortex (11) | 756 | 0.12* | 47 | −33 | −15 |
| L Cerebellum (CR-I) | 513 | 0.15 | 24 | 86 | −23 |
| L Orbito-frontal cortex (11) | 486 | 0.15* | 31 | −55 | −16 |
| L Parahippocampal gyrus (37) | 459 | 0.11* | 28 | 47 | −7 |
| R Orbito-frontal cortex (11) | 351 | 0.16* | −39 | −30 | −20 |
| R Orbito-frontal cortex (11) | 324 | 0.12* | −36 | −57 | −17 |
| UL-LR | | | | | |
| R Lingual gyrus (18) | 1,053 | 0.16 | −15 | 71 | −3 |
| L Orbito-frontal gyrus (11) | 351 | 0.11 | 22 | −55 | −17 |
| L Superior temporal gyrus (38) | 324 | 0.12* | 26 | −4 | −38 |
| R Orbito-frontal gyrus (11) | 324 | 0.11 | −15 | −55 | −18 |

For the LL-UR regression, R2 values marked with an asterisk (*) indicate that finger-related activation increased as gaze deviated left and downward; unmarked values indicate that it increased as gaze deviated right and upward. For the UL-LR regression, R2 values marked with an asterisk (*) indicate that finger-related activation increased as gaze deviated left and upward; unmarked values indicate that it increased as gaze deviated right and downward.
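One way to picture the two diagonal models is as regressors built from target coordinates. The sketch below is an illustration under stated assumptions, not the regressors used in the study: it assumes a hypothetical 3 x 3 target grid with x running from left (−1) to right (+1) and y from lower (−1) to upper (+1), and it defines the LL-UR regressor as x + y and the UL-LR regressor as x − y. The function names `ll_ur` and `ul_lr` are invented for this example.

```python
from itertools import product

# Hypothetical 3 x 3 grid: x = -1 (left) .. 1 (right), y = -1 (lower) .. 1 (upper).
targets = list(product((-1, 0, 1), repeat=2))

def ll_ur(x, y):
    """Assumed regressor that grows from lower-left to upper-right."""
    return x + y

def ul_lr(x, y):
    """Assumed regressor that grows from upper-left to lower-right."""
    return x - y

ll_ur_weights = [ll_ur(x, y) for x, y in targets]
ul_lr_weights = [ul_lr(x, y) for x, y in targets]

# Both regressors have zero mean over this grid, so their dot product
# is proportional to their covariance across gaze positions.
dot = sum(a * b for a, b in zip(ll_ur_weights, ul_lr_weights))
print("LL-UR weights:", ll_ur_weights)
print("UL-LR weights:", ul_lr_weights)
print("dot product:", dot)
```

On such a symmetric grid the two diagonal regressors are uncorrelated, so a cluster could in principle follow one diagonal without following the other. A negative fitted slope on the LL-UR regressor would correspond to the asterisked entries in Table 4.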

Discussion

The current results replicate previous findings that static gaze orientation influences finger movement-related brain activation in humans (Baker et al. 1999; DeSouza et al. 2000) and neural spiking in cortical areas of monkeys (Andersen and Mountcastle 1983; Andersen et al. 1985; Batista et al. 1999; Battaglia-Mayer et al. 2000; 2003; Buneo et al. 2002; Cisek and Kalaska 2002). We replicate these gaze effects in frontal and parietal areas and show them for the first time in the cerebellum and putamen. We also show that these gaze effects have an orderly spatial organization, much like that described for neural activity in monkeys (Boussaoud et al. 1998; Bremmer et al. 1998). Finally, we show that gaze orientation also has a global influence on activation independently of finger movement, perhaps an attentional effect, in a host of cortical and sub-cortical regions across the entire brain. These results suggest that gaze represents an essential signal computed by the brain and that it underlies a basic organizational principle for conveying static and dynamic properties of visual input to wide sectors of the brain. Nevertheless, the lateralization of the visual inputs for the gaze left and gaze right conditions alone could have contributed to some of the observed results and may, for some areas, prevent a clear differentiation between modulatory effects related to visual input and those related to gaze.

Gaze effects on finger movement

The current findings provide support for widespread modulatory effects of gaze position on brain processing (e.g., Trotter and Celebrini 1999; Buneo et al. 2002) and in particular interactions between the ocular motor and skeletal motor systems (Baker et al. 1999; Batista et al. 1999; DeSouza et al. 2000; Buneo et al. 2002; Cisek and Kalaska 2002). We found static gaze effects in M1, ACC, and SMA, thereby extending to some frontal motor areas prior observations of gaze effects in humans that had been mostly limited to the parietal cortex (DeSouza et al. 2000; Medendorp et al. 2003). We also show for the first time in humans that maintaining gaze in different positions affects finger movement-related activation in the putamen and cerebellum. While we show that gaze modulated movement-related processing in M1 (Baker et al. 1999), other groups have not found this effect (e.g., Mushiake et al. 1997; DeSouza et al. 2000); the discrepancies may relate to differences in the tasks used across experiments, with gaze position modulating tapping-related activation (Baker et al. 1999 and current results) but not neural processes related to reaching (Mushiake et al. 1997; DeSouza et al. 2000).

We found that gaze held in different positions had widespread influences in the cerebellum and putamen by modulating finger movement-related activation, an effect likely related to the presumed role of these areas in forming voluntary motor commands. The cerebellum has reciprocal connections with the parietal cortex (Middleton and Strick 1998; Glickstein 2003) and participates in eye-hand coordination and regulation of ongoing movements (Desmurget et al. 2001; Robinson and Fuchs 2001; Miall and Reckess 2002). The cerebellum also sends projections to the putamen (Hoshi et al. 2005), which is connected to SMA and M1 via the basal ganglia thalamocortical circuits (Alexander et al. 1986). The cerebellum and putamen, along with parietal and frontal motor areas, likely participate in generating hand movements, and our results suggest that these structures incorporate gaze signals as a common reference frame. While our work does not directly address how these regions integrate gaze and reaching reference frames, it nevertheless suggests interactions between brain systems controlling gaze and skeletal movements.

Although previous studies have demonstrated that gaze orientation modulates human brain processing (e.g., Baker et al. 1999; DeSouza et al. 2000; Medendorp et al. 2003), the current results indicate that these gaze effects follow a spatial organization with a general preference for rightward and upward gaze deviation, at least for finger-tapping. The vertical and diagonal gaze modulation of finger movement-related activation may have particular functional significance. Prior work has demonstrated an over-representation of the lower visual field in visual and parietal areas (Galletti et al. 1999; Dougherty et al. 2003), perhaps indicating asymmetries for visual processing in the vertical dimension. This vertical asymmetry resonates with psychophysical studies showing an advantage in performing goal-directed movements in the lower visual field (Danckert and Goodale 2001; Khan and Lawrence 2005; but see Binsted and Heath 2005). Our results demonstrating coding of vertical visual space in motor areas, such as SMA, cerebellum, and putamen, and also in parietal and visual brain regions, provide a physiological substrate for the observations of Danckert and Goodale (2001) and Khan and Lawrence (2005).

The current findings of gaze modulation in visual, parietal, and frontal cortical areas and the cerebellum are compatible with results indicating that these areas use a gaze-centered frame of reference to code visual information (Trotter and Celebrini 1999; DeSouza et al. 2002; Rosenbluth and Allman 2002) and plan movements (Baker et al. 1999; Batista et al. 1999; DeSouza et al. 2000; Buneo et al. 2002; Cisek and Kalaska 2002). Spatial congruence between the angle of gaze, visual targets, and the physical location of the arm, as we found here, seems to yield enhanced activation in the human brain across a variety of structures. However, this remains speculative since we have not tested the full range of hand and gaze positions. Note that one could have anticipated stronger gaze effects had the spatial congruence between gaze and hand been higher, that is, had participants looked directly towards their hand. There is, however, evidence that performing goal-directed movements with simple sensorimotor transformations yields more MRI signal than movements with complex sensorimotor transformations (Gorbet et al. 2004). Our task focused more on spatial alignment between gaze and hand in the same hemispace, which probably reduced the strength of gaze effects.

The observations of modulated activation in the right parietal cortex might seem surprising, since one might have expected activation to occur predominantly in the left hemisphere, contralateral to the right hand movements. However, this region, especially in the right hemisphere of humans, has a prominent role in spatial attention and information processing (Perenin and Vighetto 1988), a feature of the current task. Also, it is well recognized that the ipsilateral hemisphere is critically involved in hand movements (Davare et al. 2007; Kawashima et al. 1998; Schaefer et al. 2007). Moreover, we observed that changes in gaze position modulated movement-related changes in activation in a large portion of the visual cortex, a region not commonly considered a “motor” structure (but see Kim et al. 2005). However, Astafiev et al. (2004) recently showed enhanced occipital activation for movements performed toward a covertly attended peripheral visual target.

These findings may relate to the gaze effects we observed in occipital regions during visually triggered hand movements. Nevertheless, we acknowledge that activation observed in the visual cortex may have been driven by visual processing alone, since we also found gaze effects in this region in the finger-independent gaze effects analysis. Furthermore, we found that this region had sensitivity to lateralization of visual inputs, a feature not present in other areas, and certainly not as would be predicted by a strict segregation of visual input from the two hemi-fields. Therefore, gaze effects in visual cortex may be driven more by lateralization of visual inputs than by gaze effects on finger-movement activation. We also did not find activation in traditional ocular motor regions such as the parietal, frontal, or supplementary eye fields (Krauzlis 2005), although our task required gaze fixation rather than saccades.

Pure gaze effects

Consistent with previous observations (Trotter and Celebrini 1999; DeSouza et al. 2002; Rosenbluth and Allman 2002; Deutschländer et al. 2005; Andersson et al. 2007), we found that gaze modulated activation related to visual information processing, independent of finger movement, in occipital, frontal, and parietal cortex and the parahippocampal region. Contrary to previous publications (Deutschländer et al. 2005; Andersson et al. 2007), we found a clear spatial organization of gaze effects that generally showed a preference for rightward and upward gaze deviations. This spatial organization paralleled the one observed for finger movements and suggests a tight coupling between the visual and motor systems that could subserve and initiate visuo-motor integration. Gaze effects in the parahippocampal gyrus may not have been expected, but others have described gaze-dependent visual processing (Sato and Nakamura 2003; Deutschländer et al. 2005) as well as finger movement-related activation (Kertzman et al. 1997; Kim et al. 2005). These gaze effects may relate strictly to visual processing, but attention, a feature of our task, may have enhanced the gaze effects, as observed in posterior parietal cortex of monkeys (Andersen and Mountcastle 1983). How attention influences gaze effects on visual processing and on the computation of hand movements has not yet been studied in humans.

Gaze-related gain fields

The spatial organization of the gaze effects we found here resembles the results of Baker et al. (1999) and those describing how gaze shifts by monkeys in two-dimensional space influence spiking (e.g., Andersen and Mountcastle 1983; Boussaoud et al. 1998; Bremmer et al. 1998; Buneo et al. 2002); the relationship between spiking and gaze position has been termed a gaze gain field (Andersen and Mountcastle 1983). The gaze effects observed here could relate to the gaze gain field mechanism observed in monkeys. This gain field mechanism offers an explanation of how visual information about a target location becomes transformed from a retinal to a head/body-centered frame of reference (Salinas and Sejnowski 2001). Additionally, gain fields have roles in other visual tasks such as object recognition and spatial navigation (Salinas and Thier 2000; Salinas and Sejnowski 2001) and are ubiquitous in the monkey brain. In humans, no such effect has yet been described, though our data suggest that these mechanisms occur across several neocortical and subcortical regions.
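The gain field idea can be sketched with a toy model unit. The example assumes, purely for illustration, a Gaussian tuning curve over retinal target position multiplied by a linear gain that depends on horizontal gaze angle; the function `gain_field_response` and all parameter values (preferred position, tuning width, gain slope) are hypothetical and are not estimates from the present data or from the monkey studies cited above.

```python
import math

def gain_field_response(retinal_deg, gaze_deg, preferred_deg=0.0,
                        width_deg=10.0, gain_slope=0.02):
    """Toy gain field: Gaussian retinal tuning scaled by a linear gaze gain.

    All parameters are illustrative assumptions.
    """
    tuning = math.exp(-((retinal_deg - preferred_deg) ** 2) / (2 * width_deg ** 2))
    gain = max(0.0, 1.0 + gain_slope * gaze_deg)  # clipped so the response stays non-negative
    return tuning * gain

retinal_positions = list(range(-30, 31, 5))
for gaze in (-20, 0, 20):  # leftward, central, rightward gaze (degrees)
    responses = [gain_field_response(r, gaze) for r in retinal_positions]
    peak_at = retinal_positions[responses.index(max(responses))]
    print(f"gaze={gaze:+d} deg: peak at retinal {peak_at} deg, "
          f"peak response {max(responses):.2f}")
```

In this sketch the preferred retinal position stays fixed while the response amplitude scales with gaze angle, which is the defining property of a multiplicative gain field. A population of such units with different gain slopes carries both retinal and gaze information, which is what allows a downstream readout to recover target location in a head- or body-centered frame.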

Acknowledgments

This work was funded by the National Institutes of Health (R01-EY01541 to J.N.S.) and the Ittleson Foundation (to J.N.S. and John P. Donoghue). Portions of this work represented partial fulfillment of the Senior Honors project for Mr. Thangavel.

Contributor Information

Patrick Bédard, Department of Neuroscience, Alpert Medical School of Brown University, Box GL-N, Providence, RI 02912, USA.

Arul Thangavel, Department of Neuroscience, Alpert Medical School of Brown University, Box GL-N, Providence, RI 02912, USA.

Jerome N. Sanes, Email: Jerome_Sanes@brown.edu, Department of Neuroscience, Alpert Medical School of Brown University, Box GL-N, Providence, RI 02912, USA

References

- Alexander GE, DeLong MR, Strick PL. Parallel organization of functionally segregated circuits linking basal ganglia and cortex. Annu Rev Neurosci. 1986;9:357–381. doi: 10.1146/annurev.ne.09.030186.002041.

- Andersen RA, Mountcastle VB. The influence of the angle of gaze upon the excitability of the light-sensitive neurons of the posterior parietal cortex. J Neurosci. 1983;3:532–548. doi: 10.1523/JNEUROSCI.03-03-00532.1983.

- Andersen RA, Essick GK, Siegel RM. Encoding of spatial location by posterior parietal neurons. Science. 1985;230:456–458. doi: 10.1126/science.4048942.

- Andersson F, Joliot M, Perchey G, Petit L. Eye position-dependent activity in the primary visual area as revealed by fMRI. Hum Brain Mapp. 2007;28:673–680. doi: 10.1002/hbm.20296.

- Astafiev SV, Stanley CM, Shulman GL, Corbetta M. Extrastriate body area in human occipital cortex responds to the performance of motor actions. Nat Neurosci. 2004;7:542–548. doi: 10.1038/nn1241.

- Baker JT, Donoghue JP, Sanes JN. Gaze direction modulates finger movement activation patterns in human cerebral cortex. J Neurosci. 1999;19:10044–10052. doi: 10.1523/JNEUROSCI.19-22-10044.1999.

- Batista AP, Buneo CA, Snyder LH, Andersen RA. Reach plans in eye-centered coordinates. Science. 1999;285:257–260. doi: 10.1126/science.285.5425.257.

- Battaglia-Mayer A, Ferraina S, Mitsuda T, Marconi B, Genovesio A, Onorati P, Lacquaniti F, Caminiti R. Early coding of reaching in the parietooccipital cortex. J Neurophysiol. 2000;83:2374–2391. doi: 10.1152/jn.2000.83.4.2374.

- Battaglia-Mayer A, Caminiti R, Lacquaniti F, Zago M. Multiple levels of representation of reaching in the parieto-frontal network. Cereb Cortex. 2003;13:1009–1022. doi: 10.1093/cercor/13.10.1009.

- Binsted G, Heath M. No evidence of a lower visual field specialization for visuomotor control. Exp Brain Res. 2005;162:89–94. doi: 10.1007/s00221-004-2108-6.

- Bock O. Contribution of retinal versus extraretinal signals towards visual localization in goal-directed movements. Exp Brain Res. 1986;64:476–482. doi: 10.1007/BF00340484.

- Boussaoud D, Bremmer F. Gaze effects in the cerebral cortex: reference frames for space coding and action. Exp Brain Res. 1999;128:170–180. doi: 10.1007/s002210050832.

- Boussaoud D, Jouffrais C, Bremmer F. Eye position effects on the neuronal activity of dorsal premotor cortex in the macaque monkey. J Neurophysiol. 1998;80:1132–1150. doi: 10.1152/jn.1998.80.3.1132.

- Brainard DH. The psychophysics toolbox. Spat Vis. 1997;10:433–436.

- Bremmer F, Pouget A, Hoffmann KP. Eye position encoding in the macaque posterior parietal cortex. Eur J Neurosci. 1998;10:153–160. doi: 10.1046/j.1460-9568.1998.00010.x.

- Buneo CA, Andersen RA. The posterior parietal cortex: sensorimotor interface for the planning and online control of visually guided movements. Neuropsychologia. 2006;44:2594–2606. doi: 10.1016/j.neuropsychologia.2005.10.011.

- Buneo CA, Jarvis MR, Batista AP, Andersen RA. Direct visuo-motor transformations for reaching. Nature. 2002;416:632–636. doi: 10.1038/416632a.

- Cisek P, Kalaska JF. Modest gaze-related discharge modulation in monkey dorsal premotor cortex during a reaching task performed with free fixation. J Neurophysiol. 2002;88:1064–1072. doi: 10.1152/jn.00995.2001.

- Cohen MS. Parametric analysis of fMRI data using linear systems methods. Neuroimage. 1997;6:93–103. doi: 10.1006/nimg.1997.0278.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Cox RW, Hyde JS. Software tools for analysis and visualization of fMRI data. NMR Biomed. 1997;10:171–178. doi: 10.1002/(sici)1099-1492(199706/08)10:4/5<171::aid-nbm453>3.0.co;2-l.

- Danckert J, Goodale MA. Superior performance for visually guided pointing in the lower visual field. Exp Brain Res. 2001;137:303–308. doi: 10.1007/s002210000653.

- Davare M, Duque J, Vandermeeren Y, Thonnard JL, Olivier E. Role of the ipsilateral primary motor cortex in controlling the timing of hand muscle recruitment. Cereb Cortex. 2007;17:353–362. doi: 10.1093/cercor/bhj152.

- Desmurget M, Pelisson D, Rossetti Y, Prablanc C. From eye to hand: planning goal-directed movements. Neurosci Biobehav Rev. 1998;22:761–788. doi: 10.1016/s0149-7634(98)00004-9.

- Desmurget M, Grea H, Grethe JS, Prablanc C, Alexander GE, Grafton ST. Functional anatomy of nonvisual feedback loops during reaching: a positron emission tomography study. J Neurosci. 2001;21:2919–2928. doi: 10.1523/JNEUROSCI.21-08-02919.2001.

- DeSouza JF, Dukelow SP, Gati JS, Menon RS, Andersen RA, Vilis T. Eye position signal modulates a human parietal pointing region during memory-guided movements. J Neurosci. 2000;20:5835–5840. doi: 10.1523/JNEUROSCI.20-15-05835.2000.

- DeSouza JF, Dukelow SP, Vilis T. Eye position signals modulate early dorsal and ventral visual areas. Cereb Cortex. 2002;12:991–997. doi: 10.1093/cercor/12.9.991.

- Deutschländer A, Marx E, Stephan T, Riedel E, Wiesmann M, Dieterich M, Brandt T. Asymmetric modulation of human visual cortex activity during 10 degrees lateral gaze (fMRI study). Neuroimage. 2005;28:4–13. doi: 10.1016/j.neuroimage.2005.06.001.

- Dougherty RF, Koch VM, Brewer AA, Fischer B, Modersitzki J, Wandell BA. Visual field representations and locations of visual areas V1/2/3 in human visual cortex. J Vis. 2003;3:586–598. doi: 10.1167/3.10.1.

- Duvernoy HM. The human brain: surface three dimensional sectional anatomy and MRI. Springer; New York: 1991.

- Enright JT. The non-visual impact of eye orientation on eye-hand coordination. Vision Res. 1995;35:1611–1618. doi: 10.1016/0042-6989(94)00260-s.

- Galletti C, Fattori P, Kutz DF, Gamberini M. Brain location and visual topography of cortical area V6A in the macaque monkey. Eur J Neurosci. 1999;11:575–582. doi: 10.1046/j.1460-9568.1999.00467.x.

- Glickstein M. Subcortical projections of the parietal lobes. Adv Neurol. 2003;93:43–55.

- Gorbet DJ, Staines WR, Sergio LE. Brain mechanisms for preparing increasingly complex sensory to motor transformations. Neuroimage. 2004;23:1100–1111. doi: 10.1016/j.neuroimage.2004.07.043.

- Henriques DY, Klier EM, Smith MA, Lowy D, Crawford JD. Gaze-centered remapping of remembered visual space in an open-loop pointing task. J Neurosci. 1998;18:1583–1594. doi: 10.1523/JNEUROSCI.18-04-01583.1998.

- Hollands MA, Patla AE, Vickers JN. “Look where you’re going!”: gaze behaviour associated with maintaining and changing the direction of locomotion. Exp Brain Res. 2002;143:221–230. doi: 10.1007/s00221-001-0983-7.

- Hoshi E, Tremblay L, Feger J, Carras PL, Strick PL. The cerebellum communicates with the basal ganglia. Nat Neurosci. 2005;8:1491–1493. doi: 10.1038/nn1544.

- Jenkinson M, Smith SM. A global optimisation method for robust affine registration of brain images. Med Image Anal. 2001;5:143–156. doi: 10.1016/s1361-8415(01)00036-6.

- Jouffrais C, Boussaoud D. Neuronal activity related to eye-hand coordination in the primate premotor cortex. Exp Brain Res. 1999;128:205–209. doi: 10.1007/s002210050837.

- Kawashima R, Matsumura M, Sadato N, Naito E, Waki A, Nakamura S, Matsunami K, Fukuda H, Yonekura Y. Regional cerebral blood flow changes in human brain related to ipsilateral and contralateral complex hand movements: a PET study. Eur J Neurosci. 1998;10:2254–2260. doi: 10.1046/j.1460-9568.1998.00237.x.

- Kertzman C, Schwarz U, Zeffiro TA, Hallett M. The role of posterior parietal cortex in visually guided reaching movements in humans. Exp Brain Res. 1997;114:170–183. doi: 10.1007/pl00005617.

- Khan MA, Lawrence GP. Differences in visuomotor control between the upper and lower visual fields. Exp Brain Res. 2005;164:395–398. doi: 10.1007/s00221-005-2325-7.

- Kim JA, Eliassen JC, Sanes JN. Movement quantity and frequency coding in human motor areas. J Neurophysiol. 2005;94:2504–2511. doi: 10.1152/jn.01047.2004.

- Krauzlis RJ. The control of voluntary eye movements: new perspectives. Neuroscientist. 2005;11:124–137. doi: 10.1177/1073858404271196.

- Kwong KK, Belliveau JW, Chesler DA, Goldberg IE, Weisskoff RM, Poncelet BP, Kennedy DN, Hoppel BE, Cohen MS, Turner R, et al. Dynamic magnetic resonance imaging of human brain activity during primary sensory stimulation. Proc Natl Acad Sci USA. 1992;89:5675–5679. doi: 10.1073/pnas.89.12.5675.

- Lewald J. Eye-position effects in directional hearing. Behav Brain Res. 1997;87:35–48. doi: 10.1016/s0166-4328(96)02254-1.

- Mazziotta JC, Toga AW, Evans A, Fox P, Lancaster J. A probabilistic atlas of the human brain: theory and rationale for its development. The international consortium for brain mapping (ICBM). Neuroimage. 1995;2:89–101. doi: 10.1006/nimg.1995.1012.

- McIntyre J, Stratta F, Lacquaniti F. Viewer-centered frame of reference for pointing to memorized targets in three-dimensional space. J Neurophysiol. 1997;78:1601–1618. doi: 10.1152/jn.1997.78.3.1601.

- Medendorp WP, Goltz HC, Vilis T, Crawford JD. Gaze-centered updating of visual space in human parietal cortex. J Neurosci. 2003;23:6209–6214. doi: 10.1523/JNEUROSCI.23-15-06209.2003.

- Miall RC, Reckess GZ. The cerebellum and the timing of coordinated eye and hand tracking. Brain Cogn. 2002;48:212–226. doi: 10.1006/brcg.2001.1314.

- Middleton FA, Strick PL. The cerebellum: an overview. Trends Neurosci. 1998;21:367–369. doi: 10.1016/s0166-2236(98)01330-7.

- Mushiake H, Tanatsugu Y, Tanji J. Neuronal activity in the ventral part of premotor cortex during target-reach movement is modulated by direction of gaze. J Neurophysiol. 1997;78:567–571. doi: 10.1152/jn.1997.78.1.567.

- Ogawa S, Tank DW, Menon R, Ellermann JM, Kim SG, Merkle H, Ugurbil K. Intrinsic signal changes accompanying sensory stimulation: functional brain mapping with magnetic resonance imaging. Proc Natl Acad Sci USA. 1992;89:5951–5955. doi: 10.1073/pnas.89.13.5951.

- Pelli DG. The Video toolbox software for visual psychophysics: transforming numbers into movies. Spat Vis. 1997;10:437–442.

- Perenin MT, Vighetto A. Optic ataxia: a specific disruption in visuomotor mechanisms. I. Different aspects of the deficit in reaching for objects. Brain. 1988;111:643–674. doi: 10.1093/brain/111.3.643.

- Robinson FR, Fuchs AF. The role of the cerebellum in voluntary eye movements. Annu Rev Neurosci. 2001;24:981–1004. doi: 10.1146/annurev.neuro.24.1.981.

- Rosenbluth D, Allman JM. The effect of gaze angle and fixation distance on the responses of neurons in V1, V2, and V4. Neuron. 2002;33:143–149. doi: 10.1016/s0896-6273(01)00559-1.

- Salinas E, Sejnowski TJ. Gain modulation in the central nervous system: where behavior, neurophysiology, and computation meet. Neuroscientist. 2001;7:430–440. doi: 10.1177/107385840100700512.

- Salinas E, Thier P. Gain modulation: a major computational principle of the central nervous system. Neuron. 2000;27:15–21. doi: 10.1016/s0896-6273(00)00004-0.

- Sato N, Nakamura K. Visual response properties of neurons in the parahippocampal cortex of monkeys. J Neurophysiol. 2003;90:876–886. doi: 10.1152/jn.01089.2002.

- Schaefer SY, Haaland KY, Sainburg RL. Ipsilesional motor deficits following stroke reflect hemispheric specializations for movement control. Brain. 2007;130:2146–2158. doi: 10.1093/brain/awm145.

- Schmahmann JD, Doyon J, McDonald D, Holmes C, Lavoie K, Hurwitz AS, Kabani N, Toga A, Evans A, Petrides M. Three-dimensional MRI atlas of the human cerebellum in proportional stereotaxic space. Neuroimage. 1999;10:233–260. doi: 10.1006/nimg.1999.0459.

- Trotter Y, Celebrini S. Gaze direction controls response gain in primary visual-cortex neurons. Nature. 1999;398:239–242. doi: 10.1038/18444.