Abstract

We have developed a new, unified implementation of the adaptive optics scanning laser ophthalmoscope (AOSLO) incorporating a wide-field line-scanning ophthalmoscope (LSO) and a closed-loop optical retinal tracker. AOSLO raster scans are deflected by the integrated tracking mirrors so that direct AOSLO stabilization is automatic during tracking. The wide-field imager and large-spherical-mirror optical interface design, as well as a large-stroke deformable mirror (DM), enable the AOSLO image field to be corrected at any retinal coordinates of interest in a field of >25 deg. AO performance was assessed by imaging individuals with a range of refractive errors. In most subjects, image contrast was measurable at spatial frequencies close to the diffraction limit. Closed-loop optical (hardware) tracking performance was assessed by comparing sequential image series with and without stabilization. Although tracking is usually accurate to better than 10 μm rms (0.03 deg), it does not yet stabilize images to single-cone precision; it does, however, significantly improve average image quality and increase the number of frames that can be successfully aligned by software-based post-processing methods. The new optical interface allows the high-resolution imaging field to be placed anywhere within the wide field without requiring the subject to re-fixate, enabling easier retinal navigation and faster, more efficient AOSLO montage capture and stitching.

1. INTRODUCTION

Adaptive optics (AO) is an imaging technology supporting a rapidly growing range of applications in ophthalmology and vision research. Established and emerging AO imaging platforms include retinal cameras, confocal scanning laser ophthalmoscopes (SLOs), and optical coherence tomography (OCT) instruments for high-resolution reflectance imaging [1–11]. AO instruments have been applied to the study of photoreceptor biology and function [12–19] and to endogenous and exogenous fluorescence imaging of ganglion and retinal pigment epithelium (RPE) cells [20–22], and they have been used to detect leukocytes and measure blood flow [23,24]. AO systems are also being extended to precision stimulus delivery, microperimetry, measurement of intrinsic retinal signals, psychophysics, and structural and functional vision studies in the research lab and the clinic [25–32]. We expect AO imaging to become important for advanced molecular imaging and for determining the efficacy of gene therapies at the cellular level in human and animal models.

Most AO systems in current use and development share a number of features imposed by the optical constraints of AO system design, regardless of imaging modality. In an AO system, pupil images must be accurately relayed from the eye through a sequence of pupil conjugates located at scanners, deformable mirror(s) (DMs), a wavefront sensor, and finally to source and detector apertures. These relays are generally built from pairs of spherical mirrors used slightly off-axis. To minimize system aberrations, the off-axis angles are generally limited to a few degrees, and the relative orientations of successive mirrors are arranged in particular sequences [7,29]. System field angles are also kept small: non-isoplanatic behavior in the human eye limits the retinal region that a single correction can serve to a few degrees [33]. As a result, AO imaging systems usually operate over fields of view of 1 deg to 3 deg, which is also consistent with the image resolution and pixel sampling required to resolve cones, microvasculature, and other structures at the cellular level. Such high magnification limits the flexibility of AO systems and can make it difficult to access an arbitrary retinal locus quickly and reproducibly.
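Why the off-axis angles must stay small can be made concrete with the Coddington equations for a spherical mirror. The sketch below assumes a collimated input beam (the relays discussed here actually work at finite conjugates) and borrows the 300 mm radius of curvature quoted later for sph 11 and sph 12; it shows that the tangential and sagittal foci separate roughly as the square of the angle of incidence.

```python
import math


def off_axis_foci(radius_mm: float, incidence_deg: float) -> tuple[float, float, float]:
    """Tangential focal length, sagittal focal length, and their difference (mm)
    for a spherical mirror with a collimated beam incident at `incidence_deg`
    (Coddington equations: f_t = (R/2) cos(theta), f_s = (R/2) / cos(theta))."""
    theta = math.radians(incidence_deg)
    f_paraxial = radius_mm / 2.0
    f_tan = f_paraxial * math.cos(theta)
    f_sag = f_paraxial / math.cos(theta)
    return f_tan, f_sag, f_sag - f_tan


for angle in (1.0, 3.0, 6.0, 12.0):
    f_t, f_s, delta = off_axis_foci(300.0, angle)
    print(f"{angle:5.1f} deg: f_t = {f_t:7.2f} mm, f_s = {f_s:7.2f} mm, separation = {delta:5.2f} mm")
```

Doubling the angle roughly quadruples the astigmatic focal separation, which is consistent with keeping each fold to a few degrees and arranging successive folds so that their astigmatism partly cancels.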

Three immediate consequences of these small fields are encountered by AO researchers in practical retinal navigation and imaging: (1) the lack of a wide-field retinal image for global orientation and selection of imaging targets makes examination of the eye more complex and time consuming; (2) the effects of ordinary eye motions are substantially amplified relative to such small fields, reducing the yield of good AO images and making stable imaging of local patches particularly difficult in subjects with poor fixation; and (3) conventional AO optical interfaces to the eye are typically designed with a small or modest field of regard; that is, the angular range of access to the retina is restricted by the diameters of angle-limiting mirrors, so fields of regard are not much larger than image fields of view. To cover wider angular ranges, the eye itself must rotate, guided by a fixation target at a retinal conjugate in the optical system. Furthermore, imaging sequences and montage generation may be compromised by eye motion relative to these small fields, and this motion may not be fully correctable in post-processing because of poor frame overlap, especially with noisy or low-contrast images or with slower scanning modalities (e.g., AO-OCT) [34].

A true wide-field system would facilitate routine examination with a simpler and more comfortable interface to the eye, and it would bring into the field of regard a range of features suitable for optical eye tracking (e.g., lamina cribrosa, scleral crescent, blood vessel junctions, and local pathologies). New high-stroke DMs, often used as “woofers” in “woofer–tweeter” configurations, have made possible new designs that accommodate the far more generous system wavefront error budgets required for wider fields of regard.

2. METHODS

A. Optics

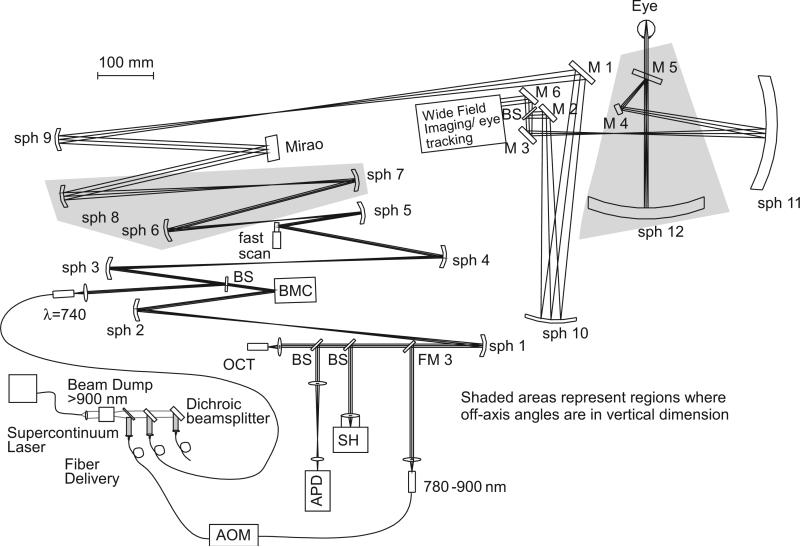

The flattened optical schematic of the overall AOSLO system design, implemented first at the Indiana University (IU) School of Optometry, is shown in Fig. 1. The IU system was assembled on an optical table. A similar system designed to fit entirely on a 2×2 ft breadboard was implemented at Physical Sciences Inc. (PSI) and is described elsewhere [35], but this paper constitutes the first detailed comparison of closed-loop eye tracking performance with precise eye movement metrics derived from the AOSLO images. The IU and PSI systems differ in their AO optical layouts up to the wide-field imaging/eye-tracking module indicated in the figure but are essentially identical thereafter. The front ends of these systems are designed for a wide field of regard, so that wide-field imaging, retinal tracking, and steering of the AOSLO imaging beam are all integrated through common optical relays. This is achieved by combining the AOSLO subsystem and the wide-field imaging/eye-tracking module at the dichroic beamsplitter, BS, near the 15 mm pupil conjugate at M2 (a 2× pupil magnification relative to the design maximum pupil of 7.5 mm at the eye). The AOSLO beam is steered in two orthogonal axes with a two-galvanometer tip–tilt configuration at a pupil that is optically conjugate to the line-scanning ophthalmoscope (LSO) and tracking pupils, which maintains a precise co-pupillary relation among the optical subsystems [42,43].
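Because the beams pass through pupil relays, scan angles scale inversely with pupil size (the optical invariant, D·θ = constant). A minimal paraxial sketch, assuming the stated 2× pupil magnification at M2 and ignoring distortion in the real relays, converts between the mechanical rotation of a mirror at a pupil conjugate and the field angle at the eye:

```python
def eye_angle_deg(mechanical_deg: float, pupil_mag: float) -> float:
    """Field angle at the eye for a mirror rotation at a pupil conjugate whose
    pupil is `pupil_mag` times larger than the eye's pupil (paraxial)."""
    optical_deg = 2.0 * mechanical_deg   # reflection doubles the rotation angle
    return pupil_mag * optical_deg       # D_conj * theta_conj = D_eye * theta_eye


def mechanical_angle_deg(eye_deg: float, pupil_mag: float) -> float:
    """Inverse of eye_angle_deg: mirror rotation needed for a given field angle."""
    return eye_deg / (2.0 * pupil_mag)


pupil_mag = 15.0 / 7.5                          # 15 mm conjugate at M2, 7.5 mm design pupil
print(pupil_mag)                                # 2.0
print(mechanical_angle_deg(15.0, pupil_mag))    # 3.75 (deg, to reach the edge of a ±15 deg field)
```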

Fig. 1.

Unfolded diagram of the integrated Indiana AOSLO implementation. Although the layout is similar to many other AO implementations, the optics are folded vertically in three regions for astigmatism compensation. The first two are shown shaded, and the third is at the final large spherical mirror (sph 12). The wide-field imaging/eye-tracking module integrates those features efficiently in a compact package. Other unique features include a supercontinuum light source, which is filtered, separated into selected bands, and delivered via single-mode fiber to the main imaging system.

The combined beams are then relayed to the eye through a pair of large-diameter spherical mirrors and a pair of orthogonal tracking mirrors; the full field of regard for the AOSLO and the other subsystems is approximately 30 deg. Because the AOSLO beam is also deflected by the same tracking mirrors in this interface design, it is automatically corrected for eye motion at the full bandwidth of the tracker (up to 500 Hz, depending on tracker tuning and gain). A separate video camera monitors the pupil for initial eye positioning. The remainder of this paper describes results obtained with the integrated IU system only.

The design considerations for the wide-field ocular interface have been described in detail elsewhere [7]. Two large spherical mirrors, sph 11 and sph 12 (300 mm radius of curvature), galvanometer scanners at pupil conjugates M2 (AOSLO) and M6 (LSO/Tracker), and tracking mirrors M3 (y-axis) and M4 (x-axis) allow tracking, scanning, and positioning over the large field. These large mirrors are used at finite conjugates, which has a key advantage, particularly for the final mirror: the two pupil conjugates on either side of it (~2f-to-2f relay, nearly 1:1) both lie close to the mirror's center of curvature, so the imaging beam is nearly perpendicular to the mirror surface at all angles in the field of regard. Thus, although some off-axis astigmatism remains because the two pupil conjugates (one of which is the eye) must be physically displaced from the mirror axis, this astigmatism is relatively small and, most importantly, varies only slowly across the AOSLO imaging field. As a result, the system aberrations for all points in a small field can be compensated simultaneously. Low-order system aberrations and defocus are well compensated with a large-stroke (woofer) deformable mirror (Mirao 52-e, Imagine Eyes, Orsay, France), while precision AO correction uses a “tweeter” DM (Multi-DM, Boston Micromachines Corporation, Cambridge, Massachusetts, USA); both mirrors, along with basic AO technology, are described elsewhere [36–40]. The IU system uses a simultaneous control algorithm [41] that automatically distributes aberrations between the two DMs using different damping terms.
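The simultaneous control algorithm of [41] is not reproduced here. As a generic illustration of how separate damping terms can steer low-order error toward the woofer, the sketch below solves a jointly damped (Tikhonov) least-squares problem for both mirrors at once. The one-dimensional "pupil", the polynomial woofer modes, the Gaussian tweeter influence functions, and the damping values are all hypothetical.

```python
import numpy as np


def split_correction(target, A_woofer, A_tweeter, damp_woofer, damp_tweeter):
    """Jointly solve for woofer and tweeter commands u_w, u_t minimizing
        ||target - A_w u_w - A_t u_t||^2 + dw^2 ||u_w||^2 + dt^2 ||u_t||^2,
    i.e. damped least squares with a separate damping term per mirror."""
    n_w, n_t = A_woofer.shape[1], A_tweeter.shape[1]
    A = np.hstack([A_woofer, A_tweeter])
    damping = np.concatenate([np.full(n_w, damp_woofer), np.full(n_t, damp_tweeter)])
    # Appending diag(damping) rows with zero targets implements the penalty terms.
    A_aug = np.vstack([A, np.diag(damping)])
    b_aug = np.concatenate([target, np.zeros(n_w + n_t)])
    u, *_ = np.linalg.lstsq(A_aug, b_aug, rcond=None)
    return u[:n_w], u[n_w:]


x = np.linspace(-1.0, 1.0, 200)                        # hypothetical 1-D pupil coordinate
A_w = np.stack([x**k for k in range(1, 4)], axis=1)    # woofer: smooth, pupil-wide modes
centers = np.linspace(-1.0, 1.0, 24)
A_t = np.exp(-(((x[:, None] - centers[None, :]) / 0.06) ** 2))  # tweeter: 24 local actuators

target = 5.0 * x**2 + 0.2 * np.sin(25.0 * x)           # large low-order + small high-order error
u_w, u_t = split_correction(target, A_w, A_t, damp_woofer=1e-3, damp_tweeter=1.0)
residual = target - A_w @ u_w - A_t @ u_t
print("rms target  ", np.std(target))
print("rms woofer  ", np.std(A_w @ u_w))
print("rms tweeter ", np.std(A_t @ u_t))
print("rms residual", np.std(residual))
```

With the tweeter damped much more heavily than the woofer, the large quadratic term lands almost entirely on the woofer while the tweeter picks up the fine ripple; raising `damp_tweeter` further pushes more of the correction onto the woofer.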

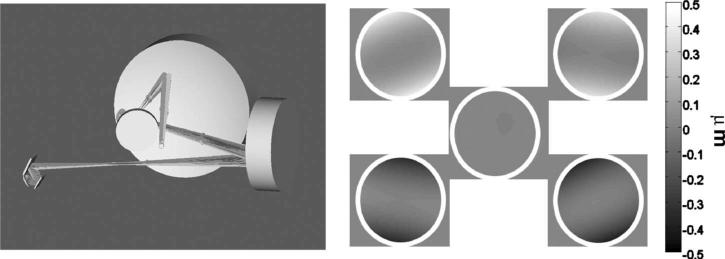

The behavior of the system over ~2 deg field angles is illustrated by the Zemax simulations in Figs. 2 and 3. As shown in Fig. 2, adequately isoplanatic behavior is achieved: a single DM correction compensates the entire 2 deg field to 0.08 waves rms over most of the central field. As the field is moved to higher eccentricities, the correction degrades only slightly, with the rms error rising to at most 0.11 waves in the corners of the small field at the location (8 deg, 8 deg) illustrated in the figure. The predominant front-end system aberration is astigmatism, which can be corrected with either the woofer (Mirao) or the tweeter (BMC) but is better corrected with the former because the required stroke constitutes a smaller fraction of its total range. As examples of the capacity to correct these wide-field system aberrations, Zemax simulations of uncorrected and corrected rms system wavefront errors, along with the compensating Mirao DM surface sags, are shown in Figs. 3(a)–3(c) for the top (+15 deg), center (0 deg), and bottom (−15 deg) of the field in a paraxial eye. These simulated corrections use the Mirao DM alone, optimized through third-order Zernike polynomials over several points in a 2 deg AOSLO scan. The average rms error after AO correction is <0.15 waves (0.11 μm at 750 nm). The maximum stroke needed to compensate system aberrations over the 30 deg field, shown in the Mirao surface sag false-color maps (in μm, center panels in Fig. 3), is <10 μm.
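Two quick checks help interpret these numbers. Converting waves to micrometers is simply σ·λ, and the extended Maréchal approximation S ≈ exp[−(2πσ)²], with σ in waves, gives a rough Strehl ratio for small residual errors. The Strehl estimates below are an interpretive aid, not values reported from the simulations.

```python
import math


def waves_to_um(rms_waves: float, wavelength_nm: float) -> float:
    """Convert an rms wavefront error in waves to micrometers."""
    return rms_waves * wavelength_nm / 1000.0


def marechal_strehl(rms_waves: float) -> float:
    """Extended Marechal approximation; reasonable only for small errors (below ~0.2 waves)."""
    return math.exp(-(2.0 * math.pi * rms_waves) ** 2)


print(waves_to_um(0.15, 750.0))          # ~0.1125 um, quoted above as 0.11 um
for sigma in (0.08, 0.11, 0.15):
    print(f"{sigma:.2f} waves -> Strehl ~ {marechal_strehl(sigma):.2f}")
```

By the usual Maréchal criterion (σ ≈ λ/14, S ≈ 0.8), the 0.08-wave value sits near the conventional diffraction-limited threshold.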

Fig. 2.

Zemax simulations: near-diffraction-limited performance across a small imaging field. The angles were adjusted for 8 deg eccentricity along a diagonal. The Mirao mirror simulates a correction for the best field. Wavefronts for the four corners and the center of a 2.25 deg (diagonal) imaging field are shown. All rms wavefront errors are less than 0.11 μm. For AOSLO imaging fields near 0 deg eccentricity, variation is markedly smaller, with rms errors typically below 0.08 μm.

Fig. 3.

Zemax simulations of wavefront corrections for 2 deg AOSLO scans at three locations, corresponding to (a) the top (+15 deg), (b) the center (0 deg), and (c) the bottom (−15 deg) of the field in a paraxial eye. Left panels, uncorrected system wavefront errors; center panels, compensating Mirao surface sags to third order, with scales in μm; right panels, resulting corrected wavefronts. The average rms error after AO correction is <0.15 waves (0.11 μm at 750 nm). The maximum stroke (Mirao sag) needed to compensate system aberrations over the 30 deg field is <10 μm.

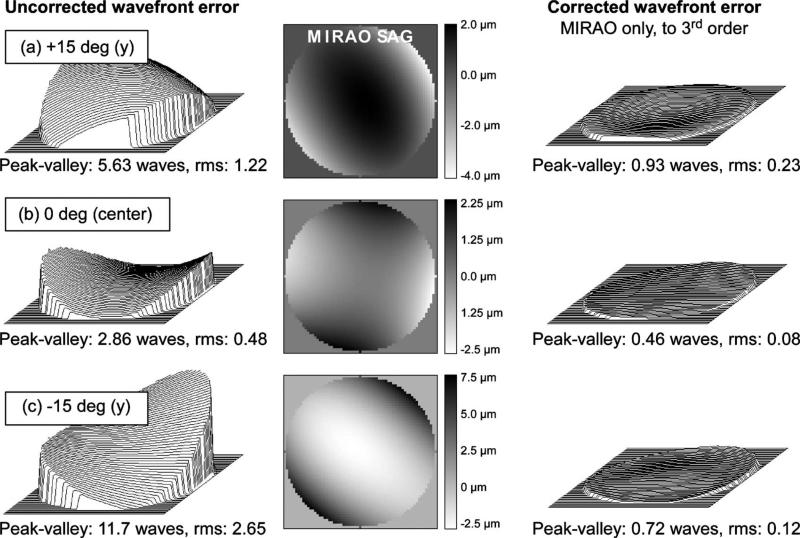

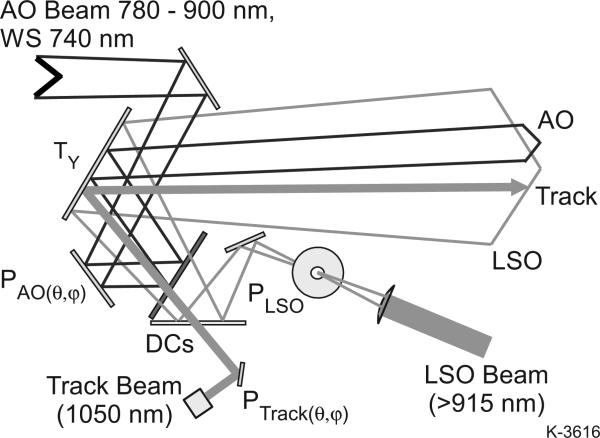

The method of integrating the closed-loop optical tracking and wide-field LSO imaging subcomponents into these systems is illustrated in Fig. 4. The AOSLO raster, the tracking beam, and the LSO raster are each independently targeted by pupil-plane scanners. The AOSLO vertical pupil scanner (M2), which forms the vertical axis of the AOSLO raster, also positions the AO raster at any point in the field of regard on two axes by means of a small scanner (6210H, Cambridge Technologies, Bedford, Massachusetts, USA) yoked to a larger scanner (6230H) on an orthogonal axis. This allows fast vertical raster scan sizing and positioning (by vertical offsets) and slower horizontal offset positioning to be effected from a single scanner mirror at the 15 mm M2 pupil conjugate. The raster size can also be changed rapidly, since the resonant scanner amplitude is voltage controlled. The tracking beam similarly has independent dual-axis steering capability. Fig. 5 illustrates how these pupil conjugates are all optically combined before entering the final, wide-field ocular interface section. The dichroic beamsplitters allow the various bands to be combined with minimal loss and crosstalk. The vertical tracking mirror, TY (M3 above), is conjugate to the center of rotation of the eye and steers all beams in common. TX (not shown; M4 above) is located at the next pupil plane downstream, where an LCD fixation display is added.
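The raster geometry described above (a resonant scanner whose voltage-controlled amplitude sets the line width, a slower vertical scan on M2, and offsets that steer the whole raster) can be sketched as follows. The assumptions are that the resonant scanner drives the horizontal axis, that its frequency is 16 kHz (a hypothetical value not stated in the text), that the vertical scan is a linear ramp at the 18.2 fps frame rate, and that flyback and desinusoiding are ignored.

```python
import numpy as np


def aoslo_raster(width_deg, height_deg, x_offset_deg=0.0, y_offset_deg=0.0,
                 f_resonant_hz=16_000.0, frame_rate_hz=18.2, samples_per_period=64):
    """Beam position (deg) over one frame of a hypothetical resonant/galvo raster.

    width_deg    -- resonant (fast, horizontal) scan extent, peak to peak
    height_deg   -- slow vertical ramp extent
    *_offset_deg -- steering offsets that place the raster in the field of regard
    """
    t_frame = 1.0 / frame_rate_hz
    n_samples = int(round(t_frame * f_resonant_hz * samples_per_period))
    t = np.linspace(0.0, t_frame, n_samples, endpoint=False)
    x = x_offset_deg + 0.5 * width_deg * np.sin(2.0 * np.pi * f_resonant_hz * t)
    y = y_offset_deg + height_deg * (t / t_frame - 0.5)   # linear ramp; flyback omitted
    return x, y


# A 1.8 deg raster steered by (+5, -2) deg within the field of regard (illustrative values)
x, y = aoslo_raster(1.8, 1.8, x_offset_deg=5.0, y_offset_deg=-2.0)
print(x.min(), x.max(), y.min(), y.max())
```

Changing `width_deg` models the rapid raster resizing allowed by the voltage-controlled resonant amplitude, while the offsets model repositioning the raster without the subject re-fixating.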

Fig. 4.

Optical integration scheme. Pupils are combined before entering the final, wide-field ocular interface section. Dichroic beamsplitters (DCs) enable the various bands to be combined. With the appropriate dichroics, fluorescence imaging can be incorporated as well. The tracking mirror, TY, is conjugate to the center of rotation of the eye and steers all beams in common. TX (not shown) is just beyond the next pupil plane downstream.

Fig. 5.

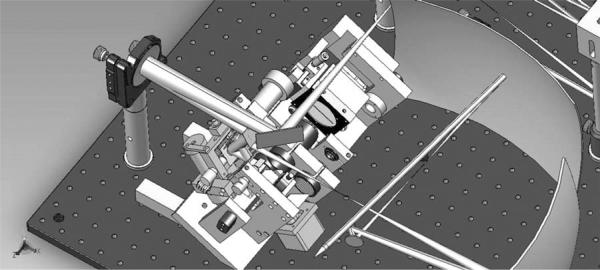

LSO/tracker optomechanical integration module in SolidWorks for the new AOSLO interface as implemented at IU and PSI. A wide field of regard (>30 deg) enables the small AOSLO raster to be steered anywhere within it. The final pair of large spherical mirrors and a paraxial eye are indicated as optical surfaces only.

The LSO/Tracker module portion of both optomechanical integrations is illustrated in the SolidWorks drawing of Fig. 6. The wide-field spherical relay mirror surfaces and a paraxial eye are modeled only optically. The assembly shown was first developed and built for the IU system, and several modifications have been made since. The pupil-combining and scanning optics, tracking mirrors, resonant scanners, and line-scan imager are combined in one compact module in such a way as to ensure that all the beams pass through the subject's dilated pupil without vignetting by the iris. The rear section of the module is mounted on a (manual) translation stage that provides common focus control and ensures parfocality of the input LSO beam at the retina with the confocally imaged line-scan sensor array (Atmel 1024-pixel by 14 μm CCD line camera, e2v, Grenoble, France), and of the incident tracking beam with its confocal reflectometer. The LSO and tracker beams are fiber-coupled from a control box that is separate from the AOSLO system and connected to a dedicated PC.

Fig. 6.

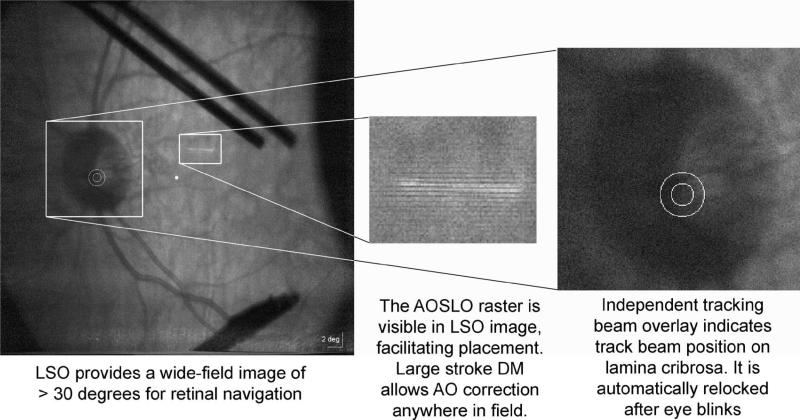

Examples of the LSO (915 nm), tracker (1050 nm), and AOSLO features provided in the GUI: the AOSLO raster is visible in the LSO image to facilitate positioning, as are lower-resolution LSO features and landmarks seen in the AOSLO images. The tracking beam overlay is shown relative to the optic disc anatomy, as is a fixation coordinate (central dot at left), which was not calibrated to the LCD fixation display when these images were obtained. The shadows are due to a temporary fixation post with an LED attached.

B. Spectral Sources

The systems as implemented use four different wavelength bands simultaneously. In the IU system, the wavefront sensing beacon and the imaging source are both derived from a supercontinuum laser (Fianium Inc., Southampton, UK) [44]. This source is first filtered to eliminate all wavelengths longer than 900 nm. The resulting beam is then separated using dichroic beamsplitters and delivered to an 840 nm single-mode fiber (passing wavelengths from about 800 nm to 900 nm) and to a 780 nm single-mode fiber (carrying wavelengths from 500 nm to 790 nm, although shorter wavelengths are transmitted in multiple modes). The AOSLO wavefront sensor operates at 740 nm, and the imaging band is centered near 840 nm (~12 nm FWHM) in this work but can be anywhere in the range from 790 nm to 900 nm. The imaging beam passes through an acousto-optic modulator (AOM) (Brimrose Co., Sparks, Maryland, USA) for blanking and intensity control, and the outputs of the fibers are then collimated, passed through narrowband filters (Semrock Inc., Rochester, New York, USA), and delivered to the system. Because the source is fiber coupled, it can be rapidly switched from the supercontinuum source to a superluminescent diode (SLD) when desired.
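Spectral width matters for speckle: a broader band has a shorter coherence length, so interference between light scattered from different depths averages out. The sketch below uses the Gaussian-spectrum formula l_c = (2 ln 2/π)·λ²/Δλ (other conventions differ by a constant factor) to compare the ~12 nm imaging band at 840 nm with the ~35 nm LSO source at 915 nm described in the next paragraph.

```python
import math


def coherence_length_um(center_nm: float, fwhm_nm: float) -> float:
    """Coherence length (um) of a source with a Gaussian spectrum of the given FWHM,
    using l_c = (2 ln 2 / pi) * lambda^2 / delta_lambda."""
    return (2.0 * math.log(2.0) / math.pi) * center_nm**2 / fwhm_nm / 1000.0


for name, center, fwhm in (("AOSLO imaging", 840.0, 12.0), ("LSO", 915.0, 35.0)):
    l_c = coherence_length_um(center, fwhm)
    print(f"{name:14s} {center:.0f} nm / {fwhm:.0f} nm FWHM -> l_c ~ {l_c:.1f} um")
```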

For the wide-field imaging and retinal tracking, longer wavelengths are used. The wide-field imaging/eye-tracking interface module combines an LSO [37,38] for wide-field imaging, using an SLD centered at ~915 nm, with a 1050 nm SLD tracking beam (both Q-Photonics sources) for closed-loop AO image stabilization. LSO imaging is performed using light with a bandwidth of ~35 nm, which eliminates wide-field image speckle. The illumination beam is spread in one axis with a cylindrical lens and scanned in the other axis to produce a raster on the retina. The raster light returned from the retina is de-scanned by the galvanometer, and the stationary line is passed back through the system and focused on a line array detector to produce retinal images. The reduced ocular return and the lower quantum efficiency of silicon at this wavelength reduce the quality and brightness of the LSO images relative to shorter wavelengths, but the images are sufficient to guide AOSLO imaging. The power of the LSO beam at the cornea is <500 μW, far below the ANSI laser safety limits for this wavelength and field size. The tracking beam (1050 nm) is also an extended source and is generally used at <250 μW. The IU AOSLO is very light efficient despite a large number of surfaces, capturing images with as little as 50 μW; the current images were collected with a total imaging power of 230 μW and a beacon power of 30 μW. The combined exposure [the sum of the individual maximum permissible exposure (MPE) percentages] is less than a tenth of the ANSI limit for continuous exposure.
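The combined-exposure criterion in the last sentence is an additive rule: each source's power is divided by its own MPE, and the fractions are summed. A minimal sketch follows; the MPE for each source must be computed from the applicable standard (e.g., ANSI Z136.1) for its wavelength, field size, and exposure duration, and no MPE values are assumed here.

```python
from typing import Iterable, Tuple


def combined_mpe_fraction(exposures: Iterable[Tuple[float, float]]) -> float:
    """Sum of power / MPE over simultaneously delivered sources.
    Each item is (power, mpe) in the same units (e.g. microwatts at the cornea)."""
    total = 0.0
    for power, mpe in exposures:
        if mpe <= 0:
            raise ValueError("MPE must be positive")
        total += power / mpe
    return total


def within_fraction(exposures, limit: float = 0.1) -> bool:
    """True if the combined exposure is below `limit` of the MPE (0.1 = one tenth)."""
    return combined_mpe_fraction(exposures) < limit
```

For this system the entries would be the imaging light (230 μW), the beacon (30 μW), the LSO beam (<500 μW), and the tracking beam (<250 μW), each paired with its own MPE.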

C. LSO/Tracker User Interface

The LSO and tracker are controlled via a custom LabVIEW graphical user interface (GUI). This software provides complete control of imaging parameters, fixation, and all tracking parameters, including tracking feedback gain and damping parameters, lock-in phase, tracking offsets, biases, and overlays of various fiducial markers. Tracking beam size and “dither” amplitude are currently set manually; adjusting them is often important for optimally robust tracking. The GUI enables live visualization of several key coordinate-frame parameters and beams. The appearance of the LSO images at 915 nm is shown in Fig. 6. Some remaining LSO imager and tracker interface and alignment issues are the subject of ongoing design and development efforts; for example, the LSO shows corneal reflections if axial pupil positioning is incorrect. The shadow of a thin, temporary post with an LED affixed for fixation can also be seen in the figure. The depths of focus of the LSO imager and the tracking beam reflectometer (>1 mm) are significantly larger than that of the AOSLO; they are focused independently and do not affect AOSLO axial focus control.
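The GUI parameters listed above (feedback gain, lock-in phase, dither amplitude) belong to a dithered-beam tracker. The toy model below illustrates the general principle only, not the actual tracker electronics: a tracking beam is circled around a bright feature (a hypothetical Gaussian reflectance peak standing in for a landmark such as the lamina cribrosa), the reflected signal is demodulated at the dither frequency to obtain an error vector, and a simple integral controller moves the beam. Feature size, dither radius, gain, and an ideal zero lock-in phase are all assumptions.

```python
import numpy as np


def reflectance(x, y, fx, fy, width_um=50.0):
    """Hypothetical bright retinal feature: a Gaussian reflectance peak at (fx, fy)."""
    return np.exp(-((x - fx) ** 2 + (y - fy) ** 2) / (2.0 * width_um**2))


def lockin_error(bx, by, fx, fy, dither_um=20.0, n_phase=64):
    """Circle the beam around (bx, by) and demodulate the reflected signal at the
    dither frequency; the (in-phase, quadrature) pair points up the reflectance
    gradient, i.e. toward the feature. A zero lock-in phase offset is assumed."""
    phase = 2.0 * np.pi * np.arange(n_phase) / n_phase
    signal = reflectance(bx + dither_um * np.cos(phase), by + dither_um * np.sin(phase), fx, fy)
    return 2.0 * np.mean(signal * np.cos(phase)), 2.0 * np.mean(signal * np.sin(phase))


def track(feature_path, gain=100.0):
    """Discrete integral controller: each update moves the beam by gain * error.
    Returns the beam-minus-feature error (um) after every update."""
    bx = by = 0.0
    errors = []
    for fx, fy in feature_path:
        ex, ey = lockin_error(bx, by, fx, fy)
        bx += gain * ex
        by += gain * ey
        errors.append((bx - fx, by - fy))
    return np.array(errors)


steady = track([(30.0, -20.0)] * 30)                      # feature offset from the beam
drifting = track([(0.5 * k, 0.0) for k in range(200)])   # slow drift, 0.5 um per update
print(np.hypot(*steady[-1]), drifting[-1])
```

In this toy model the locked loop removes roughly three quarters of the error per update; gains above about 270 make it oscillate and diverge, which is the kind of trade-off that the gain and damping settings expose.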

D. Subjects

The AO control and steering system was tested in 22 subjects (aged 23 to 65 years). A number of AOSLO imaging protocols were used, but the images presented here are either 1.2 deg or 1.8 deg fields, depending on proximity to the fovea, captured either as video sequences (typically 100 frames at 18.2 fps) to be aligned and averaged or as montage video sequences with programmed or manually selected steps. All image processing, including frame selection, alignment, averaging, co-adding, and montaging, was done off-line with semi-automated custom IU software or commercial software (such as MATLAB or Photoshop).

For eye movement tracking measurements, seven normal subjects (6 male, 1 female) were tested. Of this group, five subjects were myopic, one was emmetropic (best corrected visual acuity 20/40 or better), and one was presbyopic. In these tests, 1.8 deg video frames were captured with and without tracking for post-processing alignment and averaging. Pupils were not dilated for the eye movement compensation experiments. Most subjects had pupils at least 4–5 mm in diameter in a darkened room, which provided sufficient lateral resolution with AO compensation to resolve cones at the test positions used for the tracking system tests. Photoreceptors could be resolved in all subjects, including one with mild cataract. The Mirao DM was used to correct the lower Zernike orders and the BMC the higher orders, with a dual-DM control algorithm able to statically correct defocus (prior to AO compensation) in all individuals [41].

For AOSLO imaging, the steering mirrors were used to generate montages of several regions of interest. For collection of eye-tracking data, one or more locations were imaged in short bursts, with LSO and AOSLO videos of 5–30 s duration acquired simultaneously. Tracking-control voltages from the tracker were recorded at 1000 samples/s along with the LSO videos. These voltages alone are not adequate to demonstrate tracking fidelity; instead, we used spatial correlation of the AOSLO images to calculate the net retinal image displacement versus time. The total eye motion is the sum of the tracking system position and the AOSLO image displacement.
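The bookkeeping in the last sentence can be sketched as follows, assuming the tracker voltages are converted to retinal micrometers with a per-axis calibration factor (`um_per_volt`, hypothetical), that both signals share one sign convention, and that each AOSLO frame's residual displacement can be assigned to a single time stamp. The 1000 samples/s tracker record is interpolated onto the frame times before the two are summed.

```python
import numpy as np


def total_eye_position(t_tracker, v_tracker, um_per_volt, t_frames, residual_um):
    """Eye position (um) at each AOSLO frame time.

    t_tracker   -- tracker sample times (s), increasing
    v_tracker   -- tracking-mirror voltages, shape (N, 2) for x and y
    um_per_volt -- hypothetical (x, y) calibration from volts to retinal um
    t_frames    -- AOSLO frame time stamps (s)
    residual_um -- cross-correlation displacement of each frame, shape (M, 2)
    """
    v = np.asarray(v_tracker, dtype=float)
    mirror_um = np.column_stack(
        [np.interp(t_frames, t_tracker, v[:, axis]) * um_per_volt[axis] for axis in range(2)]
    )
    return mirror_um + np.asarray(residual_um, dtype=float)


t_tr = np.arange(0.0, 1.0, 0.001)              # synthetic 1 s tracker record, 1000 samples/s
v_tr = np.column_stack([t_tr, -t_tr])          # synthetic mirror voltages (V)
t_fr = np.arange(0.0, 1.0, 1.0 / 18.2)         # frame time stamps at 18.2 fps
residual = np.zeros((t_fr.size, 2))            # synthetic cross-correlation residuals (um)
eye_um = total_eye_position(t_tr, v_tr, (10.0, 10.0), t_fr, residual)
print(eye_um.shape)
```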

For most of the direct quantification of image motion, we performed off-line cross-correlation over a 163 × 163 μm region within the 500 μm image field, which produced an estimate of retinal position to within approximately 1 μm (when not corrupted by transient shearing or intermittent failures of the algorithm). The data were processed and fully aligned by post-processing software that can both align frames and count cones [45,46], and tracked and non-tracked image motion were compared. Further, overlapping time-stamped LSO/tracking data records and post-processed AOSLO videos were compared to investigate the limits and interactions of both methods.
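The registration software of [45,46] is not reproduced here. As a generic example of the cross-correlation step, the sketch below estimates a rigid translation between two equally sized patches (e.g., the 163 × 163 μm sub-region cut from a reference frame and from a later frame) using FFT-based circular cross-correlation with a three-point parabolic peak fit for sub-pixel precision. It assumes pure translation within the patch, so it does not model intra-frame shearing.

```python
import numpy as np


def register_shift(reference, frame):
    """Return (dy, dx) such that `frame` ~ `reference` translated by (dy, dx) pixels."""
    ref = reference - reference.mean()
    img = frame - frame.mean()
    xcorr = np.fft.ifft2(np.fft.fft2(img) * np.conj(np.fft.fft2(ref))).real
    peak = np.unravel_index(np.argmax(xcorr), xcorr.shape)
    shift = []
    for axis, p in enumerate(peak):
        n = xcorr.shape[axis]
        before, after = list(peak), list(peak)
        before[axis], after[axis] = (p - 1) % n, (p + 1) % n      # neighbours, wrapping around
        c_m, c_0, c_p = xcorr[tuple(before)], xcorr[peak], xcorr[tuple(after)]
        denom = c_m - 2.0 * c_0 + c_p
        frac = 0.5 * (c_m - c_p) / denom if denom != 0 else 0.0   # parabola vertex
        s = p + frac
        shift.append(s - n if s > n / 2 else s)                   # signed (wrapped) shift
    return tuple(float(s) for s in shift)


rng = np.random.default_rng(0)
patch = rng.random((64, 64))
moved = np.roll(patch, (3, -5), axis=(0, 1))
print(register_shift(patch, moved))
```

Multiplying the pixel shift by the pixel scale (about 1 μm per pixel for the 1.8 deg fields, per the Results section) gives the displacement in retinal micrometers.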

3. RESULTS

A. Imaging Performance

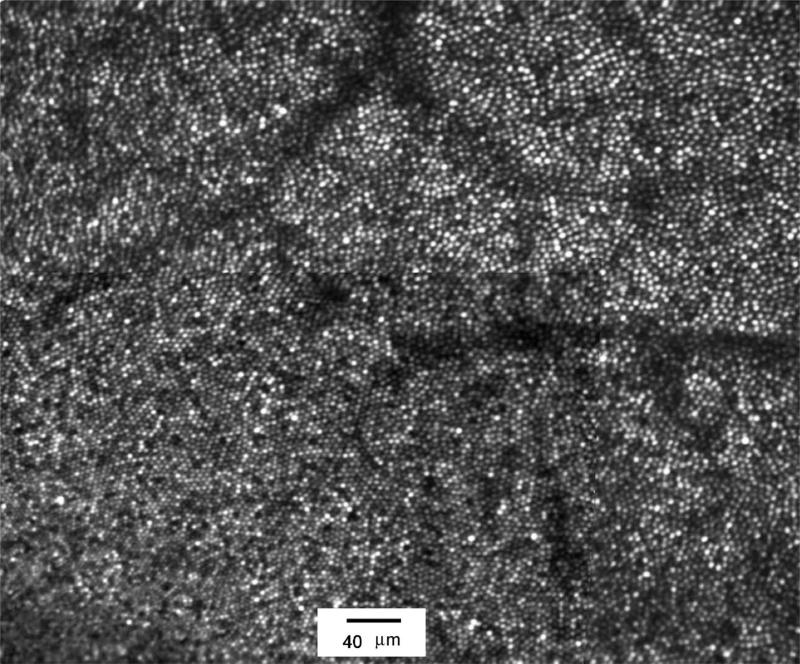

The imaging performance of the IU system is excellent. Image analysis in most subjects reveals measurable image contrast at spatial frequencies corresponding to 2.4 μm features for a 7 mm pupil. Figure 7 shows a parafoveal image from a 27-year-old male. The image is a montage of aligned/averaged images from a 1.2 deg AOSLO scan field (0.667 μm per pixel) for the region close to the fovea (the lower left portion of the image) and averages of 1.8 deg image fields (1 μm per pixel) over the same region, to ensure adequate cone resolution everywhere. In addition to high AOSLO image quality, the system also showed high light sensitivity. While the images shown were obtained with 180 μW of 840 nm light, single-frame images have been recorded with as little as 20 μW of imaging light.
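Whether 2.4 μm features are close to the diffraction limit can be checked with the incoherent cutoff frequency D/λ. The sketch assumes an eye focal length of 16.7 mm (a common reduced-eye value, not given in the text) to convert angles to retinal distances, and it ignores any effect of the confocal pinhole.

```python
import math


def cutoff_cycles_per_deg(wavelength_nm: float, pupil_mm: float) -> float:
    """Incoherent diffraction cutoff D / lambda, converted from cycles/rad to cycles/deg."""
    return (pupil_mm * 1e-3) / (wavelength_nm * 1e-9) * math.pi / 180.0


def cutoff_period_um(wavelength_nm: float, pupil_mm: float, eye_focal_mm: float = 16.7) -> float:
    """Finest retinal period passed by an aberration-free pupil: lambda * f / D."""
    return wavelength_nm * 1e-3 * eye_focal_mm / pupil_mm


print(f"{cutoff_cycles_per_deg(840.0, 7.0):.0f} cycles/deg")   # about 145
print(f"{cutoff_period_um(840.0, 7.0):.2f} um period")          # about 2.0
```

A cutoff period of about 2.0 μm at 840 nm for a 7 mm pupil suggests that contrast at 2.4 μm features is indeed near the diffraction limit.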

Fig. 7.

Average foveal cone image montage obtained from a 27-year-old male. Cones are imaged to within approximately 50 μm of the foveal center (the subject fixated the bottom left corner of the raster). Region shown is approximately 1.6×2.0 deg. Residual distortion produces minor edge artifacts in the montage where fields of different sizes meet. Imaging wavelength was 840 nm with a 12 nm bandwidth. Imaging power was 180 μW, beacon power was 40 μW.

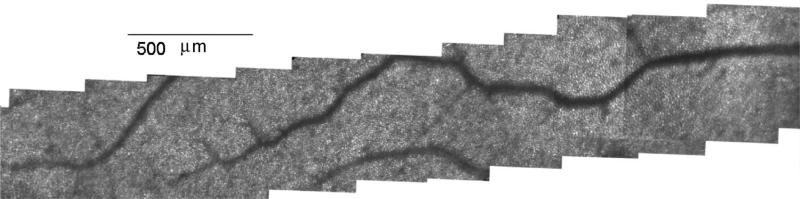

The montage capability has also proven quite useful. Figure 8 shows a montage of the central macular region of a male subject obtained by holding fixation steady and moving the imaging field from a point near the fovea to approximately 11 deg eccentricity. In practice, this steering capability allowed a complete set of images along a meridian to be collected in as little as 5 min, although more time is typically required to ensure good image quality between blinks.
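Planning such a montage reduces to choosing steering offsets so that neighboring fields overlap enough for stitching. The sketch below spaces offsets evenly along one meridian; the 30% minimum overlap and the 0.5 deg starting offset are hypothetical choices, while the 1.8 deg field and the ~11 deg end point come from the text.

```python
import math


def montage_offsets(start_deg: float, end_deg: float, field_deg: float, min_overlap: float) -> list[float]:
    """Evenly spaced field-center offsets (deg) from start to end such that adjacent
    fields overlap by at least `min_overlap` (fraction of the field width)."""
    max_step = field_deg * (1.0 - min_overlap)
    span = end_deg - start_deg
    n_steps = max(1, math.ceil(abs(span) / max_step))
    step = span / n_steps
    return [start_deg + k * step for k in range(n_steps + 1)]


offsets = montage_offsets(0.5, 11.0, field_deg=1.8, min_overlap=0.3)
print(len(offsets), [round(o, 2) for o in offsets])
```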

Fig. 8.

Montage of cone images obtained in a 56 year old male. Fixation was maintained just beyond the left end of the image (at fovea). AOSLO steering mirror (M2) was moved horizontally in a series of steps to ~11 deg eccentricity. At each location a series of frames of video were acquired, aligned, and averaged to create the montage.

The system has also corrected subjects, without additional corrective lenses, with refractive errors ranging from −8.5 D of myopia to +2 D of hyperopia. For many subjects, images can be obtained without dilation, since the imaging field does not appear particularly bright. For instance, it has been possible to image peripheral cones (from about 4 deg outward) in an undilated 59-year-old female. While image quality and brightness improved markedly with increased pupil size, freedom from the need for dilation may be beneficial for some individuals and for some research questions.
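To give a sense of the stroke implied by this refractive range: pure defocus of D diopters over a pupil of radius r produces a wavefront W(ρ) = D r² ρ²/2, so PV = D r²/2 and rms = PV/(2√3), and a mirror at normal incidence needs half that surface sag. Optical path difference is unchanged by the pupil relays, so the pupil magnification at the DM does not alter the result. The sketch evaluates the −8.5 D case for a 7 mm pupil; whether a particular DM can supply this stroke depends on specifications not given here.

```python
import math


def defocus_wavefront_um(diopters: float, pupil_diameter_mm: float) -> tuple[float, float]:
    """Peak-to-valley and rms wavefront error (um) of pure defocus over a circular pupil."""
    r_m = pupil_diameter_mm * 1e-3 / 2.0
    pv_um = abs(diopters) * r_m**2 / 2.0 * 1e6       # W = D r^2 / 2 at the pupil edge
    rms_um = pv_um / (2.0 * math.sqrt(3.0))          # rms of a*rho^2 about its mean
    return pv_um, rms_um


pv, rms = defocus_wavefront_um(-8.5, 7.0)
mirror_sag_um = pv / 2.0                              # reflection doubles the surface sag
print(f"PV {pv:.1f} um, rms {rms:.1f} um, mirror sag {mirror_sag_um:.1f} um")
```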

B. Effect of Eye Tracking on Imaging Performance

Figures 9–12 show retinal images collected with the new instrument during LSO/tracker testing. AOSLO images with a raster size of approximately 1.8 deg were obtained over a large angular range in eyes exhibiting a number of fine-scale anatomical features, including cone photoreceptors, blood vessels and capillaries, and the striations of the retinal nerve fiber layer (RNFL). The advantages of active eye tracking are demonstrated in Fig. 9. Tracking was initiated during AOSLO imaging, and stabilized videos were compared with non-tracking sequences. In fixating subjects, even during saccades, the system enables the operator to select, lock, and maintain a fixed field of view that can be repositioned or automatically scanned to any location in the field of regard. Automatic tracking re-lock is triggered by blinks. Eye tracking can significantly improve the stable overlap and efficiency of sequential AOSLO image capture by limiting the magnitude of eye movement excursions.

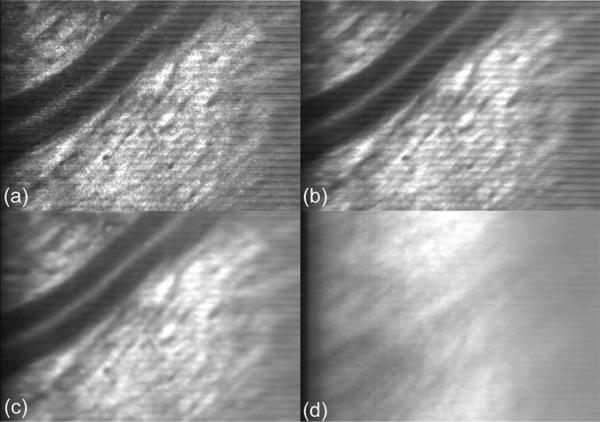

Fig. 9.

The function of active eye tracking. Tracking: (a) single frame with vessel and nerve fiber, (b) 10-frame average, (c) 100-frame average. Non-tracking: (d) 100-frame average. Short-term (<1 s) and long-term (several seconds) rms tracking/registration errors are subject-dependent, spanning ~5 to 15 μm with tracking and ~50 to >300 μm without tracking. Eye tracking can significantly improve stable overlap and efficiency of sequential AOSLO image capture by limiting the magnitude of eye movement excursions.

Fig. 12.

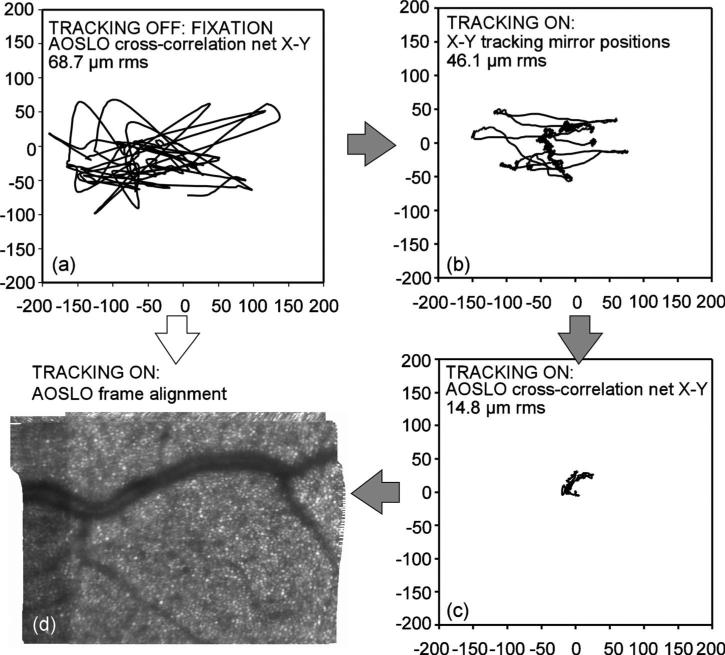

Eye tracking hierarchy. Eye motion can be partitioned between closed-loop optical tracking (hardware) and AOSLO cross-correlation (software). (a) Non-tracking case, X-Y (software aligned); (b) tracking, hardware analog X-Y position signals; (c) tracking, residual X-Y frame errors (software); (d) fine-aligned 100-frame average, the net result of software and hardware combined, with images aligned to within a single cone diameter. Real-time, on-line software mapping for fine alignment would be difficult from fixation alone. The path from (a) to (d) in the stabilization hierarchy is greatly assisted by hardware tracking through (b) and (c).

The point of the following measurements and analyses is not to imply that the hardware tracking system by itself can be used to average hundreds of images, as might be needed for low-light imaging applications; indeed, doing so would yield relatively poor final AOSLO image quality even with excellent tracking. Rather, image averaging in this work serves as a convenient quantitative gauge of how effectively tracking keeps other fine image registration methods within their useful ranges, and of the extent to which tracking will enable better clinical data to be gathered in less time.

To test tracking fidelity indirectly at various scales, image averaging (co-adding and scaling with no other manipulations) was performed over 10 and 100 consecutive frames. Figure 9(a) is a single AOSLO image frame. As the averaged images in Figs. 9(b) and 9(c) show, small transient errors during eye accelerations cause averaged images to lose contrast, but significant fine-scale detail (as gauged, for example, by image sharpness metrics) survives even over durations of many seconds (5.5 s for 100 frames at 18.2 fps). Without tracking [Fig. 9(d)], eye motions (microsaccades and drift) often exceed the raster size, so that even common reference features that might be used for post-processing (or real-time) software image registration are lost. Note that without tracking the eye may remain quiescent for short times (<1 s), but over many seconds its motion must eventually reflect its long-term rms distribution during fixation. Figure 10 shows a cone photoreceptor pattern over a different region at photoreceptor depth (left) and an average over 200 frames (right; no de-warping, alignment, or other manipulations) acquired during longer-duration tracking (11 s at 18.2 fps). Clearly, hardware tracking by itself does not yet achieve single-cone precision, yet it still preserves some spatial information near the cone-spacing scale in averaged images, even when the tracked point (lamina cribrosa) is distant from the image field (~10 deg in this case).
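The loss of contrast caused by averaging unregistered frames can be illustrated with a toy model. The sketch below co-adds copies of a periodic pattern (a hypothetical stand-in for the cone mosaic, with an 8-pixel period) displaced by rounded Gaussian jitter, and reports the modulation remaining at the pattern frequency. Real fixational motion consists of drift and microsaccades rather than Gaussian jitter, and this Fourier contrast measure is an illustrative stand-in for the image sharpness metrics mentioned above.

```python
import numpy as np

N = 128
PERIOD_PX = 8                                   # hypothetical cone spacing in pixels
cols = np.arange(N)
scene = np.tile(1.0 + 0.5 * np.cos(2.0 * np.pi * cols / PERIOD_PX), (N, 1))


def co_add(frames):
    """Co-add and scale (plain average) with no registration."""
    return np.mean(np.asarray(frames, dtype=float), axis=0)


def pattern_contrast(image):
    """Modulation at the pattern frequency relative to the mean level (0.5 for `scene`)."""
    spectrum = np.fft.fft2(image)
    k = N // PERIOD_PX                          # FFT bin of the pattern along x
    return 2.0 * abs(spectrum[0, k]) / abs(spectrum[0, 0])


def jittered_frames(sigma_px, n_frames, rng):
    """Copies of `scene` shifted by rounded Gaussian displacements (pixels)."""
    shifts = np.round(rng.normal(0.0, sigma_px, size=(n_frames, 2))).astype(int)
    return [np.roll(scene, (int(dy), int(dx)), axis=(0, 1)) for dy, dx in shifts]


rng = np.random.default_rng(0)
for sigma in (0.0, 1.0, 10.0):
    c = pattern_contrast(co_add(jittered_frames(sigma, 100, rng)))
    print(f"rms jitter {sigma:4.1f} px -> contrast {c:.2f}")
```

Jitter of about one pixel leaves most of the modulation intact, whereas jitter spanning several pattern periods nearly erases it, mirroring the difference between tracked and untracked averages.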

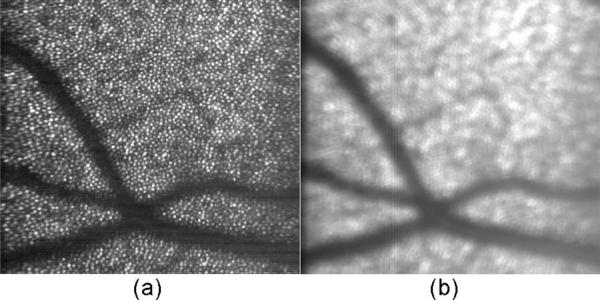

Fig. 10.

Averaging stabilized AOSLO cone photoreceptor images. (a) Single image of cones, 4 to 5 μm in diameter. (b) 200-frame average during tracking. Note that some information at the cone spatial frequency is preserved, even though the net broadening appears to be several cone diameters.

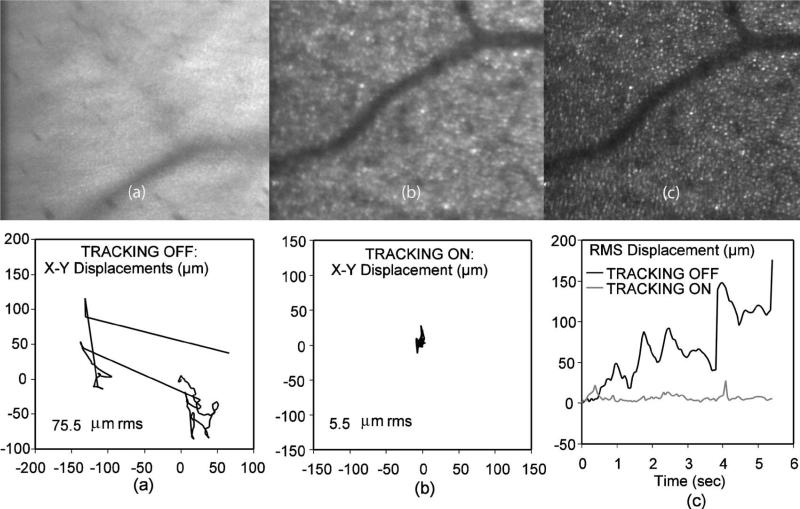

To test tracking fidelity directly, we computed net eye motion trajectories for non-tracking and tracking conditions from 100-frame AOSLO videos, using anatomical features for direct (post-processing) alignment by AOSLO cross-correlation, and we present the average of all 100 images to show the net effect of this frame-to-frame motion on spatial image resolution. Figure 11 demonstrates a benefit of tracking, even for a trained fixator. Figure 11(a) is the result of averaging over more than 6 s without eye tracking, during which a complex spectrum of micro-saccades and drifts spanned a total range of up to ~200 μm (rms displacement of 75.5 μm), as seen in the corresponding X-Y vector plot below it. Figure 11(b) is the corresponding tracked case from the same subject, with total rms displacement reduced to 5.5 μm and transient peaks limited to <30 μm during micro-saccades. Figure 11(c) shows fully de-warped (compensating intra-frame distortion where possible) and co-added images as a benchmark for the quality achieved with post-processing software alignment (<1 cone diameter). The plot below Fig. 11(c) compares the net radial displacement with respect to the starting frame for tracking and non-tracking. A vector plot for Fig. 11(c) equivalent to those in (a) and (b) would collapse to a single point at (0,0), whose size is set by the numerical error of the cross-correlation algorithm.
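The two trajectory summaries used here, an rms displacement and the radial displacement from the starting frame, can be computed from the per-frame cross-correlation positions as sketched below. The text does not specify whether the rms displacement is taken about the mean position or about the first frame, or whether it is radial or per axis; this sketch assumes radial rms about the mean.

```python
import numpy as np


def rms_displacement(xy_um):
    """Radial rms distance (um) of a trajectory from its mean position."""
    xy = np.asarray(xy_um, dtype=float)
    centered = xy - xy.mean(axis=0)
    return float(np.sqrt(np.mean(np.sum(centered**2, axis=1))))


def radial_from_start(xy_um):
    """Radial displacement (um) of every frame relative to the first frame."""
    xy = np.asarray(xy_um, dtype=float)
    dx, dy = (xy - xy[0]).T
    return np.hypot(dx, dy)


trajectory = [[0.0, 0.0], [3.0, 4.0], [0.0, 0.0], [3.0, 4.0]]   # toy positions, um
print(rms_displacement(trajectory), radial_from_start(trajectory))
```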

Fig. 11.

Eye motion from AOSLO image cross-correlation. (a) No-tracking 100-image average; the rms motion is 75.7 μm, as shown in the x-y displacement graph below it. (b) Tracking; rms motion 5.5 μm. (c) Fully de-warped and overlaid images as a benchmark for perfect (<1 cone diameter) alignment. The graph at bottom right shows the total radial displacement over time for tracking and non-tracking. Note that tracking transients are <30 μm.

We compared the measured net, or residual, x-y AOSLO cross-correlation displacements during tracking with the recorded X-Y tracking mirror positions. Ideally, the cross-correlation displacements would become very small as the tracker compensates the bulk of the motion, but the tracking system's speed and accuracy are limited. The true eye position is the sum of the cross-correlation positions derived from the AOSLO images and the tracking mirrors’ analog position signals. Along the paths indicated, Figs. 12(a)–12(d) effectively show how eye movement compensation is partitioned among fixation, the hardware tracking system, and the software fine-alignment algorithm. Figure 12(a) is a vector displacement plot of a non-tracking case (fixation only) whose rms displacement is consistent with normal, untrained fixation. Figures 12(b) and 12(c) show, respectively, the X-Y hardware tracking positions and the residual AOSLO cross-correlation displacements. In this case, the residual displacements are roughly a third of the hardware tracking positions. Figure 12(d) is the resulting fine-aligned image, which accounts for the net error. A real-time image processing method that compensated the motion of Fig. 12(a) directly to generate the montage in Fig. 12(d) may represent too big a step for practical clinical AO systems. Indeed, consider a fine-aligned image derived without tracking from the AOSLO video corresponding to the motion in Fig. 12(a), plotted at the same scale as Fig. 12(d). It could barely be contained on the page unless the abundant frame alignment errors (several times more than in the tracking case) were manually excluded.
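Because the true eye position is the sum of the two signals, the partition can be computed per axis once both signals share a time base and units. Below is a minimal sketch with hypothetical function names. It assumes the mirror signals have already been converted from analog volts to retinal micrometers (that calibration is not described here). It also assumes they are sampled faster than the 18.2 fps AOSLO frames, so they are linearly interpolated at the frame times.

```python
import numpy as np


def reconstruct_eye_position(frame_t, residual_um, mirror_t, mirror_um):
    """True eye position (one axis) at each AOSLO frame time.

    Sum of the tracking-mirror position, linearly interpolated at the frame times,
    and the residual AOSLO cross-correlation displacement. Assumes mirror_um has
    already been calibrated from analog volts to retinal micrometers.
    """
    mirror_at_frames = np.interp(frame_t, mirror_t, mirror_um)
    return mirror_at_frames + np.asarray(residual_um, dtype=float)


def residual_to_tracking_ratio(residual_um, mirror_um):
    """RMS (about the mean) of the residual divided by that of the mirror motion."""
    return float(np.std(residual_um) / np.std(mirror_um))


# Usage with synthetic signals: mirror sampled at 1 kHz, AOSLO frames at 18.2 fps.
mirror_t = np.linspace(0.0, 1.0, 1001)
mirror_x = 100.0 * mirror_t                 # made-up steady 100 um/s tracked drift
frame_t = np.arange(0.0, 1.0, 1.0 / 18.2)
residual_x = np.zeros_like(frame_t)         # perfect tracking in this toy case
eye_x = reconstruct_eye_position(frame_t, residual_x, mirror_t, mirror_x)
```

A `residual_to_tracking_ratio` near 1/3 would correspond to the case described for Figs. 12(b) and 12(c).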

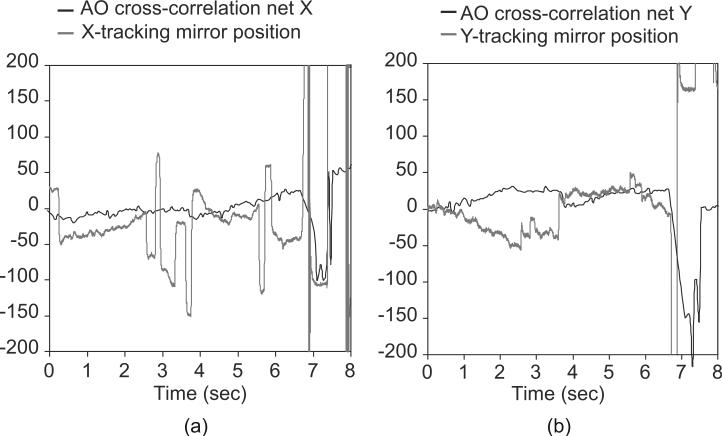

Figures 13(a) and 13(b) compare the x-y retinal positions derived from the tracking mirror signals during tracking with the net, or residual, retinal displacements calculated from a simultaneously recorded AOSLO video. The tracker captures the bulk of the motion, but small residual errors and systematic drifts are not fully compensated. This is due in part to the geometry and optics of the eye, combined with non-rotary (lateral) head motions and cyclorotary eye motions.

Fig. 13.

AOSLO cross-correlation software residual tracking errors (black) and hardware tracking signals (gray), in micrometers, compared in subject #6. (a, b) X and Y positions versus time; a blink occurs at ~6.7 s. With better tracking, the residual AOSLO corrections should become smaller as the tracking mirror positions reflect higher-fidelity tracking. Pupil drift interacting with the pupil mismatch between the tracker and the AOSLO raster can cause the AOSLO image drift seen in (a) or the saccadic “bleedthrough” seen in (b).

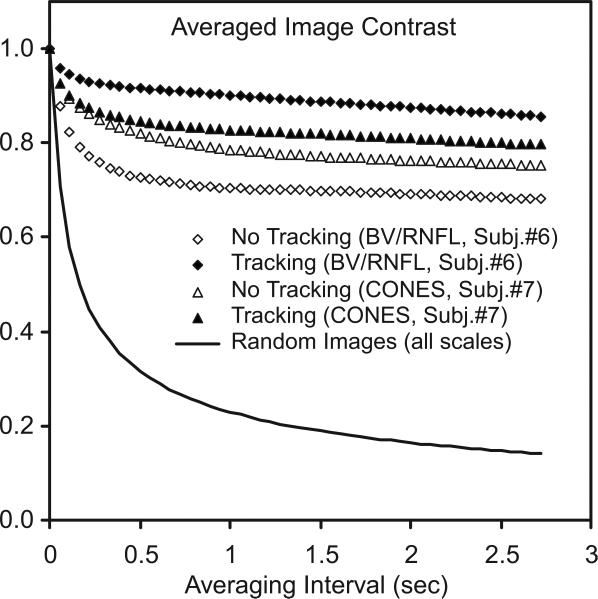

Finally, Fig. 14 conveniently summarizes this residual error behavior and its effect on averaged images for blood vessel and nerve fiber images, whose spatial frequency distributions are skewed lower than those of cone images. The figure shows the measured loss of average image sharpness (the second moment, ΣI², of the averaged n-frame images) as a function of the averaging interval (in seconds). It is computed for all n-frame intervals in a 100-frame AOSLO video sequence and normalized by the mean single-frame sharpness in each interval. This metric is a computationally simple, spatial-frequency-independent measure of image contrast loss due to motion blur. It can be taken as a direct figure of merit for how well tracking preserves fine-scale structure. The diamonds are for blood vessel/retinal nerve fiber layer (RNFL) videos. The tracking (solid diamond) and non-tracking (open) cases approach different contrast levels, because only structures finer than the respective tracking and non-tracking rms displacements are averaged away. Stated simply, images dominated by low spatial frequencies need large excursions to lose contrast. The triangles are the equivalent cases for cone pattern images, tracking (solid) and non-tracking (open). The difference between tracking and non-tracking is much smaller here. This is because the spatial frequency spectra of cone mosaics are dominated by features smaller than the rms displacements in both cases. The case of perfectly random images at all scales is estimated as a benchmark (solid line).

Fig. 14.

Measured loss of averaged AOSLO image contrast (rms image sharpness) as a function of averaging interval over a 100-frame AOSLO video sequence (normalized to single-frame contrast). The diamonds are for an AOSLO video focused on blood vessels (BV/RNFL). The tracking (solid) and non-tracking (open) cases approach different contrast levels. The case of random data (zero frame overlap at all spatial frequencies) is included for comparison. The triangles are for cones. The difference between their tracking and non-tracking cases is small, because cone mosaics are dominated by features smaller than the rms displacement in both cases.
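The sharpness-loss curve described above can be sketched as follows. This is a minimal NumPy illustration with hypothetical function names, not the analysis code behind Fig. 14. One assumption is that sharpness is the mean of squared intensity after subtracting the image mean, so a uniform image scores zero. The mean-versus-sum choice only changes a constant factor, which cancels in the normalization. For statistically independent random frames, the ratio falls roughly as 1/n, which is one way to model a random-data benchmark.

```python
import numpy as np


def sharpness(img):
    """Mean squared intensity about the image mean (a second-moment contrast measure)."""
    img = np.asarray(img, dtype=float)
    return float(np.mean((img - img.mean()) ** 2))


def normalized_sharpness(frames, n):
    """Sharpness of n-frame averages relative to the mean single-frame sharpness.

    Every run of n consecutive frames is averaged. Each window's ratio is taken against
    the mean single-frame sharpness in that window, and the ratios are then averaged.
    """
    frames = np.asarray(frames, dtype=float)
    ratios = []
    for start in range(len(frames) - n + 1):
        window = frames[start:start + n]
        single = np.mean([sharpness(f) for f in window])
        ratios.append(sharpness(window.mean(axis=0)) / single)
    return float(np.mean(ratios))


def loss_curve(frames, fps, ns):
    """(averaging interval in seconds, normalized sharpness) for each n in ns."""
    return [(n / fps, normalized_sharpness(frames, n)) for n in ns]


# Usage on synthetic data: independent noise frames lose sharpness roughly as 1/n.
rng = np.random.default_rng(1)
noise_frames = rng.normal(size=(100, 64, 64))
for t_s, s in loss_curve(noise_frames, fps=18.2, ns=[1, 10, 50]):
    print(f"{t_s:.2f} s -> {s:.3f}")
```

A perfectly stabilized sequence (identical frames) stays at 1.0 for every interval. Real tracked and untracked videos fall between that ceiling and the random-frame curve.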

Anecdotally, subjects who tend to drift and return (e.g., smooth pursuit with nystagmus quick phases) wash out high spatial frequencies more quickly than subjects who make discrete saccades and dwell. With continuous drift, spectral power at high spatial frequencies does not persist, as it can when the eye dwells at several metastable fixation loci. This is analogous to the difference between continuous motion blur and sharp multiple exposures in photography. Good fixation always helps, but tracking is significantly better.
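The photographic analogy can be made quantitative with a one-dimensional model. This is offered as an illustration, not as the analysis used for Fig. 14. Averaging copies of a sinusoidal grating of spatial frequency f displaced by d_i multiplies its contrast by |mean(exp(2πi·f·d_i))|. Continuous drift at uniform speed over a span L gives |sinc(fL)|, which decays toward zero at high f. In contrast, equal dwell at two loci separated by Δ gives |cos(πfΔ)|, which returns to full contrast whenever fΔ is an integer. Uniform-speed drift and equal dwell times are simplifying assumptions, and the function names are hypothetical.

```python
import numpy as np


def transfer_from_positions(positions_um, freq_cyc_per_um):
    """Contrast kept by a sinusoid of the given spatial frequency after averaging
    equally weighted copies displaced to each position: |mean(exp(2*pi*i*f*d))|."""
    d = np.asarray(positions_um, dtype=float)
    return float(np.abs(np.mean(np.exp(2j * np.pi * freq_cyc_per_um * d))))


def drift_transfer(freq, span_um):
    """Uniform-speed drift across span_um: |sinc(f * span)|.

    np.sinc is the normalized sinc, sin(pi x) / (pi x).
    """
    return float(np.abs(np.sinc(freq * span_um)))


def dwell_transfer(freq, separation_um):
    """Equal dwell at two fixation loci separated by separation_um: |cos(pi f sep)|."""
    return float(np.abs(np.cos(np.pi * freq * separation_um)))


# Usage: compare the two motion patterns over the same 10 um excursion (illustrative).
span = 10.0
for f in (0.05, 0.1, 0.2, 0.25):  # cycles per micrometer
    print(f, round(drift_transfer(f, span), 3), round(dwell_transfer(f, span), 3))
```

At f = 1/L the drifting case has lost the grating entirely, while the two-locus case has kept all of it. This mirrors the difference between motion blur and a double exposure.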

4. DISCUSSION

The new integrated design improves the clinical applicability of AO imaging in several important ways. First, the woofer–tweeter design allows testing of almost every patient presenting to the laboratory; at IU, we have tested subjects ranging from fairly high myopes (−8.5 D) to moderate hyperopes (+2 D). Although we typically pre-focus the eye by adding a base curve on the Mirao, this is primarily to ensure that the wavefront sensor spots are sharp enough for accurate centroid computation. The second major advantage is the combination of wide-field imaging with steerable high-resolution imaging, which allows data collection to proceed in a planned manner. Working from the wide-field image, the experimenter can rapidly target regions of interest and simply move the high-resolution field directly to those points. Because there is a slight “bleed through” of the AOSLO field into the live wide-field image, the exact location of the high-magnification AOSLO image relative to the wide-field, low-magnification LSO image is immediately known. The ability to return quickly to previously imaged retinal coordinates should also not be underestimated. Finally, closed-loop optical eye tracking improved our ability to obtain reliable image sequences, especially in the eyes of poor fixaters. In good fixaters, there is usually enough overlap between sequential AOSLO frames that post-acquisition processing alone can align the images. In poor fixaters, or in AO-OCT imaging, for example, tracking and stabilization are more valuable.

Hardware eye tracking for AO imaging, and its relationship to fine image alignment and image averaging for a number of applications, is a rather complex problem that deserves further discussion. In every case where tracking was possible, the rms image displacement of AOSLO videos improved significantly during periods of valid lock. In some cases the improvement was dramatic, with rms radial displacements as small as ~5 μm. In most tests in the seven fixating subjects, tracking reduced image position variability due to eye motion by factors of ~3 to 15, as determined by comparing sequential tracking and non-tracking data sets. These comparative measurements proved difficult to make quantitatively. Without an independent “gold standard” tracking device, there is no way to ensure that the eye moves similarly in each test. In addition, no tracking position voltages are recorded when not tracking, and when not tracking, the failure rate of the AOSLO cross-correlation algorithm became significantly greater. In this sense, the new AOSLO has itself become a potential gold standard for measuring eye movements [47–49].

The fundamental limits of closed-loop optical tracking accuracy were not determined, but it is unlikely that we reached them. Optimized tuning of the tracking control parameters and better matching of the tracking beam characteristics to the available retinal features should improve performance. Setting up stable tracking and robust auto-relock parameters in any given eye is, at present, too much of an art; we are working toward a higher degree of automation and reliability. The noise sources that contribute to angular tracking noise and instability are not yet fully characterized. However, because all beams are steered by the same tracking mirrors, tracking noise and instability must not be allowed to dominate the AOSLO images. Once tracking was locked, we found no evidence of significant fine-scale jitter or oscillation due to the tracking. The exception was when the control-loop feedback gains were set too high for clean overall tracking, as evidenced by obvious instability. The gain must be high enough to maintain tight tracking lock, but not so high as to amplify the finite tracking noise (error) inherent to the system. Thus, without an unacceptable cost in AO image quality, closed-loop optical tracking was more accurate than fixation alone, even in the best fixaters.
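The gain trade-off can be illustrated with a generic discrete-time integrator loop. This is a hypothetical stand-in, not the tracker's actual control law, which is not described here. In this model, the eye position is a random walk, and the tracker measures the residual with additive white noise. Each step, the mirror is updated by the gain g times the measured error. The residual then obeys e[k+1] = (1 − g)·e[k] + w[k] − g·n[k]. This is stable only for 0 < g < 2 and has stationary variance (σ_w² + g²σ_n²)/(g(2 − g)). Too little gain lets drift accumulate, and gain approaching 2 amplifies the sensor noise. All quantities are in arbitrary units.

```python
import numpy as np


def simulate_residual_rms(gain, n_steps=20000, burn_in=1000,
                          drift_sigma=1.0, noise_sigma=1.0, seed=0):
    """RMS tracking residual of a toy integrator loop (arbitrary units)."""
    rng = np.random.default_rng(seed)
    w = rng.normal(0.0, drift_sigma, n_steps)   # eye drift increment per step
    n = rng.normal(0.0, noise_sigma, n_steps)   # tracker sensing noise per step
    eye, mirror = 0.0, 0.0
    residual = np.empty(n_steps)
    for k in range(n_steps):
        residual[k] = eye - mirror              # motion the imaging beam still sees
        mirror += gain * (residual[k] + n[k])   # integrator update from the noisy error
        eye += w[k]                             # the eye keeps drifting
    return float(np.sqrt(np.mean(residual[burn_in:] ** 2)))


def predicted_residual_rms(gain, drift_sigma=1.0, noise_sigma=1.0):
    """Closed-form stationary rms of the same loop, valid only for 0 < gain < 2."""
    if not 0.0 < gain < 2.0:
        raise ValueError("loop is unstable unless 0 < gain < 2")
    var = (drift_sigma ** 2 + gain ** 2 * noise_sigma ** 2) / (gain * (2.0 - gain))
    return float(np.sqrt(var))


for g in (0.05, 0.6, 1.9):
    print(g, round(simulate_residual_rms(g), 2), round(predicted_residual_rms(g), 2))
```

With equal drift and noise levels, the predicted rms is about 3.2 at g = 0.05, 1.27 at g = 0.6, and 4.9 at g = 1.9. The best gain therefore lies between the sluggish and the noise-amplifying extremes.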

Because subjects were not dilated in the latter part of this study, the AO images were less crisp and bright in several cases. The LSO and tracker are less affected by such pupil problems, because they are less confocal and use a smaller pupil (around 3–4 mm) than the AOSLO subsystem (up to 7.5 mm). Other issues, not addressed at present, include the second-order effects of optical distortion with lateral pupil motion and of torsional eye movements when the imaged point is far from the tracked point. Cyclorotations and drift are the main uncompensated effects, but they rarely account for shifts of more than 10% of the field size. There are benefits still to be realized in tightening the tracking and speeding up the re-lock algorithms (though re-lock can never be faster than a normal blink, of course). One approach being considered is to use the LSO image to assist in recovery from a blink. Future systems will be able to exploit high-speed LSO imaging for image-assisted tracking, re-lock, and real-time stimulus delivery. However, video-rate-limited imaging methods alone are unlikely to reach the bandwidth, precision, and dynamic range required for cone-level AOSLO image stabilization in the living eye.
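The torsion effect scales with the distance between the tracked and imaged points. Once the tracker has removed the translation at the tracked point, a rotation by θ leaves a residual shift of 2r·sin(θ/2) at a point a distance r away, whatever the rotation center. Below is a worked sketch of that geometry, with a hypothetical function name. It assumes a pure in-plane cyclorotation over a locally flat retinal patch and an assumed retinal scale `um_per_deg`; roughly 290 μm/deg is a commonly used value for an emmetropic eye, and the true value varies with axial length. Consider the ~10 deg separation mentioned for Fig. 10 and a 0.1 deg cyclorotation (an illustrative value, not a measurement). The imaged point then shifts by about 5 μm, on the order of a cone diameter.

```python
import math


def torsion_shift_um(separation_deg, torsion_deg, um_per_deg=290.0):
    """Residual shift at the imaged point after the tracked point has been stabilized.

    A rotation by torsion_deg moves two points separated by r relative to each other
    by the chord 2 r sin(theta / 2), independent of the rotation center. um_per_deg is
    an assumed retinal magnification, not a value given in the text.
    """
    r_um = separation_deg * um_per_deg
    theta = math.radians(torsion_deg)
    return 2.0 * r_um * math.sin(theta / 2.0)


print(round(torsion_shift_um(10.0, 0.1), 2))  # 5.06
```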

These considerations point to the essentially hierarchical nature of the tracking/stabilization/alignment problem. A wide-field image-based tracker at a reasonable frame rate of tens of hertz can compensate motions of a few degrees in a poor fixater and bring the residual to <1 deg, or a few hundred micrometers on the retina. (The Heidelberg Spectralis has such a tracker, as do other non-AO devices and systems.) Much more robust, higher-bandwidth, and higher-accuracy tracking is needed to reach the range of 5–10 μm for the average subject, whereas a fine, software-based online tracker would handle grosser motions very inefficiently. Getting from 10 μm rms stabilization down to 1 μm (sub-cone level) is possible with advanced real-time image processing and high-quality AOSLO imaging, as pioneered by Arathorn et al. [50]. However, such methods alone are more easily disrupted by eye motion excursions out of bounds, or compromised by imaging regions that have little structure or poor contrast. We believe hardware tracking is a useful augmentation for advanced AO imaging systems. It will ultimately increase AO image yield. It will enable real-time AO image-alignment software to work much more efficiently and reliably, especially with the poorer-quality images that will be the rule rather than the exception among broader, older patient populations. It will also improve the speed and quality of large image montage generation and automated retinal mosaic imaging for research and future clinical documentation of retinal pathologies.

5. CONCLUSION

These initial results suggest that a new AOSLO instrument with a wide-field interface design has the potential to greatly improve and simplify the clinical applications of adaptive optics retinal imaging. It may also lead to more widespread use of high-resolution imaging technology by ophthalmologists, optometrists, and vision researchers. Improved closed-loop, high-speed retinal tracking, imaging beam control and dynamics, and higher-order correction schemes will lead to better tracking performance in ongoing testing of precision AO imaging systems.

ACKNOWLEDGMENTS

This investigation was supported by National Institutes of Health (NIH) grant nos. 5R01EY14375-07, EY04395, and EY019008.

REFERENCES

- 1. Dreher AW, Bille JF, Weinreb RN. Active optical depth resolution improvement of the laser tomographic scanner. Appl. Opt. 1989;28:804–808. doi: 10.1364/AO.28.000804.

- 2. Liang J, Williams DR, Miller DT. Supernormal vision and high-resolution retinal imaging through adaptive optics. J. Opt. Soc. Am. A. 1997;14:2884–2892. doi: 10.1364/josaa.14.002884.

- 3. Roorda A, Romero-Borja F, Donnelly WI, Queener H, Hebert T, Campbell M. Adaptive optics scanning laser ophthalmoscopy. Opt. Express. 2002;10:405–412. doi: 10.1364/oe.10.000405.

- 4. Hermann B, Fernandez EJ, Unterhuber A, Sattmann H, Fercher AF, Drexler W, Prieto PM, Artal P. Adaptive-optics ultrahigh-resolution optical coherence tomography. Opt. Lett. 2004;29:2142–2144. doi: 10.1364/ol.29.002142.

- 5. Zawadzki RJ, Jones SM, Olivier SS, Zhao M, Bower BA, Izatt JA, Choi S, Laut S, Werner JS. Adaptive-optics optical coherence tomography for high-resolution and high-speed 3D retinal in vivo imaging. Opt. Express. 2005;13:8532–8546. doi: 10.1364/opex.13.008532.

- 6. Zhang Y, Cense B, Rha J, Jonnal RS, Gao W, Zawadzki RJ, Werner JS, Jones S, Olivier S, Miller DT. High-speed volumetric imaging of cone photoreceptors with adaptive optics spectral-domain optical coherence tomography. Opt. Express. 2006;14:4380–4394. doi: 10.1364/OE.14.004380.

- 7. Burns SA, Tumbar R, Elsner AE, Ferguson RD, Hammer DX. Large field-of-view, modular, stabilized, adaptive-optics-based scanning laser ophthalmoscope. J. Opt. Soc. Am. A. 2007;24:1313–1326. doi: 10.1364/josaa.24.001313.

- 8. Bigelow CE, Iftimia NV, Ferguson RD, Ustun TE, Bloom B, Hammer DX. Compact multimodal adaptive-optics spectral-domain optical coherence tomography instrument for retinal imaging. J. Opt. Soc. Am. A. 2007;24:1327–1336. doi: 10.1364/josaa.24.001327.

- 9. Zawadzki RJ, Cense B, Zhang Y, Choi SS, Miller DT, Werner JS. Ultrahigh-resolution optical coherence tomography with monochromatic and chromatic aberration correction. Opt. Express. 2008;16:8126–8143. doi: 10.1364/oe.16.008126.

- 10. Fernández EJ, Hermann B, Považay B, Unterhuber A, Sattmann H, Hofer B, Ahnelt PK, Drexler W. Ultrahigh-resolution optical coherence tomography and pancorrection for cellular imaging of the living human retina. Opt. Express. 2008;16:11083–11094. doi: 10.1364/oe.16.011083.

- 11. Zhang Y, Poonja S, Roorda A. MEMS-based adaptive optics scanning laser ophthalmoscopy. Opt. Lett. 2006;31:1268–1270. doi: 10.1364/ol.31.001268.

- 12. Jonnal RS, Rha J, Zhang Y, Cense B, Gao W, Miller DT. In vivo functional imaging of human cone photoreceptors. Opt. Express. 2007;15:16141–16160. doi: 10.1364/OE.15.016141.

- 13. Grieve K, Roorda A. Intrinsic signals from human cone photoreceptors. Invest. Ophthalmol. Visual Sci. 2008;49:713–719. doi: 10.1167/iovs.07-0837.

- 14. Roorda A, Williams DR. The arrangement of the three cone classes in the living human eye. Nature. 1999;397:520–522. doi: 10.1038/17383.

- 15. Carroll J, Neitz M, Hofer H, Neitz J, Williams DR. Functional photoreceptor loss revealed with adaptive optics: an alternate cause of color blindness. Proc. Natl. Acad. Sci. U.S.A. 2004;101:8461–8466. doi: 10.1073/pnas.0401440101.

- 16. Hofer H, Carroll J, Neitz J, Neitz M, Williams DR. Organization of the human trichromatic cone mosaic. J. Neurosci. 2005;25:9669–9679. doi: 10.1523/JNEUROSCI.2414-05.2005.

- 17. Roorda A, Williams DR. Optical fiber properties of individual human cones. J. Vision. 2002;2:404–412. doi: 10.1167/2.5.4.

- 18. Makous W, Carroll J, Wolfing JI, Lin J, Christie N, Williams DR. Retinal microscotomas revealed with adaptive-optics microflashes. Invest. Ophthalmol. Visual Sci. 2006;47:4160–4167. doi: 10.1167/iovs.05-1195.

- 19. Putnam NM, Hofer HJ, Doble N, Chen L, Carroll J, Williams DR. The locus of fixation and the foveal cone mosaic. J. Vision. 2005;5:632–639. doi: 10.1167/5.7.3.

- 20. Gray DC, Merigan W, Wolfing JI, Gee BP, Porter J, Dubra A, Twietmeyer TH, Ahmad K, Tumbar R, Reinholz F, Williams DR. In vivo fluorescence imaging of primate retinal ganglion cells and retinal pigment epithelial cells. Opt. Express. 2006;14:7144–7158. doi: 10.1364/oe.14.007144.

- 21. Morgan JIW, Dubra A, Wolfe R, Merigan WH, Williams DR. In vivo autofluorescence imaging of the human and macaque retinal pigment epithelial cell mosaic. Invest. Ophthalmol. Visual Sci. 2009;50:1350–1359. doi: 10.1167/iovs.08-2618.

- 22. Gray DC, Wolfe R, Gee BP, Scoles D, Geng Y, Masella BD, Dubra A, Luque S, Williams DR, Merigan WH. In vivo imaging of the fine structure of rhodamine-labeled macaque retinal ganglion cells. Invest. Ophthalmol. Visual Sci. 2008;49:467–473. doi: 10.1167/iovs.07-0605.

- 23. Martin J, Roorda A. Direct and noninvasive assessment of parafoveal capillary leukocyte velocity. Ophthalmology. 2005;112:2219–2224. doi: 10.1016/j.ophtha.2005.06.033.

- 24. Zhong Z, Petrig BL, Qi X, Burns SA. In vivo measurement of erythrocyte velocity and retinal blood flow using adaptive optics scanning laser ophthalmoscopy. Opt. Express. 2008;16:12746–12756. doi: 10.1364/oe.16.012746.

- 25. Yoon MK, Roorda A, Zhang Y, Nakanishi C, Wong L-JC, Zhang Q, Gillum L, Green A, Duncan JL. Adaptive optics scanning laser ophthalmoscopy images demonstrate abnormal cone structure in a family with the mitochondrial DNA T8993C mutation. Invest. Ophthalmol. Visual Sci. 2009;50:1838–1847. doi: 10.1167/iovs.08-2029.

- 26. Choi SS, Doble N, Hardy JL, Jones SM, Keltner JL, Olivier SS, Werner JS. In vivo imaging of the photoreceptor mosaic in retinal dystrophies and correlations with visual function. Invest. Ophthalmol. Visual Sci. 2006;47:2080–2092. doi: 10.1167/iovs.05-0997.

- 27. Wolfing JI, Chung M, Carroll J, Roorda A, Williams DR. High-resolution retinal imaging of cone-rod dystrophy. Ophthalmology. 2006;113:1014–1019. doi: 10.1016/j.ophtha.2006.01.056.

- 28. Roorda A, Zhang Y, Duncan JL. High-resolution in vivo imaging of the RPE mosaic in eyes with retinal disease. Invest. Ophthalmol. Visual Sci. 2007;48:2297–2303. doi: 10.1167/iovs.06-1450.

- 29. Gomez-Vieyra A, Dubra A, Malacara-Hernandez D, et al. First-order design of off-axis reflective ophthalmic adaptive optics systems using afocal telescopes. Opt. Express. 2009;17(21):18906–18919. doi: 10.1364/OE.17.018906.

- 30. Duncan JL, Zhang Y, Gandhi J, Nakanishi C, Othman M, Branham KEH, Swaroop A, Roorda A. High-resolution imaging with adaptive optics in patients with inherited retinal degeneration. Invest. Ophthalmol. Visual Sci. 2007;48:3283–3291. doi: 10.1167/iovs.06-1422.

- 31. Hammer DX, Iftimia NV, Ferguson RD, Bigelow CE, Ustun TE, Barnaby AM, Fulton AB. Foveal fine structure in retinopathy of prematurity: an adaptive optics Fourier domain optical coherence tomography study. Invest. Ophthalmol. Visual Sci. 2008;49:2061–2070. doi: 10.1167/iovs.07-1228.

- 32. Choi SS, Zawadzki RJ, Keltner JL, Werner JS. Changes in cellular structures revealed by ultra-high-resolution retinal imaging in optic neuropathies. Invest. Ophthalmol. Visual Sci. 2008;49:2103–2119. doi: 10.1167/iovs.07-0980.

- 33. Bedggood P, Daaboul M, Ashman R, Smith G, Metha A. Characteristics of the human isoplanatic patch and implications for adaptive optics retinal imaging. J. Biomed. Opt. 2008;13:024008. doi: 10.1117/1.2907211.

- 34. Cense B, Koperda E, Brown JM, Kocaoglu OP, Gao W, Jonnal RS, Miller DT. Volumetric retinal imaging with ultrahigh-resolution spectral-domain optical coherence tomography and adaptive optics using two broadband light sources. Opt. Express. 2009;17:4095–4111. doi: 10.1364/oe.17.004095.

- 35. Mujat M, Ferguson RD, Patel AH, Iftimia N, Lue N, Hammer DX. High-resolution multimodal clinical ophthalmic imaging system. Opt. Express. 2010;18:11607. doi: 10.1364/OE.18.011607.

- 36. Doble N, Yoon G, Chen L, Bierden P, Singer B, Olivier S, Williams DR. Use of a microelectromechanical mirror for adaptive optics in the human eye. Opt. Lett. 2002;27:1537–1539. doi: 10.1364/ol.27.001537.

- 37. Fernández EJ, Vabre L, Hermann B, Unterhuber A, Považay B, Drexler W. Adaptive optics with a magnetic deformable mirror: applications in the human eye. Opt. Express. 2006;14:8900–8917. doi: 10.1364/oe.14.008900.

- 38. Vargas-Martin F, Prieto PM, Artal P. Correction of the aberrations in the human eye with a liquid-crystal spatial light modulator: limits to performance. J. Opt. Soc. Am. A. 1998;15:2552–2562. doi: 10.1364/josaa.15.002552.

- 39. Shirai T. Liquid-crystal adaptive optics based on feedback interferometry for high-resolution retinal imaging. Appl. Opt. 2002;41:4013. doi: 10.1364/ao.41.004013.

- 40. Dubra A, Gray DC, Morgan JIW, Williams DR. MEMS in adaptive optics scanning laser ophthalmoscopy: achievements and challenges. Proc. SPIE. 2008;6888:688803–688803-13.

- 41. Zou W, Qi X, Burns SA. Wavefront-aberration sorting and correction for a dual-deformable-mirror adaptive-optics system. Opt. Lett. 2008;33:2602–2604. doi: 10.1364/ol.33.002602.

- 42. Hammer DX, Ferguson RD, Ustun TE, Bigelow CE, Iftimia NV, Webb RH. Line-scanning laser ophthalmoscope. J. Biomed. Opt. 2006;11:041126. doi: 10.1117/1.2335470.

- 43. Hammer DX, Ferguson RD, Bigelow CE, Iftimia NV, Ustun TE, Burns SA. Adaptive optics scanning laser ophthalmoscope for stabilized retinal imaging. Opt. Express. 2006;14:3354–3367. doi: 10.1364/oe.14.003354.

- 44. Burns SA, Zou W, Song H, Zhong Z. Wavelength variable adaptive optics imaging using a supercontinuum light source. Invest. Ophthalmol. Visual Sci. 2009;50. ARVO E-Abstract 1053/D961.

- 45. Curcio CA, Sloan KR. Packing geometry of human cone photoreceptors: variation with eccentricity and evidence for local anisotropy. Visual Neurosci. 1992;9:169–180. doi: 10.1017/s0952523800009639.

- 46. Chui TYP, Song H, Burns SA. Individual variations in human cone photoreceptor packing density: variations with refractive error. Invest. Ophthalmol. Visual Sci. 2008;49:4679–4687. doi: 10.1167/iovs.08-2135.

- 47. Stevenson SB, Roorda A. Correcting for miniature eye movements in high-resolution scanning laser ophthalmoscopy. Proc. SPIE. 2005;5688:145–151.

- 48. Raghunandan A, Frasier J, Poonja S, Roorda A, Stevenson SB. Psychophysical measurements of referenced and unreferenced motion processing using high-resolution retinal imaging. J. Vision. 2008;8:1–11. doi: 10.1167/8.14.14.

- 49. Vogel CR, Arathorn DW, Roorda A, Parker A. Retinal motion estimation in adaptive optics scanning laser ophthalmoscopy. Opt. Express. 2006;14:487–497. doi: 10.1364/opex.14.000487.

- 50. Arathorn DW, Yang Q, Vogel CR, Zhang Y, Tiruveedhula P, Roorda A. Retinally stabilized cone-targeted stimulus delivery. Opt. Express. 2007;15:13731–13744. doi: 10.1364/oe.15.013731.