Abstract

We present a subgradient extragradient method for solving variational inequalities in Hilbert space. In addition, we propose a modified version of our algorithm that finds a solution of a variational inequality which is also a fixed point of a given nonexpansive mapping. We establish weak convergence theorems for both algorithms.

Keywords: Extragradient method, Nonexpansive mapping, Subgradient, Variational inequalities

1 Introduction

In this paper, we are concerned with the Variational Inequality (VI), which consists in finding a point $x^* \in C$ such that

$$\langle f(x^*), x - x^* \rangle \ge 0 \quad \text{for all } x \in C, \tag{1}$$

where H is a real Hilbert space, f : H → H is a given mapping, C ⊆ H is nonempty, closed and convex, and 〈·, ·〉 denotes the inner product in H. This problem, denoted by VI(C, f), is a fundamental problem in Variational Analysis and, in particular, in Optimization Theory. Many algorithms for solving the VI are projection algorithms that employ projections onto the feasible set C of the VI, or onto some related set, in order to iteratively reach a solution. In particular, Korpelevich [1] proposed an algorithm for solving the VI in Euclidean space, known as the Extragradient Method (see also Facchinei and Pang [2, Chapter 12]). In each iteration of her algorithm, in order to get the next iterate $x^{k+1}$, two orthogonal projections onto C are calculated, according to the following iterative step. Given the current iterate $x^k$, calculate

$$y^k = P_C(x^k - \tau f(x^k)) \tag{2}$$

and then

$$x^{k+1} = P_C(x^k - \tau f(y^k)), \tag{3}$$

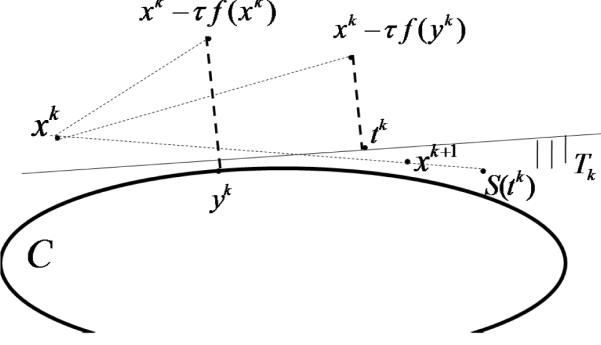

where τ is some positive number and $P_C$ denotes the Euclidean least distance projection onto C. Figure 1 illustrates the iterative step (2) and (3). The literature on the VI is vast and Korpelevich's extragradient method has received great attention from many authors, who have improved it in various ways; see, e.g., [3, 4, 5] and references therein, to name but a few.

Figure 1. Korpelevich's iterative step.
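As an illustration of the iterative step (2) and (3), the following Python sketch runs the extragradient iteration on a small two-dimensional example. The feasible set (the box [0, 1]²), the affine mapping `f_affine`, the step size and the iteration count are assumptions chosen for this example only; they do not come from the paper. The mapping is monotone and Lipschitz continuous with L = √2, so τ = 0.5 satisfies τ < 1/L.

```python
def project_box(x, lo=0.0, hi=1.0):
    """Euclidean projection onto the box [lo, hi]^n (componentwise clipping)."""
    return [min(max(xi, lo), hi) for xi in x]


def extragradient(f, project, x0, tau, iters=200):
    """Korpelevich's step: y = P_C(x - tau*f(x)), then x_next = P_C(x - tau*f(y))."""
    x = list(x0)
    for _ in range(iters):
        fx = f(x)
        y = project([xi - tau * gi for xi, gi in zip(x, fx)])   # first projection, as in (2)
        fy = f(y)
        x = project([xi - tau * gi for xi, gi in zip(x, fy)])   # second projection, as in (3)
    return x


def f_affine(x):
    """Hypothetical test mapping f(x) = M x + q with M = [[1, 1], [-1, 1]], q = (-1, 0.5)."""
    return [x[0] + x[1] - 1.0, -x[0] + x[1] + 0.5]


# The zero of f_affine, (0.75, 0.25), lies inside the box and therefore solves the VI.
x_approx = extragradient(f_affine, project_box, [0.0, 0.0], tau=0.5)
print(x_approx)
```

Both projections here are cheap because C is a box; for a general closed convex set each of them requires solving a minimal distance problem.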

Though convergence was proved in [1] under the assumptions of Lipschitz continuity and pseudo-monotonicity, there is still the need to calculate two projections onto C. If the set C is simple enough, so that projections onto it are easily executed, then this method is particularly useful; but, if C is a general closed and convex set, then a minimal distance problem has to be solved (twice) in order to obtain the next iterate. This might seriously affect the efficiency of the extragradient method. Therefore, we developed in [6] the subgradient extragradient algorithm in Euclidean space, in which we replace the (second) projection (3) onto C by a projection onto a specific constructible half-space, which is actually one of the subgradient half-spaces as will be explained later. In this paper, we study the subgradient extragradient method for solving the VI in Hilbert space. In addition, we present a modified version of the algorithm, which finds a solution of the VI that is also a fixed point of a given nonexpansive mapping. We establish weak convergence theorems for both algorithms.

Our paper is organized as follows. In Section 3, we sketch a proof of the weak convergence of the extragradient method. In Section 4, the subgradient extragradient algorithm is presented. It is analyzed in Section 5. In Section 6, we modify the subgradient extragradient algorithm and then analyze it in Section 7.

2 Preliminaries

Let H be a real Hilbert space with inner product 〈·, ·〉 and norm ∥ · ∥, and let D be a nonempty, closed and convex subset of H. We write $x^k \rightharpoonup x$ to indicate that the sequence $\{x^k\}_{k=0}^\infty$ converges weakly to x and $x^k \to x$ to indicate that the sequence $\{x^k\}_{k=0}^\infty$ converges strongly to x. For each point x ∈ H, there exists a unique nearest point in D, denoted by $P_D(x)$. That is,

$$\|x - P_D(x)\| \le \|x - y\| \quad \text{for all } y \in D. \tag{4}$$

The mapping $P_D$ : H → D is called the metric projection of H onto D. It is well known that $P_D$ is a nonexpansive mapping of H onto D, i.e.,

$$\|P_D(x) - P_D(y)\| \le \|x - y\| \quad \text{for all } x, y \in H. \tag{5}$$

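The metric projection $P_D$ is characterized [7, Section 3] by the following two properties:

$$P_D(x) \in D \tag{6}$$

and

$$\langle x - P_D(x), P_D(x) - y \rangle \ge 0 \quad \text{for all } x \in H,\ y \in D, \tag{7}$$

and if D is a hyperplane, then (7) becomes an equality. It follows that

$$\|x - y\|^2 \ge \|x - P_D(x)\|^2 + \|y - P_D(x)\|^2 \quad \text{for all } x \in H,\ y \in D. \tag{8}$$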
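The projection properties can be checked numerically. The Python sketch below assumes that (7) is the standard variational characterization 〈x − P_D(x), P_D(x) − y〉 ≥ 0 for y ∈ D and that (8) is the resulting inequality ∥x − y∥² ≥ ∥x − P_D(x)∥² + ∥y − P_D(x)∥²; the set D (the closed unit disc in the plane), the sampling range and the helper names are choices made for this illustration.

```python
import math
import random


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def project_ball(x, radius=1.0):
    """Metric projection onto the closed ball of the given radius centred at 0."""
    n = math.sqrt(dot(x, x))
    return list(x) if n <= radius else [radius * xi / n for xi in x]


random.seed(0)
min_inner = float("inf")   # smallest observed value of <x - P(x), P(x) - y>
min_gap = float("inf")     # smallest observed value of |x-y|^2 - |x-P(x)|^2 - |y-P(x)|^2
for _ in range(1000):
    x = [random.uniform(-3.0, 3.0), random.uniform(-3.0, 3.0)]
    y = project_ball([random.uniform(-3.0, 3.0), random.uniform(-3.0, 3.0)])  # a point of D
    px = project_ball(x)
    x_px = [a - b for a, b in zip(x, px)]
    px_y = [a - b for a, b in zip(px, y)]
    x_y = [a - b for a, b in zip(x, y)]
    min_inner = min(min_inner, dot(x_px, px_y))
    min_gap = min(min_gap, dot(x_y, x_y) - dot(x_px, x_px) - dot(px_y, px_y))

print(min_inner, min_gap)  # both should be nonnegative up to rounding error
```

The second quantity equals twice the first, which is how (8) follows from (7) after expanding ∥x − y∥² around P_D(x).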

We denote by $N_D(v)$ the normal cone of D at v ∈ D, i.e.,

$$N_D(v) := \{w \in H \mid \langle w, y - v \rangle \le 0 \text{ for all } y \in D\}. \tag{9}$$

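We also recall that in a real Hilbert space H,

$$\|\lambda x + (1 - \lambda) y\|^2 = \lambda \|x\|^2 + (1 - \lambda) \|y\|^2 - \lambda (1 - \lambda) \|x - y\|^2 \tag{10}$$

for all x, y ∈ H and λ ∈ [0, 1].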

Definition 2.1 Let $B : H \rightrightarrows 2^H$ be a point-to-set operator defined on a real Hilbert space H. B is called a maximal monotone operator iff B is monotone, i.e.,

$$\langle u - v, x - y \rangle \ge 0 \quad \text{for all } u \in B(x) \text{ and } v \in B(y), \tag{11}$$

and the graph G(B) of B,

$$G(B) := \{(x, u) \in H \times H \mid u \in B(x)\}, \tag{12}$$

is not properly contained in the graph of any other monotone operator.

It is known ([8, Theorem 3]) that a monotone mapping B is maximal if and only if, for any (x, u) ∈ H × H, if 〈u − v, x − y〉 ≥ 0 for all (y, v) ∈ G(B), then it follows that u ∈ B(x).

The next property is known as the Opial condition [9]. Any Hilbert space has this property.

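Condition 2.1 (Opial) For any sequence $\{x^k\}_{k=0}^\infty$ in H that converges weakly to x ($x^k \rightharpoonup x$),

$$\liminf_{k \to \infty} \|x^k - x\| < \liminf_{k \to \infty} \|x^k - y\| \quad \text{for all } y \ne x. \tag{13}$$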

The next lemma was proved in [10, Lemma 3.2].

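Lemma 2.1 Let H be a real Hilbert space and let D be a nonempty, closed and convex subset of H. Let the sequence $\{x^k\}_{k=0}^\infty \subset H$ be Fejér-monotone with respect to D, i.e., for every u ∈ D,

$$\|x^{k+1} - u\| \le \|x^k - u\| \quad \text{for all } k \ge 0. \tag{14}$$

Then $\{P_D(x^k)\}_{k=0}^\infty$ converges strongly to some z ∈ D.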

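Notation 2.1 Any closed and convex set D ⊂ H can be represented as

$$D = \{x \in H \mid c(x) \le 0\}, \tag{15}$$

where c : H → R is an appropriate convex function.

We denote the subdifferential set of c at a point x by

$$\partial c(x) := \{\xi \in H \mid c(y) \ge c(x) + \langle \xi, y - x \rangle \text{ for all } y \in H\}. \tag{16}$$

For z ∈ H, take any ξ ∈ ∂c(z) and define

$$T(z) := \{w \in H \mid c(z) + \langle \xi, w - z \rangle \le 0\}. \tag{17}$$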

This is a half-space the bounding hyperplane of which separates the set D from the point z if z ∉ int D. Otherwise T(z) = H.
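The practical appeal of such a half-space is that projecting onto it has a closed form. The Python sketch below assumes the standard subgradient half-space T(z) = {w : c(z) + 〈ξ, w − z〉 ≤ 0} with ξ ∈ ∂c(z); the function name `project_halfspace` and the example set (the unit disc, written as c(x) = ∥x∥² − 1 with gradient 2x) are illustrative choices, not part of the paper.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def project_halfspace(p, xi, z, c_z):
    """Project p onto T(z) = {w : c_z + <xi, w - z> <= 0}.

    If p already satisfies the inequality it is returned unchanged. If xi = 0
    the constraint does not depend on w, so p is also returned unchanged.
    """
    violation = c_z + dot(xi, [pi - zi for pi, zi in zip(p, z)])
    norm_sq = dot(xi, xi)
    if violation <= 0.0 or norm_sq == 0.0:
        return list(p)
    step = violation / norm_sq
    return [pi - step * gi for pi, gi in zip(p, xi)]


# Hypothetical example: D is the unit disc, z = (2, 0) lies outside D.
z = [2.0, 0.0]
c_z = dot(z, z) - 1.0            # c(z) = 3
xi = [2.0 * zi for zi in z]      # gradient of c at z, here (4, 0)
w = project_halfspace(z, xi, z, c_z)
print(w)                         # lands on the bounding hyperplane, between z and D
```

No minimal distance problem has to be solved: one inner product and one scaling suffice, which is the saving the subgradient extragradient method exploits.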

The next lemma is known (see, e.g., [11, Lemma 3.1]).

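Lemma 2.2 Let H be a real Hilbert space, let $\{\alpha_k\}_{k=0}^\infty$ be a real sequence satisfying 0 < a ≤ $\alpha_k$ ≤ b < 1 for all k ≥ 0, and let $\{v^k\}_{k=0}^\infty$ and $\{w^k\}_{k=0}^\infty$ be two sequences in H such that for some σ ≥ 0,

$$\limsup_{k \to \infty} \|v^k\| \le \sigma, \tag{18}$$

$$\limsup_{k \to \infty} \|w^k\| \le \sigma, \tag{19}$$

and

$$\lim_{k \to \infty} \|\alpha_k v^k + (1 - \alpha_k) w^k\| = \sigma. \tag{20}$$

Then

$$\lim_{k \to \infty} \|v^k - w^k\| = 0. \tag{21}$$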

The next fact is known as the Demiclosedness Principle [12].

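Demiclosedness Principle. Let H be a real Hilbert space, D a closed and convex subset of H and let S : D → H be a nonexpansive mapping, i.e.,

$$\|S(x) - S(y)\| \le \|x - y\| \quad \text{for all } x, y \in D. \tag{22}$$

Then I − S (I is the identity operator on H) is demiclosed at y ∈ H, i.e., for any sequence $\{x^k\}_{k=0}^\infty$ in D such that $x^k \rightharpoonup \bar{x} \in D$ and $(I - S)x^k \to y$, we have $(I - S)\bar{x} = y$.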

3 The Extragradient Algorithm

In this section we sketch the proof of the weak convergence of Korpelevich's extragradient method, (2)−(3).

We assume the following conditions.

Condition 3.1 The solution set of (1), denoted by SOL(C, f), is nonempty.

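Condition 3.2 The mapping f is monotone on C, i.e.,

$$\langle f(x) - f(y), x - y \rangle \ge 0 \quad \text{for all } x, y \in C. \tag{23}$$

Condition 3.3 The mapping f is Lipschitz continuous on C with constant L > 0, that is,

$$\|f(x) - f(y)\| \le L \|x - y\| \quad \text{for all } x, y \in C. \tag{24}$$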

We will use the same outline in Section 5. The next lemma is a known result which is crucial for the proof of our convergence theorem.

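Lemma 3.1 Let $\{x^k\}_{k=0}^\infty$ and $\{y^k\}_{k=0}^\infty$ be the two sequences generated by the extragradient method, (2)–(3), and let u ∈ SOL(C, f). Then, under Conditions 3.1–3.3, we have

$$\|x^{k+1} - u\|^2 \le \|x^k - u\|^2 - (1 - \tau^2 L^2) \|y^k - x^k\|^2 \quad \text{for all } k \ge 0. \tag{25}$$

Proof. See, e.g., [1, Theorem 1, eq. (14)] and [2, Lemma 12.1.10, p. 1117].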

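Theorem 3.1 Assume that Conditions 3.1–3.3 hold and let τ < 1/L. Then any sequences $\{x^k\}_{k=0}^\infty$ and $\{y^k\}_{k=0}^\infty$ generated by the extragradient method weakly converge to the same solution u* ∈ SOL(C, f) and furthermore,

$$u^* = \lim_{k \to \infty} P_{\mathrm{SOL}(C, f)}(x^k). \tag{26}$$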

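Proof. Fix u ∈ SOL(C, f) and define ρ := 1 − τ²L². Since τ < 1/L, ρ ∈ (0, 1). By (25), we have

$$\|x^{k+1} - u\|^2 \le \|x^k - u\|^2 - \rho \|y^k - x^k\|^2. \tag{27}$$

Using (25) with k ← (k − 1), we get

$$\|x^k - u\|^2 \le \|x^{k-1} - u\|^2 - \rho \|y^{k-1} - x^{k-1}\|^2. \tag{28}$$

Continuing, we get for all integers K ≥ 0,

$$\|x^{K+1} - u\|^2 \le \|x^0 - u\|^2 - \rho \sum_{k=0}^{K} \|y^k - x^k\|^2, \tag{29}$$

and therefore

$$\sum_{k=0}^{\infty} \|y^k - x^k\|^2 \le \frac{1}{\rho} \|x^0 - u\|^2 < \infty. \tag{30}$$

Hence

$$\lim_{k \to \infty} \|y^k - x^k\| = 0. \tag{31}$$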

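By Lemma 3.1, the sequence $\{x^k\}_{k=0}^\infty$ is bounded. Therefore it has at least one weak accumulation point. If $\bar{x}$ is a weak limit point of some subsequence $\{x^{k_j}\}_{j=0}^\infty$ of $\{x^k\}_{k=0}^\infty$, then

$$x^{k_j} \rightharpoonup \bar{x} \quad \text{as } j \to \infty \tag{32}$$

and

$$y^{k_j} \rightharpoonup \bar{x} \quad \text{as } j \to \infty. \tag{33}$$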

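Let

$$A(v) := \begin{cases} f(v) + N_C(v), & v \in C, \\ \emptyset, & v \notin C, \end{cases} \tag{34}$$

where $N_C(v)$ is the normal cone of C at v ∈ C (see (9)). It is known that A is a maximal monotone operator and $A^{-1}(0)$ = SOL(C, f). If (v, w) ∈ G(A), then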

| (35) |

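and therefore $\bar{x} \in A^{-1}(0)$ = SOL(C, f). The Opial condition now implies that the entire sequence $\{x^k\}_{k=0}^\infty$ weakly converges to $\bar{x}$. Finally, if we take

$$u^k := P_{\mathrm{SOL}(C, f)}(x^k), \tag{36}$$

then by (7) and Lemma 2.1, we see that $\{u^k\}_{k=0}^\infty$ converges strongly to some u* ∈ SOL(C, f). We also have

$$\langle \bar{x} - u^*, u^* - \bar{x} \rangle \ge 0, \tag{37}$$

and hence $u^* = \bar{x}$, which completes the proof.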

4 The Subgradient Extragradient Algorithm

Next we present the subgradient extragradient algorithm [6].

Algorithm 4.1 The subgradient extragradient algorithm

Step 0: Select a starting point x0 ∈ H and τ > 0, and set k = 0.

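Step 1: Given the current iterate $x^k$, compute

$$y^k = P_C(x^k - \tau f(x^k)), \tag{38}$$

construct the half-space $T_k$ the bounding hyperplane of which supports C at $y^k$,

$$T_k := \{w \in H \mid \langle (x^k - \tau f(x^k)) - y^k, w - y^k \rangle \le 0\}, \tag{39}$$

and calculate the next iterate

$$x^{k+1} = P_{T_k}(x^k - \tau f(y^k)). \tag{40}$$

Step 2: If $x^k = y^k$, then stop. Otherwise, set k ← (k + 1) and return to Step 1.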
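The steps of Algorithm 4.1 can be sketched in Python as follows. The sketch assumes that the half-space is $T_k = \{w : \langle (x^k - \tau f(x^k)) - y^k, w - y^k \rangle \le 0\}$, which is consistent with Remark 4.1, and it replaces the exact test $x^k = y^k$ of Step 2 by a tolerance, as is usual in floating-point arithmetic. The test problem (the unit disc as C and the mapping `f_shift`, whose VI solution is the point (1, 0)) and all function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def project_ball(x, radius=1.0):
    """Metric projection onto the closed ball of the given radius centred at 0."""
    n = math.sqrt(dot(x, x))
    return list(x) if n <= radius else [radius * xi / n for xi in x]


def subgradient_extragradient(f, project_C, x0, tau, max_iter=500, tol=1e-12):
    x = list(x0)
    for _ in range(max_iter):
        z = [xi - tau * gi for xi, gi in zip(x, f(x))]   # x^k - tau f(x^k)
        y = project_C(z)                                  # the only projection onto C
        if math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y))) <= tol:
            break                                         # Step 2, up to a tolerance
        a = [zi - yi for zi, yi in zip(z, y)]             # normal vector of T_k
        p = [xi - tau * gi for xi, gi in zip(x, f(y))]    # x^k - tau f(y^k)
        viol = dot(a, [pi - yi for pi, yi in zip(p, y)])
        a_sq = dot(a, a)
        if viol > 0.0 and a_sq > 0.0:                     # p lies outside T_k
            p = [pi - (viol / a_sq) * ai for pi, ai in zip(p, a)]
        x = p                                             # x^{k+1} = P_{T_k}(p)
    return x


def f_shift(x):
    """Hypothetical monotone mapping f(x) = x - (2, 0), Lipschitz with L = 1."""
    return [x[0] - 2.0, x[1]]


x_approx = subgradient_extragradient(f_shift, project_ball, [0.0, 1.0], tau=0.5)
print(x_approx)
```

When $x^k - \tau f(x^k)$ already belongs to C, the normal vector vanishes and $T_k$ is the whole space, so the second step reduces to an unprojected update.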

Remark 4.1 Every closed convex set C can be represented as a sublevel set of a convex function c : H → R as in (15); so if c is, in addition, differentiable at $y^k$, then $\{(x^k - \tau f(x^k)) - y^k\} = \partial c(y^k) = \{\nabla c(y^k)\}$. Otherwise, $(x^k - \tau f(x^k)) - y^k \in \partial c(y^k)$.

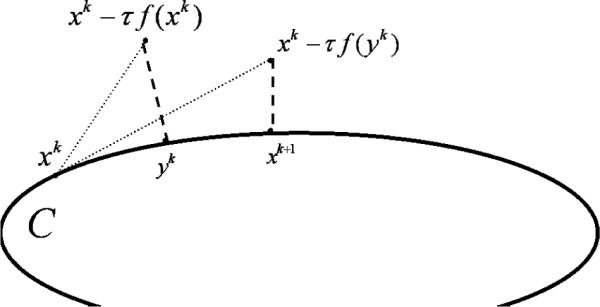

Figure 2 illustrates the iterative step of this algorithm.

Figure 2. $x^{k+1}$ is a subgradient projection of the point $x^k - \tau f(y^k)$ onto the half-space $T_k$.

We assume the following condition.

Condition 4.1 The mapping f is Lipschitz continuous on H with constant L > 0, that is,

$$\|f(x) - f(y)\| \le L \|x - y\| \quad \text{for all } x, y \in H. \tag{41}$$

5 Convergence of the Subgradient Extragradient Algorithm

In this section we give a complete proof of the weak convergence theorem for Algorithm 4.1, using similar techniques to those sketched in Section 3. First we show that the stopping criterion in Step 2 of Algorithm 4.1 is valid.

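Lemma 5.1 If $x^k = y^k$ in Algorithm 4.1, then $x^k$ ∈ SOL(C, f).

Proof. If $x^k = y^k$, then $x^k = P_C(x^k - \tau f(x^k))$, so $x^k \in C$. By the variational characterization of the metric projection onto C, we have

$$\langle w - x^k, (x^k - \tau f(x^k)) - x^k \rangle \le 0 \quad \text{for all } w \in C, \tag{42}$$

which implies that

$$\tau \langle w - x^k, f(x^k) \rangle \ge 0 \quad \text{for all } w \in C. \tag{43}$$

Since τ > 0, inequality (43) implies that $x^k$ ∈ SOL(C, f).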

The next lemma is crucial for the proof of our convergence theorem.

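Lemma 5.2 Let $\{x^k\}_{k=0}^\infty$ and $\{y^k\}_{k=0}^\infty$ be the two sequences generated by Algorithm 4.1 and let u ∈ SOL(C, f). Then, under Conditions 3.1, 3.2 and 4.1, we have

$$\|x^{k+1} - u\|^2 \le \|x^k - u\|^2 - (1 - \tau^2 L^2) \|y^k - x^k\|^2 \quad \text{for all } k \ge 0. \tag{44}$$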

Proof. Since u ∈ SOL(C, f), yk ∈ C and f is monotone, we have

| (45) |

This implies that

| (46) |

So,

| (47) |

By the variational characterization of the metric projection onto Tk, we have

| (48) |

for all k ≥ 0. Thus,

| (49) |

Denoting $z^k = x^k - \tau f(y^k)$, we obtain

| (50) |

Since

| (51) |

for all k ≥ 0, we get

| (52) |

for all k ≥ 0. Hence,

| (53) |

where the last inequality follows from (47). So,

| (54) |

and by (49),

| (55) |

Using the Cauchy–Schwarz inequality and Condition 4.1, we obtain

| (56) |

In addition,

| (57) |

So,

| (58) |

Combining the above inequalities and using Condition 4.1, we see that

| (59) |

Finally, we get

| (60) |

which completes the proof.

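Theorem 5.1 Assume that Conditions 3.1, 3.2 and 4.1 hold and let τ < 1/L. Then any sequences $\{x^k\}_{k=0}^\infty$ and $\{y^k\}_{k=0}^\infty$ generated by Algorithm 4.1 weakly converge to the same solution u* ∈ SOL(C, f) and furthermore,

$$u^* = \lim_{k \to \infty} P_{\mathrm{SOL}(C, f)}(x^k). \tag{61}$$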

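Proof. Fix u ∈ SOL(C, f) and define ρ := 1 − τ²L². Since τ < 1/L, ρ ∈ (0, 1). By (60), we have

$$\|x^{k+1} - u\|^2 \le \|x^k - u\|^2 - \rho \|y^k - x^k\|^2 \tag{62}$$

or

$$\rho \|y^k - x^k\|^2 \le \|x^k - u\|^2 - \|x^{k+1} - u\|^2. \tag{63}$$

Using (60) with k ← (k − 1), we get

$$\|x^k - u\|^2 \le \|x^{k-1} - u\|^2 - \rho \|y^{k-1} - x^{k-1}\|^2 \tag{64}$$

or

$$\rho \|y^{k-1} - x^{k-1}\|^2 \le \|x^{k-1} - u\|^2 - \|x^k - u\|^2. \tag{65}$$

Continuing, we get for all integers K ≥ 0,

$$\rho \sum_{k=0}^{K} \|y^k - x^k\|^2 \le \|x^0 - u\|^2 - \|x^{K+1} - u\|^2 \le \|x^0 - u\|^2. \tag{66}$$

Since the sequence $\left\{\sum_{k=0}^{K} \|y^k - x^k\|^2\right\}_{K=0}^\infty$ is monotonically increasing and bounded,

$$\rho \sum_{k=0}^{\infty} \|y^k - x^k\|^2 \le \|x^0 - u\|^2. \tag{67}$$

Hence

$$\lim_{k \to \infty} \|y^k - x^k\| = 0. \tag{68}$$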

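By Lemma 5.2, the sequence $\{x^k\}_{k=0}^\infty$ is bounded. Therefore, it has at least one weak accumulation point. If $\bar{x}$ is a weak limit point of some subsequence $\{x^{k_j}\}_{j=0}^\infty$ of $\{x^k\}_{k=0}^\infty$, then

$$x^{k_j} \rightharpoonup \bar{x} \quad \text{as } j \to \infty \tag{69}$$

and

$$y^{k_j} \rightharpoonup \bar{x} \quad \text{as } j \to \infty. \tag{70}$$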

Define the operator A by (34). It is known that A is a maximal monotone operator and $A^{-1}(0)$ = SOL(C, f). If (v, w) ∈ G(A), since w ∈ A(v) = f(v) + $N_C(v)$, we get w − f(v) ∈ $N_C(v)$. Then

| (71) |

On the other hand, by the definition of yk and (7),

| (72) |

or

| (73) |

for all k ≥ 0. Using (68) and applying (71) with , we get

| (74) |

Hence,

| (75) |

and

| (76) |

Taking the limit as j → ∞, we obtain

| (77) |

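and since A is a maximal monotone operator, it follows that $\bar{x} \in A^{-1}(0)$ = SOL(C, f). In order to show that the entire sequence weakly converges to $\bar{x}$, assume that there is another subsequence $\{x^{\bar{k}_j}\}_{j=0}^\infty$ of $\{x^k\}_{k=0}^\infty$ that weakly converges to some $\tilde{x} \ne \bar{x}$ and $\tilde{x}$ ∈ SOL(C, f). Note that from Lemma 5.2 it follows that the sequence $\{\|x^k - u\|\}_{k=0}^\infty$ is decreasing for each u ∈ SOL(C, f). By the Opial condition we have

$$\lim_{k \to \infty} \|x^k - \bar{x}\| = \liminf_{j \to \infty} \|x^{k_j} - \bar{x}\| < \liminf_{j \to \infty} \|x^{k_j} - \tilde{x}\| = \lim_{k \to \infty} \|x^k - \tilde{x}\| = \liminf_{j \to \infty} \|x^{\bar{k}_j} - \tilde{x}\| < \liminf_{j \to \infty} \|x^{\bar{k}_j} - \bar{x}\| = \lim_{k \to \infty} \|x^k - \bar{x}\|, \tag{78}$$

and this is a contradiction, thus $\tilde{x} = \bar{x}$. This implies that the sequences $\{x^k\}_{k=0}^\infty$ and $\{y^k\}_{k=0}^\infty$ converge weakly to the same point $\bar{x}$. Finally, put

$$u^k := P_{\mathrm{SOL}(C, f)}(x^k), \tag{79}$$

so by (7) and since $\bar{x}$ ∈ SOL(C, f),

$$\langle x^k - u^k, u^k - \bar{x} \rangle \ge 0. \tag{80}$$

By Lemma 2.1, $\{u^k\}_{k=0}^\infty$ converges strongly to some u* ∈ SOL(C, f). Therefore

$$\langle \bar{x} - u^*, u^* - \bar{x} \rangle \ge 0, \tag{81}$$

and hence $u^* = \bar{x}$.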

6 The Modified Subgradient Extragradient Algorithm

Next we present the modified subgradient extragradient algorithm, which finds a solution of the VI that is also a fixed point of a given nonexpansive mapping. Let S : H → H be a nonexpansive mapping and denote by Fix(S) its fixed point set, i.e.,

$$\mathrm{Fix}(S) := \{x \in H \mid S(x) = x\}. \tag{82}$$

Let $\{\alpha_k\}_{k=0}^\infty \subset [c, d]$ for some c, d ∈ (0, 1).

Algorithm 6.1 The modified subgradient extragradient algorithm

Step 0: Select a starting point x0 ∈ H and τ > 0, and set k = 0.

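Step 1: Given the current iterate $x^k$, compute

$$y^k = P_C(x^k - \tau f(x^k)), \tag{83}$$

construct the half-space $T_k$ as in (39) and calculate the next iterate

$$x^{k+1} = \alpha_k x^k + (1 - \alpha_k) S P_{T_k}(x^k - \tau f(y^k)). \tag{84}$$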

Step 2: Set k ← (k + 1) and return to Step 1.
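One iteration of Algorithm 6.1 can be sketched in Python as below. The sketch assumes the averaged update $x^{k+1} = \alpha_k x^k + (1 - \alpha_k) S P_{T_k}(x^k - \tau f(y^k))$ with a constant $\alpha_k = 0.5$. The example data are hypothetical: C is the unit disc, `f_shift` is a monotone mapping with L = 1, and `S_axis` (projection onto the horizontal axis) is a nonexpansive mapping whose fixed point set contains the VI solution u = (1, 0), so that Fix(S) ∩ SOL(C, f) is nonempty.

```python
import math


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def project_ball(x, radius=1.0):
    n = math.sqrt(dot(x, x))
    return list(x) if n <= radius else [radius * xi / n for xi in x]


def project_halfspace(p, a, y):
    """Project p onto {w : <a, w - y> <= 0}; this is the whole space when a = 0."""
    viol = dot(a, [pi - yi for pi, yi in zip(p, y)])
    a_sq = dot(a, a)
    if viol <= 0.0 or a_sq == 0.0:
        return list(p)
    return [pi - (viol / a_sq) * ai for pi, ai in zip(p, a)]


def modified_step(x, f, project_C, S, tau, alpha):
    """One step: y = P_C(x - tau f(x)), t = P_T(x - tau f(y)), then average x with S(t)."""
    z = [xi - tau * gi for xi, gi in zip(x, f(x))]
    y = project_C(z)
    a = [zi - yi for zi, yi in zip(z, y)]              # normal vector of the half-space
    p = [xi - tau * gi for xi, gi in zip(x, f(y))]
    s = S(project_halfspace(p, a, y))
    return [alpha * xi + (1.0 - alpha) * si for xi, si in zip(x, s)]


def f_shift(x):
    return [x[0] - 2.0, x[1]]


def S_axis(x):
    return [x[0], 0.0]


u = [1.0, 0.0]                 # common point of Fix(S_axis) and SOL(C, f_shift)
x = [0.0, 1.0]
distances = []                 # distance to u before each step
for _ in range(300):
    distances.append(math.hypot(x[0] - u[0], x[1] - u[1]))
    x = modified_step(x, f_shift, project_ball, S_axis, tau=0.5, alpha=0.5)
print(x)
```

In this example the recorded distances to u never increase, which is the kind of Fejér-type behaviour the convergence analysis relies on.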

Figure 3 illustrates the iterative step of this algorithm. We assume the following condition.

Figure 3. The iterative step of Algorithm 6.1.

Condition 6.1 Fix(S) ∩ SOL(C, f) ≠ ∅.

7 Convergence of the Modified Subgradient Extragradient Algorithm

In this section we establish a weak convergence theorem for Algorithm 6.1. The outline of its proof is similar to that of [11, Theorem 3.1].

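Theorem 7.1 Assume that Conditions 3.2, 4.1 and 6.1 hold and τ < 1/L. Then any sequences $\{x^k\}_{k=0}^\infty$ and $\{y^k\}_{k=0}^\infty$ generated by Algorithm 6.1 weakly converge to the same point u* ∈ Fix(S) ∩ SOL(C, f) and furthermore,

$$u^* = \lim_{k \to \infty} P_{\mathrm{Fix}(S) \cap \mathrm{SOL}(C, f)}(x^k). \tag{85}$$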

Proof. Denote tk := PTk (xk − τf(yk)) for all k ≥ 0. Let u ∈ Fix(S) ∩ SOL(C, f). Applying (8) with D = Tk, x = xk − τf(yk) and y = u, we obtain

| (86) |

By Condition 3.2,

| (87) |

and since u ∈ SOL(C, f)

| (88) |

So,

| (89) |

By (7) applied to Tk,

| (90) |

so

| (91) |

where the last two inequalities follow from the Cauchy–Schwarz inequality and Condition 4.1. Therefore

| (92) |

Observe that

| (93) |

so,

| (94) |

Thus

| (95) |

where the last inequality follows from the fact that τ < 1/L. Using (10), we get

| (96) |

so

| (97) |

Therefore there exists

| (98) |

and and are bounded. From the last relations it follows that

| (99) |

or

| (100) |

Hence,

| (101) |

In addition, by the definition of yk and Tk,

| (102) |

where the last inequality follows from Condition 4.1. So,

| (103) |

and by (101) we get

| (104) |

By the triangle inequality,

| (105) |

so by (101) and (104), we have

| (106) |

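Since $\{x^k\}_{k=0}^\infty$ is bounded, it has a subsequence $\{x^{k_j}\}_{j=0}^\infty$ which weakly converges to some $\bar{x}$. We now show that $\bar{x}$ ∈ Fix(S) ∩ SOL(C, f). Define the operator A as in (34). By using arguments similar to those used in the proof of Theorem 5.1, we get that $\bar{x} \in A^{-1}(0)$ = SOL(C, f). It is now left to show that $\bar{x}$ ∈ Fix(S). To this end, let u ∈ Fix(S) ∩ SOL(C, f) as before. Since S is nonexpansive, we get from (95) that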

| (107) |

By (98),

| (108) |

Furthermore,

| (109) |

So applying Lemma 2.2, we obtain

| (110) |

Since

| (111) |

It follows from (106) and (110) that

| (112) |

Since S is nonexpansive on H, and

| (113) |

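we obtain by the Demiclosedness Principle that $S(\bar{x}) = \bar{x}$, which means that $\bar{x}$ ∈ Fix(S). Now, again by using similar arguments to those used in the proof of Theorem 5.1, we get that the entire sequence $\{x^k\}_{k=0}^\infty$ weakly converges to $\bar{x}$. Therefore the sequences $\{x^k\}_{k=0}^\infty$ and $\{y^k\}_{k=0}^\infty$ weakly converge to $\bar{x}$. Finally, put

$$u^k := P_{\mathrm{Fix}(S) \cap \mathrm{SOL}(C, f)}(x^k). \tag{114}$$

Since $\bar{x}$ ∈ Fix(S) ∩ SOL(C, f), it follows from (7) that

$$\langle x^k - u^k, u^k - \bar{x} \rangle \ge 0. \tag{115}$$

By Lemma 2.1, $\{u^k\}_{k=0}^\infty$ converges strongly to some u* ∈ Fix(S) ∩ SOL(C, f). Therefore

$$\langle \bar{x} - u^*, u^* - \bar{x} \rangle \ge 0, \tag{116}$$

and hence $u^* = \bar{x}$.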

Remark 7.1 In Algorithm 6.1 we assumed that S was a nonexpansive mapping on H. If it is defined only on C we can replace it by $S P_C$, which is a nonexpansive mapping on C. In this case the iterative step is as follows:

$$y^k = P_C(x^k - \tau f(x^k)),$$

construct the half-space $T_k$ as in (39) and calculate the next iterate

$$x^{k+1} = \alpha_k x^k + (1 - \alpha_k) S P_C\left(P_{T_k}(x^k - \tau f(y^k))\right). \tag{117}$$

8 Conclusions

In this paper we proposed two subgradient extragradient algorithms for solving variational inequalities in Hilbert space and established weak convergence theorems for both of them. The second algorithm finds a solution of a variational inequality which is also a fixed point of a given nonexpansive mapping.

Acknowledgments

This work was partially supported by Award Number R01HL070472 from the National Heart, Lung and Blood Institute. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Heart, Lung and Blood Institute or the National Institutes of Health. The third author was partially supported by the Israel Science Foundation (Grant 647/07), by the Fund for the Promotion of Research at the Technion and by the Technion President's Research Fund.

Footnotes

Communicated by B.T. Polyak

AMS Classification 65K15 · 58E35

References

- [1] Korpelevich GM. The extragradient method for finding saddle points and other problems. Ekonomika i Matematicheskie Metody. 1976;12:747–756.

- [2] Facchinei F, Pang JS. Finite-Dimensional Variational Inequalities and Complementarity Problems. Volume I and Volume II. Springer-Verlag; New York: 2003.

- [3] Iusem AN, Svaiter BF. A variant of Korpelevich's method for variational inequalities with a new search strategy. Optimization. 1997;42:309–321.

- [4] Khobotov EN. Modification of the extra-gradient method for solving variational inequalities and certain optimization problems. USSR Comput. Math. Math. Phys. 1989;27:120–127.

- [5] Solodov MV, Svaiter BF. A new projection method for variational inequality problems. SIAM J. Control Optim. 1999;37:765–776.

- [6] Censor Y, Gibali A, Reich S. Two extensions of Korpelevich's extra-gradient method for solving the variational inequality problem in Euclidean space. Technical Report. 2010.

- [7] Goebel K, Reich S. Uniform Convexity, Hyperbolic Geometry, and Non-expansive Mappings. Marcel Dekker; New York and Basel: 1984.

- [8] Rockafellar RT. On the maximality of sums of nonlinear monotone operators. Trans. Amer. Math. Soc. 1970;149:75–88.

- [9] Opial Z. Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Amer. Math. Soc. 1967;73:591–597.

- [10] Takahashi W, Toyoda M. Weak convergence theorems for nonexpansive mappings and monotone mappings. J. Optim. Theory Appl. 2003;118:417–428.

- [11] Nadezhkina N, Takahashi W. Weak convergence theorem by an extra-gradient method for nonexpansive mappings and monotone mappings. J. Optim. Theory Appl. 2006;128:191–201.

- [12] Browder FE. Fixed point theorems for noncompact mappings in Hilbert space. Proc. Natl. Acad. Sci. USA. 1965;53:1272–1276. doi: 10.1073/pnas.53.6.1272.