Abstract

Studies of sound localization in humans have used various behavioral measures to quantify the observers’ perceptions; a non-comprehensive list includes verbal reports, head pointing, gun pointing, stylus pointing, and laser aiming. Comparison of localization performance reveals that in humans, just as in animals, different results are obtained with different experimental tasks. Accordingly, to circumvent problems associated with task selection and training, this study used gaze, an ethologically valid behavior for spatial pointing in species with a specialized area of the retina, to measure sound localization perception of human subjects. Orienting using gaze as a pointer does not require training, preserves the natural link between perception and action, and allows for direct behavioral comparisons across species. The results revealed, unexpectedly, a large degree of variability across subjects in both accuracy and precision. The magnitude of the average angular localization errors for the most eccentric horizontal targets, however, was very similar to that documented in studies that used head pointing, whereas the magnitude of the localization errors for the frontal targets was considerably larger. In addition, an overall improvement in sound localization in the context of the memory-saccade task, as well as a lack of effect of initial eye and head position on perceived sound location, were documented.

Keywords: Human, Sound localization, Head-unrestrained orienting, Gaze, Memory saccade task, Motor error

Introduction

A large variety of experimental tasks have been used to measure sound localization perception of human subjects. A non-comprehensive list includes verbal report of source location coordinates (Wightman and Kistler 1989b), identification of sound sources (Butler et al. 1990), head pointing (Carlile et al. 1997; Makous and Middlebrooks 1990; Recanzone et al. 1998), pointing with a gun (Langendijk et al. 2001; Oldfield and Parker 1984) or with a stylus on a globe (Gilkey and Anderson 1995), and aiming a laser beam with manual controls (Lewald and Ehrenstein 1998; Zwiers et al. 2003).

Despite the advantage afforded by the delivery of precise verbal instructions, differences exist among the results obtained with different tasks. For example, subjects exhibit higher sound localization accuracy and less variability when instructed to face the perceived location of sound sources (Makous and Middlebrooks 1990) than when instructed to provide their coordinates verbally (Wightman and Kistler 1989b). It must be acknowledged, however, that rigorous comparisons are difficult to make across most studies due to large differences in several fundamental variables such as type of stimuli, form of presentation, use of spatial references to align the head of the subject, and time allowed to produce a response.

Psychophysical studies of absolute sound localization in animals have also shown, quite directly in fact, that differences exist between the results obtained with different experimental tasks. For example, rhesus monkeys point with their eyes to the sources of sounds very poorly when their heads are restrained, but can do so much more accurately and with much less variability when their heads are allowed to move freely (Populin 2006). Likewise, cats can localize sound sources much more accurately when allowed to orient with their heads unrestrained using gaze to point (Tollin et al. 2005) instead of the head (May and Huang 1996).

Ideally, the results of psychophysical studies in humans, in whom invasive physiological recordings are not routinely possible, are complemented by physiological (and psychophysical) recordings from animals that provide information about the underlying neural mechanisms. However, if task type affects the outcome of measurements of sound localization perception, it follows that it should also affect inferences made about the underlying neural mechanisms across species. Therefore, the use of behaviors common to both humans and animals might be imperative to meaningfully link psychophysics and physiology.

A behavior suitable for the study of sound localization that is common to both humans and animals, at least for species such as primates and cats with a specialized area of the retina that can be pointed to a specific area of space (Walls 1942), is the movement of the eyes or gaze. Gaze, defined as eye position in space, commonly refers to this measurement taken under head unrestrained conditions. This behavior allows subjects to point to the perceived location of sound sources quickly and, unlike most laboratory tasks used to study sound localization in humans, does not require specific training before testing because subjects arrive at the laboratory with a lifetime of practice. Alternatives to gaze for indicating the position of sound sources, such as the nose pointing task successfully used in human studies by Carlile et al. (1997) and Makous and Middlebrooks (1990), are not equally suitable for animals, as demonstrated by the comparison of the sound localization performance of cats documented by May and Huang (1996) and Tollin et al. (2005).

Although eye position has been used to measure sound localization perception in humans, most studies have restrained the head of the subjects (Frens and Van Opstal 1995; Hofman and van Opstal 1998; Jay and Sparks 1990; Yao and Peck 1997), which restricts the area of space that can be studied to the oculomotor range of the species, about ±55° (Guitton and Volle 1987). A few studies that allowed orienting with head movements, on the other hand, focused on eye-head coordination (Goossens and Van Opstal 1997) and on the extent to which the auditory system takes into account initial eye and head position to program orienting responses (Goossens and Van Opstal 1997), but not on the psychophysics of absolute sound localization. To my knowledge, it is not known how humans localize the sources of sounds by looking at the perceived locations without practice. Accordingly, this study was carried out to determine the accuracy and precision of human sound localization measured with gaze without specific training; experimental conditions that resembled those used to measure sound localization perception in non-human primates (Populin 2006), such as stimulus type, duration and level, experimental tasks, and experimental setup, were used. In addition, subjects were verbally instructed about how to perform an auditory memory-saccade task with a short delay period and an auditory dissociated-saccade task to measure the localization of acoustic targets starting from positions in which the eyes and head were purposely misaligned. The results revealed large variability in accuracy and precision across subjects, which was on average surprisingly similar to measurements taken with the head pointing task by Carlile et al. (1997) and Makous and Middlebrooks (1990), improved performance in the memory-saccade task in the presence of a spatial reference, and no effect of initial eye and head position on localization. Preliminary data were presented previously (Populin et al. 2001).

Materials and methods

Subjects

Three female and six male humans ranging from 21 to 43 years of age who were free of neurological disease and reported having normal hearing served as subjects. None of the subjects had any experience with experiments involving an absolute sound localization task, and with the exception of DJT and LCP (the author), subjects were naïve to the goals of the study. The experimental procedures were approved by the University of Wisconsin Institutional Review Board (IRB). All subjects gave written informed consent before taking part in the experiments and, with the exception of DJT and LCP, were compensated monetarily for participating.

Experimental setup and stimuli

Data were acquired in a (3 × 3 × 2 m) double-walled soundproof chamber (Acoustic Systems, Austin TX). The interior walls of the chamber, the field coils of the scleral search coil system used to measure eye and head movements, and the structure supporting the speakers were covered with 5.1 cm acoustic foam (Ilbruck, Minneapolis, MN) to attenuate acoustic reflections. The average background sound level in the experimental room was 27 dB SPL (Extech 407740, Extech, Waltham MA).

The acoustic stimuli consisted of 0.1–25 kHz broadband 150 ms noise bursts (rise/fall ±10 ms) generated with Tucker Davis Technologies System 3 hardware (Tucker Davis Technologies, Alachua, FL) and presented through loudspeakers (Radio Shack Supertweeters, modified to transduce low frequencies) located on the frontal hemifield, 84 cm away from the head of the subject; 32 speakers were selected from a larger set by visual inspection of their frequency-response characteristics to a test broadband signal. The locations of the speakers on the frontal hemifield are listed in Table 1. The noise stimulus was identical in all trials (i.e., a frozen noise burst) and was presented at 50–53 dB SPL; the level was roved over a 3 dB range in 1 dB steps to mask physical differences among the speakers that could have provided unwanted cues. At the start of each trial, one of the 32 available speakers had to be selected for the presentation of the stimulus. This process involved closing an electronic switch to establish the connection between the chosen speaker and the stimulus generation system. To minimize the probability of presenting unwanted cues during this process, for example, noise artifacts resulting from closing an electromechanical relay, the data acquisition program selected and then de-selected all 32 speakers in random order (i.e., first closed, then opened the relays corresponding to all available speakers), leaving selected only the speaker that was going to be used for stimulus presentation in a particular trial. Although the number of targets used varied from subject to subject due to their availability to participate in several experimental sessions, the position of the speakers used was maintained constant for the entire study.
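The speaker-selection and level-roving procedure described above can be sketched as follows. This is only an illustration under stated assumptions, not the data acquisition program used in the study: the function names (`plan_trial`, `closed_relays`) are hypothetical, and the sketch assumes that each relay is closed and reopened in turn, with the target relay simply left closed when its turn comes.

```python
import random

N_SPEAKERS = 32                     # speakers available in the study
ROVE_LEVELS_DB = [50, 51, 52, 53]   # 3 dB range in 1 dB steps (dB SPL)


def plan_trial(target, rng):
    """Return (relay_events, level_db) for one trial.

    Assumption: every relay is closed once, in random order, and every
    relay except the target's is opened again right away, so that relay
    noise is produced at all speaker locations on every trial.
    """
    order = list(range(N_SPEAKERS))
    rng.shuffle(order)
    events = []
    for speaker in order:
        events.append(("close", speaker))
        if speaker != target:
            events.append(("open", speaker))
    return events, rng.choice(ROVE_LEVELS_DB)


def closed_relays(events):
    """Replay the switching sequence and return the relays left closed."""
    closed = set()
    for action, speaker in events:
        if action == "close":
            closed.add(speaker)
        else:
            closed.discard(speaker)
    return closed


# Hypothetical trial: speaker index 12 is the target.
events, level_db = plan_trial(target=12, rng=random.Random(0))
```

Replaying `events` with `closed_relays` leaves only the target relay closed, which is the property the procedure is meant to guarantee.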

Table 1.

Summary of angular errors and K−1 for the localization of visual and acoustic targets from all nine subjects combined in the fixation task

| Targets at eye level | (−80, 0) | (−70, 0) | (−60, 0) | (−29, 0) | (−18, 0) | (−10, 0) | (0, 0) | (10, 0) | (18, 0) | (29, 0) | (60, 0) | (70, 0) | (80, 0) | |

| Visual | Ang error | 2.26 | 3.96 | 1.59 | 1.13 | 0.93 | 0.14 | 0.86 | 2.38 | 1.65 | ||||

| K −1 | 0.0043 | 0.0035 | 0.0009 | 0.0010 | 0.0006 | 0.0006 | 0.0005 | 0.0013 | 0.0081 | |||||

| Auditory | Ang error | 5.57 | 2.06 | 3.87 | 1.89 | 0.89 | 1.76 | 3.47 | 4.68 | 5.58 | 3.74 | 8.24 | 6.48 | 1.45 |

| K −1 | 0.0135 | 0.0200 | 0.0154 | 0.0164 | 0.0200 | 0.0216 | 0.0138 | 0.0214 | 0.0220 | 0.0182 | 0.0169 | 0.0131 | 0.0227 | |

| Targets below eye level | (−75, −12) | (−60, −28) | (−60, −20) | (−31.5, −20) | (0, −12) | (0, −22) | (31, −26.5) | (60, −20) | (60, −28) | (75, −12) | ||||

| Visual | Ang error | 1.93 | 2.69 | |||||||||||

| K −1 | 0.0009 | 0.0015 | ||||||||||||

| Auditory | Ang error | 8.20 | 6.41 | 4.14 | 3.55 | 11.63 | 5.48 | 0.34 | 1.84 | 14.77 | 1.79 | |||

| K −1 | 0.0232 | 0.0251 | 0.0132 | 0.0140 | 0.0100 | 0.0115 | 0.0175 | 0.0353 | 0.0209 | 0.0293 | ||||

| Targets above eye level | (−70, 16) | (−60, 7.5) | (−19, 16) | (0, 8) | (0, 16) | (0, 24) | (17, 16) | (60, 9) | (60, 16) | |||||

| Visual | Ang error | 0.0011 | 0.0011 | 0.0011 | ||||||||||

| K −1 | 0.8778 | 0.2390 | 0.6083 | |||||||||||

| Auditory | Ang error | 3.50 | 12.07 | 2.80 | 4.78 | 5.57 | 4.70 | 6.94 | 17.24 | 4.87 | ||||

| K −1 | 0.0384 | 0.0196 | 0.0136 | 0.0145 | 0.0061 | 0.0080 | 0.0117 | 0.0152 | 0.0296 | |||||

Gaze and head movement measurements

Gaze and head position were measured with the search coil technique (Robinson 1963) using a phase angle system (CNC Engineering, Seattle, WA) and fine wire coils embedded in silicone annuli (Skalar Instruments, Delft, The Netherlands). The coil used to measure head position, of identical construction to those used to measure eye movements, was secured to the top of the subject’s head with a custom-made plastic holder attached to a headband; the ensemble weighed 104 g.

Subject preparation and coil calibration procedures

Subjects were asked to read information about the experiment and the risks associated with wearing a scleral search coil, as required by the University of Wisconsin IRB. Upon obtaining written consent, verbal instructions were provided about the experimental procedures and an ophthalmologist applied a drop of topical ophthalmic anesthetic (Novesine; Oxybuprocaine, 0.4%) to the right eye. During the time required for the anesthetic to take effect, the subjects were fitted with the headband that held the coil used to measure head movements. Next, the subjects were instructed to enter the experimental room and to sit in a wooden chair facing a dark hemisphere covered with black cheesecloth that concealed the location of the speakers and LEDs. The height of the chair was adjusted to position the heads of the subjects at the center of the cube housing the field coils of the search coil system used to measure gaze and head position, which was aligned with the straight-ahead position.

After connecting the leads from the head coil to the recording equipment the subjects were asked to move their heads to the left, right, down, and up to determine the polarity of the signals and to align the head with an LED straight ahead to adjust the horizontal and vertical offsets of the coil system. Linear coefficients to transform the voltage representing head position to degrees were obtained before the experimental session by rotating the coil over a 180° range horizontally and 60° range vertically using a protractor.

Once it was determined that all aspects of head-movement recording were working properly, the ophthalmologist inserted the coil into the subject’s right eye, checked the integrity of the coil with the continuity function of a voltmeter, and secured the leads to the forehead with surgical tape to avoid artifacts and interference. The leads were arranged to exit the eye nasally. The subject was then asked to look at LEDs lit at known positions in the frontal hemifield while the analog output of the coil system was digitally sampled; the horizontal and vertical channels representing eye and head position were low-pass filtered at 250 Hz (Krohn-Hite, Co, Avon, MA) and digitally sampled at 500 Hz with an analog-to-digital converter (Tucker Davis Technologies, Alachua, FL). Linear equations were fit to the horizontal and vertical eye movement data, and the coefficients were used to transform the voltage output of the coil system into degrees. The coil calibration procedure was streamlined to maximize data acquisition because the recommended coil wearing time was short; the entire procedure lasted about 20–30 s.
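The linear calibration step can be illustrated with an ordinary least-squares fit of known LED positions against the recorded coil voltages. The sketch below is an assumption about how such a fit might be done, not the calibration code used in the study; the function names and the voltage values are hypothetical, and one such fit would be applied separately to the horizontal and vertical channels.

```python
def fit_line(volts, degrees):
    """Least-squares fit of degrees = gain * volts + offset."""
    n = len(volts)
    mean_v = sum(volts) / n
    mean_d = sum(degrees) / n
    sxx = sum((v - mean_v) ** 2 for v in volts)
    sxy = sum((v - mean_v) * (d - mean_d) for v, d in zip(volts, degrees))
    gain = sxy / sxx
    offset = mean_d - gain * mean_v
    return gain, offset


def volts_to_degrees(v, gain, offset):
    """Apply the fitted coefficients to a raw coil voltage."""
    return gain * v + offset


# Hypothetical horizontal-channel readings while the subject fixated
# LEDs at known azimuths (values invented for illustration only).
led_azimuth_deg = [-20.0, -10.0, 0.0, 10.0, 20.0]
coil_volts = [-0.9, -0.4, 0.1, 0.6, 1.1]

gain, offset = fit_line(coil_volts, led_azimuth_deg)
calibrated = [volts_to_degrees(v, gain, offset) for v in coil_volts]
```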

Organization of the experimental sessions and the experimental tasks

The experimental sessions consisted of a random mixture of visual and auditory trials to any of 14 visual or 16–32 acoustic targets located in the frontal hemifield. The position of the speakers was held constant for the entire study. In the initial session, all subjects were tested with a basic set of 16 targets located on the main horizontal and vertical axes; for subjects who returned for further testing, additional speakers were added to the original set. Auditory trials constituted two-thirds to three-fourths of the total number of trials in a session. The large number of potential targets and their random presentation made it difficult, if not impossible, for the subjects to anticipate their location in upcoming trials.

Data acquisition was limited to approximately 30 min, as recommended by the manufacturer of the coils. Subjects were observed on closed-circuit TV while in the recording chamber. A two-way intercom (Radio Shack, Fort Worth, TX) was available to communicate with the subjects at all times during the experiment. Subjects were given specific instructions to interrupt the experimental session if they experienced discomfort due to the coil in the eye, or felt uncomfortable in the dark, isolated environment of the acoustic chamber. At the completion of data acquisition, the eye coil was immediately removed, and the subject’s right eye was stained with fluorescein and examined under blue light by the ophthalmologist to determine if the cornea had been abraded.

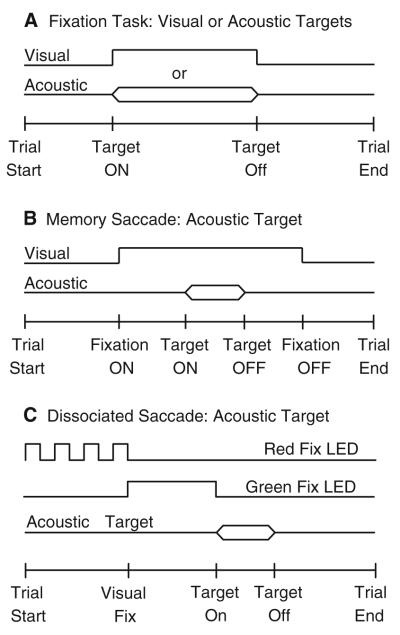

Three experimental tasks were used. The fixation task (Fig. 1a) consisted of an acoustic or visual target presented anywhere in the frontal hemifield without temporal or spatial behavioral constraints. The subject was required to orient to the sources of the stimuli. The memory saccade task (Fig. 1b) consisted of a fixation LED of variable duration (1,000–1,500 ms) presented at the straight-ahead position. During fixation, an acoustic or visual target was presented elsewhere in the frontal hemifield. The subjects were required to maintain their gaze within a (±3°, 3°) acceptance window set around the fixation LED until it was turned off, at which time they were expected to orient to the briefly presented target. Three delays, 300, 500, and 700 ms, were used in random order to prevent subjects from anticipating the moment at which they would be instructed to orient. Trials in which gaze went outside the acceptance window before the fixation LED was turned off were interrupted immediately and discarded. The dissociated saccade task, illustrated in Fig. 1c, was modeled after the task used by Volle and Guitton (1993). It started with an LED blinking at 5 Hz at (±16°, 0°) or (0°, 0°) that instructed the subjects to align their gaze and head with it. The head was required to be within a (±5°, 5°) acceptance window set around the blinking LED. Subsequently, the blinking LED was turned off and a green LED was turned on at either of the other two positions. The subject was required to direct his gaze to the green LED without moving his head, which was aligned with the position of the extinguished blinking LED. Trials in which the subject moved his head were interrupted and discarded. Next, coinciding with the offset of the green LED, an acoustic or visual target was presented elsewhere in the frontal hemifield. The subject was required to look at the target. Trials of different types were presented in random order in all experimental sessions.
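The acceptance-window criterion used to interrupt trials can be sketched as a simple test on each position sample. The sketch assumes a rectangular window whose half-widths are the values quoted above (3° for gaze, 5° for the head); the function names and sample values are hypothetical and are not taken from the data acquisition program.

```python
def within_window(position, center, half_width):
    """True if (horizontal, vertical) position lies inside a rectangular
    window of the given half-widths centered on `center` (all in degrees)."""
    return (abs(position[0] - center[0]) <= half_width[0]
            and abs(position[1] - center[1]) <= half_width[1])


def fixation_held(gaze_samples, center=(0.0, 0.0), half_width=(3.0, 3.0)):
    """True if every gaze sample stays inside the acceptance window;
    a trial would be interrupted and discarded otherwise."""
    return all(within_window(g, center, half_width) for g in gaze_samples)


# Hypothetical gaze samples (degrees) recorded during the fixation period.
good_trial = [(0.5, -0.2), (1.1, 0.4), (2.9, 2.0)]
bad_trial = [(0.5, -0.2), (3.4, 0.1)]   # second sample leaves the window
```

The same test applies to the head in the dissociated saccade task, for example `within_window(head, (16.0, 0.0), (5.0, 5.0))` for a blinking LED at (16°, 0°).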

Fig. 1.

Schematic representation of the experimental tasks. a Fixation Task. Either an acoustic or visual target was presented anywhere in the frontal hemifield without behavioral requirements. The subject was instructed to respond by looking at the perceived location of the sources. b Memory-saccade task. This task started with a fixation LED presented straight ahead. During fixation, a target was presented elsewhere in the frontal hemifield. The subject’s task was to remember the position of the target while maintaining fixation and to look at it when the fixation LED was turned off. Trials in which the subject oriented to the target before the fixation LED was turned off were immediately terminated. c Dissociated saccade task. A red fixation LED flashing at 5 Hz signaled to the subject the presentation of a trial of this type. The subject was required to fixate the flashing LED at (0°, 0°) or (±16°, 0°) and to align the head with it. Next, a green LED was presented from the same position or either from the left or the right (±16°, 0°) of the subject. The subject was instructed to direct his gaze to the green LED without moving his head; this accomplished the dissociation of eye and head position. Coinciding with the offset of the green LED an acoustic target was presented elsewhere in the frontal hemifield. In some trials the red flashing LED straight ahead turned solid green, effectively aligning the eyes and the head at the starting position. The subject was required to look at the perceived location of the target at the offset of the green LED

Instructions to subjects

The subjects were instructed to maintain their backs against the back of the wooden chair on which they sat without moving their torso, and to look in the direction of the targets according to the following instructions. In reference to the fixation task subjects were told, “when you hear a sound or see a light, look to the source.” In reference to the memory saccade task, the subjects were told “when a green LED appears straight ahead, look at it and maintain fixation until it is turned off. An acoustic or another visual target will be presented while you are fixating the green LED: look at the target after the green fixation LED is turned off.” In reference to the dissociated saccade task, the subjects were told “when the fixation LED blinks, align your eyes and head with it; the fixation LED can be located to your left, right, or straight-ahead. Then, a green LED will appear, look at it without moving your head. Lastly, as the green LED is turned off, an acoustic (or visual) target will be presented; your task is to look at it.” No feedback was given on localization accuracy, but subjects were periodically encouraged to “keep up the good work.”

Data analysis

Final gaze position, defined as the position of the eyes in space at the end of the final corrective gaze shift in a trial, was used as a measure of localization. Corrective gaze shifts that occurred within 300 ms of one another were deemed to be directed to the target and were included in the analysis; the return of gaze and head to the vicinity of the straight-ahead position was excluded. Custom interactive graphics software developed in MATLAB (The Math Works, Inc. Natick, MA) was used to determine the start and end of all gaze shifts and head movements in each trial. The criteria to determine the onset of gaze shifts and head movements have been described previously (Populin 2006). Briefly, the mean baseline velocity was computed over a window from 100 ms before to 10 ms after the presentation of the target, during which gaze was expected to be stationary. The end of fixation, defined as the time at which the velocity of the eye exceeded two standard deviations (SD) of the mean baseline, marked the onset of the gaze shift. The return to fixation, defined as the time at which the velocity of the gaze signal returned to within two SD of the mean baseline, marked the end of the gaze shift. Head movements were analyzed in the same way.
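The velocity criterion described above can be sketched as follows. This is an illustrative reading of the criterion, not the MATLAB software used in the study: it assumes a velocity trace sampled at 500 Hz, takes the threshold to be the baseline mean plus two SD, uses the population SD, and the function name `detect_gaze_shift` is hypothetical.

```python
import statistics

FS_HZ = 500  # sampling rate of the position signals


def detect_gaze_shift(velocity, target_idx, fs=FS_HZ, n_sd=2.0):
    """Return (onset_idx, end_idx) of the first gaze shift after the target.

    Baseline: samples from 100 ms before to 10 ms after target onset.
    Onset: first later sample whose velocity exceeds mean + n_sd * SD.
    End: first sample after onset at or below that threshold.
    Either index is None if the corresponding event is not found.
    """
    start = target_idx - round(0.100 * fs)
    stop = target_idx + round(0.010 * fs)
    baseline = velocity[start:stop]
    threshold = statistics.mean(baseline) + n_sd * statistics.pstdev(baseline)

    onset = None
    for i in range(stop, len(velocity)):
        if onset is None:
            if velocity[i] > threshold:
                onset = i
        elif velocity[i] <= threshold:
            return onset, i
    return onset, None


# Synthetic trace (deg/s): low-level noise with one 100 ms movement.
velocity = [1.0 if i % 2 == 0 else 2.0 for i in range(300)]
for i in range(120, 170):
    velocity[i] = 200.0

onset_idx, end_idx = detect_gaze_shift(velocity, target_idx=60)
latency_ms = (onset_idx - 60) * 1000 / FS_HZ
```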

The final gaze position data were analyzed as in Populin (2006) using a modified version of the SPAK software package developed by Carlile et al. (1997) for the analysis of spherical data. Three measures were used to quantify the subjects’ performance: (1) the spherical correlation coefficient (SCC) (Fisher et al. 1987), which provides a measure of the overall correspondence between the final gaze position of individual trials and the targets; (2) the angular error, computed as the mean of the unsigned angles between the final gaze position of individual trials and the targets, which provides a measure of accuracy; and (3) the inverse of Kappa (K−1), derived from the length of the vector resulting from adding the vectors representing final gaze position in individual trials, which is the preferred index of variability of spherical data (Fisher et al. 1987; Watson 1983; Wightman and Kistler 1989b). Head movement data were analyzed in the same way. The number of trials obtained per target varied between 3 and 5 due to the random presentation of the available targets and the relatively fixed duration of the experimental sessions, which was determined by the 30-min recommended coil wearing time.
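The angular error and K−1 measures can be illustrated with a short sketch. It is not the SPAK implementation: it assumes that positions are given as (azimuth, elevation) in degrees, and it uses the common large-concentration estimate of the Fisher concentration parameter, Kappa ≈ (N − 1)/(N − R), where R is the length of the resultant of the N unit vectors. Both choices, as well as the function names, are assumptions made for illustration.

```python
import math


def to_unit_vector(az_deg, el_deg):
    """Convert (azimuth, elevation) in degrees to a 3-D unit vector."""
    az, el = math.radians(az_deg), math.radians(el_deg)
    return (math.cos(el) * math.cos(az),
            math.cos(el) * math.sin(az),
            math.sin(el))


def angle_between(a, b):
    """Unsigned angle in degrees between two unit vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


def mean_angular_error(responses, target):
    """Mean unsigned angle between each final gaze position and the target."""
    t = to_unit_vector(*target)
    errors = [angle_between(to_unit_vector(*r), t) for r in responses]
    return sum(errors) / len(errors)


def kappa_inverse(responses):
    """Estimate 1/Kappa as (N - R) / (N - 1), with R the resultant length."""
    vecs = [to_unit_vector(*r) for r in responses]
    n = len(vecs)
    resultant = math.sqrt(sum(sum(v[k] for v in vecs) ** 2 for k in range(3)))
    return (n - resultant) / (n - 1)


# Hypothetical final gaze positions (degrees) for a target at (0, 0).
responses = [(10.0, 0.0), (-10.0, 0.0), (0.0, 10.0), (0.0, -10.0)]
error_deg = mean_angular_error(responses, target=(0.0, 0.0))
spread = kappa_inverse(responses)
```

Larger values of `spread` indicate more scattered responses; a set of identical responses gives a value of zero. With only 3 to 5 trials per target, an estimate of this kind is necessarily coarse.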

Results

The accuracy and precision with which nine subjects localized sound sources were studied with the fixation task (Fig. 1a), which does not place behavioral constraints before the presentation of the stimuli and does not provide a spatial reference. The data from this experiment will be presented first. In addition, the ability to localize remembered acoustic targets was studied in two subjects using the memory saccade task (Fig. 1b). Data from this experiment reveal the effect of behavioral context, in particular the effect of a spatial reference, on sound localization performance. Finally, the ability to localize acoustic targets presented while the position of the eyes and head was deliberately misaligned was studied in three subjects using the dissociated saccade task (Fig. 1c).

The subjects received no behavioral training before testing, just succinct instructions. All experiments were carried out in complete darkness. Subject JCH was tested in only one 30-min session, whereas the rest of the subjects were tested in several sessions. Datasets from each experimental session were individually calibrated and collapsed because no differences were found across them.

General observations

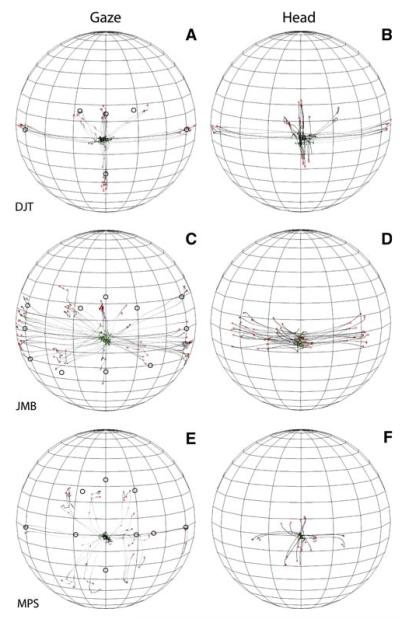

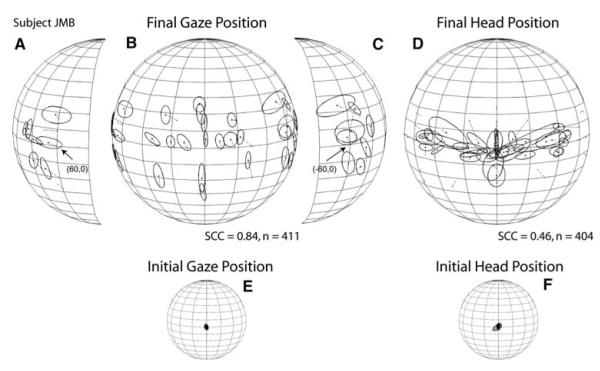

Figure 2 illustrates the orienting behavior of subjects DJT, JMB, and MPS to 150 ms broadband acoustic targets recorded in the context of the fixation task. The data are plotted in spherical coordinates from the perspective of an observer located outside the sphere. The traces in Fig. 2a, c, and e represent the trajectories of gaze shifts from the onset of the stimulus to the end of the gaze shift; the traces in Fig. 2b, d, and f represent the trajectories of the corresponding head movements, also plotted from the time of stimulus onset to the end of the movement.

Fig. 2.

Gaze shifts and head movements to broadband noise targets recorded in the context of the fixation task from three subjects. The data are plotted in spherical coordinates from the perspective of an observer outside the sphere. The parallels on the sphere, which is tilted 10° downward to facilitate visualization of the data, are plotted every 10° and the meridians are plotted every 20°. The position of the acoustic targets is illustrated with round, hollow symbols on the gaze plots (a, c, e). All three subjects started their orienting movements from or near the straight-ahead position, despite the fact that no instructions were provided to that effect. Note the differences among the subjects in the trajectories of their gaze and head movements, their accuracy, and precision

Most gaze shifts and head movements started near the straight-ahead position because the subjects returned their gaze and head to or near this area after orienting to a target on the previous trial. This strategy, which was also exhibited by monkeys tested under similar experimental conditions (Populin 2006), was adopted spontaneously in response to the random selection of target location in successive trials. It conveniently positioned gaze and head in rough alignment at the start of each trial.

Subjects DJT and JMB were among the best sound localizers in our sample. However, they accomplished their gaze shifts differently. Subject DJT’s orienting behavior was very consistent and accurate, with large head movements that closely mirrored the gaze shifts (Fig. 2a, b). Subject JMB’s orienting behavior, on the other hand, was less accurate and more variable, with smaller head movements that were confined primarily to the horizontal plane (Fig. 2c, d). Subject MPS, one of the worst localizers in the sample, grossly mislocalized acoustic targets located on the horizontal plane near the midline (Fig. 2e). The trajectories of this subject’s head movements followed a pattern similar to the gaze but, unlike subject DJT’s pattern, it was compressed (Fig. 2f).

Localization of acoustic targets presented without behavioral constraints

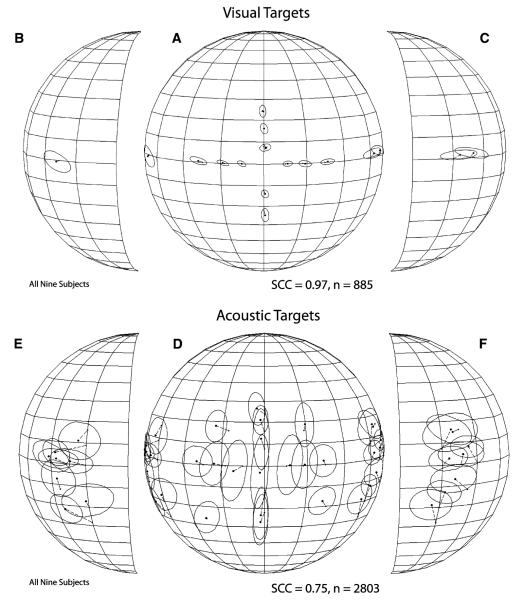

Figure 3 illustrates the average localization performance of all nine subjects in the fixation task. Final gaze position is plotted in spherical coordinates from the perspective of an observer outside the sphere. To better visualize the data corresponding to the most peripheral targets the lateral portions of the sphere are plotted separately and rotated toward the midline. The average final gaze position attained after orienting to visual (Fig. 3a–c) and acoustic (Fig. 3d–f) targets is plotted with round filled dots connected by thin broken lines to small filled squares representing the position of the corresponding targets. The ellipses/circles surrounding the average final gaze position symbols represent one standard deviation.

Fig. 3.

Summary of visual and sound localization performance from all nine subjects combined. (a–c) Spherical plots illustrating visual localization performance in the form of final gaze position from the perspective of an observer outside the sphere, a frontal view of which is shown in b. The parallels on the spheres are plotted 10° apart and the meridians 20° apart; the straight-ahead position corresponds to the intersection of the line representing the equator and the meridian at the center of the plot. Parts a and c are the lateral portions of the sphere plotted in b, rotated 45° toward the midline to depict more clearly the data corresponding to the most eccentric targets. The position of each target is represented with small filled symbols, and their corresponding final mean gaze positions, to which they are connected with thin broken lines, are represented with larger filled symbols. The ellipses/circles surrounding the final gaze position symbols represent one standard deviation functions. (d–f) Final mean gaze position plots illustrating auditory localization performance for all nine subjects

Gaze shifts to visual targets were included as controls to determine if the subjects could orient to the most eccentric targets along the horizontal and vertical axes. The average performance of all nine subjects yielded an SCC of 0.97, which demonstrates a high degree of spatial correspondence between the targets and the final position of the gaze shifts directed at them. The data plotted in Fig. 3a–c show no large systematic localization errors and, importantly, no limitations in range of motion. All subjects were able to reach the most eccentric targets with their gaze shifts, including those located outside the subjects’ oculomotor range (e.g., ±80°, 0°).

Gaze shifts to acoustic targets, which provided a measure of the subjects’ ability to localize acoustic targets, were less accurate and less precise than gaze shifts directed to visual targets. The sound localization performance of the nine subjects combined yielded an SCC of 0.75. Inspection of the data plotted in Fig. 3d–f (and the corresponding numerical values shown in Table 1) reveals that the localization of acoustic targets in the frontal portion of space was relatively accurate: most targets were overshot by a few degrees, but localization was very variable, particularly along the vertical dimension. Acoustic targets on the vertical plane on the midline were overshot, and the distribution of the final gaze positions resulted in elliptical profiles because of up-down and down-up errors made by some subjects. These types of errors were made by both the best (e.g., subject DJT) and the worst (e.g., subject MPS) sound localizers in the sample. The position of the centroids and one standard deviation functions corresponding to the two targets below the horizontal plane indicate that subjects did not distinguish them and localized them to a single position. Off-axes targets in the frontal portion of space were localized distinctly from other targets. Lastly, most centroids representing final gaze position corresponding to the most eccentric targets were displaced toward a common area on each side, illustrating the effect of the cone of confusion.

The SCCs from individual subjects from the visual and auditory conditions are shown in Table 2. Performance in the visual localization task was consistent across subjects, with SCC values ranging from 0.96 to 0.99. Performance in the sound localization task, on the other hand, varied considerably across subjects, with final SCCs ranging from 0.31 to 0.89. Final head position, that is, the position attained by the head after it had stopped moving, yielded even lower SCCs than final gaze position, with the exception of subject MPS, who obtained identical SCCs for both measures; the SCCs computed from final head position measurements ranged from 0.13 to 0.8. The two measures were related: the slope of the regression line fitted to a plot of gaze versus head SCCs was 0.81, which was significantly different from zero (P < 0.05), the value that would have indicated no relationship; the corresponding r² was 0.58.

Table 2.

Spherical correlation coefficients from final gaze (visual and acoustic targets) and final head position (acoustic targets)

| Subject | Vis SCC Gaze | Aud SCC Gaze | Aud SCC Head |
|---|---|---|---|
| DJT | 0.99 | 0.89 | 0.75 |
| BJF | 0.98 | 0.87 | 0.80 |
| RAK | 0.98 | 0.85 | 0.73 |
| JMB | 0.97 | 0.84 | 0.44 |
| LCP | 0.99 | 0.82 | 0.40 |
| BLP | 0.96 | 0.79 | 0.54 |
| MPS | 0.98 | 0.66 | 0.66 |
| TAK | 0.97 | 0.42 | 0.13 |
| JCH | 0.99 | 0.31 | 0.19 |

Data from individual subjects from each of the targets tested are shown in Table 3. As expected based on the group data shown in Fig. 3, large differences in localization accuracy and precision were found across subjects. The sound localization performance of three representative subjects with different levels of skill is shown in Figs. 4, 5, 6. Data from subject DJT, whose data yielded the highest SCC in the sample, are shown in Fig. 4. Several characteristics of this subject’s dataset are notable (it must be noted that subject DJT was aware of the goals of the study). First, the localization errors of targets presented on the horizontal plane, within ±30° of the midline, ranged between 3 and 4° with the largest error, approximately 6°, corresponding to the target presented from straight ahead (0°, 0°) nearest to the spontaneous starting gaze position (Fig. 4b, Table 3). Second, subject DJT localized targets on the horizontal plane with small vertical errors. Third, the K−1 values were smaller than those from most of the other subjects (Table 3). Fourth, DJT localized the three most eccentric targets on each side to a general location more eccentric than the actual targets, which is interesting because he was aware of the presence of several targets on each side (Fig. 4a–c). Fifth, DJT exhibited some difficulty in localizing the targets located on the vertical plane on the midline, as illustrated by the elongated ellipses of the one-SD functions corresponding to these targets (Fig. 4b). Sixth, final head position closely mirrored final gaze position, reaching an SCC of 0.75 (Fig. 4d).

Table 3.

Angular errors and K−1 from individual subjects for auditory localization with the fixation task

| EYE | TARGET POS | (−80,0) | (−70,0) | (−60,0) | (−29,0) | (−18,0) | (−10,0) | (0,0) | (10,0) | (18,0) | (29,0) | (60,0) | (70,0) | (80,0) | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| LEVEL | SUBJECT | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | |||||||||||||

| BJF | 12.61 | 0.0043 | 10.75 | 0.0091 | 5.51 | 0.0060 | 5.85 | 0.0077 | 6.23 | 0.0028 | 8.02 | 0.0041 | 5.18 | 0.0029 | 7.68 | 0.0059 | 9.41 | 0.0070 | 4.58 | 0.0026 | |||||||

| BLP | 12.09 | 0.0130 | 7.32 | 0.0073 | 3.24 | 0.0085 | 3.38 | 0.0081 | 9.65 | 0.0216 | 11.37 | 0.0259 | 8.55 | 0.0432 | 14.59 | 0.0128 | 10.83 | 0.0356 | 6.71 | 0.0087 | 10.23 | 0.0101 | 3.44 | 0.0247 | 8.75 | 0.032548 | |

| DJT | 8.82 | 0.0013 | 6.70 | 0.0033 | 4.67 | 0.0012 | 1.54 | 0.0017 | 1.07 | 0.0020 | 4.56 | 0.89 | 0.0018 | 1.04 | 0.0009 | 0.67 | 0.0008 | 6.26 | 0.0022 | 1.73 | 0.0009 | ||||||

| JCH | 21.55 | 0.0153 | 2.32 | 0.0013 | 9.00 | 0.0016 | 7.32 | 0.0010 | 3.93 | 0.0011 | 2.37 | 0.0015 | 4.67 | 0.0005 | 5.53 | 0.0004 | 21.04 | 0.0033 | 17.62 | 0.0023 | |||||||

| JMB | 2.31 | 0.0057 | 9.43 | 0.0193 | 3.10 | 0.0084 | 9.35 | 0.0022 | 5.41 | 0.0051 | 4.12 | 0.0026 | 5.61 | 0.0006 | 3.93 | 0.0038 | 3.90 | 0.0025 | 4.94 | 0.0023 | 6.06 | 0.0057 | 8.33 | 0.0026 | 7.62 | 0.001761 | |

| LCP | 11.73 | 0.0065 | 10.44 | 0.0045 | 3.32 | 0.0053 | 4.08 | 0.0032 | 3.87 | 0.0069 | 5.30 | 0.0078 | 7.61 | 0.0173 | 13.42 | 0.0157 | 13.17 | 0.0047 | 20.76 | 0.0039 | 24.30 | 0.0041 | |||||

| MPS | 2.47 | 0.0026 | 0.90 | 0.0056 | 1.82 | 0.0103 | 7.84 | 0.0122 | 15.02 | 0.0130 | 23.43 | 0.0100 | 19.66 | 0.0136 | 18.15 | 0.0249 | 10.74 | 0.0175 | 6.64 | 0.0216 | 4.34 | 0.0057 | 10.45 | 0.0060 | 6.39 | 0.003231 | |

| RAK | 6.79 | 0.0063 | 6.56 | 0.0044 | 3.44 | 0.0101 | 6.55 | 0.0047 | 1.48 | 0.0081 | 5.16 | 0.0094 | 3.70 | 0.0058 | 3.29 | 0.0030 | 7.12 | 0.0080 | 3.38 | 0.0029 | 9.67 | 0.0045 | 8.05 | 0.0052 | 4.14 | 0.013742 | |

| TAK | 2.02 | 0.0090 | 0.50 | 0.0057 | 8.95 | 0.0064 | 10.77 | 0.0047 | 15.16 | 0.0090 | 16.89 | 0.0062 | 15.45 | 0.0037 | 14.37 | 0.0036 | 6.32 | 0.0042 | 3.80 | 0.0043 | 2.42 | 0.0037 | |||||

| BELOW | (−75,−12) | (−60,−28) | (−60,−20) | (−31.5,−20) | (0,−12) | (0,−22) | (31,−26.5) | (60,−20) | (60,−28) | (75,−12) | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| HORIZONTAL | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | ||||||||||

| BJF | 6.20 | 0.0025 | 2.63 | 0.0047 | ||||||||||||||||

| BLP | 9.73 | 0.0381 | 6.73 | 0.0339 | 5.24 | 0.0282 | 3.01 | 0.0135 | 25.33 | 0.0157 | 16.59 | 0.0029 | 12.48 | 0.0247 | 11.18 | 0.0145 | 16.37 | 0.0150 | 2.94 | 0.0589 |

| DJT | 11.77 | 0.0021 | 7.89 | 0.0011 | ||||||||||||||||

| JCH | 9.69 | 0.0030 | 20.77 | 0.0016 | ||||||||||||||||

| JMB | 2.90 | 0.0031 | 8.35 | 0.0071 | 10.88 | 0.0055 | 11.25 | 0.0014 | 6.90 | 0.0025 | 6.81 | 0.0014 | 6.71 | 0.0051 | 10.33 | 0.0038 | 20.40 | 0.0057 | ||

| LCP | 21.06 | 0.0053 | 8.17 | 0.0059 | ||||||||||||||||

| MPS | 17.29 | 0.0205 | 7.93 | 0.0064 | 5.57 | 0.0064 | 5.87 | 0.0059 | 18.44 | 0.0079 | 16.40 | 0.0072 | 7.38 | 0.0149 | 18.39 | 0.0273 | 4.10 | 0.0119 | 2.96 | 0.0031 |

| RAK | 6.92 | 0.0055 | 6.61 | 0.0092 | 5.33 | 0.0038 | 5.15 | 0.0048 | 12.86 | 0.0012 | 7.90 | 0.0044 | 2.64 | 0.0027 | 6.44 | 0.0049 | 14.30 | 0.0058 | 2.76 | 0.0154 |

| TAK | 18.50 | 0.0130 | 26.69 | 0.0101 | ||||||||||||||||

| ABOVE | (−70,16) | (−60,7.5) | (−19,16) | (0,8) | (0,16) | (0,24) | (17,16) | (60,9) | (60,16) | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| HORIZONTAL | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | Ang Error K-1 | |||||||||

| BJF | 6.96 | 0.0099 | 8.19 | 0.0066 | 9.76 | 0.0021 | 8.98 | 0.0019 | 9.01 | 0.0029 | 8.20 | 0.0051 | 8.22 | 0.0075 | ||||

| BLP | 2.69 | 0.0032 | 5.71 | 0.0123 | 9.31 | 0.0159 | 12.62 | 0.0044 | 13.09 | 0.0020 | 4.62 | 0.0120 | 9.67 | 0.0038 | 11.75 | 0.0259 | 13.51 | 0.0099 |

| DJT | 8.98 | 0.0020 | 1.19 | 0.0005 | 4.84 | 0.0009 | ||||||||||||

| JCH | 36.80 | 0.0093 | 8.50 | 0.0032 | 2.45 | 0.0012 | 9.29 | 0.0004 | 11.60 | 0.0038 | 13.13 | 0.0067 | ||||||

| JMB | 16.55 | 0.0134 | 9.29 | 0.0021 | 2.22 | 0.0028 | 7.17 | 0.0014 | 5.04 | 0.0029 | 3.91 | 0.0046 | 8.28 | 0.0040 | 23.65 | 0.0038 | 2.83 | 0.0121 |

| LCP | 3.61 | 0.0127 | 6.29 | 0.0090 | 8.59 | 0.0086 | ||||||||||||

| MPS | 5.30 | 0.0052 | 18.13 | 0.0104 | 9.46 | 0.0282 | 6.98 | 0.0307 | 3.98 | 0.0039 | 6.59 | 0.0063 | 10.48 | 0.0119 | 13.14 | 0.0043 | 5.52 | 0.0045 |

| RAK | 3.22 | 0.0020 | 5.10 | 0.0104 | 2.39 | 0.0030 | 5.88 | 0.0020 | 4.11 | 0.0052 | 1.82 | 0.0021 | 7.00 | 0.0039 | 16.21 | 0.0074 | 10.37 | 0.0115 |

| TAK | 6.12 | 0.0067 | 3.78 | 0.0052 | 10.42 | 0.0038 | 7.05 | 0.0019 | 4.34 | 0.0020 | 8.19 | 0.0056 | 3.64 | 0.0052 | ||||

Fig. 4.

Summary of sound localization performance from subject DJT. The data are plotted as described in Fig. 3

Fig. 5.

Summary of sound localization performance from subject JMB. The data are plotted as described in Fig. 3

Fig. 6.

Summary of sound localization performance from subject MPS. The data are plotted as described in Fig. 3

Subject JMB’s results are illustrated in Fig. 5f. Targets presented within ±30° of the midline on the horizontal plane were localized with an upward shift and a small rightward bias whereas the most eccentric targets were overshot. Targets presented on the vertical axis were also overshot. The SCC computed from subject JMB’s data reached 0.84. In contrast to the previous subject, JMB’s overall final head position pattern bore little resemblance to final gaze position (Fig. 5d), with most of the data found near the horizontal plane. The head achieved very consistent final positions for some targets and very inconsistent for others, regardless of target eccentricity; the SCC for these data was 0.44 (Fig. 5d).

Lastly, subject MPS localized horizontal targets presented in the central portion of the field with a large downward shift which was most pronounced for the targets presented closest to the midline where the angular errors reached up to 29° (Fig. 6b). The most peripheral targets, on the other hand, were localized with the smallest angular errors, 5° on the left side and 4° on the right (Fig. 6a, c, Table 3). The responses to targets presented on the horizontal plane formed a U-shape, with the responses to the most eccentric targets grouped on a general location, particularly those on the subject’s right side (Fig. 6a). For targets located on the vertical plane, with the exception of the two targets presented at the highest elevations that were localized above their actual positions, MPS made numerous down-up errors, as illustrated by the elongated profiles of the one-SD functions (Fig. 6b) and the large K−1 values (Table 3). The SCC computed for final gaze position reached 0.66. MPS’s final head positions were mostly located below the horizontal plane and were less variable than the corresponding gaze shifts (Fig. 6d). The SCC computed for the final head position data reached 0.66.

Gaze latency, number of corrections, and relation between final gaze and head position

The time elapsed between the presentation of a stimulus and the initiation of a behavioral response is indicative of the processing load associated with completing the task (Posner 1978). In the context of the present study, in which subjects were instructed to look at the sources of sounds, an instruction that implied accuracy rather than speed, latency provides further insight into how the sound localization task was performed.

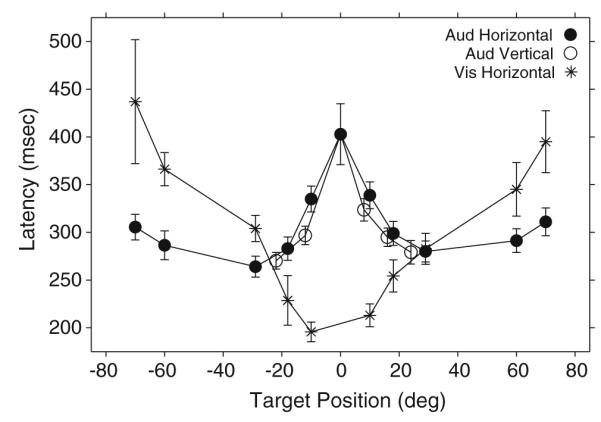

In contrast to the variability across subjects that characterized the sound localization data (Figs. 4, 5, 6; Tables 2, 3), the latency of the same gaze shifts was very consistent. This is illustrated in Fig. 7, which shows the mean latency of gaze shifts to acoustic targets located on the main horizontal and vertical axes from all nine subjects. The latencies of responses to visual targets on the horizontal plane are included for comparison. The error bars represent 95% confidence intervals. Only the latency of the first gaze saccade from each trial is included.

Fig. 7.

Average gaze shift latency to horizontal and vertical acoustic targets recorded in the context of the fixation task. The equivalent measure from horizontal visual targets is included for comparison. Data from all nine subjects are included

The data from the visual condition, plotted with asterisks (Fig. 7), formed a U-shape pattern with the shortest latencies corresponding to targets presented near the straight ahead position, near the subjects’ foveae, and the longest latencies corresponding to targets presented more eccentrically. These observations are consistent with those of Zahn et al. (1978).

The data from the auditory condition, plotted with filled and open circles for targets on the main horizontal and vertical axes respectively (Fig. 7), formed a pattern nearly opposite to the visual. For targets on the horizontal plane, the shortest latencies corresponded to those located at about (±30°, 0°), with slight increases for those located more eccentrically. The data from targets located on the main vertical axis closely mirrored those from horizontal targets of similar eccentricity, suggesting that the localization of targets within an area comprising approximately 10–15° from the straight ahead position, where the subjects’ gaze was located at the time of presentation of the stimuli, posed the greatest difficulty for localization. Judging by the average latency of the first gaze shift, the target located at the straight-ahead position was most difficult to localize.

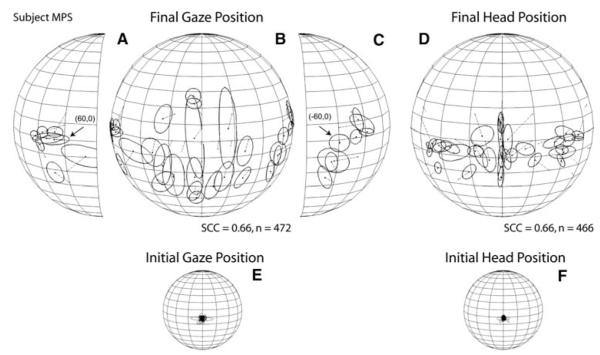

Localization of remembered acoustic targets

The ability of humans to orient to remembered acoustic targets after a compulsory waiting period was tested with the auditory memory-saccade task (Fig. 1b). The motivation for these experiments was twofold. First, orienting to the sources of sounds some time after the stimuli have expired, when the conditions are appropriate, is common in everyday social and non-social circumstances; thus, it was important to determine how accurate and variable human subjects actually were under such conditions. Second, non-human primates tested with the memory-saccade task localized sounds more accurately and with less variability than under the less restrictive conditions of the fixation task (Populin 2006). Because animals require extensive training to perform the memory-saccade task, it is important to determine whether the improvement in localization performance results from that extensive practice. Humans, on the other hand, can perform the memory-saccade task based on verbal instructions without practice.

Two humans, one of the best localizers in the sample, subject JMB, and one of the worst, subject MPS, were tested after a succinct verbal explanation of the requirements of the memory-saccade task (Fig. 1b). Trials of this type were presented in random order with trials of the fixation type in two experimental sessions for subject JMB and in three sessions for subject MPS. As in all experiments in this study, no feedback on localization accuracy or precision was provided.

The results of these experiments are shown in Fig. 8. The starting gaze and head positions of both subjects were more consistent, as expected due to the requirement of aligning them with an LED straight ahead before the presentation of the target, than in the fixation task (Figs. 4, 5, 6e, f). Subject JMB’s performance yielded an SCC of 0.93 and subject MPS’s an SCC of 0.88, for improvements of 6 and 12%, respectively, compared to the localization of the same targets in the context of the fixation task (Figs. 5, 6). Whereas the overall pattern of localization errors was essentially the same as in the fixation task, both subjects made smaller errors. Subject JMB made small gains in localization accuracy for most targets presented on the horizontal plane and targets presented on the vertical plane below the horizontal (Fig. 8b). Subject MPS also made much smaller errors than in the fixation task, including the localization of targets on the vertical plane, particularly those presented below the horizontal plane (Fig. 8h), and those presented on the horizontal plane near the midline (Fig. 8h).

Fig. 8.

Summary of sound localization of remembered acoustic targets. The data were collected with the memory-saccade task and are presented separately for each of the two subjects tested. The data are plotted as described in Fig. 3

Inspection of the angular error and K−1 values from the memory-saccade task shown in Table 4 reveals that localization of remembered targets was accomplished by both subjects with much less variability than in the fixation task condition (Figs. 5, 6), as corroborated by the actual differences between the two tasks, which are listed in the lower portion of Table 4. Positive values, which comprise most of the targets tested, indicate improvement in sound localization performance in the context of the memory-saccade task. Lastly, changes were also observed in final head position, the SCC of which increased for both subjects compared to the fixation task (Fig. 8).
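The differences reported in the lower portion of Table 4 are consistent with a simple subtraction, fixation-task value minus memory-saccade value, so that a positive result indicates a smaller error in the memory-saccade task. The sketch below illustrates this for subject JMB's angular errors on the ten horizontal targets common to both tasks, using values transcribed from Tables 3 and 4. The variable names are hypothetical, and small discrepancies from the published differences are expected because the tabulated inputs are rounded.

```python
# Horizontal targets (azimuth, elevation) in degrees, shared by both tasks.
targets = [(-70, 0), (-60, 0), (-29, 0), (-18, 0), (-10, 0),
           (10, 0), (18, 0), (29, 0), (60, 0), (70, 0)]

# Subject JMB, angular error in degrees.
fixation_error = [9.43, 3.10, 9.35, 5.41, 4.12, 3.93, 3.90, 4.94, 6.06, 8.33]  # Table 3
memory_error = [2.78, 3.52, 11.72, 5.34, 7.24, 2.80, 4.76, 5.73, 8.93, 6.56]   # Table 4, upper part
reported_difference = [6.65, -0.42, -2.37, 0.08, -3.12,
                       1.13, -0.86, -0.79, -2.87, 1.77]                         # Table 4, lower part

# Positive difference = smaller error (improvement) in the memory-saccade task.
computed_difference = [round(f - m, 2) for f, m in zip(fixation_error, memory_error)]

for target, computed, reported in zip(targets, computed_difference, reported_difference):
    print(f"{target}: computed {computed:+.2f} deg, reported {reported:+.2f} deg")
```

The same subtraction applies to the K−1 columns, where a positive difference indicates less dispersion in the memory-saccade task.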

Table 4.

Localization performance in the memory saccade task and comparison with localization performance in the fixation task

Memory-saccade task, horizontal targets

| Subject | (−70, 0) Ang error | (−70, 0) K−1 | (−60, 0) Ang error | (−60, 0) K−1 | (−29, 0) Ang error | (−29, 0) K−1 | (−18, 0) Ang error | (−18, 0) K−1 | (−10, 0) Ang error | (−10, 0) K−1 | (10, 0) Ang error | (10, 0) K−1 | (18, 0) Ang error | (18, 0) K−1 | (29, 0) Ang error | (29, 0) K−1 | (60, 0) Ang error | (60, 0) K−1 | (70, 0) Ang error | (70, 0) K−1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| JMB | 2.78 | 0.0020 | 3.52 | 0.0016 | 11.72 | 0.0019 | 5.34 | 0.0005 | 7.24 | 0.0014 | 2.80 | 0.0017 | 4.76 | 0.0004 | 5.73 | 0.0007 | 8.93 | 0.0010 | 6.56 | 0.0014 |
| MPS | 2.07 | 0.0027 | 1.34 | 0.0019 | 7.05 | 0.0017 | 13.71 | 0.0038 | 5.33 | 0.0079 | | | 8.54 | 0.0089 | 3.61 | 0.0028 | 4.07 | 0.0022 | 1.89 | 0.0009 |

Memory-saccade task, vertical targets

| Subject | (0, −22) Ang error | (0, −22) K−1 | (0, −12) Ang error | (0, −12) K−1 | (0, 8) Ang error | (0, 8) K−1 | (0, 16) Ang error | (0, 16) K−1 | (0, 24) Ang error | (0, 24) K−1 |
|---|---|---|---|---|---|---|---|---|---|---|
| JMB | 1.95 | 0.0002 | 3.17 | 0.0000 | 11.91 | 0.0004 | 8.22 | 0.0004 | 4.37 | 0.0003 |
| MPS | 8.47 | 0.0013 | 14.47 | 0.0022 | 4.80 | 0.0123 | 5.04 | 0.0012 | 4.10 | 0.0008 |

Differences between fixation and memory saccade task; improvement in the memory saccade task is illustrated by values >0

Horizontal targets

| Subject | (−70, 0) Ang error | (−70, 0) K−1 | (−60, 0) Ang error | (−60, 0) K−1 | (−29, 0) Ang error | (−29, 0) K−1 | (−18, 0) Ang error | (−18, 0) K−1 | (−10, 0) Ang error | (−10, 0) K−1 | (10, 0) Ang error | (10, 0) K−1 | (18, 0) Ang error | (18, 0) K−1 | (29, 0) Ang error | (29, 0) K−1 | (60, 0) Ang error | (60, 0) K−1 | (70, 0) Ang error | (70, 0) K−1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| JMB | 6.65 | 0.0173 | −0.42 | 0.0067 | −2.37 | 0.0002 | 0.08 | 0.0045 | −3.12 | 0.0013 | 1.13 | 0.0020 | −0.86 | 0.0021 | −0.79 | 0.0016 | −2.87 | 0.0047 | 1.77 | 0.0013 |
| MPS | −1.17 | 0.0029 | 0.47 | 0.0084 | 0.79 | 0.0106 | 1.31 | 0.0093 | 18.10 | 0.0021 | | | 2.20 | 0.0086 | 3.03 | 0.0188 | 0.27 | 0.0035 | 8.56 | 0.0051 |

Vertical targets

| Subject | (0, −22) Ang error | (0, −22) K−1 | (0, −12) Ang error | (0, −12) K−1 | (0, 8) Ang error | (0, 8) K−1 | (0, 16) Ang error | (0, 16) K−1 | (0, 24) Ang error | (0, 24) K−1 |
|---|---|---|---|---|---|---|---|---|---|---|
| JMB | 4.86 | 0.0012 | 3.74 | 0.0024 | −4.74 | 0.0010 | −3.18 | 0.0025 | −0.46 | 0.0043 |
| MPS | 7.93 | 0.0058 | 3.97 | 0.0057 | 2.18 | 0.0184 | −1.06 | 0.0027 | 2.49 | 0.0056 |

Effect of eye and head position misalignment at the time of acoustic stimuli presentation

In performing the fixation task, subjects returned their gaze and head to or near the straight-ahead position after orienting to a target to wait for the presentation of the next trial. This strategy resulted in a relatively stereotyped position for the start of the ensuing gaze shift with the eyes and head in rough alignment straight ahead (Fig. 2). Under those conditions the visual and auditory representations of space are expected to be in approximate spatial register. Such register is thought to constitute a substrate for sensorimotor integration (Harris et al. 1980; Stein and Meredith 1993). Primates, however, move their eyes spontaneously 2–3 times per second (Albano et al. 1982), thus creating conditions in which the eyes and the head, and therefore the visual and auditory representations of space, will be misaligned at the time acoustic events take place in the surrounding space. In fact, rarely will the head and eyes be aligned straight-ahead at the time an acoustic event takes place.

Behavioral tests in humans (Yao and Peck 1997) and animals (Metzger et al. 2004; Populin et al. 2004) carried out with the head restrained showed no proportional effect of initial eye position on sound localization accuracy. Goossens and van Opstal (1997), on the other hand, dissociated the initial position of the eyes and head of humans before presenting acoustic (and visual) stimuli as targets for gaze shifts. Their results showed no effect of initial eye and head position on final gaze position, but an effect on final head position. However, as pointed out at the outset, their study was focused on the mechanisms underlying the coordination of eye and head movements that comprised gaze shifts to acoustic and visual targets and not on the psychophysics of sound localization.

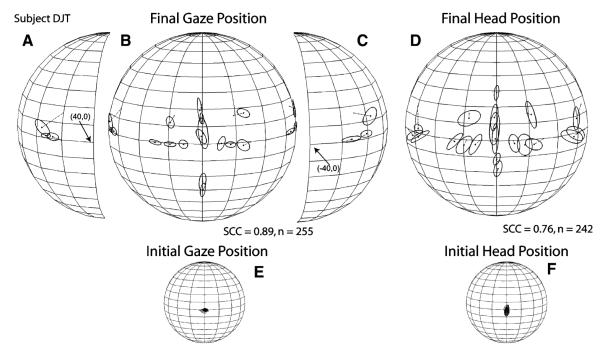

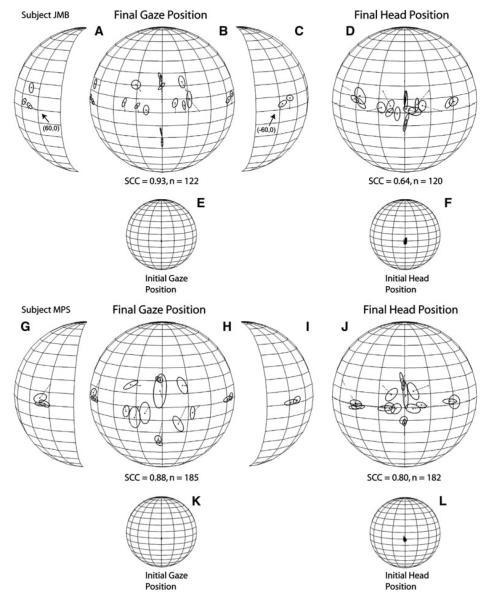

Accordingly, the horizontal position of the eyes and head of three subjects was systematically misaligned before the presentation of acoustic targets using the dissociated saccade task (Fig. 1c). Trials of this type were presented with low probability among trials involving the fixation task, approximately 15–20% of the total number in three to four experimental sessions. The subjects were cued about the imminent presentation of a trial of this type by flashing the fixation light at 5 Hz (Fig. 1c). All three subjects were able to perform the experimental task (Fig. 1c) without difficulty. As with the fixation and memory-saccade tasks, neither practice nor feedback was provided. Data are presented for targets for which at least fifteen trials were available for each of the three initial gaze and head positions; the targets were located at (±18°, 0°) and (±60°, 0°).

For each subject gaze and head horizontal position data illustrate the behavior under study (Fig. 9). All traces are plotted as a function of time and synchronized to the onset of the acoustic target at time 0 ms; traces representing vertical gaze and head position were omitted for clarity. In some trials, illustrated in Fig. 9a–b, g–h, and m–n, the subjects were instructed to maintain their heads aligned with the straight-ahead position while fixating their gaze on LEDs at (±16°, 0°) or (0°, 0°) before the presentation of a target. In other trials subjects were instructed to fixate their gaze straight-ahead while aligning their heads with LEDs at the positions listed above (Fig. 9c–d, i–j, o–p).

Fig. 9.

Localization of acoustic targets presented while the positions of the eyes and head were misaligned. Data were collected from three subjects with the dissociated saccade task. (a, b; g, h; and m, n) Gaze and head horizontal position plotted as a function of time and synchronized to the onset of the acoustic target. Note that gaze position during the fixation period, before the onset of the acoustic target at time 0 ms, was (±16°, 0°) or (0°, 0°). (e, k, q) Corresponding summary plots of final gaze position for each of the three starting gaze position conditions. (c, d; i, j; and o, p) Gaze and head horizontal position plotted as a function of time and synchronized to the onset of the acoustic targets at time 0 ms. Note that in this condition the gaze of the subject was aligned straight ahead at the time of presentation of the acoustic stimuli, but the head was aligned with LEDs at (±16°, 0°) or (0°, 0°). (f, l, r) Corresponding summary plots of final gaze position for each of the three starting head position conditions. The hollow symbols represent the initial gaze and head positions as labeled (R right, C center, and L left)

Corresponding summary plots of final gaze position achieved in each of the conditions are shown in spherical coordinates separately for each subject. In each plot starting gaze and head position are illustrated with small circles labeled R, C, and L, for right, center, and left, respectively; the point C represents the straight-ahead position. As in previous figures, the spheres, only the frontal portion of which is plotted in this figure, are shown from the perspective of an observer located outside. Final gaze position symbols with extensive overlap are plotted unlabeled; those with slight deviations are labeled with the letter corresponding to the initial eye or head position.

In the condition in which the head started at the straight-ahead position and gaze started at (±16°, 0°) or (0°, 0°), subjects DJT and JMB oriented in most trials with a single gaze shift, regardless of initial gaze, head, or target position. Subject MPS also oriented with a single gaze shift to targets located to his left, but not to the most eccentric target located to his right (Fig. 9g). For the combinations of (±16°, 0°) starting gaze position and target (60°, 0°), that is, when the starting gaze position was either the closest to or the farthest from the target, the initial gaze shift consistently undershot the target. Small corrective eye movements were observed during the ensuing period of spontaneous fixation after having localized the target.

For all three subjects the initial head position was consistently maintained without movement at the straight-ahead position during the fixation period. At the end of the gaze shifts, the head arrived at a consistent location for each of the four targets independently of initial eye position. Most importantly, the final gaze position plots revealed no consistent effect of initial gaze position on sound localization for any of the three subjects tested (Fig. 9e, k, q).

The conditions in which the head started at (±16°, 0°) or (0°, 0°) and gaze straight-ahead produced results similar to the previous ones (Fig. 9c, d, i, j, l, o, p). That is, there was no effect of initial head position on sound localization. In most trials subjects oriented to the acoustic targets with a single gaze shift. Notably, the final head position was very consistent for each of the targets. Lastly, some small differences in final gaze position were observed, such as in subject DJT’s data (Fig. 9f), in which the starting position to the right of the center (labeled R) yielded a final gaze position that was slightly to the right of the other two corresponding to the left and center starting head positions. Note, however, that the deviation of this data point from the other two is only a small fraction of the initial deviation of the head from the straight-ahead position.

Discussion

The goal of this study was to determine how accurately and precisely humans localize broadband sounds when simply instructed to look at the location of the sources without practice in the specific context of the experimental task. Moreover, taking advantage of the opportunity afforded by the delivery of verbal instructions, it was possible to address two additional questions without having to provide specific training: how accurately and precisely do humans localize remembered sound sources? And is there an effect of initial eye/head position on sound localization when the head is allowed to contribute to the gaze shift?

The data showed (1) that large variability in accuracy and precision characterized sound localization performance across subjects, (2) that improvements in accuracy and precision resulted from the presence of a spatial reference while localizing remembered acoustic targets, (3) that sound localization was not affected by deviations of eye and head position from the midline at the time acoustic stimuli were presented, and (4) that consistent final head positions were achieved after orienting from different starting head positions. On average, these results obtained without training are very similar to those obtained from head-unrestrained monkeys tested under similar experimental conditions (Populin 2006), and surprisingly very similar to those obtained by Carlile et al. (1997) and Makous and Middlebrooks (1990) with the head pointing task after extensive training.

Sound localization with and without spatial references or constraints

Inspection of the group data plotted in Fig. 3 as well as the individual data in Tables 2 and 3 reveals that the most distinguishing aspect of the results of this study is the large variability in sound localization performance documented across subjects, which is not typically emphasized in the literature (but see Wightman and Kistler 1989b). Specifically, large variability was documented in the SCCs, a measure of the correspondence between the final gaze positions in individual trials and the position of the targets, and K−1, a measure of dispersion of spherical data.
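For readers unfamiliar with these spherical measures, the following sketch shows one common way to compute the angular error between a target and a response, and a dispersion estimate of the K−1 type, from directions given as (azimuth, elevation) pairs in degrees. It is an illustration under stated assumptions, not the analysis code of this study: the coordinate convention, the function names, and the use of the standard Fisher-distribution approximation K−1 ≈ (N − R)/(N − 1), where R is the length of the resultant of N unit vectors, are all assumptions.

```python
import math


def unit_vector(az_deg, el_deg):
    """Convert (azimuth, elevation) in degrees to a 3-D unit vector.

    Assumed convention: azimuth is measured from straight ahead (positive to
    the right) and elevation from the horizontal plane (positive upward).
    """
    az, el = math.radians(az_deg), math.radians(el_deg)
    return (math.cos(el) * math.sin(az),   # rightward component
            math.sin(el),                  # upward component
            math.cos(el) * math.cos(az))   # forward component


def angular_error_deg(target, response):
    """Great-circle angle, in degrees, between two (azimuth, elevation) directions."""
    t = unit_vector(*target)
    r = unit_vector(*response)
    dot = sum(a * b for a, b in zip(t, r))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(dot))


def kappa_inverse(responses):
    """Estimate K^-1 as (N - R) / (N - 1) for N >= 2 response directions.

    R is the length of the vector sum of the unit vectors; tightly clustered
    responses give R close to N and therefore a value close to zero.
    """
    n = len(responses)
    if n < 2:
        raise ValueError("at least two responses are required")
    vectors = [unit_vector(az, el) for az, el in responses]
    resultant = math.sqrt(sum(sum(v[i] for v in vectors) ** 2 for i in range(3)))
    return (n - resultant) / (n - 1)
```

In this formulation, larger K−1 values correspond to greater scatter of the final gaze positions around their mean direction. Note that the estimator assumes a circularly symmetric (Fisher) distribution, which would not describe elongated response profiles well.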

For targets on the horizontal plane most subjects made large errors in localizing the most eccentric targets. The exceptions were the worst three sound localizers in the sample, subjects MPS, TAK, and JCH, who made large localization errors for the most centrally located targets (Table 3). Subject MPS localized the central targets below the horizontal plane (Fig. 6), whereas subject TAK localized the same targets above the horizontal plane (Table 3). Two other subjects, DJT and BLP, also exhibited some difficulty in localizing the most centrally located targets but not to the same extent, whereas subject RAK made relatively constant errors across all eccentricities (Table 3).

For targets on the vertical plane, on the other hand, despite variations in magnitude, subjects consistently made larger errors for targets located below the horizontal plane (Table 3). Some subjects, including subject DJT, whose performance produced the highest SCC (0.89), made up-down and down-up errors, as illustrated by the elongated, elliptical profiles of the one standard deviation functions corresponding to some of the vertical targets (Fig. 4), which were described by Kent, not Fisher, statistics (analysis not shown). The origin of those errors is difficult to pinpoint, particularly because not all subjects made them. The acoustics of the recording room and the items within it, such as the frame that held the speakers in place and the cube that housed the field coils of the eye coil measuring system, the interference of which the subjects in question could not take into account, might have contributed to the problem.

The results of the experiments involving the localization of remembered targets suggest that certain aspects of the memory-saccade task may have significantly influenced sound localization. Specifically, improved performance was documented in both subjects tested: JMB, one of the best sound localizers in the sample, and MPS, one of the worst. Similar improvements in performance were documented in monkeys required to orient to remembered acoustic targets using the same memory-saccade task (Populin 2006), which requires subjects to orient to targets some time after they have expired based on information stored in working memory (Goldman-Rakic 1987). The present results are, therefore, somewhat paradoxical because orienting to the remembered location of a target is considered more difficult than orienting to a target under standard closed or open loop conditions. It must be acknowledged, however, that the 300, 500, and 700 ms delays used in the present study must have presented little difficulty and actual detriments in localization performance should be expected at much longer delays.

Two specific aspects of the memory-saccade task are likely to have contributed to the improvement in sound localization performance. The first concerns the presence of a reference at the straight ahead position, which commands the subject to line up his gaze with it; if enough time is provided (typically >500 ms) subjects also align their heads with the visual fixation reference. The fixation stimulus used in the memory saccade task was a red LED at the straight-ahead position. Thus, if the location of acoustic targets is encoded in a spatial or a body-centered frame of reference, as suggested by Goossens and van Opstal (1999), the spatial information provided by the reference located straight ahead should help the process of sound localization because the position of the target can be computed in relation to it. Determining the position of acoustic targets in the dark, relying only on somatosensory information about the position of the eyes and head, as well as efferent copies of previous motor commands issued to align the eyes and the head at the starting position, should be more difficult than determining the position of an acoustic target in the presence of a reference that defines straight-ahead.

The second aspect concerns the delay period of the memory-saccade task during which the subject was required to withhold the response until the fixation LED was turned off. During this period the subject had additional time to refine his estimate of the position of the acoustic target and to plan the execution of the behavioral response. The data in Fig. 7 show that this was not the case with the fixation task, in which subjects were free to orient to the perceived location of the acoustic targets without imposed temporal constraints. Despite the fact that the instruction was to look at the sound sources, which implied accuracy not speed, subjects responded quickly, within a few hundred milliseconds of the presentation of the target.

Lastly, as indicated above, the sound localization performance of monkeys also improved in the context of the memory-saccade task relative to the fixation task (Populin 2006). Such improvement in the monkey could have resulted from the extensive practice these subjects undergo in order to learn how to perform the task. However, the fact that similar improvements were documented in the human subjects tested in the present study without practice—the subjects were instructed verbally about how to perform the task a few minutes before the start of the first experimental session in which the memory-saccade task was used—indicates that the improvement is not the result of practice.

Comparison with other studies

Meaningful comparison with most studies of sound localization in humans is difficult because of the large differences in the paradigms used. However, the studies of Carlile et al. (1997) and Makous and Middlebrooks (1990) require discussion because of important similarities with the present study: chiefly, the use of broadband stimuli of short duration, at least in their open loop conditions, and the use of head turning, an ethologically valid response to the presentation of a sound in the surrounding space. Studies by Perrott et al. (1987) and Recanzone et al. (1998) also used head pointing to indicate the perceived location of sound sources, but the movements of the head, and consequently the measurements, were restricted to the horizontal plane; thus, they will not be discussed.

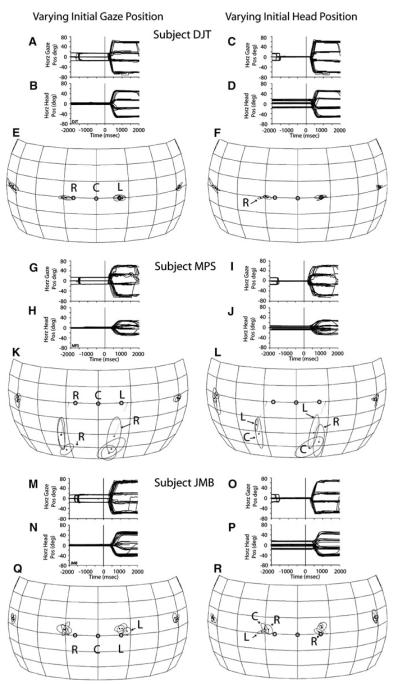

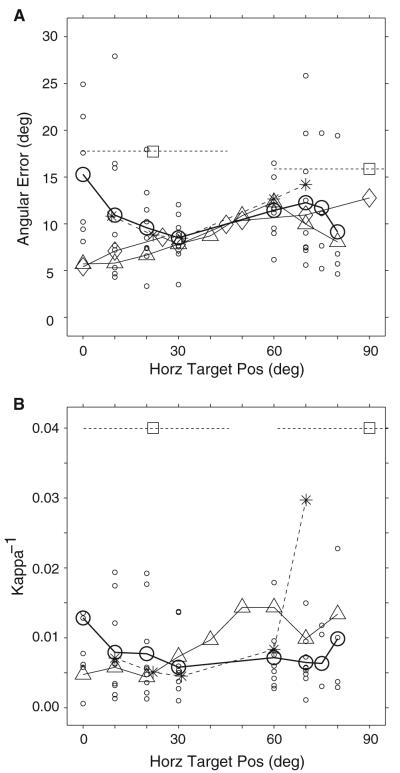

Figure 10 compares angular sound localization errors (Fig. 10a) and their associated K−1 values (Fig. 10b) for horizontal targets from this study with those of Makous and Middlebrooks (1990), Carlile et al. (1997), and Populin (2006, rhesus monkeys), illustrated with circles, triangles, rhomboids, and asterisks, respectively. Data from Wightman and Kistler (1989b), who required subjects to report verbally the coordinates of the perceived location of sound sources, are also plotted, as shown in Fig. 7 of Makous and Middlebrooks (1990), using square symbols; localization performance for stimuli presented on the horizontal plane at 0 and 18° of elevation was averaged over the extents illustrated by the broken horizontal lines. The Makous and Middlebrooks (1990) data represent the average localization performance for horizontal targets located at 5 and 15° of elevation. The angular errors from Carlile et al. (1997) are averages of the data points for horizontal targets presented between the horizontal plane and 20° of elevation shown in their Fig. 15. Note that Carlile et al. (1997), Makous and Middlebrooks (1990), and Wightman and Kistler (1989b) studied sound localization over much more extensive areas of the space surrounding the subjects than the present study; only data from eccentricities similar to those studied here are included for clarity. For this study, data from all nine subjects corresponding to targets presented to the left and right of the midline were averaged; data from individual subjects are plotted with small circles to illustrate the extent of the variability.

Fig. 10.

Comparison of sound localization performance for the azimuthal dimension across studies. Average angular error (a) and average K−1 (b) from the condition without behavioral constraints or spatial references (i.e., the fixation task) are plotted with heavy open circles and heavy solid line; data from individual subjects are plotted with small open circles. Data from Makous and Middlebrooks (1990) are plotted with upward-pointing triangles. Data from Wightman and Kistler (1989b), as reported in Fig. 7 of Makous and Middlebrooks (1990), are plotted with open squares; the broken lines transecting the symbols represent the extent of azimuth over which the data were averaged. Angular error data from Carlile et al. (1997) are plotted with rhomboids. Data from the rhesus monkey acquired under conditions similar to those of the present study by Populin (2006) are plotted with asterisks and broken lines
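The two measures compared in Fig. 10 can be illustrated with a short sketch. The Python code below is not the analysis code used in this or any of the cited studies. It assumes that angular error is the great-circle angle between the target and response directions, and that K−1 is the inverse of the concentration parameter of a Fisher distribution on the sphere, estimated here with the approximation kappa ≈ (N − 1)/(N − R), where R is the length of the resultant of the N unit response vectors. The function names and the axis convention are hypothetical.

```python
import math


def to_unit_vector(azimuth_deg, elevation_deg):
    """Convert (azimuth, elevation) in degrees to a 3-D unit vector.

    Assumed convention (arbitrary): x points right, y straight ahead, z up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.sin(az),
            math.cos(el) * math.cos(az),
            math.sin(el))


def angular_error_deg(target, response):
    """Great-circle angle (deg) between a target and a response direction."""
    t = to_unit_vector(*target)
    r = to_unit_vector(*response)
    dot = sum(a * b for a, b in zip(t, r))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(dot))


def kappa_inverse(responses):
    """Approximate K-1 for a set of (azimuth, elevation) responses.

    Assumes kappa ~ (N - 1) / (N - R), so K-1 ~ (N - R) / (N - 1).
    """
    n = len(responses)
    if n < 2:
        raise ValueError("at least two responses are needed")
    vectors = [to_unit_vector(az, el) for az, el in responses]
    sums = [sum(v[i] for v in vectors) for i in range(3)]
    resultant = math.sqrt(sum(s * s for s in sums))
    return (n - resultant) / (n - 1)


if __name__ == "__main__":
    # Hypothetical responses (azimuth, elevation) to a straight-ahead target.
    responses = [(12.0, 2.0), (17.0, -1.0), (15.0, 4.0), (20.0, 0.0)]
    errors = [angular_error_deg((0.0, 0.0), r) for r in responses]
    print("mean angular error (deg):", sum(errors) / len(errors))
    print("K-1:", kappa_inverse(responses))
```

Under these assumptions, tightly clustered responses yield a resultant length close to N and therefore a K−1 close to zero, whereas widely scattered responses yield larger values.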

Overall, the average angular errors made by the subjects in the present study are similar to the average angular errors reported by Carlile et al. (1997) and Makous and Middlebrooks (1990) for target eccentricities between approximately 30 and 80°. Conversely, in the most central portion of space, essentially the frontal quadrant, large differences were found. The average angular localization error for the straight-ahead target was 15° in the present study and only about 5–6° in Carlile et al. (1997) and Makous and Middlebrooks (1990).

Despite the overall similarities between the Carlile et al. (1997) and Makous and Middlebrooks (1990) studies and the present study, several methodological differences can be identified that might explain the differences in localization performance documented for the most central portion of space. For instance, both studies provided (1) a spatial reference straight ahead, visual in Carlile et al. (1997) and auditory in Makous and Middlebrooks (1990), to guide their subjects in the process of aligning their heads with the straight ahead position before the presentation of a target, (2) practically unlimited time for the subjects to respond by pressing a button to indicate that the head was pointed to the desired spatial location, and (3) extensive training before testing.

The potential help provided by a visual reference (Carlile et al. 1997) to localize the source of a sound was discussed above in reference to the improvements in performance documented in localizing acoustic targets in the context of the memory-saccade task. Again, it is proposed that a spatial reference at the straight ahead position, or at any other known spatial position for that matter, facilitates determining the absolute location of acoustic targets because the subject can simply compute the difference between the locations of the two sources of stimuli. Concerning the potential effects of the acoustic reference used by Makous and Middlebrooks (1990) as a guide for their subjects to align their heads with the straight ahead position, it is also possible that such stimuli aided localization. Although the acoustic reference could not have defined the straight ahead position as clearly as the visual one, its presence a few hundred milliseconds before the onset of the target might have created conditions similar to those encountered in minimum audible angle experiments, in which subjects would have been able to detect the difference between the positions of the reference and test sounds. A reference-to-target delay of just over 500 ms, as used by Makous and Middlebrooks (1990), is a negligible period of time relative to the 15–20 s extent during which information can be reliably stored in spatial working memory (Goldman-Rakic 1987). In other words, the acoustic targets presented near the straight ahead position would have been perceived in a different location relative to the well-defined location of the reference, which should have facilitated their localization.

The average magnitude of the errors from Wightman and Kistler (1989b), on the other hand, was considerably larger despite the repeated presentation of the targets, eight times with a separation of 30 ms, in every trial. The most likely explanation for the poorer performance is the type of task used to measure sound localization perception in that study: the subjects were asked to report verbally the coordinates of the perceived location of the targets. It should be noted, however, that the goal of their study was the psychophysical validation of their method for simulating free-field listening conditions over headphones, not the measurement of absolute human sound localization accuracy in the free field (Wightman and Kistler 1989a).

Lastly, it is also important to include in the comparison the magnitude of angular localization errors made by non-human primates, the behavioral/physiological model closest to humans. The data from Populin (2006), collected with the fixation task under conditions nearly identical to those used in the present study, are plotted in Fig. 10a with asterisks connected with a broken line. Note that the average monkey data are very similar to the average data from the present study. As with the humans in this study, the monkeys’ angular errors increased for the targets located closest to the midline, implying that both species were affected similarly by the experimental conditions used for testing.

In terms of variability, the comparison of the average magnitude of K−1 values across subjects reveals that the results of Makous and Middlebrooks (1990) were less variable for the smaller horizontal eccentricities and more variable for the more peripheral targets. The average magnitude of K−1 values from Wightman and Kistler (1989a, b), as reported by Makous and Middlebrooks (1990) in their Fig. 7, for the range of target eccentricities tested in the present study, was about three times larger. Lastly, the variability from the monkey study closely mirrored that of the present study, with the exception of the 70° eccentricity, for which one of the three monkeys tested exhibited great variability (Populin 2006). Group K−1 data from Carlile et al. (1997) could not be easily obtained from their figures and thus are not included.

The large differences in variability, expressed as the magnitude of K−1, between the data of Wightman and Kistler (1989b) on the one hand and those of Makous and Middlebrooks (1990) and the present study on the other are most likely due to the differences in the experimental tasks used to measure sound localization perception. In fact, it is somewhat unusual that the average variability was very consistent across the entire frontal hemifield.

The differences in variability between the results of the present study and those of Makous and Middlebrooks (1990), on the other hand, are difficult to explain, particularly when considering the extensive training (10–20 training sessions) undergone by their subjects before testing. Gaze shifts to targets within 30° of the midline, although accomplished with different eye-head coordination strategies for visual and acoustic target modalities (Biesiadecki and Populin 2005), will result in eye and head alignment if the subject is required to maintain the final position for a prolonged period of time; in both Makous and Middlebrooks (1990) and Carlile et al. (1997), subjects had to point with their heads and then press a key to record the desired head position. Thus, training for orienting to targets perceived within such eccentricities is likely to constitute a simple reinforcement of normal behavior. On the other hand, for targets perceived beyond 30° of eccentricity, where normal orienting is accomplished with combined eye-head movements, the training might not have been effective enough to compel subjects to produce head movements that consistently matched the position of the eyes in space.

Effect of initial eye and head position on sound localization

As documented above, in performing the fixation task subjects returned their gaze and head to or near the straight-ahead position after orienting to a target to wait for the initiation of the next trial. This strategy resulted in a relatively stereotyped position at the time of presentation of the next acoustic target, with the eyes and head in rough alignment straight ahead (Fig. 2). Proper alignment of the head and eyes at the straight ahead position is important to ensure the proper presentation of the acoustic stimuli in relation to the position of the subjects’ ears. Under such conditions the visual and auditory representations of space are expected to be in spatial register, which is thought to constitute a substrate for sensorimotor integration (Harris et al. 1980; Stein and Meredith 1993). The dissociated saccade task (Fig. 1c), therefore, was used to alter the alignment of the eyes and head at the time of stimulus presentation under controlled conditions.

No effect of initial eye or head position on sound localization was found. As expected, the position of the eyes and head was taken into account for computing the location of acoustic targets and for the execution of the goal-directed gaze shifts to such targets. These behavioral results are consistent with Sparks’ (1986) motor error hypothesis, which proposes that the position of sound sources is encoded in motor coordinates in the superior colliculus and predicts localization errors if eye position at the time of stimulus encoding is not taken into account. These results are also consistent with Goossens and Van Opstal’s (1999) hypothesis that information about acoustic targets is combined with head position information to encode targets in a spatial or body-centered frame of reference. Contrary to Goossens and Van Opstal’s (1999) observations, however, final head position data from the three subjects tested in the present study with the dissociated saccade task did not show a systematic effect of initial head position (Fig. 9d, j, p), suggesting, therefore, that the head component of gaze shifts was not the result of a displacement command, but rather of commands to move the head to a specific location in space.
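The logic of this comparison can be made concrete with a simplified, one-dimensional sketch. The Python code below is an illustration only, not a model fitted to the present data. It assumes that the auditory system specifies target azimuth relative to the head and that gaze position in space is the sum of head position in space and eye position in the head. All function and parameter names are hypothetical.

```python
def gaze_motor_error(target_re_head_deg, eye_in_head_deg):
    """Gaze displacement (deg) needed to bring the line of sight onto the target.

    Assumption: motor error is the head-centered target azimuth minus the
    current eye-in-head position.
    """
    return target_re_head_deg - eye_in_head_deg


def final_gaze_in_space(target_in_space_deg, head_in_space_deg,
                        eye_in_head_deg, compensate=True):
    """Predicted final gaze azimuth (deg) after a single gaze shift."""
    # Head-centered target azimuth, as assumed to be provided by acoustic cues.
    target_re_head = target_in_space_deg - head_in_space_deg
    # Gaze in space at the time of stimulus presentation.
    initial_gaze = head_in_space_deg + eye_in_head_deg
    if compensate:
        # Initial eye position is taken into account.
        displacement = gaze_motor_error(target_re_head, eye_in_head_deg)
    else:
        # Initial eye position is ignored when planning the gaze shift.
        displacement = target_re_head
    return initial_gaze + displacement


if __name__ == "__main__":
    # Hypothetical trial: target at 30 deg, head at -10 deg, eyes 15 deg in the orbit.
    print(final_gaze_in_space(30.0, -10.0, 15.0, compensate=True))
    print(final_gaze_in_space(30.0, -10.0, 15.0, compensate=False))
```

In this sketch, final gaze position is independent of the initial eye and head positions only when eye position is subtracted from the head-centered target location; otherwise the localization error equals the initial eye-in-head offset.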

Gaze as a measure of sound localization

Because this is the first study to use gaze to study the psychophysics of sound localization in humans, it is important to consider the advantages and disadvantages associated with the methodology. The disadvantages are several and differ in nature: (1) Measuring gaze in humans is costly and laborious. Specialized hardware and software are needed for recording and analysis. (2) Placing the coils in the subjects’ eyes is considered an invasive procedure, which for this study prompted the University of Wisconsin IRB to require the presence of an ophthalmologist during the experiments. (3) The field coils of the search coil system likely degraded the anechoic nature of the environment we tried to create with the application of reticulated foam to every surface in the recording room. (4) The recommended 30-min eye coil-wearing time limits the duration of the experimental sessions.