Abstract

Three experiments examined the role of reference directions in spatial updating. Participants briefly viewed an array of five objects. A non-egocentric reference direction was primed by placing a stick under two objects in the array at the time of learning. After a short interval, participants detected which object had been moved from a novel view that was caused by table rotation or by their own locomotion. The stick was removed at test. Detection of position change was better when an object not on the stick was moved than when an object on the stick was moved. Furthermore, change detection was better in the observer locomotion condition than in the table rotation condition only when an object on the stick was moved, not when an object not on the stick was moved. These results indicate that when the reference direction was not accurately indicated in the test scene, detection of position change was impaired, but this impairment was smaller in the observer locomotion condition. They suggest that people not only represent objects’ locations with respect to a fixed reference direction but also represent and update their own orientation with respect to the same reference direction; this updated orientation can be used to recover the accurate reference direction and thereby facilitate detection of position change when the test scene does not present an accurate reference direction.

As mobile organisms, humans must update their positions and orientations with respect to objects in the surrounding environment in order to interact efficiently with the world. For example, suppose you are walking toward your department's main office and see a colleague to your left. You stop and turn left to have a chat. After turning, you need to know that the main office is now to your right. Such spatial updating processes are among the most basic functions of the human cognitive system. The aim of this study was to examine the spatial reference system relative to which spatial updating takes place.

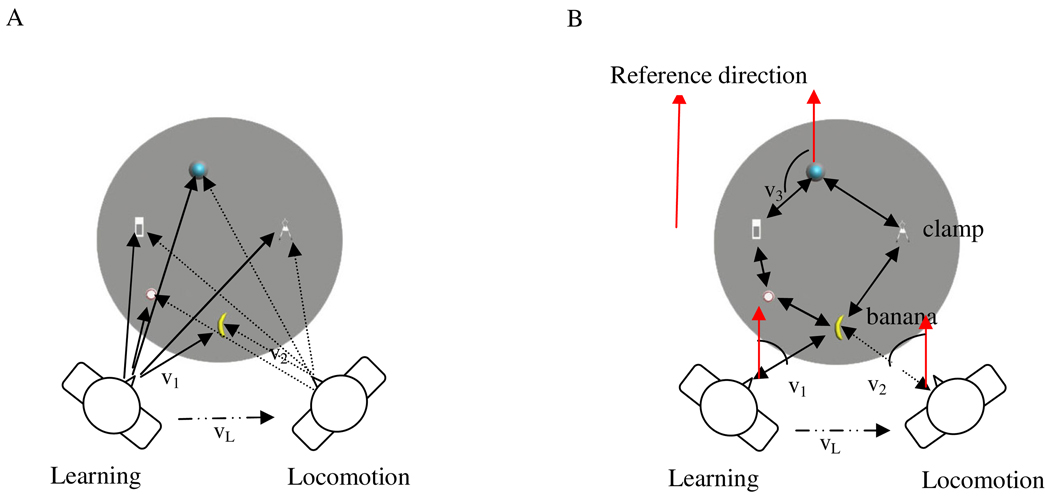

Early theories of spatial memory and navigation stipulated that animals, including humans, have an enduring cognitive map of objects in the environment and, during locomotion, update the representation of their own location and orientation in that map (e.g., Gallistel, 1990; O’Keefe & Nadel, 1978; Tolman, 1948). These theories found support in the discovery of place cells (e.g., O’Keefe & Nadel, 1978) and head direction cells (e.g., Taube, Muller, & Ranck, 1990) in animals. They were challenged by Wang and Spelke (2002), who proposed that humans do not need enduring allocentric representations of objects’ locations, although they might have enduring allocentric representations of geometric shapes (e.g., the shape of a room). Instead, humans represent the locations of individual objects with respect to their body and update these body-object vectors from moment to moment as they locomote. We refer to this model as the egocentric updating model. It is illustrated in Figure 1A. Suppose an individual views five objects from the learning position, closes his or her eyes, turns to face the direction indicated by the arrow vL, walks some distance, and then turns left, arriving at the position and orientation labeled “locomotion” in the figure. At learning, he or she represents body-object vectors, illustrated by the solid arrows (e.g., v1); at the locomotion position, he or she has updated these vectors, illustrated by the dashed arrows (e.g., v1 is updated to v2).

Figure 1.

A. Egocentric updating model. B. Allocentric updating model. In B, the reference directions are illustrated by the single-headed arrows. The angles between v1, v2, v3 and the reference directions indicate the bearings of v1, v2, v3 with respect to the reference directions.

A large body of evidence, however, indicates that humans have allocentric representations of objects’ locations in memory (e.g., Burgess, Spiers, & Paleologou, 2004; Greenauer & Waller, 2010; Holmes & Sholl, 2005; Mou & McNamara, 2002; Mou, Xiao, & McNamara, 2008; Sargent, Dopkins, Philbeck, & Chichka, 2010). Allocentric representations have been identified according to two criteria. First, humans represent objects' locations with respect to other objects (Burgess et al., 2004; Holmes & Sholl, 2005; Sargent et al., 2010). Second, humans use an allocentric reference direction to specify objects’ locations (Greenauer & Waller, 2010; Mou & McNamara, 2002). Mou, Xiao, and McNamara (2008) further demonstrated that the reference objects and reference directions used to encode an object’s location could both be allocentric; that is, an object’s location is represented with respect to other objects (reference objects), and the relations between the target and reference objects are specified with respect to an allocentric reference direction (e.g., the orientation of a rectangular table).

In the current project, we propose a model that reconciles the evidence for allocentric encoding with the egocentric updating model. We hypothesize that people can represent the locations of objects, including their own body (as a special object), in terms of interobject vectors that are defined with respect to allocentric spatial reference directions (Mou & McNamara, 2002). When people move in the environment, they update their orientation and their location relative to other objects with respect to the original reference directions used to represent interobject vectors (Mou, McNamara, Valiquette, & Rump, 2004). This model is illustrated in Figure 1B. According to this model, the individual encodes vectors between his or her body and objects (e.g., v1) and between objects (e.g., v3). These vectors specify the distance and bearing between objects. The bearings of the vectors (e.g., v1, v3) are defined in terms of an allocentric reference direction, indicated by the single-headed solid arrows in the figure. We refer to these body-object vectors as allocentric body-object vectors. The individual also encodes the bearing of his or her orientation in terms of the allocentric reference direction. We refer to an individual's orientation, defined in terms of an allocentric reference direction, as the allocentric heading (Klatzky, 1998).

During locomotion, the individual updates his or her location by updating allocentric body-object vectors; for example, v1 is updated to v2. Such updating can be implemented by adding the locomotion vector (vL) to the allocentric body-object vectors from learning, for example, v2 = v1 + vL. The allocentric direction of the locomotion vector can be computed by adding the first turning angle at the learning location (a right turn in this example) to the allocentric heading at learning. The length of the locomotion vector is the walking distance. Both the turning angle and the walking distance can be estimated from environmental and body-based self-motion cues when people walk with eyes open, and only from body-based self-motion cues when people walk with eyes closed (Kelly, McNamara, Bodenheimer, Carr, & Rieser, 2008; Loomis, Klatzky, Golledge, Cicinelli, Pellegrino, & Fry, 1993; Rieser, Pick, Ashmead, & Garing, 1995). The individual also updates his or her orientation with respect to the original allocentric reference direction: the allocentric heading at the locomotion position is the sum of the allocentric heading at learning and the two turning angles (the right turn and the left turn). We refer to this model as the allocentric updating model.
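The updating arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: bearings are in degrees measured counterclockwise from the fixed reference direction (so a right turn is a negative angle), each stored body-object vector is taken to point from the object to the observer (the convention under which v2 = v1 + vL holds; if vectors instead point from the observer to the object, the update becomes v1 - vL), and the function names are hypothetical.

```python
import math


def polar(distance, bearing_deg):
    """Convert a distance and an allocentric bearing into (x, y).

    Assumed convention: bearing 0 is the fixed reference direction and
    bearings increase counterclockwise.
    """
    rad = math.radians(bearing_deg)
    return (distance * math.cos(rad), distance * math.sin(rad))


def update_allocentric(object_to_body, heading_deg, turn1_deg, walk_dist, turn2_deg):
    """Hypothetical sketch of allocentric updating for a turn-walk-turn path.

    object_to_body: dict mapping object name -> (x, y) vector from that object
        to the observer, expressed in the fixed reference frame.
    heading_deg: allocentric heading at learning.
    turn1_deg, turn2_deg: signed turning angles (counterclockwise positive).
    walk_dist: distance walked between the two turns.
    """
    # Allocentric direction of vL = heading at learning + first turning angle.
    v_l = polar(walk_dist, heading_deg + turn1_deg)
    # v2 = v1 + vL for every represented body-object vector.
    updated = {name: (v[0] + v_l[0], v[1] + v_l[1])
               for name, v in object_to_body.items()}
    # Heading at the locomotion position = heading at learning + both turns.
    new_heading = (heading_deg + turn1_deg + turn2_deg) % 360
    return updated, new_heading


# Usage: the observer faces along the reference direction (heading 0) with a
# banana 2 units straight ahead, so the banana->observer vector is (-2, 0).
# The observer turns right 90 deg, walks 1 unit, then turns left 90 deg.
updated, heading = update_allocentric({"banana": (-2.0, 0.0)}, 0.0, -90.0, 1.0, 90.0)
print(updated["banana"], heading)  # approximately (-2.0, -1.0) and 0.0
```

The result places the observer 1 unit to the right of the original viewing position, still facing along the reference direction. Note that the allocentric vectors never needed to be rotated during the turns; only the heading changed.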

Before continuing, we wish to clarify several key concepts in the model. First, there are at least two ways to decide whether an allocentric reference direction is being used. One approach is to focus on the types of cues used to establish the reference direction (e.g., Greenauer & Waller, 2008). In particular, one could conclude that a spatial reference direction is allocentric if non-egocentric cues were used to establish it. An alternative approach is to focus on the nature of the reference direction itself, regardless of the cues used to select it (e.g., Mou & McNamara, 2002; Shelton & McNamara, 2001). If the spatial reference direction is a fixed element of the spatial representation and is independent of the learner's body orientation and position when the person locomotes, then one concludes that it is allocentric.

We prefer the second criterion because reference directions determined by egocentric cues and those determined by non-egocentric cues behave similarly. Previous studies have shown that a variety of cues, including environmental cues, intrinsic features of a layout, and egocentric experience, can affect the selection of reference directions (e.g., Greenauer & Waller, 2010; Mou & McNamara, 2002; Shelton & McNamara, 2001). However, regardless of whether the reference direction is determined by egocentric or non-egocentric cues, similar orientation-dependent performance relative to the reference direction is observed in judgments of relative direction (e.g., Greenauer & Waller, 2010; Mou, Liu, & McNamara, 2009) and in visual recognition (e.g., Li, Mou, & McNamara, 2009). Furthermore, to our knowledge, there is no evidence that reference directions determined by different cues differ in how they are used to represent and process spatial information. To avoid possible ambiguity in the term “allocentric reference direction”, we use “fixed reference direction” in this paper instead.

Second, this model does not claim that all possible interobject vectors in the layout are represented. This model also does not claim that all possible body-object vectors are represented. The manner in which humans select reference objects is beyond the current model (but see Carlson & Hill, 2008 for a discussion). The interobject vectors illustrated by the bi-directional arrows in Figure 1B are based on proximity and are hypothetical.

Third, this model stipulates that reference directions in spatial memories are analogous to cardinal directions in geographic representations and to the identification of the “top” of a form (e.g., Rock, 1973). Reference directions may correspond to salient features of a collection of objects, such as an axis of bilateral symmetry (e.g., Mou & McNamara, 2002), but this correspondence is not essential. For instance, reference directions can be influenced by the walls of a surrounding room (e.g., Shelton & McNamara, 2001) or by the orientation of the table on which objects are placed (e.g., Mou, Xiao, & McNamara, 2008). Mou, Zhang, and McNamara (2009) showed that detection of position change was facilitated by an arrow that indicated the learning orientation. The learning orientation did not correspond to any intrinsic axis of the layout of objects but simply indicated a direction. In some special cases, reference directions may coincide with reference axes passing through aligned sets of objects; such cases do not contradict the tenets of the model.

Both the allocentric updating model proposed in this study and the egocentric updating model proposed by Wang and Spelke (2002) stipulate that body-object vectors are represented and updated. However, according to the allocentric updating model, body-object vectors (e.g., v1 and v2) are defined in terms of the fixed reference direction, whereas according to the egocentric updating model, body-object vectors are defined in terms of the individual's changing body orientation. We refer to body-object vectors defined in terms of the individual's changing body orientation as egocentric body-object vectors. Egocentric, but not allocentric, body-object vectors depend on the individual's current body orientation. For example, when the individual turns right after learning, v1 in Figure 1A changes but v1 in Figure 1B stays the same, because the bearing of v1 with respect to the fixed reference direction is independent of the individual’s rotation. According to the egocentric updating model, egocentric body-object vectors are updated in response to the dynamic transformation of the individual's body orientation and position during locomotion. In contrast, according to the allocentric updating model, egocentric body-object vectors are computed during locomotion by subtracting the updated allocentric heading from the bearings of the updated allocentric body-object vectors. For example, suppose that in Figure 1B the bearing from the individual to the banana at the locomotion position (v2) is 45° counterclockwise from the reference direction, and the allocentric heading (the bearing of the individual’s orientation) at the locomotion position is 30° counterclockwise from the reference direction. Then the banana is 15° to the left of the individual’s orientation.
Furthermore, because not all allocentric body-object vectors are represented and updated, those that are neither represented nor updated (e.g., the body-clamp vector) need to be inferred from the represented and updated allocentric body-object vectors (e.g., v2) and interobject vectors (e.g., banana-clamp). This process is consistent with the off-line updating proposed by Hodgson and Waller (2006). The second main difference between the egocentric updating model (Figure 1A) and the allocentric updating model (Figure 1B) is that interobject vectors are represented in terms of the fixed reference direction according to the allocentric updating model, whereas neither interobject vectors nor fixed reference directions are necessary according to the egocentric updating model.
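The two computations in this paragraph, deriving an egocentric bearing from an updated allocentric bearing and heading, and inferring an unrepresented body-object vector off-line, can be sketched as follows. The assumptions are ours, not the authors': bearings are in degrees counterclockwise from the fixed reference direction; for this sketch, vectors point from the observer to the object, matching the banana example in the text; egocentric bearings are wrapped to (-180, 180] with positive values meaning left; and the vectors in the usage example are hypothetical.

```python
import math


def egocentric_bearing(allocentric_bearing_deg, heading_deg):
    """Egocentric bearing = allocentric bearing - allocentric heading.

    The result is wrapped to (-180, 180]; positive means counterclockwise (left).
    """
    diff = (allocentric_bearing_deg - heading_deg) % 360
    return diff - 360 if diff > 180 else diff


def infer_body_object(body_to_a, a_to_b):
    """Off-line inference: body->b = body->a + a->b (all in the fixed frame)."""
    return (body_to_a[0] + a_to_b[0], body_to_a[1] + a_to_b[1])


def bearing_of(v):
    """Allocentric bearing of a vector in degrees, in [0, 360)."""
    return math.degrees(math.atan2(v[1], v[0])) % 360


# Worked example from the text: banana at 45 deg, heading 30 deg -> 15 deg left.
print(egocentric_bearing(45, 30))  # 15

# Hypothetical vectors: body->banana plus banana->clamp gives body->clamp,
# whose egocentric bearing can then be derived in the same way.
body_to_clamp = infer_body_object((1.0, 0.0), (0.0, 1.0))
print(egocentric_bearing(bearing_of(body_to_clamp), 30))
```

Keeping every vector in the fixed frame means that a turn changes only one number (the heading), while the egocentric bearing of any object, represented or inferred, is recomputed on demand.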

Mou, Zhang, and McNamara (2009) provided evidence in favor of the allocentric updating model. They used the task of detecting position change in an array of objects on a table from a novel viewpoint after table rotation or after observer locomotion (Simons & Wang, 1998; Wang & Simons, 1999). Their first experiment replicated the original findings of Simons and Wang (1998). Participants briefly viewed an array of five objects on a desktop and then attempted to detect the position change of one object. Participants were tested either from the learning perspective when the table was rotated or from a new perspective when the table was stationary. The results showed that visual detection of a position change was better when the novel view was caused by the locomotion of the observer than when the novel view was caused by the table rotation.

The facilitative effect of locomotion can be explained by both the allocentric and the egocentric updating models. According to the egocentric updating model, egocentric body-object vectors are immediately available after locomotion because of egocentric updating, but they are not available after table rotation because no egocentric updating occurs. Hence, egocentric body-object vectors facilitate detection of position change after observer locomotion but not after table rotation. According to the allocentric updating model, observers need to recover, in the test scene, the reference direction that had been selected at learning to represent interobject vectors and body-object vectors, so that the vectors in the test scene can be compared with the represented vectors in memory to detect position change. Two sources of information could be used to recover the reference direction: (a) the allocentric heading updated during locomotion and (b) the visual input of the interobject vectors in the test scene. In the table rotation condition, only the latter is available, whereas in the observer locomotion condition, both are available. Hence, the reference direction might be recovered more accurately in the observer locomotion condition than in the table rotation condition, owing to the extra information provided by the updated allocentric heading, and this more accurate recovery facilitates detection of position change.

Experiment 3 of Mou, Zhang, and McNamara (2009) differentiated these two models. A stick was presented in the test scene to indicate the original learning viewpoint (see Christou, Tjan, & Bülthoff, 2003 for a similar paradigm in object recognition) in both the table rotation and observer locomotion conditions. Accuracy in detecting position change in the table rotation condition improved to the level of the observer locomotion condition. This finding can be explained by the allocentric updating model if we assume that observers in their experiments established a reference direction parallel to their viewing direction (e.g., Greenauer & Waller, 2008; Shelton & McNamara, 2001). Because the correct reference direction was explicitly indicated by the stick in the test scene, the updated allocentric heading was not necessary to recover the reference direction. Hence, performance in detecting position change in the table rotation condition was as good as in the observer locomotion condition. The egocentric updating model has difficulty explaining this result because it stipulates that interobject vectors defined with respect to a fixed reference direction are not necessary.

One may argue that equal performance in the table rotation and observer locomotion conditions does not guarantee that the same mechanisms were used in both conditions. For example, observers might form allocentric representations and use them in the table rotation condition, which would explain why detection improved when the stick was presented, yet still represent egocentric body-object vectors and update them dynamically in the locomotion condition. More compelling evidence is needed to differentiate the allocentric and egocentric updating models. The current study addresses this need.

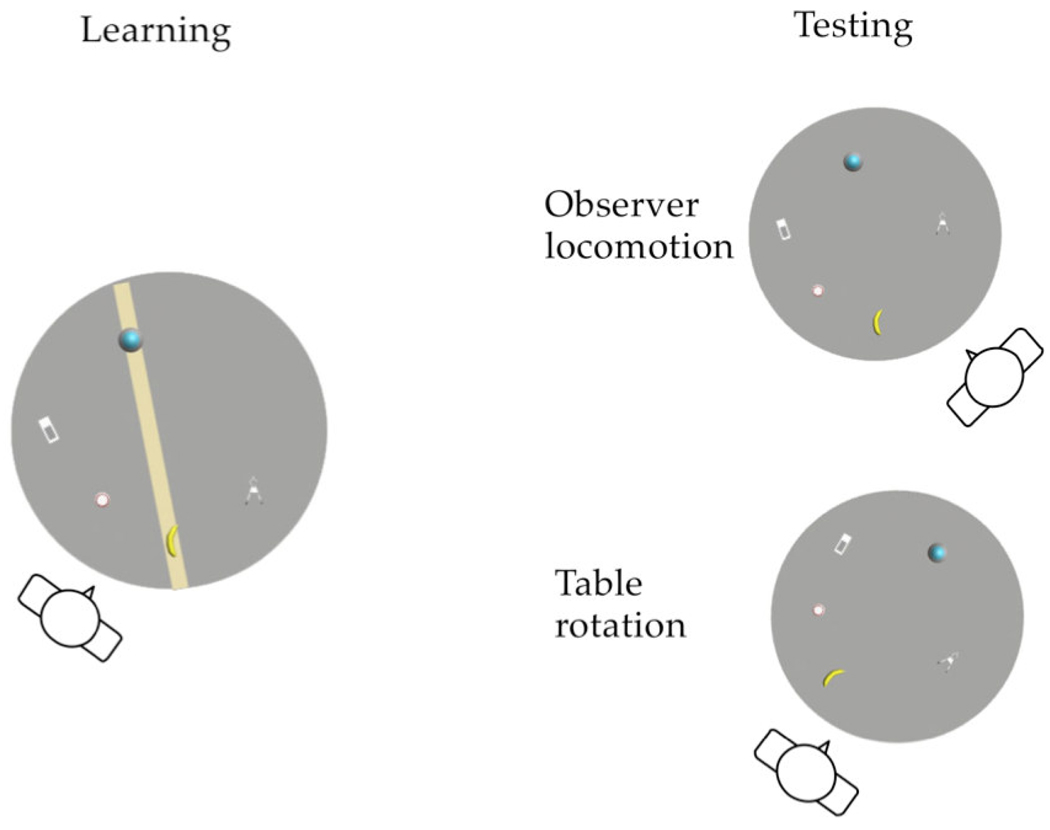

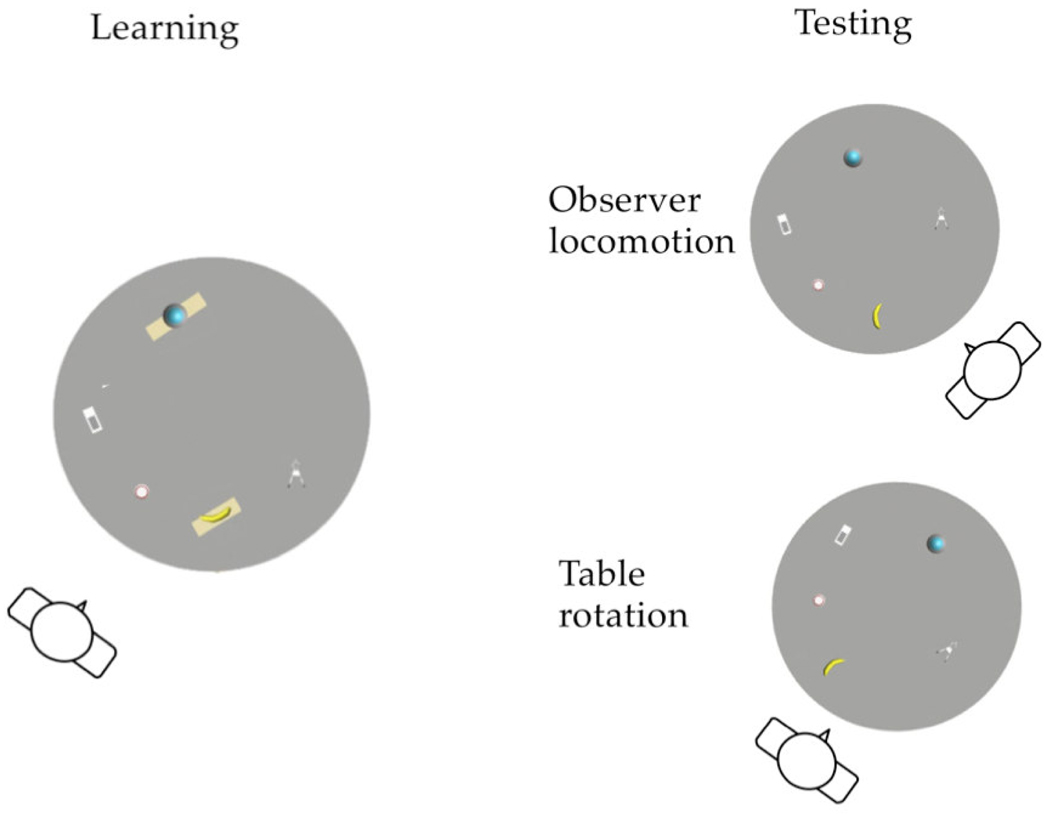

In the current study, a long stick was placed along an imaginary line passing underneath two of the five objects to prime a spatial reference direction (see Figure 2). The stick appeared only during the learning phase. A pilot experiment showed that a long stick presented underneath two objects can prime a spatial reference direction parallel to the orientation of the stick (Mou, Xiao, & McNamara, 2008). After a short interval, participants detected which object had been moved from a novel view that was caused by table rotation or by their own locomotion, as in previous studies (e.g., Mou, Zhang, & McNamara, 2009; Simons & Wang, 1998). The target object could be one of the objects that had been on the stick at learning or one of the objects that had not. The egocentric updating model predicts no effect of target object (on or not on the stick at learning) on the accuracy of detecting position change and no interaction between target object and the facilitative effect of locomotion. In particular, participants should be equally accurate for target objects that had been on the stick and for target objects that had not, and participants should be more accurate in detecting position change in the locomotion condition than in the table rotation condition regardless of whether the target had been on the stick.

Figure 2.

Experimental design of Experiments 1 and 2.

By contrast, the allocentric updating model predicts both an effect of target object on the accuracy of detecting position change and an interaction between target object and the facilitative effect of locomotion. In particular, participants should be more accurate for target objects that had not been on the stick at learning than for target objects that had been on the stick, and the facilitative effect of locomotion should be observed for target objects on the stick but not for target objects not on the stick. The rationale for these predictions is discussed in the following paragraphs.

According to the allocentric updating model, recovering the reference direction is critical for detecting position change (e.g., Mou, Xiao, & McNamara, 2008). In the test scene, although the stick is absent, the two objects that had been on the stick explicitly indicate the reference direction. When the target is not on the stick, this explicitly indicated reference direction is accurate. When one of the objects on the stick is moved, the explicitly indicated reference direction is inaccurate, and an extra process is required to detect and correct the inaccuracy. We assume that this extra process incurs a cost, observable as decreased accuracy in detecting position change. Hence, detection of position change should be better when target objects are not on the stick than when they are on the stick.
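Why moving an on-stick object, but not any other object, corrupts the explicitly indicated reference direction can be seen with a little geometry. The sketch below is only an illustration of that point, not a model of how observers compute anything: the stick is treated as an undirected line through the two on-stick objects, and the coordinates (in cm) and the 8 cm displacement are hypothetical.

```python
import math


def line_orientation(p, q):
    """Orientation in degrees, in [0, 180), of the line through points p and q.

    The stick is treated as an undirected line, so orientation is taken mod 180.
    """
    return math.degrees(math.atan2(q[1] - p[1], q[0] - p[0])) % 180


def orientation_error(a_deg, b_deg):
    """Smallest angle between two undirected orientations, in [0, 90]."""
    d = abs(a_deg - b_deg) % 180
    return min(d, 180 - d)


# Hypothetical layout (cm): the two objects that sat on the stick at learning.
on_stick_a, on_stick_b = (0.0, 0.0), (20.0, 0.0)
learned = line_orientation(on_stick_a, on_stick_b)

# Target not on the stick: both on-stick objects stay put, so no error.
print(orientation_error(line_orientation(on_stick_a, on_stick_b), learned))  # 0.0

# Target on the stick: one on-stick object is displaced by 8 cm.
moved_b = (20.0, 8.0)
print(orientation_error(line_orientation(on_stick_a, moved_b), learned))  # about 21.8
```

Whatever happens to the three objects not on the stick, the line through the two on-stick objects is unchanged; displacing either on-stick object rotates it, which is the inaccuracy the observer must detect and correct.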

When a target is on the stick (i.e., when an object that had been on the stick in the learning phase is moved), the explicitly indicated reference direction is inaccurate. In the table rotation condition, only one source of information is available to detect and correct the inaccurately indicated reference direction, namely, the visual input of the interobject vectors in the test scene. In the locomotion condition, two sources of information are available: the visual input from the test scene and the allocentric heading updated during locomotion. The extra information provided by the updated allocentric heading facilitates detection and correction of the inaccurately indicated reference direction, which leads to more accurate detection of position change in the locomotion condition than in the table rotation condition. When a target is not on the stick (i.e., when neither of the objects that had been on the stick is moved), the explicitly indicated reference direction is accurate, so the updated allocentric heading is not needed to correct it. Hence, the facilitative effect of locomotion is not expected for target objects not on the stick.

Experiment 1 examined the facilitative effect of locomotion when only objects not on the stick were moved. Experiment 2 examined the interaction between the facilitative effect of locomotion and the effect of target object when either an object on the stick or an object not on the stick was moved in a trial. Experiment 3 tested whether the objects on the stick might have served as reference objects rather than indicating a spatial reference direction. We also conducted an experiment using the same materials and procedure as Experiments 1–3 except that no stick was presented in the learning phase, and we replicated the facilitative effect of locomotion on novel-view recognition reported in previous studies (Burgess, Spiers, & Paleologou, 2004; Mou, Zhang, & McNamara, 2009; Simons & Wang, 1998; Wang & Simons, 1999). This experiment ensured that any failure to observe the facilitative effect of locomotion cannot be attributed to the specific materials and procedure used in this project. In the interest of brevity, this experiment is not described below.

Experiment 1

Participants briefly learned a layout of five objects. A stick was placed underneath two of the five objects to prime a reference direction that was not parallel to the viewing direction. After participants were blindfolded, the stick was removed and one of the three objects not on the stick was moved. Participants then removed the blindfold and tried to determine which object had been moved from a novel viewpoint caused by table rotation or observer locomotion. Participants were not informed that the objects on the stick were never moved. We predicted that the facilitative effect of locomotion would not be observed because, even though the stick was removed in the test scene, the two objects that had been on it at learning still explicitly and accurately indicated the reference direction, including in the table rotation condition.

Method

Participants

Twelve university students (6 men and 6 women) participated in this study in return for monetary compensation.

Materials and design

The experiment was conducted in a room (4.0 by 2.8 m) whose walls were covered with black curtains. The room contained a circular table covered by a grey mat (80 cm in diameter, 69 cm above the floor), two chairs (seat height 42 cm), five common objects (an eraser, a locker, a battery, a bottle, and a plastic banana, each about 5 cm in size) coated with phosphorescent paint, and a stick (80 cm by 4.8 cm) also coated with phosphorescent paint (Figure 2). Each chair was 90 cm from the center of the table, and the angular distance between the two chairs was 49°. The objects were placed on five of nine possible positions in an irregular array on the table. The distance between any two of the nine positions ranged from 18 to 29 cm. The irregularity of the array ensured that no more than two objects were aligned with the observer throughout the experiment. The stick was placed underneath two of the five objects while participants learned the layout and was removed before participants detected the position change, so that it could not be used as a cue to locate objects in the test scene. Participants wore a blindfold and a wireless earphone connected to a computer outside the curtain; the earphone presented white noise and instructions. The lights were off throughout the experiment, and the experimenter used a flashlight when placing objects on the table. Throughout the experiment, participants could see only the five objects and, at learning, the stick.
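As a quick check on the geometry, the straight-line distance between the two chairs follows from the chord formula 2r sin(θ/2), with r = 90 cm and θ = 49°. This assumes only that both chairs lie on a circle centered on the table, as the Method states; the path participants actually walked is not described and need not have been straight, so this is a lower bound on the walking distance rather than a measured value.

```python
import math


def chair_separation(radius_cm, angle_deg):
    """Straight-line (chord) distance between two points on a circle."""
    return 2 * radius_cm * math.sin(math.radians(angle_deg) / 2)


# Values from the Method: chairs 90 cm from the table center, 49 deg apart.
print(round(chair_separation(90, 49), 1))  # 74.6
```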

Forty irregular configurations were created. In each configuration, two of the five occupied locations were randomly selected as the locations above which the stick would be placed at learning. One of the other three occupied locations was randomly selected as the location of the target object, which was moved to one of the four unoccupied locations. The new location was usually the open location closest to the original location and at a similar distance from the center of the table, so that distance from the center could not be used to determine whether an object had moved (as shown in Figure 2). Participants were not informed that the two objects above the stick were never moved in any trial.

Participants completed both the observer locomotion and the table rotation conditions. Forty trials were created for each participant by presenting the 40 configurations in a random order and dividing them into 8 blocks of 5 configurations each. Four blocks were assigned to the table rotation condition and four to the observer locomotion condition, and table rotation and observer locomotion blocks were presented alternately. A table rotation block was presented first for half of the male and half of the female participants. At the beginning of each block, participants were informed of the condition of that block (table rotation or observer locomotion).
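The trial construction for Experiment 1 can be sketched as follows. This is our illustration, not the authors' software: positions are hypothetical integer indices 0 to 8, the target's destination (usually the nearest open location at a similar distance from the table center) is not modeled, and the function names and random seed are assumptions. Because the 40 configurations were created once and only their order was randomized per participant, `configs` is built once and reused.

```python
import random

VIEW_CONDITIONS = ("table rotation", "observer locomotion")


def make_config(rng, n_positions=9, n_objects=5):
    """One hypothetical configuration: 5 of 9 positions occupied, 2 of them
    under the stick, and the target drawn from the 3 objects not on the stick."""
    occupied = rng.sample(range(n_positions), n_objects)
    stick = rng.sample(occupied, 2)
    target = rng.choice([p for p in occupied if p not in stick])
    return {"occupied": occupied, "stick": stick, "target": target}


def make_exp1_schedule(configs, rotation_first, rng, block_size=5):
    """Shuffle configurations, cut them into blocks of 5, and alternate the
    view-change condition from block to block."""
    order = list(configs)
    rng.shuffle(order)
    blocks = [order[i:i + block_size] for i in range(0, len(order), block_size)]
    first, second = VIEW_CONDITIONS if rotation_first else VIEW_CONDITIONS[::-1]
    return [(first if i % 2 == 0 else second, block) for i, block in enumerate(blocks)]


rng = random.Random(1)
configs = [make_config(rng) for _ in range(40)]  # created once, shared by all participants
# Counterbalancing: half of the men and half of the women start with table rotation.
schedule = make_exp1_schedule(configs, rotation_first=True, rng=rng)
print([cond for cond, _ in schedule])
```

Drawing the target only from positions not under the stick is what guarantees the property participants were not told about: the on-stick objects never move in Experiment 1.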

The primary independent variable was the cause of view change (table rotation or observer locomotion). The cause of view change was manipulated within participants.

Procedure

Assisted by the experimenter, participants walked into the testing room and sat on the viewing chair wearing a blindfold. Participants were instructed that “a long stick will be placed on the table when you learn the layout, and randomly two objects will fall on the stick. The stick will be removed when you make judgment, please pay attention to the two objects on the long bar”. Each trial was initiated by a key press by the experimenter and started with a verbal instruction via the earphone (“please remove the blindfold, and try to remember the locations of the objects you are going to see.”). After three seconds, participants were instructed to put on the blindfold and walk to the new viewing position (“please wear the blindfold, walk to the other chair”) or to remain stationary at the learning position (“please wear the blindfold”). Ten seconds after participants were instructed to stop viewing the layout, they were instructed to determine which object had been moved (“please remove the blindfold and make judgment of which object has been moved”). Participants were instructed to respond as accurately as possible; speeded responding was discouraged. After the response, the experimenter ended the trial with a key press, and the participant was instructed to prepare for the next trial (“please wear the blindfold and sit on the original viewing chair”). All of the above instructions were prerecorded, and their presentation was sequenced by the computer to which the earphone was connected. The experimenter wrote down each response on a sheet of paper.

Before the 40 experimental trials, participants practiced walking to the other chair while blindfolded until they could do so easily. Then, 8 extra trials (4 for table rotation condition, and 4 for observer locomotion condition) were used as practice to make sure that participants were familiar with the procedure.

Results

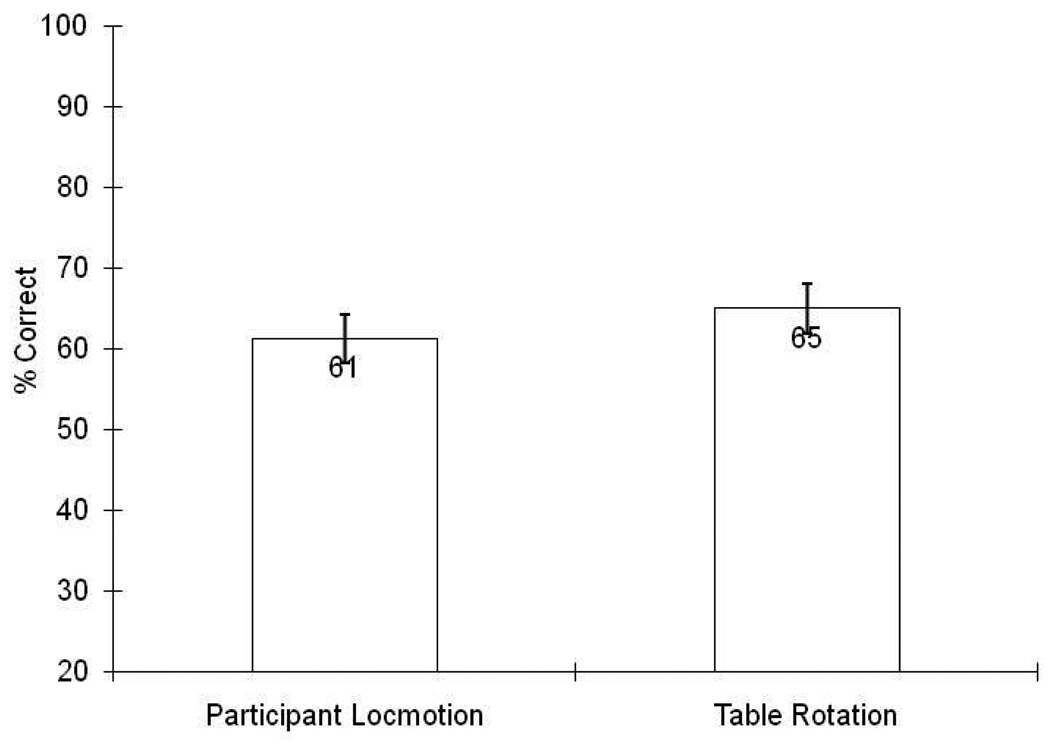

Mean percentage of correct judgments as a function of the cause of view change is plotted in Figure 3. The primary finding was that position change detection at a novel view was equally accurate regardless of the cause of the novel view.

Figure 3.

Correct percentage in detecting position change as a function of cause of view change in Experiment 1. (Error bars are ±1 standard error of the mean, as estimated from the analysis of variance.)

Percentage of correct judgments was computed for each participant and each view-change condition and analyzed in a repeated-measures analysis of variance (ANOVA) with cause of view change as the single within-participant factor. Accuracy did not differ between the two conditions, F(1, 11) < 1, p > .05, MSE = .011.
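For a within-participant factor with only two levels, the ANOVA reported above reduces to a few sums of squares, and the resulting F(1, n - 1) equals the square of the paired t statistic. The sketch below is our own illustration, not the analysis software the authors used; it takes per-participant proportions correct in the same participant order for both conditions and returns F, the error degrees of freedom, and MSE.

```python
from statistics import mean


def rm_anova_two_levels(a, b):
    """One-way repeated-measures ANOVA for a factor with two levels.

    a, b: per-participant scores (e.g., proportion correct) in each condition,
    in the same participant order. Returns (F, df_error, MSE); F has
    (1, n - 1) degrees of freedom.
    """
    a, b = list(a), list(b)
    n = len(a)
    grand = (sum(a) + sum(b)) / (2 * n)
    # Between-condition variability.
    ss_cond = n * ((mean(a) - grand) ** 2 + (mean(b) - grand) ** 2)
    # Between-participant variability (removed from the error term).
    ss_subj = 2 * sum(((x + y) / 2 - grand) ** 2 for x, y in zip(a, b))
    ss_total = sum((x - grand) ** 2 for x in a + b)
    # Residual = condition x participant interaction, the error term here.
    ss_error = ss_total - ss_cond - ss_subj
    df_error = n - 1
    mse = ss_error / df_error
    return ss_cond / mse, df_error, mse
```

Called with the 12 participants' proportions correct in the two conditions, this returns an F with (1, 11) degrees of freedom, the form of the statistic reported above.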

Discussion

In Experiment 1, change detection at a novel view was equally accurate in the observer locomotion and table rotation conditions. This result confirmed our prediction that when the original reference direction is explicitly and accurately indicated in the test scene, no facilitative effect of locomotion is observed.

Experiment 2

In Experiment 2, participants viewed layouts of five objects and the stick that primed the reference direction. In half of the test trials, an object on the stick was moved; in the other half of the test trials, an object not on the stick was moved. The aim of this experiment was to test our predictions discussed in the Introduction together in a single experiment: As in Experiment 1, there should not be a facilitative effect of observer locomotion when the objects on the stick remained stationary and one of the objects not on the stick was moved; however, there should be a facilitative effect of observer locomotion when one of the objects on the stick was moved. Moreover, the detection accuracy should be higher when the target objects had not been on the stick than when the target objects had been on the stick.

Method

Participants

Twenty-four university students (12 men and 12 women) participated in return for monetary compensation.

Materials, design, and procedure

The materials, design and procedure were similar to those in Experiment 1 except for the following modifications. Sixty irregular configurations (40 from Experiment 1 and 20 new) were created by randomly picking 5 occupied locations of the 9 possible locations. For each configuration, two of the 5 occupied locations were randomly selected to be the locations where the stick was placed.

For each participant, these 60 configurations were randomly divided into four sets of 15 and assigned to the combinations of target object (on stick or not on stick) and cause of view change (table rotation or observer locomotion). For the configurations assigned to the target-not-on-stick conditions, one of the three objects not on the stick was randomly selected to be the target object. For the configurations assigned to the target-on-stick conditions, one of the two objects on the stick was randomly selected to be the target object. The 30 configurations in each view change condition (15 with the target on the stick and 15 with the target not on the stick) were randomly divided into 6 blocks of 5 configurations each.

Sixty trials were thus created for each participant by presenting the 12 blocks of configurations. Blocks of table rotation and blocks of observer locomotion were presented alternately. Across participants, an observer locomotion block was presented first for half of the male and half of the female participants. At the beginning of each block, participants were informed which type of view change (table rotation or observer locomotion) would occur in that block.
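The assignment scheme described above (60 configurations, four sets of 15, six blocks of five per view change condition, alternating block types) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' software: the 9 possible locations are simply indexed 0–8, each configuration records which two occupied locations lie on the stick, and all function and field names are hypothetical. No attempt is made to enforce the "irregularity" of the configurations.

```python
import random

# Hypothetical labels for the 2 x 2 within-participant design.
TARGETS = ("on_stick", "not_on_stick")
VIEW_CHANGES = ("table_rotation", "observer_locomotion")


def make_configuration(rng, n_locations=9, n_objects=5, n_on_stick=2):
    """Pick 5 of the 9 possible locations (indexed 0-8); 2 of them get the stick."""
    occupied = rng.sample(range(n_locations), n_objects)
    return {"stick": occupied[:n_on_stick], "other": occupied[n_on_stick:]}


def build_trials(configs, locomotion_first, rng, set_size=15, block_size=5):
    """Assign 60 configurations to the four cells and order them into 12 blocks."""
    shuffled = list(configs)
    rng.shuffle(shuffled)
    pools = {vc: [] for vc in VIEW_CHANGES}
    cells = [(t, vc) for t in TARGETS for vc in VIEW_CHANGES]
    for i, (target, vc) in enumerate(cells):
        # One random set of 15 configurations per target x view-change cell.
        for cfg in shuffled[i * set_size:(i + 1) * set_size]:
            candidates = cfg["stick"] if target == "on_stick" else cfg["other"]
            pools[vc].append({"config": cfg,
                              "target_object": target,
                              "view_change": vc,
                              "target_location": rng.choice(candidates)})
    blocks = {}
    for vc in VIEW_CHANGES:
        # 30 trials per view-change condition, split into 6 random blocks of 5.
        rng.shuffle(pools[vc])
        blocks[vc] = [pools[vc][k:k + block_size]
                      for k in range(0, len(pools[vc]), block_size)]
    order = (("observer_locomotion", "table_rotation") if locomotion_first
             else ("table_rotation", "observer_locomotion"))
    trials = []
    for pair in zip(blocks[order[0]], blocks[order[1]]):
        for block in pair:  # blocks of the two view-change types alternate
            trials.extend(block)
    return trials
```

Calling `build_trials` once per participant, with `locomotion_first` set to `True` for half of the male and half of the female participants, reproduces the counterbalancing described in the text.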

The primary independent variables were target object (on stick or not on stick) and cause of view change (table rotation or observer locomotion). Both independent variables were manipulated within participants.

Results and Discussion

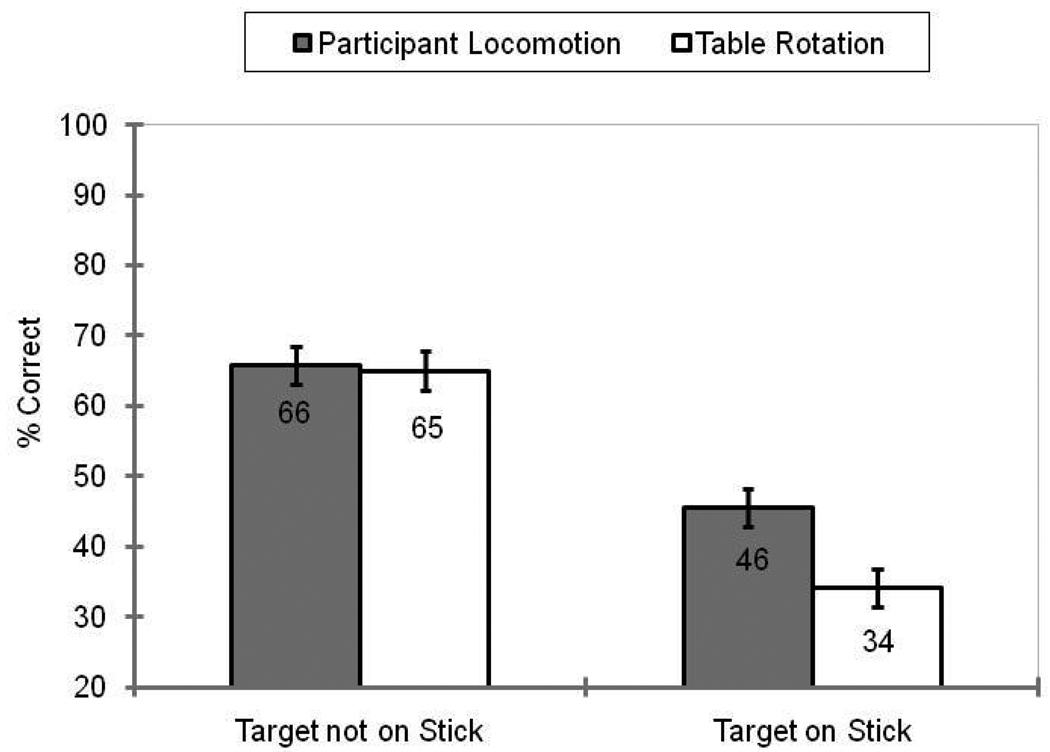

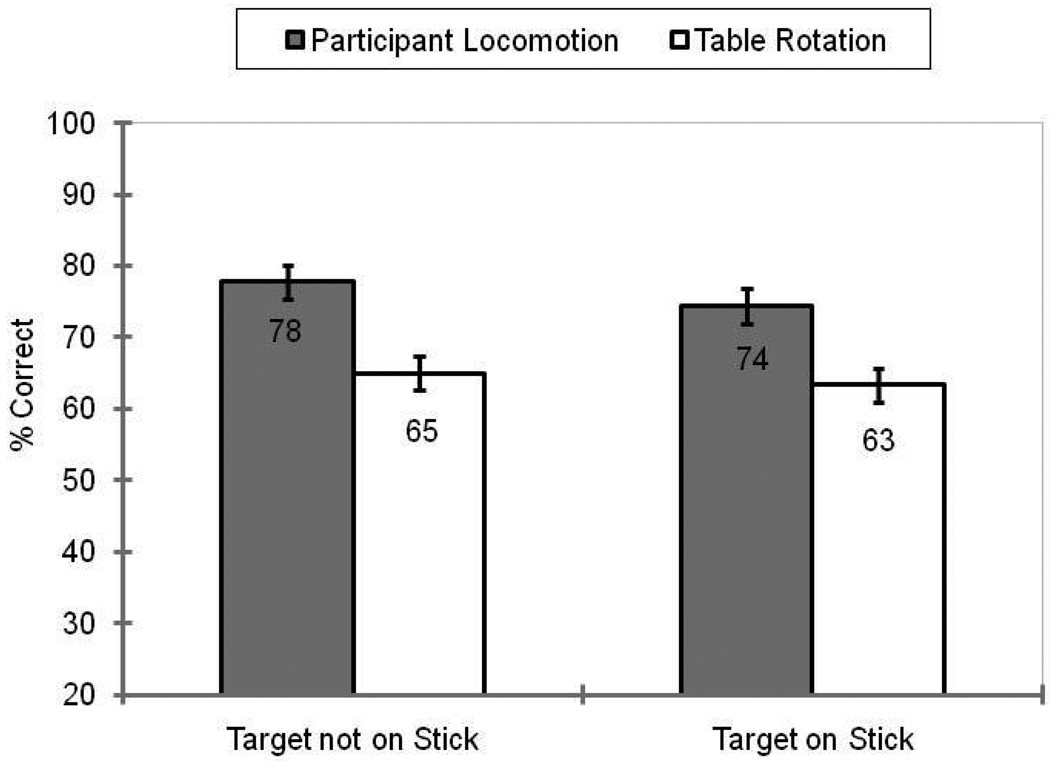

Mean percentage of correct judgments as a function of target object and cause of view change is plotted in Figure 4. The primary findings were as follows. For a target object on the stick, participants detected its position change more accurately when the novel view was caused by observer locomotion than when it was caused by table rotation. For a target object not on the stick, however, no advantage of observer locomotion was evident. Both findings were supported statistically.

Figure 4.

Correct percentage in detecting position change as a function of target object and cause of view change in Experiment 2. (Error bars are ±1 standard error of the mean, as estimated from the analysis of variance.)

Percentage of correct judgments was computed for each participant in each combination of target object and cause of view change, and analyzed in a repeated-measures analysis of variance (ANOVA) with target object and cause of view change as within-participant factors.

The main effect of target object was significant, F(1,23) = 61.07, p < .001, MSE = .026. The main effect of cause of view change was significant, F(1,23) = 6.41, p < .05, MSE = .014. The interaction between target object and cause of view change was significant, F(1,23) = 4.47, p < .05, MSE = .015. Specifically, for target objects on the stick, detection of position change was better after observer locomotion than after table rotation, t(23) = 3.33, p < .01; for target objects not on the stick, the simple effect of cause of view change was not significant, t(23) = 0.24, p > .05.
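For readers who want to check analyses of this kind, the sketch below shows one way to compute the statistics reported above for a 2 × 2 fully within-participant design. It relies on a standard identity rather than on the authors' actual analysis code: with two levels per factor, each effect's F(1, n − 1) equals the square of a one-sample t on a per-participant contrast score, and the effect's MSE equals the sample variance of that score when it is scaled as below. The function names and the input layout (one tuple of four cell proportions per participant) are assumptions; p values would additionally require the F distribution (e.g., SciPy's `scipy.stats.f.sf`).

```python
from math import sqrt
from statistics import mean, variance


def contrast_F(scores):
    """F(1, n - 1) and MSE for one within-participant contrast (F equals t squared)."""
    n = len(scores)
    mse = variance(scores)  # error term: sample variance of the contrast scores
    return n * mean(scores) ** 2 / mse, mse


def rm_anova_2x2(cells):
    """cells: one (y11, y12, y21, y22) tuple per participant. The first index is
    factor A (e.g., target object), the second is factor B (e.g., cause of view
    change); values are proportions correct."""
    a = [((y11 + y12) - (y21 + y22)) / 2 for y11, y12, y21, y22 in cells]
    b = [((y11 + y21) - (y12 + y22)) / 2 for y11, y12, y21, y22 in cells]
    ab = [(y11 - y12 - y21 + y22) / 2 for y11, y12, y21, y22 in cells]
    return {"A": contrast_F(a), "B": contrast_F(b), "AxB": contrast_F(ab)}


def paired_t(x, y):
    """Paired t statistic (df = n - 1), e.g., a simple effect of factor B."""
    d = [xi - yi for xi, yi in zip(x, y)]
    return mean(d) / (sqrt(variance(d)) / sqrt(len(d)))
```

With target object as factor A and cause of view change as factor B, the interaction asks whether the locomotion advantage differs between on-stick and off-stick targets, and the simple effect for on-stick targets is `paired_t` applied to those participants' locomotion and table rotation scores for on-stick targets.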

As in Experiment 1, the facilitative effect of observer locomotion was not observed when an object not on the stick was moved and the objects on the stick stayed stationary, thereby accurately indicating the original reference direction. A novel result is that the facilitative effect of observer locomotion was observed when one of the objects on the stick was moved. We also observed that position change detection was overall more accurate when an object not on the stick was moved than when an object on the stick was moved. Hence, all predictions discussed in the Introduction were confirmed.

The poorer detection of position change for target objects on the stick cannot be due to increased attention to the objects on the stick. If anything, increased attention should have made changes to those objects easier, not harder, to detect; for example, if the task were detection of a feature change (e.g., color) rather than a position change, feature changes should be easier to detect for the objects on the stick because of the increased attention they receive. However, it is possible that the stick in Experiments 1–2 did not prime a reference direction but instead highlighted the two objects on it, so that these two objects were used as landmarks. This possibility might explain the results if we assume that landmarks can be used to locate non-landmark objects and that people update their location with respect to the landmarks. When an object not on the stick moved (i.e., a non-landmark moved), the correct locations of the landmarks were presented explicitly, so the updated representations of the landmarks' locations provided no extra help in locating them; hence there was no facilitative effect of locomotion. When an object on the stick moved (i.e., a landmark moved), the updated location of that landmark could provide extra help in detecting and correcting the inaccuracy of the explicitly presented landmark. Moreover, when an object on the stick moved, an extra mechanism would be required to detect and correct this inaccuracy; hence detecting the movement of objects on the stick would be harder than detecting the movement of objects not on the stick. Experiment 3 was designed to rule out this possibility.

Experiment 3

In Experiment 3, two short sticks instead of one long stick were placed underneath two objects so that these two objects were highlighted (see Figure 5). The short sticks were oriented parallel to the viewing direction to avoid priming a reference direction along the imaginary line passing through the two sticks. If detection accuracy and the facilitative effect of locomotion were modulated by target object in Experiment 2 because the stick highlighted two landmarks rather than priming a reference direction, then the same results should be observed in this experiment.

Figure 5.

Experiment design in Experiment 3.

Method

Participants

Twelve university students (6 male and 6 female) participated in return for monetary compensation.

Materials, design, and procedure

The materials, design, and procedure were similar to those of Experiment 2 except that two rectangular sticks (4.8 cm by 12 cm and 4.8 cm by 16.8 cm) coated with phosphorescent paint replaced the stick used in Experiment 2. During the learning phase of each trial, two objects were placed on the sticks, one object per stick, and the two sticks were removed during the test phase, as in Experiments 1 and 2. The long axes of the two sticks were oriented parallel to the participants' viewing direction (as shown in Figure 5) to prevent participants from imagining the two sticks as a single stick.

Results and Discussion

Mean percentage of correct judgments as a function of target object and cause of view change is plotted in Figure 6. Percentage of correct judgments was computed for each participant in each combination of target object and cause of view change, and analyzed in a repeated-measures ANOVA with target object and cause of view change as within-participant factors.

Figure 6.

Correct percentage in detecting position change as a function of target object and cause of view change in Experiment 3. (Error bars are ±1 standard error of the mean, as estimated from the analysis of variance.)

The main effect of cause of view change was significant, F(1,11) = 23.25, p < .01, MSE = .007. The main effect of target object was not significant, F(1,11) < 1, p > .05, MSE = .008. The interaction between target object and cause of view change was not significant, F(1,11) < 1, p > .05, MSE = .007. Planned comparisons showed that performance was better in the observer locomotion condition than in the table rotation condition both for target objects on the sticks, t(11) = 3.25, p < .01, and for target objects not on the sticks, t(11) = 3.74, p < .01.

The facilitative effect of observer locomotion appeared for both target objects on and not on the sticks. Furthermore detection accuracy for target objects on and not on the sticks did not differ. These results indicate that the different detection accuracy and different facilitative effect of locomotion for target objects on and not on the stick in Experiments 1 and 2 did not occur because objects on the stick were used as landmarks to locate objects.

General Discussion

The aim of this project was to seek evidence that people update their orientation with respect to a fixed reference direction when they learn an array of objects and walk around it. We used the position change detection paradigm developed by Simons and Wang (1998; see also Wang & Simons, 1999). A reference direction was primed by placing two of the objects on a stick (Mou, Xiao, & McNamara, 2008). Position change detection at a novel view was compared between conditions in which the novel view was caused by observer locomotion and conditions in which it was caused by table rotation. There are three important findings. First, when an object not on the stick was moved and the objects on the stick stayed stationary in the test scene, the facilitative effect of observer locomotion was not observed. Second, when one of the two objects on the stick was moved in the test scene, the facilitative effect of locomotion on detection of position change appeared. Third, detection of position change was better when the target object had not been on the stick than when it had been on the stick, whether the novel view was caused by table rotation or by observer locomotion.

All these findings can be explained by the allocentric updating model discussed in the Introduction. According to this model, participants represented interobject vectors, including body-object vectors, and their own orientation in terms of the reference direction primed by the stick, and during locomotion they updated their orientation (i.e., allocentric heading; Klatzky, 1998) in terms of the same reference direction. The more accurately participants could identify the spatial reference direction in terms of which interobject vectors had been represented, the more accurately they would be able to locate objects (Mou, Fan, McNamara, & Owen, 2008; Mou, Zhang, & McNamara, 2009; Mou, Xiao, & McNamara, 2008). When an object not on the stick was moved and the objects on the stick stayed stationary in the test scene, the objects on the stick explicitly and accurately indicated the reference direction that had been selected at learning. The updated allocentric heading in the locomotion condition was therefore not needed to recover the reference direction in the test scene. Hence, detection of position change did not differ between the table rotation and observer locomotion conditions. When one of the two objects on the stick was moved in the test scene, the reference direction explicitly indicated by these objects was not accurate. Two sources of information can be used to detect and correct the inaccuracy of the explicitly indicated reference direction: visual input about interobject spatial relations in the test scene, and the allocentric heading updated during locomotion. The updated allocentric heading is available only in the observer locomotion condition, not in the table rotation condition. Hence, the updated allocentric heading could facilitate the detection of position change in the locomotion condition.
As discussed previously, the extra processes of detecting and correcting an inaccurate reference direction were required only when the target object was on the stick, because only then was the reference direction indicated in the test scene inaccurate. Because extra processes entail extra cost, detection of position change was harder for target objects on the stick than for target objects not on the stick.
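The geometric core of this account can be illustrated with a toy sketch. It is purely illustrative and is not the authors' model implementation: the class and method names, the angle conventions (degrees, counterclockwise positive, egocentric angle = allocentric angle minus heading), and the treatment of the reference direction as an undirected axis are all assumptions. It shows why only an updated heading gives an independent check on the axis implied by the two former stick objects at test.

```python
import math


def axis_angle(p, q):
    """Orientation in degrees [0, 180) of the undirected line through points p and q."""
    return math.degrees(math.atan2(q[1] - p[1], q[0] - p[0])) % 180


def axis_discrepancy(a, b):
    """Smallest angle in degrees [0, 90] between two undirected axes."""
    d = abs(a - b) % 180
    return min(d, 180 - d)


class AllocentricObserver:
    """Hypothetical toy: a reference axis is stored at learning, and the
    observer's heading is updated relative to it during locomotion."""

    def __init__(self, reference_axis_deg):
        # Egocentric orientation of the primed reference axis at learning.
        self.reference_axis = reference_axis_deg
        # Allocentric heading, measured from the learning orientation.
        self.heading = 0.0

    def locomote(self, turn_deg):
        # Self-motion updates heading; a table rotation would not call this.
        self.heading = (self.heading + turn_deg) % 360

    def predicted_reference_axis(self):
        # A fixed axis appears rotated by minus the observer's heading change.
        return (self.reference_axis - self.heading) % 180

    def reference_mismatch(self, obj_1, obj_2):
        """Angle between the axis through the two former stick objects at test
        and the axis predicted from the updated heading."""
        seen = axis_angle(obj_1, obj_2)
        return axis_discrepancy(seen, self.predicted_reference_axis())
```

In this toy, a nonzero mismatch after `locomote` flags that one of the former stick objects has moved. In the table rotation condition `locomote` is never called, so the predicted axis stays at its learning value and cannot separate a displaced stick object from the rotation of the whole array.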

The findings of this project pose a new challenge to all models that do not stipulate a fixed reference direction. According to the egocentric updating model proposed by Wang and Spelke (2002), participants represent egocentric body-object vectors, presumably in terms of their body orientation at learning. During locomotion to the test position, participants dynamically update these egocentric body-object vectors, presumably with respect to their current body orientation. This model should predict a facilitative effect of locomotion whether or not the target object is on the stick, a prediction that is inconsistent with the absence of the facilitative effect of locomotion for target objects not on the stick. Mou, Zhang, and McNamara (2009) also reported that the facilitative effect of locomotion disappeared when the learning viewpoint was indicated in the test scene, indicating that spatial updating uses a fixed reference direction rather than a dynamically changing body orientation. However, as discussed in the Introduction, there is one critical concern with using the disappearance of the facilitative effect of locomotion as evidence against the updating of egocentric body-object vectors during locomotion. One may argue that different representations and processes operate in the two conditions: participants might have allocentric representations that facilitated detection in the table rotation condition when the reference direction was indicated, while also updating egocentric body-object vectors during locomotion. This possibility is undermined by another finding of the current study. If participants updated egocentric body-object vectors, then objects on and not on the stick should have been updated equally well. However, the current study showed that detection of position change was better for targets not on the stick than for targets on the stick, even in the observer locomotion condition.

Other models of spatial memory stipulate that fixed spatial reference directions are used in spatial memory and claim that these reference directions are primarily egocentric (e.g., Greenauer & Waller, 2008; Waller, Lippa, & Richardson, 2008). Waller and his colleagues proposed that spatial reference directions are primarily determined by the body, especially the viewing direction (Greenauer & Waller, 2008) or the body-to-array axis (Waller, Lippa, & Richardson, 2008). Because this model makes use of fixed reference axes, it makes the same predictions as our allocentric updating model for the present experiments. Whereas Waller and colleagues treat the nature of the cues used to establish reference directions in memory (e.g., egocentric vs. allocentric) as diagnostic of whether reference directions are egocentric or allocentric, we prefer the criterion of whether the reference direction, once selected, is stationary in the environment and independent of participants' orientation during locomotion, or instead changes with participants' orientation. Our preference is based on the observation that judgments of relative direction and visual recognition are reference-direction dependent; that is, performance is better when test trials probe the spatial relations directly represented with respect to the reference direction, whether that reference direction was selected on the basis of egocentric or allocentric cues (e.g., Greenauer & Waller, 2010; Li, Mou, & McNamara, 2009; Mou, Liu, McNamara, & Owen, 2009). The findings of the current study and those of Mou, Zhang, and McNamara (2009) provide additional support for our preference. In the current study, the reference direction was not parallel to the learning view, whereas in Mou, Zhang, and McNamara (2009) it was. Nevertheless, in both studies the appearance or disappearance of the facilitative effect of locomotion was similarly modulated by whether the reference direction was accurately presented at test.

Experiment 2 showed that detection of position change was better for target objects not on the reference direction than for target objects on the reference direction, even in the observer locomotion condition. According to the allocentric updating model, participants updated their orientation with respect to the reference direction. Why, then, would change detection for objects on the reference direction be inferior to change detection for objects not on the reference direction in the locomotion condition? One possibility is that the visual system overrides the locomotion system when the two systems produce conflicting information, so that participants relied more on the visually indicated reference direction than on the reference direction indicated by the updated allocentric heading. However, this explanation cannot account for the facilitative effect of locomotion for target objects on the stick. Another possibility is that updating of allocentric heading was imprecise, so that the updated heading could reveal the inaccuracy of the visually indicated reference direction only partially. This explanation is consistent with both findings: there was a facilitative effect of locomotion when the target objects were on the stick, yet detection of position change was still better for target objects not on the reference direction than for those on it, even in the observer locomotion condition. Future studies are required to further understand the roles of different modalities in recovering the reference direction.

Experiment 3 showed that when the sticks did not prime a non-egocentric spatial reference direction, the facilitative effect of locomotion appeared for target objects both on and not on the sticks. This result indicates that the spatial reference direction was not explicitly indicated in the test scene. It is plausible that people establish a reference direction parallel to their viewing direction when there is no other salient direction (Greenauer & Waller, 2008; Li, Mou, & McNamara, 2009; Mou, Zhang, & McNamara, 2009). If so, why was this reference direction, parallel to the viewing direction, not explicitly indicated by two objects in the test scene in the table rotation condition? In Experiments 1–2, two objects were deliberately placed on the stick in each trial, whereas in Experiment 3 no two objects were deliberately aligned along the viewing direction. Incidental alignment of objects in the layout might not have been sufficiently salient or reliable as a cue to the spatial reference direction.

In this study we placed a stick underneath two objects to prime a non-egocentric reference direction so that we could easily manipulate whether the target object was on the reference direction. We do not propose that a non-egocentric reference direction is a "default" or ubiquitous means by which spatial representations are organized and spatial updating occurs. People can also establish a fixed reference direction aligned with their learning orientation, with respect to which spatial representations are organized and spatial updating occurs, as shown in Experiment 3 of the current study and in Mou, Zhang, and McNamara (2009).

It is not clear whether a fixed reference direction is established at learning and then used to update one's orientation in other spatial tasks that do not require visual identification of the reference direction. Wang and Spelke (2000) reported that the consistency of pointing across objects was disrupted after disorientation and concluded that there is no allocentric representation of the object array. It remains possible, however, that recovery of the fixed reference direction is more disrupted after disorientation than after a brief rotation, and that this produces the disorientation effect. This possibility is being investigated in our lab. Many studies have shown that people can walk to a previously viewed object, directly or indirectly, without vision, and can return to the origin after walking a multi-leg path without vision (e.g., Loomis et al., 1993; Philbeck & Loomis, 1997; see Loomis & Philbeck, 2008, for a review). It is likely that egocentric body-target vectors are updated in these tasks. However, it is also plausible that allocentric body-target vectors and allocentric heading are updated with respect to a fixed reference direction. One possibility is that participants update their allocentric heading and body-target or body-origin vectors with respect to a fixed reference direction determined by the first leg of the walking path. Systematic studies are required to test this possibility.

According to the allocentric updating model, the spatial reference directions used in updating one's orientation are the same as those used in representing objects' locations at learning. Numerous studies have investigated the cues that affect the selection of reference directions. Here we discuss only two cues relevant to the position change detection paradigm. The first is the walking path between the chairs in the current study. There is no evidence that participants used this walking path to establish a reference direction; we speculate that visual cues (e.g., the stick) are more salient than the walking path. The second is the external card in Burgess et al. (2004). In that study, detection of position change was facilitated by the stable relation between the array of objects and the external card from learning to test. This facilitative effect was additive to the facilitative effect of locomotion, which indicates that the external card was not used to establish a reference direction. Rather, it was more likely used as a reference object: the allocentric vector between the target object and the card can facilitate detection of position change when the card is stable relative to the object array (Mou, Xiao, & McNamara, 2008).

In the current study, as in previous studies (Burgess, Spiers, & Paleologou, 2004; Mou, Zhang, & McNamara, 2009; Simons & Wang, 1998; Wang & Simons, 1999), the roles of spatial updating and reference direction in change detection were inferred from the accuracy of position change detection. Response latency was not collected because it was difficult to record precisely. According to the allocentric updating model, the same representations and mechanisms underlie detection of position change in the table rotation and observer locomotion conditions, except that an extra process of correcting the inaccuracy of the reference direction is involved in the observer locomotion condition. Hence, if response latencies could be collected, they should show a pattern similar to the accuracy pattern observed in Experiment 2.

In conclusion, this project demonstrated that the facilitative effect of observer locomotion on position change detection at a novel view appeared when the spatial reference direction was not accurately indicated in the test scene and disappeared when it was accurately indicated. Detection of position change was worse when the spatial reference direction was not accurately indicated than when it was accurately indicated. These results suggest that people not only represent objects' locations with respect to a fixed reference direction but also represent and update their own orientation according to the same reference direction.

Acknowledgements

Preparation of this paper and the research reported in it were supported in part by a grant from the Natural Sciences and Engineering Research Council of Canada and a grant from the National Natural Science Foundation of China (30770709) to WM and National Institute of Mental Health Grant 2-R01-MH57868 to TPM. We are grateful to Dr. Andrew Hollingworth and three anonymous reviewers for their helpful comments on the previous version of this manuscript.


References

- Burgess N, Spiers HJ, Paleologou E. Orientational manoeuvres in the dark: dissociating allocentric and egocentric influences on spatial memory. Cognition. 2004;94:149–166. doi: 10.1016/j.cognition.2004.01.001.

- Carlson LA, Hill PL. Processing the presence, placement and properties of a distractor in spatial language tasks. Memory & Cognition. 2008;36:240–255. doi: 10.3758/mc.36.2.240.

- Christou CG, Tjan BS, Bülthoff HH. Extrinsic cues aid shape recognition from novel viewpoints. Journal of Vision. 2003;3:183–198. doi: 10.1167/3.3.1.

- Gallistel CR. The organization of learning. Cambridge, MA: Bradford Books/MIT Press; 1990.

- Greenauer N, Waller D. Intrinsic array structure is neither necessary nor sufficient for nonegocentric coding of spatial layouts. Psychonomic Bulletin & Review. 2008;15:1015–1021. doi: 10.3758/PBR.15.5.1015.

- Greenauer N, Waller D. Micro- and macro-reference frames: Specifying the relations between spatial categories in memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2010. In press. doi: 10.1037/a0019647.

- Hodgson E, Waller D. Lack of set size effects in spatial updating: Evidence for offline updating. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2006;32:854–866. doi: 10.1037/0278-7393.32.4.854.

- Holmes MC, Sholl MJ. Allocentric coding of object-to-object relations in overlearned and novel environments. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2005;31:1069–1087. doi: 10.1037/0278-7393.31.5.1069.

- Kelly JW, McNamara TP, Bodenheimer B, Carr TH, Rieser JJ. The shape of human navigation: How environmental geometry is used in maintenance of spatial orientation. Cognition. 2008;109:281–286. doi: 10.1016/j.cognition.2008.09.001.

- Klatzky RL. Allocentric and egocentric spatial representations: Definitions, distinctions, and interconnections. In: Freksa C, Habel C, Wender KF, editors. Spatial cognition: An interdisciplinary approach to representing and processing spatial knowledge. Berlin: Springer-Verlag; 1998. pp. 1–17.

- Li X, Mou W, McNamara TP. Intrinsic frames of reference and egocentric viewpoints in recognizing spatial structure of a shape. Psychonomic Bulletin & Review. 2009;16:518–523. doi: 10.3758/PBR.16.3.518.

- Loomis JM, Klatzky RL, Golledge RG, Cicinelli JG, Pellegrino JW, Fry PA. Nonvisual navigation by blind and sighted: Assessment of path integration ability. Journal of Experimental Psychology: General. 1993;122:73–91. doi: 10.1037//0096-3445.122.1.73.

- Loomis JM, Philbeck JW. Measuring perception with spatial updating and action. In: Klatzky RL, Behrmann M, MacWhinney B, editors. Embodiment, ego-space, and action. Mahwah, NJ: Erlbaum; 2008. pp. 1–43.

- Mou W, Fan Y, McNamara TP, Owen CB. Intrinsic frames of reference and egocentric viewpoints in scene recognition. Cognition. 2008;106:750–769. doi: 10.1016/j.cognition.2007.04.009.

- Mou W, Liu X, McNamara TP, Owen CB. Layout geometry in encoding and retrieval of spatial memory. Journal of Experimental Psychology: Human Perception and Performance. 2009;35:83–93. doi: 10.1037/0096-1523.35.1.83.

- Mou W, McNamara TP. Intrinsic frames of reference in spatial memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2002;28:162–170. doi: 10.1037/0278-7393.28.1.162.

- Mou W, McNamara TP, Valiquette CM, Rump B. Egocentric and allocentric updating of spatial memories. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2004;30:142–157. doi: 10.1037/0278-7393.30.1.142.

- Mou W, Xiao C, McNamara TP. Reference directions and reference objects in spatial memory of a briefly viewed layout. Cognition. 2008;108:136–154. doi: 10.1016/j.cognition.2008.02.004.

- Mou W, Zhang H, McNamara TP. Change detection of an object's locations relies on identifying spatial reference directions. Cognition. 2009;111:175–186. doi: 10.1016/j.cognition.2009.01.007.

- O'Keefe J, Nadel L. The Hippocampus As a Cognitive Map. Oxford: Oxford University Press; 1978.

- Philbeck JW, Loomis JM. Comparison of two indicators of perceived egocentric distance under full-cue and reduced-cue conditions. Journal of Experimental Psychology: Human Perception and Performance. 1997;23:72–85. doi: 10.1037//0096-1523.23.1.72.

- Rieser JJ, Pick HL, Ashmead DH, Garing AE. Calibration of human locomotion and models of perceptual-motor organization. Journal of Experimental Psychology: Human Perception and Performance. 1995;21:480–497. doi: 10.1037//0096-1523.21.3.480.

- Rock I. Orientation and form. New York: Academic Press; 1973.

- Sargent J, Dopkins S, Philbeck J, Chichka D. Chunking in spatial memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2010;36:576–589. doi: 10.1037/a0017528.

- Shelton AL, McNamara TP. Systems of spatial reference in human memory. Cognitive Psychology. 2001;43:274–310. doi: 10.1006/cogp.2001.0758.

- Simons DJ, Wang RF. Perceiving real-world viewpoint changes. Psychological Science. 1998;9:315–320.

- Spelke ES. Developmental neuroimaging: A developmental psychologist looks ahead. Developmental Science. 2002;5:392–396.

- Taube JS, Muller RU, Ranck JB Jr. Head-direction cells recorded from the postsubiculum in freely moving rats. I. Description and quantitative analysis. Journal of Neuroscience. 1990;10:420–435. doi: 10.1523/JNEUROSCI.10-02-00420.1990.

- Tolman EC. Cognitive maps in rats and men. Psychological Review. 1948;55:189–208. doi: 10.1037/h0061626.

- Waller D, Lippa Y, Richardson A. Isolating observer-based reference directions in human spatial memory: Head, body, and the self-to-array axis. Cognition. 2008;106:157–183. doi: 10.1016/j.cognition.2007.01.002.

- Wang RF, Simons DJ. Active and passive scene recognition across views. Cognition. 1999;70:191–210. doi: 10.1016/s0010-0277(99)00012-8.

- Wang RF, Spelke ES. Updating egocentric representations in human navigation. Cognition. 2000;77:215–250. doi: 10.1016/s0010-0277(00)00105-0.

- Wang RF, Spelke ES. Human Spatial Representation: Insights from Animals. Trends in Cognitive Sciences. 2002;6:376–382. doi: 10.1016/s1364-6613(02)01961-7.