Abstract

To identify neural regions that automatically respond to linguistically structured, but meaningless manual gestures, 14 deaf native users of American Sign Language (ASL) and 14 hearing non-signers passively viewed pseudosigns (possible but non-existent ASL signs) and non-iconic ASL signs, in addition to a fixation baseline. For the contrast between pseudosigns and baseline, greater activation was observed in left posterior superior temporal sulcus (STS), but not in left inferior frontal gyrus (BA 44/45), for deaf signers compared to hearing non-signers, based on VOI analyses. We hypothesize that left STS is more engaged for signers because this region becomes tuned to human body movements that conform to the phonological constraints of sign language. For deaf signers, the contrast between pseudosigns and known ASL signs revealed increased activation for pseudosigns in left posterior superior temporal gyrus (STG) and in left inferior frontal cortex, but no regions were found to be more engaged for known signs than for pseudosigns. This contrast revealed no significant differences in activation for hearing non-signers. We hypothesize that left STG is involved in recognizing linguistic phonetic units within a dynamic visual or auditory signal, such that less familiar structural combinations produce increased neural activation in this region for both pseudosigns and pseudowords.

Keywords: sign language, deaf signers, fMRI, pseudowords

Introduction

When most hearing people watch sign language, they see an uninterpretable stream of rapid hand and arm movements. In contrast, deaf signers quickly and automatically extract meaning from this incoming visual signal. Recognizing linguistic elements within the visual sign stream is automatic and unconscious, as evidenced by Stroop effects for signs (Vaid & Corina, 1989). In addition, just as speakers become perceptually attuned to the phonological structure of their language (e.g., Werker & Tees, 1984), signers also develop perceptual sensitivities to phonologically contrastive linguistic categories, as evidenced by categorical perception effects for sign language (Baker, Idsardi, Golinkoff, & Petitto, 2005; Emmorey, McCullough, & Brentari, 2003). For sign language, phonological categories are grounded in manual articulation and involve contrasts in hand configuration, movement, and location on the body (see Brentari, 1998, and Sandler & Lillo-Martin, 2006, for reviews of sign language phonology). Sensitivity to these phonological categories and automatic access to a sign-based lexicon are in part what allows deaf signers to extract meaning from the hand and arm movements that constitute sign production.

In this study, we investigated whether the perception of linguistically structured, but meaningless pseudosigns engages distinct neural regions for deaf signers compared to hearing non-signers. We hypothesized that pseudosigns, i.e., possible but non-existent lexical forms in American Sign Language (ASL), would be recognized and parsed as linguistic only by deaf ASL signers. We therefore predicted that pseudosigns would engage classic language regions, i.e., the left inferior frontal gyrus (Broca’s area) and left posterior superior temporal cortex (Wernicke’s area), to a greater extent for deaf signers than for hearing non-signers.

In a previous fMRI study, MacSweeney et al. (2004) presented hearing non-signers and deaf and hearing users of British Sign Language (BSL) with meaningless gestural strings from Tic Tac, a gesture code used in racecourse betting that was unknown to their study participants. MacSweeney et al. (2004) reported that the perception of Tic Tac gesture strings engaged the left inferior frontal gyrus and left posterior superior temporal sulcus for all groups, in comparison to a low-level baseline. However, the neural responses from the signing and non-signing groups were not directly contrasted, and thus it is not clear whether signers may have recruited these perisylvian language regions to a greater extent than non-signers. In addition, participants in this study were asked to guess which of the Tic Tac “sentences” did not make sense, and thus it is possible that all groups may have attempted to find meaning in the gesture strings through language-related processes (e.g., endeavoring to map form to meaning). In addition, Buchsbaum, Pickell, Love, Hatrak, Bellugi, and Hickok (2005) found that left inferior frontal gyrus (BA 44/6) and superior and middle temporal gyri were activated during both the perception and covert rehearsal of ASL pseudosigns. However, hearing non-signers were not included in their study, and no comparison was made between pseudosigns and known signs. In the experiment reported here, both signers and non-signers were asked to passively view pseudosigns and were not given a task to perform. This design allows us to better assess whether perisylvian language cortices are automatically and differentially engaged for signers in response to linguistically structured gestures.

In addition, we compared the neural response to pseudosigns with the response to familiar ASL signs. A recent meta-analysis of neuroimaging studies that directly contrasted spoken words and pseudowords found elevated neural responses to pseudowords in regions both anterior and posterior to Heschl’s Gyrus (in planum polare and planum temporale, respectively) extending into adjacent regions of the superior temporal gyrus (Davis & Gaskell, 2009). In addition, spoken pseudowords engaged left inferior frontal gyrus (opercularis) and premotor regions to a greater extent than known words. Davis and Gaskell (2009) suggest that these regions constitute putative neural correlates of phonological-encoding processes that are challenged during the initial processing of unfamiliar spoken words. Preferential activation for known words compared to pseudowords was observed in the posterior middle temporal gyrus and in the supramarginal gyrus. Davis and Gaskell (2009) suggest that activation in these regions represents higher level lexical processes, such as retrieval of stored phonological forms and initial access to word meanings.

The contrast between neural regions that are more responsive to pseudosigns than to known signs will help identify cortical regions that are preferentially engaged during phonetic and phonological processing of sign language. Of particular interest is whether pseudosigns, like pseudowords, recruit regions within superior temporal cortex adjacent to auditory cortex. If so, it would support the hypothesis that these neural regions are modality independent and respond preferentially to linguistically structured input (cf. Petitto et al., 2000). Phonetic processing for sign language involves the parsing and recognition of manual-based units: hand configurations (both dominant and non-dominant hands), movement patterns (path movements of the arm/hand, as well as “hand internal” movements of the fingers), and location of the arm/hand with respect to the face and body (the place of articulation for a sign). These phonological units are automatically identified during on-line sign recognition, which gives rise to phonological priming effects (Carreiras, Gutiérrez-Sigut, Baquero, & Corina, 2008; Corina & Emmorey, 1993), as well as picture-sign interference effects (Baus, Gutiérrez-Sigut, Quer, & Carreiras, 2008; Corina & Knapp, 2006). Furthermore, pseudosigns are processed in a manner parallel to real signs, such that they are sometimes misperceived as signs (Orfanidou, Adam, McQueen, & Morgan, 2009) and are segmented following universal and modality-independent constraints on what constitutes a possible word (Orfanidou, Adam, Morgan, & McQueen, 2010). Thus, both sign and speech perception involve a surprising number of shared psycholinguistic processes, and we may find that these processes also share the same neural substrate.

On the other hand, we may find that phonetic processing of pseudosigns engages distinct neural substrates due to modality-specific differences in perceptual processing. For example, spoken pseudowords may preferentially engage left superior temporal gyrus because speech involves fast temporal changes, whereas pseudosigns might preferentially engage left inferior parietal cortex because signing involves slower temporal changes and a visual-manual signal.

For this study, pseudosigns were created by substituting one or more phonological parameters (e.g., handshape, location, or movement) within an existing ASL sign to create a possible but nonexistent sign and by borrowing real signs from British Sign Language that did not correspond to signs in ASL. We also selected ASL verbs that were non-iconic and rated as having little or no meaning by a separate group of hearing participants. Fourteen deaf native signers and 14 native English speakers were instructed to watch the video clips attentively without responding, while undergoing functional magnetic resonance imaging. A fixation cross served as a low level baseline. Prior to scanning, participants were told that they would answer some (unspecified) questions about the stimuli at the end of the experiment. After scanning, participants were given a recognition test to evaluate their attentiveness during the period of image acquisition. The results for passively viewing non-iconic ASL verbs versus baseline for each participant group are reported in Emmorey, Xu, Gannon, Goldin-Meadow, and Braun (2010) and are included in a supplemental table and figure (with FDR correction). Here, we report the results for the pseudosign condition included in that study.

Results

For deaf ASL signers, the contrast between passive viewing of pseudosigns and fixation baseline revealed significant activation in the inferior frontal gyrus (IFG) bilaterally and in left superior temporal gyrus (STG), extending into the middle temporal gyrus (MTG) and inferior parietal lobule (see Table 1A). For hearing non-signers, this contrast revealed activation within left IFG, but no significant cluster of activation within superior temporal cortex was observed. Rather, neural activation was observed bilaterally within the superior parietal lobule (BA 7) and inferior occipital cortex (BA 18/19) which extended into MTG. Figure 1S in the on-line supplementary materials illustrates the fixation baseline contrasts for each group.

Table 1.

Brain regions (cluster maxima) activated by pseudosigns in contrast to fixation or to ASL signs. Maxima coordinates are in MNI space.

| Region | BA | Side | Deaf: t-value | Deaf: x | Deaf: y | Deaf: z | Deaf: cluster size | Hearing: t-value | Hearing: x | Hearing: y | Hearing: z | Hearing: cluster size |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A. Pseudosigns vs Fixation | | | | | | | | | | | | |
| Superior medial frontal gyrus | | R | 7.39 | 9 | 63 | 27 | 11 | | | | | |
| Inferior frontal gyrus | 44/45 | L | 4.14 | −45 | 12 | 21 | 172 | 6.35 | −57 | 21 | 27 | 460 |
| | | R | 3.72 | 42 | 9 | 33 | 35 | | | | | |
| | 47 | L | 4.52 | −45 | 33 | −9 | 36 | | | | | |
| Precentral gyrus | 6 | L | 3.7 | −63 | 3 | 36 | 172 | 5.11 | −51 | 3 | 39 | 460 |
| Parahippocampal gyrus | | L | 4.70 | −18 | −33 | −9 | 208 | | | | | |
| | | R | 4.17 | 18 | −30 | −9 | 78 | | | | | |
| Superior temporal gyrus | 22/42 | L | 5.78 | −51 | −42 | 12 | 2111 | | | | | |
| Middle temporal gyrus | 21 | L | 6.96 | −54 | −39 | −3 | 2111 | | | | | |
| | | R | 6.13 | 45 | −36 | −6 | 1692 | | | | | |
| Inferior temporal gyrus | 20 | L | 3.95 | −30 | −3 | −33 | 13 | | | | | |
| Fusiform/ITG/IOG | 37/20/19 | L | 10.04 | −42 | −72 | −12 | 2111 | 13.96 | −51 | −75 | −9 | 1436 |
| | | R | 9.83 | 51 | −69 | −6 | 1692 | 11.83 | 48 | −75 | −6 | 1075 |
| Inferior parietal lobule | 40 | L | 4.74 | −54 | −41 | 26 | 2111 | 3.88 | −57 | −27 | 39 | 564 |
| Superior parietal lobule | 7 | L | | | | | | 7.06 | −33 | −54 | 63 | 564 |
| | | R | | | | | | 5.64 | 30 | −54 | 69 | 122 |
| Inferior occipital gyrus | 18/19 | L | | | | | | 10.70 | −27 | −93 | −3 | 1436 |
| | | R | | | | | | 9.44 | 39 | −90 | −9 | 1075 |
| B. Pseudosigns vs ASL signs | | | | | | | | | | | | |
| Inferior frontal gyrus | 44 | L | 4.41 | −42 | 12 | 21 | 189 | No significant differences in activation | | | | |
| | | R | 3.58 | 45 | 15 | 33 | 46 | | | | | |
| | 47 | L | 3.48 | −51 | 30 | −9 | 39 | | | | | |
| Precentral gyrus | 6 | L | 3.02 | −36 | −42 | 42 | 10 | | | | | |
| Superior temporal gyrus | 22/42 | L | 3.59 | −54 | −33 | 11 | 1401 | | | | | |
| Middle temporal gyrus | 21 | L | 3.68 | −63 | −32 | 4 | 1401 | | | | | |
| | | R | 3.46 | 63 | −48 | −3 | 571 | | | | | |
| Fusiform/ITG/IOG | 37/20/19 | L | 4.3 | −42 | −66 | −13 | 1401 | | | | | |
| | | R | 4.58 | 33 | −93 | −6 | 24 | | | | | |

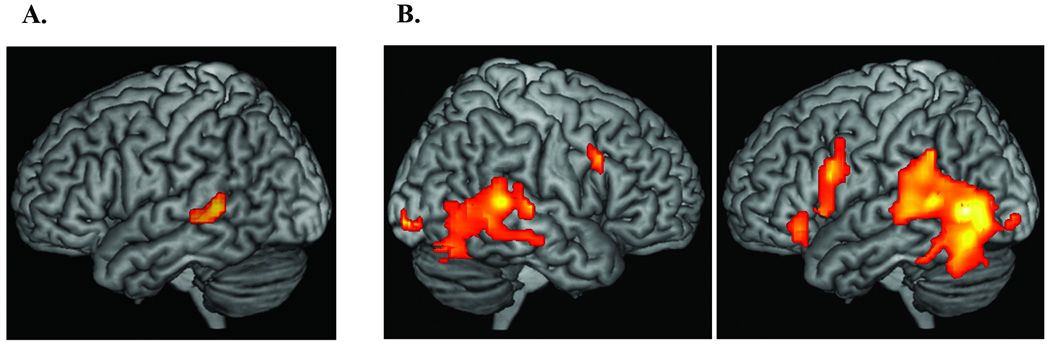

The whole brain analysis did not reveal a significant difference between the deaf and hearing groups for the pseudosign contrasts. However, because we were specifically interested in whether classic anterior (left inferior frontal gyrus, BA 44 and BA 45) and posterior (left superior temporal gyrus and superior temporal sulcus) perisylvian language areas were more engaged for deaf signers, we conducted a VOI analysis targeting these two regions of interest (defined anatomically and functionally, see Methods). No significant group differences in activation were observed within left BA 44 or BA 45 (or when the two regions were combined). However, as shown in Figure 1A, the superior bank of the left posterior superior temporal sulcus was more engaged for deaf signers than for hearing non-signers when viewing pseudosigns versus fixation.

Figure 1.

A) Illustration of the VOI analysis showing greater activation in left STS for deaf signers compared to hearing non-signers for the contrast pseudosigns minus fixation baseline (peak coordinates: −57, −30, −3). The statistical map was rendered on a single subject T1 image in MNI space using MRIcron. B) Illustration of brain regions that were more active for pseudosigns than ASL signs for the deaf participants. The statistical map is from a one sample t test of pseudosigns minus ASL signs and was rendered on a single subject T1 image in MNI space using MRIcron.

Table 1B lists the anatomical regions that were more engaged during the perception of pseudosigns compared to familiar ASL signs for deaf signers. Passive viewing of pseudosigns resulted in greater activation in inferior frontal cortex, left STG extending into the middle temporal gyrus, and bilateral inferior temporal cortex (see Figure 1B). No regions were more active for known signs compared to pseudosigns. For the hearing non-signers, the contrast between viewing pseudosigns and real ASL signs (both meaningless for these participants) showed no differences in activation.

Discussion

When deaf signers passively viewed linguistically structured, but meaningless gestures, greater activation was observed within left superior temporal cortex in contrast to hearing non-signers with the VOI analysis (Figure 1A) and in contrast to viewing familiar ASL signs (Figure 1B). There were similarities in the neural systems engaged when both signers and non-signers viewed meaningless gestures, replicating MacSweeney et al. (2004); in fact, the whole brain analysis revealed no regions of activation that were greater for deaf signers than for hearing non-signers. However, our targeted VOI analyses of the classic frontal and posterior language regions revealed greater activation for signers than for non-signers within left superior temporal sulcus, but not within left inferior frontal gyrus (BA 44 or BA 45). We may not have observed greater activation for signers in left IFG for the pseudosign vs. baseline contrast because of the multifunctionality of this region. Specifically, left IFG is argued to function as part of the human mirror neuron system, responding to observed hand and arm actions (see Rizzolatti and Sinigaglia, 2008, for review). Further, both Emmorey et al. (2010) and Corina et al. (2007) report greater activation in left IFG for hearing non-signers than for deaf signers when viewing meaningful human actions.

We hypothesize that increased left posterior STS activation (see Figure 1A) reflects heightened sensitivity to body movements that conform to the phonotactics and phonological structure of ASL. In hearing non-signers, posterior STS preferentially responds to point-light displays of biological motion (e.g., Grossman & Blake, 2002), and indeed compared to baseline fixation, viewing pseudosigns and real signs resulted in activation that extended into posterior STS for the hearing non-signers in this study (see Figure 1S in the supplementary materials). For deaf signers, this region may become tuned to respond strongly to human hand and arm movements that conform to the phonological and prosodic constraints of sign language. Specifically, dynamic movements (single path movements or changes in hand orientation) are critical to identifying syllabic structure in sign languages (Brentari, 1998), and movement also constrains the internal structure of signs (Perlmutter, 1992). Left STS may be significantly more active for deaf signers than for hearing non-signers because neurons in this region become particularly receptive to body movements that are linguistically structured and constrained. Right STS may also be engaged in sign-based phonological processing but to a lesser extent (activation in right MTG extended into posterior right STS for the pseudosign versus ASL sign contrast; see Figure 1B). An intriguing possibility is that left STS subserves categorical and combinatorial processing of sublexical sign structure, while right STS subserves more gradient phonological processes (see Hickok and Poeppel, 2007, for a similar suggestion for speech processing).

For deaf signers, the contrast between pseudosigns and actual ASL signs revealed several regions of increased activation for pseudosigns, while this contrast revealed no difference in neural activity for hearing non-signers. Specifically, elevated neural responses were observed for pseudosigns in left inferior frontal cortex (BA 44 and 47) and in posterior STG (see Figure 1B), replicating what has been found for spoken language (Davis & Gaskell, 2009). Increased activation within inferior frontal cortex may reflect effortful lexical search processes (BA 47) or processes that map phonological representations to the articulators (BA 44). For spoken language, posterior STG is generally hypothesized to underlie the processing of the sound structure of human speech (e.g., Davis & Johnsrude, 2003) and has been found to be more active when listening to spoken pseudowords than to known words (e.g., Majerus et al., 2005). Despite the fact that this region is adjacent to primary auditory cortex (and quite removed from primary visual cortex), our results suggest that posterior STG may nonetheless be involved in sub-lexical processing of sign language. We speculate that this is a modality-independent region that is crucially involved in recognizing linguistic phonetic units within a dynamic signal (whether visual or auditory), such that less familiar structural combinations produce increased neural activation. However, further research is needed to identify precisely the nature of the structural analyses and/or segmentation processes that are associated with posterior STG.

Interestingly, we did not observe increased activation within anterior STG for pseudosigns compared to known signs, in contrast to results from spoken language. This finding is consistent with Davis and Gaskell’s (2009) hypothesis that the elevated response to pseudowords within anterior STG reflects the maintenance and processing of echoic representations of speech (e.g., Buchsbaum, Olsen, Koch, & Berman, 2005). The increased neural response to pseudowords but not to pseudosigns in the planum polare (anterior to Heschl’s Gyrus) may thus reflect modality-specific processing of auditory language input.

Finally, we found no regions that were more engaged for familiar ASL signs compared to pseudosigns. In contrast, MacSweeney et al. (2004) reported several regions that were more engaged for BSL sentences than for nonsense gesture strings (Tic Tac): left posterior STS, supramarginal gyrus, and inferior temporal cortex. The BSL/Tic Tac contrast differs in part from the ASL sign/pseudosign contrast because the Tic Tac gestures were much less phonologically rich than the BSL signs, involving only a few unmarked handshapes, locations, and movements. The pseudosign and sign stimuli used in our study had very similar phonological structure (both contained marked and unmarked handshapes and a variety of body locations and movement patterns). Based on the meta-analysis of spoken language by Davis and Gaskell (2009), we had anticipated that familiar ASL signs would engage the middle temporal gyrus bilaterally and/or the left inferior parietal lobule to a greater extent than pseudosigns, reflecting access to stored phonological and semantic representations. One possible explanation is that our pseudosigns may have partially activated phonological representations and hence lexical-semantic representations as well. Such partial lexical-semantic activation would reduce the difference in neural activity between known signs and pseudosigns. Prabhakaran et al. (2006) also failed to find regions of increased activation for spoken words compared to pseudowords in an fMRI study involving lexical decision, and they offer a similar explanation. Lexical-semantic information is also likely represented in widely distributed networks (e.g., Damasio, 1989), which could further explain the lack of significant difference for the known signs versus pseudosigns contrast.

In sum, our results support the hypothesis that left posterior superior temporal cortex is automatically engaged when deaf signers view linguistically structured manual gestures. We hypothesize that for deaf signers, the left posterior STS may become tuned to the specific hand and arm motions that constitute potential lexical forms in sign language. Furthermore, perceiving language-like gestures and perceiving sound patterns both require an initial sublexical phonetic analysis of the incoming visual or auditory signal. Our findings are consistent with the view that posterior STG is involved in such modality-independent sublexical processing.

Methods

Participants

The 14 deaf signers (7 males) and 14 hearing non-signers (6 males) were the same as those in Emmorey et al. (2010). All participants were right-handed, and all had attended college. The deaf signers (mean age = 22.3 years) were all born into signing families, were exposed to ASL from birth, and reported a hearing loss of ≥ 70 dB. The hearing non-signers (mean age = 24.3 years) reported normal hearing and no knowledge of a signed language.

Stimuli

A deaf actress (a native ASL signer) was filmed producing 108 ASL verbs and 93 pseudosigns. All pseudosigns conformed to the phonotactic constraints of ASL and were possible but non-occurring signs. The pseudosigns were practiced and produced as fluently as the ASL signs. In order to assess the meaningfulness of the stimuli, the ASL signs and pseudosigns (along with pantomimes and emblematic gestures) were presented to a separate group of 22 deaf signers and 38 hearing non-signers. These participants were asked to rate each video clip for meaning on a scale of 0 – 3, where 0 = no meaning, 1 = weak meaning, 2 = moderate or fairly clear meaning, and 3 = absolute strong/direct meaning. The participants were also asked to provide a brief description of meaning if they rated a video clip above 0.

Based on this norming study, 60 non-iconic ASL signs and 60 pseudosigns were selected for the experiment. The 60 ASL signs were rated as meaningful (a rating of 2 or 3) by 98.9% of the deaf participants and were rated as having weak or no meaning (a rating of 0 or 1) by 91.7% of the hearing participants. The 60 pseudosigns were rated as having weak or no meaning by 91.2% of the deaf signers and 92.2% of the hearing non-signers.

Procedure

The procedure was the same as in Emmorey et al. (2010). Four 45-second blocks each of ASL signs, pseudosigns, and fixation baseline were presented in random order while fMRI data were acquired. Each block contained 15 video clips, and the clips were presented with a 3 second inter-stimulus interval.
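The block timing described above can be laid out as a simple schedule. The following is a minimal sketch only: it assumes four blocks per condition, treats the 3 second interval as onset-to-onset spacing (15 clips × 3 s = 45 s), assumes fixation blocks contain no clips, and uses an unconstrained shuffle for the "random order". The names `build_schedule`, `ONSET_SPACING_S`, and the seed are hypothetical and are not taken from the original procedure.

```python
import random
from collections import Counter

CONDITIONS = ("ASL signs", "pseudosigns", "fixation")
BLOCKS_PER_CONDITION = 4   # assumption: four blocks of each condition
BLOCK_SECONDS = 45
CLIPS_PER_BLOCK = 15
ONSET_SPACING_S = 3        # assumption: 3 s between successive clip onsets


def build_schedule(seed=0):
    """Return a list of blocks with start times and clip onsets (seconds)."""
    blocks = [c for c in CONDITIONS for _ in range(BLOCKS_PER_CONDITION)]
    random.Random(seed).shuffle(blocks)  # unconstrained random block order
    schedule = []
    for index, condition in enumerate(blocks):
        start = index * BLOCK_SECONDS
        if condition == "fixation":
            onsets = []  # assumption: fixation blocks show only the cross
        else:
            onsets = [start + k * ONSET_SPACING_S for k in range(CLIPS_PER_BLOCK)]
        schedule.append({"condition": condition, "start": start, "clip_onsets": onsets})
    return schedule


schedule = build_schedule()
total_seconds = len(schedule) * BLOCK_SECONDS
```

Under these assumptions the run contains 12 blocks and lasts 540 seconds, excluding any lead-in or lead-out time that the source does not describe.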

Image Acquisition and Data Analysis

Functional and structural MR images were collected as described in Emmorey et al. (2010). Task effects were estimated using a general linear model with the expected task-related response convolved with a standard hemodynamic response function and were first evaluated at the single subject level. Contrast images of ASL signs minus baseline and pseudosigns minus baseline were created for each participant in both the deaf and hearing groups. To account for intersubject variance, a random effects model was used to obtain group results from the contrast images of each participant. A one-sample t test was used to evaluate task effects for each condition for both participant groups. Paired two-sample t tests were also performed as random effects analyses to estimate differences between the two conditions within each participant group. In addition, two-sample t tests were performed as random effects analyses, in which first level contrasts within subjects were compared between groups at the second level, which is equivalent to a group × task interaction. Inclusive masks were applied when pseudosigns and ASL signs were directly compared, in order to exclude differences caused by deactivation. For example, results of unique activations for pseudosigns (pseudosigns minus ASL signs) were reported only when values for pseudosigns were significantly greater than the baseline. A whole brain false discovery rate (FDR) corrected p value of 0.05 was applied as the inference threshold.
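To make the FDR threshold concrete, the sketch below implements the Benjamini-Hochberg step-up procedure, which is one common way to control the false discovery rate. The text does not state which FDR procedure or software routine was used, so this choice is an assumption, and the function name `benjamini_hochberg` and the example p values are purely illustrative.

```python
import numpy as np


def benjamini_hochberg(p_values, q=0.05):
    """Return a boolean array marking tests that survive FDR control at level q.

    Assumption: Benjamini-Hochberg step-up procedure; the source only states
    that an FDR-corrected p value of 0.05 was used.
    """
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)                       # indices that sort p ascending
    ranked = p[order]
    thresholds = q * np.arange(1, m + 1) / m    # q * rank / m for each rank
    passing = np.nonzero(ranked <= thresholds)[0]
    significant = np.zeros(m, dtype=bool)
    if passing.size:
        cutoff = passing[-1]                    # largest rank meeting its threshold
        significant[order[:cutoff + 1]] = True  # everything up to that rank survives
    return significant


# Illustrative p values only (not data from the study).
example = benjamini_hochberg([0.041, 0.001, 0.216, 0.008], q=0.05)
```

Because the procedure is step-up, every test ranked at or below the largest passing rank is retained, even if an individual test exceeds its own rank-specific threshold.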

For the VOI analysis, the left IFG VOI consisted of Brodmann areas 44 and 45. These regions were defined anatomically using the Brodmann area (cytoarchitectonic) atlas within WFU PickAtlas toolbox (www.ansir.wfubmc.edu). We created the posterior temporal VOI based on functional imaging data from previous studies that localized significant activation within left STG and STS for the comprehension of sign language versus a low level baseline (MacSweeney et al., 2002; MacSweeney et al., 2004; Petitto et al., 2000). To create the VOI, the minimum and maximum X, Y, and Z coordinates from STG and/or STS activations reported in these studies were identified (X: min = −45, max = −55; Y: min = −28, max = −52; Z: min = 2, max = 8), and then extended 5 mm in each direction. MarsBaR (http://marsbar.sourceforge.net) was then used to create the left posterior superior temporal cortex VOI, which spanned both the STG and STS. Significant activation was identified at a threshold of p < 0.05, FDR corrected for multiple comparisons within each VOI.
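The coordinate arithmetic behind the posterior temporal VOI can be sketched as follows. This is a minimal illustration that treats the VOI as an axis-aligned box in MNI space built from the reported extremes plus 5 mm of padding; it does not reproduce MarsBaR itself, and the helper names `padded_bounds` and `inside` are hypothetical.

```python
# Coordinate extremes (MNI, mm) as reported in the text for the posterior temporal VOI.
REPORTED_EXTREMES = {
    "x": (-45.0, -55.0),
    "y": (-28.0, -52.0),
    "z": (2.0, 8.0),
}
PADDING_MM = 5.0  # "extended 5 mm in each direction"


def padded_bounds(extremes, padding):
    """Return (low, high) per axis after ordering the extremes and padding both ends."""
    bounds = {}
    for axis, (a, b) in extremes.items():
        low, high = min(a, b), max(a, b)
        bounds[axis] = (low - padding, high + padding)
    return bounds


def inside(point, bounds):
    """True if an (x, y, z) point lies within the box, boundaries included."""
    return all(bounds[axis][0] <= value <= bounds[axis][1]
               for axis, value in zip("xyz", point))


voi_bounds = padded_bounds(REPORTED_EXTREMES, PADDING_MM)
peak_in_voi = inside((-57, -30, -3), voi_bounds)  # peak reported in the Figure 1 caption
```

Under this box assumption the VOI spans x from −60 to −40, y from −57 to −23, and z from −3 to 13, and the group-difference peak reported in the Figure 1 caption (−57, −30, −3) falls within it, on the lower z boundary.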

Supplementary Material

Activation for A) ASL signs and B) pseudosigns in contrast to fixation baseline for both groups. The statistic t maps are from a random effects analysis and are rendered on a single subject T1 image transformed into MNI space.

Acknowledgments

This research was supported in part by NIH grant R01 DC010997 (KE) and by the NIDCD Intramural Research Program. We would like to thank Franco Korpics for help recruiting Deaf participants, Jeannette Vincent for help with stimuli development, Helsa Borinstein for help with the gesture norming study, and all of the Deaf and hearing individuals who participated in the study.


Contributor Information

Karen Emmorey, San Diego State University.

Jiang Xu, National Institute on Deafness and other Communication Disorders.

Allen Braun, National Institute on Deafness and other Communication Disorders.

References

- Baker SA, Idsardi WJ, Golinkoff RM, Petitto LA. The perception of handshapes in American Sign Language. Memory & Cognition. 2005;33(5):887–904. doi: 10.3758/bf03193083.

- Baus C, Gutiérrez-Sigut E, Quer J, Carreiras M. Lexical access in Catalan Signed Language (LSC) production. Cognition. 2008;108(3):856–865. doi: 10.1016/j.cognition.2008.05.012.

- Brentari D. A prosodic model of sign language phonology. The MIT Press; 1998.

- Buchsbaum BR, Olsen RK, Koch P, Berman KF. Human dorsal and ventral auditory streams subserve rehearsal-based and echoic processes during verbal working memory. Neuron. 2005;48:687–697. doi: 10.1016/j.neuron.2005.09.029.

- Buchsbaum B, Pickell B, Love T, Hatrak M, Bellugi U, Hickok G. Neural substrates for verbal working memory in deaf signers: fMRI study and lesion case report. Brain and Language. 2005;95:265–272. doi: 10.1016/j.bandl.2005.01.009.

- Carreiras M, Gutiérrez-Sigut E, Baquero S, Corina D. Lexical processing in Spanish Sign Language (LSE). Journal of Memory and Language. 2008;58(1):100–122.

- Corina DP, Emmorey K. Lexical priming in American Sign Language. Paper presented at the 34th annual meeting of the Psychonomics Society; Washington, DC. 1993.

- Corina DP, Knapp H. Lexical retrieval in American Sign Language production. In: Goldstein LM, Whalen DH, Best CT, editors. Papers in Laboratory Phonology. Vol. 8. Berlin: Mouton de Gruyter; 2006. pp. 213–240.

- Corina D, Chiu YS, Knapp H, Greenwald R, San Jose-Robertson L, Braun A. Neural correlates of human action observation in hearing and deaf subjects. Brain Research. 2007;1152:111–129. doi: 10.1016/j.brainres.2007.03.054.

- Damasio AR. The brain binds entities and events by multiregional activation from convergence zones. Neural Computation. 1989;1:123–132.

- Davis MH, Gaskell MG. A complementary systems account of word learning: neural and behavioral evidence. Philosophical Transactions of the Royal Society B. 2009;364:3773–3800. doi: 10.1098/rstb.2009.0111.

- Davis MH, Johnsrude IS. Hierarchical processing in spoken language comprehension. Journal of Neuroscience. 2003;23:3423–3431. doi: 10.1523/JNEUROSCI.23-08-03423.2003.

- Emmorey K, McCullough S, Brentari D. Categorical perception in American Sign Language. Language and Cognitive Processes. 2003;18(1):21–45.

- Emmorey K, Xu J, Gannon P, Goldin-Meadow S, Braun A. CNS activation and regional connectivity during pantomime observation: No engagement of the mirror neuron system for deaf signers. Neuroimage. 2010;49:994–1005. doi: 10.1016/j.neuroimage.2009.08.001.

- Grossman ED, Blake R. Brain areas active during visual perception of biological motion. Neuron. 2002;35:1167–1176. doi: 10.1016/s0896-6273(02)00897-8.

- Hickok G, Poeppel D. The cortical organization of speech processing. Nature Reviews Neuroscience. 2007;8:383–402. doi: 10.1038/nrn2113.

- MacSweeney M, Campbell R, Woll B, Giampietro V, David AS, McGuire PK, et al. Dissociating linguistic and nonlinguistic gestural communication in the brain. Neuroimage. 2004;22(4):1605–1618. doi: 10.1016/j.neuroimage.2004.03.015.

- MacSweeney M, Woll B, Campbell R, Calvert GA, McGuire PK, David AS, Simmons A, Brammer MJ. Neural correlates of British Sign Language comprehension: Spatial processing demands of topographic language. Journal of Cognitive Neuroscience. 2002;14(7):1064–1075. doi: 10.1162/089892902320474517.

- Majerus S, Van der Linden M, Collette F, Laureys S, Poncelet M, Degueldre C, Delfiore G, Luxen A, Salmon E. Modulation of brain activity during phonological familiarization. Brain and Language. 2005;92:320–331. doi: 10.1016/j.bandl.2004.07.003.

- Orfanidou E, Adam R, McQueen JM, Morgan G. Making sense of nonsense in British Sign Language (BSL): The contribution of different phonological parameters to sign recognition. Memory & Cognition. 2009;37(3):302–315. doi: 10.3758/MC.37.3.302.

- Orfanidou E, Adam R, Morgan G, McQueen J. Recognition of signed and spoken language: Different sensory inputs, the same segmentation procedure. Journal of Memory and Language. 2010;62:272–283.

- Perlmutter D. Sonority and syllable structure in American Sign Language. Linguistic Inquiry. 1992;23:407–442.

- Petitto LA, Zatorre RJ, Gauna K, Nikelski EJ, Dostie D, Evans A. Speechlike cerebral activity in profoundly deaf people processing signed languages: Implications for the neural basis of human language. Proceedings of the National Academy of Sciences. 2000;97(25):13961–13966. doi: 10.1073/pnas.97.25.13961.

- Prabhakaran R, Blumstein S, Myers EB, Hutchinson E, Britton B. An event-related fMRI investigation of phonological-lexical competition. Neuropsychologia. 2006;44:2209–2221. doi: 10.1016/j.neuropsychologia.2006.05.025.

- Rizzolatti G, Sinigaglia C. Mirrors in the brain. Oxford: Oxford University Press; 2008.

- Sandler W, Lillo-Martin D. Sign language and linguistic universals. Cambridge: Cambridge University Press; 2006.

- Vaid J, Corina D. Visual field asymmetries in numerical size comparisons of digits, words, and signs. Brain and Language. 1989;36:117–126. doi: 10.1016/0093-934x(89)90055-2.

- Werker J, Tees R. Cross-language speech perception: evidence for perceptual reorganization during the first year of life. Infant Behavior and Development. 1984;7:49–63.
