Abstract

The integration of multisensory information is essential to forming meaningful representations of the environment. Adults benefit from related multisensory stimuli, but the extent to which children can optimally integrate multisensory inputs for functional purposes has not been extensively examined. Using a cross-sectional approach, high-density electrical mapping of event-related potentials (ERPs) was combined with behavioral measures to characterize neurodevelopmental changes in basic audiovisual (AV) integration from middle childhood through early adulthood. The data indicated a gradual fine-tuning of multisensory facilitation of performance on an AV simple reaction time task (as indexed by race model violation), which reaches mature levels by about 14 years of age. They also revealed a systematic relationship between age and the brain processes underlying multisensory integration (MSI) in the time frame of the auditory N1 ERP component (∼120 ms). A significant positive correlation between behavioral and neurophysiological measures of MSI suggested that the underlying brain processes contributed to the fine-tuning of multisensory facilitation of behavior that was observed over middle childhood. These findings are consistent with protracted plasticity in a dynamic system and provide a starting point from which future studies can begin to examine the developmental course of multisensory processing in clinical populations.

Keywords: children; cross-modal; development; electrophysiology; ERP; multisensory integration

Introduction

The ability to construct meaningful internal representations of the environment depends on integrating and segregating the myriad multisensory inputs that enter the nervous system at any given moment. Not surprisingly, it has been firmly established that as adults we frequently benefit from multisensory inputs when they represent redundant or complementary features of objects and events. For example, we react more quickly to the presence of multisensory compared with unisensory objects, and we are often better able to identify an object or event when it is conveyed through more than one sensory modality (Forster et al. 2002; Molholm et al. 2002; Lovelace et al. 2003; Gondan et al. 2005; Ross, Saint-Amour, Leavitt, Javitt, and Foxe 2007; Ross, Saint-Amour, Leavitt, Molholm, et al. 2007). As might be expected, children too benefit from related multisensory inputs (Neil et al. 2006; Gori et al. 2008; Barutchu, Danaher, et al. 2009). A number of behavioral studies show that infants are capable of recognizing relationships among multisensory inputs (e.g., Kuhl and Meltzoff 1982; Bahrick et al. 2005), suggesting that from very early in development, multisensory associations are formed and temporal relationships between multisensory inputs are recognized. For example, using a preferential looking paradigm, Patterson and Werker (2003) showed that 2-month-old infants were able to match vowel information in faces and voices, suggesting that some form of multisensory association occurs by 2 months of age, if not earlier (2 months was the youngest age these authors tested because of issues of reliability and visual acuity in younger infants). Similarly, Lewkowicz (1992) found that when presented with an audiovisual (AV) compound stimulus (a bouncing object on a video screen), infants in all age-groups tested (4-, 6-, 8-, and 10-month-olds) were sensitive to the temporal relationships between the auditory and visual stimuli.
Notably, infant behavioral studies of multisensory processing for the most part test whether infants have noted relationships between presumably distinct stimulus representations; they do not measure whether the multisensory inputs have been integrated (for discussion of this issue, see Stein et al. 2010). Studies in later childhood (usually with children aged 6 years and older), where multisensory influences on perception can be more reliably assayed, demonstrate the influence of multisensory information on size judgments (Gori et al. 2008), speech percepts (Barutchu, Danaher, et al. 2009), and balance (Bair et al. 2007).

Still, optimal benefit from multisensory inputs often requires experience, and there is every reason to expect a typical developmental course for the “tuning-up” of multisensory integration (MSI) (Lewkowicz 2002; Bair et al. 2007; Lewkowicz and Ghazanfar 2009). Bair et al. (2007) showed that the ability to optimally use visual and somatosensory cues to maintain balance improves between the ages of 4 and 10 years, and preliminary data from our laboratory suggest that the capacity to use visual inputs to understand auditory speech presented in a noisy environment improves dramatically as a function of age (Ross et al. 2008). These latter data suggest that the fine-tuning of optimal MSI continues throughout childhood and probably even into adolescence. Thus, infant studies make clear that the ability to associate stimuli from separate sensory modalities emerges very early, and studies of later childhood show that the integration of these inputs for functional purposes changes dramatically over the course of development, in some cases extending from the middle years into adolescence.

To date, knowledge of the development of the neurophysiological processes that underlie MSI comes largely from animal studies. For the most part, these have focused on the postnatal emergence of multisensory neurons and on the effects of dramatic manipulations of the multisensory environment on the development of these neurons. For example, neurophysiological studies in cats indicate that subcortical and cortical multisensory neurons are initially responsive to only one type of sensory input (i.e., they are unisensory neurons); responsivity to more than one type of sensory input emerges over the course of early development, and integrative properties emerge later still than simple coregistration (Stein et al. 1973; Wallace and Stein 1997; Wallace et al. 2006). Furthermore, altering the sensory environment has been found to dramatically affect the receptive fields of multisensory neurons (Wallace et al. 2004; Carriere et al. 2007). In a particularly compelling demonstration, cats were reared in an environment in which auditory and visual stimuli were only ever presented together in a systematically misaligned configuration. In these animals, multisensory neurons in the superior colliculus coregistered the spatially misaligned auditory and visual stimuli but did not show typical coregistration of the stimuli when they were presented in a spatially aligned configuration (Wallace and Stein 2007). Thus, the receptive fields of multisensory neurons show a high degree of plasticity over the course of development.

One approach to behaviorally assessing whether MSI has occurred is to compare reaction times to unisensory stimuli with reaction times to multisensory stimuli. When behavioral facilitation for the multisensory condition is shown, a test is performed to determine whether this “speeding up” exceeds the amount of facilitation predicted by the statistical summation of the fastest unisensory responses (Miller 1982, 1986). When this statistical threshold is exceeded, and hence the so-called “race model” is violated, it can be concluded that MSI has occurred. Numerous studies with adults have used this approach and demonstrated MSI for stimuli containing “redundant” bisensory targets (e.g., Hughes et al. 1994; Harrington and Peck 1998; Molholm et al. 2002; Murray, Molholm, et al. 2005; Maravita et al. 2008). Only a few studies have similarly evaluated MSI in children. One failed to reveal a clear developmental trajectory in children from 6 to 11 years of age (Barutchu, Crewther, et al. 2009). Another study of AV integration during the first year of life found that although infants of all ages had faster reaction times to AV stimuli than to the unisensory stimuli, only the oldest infants (8- to 10-month-olds) exhibited reaction times faster than those predicted by simple statistical summation (Neil et al. 2006). However, unlike typical reaction time paradigms in adults, which require an intentional motor response, these measures were taken from infants and were accordingly constrained to stimulus-elicited head or eye movements, the timing of which was measured on the order of seconds rather than milliseconds. Accordingly, these intriguing findings must be interpreted with caution.

Despite a considerable body of work investigating the neurophysiology of MSI in human adults and in animals, there is a marked dearth of corresponding work on the development of MSI in humans. The aim of the present study was to examine the developmental trajectory of AV integration in typically developing children from the ages of 7 to 16 years, and in adults, using both behavioral and neurophysiological methods. The technique of high-density electrical mapping was used to measure the temporal and spatial dynamics of basic MSI while participants performed a simple reaction time task to auditory and visual stimuli presented simultaneously or alone (Molholm et al. 2002, 2006). Based on findings from both behavioral research in human infants and neurophysiological research in animals, it was expected that by 7–9 years of age, the youngest age-group considered, electrophysiological measures would reveal a complex pattern of MSI. It was also expected that the precise timing and scalp topography of multisensory processing would not yet have reached maturity in the youngest age-group, and therefore significant differences in these patterns as a function of age were predicted. Although neurophysiological evidence of MSI was anticipated across all age-groups, whether this would uniformly translate to improvements in behavioral measures of MSI remained an empirical question. Performance benefits from multisensory inputs have been shown to change over childhood (Bair et al. 2007; Gori et al. 2008; Barutchu, Crewther, et al. 2009), and therefore it would be reasonable to expect a systematic relationship between age and the extent of MSI. However, a recent behavioral study using a paradigm nearly identical to the present one showed that although evidence for MSI was seen in individual children ranging in age from 6 to 11 years, it was not as consistent or as strong as in adults, and no systematic relationship between age and MSI was found (Barutchu, Crewther, et al. 2009).
The present study, combining behavioral and electrophysiological measures of MSI, affords the opportunity to link evidence of functional neural reorganization with changes in behavior with respect to MSI. Furthermore, characterizing neurodevelopmental changes in basic AV integration from middle childhood through adolescence will provide an important starting point from which future studies can begin to examine the developmental course of multisensory processing in clinical populations, such as individuals with autism or schizophrenia.

Materials and Methods

Participants

Forty-nine typically developing children and adolescents aged 7–16 years and 13 young adults participated in this study. In order to assess developmental changes in MSI, the children were divided into 3 age-groups: 7–9 years (n = 17; 9 females; mean age = 8.59 years), 10–12 years (n = 15; 9 females; mean age = 11.47 years), and 13–16 years (n = 17; 9 females; mean age = 14.46 years). An additional 6 individuals (4 aged 7–9 years, one aged 11 years, and one adult) were excluded from all behavioral and neurophysiological analyses because their percent hits fell below 74% (2 standard deviations [SDs] below the average), making it unclear whether these individuals were maintaining sufficient attention. Participants were screened for neurological and psychiatric disorders. The adults ranged in age from 20 to 29 years (mean age = 23.11 years; SD = 2.57; 7 females). Children were administered the Wechsler Abbreviated Scale of Intelligence, and children with a Full Scale IQ below 85 were excluded. Audiometric threshold evaluation confirmed that all participants had normal hearing. All participants had normal or corrected-to-normal vision. Children and adolescents were recruited from a local junior high school and from a community sample obtained through friends and acquaintances of colleagues and college students. Adults were recruited through the college’s Psychology research subject pool and from a community sample. Before entering the study, informed written consent was obtained from the children’s parents, and verbal or written assent was obtained from the children. Informed written consent was obtained from adult participants. All procedures were approved by the Institutional Review Board of the City College of the City University of New York.
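The 74% exclusion threshold corresponds to the group mean minus 2 SDs. A minimal Python sketch of that rule follows; the function name and the percent-hit values are hypothetical, and because the text does not say whether the mean and SD were computed with or without the excluded individuals, the sketch simply uses the whole sample.

```python
from statistics import mean, stdev

def exclusion_cutoff(percent_hits, n_sd=2.0):
    """Return the cutoff (sample mean minus n_sd sample SDs) and the
    indices of participants whose percent hits fall below it."""
    cutoff = mean(percent_hits) - n_sd * stdev(percent_hits)
    excluded = [i for i, p in enumerate(percent_hits) if p < cutoff]
    return cutoff, excluded

# Hypothetical percent-hit values (one per participant), not the study's data.
hits = [95.0, 97.0, 93.0, 96.0, 98.0, 94.0, 70.0, 96.0]
cutoff, excluded = exclusion_cutoff(hits)
print(f"cutoff = {cutoff:.1f}%, excluded = {excluded}")  # cutoff = 74.0%, excluded = [6]
```

Because an outlying participant also inflates the SD, computing the cutoff over the full sample makes the rule somewhat lenient.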

Stimuli

Auditory Alone

A 1000-Hz tone (duration 60 ms; 75 dB SPL; rise/fall time 5 ms) was presented from a single Hartman Multimedia JBL Duet speaker located centrally atop the computer monitor from which the visual stimulus was presented.

Visual Alone

A red disc with a diameter of 3.2 cm (subtending 1.5° in diameter at a viewing distance of 122 cm) appearing on a black background was presented on a monitor (Dell Ultrasharp 1704FTP) for 60 ms. The disc was located 0.4 cm superior to central fixation along the vertical meridian (0.9° at a viewing distance of 122 cm). A small cross marked the point of central fixation on the monitor.
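The visual angles above follow from standard trigonometry: an object of size s at distance d subtends 2·atan(s / 2d). The Python sketch below (function names are ours) reproduces the 1.5° disc diameter. A 0.4-cm offset on its own corresponds to only about 0.19°; the reported 0.9° instead matches the eccentricity of the disc's center if, as we assume here, the 0.4 cm is measured from fixation to the lower edge of the disc (0.4 + 1.6 = 2.0 cm).

```python
import math

def visual_angle_deg(size_cm, distance_cm):
    """Full angle subtended by an object of size_cm centered on the line of sight."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

def eccentricity_deg(offset_cm, distance_cm):
    """Angle between fixation and a point offset_cm away on the screen."""
    return math.degrees(math.atan(offset_cm / distance_cm))

VIEWING_DISTANCE_CM = 122.0
disc = visual_angle_deg(3.2, VIEWING_DISTANCE_CM)
gap = eccentricity_deg(0.4, VIEWING_DISTANCE_CM)
# Assumption: 0.4 cm is the gap from fixation to the disc's lower edge,
# so the disc center lies 0.4 + 3.2 / 2 = 2.0 cm above fixation.
center = eccentricity_deg(0.4 + 3.2 / 2, VIEWING_DISTANCE_CM)
print(f"{disc:.2f} {gap:.2f} {center:.2f}")  # 1.50 0.19 0.94
```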

Auditory and Visual Simultaneous

The “auditory-alone” and “visual-alone” conditions described above were presented simultaneously. The auditory and visual stimuli were presented in close spatial proximity, with the speaker placed atop the monitor in vertical alignment with the visual stimulus.

Procedures

Participants were seated in a dimly lit room 122 cm from the monitor. In order to minimize movement artifacts, participants were asked to sit still and keep their eyes focused on the small cross in the center of the monitor. Participants were given a response pad (Logitech Wingman Precision) and instructed to press a button with their right thumb as quickly as possible when they saw the red circle, heard the tone, or saw the circle and heard the tone. The same response key was used for all 3 stimulus types, which were presented with equal probability and in random order in blocks of 100 trials. The interstimulus interval varied randomly between 1000 and 3000 ms according to a uniform (square wave) distribution. Participants completed a minimum of 8 blocks; most completed 10. Breaks were encouraged between blocks to help maintain concentration and reduce restlessness or fatigue. Throughout the experimental procedure, children’s efforts and good behavior were reinforced with stickers and verbal praise.
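The randomization described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the presentation software used in the study: each trial's condition is drawn independently with probability 1/3 (with 100 trials per block, exactly equal counts of 3 conditions are impossible anyway), and the interstimulus interval is drawn from a continuous uniform distribution between 1000 and 3000 ms. `make_block` and the condition labels are hypothetical names.

```python
import random

CONDITIONS = ("A", "V", "AV")  # auditory-alone, visual-alone, audiovisual

def make_block(n_trials=100, isi_range_ms=(1000.0, 3000.0), rng=None):
    """Return a list of (condition, isi_ms) pairs for one block.

    Each trial's condition is drawn independently with probability 1/3
    (an assumption), and each ISI is drawn from a uniform distribution
    over isi_range_ms.
    """
    rng = rng or random.Random()
    lo, hi = isi_range_ms
    return [(rng.choice(CONDITIONS), rng.uniform(lo, hi)) for _ in range(n_trials)]

block = make_block(rng=random.Random(1))  # seeded for reproducibility
counts = {c: sum(1 for cond, _ in block if cond == c) for c in CONDITIONS}
```

Sampling each trial independently keeps the next condition unpredictable; a shuffled list with fixed counts would be an equally reasonable reading of "equal probability".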

Data Acquisition and Analysis

Behavioral

Button press responses to the 3 stimulus conditions were acquired during the electroencephalography (EEG) recording and processed off-line. Reaction times between 100 and 900 ms were considered valid. This window was used to avoid the double categorization of a response (i.e., to ensure that a single button press could be attributed to only one trial).

Event-Related Potentials

High-density EEG was recorded from 72 scalp electrodes (impedances <5 kΩ) at a digitization rate of 512 Hz using the BioSemi system (BioSemi, www.biosemi.com). The continuous EEG was recorded referenced to a common mode sense (CMS) active electrode and a driven right leg (DRL) passive electrode. CMS and DRL, which replace the ground electrodes used in conventional systems, form a feedback loop, thus rendering them references (Leavitt et al. 2007). Offline, the EEG was rereferenced to an average of all electrodes and divided into 1000-ms epochs (200 ms prestimulus to 800 ms poststimulus onset); these long epochs made it possible to assess slow-wave activity in the data and to apply high-pass filtering without distorting the epoch of interest (−100 to 500 ms). For children, an automatic artifact rejection criterion of ±140 μV from −100 to 500 ms was applied offline to exclude epochs with excessive electromuscular activity. For adults, the automatic artifact rejection criterion was set at ±100 μV over the same interval. To compute event-related potentials (ERPs), epochs were sorted according to stimulus condition and averaged for each participant. Average waveforms from the auditory-alone condition and the visual-alone condition were then summed for each participant. Baseline was defined as the interval from −50 to +10 ms relative to stimulus onset (a 60-ms baseline, as in Molholm et al. 2002). A low-pass filter of 45 Hz with a slope of 24 dB/octave was applied to the individual averages to remove high-frequency artifact generated by nearby electronic equipment. A high-pass filter of 1.6 Hz with a slope of 12 dB/octave was applied to the individual averages to remove ongoing slow-wave activity in the signal, which would otherwise be doubly represented in the summed unisensory response when assessing MSI (Molholm et al. 2002; Teder-Salejarvi et al. 2002).
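The averaging steps described above can be sketched in plain Python as follows, assuming each epoch is stored as a channels × samples list of microvolt values sampled at 512 Hz from −200 ms. All function names are hypothetical. The 45-Hz low-pass and 1.6-Hz high-pass filters are deliberately omitted: the text gives only their cutoffs and slopes, and although a filter whose roll-off is 6 dB/octave per order (such as a Butterworth filter) would need order 4 for 24 dB/octave and order 2 for 12 dB/octave, the filter type actually used is not stated.

```python
FS_HZ = 512.0             # digitization rate
EPOCH_START_MS = -200.0   # epochs span -200 to +800 ms around stimulus onset

def sample_times(n_samples):
    """Latency (ms) of each sample in an epoch."""
    return [EPOCH_START_MS + 1000.0 * i / FS_HZ for i in range(n_samples)]

def average_reference(epoch):
    """Subtract the mean over all channels at every sample (channels x samples)."""
    n_ch, n_s = len(epoch), len(epoch[0])
    ref = [sum(ch[i] for ch in epoch) / n_ch for i in range(n_s)]
    return [[ch[i] - ref[i] for i in range(n_s)] for ch in epoch]

def passes_artifact_check(epoch, times, limit_uv, window_ms=(-100.0, 500.0)):
    """True if no channel exceeds +/- limit_uv within window_ms."""
    lo, hi = window_ms
    return all(abs(v) <= limit_uv
               for ch in epoch
               for t, v in zip(times, ch) if lo <= t <= hi)

def average_epochs(epochs):
    """Point-by-point mean over epochs (result: channels x samples)."""
    n = len(epochs)
    if n == 0:
        raise ValueError("no epochs survived artifact rejection")
    return [[sum(ep[c][i] for ep in epochs) / n for i in range(len(epochs[0][c]))]
            for c in range(len(epochs[0]))]

def baseline_correct(erp, times, window_ms=(-50.0, 10.0)):
    """Subtract each channel's mean over the baseline window."""
    lo, hi = window_ms
    out = []
    for ch in erp:
        base = [v for t, v in zip(times, ch) if lo <= t <= hi]
        b = sum(base) / len(base)
        out.append([v - b for v in ch])
    return out

def sum_waveform(erp_a, erp_v):
    """A + V 'sum' ERP, to be compared against the AV ERP."""
    return [[a + v for a, v in zip(ca, cv)] for ca, cv in zip(erp_a, erp_v)]

def condition_erp(epochs, limit_uv):
    """Re-reference, reject, average, and baseline-correct one condition.
    The text uses limit_uv = 140 for children and 100 for adults."""
    times = sample_times(len(epochs[0][0]))
    kept = [ep for ep in map(average_reference, epochs)
            if passes_artifact_check(ep, times, limit_uv)]
    return baseline_correct(average_epochs(kept), times), len(kept)
```

Because baseline correction is linear, correcting the A and V averages before or after summing yields the same sum waveform.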
For each of the age-groups, group-averaged ERPs were calculated to view the waveform morphology of the 3 stimulus conditions and the sum condition.

Statistical Analyses

Behavioral

A 2-way mixed-design analysis of variance (ANOVA), with factors of age-group and stimulus condition, was performed to compare reaction times across the 3 stimulus conditions and to assess the effect of age on reaction time. Planned comparisons between each of the unisensory conditions and the multisensory condition were performed to test for the presence of a redundant signal effect (RSE; here, behavioral facilitation for the multisensory condition compared with each of the unisensory conditions). A test of Miller’s race model (Miller 1982) was then implemented. According to the race model, mean reaction times shorten because there are now 2 inputs (in this case, auditory and visual) that can trigger a response, and the faster one wins. Under this model, facilitation can be explained without any interaction between the 2 inputs. However, when the race model is violated, it is assumed that the unisensory inputs interacted during processing to facilitate reaction time performance.
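The logic of the race model can be made explicit with a short derivation. Let A and V denote the (unobserved) finishing times of the auditory and visual processes on an AV trial, and assume, as the race model does, that their distributions match those measured in the auditory-alone and visual-alone conditions (context invariance). A response on an AV trial is triggered by whichever process finishes first, so for every latency t:

$$
P(RT_{AV} \le t) = P(\min(A, V) \le t) = P(A \le t) + P(V \le t) - P(A \le t \,\wedge\, V \le t)
$$

Because the joint term is never negative, substituting the unisensory distributions yields Miller's (1982) inequality, whose violation at any t is taken as evidence that the inputs interacted:

$$
P(RT_{AV} \le t) \le P(RT_{A} \le t) + P(RT_{V} \le t)
$$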

While reaction time performance was the main focus of the behavioral analysis, percent hits, defined as the percent of trials in which a response occurred within the valid reaction time range, were also calculated for each stimulus condition for all participants. A 2-way mixed design ANOVA was performed to examine the effect of stimulus condition and age-group on percent hits.
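Reaction-time screening and percent hits can be computed as below. The sketch assumes each condition's trials are stored as a list of reaction times in ms, with `None` marking a trial without a response, and treats the 100 and 900 ms bounds as inclusive; these encodings and function names are hypothetical.

```python
VALID_RT_MS = (100.0, 900.0)  # inclusive bounds (an assumption)

def is_valid(rt_ms, window=VALID_RT_MS):
    """A response counts as a hit if it falls inside the valid window.
    rt_ms is None when no response was recorded (hypothetical encoding)."""
    return rt_ms is not None and window[0] <= rt_ms <= window[1]

def valid_rts(rts, window=VALID_RT_MS):
    """Reaction times retained for the reaction time analyses."""
    return [rt for rt in rts if is_valid(rt, window)]

def percent_hits(rts_by_condition, window=VALID_RT_MS):
    """Map condition -> percentage of trials with a valid response."""
    return {cond: 100.0 * sum(is_valid(rt, window) for rt in rts) / len(rts)
            for cond, rts in rts_by_condition.items()}

example = {"AV": [250.0, 300.0, None, 950.0], "A": [320.0, 80.0, 400.0, 410.0]}
print(percent_hits(example))  # {'AV': 50.0, 'A': 75.0}
```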

Testing the Race Model

Miller’s (1982) race model places an upper limit on the cumulative probability (CP) of reaction time at a given latency for stimulus pairs with redundant targets (i.e., targets indicating the same response). Under the race model, the CP for the redundant target at any latency, t, equals the sum of the CPs for each of the single-target (unisensory) stimuli minus their joint probability; because this joint term cannot be negative, the race model holds only when the redundant-target CP is less than or equal to the simple sum of the unisensory CPs. For each subject, the range of valid reaction times (in this case, 100–900 ms) was calculated over the 3 stimulus types (auditory-alone, visual-alone, and “multisensory”) and divided into quantiles from the 5th to the 100th percentile in 5% increments (5, 10, … , 95, 100%). Violations were expected to occur for the quantiles representing the lower end of the reaction time distribution, because this is where interactions of the visual and auditory inputs were most likely to result in the fulfillment of a response criterion before either source alone satisfied the same criterion (Miller 1982). It should be noted that failure to violate the race model is not evidence that the 2 information sources did not interact to produce response time facilitation; rather, the race model places an upper boundary on the reaction time facilitation that can be accounted for by probability summation.
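The per-subject test can be sketched in Python as follows. Several details are our assumptions rather than the authors' stated procedure: the 20 latencies are taken as nearest-rank percentiles (5th to 100th) of the valid reaction times pooled over the 3 conditions, cumulative probabilities are empirical CDFs evaluated at those latencies, and the unisensory sum is capped at 1 (which does not change whether a violation occurs, since a cumulative probability cannot exceed 1). Positive values mark race model violations. The reaction times in the example are invented toy data in which AV responses are much faster than either unisensory response.

```python
from bisect import bisect_right

def ecdf_at(sorted_rts, t):
    """Empirical cumulative probability P(RT <= t)."""
    return bisect_right(sorted_rts, t) / len(sorted_rts)

def nearest_rank(sorted_rts, p):
    """Nearest-rank p-th percentile (p an integer in 1..100)."""
    k = max(1, (p * len(sorted_rts) + 99) // 100)  # ceil(p * n / 100)
    return sorted_rts[k - 1]

def miller_violation(rt_a, rt_v, rt_av, step=5):
    """Return (percentile, latency_ms, violation) for percentiles step..100.
    violation = P(RT_AV <= t) - min(1, P(RT_A <= t) + P(RT_V <= t));
    values > 0 violate the race model."""
    a, v, av = sorted(rt_a), sorted(rt_v), sorted(rt_av)
    pooled = sorted(rt_a + rt_v + rt_av)
    out = []
    for p in range(step, 101, step):
        t = nearest_rank(pooled, p)
        bound = min(1.0, ecdf_at(a, t) + ecdf_at(v, t))
        out.append((p, t, ecdf_at(av, t) - bound))
    return out

# Toy data (ms), not from the study.
rt_a = [300, 310, 320, 330, 340]
rt_v = [350, 360, 370, 380, 390]
rt_av = [200, 210, 220, 230, 240]
result = miller_violation(rt_a, rt_v, rt_av)
```

Here `result[0]` is `(5, 200, 0.2)`: at the fastest pooled latency, 20% of AV responses have already occurred while neither unisensory CDF has risen above zero.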

ERPs

As in previous work (e.g., Giard and Peronnet 1999; Foxe et al. 2000; Molholm et al. 2002, 2006), AV interactions were measured by summing the responses to the auditory-alone and visual-alone conditions and comparing that “sum” waveform with the response to the multisensory AV condition. Based on the principle of superposition of electrical fields, any significant divergence between the multisensory and sum waveforms indicates that the auditory and visual inputs interacted. (It should be noted that with this noninvasive far-field recording method, we are entirely reliant on nonlinear summation for evidence of multisensory interactions. As Stanford and Stein (2007) have pointed out, a large proportion of multisensory neurons respond with straightforward linear properties; that is, the multisensory response is a simple sum of the unisensory constituents.) To date, this common method of measuring AV multisensory processing by comparing the multisensory and sum ERPs has only been applied to adult data (Giard and Peronnet 1999; Molholm et al. 2002; Teder-Salejarvi et al. 2002; Talsma and Woldorff 2005), leaving little guidance from the literature for constraining the analyses. To constrain the analyses independently of the dependent measure (the difference between the multisensory and sum responses), the temporal windows of analysis and the electrodes to be tested were defined based on the peaks of the grand-mean multisensory (AV) responses. This approach has been applied to a number of high-dimensional data sets from our laboratory and others (Wylie et al. 2003; Russo et al. 2010), and while conservative, it provides a reasonable way to delimit, on an a priori basis, the statistical tests to be performed. Because there are well-known developmental changes in auditory and visual evoked potentials, the time windows and electrodes tested were defined separately for each age-group.
This resulted in 6 predefined peaks (see Table 3), which had spatiotemporal properties similar to those of the dominant underlying unisensory componentry (auditory P1, frontocentral N1, lateral N1, and P2, and visual P1 and N1). For each of these time windows, data from the multisensory and sum ERPs were submitted to a mixed-design ANOVA with factors of stimulus type (multisensory vs. sum), scalp region where applicable (2–3 regions), and group (4 age-groups). Each scalp region was represented by the average amplitude over 2–5 electrodes. Greenhouse–Geisser corrections were used in reporting P values when appropriate.
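The dependent measures entering these ANOVAs can be sketched as follows, assuming each participant's AV, A, and V averages are stored as channels × samples lists with a matching list of sample latencies in ms. `channel_idx` would hold the indices of the electrodes listed for a region in Table 3 (the mapping from electrode labels to indices depends on the montage and is not given here); the function names and toy values are hypothetical.

```python
def region_window_mean(erp, times, channel_idx, window_ms):
    """Mean amplitude over the listed channels and an inclusive time window."""
    lo, hi = window_ms
    vals = [erp[c][i] for c in channel_idx
            for i, t in enumerate(times) if lo <= t <= hi]
    return sum(vals) / len(vals)

def av_vs_sum(erp_av, erp_a, erp_v, times, channel_idx, window_ms):
    """Return (AV amplitude, A+V amplitude, AV - (A+V)) for one region/window."""
    erp_sum = [[a + v for a, v in zip(ca, cv)] for ca, cv in zip(erp_a, erp_v)]
    av = region_window_mean(erp_av, times, channel_idx, window_ms)
    summed = region_window_mean(erp_sum, times, channel_idx, window_ms)
    return av, summed, av - summed

# Toy example: 2 channels, 4 samples at 0, 10, 20, 30 ms.
times = [0.0, 10.0, 20.0, 30.0]
erp_av = [[1.0] * 4, [3.0] * 4]
erp_a = [[0.5] * 4, [1.0] * 4]
erp_v = [[0.5] * 4, [1.0] * 4]
print(av_vs_sum(erp_av, erp_a, erp_v, times, [0, 1], (10.0, 20.0)))  # (2.0, 1.5, 0.5)
```

Each participant then contributes one AV value and one sum value per region and window, which become the levels of the stimulus-type factor.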

Table 3.

Time windows (in ms) and regions of analyses used in the ANOVAs

| Peak | Corresponding unisensory component | Region of analysis (electrodes) | 7–9 years | 10–12 years | 13–16 years | Adults |
|---|---|---|---|---|---|---|
| 1 | Auditory P1 | Frontal (AFz, Fz, F1, F2) | 66–86 | 64–84 | 54–74 | 40–60 |
| 2 | Auditory N1 | Frontocentral (FCz, FC1, FC2, Fz) | 118–138 | 110–130 | 100–120 | 95–115 |
| 3 | Auditory N1 | Left temporal (FT7, T7, TP7) | 140–160 | 150–170 | 142–162 | 138–158 |
| | | Right temporal (FT8, T8, TP8) | 160–180 | 164–184 | 142–162 | 138–158 |
| 4 | Auditory P2 | Frontocentral (FCz, FC1, FC2, Cz) | 190–210 | 187–207 | 172–192 | 165–185 |
| 5 | Visual P1 | Occipital (Oz, O1, O2) | 140–150 | 138–158 | 115–135 | 115–135 |
| | | Left parietooccipital (PO3, PO7) | 140–150 | 138–158 | 115–135 | 115–135 |
| | | Right parietooccipital (PO4, PO8) | 140–150 | 138–158 | 115–135 | 115–135 |
| 6 | Visual N1 | Left TPO (O1, P7, P9, PO7, TP7) | 190–210 | 193–213 | 176–196 | 166–186 |
| | | Right TPO (O2, P8, P10, PO8, TP8) | 190–210 | 193–213 | 176–196 | 166–186 |

Note: The time windows, regions, and electrodes were chosen based on the morphology of the multisensory (AV) grand-averaged ERPs for each age-group.

Time windows tested in each age-group are given in ms.

TPO, parieto–temporal–occipital region.

In addition to the highly conservative approach to data analysis described above, a second, more comprehensive approach was employed. In this second phase of data exploration, cluster maps were generated for each age-group: point-wise running paired t-tests (2-tailed) between the multisensory and sum responses were performed at each time point on data from each electrode. Differences were only considered when at least 10 consecutive data points (≈19.5 ms at a 512-Hz sampling rate) met a 0.05 alpha criterion. This approach has the potential to provide a fuller description of the data, which can also serve for hypothesis generation in future studies. The suitability of this method for assessing reliable effects and controlling for multiple comparisons is discussed elsewhere (Murray et al. 2001; Molholm et al. 2002).
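The point-wise procedure with its consecutive-sample criterion can be sketched as follows for one electrode in one age-group. With 512-Hz sampling, each sample lasts 1000/512 ≈ 1.95 ms, so 10 consecutive samples span about 19.5 ms. The sketch assumes waveforms stored as participants × samples lists; the caller supplies the two-tailed critical |t| for the group's degrees of freedom (for example, about 2.12 for 16 df at α = 0.05), since the Python standard library has no t distribution. Function names and the toy data are hypothetical.

```python
import math
from statistics import mean, stdev

def paired_t(x, y):
    """Paired-samples t statistic for x - y."""
    d = [a - b for a, b in zip(x, y)]
    md, sd = mean(d), stdev(d)
    if sd == 0:
        return 0.0 if md == 0 else math.copysign(math.inf, md)
    return md / (sd / math.sqrt(len(d)))

def significant_runs(av, summed, t_crit, min_run=10):
    """av, summed: participant x sample waveforms for one electrode.
    Returns inclusive (start, end) sample indices of runs of at least
    min_run consecutive samples with |t| >= t_crit."""
    n_samples = len(av[0])
    sig = [abs(paired_t([p[i] for p in av], [p[i] for p in summed])) >= t_crit
           for i in range(n_samples)]
    runs, start = [], None
    for i, flag in enumerate(sig + [False]):  # sentinel closes a trailing run
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            if i - start >= min_run:
                runs.append((start, i - 1))
            start = None
    return runs
```

Isolated significant samples, or runs shorter than `min_run`, are discarded, which is what guards against spurious point-wise effects.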

Results

Behavioral

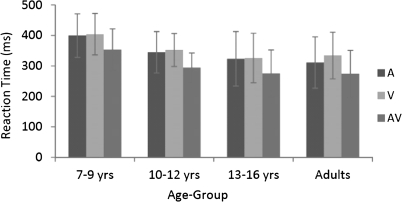

A 2-way mixed-design ANOVA revealed a main effect of age-group on reaction time (F3,58 = 4.346, P < 0.01), due to faster reaction times as age increased. There was also a main effect of stimulus condition (F2,116 = 176.052, P < 0.01), reflecting faster reaction times to the multisensory condition than to either the auditory-alone or the visual-alone condition. There was no interaction between age-group and stimulus condition, and as can be seen in Figure 1, reaction times followed a similar pattern across the 4 age-groups. Planned comparisons between each of the 2 unisensory conditions and the multisensory condition (Table 1) confirmed the presence of a robust RSE in all age-groups. Individual subject analyses comparing reaction times to each of the unisensory conditions with reaction times to the multisensory condition revealed an RSE, whereby responses were significantly faster to the multisensory condition than to the fastest unisensory condition, for all but 2 children (one in the 7–9 year age-group and one in the 10–12 year age-group).

Figure 1.

Mean reaction times (and SD) for auditory (A), visual (V), and AV conditions across age-groups.

Table 1.

Group data from t-tests comparing mean reaction times to the multisensory stimulus condition with those to each of the unisensory stimulus conditions (auditory-alone and visual-alone)

| Age-group | Multisensory versus auditory-alone | Multisensory versus visual-alone |
|---|---|---|
| 7- to 9-year olds | t16 = 5.597, P < 0.001 | t16 = 12.082, P < 0.001 |
| 10- to 12-year olds | t14 = 6.582, P < 0.001 | t14 = 14.574, P < 0.001 |
| 13- to 16-year olds | t16 = 8.807, P < 0.001 | t16 = 24.009, P < 0.001 |
| Adults | t12 = 10.706, P < 0.001 | t12 = 33.548, P < 0.001 |

Note: All 4 predefined age-groups responded significantly faster to the multisensory stimulus condition than to either of the unisensory stimulus conditions.

Percent hits followed a pattern that was similar to that of the reaction time data. A 2-way mixed-design ANOVA revealed a main effect of age group on percent hits (F3,58 = 10.727, P < 0.01), due to higher percent hits as age increased (Table 2). There was also a main effect of stimulus condition (F2,116 = 129.570, P < 0.01). This reflected higher percent hits to the multisensory condition compared with either the auditory-alone or visual-alone conditions. Age-group and stimulus condition interacted (F6,116 = 2.649, P = 0.019) because adult performance reached ceiling and therefore did not differ as a function of stimulus condition. Percent hits for each of the stimulus conditions for each of the age-groups are reported in Table 2.

Table 2.

Percent hits (percent of trials in which a response occurred within the valid reaction time range) and SD for each stimulus condition for each age-group

| Age-group | Multisensory, % (SD) | Auditory-alone, % (SD) | Visual-alone, % (SD) |
|---|---|---|---|
| 7- to 9-year olds | 93 (4.32)ᵃ | 89 (5.79) | 89 (6.80) |
| 10- to 12-year olds | 96 (4.86)ᵃ | 93 (6.24) | 92 (5.64) |
| 13- to 16-year olds | 97 (3.68)ᵃ | 96 (5.07) | 95 (6.10) |
| Adults | 99 (0.65) | 99 (0.83) | 99 (0.88) |

Note: Percent hits to the auditory-alone condition did not differ significantly from percent hits to the visual-alone condition for any of the age-groups.

ᵃPercent hits to the multisensory condition were significantly higher than to either of the unisensory conditions (P ≤ 0.05).

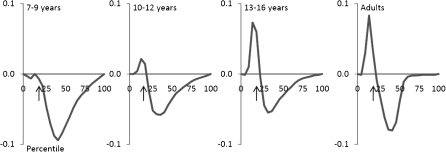

Testing the Race Model

Individual subject analysis of the reaction time distributions revealed violation of the race model for all but 6 children: 5 from the 7–9 year age-group (the youngest age-group) and one from the 10–12 year age-group. To test the reliability of these violations, the data from each age-group for each of the quantiles (corresponding to the cumulative distribution of the fastest to the slowest reaction times, over 20 “quantiles”) were submitted to t-tests. These revealed significant race model violations in the 10- to 12-year olds, the 13- to 16-year olds, and the adults but not in the 7- to 9-year-old age-group, owing to variability in the quantile in which violation was seen (Fig. 2). In the 10- to 12-year olds, group violations of the race model reached significance in the fourth quantile; in the 13- to 16-year-old group, in the fourth, fifth, and sixth quantiles; and in the adult group, in the third, fourth, and fifth quantiles. A post hoc ANOVA of race model violation in the fourth quantile, where it was most consistently violated across participants in all age-groups, yielded a main effect of age-group (F3,61 = 6.007, P = 0.001), with Fisher’s Least Significant Difference tests showing that the 2 oldest groups exhibited significantly more violation than the 2 youngest groups.
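A group-level version of the quantile-wise test can be sketched as follows, assuming each participant contributes one Miller-inequality value per quantile (positive values indicating violation). The text does not state whether these t-tests were one- or two-tailed; this sketch tests the directional hypothesis that violation exceeds zero against a caller-supplied critical value. Names and values are hypothetical.

```python
import math
from statistics import mean, stdev

def one_sample_t(values):
    """t statistic for the mean of values against zero."""
    m, sd = mean(values), stdev(values)
    if sd == 0:
        return 0.0 if m == 0 else math.copysign(math.inf, m)
    return m / (sd / math.sqrt(len(values)))

def group_violation_tests(violations, t_crit):
    """violations: participant x quantile matrix of Miller-inequality values.
    Returns (quantile number, t, t > t_crit) for each quantile."""
    n_q = len(violations[0])
    results = []
    for q in range(n_q):
        t = one_sample_t([subj[q] for subj in violations])
        results.append((q + 1, t, t > t_crit))
    return results

# Hypothetical values for 4 participants and 2 quantiles.
demo = [[0.10, 0.00], [0.12, 0.01], [0.08, -0.01], [0.11, 0.00]]
res = group_violation_tests(demo, t_crit=2.35)
```

In this toy example, the first quantile shows a consistent positive violation across participants, whereas the second averages to zero.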

Figure 2.

Miller inequality for the 4 age-groups. Values greater than zero signify violation of the race model. Arrows indicate the approximate location of the fourth quantile, where the race model was most consistently violated.

Electrophysiological Data

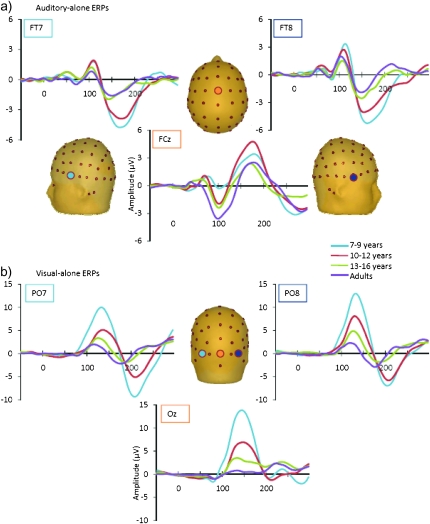

Unisensory Responses

In all age-groups, the auditory-alone ERP was characterized by the typical P1-N1-P2 complex; however, the timing and topography of these components varied with age (see Fig. 3a). Specifically, the peak latency of the frontocentrally focused auditory P1 decreased as age increased: it peaked at 80 ms in the 7- to 9-year olds, 74 ms in the 10- to 12-year olds, 68 ms in the 13- to 16-year olds, and 40 ms in adults. Additionally, the amplitude of the auditory P1 component was larger in the 2 younger groups than in the older groups, which is consistent with previous findings of larger P1 amplitude in children around 9–10 years of age (Ponton et al. 2000; Ceponiene et al. 2002). In accordance with previous findings (e.g., Ceponiene et al. 2002), the auditory N1 was largest at frontocentral sites for the 3 oldest groups (the adults, 13- to 16-year olds, and 10- to 12-year olds), peaking at approximately 105 ms in these 3 groups. In contrast, the frontocentrally focused auditory N1 in the youngest group (7- to 9-year olds) appeared smaller and slightly later than in the older age-groups, peaking at approximately 115 ms. The lateral N1 appeared largest and most well defined in the younger groups (e.g., Gomes et al. 2001), peaking at approximately 170 ms in the 7- to 9-year olds and at 165 ms in the 10- to 12-year olds. In the 13- to 16-year olds and the adults, the relatively small lateral N1 peaked at approximately 160 ms and 150 ms, respectively. The auditory P2 was centrally focused and had similar peak latencies across the groups (180 ms in the 7- to 9-year-old and 10- to 12-year-old groups, 165 ms in the 13- to 16-year-old group, and 175 ms in the adults).

Figure 3.

Grand averaged unisensory auditory and visual ERPs are depicted in panels (a) and (b), respectively, for the 4 age-groups. Auditory-alone responses are shown from representative frontocentral and frontotemporal electrode sites (FT7, FT8, and FCz) and visual-alone responses from representative occipital sites (PO7, PO8, and Oz).

The overall morphology of the response elicited by the visual-alone stimulus was quite similar across age-groups. Overall amplitude decreased with age, and there were small changes in the peak latencies of the P1 and N1 responses (see Fig. 3b), as is typically observed in developmental data (e.g., Lippe et al. 2007). The visual P1 appeared maximal over occipital regions in the 3 pediatric groups, peaking at approximately 140 ms in the 7- to 9-year-old group, 145 ms in the 10- to 12-year-old group, and 127 ms in the 13- to 16-year-old group. In adults, the visual P1 was more parietally focused and peaked at approximately 137 ms. The P1 peaked somewhat later than is typical; since this delay was seen across all age-groups, it is likely due to stimulation parameters. The visual N1 was focused over lateral occipital electrode sites and peaked between 182 and 207 ms, with latency decreasing as a function of age. These unisensory “components” (P1, N1, etc.) are thought to represent distinct processing stages and to reflect multiple neuronal generators within the relevant primary and association cortices (auditory cortical regions for the auditory componentry and visual cortical regions for the visual componentry) (e.g., Naatanen and Picton 1987; Di Russo et al. 2002; Foxe and Simpson 2002). It is also assumed that the complexity of the information extracted from the signal increases with response latency (Foxe and Simpson 2002; Murray et al. 2006). The developmental trajectories of auditory and visual sensory responses have been described and analyzed in great detail elsewhere (e.g., Ceponiene et al. 2002; Kuefner et al. 2010). Since the present unisensory data appear highly similar to those reported in earlier studies, and the focus of this investigation is on multisensory processing, they are not considered further.

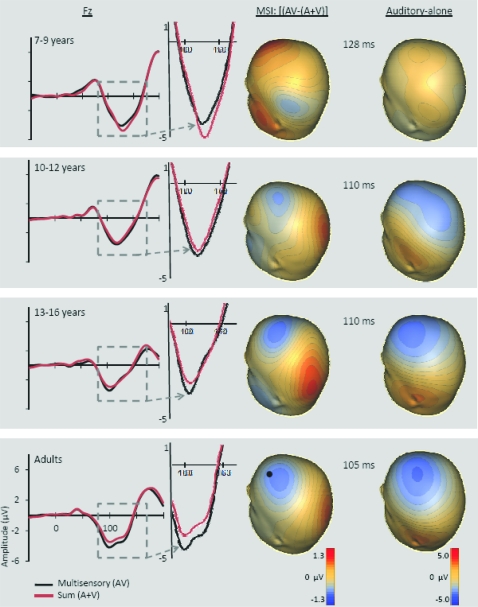

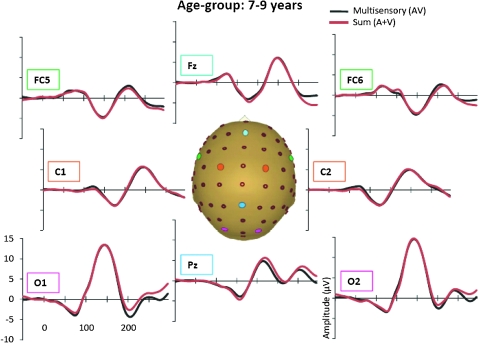

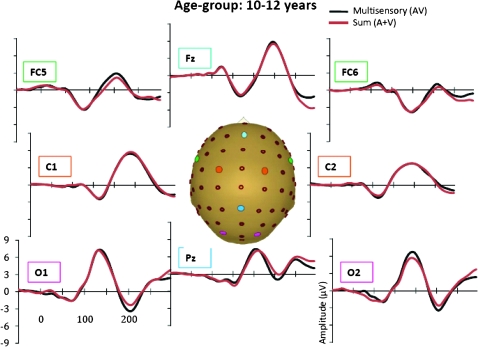

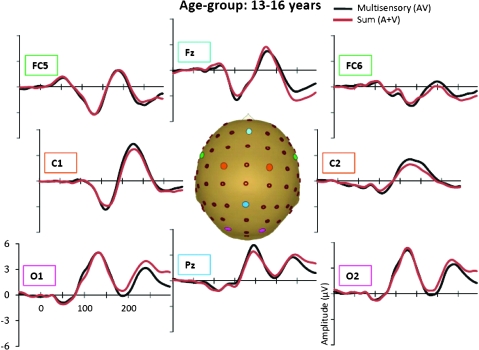

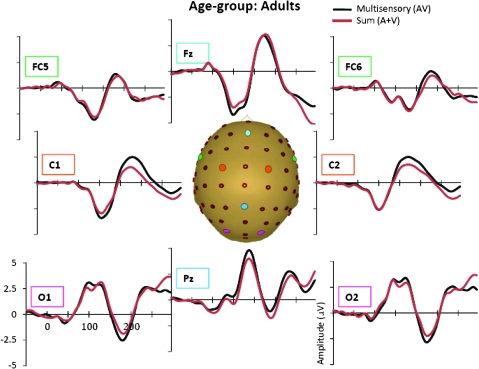

Multisensory Responses

In the AV response, 6 distinct spatiotemporal patterns between 0 and 250 ms were readily identified. These resembled the auditory and visual unisensory componentry described above and likewise varied across age-groups in their precise timing and topography. These spatiotemporal patterns were used to define the time windows and scalp regions used to test for multisensory effects; they are delineated in Table 3 for each age-group. Multisensory and sum ERPs were compared using a mixed-design ANOVA for each time interval of interest, with the between-subjects factor of age-group and the within-subjects factors of condition (sum vs. multisensory) and region. For the analysis of data around the time and region of the frontocentral auditory N1 (frontocentral, 95–138 ms), there was a significant main effect of condition (F1,58 = 6.591, P = 0.013) and an interaction of condition and age-group (F3,58 = 3.966, P = 0.012). Follow-up tests revealed that the amplitude of the multisensory response was significantly more negative than that of the sum response in the 2 oldest age-groups (13- to 16-year olds and adults) but not in the 2 younger age-groups. Numerically, this pattern was reversed in the youngest group (7- to 9-year olds), with the sum response more negative than the multisensory response, but this difference was not significant at the tested latency. Electrode Fz best illustrates this frontocentral condition-by-age-group interaction at about 100–140 ms (see Fig. 4, where the effect is highlighted, and Figs 5–8). Scalp voltage maps of this effect revealed highly similar scalp distributions for the 3 oldest age-groups, with a stable positive–negative configuration suggestive of primary neural sources in parietal cortex (see Fig. 4). The negative portion of this configuration had a slight leftward bias.
The data from the youngest age-group did not conform to this pattern; since this effect was not significant, we do not describe it further. The auditory-alone voltage maps are illustrated at the same latencies for comparison; for the 3 oldest groups, they show a clearly different distribution, consistent with neural sources in the temporal lobe in the region of auditory cortex. For the youngest group, the auditory-alone response was close to baseline at the tested latency and hence does not show an interpretable distribution. In the latency and region of the auditory P2 (frontocentral, 165–210 ms), there was a marginal trend toward an interaction between condition and age-group (F3,58 = 2.548, P = 0.065); post hoc tests showed that this reflected a significant difference between the multisensory and sum responses in the adult group only (P = 0.026). Finally, there was a main effect of condition around the latency and region of the visual N1 (parieto–temporal–occipital region, 166–210 ms), with the multisensory response more negative-going than the sum response (F1,58 = 4.280, P = 0.045); this effect is illustrated in Figures 5–8 and Supplementary Figure 1 at electrodes O1 and O2. No additional main effects or interactions involving the factor of condition were revealed by these analyses.
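The multisensory-versus-sum comparison rests on the additive model: if auditory and visual inputs were processed independently, the AV response should equal the sum of the 2 unisensory responses (A + V), so a reliable AV − (A + V) difference is taken as evidence of interaction. A minimal sketch of how that difference could be scored as a mean amplitude in a time window over a region-averaged waveform follows. The function names, the data layout (lists of per-electrode waveforms), the flat example waveforms, and the use of a simple mean amplitude are assumptions; only the frontocentral 95–138 ms window comes from the text.

```python
def region_average(waveforms):
    """Average a list of equal-length per-electrode waveforms into one waveform."""
    n = len(waveforms)
    return [sum(samples) / n for samples in zip(*waveforms)]


def mean_amplitude(times_ms, erp_uv, window_ms):
    """Mean of the samples whose time falls inside window_ms (inclusive)."""
    lo, hi = window_ms
    vals = [v for t, v in zip(times_ms, erp_uv) if lo <= t <= hi]
    if not vals:
        raise ValueError("no samples fall inside the window")
    return sum(vals) / len(vals)


def msi_effect(times_ms, av, a, v, window_ms=(95, 138)):
    """Mean of AV - (A + V) in window_ms for region-averaged waveforms.

    Negative values mean the multisensory response is more negative-going
    than the sum response, as reported for the 2 oldest groups at the
    frontocentral N1.
    """
    summed = [x + y for x, y in zip(a, v)]  # the "sum" response
    diff = [m - s for m, s in zip(av, summed)]
    return mean_amplitude(times_ms, diff, window_ms)


times = [i * 1000 / 512 for i in range(200)]  # hypothetical 512 Hz epoch, 0 to ~388 ms
# Hypothetical frontocentral electrodes, flat waveforms for clarity.
a = region_average([[-1.0] * 200, [-1.2] * 200])
v = region_average([[0.2] * 200, [0.0] * 200])
av = region_average([[-1.5] * 200, [-1.3] * 200])
effect = msi_effect(times, av, a, v)  # about -0.4: AV more negative than A + V
```

Averaging electrodes before taking the difference gives the same result as differencing each electrode and then averaging, since both operations are linear.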

Figure 4.

For each of the 4 age-groups, the grand-averaged ERPs for the multisensory (black) and sum (red) responses at electrode Fz (location indicated by a black dot) are depicted on the left side of the figure. The response from ∼100–140 ms is enlarged to show the group by condition interaction effect. On the right side, voltage maps depict the scalp distribution of this effect (i.e., the difference between the multisensory and sum responses) and the corresponding auditory-alone response within the latencies used in the ANOVA and correlation analysis.

Figure 5.

Grand-averaged ERPs for the multisensory (black) and sum (red) responses for the 7- to 9-year-old age-group.

Figure 6.

Grand-averaged ERPs for the multisensory (black) and sum (red) responses for the 10- to 12-year-old age-group.

Figure 7.

Grand-averaged ERPs for the multisensory (black) and sum (red) responses for the 13- to 16-year-old age-group.

Figure 8.

Grand-averaged ERPs for the multisensory (black) and sum (red) responses for the adult age-group.

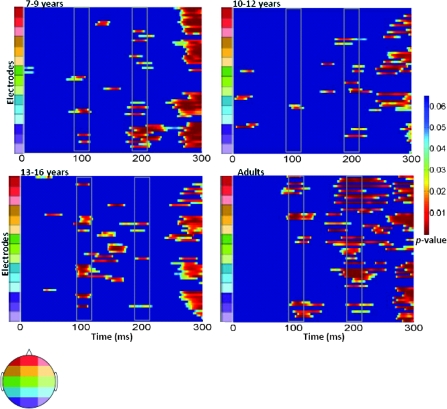

Cluster Based t-Tests on the Multisensory Versus Sum Responses

A more comprehensive picture of the spatiotemporal characteristics of the AV interactions can be gained from the secondary analysis, in which restricted cluster plots were generated from t-tests between the multisensory and sum conditions at all scalp electrodes for data points from 0 to 300 ms poststimulus onset (Fig. 9). Three main clusters of AV interactions were apparent, although not all were observed in each age-group. In contrast to an earlier study using a highly similar paradigm (Molholm et al. 2002), no early MSI (earlier than 100 ms) was observed in our adult group in the cluster plot analysis, even when the statistical criterion was relaxed from 10 to just 5 consecutive significant data points. We suspect that this lack of early MSI (at least in our adult group) reflects paradigm differences between the studies. (In their 2002 study, Molholm and colleagues titrated the location of stimulus presentation so that a robust C1 visual response was observed in each individual subject; this procedure was not followed here because of the time constraints imposed by working with a pediatric population.) Consistent with the primary analysis, AV interactions were seen over frontocentral scalp regions from about 100 to 120 ms in the cluster plots of the youngest group and the 2 oldest groups (the 13- to 16-year olds and the adults) but not in the 10- to 12-year-old group. Examination of the waveforms (electrode Fz, Fig. 4) clarifies that the frontal AV interactions seen in the cluster plot of the 7- to 9-year-old group are in the opposite direction (with the sum more negative than the multisensory response) from those of the other age-groups. The cluster plots reveal an additional pattern of AV interactions in the 100–150 ms time window over parietal scalp in the 2 oldest groups (the 13- to 16-year olds and the adults) but not in the 2 younger groups (see electrode Pz in Figs 5–8). The next clear AV interactions were seen between 190 and 240 ms.
In the adult group, interactions in this time frame were widespread, appearing over anterior, central, and parietal regions (e.g., see Fig. 8, electrodes C1, C2, and Pz). Consistent with the a priori analysis, the adult group also exhibited AV interactions over frontocentral scalp in this time frame. In the 3 child age-groups, the interactions at around 200 ms were somewhat lateralized over frontal and central regions (see FC5 and FC6 in Figs 5–8), which may explain why they were not picked up in the a priori analysis (the “auditory P2” test). The cluster plots reveal an additional region of AV interactions around 200 ms over parietal scalp in the 7- to 9-year olds (Fig. 5, electrode Pz). Finally, all 4 groups showed widespread AV interactions over multiple scalp regions from 275 ms onward. These most likely reflect cortical activity related to the motor response made after each stimulus: because each unisensory response contains its own motor-related activity, this activity is represented twice in the sum response, which was accordingly larger in this time frame.

Figure 9.

Significant P values over time for 64 scalp electrodes from running t-tests comparing the multisensory and sum ERPs for each of the 4 age-groups. Differences between the 2 conditions were only considered when at least 10 consecutive data points (≈19.5 ms at a 512 Hz sampling rate) met a 0.05 alpha criterion. P values are differentiated with the color scale shown to the right; blue indicates an absence of significant P values. Time is plotted on the x-axis from 0 to 300 ms, and electrodes on the y-axis. Starting from the bottom of the graph, the electrodes are divided into sections from posterior to anterior scalp. Each color represents 4–5 electrodes, whose relative positions are shown on the corresponding head to the left. Solid gray boxes around 100 ms and 200 ms indicate the 2 main time windows discussed in the text.
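The cluster plots combine 2 steps: a paired t-test between multisensory and sum amplitudes at every electrode and time point, followed by a temporal criterion that keeps only runs of at least 10 consecutive significant samples. The sketch below shows both steps for a single electrode. To stay dependency-free, it compares |t| with a critical value supplied by the caller instead of computing P values; the constant `T_CRIT_DF16 = 2.120` (two-tailed alpha = 0.05 with 16 df) assumes a hypothetical group of 17 participants, and all function names are illustrative rather than the authors' code.

```python
import math

SAMPLE_MS = 1000 / 512  # 512 Hz sampling rate from the Figure 9 caption
MIN_RUN = 10            # consecutive significant samples required (10 * 1000/512 = 19.53125 ms)
T_CRIT_DF16 = 2.120     # hypothetical: two-tailed alpha = 0.05, df = 16 (n = 17)


def paired_t(diffs):
    """One-sample t statistic on within-subject differences (AV - sum)."""
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    if var == 0:
        # No between-subject variability: treat any nonzero mean as infinitely reliable.
        return math.copysign(math.inf, mean) if mean else 0.0
    return mean / math.sqrt(var / n)


def running_significance(av, summed, t_crit):
    """av, summed: [subject][sample] amplitudes at one electrode.

    Returns one boolean per sample: True where |t| exceeds t_crit.
    """
    n_samples = len(av[0])
    sig = []
    for k in range(n_samples):
        diffs = [a[k] - s[k] for a, s in zip(av, summed)]
        sig.append(abs(paired_t(diffs)) > t_crit)
    return sig


def apply_run_criterion(sig, min_run=MIN_RUN):
    """Keep only runs of at least min_run consecutive True samples."""
    kept = [False] * len(sig)
    start = None
    for i, s in enumerate(sig + [False]):  # sentinel closes a trailing run
        if s and start is None:
            start = i
        elif not s and start is not None:
            if i - start >= min_run:
                kept[start:i] = [True] * (i - start)
            start = None
    return kept
```

Applied to every electrode, the output of `apply_run_criterion` corresponds to the colored (non-blue) cells of a cluster plot. Relaxing `min_run` to 5, as was tried for the adult group, simply admits shorter runs.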

Correlation Analysis

A post hoc correlation analysis was performed to test for a relationship between the behavioral and neurophysiological measures of AV integration. Specifically, we computed the correlation between race model violation in the fourth quantile, where the race model was most consistently violated across the full set of participants, and the difference between the multisensory and sum-evoked potentials in the frontocentral region between 95 and 138 ms (the same temporal window and scalp regions used for the ANOVAs; see Table 3). This revealed a significant positive correlation (r60 = 0.352, P = 0.005). To see how this might be related to stage of development, we also examined whether race model violation was related to age and found a significant positive correlation (r60 = 0.478, P < 0.001).
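The subscript in r60 gives the degrees of freedom (n − 2), implying 62 participants, which matches the 58 error degrees of freedom of the 4-group ANOVA. As a consistency check, a Pearson r can be converted to a t statistic with t = r√df / √(1 − r²). The sketch below does this in plain Python; the function names are illustrative, and because this section does not state the sign convention of the neurophysiological measure (AV − sum or sum − AV), the example makes no claim about it.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, df):
    """t statistic for testing r against zero, with df = n - 2."""
    return r * math.sqrt(df) / math.sqrt(1 - r * r)


# Reported values: r60 = 0.352 (race model vs. MSI) and r60 = 0.478 (race model vs. age).
t_msi = r_to_t(0.352, 60)  # about 2.91
t_age = r_to_t(0.478, 60)  # about 4.22
```

Both t values exceed the two-tailed 0.01 critical value for 60 df (about 2.66), in line with the reported P = 0.005 and P < 0.001. If SciPy is available, an exact two-tailed P value could be obtained with `2 * scipy.stats.t.sf(abs(t), 60)`.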

Discussion

Just how the human brain integrates multisensory information during the childhood years and how this develops over time has not been well characterized to date. Here, we undertook a cross-sectional investigation to examine the developmental trajectory of AV MSI, using related electrophysiological and behavioral metrics and sampling from 4 age-groups from 7 years of age to adulthood. These data revealed maturation in the manner in which very basic auditory and visual stimuli are integrated during a simple reaction time task, both in terms of facilitation of behavior and in terms of the underlying neural processes.

For the behavioral data, simply looking at mean reaction times, all groups exhibited a significant speeding of response time when the visual and auditory stimuli were presented simultaneously compared with when they were presented alone. Because such facilitation can also arise from probability summation, race model violation was used to test whether multisensory processing had contributed to it. This test indicated that multisensory facilitation of behavior was still clearly immature at 8 years of age (in the 7- to 9-year-old group) but seemed to have reached mature levels by about 14 years of age, with similar patterns of facilitation and race model violation for the 13- to 16-year-old group and the adult group (see Fig. 2). A more complete picture of the development of multisensory facilitation emerges from the individual subject data in each age-group. On an individual basis, all participants in the 2 oldest age-groups (13–16 years and adults) showed race model violation. Furthermore, across these 2 groups, the magnitude of violation was highly similar, as suggested by Figure 2 and by the follow-up test on data from the fourth quantile. In contrast, in the youngest group, race model violation was seen in only 12 of the 17 participants, and for these 12, the magnitude of violation tended to be very small and varied across participants in where in the reaction time distribution it occurred (resulting in no effect across participants; see Fig. 2). For the 10- to 12-year olds, the second-youngest age-group examined, there was significant race model violation in all but one participant, but, as in the youngest group, violation was of lesser magnitude than in the 2 older groups, and there was greater variability in where in the reaction time distribution violation was seen.
Thus, the behavioral data suggest a gradual fine-tuning of the ability to benefit from the simultaneous presentation of auditory and visual response cues. Using a very similar paradigm, Barutchu, Crewther, et al. (2009) failed to find a consistent increase in race model violation as a function of age for children from 6 to 11 years of age. In their data, there was no evidence for race model violation in either the 10- to 11-year-old age-group or the 6-year-olds, but there was in the intervening age-groups and in adults. As in the present findings, race model violation was smaller and more variable for the children than for a group of young adults. Their failure to observe a relationship as systematic as the one seen here might reflect a difference in how the race model test was implemented. Here, the reaction time distribution was divided into 20 quantiles and the percentage of responses falling into each was determined on a within-subject basis, as in our previous tests of the race model (Molholm et al. 2002, 2004; Murray, Molholm, et al. 2005); Barutchu and colleagues, by contrast, fit the reaction times to only 10 probability values, from 0.05 to 0.95. Furthermore, these researchers presented just 40 stimuli of each class (120 total), which would provide only 4 trials of each stimulus type per decile, whereas participants in this study received a minimum of 335 trials of each type (>1000 total).
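Miller's race model inequality states that, if auditory and visual signals are processed in separate channels that race to trigger the response, the cumulative probability of an AV response by time t cannot exceed the sum of the unisensory probabilities: P(RT_AV ≤ t) ≤ P(RT_A ≤ t) + P(RT_V ≤ t). The sketch below evaluates this bound at quantile points of one participant's pooled reaction times, in the spirit of the within-subject quantile approach described above. The nearest-rank quantile rule, the use of pooled RTs to place the evaluation points, the toy reaction times, and all function names are assumptions rather than the authors' exact procedure.

```python
import math


def ecdf(rts, t):
    """Proportion of reaction times at or below t."""
    return sum(1 for r in rts if r <= t) / len(rts)


def quantile_points(values, n_bins=20):
    """Upper edges of n_bins equal-probability bins (nearest-rank rule)."""
    s = sorted(values)
    n = len(s)
    return [s[max(0, math.ceil(k * n / n_bins) - 1)] for k in range(1, n_bins + 1)]


def race_model_violation(rt_a, rt_v, rt_av, n_bins=20):
    """AV cumulative probability minus the race model bound at each quantile point.

    Positive entries mean P(RT_AV <= t) > P(RT_A <= t) + P(RT_V <= t),
    i.e., the race model is violated at that point.
    """
    pooled = rt_a + rt_v + rt_av
    return [
        ecdf(rt_av, t) - (ecdf(rt_a, t) + ecdf(rt_v, t))
        for t in quantile_points(pooled, n_bins)
    ]


# Hypothetical reaction times (ms) for one participant; 5 bins because there are so few trials.
rt_a = [300, 320, 340, 360, 380]
rt_v = [310, 330, 350, 370, 390]
rt_av = [200, 210, 220, 230, 240]
violation = race_model_violation(rt_a, rt_v, rt_av, n_bins=5)
```

In this toy example the AV responses are fast enough that the bound is violated at the 3 earliest evaluation points and respected at the last 2. The same per-bin logic shows why trial counts matter: with 40 trials per condition spread over 10 bins, each bin's proportion rests on only 4 trials, so small shifts in a few reaction times can create or erase an apparent violation.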

In line with the behavioral findings, brain measures of AV integration also indicated a systematic relationship between age-group and multisensory processing. This was clearly evident in the time frame of the frontocentrally focused auditory N1. Here, in the youngest group, the multisensory response was less negative-going than the sum response, whereas in the 2 oldest age-groups this relationship was reversed, with the multisensory response clearly more negative-going (Fig. 4). In the intervening age-group of 10- to 12-year olds, the multisensory response was just barely more negative-going than the sum response (Fig. 4), suggestive of a transitional stage. This neurophysiological measure showed a significant positive correlation with the behavioral measure of MSI, suggesting that the underlying brain processes (or a subset of them) contributed to the observed multisensory behavioral facilitation.

Examination of the scalp distribution of this MSI effect in the time frame of the auditory N1 suggested parietal generators for the 3 oldest age-groups. The parietal region is known to play a key role in the integration of multisensory inputs in both humans and nonhuman primates. The intraparietal sulcus (IPS) in particular has been implicated in multisensory processing across a number of paradigms, types of stimuli, and sensory combinations (e.g., auditory, visual, tactile, and proprioceptive inputs). This region is involved in sensorimotor transformations and the coordination of multiple spatial reference frames (e.g., retinotopic, somatotopic) (e.g., Anderson et al. 1997; Mullette-Gillman et al. 2009) and has been shown to be part of neural networks involved in the processing of AV speech (e.g., Benoit et al. 2010), cross-modal spatial attention (e.g., Teder-Salejarvi et al. 1999; Macaluso et al. 2003), AV object recognition (Werner and Noppeney 2010), and visuohaptic object recognition (Kim and James 2010), among other multisensory processes (see e.g., Calvert 2001). The superior portion of the parietal lobe is also involved in multisensory processing, and it has been suggested that in humans this region may represent the homolog of a portion of the IPS in nonhuman primates (Molholm et al. 2006; Moran et al. 2008).

The protracted maturation of cortical MSI seen in these data may also be related to the maturational trajectories of the underlying unisensory systems. For example, while the inner ear and brainstem auditory structures are remarkably well developed at birth and reach maturity by about 6 months of age, changes in the P1 and N1 of the auditory-evoked response have led to the argument that maturation of auditory cortex is a decade-long process extending into adolescence (e.g., Ponton et al. 2000; Moore and Linthicum 2007). If unisensory input–output functions are not yet stable, the processes these inputs feed into (e.g., MSI processes) could also be affected. Another possibility, not mutually exclusive with the first, is that such protracted maturation reflects the need for prolonged plasticity in order to learn the local statistical relationships among the many multisensory inputs encountered on a daily basis (e.g., Yu et al. 2010). This fits well with evidence for changes in how multisensory inputs are weighted over development (Gori et al. 2008), as well as with preliminary data from our laboratory suggesting developmental changes in the extent of multisensory gain relative to unisensory stimulation.

Though not specifically investigated to date, well-known developmental changes in attentional capacity (Paus et al. 1990; Posner and Rothbart 1998; Konrad et al. 2005) are likely to play a significant role in the ability to use multiple information sources and therefore in how different sensory inputs are weighted during task performance. The possibility that maturational differences in attentional capacity contributed to some of the differences in MSI observed here is in line with our behavioral data, in which overall performance improved with age. However, differences in performance could reflect a number of variables, including maturational effects on processing speed (Kail 1991; Fry and Hale 2000; Luna et al. 2004), the development of higher-order, goal-directed planning and execution of behavior (Welsh et al. 1991; Anderson 2002; Luciana et al. 2005), and attention. Future work will be required to parse these potential contributions to the maturation of multisensory processing.

Higher-order perceptual processes, such as object and speech recognition, depend on intact lower-level sensory processing (Doniger, Silipo, et al. 2001; Fitch and Tallal 2003; Leitman et al. 2007). If the corticocortical pathways that mediate basic multisensory processing are in flux throughout middle childhood, as the current neurophysiological findings suggest, then later stages of multisensory processing responsible for object and speech recognition are likely to be affected as well. Given the apparent ease with which typically developing children recognize multisensory objects and speech, it is tempting to assume that the sensory and perceptual processes underlying this fundamental skill are mature by middle childhood. However, the present data suggest that the basic brain processes supporting multisensory object processing and speech recognition, for example, are not the same in children as in adults. These important perceptual processes may be less automatic in children and may rely more heavily on later, more effortful stages of multisensory processing.

While the electrophysiological data afforded a straightforward interpretation of multisensory processes in the time frame of the frontocentral auditory N1, this was less clearly the case for the next prominent multisensory modulation, at about 200 ms poststimulus onset. The a priori test corresponding to the timing and topography of the visual N1 showed a main effect of condition and no interaction with group, but the cluster maps indicated that modulation in this time frame was most evident in the youngest and oldest age-groups (7–9 years and adults), with very different distributions in each (Fig. 9). In adults, AV interactions around 200 ms were widespread, appearing across frontal, central, and parietal areas. In the 7- to 9-year-old age-group, effects between about 180 and 220 ms were primarily observed over parietal and occipital regions. One possible explanation for these differential MSI effects is that they fall within the time frame associated with automatic visual “object recognition” processes (based on electrophysiological studies; e.g., Doniger, Foxe, et al. 2001; Murray, Foxe, et al. 2005). Given this, it may be that the youngest age-group treats the inputs as objects, resulting in processing focused over visual scalp regions (though not over the more lateral posterior scalp regions that might be expected for object processing), whereas the oldest group treats them as “potential” objects, resulting in more widespread activation that includes “higher order” frontal areas. Such differences could reflect developmental changes in the degree of specialization of object processing. Although somewhat speculative, this explanation is put forth in the spirit of generating models of the development of multisensory processing for directed hypothesis testing.

Conclusions

With the use of behavioral probes, it has been established that in humans the processes underlying MSI continue to mature well into middle childhood, if not beyond. To the best of our knowledge, however, the changes in brain activity that accompany these behavioral changes have not previously been documented. Here, electrophysiology was used to characterize the developmental trajectory of the brain processes underlying AV multisensory processing over middle childhood and to relate it to developmental changes in the extent to which auditory and visual cues are integrated to speed performance in a simple reaction time task. The data show that changes in the brain processes underlying MSI in the 100–120 ms time frame are systematically related to increased multisensory gains in performance (as indexed by race model violation), with the latter increasing as a function of age. This is consistent with protracted plasticity in a dynamic system that continues to update the relative significance of multidimensional inputs from the environment. These data provide an important point of reference against which to assay the development of multisensory processes in clinical populations in which integration problems are suspected.

Funding

This work was supported by the National Institute of Mental Health at the National Institutes of Health (MH085322 to S.M. and J.J.F.), pilot grants from Cure Autism Now (to J.J.F.), and The Wallace Foundation (to J.J.F. and S.M.). A.B.B. received additional support from a Doctoral Student Research grant from the Graduate Center of the City University of New York, and N.R. from a Post Doctoral Research Grant from the Fondation du Québec de Recherche sur la Société et la Culture.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/

Acknowledgments

We extend our heartfelt appreciation to Dr Pejman Sehatpour for his help in ERP data analysis and to Daniella Blanco and Dr Charles Weaver for their help in ERP data collection. Finally, we are truly indebted to the families that so generously committed their time to this effort. Conflict of Interest: None declared.

References

- Anderson P. Assessment and development of executive function (EF) during childhood. Child Neuropsychol. 2002;8:71–82. doi: 10.1076/chin.8.2.71.8724.

- Anderson RA, Snyder LH, Bradley DC, Xing J. Multimodal representation of space in the posterior parietal cortex and its use in planning movements. Annu Rev Neurosci. 1997;20:303–330. doi: 10.1146/annurev.neuro.20.1.303.

- Bahrick LE, Hernandez-Reif M, Flom R. Development of infant learning about specific face-voice relations. Dev Psychol. 2005;41:541–552. doi: 10.1037/0012-1649.41.3.541.

- Bair WN, Kiemel T, Jeka JJ, Clark JE. Development of multisensory reweighting for posture control in children. Exp Brain Res. 2007;183:435–446. doi: 10.1007/s00221-007-1057-2.

- Barutchu A, Crewther DP, Crewther SG. The race that precedes coactivation: development of multisensory facilitation in children. Dev Sci. 2009;12:464–473. doi: 10.1111/j.1467-7687.2008.00782.x.

- Barutchu A, Danaher J, Crewther SG, Innes-Brown H, Shivdasani MN, Paolini AG. Audiovisual integration in noise by children and adults. J Exp Child Psychol. 2009;105:38–50. doi: 10.1016/j.jecp.2009.08.005.

- Benoit MM, Raij T, Lin FH, Jaaskelainen IP, Stufflebeam S. Primary and multisensory cortical activity is correlated with audiovisual percepts. Hum Brain Mapp. 2010;31:526–538. doi: 10.1002/hbm.20884.

- Calvert GA. Crossmodal processing in the human brain: insights from functional neuroimaging studies. Cereb Cortex. 2001;11:1110–1123. doi: 10.1093/cercor/11.12.1110.

- Carriere B, Royal DW, Perrault TJ, Morrison SP, Vaughan JW, Stein BE, Wallace MT. Visual deprivation alters the development of cortical multisensory integration. J Neurophysiol. 2007;98:2858–2867. doi: 10.1152/jn.00587.2007.

- Ceponiene R, Rinne T, Naatanen R. Maturation of cortical sound processing as indexed by event-related potentials. Clin Neurophysiol. 2002;113:870–882. doi: 10.1016/s1388-2457(02)00078-0.

- Di Russo F, Martinez A, Sereno MI, Pitzalis S, Hillyard SA. Cortical sources of the early components of the visual evoked potential. Hum Brain Mapp. 2002;15:95–111. doi: 10.1002/hbm.10010.

- Doniger GM, Foxe JJ, Schroeder CE, Murray MM, Higgins BA, Javitt DC. Visual perceptual learning in human object based recognition areas: a repetition priming study using high-density electrical mapping. Neuroimage. 2001;13:305–313. doi: 10.1006/nimg.2000.0684.

- Doniger GM, Silipo G, Rabinowicz EF, Snodgrass JG, Javitt DC. Impaired sensory processing as a basis for object-recognition deficits in schizophrenia. Am J Psychiatry. 2001;158:1818–1826. doi: 10.1176/appi.ajp.158.11.1818.

- Fitch RH, Tallal P. Neural mechanisms of language-based learning impairments: insights from human populations and animal models. Behav Cogn Neurosci Rev. 2003;2:155–178. doi: 10.1177/1534582303258736.

- Forster B, Cavina-Pratesi C, Aglioti SM, Berlucchi G. Redundant target effect and intersensory facilitation from visual–tactile interactions in simple reaction time. Exp Brain Res. 2002;143:480–487. doi: 10.1007/s00221-002-1017-9.

- Foxe JJ, Morocz IA, Murray MM, Higgins BA, Javitt DC, Schroeder CE. Multisensory auditory-somatosensory interactions in early cortical processing revealed by high-density electrical mapping. Brain Res Cogn Brain Res. 2000;10:77–83. doi: 10.1016/s0926-6410(00)00024-0.

- Foxe JJ, Simpson GV. Flow of activation from V1 to frontal cortex in humans. A framework for defining “early” visual processing. Exp Brain Res. 2002;142:139–150. doi: 10.1007/s00221-001-0906-7.

- Fry AF, Hale S. Relationships among processing speed, working memory, and fluid intelligence in children. Biol Psychol. 2000;54:1–34. doi: 10.1016/s0301-0511(00)00051-x.

- Giard MH, Peronnet F. Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J Cogn Neurosci. 1999;11:473–490. doi: 10.1162/089892999563544.

- Gomes H, Dunn M, Ritter W, Kurtzberg D, Brattson A, Kreuzer JA, Vaughan HG. Spatiotemporal maturation of the central and lateral N1 components to tones. Dev Brain Res. 2001;129:147–155. doi: 10.1016/s0165-3806(01)00196-1.

- Gondan M, Niederhaus B, Rösler F, Röder B. Multisensory processing in the redundant-target effect: a behavioral and event-related potential study. Percept Psychophys. 2005;67:713–726. doi: 10.3758/bf03193527.

- Gori M, Del Viva M, Sandini G, Burr D. Young children do not integrate visual and haptic form information. Curr Biol. 2008;18:694–698. doi: 10.1016/j.cub.2008.04.036.

- Harrington LK, Peck CK. Spatial disparity affects visual-auditory interactions in human sensorimotor processing. Exp Brain Res. 1998;122:247–252. doi: 10.1007/s002210050512.

- Hughes H, Reuter-Lorenz PA, Nozawa G, Fendrich R. Visual-auditory interactions in sensorimotor processing: saccades versus manual responses. J Exp Psychol Hum Percept Perform. 1994;20:131–153. doi: 10.1037//0096-1523.20.1.131.

- Kail R. Developmental change in speed of processing during childhood and adolescence. Psychol Bull. 1991;109:490–501. doi: 10.1037/0033-2909.109.3.490. [DOI] [PubMed] [Google Scholar]

- Kim S, James TW. Enhanced effectiveness in visuo-haptic object-selective brain regions with increasing stimulus salience. Hum Brain Mapp. 2010;31:678–693. doi: 10.1002/hbm.20897. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kohl PK, Meltzoff AN. The bimodal perception of speech in infancy. Science. 1982;218:1138–1141. doi: 10.1126/science.7146899. [DOI] [PubMed] [Google Scholar]

- Konrad K, Neufang S, Theil CM, Specht K, Hanisch C, Fan J, Herpertz-Dahlmann B, Fink GR. Development of attentional networks: an fMRI study with children and adults. Neuroimage. 2005;28:429–439. doi: 10.1016/j.neuroimage.2005.06.065. [DOI] [PubMed] [Google Scholar]

- Kuefner D, de Heering A, Jacques C, Palmero-Soler E, Rossion B. Early visual evoked electrophysiological responses over the human brain (P1, N170) show stable patterns of face-sensitivity from 4 years to adulthood. Front Hum Neurosci. 2010;3:1–22. doi: 10.3389/neuro.09.067.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leavitt VM, Molholm S, Ritter W, Shpaner M, Foxe JJ. Auditory processing in schizophrenia during the middle latency period (10–50 ms): high-density electrical mapping and source analysis reveal subcortical antecedents to early cortical deficits. J Psychiatry Neurosci. 2007;3:339–353. [PMC free article] [PubMed] [Google Scholar]

- Leitman DI, Hoptman MJ, Foxe JJ, Saccente E, Wylie GR, Nierenberg J, Jalbrzikowski M, Lim KO, Javitt DC. The neural substrates of impaired prosodic detection in schizophrenia and its sensorial antecedents. Am J Psychiatry. 2007;164:474–482. doi: 10.1176/ajp.2007.164.3.474.

- Lewkowicz DJ. Infants’ responsiveness to the auditory and visual attributes of a sounding/moving stimulus. Percept Psychophys. 1992;52:519–528. doi: 10.3758/bf03206713.

- Lewkowicz DJ, Ghazanfar AA. The emergence of multisensory systems through perceptual narrowing. Trends Cogn Sci. 2009;13:470–478. doi: 10.1016/j.tics.2009.08.004.

- Lewkowicz DJ. Heterogeneity and heterochrony in the development of intersensory perception. Brain Res Cogn Brain Res. 2002;14:41–63. doi: 10.1016/s0926-6410(02)00060-5.

- Lippe S, Roy MS, Perchet C, Lassonde M. Electrophysiological markers of visuocortical development. Cereb Cortex. 2007;17:100–107. doi: 10.1093/cercor/bhj130.

- Lovelace CT, Stein BE, Wallace MT. An irrelevant light enhances auditory detection in humans: a psychophysical analysis of multisensory integration in stimulus detection. Brain Res Cogn Brain Res. 2003;17:447–453. doi: 10.1016/s0926-6410(03)00160-5.

- Luciana M, Conklin HM, Hooper CJ, Yarger RS. The development of nonverbal working memory and executive control processes in adolescents. Child Dev. 2005;76:697–712. doi: 10.1111/j.1467-8624.2005.00872.x.

- Luna B, Garver KE, Urban TA, Lazar NA, Sweeney JA. Maturation of cognitive processes from late childhood to early adulthood. Child Dev. 2004;75:1357–1372. doi: 10.1111/j.1467-8624.2004.00745.x.

- Macaluso E, Eimer M, Frith CD, Driver J. Preparatory states in crossmodal spatial attention: spatial specificity and possible control mechanisms. Exp Brain Res. 2003;149:62–74. doi: 10.1007/s00221-002-1335-y.

- Maravita A, Bolognini N, Bricolo E, Marzi CA, Savazzi S. Is audiovisual integration subserved by the superior colliculus in humans? Neuroreport. 2008;19:271–275. doi: 10.1097/WNR.0b013e3282f4f04e.

- Miller JO. Divided attention: evidence for coactivation with redundant signals. Cogn Psychol. 1982;14:247–279. doi: 10.1016/0010-0285(82)90010-x.

- Miller JO. Time course of coactivation in bimodal divided attention. Percept Psychophys. 1986;40:331–343. doi: 10.3758/bf03203025.

- Molholm S, Ritter W, Javitt D, Foxe J. Multisensory visual–auditory object recognition in humans: a high-density electrical mapping study. Cereb Cortex. 2004;14:452–465. doi: 10.1093/cercor/bhh007.

- Molholm S, Ritter W, Murray MM, Javitt DC, Schroeder CE, Foxe JJ. Multisensory auditory-visual interactions during early sensory processing in humans: a high density electrical mapping study. Brain Res Cogn Brain Res. 2002;14:115–128. doi: 10.1016/s0926-6410(02)00066-6.

- Molholm S, Sehatpour P, Mehta AD, Shpaner M, Gomez-Ramirez M, Ortigue S, Dyke J, Schwartz TH, Foxe JJ. Audio-visual multisensory integration in superior parietal lobule revealed by human intracranial recordings. J Neurophysiol. 2006;96:721–729. doi: 10.1152/jn.00285.2006.

- Moore JK, Linthicum F. The human auditory system: a timeline of development. Int J Audiol. 2007;46:460–478. doi: 10.1080/14992020701383019.

- Moran RJ, Molholm S, Reilly RB, Foxe JJ. Changes in effective connectivity of human superior parietal lobule under multisensory and unisensory stimulation. Eur J Neurosci. 2008;27:2303–2312. doi: 10.1111/j.1460-9568.2008.06187.x.

- Mullette-Gillman OA, Cohen YE, Groh JM. Motor-related signals in the intraparietal cortex encode locations in a hybrid, rather than eye-centered reference frame. Cereb Cortex. 2009;19:1761–1775. doi: 10.1093/cercor/bhn207.

- Murray MM, Foxe JJ, Higgins BA, Javitt DC, Schroeder CE. Visuo-spatial response interactions in early cortical processing during a simple reaction time task: a high density electrical mapping study. Neuropsychologia. 2001;39:828–844. doi: 10.1016/s0028-3932(01)00004-5.

- Murray MM, Foxe JJ, Wylie GR. The brain uses single-trial multisensory memories to discriminate without awareness. Neuroimage. 2005;27:473–478. doi: 10.1016/j.neuroimage.2005.04.016.

- Murray MM, Imber ML, Javitt DC, Foxe JJ. Boundary completion is automatic and dissociable from shape discrimination. J Neurosci. 2006;26:12043–12054. doi: 10.1523/JNEUROSCI.3225-06.2006.

- Murray MM, Molholm S, Michel CM, Ritter W, Heslenfeld DJ, Schroeder CE, Javitt DC, Foxe JJ. Grabbing your ear: rapid auditory-somatosensory multisensory interactions in low level sensory cortices are not constrained by stimulus alignment. Cereb Cortex. 2005;15:963–974. doi: 10.1093/cercor/bhh197.

- Naatanen R, Picton T. The N1 wave of the human electric and magnetic response to sound: a review and an analysis of the component structure. Psychophysiology. 1987;24:375–425. doi: 10.1111/j.1469-8986.1987.tb00311.x.

- Neil PA, Chee-Ruiter C, Scheier C, Lewkowicz DJ, Shimojo S. Development of multisensory spatial integration and perception in humans. Dev Sci. 2006;9:454–464. doi: 10.1111/j.1467-7687.2006.00512.x.

- Patterson ML, Werker JF. Two-month-old infants match phonetic information in lips and voice. Dev Sci. 2003;6:191–196.

- Paus T, Babenko V, Radil T. Development of an ability to maintain verbally instructed central gaze fixation studied in 8- to 10-year-old children. Int J Psychophysiol. 1990;10:53–61. doi: 10.1016/0167-8760(90)90045-f.

- Ponton CW, Eggermont JJ, Kwong B, Don M. Maturation of human central auditory system activity: evidence from multi-channel evoked potentials. Clin Neurophysiol. 2000;111:220–236. doi: 10.1016/s1388-2457(99)00236-9.

- Posner MI, Rothbart MK. Attention, self-regulation and consciousness. Philos Trans R Soc Lond B Biol Sci. 1998;353:1915–1927. doi: 10.1098/rstb.1998.0344.

- Ross LA, Molholm S, Gomez-Ramirez M, Sehatpour P, Brandwein AB, Russo N, Gomes H, Saint-Amour D, Foxe JJ. Audiovisual integration in word recognition in typically developing children and children with autistic spectrum disorder. International Multisensory Research Forum; 2008; Hamburg, Germany.

- Ross LA, Saint-Amour D, Leavitt VM, Javitt DC, Foxe JJ. Do you see what I am saying? Exploring visual enhancement of speech comprehension in noisy environments. Cereb Cortex. 2007;17:1147–1153. doi: 10.1093/cercor/bhl024.

- Ross LA, Saint-Amour D, Leavitt VM, Molholm S, Javitt DC, Foxe JJ. Impaired multisensory processing in schizophrenia: deficits in the visual enhancement of speech comprehension under noisy environmental conditions. Schizophr Res. 2007;97:173–183. doi: 10.1016/j.schres.2007.08.008.

- Russo N, Foxe JJ, Brandwein AB, Altschuler T, Gomes H, Molholm S. Multisensory processing in children with autism: high-density electrical mapping of auditory-somatosensory integration. Autism Res. 2010;3:1–15. doi: 10.1002/aur.152.

- Stanford TR, Stein BE. Superadditivity in multisensory integration: putting the computation in context. Neuroreport. 2007;18:787–792. doi: 10.1097/WNR.0b013e3280c1e315.

- Stein BE, Burr D, Constantinidis C, Laurienti PJ, Meredith MA, Perrault TJ, Ramachandran R, Roder B, Rowland BA, Sathian K, et al. Semantic confusion regarding the development of multisensory integration: a practical solution. Eur J Neurosci. 2010;31:1713–1720. doi: 10.1111/j.1460-9568.2010.07206.x.

- Stein BE, Labos E, Kruger L. Sequence of changes in properties of neurons of superior colliculus of the kitten during maturation. J Neurophysiol. 1973;36:667–679. doi: 10.1152/jn.1973.36.4.667.

- Talsma D, Woldorff MG. Selective attention and multisensory integration: multiple phases of effects on the evoked brain activity. J Cogn Neurosci. 2005;17:1098–1114. doi: 10.1162/0898929054475172.

- Teder-Salejarvi WA, McDonald JJ, Di Russo F, Hillyard SA. An analysis of audio-visual crossmodal integration by means of event-related potential (ERP) recordings. Brain Res Cogn Brain Res. 2002;14:106–114. doi: 10.1016/s0926-6410(02)00065-4.

- Teder-Salejarvi WA, Munte T, Sperlich F, Hillyard SA. Intra-modal and cross-modal spatial attention to auditory and visual stimuli. An event-related brain potential study. Brain Res Cogn Brain Res. 1999;8:327–343. doi: 10.1016/s0926-6410(99)00037-3.

- Wallace MT, Carriere BN, Perrault TJ, Vaughan JW, Stein BE. The development of cortical multisensory integration. J Neurosci. 2006;26:11844–11849. doi: 10.1523/JNEUROSCI.3295-06.2006.

- Wallace MT, Perrault TJ, Hairston WD, Stein BE. Visual experience is necessary for the development of multisensory integration. J Neurosci. 2004;24:9580–9584. doi: 10.1523/JNEUROSCI.2535-04.2004.

- Wallace MT, Stein BE. Development of multisensory neurons and multisensory integration in cat superior colliculus. J Neurosci. 1997;17:2429–2444. doi: 10.1523/JNEUROSCI.17-07-02429.1997.

- Wallace MT, Stein BE. Early experience determines how the senses will interact. J Neurophysiol. 2007;97:921–926. doi: 10.1152/jn.00497.2006.

- Welsh MC, Pennington BF, Groisser DB. A normative-developmental study of executive function: a window on prefrontal function in children. Dev Neuropsychol. 1991;7:131–149.

- Werner S, Noppeney U. Distinct functional contributions of primary sensory and association areas to audiovisual integration in object categorization. J Neurosci. 2010;30:2662–2675. doi: 10.1523/JNEUROSCI.5091-09.2010.

- Wylie GR, Javitt DC, Foxe JJ. Task switching: a high density electrical mapping study. Neuroimage. 2003;20:2322–2342. doi: 10.1016/j.neuroimage.2003.08.010.

- Yu L, Rowland BA, Stein BE. Initiating the development of multisensory integration by manipulating sensory experience. J Neurosci. 2010;30:4904–4913. doi: 10.1523/JNEUROSCI.5575-09.2010.
