Abstract

There is growing evidence that efficacious interventions for autism are rarely adopted or successfully implemented in public mental health and education systems. We propose applying diffusion of innovation theory to further our understanding of why this is the case. We pose a practical set of questions that administrators face as they decide which interventions to use. Using literature from autism intervention and dissemination science, we describe reasons why efficacious interventions for autism are rarely adopted, implemented, and maintained in community settings, all of which revolve around the perceived fit between the intervention and the needs and capacities of the setting. Finally, we suggest strategies for intervention development that may increase the probability that these interventions will be used in real-world settings.

Keywords: Autism spectrum disorders, Intervention, Community mental health, Public schools, Implementation science, Diffusion of innovation

Autism interventionists practicing in the community generally rely on techniques unsupported by research (Stahmer et al. 2005); when efficacious interventions are used, they often are not implemented the way they were designed (Stahmer 2007). Most autism programs incorporate elements of different strategies, which have not been tested in combination (Chasson et al. 2007). As a result, autism programming in the community has not been found to improve adaptive, social, or cognitive functioning (Chasson et al. 2007).

From 2002 to 2004, the National Institute of Mental Health convened stakeholders to inform government agencies about the state of the science of psychosocial interventions for autism. Based on these meetings, Lord et al. (2005) and Smith et al. (2007) wrote thoughtful reviews on the research-to-practice gap, which emphasize that efficacy research is not paralleled by systematic research on the best ways to implement interventions in the settings in which most children receive care. Parallel research in other areas of child psychopathology suggests similar challenges in cultivating the support of payers, practitioners, and families in disseminating and implementing evidence-based practices (Schoenwald and Hoagwood 2001).

We propose applying diffusion of innovation theory (Rogers 1962, 2003) to further our understanding of the dissemination and implementation of efficacious autism interventions. This theory provides a framework for describing how, why, and at what rate new technologies spread through social systems. It has been used to better understand the dissemination and implementation of interventions in diverse fields, such as HIV/AIDS (Svenkerud and Singhal 1998), substance abuse (Gotham 2004), and conduct disorder (Glisson and Schoenwald 2005). It is new, however, to autism intervention research.

The Diffusion of Innovation Model

The guiding principle of the diffusion of innovation framework is that an innovation’s reception depends on social context. This principle helps explain why proven-efficacious interventions are not used in community practice, while other interventions with minimal research support gain widespread acceptance. As Freedman (2002) summarizes, “social forces consistently trump unvarnished effectiveness” in how interventions are used (p. 1539).

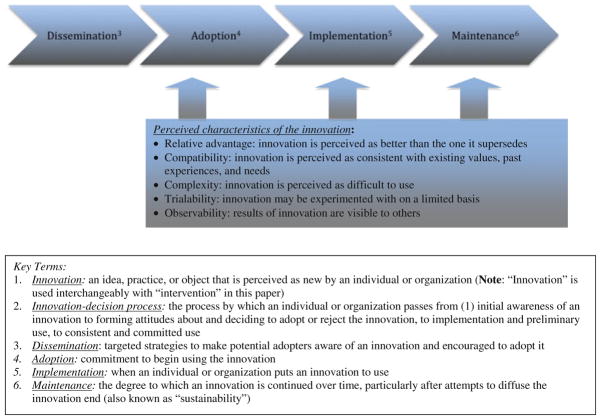

Diffusion is the process by which a new practice (an innovation) is communicated over time among members of a social system (Rogers 1995, 2003). The decision to accept, adopt, and use the intervention is not an instantaneous act, but a process consisting of four stages: dissemination, adoption, implementation and maintenance (Rohrbach et al. 1993). As depicted in Fig. 1, each stage includes processes that affect how the innovation is integrated into standard practice. During dissemination, administrators are made aware of programs and encouraged to adopt them. During adoption, administrators form attitudes towards the intervention and commit to initiate the program. During implementation, practitioners begin to use the innovation. Re-invention (changing or modifying an innovation) is especially likely at this phase. Finally, during maintenance (or sustainability), the innovation moves from implementation to institutionalization. At this phase, administrators and practitioners make a commitment to continue or discontinue use of the program.

Fig. 1. The innovation-decision process

These four stages are affected by a complex array of variables, including perceived characteristics of the innovation (Rogers 2003), which have been shown to predict 49–87 percent of the variance in how quickly an innovation is adopted (Rogers 1995). These characteristics have been defined in a wide variety of ways in the diffusion of innovation literature, ranging from objective, “primary” ratings, such as size or cost, to subjective, “secondary” ratings, such as adopters’ ratings of specific attributes of the innovation (Tornatzky and Klein 1982). Here, we take Rogers’ (2003) approach, which emphasizes that it is the potential adopter’s perceptions of the attributes of an innovation, not the attributes as classified by external objective raters, that most strongly affect the rate of adoption. Within his framework, five characteristics of the innovation are most influential: relative advantage, compatibility, complexity, trialability, and observability. We focus here on three of these attributes: relative advantage, compatibility, and complexity, which are the most widely studied attributes in Rogers’ model and have the most consistent significant relationships with innovation adoption (Tornatzky and Klein 1982). While many perspectives are important, we adopt that of program administrators, with the understanding that they often have the most say in whether interventions are adopted (Rohrbach et al. 2005; Yell et al. 2005).

In what follows, we define relative advantage, compatibility, and complexity. Then, within this framework, we pose a practical set of questions facing administrators as they decide which interventions to use.

What are Relative Advantage, Compatibility, and Complexity?

Relative advantage is the degree to which an innovation is perceived as better than the program it supersedes. Innovations that have an unambiguous advantage are more readily adopted; without a perceived advantage, potential users will not consider an innovation further. In other words, “relative advantage is a sine qua non for adoption” (Greenhalgh et al. 2004, p. 594). Nevertheless, relative advantage alone does not guarantee widespread adoption of an innovation. Diffusion also requires that an innovation be compatible with the values, beliefs, past history, and current needs of the adopters. As Rogers (1995) notes, “The innovation may be ‘new wine,’ but it is poured into ‘old bottles’ (the clients’ existing perceptions)” (p. 241). Compatibility with organizational values and capacities also is required (Greenhalgh et al. 2004). Complexity is the degree to which an innovation is perceived as difficult to use. Generally speaking, innovations that are perceived as complex are less easily adopted.

While theoretical presentation can be useful, a model’s utility stems from practical application. Therefore, here we present guiding questions within this framework that administrators consider when deciding whether to implement a given intervention: Which program works better? Is the cost of changing worth it? Does this program meet my administrative requirements? Can my staff do this? Will staff and parents accept it?

To illustrate the following points, Table 1 applies these questions to two different evidence-based interventions for autism in public schools: discrete-trial training (DTT), a prototypical technique in applied behavior analysis (ABA) based on operant discrimination learning (Lovaas 1987), and pivotal response training (PRT), a less structured, more naturalistic approach (Koegel et al. 1987). While their approaches are quite different, both DTT and PRT have strong empirical support (Howlin et al. 2007; Jocelyn et al. 1998; Koegel et al. 2003; National Research Council 2001; Schreibman 2000), and they raise similar issues with respect to their dissemination and implementation in community settings, as demonstrated in Table 1.

Table 1.

Guiding questions applied to the diffusion of discrete trial training (DTT) and pivotal response training (PRT) in public schools

| Key guiding questions | Discrete Trial Training (DTT) and Pivotal Response Training (PRT) in Public Schools |
|---|---|
| 1. Which program works better… | |
| 1a. …based on what evidence? | While some randomized trials support the use of DTT, most evidence for DTT and PRT comes from single-subject studies, which may not meet federal standards. Although DTT and PRT are accepted as evidence-based practices (EBP) in recent practice reviews, most evidence about them appears in peer-reviewed journals, which school administrators may have difficulty accessing and interpreting. PRT is less widely known, studied, and disseminated than DTT. |
| 1b. …for what children? | Children enrolled in efficacy studies for DTT and PRT have more familial resources and are less heterogeneous than those in public schools; administrators may believe that DTT will not be equally effective or appropriate for their students. These concerns may be exacerbated by the focus on single-subject designs. |
| 1c. …for what outcomes? | Outcomes in DTT efficacy studies focus on IQ and symptoms of autism. Outcomes in PRT studies focus on language, joint attention, play, and social skills. Outcomes in schools focus on academic achievement (i.e., reading and math). |
| 2. Is the cost of changing worth the relative advantage offered by the new program? | DTT and PRT are expensive due to time and staffing requirements, particularly the number of hours spent on one-to-one intervention. Changing to DTT or PRT requires time and resources. DTT and PRT are rarely compared to other interventions, making it difficult to ascertain the advantage of changing programs. |
| 3. Does this program have all the components I need for full implementation? | DTT and PRT are specific instructional strategies and are not necessarily paired with educational content. DTT and PRT are one-to-one interventions and do not include strategies for group work. Some components of PRT are difficult to implement in the classroom and must be combined with other interventions. |
| 4. Can my staff do this with the resources available to them? | DTT and PRT require intensive training and ongoing consultation. DTT also requires certification. Classrooms are not adequately staffed to deliver one-to-one DTT interventions. DTT (and some models of PRT) requires rigorous data collection, which may be perceived as excessively burdensome. |
| 5. Will important constituencies accept this program? | Payers may endorse one specific program (e.g., Lovaas methods) and not fund other, less-known strategies. Some parents may prefer naturalistic, play-based developmental approaches over DTT. Some teachers and administrators may not see PRT as sufficiently didactic and/or within the scope of their work. |

Which Program Works Better?

Mental health programs generally have no legal requirement to implement practices with proven efficacy, although the evidence-based practice movement has made considerable inroads into reconceptualizing community practice (Kazdin 2004; Weisz et al. 2004). School systems, on the other hand, are bound by the Individuals with Disabilities Education Act and the No Child Left Behind Act of 2001 (PL 107–110, Sect. 1001), which require them to implement evidence-based practices (Browder and Cooper-Duffy 2003). When districts do not use interventions with proven efficacy, courts can require costly alternatives, such as increased one-to-one intervention or private school placements (Yell and Drasgow 2000). School administrators, therefore, face enormous pressure to find justifiable programs. Mental health program administrators face similar pressures from payers and constituencies. This search can lead to more questions than answers. What sources will I use to determine the relative effectiveness of a given program? For what children was the program developed and tested, and do these children look like the students I serve? What outcomes did these programs measure, and are they the outcomes that I consider most important? Each question is addressed below.

…Based on What Evidence?

Many researchers assume that proven efficacy will be the primary selection criterion. In reality, administrators use a broader range of evidence. Rather than peer-reviewed journals, they often rely on information gathered locally in partnership with staff and community members (Honig and Coburn 2008). This information often consists of “practice wisdom” (nonempirical indicators that are naturally built into practice and include the opinions of colleagues, client statements, and practitioner observations and inferences; Elks and Kirkhard 1993). Practice wisdom is most frequently used when the research base is perceived as lacking (Herie and Martin 2002). Administrators report difficulty accessing research because the evidence has not been produced or research syntheses are not available. They often perceive scientific evidence as offering no useful recommendations or as not specific to their situation, and therefore feel that this evidence cannot meaningfully guide decision making (Honig and Coburn 2008).

From this perspective, evidence regarding efficacious autism practices is weak and contradictory. Researchers’ understandable reluctance to draw clear-cut conclusions from ambiguous data is at odds with the demands of practitioners who must make concrete decisions about which interventions will be used (Stanovich and Stanovich 1997). No Child Left Behind and the standards used to assess the efficacy of mental health interventions (Chambless and Hollon 1998; Weisz and Hawley 1998) consider independent randomized controlled trials (RCTs) to be the gold standard. This narrow interpretation of scientific validity has significant implications for adopting intervention practices. The majority of autism intervention studies have used single-subject experimental designs (Machalicek et al. 2007; Odom et al. 2003; Rogers and Vismara 2008). While these studies have made tremendous contributions to the autism intervention field by demonstrating improvement among children with autism across broad areas of functioning, current federal and practice standards may make it difficult for administrators to justify the adoption of these strategies into practice.

Although the National Research Council (2001) outlined key principles common to many intervention programs for autism, it also acknowledged that “there are virtually no data on the relative merit of one model over another” (National Research Council 2001, p. 171). While some states have developed best practice guidelines, many simply list the available treatments without regard for evidence. Even guidelines that consider experimental evidence offer no specific endorsements (Akshoomoff and Stahmer 2006).

…for What Children?

Many practitioners believe that the children they serve present more challenges than the children with whom interventions are tested and that proven-efficacious programs will not work in their settings (Cochran-Smith and Lytle 1999). The belief that their students or clients are different may have merit: children enrolled in efficacy studies represent only a subset of those served in community settings. Most autism interventions have been tested with preschool-aged children, with comparatively little data to guide administrators’ decisions regarding programming for older children (Akshoomoff and Stahmer 2006). Almost all of the comprehensive intervention programs are university-based. While single-subject studies have tended to occur in more naturalistic settings, such as special education classrooms with a teacher and/or paraprofessional (Machalicek et al. 2007), these studies often focus on a single interventionist targeting a specific problem within a single child. As a result, administrators and practitioners may see these studies as having limited relevance to broader programming decisions. Children enrolled in efficacy studies also typically have greater familial resources and less heterogeneity than the typical child served in community settings, due to exclusion criteria and self-selection (Lord et al. 2005). Almost all of the current interventions in autism have been built on studies with white middle-class populations. While studies generally advocate for parental involvement, few have studied this involvement in families with fewer financial resources, less education, and more ethnic heterogeneity.

…for What Outcomes?

The mission of the education system is to promote academic achievement. NCLB requires that states implement assessment systems in reading and math (Yell et al. 2005). The mission of the mental health system is to maximize child functioning. Most efficacy studies of autism intervention, however, measure the symptoms of autism (e.g., joint attention, imitation, challenging behaviors) or IQ (National Research Council 2001), which may not relate directly to the outcomes that schools and mental health systems consider most important.

Is the Cost of Changing Worth the Relative Advantage Offered by the New Program?

The resource and staffing requirements to implement most proven-efficacious autism interventions are intensive. For example, estimated average costs for discrete trial training range from $22,500 to $75,670 per year (Chasson et al. 2007; Motiwala et al. 2006), with an expected course of two to six years (Jacobson et al. 1998). Many evidence-based interventions may be too costly to implement in an era in which the number of children diagnosed with autism in the special education system increases by an average of 17% a year (US Department of Education 2004).

In addition to the cost of the new program per se, the change itself demands effort, time, and resources. Even if administrators are confident in a new program, they still must assess whether it offers a sufficient relative advantage to justify these costs. This is not easy to answer. The vast majority of intervention studies rely on a treatment-as-usual comparison group in which the usual treatment is not specified (Rogers and Vismara 2008). Therefore, administrators cannot determine whether the new program offers worthwhile advantages over current practice. When justifying the costs of changing programs, administrators also take into account whether the new program will be more cost-effective in the long run. Almost no intervention study has assessed short- or long-term costs (National Research Council 2001).

Does This Program Have All the Components I Need for Full Implementation?

Interventions that are most readily transported into community settings address a broad range of needs, with program materials and clear procedural guidelines (Boardman et al. 2005). Autism interventions rarely are designed this way. Rather, they usually represent an instructional strategy without a curriculum (necessary for classroom implementation) or strategies for group work (necessary for classrooms and some mental health settings). While the National Research Council (2001) describes ten comprehensive programs, only three are designed for public schools, and none specifically address issues of implementation in community mental health settings. Of these ten, only four are commercially packaged or have publicly-available manuals (Lord et al. 2005).

Can My Staff Do This with the Resources Available to Them?

While administrators may have primary authority to adopt new programs, frontline staff determine whether and how they are used (Rohrbach et al. 2005). In this respect, teachers and clinicians delivering autism interventions can be thought of as “street-level bureaucrats,” Lipsky’s (1980) term for the public agency employees responsible for implementing public policies, who hold a large degree of influence over how those policies are carried out. These street-level bureaucrats often experience relative autonomy from organizational authority, and their work objectives are often vague or contradictory. As a result, they have considerable discretion in determining the nature, amount, and quality of the work provided by their agencies. When street-level bureaucrats are either unable or unwilling to administer policies enacted at a higher echelon, those policies are unlikely to be implemented as intended. Taking into account practitioners’ capabilities and preferences therefore is critical for effective delivery of evidence-based practices.

First, administrators must consider whether staff have the skills and resources to implement the program as intended. This is particularly important for autism programming, given the personnel needed to deliver the high-intensity, individualized interventions that have proven most efficacious (Simpson 2003). Most evidence-based comprehensive programs require staff to blend several strategies to match children’s needs (Swiezy et al. 2008). Staff therefore must be trained in multiple approaches.

Practitioners may perceive autism interventions as overly burdensome (Maurice et al. 2001). For example, strategies based on applied behavior analysis demand high and consistent effort (Rakos 2006) and take considerable training to master (Lerman et al. 2004). Comprehensive programs for autism are even more complex. For example, STAR (Strategies for Teaching Based on Autism Research), an educational program for children with autism with published outcome data (Arick et al. 2003), requires that teachers learn three instructional methods in combination with curriculum content, student profiles and data sheets, and specific classroom set-up.

Most community settings are not staffed by professionals with the skills to implement these programs effectively (Heidgerken et al. 2005; Simpson 2003). For example, special education certifications and child psychology licensure are created under the assumption that generically trained personnel can address the needs of all children with disabilities (Simpson 2003). Even states offering licensure in specific disability categories often do not offer sufficient autism-specific training (Scheuermann et al. 2003). Simpson (2003) notes, “preparing qualified [professionals]…to educate…students with autism …is the most significant challenge facing the autism field” (p. 194). Because practitioners’ preparation so often is poor, administrators must find ways for them to acquire skills on the job. Specialized training is rarely available, however, and often lacks recommended elements of effective practices (Scheuermann et al. 2003).

Administrators also must take into account organizational capacity (Boardman et al. 2005). For example, the most common element of proven-efficacious practices consists of many hours per day of active engagement (Dunlap 1999). The National Research Council (2001) recommends that children receive a minimum of 25 h a week, 12 months a year, of individualized intervention. Most classrooms have insufficient staff-student ratios to meet these requirements, and mental health centers rarely are able to devote that much time to any one child. Comparatively little time, therefore, is available for individualized interaction.

In community clinics, administrators, practitioners, and parents also must take into account funding for autism intervention. Children with autism have medical expenditures that are extraordinarily high in comparison to expenditures of children without autism diagnoses (Mandell et al. 2006), and families of children with autism are more likely than other families of children with special needs to report problems acquiring appropriate service referrals and obtaining an adequate number of service visits (Krauss et al. 2003).

Will Important Constituencies Accept This Program?

Diffusion of innovation theory emphasizes that interventions must fit the perceived needs, values, and beliefs of key stakeholders. Finding a program acceptable to all stakeholders is challenging because parents, staff, and administrators often disagree about which practices for children with autism are consistent with their values (Feinberg and Vacca 2000; Sperry et al. 1999). Should intervention be highly structured and didactic, or naturalistic and play-based (Ospina et al. 2008)? Should it focus on social or academic development? Should it rely on intensive staff-child interactions or incorporate peer interactions? While this has not been studied in mental health settings, constituencies vary in their acceptance of didactic versus play-based instructional approaches in schools (Jennett et al. 2003). Didactic approaches such as discrete trial training are often perceived by parents as cold, rigid and dogmatic (Simpson 2001). On the other hand, some teachers and administrators embrace these didactic approaches, but balk at less structured, naturalistic play-based interventions. In one study, didactic practices based on operant conditioning were the source of most disagreement among parents, teachers and administrators (Callahan et al. 2008).

Administrators often also must take into account the requirements imposed by external funders, such as private or public insurance companies. Insurance companies may emphasize certain intervention strategies over others. For example, Pennsylvania Act 62 of 2008, which requires health insurance companies to cover up to $36,000 yearly for behavioral and other clinical services until the age of 21, explicitly mentions coverage of ABA, but not other behavioral or developmental approaches. Many of the services commonly used by children with autism, such as educational testing, rehabilitation, coursework, and educational counseling, are often excluded under private insurance plans (Young et al. 2009).

Summary

Given the current state of evidence and the proliferation of interventions, administrators face difficult decisions in determining which programs should be adopted. Administrators must weed through various claims of effectiveness and take into account whether their staff can implement the program with available resources and whether the program can be sustained over time. To complicate matters further, there often is tremendous parental advocacy for specific approaches. Because these issues are so emotionally charged, administrators may face harsh criticism regardless of their decision.

Implications for Intervention Researchers

The research-practice gap in autism mirrors that in other conditions of childhood. A large body of research shows that interventions for children with psychiatric and developmental disorders are not as effective in communities as they are in research settings and are not sustained over time (Storch and Crisp 2004; Weisz et al. 2005). The lag between the development of evidence-based treatment and its integration into routine practice is estimated to be 20 years (Walker 2004). Developers and advocates of effective interventions have a responsibility to cultivate the conditions that will facilitate successful diffusion.

Diffusion of innovation theory highlights that the study of any intervention is always the study of that intervention in a context. Rather than treating contextual factors as nuisance variables, diffusion of innovation theory suggests that they are critical to the adoption and continued, committed use of the intervention. This idea was discussed in the NIH-sponsored meetings on the state of autism intervention research, where there was consensus that key stakeholders (e.g., families, teachers, clinicians, and administrators) must be involved in developing a research agenda to foster large-scale use of effective treatments (Lord et al. 2005). Despite this consensus, there was disagreement on how to move from efficacy trials (testing the intervention under ideal circumstances) to effectiveness trials (testing the intervention under real-world conditions).

In their excellent review of the current challenges facing the field of autism intervention research, Smith et al. (2007) propose a model for systematically validating and disseminating interventions for autism. This model provides a strong framework for setting the agenda for autism intervention research. However, as researchers in related fields have highlighted, for efficacious interventions to be successfully implemented, the community context must be considered explicitly throughout all phases of research.

These researchers (Glasgow et al. 2003; Schoenwald and Hoagwood 2001; Weisz et al. 2004) suggest that it may be necessary to rethink the current “efficacy-to-effectiveness” sequence, often described as the “stage pipeline” model (Rohrbach et al. 2006). A report of the National Institute of Mental Health’s Advisory Council (National Institute of Mental Health 2001) describes a model of treatment development that attends to service delivery issues at the outset. Similarly, Weisz and colleagues (2004) state, “To create the most robust, practice-ready treatments, the field [should] consider a shift from the traditional model to a model that brings treatments into the crucible of clinical practice early in their development and treats testing in practice settings as a sequential process, not a single final phase” (p. 304). Weisz and colleagues advocate research models that attend to setting characteristics from the start, in the initial pilot and testing phases. They outline steps—from manual development to wide-scale dissemination—that focus on the setting in which the service ultimately will be delivered. This model is designed to accelerate the pace at which interventions are developed, adapted, refined, and implemented in communities (Weisz et al. 2004). Other researchers (Glasgow et al. 2003) have developed frameworks to increase external validity in trials by providing criteria for evaluating interventions on their efficacy and applicability to real-world practice. They also emphasize that participatory research methods should be built into efficacy studies, rather than left for later phases of research.

Successful efforts to adapt interventions to the practice context are likely to be bidirectional. In addition to adapting interventions to improve their fit with the values and capacities of public settings, adaptations within these settings may have to occur to improve practices (Hoagwood and Johnson 2003). Researchers have improved organizational capacity to support interventions by working with key stakeholders to improve their willingness and ability to adopt, implement, and maintain the intervention. The ARC (availability, responsiveness and continuity) model (Glisson and Schoenwald 2005) and the RE-AIM (reach, efficacy, adoption, implementation, and maintenance) framework (Glasgow et al. 2003) address barriers to the “fit” between social context and intervention by focusing organizational and community efforts on a specific population and problem, building community support for services that target the problem, creating alliances among providers and community stakeholders, encouraging desired provider behaviors, and developing a social context that fosters effective services delivery.

To highlight how these considerations can be incorporated within Smith et al.’s (2007) current model for conducting autism intervention research, Table 2 presents Smith et al.’s recommendations for each phase of autism intervention research. In the right-hand column, we present suggestions from dissemination, implementation, and community-based participatory research (Glasgow et al. 2003; Glasgow et al. 2001; Glisson 2007; Glisson and Schoenwald 2005; Israel et al. 2005; Weisz et al. 2004) on how to refine these phases in order to enhance the likelihood of uptake of efficacious autism interventions in the community. We also add a fifth stage, which is not explicitly considered within the scope of the Smith et al. (2007) model. The general principles presented are described in more detail below.

Table 2.

Incorporating diffusion of innovation research into Smith et al.’s (2007) recommendations for future autism intervention research

| Phase | Goals and activities | Refinements from dissemination and implementation research |
|---|---|---|
| 1. Formulation and systematic application of a new intervention using single-case or between-group designs | Conduct initial efficacy studies to refine techniques and document clinical significance of effects | Involve parents, practitioners, and administrators in the research from the early stages: build on prior community-academic relationships; conduct a community analysis to identify key opinion leaders and other potential community partners; use change agents as “boundary spanners” who cultivate relationships with the community; target research towards issues that are most salient to public interest and actual practice, as identified by these community partners |
| 2. Manualization and protocol development via pilot studies, surveys and focus groups | Manualize efficacious intervention; put together a manual for comparison group; develop treatment fidelity measures; test feasibility of implementing manuals across sites; assess acceptability of interventions to clinicians and families; examine sustainability of interventions in community settings; estimate sample size | Continue to elicit guidance from the treatment developers, administrators, community practitioners, and families to encourage (a) faithfulness to the core principles guiding the treatment protocol and (b) goodness of fit with the clinical setting, practitioners, and clients; make successive modifications to protocol as problems of fit are identified; build formal mechanisms for evaluating and planning for sustainability of protocol in community settings, including continuing to partner with administrators, practitioners, and families to assess long-term feasibility of protocol, assessing potential long-term costs and other potential treatment demands, and building data collection mechanisms into protocol that can be used in the community to monitor fidelity of implementation, child outcomes, and stakeholder satisfaction |
| 3. Efficacy studies using randomized clinical trials | Evaluate efficacy of intervention in large-scale trial; demonstrate consistent effects across sites, as a step towards disseminating the intervention; conduct hierarchical analyses of mediators and moderators | Recruit a diverse sample representative of all children with ASD; report exclusions, participation rates, dropouts, and representativeness on key characteristics; include outcome measures relevant to the system in which the intervention ultimately will be implemented, and assess both positive (anticipated) and negative (unintended) outcomes; include proxy measures of adoption, such as expressed interest of community practitioners in participating or providing feedback on protocol; consider the representativeness of the intervention agents by (a) describing the participation rate and characteristics of those delivering the intervention, and how these agents compare with those who will eventually implement it in the community, and (b) including a variety of intervention agents with respect to background/experience, and reporting on potential differences in implementation and outcomes associated with these differences; collect data on likely treatment demands, such as time, staffing, parent involvement required, and total cost of implementation; continue to evaluate and plan for sustainability of protocol, as described above |
| 4. Community effectiveness studies using between-group designs | Assess whether competent clinicians in community can implement treatment | Implement two-stage community-based effectiveness trials: (a) a series of group-design partial effectiveness studies testing the newly adapted treatment protocol in the context of representative community care, exploring in stepwise fashion the extent to which the protocol works with referred youths, in clinical care settings, when used by representative practitioners, and when compared to usual care; (b) full effectiveness group-design clinical trials in community settings that include all referred clients with ASD in community settings with community practitioners; report exclusions, participation rates, dropouts, and representativeness; include organization-wide economic outcomes, along with child outcomes; assess willingness of stakeholders from multiple settings to adopt/adapt program; continue having administrators, practitioners, and parents assess fit; report on representativeness of settings, participation rates, and reasons for declining; assess practitioners’ ability to implement intervention components in routine practice; systematically program for institutionalization of the program elements after formal study assistance is terminated by strengthening organizational capacity; utilize formal data collection mechanisms and comprehensive follow-up to monitor fidelity of implementation, child outcomes, and stakeholder satisfaction, and provide ongoing consultation as needed; plan for self-regulation and stabilization by providing training and program materials, and incrementally facilitating independent use |
| 5. Sustainability studies focused on the relation between the treatment program and the practice contexts in which it is employed | Not addressed | Assess protocol’s sustainability, with treatment fidelity and youth outcomes, over time, after the research support is withdrawn |

Target Research Towards Issues that are Most Salient to Public Practice

Autism interventions often receive broad media coverage and are adopted before claims of effectiveness have been adequately tested. Researchers increasingly recognize the need to test these claims quickly, but funding for such tests moves slowly compared with the rate at which information diffuses through commercial media (Lord 2000). Rapid funding of studies of autism interventions that have attracted significant public interest should be encouraged (Lord 2000). Similarly, intervention research should target ecologically valid outcomes that match the needs of stakeholders.

Enhance Generalizability of Intervention Studies by Including Heterogeneous Samples in More Naturalistic Settings

Most treatment research samples do not reflect the demographics or clinical presentation of the general population (Lord et al. 2005). Lord et al. (2005) suggest some strategies for increasing sample diversity. This issue also has been addressed elsewhere (Swanson and Ward 1995; Yancey et al. 2006). For example, Yancey et al. (2006) reviewed 95 studies describing methods of increasing minority enrollment and retention and identified several key recommendations, including: (a) reducing restrictions on eligibility; (b) improving communication with potential minority participants to establish mutually beneficial goals and counteract mistrust of scientific research (e.g., by using personal contact rather than mass mailings); (c) facilitating community involvement by hiring outreach workers from the target population and working through community-based organizations, such as churches and schools; and (d) improving retention through intensive follow-up, consistent staff over time, accessible locations for intervention implementation and data collection, regular telephone reminders, and timely compensation.

Involve Stakeholders in Research from the Protocol Development Stage, and Have Them Assess the Fit of the Prototype to Their Needs, Values, and Setting

Hoagwood and Johnson (2003) identify several organizational elements that must be understood to assess intervention fit, such as local policies, staffing, financing, and coordination of services. It is also critical to identify stakeholders’ perceptions of key barriers to diffusion and to address these barriers collaboratively. Participatory decision-making should be used throughout the dissemination and implementation process as a way to foster a strong sense of “team.” Finally, researchers can rely on social validity research to assess the fit of the program to stakeholders’ needs and values (Callahan et al. 2008; Gresham et al. 2004). Social validity research systematically assesses whether the goals, procedures, and outcomes of specific programs or interventions are acceptable to key stakeholders (Callahan et al. 2008).

Include Formal Data Collection and Comprehensive Follow-Up to Monitor Implementation Fidelity, Child Outcomes and Stakeholder Satisfaction, and Provide Ongoing Consultation

A review of autism intervention studies found that only 18% reported fidelity data (Wheeler et al. 2006). Although measuring fidelity is fundamental to any intervention study, it is particularly important in effectiveness studies, where fidelity is expected to be highly variable. Researchers also should study outcomes that are salient to stakeholders and incorporate measures of stakeholder satisfaction. For example, researchers can collect data and report them to key stakeholders, monitor progress in solving the problems that stakeholders identify, and recommend changes (Glisson and Schoenwald 2005).

Plan for Intervention Maintenance by Providing Information, Training and Tools, and Incrementally Facilitating Community Practitioners’ Independent Use of the Intervention

In the absence of planning and support, community use of evidence-based interventions is not sustained over time (Shediac-Rizkallah and Bone 1998). Researchers should plan for sustainability by examining implementation during the study and determining what supports or modifications are necessary for it to continue after the study ends. Here again, autism intervention researchers can benefit from strategies identified in other fields, including: addressing multiple pathways to sustainability (e.g., policy change) rather than focusing exclusively on training; securing organizational as well as individual commitment to ensure stability; establishing program ownership among stakeholders and strengthening champion roles and leadership actions; building and maintaining local expertise; and establishing feasible evaluation strategies to monitor implementation quality and effectiveness (Israel et al. 2006; Johnson et al. 2004; Rohrbach et al. 2006).

Conclusion

The combination of increasing numbers of children identified with autism and increased advocacy for interventions that community practitioners often are ill-equipped to provide has created a crisis (Shattuck and Grosse 2007). Researchers often assume that this crisis can be resolved through the development of interventions, which will be disseminated automatically once efficacy is ascertained; however, efficacious treatments are rarely adopted or successfully implemented in community settings (Proctor et al. 2009), leading researchers to conclude that the “pipeline” model assumes an unrealistic progression from efficacy to uptake (Proctor et al. 2009; Schoenwald and Hoagwood 2001; Weisz et al. 2004). The autism intervention research community has the opportunity to learn from these experiences. Some collaborators with Lord et al. (2005) believe that “understanding what treatments are most beneficial, when done well, must precede the question of their effectiveness in the real world.” We respectfully disagree. A growing body of research suggests strategies for linking intervention development and the settings in which we hope interventions ultimately will be used. Autism intervention researchers must change current practice by (a) partnering with communities to facilitate the successful adoption, implementation, and maintenance of interventions that have already been developed, and (b) developing new interventions in collaboration with these communities to ensure that the interventions meet the community’s needs and capabilities, thereby increasing the likelihood of successful diffusion.

We do not suggest that researchers abandon the traditional randomized controlled trial (RCT). In fact, the RCT can be a key component of this type of research. For example, the National Institute of Mental Health, the Institute of Education Sciences, and the Agency for Healthcare Research and Quality have all placed renewed emphasis on comparative effectiveness research, field trials, and community-based participatory research, in which researchers partner with community settings to test interventions using rigorous research designs (Israel et al. 2006; Proctor et al. 2009).

Autism intervention researchers have made enormous advances in our knowledge of the best ways to intervene with children with autism. However, this knowledge has yet to affect many of the children who need it most. By making the diffusion of efficacious interventions a research priority, we can increase the probability that every child with autism benefits from the best intervention models that research has to offer.

Acknowledgments

This work was supported by a grant from the National Institute of Mental Health (F31MH088172-02).

Contributor Information

Hilary E. Dingfelder, Email: dingfeld@psych.upenn.edu, Department of Psychology, University of Pennsylvania, 3720 Walnut Street, Philadelphia, PA 19104, USA

David S. Mandell, Email: mandelld@mail.med.upenn.edu, Center for Mental Health Policy and Services Research, Department of Psychiatry, University of Pennsylvania School of Medicine, 3535 Market Street, 3rd Floor, Philadelphia, PA 19104, USA

References

- Akshoomoff NA, Stahmer A. Early intervention programs and policies for children with autism spectrum disorders. In: Fitzgerald HE, Lester BM, Zuckerman B, editors. The crisis in youth mental health: Critical issues and effective Programs. Vol. 1. Childhood disorders. Westport, CT: Praeger; 2006. pp. 109–131.

- Arick J, Young H, Falco R, Loos L, Krug D, Gense M, et al. Designing an outcome study to monitor the progress of students with autism spectrum disorders. Focus on Autism and Other Developmental Disabilities. 2003;18:75–87.

- Boardman AG, Argüelles ME, Vaughn S, Hughes MT, Klingner J. Special education teachers’ views of research-based practices. The Journal of Special Education. 2005;39:168–180.

- Browder DM, Cooper-Duffy K. Evidence-based practices for students with severe disabilities and the requirement for accountability in “No Child Left Behind”. The Journal of Special Education. 2003;37:157–163.

- Callahan K, Henson RK, Cowan AK. Social validation of evidence-based practices in autism by parents, teachers, and administrators. Journal of Autism and Developmental Disorders. 2008;38:678–692. doi: 10.1007/s10803-007-0434-9.

- Chambless DL, Hollon SD. Defining empirically supported therapies. Journal of Consulting and Clinical Psychology. 1998;66(1):7–18. doi: 10.1037//0022-006x.66.1.7.

- Chasson GS, Harris GE, Neely WJ. Cost comparison of early intensive behavioral intervention and special education for children with autism. Journal of Child and Family Studies. 2007;16:401–413.

- Cochran-Smith M, Lytle S. Relationships of knowledge and practice: Teacher learning in communities. In: Iran-Nejad A, Pearson PD, editors. Review of research in education. Washington, DC: American Educational Research Association; 1999.

- Dunlap G. Consensus, engagement, and family involvement for young children with autism. Journal of the Association for Persons with Severe Handicaps. 1999;24:222–225.

- Feinberg E, Vacca J. The drama and trauma of creating policies on autism: Critical issues to consider in the new millennium. Focus on Autism and Other Developmental Disabilities. 2000;15:130–137.

- Freedman J. The diffusion of innovations into psychiatric practice. Psychiatric Services. 2002;53(12):1539–1540. doi: 10.1176/appi.ps.53.12.1539.

- Glasgow RE, Lichtenstein E, Marcus AC. Why don’t we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. American Journal of Public Health. 2003;93(8):1261–1267. doi: 10.2105/ajph.93.8.1261.

- Glasgow RE, McKay HG, Piette JD, Reynolds KD. The RE-AIM framework for evaluating interventions: What can it tell us about approaches to chronic illness management? Patient Education and Counseling. 2001;44:119–127. doi: 10.1016/s0738-3991(00)00186-5.

- Glisson C. Assessing and changing organizational culture and climate for effective services. Research on Social Work Practice. 2007;17(6):736–747.

- Glisson C, Schoenwald SK. The ARC organizational and community intervention strategy for implementing evidence-based children’s mental health treatments. Mental Health Services Research. 2005;7(4):243–259. doi: 10.1007/s11020-005-7456-1.

- Gotham HJ. Diffusion of mental health and substance abuse treatments: Development, dissemination, and implementation. Clinical Psychology: Science and Practice. 2004;11(2):160–176.

- Greenhalgh T, Robert G, MacFarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: Systematic review and recommendations. The Milbank Quarterly. 2004;82(4):581–629. doi: 10.1111/j.0887-378X.2004.00325.x.

- Gresham FM, Cook CR, Crews SD, Kern L. Social skills training for children and youth with emotional and behavioral disorders: Validity considerations and future directions. Behavioral Disorders. 2004;30:32–46.

- Heidgerken A, Geffken G, Modi A, Frakey L. A survey of autism knowledge in a health care setting. Journal of Autism and Developmental Disorders. 2005;35(3):323–330. doi: 10.1007/s10803-005-3298-x.

- Herie M, Martin GW. Knowledge diffusion in social work: A new approach to bridging the gap. Social Work. 2002;47:85–95. doi: 10.1093/sw/47.1.85.

- Hoagwood K, Johnson J. School psychology: A public health framework. I. From evidence-based practices to evidence-based policies. Journal of School Psychology. 2003;41:3–21.

- Honig MI, Coburn C. Evidence-based decision making in school district central offices: Toward a policy and research agenda. Educational Policy. 2008;22:578–608.

- Howlin P, Gordon R, Pasco G, Wade A, Charman T. The effectiveness of picture exchange communication system (PECS) training for teachers of children with autism: A pragmatic, group randomised controlled trial. Journal of Child Psychology and Psychiatry. 2007;48(5):473–481. doi: 10.1111/j.1469-7610.2006.01707.x.

- Israel BA, Krieger J, Vlahov D, Ciske S, Foley M, Fortin P, et al. Challenges and facilitating factors in sustaining community-based participatory research partnerships: Lessons learned from the Detroit, New York City and Seattle Urban Research Centers. Journal of Urban Health: Bulletin of the New York Academy of Medicine. 2006;83:1022–1040. doi: 10.1007/s11524-006-9110-1.

- Israel BA, Parker EA, Rowe Z, Salvatore A, Minkler M, López J, et al. Community-based participatory research: Lessons learned from the Centers for Children’s Environmental Health and Disease and Prevention Research. Environmental Health Perspectives. 2005;113(10):1463–1471. doi: 10.1289/ehp.7675.

- Jacobson JW, Mulick JA, Green G. Cost-benefit estimates for early intensive behavioral intervention for young children with autism: General model and single state case. Behavioral Interventions. 1998;13:201–226.

- Jennett HK, Harris SL, Mesibov GB. Commitment to philosophy, teacher efficacy, and burnout among teachers of children with autism. Journal of Autism and Developmental Disorders. 2003;33:583–593. doi: 10.1023/b:jadd.0000005996.19417.57.

- Jocelyn L, Casiro O, Beattie D, Bow J, Kneisz J. Treatment of children with autism: A randomized controlled trial to evaluate a caregiver-based intervention program in community day-care centers. Journal of Developmental and Behavioral Pediatrics. 1998;19:326–334. doi: 10.1097/00004703-199810000-00002.

- Johnson K, Hays C, Center H, Daley C. Building capacity and sustainable prevention innovations: A sustainability planning model. Evaluation and Program Planning. 2004;27:135–149.

- Kazdin AE. Evidence-based treatments: Challenges and priorities for practice and research. Child and Adolescent Psychiatric Clinics of North America. 2004;13:923–940. doi: 10.1016/j.chc.2004.04.002.

- Koegel LK, Carter CM, Koegel RL. Teaching children with autism self-initiations as a pivotal response. Topics in Language Disorders. 2003;23:134–145.

- Koegel RL, O’Dell MC, Koegel LK. A natural language teaching paradigm for nonverbal autistic children. Journal of Autism and Developmental Disorders. 1987;17:187–200. doi: 10.1007/BF01495055.

- Krauss MW, Gulley S, Sciegaj M, Wells N. Access to speciality medical care for children with mental retardation, autism, and other special health care needs. Mental Retardation. 2003;41:329–339. doi: 10.1352/0047-6765(2003)41<329:ATSMCF>2.0.CO;2.

- Lerman DC, Vorndran CM, Addison L, Kuhn SC. Preparing teachers in evidence-based practices for young children with autism. School Psychology Review. 2004;33(4):510–526.

- Lipsky M. Street-level bureaucracy: Dilemmas of the individual in public services. New York: Russell Sage Foundation; 1980.

- Lord C. Commentary: Achievements and future directions for intervention research in communication and autism spectrum disorders. Journal of Autism and Developmental Disorders. 2000;30(5):393–398. doi: 10.1023/a:1005591205002.

- Lord C, Wagner A, Rogers S, Szatmari P, Aman M, Charman T, et al. Challenges in evaluating psychosocial interventions for autistic spectrum disorders. Journal of Autism and Developmental Disorders. 2005;35(6):695–708. doi: 10.1007/s10803-005-0017-6.

- Lovaas O. Behavioral treatment and normal educational and intellectual functioning in young autistic children. Journal of Consulting and Clinical Psychology. 1987;55:3–9. doi: 10.1037//0022-006x.55.1.3.

- Machalicek W, O’Reilly MF, Beretvas N, Sigafoos J, Lancioni G. A review of interventions to reduce challenging behavior in school settings for students with autism spectrum disorders. Research in Autism Spectrum Disorders. 2007;1:229–246.

- Mandell D, Cao J, Ittenbach R, Pinto-Martin J. Medicaid expenditures for children with autistic spectrum disorders: 1994 to 1999. Journal of Autism and Developmental Disorders. 2006;36(4):475–485. doi: 10.1007/s10803-006-0088-z.

- Maurice C, Mannion K, Setso S, Perry L. Parent voices: Difficulty in accessing behavioral intervention for autism; working toward solutions. Behavioral Interventions. 2001;16:147–165.

- Motiwala SS, Gupta S, Lilly MB. The cost-effectiveness of expanding intensive behavioural intervention to all autistic children in Ontario. Healthcare Policy. 2006;1:135–151.

- National Institute of Mental Health. Blueprint for change: Research on child and adolescent mental health. Report of the National Advisory Mental Health Council’s workgroup on child and adolescent mental health intervention development. Bethesda, MD: 2001.

- National Research Council. Educating children with autism. Washington, DC: National Academy Press; 2001.

- Odom SL, Brown WH, Frey T, Karasu N, Smith-Canter LL, Strain PS. Evidence-based practices for young children with autism: Contributions for single-subject design research. Focus on Autism and Other Developmental Disabilities. 2003;18(3):166–175.

- Ospina MB, Seida JK, Clark B, Karkhaneh M, Hartling L, Tjosvold L, et al. Behavioural and developmental interventions for autism spectrum disorder: A clinical systematic review. PLoS ONE. 2008;3:e3755. doi: 10.1371/journal.pone.0003755.

- Proctor EK, Landsverk J, Aarons GA, Chambers D, Glisson C, Mittman B. Implementation research in mental health services: An emerging science with conceptual, methodological, and training challenges. Administration and Policy in Mental Health. 2009;36:24–34. doi: 10.1007/s10488-008-0197-4.

- Rakos RF. Applied behavior analysis: Niche therapy par excellence. Behavior and Social Issues. 2006;15:187–191.

- Rogers EM. Diffusion of innovations. New York: Free Press; 1962.

- Rogers EM. Diffusion of innovations. 4th ed. New York: Free Press; 1995.

- Rogers EM. Diffusion of innovations. 5th ed. New York: Free Press; 2003.

- Rogers SJ, Vismara LA. Evidence-based comprehensive treatments for early autism. Journal of Clinical Child & Adolescent Psychology. 2008;37:8–38. doi: 10.1080/15374410701817808.

- Rohrbach LA, Graham JW, Hansen WB. Diffusion of a school-based substance abuse prevention program: predictors of program implementation. Preventive Medicine. 1993;22:237–260. doi: 10.1006/pmed.1993.1020.

- Rohrbach LA, Grana R, Sussman S, Valente TW. Type II translation: transporting prevention interventions from research to real-world settings. Evaluation & the Health Professions. 2006;29(3):302–333. doi: 10.1177/0163278706290408.

- Rohrbach LA, Ringwalt CL, Ennett ST, Vincus AA. Factors associated with adoption of evidence-based substance use prevention curricula in US school districts. Health Education Research: Theory & Practice. 2005;20:514–526. doi: 10.1093/her/cyh008.

- Scheuermann B, Webber J, Boutot EA, Goodwin M. Problems with personnel preparation in autism spectrum disorders. Focus on Autism and Other Developmental Disabilities. 2003;18:197–206.

- Schoenwald SK, Hoagwood K. Effectiveness, transportability, and dissemination of interventions: What matters when? Psychiatric Services. 2001;52(9):1190–1197. doi: 10.1176/appi.ps.52.9.1190.

- Schreibman L. Intensive behavioral/psychoeducational treatments for autism: Research needs and future directions. Journal of Autism and Developmental Disorders. 2000;30(5):373–378. doi: 10.1023/a:1005535120023.

- Shattuck P, Grosse S. Issues related to the diagnosis and treatment of autism spectrum disorders. Mental Retardation and Developmental Disabilities Research Reviews. 2007;13:129–135. doi: 10.1002/mrdd.20143.

- Shediac-Rizkallah MC, Bone LR. Planning for the sustainability of community-based health programs: Conceptual frameworks and future directions for research, practice and policy. Health Education Research. 1998;13:87–108. doi: 10.1093/her/13.1.87.

- Simpson RL. ABA and students with autism spectrum disorders: Issues and considerations for effective practice. Focus on Autism and Other Developmental Disabilities. 2001;16(2):68–71.

- Simpson RL. Policy-related research and perspectives. Focus on Autism and Other Developmental Disabilities. 2003;18:192–196.

- Smith T, Scahill L, Dawson G, Guthrie D, Lord C, et al. Designing research studies on psychosocial interventions in autism. Journal of Autism and Developmental Disorders. 2007;37:354–366. doi: 10.1007/s10803-006-0173-3.

- Sperry LA, Whaley KT, Shaw E, Brame K. Services for young children with autism spectrum disorders: Voices of parents and providers. Infants and Young Children. 1999;11(4):17–33.

- Stahmer AC. The basic structure of community early intervention programs for children with autism: Provider descriptions. Journal of Autism and Developmental Disorders. 2007;37(7):1344–1354. doi: 10.1007/s10803-006-0284-x.

- Stahmer AC, Collings NM, Palinkas LA. Early intervention practices for children with autism: Descriptions from community providers. Focus on Autism and Other Developmental Disabilities. 2005;20(2):66–79. doi: 10.1177/10883576050200020301.

- Stanovich PJ, Stanovich KE. Research into practice in special education. Journal of Learning Disabilities. 1997;30:477–481. doi: 10.1177/002221949703000502.

- Storch EA, Crisp HL. Taking it to the schools—transporting empirically supported treatments for childhood psychopathology to the school setting. Clinical Child and Family Psychology Review. 2004;7(4):195–209. doi: 10.1007/s10567-004-6084-y.

- Svenkerud PJ, Singhal A. Enhancing the effectiveness of HIV/AIDS prevention programs targeted to unique population groups in Thailand: lessons learned from applying concepts of diffusion of innovation and social marketing. Journal of Health Communication. 1998;3:193–216. doi: 10.1080/108107398127337.

- Swanson GM, Ward AJ. Recruiting minorities into clinical trials: Toward a participant-friendly system. Journal of the National Cancer Institute. 1995;87:1747–1759. doi: 10.1093/jnci/87.23.1747.

- Swiezy N, Stuart M, Korzekwa P. Bridging for success in autism: Training and collaboration across medical, educational, and community systems. Child and Adolescent Psychiatric Clinics of North America. 2008;17:907–922. doi: 10.1016/j.chc.2008.06.001.

- Tornatzky L, Klein KJ. Innovation characteristics and innovation adoption-implementation: A meta-analysis of findings. IEEE Transactions on Engineering Management. 1982;EM-29(1):28–43.

- US Department of Education. Report of children with disabilities receiving special education under Part B of the Individuals with Disabilities Education Act. 2004.

- Walker HM. Commentary: Use of evidence-based interventions in schools: where we’ve been, where we are, and where we need to go. School Psychology Review. 2004;33(3):398–407.

- Weisz JR, Chu BC, Polo AJ. Treatment dissemination and evidence-based practice: Strengthening intervention through clinician-researcher collaboration. Clinical Psychology: Science and Practice. 2004;11:300–307.

- Weisz JR, Doss AJ, Hawley KM. Youth psychotherapy outcome research: A review and critique of the evidence base. Annual Review of Psychology. 2005;56:337–363. doi: 10.1146/annurev.psych.55.090902.141449.

- Weisz JR, Hawley KM. Finding, evaluating, refining, and applying empirically supported treatments for children and adolescents. Journal of Clinical Child Psychology. 1998;27(2):206–216. doi: 10.1207/s15374424jccp2702_7.

- Wheeler JJ, Baggett BA, Fox J, Blevins L. Treatment integrity: A review of intervention studies conducted with children with autism. Focus on Autism and Other Developmental Disabilities. 2006;21(1):45–54.

- Yancey AK, Ortega AN, Kumanyika SK. Effective recruitment and retention of minority research participants. Annual Review of Public Health. 2006;27:1–28. doi: 10.1146/annurev.publhealth.27.021405.102113.

- Yell ML, Drasgow E. Litigating a free appropriate public education: The Lovaas hearings and cases. The Journal of Special Education. 2000;33(4):205–214.

- Yell ML, Drasgow E, Lowrey KA. No child left behind and students with autism spectrum disorders. Focus on Autism and Other Developmental Disabilities. 2005;20:130–139.

- Young A, Ruble LA, McGrew JH. Public vs. private insurance: Cost, use, accessibility, and outcomes of services for children with autism spectrum disorders. Research in Autism Spectrum Disorders. 2009;3:1023–1033.