Abstract

Background

Application of user-centred design principles to Computerized provider order entry (CPOE) systems may improve task efficiency, usability or safety, but there is limited evaluative research of its impact on CPOE systems.

Objective

We evaluated the task efficiency, usability, and safety of three order set formats: our hospital's planned CPOE order sets (CPOE Test), computer order sets based on user-centred design principles (User Centred Design), and existing pre-printed paper order sets (Paper).

Participants

27 staff physicians, residents and medical students.

Setting

Sunnybrook Health Sciences Centre, an academic hospital in Toronto, Canada.

Methods

Participants completed four simulated order set tasks with three order set formats (two CPOE Test tasks, one User Centred Design, and one Paper). Order of presentation of order set formats and tasks was randomized. Users received individual training for the CPOE Test format only.

Main Measures

Completion time (efficiency), requests for assistance (usability), and errors in the submitted orders (safety).

Results

27 study participants completed 108 order sets. Mean task times were: User Centred Design format 273 s, Paper format 293 s (p=0.73 compared to UCD format), and CPOE Test format 637 s (p<0.0001 compared to UCD format). Users requested assistance in 31% of the CPOE Test format tasks, whereas no assistance was needed for the other formats (p<0.01). There were no significant differences in number of errors between formats.

Conclusions

The User Centred Design format was more efficient and usable than the CPOE Test format even though training was provided for the latter. We conclude that application of user-centred design principles can enhance task efficiency and usability, increasing the likelihood of successful implementation.

Keywords: Computerized provider order entry (CPOE), order sets, randomized trial, usability testing, medical order entry systems

Introduction

Computerized Provider Order Entry (CPOE) can decrease medication errors, improve quality of care and potentially reduce adverse drug events.1 However, fewer than 20% of hospitals across seven Western countries have CPOE.2 One barrier to successful CPOE implementation is poor usability of CPOE systems.3–6

Usability refers to the level of ease with which a user is able to complete tasks. CPOE usability plays a significant role in CPOE acceptance by providers. Poor usability can lead to errors,5 inefficiency,7 and rejection of the CPOE system. A key solution to usability problems is the user-centred design method.8–10 User-centred design incorporates the needs and limitations of end users into each stage of the design process, using methods such as heuristic evaluations, cognitive walkthroughs, field studies, task analyses and usability testing.11–13

Despite the potential importance of user-centred design for successful CPOE implementation, there are limited quantitative data on the role of user-centred design in CPOE design and implementation. One controlled trial showed that improved visibility of educational links within a CPOE system increased usage of educational resources from 0.6% to 3.8%.14 Computerized ordering efficiency can be significantly impaired by usability problems such as vague and incorrect system messages, unfamiliar language, and non-informative system feedback.15 A recent qualitative review of CPOE design aspects and usability16 identified only 11 articles that addressed any usability aspects of CPOE design, and none had a quantitative evaluative component. Usability will likely be an important enabler for compliance with meaningful use legislation in the US.17–19

Sunnybrook Health Sciences Centre was planning to implement CPOE using a vendor system. We wanted to evaluate the efficiency, usability and safety of this system prior to implementation. We specifically developed a prototype electronic order set system (User Centred Design) using user-centred design principles for this study to evaluate the impact of user-centred design on efficiency, usability and safety. We used order sets for the evaluation because the efficient entry of order sets is an important CPOE implementation success factor.

Methods

Design and setting

We studied three order set formats: the Sunnybrook CPOE test design format (CPOE Test), a user-centred design format developed specifically for this study (User Centred Design), and our existing pre-printed paper order set format (Paper). For each test subject, the sequence of presentation for the three order set formats was randomly assigned to minimize the potential for ordering effects.

The study was conducted in March–May 2009. We chose to study order sets from our inpatient General Internal Medicine service, which is responsible for approximately 40% of all admissions. At Sunnybrook, all physician orders are written on paper. A limited subset of these orders, such as laboratory and radiology, are subsequently entered into our electronic patient care system (OACIS Clinical Care suite) and conveyed to the appropriate ancillary service. Physicians occasionally enter these written orders into OACIS, but most written orders are entered into OACIS by nurses or administrative staff. We conducted the study at Sunnybrook Health Sciences Centre in the Information Services Usability Evaluation Laboratory.

Participants

Twenty-seven representative end-users (staff physicians, residents and medical students) volunteered to participate in response to informational flyers around the hospital, announcements at regular educational rounds, and email requests.

Materials and methods

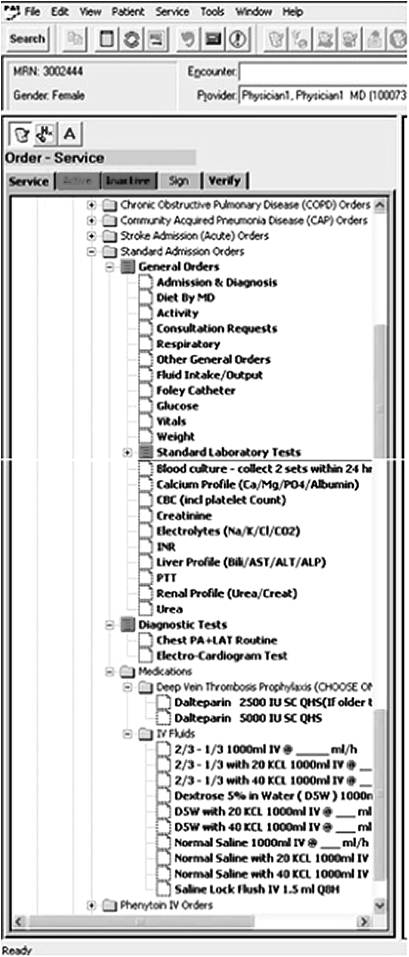

Sunnybrook's CPOE team created the CPOE Test platform using the OACIS Clinical Care Suite (Dinmar 2003, client server version 7.0). OACIS has been in use at Sunnybrook for over 10 years for viewing selected clinical results, and managing a limited number of orders, primarily laboratory tests and diagnostic imaging. The Sunnybrook CPOE technical team configured order sets within the OACIS system (figure 1) based on the content of existing pre-printed paper order sets (figure 2).

Figure 1.

CPOE Test system General Internal Medicine standard admission order set.

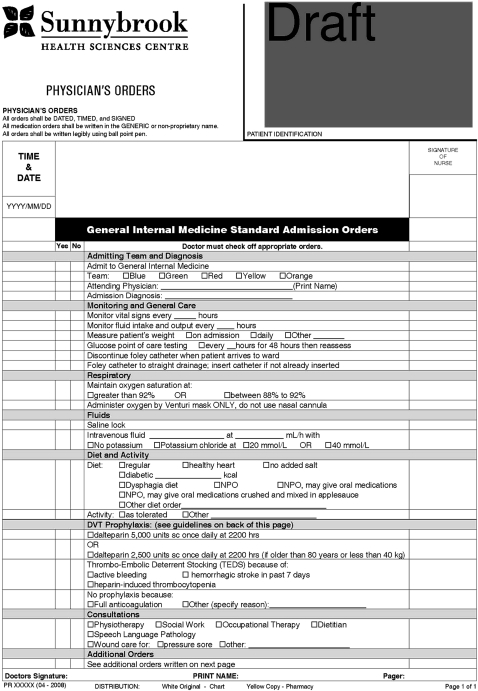

Figure 2.

Sunnybrook Health Sciences Centre's pre-printed paper standard admission orders order set form.

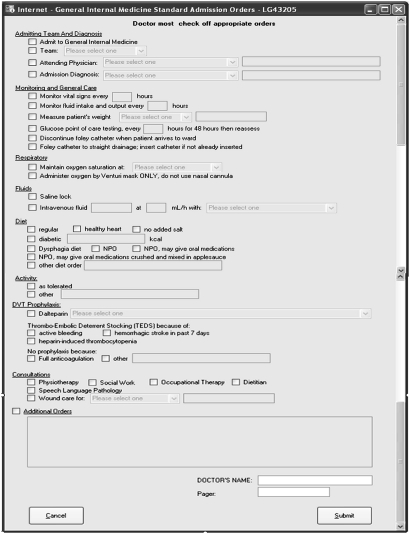

We developed a User Centred Design interface for a computerized order set specifically for this study (figure 3). We first analyzed the task of completing an admission order set to determine the necessary functionality of a computerized order set system, including an analysis of the existing Sunnybrook paper admission order sets. We then conducted a heuristic evaluation of the CPOE Test order set system. A heuristic evaluation is the systematic assessment of the usability of a user interface. It typically involves individuals trained in usability applying a set of human factors design principles to the interface and identifying any violations of these principles. Heuristic evaluation is a widely adopted method of usability testing because it is easy to perform and relatively inexpensive.20 We used the commonly accepted Nielsen usability principles21 in our evaluation. Four evaluators (three human factors engineers and one physician) found a total of 92 unique usability violations for the CPOE Test order set system (appendix 1 online). Following usability design principles, we used an iterative design process to create the User Centred Design format. This format was used only as a prototype and did not possess the same background functionality as the CPOE Test system: it was not linked to the hospital database, and it did not perform any complex error checking (eg, drug-drug interactions or allergies). However, it did include remedies for many of the discovered usability violations and performed basic error checking on user inputs. Selected examples of usability principles, violations and remedies are listed in table 1.

Figure 3.

User Centred Design format for General Internal Medicine standard admission order set.

Table 1.

Representative examples of usability principles, violations and remedies

| Usability principle22 | Description | Usability violation | User centred design remedy |
|---|---|---|---|
| Visibility of System Status | The system should always keep the user informed about what is going on through appropriate feedback within reasonable time. | Two different order entry modes (search and catalog) are virtually identical and easily confused. User may be unable to complete a specific order if the wrong mode is in use. | There is only one order entry mode. This mode allows user to accomplish all order entry tasks. |
| Consistency | The user should not have to wonder whether different words, situations, or actions mean the same thing. | Drug information is inconsistently displayed during an ordering task. For example, the user selects morphine 1 mg po q4h from the drug catalog, which appears as ‘morphine sulfate q4h po 1 mg’ on another screen. | Drug information is consistently displayed throughout the ordering task. |
| User Control and Freedom | After choosing a system function by mistake, the user needs a clearly marked ‘emergency exit’ to leave the unwanted state without having to go through an extended dialog. | There is no obvious way to undo actions. The user must learn to right click on the order then select ‘undo’ from the bottom of a long drop down list. | User selects an order with a left mouse click, and deselects the same order with another left mouse click. There is no need to right click and no need to select the ‘undo’ action from a drop down list. |
| Help Users Recognize, Diagnose and Recover from Errors | Error messages should be expressed in plain language (no codes), precisely indicate the problem and constructively suggest a solution. | User cannot easily see a list of all orders prior to submission, so errors are difficult to recognize. | All orders are fully displayed on a single screen for review throughout the ordering process. |

We used the existing Sunnybrook General Internal Medicine pre-printed paper order set forms as our comparator (Paper format). Figure 2 is an example of the standard admission order set for General Internal Medicine.

We used Morae usability testing software designed by TechSmith22 to record the study data. The software provides the capability of recording a computer's activity from another computer for increased user insight. For the usability study, Morae captured the screen activity of the participant's computer, survey responses, and observer input from the observer computer.

Procedure

Each participant received a 15-min orientation to the CPOE Test order set system. We then asked participants to submit a trial order set to ensure understanding of the ordering process. This trial task had to be completed unaided, and the study began only when this was successfully accomplished. If users requested assistance during the trial task, users were asked to perform another trial task. This individualized training was more extensive than existing classroom training sessions at Sunnybrook for other computer applications. The training sessions, including orientation and trial task(s), took approximately 30 min for each participant.

We did not provide any training for the User Centred Design and Paper order set formats. At the time of the study, the standard pre-printed paper admission orders and the stroke admission orders were in routine use. The community acquired pneumonia (CAP) and chronic obstructive pulmonary disease (COPD) order sets were developed and approved, but not fully implemented. The format of the paper CAP and COPD order sets was consistent with the format of the standard admission order set and the stroke admission order set. In all formats, participants were able to order any medication appropriate for the patient scenario, even if that medication was not part of the pre-defined order set.

Each participant completed four ordering tasks for four common general internal medicine conditions: community acquired pneumonia (CAP), exacerbation of chronic obstructive pulmonary disease (COPD), acute stroke, and urinary tract infection (UTI) (appendix 2 online). We provided printed case scenarios for each ordering task where the medications that needed to be ordered were indicated in the scenario. Each participant completed two ordering tasks using the CPOE Test format, one task using the User Centred Design format, and one task using the Paper format. Participants completed two tasks with the CPOE Test order set system so that we could collect as much information as possible about its usability and safety to guide future design decisions. The order of exposure of order set formats and the ordering tasks was randomized. All four ordering tasks were completed for each task format. Overall the four ordering tasks were balanced across each of the three ordering formats. One of us (JC) conducted the study sessions, with each session averaging 60 min.
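The allocation described above can be illustrated with a minimal sketch. The article does not describe the randomization procedure in detail, so the function names, the seed, and the use of independent shuffles are assumptions made for illustration only.

```python
import random
from collections import Counter

TASKS = ["CAP", "COPD", "Stroke", "UTI"]
# Per participant: two CPOE Test tasks, one User Centred Design task, one Paper task.
FORMATS = ["CPOE Test", "CPOE Test", "User Centred Design", "Paper"]


def assign_session(rng):
    """Return one participant's session as an ordered list of (format, task) pairs."""
    tasks = TASKS[:]
    formats = FORMATS[:]
    rng.shuffle(tasks)    # random pairing of clinical scenario to slot
    rng.shuffle(formats)  # random order of format presentation
    return list(zip(formats, tasks))


def assign_study(n_participants=27, seed=2009):
    """Build a reproducible schedule for all participants (the seed is arbitrary)."""
    rng = random.Random(seed)
    return [assign_session(rng) for _ in range(n_participants)]


schedule = assign_study()
format_counts = Counter(fmt for session in schedule for fmt, _ in session)
task_counts = Counter(task for session in schedule for _, task in session)
```

With 27 participants this yields 108 tasks, 54 of them in the CPOE Test format, and each scenario is used 27 times. Independent shuffling does not by itself guarantee that scenarios are evenly spread across formats; a blocked or constrained scheme would be needed to enforce that balance.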

Main measures

For task efficiency, we recorded the time to complete each order set. We ensured that the start time was comparable for each task and each format. The task started when the participant expanded the order set folder in the CPOE Test order set system, selected the User Centred Design program window, or put their pen to paper for the pre-printed paper order set forms. The task ended when the participant pressed the ‘submit’ button in either the CPOE Test or User Centred Design formats, or handed the completed paper order set forms to the study observer. The Morae software was then used to compute the task times from these start and end point markers.

For usability, we noted the number of times participants requested assistance. We asked participants to explicitly ask for assistance before the observer would interrupt and offer help.

For safety, a staff physician reviewed all 108 completed order sets for errors. Each error was rated for potential for harm (no potential for harm, potential for moderate harm, potential for severe harm including death or permanent loss of body function).

We obtained measures for two secondary (post hoc) analyses of task efficiency. First, we noticed some unique features of the CPOE Test task that were not present in the User Centred Design task, but consumed user time. These unique features of the CPOE test included: basic error checking functionality built into the CPOE system and confusing abbreviations in the laboratory orders that distracted users but were not central to our study question. We wanted to ensure that our results did not simply reflect these unique features of the CPOE task. Therefore, we reviewed all Morae recordings to determine the time spent on these unique features, then subtracted the time spent on these activities from the time spent completing the CPOE Test task.

Second, after completing the study, we realized that the first page of the Paper order sets had been omitted from the CAP and COPD Paper format ordering tasks (n=13 Paper tasks). To account for this oversight, we needed an estimate of the time required to complete the first page of the Paper order sets. The first page of the UTI Paper order set is identical to the first page of the CAP and COPD Paper order sets. The time to complete the Paper UTI order set is therefore a reasonable, albeit extreme, estimate of the time to complete the first page of the CAP and COPD Paper order sets. Accordingly, we added the mean time to complete the UTI Paper order sets (280 s) to the mean time for the 13 Paper order sets where the first page was not completed. This adjustment overestimates the time to complete the first page of these Paper order sets, because (i) many participants manually wrote in the missing first page orders and (ii) the UTI scenario task times include the time required to write additional orders relevant to the UTI scenario. Therefore, the “true” task time for the completion of the Paper order sets lies somewhere between our primary analysis and this adjusted time.
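The arithmetic behind this adjustment can be checked with a short sketch. It assumes that the 280 s was added to each of the 13 affected tasks and that the rounded primary Paper mean (293 s over 27 tasks) is used; the function name is hypothetical.

```python
def adjusted_mean(primary_mean_s, n_tasks, n_affected, added_s):
    """Mean after adding `added_s` seconds to `n_affected` of `n_tasks` observations."""
    # Adding a constant to a subset raises the overall mean by constant * subset / total.
    return primary_mean_s + added_s * n_affected / n_tasks


paper_adjusted_s = adjusted_mean(primary_mean_s=293, n_tasks=27, n_affected=13, added_s=280)
print(round(paper_adjusted_s))  # prints 428
```

Under these assumptions the adjusted Paper mean is about 428 s, which agrees with the adjusted value reported in the Results.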

Statistical analysis

Analysis of task efficiency involved a repeated measures analysis of variance (ANOVA) to determine whether the mean task times of the compared groups differed significantly. The model also accounted for the correlation among observations from the same subject. We compared the number of assists between formats using McNemar's test for paired proportions.23 We performed a Poisson regression analysis to model the error count data. We used SAS version 9.1 to perform the analyses.
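For readers unfamiliar with McNemar's test, the following is a minimal sketch of its exact (binomial) form, which depends only on the discordant pairs. It is not the SAS procedure used in the study and may differ from the variant the authors ran; the function name is hypothetical and the counts in the example are invented for illustration, not study data.

```python
from math import comb


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value from the two discordant-pair counts.

    b: pairs with the outcome (eg, assistance requested) under format A only
    c: pairs with the outcome under format B only
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs, so no evidence of a difference
    k = min(b, c)
    # Under the null hypothesis each discordant pair falls either way with probability 0.5.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical counts (NOT study data): 10 participants needed help with
# format A only, and none needed help with format B only.
p_value = mcnemar_exact(b=10, c=0)
```

Concordant pairs (participants who needed help under both formats or under neither) do not enter the calculation, which is why the function takes only the two discordant counts.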

Research ethics

The Sunnybrook Research Ethics Board approved the study. Each participant provided written consent to participate in the study.

Results

Twenty-seven volunteer physicians, residents, and medical students participated in the study. All volunteers who participated completed the study; there were no drop-outs. The average age of participants was 39 years, and 59% had at least 6 months of experience working in Sunnybrook's General Internal Medicine unit. Participants' self-rated level of comfort with computers was very high (85%) or high (15%). Participants' self-rated proficiency with OACIS was very good or excellent for viewing medication lists (89%) and for ordering x-rays or labs (59%). The majority of participants were also experienced in completing Sunnybrook's pre-printed paper order sets; 89% of participants reported completing at least 11 order sets in their medical careers at Sunnybrook, and 67% reported completion of 50 or more. Overall, each ordering task was conducted 27 times, with equal balance of ordering tasks across each ordering format.

Task efficiency

The mean task time for the User Centred Design format was 273 s, similar to the mean task time for the Paper format (293 s, p=0.73), and significantly shorter than that for the CPOE Test format (637 s, p<0.0001). The task efficiency results were consistent across the entire study population. The task time for the CPOE Test format was the longest for 92% of the subjects (25 of 27). After we adjusted for time spent on the unique features of the CPOE Test task (such as built-in error checking and confusing laboratory abbreviations), the mean CPOE Test task time fell to 547 s, but remained significantly longer than the two other groups (p<0.0001). Finally, after we corrected for the error in executing the Paper order set tasks, the mean task time for using paper order sets rose to 428 s, which was still significantly lower than the mean CPOE Test task time (p=0.02), but higher than the mean task time for the User Centred Design format (p=0.0088) (table 2).

Table 2.

Mean task times by format for our primary analysis and our two secondary (post hoc) analyses

| Format | Primary analysis | Secondary analysis adjusting for unique features of CPOE test format tasks | Secondary analysis adjusting for missing page 1 for CAP and COPD paper format tasks |
|---|---|---|---|
| User Centred Design (n=27 tasks) | 273 s | 273 s | 273 s |
| CPOE Test (n=54 tasks) | 637 s* | 547 s* | 547 s† ‡ |
| Paper (n=27 tasks) | 293 s§ | 293 s§ | 428 s¶ |

*p<0.0001 compared to User Centred Design and Paper formats.

†p<0.001 compared to User Centred Design format.

‡p=0.02 compared to Paper format.

§p=0.73 compared to User Centred Design format.

¶p=0.0088 compared to User Centred Design format.

Usability

Users requested assistance in 31% (17 of 54) of the CPOE Test format tasks; whereas no assistance was requested by any user for any task involving Paper or User Centred Design formats (p<0.01 using McNemar's test).

Safety

We analyzed the proportion of order sets with at least one error, and the proportion of order sets with at least one potentially harmful error. We found no statistically significant differences between formats in the proportion of order sets with at least one error (p=0.92 for CPOE Test and Paper, p=0.38 for CPOE Test and User Centred Design, and p=0.50 for Paper and User Centred Design). However, we did find a marginally statistically significant difference in the proportion of order sets with at least one potentially harmful error: the proportion for the CPOE Test format was greater than that for the Paper format (p=0.04). No other differences were found in the frequency of potentially harmful errors by format (table 3).

Table 3.

Proportion of order sets with at least one error and at least one potentially harmful error by format

| Format | At least one error | At least one potentially harmful error |
|---|---|---|
| User centred design (n=27) | 63% | 41% |
| CPOE test (n=54) | 54% | 43%* |
| Paper (n=27) | 70% | 19% |

*p=0.04 compared to Paper format.

Some potentially harmful errors by task were: failed to order antibiotics or the patient's pre-admission medication metoprolol (community acquired pneumonia scenario), failed to order bronchodilators (COPD scenario), ordered full dose intravenous heparin instead of low dose subcutaneous heparin (acute stroke scenario), and failed to order intravenous fluids for a vomiting volume depleted patient who was taking nothing by mouth (UTI scenario). We did not observe qualitative differences in the types of errors by ordering format (CPOE Test, UCD or paper).

Discussion

We found that our User Centred Design format was more efficient and more usable than the CPOE Test system. We also found that the User Centred Design format was as efficient and usable as the existing Paper format. The User Centred Design format had a similar number of errors as the CPOE test and paper based formats. Our secondary post hoc analyses showed similar results, strengthening our conclusion that the User Centred Design format was more efficient and more usable than the CPOE Test format, and similar to the existing Paper format.

Our results provide quantitative evaluative evidence of the impact of user-centred design, supporting the link between design, efficiency, and usability.3 4 7 10 Our physicians admit approximately 12–20 patients per day to our service, so a time difference of 5 min per ordering task is of great clinical importance. We found no differences in the number of ordering errors between formats, and a marginal increase in potentially harmful errors with our CPOE Test system compared to Paper. Our ordering error results are not comparable to field studies of medication errors with other CPOE systems, because we conducted our study in a simulated environment where our participants may have been less diligent than in usual practice.1 Regardless, the error rates in the CPOE Test and User Centred Design formats were high, so we need to make further design improvements to reduce or trap these errors. Our User Centred Design format was only a functional prototype, which lacked basic medication ordering decision support. The next iteration of a User Centred Design format would benefit from basic decision support, reminders to order important preventive treatments such as DVT prophylaxis, and cues to order the patient's preadmission medications.
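A rough back-of-envelope sketch shows how the per-task difference scales with admission volume. It uses the reported mean task times and assumes, for illustration only, one admission order set per admitted patient; the function name is hypothetical.

```python
def daily_extra_minutes(slow_s, fast_s, admissions_per_day):
    """Extra ordering time per day, in minutes, for a given per-task difference."""
    return (slow_s - fast_s) * admissions_per_day / 60


# Primary analysis: CPOE Test 637 s versus User Centred Design 273 s.
primary_low = daily_extra_minutes(637, 273, 12)
primary_high = daily_extra_minutes(637, 273, 20)

# Secondary analysis: CPOE Test time adjusted to 547 s.
adjusted_low = daily_extra_minutes(547, 273, 12)
adjusted_high = daily_extra_minutes(547, 273, 20)
```

Under these assumptions the extra ordering time is roughly 73 to 121 min per day in the primary analysis and roughly 55 to 91 min per day after the adjustment, spread across the admitting physicians.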

We provided no training on our User Centred Design format, whereas we provided a total of approximately 13 h of training to the 27 physicians on the CPOE Test system. Despite this difference in training intensity, we found that the User Centred Design format was more efficient and more usable than the CPOE Test format. Budgets for annual maintenance (training and support) in hospital CPOE implementations have been estimated to be as high as $1.35 million for a 500-bed hospital.24 Our results raise the enticing possibility that good design could reap further dividends through reduced need for support and training.

We have used the results of our study to inform the redesign of our CPOE Test system, and we have deferred implementation until these design issues are addressed. Our user-centred design recommendations could not be implemented in this version of our vendor system due to limitations in the existing software architecture. However, our recommendations are being integrated into future versions of our vendor system.

Our study had several strengths. Our study population represented a broad range of users, from junior residents to staff physicians, with a broad range of experience with computers, our existing electronic patient record, and our existing paper order sets. Our study design controlled for variations between participants, because all participants completed tasks in all order set formats. Our efficiency results were consistent across the study sample, suggesting that prior computer experience or order set experience was not a major determinant of our results.

Our study has several important limitations. We evaluated only one CPOE system that was still under development, so our results cannot be generalized to other CPOE systems. Our participants were using the CPOE Test format for the first time, so their efficiency would likely improve with further use. Our small sample size could not detect small differences in usability or safety between User Centred Design and Paper formats, but was able to detect statistically significant differences between CPOE Test and Paper formats. Our estimate of task efficiency for the Paper format was imperfect because of our error in executing our study procedures. However, our conclusions were similar after making extreme assumptions to correct for this error. We did not evaluate the reliability of our method for detecting and classifying errors in the submitted orders. Finally, we focused only on the task efficiency and safety of the ordering process. We did not examine task efficiency or safety at the order verification, dispensing or administration phases.

Other hospitals may develop a usability lab with a small investment in the necessary software, the expertise of usability or human factors engineer(s) to conduct the studies, and a room with computers. We found no difficulty in getting volunteers to participate in our usability study since clinicians are highly motivated to contribute to the design of clinical systems. We have also conducted usability evaluations prior to the purchase of other clinical information systems and intravenous infusion devices.25

Conclusions

We found that our User Centred Design format was more efficient and more usable than our CPOE Test format. We also found that the User Centred Design format was as efficient and usable as the existing Paper format. We conclude that application of user-centred design principles can enhance task efficiency and usability, increasing the likelihood of successful implementation. We have deferred our own CPOE implementation until these design issues are addressed.

Acknowledgments

We thank the staff at Sunnybrook Health Sciences Centre who supported and participated in this research, and Alex Kiss from the Department of Research Design and Biostatistics for his statistical support.

Footnotes

Funding: This research was funded by Information Services, Sunnybrook Health Sciences Centre.

Competing interests: None.

Ethics approval: The Sunnybrook Research Ethics Board approved the study.

Provenance and peer review: Not commissioned; externally peer reviewed

References

- 1. Kaushal R, Shojania KG, Bates DW. Effects of computerized physician order entry and clinical decision support systems on medication safety: a systematic review. Arch Intern Med 2003;163:1409–16

- 2. Aarts J, Koppel R. Implementation of computerized physician order entry in seven countries. Health Aff (Millwood) 2009;28:404–14

- 3. Teich JM, Osheroff JA, Pifer EA, et al. The CDS Expert Review Panel. Clinical decision support in electronic prescribing: recommendations and an action plan. Report of the joint clinical decision support workgroup. J Am Med Inform Assoc 2005;12:365–76

- 4. Ash J, Stavri P, Kuperman G. A consensus statement on considerations for a successful CPOE implementation. J Am Med Inform Assoc 2003;10:229–34

- 5. Campbell EM, Sittig DF, Ash JS, et al. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2006;13:547–56

- 6. Sengstack PP, Gugerty B. CPOE systems: success factors and implementation issues. J Healthc Inf Manag 2004;18:36–45

- 7. Niazkhani Z, Pirnejad H, Berg M, et al. The impact of computerized provider order entry systems on inpatient clinical workflow: a literature review. J Am Med Inform Assoc 2009;16:539–49

- 8. Martikainen S, Ikävalko P, Korpela M. Participatory interaction design in user requirements specification in healthcare. Stud Health Technol Inform 2010;160:304–8

- 9. Hua L, Gong Y. Developing a user-centered voluntary medical incident reporting system. Stud Health Technol Inform 2010;160:203–7

- 10. Wickens C, Gordon S, Liu Y. An Introduction to Human Factors Engineering. New York, NY: Addison-Wesley Educational Publishers Inc, 1998:453–4

- 11. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform 2004;37:56–76

- 12. Kushniruk AW, Patel VL, Cimino JJ. Usability testing in medical informatics: cognitive approaches to evaluation of information systems and user interfaces. Proc AMIA Annu Fall Symp 1997:218–22

- 13. Konstantinidis G, Anastassopoulos GC, Karakos AS, et al. A user-centered, object-oriented methodology for developing health information systems: a Clinical Information System (CIS) example. J Med Syst Published Online First: 23 April 2010. doi:10.1007/s10916-010-9488-x

- 14. Rosenbloom ST, Geissbuhler AJ, Dupont WD, et al. Effect of CPOE user interface design on user-initiated access to educational and patient information during clinical care. J Am Med Inform Assoc 2005;12:458–73

- 15. Khajouei R, Peek N, Wierenga PC, et al. Effect of predefined order sets and usability problems on efficiency of computerized medication ordering. Int J Med Inform 2010;79:690–8

- 16. Khajouei R, Jaspers MWM. CPOE system design aspects and their qualitative effect on usability. Stud Health Technol Inform 2008;136:309–14

- 17. Bloomrosen M, Starren J, Lorenzi NM, et al. Anticipating and addressing the unintended consequences of health IT and policy: a report from the AMIA 2009 Health Policy Meeting. J Am Med Inform Assoc 2011;18:82–90

- 18. Blumenthal D, Tavenner M. The “meaningful use” regulation for electronic health records. N Engl J Med 2010;363:501–4

- 19. Karsh BT, Weinger MB, Abbott PA, et al. Health information technology: fallacies and sober realities. J Am Med Inform Assoc 2010;17:617–23

- 20. Zhang J, Johnson TR, Patel VL, et al. Using usability heuristics to evaluate patient safety of medical devices. J Biomed Inform 2003;36:23–30

- 21. Nielsen J. Enhancing the Explanatory Power of Usability Heuristics. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems: Celebrating Interdependence. New York: Association for Computing Machinery, 1994. doi:10.1145/191666.191729

- 22. TechSmith Corporation. Morae usability testing and market research software. 2009. http://www.techsmith.com/morae.asp Archived at: http://www.webcitation.org/5mOSwzAJI

- 23. Altman DG. Practical Statistics for Medical Research. 1st edn. London: Chapman and Hall, 1991

- 24. Kuperman GJ, Gibson RF. Computer physician order entry: benefits, costs, and issues. Ann Intern Med 2003;139:31–9

- 25. Etchells E, O'Neill C, Robson JI, et al. Human factors engineering in action: Sunnybrook's Patient Safety Service. In: Gosbee JW, Gosbee LL, eds. Using Human Factors Engineering to Improve Patient Safety. 2nd edn. Oakbrook, IL: Joint Commission International, 2010:89–102
