Abstract

Objective

To model how individual violations in routine clinical processes cumulatively contribute to the risk of adverse events in hospital using an agent-based simulation framework.

Design

An agent-based simulation was designed to model the cascade of common violations that contribute to the risk of adverse events in routine clinical processes. Clinicians and the information systems that support them were represented as a group of interacting agents using data from direct observations. The model was calibrated using data from 101 patient transfers observed in a hospital and results were validated for one of two scenarios (a misidentification scenario and an infection control scenario). Repeated simulations using the calibrated model were undertaken to create a distribution of possible process outcomes. The likelihood of end-of-chain risk is the main outcome measure, reported for each of the two scenarios.

Results

The simulations demonstrate end-of-chain risks of 8% and 24% for the misidentification and infection control scenarios, respectively. Over 95% of the simulations in both scenarios are unique, indicating that the in-patient transfer process diverges from prescribed work practices in a variety of ways.

Conclusions

The simulation allowed us to model the risk of adverse events in a clinical process by generating the variety of possible work trajectories subject to violations, which constitutes a novel prospective risk analysis method. The in-patient transfer process has a high proportion of unique trajectories, implying that risk mitigation may benefit from focusing on reducing complexity rather than augmenting the process with further rule-based protocols.

Keywords: Computational modelling, complex networks, social network analysis, computer simulation, communication, decision support, safety, machine learning, prospective risk analysis, agent-based modelling, process engineering, patient safety

Background

Adverse events occur in 5–10% of all hospital admissions and result in significant social and economic costs.1–3 Hospitals are complex systems,4 5 where care is delivered via a web of organizational6 7 and social interactions8 supported by information systems.4 9 10 Violations of prescribed work practices, which include both deliberate and accidental deviations (including communication errors), contribute to a large proportion of adverse events.11–13 Individual violations do not always lead to adverse events; rather, adverse events are often caused by a chain of violations.14–16

Given the complexity of the socio-technical interactions in healthcare delivery, and the regularity with which work activity deviates from prescribed practice, existing methods of risk analysis that borrow heavily from risk analysis in engineered systems may not always be appropriate. Risk analysis involves identifying where risk exists, as well as identifying the causes and impacts of adverse events.17 Common methods of prospective risk analysis (as opposed to retrospective analyses such as root cause analysis, which is performed after an adverse event has occurred) include failure modes and effects analysis,18–22 and hazard analysis and critical control points.23–25 More quantitatively focused analyses include probabilistic risk assessment, involving either fault tree analysis (FTA) or event tree analysis (ETA).17 26 27

As quantitative methods, FTA and ETA may be used to prospectively analyze the likelihood of systemic failure given the individual likelihoods of specific faults and events. While the methods address multiplicative (combinations of events that must occur together to create risk) and additive (any one event can trigger an adverse event risk) likelihoods, the methods have general limitations. First, the methods rely on a linear dependency of faults to create the structure of static trees, whereas healthcare environments are more dynamic than this—actors modify their behavior based on what they know about the environment as it changes in time—and have non-linear dependencies. Second, workarounds28 29 contribute to risk in the same manner as other violations but evolve as a consequence of optimizing the multiple objectives of healthcare delivery—quality, safety, and efficiency. Existing methods of prospective risk analysis consider faults as binary entities26 and do not consider the manner in which workarounds may still achieve the intended goal after diverging from the prescribed work practice.
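As a reference point for the multiplicative and additive cases, and assuming the $n$ contributing events are statistically independent with likelihoods $p_i$ (an assumption introduced here for illustration, not stated in the source), the standard combinations are:

$$
P_{\text{all events occur}} = \prod_{i=1}^{n} p_i, \qquad P_{\text{at least one event occurs}} = 1 - \prod_{i=1}^{n} (1 - p_i)
$$

Both expressions presuppose a fixed tree structure and binary events, which is where the limitations noted above arise.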

Our aim was to provide an alternative approach to this problem of risk assessment by explicitly simulating the spread of possible trajectories a routine process may take that deviate from prescribed work practice. Such simulations help determine which trajectories may lead to adverse events, and estimate how often these risky trajectories occur. We define a trajectory as a single instance of a routine work process. A trajectory in our simulation is characterized both by sequential and parallel actions taken by human and information system agents. An end-of-chain risk then counts the proportion of trajectories in which a specified adverse event is possible. The two adverse events of interest in our scenarios are misidentification of a patient and compromised infection control.

Methods

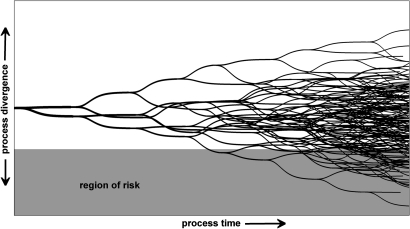

We now describe the general methods for constructing an agent-based model for prospective risk analysis and illustrate the method using an in-patient transfer case study. We additionally describe the simulation approach taken and validation of the model. For the purpose of this research, a violation describes any intended deviation from a prescribed work process (such as a workaround), unintended deviation (such as a slip), or a communication error (in which an information exchange does not occur or is erroneous). Figure 1 is a schematic of a representative workflow, illustrating how violations may lead to a divergence from the prescribed work practice, leading to risk for a proportion of the trajectories. Each fork in the trajectory diagram represents a violation within a specific trajectory.

Figure 1.

A representative workflow. Processes are represented as a series of trajectories, where each opportunity for violation is indicated by a fork. A proportion of trajectories are indicated to diverge far enough away from the prescribed work practice to create the risk of an adverse event. Note that the likelihood of each trajectory is not indicated, and this is the focus of the proposed method.

To simulate work processes we used agent-based modeling to specify and then simulate the behavior of human actors in a clinical process, as well as the information systems the actors use. An agent in an agent-based model may be described as any entity that is situated in, perceives, and affects an environment in order to achieve one or more goals.30 31 In multi-agent systems, the agents interact with each other and their environment to achieve individual goals. Individual goals may or may not conflict with the goals of others, and agents may coordinate their behavior to achieve shared goals.

In an agent-based model, agents ‘know’ how to carry out their own roles for a given work process, including communicative actions with other agents or interaction with the environment. An agent is typically represented by:

Process knowledge: Agents do not need to know about an entire work process but must know enough to achieve their own goals. Process knowledge is usually represented as a plan schematic, composed of a set of actions. In the case study presented below, a porter agent knows about the actions it needs to perform if the patient has an infectious status. It does not know how to perform the patient identification check usually performed by the ward nurse (see online supplementary appendix). In some agent representations, there is a further distinction between agent goals and processes, when an agent must prioritize among goals or craft a strategy to accomplish a goal from a set of possible plan elements.

Individual beliefs: Agents store data that correspond to their understanding about the current state of the environment. These beliefs may be partial or incorrect. Agents update beliefs as they engage with other agents and the world when they detect changes to the environmental state. For example, the porter agent may update its belief about the infectious status of the patient by interacting with a transfer form—a digital object representing the paper form a human porter might handle. If the information in the form is incorrect, then the porter may, for example, erroneously believe a patient is not infectious (see online supplementary appendix).

Agents have autonomy over their own actions. Actions can be triggered by a change in beliefs, be part of a process plan already underway, or be requested by another agent. When there are multiple actions to be performed, an agent has the autonomy to choose among them. If there is more than one way to perform an action, agents can choose how to perform that action, or omit the action entirely, depending on their beliefs. For example, a porter must use adequate infection control for an infectious patient but may choose to perform the required actions in different orders or at different times during the work process (see online supplementary appendix).
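The agent description above can be illustrated with a minimal Python sketch. It is not Brahms code and not the model used in the study; the class names, attribute names, and the default belief are hypothetical and chosen only to show how a porter's belief can diverge from the true patient state when the transfer form is incomplete or wrong.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TransferForm:
    """Information-system agent (hypothetical): may hold missing or wrong data."""
    infectious: Optional[bool]  # None models an omitted entry on the form


@dataclass
class Porter:
    """Human agent (hypothetical) with partial knowledge and fallible beliefs."""
    beliefs: dict = field(default_factory=dict)

    def read_form(self, form: TransferForm) -> None:
        # Beliefs are updated from the form, not from the true patient state.
        if form.infectious is not None:
            self.beliefs["patient_infectious"] = form.infectious

    def uses_infection_control(self) -> bool:
        # Autonomy: the porter acts on its own belief, which may be incorrect.
        # Assumption: with no information, the porter believes "not infectious".
        return self.beliefs.get("patient_infectious", False)


# An infectious patient whose form wrongly says "not infectious".
porter = Porter()
porter.read_form(TransferForm(infectious=False))
print(porter.uses_infection_control())
```

In this sketch the erroneous form leads the porter to skip precautions even though no rule was deliberately broken, which mirrors the transfer form example in the text.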

Brahms (Agent iSolutions, NASA Ames Research Center, Moffett Field, California, USA) is an example of an agent-based modeling environment.32 33 It has been used in the present work because it can capture the fine-scale decision-making and behavior of agents and their interactions with information systems, other agents, and the environment. Brahms models agents at an abstract level that does not require representation of cognitive processes,34–38 but is more detailed than discrete event simulations often used to simulate patient flow over much longer time periods.39–43

Formulation process

A user of the simulation framework formulates a new model by first translating a set of work practices and associated violations into the design of an agent-based model. Prescribed work practices are context-specific implementations of one or more guidelines, policies, or protocols. There may be multiple ways of completing a task that are in accordance with a policy, so we describe a set of prescribed work practices that lead to a safe outcome. The requirement for modeling in an agent-based model is that the prescribed work practices describe the process in enough detail for actions to be represented at the same level of abstraction as the observed violations.

Just because an action is the next stage in a formal sequence description does not mean it will always occur in the real world. Agents may be interrupted, distracted, or fail to undertake an action for any number of reasons. To capture this uncertainty, each action is assigned a probability function. These functions are typically based on the empirical data, but in the absence of such data may be estimated. When an agent is triggered to undertake an action, the probability function determines whether it actually occurs. Failure to take an action may, for example, lead to a process violation. Thus any two simulations starting from the same initial conditions are likely to have different trajectories, levels of process violation, and outcomes.
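The role of the probability function can be sketched as follows. This is an illustrative simplification that assumes each action is an independent Bernoulli trial. The plan, the action names, and most likelihoods are placeholders; only the 0.58 for the identification check is taken from the source, as the complement of the 42% failure rate reported in the case study.

```python
import random


def action_occurs(likelihood: float, rng: random.Random) -> bool:
    """Return True if a triggered action actually takes place."""
    return rng.random() < likelihood


def simulate_trajectory(plan, rng):
    """Walk a prescribed sequence once, recording omissions as violations."""
    trajectory = []
    for action, likelihood in plan:
        if action_occurs(likelihood, rng):
            trajectory.append(action)
        else:
            trajectory.append("omitted:" + action)
    return trajectory


# Hypothetical plan; 0.58 = 1 - 0.42 (identification check failure rate).
PLAN = [
    ("read_transfer_form", 0.90),       # placeholder likelihood
    ("check_patient_id", 0.58),
    ("apply_infection_control", 0.80),  # placeholder likelihood
]

rng = random.Random(2024)  # fixed seed so a run can be repeated
for _ in range(3):
    print(simulate_trajectory(PLAN, rng))
```

Setting every likelihood to 1.0 reproduces the prescribed sequence on every run, which corresponds to the boundary condition used for structural validation.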

Each simulation is run individually within the Brahms environment and exported as time-stamped logs of activities and changes in beliefs. A log of activities and changes in beliefs describes a trajectory. The process is implemented such that if all decisions are taken as expected (no agents ever enact a violation of the prescribed work practice), then the agents behave according to the prescribed work practice. When the likelihood of violations is non-zero, the behavior of the agents may diverge from the prescribed work practices.

By aggregating the trajectories of repeated simulations that are instantiated consistently according to a specified scenario, it is possible to quantify and examine the distribution of trajectories and distinguish those in which a risk of an adverse event is present. The number of simulations required to accurately map a set of trajectories is described in table 1. While relatively few simulations are required to identify common trajectories, rare trajectories may require many simulation runs before they are identified. Analysis involves measuring the proportion of simulations that contribute to or pass on risk during the main stages of the process.

Table 1.

The number of simulations required to find rare trajectories (at least once) given a specific likelihood and assuming a binomial distribution (across simulations) where a success is defined by the presence of the end-of-chain risk

| Likelihood of a trajectory | Representation in the simulation | Simulations required to find trajectory (95% confidence) |
| --- | --- | --- |
| 0.500 | Reference likelihood | 8 |
| 0.444 | Observed level of inadequate infection control | 9 |
| 0.100 | Reference likelihood | 46 |
| 0.010 | Reference likelihood | 473 |
| 9.45×10⁻³ | Precision chosen in experiments | 500 |
| 0.001 | Reference likelihood | 4742 |
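The relationship between trajectory likelihood and the number of runs can be explored with a short binomial calculation. This is a sketch only: the source does not give the formula used, and the function names and the parameter `k` (the minimum number of occurrences required) are ours. Under these assumptions, the counts tabulated for likelihoods 0.5, 0.444, 0.1, and 0.01 coincide with the smallest number of runs for which the trajectory appears at least twice with 95% probability (`k=2`), while requiring a single occurrence (`k=1`) gives smaller counts, for example 5 rather than 8 for a likelihood of 0.5.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p)."""
    below = sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k))
    return 1.0 - below


def simulations_required(p: float, k: int = 1, confidence: float = 0.95,
                         max_n: int = 100_000) -> int:
    """Smallest n such that a trajectory of likelihood p is seen >= k times."""
    for n in range(k, max_n + 1):
        if prob_at_least(k, n, p) >= confidence:
            return n
    raise ValueError("confidence not reached within max_n simulations")


for likelihood in (0.5, 0.444, 0.1, 0.01):
    print(likelihood,
          simulations_required(likelihood, k=1),
          simulations_required(likelihood, k=2))
```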

Case study—in-patient transfers

The process of inter-ward patient transfer we chose to simulate is an example of a process that is characterized by ad hoc communication, inconsistency, and poor team coordination.44 Actors involved in the in-patient transfer process are expected to conform to infection control precautions and patient identification checks defined by policy.45 46 The aim of the process modeled here is to safely transfer the correct patient from a ward to the radiology department within the hospital. The process begins when it is scheduled by the coordinator in radiology in response to a request from a ward. The coordinator instructs a hospital porter to transfer a patient from the ward. The porter is given a transfer form which contains information about the patient including multiple-redundant identification information (including a unique identifier) and any specific requirements for infection control associated with this patient. The coordinator may additionally communicate these requirements verbally to the porter. On arrival at the ward, the porter hands over the transfer form to a ward nurse for a sign-off—this includes verification of the patient's identification and the correctness of the information on the transfer form. The ward nurse may additionally communicate transfer requirements to the porter verbally. If a nursing escort from the ward is required to accompany the porter, a clinical handover will take place between the ward and radiology nurses.

A set of violations associated with the inter-ward patient transfer process was recorded in a separate study involving 101 transfers.47 In that study, four violations were observed in each transfer, on average. The results suggest that a failure to perform patient identification checks was a significant issue (occurring in 42% of transfers), and adequate infection control precautions were used as an example for redundancy analysis. Poor compliance rates were suggested as the main cause of low system reliability. The data from this observational study were used to create the prescribed work practice in the model (the actions that conform to the policies45 46) and to populate the model with the likelihoods of each violation. Ethics approval for the protocol was received from the New South Wales Department of Health Ethics Committee (EC00290) with application number 08/019 and site specific application 08/043.

In our model, we represent the in-patient transfer process using four human agents, six objects, and 186 activities, of which 31 are driven by an empirically-defined likelihood. Besides the human agents, we also define the non-human information systems as agents, namely the transfer form and the patient record, since they participate in communication acts and hold possibly erroneous information. A simplified version of the process is represented in the Results section below. Violations most associated with misidentification and infection control are labeled where they occur in the process. The flow of activities is broadly represented from top to bottom for the significant agents in the process (including the coordinator, the radiology nurse, the porter, the patient's record and identification, the transfer form, and the ward nurse), following the solid arrows. Communications between each of the agents are illustrated as horizontally-oriented communication channels. Although represented simplistically in comparison to the full specification of the behavior, the schematic captures the main interactions that may create, pass along, or ameliorate risk.

Structural and behavioral validation

In order to demonstrate the validity of the approach, we follow the formal process detailed by Barlas,48 which includes both structural and behavioral validation. Structural testing was done here via the analysis of boundary conditions, since the structure necessarily conforms to the prescribed work practices. Therefore, the model is checked to ensure that agents always perform the prescribed work practice in the absence of any violations. For the scenarios we examine, this means that a misidentified patient will be recognized at the first identification check, and adequate infection control is always used when a patient is recorded as being infectious. The resulting trajectories should be the same for every simulation and always match the prescribed work practice. This was confirmed by repeated simulation, in which the likelihood of all violations was set to zero. Behavioral testing involves comparing the behavior of the model with the behavior observed in the real world. This validation is performed for the infection control scenario but is not performed for the misidentification scenario because misidentified patients were never observed in the associated observation study. Consequently, we did not perform tests to determine the predictive validity of the model, and the behavior of the model is validated by demonstrating that the model reproduces the aggregate behavior under boundary conditions and under a sample of realistic conditions. In the latter case, the model is calibrated by the same data against which it is tested.

Simulation experiments

The purpose of running repeated simulations is to determine the range of potential trajectories that evolve as a consequence of combining individual violations within a framework that captures the technical and social constraints associated with interacting agents in a hospital environment. Each simulation is instantiated by defining values for the agents and objects (creating the scenario) and pre-defined likelihoods for each individual violation (creating the potential for divergence from the prescribed practice). While the violations are defined by empirical results, the properties of the agents and objects are instantiated to reflect a specific scenario—in the case study this is either the misidentification scenario or the infection control scenario. In order to produce coherent measures of risk, the resulting trajectories (one for each simulation) are categorized as being incomplete, completed without risk, and completed subject to risk, where the risk is defined by the context of the scenario.
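The categorization step and the resulting risk measure can be sketched as below. The enum and function names are hypothetical, and the sketch assumes that incomplete simulations are excluded from the denominator, which is consistent with how the proportions are reported in the Results.

```python
from collections import Counter
from enum import Enum


class Outcome(Enum):
    INCOMPLETE = "incomplete"
    COMPLETED_NO_RISK = "completed without risk"
    COMPLETED_WITH_RISK = "completed subject to risk"


def end_of_chain_risk(outcomes) -> float:
    """Proportion of eligible trajectories that finish with risk present.

    Assumption: incomplete trajectories are excluded from the denominator.
    """
    counts = Counter(outcomes)
    eligible = (counts[Outcome.COMPLETED_NO_RISK]
                + counts[Outcome.COMPLETED_WITH_RISK])
    if eligible == 0:
        raise ValueError("no eligible trajectories to analyze")
    return counts[Outcome.COMPLETED_WITH_RISK] / eligible


# Counts matching the misidentification scenario reported in the Results:
# 500 runs, 323 meeting the criteria, 26 of which carry the risk to the end.
outcomes = ([Outcome.INCOMPLETE] * 177
            + [Outcome.COMPLETED_NO_RISK] * 297
            + [Outcome.COMPLETED_WITH_RISK] * 26)
print(f"{end_of_chain_risk(outcomes):.2%}")
```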

In the misidentification scenario, a patient requires transfer from the ward to radiology but is incorrectly identified at the outset (the identification band does not match the patient's record), indicating an initial risk of 100%, which is reduced during multiple identification checks. In this case, the risk is ameliorated during the routine process. The purpose of the scenario is to see if the process catches or passes along the risk of misidentification. A completed transfer with a misidentified patient may lead to a wrong patient/location/test adverse event.

Alternatively, in the infection control scenario, new risks evolve as a consequence of inadequate infection control. In this case, an infectious patient (correctly identified as infectious on the patient record) is transferred to the radiology department. There is no initial risk but the effects of individual violations combine to generate the potential for inadequate infection control and an end-of-chain risk of contagion. In this case, the purpose of the scenario is to see if the process generates new risks in relation to infection control. If inadequate infection control is used during a patient transfer, the process may lead to hospital-acquired infections, which are considered adverse events.

Results

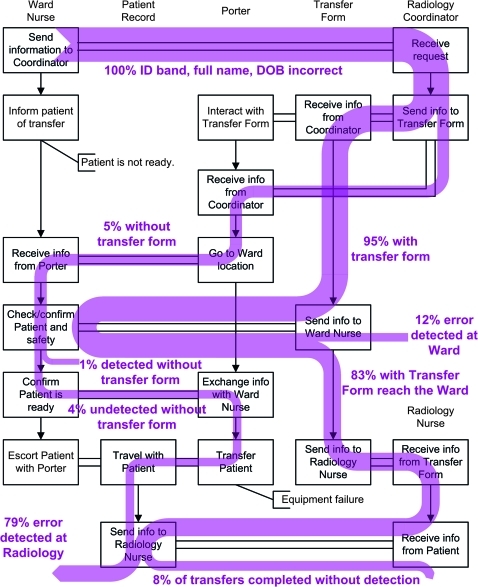

In the misidentification scenario, the process begins with 100% risk of misidentification because the simulation is instantiated such that the patient's record information does not match the patient. Through each simulation, identification checks involving the patient, patient identification band, patient record, and transfer form are used to confirm the identity of the patient and any of these checks can ameliorate the risk. The reduction in risk is illustrated by the reduction in thickness of the aggregated trajectories represented in figure 2. In figure 2, the boxes are a simplification of the policy, the vertical arrows indicate chronology for the six agents, and the horizontal channels indicate communication between agents. The risk of misidentification is ameliorated partially by the ward nurse and to a greater degree by the radiology nurse during identification checks performed with the patient (figure 2). Of the 500 simulations, 323 featured either the capture of a misidentification or a complete transfer where the misidentification error is never caught. The remaining simulations were halted as a consequence of other factors including patient unavailability and equipment failure. Of the 323 that met the criteria, 26 (8.05%) were complete transfers in which the misidentification was not caught. In 297 (87.3%) simulations, the patient reached the radiology department before the misidentification error was identified. Since there were no misidentified patients observed in the 101 observed patient transfers, we are unable to validate the likelihoods.

Figure 2.

A distribution of trajectories for the misidentification scenario indicates the gradual amelioration of risk for a patient with incorrect details. The boxes indicate the important steps in the policy of the patient transfer process, vertical arrows indicate chronology, and horizontal channels indicate information transfer between the six agents. The shaded trajectories indicate the presence of risk at each point in the process, and percentages indicate the proportion of the completed simulations that are associated with risk along the given trajectory. DOB, date of birth; ID, identification.

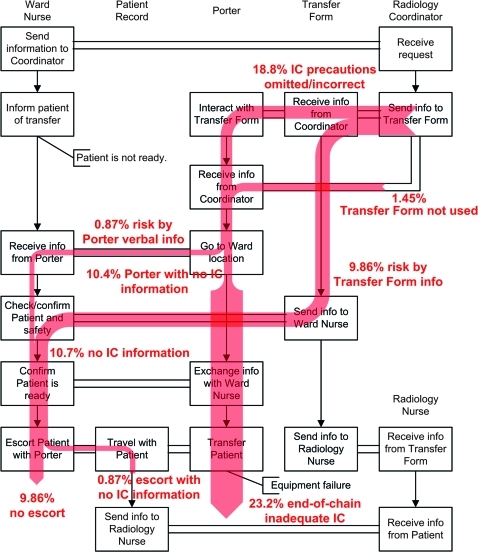

In the infection control scenario, the risk begins at 0% (an infectious patient is not an infection control risk if all actors adhere to policy) and accumulates as a consequence of missing information on the transfer form or in verbal exchanges, and the porter's violations relating to adequate infection control precautions. The risk of inadequate infection control is generated as a consequence of missing or incorrect information on the transfer form, and from violations committed by porters with access to the correct information (figure 3). Of the 500 simulations, 345 were complete (others were stopped for reasons such as patient unavailability and equipment failure during transport). For the 345 simulations that were complete, the number of times that the agents did not ensure adequate infection control precautions was 84 (24.3%), assuming post-completion tasks were always completed. As a consequence of having received misinformation, the lack of information exchange, or the lack of response to correct information, the porter completes the process with an infection risk in 80 (23.2%) cases. The ward nurse completes the process with an infection risk in 37 (10.7%) cases. The results may be interpreted to mean that, in the presence of the workarounds observed, the process is effective in ensuring adequate infection control 76.8% of the time.

Figure 3.

A distribution of trajectories for the infection control scenario indicates the increasing risk associated with lack of adequate infection control in the in-patient transfer process. The boxes indicate the important steps in the policy of the patient transfer process, vertical arrows indicate chronology, and horizontal channels indicate information transfer between the six agents. The shaded trajectories indicate the presence of risk at each point in the process, and percentages indicate the proportion of the completed simulations that are associated with risk along the given trajectory. IC, infection control.

In order to validate the results of the infection control scenario, we compare the observed behavior of the agents in response to an infectious patient with the simulated behavior of the agents in response to an infectious patient. In the observations, 27 transfers involved an infectious patient. Of those, 12 transfers were completed without the porter using infection control (which gives 95% CI 24% to 65%). In comparison, 80 of the 345 simulated transfers were completed without the ward nurse or the porter ensuring adequate infection control (which gives 95% CI 19% to 28%). The results suggest that the simulated results match the observed results (p=0.0136); however, we would hesitate to draw a conclusion from this because of the small number of observations and because it is only one behavior among the multitude represented in the simulations.
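The source does not state which test produced the reported p value. As a sketch, and assuming a pooled two-proportion z-test (our assumption, with a hypothetical function name), the observed 12 of 27 and the simulated 80 of 345 can be compared under the null hypothesis that the two proportions are equal. With these counts the two-sided p value comes out at roughly 0.014, close to the reported figure.

```python
from math import erf, sqrt


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int):
    """Pooled two-proportion z-test; returns (z, two-sided p value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / standard_error
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - cdf)


# Observed: 12 of 27 transfers; simulated: 80 of 345 transfers.
z, p_value = two_proportion_z_test(12, 27, 80, 345)
print(f"z = {z:.3f}, p = {p_value:.4f}")
```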

Discussion

The value of using this simulation framework is that it allows a user to quantitatively examine how individual violations combine along trajectories of routine work practices to create risk. The results suggest two main conclusions. First, the proportion of unique trajectories is very high as a consequence of the number of redundant steps required in passing along information about the patient's identity and infection control requirements. Second, chains of percolated errors that lead to risk (or fail to ameliorate risk) are visible when the results of many repeated simulations are aggregated.

The simulation framework presented here complements existing prospective risk analysis methods. It requires more detailed observation of work practices and individual violations than traditional prospective risk analysis methods such as FTA and ETA, and in return offers some benefits over them. First, the trajectories offer more precision because the simulation framework explicitly models the behavior of the individuals in the scenario and the information systems with which they interact (rather than logically combining the probabilities of individual violations). A further benefit of this precision is that it allows a user to uncover a fuller range of possible work activity trajectories, of which many may not have been observed (this is indicated by the number of unique trajectories in the simulations). Finally, in traditional forms of prospective risk analysis, the user encodes probabilities for a pre-determined risk of their choice, whereas the simulation framework is scenario-driven. In this case, extra information is available about the likelihood of conforming to the prescribed practice, near misses, rare events, and potentially unforeseen risks.

The in-patient transfer process

We confirmed the structural correctness of the implementation by initially setting all the probability functions to not allow violations. In simulations run under these conditions, agents never diverge from the prescribed work practices. Consequently, simulations always result in the mitigation of any incoming risk of misidentification and adequate infection control is always used. From this baseline instantiation of the model, we can confirm that the policy is reliably implemented if the prescribed work practices are never violated.

Regarding the misidentification scenario, the simulations suggest that the process will capture approximately 92% of wrong patient errors, leaving 8% in which there is the potential for the error to persist through the entire process. The ward nurse often defers the identification check to the later redundant identification check performed by the radiology nurse. A great deal of process efficiency is lost here since a misidentified patient is more likely to be transferred before the error is caught by the process. We might speculate that this may have evolved as a consequence of diffusion of responsibility,26 49 where individuals are unaware of whether another redundant step will be or has been taken and, in this case, assume that the check will be performed. This issue highlights the complex nature of redundancy: it appears that the existence of redundant checks across disparate locations and roles may have decreased the levels of compliance. In line with the conclusions suggested by Ong et al,47 we advocate increasing compliance in the existing identification checks before introducing new ones.

Regarding the infection control scenario, the risk of infection is generated by violations in information flow, namely omissions and errors in the transfer form or verbal exchanges between the coordinator, porter, and ward nurse. The results suggest that the individuals will take enough steps to ensure adequate infection control in three-quarters of patient transfers involving an infectious patient. Furthermore, the results suggest that communication errors and procedural errors are approximately equal in their contribution to the risk (in 10.4% of cases, the porter does not have the correct information, and in another 12.8%, the porter does not use adequate infection control despite having correct information), so changes at either point in the process would be appropriate. Since a substantial proportion of risk-associated trajectories occur when the correct information is present, we suggest that other factors such as inadequate understanding of the risks or time pressures may also contribute to the risk of infection.

Limitations

The model is not validated for the purpose of prediction—as such, we would urge caution in interpreting the overall likelihoods for risk as being definitive for the in-patient transfer process in the future, or for other hospitals. We feel this is reasonable because the purpose of the method is to analyze the breadth of possible risks in routine processes rather than to predict specific behaviors. A full validation of the model used in the case study would include further calibration for a number of scenarios (we have calibrated for only the infection control scenario due to limitations of the observed data) and testing against a new set of observations representing the same scenarios. This would demonstrate that the model is capable of predicting behavior, and would thus provide evidence that it could be used as a tool for testing the effects of new organizational process interventions in silico.

When we implement the stochastic process that takes likelihoods from the observed study to inform the likelihood of an agent taking a particular action (or making a specific decision), that stochastic process is independent of the simulation. This means that we have not captured the potential for individual errors to systematically occur together. For example, the model does not take into account whether a ward nurse is more or less likely to also check a patient's full name if he or she contemporaneously checks the identification band of that patient. Similarly, we expect variability among porters, which is not represented by the model we have constructed.

There is much in the way of contextual information that is not captured in regard to misidentification. For example, other information such as gender, ethnicity, other appearance-related information, and prior contact may all be used to provide partial visual identification of a patient, which may be an informal and efficient method of identification that is already employed to augment the policy. In the case of infection control, other cues besides the information transmitted by the coordinator may influence a porter's infection control choices. Given that the simulated agents are able to perceive only the policy-based portion of information present in the real world, the model is likely to be a conservative estimate of the flow of risk.

General conclusions

The inter-ward patient transfer process produces a wide variety of possible behavior trajectories. The proportion of simulations that were unique (considering the different combinations of violations that occurred) was 97.4% for the misidentification scenario and 96.4% for the infection control scenario. This result is important because it suggests that the presence of violations (in the form of workarounds, unintended violations, and communication errors) increases the complexity of a work process, thus creating unique scenarios for which policy based on prescribed work practices cannot account.
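As an illustration of how a uniqueness proportion of this kind might be computed, the sketch below treats each simulation as the set of violations that occurred in it and counts the runs whose combination appears exactly once. This is one possible reading of "unique" and is an assumption rather than the definition used in the study; the violation labels are hypothetical.

```python
from collections import Counter


def unique_proportion(trajectories):
    """Fraction of runs whose combination of violations occurs exactly once.

    Each trajectory is an iterable of violation labels; order is ignored,
    so two runs with the same set of violations count as the same trajectory.
    """
    if not trajectories:
        return 0.0
    keys = [frozenset(t) for t in trajectories]
    counts = Counter(keys)
    singletons = sum(1 for k in keys if counts[k] == 1)
    return singletons / len(keys)


if __name__ == "__main__":
    # Hypothetical simulated runs (labels are placeholders).
    runs = [
        {"skip_id_band_check", "skip_full_name_check"},
        {"skip_id_band_check"},
        {"skip_full_name_check", "skip_id_band_check"},  # same set as the first run
        set(),                                            # no violations
        {"skip_hand_hygiene"},
    ]
    print(unique_proportion(runs))
```

An alternative measure, the number of distinct combinations divided by the number of runs, would be `len(counts) / len(keys)`; the two measures differ whenever a combination repeats.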

This particular process reflects a well-defined workflow with numerous checkpoints and redundant operations that are included with the aim of minimizing the potential for risk. Despite this, the potential for risk persists, either as risk not mitigated by the process (in the misidentification scenario) or as risk generated by the process (in the infection control scenario). Our analysis suggests that augmenting the formal process with additional procedures, of the type often suggested in the literature,50–52 may result in further unintended consequences53 54 due to the increased complexity of the procedure and the workarounds that evolve as a consequence. Each additional procedure adds trajectory forks, and each fork increases the number of possible trajectories. We suggest an alternative: to reduce the complexity of the task and enhance the effectiveness of the remaining steps.
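The relationship between forks and trajectories can be made concrete with a simple upper bound. As a simplifying assumption (not a property established by the model), suppose every fork is binary, that is, a step is either performed as prescribed or violated, and that every fork can be reached regardless of earlier choices. With k such forks, the number of possible trajectories N_max is then bounded as follows, and adding m further forks multiplies that bound by 2^m:

```latex
\[
N_{\max}(k) = 2^{k},
\qquad
\frac{N_{\max}(k+m)}{N_{\max}(k)} = 2^{m}.
\]
```

Under this assumption, each added checkpoint that can be skipped doubles the upper bound on the number of trajectories. The actual count will be lower wherever a fork is reachable only along some paths.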

Using the in-patient transfer process as an example, we have demonstrated that an agent-based modeling approach to prospective risk analysis may assist in targeting policy changes according to the flow of risk in a process, complementing existing methods. The simulation framework presented here is likely to generalize to any work process (a) for which a set of policies or guidelines is defined in enough detail to permit the modeling of a prescribed work practice, (b) which is observable such that it is possible to collect information about violations from repeated observations, and (c) which involves multiple agents in the form of humans and the information systems that support them (be they verbal, written, or software-driven). The method models the effect of combined violations as divergences from a prescribed work practice, indicating the likelihood of individual trajectories that may or may not lead to iatrogenic harm.

Acknowledgments

The authors would like to acknowledge two anonymous reviewers whose comments greatly improved this article.

Footnotes

Funding: This study was supported by an Australian Research Council Linkage grant (LP0775532) and National Health and Medical Research Council (NHMRC) Program Grant 568612.

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. de Vries EN, Ramrattan MA, Smorenburg SM, et al. The incidence and nature of in-hospital adverse events: a systematic review. Qual Saf Health Care 2008;17:216–23

- 2. Zegers M, de Bruijne MC, Wagner C, et al. Adverse events and potentially preventable deaths in Dutch hospitals: results of a retrospective patient record review study. Qual Saf Health Care 2009;18:297–302

- 3. Runciman WB, Moller J. Iatrogenic Injury in Australia. Adelaide: Australian Patient Safety Foundation, 2001

- 4. Westbrook JI, Braithwaite J, Georgiou A, et al. Multimethod evaluation of information and communication technologies in health in the context of wicked problems and sociotechnical theory. J Am Med Inform Assoc 2007;14:746–55

- 5. Iedema R. New approaches to researching patient safety. Soc Sci Med 2009;69:1701–4

- 6. Anderson JG, Ramanujam R, Hensel D, et al. The need for organizational change in patient safety initiatives. Int J Med Inform 2006;75:809–17

- 7. Lee R, Cooke D, Richards M. A system analysis of a suboptimal surgical experience. Patient Saf Surg 2009;3:1.

- 8. McKnight LK, Stetson PD, Bakken S, et al. Perceived information needs and communication difficulties of inpatient physicians and nurses. J Am Med Inform Assoc 2002;9(6 Suppl 1):S64–9

- 9. Parnes B, Fernald D, Quintela J, et al. Stopping the error cascade: a report on ameliorators from the ASIPS collaborative. Qual Saf Health Care 2007;16:12–16

- 10. Anderson JG, Jay SJ, Anderson M, et al. Evaluating the capability of information technology to prevent adverse drug events: a computer simulation approach. J Am Med Inform Assoc 2002;9:479–90

- 11. Leape LL, Brennan TA, Laird N, et al. The nature of adverse events in hospitalized patients. N Engl J Med 1991;324:377–84

- 12. Wilson RM, Harrison BT, Gibberd RW, et al. An analysis of the causes of adverse events from the Quality in Australian Health Care Study. Med J Aust 1999;170:411–15

- 13. Parker J, Coiera E. Improving clinical communication: a view from psychology. J Am Med Inform Assoc 2000;7:453–61

- 14. Woolf SH, Kuzel AJ, Dovey SM, et al. A string of mistakes: the importance of cascade analysis in describing, counting, and preventing medical errors. Ann Fam Med 2004;2:317–26

- 15. Reason J. Human error: models and management. BMJ 2000;320:768–70

- 16. Rasmussen J, Nixon P, Warner F. Human error and the problem of causality in analysis of accidents [and discussion]. Philos Trans R Soc Lond B Biol Sci 1990;327:449–62

- 17. Marx DA, Slonim AD. Assessing patient safety risk before the injury occurs: an introduction to sociotechnical probabilistic risk modelling in health care. Qual Saf Health Care 2003;12(Suppl 2):ii33–8

- 18. van Leeuwen JF, Nauta MJ, de Kaste D, et al. Risk analysis by FMEA as an element of analytical validation. J Pharm Biomed Anal 2009;50:1085–7

- 19. Bonnabry P, Cingria L, Sadeghipour F, et al. Use of a systematic risk analysis method to improve safety in the production of paediatric parenteral nutrition solutions. Qual Saf Health Care 2005;14:93–8

- 20. Burgmeier J. Failure mode and effect analysis: an application in reducing risk in blood transfusion. Jt Comm J Qual Improv 2002;28:331–9

- 21. Robinson DL, Heigham M, Clark J. Using failure mode and effects analysis for safe administration of chemotherapy to hospitalized children with cancer. Jt Comm J Qual Patient Saf 2006;32:161–6

- 22. Chiozza ML, Ponzetti C. FMEA: a model for reducing medical errors. Clin Chim Acta 2009;404:75–8

- 23. Baird DR, Henry M, Liddell KG, et al. Post-operative endophthalmitis: the application of hazard analysis critical control points (HACCP) to an infection control problem. J Hosp Infect 2001;49:14–22

- 24. Bonan B, Martelli N, Berhoune M, et al. The application of hazard analysis and critical control points and risk management in the preparation of anti-cancer drugs. Int J Qual Health Care 2009;21:44–50

- 25. Griffith C, Obee P, Cooper R. The clinical application of hazard analysis critical control points (HACCP). Am J Infect Control 2005;33:e39

- 26. Wreathall J, Nemeth C. Assessing risk: the role of probabilistic risk assessment (PRA) in patient safety improvement. Qual Saf Health Care 2004;13:206–12

- 27. Garnerin P, Pellet-Meier B, Chopard P, et al. Measuring human-error probabilities in drug preparation: a pilot simulation study. Eur J Clin Pharmacol 2007;63:769–76

- 28. Koppel R, Wetterneck T, Telles JL, et al. Workarounds to barcode medication administration systems: Their occurrences, causes, and threats to patient safety. J Am Med Inform Assoc 2008;15:408–23

- 29. Spear SJ, Schmidhofer M. Ambiguity and workarounds as contributors to medical error. Ann Intern Med 2005;142:627–30

- 30. Wooldridge M, Jennings NR. Intelligent agents: Theory and practice. Knowl Eng Rev 1995;10:115–52

- 31. Franklin S, Graesser A. Is it an agent, or just a program?: a taxonomy for autonomous agents. In: Müller JP, Wooldridge MJ, Jennings NR, eds. Intelligent Agents III. Berlin: Springer-Verlag, 1997

- 32. Sierhuis M, Clancey WJ, van Hoof RJJ. Brahms: a multi-agent modelling environment for simulating work processes and practices. International Journal of Simulation and Process Modelling 2007;3:134–52

- 33. Sierhuis M, Jonker C, van Riemsdijk B, et al. Towards organization aware agent-based simulation. International Journal of Intelligent Control and Systems 2009;14:62–76

- 34. Anderson JR, Matessa M, Lebiere C. ACT-R: a theory of higher level cognition and its relation to visual attention. Hum Comput Interact 1997;12:439–62

- 35. Altmann EM, Trafton JG. Memory for goals: an architectural perspective. Proceedings of the Twenty First Annual Meeting of the Cognitive Science Society. Hillsdale, NJ: Erlbaum, 1999:19–24

- 36. Altmann EM, Trafton JG. Memory for goals: an activation-based model. Cogn Sci 2002;26:39–83

- 37. Salvucci DD, Taatgen NA. Threaded cognition: an integrated theory of concurrent multitasking. Psychol Rev 2008;115:101–30

- 38. John BE, Kieras DE. The GOMS family of user interface analysis techniques: comparison and contrast. ACM Trans Comput-Hum Interact 1996;3:320–51

- 39. Hoot NR, LeBlanc LJ, Jones I, et al. Forecasting emergency department crowding: a discrete event simulation. Ann Emerg Med 2008;52:116–25

- 40. Jun JB, Jacobson SH, Swisher JR. Application of discrete-event simulation in health care clinics: a survey. J Oper Res Soc 1999;50:109–23

- 41. Eldabi T, Paul RJ, Young T. Simulation modelling in healthcare: reviewing legacies and investigating futures. J Oper Res Soc 2007;58:262–70

- 42. Laskowski M, Mukhi S. Agent-based simulation of emergency departments with patient diversion. In: Weerasinghe D, ed. Electronic Healthcare. Berlin: Springer, 2009, 25–37

- 43. Cardoen B, Demeulemeester E. Capacity of clinical pathways—a strategic multi-level evaluation tool. J Med Syst 2008;32:443–52

- 44. Catchpole K, Sellers R, Goldman A, et al. Patient handovers within the hospital: translating knowledge from motor racing to healthcare. Qual Saf Health Care 2010;19:318–22

- 45. NSW Health [Internet] Correct Patient, Correct Procedure and Correct Site. 2007. http://www.health.nsw.gov.au/policies/pd/2007/PD2007_079.html (accessed 20 Sep 2010).

- 46. NSW Health [Internet] Hand Hygiene Policy. 2010. http://www.health.nsw.gov.au/policies/pd/2010/PD2010_058.html (accessed 20 Sep 2010).

- 47. Ong M-S, Coiera E. Safety through redundancy: a case study of in-hospital patient transfers. Qual Saf Health Care 2010;19:e32.

- 48. Barlas Y. Formal aspects of model validity and validation in system dynamics. Syst Dyn Rev 1996;12:183–210

- 49. West E. Organisational sources of safety and danger: sociological contributions to the study of adverse events. Qual Health Care 2000;9:120–6

- 50. Singh H, Naik A, Rao R, et al. Reducing diagnostic errors through effective communication: Harnessing the power of information technology. J Gen Intern Med 2008;23:489–94

- 51. Kopec D, Kabir MH, Reinharth D, et al. Human errors in medical practice: Systematic classification and reduction with automated information systems. J Med Syst 2003;27:297–313

- 52. Bates DW, Gawande AA. Improving safety with information technology. N Engl J Med 2003;348:2526–34

- 53. Koppel R, Metlay JP, Cohen A, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA 2005;293:1197–203

- 54. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: The nature of patient care information system-related errors. J Am Med Inform Assoc 2004;11:104–12