Abstract

Methods

Clinical guideline adherence for diagnostic imaging (DI) and acceptance of electronic decision support in a rural community family practice clinic were assessed over 36 weeks. Physicians wrote 904 DI orders, 58% of which were addressed by the Canadian Association of Radiologists guidelines.

Results

Of those orders with guidelines, 76% were ordered correctly; 24% were inappropriate or unnecessary, resulting in a prompt from clinical decision support. Physicians followed suggestions from decision support to improve their DI order on 25% of the initially inappropriate orders. The use of decision support was not mandatory, and use rates varied significantly among physicians. Initially, 40% of physicians reported that decision support disrupted their work flow; this dropped to 16% as physicians gained experience with the software.

Conclusions

Physicians supported the concept of clinical decision support but were reluctant to change clinical habits to incorporate decision support into routine work flow.

Keywords: Decision support, guidelines, diagnostic imaging, family practice, rural catchment area, practice change, clinical decision support, machine learning, clinical guidelines, rural practice

Introduction

Evidence has mounted over the past decade that between 10 and 20% of imaging studies are unnecessary,1 amounting to millions in wasteful spending annually. Because all medical procedures involve risk, inappropriate diagnostic imaging (DI) is also a patient-safety issue.2 Unnecessary exposure to radiation is particularly troubling in children, who are the most vulnerable to radiation-induced cancers.3 4

Clinical guidelines can significantly reduce inappropriate imaging if clinicians follow the guidelines.5–10 Presenting guidelines in interactive electronic format may be more effective than hard-copy formats11 in changing physician clinical behavior to best current practice. The highest probability of guideline effectiveness in clinical decision support appears to be a specific reminder delivered to the clinician about best practice relevant to a specific patient at the time of consultation with that patient.12 13 Median improvement in practice with this methodology was 14% as opposed to half that amount from dissemination of educational materials (8%), audit and feedback (7%), and multifaceted educational intervention involving educational outreach (detailing) (6%).14

Computerized physician order-entry (CPOE) systems are well suited to providing decision support to clinicians in real time during their regular work flow. Evidence is accumulating that CPOE by itself has a positive effect in reducing unnecessary DI orders.15–17 There are, however, two significant and continuing issues in the implementation of computer decision support for DI.

Methods

Participants

The Steinbach Family Medicine Clinic in rural Manitoba was chosen as the project site based on expressions of interest from its lead physician and an initial round of capacity-checking interviews and on-site visits. During the project, the clinic had between 15 and 19 physicians at any one time. The majority (17) were family medicine and general practitioners. Two were surgeons whose use of DI decision support was very narrow and very rare. Physicians joined and left the partnership as their personal circumstances indicated. During the 36 weeks of the project, one physician left, and three joined. There were a number of locums and residents working in the clinic at various times. Set-up and training were accomplished in 8 weeks; full data collection was maintained for 28 weeks. Through the study period, 16 physicians used the CPOE and decision support at least once. Data from these physicians entered analysis.

Although DI ordering through decision support and CPOE was not mandatory, it was made available to any interested on-site physician. Repeated training and individual technical problem-solving were offered on demand throughout the project. All physicians, nurses and clerical staff at the clinic were trained on the decision-support system, but only data from physicians were analyzed. Initially, a few nurses and clerical staff entered DI orders for physicians, but that ceased as soon as physicians realized they were personally required to interact with decision support because any DI ordering decision (ie, to ignore best practice advice supplied by decision support) constituted a legal medical act. This increased involvement of physicians in DI order entry is similar to that observed in other CPOE studies.15

Decision-support software

Two Medicalis software systems were utilized—the computerized physician order entry (CPOE) (SmartReq) and the decision-support system (Decision Support Server)—the latter incorporating The Canadian Association of Radiologists (CAR) DI Referral Guidelines. A project server was housed on-site at the Steinbach clinic to enable decision support to interact with the clinic's electronic medical record (EMR), Jonoke. Decision support communicated with the EMR and DI order entry via web-based handoffs among the servers. The electronic interaction was almost instantaneous and eliminated the necessity of any patient data entry for decision support or DI order creation. The project affected the physicians' usual work flow in two ways:

DI orders could be created electronically (as opposed to locating the written form required by each imaging site and writing out the order, although this function was previously most often delegated to clerical staff); and

Decision support would query all DI orders that contravened the guidelines. That query might ask for more explanatory detail and/or suggest an alternative DI procedure or no DI at all. Although the query or prompt screens were well marked and immediate, each of these query routes would take time for the physician to read, consider, and respond.

Physician work flow with electronic decision support and CPOE

The physician logged into the clinic EMR system to automatically acquire patient data from the EMR database. All physicians did this routinely to access patient history, lab results, current medications, and other clinical details regardless of potential interest in DI.

If the physician wanted DI, a mouse click triggered the CPOE with embedded clinical support.

Within CPOE, the physician ordered an imaging study from a series of drop-down menus and provided relevant clinical information by clicking relevant signs, symptoms, history, and differential diagnoses. Free-text fields were available to provide more detailed information if desired. Even with physicians entering free text, this data input typically took less than 1 min.

The CPOE linked to decision support to determine if the imaging study ordered was appropriate according to the CAR guidelines, based on the clinical information provided. If necessary, the decision-support software requested additional information in order to make this determination.

If the request matched the guidelines, it was considered appropriate and returned to the EMR to record and print the DI requisition. This exchange was almost instantaneous (less than 5 s). The printed DI requisition was either given to the patient to take to an appropriate imaging site or faxed directly to the imaging site.

If the imaging study ordered was not recommended by the guidelines, the relevant guideline appeared on the screen, along with a recommendation for a more appropriate study or suggesting that no imaging was required at that time. A determination of inappropriate DI ordering appeared as quickly as those presenting a match to guidelines: less than 5 s.

The physician could follow the advice of the guideline or continue with the original order by over-riding the guideline prompt. Physician responses to this intended pause for reflection varied. Some over-rode the guideline prompt immediately; others took time to read the presented rationale for an alternate course, then decided whether to accept or ignore the prompt. Physicians typically spent less than 1 min on this review. Four physicians reported that they preferred to put the DI order on hold and return to it at the end of the day, when they could take more time to consider the presented alternative.

Following or over-riding the guideline prompt returned the physician to the EMR to print the DI requisition if still needed. Not including the variable amount of time physicians spent in reviewing the prompt information, completing a DI order after a prompt presentation took 10 s.

All the details of each order process were saved within the software and were available for later analysis.
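The order-review flow described above can be summarized as a small decision function. The following is a minimal sketch under stated assumptions, not the Medicalis implementation: the `Order` type, the `Outcome` labels, the `GUIDELINES` table, and the `review` function are hypothetical names introduced for illustration, and the placeholder indications and studies do not reflect actual CAR guideline content.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    NO_GUIDELINE = "no applicable guideline"
    APPROPRIATE = "order matched guideline"
    FOLLOWED = "prompt shown, advice followed"
    OVERRIDDEN = "prompt shown, original order kept"


@dataclass(frozen=True)
class Order:
    study: str       # imaging study picked from the drop-down menus
    indication: str  # clinical information supplied by the physician


# Hypothetical guideline table (placeholder values only):
# indication -> recommended study, or None when no imaging is advised.
GUIDELINES: dict[str, Optional[str]] = {
    "indication-a": "study-x",
    "indication-b": None,
}


def review(order: Order, accept_prompt: bool) -> tuple[Outcome, Optional[str]]:
    """Return the outcome and the study finally requisitioned (None = cancelled)."""
    if order.indication not in GUIDELINES:
        # No guideline addresses this order; it passes through unchanged.
        return Outcome.NO_GUIDELINE, order.study
    recommended = GUIDELINES[order.indication]
    if recommended == order.study:
        # Order matches the guideline and returns to the EMR for printing.
        return Outcome.APPROPRIATE, order.study
    # Mismatch: a prompt is shown; the physician follows it or over-rides it.
    if accept_prompt:
        return Outcome.FOLLOWED, recommended
    return Outcome.OVERRIDDEN, order.study
```

In this sketch an over-ride is always permitted, mirroring the non-mandatory design described in the text; each call returns a labeled outcome so that every ordering decision can be logged for later analysis.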

Qualitative methods

Individual interviews were conducted with participating physicians at baseline prior to CPOE, halfway through the project and at project conclusion. Two methods were employed: a self-rating analog scale completed by each respondent; and a 15 min interview following a standard interview guide. Interviews were recorded, transcribed, and coded for response themes.

Results

Quantitative results

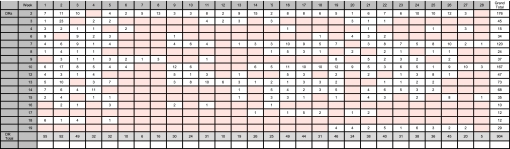

Analysis was based on the automatic data collection maintained by the software system. Participation varied significantly across physicians (table 1).

Table 1.

Decision-support usage rates by week

Three physicians did not use the CPOE or decision support at any time during the study period and are not included in the above table. Sixteen physicians used the DI decision-support system to some extent; five were extensive users. Three of the 16 physicians accounted for 51% of all decision-support use, and two others for an additional 16%. These top five users maintained their use rates throughout the study.

Physicians placed 904 DI orders through the CPOE and decision support. The CAR guidelines addressed 524 (58%) of the orders. Of those DI orders with relevant guidelines, 401 (76%) were appropriate as initially placed. The decision-support system identified 123 (24%) orders as inappropriate and supplied a best-practice prompt. Physicians followed the decision-support advice in 31 (25%) of the cases in which a prompt was presented. Five orders were canceled on prompting to do so; 26 were changed. There was significant variation among physicians in their willingness to change their orders on receiving a prompt: nine changed at least one order during the project; one physician changed nine orders.
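The percentages in the preceding paragraph follow directly from the reported counts. The short check below recomputes them; the variable names and the `pct` helper are illustrative only, and the counts are those given in the text.

```python
def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`."""
    return 100 * part / whole


total_orders = 904    # all DI orders placed through CPOE
with_guideline = 524  # orders addressed by a CAR guideline
appropriate = 401     # matched the guideline as initially placed
prompted = 123        # flagged as inappropriate, prompt shown
followed = 31         # prompts whose advice was followed
cancelled = 5         # orders cancelled after a prompt
changed = 26          # orders changed after a prompt

# Internal consistency of the reported counts.
assert appropriate + prompted == with_guideline
assert cancelled + changed == followed

print(f"addressed by guidelines: {pct(with_guideline, total_orders):.1f}%")  # 58.0%
print(f"initially appropriate:   {pct(appropriate, with_guideline):.1f}%")   # 76.5%
print(f"prompted:                {pct(prompted, with_guideline):.1f}%")      # 23.5%
print(f"prompts followed:        {pct(followed, prompted):.1f}%")            # 25.2%
```

Note that each percentage uses a different denominator: all orders for guideline coverage, guideline-addressed orders for appropriateness, and prompted orders for the follow rate.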

Qualitative results

All participating physicians completed all analog rating scales and almost all qualitative interviews: of a possible 52 interview opportunities, 45 were completed.

On average, the physicians reported themselves as only moderately involved in the project. This result may be an artefact of pooled qualitative measures. Physicians who began as involved users remained so throughout the project (see table 1).

The largest challenge identified was perceived interference with usual work flows, specifically the interactivity between EMR and the CPOE decision support. This interaction was perceived to be too slow, although it clocked at less than 1 s. The time required to interact with decision support was also perceived by physicians to be too long. When asked at the project's conclusion if they would use decision support if the software worked more smoothly, 16/19 (84%) stated ‘yes.’

Half of the physicians initially questioned the validity of the CAR guidelines.

These two concerns were considered solved or corrected by 46% of respondents (21% fully satisfied and a further 25% partially satisfied) at the project's conclusion.

Anonymized performance summaries delivered by email were rated of moderate benefit.

Perceived effects of project participation changed during the project (table 2).

Table 2.

Perceived effects of project participation

| Perceived effect | At baseline | At midpoint | At conclusion |
|---|---|---|---|
| Access to on-site CME seminars | 13/15 (86%) | 10/19 (53%) | 6/19 (32%) |
| Usefulness of CME provided | 12/15 (80%) | 15/19 (79%) | 15/19 (79%) |
| Ordering better diagnostic imaging tests | 10/15 (66%) | 9/19 (47%) | 10/19 (52%) |
| Participation detrimental to practice efficiency | 6/15 (40%) | 13/19 (68%) | 3/19 (16%) |
| Commitment to using diagnostic-imaging decision support | 11/15 (73%) | 10/19 (52%) | 9/19 (47%) |

CME, continuing medical education.

Lessons learned

Acceptance of decision-support technology was primarily affected by the perceived disruption of clinical work flow. Even as actual disruption diminished with improvements in software function and presentation, the perception remained and may have prevented effective use of decision support.

Improving the interface between decision-support software and clinicians is a continuous process requiring regular review with users and the willingness and capability to modify software to suit user groups.

Passive interventions such as those used in this study (individual performance information and continuing medical education (CME) on demand) are not effective in improving physician compliance with best-practice guidelines.

The Medicalis decision-support software with the CAR Guidelines is well suited to clinical-decision support: it is sufficiently configurable to minimize disruption to clinical work flow, and it produces detailed monitoring of DI decision-making by individual physicians in identifiable clinical situations.

Adequate time is required to assess the computer readiness of physician participants and to identify early adopters. Regardless of the time pressure to collect data, it is unwise to introduce new decision-support software to all potential participating physicians at one time. Early adopters tend to be IT-experienced innovators more tolerant of start-up interface problems and interested in correcting them. That initial support will bolster their colleagues' adoption in phased roll-outs.

Effective decision support requires physician acceptance of oversight into clinical decision-making. Effective medical leadership requires continual quality improvement in healthcare delivery. Further studies should investigate methods to optimize the utility of the detailed information produced by decision-support software coupled with evidence-based guidelines for quality improvement at practitioner, group, and systems levels.

Supplementary Material

Appendices

Five appendices are included as supplementary data:

- Behavior-change supports
- Interview protocols
- Line-graph-use data
- Changed orders detail
- Unchanged orders detail

Footnotes

Funding: This study was made possible through financial contributions from Health Canada and from the Canadian Association of Radiologists. The views expressed herein do not necessarily represent the views of either Health Canada or the Canadian Association of Radiologists. Health Canada contract #: HC 6804-15-2008/9300006.

Competing interests: None.

Ethics approval: Ethics approval was provided by the University of Manitoba Research Ethics Board.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Picano E. Sustainability of medical imaging. BMJ 2004;328:578–80

- 2. Smith-Bindman R, Lipson J, Marcus R, et al. Radiation dose associated with common computed tomography examinations and the associated lifetime attributable risk of cancer. Arch Intern Med 2009;169:2078–86

- 3. Huang B, Law MW, Mak HK, et al. Pediatric 64-MDCT coronary angiography with ECG-modulated tube current: radiation dose and cancer risk. Am J Roentgenol 2009;193:539–44

- 4. Graf WD, Kayyali HR, Alexander JJ, et al. Neuroimaging-use trends in non-acute pediatric headache before and after clinical practice parameters. Pediatrics 2008;122:1001–5

- 5. Stiell IG, McKnight RD, Greenberg GH, et al. Implementation of the Ottawa ankle rules. J Am Med Assoc 1994;271:827–32

- 6. Stiell IG, Clement CM, Grimshaw J, et al. Implementation of the Canadian C-Spine rule: prospective 12 centre cluster randomised trial. BMJ 2009;339:b4146

- 7. Auleley GR, Ravaud P, Giraudeau B, et al. Implementation of the Ottawa ankle rules in France. A multicenter randomized controlled trial. J Am Med Assoc 1997;277:1935–9

- 8. Verma S, Hamilton K, Hawkins HH, et al. Clinical application of the Ottawa ankle rules for the use of radiography in acute ankle injuries: an independent site assessment. Am J Roentgenol 1997;169:825–7

- 9. Leddy JJ, Smolinski RJ, Lawrence J, et al. Prospective evaluation of the Ottawa ankle rules in a university sports medicine center: with a modification to increase specificity for identifying malleolar fractures. Am J Sports Med 1998;26:158–65

- 10. Perry JJ, Stiell IG. Impact of clinical decision rules on clinical care of traumatic injuries to the foot and ankle, knee, cervical spine, and head. Injury 2006;37:1157–65

- 11. Garg A, Adhikari N, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. J Am Med Assoc 2005;293:1223–38

- 12. Grimshaw J, Russell I. Achieving health gain through clinical guidelines: developing scientifically valid guidelines. Qual Health Care 1993;2:243–8

- 13. Kawamoto K, Houlihan CA, Balas EA, et al. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ 2005;330:765

- 14. Grimshaw JM, Thomas RE, MacLennan G, et al. Effectiveness and efficiency of guideline dissemination and implementation strategies. Health Technol Assess 2004;8:1–72

- 15. Vartanians VM, Sistrom CL, Weilburg JB, et al. Increasing the appropriateness of outpatient imaging: effects of a barrier to ordering low-yield examinations. Radiology 2010;255:842–9

- 16. Sistrom CL, Dang PA, Weilburg JB, et al. Effect of computerized order entry with integrated decision support on the growth of outpatient procedure volumes: seven-year time series analysis. Radiology 2009;251:147–55

- 17. Rosenthal DI, Weilburg JB, Schultz T, et al. Radiology order entry with decision support: initial clinical experience. J Am Coll Radiol 2006;3:799–806

- 18. Schnipper JL, Linder JA, Palchuk MB, et al. ‘Smart Forms’ in an electronic medical record: documentation-based clinical decision support to improve disease management. J Am Med Inform Assoc 2008;15:513–23

- 19. Cabana MD, Rand CS, Powe NR, et al. Why don't physicians follow clinical practice guidelines? A framework for improvement. J Am Med Assoc 1999;282:1458–65
