Abstract

Objective

To synthesize the literature on clinical decision-support systems' (CDSS) impact on healthcare practitioner performance and patient outcomes.

Design

Literature search on Medline, Embase, Inspec, Cinahl, Cochrane/Dare and analysis of high-quality systematic reviews (SRs) on CDSS in hospital settings. Two-stage inclusion procedure: (1) selection of publications on predefined inclusion criteria; (2) independent methodological assessment of preincluded SRs by the 11-item measurement tool, AMSTAR. Inclusion of SRs with AMSTAR score 9 or above. SRs were thereafter rated on level of evidence. Each stage was performed by two independent reviewers.

Results

17 out of 35 preincluded SRs were of high methodological quality and further analyzed. Evidence that CDSS significantly impacted practitioner performance was found in 52 out of 91 unique studies of the 16 SRs examining this effect (57%). Only 25 out of 82 unique studies of the 16 SRs reported evidence that CDSS positively impacted patient outcomes (30%).

Conclusions

Few studies have found any benefits for patient outcomes, although many of these studies were too small in sample size or too short in duration to reveal clinically important effects. There is significant evidence that CDSS can positively impact healthcare providers' performance, with drug-ordering and preventive care reminder systems as the clearest examples. These outcomes may be explained by the fact that these types of CDSS require minimal patient data, most of which are already available before the advice is (to be) generated: at the time clinicians make their decisions.

Keywords: Quality, machine learning

Introduction

In ‘Crossing the Quality Chasm,’ the Institute of Medicine pointed out the wide variations in healthcare practice, and the inefficiencies, dangers, and inequalities that have resulted from nonoptimal patient care.1 Evidence-based medicine (EBM) aims to reduce practice variation and improve quality of care. It does so by combining the clinical skills and experience of the healthcare professional and the preferences of the patient with the best available external clinical evidence in order to make balanced decisions about medical care.2 Since its introduction in the 1980s, EBM has become widespread and has been adopted by international healthcare organizations such as the WHO and the Institute of Medicine. EBM seems a fairly common-sense solution, but it has proved far from simple to implement. A report by Grol et al provides a brief overview of strategies for the effective implementation of change in patient care.3 One of the interventions discussed is the use of reminders and computers for implementing evidence in daily practice. The report concludes that, among other interventions at the organizational and team level, professional development needs to be built into daily patient care as much as possible. This should preferably take place at the point of care, with clinical decision-support tools and real-time patient-specific reminders to help doctors make the best decisions. Clinical decision support is defined as ‘providing clinicians or patients with computer-generated clinical knowledge and patient-related information, intelligently filtered or presented at appropriate times, to enhance patient care.’4 The clinical knowledge incorporated in clinical decision-support systems (CDSS) can, for instance, be based on the best available evidence as represented in guideline recommendations.

Many different types of clinical tasks can be supported by CDSS. A well-known and frequently applied CDSS is the patient-monitoring device (eg, an ECG or pulse oximeter) that warns of changes in a patient's condition. CDSS integrated in Electronic Medical Record systems (EMRs) and computerized physician order entry systems (CPOEs) can send reminders or warnings about abnormal laboratory test results; check for drug–drug interactions, dosage errors, and other prescribing contraindications such as a patient's allergies; and generate lists of patients eligible for a particular intervention (eg, immunizations or follow-up visits). When a patient's case is complex or rare, or the healthcare practitioner making the diagnosis is inexperienced, a CDSS can help formulate likely diagnoses based on (a) patient data and (b) the system's knowledge base of diseases. The CDSS can then suggest treatments based on treatment guidelines.
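As a rough illustration of the list-generating function mentioned above, the following Python sketch returns the patients for whom a preventive procedure is due. It is not taken from any of the reviewed systems: the `Patient` record, the procedure names, and the recall intervals in `RECALL_INTERVALS` are hypothetical placeholders, not clinical recommendations.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical recall intervals; real values would come from local guidelines.
RECALL_INTERVALS = {
    "influenza_vaccination": timedelta(days=365),
    "blood_pressure_check": timedelta(days=180),
}

@dataclass
class Patient:
    patient_id: str
    # Procedure name -> date it was last performed (absent = never recorded).
    last_done: dict[str, date] = field(default_factory=dict)

def patients_due(patients: list[Patient], procedure: str, today: date) -> list[str]:
    """Return IDs of patients for whom `procedure` is due or has never been recorded."""
    interval = RECALL_INTERVALS[procedure]
    due = []
    for patient in patients:
        last = patient.last_done.get(procedure)
        if last is None or today - last >= interval:
            due.append(patient.patient_id)
    return due

patients = [
    Patient("p1", {"influenza_vaccination": date(2023, 1, 10)}),
    Patient("p2", {"influenza_vaccination": date(2023, 11, 1)}),
    Patient("p3"),
]
print(patients_due(patients, "influenza_vaccination", today=date(2024, 2, 1)))
# ['p1', 'p3']
```

Note that the only inputs are a procedure history and today's date, data a record system would typically already hold.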

Research into the impact of CDSS on healthcare practitioner performance and patient outcomes in hospital settings has increased, and evidence of the effectiveness of CDSS has been synthesized into several systematic reviews (SRs). However, an overview of this evidence based on a critical appraisal of SRs focusing on CDSS impact is not available. Therefore, we set out to provide a synthesis of high-quality SRs examining CDSS interventions in hospital settings. The objective is (a) to summarize their effects on practitioner performance and patient outcome, and (b) to highlight areas where more research is needed.

Methods

Search strategy

To find relevant SRs, we developed a search strategy in cooperation with an experienced clinical librarian. The search strategy was first developed for Medline and later adapted to search Embase, Inspec, Cinahl, and Cochrane/Dare. To identify SRs, we used a multiple-term search strategy as proposed by Montori et al5 together with multiple keywords and Medical Subject Headings (MeSH) terms for the interventions CDSS or CPOE (table 1). No time period or language limitation was applied. Box 1 displays the search strategy for PubMed.

Table 1.

Groups of keywords and Medical Subject Headings (MeSH) terms (in bold) used in the search strategy

| CPOE terms | CDSS terms |
|---|---|
| **Medical order entry systems** | **Decision support systems, clinical** |
| Computerized order entry | Decision-support systems |
| Computerized prescriber order entry | Clinical decision-support systems |
| Computerized provider order entry | Reminder systems |
| Electronic order entry | Computer assisted decision-making |
| **Electronic prescribing** | **Diagnosis, computer-assisted** |
| Electronic physician order entry | **Therapy, computer-assisted** |
| Computerized physician order entry | **Expert systems** |
| **Drug-therapy, computer-assisted** | |

Box 1. Search strategy in PubMed.

(literature review[tiab] OR critical appraisal[tiab] OR meta analysis[pt] OR systematic review[tw] OR medline[tw]) AND (medical order entry systems[mh] OR medical order entry system*[tiab] OR computerized order entry[tiab] OR computerized prescriber order entry[tiab] OR computerized provider order entry[tiab] OR computerized physician order entry[tiab] OR electronic order entry[tiab] OR electronic prescribing[mh] OR electronic prescribing[tiab] OR cpoe[tiab] OR drug-therapy, computer assisted[mh] OR computer assisted drug therapy[tiab] OR decision support systems, clinical[mh] OR decision support system*[tiab] OR reminder system*[tiab] OR decision-making, computer assisted[mh] OR computer assisted decision making[tiab] OR diagnosis, computer assisted[mh] OR computer assisted diagnosis[tiab] OR therapy, computer assisted[mh] OR computer assisted therapy[tiab] OR expert systems[mh] OR expert system*[tiab] OR *CDS*[tiab]).
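The overall structure of Box 1, a block of review-type filter terms combined with AND to a block of OR-ed intervention terms, can be sketched in Python as below. The helper names are hypothetical, the intervention list is abridged from Box 1 for brevity, and the sketch only assembles the query string; it does not submit it to PubMed.

```python
# Hypothetical helpers that assemble a Box 1-style PubMed query string.
REVIEW_FILTER = [
    "literature review[tiab]",
    "critical appraisal[tiab]",
    "meta analysis[pt]",
    "systematic review[tw]",
    "medline[tw]",
]

# Abridged from Box 1; the full strategy lists many more terms.
INTERVENTION_TERMS = [
    "medical order entry systems[mh]",
    "computerized physician order entry[tiab]",
    "cpoe[tiab]",
    "decision support systems, clinical[mh]",
    "reminder system*[tiab]",
    "expert systems[mh]",
]

def or_block(terms: list) -> str:
    """Join synonyms with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"

def build_query(*term_groups: list) -> str:
    """Require at least one term from every group by joining the OR-blocks with AND."""
    return " AND ".join(or_block(group) for group in term_groups)

query = build_query(REVIEW_FILTER, INTERVENTION_TERMS)
print(query)
```

Keeping each concept as its own list makes it easy to adapt the same structure to the field tags of other databases.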

Inclusion of relevant studies

To assess whether publications that were found were relevant, we applied the following inclusion criteria: types of studies, intervention, target groups, and outcome measures.

Studies

Only SRs were eligible for inclusion. To determine whether a publication was an SR, we used the checklist for assessment of systematic reviews of the Dutch Cochrane Centre.6 For the initial screening of titles and abstracts, we considered a review to be systematic if at least (a) Medline had been searched, and (b) the methodological quality of the included studies had been assessed by the reviewer(s). For the full-text screening, we added the AMSTAR criteria7 8 as described hereafter.

Intervention

CDSS combining clinical knowledge with patient characteristics, including CPOE systems with decision-support functionality and CDSS for diagnostic performance.

Target groups

The CDSS interventions studied should be aimed at healthcare professionals such as physicians, nurses, and other practitioners who are directly responsible for patient care in the hospital setting (in- and outpatient). We excluded CDSS interventions aimed at healthcare professionals who are only indirectly involved in patient care, in ancillary clinical departments such as laboratories, radiology, pathology, and physiological function departments.

Outcome measures

Within the SRs, either practitioner performance or patient outcomes should be measured.

A two-stage inclusion process was applied. In the first stage, titles and abstracts of articles identified by the search strategy were screened by two reviewers independently to assess whether these publications met the inclusion criteria. When the title and/or abstract provided insufficient information to determine relevance, full paper copies of the articles were retrieved to determine whether they fulfilled the inclusion criteria. Additionally, the reference lists of the selected full-text papers were searched manually to identify SRs that our search could have missed. Any disagreements between the two reviewers were resolved by discussion and consultation of a third reviewer to reach consensus.

The second stage of inclusion relates to the methodological assessment of the reviews. All reviews that remained after the first stage were assessed with the Assessment of Multiple Systematic Reviews (AMSTAR) tool.7 8 AMSTAR is an 11-item measurement tool, with good reliability and validity, for the assessment of multiple systematic reviews.8 9 The AMSTAR items are scored as ‘Yes,’ ‘No,’ ‘Can't answer,’ or ‘Not applicable.’ The AMSTAR criteria comprise: (1) ‘A priori’ design provided; (2) duplicate study selection/data extraction; (3) comprehensive literature search; (4) status of publication used as inclusion criterion; (5) list of studies (included/excluded) provided; (6) characteristics of included studies documented; (7) scientific quality assessed and documented; (8) appropriate formulation of conclusions; (9) appropriate methods of combining studies; (10) assessment of publication bias; and (11) conflict of interest statement.

The maximum score on AMSTAR is 11; scores of 0–4 indicate that the review is of low quality, 5–8 that it is of moderate quality, and 9–11 that it is of high quality. Data were extracted only from high-quality reviews (ie, those with scores of 9 and above), because low-quality reviews may reach different conclusions than high-quality reviews, and to avoid false conclusions based on low-quality evidence.7
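The scoring and banding rules above can be expressed as a short Python sketch. It assumes that one point is awarded per ‘Yes’ and none for ‘No,’ ‘Can't answer,’ or ‘Not applicable,’ which matches, for example, the Johnston, 1994 and Mack, 2009 rows of Table 2; the function names are illustrative.

```python
# Item answers as in Table 2: "Y" yes, "N" no, "C" can't answer, "NA" not applicable.
def amstar_total(answers: list) -> int:
    """Award one point per 'Y' across the 11 AMSTAR items (assumed scoring rule)."""
    if len(answers) != 11:
        raise ValueError("AMSTAR has exactly 11 items")
    return sum(1 for answer in answers if answer == "Y")

def amstar_band(total: int) -> str:
    """Map a total score to the bands used here: 0-4 low, 5-8 moderate, 9-11 high."""
    if not 0 <= total <= 11:
        raise ValueError("AMSTAR totals range from 0 to 11")
    if total >= 9:
        return "high"
    if total >= 5:
        return "moderate"
    return "low"

johnston_1994 = "Y Y Y Y Y Y Y N Y N Y".split()
mack_2009 = "Y N Y Y N N Y N N N N".split()
for name, answers in [("Johnston, 1994", johnston_1994), ("Mack, 2009", mack_2009)]:
    total = amstar_total(answers)
    print(name, total, amstar_band(total))
# Johnston, 1994 9 high
# Mack, 2009 4 low
```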

Two reviewers undertook independent critical appraisal. All dual-appraised articles were discussed by the two reviewers and a third independent reviewer to verify the appraisal process and to resolve disagreements on individual item scores.

Data extraction and management

Data extraction was independently performed by two reviewers and verified by a third reviewer. The extracted data were categorized into two outcome measures: (a) practitioner performance and (b) patient outcome. The following data were extracted from each included SR using a structured data collection form: clinical settings and target groups of CDSS implementation, number and type of trials included, and main outcomes and conclusions. Separate summaries were made for practitioner performance and patient outcomes. Within these summaries, a distinction was made between general SRs, disease-/therapy-specific SRs, and setting-/patient-population-specific SRs. Results on practitioner performance and patient outcomes were graded on the strength of evidence for improvement. The strength of evidence was based on the randomized controlled trials (RCTs) included in the SRs. An RCT was defined as an experimental design for testing the effectiveness of a clinical decision-support tool in which individuals are randomly assigned to the intervention or a control group (standard procedure) and the outcomes of the groups are compared:

strong evidence: results based on RCTs and effect in 50% or more of the studies;

limited evidence: results based on RCTs and effect in 40% to less than 50% of the studies;

insufficient evidence: results based on non-randomized studies or effects in less than 40% of the studies.
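A minimal sketch of the grading rule above, assuming that ‘results based on RCTs’ can be represented as a single yes/no flag and that the 40% and 50% cut-offs are inclusive lower bounds (so exactly 50% counts as strong and exactly 40% as limited). Integer comparisons avoid floating-point edge cases at the boundaries.

```python
def grade_evidence(rct_based: bool, n_with_effect: int, n_studies: int) -> str:
    """Grade strength of evidence for improvement using the cut-offs listed above."""
    if n_studies <= 0 or not 0 <= n_with_effect <= n_studies:
        raise ValueError("need 0 <= n_with_effect <= n_studies and n_studies > 0")
    if not rct_based:
        return "insufficient"  # non-randomized evidence is never graded higher
    if 2 * n_with_effect >= n_studies:        # share >= 50%
        return "strong"
    if 5 * n_with_effect >= 2 * n_studies:    # 40% <= share < 50%
        return "limited"
    return "insufficient"                     # share < 40%

print(grade_evidence(True, 5, 10), grade_evidence(True, 4, 10), grade_evidence(True, 3, 10))
# strong limited insufficient
```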

Results

Selection of studies

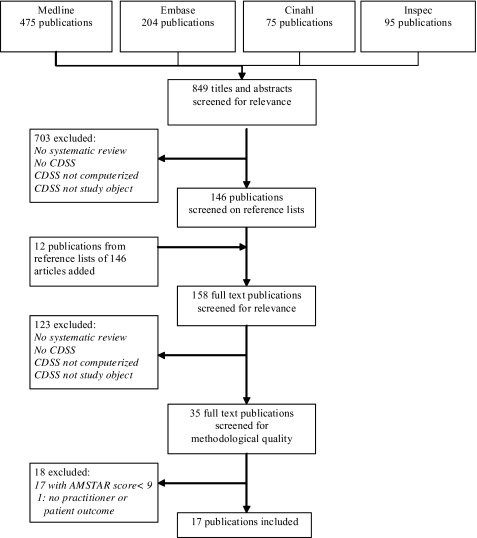

After duplicates had been removed, the searches in the different databases yielded an initial set of 849 references of potential interest. Initial sifting based on title and abstract led to the exclusion of 703 articles, with full agreement between the two reviewers, reducing the first set to 146 references. An additional manual search of the reference lists of the selected studies yielded another 12 potentially relevant references. Full texts of the remaining potentially relevant articles (n=158) were assessed independently by two reviewers against the inclusion criteria. Agreement between reviewers in this phase was 94%, and 115 articles were subsequently excluded. Discussion among the three reviewers was needed for eight references, and agreement was subsequently reached (all eight articles excluded). A final set of 35 references fulfilled the inclusion criteria for type and content of study.

In the following stage, two reviewers independently assessed the 35 included reviews on their methodological quality, using the AMSTAR tool.7 8 Initial reviewer agreement on 354 of the 385 (35×11) individual AMSTAR item scores was reached in this phase (92%); disagreements on score allocations were resolved through discussion with a third reviewer.

On closer inspection, two SRs were excluded because they were preliminary reports in conference proceedings for which the full SR article had also been included.10 11 Of the remaining 33 SRs, 15 had a mean quality score lower than 9 and were excluded,10–26 and 18 (51% of the 35 preincluded SRs) were high-quality SRs that advanced to data extraction and analysis. One of these 18 SRs was excluded because it did not report results on practitioner performance or patient outcomes. The flow diagram of the inclusion process is shown in figure 1. Table 2 provides the critical appraisal results of the included and excluded SRs.
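The selection counts reported above can be checked with simple arithmetic. The Python sketch below replays the flow from the 849 de-duplicated references to the 17 SRs analyzed; the step labels are paraphrases, and all numbers come from the text.

```python
# Replays the study-selection flow; every number is taken from the text.
remaining = 849  # references left after duplicates were removed
steps = [
    ("excluded on title and abstract", -703),
    ("added from manual reference-list search", +12),
    ("excluded on full-text assessment", -115),
    ("excluded after three-reviewer discussion", -8),
    ("preliminary conference reports of included SRs", -2),
    ("AMSTAR score below 9", -15),
    ("no practitioner or patient outcome results", -1),
]

history = [remaining]
for label, change in steps:
    remaining += change
    history.append(remaining)
    print(f"{label:<50} {change:+5d} -> {remaining}")

# Inter-reviewer agreement on individual AMSTAR item scores (35 SRs x 11 items).
item_agreement_pct = round(100 * 354 / (35 * 11))
# Share of the 35 preincluded SRs rated high quality.
high_quality_pct = round(100 * 18 / 35)
```

The intermediate totals (146, 158, 43, 35, 33, 18, 17) match the counts given in the text.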

Figure 1.

Search flow for systematic review literature on clinical decision-support systems. Cochrane/Dare results were all duplicates of the other searches.

Table 2.

Systematic review critical appraisal based on Assessment of Multiple Systematic Reviews (AMSTAR) 11-item measurement tool7 8

| First author, year | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Included | | | | | | | | | | | | |
| Johnston, 1994 | Y | Y | Y | Y | Y | Y | Y | N | Y | N | Y | 9 |
| Hunt, 1998 | Y | Y | Y | Y | Y | Y | Y | Y | Y | N | N | 9 |
| Montgomery, 1998 | Y | Y | Y | Y | Y | Y | Y | Y | Y | N | Y | 10 |
| Chatellier, 1998 | Y | Y | Y | Y | Y | Y | Y | Y | Y | N | N | 9 |
| Walton, 1999 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | 11 |
| Kaushal, 2003 | Y | Y | Y | Y | N | Y | Y | Y | Y | N | Y | 9 |
| Balas, 2004 | Y | Y | Y | Y | Y | Y | Y | Y | Y | N | N | 9 |
| Garg, 2005 | Y | Y | Y | Y | Y | Y | Y | Y | Y | N | Y | 10 |
| Liu, 2006 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | N | 10 |
| Sanders, 2006 | Y | Y | Y | Y | Y | Y | Y | Y | Y | N | N | 9 |
| Randell, 2007 | Y | Y | Y | Y | Y | Y | Y | Y | Y | N | N | 9 |
| Shamliyan, 2007 | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | N | 10 |
| Wolfstadt, 2008 | Y | C | Y | Y | Y | Y | Y | Y | Y | N | Y | 9 |
| Yourman, 2008 | Y | C | Y | Y | Y | Y | Y | Y | Y | N | Y | 9 |
| Mollon, 2009 | Y | Y | Y | Y | Y | N | Y | Y | Y | N | Y | 9 |
| Durieux, 2009 | Y | Y | Y | Y | Y | Y | Y | Y | Y | N | Y | 10 |
| Tan, 2009 | Y | Y | Y | Y | Y | Y | Y | Y | Y | N | Y | 10 |
| Excluded | | | | | | | | | | | | |
| Shiffman, 1999 | Y | N | Y | N | N | Y | Y | Y | N | N | N | 5 |
| Kaplan, 2001 | Y | N | N | N | N | Y | Y | Y | Y | N | N | 5 |
| Montani, 2001 | Y | N | Y | N | N | Y | Y | Y | N | N | N | 6 |
| Jackson, 2005 | Y | Y | Y | N | Y | Y | Y | Y | Y | N | N | 8 |
| Chaudhry, 2006 | Y | Y | Y | Y | Y | Y | Y | N | N | N | Y | 8 |
| Conroy, 2007 | Y | Y | Y | Y | N | Y | Y | N | N | N | N | 6 |
| Eslami, 2007 | Y | Y | Y | Y | Y | N | N | Y | Y | N | N | 7 |
| Georgiou, 2007 | Y | Y | Y | N | N | Y | Y | Y | N | N | N | 6 |
| Rommers, 2007 | Y | N | Y | Y | N | N | Y | Y | Y | N | N | 6 |
| Shebl, 2007 | Y | N | Y | Y | N | Y | Y | Y | N | N | Y | 7 |
| Sintchenko, 2007 | Y | N | Y | Y | Y | Y | Y | Y | Y | N | N | 8 |
| Ammenwerth, 2008 | Y | Y | Y | N | Y | Y | Y | Y | Y | N | N | 8 |
| Mack, 2009 | Y | N | Y | Y | N | N | Y | N | N | N | N | 4 |
| Van Rosse, 2009 | Y | N | Y | Y | Y | Y | Y | N | N | N | N | 6 |
| Schedlbauer, 2009 | Y | Y | Y | N | Y | N | Y | Y | Y | N | N | 7 |

Scale of item score: C, can't answer; N, no; NA, not applicable; Y, yes.

The AMSTAR criteria are (1) a priori design; (2) duplicate study selection and data extraction; (3) comprehensive literature search; (4) inclusive publication status; (5) included studies provided; (6) characteristics of included studies provided; (7) quality assessment of studies; (8) study quality used appropriately in formulating conclusions; (9) appropriate methods used to combine studies; (10) publication bias assessed; and (11) conflict of interest stated.

Synthesis of evidence

The results of the 17 included SRs are summarized in tables 3 and 4.27–43 The SRs were published between 1994 and 2009. In total, these SRs included 411 references, representing 229 unique studies, of which 188 were RCTs. Of these 188 unique RCTs, 108 studied practitioner performance or patient outcomes. No increase in SR quality, in terms of fulfillment of the AMSTAR criteria, was visible over the years.

Sixteen of the 17 SRs examined the influence of CDSS on practitioner performance: nine general SRs,27–33 40 41 five disease- or therapy-specific SRs34–37 42 and two setting- or patient-population-specific SRs.38 39 Evidence that CDSS significantly impacted practitioner performance was found in 52 out of 91 unique studies of the 16 SRs that examined this effect (57%). Twelve of these 16 SRs found strong evidence that CDSS improved practitioner performance: six general SRs,27–32 four disease- or therapy-specific SRs34–37 and two setting- or patient-population-specific SRs.38 39 Two general SRs found limited evidence,40 41 and one general SR33 and one disease- or therapy-specific SR42 found insufficient evidence that CDSS impacted practitioner performance. Findings were mainly positive for computer reminder systems for preventive care and for computer-assisted drug ordering and dosing systems. Preventive care CDSS led to improvements in the management of high blood pressure, diabetes care, and asthma care. Drug-prescribing CDSS resulted in improvements in clinicians' ordering of drug dosages and frequencies, decreases in serious medication ordering errors, and adequate drug concentrations in patients. There was insufficient evidence that CDSS improved anticoagulant prescribing by physicians.

Table 3.

Levels of evidence for clinical decision-support systems (CDSSs) impacting practitioner performance and patient outcomes of general systematic reviews

| First author, year | CDSS focus | No of trials and subjects | Conclusions |
|---|---|---|---|
| Johnston, 1994 | Healthcare practitioners | 28 RCTs; 41 924 patients | |
| Hunt, 1998 | Healthcare practitioners | 68 RCTs; ∼65 000 patients | |
| Walton, 1999 | Healthcare practitioners | Six RCTs, one non-randomized; 671 patients | |
| Kaushal, 2003 | | Seven RCTs, three pre–post, two non-randomized; ∼19 000 patients | |
| Garg, 2005 | Healthcare practitioners | 88 RCTs, 12 controlled non-randomized trials; ∼92 895 patients, 3826 practitioners | |
| Randell, 2007 | Clinical nurses | Eight RCTs; ∼24 000 patients, ∼100 nurses | |
| Shamliyan, 2007 | Healthcare practitioners | One RCT, eight prospective non-randomized, three retrospective studies; ∼200 000 patients | |
| Wolfstadt, 2008 | Healthcare practitioners | 10 prospective non-randomized; ∼300 000 patients | |
| Mollon, 2009 | Healthcare practitioners | 41 RCTs; 612 556 patients, 2963 providers | |
| Durieux, 2009 | Healthcare practitioners | 20 RCTs, three controlled trials; ∼3000 patients | |

IE, insufficient evidence; LE, limited evidence; RCT, randomized controlled trial; SE, strong evidence.

Table 4.

Levels of evidence for clinical decision-support systems (CDSSs) impacting practitioner performance and patient outcomes of disease-/therapy-specific and setting-/patient-population-specific systematic reviews

| First author, year | CDSS focus | No of trials and subjects | Conclusions |
|---|---|---|---|
| Disease-/therapy-specific systematic reviews | | | |
| Montgomery, 1998 | Hypertension | Seven RCTs; 11 962 patients, 91 practices (note: three unique studies) | |
| Chatellier, 1998 | Anticoagulant therapy | Nine RCTs; 1336 patients | |
| Balas, 2004 | Diabetes care | 44 RCTs, 31 CDSS focused; 6109 patients | |
| Liu, 2006 | Acute abdominal pain | One RCT; 5193 patients | |
| Sanders, 2006 | Asthma care | 18 RCTs, three uncontrolled trials, nine CDSS focused; 5757 patients | |
| Setting/patient-population-specific systematic reviews | | | |
| Yourman, 2008 | Medication prescribing for adults ≥60 years | Five RCTs, one pre-/poststudy, one cohort study, three interrupted time series | |
| Tan, 2009 | Effects of CDSS on neonatal care | Three RCTs; 282 patients, 27 health professionals | |

IE, insufficient evidence; LE, limited evidence; RCT, randomized controlled trial; SE, strong evidence.

Sixteen out of the 17 SRs studied the impact of CDSS on patient outcomes. Evidence that CDSS significantly impacted patient outcomes was found in 25 out of 81 unique studies of the 16 SRs that examined this effect (30%). These effects were related to drug ordering and dosing systems and CDSS for preventive care and disease management. Only three of these 16 SRs29 35 37 found strong evidence that CDSS impacted patient outcomes: one general SR29 and two disease-/therapy-specific SRs.35 37 Two general SRs found limited evidence,30 41 and the remaining 11 SRs found insufficient evidence: seven general,27 28 31–33 40 41 two disease-/therapy-specific34 36 and the two setting-/patient-population-specific SRs.38 39

Discussion

Quality of studies included in the systematic reviews

Although we synthesized only the findings of SRs with an AMSTAR score of 9–11, the methodological quality of the studies included in these SRs was a major point of discussion. The SRs included a total of 229 unique studies, 188 of which were RCTs (82%). Non-randomized, uncontrolled designs may yield biased, overestimated effects of CDSS. None of the 10 studies in the SR by Wolfstadt et al,43 for example, were RCTs, which considerably weakens their findings. Risk of contamination of results was another concern in the RCTs included in at least three SRs.32 37 38 Walton et al29 concluded that a common bias in many of the studies in their SR was that the same clinicians treated patients allocated to the CDSS intervention and to the control condition. As a result, the effects of the CDSS may spill over into the control group. Contamination of the control group in this manner would tend to make it more difficult to show a beneficial effect of CDSS. Finally, Liu et al36 reported that uncontrolled before–after studies and interrupted time series, which take only limited account of known confounding factors and none of unknown ones, have been the most popular designs for evaluating CDSS for acute abdominal pain.

Regarding the lack of positive findings on patient outcomes, many authors suggested that this may be due to the small sample sizes of the original studies, which were consequently underpowered.9 28 38 Furthermore, follow-up periods in most studies were not long enough to assess long-term differences in patient outcomes related to the computerized interventions (eg, see Shamliyan et al33). Non-significant results from studies with small samples or short follow-up periods are at risk of being overinterpreted as evidence that CDSS have no effect. Fortunately, Hunt et al28 and Garg et al31 indicate that the number and methodological quality of trials have improved over time. An increase in RCTs that include a power analysis to calculate the minimum sample size required to show an impact of CDSS was also reported.
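To illustrate why small trials are easily underpowered for patient outcomes, the sketch below applies one standard normal-approximation formula for comparing two proportions (two-sided test, equal group sizes). It is not the method of any particular trial in the reviewed SRs, and the event rates in the example are hypothetical.

```python
import math
from statistics import NormalDist

def n_per_group(p_control: float, p_intervention: float,
                alpha: float = 0.05, power: float = 0.80) -> int:
    """Patients needed per arm to detect p_control vs p_intervention
    (two-sided test, normal approximation, equal allocation)."""
    if p_control == p_intervention:
        raise ValueError("proportions must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_control + p_intervention) / 2
    spread = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
              + z_beta * math.sqrt(p_control * (1 - p_control)
                                   + p_intervention * (1 - p_intervention)))
    return math.ceil(spread ** 2 / (p_control - p_intervention) ** 2)

# Hypothetical adverse-event rates: 10% without CDSS, 5% with CDSS.
print(n_per_group(0.10, 0.05))
```

Under these assumptions, halving a hypothetical 10% event rate to 5% needs roughly 435 patients per arm, whereas detecting a smaller reduction from 10% to 8% needs several thousand per arm.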

Synthesis of the systematic reviews results

It is clear from our synthesis that few SRs have found benefits for patient outcomes, although many of the underlying studies were too small in sample size or too short in duration to reveal clinically important effects for patients. There is, however, significant evidence that CDSS can positively impact healthcare providers' performance, with preventive care reminder systems and drug-prescribing systems as the clearest examples. Exceptions are anticoagulant prescribing systems, for which the findings thus far are inconclusive. Studies of diagnostic CDSS are likewise less positive.

A first explanation for the findings on diagnostic CDSS is evidence suggesting that clinicians, drawing on their clinical experience, are better able than diagnostic CDSS to rule out alternative diagnoses. This may lower the impact of these CDSS in clinical practice. Moreover, the specificity of diagnostic advice varies considerably among diagnostic CDSS.36 The specificity of computer-generated advice is also known to strongly influence whether physicians adhere to the advice: low specificity results in computer-advice fatigue and in physicians ignoring the advice.12 Second, the diagnostic reasoning models of these CDSS often require a large number of patient data items (demographic data, data on complaints, symptoms, previous history, physical examination, laboratory, and other tests) to deliver decision support. As long as these data are not electronically available, for example in an electronic medical record (EMR), clinicians must enter all these data items manually. The burden of data entry may lead them to give up on the CDSS; as a consequence, they may perform no better than unaided clinicians. There is evidence that cumbersome data entry reduces clinicians' satisfaction with CDSS and leads them to abandon it.12 When data entry is incomplete, the diagnoses generated by CDSS will be less accurate, which may also reduce their impact in practice. Part of the data that diagnostic CDSS need only becomes available during the clinical process, which delays the moment at which these CDSS can deliver their advice. Advice that arrives too late in users' workflow is more likely to be overridden. There is indeed evidence that the impact of diagnostic CDSS is lowered if their output does not become available at the point in clinicians' workflow when it is needed.36 44

Unlike diagnostic CDSS, most preventive care and drug-prescribing CDSS require only a limited number of patient data items as input. Preventive care reminder systems are mostly applied to routine tasks (blood-pressure tests, Pap smears, vaccinations): they prompt doctors to call patients in for a procedure, or alert them that a procedure is due when the patient is at the physician's office. Most drug-prescribing CDSS warn clinicians when there is a drug interaction or an allergy listed in the patient's data file, or when they have ordered an unusual dose or frequency of a drug. Only a few drug-prescribing CDSS can also check for drug–disease and/or drug–lab interactions and offer the advanced feature of patient-specific dose calculation. The majority of these CDSS therefore require patient data that are largely available before the advice is (to be) generated: at the time and place clinicians make their decisions. It has been shown that computer advice improves providers' choice of the right drug and reduces the likelihood of adverse drug events when it is delivered at the time it is most needed.33 45 Usage patterns of diagnostic CDSS thus seem to depend on their complexity, the number of additional data items to collect, the ease of data entry, and the extra time needed to work with the CDSS.36 Several studies of diagnostic aids indeed suggest that the CDSS were inefficient because they required more time and effort from the user than the paper-based situation.31 36 In contrast, preventive care and drug-prescribing CDSS require minimal or no extra data input from the user beyond the data already produced in the context of a patient visit or ordering task. As a result, these types of CDSS minimize interruption of the user's workflow.
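As a sketch of how little input such checks need, the Python example below screens a new order against only the patient's active medications, allergy list, and a dose ceiling, all data that would typically already be in the EMR or CPOE at ordering time. The drug names, the interaction table, and the dose limits are hypothetical placeholders rather than pharmacological facts; real systems use curated drug knowledge bases, and frequency checks are omitted here for brevity.

```python
from dataclasses import dataclass

# Hypothetical knowledge base: placeholder drug names and limits, not clinical data.
INTERACTING_PAIRS = {frozenset({"drug_a", "drug_b"})}
MAX_DOSE_MG = {"drug_a": 100, "drug_c": 20}

@dataclass
class Order:
    drug: str
    dose_mg: float

def check_order(order: Order, active_drugs: list, allergies: set) -> list:
    """Return warnings for a new order using only data available at ordering time."""
    warnings = []
    if order.drug in allergies:
        warnings.append(f"allergy: patient has a recorded allergy to {order.drug}")
    for other in active_drugs:
        if frozenset({order.drug, other}) in INTERACTING_PAIRS:
            warnings.append(f"interaction: {order.drug} with {other}")
    limit = MAX_DOSE_MG.get(order.drug)
    if limit is not None and order.dose_mg > limit:
        warnings.append(f"dose: {order.dose_mg} mg exceeds limit of {limit} mg")
    return warnings

# Two warnings are expected here: an interaction and a dose above the limit.
print(check_order(Order("drug_a", 150), active_drugs=["drug_b"], allergies={"drug_c"}))
```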

Anticoagulant prescribing CDSS share certain features with diagnostic CDSS and are therefore more complex than other drug-prescribing CDSS. Anticoagulant therapy must be supervised carefully by the clinician because it carries a number of risks. For example, many drugs can interact dangerously with anticoagulants, and the patient needs to be monitored continuously for complications. Anticoagulation therapy guidelines thus vary by patient and situation, and the clinician must take care to confirm that the course of therapy is appropriate.46 Management of this therapy by CDSS therefore requires a full patient history dataset to capture the patient's lifestyle and to identify any risk factors that could complicate the therapy. These factors likewise complicate data entry, because all of these data are needed before the CDSS can advise clinicians on their decisions and on managing the therapy over the course of its administration. Together, these aspects may explain the low impact of anticoagulant prescribing CDSS in practice.

The efficacy of CDSS can be improved by increasing the sensitivity and specificity of their advice, minimizing the need for manual input of (extra) patient data, and delivering the computer advice at the time clinicians make decisions. The future impact of CDSS will therefore depend on (1) progress in biomedical-informatics research on knowledge discovery and reasoning, and (2) the development of integrated environments in which EMRs and CDSS are merged. Research progress in knowledge discovery and reasoning has been impressive over the past decade, with advanced machine learning, data-mining techniques, and temporal reasoning as a few examples. Even more sophisticated knowledge discovery techniques that permit the integration of clinical expertise with machine-learning methods are under way.47 Less progress, however, has been made in integrating and sharing this knowledge in EMRs. The current lack of commonly accepted terminologies, ontologies, and standards for intelligent interfacing makes the electronic exchange and interoperation of healthcare data and knowledge hard to achieve. To fully realize the potential of EMRs, the challenges ahead are to define and reach agreement on these communication and data-sharing standards, and to build complete datasets coded according to agreed-upon terminological systems.47 Future CDSS should be integrated with these EMRs and provide their decision support at the right time with minimal interruption of clinicians' workflow. Physicians seem to perform better when CDSS automatically prompt them than when they have to initiate the interaction themselves.45 This suggests that these systems should work in the background, continuously checking whether the care (to be) delivered to individual patients accords with applicable guidelines. The CDSS should then deliver its advice only when clinicians do not follow these guideline recommendations or when unforeseen patient outcomes occur.
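The background-monitoring design described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any system in the reviewed SRs: the record structure, the rule format, and the names `PatientRecord`, `GuidelineRule`, `check_patient` and `monitor` are all hypothetical, and the rule and patient data are placeholders rather than clinical content. The sketch covers only the guideline-deviation trigger; detecting unforeseen patient outcomes would need a further check.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PatientRecord:
    """Hypothetical, simplified EMR snapshot for one patient."""
    patient_id: str
    facts: dict[str, bool] = field(default_factory=dict)
    orders: set[str] = field(default_factory=set)


@dataclass
class GuidelineRule:
    """Hypothetical rule format: when `applies` is true, `expected_order` should be present."""
    name: str
    applies: Callable[[PatientRecord], bool]
    expected_order: str


def check_patient(record: PatientRecord, rules: list[GuidelineRule]) -> list[str]:
    """Return advice only for rules that apply to this patient but are not followed."""
    return [
        f"{record.patient_id}: {rule.name}, consider '{rule.expected_order}'"
        for rule in rules
        if rule.applies(record) and rule.expected_order not in record.orders
    ]


def monitor(records: list[PatientRecord], rules: list[GuidelineRule]) -> list[str]:
    """Silent background pass over all records; an empty list means no interruption."""
    return [msg for record in records for msg in check_patient(record, rules)]


# Placeholder rule and patients (hypothetical, not clinical content).
rules = [GuidelineRule("rule A", lambda r: r.facts.get("condition_x", False), "order_y")]
records = [
    PatientRecord("p1", {"condition_x": True}, {"order_y"}),  # rule followed: silent
    PatientRecord("p2", {"condition_x": True}, set()),        # deviation: advice
    PatientRecord("p3", {"condition_x": False}, set()),       # rule not applicable
]
print(monitor(records, rules))  # ["p2: rule A, consider 'order_y'"]
```

Only patient p2 produces a message, which reflects the design choice in the text: the system stays silent while care matches the guideline and prompts the clinician only on a deviation.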

Advantages and limitations of selection process

This synthesis of high-quality SRs on CDSS has several strengths and weaknesses. First, the literature search was thorough: we did not restrict it to a particular time period, and we screened 849 SRs for relevance. Second, we critically appraised the quality of the preincluded SRs with the standardized 11-item AMSTAR measurement tool. Third, two independent reviewers performed the preselection of SRs against predefined inclusion criteria, the quality assessment of the SRs, and the final data extraction. Fourth, we manually searched the reference lists of the selected SRs to identify SRs that our literature search might have missed.

One limitation of the study is that we excluded SRs with an AMSTAR score below 9. One could argue that not all AMSTAR items should carry equal weight in the critical appraisal of SRs. Furthermore, in certain clinical domains the quality of candidate studies may be systematically poorer than in other domains. As a consequence, SRs of CDSS studies in these domains might never achieve a score of 9 or above, even though these same studies may be the benchmark by which clinical guidelines are set.
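To make the equal-weighting point concrete, the following sketch applies the inclusion rule used in this synthesis (an AMSTAR score of 9 or above). It assumes the common AMSTAR convention that each of the 11 items answered "yes" scores one point and every other answer scores zero; the function names are hypothetical. Because every item counts once, a review that lacks a publication-bias assessment scores the same as one that lacks any other single item.

```python
AMSTAR_ITEMS = 11
INCLUSION_THRESHOLD = 9  # cut-off used in this synthesis


def amstar_score(answers: list[str]) -> int:
    """Equal-weight score (assumed convention): each 'yes' adds one point.

    Any other answer (e.g. 'no', "can't answer", 'not applicable') adds nothing.
    """
    if len(answers) != AMSTAR_ITEMS:
        raise ValueError(f"expected {AMSTAR_ITEMS} answers, got {len(answers)}")
    return sum(1 for answer in answers if answer.strip().lower() == "yes")


def include_review(answers: list[str]) -> bool:
    """Inclusion rule: AMSTAR score of 9 or above."""
    return amstar_score(answers) >= INCLUSION_THRESHOLD


# Hypothetical answer sheets for two reviews.
borderline = ["yes"] * 9 + ["no", "can't answer"]
weaker = ["yes"] * 8 + ["no"] * 3
print(amstar_score(borderline), include_review(borderline))  # 9 True
print(amstar_score(weaker), include_review(weaker))          # 8 False
```

A weighted variant would replace the unit points with item-specific weights; the sketch deliberately keeps the unweighted sum, since that is the scoring whose limitation is discussed here.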

Another limitation of the study is that 14 of the 17 SRs with an AMSTAR score of 9 or higher did not assess the likelihood of publication bias. As explained earlier, studies that failed to show an effect may have been less likely to be published and therefore to be included in the SRs; such publication bias limits the conclusions of this synthesis on the impact of CDSS on practitioner performance and patient outcomes.

On the one hand, we classified an effect in 50% or more of the RCTs as strong evidence, an effect in 40% to less than 50% of the RCTs as limited evidence, and an effect in fewer than 40% of the RCTs or in non-randomized studies as insufficient evidence. These ranges, along with the strict inclusion criteria of this synthesis, may have led us to underestimate CDSS success rates and their impact on practitioners' performance and patient outcomes. On the other hand, the large overlap in studies included in the SRs may have led to an overestimation of CDSS impact rates. We therefore counted the unique studies among all studies included in the SRs and provided overall estimates of the evidence that CDSS significantly impacted practitioner performance and patient outcomes. Finally, the conclusions of this synthesis are probably limited insofar as the included SRs described CDSS, some of which were developed more than a decade ago. As discussed, CDSS are constantly evolving; newer generations of CDSS probably have greater capability and usability, and will therefore have a different impact on practitioners' performance and patient outcomes.
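The evidence-level thresholds and the unique-study counting described above can be expressed as a short Python sketch. The function names and the toy data are hypothetical; treating `total_rcts == 0` as "evidence from non-randomized studies only" and rejecting conflicting reports of the same study across SRs are assumptions of this sketch, not rules stated in the methods. The toy data show how counting every SR-study pair inflates the apparent success rate when a positive study appears in more than one SR.

```python
def evidence_level(rcts_with_effect: int, total_rcts: int) -> str:
    """Map the share of RCTs showing an effect to a level of evidence.

    Integer comparisons avoid floating-point trouble at the 40% and 50% cut-offs.
    Assumption: total_rcts == 0 stands for non-randomized evidence only.
    """
    if total_rcts == 0:
        return "insufficient"
    if 2 * rcts_with_effect >= total_rcts:        # 50% or more
        return "strong"
    if 5 * rcts_with_effect >= 2 * total_rcts:    # 40% to less than 50%
        return "limited"
    return "insufficient"                          # fewer than 40%


def count_unique(reviews: dict[str, dict[str, bool]]) -> tuple[int, int]:
    """Return (studies with effect, unique studies) across overlapping SRs.

    `reviews` maps an SR label to {study label: significant effect?}.
    A study included in several SRs is counted once; conflicting reports
    for the same study raise an error (an assumption of this sketch).
    """
    merged: dict[str, bool] = {}
    for sr, studies in reviews.items():
        for study, effect in studies.items():
            if study in merged and merged[study] != effect:
                raise ValueError(f"{sr} disagrees on study {study}")
            merged[study] = effect
    return sum(merged.values()), len(merged)


def count_naive(reviews: dict[str, dict[str, bool]]) -> tuple[int, int]:
    """Count every SR-study pair, so overlapping studies are counted repeatedly."""
    pairs = [effect for studies in reviews.values() for effect in studies.values()]
    return sum(pairs), len(pairs)


def percent(k: int, n: int) -> int:
    """Whole-number percentage of k out of n."""
    return round(100 * k / n)


# Hypothetical toy data: positive study s1 appears in both SRs.
reviews = {
    "SR-A": {"s1": True, "s2": False},
    "SR-B": {"s1": True, "s3": False},
}
print(count_naive(reviews))   # (2, 4): 50%
print(count_unique(reviews))  # (1, 3): 33%
```

Applied to the totals reported in the abstract, `percent(52, 91)` gives 57 and `percent(25, 82)` gives 30.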

Acknowledgments

We thank A Leenders, clinical librarian, for the development of the search strategy.

Footnotes

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed

References

1. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington DC: National Academy of Sciences, 2001.
2. Sackett DL, Rosenberg WM, Gray JA, et al. Evidence based medicine: what it is and what it isn't. BMJ 1996;312:71–2.
3. Grol R, Grimshaw J. From best evidence to best practice: effective implementation of change in patients' care. Lancet 2003;362:1225–30.
4. Osheroff JA, Pifer EA, Teich JM, et al. Improving Outcomes with Clinical Decision Support: An Implementer's Guide. Chicago: Healthcare Information and Management Systems Society, 2005.
5. Montori VM, Wilczynski NL, Morgan D, et al. Optimal search strategies for retrieving systematic reviews from Medline: analytical survey. BMJ 2005;330:68.
6. Dutch Cochrane Centre. http://www.cochrane.nl/Files/documents/Checklists/SR-RCT.pdf, 2009.
7. Shea BJ, Grimshaw JM, Wells GA, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol 2007;7:10.
8. Shea BJ, Bouter LM, Peterson J, et al. External validation of a measurement tool to assess systematic reviews (AMSTAR). PLoS One 2007;2:e1350.
9. Shea BJ, Hamel C, Wells GA, et al. AMSTAR is a reliable and valid measurement tool to assess the methodological quality of systematic reviews. J Clin Epidemiol 2009;62:1013–20.
10. Niès J, Colombet I, Degoulet P, et al. Determinants of success for computerized clinical decision support systems integrated in CPOE systems: a systematic review. AMIA Annu Symp Proc 2006:594–8.
11. Langton KB, Johnston ME, Haynes RB, et al. A critical appraisal of the literature on the effects of computer-based clinical decision support systems on clinician performance and patient outcomes. Proc Annu Symp Comput Appl Med Care 1992:626–30.
12. Shiffman RN, Liaw Y, Brandt CA, et al. Computer-based guideline implementation systems: a systematic review of functionality and effectiveness. J Am Med Inform Assoc 1999;6:104–14.
13. Kaplan B. Evaluating informatics applications—clinical decision support systems literature review. Int J Med Inform 2001;64:15–37.
14. Montani S, Bellazzi R, Quaglini S, et al. Meta-analysis of the effect of the use of computer-based systems on the metabolic control of patients with diabetes mellitus. Diabetes Technol Ther 2001;3:347–56.
15. Jackson CL, Bolen S, Brancati FL, et al. A systematic review of interactive computer-assisted technology in diabetes care. Interactive information technology in diabetes care. J Gen Intern Med 2006;21:105–10.
16. Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med 2006;144:742–52.
17. Conroy S, Sweis D, Planner C, et al. Interventions to reduce dosing errors in children: a systematic review of the literature. Drug Saf 2007;30:1111–25.
18. Eslami S, de Keizer NF, Abu-Hanna A. The impact of computerized physician medication order entry in hospitalized patients—a systematic review. Int J Med Inform 2008;77:365–76.
19. Georgiou A, Williamson M, Westbrook JI, et al. The impact of computerised physician order entry systems on pathology services: a systematic review. Int J Med Inform 2007;76:514–29.
20. Rommers MK, Teepe-Twiss IM, Guchelaar HJ. Preventing adverse drug events in hospital practice: an overview. Pharmacoepidemiol Drug Saf 2007;16:1129–35.
21. Shebl NA, Franklin BD, Barber N. Clinical decision support systems and antibiotic use. Pharm World Sci 2007;29:342–9.
22. Sintchenko V, Magrabi F, Tipper S. Are we measuring the right end-points? Variables that affect the impact of computerised decision support on patient outcomes: a systematic review. Med Inform Internet Med 2007;32:225–40.
23. Ammenwerth E, Schnell-Inderst P, Machan C, et al. The effect of electronic prescribing on medication errors and adverse drug events: a systematic review. J Am Med Inform Assoc 2008;15:585–600.
24. Mack EH, Wheeler DS, Embi PJ. Clinical decision support systems in the pediatric intensive care unit. Pediatr Crit Care Med 2009;10:23–8.
25. Van Rosse F, Maat B, Rademaker CM, et al. The effect of computerized physician order entry on medication prescription errors and clinical outcome in pediatric and intensive care: a systematic review. Pediatrics 2009;123:1184–90.
26. Schedlbauer A, Prasad V, Mulvaney C, et al. What evidence supports the use of computerized alerts and prompts to improve clinicians' prescribing behavior? J Am Med Inform Assoc 2009;16:531–8.
27. Johnston ME, Langton KB, Haynes RB, et al. Effects of computer-based clinical decision support systems on clinician performance and patient outcome. A critical appraisal of research. Ann Intern Med 1994;120:135–42.
28. Hunt DL, Haynes RB, Hanna E, et al. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA 1998;280:1339–46.
29. Walton R, Dovey S, Harvey E, et al. Information in practice. Computer support for determining drug dose: systematic review and meta-analysis. BMJ 1999;318:984–90.
30. Kaushal R, Shojania KG, Bates DW. Effects of computerized physician order entry and clinical decision support systems on medication safety: a systematic review. Arch Intern Med 2003;163:1409–16.
31. Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA 2005;293:1223–38.
32. Mollon B, Chong J, Holbrook AM, et al. Features predicting the success of computerized decision support for prescribing: a systematic review of RCTs. BMC Med Inform Decis Mak 2009;9:11–20.
33. Shamliyan TA, Duval S, Du J, et al. Just what the doctor ordered. Review of the evidence of impact of computerized physician order entry systems on medication errors. Health Serv Res 2008;43:32–53.
34. Montgomery AA, Fahey T. A systematic review of the use of computers in the management of hypertension. J Epidemiol Community Health 1998;52:520–5.
35. Balas EA, Krishna S, Kretschmer RA, et al. Computerized knowledge management in diabetes care. Med Care 2004;42:610–21.
36. Liu LY, Wyatt JC, Deeks JJ, et al. Systematic reviews of clinical decision tools for acute abdominal pain. Health Technol Assess 2006;10:1–167, iii–iv.
37. Sanders DL, Aronsky D. Biomedical informatics applications for asthma care: a systematic review. J Am Med Inform Assoc 2006;13:418–27.
38. Yourman L, Concato J, Agostini JV. Use of computer decision support interventions to improve medication prescribing in older adults: a systematic review. Am J Geriatr Pharmacother 2008;6:119–29.
39. Tan K, Dear PR, Newell SJ. Clinical decision support systems for neonatal care. Cochrane Database Syst Rev 2005;(2):CD004211.
40. Randell R, Mitchell N, Dowding D, et al. Effects of computerized decision support systems on nursing performance and patient outcomes: a systematic review. J Health Serv Res Policy 2007;12:242–9.
41. Durieux P, Trinquart L, Colombet I, et al. Computerized advice on drug dosage to improve prescribing practice. Cochrane Database Syst Rev 2008;(3):CD002894.
42. Chatellier G, Colombet I, Degoulet P. An overview of the effect of computer-assisted anticoagulant therapy on the quality of anticoagulation. Int J Med Inform 1998;49:311–20.
43. Wolfstadt JI, Gurwitz JH, Field TS, et al. The effect of computerized physician order entry with clinical decision support on the rates of adverse drug events: a systematic review. J Gen Intern Med 2008;23:451–8.
44. Khajouei R, Jaspers MW. The impact of CPOE medication systems' design aspects on usability, workflow and medication orders. Methods Inf Med 2010;49:3–19.
45. Kawamoto K, Houlihan CA, Balas EA, et al. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ 2005;330:765.
46. American Heart Association/American College of Cardiology Foundation Guide to Anticoagulation Therapy. http://www.heart.org/HEARTORG/Conditions/CongenitalHeartDefects/TheImpactofCongenitalHeartDefects/Anticoagulation_UCM_307110_Article.jsp (accessed 2 Dec 2010).
47. Patel VL, Shortliffe EH, Stefanelli M, et al. The coming of age of artificial intelligence in medicine. Artif Intell Med 2009;46:5–17.