Abstract

Aim: This study investigated brain areas involved in the perception of dynamic facial expressions of emotion.

Methods: A group of 30 healthy subjects underwent fMRI while passively viewing prototypical facial expressions of fear, disgust, sadness and happiness. Using morphing techniques, all faces were displayed both as still images and dynamically as film clips in which the expression evolved from neutral to emotional.

Results: Irrespective of a specific emotion, dynamic stimuli selectively activated bilateral superior temporal sulcus, visual area V5, fusiform gyrus, thalamus and other frontal and parietal areas. Interaction effects of emotion and mode of presentation (static/dynamic) were only found for the expression of happiness, where static faces evoked greater activity in the medial prefrontal cortex.

Conclusions: Our results confirm previous findings on neural correlates of the perception of dynamic facial expressions and are in line with studies showing the importance of the superior temporal sulcus and V5 in the perception of biological motion. Differential activation in the fusiform gyrus for dynamic stimuli stands in contrast to classical models of face perception but is consistent with recent findings arguing for a more general role of the fusiform gyrus in the processing of socially relevant stimuli.

Keywords: facially expressed emotions, fMRI, dynamic facial expressions, biological motion, superior temporal sulcus

Introduction

Facial expressions facilitate social interactions as they help communicate changes in affective states. The importance of dynamic (i.e. naturalistic) information in facial emotion recognition was pointed out already in the early days of emotion research [1]. However, up to now empirical studies have mainly used static displays to assess the perception or recognition of emotions (e.g. [2]). Consequently, investigations of the neural basis of emotion perception using neuroimaging techniques were mostly conducted using static facial displays (e.g. [3]). Recent data provide strong support for an affect program account of emotions when viewing static faces, i.e. the perception of a distinct emotion activates a unique set of brain areas presumably involved in (but not necessarily exclusively dedicated to) processing that particular emotion [4]. For the perception of static facial expressions, associations were established between fear and the amygdala, disgust and the insula/operculum/globus pallidus, and anger and the lateral orbitofrontal cortex [4]. Interestingly, both happiness and sadness activated a similar area in the medial prefrontal cortex (MPFC), an area presumably involved in the processing of emotions in general [4].

Relatively little is known, however, about the neural substrates of the perception of dynamic facial expressions of emotion. Some hypotheses can be derived from investigations of the perception of biological motion in general. Natural body movements, eye gaze shifts and mouth movements have been demonstrated to activate the superior temporal sulcus (STS) in several studies [5], [6]. Additionally, human visual area V5 has been shown to be sensitive to coherent visual motion [7], [8]. Hence, it can be assumed that the STS and V5 should be involved in the perception of dynamic facial expressions.

Accordingly, one established neurobiological model states that the invariant aspects of faces (e.g. physiognomy) are mainly processed in the face-responsive area of the fusiform gyrus, whereas the changeable aspects (e.g. lip movements, emotional expressions) primarily activate the face-responsive region of the STS [9].

So far, only a few neuroimaging studies have contributed empirical data to this issue. The first one used fMRI to compare the effects of dynamic versus static facial displays of anger and fear [10]. Passive viewing of dynamic stimuli (especially fearful faces) resulted in higher activation of the fusiform gyrus and amygdala compared to viewing of static stimuli. Another study using PET contrasted dynamic and static expressions of anger and happiness [11]. Visual area V5, STS, periamygdaloid cortex, and cerebellum showed higher activation for dynamic as compared to static angry expressions, while V5, extrastriate cortex, brain stem, and middle temporal cortex were more active for dynamic happy expressions. The third study investigated fearful and happy dynamic and static facial expressions using fMRI [12]. As in the study by LaBar and colleagues [10], dynamic fearful expressions selectively activated the amygdala. Occipital and temporal cortices and the right ventral premotor cortex were more active for dynamic expressions of both fear and happiness. The most recent fMRI study compared static and dynamic natural displays of facial emotions (anger and surprise) and found enhanced activity in V5, STS, fusiform gyrus and inferior occipital gyrus when processing dynamic faces [13].

Our fMRI investigation thus had two goals. First, we wanted to replicate the findings of the previous studies using dynamic facial stimuli with some methodological improvements: the inclusion of a comparably large group of healthy subjects and the application of emotional expressions derived from a standardized and valid picture set (JACFEE; Japanese and Caucasian Facial Expressions of Emotion; [14]). Dynamic stimuli were created using morphing techniques to give the impression of neutral faces shifting into fearful, sad, disgusted or happy facial expressions [15]. Second, we wanted to explore whether the differences in brain activation between emotions that support the affect program account for static faces are also apparent when viewing dynamic faces. Since previous studies with dynamic faces only used limited sets of emotions, we presented static and dynamic expressions of four emotions (fear, sadness, disgust and happiness) to cover a wider range of stimuli and check for differences between emotions on an exploratory level.

Predictions about brain regions processing dynamic facial expressions were as follows:

Visual area V5 was expected to specifically respond to dynamic facial expressions since the area is sensitive to coherent visual motion [7], [8] and was active for dynamic emotions in two previous studies [11], [13].

Similarly, the STS was expected to selectively respond to dynamic stimuli, given its role in the processing of biological motion [5], [6] and its activity in three of the above-mentioned studies [11], [12], [13].

Despite its known role in the processing of invariant aspects of faces when viewing static faces [9] we assumed that the fusiform gyrus would be relatively more active in the processing of dynamic expressions of emotions as has been shown before in three similar studies for limited sets of emotions [10], [12], [13].

Concerning the interaction of mode of presentation (static/dynamic) x emotional content, exploratory analyses were conducted. We predicted that emotion-specific effects are evident when comparing static versus dynamic facial expressions.

Method

Subjects

We investigated 30 healthy subjects (16 male) aged between 19 and 35 years (M=23.0, SD=3.7) without any history of psychiatric or neurological illnesses. All subjects were medical students at the University of Ulm, recruited from a larger database as described in [16], who gave written informed consent before inclusion in the study. This study was conducted in accordance with the Declaration of Helsinki, under the terms of local legislation and was formally approved by the ethics committee of the University of Ulm.

Current or lifetime Axis I disorder was excluded by screening all subjects with the Structured Clinical Interview for DSM-IV Axis I Disorders (SCID-I). Furthermore, participants had normal scores in questionnaires screening for depression and anxiety symptoms, i.e. the German version of the Center for Epidemiologic Studies Depression Scale (CES-D, [17]; German version: “Allgemeine Depressionsskala”, ADS [18]) with mean ADS scores of 8.3 (SD 4.5) and the State-Trait Anxiety Inventory for Adults (STAI, German version, [19]) with mean STAI-S scores of 34.2 (SD 4.5) and mean STAI-T scores of 32.1 (SD 6.6).

Task and stimuli

Subjects were presented with 48 picture and 48 video stimuli depicting male and female Caucasian faces with emotional expressions from the JACFEE picture set (Japanese and Caucasian Facial Expressions of Emotion; [14]). Concerning the picture stimuli, four different faces were shown for each of the four emotions (fear, happiness, sadness and disgust) in a randomized order for 1000 ms each. Each of the four faces per emotion was shown three times over the course of the experiment, resulting in 4x3x4=48 stimuli. The video stimuli were presented alternating with the picture stimuli in a randomized order and showed the same 48 faces as the static stimuli, with the facial expression developing from neutral to exactly the emotional expression shown in the static stimuli. The JACFEE set also contains neutral expressions for each actor, which we used as starting points for the morphing procedure. Morph sequences were created using our own software tool (FEMT, Facial Expression Morphing Tool; [15]), which generates video files whose duration is specified by the number of frames morphed between the first and the last image, given a constant frame rate of 25 frames per second. Since all videos lasted 1000 ms (like the pictures), the final emotional expression was held for several hundred milliseconds, depending on the velocity with which the expression developed in the first place. The velocities used for morphing the emotional expressions can be regarded as adequate, as healthy subjects judged those dynamic stimuli to appear realistic in another study [20]. Subjects were instructed to attentively perceive the pictures and videos with the emotional expressions. After scanning, subjects were asked to rate the valence of each picture and video stimulus on a scale from 1 (very negative) to 9 (very positive) and the intensity of the emotional expression on a scale from 1 (not intense at all) to 10 (very intense).
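
The stimulus count and the timing of a morph clip can be illustrated with a short sketch. This is not the FEMT implementation: the function name `frame_schedule`, the example onset duration of 600 ms and the linear blend weights are assumptions made for illustration only. Only the frame rate (25 frames per second), the clip length (1000 ms) and the 4x3x4 design are taken from the text.

```python
from itertools import product

FRAME_RATE = 25   # frames per second (from the text)
CLIP_MS = 1000    # clip duration in ms (from the text)
EMOTIONS = ("fear", "happiness", "sadness", "disgust")


def frame_schedule(onset_ms, frame_rate=FRAME_RATE, clip_ms=CLIP_MS):
    """Return (weights, hold_ms) for one clip.

    weights[i] is the assumed blend weight of frame i
    (0.0 = neutral face, 1.0 = full emotional expression).
    hold_ms is the time the final expression is held after the morph.
    """
    total_frames = clip_ms * frame_rate // 1000
    morph_frames = onset_ms * frame_rate // 1000
    if not 2 <= morph_frames <= total_frames:
        raise ValueError("onset must span 2 frames up to the whole clip")
    # Linear ramp from neutral to emotional (an assumed simplification).
    weights = [i / (morph_frames - 1) for i in range(morph_frames)]
    # Remaining frames repeat the final expression.
    hold_frames = total_frames - morph_frames
    weights += [1.0] * hold_frames
    return weights, hold_frames * 1000 // frame_rate


# 4 emotions x 4 faces x 3 repetitions = 48 stimuli per presentation mode.
trials = list(product(EMOTIONS, range(4), range(3)))

weights, hold_ms = frame_schedule(onset_ms=600)
print(len(trials), len(weights), hold_ms)
```

With the hypothetical 600 ms onset, 15 of the 25 frames carry the morph and the final expression is held for the remaining 400 ms, which matches the "several hundred milliseconds" described above.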

Image acquisition methods, preprocessing and analysis

All magnetic resonance imaging (MRI) data were obtained with a 3-Tesla Magnetom Allegra (Siemens, Erlangen, Germany) MRI system equipped with a head volume coil at the Department of Psychiatry of the University of Ulm. We obtained 610 volumes of functional images using an echo-planar pulse sequence (EPI). Each volume comprised 30 axial slices covering the whole cerebrum (TR/TE = 1980/35 ms, 64x64 matrix). Slice thickness was 3.2 mm with a 0.8 mm gap resulting in a voxel size of 3x3x4 mm. Stimuli were presented with LCD video goggles (Resonance Technologies, Northridge, CA). Additionally we acquired three-dimensional T1 weighted anatomical volumes (1x1x1 mm voxels) for each subject.

Image processing and statistical analyses were carried out using BrainVoyager QX (Version 1.9, Brain Innovation, Rainer Goebel, Maastricht, The Netherlands). Preprocessing included motion correction (trilinear/sinc interpolation), slice scan time correction (sinc interpolation), high-pass temporal filtering (fast Fourier transform, cut-off: 3 cycles in time course) and removal of linear trends. Functional images and anatomical images were co-registered and transformed into Talairach space with a resampled voxel size of 1x1x1 mm. Spatial smoothing was applied with a Gaussian kernel of 8 mm FWHM. Intrinsic autocorrelations were accounted for by an AR(1) model.
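
For readers unfamiliar with the FWHM convention, the following sketch shows the standard relation between the full width at half maximum of a Gaussian and its standard deviation, sigma = FWHM / (2 * sqrt(2 * ln 2)). It is a generic illustration and not BrainVoyager's internal code; the function names and the truncation at three standard deviations are assumptions.

```python
import math


def fwhm_to_sigma(fwhm_mm):
    """Convert a Gaussian FWHM (mm) to its standard deviation (mm)."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def gaussian_kernel_1d(fwhm_mm, voxel_mm, truncate=3.0):
    """Normalized 1-D Gaussian kernel sampled on the voxel grid.

    truncate is the assumed kernel half-width in standard deviations.
    """
    sigma_vox = fwhm_to_sigma(fwhm_mm) / voxel_mm
    radius = int(math.ceil(truncate * sigma_vox))
    raw = [math.exp(-0.5 * (i / sigma_vox) ** 2)
           for i in range(-radius, radius + 1)]
    total = sum(raw)
    return [w / total for w in raw]


# 8 mm FWHM on the resampled 1 mm grid described in the text.
sigma = fwhm_to_sigma(8.0)
kernel = gaussian_kernel_1d(8.0, 1.0)
print(round(sigma, 3), len(kernel))
```

By construction, the kernel weight 4 mm away from the center (half the FWHM) is half the center weight.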

After preprocessing, a first-level analysis was performed for each subject, estimating the variance of voxels according to a general linear model. Picture and video stimuli of each of the four emotions (fear, happiness, disgust, sadness) were modeled separately as boxcar functions convolved with the hemodynamic response function. This resulted in 8 regressors of interest. Realignment parameters were included in the model as regressors of no interest. In a second-level group analysis (random effects model) we calculated a main contrast comparing the regressors modeling picture stimuli with the regressors modeling video stimuli. Statistical maps for this confirmative group analysis were thresholded at p<0.001, FDR-corrected for multiple comparisons. Only clusters with more than 100 voxels were included.

In an explorative analysis we calculated interaction contrasts to identify brain regions with a difference in activation between static and dynamic emotion expression specific for a certain emotion, e.g. [(happiness/pictures vs. happiness/videos) vs. (all other emotions/pictures vs. all other emotions/videos)]. Hereby we intended to detect brain regions sensitive to motion in the context of one specific emotion but not the others. Statistical maps for the explorative group analysis were thresholded at p<0.001, uncorrected for multiple comparisons. Only clusters with more than 100 voxels were included.
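
The main contrast and the interaction contrasts can be written as weight vectors over the 8 regressors of interest. The sketch below is an illustration under stated assumptions: the regressor ordering, the function names and the equal weighting of the three "other" emotions (scaled to integers) are not specified in the text and are chosen here for clarity.

```python
EMOTIONS = ("fear", "happiness", "disgust", "sadness")
MODES = ("picture", "video")

# Assumed ordering of the 8 regressors: emotion-major, picture before video.
REGRESSORS = [(e, m) for e in EMOTIONS for m in MODES]


def main_contrast():
    """Dynamic > static, pooled over all four emotions."""
    return [1 if mode == "video" else -1 for _, mode in REGRESSORS]


def interaction_contrast(target):
    """(target picture - target video) minus the same difference
    averaged over the other emotions, scaled to integer weights."""
    n_others = len(EMOTIONS) - 1
    weights = []
    for emotion, mode in REGRESSORS:
        sign = 1 if mode == "picture" else -1
        if emotion == target:
            weights.append(sign * n_others)
        else:
            weights.append(-sign)
    return weights


main = main_contrast()
happy = interaction_contrast("happiness")
print(main)
print(happy)
```

Both vectors sum to zero, so they test differences between conditions rather than overall activation. A positive effect for `interaction_contrast("happiness")` corresponds to a larger static-minus-dynamic difference for happiness than for the other three emotions.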

Results

Behavioral results

Concerning the valence of the facial emotion expressions, both pictures (P) and videos (V) of the expression of happiness were clearly rated positive (M P/V = 8.2/8.0), and those of the other emotions clearly negative (M P/V = disgust 2.5/2.4, sadness 3.1/3.1, fear 2.3/2.1). Concerning intensity ratings, happiness was perceived as the most intensely expressed emotion, sadness as the least intense (M P/V = happiness 8.7/8.4, fear 8.0/8.5, disgust 7.9/7.9, sadness 6.1/6.2). There were no significant differences in valence or intensity ratings between picture and video stimuli.

Confirmative analysis

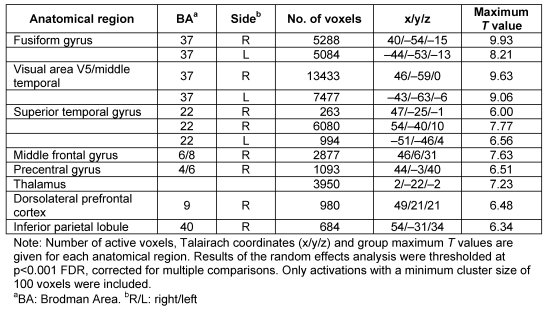

In the analysis conducted to identify brain regions irrespective of a specific emotion, we found increased fMRI activation for dynamic as compared to static facial emotion expressions in bilateral superior temporal gyrus, visual area V5, the fusiform gyrus, thalamus and right-sided dorsolateral prefrontal cortex, middle frontal gyrus, precentral gyrus and the inferior parietal lobule at very conservative thresholds (Table 1, Figure 1). As depicted in Figure 1, activations, particularly of the visual area V5 and the fusiform gyrus, did not differ between emotions. A conjunction analysis of the four contrasts dynamic > static facial emotion (one for each emotion) revealed overlapping effects in right visual area V5, medial frontal and superior temporal gyrus as well as bilateral fusiform gyrus at p<0.001 FDR-corrected and in all brain areas listed in Table 1 at a more lenient threshold of p<0.005 FDR-corrected. Apart from higher activation of the primary visual cortex (V1, x/y/z=5/–76/12; T=–5.60), no comparatively increased fMRI activation was found for static emotion expression even at lower thresholds down to p=0.05, uncorrected for multiple comparisons.

Table 1. fMRI activation for the contrast dynamic > static facial expressions for all emotions.

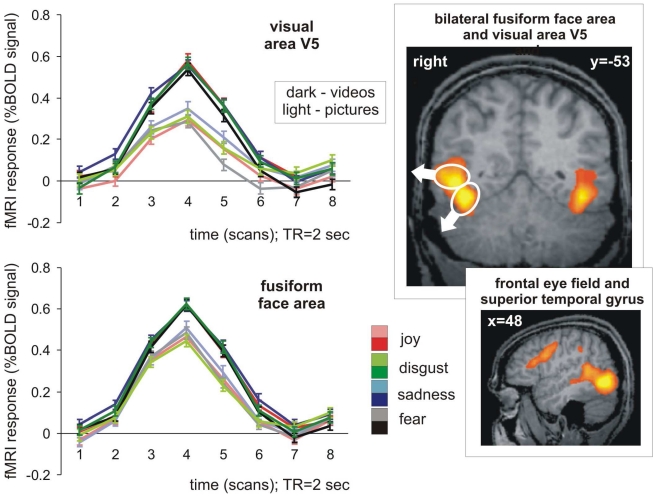

Figure 1. fMRI activation for the contrast dynamic > static facial expressions for all emotions. Time courses of blood oxygen level dependent (BOLD) signal change highlight the contrast in visual area V5 and the fusiform gyrus. Light colors represent time courses of activations following static (picture) stimuli presentation, dark colors represent activation related to dynamic (video) stimuli.

Explorative analysis

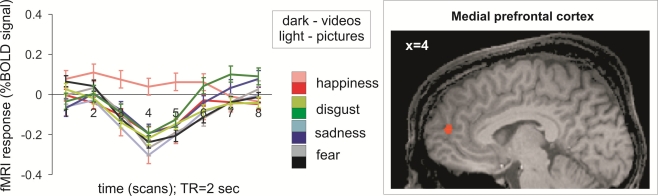

The interaction analysis of emotion and presentation mode (static/dynamic) conducted to detect brain regions with emotion-specific differences in static vs. dynamic emotion expression yielded one meaningful significant activation of the medial prefrontal cortex (Figure 2: BA 9/10; x/y/z=–4/52/16, T=4.1, no. of voxels = 211) for the expression of happiness. The perception of the static facial expression of happiness resulted in a significantly higher activation of this brain region than the perception of the dynamic facial expression of happiness. The interaction analysis with the other emotion expressions revealed no significant difference.

Figure 2. fMRI activation for the contrast static > dynamic facial expression of happiness vs. static > dynamic facial expression of all other emotions. Time courses of blood oxygen level dependent (BOLD) signal change highlight the contrast in the medial prefrontal cortex with greater signal upon perception of happiness presented as a static facial expression. Light colors represent time courses of activations following static (picture) stimuli presentation, dark colors represent activation related to dynamic (video) stimuli.

Discussion

As expected according to predictions 1 and 2, dynamic stimuli preferentially activated visual area V5 and parts of the superior temporal gyrus in the area of the STS similarly for all four emotions investigated. Both regions are thought to be involved in the processing of biological motion as well as socially relevant stimuli in general [5], [6], [7], [8]. Additionally, V5 was also selectively active for dynamic faces in two studies similar to ours [11], [13], and the STS in three similar studies [11], [12], [13].

In line with prediction 3, but contrary to the classical view of face processing [9], [21], the fusiform gyrus was clearly more active when processing dynamic compared to static facial expressions irrespective of emotional content. Our activation site lies in an area of the fusiform gyrus that has been termed the “fusiform face area” (FFA), since it has been postulated to be the area where faces are processed in a modular and category-specific fashion [21]. More recently, the FFA was suggested to be the main site for the processing of invariant (i.e. static) aspects of faces [9]. On the other hand, three of the four neuroimaging studies that are similar to ours revealed higher activity in the fusiform gyrus for the perception of dynamic versus static expressions, with the respective coordinates very close to ours [10], [12], [13]. In line with these findings, recent studies showed that the fusiform gyrus is sensitive to movements of the mouth [22] and the eyes of humans [23].

Thus, our results add to an expanded view of FFA functions. In an early opposition to the hypothesis of a domain-specific (faces) function of the FFA [21], an alternative model suggests that the FFA preferentially processes objects of individual expertise [24]. For instance, it has been shown that the FFA preferentially responds to cars in car experts [25]. The empirical finding of FFA activity when viewing faces may thus stem from the fact that most humans have great expertise in handling such stimuli. Integrating those positions, the FFA can be considered an area with a bias for faces but with the developmental ability to acquire a special role for visual stimuli that are widespread, salient and socially relevant [26]. Since dynamic facial expressions match real-life conditions more closely than static ones, human expertise in handling them is certainly greater, which offers an explanation for the increased activation of the fusiform gyrus when viewing dynamic as compared to static facial expressions.

Therefore, our findings are in line with recent suggestions based on neuroimaging findings that the strict separation between identity and emotional expression in classical models of face processing [9], [21] can no longer be upheld [27]. Another explanation for the activity in the fusiform gyrus when viewing dynamic faces could be the increased visual attention that dynamic stimuli receive compared to static ones. It has been reported that activity along the fusiform gyrus related to the perception of faces is sensitive to selective attention [28], [29].

The remaining network of brain regions including the dorsolateral prefrontal cortex, middle frontal gyrus, precentral gyrus and the inferior parietal lobule that was more active when viewing dynamic facial expressions has been shown, among other functions, to be involved in the control of eye movements and visual attention [30]. It seems plausible that dynamic faces are simply more interesting, of higher biological relevance and therefore call for more visual attention. It has been shown in other contexts that moving stimuli with signal value have a higher capacity to catch visual attention [31].

Activity in the thalamus when processing dynamic facial expressions is in line with the study by Pelphrey and colleagues [22], which found thalamic activity when people passively viewed movements of the mouth, eyes, and hand.

As demonstrated by the time courses of BOLD signal changes in Figure 1, two areas with differential effects between dynamic and static faces, V5 and FFA, did not show an effect of emotion. With one exception, this phenomenon was evident throughout the exploratory interaction analyses of presentation mode (static/dynamic) and emotion considering emotion-specific effects. Therefore, our assumption (prediction 4) that there are brain areas with emotion-specific effects when comparing static and dynamic presentations was largely not confirmed. Only the MPFC was selectively more active when processing static but not dynamic displays of happiness. This is in accordance with a meta-analysis showing that the MPFC is active when confronted with the facial expression of happiness [4]. However, in this same meta-analysis, the MPFC was also selectively active upon viewing expressions of sadness, which was not the case in our study. The MPFC is supposed to play a fairly general role in the processing of emotions [4], and is involved in a wide variety of other tasks such as mentalizing [32] or self-referential processing [33]. Therefore it is difficult to draw specific conclusions from the MPFC activity when viewing static facial expressions of happiness.

The major methodological limitation may be that we only contrasted static and dynamic facial displays of emotion. Since we did not show faces with moving elements (e.g. opening of eyes or mouth) without emotional meaning, our data do not allow us to decide whether the activation sites listed are actually implicated in the processing of emotional facial dynamics or whether those areas are simply involved when viewing biological facial motion in general. Future studies should at least include dynamic non-emotional faces to clarify the issue. Confirming our results, though, Schultz and Pilz [13] showed enhanced activity in right STS and fusiform gyrus upon presentation of dynamic facial displays of emotion even when using a control condition with phase-scrambled versions of facial stimuli.

Conclusions

This study investigated brain activity when viewing dynamic as opposed to static displays of facial emotions. With a comparably large group of healthy subjects and four emotions applied, we showed a network of brain areas relevant to the perception of dynamic facial expressions irrespective of emotional content including visual area V5, STS and the fusiform gyrus. This partially confirms previous findings with dynamic facial emotions and is in line with the known role of V5 and STS in the processing of biological motion. Activity in the fusiform gyrus, on the other hand, stands in contrast to classical models of face perception but corresponds to recent studies postulating the involvement of this area in the processing of socially relevant stimuli, which dynamic faces rather than static ones represent. Future studies should test whether the reported regions are actually confined to emotion-specific differences between static and dynamic faces or whether they contribute to the processing of biological motion in general. Finally, it would be interesting to apply a comparable study design to subjects with reduced emotional awareness (e.g. in alexithymia) to test whether those subjects recruit different brain regions when viewing facial stimuli.

Notes

Competing interests

The authors declare that they have no competing interests.

References

- 1. Bassili JN. Facial motion in the perception of faces and of emotional expression. J Exp Psychol Hum Percept Perform. 1978;4(3):373–379. doi: 10.1037/0096-1523.4.3.373. Available from: http://dx.doi.org/10.1037/0096-1523.4.3.373.

- 2. Calder AJ, Keane J, Manly T, Sprengelmeyer R, Scott S, Nimmo-Smith I, Young AW. Facial expression recognition across the adult life span. Neuropsychologia. 2003;41(2):195–202. doi: 10.1016/S0028-3932(02)00149-5. Available from: http://dx.doi.org/10.1016/S0028-3932(02)00149-5.

- 3. Kesler-West ML, Andersen AH, Smith CD, Avison MJ, Davis CE, Kryscio RJ, Blonder LX. Neural substrates of facial emotion processing using fMRI. Brain Res Cogn Brain Res. 2001;11(2):213–226. doi: 10.1016/S0926-6410(00)00073-2. Available from: http://dx.doi.org/10.1016/S0926-6410(00)00073-2.

- 4. Murphy FC, Nimmo-Smith I, Lawrence AD. Functional neuroanatomy of emotions: a meta-analysis. Cogn Affect Behav Neurosci. 2003;3(3):207–233. doi: 10.3758/CABN.3.3.207. Available from: http://dx.doi.org/10.3758/CABN.3.3.207.

- 5. Allison T, Puce A, McCarthy G. Social perception from visual cues: role of the STS region. Trends Cogn Sci. 2000;4(7):267–278. doi: 10.1016/S1364-6613(00)01501-1. Available from: http://dx.doi.org/10.1016/S1364-6613(00)01501-1.

- 6. Puce A, Perrett D. Electrophysiology and brain imaging of biological motion. Philos Trans R Soc Lond B Biol Sci. 2003;358(1431):435–445. doi: 10.1098/rstb.2002.1221. Available from: http://dx.doi.org/10.1098/rstb.2002.1221.

- 7. Tootell RB, Taylor JB. Anatomical evidence for MT and additional cortical visual areas in humans. Cereb Cortex. 1995;5(1):39–55. doi: 10.1093/cercor/5.1.39. Available from: http://dx.doi.org/10.1093/cercor/5.1.39.

- 8. Rees G, Friston K, Koch C. A direct quantitative relationship between the functional properties of human and macaque V5. Nat Neurosci. 2000;3(7):716–723. doi: 10.1038/76673. Available from: http://dx.doi.org/10.1038/76673.

- 9. Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends Cogn Sci. 2000;4(6):223–233. doi: 10.1016/S1364-6613(00)01482-0. Available from: http://dx.doi.org/10.1016/S1364-6613(00)01482-0.

- 10. LaBar KS, Crupain MJ, Voyvodic JT, McCarthy G. Dynamic perception of facial affect and identity in the human brain. Cereb Cortex. 2003;13(10):1023–1033. doi: 10.1093/cercor/13.10.1023. Available from: http://dx.doi.org/10.1093/cercor/13.10.1023.

- 11. Kilts CD, Egan G, Gideon DA, Ely TD, Hoffman JM. Dissociable neural pathways are involved in the recognition of emotion in static and dynamic facial expressions. Neuroimage. 2003;18(1):156–168. doi: 10.1006/nimg.2002.1323. Available from: http://dx.doi.org/10.1006/nimg.2002.1323.

- 12. Sato W, Kochiyama T, Yoshikawa S, Naito E, Matsumura M. Enhanced neural activity in response to dynamic facial expressions of emotion: an fMRI study. Brain Res Cogn Brain Res. 2004;20(1):81–91. doi: 10.1016/j.cogbrainres.2004.01.008. Available from: http://dx.doi.org/10.1016/j.cogbrainres.2004.01.008.

- 13. Schultz J, Pilz KS. Natural facial motion enhances cortical responses to faces. Exp Brain Res. 2009;194(3):465–475. doi: 10.1007/s00221-009-1721-9. Available from: http://dx.doi.org/10.1007/s00221-009-1721-9.

- 14. Matsumoto D, Ekman P. Japanese and Caucasian Facial Expressions of Emotion (JACFEE) and Neutral Faces (JACNeuF) [Slides]. Dr. Paul Ekman, Department of Psychiatry, University of California, San Francisco, 401 Parnassus, San Francisco, CA 94143-0984; 1988.

- 15. Kessler H, Hoffman H, Bayerl P, Neumann H, Basic A, Deighton RM, Traue HC. Die Messung von Emotionserkennung mittels Computer-Morphing – Neue Methoden für Forschung und Klinik. [Measuring emotion recognition with computer morphing: New methods for research and clinical practice]. Nervenheilkunde. 2005;24:611–614. (Ger).

- 16. Abler B, Hofer C, Viviani R. Habitual emotion regulation strategies and baseline brain perfusion. Neuroreport. 2008;19(1):21–24. doi: 10.1097/WNR.0b013e3282f3adeb. Available from: http://dx.doi.org/10.1097/WNR.0b013e3282f3adeb.

- 17. Radloff LS. The CES-D scale: A self-report depression scale for research in the general population. Appl Psychol Measurement. 1977;1:385–401. doi: 10.1177/014662167700100306. Available from: http://dx.doi.org/10.1177/014662167700100306.

- 18. Hautzinger M, Bailer M, Worall H, Keller F. Beck-Depressions-Inventar (BDI). Bern: Huber; 1994.

- 19. Laux L, Glanzmann P, Schaffner P, Spielberger CD. Das State-Trait-Angstinventar. Weinheim, Germany: Beltz; 1981.

- 20. Hoffmann H, Traue HC, Bachmayr F, Kessler H. Perceived Realism of Dynamic Facial Expressions of Emotion - Optimal Durations for the Presentation of Emotional Onsets and Offsets. Cognition and Emotion. 2010;24(8):1369–1376. doi: 10.1080/02699930903417855. Available from: http://dx.doi.org/10.1080/02699930903417855.

- 21. Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17(11):4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- 22. Pelphrey KA, Morris JP, Michelich CR, Allison T, McCarthy G. Functional anatomy of biological motion perception in posterior temporal cortex: an FMRI study of eye, mouth and hand movements. Cereb Cortex. 2005;15(12):1866–1876. doi: 10.1093/cercor/bhi064. Available from: http://dx.doi.org/10.1093/cercor/bhi064.

- 23. Pelphrey KA, Singerman JD, Allison T, McCarthy G. Brain activation evoked by perception of gaze shifts: the influence of context. Neuropsychologia. 2003;41(2):156–170. doi: 10.1016/S0028-3932(02)00146-X. Available from: http://dx.doi.org/10.1016/S0028-3932(02)00146-X.

- 24. Gauthier I, Nelson CA. The development of face expertise. Curr Opin Neurobiol. 2001;11(2):219–224. doi: 10.1016/S0959-4388(00)00200-2. Available from: http://dx.doi.org/10.1016/S0959-4388(00)00200-2.

- 25. Gauthier I, Skudlarski P, Gore JC, Anderson AW. Expertise for cars and birds recruits brain areas involved in face recognition. Nat Neurosci. 2000;3(2):191–197. doi: 10.1038/72140. Available from: http://dx.doi.org/10.1038/72140.

- 26. Cohen Kadosh K, Johnson MH. Developing a cortex specialized for face perception. Trends Cogn Sci. 2007;11(9):367–369. doi: 10.1016/j.tics.2007.06.007. Available from: http://dx.doi.org/10.1016/j.tics.2007.06.007.

- 27. Vuilleumier P, Pourtois G. Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia. 2007;45(1):174–194. doi: 10.1016/j.neuropsychologia.2006.06.003. Available from: http://dx.doi.org/10.1016/j.neuropsychologia.2006.06.003.

- 28. Wojciulik E, Kanwisher N, Driver J. Covert visual attention modulates face-specific activity in the human fusiform gyrus: fMRI study. J Neurophysiol. 1998;79(3):1574–1578. doi: 10.1152/jn.1998.79.3.1574.

- 29. Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30(3):829–841. doi: 10.1016/S0896-6273(01)00328-2. Available from: http://dx.doi.org/10.1016/S0896-6273(01)00328-2.

- 30. Pierrot-Deseilligny C, Milea D, Muri RM. Eye movement control by the cerebral cortex. Curr Opin Neurol. 2004;17(1):17–25. doi: 10.1097/00019052-200402000-00005. Available from: http://dx.doi.org/10.1097/00019052-200402000-00005.

- 31. Franconeri SL, Simons DJ. Moving and looming stimuli capture attention. Percept Psychophys. 2003;65(7):999–1010. doi: 10.3758/BF03194829. Available from: http://dx.doi.org/10.3758/BF03194829.

- 32. Frith U, Frith CD. Development and neurophysiology of mentalizing. Philos Trans R Soc Lond B Biol Sci. 2003;358(1431):459–473. doi: 10.1098/rstb.2002.1218. Available from: http://dx.doi.org/10.1098/rstb.2002.1218.

- 33. Northoff G, Bermpohl F. Cortical midline structures and the self. Trends Cogn Sci. 2004;8(3):102–107. doi: 10.1016/j.tics.2004.01.004. Available from: http://dx.doi.org/10.1016/j.tics.2004.01.004.