Abstract

Evidence-based practice (EBP) is a concept that was popularized in the early 1990s by several physicians who recognized that medical practice should be based on the best and most current available evidence. Although this concept seems self-evident, much of medical practice had been based on outdated textbooks and oral tradition passed down in medical school. Exercise science is currently in a similar situation. Because of a lack of regulation within the exercise community, the discipline is particularly prone to bias and misinformation, as evidenced by the plethora of available programs whose efficacy is supported by anecdote alone. In this manuscript, we describe the five steps in EBP: 1) develop a question, 2) find evidence, 3) evaluate the evidence, 4) incorporate evidence into practice, and 5) re-evaluate the evidence. Although objections have been raised to the EBP process, we believe that its incorporation into exercise science will improve the credibility of our discipline and keep exercise practitioners and academics abreast of the most current research findings.

Beneath all physiologic phenomena lie causal mechanisms. The purpose of the scientific process as it relates to human physiology is to uncover these mechanisms. Unfortunately, knowledge of a phenomenon is often buried beneath many partially understood or even misunderstood mechanisms, rendering what we currently know incomplete. Ideally, each research experiment gives us a more complete understanding of a given phenomenon; thus, knowledge is dynamic and continually evolves.

The evolution of knowledge creates a unique challenge: instructors and practitioners must teach and practice with incomplete knowledge. Despite their best efforts to incorporate the latest scientific evidence, by the time a lecture is delivered, science has likely uncovered more of the story. Usually, discovery simply adds to the information that was presented to students, but sometimes advances in knowledge radically change scientific thought. In the 1930s and 1940s it was believed that muscle contraction resulted from the folding and unfolding of long protein filaments located within skeletal muscle sarcomeres.[1] In 1954, using newly developed light-microscopy techniques such as interference microscopy,[2] Huxley and Niedergerke and, independently, Huxley and Hanson observed changes in muscle striations indicating that filaments of two proteins, actin and myosin, slide past each other as a muscle shortens and lengthens.[3, 4] More than half a century later, the sliding filament theory is taught to all physiology students as the basis of muscle contraction. While the teachers of the 1940s taught to the best of their ability based on contemporary knowledge, this discovery rendered what they had taught incomplete and inaccurate.

Evidence-based medicine (EBM) is a term coined in the early 1990s to describe a paradigm in which clinical decisions are based on the highest available level of research evidence.[5, 6] Although this idea seemed obvious, it was a novel approach to clinical practice. In fact, in 2000 it was estimated that only 15–40% of clinical decisions were based on research evidence.[7, 8] The medical community’s call for evidence-based practice (EBP) argued that knowledge is dynamic and that clinicians should incorporate the latest evidence into practice to optimize clinical outcomes. EBP has since spread from medicine to other health fields; much has been written about its incorporation into nursing,[9, 10] physical therapy,[11–13] and various medical disciplines, including orthopedics.[14–16]

Exercise science is susceptible to misinformation and bogus claims, perhaps more than any other field. Even a cursory look at the personal training industry makes this evident. While organizations such as the National Strength & Conditioning Association (NSCA) and the American College of Sports Medicine (ACSM) have done much to legitimize the credentials of exercise scientists, the field is still full of misinformation. This misinformation is due, in part, to the lack of standardization among the agencies that certify instructors in exercise and sports science. Many exercise certifications require minimal academic training; in fact, some certification agencies require only attendance at a weekend-long workshop and a passing score on a written multiple-choice exam. The result is under-qualified exercise professionals equipped with minimal theoretical knowledge of training physiology and little or no ability to access the latest scientific research pertinent to their profession. These certified professionals often base their practice on a flawed body of theoretical knowledge, personal experience, anecdotal hearsay, and non-peer-reviewed publications. Misinformation inevitably leaks into the field, and because exercise specialists are poorly equipped to evaluate its legitimacy, the result is a plethora of devices, nutritional supplements, and program theories that have little or no scientific merit.

Academics and practitioners alike should evolve constantly with the literature in pursuit of highly effective exercise programs based on current knowledge. We propose that evidence-based practice be taught in undergraduate exercise science programs as the foundation of all exercise programming. The purpose of this paper is to introduce the structural framework of EBP as it relates to exercise science and to present the advantages and limitations of EBP in the discipline.

1. The Mechanics of Evidence-Based Practice

Sackett et al. have suggested that EBP is applicable in three distinct facets of medicine: prognosis, diagnosis, and intervention.[17] While it might be argued that there are diagnostic and prognostic components of exercise science, EBP’s primary application in exercise is at the intervention or programming level. Thus, we will focus solely on the programming aspect.

1.1 Step One: Develop a Question

The evaluation and interpretation of client or patient data should lead to the development of a focused, practical question.[17–20] The question should include information about the subject population, the exercise parameters (e.g., duration, frequency, intensity), and the desired adaptations. Questions that are too broad yield enormous amounts of information, making interpretation of the literature difficult; narrowing the population results in a smaller, more focused list of abstracts. When considering the population, at least five questions should be addressed: 1) Is the scenario gender-specific? 2) Are there underlying clinical issues? 3) What is the training experience of the client? 4) What is the chronological age of the client? 5) What is the desired outcome of the program (e.g., increased strength, power, or cardiovascular fitness)? Each of these questions may be useful in narrowing the search and providing better evidence on which to construct the program.
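One way to make sure none of the five population questions is skipped is to record the answers in a fixed structure before searching. The Python sketch below is purely illustrative: the `ClientQuestion` class, its field names, the default `"resistance training"` intervention, and the choice to quote each term and join them with `AND` are our assumptions, not part of any established EBP tool. Joining with `AND` mirrors the point above that each added population detail narrows the list of abstracts.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ClientQuestion:
    """Hypothetical record of the Step One population questions."""
    sex: Optional[str]          # 1) gender-specific scenario? None if not
    clinical_issues: List[str]  # 2) underlying clinical issues (may be empty)
    training_status: str        # 3) training experience, e.g. "untrained"
    age_group: str              # 4) chronological age as a searchable group
    outcome: str                # 5) desired outcome, e.g. "strength"
    intervention: str = "resistance training"  # assumed default exercise mode

    def search_terms(self) -> List[str]:
        """List the non-empty answers; each one narrows the literature search."""
        terms = [self.intervention, self.outcome, self.age_group, self.training_status]
        if self.sex:
            terms.append(self.sex)
        terms.extend(self.clinical_issues)
        return [t for t in terms if t]

    def query(self) -> str:
        """Quote each term and join with AND so every term must match."""
        return " AND ".join(f'"{t}"' for t in self.search_terms())


q = ClientQuestion(
    sex="women",
    clinical_issues=["osteopenia"],
    training_status="untrained",
    age_group="postmenopausal",
    outcome="bone mineral density",
)
print(q.query())
# "resistance training" AND "bone mineral density" AND "postmenopausal" AND "untrained" AND "women" AND "osteopenia"
```

Leaving a field empty (for example, `sex=None` when the scenario is not gender-specific) simply drops that term, which broadens the search.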

1.2 Step Two: Search for Evidence

Acquiring appropriate, reputable evidence has become increasingly easy with the availability of peer-reviewed research on the internet. The definition of “evidence” has been a source of debate, as some have mistakenly assumed that advocates of EBP believe research alone constitutes evidence. Others have argued strongly for the value of clinical intuition and experience as important contributors to evidence.[21, 22] We believe that there are three primary sources of evidence: professional experience, academic preparation, and research knowledge.

1.2.1 Sources of Evidence

When evaluating the three sources of evidence, it is important to understand that each is incomplete, open to interpretation, and therefore potentially biased. First, professional experience can be a valuable source of evidence; lessons learned through field experience can reinforce academic knowledge. However, knowledge gained through professional experience is the least objective and the most influenced by bias. For instance, in prescribing medication, physicians may be influenced by relationships with drug companies, the preferences of their mentors, and the static body of knowledge taught in medical school. While this information is often valid, it may not represent the best treatment option. Exercise science practitioners are prone to the same biases: it is difficult to deviate from methodologies taught by mentors and well-respected colleagues. Many of these methodologies are valid and effective, but mentors were, at best, working from the best evidence available at that point in time.

Second, academic preparation provides a valuable source of evidence. The best professors strive to incorporate recent research findings into courses that teach foundational scientific principles, and academic courses typically use textbooks containing the fundamental physiology knowledge needed to read scientific research. However, academic preparation has two inherent flaws: 1) the material delivered in lectures is often outdated by the time it is presented, and 2) lecture material is subject to the personal bias and current knowledge of the professor. By the time a professor prepares and delivers a lecture, new evidence has already been added to the body of knowledge. It has been estimated that by the time a new edition of a textbook is published, it is at least one year out of date;[23] similarly, given the time required to develop coursework, lectures are likely several months out of date when delivered, even if they are updated each semester with the latest evidence. Like practitioners, instructors are prone to bias: they tend to teach in a style similar to that of their own mentors and to teach the very material they were taught. Thus, information presented to students may be severely out of date, which underscores the need to incorporate less biased research evidence.

Third, scientific research constitutes the source of evidence least prone to bias. The motto of the Royal Society of London is the Latin phrase “nullius in verba,” translated as “on no man's word.” The best way to minimize bias is to remove the human element and let the data speak for themselves. While the interpretation of research evidence can be biased, research evidence per se is less biased than personal experience, textbooks, or academic lectures. In our opinion, because empirical data are a less biased form of evidence, research evidence should provide the foundation for all practical programming decisions and exert the ultimate influence on exercise programming.

1.2.2 Gathering Evidence

In past generations, collecting peer-reviewed material required travel to libraries, searching through card catalogs, retrieving materials from library shelves, and extensive photocopying. Today, the internet provides easy access to quality resources, including medical databases and peer-reviewed journal articles. Instead of traveling to the library, practitioners need only search publicly available databases such as PubMed and Google Scholar. The result is a vast body of research accessible with minimal effort.
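PubMed can be searched in a browser, but it can also be queried programmatically through NCBI's E-utilities, which makes a search easy to save and rerun exactly. The sketch below assumes the public ESearch endpoint and its `db`, `term`, `retmax`, and `retmode` parameters; the helper names `esearch_url` and `fetch_pmids` are hypothetical, and the optional `randomized controlled trial[pt]` publication-type filter is just one illustrative way to restrict results to a single study design.

```python
import json
import urllib.parse
import urllib.request

# Public NCBI E-utilities ESearch endpoint for PubMed searches.
ESEARCH = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def esearch_url(term: str, retmax: int = 20, rct_only: bool = False) -> str:
    """Build (but do not send) an ESearch request for PubMed IDs in JSON."""
    if rct_only:
        # Illustrative filter: PubMed publication-type tag for RCTs.
        term = f"({term}) AND randomized controlled trial[pt]"
    params = {"db": "pubmed", "term": term, "retmax": retmax, "retmode": "json"}
    return f"{ESEARCH}?{urllib.parse.urlencode(params)}"


def fetch_pmids(term: str, retmax: int = 20, rct_only: bool = False) -> list:
    """Send the request (needs network access) and return matching PMIDs."""
    url = esearch_url(term, retmax, rct_only)
    with urllib.request.urlopen(url, timeout=30) as resp:
        data = json.load(resp)
    return data["esearchresult"]["idlist"]


print(esearch_url("resistance training AND older adults", retmax=5))
```

Only `fetch_pmids` touches the network; `esearch_url` can be checked offline. NCBI's usage guidelines limit request rates, so automated searches should be spaced out.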

1.3 Step Three: Evaluate Evidence

Proponents of EBP argue that evidence has a hierarchy and that not all evidence (even scientific research) should be weighted equally.[20] The highest level of evidence is a systematic review of randomized controlled trials (RCTs).[24] This type of evidence is assigned a Level 1a ranking because it represents a series of replicated RCTs. Level 1b evidence is a single RCT with narrow confidence intervals.[24] Systematic reviews of cohort studies, individual cohort studies (including poorly designed RCTs), and outcomes research are ranked 2a, 2b, and 2c, respectively.[24] Systematic reviews of case-control studies (3a) and individual case-control studies (3b) receive lower rankings because of their greater potential for bias,[24] and case series and poor-quality cohort and case-control studies are ranked Level 4 (Table 1). Finally, expert opinion, textbooks, decisions based on mechanistic (basic science) research, and practical experience are given Level 5 rankings.[24] In this system, the research least prone to bias, replicated RCTs summarized in a systematic review, is recognized as the highest level of evidence, while the information most prone to bias, expert opinion, is assigned the lowest. This provides a systematic and relatively impartial method of ranking evidence and determining its influence on practical decision making.
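Because the hierarchy in Table 1 is an ordering, it can be encoded directly as a ranked list. In the minimal Python sketch below, the level labels come from Table 1, while the `(title, level)` representation of a study, the `rank_evidence` helper, and the example titles are hypothetical.

```python
# Evidence levels from Table 1, strongest (1a) to weakest (5).
LEVELS = ("1a", "1b", "1c", "2a", "2b", "2c", "3a", "3b", "4", "5")
RANK = {level: i for i, level in enumerate(LEVELS)}  # smaller = stronger


def rank_evidence(studies):
    """Sort hypothetical (title, level) pairs from strongest to weakest evidence.

    sorted() is stable, so studies sharing a level keep their input order.
    """
    for title, level in studies:
        if level not in RANK:
            raise ValueError(f"{title!r} has unknown evidence level {level!r}")
    return sorted(studies, key=lambda study: RANK[study[1]])


found = [
    ("Study A (expert opinion)", "5"),
    ("Study B (cohort study)", "2b"),
    ("Study C (systematic review of RCTs)", "1a"),
    ("Study D (single RCT)", "1b"),
]
for title, level in rank_evidence(found):
    print(level, title)
```

Ranking by position in a single tuple keeps the ordering in one place, so a different grading scheme could be substituted by editing `LEVELS` alone.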

1.4 Step Four: Incorporate Evidence Into Practice

The highest available level of evidence should be used as the basis for exercise prescription. If practice is already founded on reputable Level 5 evidence, new evidence will likely only fine-tune current exercise programs. Nevertheless, it is prudent for everyone who prescribes exercise to understand the science on which prescriptions are based. This may be especially important for exercise professionals who currently base their practice on non-peer-reviewed material, professional experience, non-expert opinion, or less reputable certification agencies.
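Basing prescription on the highest *available* level means that the strongest tier actually found in a search, not the strongest tier that could exist, sets the standard. The hypothetical `best_available` helper below sketches that rule, assuming each retrieved study is stored as a `(title, level)` pair using the level labels from Table 1. When a search turns up only Level 5 material, that tier is returned, which corresponds to practice founded on reputable Level 5 evidence.

```python
# Evidence levels from Table 1, strongest (1a) to weakest (5).
LEVELS = ("1a", "1b", "1c", "2a", "2b", "2c", "3a", "3b", "4", "5")


def best_available(studies):
    """Return (best_level, studies_at_that_level) for hypothetical (title, level) pairs.

    The best level is the strongest one actually present in the results,
    so a search that finds only Level 5 material returns Level 5.
    Returns (None, []) when nothing was found; unknown levels raise ValueError.
    """
    if not studies:
        return None, []
    best = min((level for _, level in studies), key=LEVELS.index)
    return best, [study for study in studies if study[1] == best]


results = [
    ("Study E (cohort study)", "2b"),
    ("Study F (case series)", "4"),
    ("Study G (cohort study)", "2b"),
]
print(best_available(results))
```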

In medicine, EBP is applied at the level of the individual patient,[25] and the patient has a voice in treatment options. EBP in exercise science should likewise include the input of the individual. Exercise scientists will increase their professional credibility by discussing the evidence behind program development with their clients/patients. This is particularly important when research points toward a non-traditional training approach or runs counter to current trends.

In the past, patients blindly followed their physicians’ recommendations on the authority of the physician’s office. Some in the medical community adamantly oppose the shift toward practice based on the authority of research evidence, disregarding the fact that the science underlying a recommendation, not the physician, is the ultimate authority. The shift from authoritarian medicine (“Do this because I said so”) to authoritative medicine (“Do this because research evidence says so”) produces less biased medical prescription,[26] and we expect it to yield similar results in exercise prescription.

1.5 Step Five: Routinely Re-evaluate the Evidence

The final step in EBP is the constant re-evaluation of practice. Practitioners should read continually on particular subjects to stay abreast of new studies relevant to their field. However, because it is impossible to keep up with every journal, it is also necessary to repeat searches on a particular topic routinely.
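Repeating a search is simpler when it is restricted to articles published since the previous search. The sketch below assumes PubMed's ESearch endpoint with its `datetype=pdat`, `mindate`, and `maxdate` parameters for publication-date filtering; the helper names and the 90-day re-search interval are hypothetical choices for illustration, not a recommendation from the EBP literature.

```python
import datetime
import urllib.parse

# Public NCBI E-utilities ESearch endpoint for PubMed searches.
ESEARCH = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"
RESEARCH_INTERVAL_DAYS = 90  # hypothetical re-search interval, not a standard


def search_is_due(last_search: datetime.date, today: datetime.date,
                  interval_days: int = RESEARCH_INTERVAL_DAYS) -> bool:
    """True once at least interval_days have passed since the last search."""
    return (today - last_search).days >= interval_days


def update_search_url(term: str, last_search: datetime.date, today: datetime.date) -> str:
    """Build an ESearch URL limited to items published since the last search."""
    params = {
        "db": "pubmed",
        "term": term,
        "datetype": "pdat",  # filter on publication date
        "mindate": last_search.strftime("%Y/%m/%d"),
        "maxdate": today.strftime("%Y/%m/%d"),
        "retmode": "json",
    }
    return f"{ESEARCH}?{urllib.parse.urlencode(params)}"


last, now = datetime.date(2010, 1, 1), datetime.date(2010, 4, 1)
if search_is_due(last, now):
    print(update_search_url("periodized strength training", last, now))
```

Keeping the last search date with each saved question lets the same query be rerun on a schedule without rereading results that were already appraised.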

2. Potential Criticisms and Benefits of EBP in Exercise Science

2.1 Potential Criticisms of EBP in Exercise Science

A variety of arguments have been leveled against evidence-based practice in the field of medicine. While we believe that EBP is both important and necessary to the advancement of exercise science, we recognize that similar objections will be raised against its inclusion in our field.

Some have suggested that the implementation of EBP minimizes the importance of the practitioner’s experience and intuition; this argument certainly applies to the prescription of exercise. We acknowledge that personal experience is a valuable source of evidence and, in many cases, the only source of evidence to substantiate practice. However, a prudent search for additional information can only improve practice and add to the repertoire of experienced exercise professionals. In many cases, research validates what the practitioner has known for years through practical experience or intuition; at times, however, research runs counter to popular thought.

A second argument against EBP is that it removes the “art” or creativity from practice. This is a valid concern, as creativity in program design can mitigate the monotony of exercise training. Some contend that making evidence the driving factor for practice could lead to a “cookbook” approach in which every individual receives the same treatment.[27] Nevertheless, the current state of the exercise industry suggests a need to re-evaluate programs on the basis of evidence.

Popular trends in exercise training, including new devices, dietary supplements, and novel programming techniques, are often implemented on the recommendation of expert or non-expert opinion alone (Level 5 evidence). Faced with a dearth of evidence to substantiate such practices, exercise specialists argue that using these techniques constitutes the “art” or creativity of training. However, exercise specialists must not violate the proven principles of training physiology for the sake of “art.” Music is an art in which talented composers carefully arrange notes to create beautiful expressions. Thousands of songs have been written from only twelve musical notes, each involving a unique arrangement of melodies, harmonies, and rhythms. It is this arrangement that makes each composition creative; despite the limited number of notes, each song has a unique “sound.” The same can be said of the art of exercise training. While the proven concepts of training physiology may limit the strategies employed, they do not limit creativity. Instead, exercise specialists’ ability to creatively order volume, intensity, rest periods, tempo, and exercises, using established evidence, typifies the art of training.

2.2 Benefits of EBP in Exercise Science

We believe that incorporating EBP into exercise prescription has two primary benefits. First, EBP will improve the quality of exercise programming. The overriding goal of an exercise professional who wants to excel should be to provide the best exercise program for every individual based on current knowledge. The continual search for evidence results in a better product for the client/patient.

Second, incorporating EBP will enhance the legitimacy of our profession. Although many qualified and outstanding graduates of exercise science programs are employed as exercise specialists, the lack of licensure hurts our credibility with medical professionals and the general public. This credibility crisis is worsened by a multitude of certifications requiring varying levels of competency. Certification agencies differ in their recommended exercise prescriptions and in the terminology used to describe them, which creates problems when exercise specialists converse with each other and with medical professionals. Incorporating evidence would provide a common foundation upon which these various groups can agree. Additionally, EBP gives authority to the exercise professional: instead of standing behind agency recommendations or answering questions from academic knowledge alone, we can stand on the credibility of the latest research findings.

3. Incorporation of EBP Into Exercise Science

There are two viable points of entry for EBP into the discipline of exercise science: undergraduate programs and national certification agencies. These two avenues will capture the majority of the new professionals who will fill leadership roles within the exercise community.

There are several clear ways to incorporate EBP into undergraduate exercise science programs. First, programs could require a course on EBP. This course would introduce the concepts of EBP and provide practical instruction in constructing answerable questions, searching for evidence (research), and evaluating research to reach practical conclusions. Most importantly, it would emphasize the dynamic nature of scientific knowledge: students, especially those who will not hold research positions, should continually search for and stay abreast of the current best evidence rather than base their training and coaching entirely on information gained during their undergraduate education. Second, a more thorough approach would make EBP an undertone of the entire program. In addition to standard lectures on basic physiologic and physical principles, each class would involve searches for the best evidence to answer specific questions. By graduation, students would have years of practice finding and evaluating research. This repeated exposure is particularly important for those who will not have careers generating research but who can and should be competent research consumers. Third, students could complete a senior EBP thesis investigating a specific question. A project of this nature would require one or both of the previously discussed options so that students would be adequately familiar with the concepts of EBP.

The other readily accessible route by which EBP could be introduced to the exercise science community is the certification process. The two most highly regarded certifying bodies in the field are the ACSM and the NSCA; not incidentally, both require a bachelor’s degree in an exercise-related or allied health field even to test for their higher-level certifications. However, both agencies also offer personal trainer certifications that require only a high school diploma or its equivalent. Because commercial demand for personal trainers and coaches makes it practically impossible to require a college degree for all certifications, including EBP as a fundamental component of these “lower-level” certifications would further elevate the profession in competency, prestige, and, ultimately, public perception. As organizations, the ACSM and NSCA would benefit both from the increased prestige of their certifications and from the revenue of the mandatory workshops needed to teach the concepts of EBP.

Although undergraduate programs and national certifications will expose a large portion of incoming exercise professionals to the principles of EBP, sub-standard organizations will admittedly continue to certify under-qualified trainers. However, strengthening the professional skills of those who obtain undergraduate degrees or upper-tier certifications will create a clear distinction between those who are truly competent to prescribe exercise and those who are not.

4. Conclusion

A. Bradford Hill stated, “All scientific work is incomplete--whether it is observational or experimental. All scientific work is liable to be upset or modified by advancing knowledge. That does not confer upon us a freedom to ignore the knowledge we already have, or to postpone the action that it appears to demand at a given time.” As exercise professionals, we are obligated to act based on the current state of scientific knowledge to develop the best possible programs for our clients/patients. In doing so, we must recognize that the best program in 2010 may not be the best program in 2015. Evidence-based practice is not a new concept; there are certainly exercise professionals who already employ its principles. In keeping with the motto of the Royal Society of London (“nullius in verba”), we propose that the use of evidence be the driving component of exercise prescription. To implement this philosophy, the principles of EBP must be incorporated into the curriculum of exercise science programs. Our appeal to exercise science instructors and practitioners is to teach and implement programs founded on the most recent scientific evidence. This will improve the programs provided to the individual and our credibility as a profession.

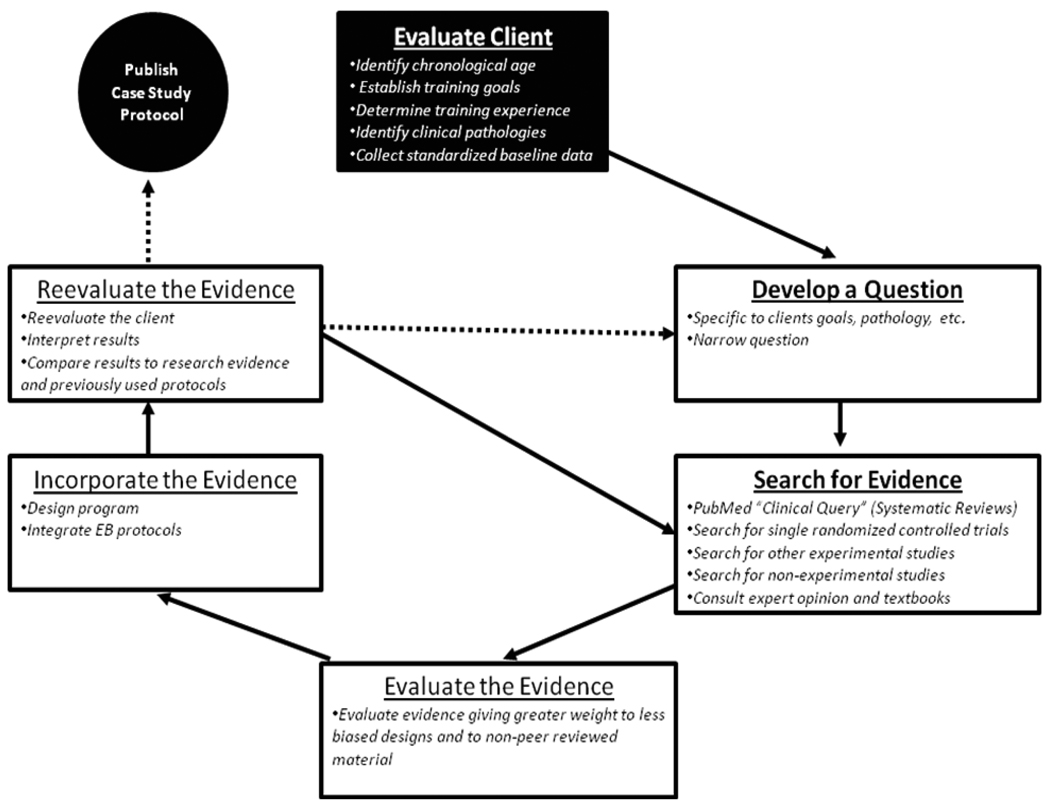

Figure 1.

The fundamental steps of evidence-based practice in the context of individual exercise prescription.

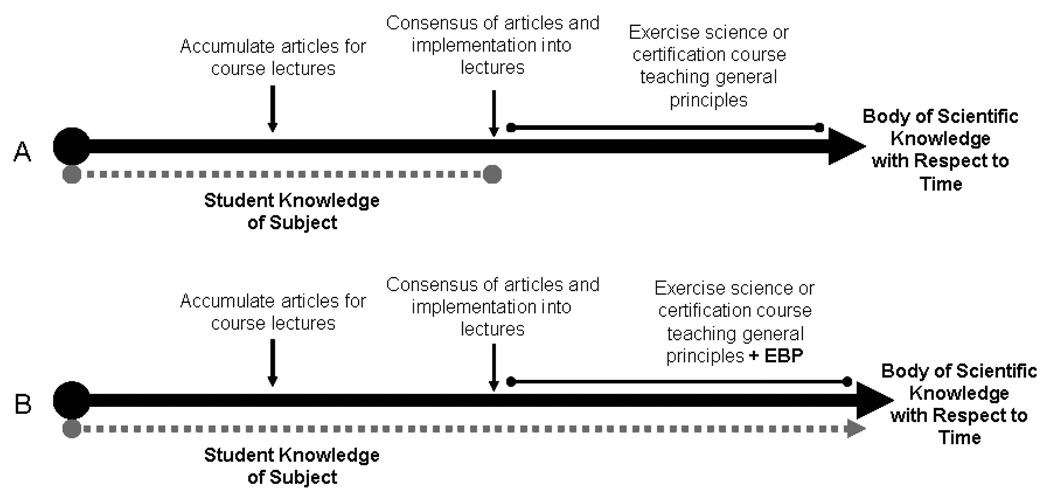

Figure 2.

Theoretical knowledge base of a student enrolled in a traditional course or certification that teaches only general principles (A) vs. a student enrolled in a course or certification teaching both general principles and the philosophy of evidence-based practice (B).

Table 1.

Classic levels of evidence for rehabilitation practice. Table reprinted in part with the permission of SLACK Inc.[24]

| Level | Classic “levels of evidence” for therapy/prevention | Placement of additional types of clinical evidence |
|---|---|---|
| 1a | Systematic review (with homogeneity) of randomized controlled trials (RCTs) | Clinical practice guidelines (CPGs) where recommendations are based on systematic reviews that contain multiple RCTs and the development includes supplemental data or expert opinion to make recommendations only where evidence is lacking |
| 1b | Individual RCT (with narrow confidence interval) | |
| 1c | All or none | |
| 2a | Systematic review (with homogeneity) of cohort studies | Lower quality CPGs that are based on informal evidence review and expert consensus, where few RCTs are identified |
| 2b | Individual cohort study (including low quality RCT; e.g., <80% follow-up) | |
| 2c | “Outcomes” research | |
| 3a | Systematic review (with homogeneity) of case-control studies | Structured consensus processes based on quantitative ratings of agreement and formal consensus processes using qualified experts |
| 3b | Individual case-control study | |
| 4 | Case-series (and poor quality cohort and case-control studies) | Unstructured quantitative or qualitative expert consensus; large descriptive practice analysis/survey that defines common ground; critical appraisal/comprehensive systematic review or synthesis of biologic studies, or first principles |
| 5 | Expert opinion without explicit critical appraisal, or based on physiology, bench research, or “first principles” | CPGs that are not based on the use of evidence review or quantitative data; clinical protocols or rehabilitation theory |

ACKNOWLEDGEMENTS

K. English was partially supported by the National Center for Medical Rehabilitation Research (NICHD) at the National Institutes of Health (T32 HD007539).

Footnotes

The authors have no conflicts of interest.

REFERENCES

1. Huxley AF. Cross-bridge action: present views, prospects, and unknowns. Journal of Biomechanics. 2000;33(10):1189–1195. doi: 10.1016/s0021-9290(00)00060-9.

2. Huxley AF, Niedergerke R. Structural changes in muscle during contraction; interference microscopy of living muscle fibres. Nature. 1954;173(4412):971–973. doi: 10.1038/173971a0.

3. Huxley H, Hanson J. Changes in the cross-striations of muscle during contraction and stretch and their structural interpretation. Nature. 1954;173(4412):973–976. doi: 10.1038/173973a0.

4. Huxley AF, Niedergerke R. Measurement of muscle striations in stretch and contraction. The Journal of Physiology. 1954;124(2):46–47.

5. Guyatt GH, Sackett DL, Cook DJ. Users' guides to the medical literature. II. How to use an article about therapy or prevention. A. Are the results of the study valid? Evidence-Based Medicine Working Group. JAMA: The Journal of the American Medical Association. 1993;270(21):2598–2601. doi: 10.1001/jama.270.21.2598.

6. Oxman AD, Sackett DL, Guyatt GH. Users' guides to the medical literature. I. How to get started. The Evidence-Based Medicine Working Group. JAMA: The Journal of the American Medical Association. 1993;270(17):2093–2095.

7. Wegscheider K. Evidence-based medicine - dead-end or setting off for new shores. Herzschr Elektrophys. 2000;11 Suppl 2:II/1–II/7.

8. Ellis J, Mulligan I. Inpatient general medicine is evidence based. Lancet. 1995;346(8972):407.

9. Shorten A, Wallace M. Evidence based practice. Australian Nursing Journal. 1996;4(2):22–24.

10. Simpson B. Evidence-based nursing practice: the state of the art. The Canadian Nurse. 1996;92(10):22–25.

11. Partridge C. Evidence based medicine--implications for physiotherapy? Physiotherapy Research International: The Journal for Researchers and Clinicians in Physical Therapy. 1996;1(2):69–73. doi: 10.1002/pri.6120010203.

12. Partridge C, Edwards S. The bases of practice--neurological physiotherapy. Physiotherapy Research International: The Journal for Researchers and Clinicians in Physical Therapy. 1996;1(3):205–208. doi: 10.1002/pri.59.

13. Compton J, Robinson M. The move towards evidence-based medicine. The Medical Journal of Australia. 1995;163(6):333.

14. Poolman RW, Sierevelt IN, Farrokhyar F, Mazel JA, Blankevoort L, Bhandari M. Perceptions and competence in evidence-based medicine: are surgeons getting better? Journal of Bone & Joint Surgery, American Volume. 2007;89(1):206–215. doi: 10.2106/JBJS.F.00633.

15. Madhok R, Stothard J. Promoting evidence based orthopaedic surgery: an English experience. Acta Orthopaedica Scandinavica. 2002;73:26–29. doi: 10.1080/000164702760379512.

16. Hanson BP, Bhandari M, Audige L, Helfet D. The need for education in evidence-based orthopedics. Acta Orthopaedica Scandinavica. 2004;75(3):328–332. doi: 10.1080/00016470410001277.

17. Sackett DL, Straus SE, Richardson WS, Rosenberg W, Haynes RB. Evidence-Based Medicine: How to Practice and Teach EBM. 2nd edition. Edinburgh: Harcourt Publishers Limited; 2000.

18. Hunt DL, Haynes RB, Browman GP. Searching the medical literature for the best evidence to solve clinical questions. Annals of Oncology. 1998;9(4):377–383. doi: 10.1023/a:1008253820228.

19. Sackett DL, Rosenberg WM. On the need for evidence-based medicine. Journal of Public Health Medicine. 1995;17(3):330–334.

20. Antes G, Galandi D, Bouillon B. What is evidence-based medicine? Langenbeck's Archives of Surgery. 1999;384(5):409–416. doi: 10.1007/s004230050223.

21. Parker M. Whither our art? Clinical wisdom and evidence-based medicine. Medicine, Health Care, and Philosophy. 2002;5(3):273–280. doi: 10.1023/a:1021116516342.

22. Michel LA. The epistemology of evidence-based medicine. Surgical Endoscopy. 2007;21(2):145–151. doi: 10.1007/s00464-006-0905-7.

23. Fletcher RH, Fletcher SW. Evidence-based approach to the medical literature. Journal of General Internal Medicine. 1997;12 Suppl 2:S5–S14. doi: 10.1046/j.1525-1497.12.s2.1.x.

24. Law M, MacDermid J. Evidence-Based Rehabilitation: A Guide to Practice. 2nd edition. Thorofare, NJ: SLACK Inc; 2008.

25. Straus SE, Sackett DL. Applying evidence to the individual patient. Annals of Oncology. 1999;10(1):29–32. doi: 10.1023/a:1008308211595.

26. Davidoff F, Case K, Fried PW. Evidence-based medicine: why all the fuss? Annals of Internal Medicine. 1995;122(9):727. doi: 10.7326/0003-4819-122-9-199505010-00012.

27. Sauerland S, Lefering R, Neugebauer EA. The pros and cons of evidence-based surgery. Langenbeck's Archives of Surgery. 1999;384(5):423–431. doi: 10.1007/s004230050225.

28. Franco G, Grandi P. Evaluation of medical decisions effectiveness: a 4-year evidence-based study in a health care setting. International Archives of Occupational & Environmental Health. 2008;81(7):921–928. doi: 10.1007/s00420-007-0288-7.

29. Delorme TL, West FE, Shriber WJ. Influence of progressive resistance exercises on knee function following femoral fractures. The Journal of Bone and Joint Surgery, American Volume. 1950;32A(4):910–924.

30. DeLorme TL, Watkins AL. Progressive Resistance Exercise: Technic and Medical Application. New York: Appleton-Century-Crofts; 1951.

31. Delorme TL, Watkins AL. Technics of progressive resistance exercise. Archives of Physical Medicine and Rehabilitation. 1948;29(5):263–273.

32. Gallagher JR, DeLorme TL. The use of the technique of progressive-resistance exercise in adolescence. The Journal of Bone and Joint Surgery, American Volume. 1949;31A(4):847–858.

33. Rhea MR, Alderman BL. A meta-analysis of periodized versus nonperiodized strength and power training programs. Research Quarterly for Exercise & Sport. 2004;75(4):413–422. doi: 10.1080/02701367.2004.10609174.

34. Baker D. A series of studies on the training of high-intensity muscle power in rugby league football players. Journal of Strength & Conditioning Research. 2001;15(2):198–209.

35. Lohman T, Going S, Pamenter R, Hall M, Boyden T, Houtkooper L, et al. Effects of resistance training on regional and total bone mineral density in premenopausal women: a randomized prospective study. Journal of Bone and Mineral Research. 1995;10(7):1015–1024. doi: 10.1002/jbmr.5650100705.

36. Spirduso WW, Cronin DL. Exercise dose-response effects on quality of life and independent living in older adults. Medicine & Science in Sports & Exercise. 2001;33(6 Suppl):S598–S608. doi: 10.1097/00005768-200106001-00028.