Abstract

Purpose:

To elicit priority rankings of indicators of quality of care among providers and decision-makers in continuing care in Alberta, Canada.

Methods:

We used a modified nominal group technique to elicit priorities, and criteria for prioritization, among the quality indicators and resident/client assessment protocols developed by the interRAI consortium for use in long-term care and home care.

Results:

The top-ranked items from the long-term care assessment data were pressure ulcers, pain and incontinence. The top-ranked items from the home care data were pain, falls and proportion of clients at high risk for residential placement. Participants considered a variety of issues in deciding how to rank the indicators.

Implications:

This work reflects the beginning of a process to better understand how providers and policy makers can work together to assess priorities for quality improvement within continuing care.

Inconsistencies in quality among continuing care facilities may be responsible for the variation in resident outcomes that exists across these settings (Rantz et al. 1996). To address such inconsistencies, many jurisdictions have mandated use of the Resident Assessment Instruments (RAI) to standardize care practice data and enable comparisons across facilities (Rantz et al. 1996). The RAI instruments facilitate routine, standardized assessment and documentation of resident characteristics (Rantz et al. 1996, 1997; Hirdes et al. 1999, 2004; Frijters et al. 2001), and several instruments have been developed for use in continuing care (i.e., home care, assisted living and long-term care facility living) (Alberta Health and Wellness 2008). In Canada, the Canadian Institute for Health Information (CIHI) has adopted the RAI 2.0 as the Canadian standard for use in long-term care (LTC) and the RAI Home Care instrument (RAI-HC) in home care (Carpenter et al. 1999, 2000; Frijters et al. 2001; Hirdes et al. 2001; Berta et al. 2006). The RAI-HC and the RAI 2.0 share some items, but the content of each is tailored to the population cared for in its setting.

Quality of Care

The purposes of the RAI tools include standardizing resident assessment and forming an evidence base to influence clinical practice and policy decisions (interRAI 2006). To meet this mandate, the interRAI group has developed a number of tools using RAI data to improve quality of care. These tools include individual resident or client assessment protocols (RAPs for LTC, CAPs for HC) and unit- and facility-level quality indicators (QIs).

Assessment protocols

These are standardized protocols linked to care plans for commonly encountered problems in LTC and HC settings. Their purpose is to guide care planning for an individual resident or client. Assessment protocols are triggered by specific data entered into an RAI assessment. For example, the RAP for falls prevention is triggered by an LTC facility resident having fallen within the past 90 to 180 days and other information included in RAI 2.0. The RAI 2.0 includes 18 RAPs (Morris et al. 2005), and the RAI-HC contains 30 CAPs (Morris et al. 2002). A major update released in late 2008 changed the naming convention for the assessment protocols to a standard “Client Assessment Protocol” across all continuing care settings. We use the older terminology because our study was conducted before this change was implemented.

Quality indicators

These are derived from RAI data aggregated to the facility level. They represent the proportion of residents with a given condition (Zimmerman et al. 1995; Zimmerman 2003; Hirdes et al. 2004; Dalby et al. 2005). The QIs provide information about where an organization could focus its attention to provide higher quality of care (Rantz 1995; Ryther 1995). Awareness of problem areas can lead to quality improvement activities, improved care processes and better resident outcomes, as well as influence policy decisions and strategic planning (Rantz et al. 1997, 2004; Zimmerman 2003). There are different versions of QIs in use across jurisdictions. We used the versions approved by CIHI (Hirdes et al. 2001; Center for Health Systems Research and Analysis 2006). Twenty-five QIs are used in LTC and 22 in HC settings.
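As a rough illustration of what "aggregated to the facility level" means, the sketch below computes a crude, unadjusted proportion of residents flagged with a condition in each facility. The record layout, the field names and the function `facility_qi_proportions` are hypothetical, and the data are invented for illustration. Actual QI definitions specify their own numerators, denominators, exclusions and, for some indicators, risk adjustment, none of which is reproduced here.

```python
from collections import defaultdict


def facility_qi_proportions(assessments, condition):
    """Return {facility: share of assessed residents flagged with `condition`}.

    Crude proportion only: no exclusions and no risk adjustment.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for record in assessments:
        facility = record["facility"]
        totals[facility] += 1
        if record.get(condition, False):
            flagged[facility] += 1
    return {facility: flagged[facility] / totals[facility] for facility in totals}


# Hypothetical data: one record per resident (not taken from the study).
assessments = [
    {"facility": "A", "pain": True},
    {"facility": "A", "pain": False},
    {"facility": "A", "pain": False},
    {"facility": "A", "pain": True},
    {"facility": "B", "pain": False},
    {"facility": "B", "pain": True},
]

for facility, share in sorted(facility_qi_proportions(assessments, "pain").items()):
    print(f"Facility {facility}: {share:.0%} of residents with pain")
```

A facility with a markedly higher proportion than its peers would be a candidate for closer review, subject to the caveats about risk adjustment that participants raised.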

The RAPs and CAPs focus on different service settings – LTC versus HC, respectively – and provide individually focused recommendations for improving care. In contrast, the purpose of the QIs is to influence facility-wide quality improvement activities by highlighting areas in which a facility may be performing poorly. For both the QIs and CAPs/RAPs, there are areas of overlap between HC and LTC and areas distinct to each setting. For example, the RAI 2.0 and RAI-HC have QIs for falls and pain, whereas only the RAI-HC has a QI for influenza vaccination (Hirdes et al. 2001; Center for Health Systems Research and Analysis 2006).

Implementation of the RAI 2.0 and RAI-HC occurred in Alberta between 2004 and 2008. During this time, Continuing Care Standards were promulgated by Alberta Health and Wellness as part of an initiative to support high-quality continuing care (Alberta Health and Wellness 2008). As a result, quality of care has been a central concern of decision-makers and policy makers in the province.

Impetus for Prioritization

The primary motivation and funding for this project came from the Knowledge Brokering Group (KBG), a group of researchers and decision-makers in Alberta who obtained funding from the Canadian Health Services Research Foundation and the Alberta Heritage Foundation for Medical Research to establish a demonstration project linking LTC and HC decision-makers and researchers. The main focus of the KBG project was on promoting use of the RAI data through extensive education and interaction with researchers.

Despite their different purposes, the QIs and the CAPs/RAPs both represent information that clinicians and managers obtain from RAI assessments. While these tools are intended to facilitate decision-making, KBG participants and other continuing care decision-makers had identified the large number of possible quality issues generated by these instruments as a concern for decision- and policy makers in Alberta. Without prioritizing information from the RAI data, decision-makers and clinicians find it difficult to select areas in which to focus their quality improvement efforts. Previous research has shown that providing undifferentiated QI data to staff does not always improve care (Popejoy et al. 2000; Rantz et al. 2001), and that facility staff may be able to focus on only one or two areas of quality improvement at a time (Rantz et al. 2001). One approach to dealing with this issue of perceived information overload is to develop a priority-based structure for information from RAI tools, permitting decision-makers and clinicians to select high-priority areas aligned to their strategic plans.

While competing priorities will likely always exist among clinicians, health organization managers and policy makers, developing a priority-based structure for the RAI information may help to focus and align quality improvement efforts across different sectors within continuing care by highlighting those areas most likely to have the greatest effect on resident outcomes.

More broadly, there have been calls for multi-criteria approaches to priority setting in healthcare in which evidence-based resources, economics and equity are all considered (Baltussen and Niessen 2006; Urquhart et al. 2008). Key aspects of priority setting include a systematic, open and explicit process in which research evidence, maximization of benefit, minimization of cost, equity and efficiency are all considered (Mitton and Donaldson 2003). One component of a multifaceted approach is to include multiple voices in the prioritization process.

To begin to address this expressed need for prioritization, we elicited stakeholder views about priorities for quality improvement and safety. As a secondary objective, we elicited their criteria for rating priorities. We were unable to find any description of priority-setting criteria for quality improvement in continuing care in the literature, nor were KBG members aware of criteria used in the field. The project was deemed exempt from ethics review by the Health Research Ethics Board at the University of Alberta.

Methods

We used a modified nominal group technique to elicit and rank provider priorities and criteria among the QIs and CAPs/RAPs. Although there are differences between the QIs and CAPs/RAPs, both were included for prioritization because of the widespread perception of significant overlap between the two, and the concern that they may be perceived as competing for attention by staff within facilities.

Participants

Our focus was to understand provider priorities from the perspective of regional representatives. Regional RAI implementation leaders from the former nine regional health authorities in Alberta were sent a letter describing the project (the health system in Alberta has since reorganized into a single authority). The regional representatives were asked to nominate at least one owner–operator, one facility manager and one front-line staff person from LTC facilities and HC agencies in their region. We received varying numbers of nominees from eight of the nine regions, with no response from the ninth region (the former Northern Lights region in the north of the province). Project staff invited all nominees to participate in a meeting close to their region.

One of the eight responding regions was unable to participate owing to staffing issues at the time of the meeting. This left a total of 47 people, representing seven of the nine health regions, to participate in the four meetings, summarized in Table 1. The former regions differed in whether they were rural, urban or a mix of both, but we are not aware of any other systematic differences among them, except that the former Chinook and Aspen regions went further than other regions in adapting the RAI data to create reports and tools. Representatives from both regions were active participants in the regional and final meetings.

TABLE 1.

Summary of meeting participants

| | | Calgary N=10 | Red Deer N=5 | Edmonton N=14\* | Final Meeting N=28\*\* |
|---|---|---|---|---|---|
| No. of males | | 2 | 1 | 2 | 7 |
| Level of representation | Regional | 5 | 5 | 10 | 14 |
| | Organizational | 5 | 0 | 4 | 11 |
| | Researchers | 0 | 0 | 0 | 3 |
| Practice setting | Rural | 0 | 2 | 8 | 4 |
| | Urban | 8 | 0 | 6 | 20 |
| | Mixed | 2 | 3 | | 4 |
| Type of continuing care | Long-term care | 7 | 5 | 9 | Unknown |
| | Home care | 3 | 1 | 5 | Unknown |

\* Six people participated via teleconference.

\*\* Included a mix of participants from the previous meetings as well as new participants from the regions and the KBG.

The participants were either in management roles at their respective facilities or worked directly for the regional health authority. Participants specialized in HC or LTC, but were knowledgeable about the full continuing care spectrum and the use of RAI tools at the organizational level. Participants reflected the mix of health professionals providing continuing care services, and included nurses, occupational therapists, physical therapists, dietitians and one physician. The majority of the meeting participants had experience as front-line care providers but were no longer in those roles.

Meeting process

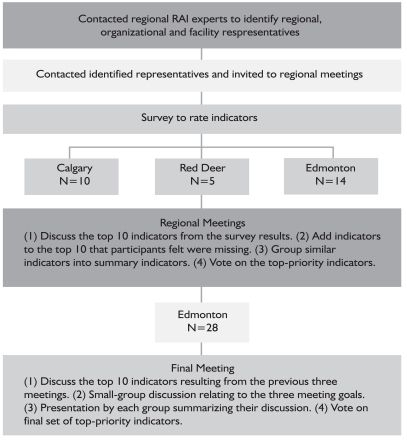

Four meetings took place in Alberta between February and May 2008. Three regional meetings were held (Calgary, Red Deer and Edmonton), followed by one final meeting of all the regions, held in Edmonton in conjunction with the Canadian InterRAI National Meeting. We used the same process for each regional meeting. We provide a graphic representation of the process used to organize the regional meetings in Figure 1.

FIGURE 1.

Meeting process

Two weeks prior to the meeting, participants were sent two questionnaires, one covering the 25 QIs and 18 RAPs of the RAI 2.0 and one covering the 22 QIs and 30 CAPs of the RAI-HC. These listed the CIHI-approved QIs and the CAPs/RAPs and asked participants to rate each item on a scale where 1 was “not important” and 7 “very important” for quality improvement purposes. All participants were asked to consider aggregated data (e.g., for a unit or HC case worker/office) as their reference. These questionnaires were sent back to the research team in advance of the meeting, and average ratings for each item were calculated.
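The averaging step can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the procedure the research team actually ran: the function name `top_rated`, the response layout and the ratings are all hypothetical, and skipping unanswered items is an assumption, since the handling of missing ratings is not described.

```python
from statistics import mean


def top_rated(responses, n=10):
    """Average each item's 1-7 ratings and return the n highest-rated items.

    `responses` holds one dict per returned questionnaire: {item: rating}.
    Ties are broken alphabetically so the ordering is reproducible.
    """
    ratings_by_item = {}
    for response in responses:
        for item, rating in response.items():
            if rating is None:
                continue  # assumption: unanswered items are simply skipped
            ratings_by_item.setdefault(item, []).append(rating)
    averages = {item: mean(ratings) for item, ratings in ratings_by_item.items()}
    return sorted(averages.items(), key=lambda pair: (-pair[1], pair[0]))[:n]


# Hypothetical questionnaires (ratings invented for illustration).
responses = [
    {"Pain": 7, "Falls": 6, "Oral health": 3},
    {"Pain": 6, "Falls": 7, "Oral health": None},
    {"Pain": 7, "Falls": 5, "Oral health": 4},
]

for item, average in top_rated(responses, n=2):
    print(f"{item}: {average:.2f}")
```

Sorting on the item mean is the simplest possible ranking; it ignores how widely ratings for an item are spread, which the facilitated discussion was in part intended to surface.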

We began each meeting by listing the top 10 rated priorities based on the item averages from the questionnaires. We then held a facilitated discussion among all participants at a meeting with three goals:

1. To determine whether participants felt that items not included in the top 10 rated priorities should be included.

2. To elicit a richer description of the importance of the participant-selected indicators (e.g., what makes pain a high priority?).

3. To elicit the criteria underlying indicator priority rating more generally (e.g., what criteria did you use in determining indicator priorities?).

The QIs and CAPs/RAPs were treated as equivalent for the purpose of these discussions.

At each meeting, one author (AS) facilitated the discussions and another (KD) took notes and tallied votes. CJM participated as a facilitator at the first regional meeting. The purpose of the discussion was to come to agreement when there were areas of disagreement. There was no requirement to achieve complete consensus. During the discussion, participants acknowledged that some of the indicators addressed similar concepts. These items were then grouped together and became the summary items displayed in Table 2. Participants were also given the opportunity to discuss indicators that they felt were important but did not make the top 10 and add them to the list of priority indicators. Discussions lasted between three and four hours and were complete when all participants agreed that they had voiced their opinions. After discussion, we asked all participants to vote for their top priorities from the summary indicators that were created and added during the discussion. Each participant could cast three votes. The project staff did not vote. We audio-recorded the three regional meetings. We did not transcribe the audiotapes, but took field notes during the meetings and checked these against the audio recordings to ensure that we captured major themes that emerged in the discussion. The discussions cycled through the three goals of the meeting iteratively rather than linearly.
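The voting step amounts to a simple tally. The sketch below assumes each ballot is a list of up to three summary indicators and ranks indicators by votes received. The ballots and the function `tally_votes` are hypothetical. Whether a participant could place more than one vote on the same indicator is not stated, so this sketch does not forbid it.

```python
from collections import Counter

VOTES_PER_PARTICIPANT = 3  # each participant could cast three votes


def tally_votes(ballots, allowed_items):
    """Count votes per summary indicator and rank by votes received.

    Ties are broken alphabetically so the ordering is reproducible.
    """
    counts = Counter()
    for ballot in ballots:
        if len(ballot) > VOTES_PER_PARTICIPANT:
            raise ValueError("a participant may cast at most three votes")
        for item in ballot:
            if item not in allowed_items:
                raise ValueError(f"unknown indicator: {item}")
            counts[item] += 1
    return sorted(counts.items(), key=lambda pair: (-pair[1], pair[0]))


# Hypothetical ballots (not the study's data).
allowed = {"Pressure ulcers", "Pain", "Incontinence", "Falls"}
ballots = [
    ["Pain", "Falls", "Pressure ulcers"],
    ["Pain", "Pressure ulcers", "Incontinence"],
    ["Pressure ulcers", "Pain"],
]

for item, votes in tally_votes(ballots, allowed):
    print(f"{item}: {votes}")
```

Restricting ballots to the agreed list of summary indicators mirrors the meeting process, in which voting followed the grouping and addition of items during discussion.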

TABLE 2.

Final priority-rated summary indicators for the RAI-MDS 2.0 and RAI-HC

| LTC Indicator (RAI 2.0 QIs/RAPs) | Votes | HC Indicator (RAI-HC QIs/CAPs) | Votes |
|---|---|---|---|
| Pressure ulcers | 16 | Pain | 12 |
| Pain | 15 | Falls | 10 |
| Incontinence | 8 | Institutional placement | 8 |
| Falls | 7 | Brittle informal support | 4 |
| Little or no activity | 7 | Less social activity/Social isolation | 4 |
| Uses antianxiety, antipsychotic, hypnotic | 5 | Exhibits distressing behaviour | 3 |
| Behavioural symptoms | 5 | Medications | 3 |
| Dehydration | 4 | Malnutrition | 3 |
| Depression symptoms without antidepressants | 4 | Unmet need | 2 |
| Physically restrained | 3 | Disease management | 2 |
| Malnutrition | 3 | Bladder incontinence | 2 |
| Delirium | 1 | Depression/Anxiety | 2 |
| Polypharmacy | 1 | Delirium | 2 |
| Oral health | 1 | Hospitalization | 1 |
| Disease management | 1 | Pressure ulcers | 1 |
| | | Changes in ADL | 1 |
| | | Hazardous environment | 1 |

The fourth and final meeting was held in Edmonton on May 30, 2008. All previous meeting participants as well as KBG project members were invited to participate. We circulated the preliminary report summarizing the study and initial results prior to the meeting and asked participants unable to attend the final meeting to send their input via e-mail. We received no additional feedback via e-mail.

Twenty-eight people attended the meeting. The format was similar to the previous meetings with a few exceptions. After the discussion of the prioritized items, the participants were broken into small groups and asked to discuss the three goals from the previous meetings and summarize their thoughts for the larger group. Participants voted for their top priorities among those that had been listed initially as well as those that were added after the group discussion. Each participant received three votes.

Finally, we assembled summary tables with notes describing the discussions and sent these out to participants from all four meetings, with requests for feedback.

Results

Rankings

Table 2 lists the summary indicators ranked according to number of votes received at the final meeting. The RAI items grouped to form the summary items are listed in Appendices A and B. The top-rated indicators in LTC coming from RAI 2.0 data were pressure ulcers, pain, incontinence, falls and little or no activity among residents. In HC, the top-rated indicators were pain, falls, institutional placement, brittle informal support systems and decreased social activity/social isolation. In the discussions resulting in these ratings, participants in all meetings expressed concern about areas of overlap between indicators, as well as perceived interrelationships among the indicators that they felt reflected a complex reality, making it difficult to identify important single indicators in isolation. For example, the interrelationships among pain, nutritional intake or nutritional status and falls were discussed at some length in two of the three meetings. Pain can decrease appetite and food intake, leading to weakness and other symptoms such as dizziness, which can greatly increase risk of falls. Because these complex causal pathways could not be easily disentangled, many participants suggested that pain might deserve higher priority as a root cause: other problems, which are themselves indicators of care needs or poor quality and are equally important, may result from the problem that the pain indicator reflects.

Criteria

We asked participants to identify their criteria for priority setting at all four meetings. A number of criteria related to the potential impact of the indicator, including (a) the ability to create change, (b) the potential implications of critical incidents related to the item, (c) perceived indicator effectiveness, (d) indicator potential to optimize care and (e) indicator utility to clients/caregivers rather than to policy makers or the media.

Other criteria related to desirable indicator traits included (a) stability, (b) dependency of the indicator on other indicators (the complex interrelationship referred to above), (c) indicator ability to represent the “big picture” of the client's status and (d) indicator relationship to the Continuing Care Standards promulgated by the provincial ministry.

Other criteria included (a) occurrence of the indicator across settings – if issues exist in both LTC and HC, they were considered more important; (b) impact on resource use, feasibility and barriers to using the indicator; (c) public perception of the indicator; (d) sentinel events; (e) safety; (f) autonomy and preference; (g) client well-being; and (h) value in risk adjustment. One concern about including the CAPs/RAPs in the discussion was that they are not risk adjusted. When using the indicators, participants felt that people need to be clear about which ones have been risk adjusted and which have not.

Discussion

Indicator groupings

At each meeting, there was considerable discussion about overlap among items, particularly across the QIs and CAPs/RAPs, but also within each set. Participants articulated an urgent need to further assess the overlap among these items and suggested that, once overlap is reduced, the number of possible indicators for focus will decrease significantly. This discussion focused less on redundancy than on the clinical relationships among QIs and CAPs/RAPs.

Participants agreed that there are clear relationships between different items or indicators. For example, items such as medication use, dehydration, poor nutrition and so on may influence falls. Placing high priority on falls risk and prevention may require that equal priority be placed on precursor indicators. There was consensus that creating a conceptual map among indicators might assist with setting priorities, allowing organizations and care providers to utilize an indicator, such as falls, as a “high-level” indicator and assess the “causal” indicators to determine plans of action.

In part, this consensus may reflect the reality that the QIs, as well as the CAPs/RAPs, have been developed iteratively over time as the instruments and their use have evolved. The QIs come from different initiatives and projects, with different methods and purposes (Zimmerman et al. 1995; Berg et al. 2002; Zimmerman 2003). As a result, there is no overarching conceptual map for these indicators, and the same is true of the CAPs/RAPs. In general, they are found useful in facilities and among continuing care organizations, but they do not embody a high level of purpose-driven planning. We believe that it may be possible to use existing data to explore the conceptual underpinnings of these important tools, to rationalize them and make them more useful for the field.

These challenges provide the basis for future research examining the relationships between the QIs and CAPs/RAPs. In this work, we will assess models of indicator relationships beginning with a comprehensive review of the literature for each indicator area. Then, we will use secondary analysis of a large, Canadian RAI 2.0 data set to test the model structures to determine whether the literature-based theories are reflected in the current RAI 2.0 data. In a final step, we will use decision-maker and clinician input to explore the utility of these indicator models to provide users with information that assists them in planning their quality improvement activities. Our initial plans focus on RAI 2.0 data, but a similar process is needed for RAI-HC. To our knowledge, QIs have not yet been finalized for all of the newer instruments developed by the interRAI consortium.

We hypothesize that illustrating the QI interrelationships may assist decision-makers, clinicians and policy makers to focus on those indicator areas that come earlier in the causal hierarchy. Affecting quality areas early in the causal chain may then improve the related QI areas, improving efficiency of quality improvement efforts.

Challenges

Participants discussed some of the challenges that they face within the continuing care sector. They found keeping up with the priorities set by the regions challenging, and were concerned that the current system does not capture the medical complexity within facilities. Providers are involved in multiple roles; facilities and agencies have fewer resources and take on more complicated clients. The QIs do not depict the day-to-day reality inside the facilities and the daily challenges encountered by staff. This finding gives rise to concern because of the possibility that facilities will one day be rewarded or penalized for their QI scores. In the United States, some QIs are already publicly reported. There are important considerations that Canadian jurisdictions should take into account as they discuss similar approaches (Hutchinson et al. 2009).

Participants also discussed the need to integrate the continuing care system across the different care streams – that is, HC, supportive living, LTC – at least through common data elements (Frijters et al. 2001). Currently, the regions and facilities are using different software and have access to different tools, reports and resources. A standardized reporting system would facilitate transfers and data comparisons across facilities and regions.

Because only the RAI 2.0 and RAI-HC instruments are mandated in Alberta, the RAI-HC is used in supportive living settings as well as in HC. Meeting participants voiced concern that the RAI-HC does not capture some critical elements that influence care planning for clients in supportive living settings, a distinct group between HC clients and LTC facility clients on the spectrum of care need.

Participants discussed additional elements they would like to see included in the tools. The RAI-HC and RAI 2.0 indicators do not capture “resident and family choice.” Whether they should or not is certainly a matter for debate. The continuing care standards in Alberta incorporate negotiation, preferences and resident choices as core elements (Alberta Health and Wellness 2008). These choices do not always reflect “best” care processes and may result in worse QI scores despite the fact that staff are respecting the residents' or families' wishes. Other gaps that participants identified in the tools include (a) the absence of an RAI-HC QI for risk of facility placement, (b) the absence of a QI or RAP for hearing and (c) the absence of an RAI-HC assessment of the level of formal support needed versus what is available.

Some of the issues raised about what may not be included in the RAI tools have been addressed in newer instrument versions. However, it is important to note that the focus of the RAI tools, other than QIs, remains on care planning for the individual client, and not resource allocation decisions based on what is available in the environment. Planning for resource allocation requires information outside the scope of the RAI instruments. In addition, there will always be competition for resource allocation and competing priorities, which cannot be reconciled through any single process. However, a more cohesive and collaborative approach to defining priorities, and discussing the varying criteria and their weighting in setting priorities, may help provide a more equitable and transparent process for determining where to focus attention.

Limitations

This was a brief, time-limited project designed to obtain feedback from a variety of experts across the province. We succeeded in getting participation from representatives in seven of the nine regions, across a wide range of provider types. Although many participants had prior experience as direct care providers in continuing care, we had only one participating physician and no current front-line providers. We concur with statements made by several participants that the opinions of both physicians and current front-line providers would extend and deepen the priorities identified. The views of residents and family members would also be of value, although these lie outside the scope of the present project.

Summary

We wish to acknowledge the years of research by the interRAI consortium towards development of current indicators and care protocols and hope that this report is informative to the groups who continue this complex work into the future. This report reflects the beginning of processes to deepen our understanding of how providers and policy makers can work together to assess and act upon priorities. The goal of this project was to elicit the voices of stakeholders who provide care to people in need of continuing care services to assess priorities among indicators of quality of care. The meeting participants are responsible for improving quality of care in continuing care settings in Alberta. While their opinions will not, and probably should not, dictate how priorities are set at regional or provincial levels, they contribute important insights to the prioritization discussion. Future research on the interrelationships among the indicators of problems in care processes might inform future iterations of QI development.

Acknowledgements

This work was supported by the Knowledge Brokering Group project.

The Knowledge Brokering Group project was supported by the Canadian Health Services Research Foundation (grant number KBD-1215-09) and the Alberta Heritage Foundation for Medical Research, which provided matching funds.

Appendices

Appendix A.

RAI 2.0 Summary Indicator Composition

| Summary Indicator | Grouped Indicators |
|---|---|
| Pressure ulcers | Proportion (a) at risk for developing pressure ulcers; (b) with pressure ulcers |
| Pain | Proportion with pain |
| Incontinence | Proportion (a) with an incontinence care plan; (b) bladder/bowel incontinent; (c) occasionally bladder/bowel incontinent without a toileting program |
| Falls | Proportion (a) at risk for falls; (b) who have had falls |
| Little or no activity | Proportion (a) where inactivity may be a complication; (b) with little or no activity |
| Uses antianxiety, antipsychotic or hypnotic drugs | Proportion (a) receiving antianxiety or hypnotics; (b) receiving antipsychotics; (c) who received hypnotics more than twice in the last week |
| Exhibits behavioural symptoms | Proportion (a) with behavioural symptoms; (b) with behavioural symptoms affecting others |
| Dehydration | Proportion that are dehydrated |
| Depression symptoms without antidepressants | Proportion who have symptoms of depression without antidepressant therapy |
| Physically restrained | Proportion that are being physically restrained |
| Malnutrition | Proportion who have a malnutrition problem |
| Delirium | Proportion who have delirium |
| Polypharmacy | Proportion who receive nine or more different medications |
| Oral health | Proportion with dental care or oral health problems |
| Disease management | Disease management |

Appendix B.

RAI HC Summary Indicator Composition

| Summary Indicator | Grouped Indicators |
|---|---|
| Pain | Proportion (a) who have pain that limits their ability to function; (b) who have disruptive or intense daily pain; (c) with inadequate pain control |
| Falls | Proportion (a) who have had a recent fall or who are at risk of falling; (b) who have had a fall |
| Institutional placement | Proportion at high risk of residential facility placement in the next three months |
| Brittle informal support | Proportion with brittle informal support system |
| Less social activity/social isolation | Proportion who are alone for long periods of time/always and report feeling lonely or are distressed by declining social activity |
| Exhibit distressing behaviour | Proportion who exhibit distressing behaviours |
| Medications | Proportion (a) having problems with medication management; (b) taking psychotropic drugs and require a medication review or would benefit from more/different medication monitoring; (c) whose medications have not been reviewed by a physician within the last 180 days |
| Malnutrition | Proportion who are malnourished or have an increased risk of developing nutritional problems |
| Unmet need | Proportion with unmet need |
| Disease management | Disease management |
| Bladder incontinence | Proportion (a) with urinary incontinence and/or have an indwelling catheter; (b) with failure to improve/incidence of bladder incontinence |
| Depression/Anxiety | Proportion who suffer from depression or anxiety |
| Delirium | Proportion with delirium |
| Hospitalization | Proportion who have been hospitalized, visited emergency departments or received emergent care |
| Pressure ulcers | Proportion (a) with pressure ulcers or at risk of developing pressure ulcers; (b) with failure to improve/incidence of skin ulcers |
| Changes in ADL | Proportion with failure to improve/incidence of decline in activities of daily living long form |
| Hazardous environment | Proportion whose environmental conditions are hazardous |

Contributor Information

Anne Sales, Faculty of Nursing, University of Alberta, Edmonton, AB.

Hannah M. O'Rourke, Faculty of Nursing, University of Alberta, Edmonton, AB.

Kellie Draper, Faculty of Nursing, University of Alberta, Edmonton, AB.

Gary F. Teare, Director of Quality Measurement and Analysis, Saskatchewan Health Quality Council, Saskatoon, SK.

Colleen Maxwell, Departments of Community Health Sciences and Medicine, Faculty of Medicine, University of Calgary, Calgary, AB.

References

- Alberta Health and Wellness. Continuing Care Health Service Standards. 2008. Retrieved January 12, 2011. < http://www.health.alberta.ca/documents/Continuing-Care-Standards-2008.pdf>.

- Baltussen R., Niessen L. Priority Setting of Health Interventions: The Need for Multi-Criteria Decision Analysis. Cost Effectiveness and Resource Allocation. 2006;4:14. doi: 10.1186/1478-7547-4-14. Retrieved January 12, 2011. < http://www.ncbi.nlm.nih.gov/pmc/articles/PMC1560167/>. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berg K., Mor V., Morris J., Murphy K.M., Moore T., Harris Y. Identification and Evaluation of Existing Nursing Homes' Quality Indicators. Health Care Financing Review. 2002;23(4):19–36. [PMC free article] [PubMed] [Google Scholar]

- Berta W., Laporte A., Zarnett D., Valdmanis V., Anderson G. A Pan-Canadian Perspective on Institutional Long-Term Care. Health Policy. 2006;79(2–3):175–94. doi: 10.1016/j.healthpol.2005.12.006. [DOI] [PubMed] [Google Scholar]

- Carpenter G.I., Bernabei R., Hirdes J.P., Mor V., Steel K. Building Evidence on Chronic Disease in Old Age. British Medical Journal. 2000;320(7234):528–29. doi: 10.1136/bmj.320.7234.528. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carpenter G.I., Hirdes J.P., Ribbe M.W., Ikegami N., Challis D., Steel K., Bernabei R., Fries B. Targeting and Quality of Nursing Home Care. A Five-Nation Study. Aging – Clinical and Experimental Research. 1999;11(2):83–89. [PubMed] [Google Scholar]

- Center for Health Systems Research and Analysis. Chronic Quality Indicator/Quality Measure Definitions. 2006. Retrieved January 12, 2011. < http://www.chsra.wisc.edu/chsra/qi/qi-qm_matrix_version_1.pdf>.

- Dalby D.M., Hirdes J.P., Fries B.E. Risk Adjustment Methods for Home Care Quality Indicators (HCQIs) Based on the Minimum Data Set for Home Care. BMC Health Services Research. 2005;5(11):7. doi: 10.1186/1472-6963-5-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Frijters D., Achterberg W., Hirdes J.P., Fries B.E., Morris J.N., Steel K. Integrated Health Information System Based on Resident Assessment Instruments. Tijdschrift Voor Gerontologie En Geriatrie. 2001;32(1):8–16. [PubMed] [Google Scholar]

- Hirdes J.P., Fries B.E., Morris J.N., Ikegami N., Zimmerman D., Dalby D., Aliaga P., Hammer S., Jones R. InterRAI Home Care Quality Indicators (HCQIs) for MDS-HC Version 2.0. 2001. Retrieved January 12, 2011. < http://www.interrai.org/applications/hcqi_table_final.pdf>. [DOI] [PubMed]

- Hirdes J.P., Fries B.E., Morris J.N., Ikegami N., Zimmerman D., Dalby D.M., Aliaga P., Hammer S., Jones R. Home Care Quality Indicators (HCQIs) Based on the MDS-HC. Gerontologist. 2004;44(5):665–79. doi: 10.1093/geront/44.5.665. [DOI] [PubMed] [Google Scholar]

- Hirdes J.P., Fries B.E., Morris J.N., Steel K., Mor V., Frijters D., Labine S., Schalm C., Stones M.J., Teare G., Smith T., Marhaba M., Perez E., Jonsson P. Integrated Health Information Systems Based on the RAI/MDS Series of Instruments. Healthcare Management Forum. 1999;12(4):30–40. doi: 10.1016/S0840-4704(10)60164-0. [DOI] [PubMed] [Google Scholar]

- Hutchinson A.M., Draper K., Sales A. Public Reporting of Nursing Home Quality of Care: Lessons from the United States Experience for Canadian Policy Discussion. Healthcare Policy. 2009;5(2):87–105. [PMC free article] [PubMed] [Google Scholar]

- interRAI. Mission and Vision. 2006. Retrieved January 12, 2011. < http://www.interrai.org/section/view/>.

- Mitton C., Donaldson C. Tools of the Trade: A Comparative Analysis of Approaches to Priority Setting in Healthcare. Health Services Management Research. 2003;16(2):96–105. doi: 10.1258/095148403321591410. [DOI] [PubMed] [Google Scholar]

- Morris J.N., Bernabei R., Ikegami N., Gilgen R., Frijters D., Hirdes J.P., Fries B.E., Steel K., Carpenter I., DuPasquier J., Henrard J.C., Belleville-Taylor P. 2002. RAI Home Care (RAI-HC) Manual Canadian Version (2nd ed.). Ottawa: Canadian Institute for Health Information [Google Scholar]

- Morris J.N., Hawes C., Mor V., Phillips C., Fries B.E., Nonemaker S., Murphy K. 2005. Resident Assessment Instrument (RAI) MDS 2.0 and RAPs Canadian Version User's Manual (2nd ed.). Ottawa: Canadian Institute for Health Information [Google Scholar]

- Popejoy L.L., Rantz M.J., Conn V., Wipke-Tevis D., Grando V.T., Porter R. Improving Quality of Care in Nursing Facilities: Gerontological Clinical Nurse Specialist as Research Nurse Consultant. Journal of Gerontological Nursing. 2000;26(4):6–13. doi: 10.3928/0098-9134-20000401-04. [DOI] [PubMed] [Google Scholar]

- Rantz M.J. Quality Measurement in Nursing: Where Are We Now? Journal of Nursing Care Quality. 1995;9(2):1–7. doi: 10.1097/00001786-199501000-00004. [DOI] [PubMed] [Google Scholar]

- Rantz M.J., Hicks L., Petroski G.F., Madsen R.W., Mehr D.R., Conn V., Zwygart-Staffacher M., Maas M. Stability and Sensitivity of Nursing Home Quality Indicators. Journals of Gerontology, Series A, Biological Sciences and Medical Sciences. 2004;59(1):79–82. doi: 10.1093/gerona/59.1.m79. [DOI] [PubMed] [Google Scholar]

- Rantz M.J., Mehr D.R., Conn V.S., Hicks L.L., Porter R., Madsen R.W., Petrowski G.F., Maas M. Assessing Quality of Nursing Home Care: The Foundation for Improving Resident Outcomes. Journal of Nursing Care Quality. 1996;10(4):1–9. doi: 10.1097/00001786-199607000-00002. [DOI] [PubMed] [Google Scholar]

- Rantz M.J., Popejoy L., Mehr D.R., Zwygart-Stauffacher M., Hicks L.L., Grando V., Conn V.S., Porter R., Scott J., Maas M. Verifying Nursing Home Care Quality Using Minimum Data Set Quality Indicators and Other Quality Measures. Journal of Nursing Care Quality. 1997;12(2):54–62. doi: 10.1097/00001786-199712000-00011. [DOI] [PubMed] [Google Scholar]

- Rantz M.J., Popejoy L., Petroski G.F., Madsen R.W., Mehr D.R., Zwygart-Stauffacher M., Hicks L.L., Grando V., Wipke-Tevis D.D., Bostick J., Porter R., Conn V.S., Maas M. Randomized Clinical Trial of a Quality Improvement Intervention in Nursing Homes. Gerontologist. 2001;41(4):525–38. doi: 10.1093/geront/41.4.525. [DOI] [PubMed] [Google Scholar]

- Ryther B.J. Update on Using Resident Assessment Data in Quality Monitoring. In: Miller T.V., Rantz M.J., editors. Quality Assurance in Long Term Care. Gaithersburg, MD: Aspen Publishers; 1995. [Google Scholar]

- Urquhart B., Mitton C., Peacock S. Introducing Priority Setting and Resource Allocation in Home and Community Care Programs. Journal of Health Services Research & Policy. 2008;13(Suppl. 1):41–45. doi: 10.1258/jhsrp.2007.007064. [DOI] [PubMed] [Google Scholar]

- Zimmerman D.R. Improving Nursing Home Quality of Care through Outcomes Data: The MDS Quality Indicators. International Journal of Geriatric Psychiatry. 2003;18(3):250–57. doi: 10.1002/gps.820. [DOI] [PubMed] [Google Scholar]

- Zimmerman D.R., Karon S.L., Arling G., Clark B.R., Collins T., Ross R., Sainfort F. Development and Testing of Nursing Home Quality Indicators. Health Care Financing Review. 1995;16(4):107–27. [PMC free article] [PubMed] [Google Scholar]