Abstract

Principal stratification has recently become a popular tool to address certain causal inference questions, particularly in dealing with post-randomization factors in randomized trials. Here, we analyze the conceptual basis for this framework and invite response to clarify the value of principal stratification in estimating causal effects of interest.

Keywords: causal inference, principal stratification, surrogate endpoints, direct effect, mediation

1. Introduction

The past few years have seen a substantial number of studies claiming to use “The Principal Strata Approach” or “A Principal Strata Framework,” a term coined by Frangakis and Rubin (2002). An examination of these studies reveals that they fall into four distinct categories, each subscribing to a different interpretation of “principal strata (PS)” and each making different assumptions and claims. The purpose of this note is to clarify these distinctions and to identify areas of application where these interpretations may be useful.

2. Notation and preliminaries

We begin with the usual potential-outcome notation, $Y_x(u) = y$, which, for every unit u, defines a functional relationship

$$y = f(x, u) \tag{1}$$

between a treatment variable X and an outcome variable Y. Here y and x stand for specific values taken by Y and X, respectively, and u may stand either for the identity of a unit (e.g., a person’s name) or, more functionally, for the set of unit-specific characteristics that are deemed relevant to the relation considered (Pearl, 2000, p. 94).

For any function f, the population of units can be partitioned into a set of homogeneously responding classes, called “equivalence classes” (Pearl, 2000, p. 264), such that all units in a given class respond in the same way to variations in X. For example, if X and Y are binary, then, for any given u, the relationship between X and Y must be one of four functions:

$$f_1: y = 0, \qquad f_2: y = x, \qquad f_3: y = 1 - x, \qquad f_4: y = 1 \tag{2}$$

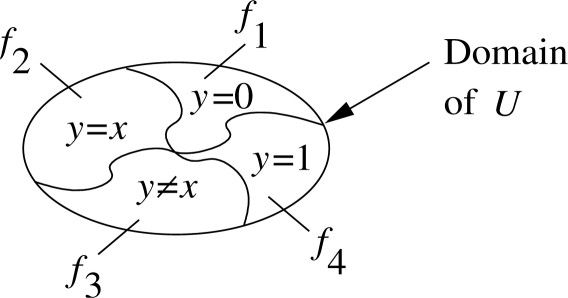

Therefore, as u varies along its domain, regardless of how complex the variation, the only effect it can have is to switch the relationship between X and Y among these four functions. This partitions the domain of U into four regions, as shown in Fig. 1, where each region contains those points u that induce the same function.

Figure 1: The canonical partition of U into four equivalence classes, each inducing a distinct functional mapping from X to Y for any given function $y = f(x, u)$.

If u is represented by a multidimensional vector of unit-specific characteristics, we can regard the class membership of u as a lower-dimensional variable R, which, together with X, determines the value of Y. Pearl (1993) and Balke and Pearl (1994a,b) called this variable a “response variable,” while Heckerman and Shachter (1995) named it a “mapping variable” (see Lauritzen, 2004).
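As a minimal sketch of the partition in Fig. 1 and of the response variable R, the Python snippet below classifies units by the function x ↦ f(x, u) that each one induces. The structural function `f`, its three features, and the five units are hypothetical, chosen only so that every class is occupied; nothing about them comes from a real study.

```python
from collections import Counter
from typing import Callable, Dict, Tuple

Unit = Tuple[float, float, float]

# The four functions from a binary X to a binary Y (Eq. (2), Table 1).
RESPONSE_FUNCTIONS: Dict[int, Callable[[int], int]] = {
    1: lambda x: 0,      # Type-1: Y = 0 for both values of X
    2: lambda x: x,      # Type-2: Y = X
    3: lambda x: 1 - x,  # Type-3: Y = 1 - X
    4: lambda x: 1,      # Type-4: Y = 1 for both values of X
}


def f(x: int, u: Unit) -> int:
    """Hypothetical structural function y = f(x, u); u holds unit features."""
    baseline, sensitivity, threshold = u
    return int(baseline + sensitivity * x > threshold)


def response_type(u: Unit) -> int:
    """Return R(u), the label of the function x -> f(x, u) induced by unit u."""
    induced = (f(0, u), f(1, u))  # the pair (Y_0(u), Y_1(u))
    for r, g in RESPONSE_FUNCTIONS.items():
        if (g(0), g(1)) == induced:
            return r
    raise ValueError("f must map {0, 1} into {0, 1}")


# Hypothetical units, each a vector of three characteristics.
units = [(-1.0, 0.5, 0.0), (-1.0, 2.0, 0.0), (1.0, -2.0, 0.0),
         (1.0, 0.5, 0.0), (0.2, 0.5, 0.5)]
R = [response_type(u) for u in units]
print(R)           # [1, 2, 3, 4, 2]
print(Counter(R))  # size of each equivalence class

# Y is recovered from (X, R) alone: the features in u matter only through R.
assert all(RESPONSE_FUNCTIONS[response_type(u)](x) == f(x, u)
           for u in units for x in (0, 1))
```

The final assertion restates the point of the text: once R(u) is known, the remaining detail in u plays no further role in how Y responds to X.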

The relation of this partition to the potential-outcome paradigm (Neyman, 1923, Rubin, 1974) is simple. Each equivalence class corresponds to an intersection of two potential outcomes (assuming binary variables), as shown in Table 1. The types described in Table 1 are often given problem-specific names, for example, 1 - doomed, 2 - responders, 3 - hurt, 4 - always healthy (see Heckerman and Shachter (1995) or Imbens and Rubin (1997)).

Table 1:

| Response Type | Functional Description | Potential-Outcome Description |
|---|---|---|
| Type-1 | $f(x, u) = 0$ | $Y_0(u) = 0$ and $Y_1(u) = 0$ |
| Type-2 | $f(x, u) = x$ | $Y_0(u) = 0$ and $Y_1(u) = 1$ |
| Type-3 | $f(x, u) = 1 - x$ | $Y_0(u) = 1$ and $Y_1(u) = 0$ |
| Type-4 | $f(x, u) = 1$ | $Y_0(u) = 1$ and $Y_1(u) = 1$ |
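The correspondence in Table 1 also yields a one-line derivation worth spelling out: since $Y_1(u) - Y_0(u)$ equals 1 for Type-2 units, −1 for Type-3 units, and 0 otherwise, the average treatment effect is $P(\text{Type-2}) - P(\text{Type-3})$. The sketch below checks this on a hypothetical eight-unit population; the type names are the problem-specific labels mentioned above.

```python
from fractions import Fraction
from typing import Dict, List, Tuple

# Table 1: the pair (Y_0(u), Y_1(u)) determines the response type.
TYPE_OF: Dict[Tuple[int, int], int] = {(0, 0): 1, (0, 1): 2, (1, 0): 3, (1, 1): 4}
NAMES = {1: "doomed", 2: "responders", 3: "hurt", 4: "always healthy"}


def average_effect(population: List[Tuple[int, int]]) -> Fraction:
    """E[Y_1 - Y_0] computed directly from unit-level potential outcomes."""
    return Fraction(sum(y1 - y0 for y0, y1 in population), len(population))


def average_effect_from_types(population: List[Tuple[int, int]]) -> Fraction:
    """The same quantity via the partition: P(Type-2) - P(Type-3)."""
    types = [TYPE_OF[pair] for pair in population]
    return Fraction(types.count(2) - types.count(3), len(population))


# Hypothetical population; each unit is given as (Y_0(u), Y_1(u)).
population = [(0, 0), (0, 1), (0, 1), (0, 1), (1, 0), (1, 1), (1, 1), (0, 0)]
print([NAMES[TYPE_OF[p]] for p in population])
print(average_effect(population), average_effect_from_types(population))  # 1/4 1/4
```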

3. Applications

The idea of characterizing units by their response function, rather than by their baseline features, has several advantages, stemming primarily from the parsimony achieved by the former. Whereas each unit may have thousands of features, standing in unknown relationships to X and Y, the number of functions that those features can induce is limited by the cardinality of X and Y (at most $|Y|^{|X|}$ functions), and each such function defines the response of Y unequivocally.

Robins and Greenland were the first to capitalize on this advantage, using classification by response type as a cornerstone in many of their works, including those on confounding (1986), attribution (1988, 1989a,b), and effect decomposition (1992).

Pearl (1993) and Balke and Pearl (1994a,b) formulated response types as variables in a graph and used the low dimensionality (16 joint types) of two response variables to derive tight bounds on treatment effects under conditions of noncompliance (Balke and Pearl, 1997).
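The counting argument behind this parsimony can be made concrete. A response function is one choice of Y-value for each value of X, so there are $|Y|^{|X|}$ of them; the sketch below enumerates them with `itertools.product`. Reading the 16 above as the joint types of two binary response variables in the noncompliance setting (one for the treatment received given assignment, one for the outcome given the treatment received) is our interpretation, used here only for illustration.

```python
from itertools import product


def response_functions(x_values, y_values):
    """All functions from x_values to y_values, each encoded as its tuple of outputs."""
    return list(product(y_values, repeat=len(x_values)))


binary = (0, 1)
single = response_functions(binary, binary)
print(len(single), single)  # 4 [(0, 0), (0, 1), (1, 0), (1, 1)]

# Two binary response variables (assumed: treatment received given assignment,
# and outcome given treatment received) give 4 * 4 joint response types.
joint = list(product(single, single))
print(len(joint))  # 16

# A three-valued outcome would already give 3**2 = 9 types per response variable.
print(len(response_functions(binary, (0, 1, 2))))  # 9
```

Each tuple in `single` is the pair $(Y_0(u), Y_1(u))$, so the four entries line up, in order, with Types 1 to 4 of Table 1.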

Chickering and Pearl (1997), as well as Imbens and Rubin (1997), used the parsimony of response-type classification in a Bayesian framework to obtain posterior distributions of causal effects in noncompliance settings. It is obviously easier to assign meaningful priors to a 16-dimensional polytope than to a space of the many features that characterize each unit (see Pearl, 2009a, Ch. 8).

Baker and Lindeman (1994) and Imbens and Angrist (1994) introduced a new element into this analysis. Realizing that the population-averaged treatment effect (ATE) is not identifiable in experiments marred by noncompliance, they shifted attention to a specific response type (i.e., compliers) for which the causal effect was identifiable, and presented the latter as an approximation for ATE. This came to be known as LATE (Local Average Treatment Effect) and has spawned a rich literature with many variants (Angrist, Imbens, and Rubin, 1996, Heckman and Vytlacil, 2001, Heckman, 2005), all focusing on a specific stratum or a subset of strata for which the causal effect could be identified under various combinations of assumptions and designs. However, most authors in this category do not state explicitly whether their focus on a specific stratum is motivated by mathematical convenience, mathematical necessity (to achieve identification), or a genuine interest in the stratum under analysis.
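The identification result behind LATE can be checked on a small example. The sketch below uses a hypothetical population rather than data from any cited study, and computes the instrumental-variable (Wald) ratio, that is, the intention-to-treat effect on Y divided by the intention-to-treat effect on treatment received, exactly at the population level. It assumes randomized assignment, no defiers (monotonicity), and no effect of assignment on Y except through treatment received (exclusion). Under these assumptions the ratio reproduces the compliers' average effect but not the population ATE, which is precisely the gap between approximation and target discussed above.

```python
from fractions import Fraction

# Treatment received (D under z = 0, D under z = 1) for each compliance type.
# Defiers, with (1, 0), are left out: this encodes the monotonicity assumption.
D = {"complier": (0, 1), "always-taker": (1, 1), "never-taker": (0, 0)}

# Hypothetical units: (compliance type, Y if untreated, Y if treated).
# Y depends on assignment only through D: this encodes the exclusion restriction.
units = [
    ("complier", 0, 1), ("complier", 0, 1), ("complier", 1, 1), ("complier", 0, 0),
    ("always-taker", 1, 1), ("always-taker", 0, 1),
    ("never-taker", 0, 1), ("never-taker", 0, 1),
]


def mean(values):
    values = list(values)
    return Fraction(sum(values), len(values))


def outcome(unit, z):
    """Y that this unit would show if assigned z."""
    kind, y_untreated, y_treated = unit
    return y_treated if D[kind][z] == 1 else y_untreated


# Under randomized assignment, E[Y | Z = z] is the population mean of outcome(u, z).
itt_y = mean(outcome(u, 1) for u in units) - mean(outcome(u, 0) for u in units)
itt_d = mean(D[kind][1] for kind, _, _ in units) - mean(D[kind][0] for kind, _, _ in units)
wald = itt_y / itt_d

late = mean(y1 - y0 for kind, y0, y1 in units if kind == "complier")
ate = mean(y1 - y0 for _, y0, y1 in units)
print(wald, late, ate)  # 1/2 1/2 5/8
```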

Though membership in response-type classes is generally not identifiable and is vulnerable to unpredictable changes,[1] such membership may occasionally be at the center of a research question. For example, the effect of treatment on subjects who would have survived regardless of treatment may become the center of interest in the context of censorship by death (Robins, 1986). Likewise, survival in cancer cases caused by hormone replacement therapy needs to be distinguished from survival in cancer cases caused by other factors (Sjölander, Humphreys, and Vansteelandt, 2010). In such applications, expressions of the form

$$P(Y_x = y \mid Z_x = z, Z_{x'} = z') \tag{3}$$

emerge organically as the appropriate research questions, where Z is some post-treatment variable and the condition $(Z_x = z, Z_{x'} = z')$ specifies the response-type stratum of interest.
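An expression like (3) can be evaluated directly only when both counterfactuals $Z_x$ and $Z_{x'}$ are known for each unit, which happens in simulation but never in a real study. The sketch below assumes a hypothetical censoring-by-death setting with binary treatment X, survival indicator Z, and a binary outcome Y that is undefined (`None`) for units that do not survive; all unit values are invented for illustration. It contrasts the stratum-specific effect among units who would survive under either treatment with a naive comparison of observed survivors in each arm (computed at the population level, as if X were randomized), which compares different sets of units.

```python
from fractions import Fraction
from typing import NamedTuple, Optional


class Unit(NamedTuple):
    z0: int            # Z_0(u): 1 if the unit survives under control
    z1: int            # Z_1(u): 1 if the unit survives under treatment
    y0: Optional[int]  # Y_0(u); None when undefined because the unit dies under control
    y1: Optional[int]  # Y_1(u); None when undefined because the unit dies under treatment


def z_under(u: Unit, x: int) -> int:
    return u.z1 if x == 1 else u.z0


def y_under(u: Unit, x: int) -> Optional[int]:
    return u.y1 if x == 1 else u.y0


def stratum_probability(units, x, y, z, z_prime):
    """P(Y_x = y | Z_x = z, Z_{x'} = z') with x' = 1 - x."""
    stratum = [u for u in units if z_under(u, x) == z and z_under(u, 1 - x) == z_prime]
    if not stratum:
        raise ValueError("empty principal stratum")
    return Fraction(sum(y_under(u, x) == y for u in stratum), len(stratum))


# Hypothetical units with both counterfactuals known (possible only in simulation).
units = [
    Unit(1, 1, 0, 1), Unit(1, 1, 1, 1), Unit(1, 1, 0, 0), Unit(1, 1, 0, 1),  # always survive
    Unit(0, 1, None, 1), Unit(0, 1, None, 1),  # survive only if treated
    Unit(0, 0, None, None),                    # never survive
    Unit(1, 0, 1, None),                       # survive only if untreated
]

# Effect within the stratum of units that survive under either treatment.
effect = stratum_probability(units, 1, 1, 1, 1) - stratum_probability(units, 0, 1, 1, 1)

# Naive comparison of observed survivors in each arm mixes different strata.
treated = [u.y1 for u in units if u.z1 == 1]
control = [u.y0 for u in units if u.z0 == 1]
naive = Fraction(sum(treated), len(treated)) - Fraction(sum(control), len(control))
print(effect, naive)  # 1/2 13/30
```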

4. The Frangakis-Rubin Framework

Thus far, we have discussed principal strata as a classification of units into equivalence classes that arises organically from the logic of counterfactuals and the inference challenges posed by the study. A different perspective was proposed by Frangakis and Rubin (2002), who attached the label “principal strata” to this classification. Frangakis and Rubin viewed the presence of a stratum $(Z_x = z, Z_{x'} = z')$ behind the conditioning bar (Eq. (3)) as a unifying conceptual principle, deserving of the title “framework,” because it seems to correct for variations in Z without the bias produced by standard adjustment for post-treatment variables. In their words: “We are aware of no previous work that has linked such recent approaches for noncompliance to the more general class of problems with post-treatment variables.” The approach that subsequently emerged from this perspective, and came to be known as the “principal strata framework,” presumes that most if not all problems involving post-treatment variables can, and should, be framed in terms of strata-specific effects.

We have seen in Section 3, however, that the class of problems involving post-treatment variables is not monolithic. In some of those problems (e.g., noncompliance), post-treatment variables serve as useful information sources for bounding or approximating population-wide questions, while in others, they define the research question itself. More importantly, while some of those problems can be solved by conditioning on principal strata, others cannot. In those latter cases, constraining solutions to be conditioned on strata, as in (3), may have unintended and grossly undesirable consequences, as we shall see in the sequel.

4.1. The principal strata direct effect (PSDE)

A typical example where definitions based on principal stratification turned out to be inadequate is the problem of mediation, which Rubin (2004, 2005) tried to solve using an estimand called the “Principal Stratification Direct Effect” (PSDE). In mediation analysis, we seek to measure the extent to which the effect of X on Y is mediated by a third variable, Z, called a “mediator.” Knowing the direct (unmediated) effect permits us to assess how effective future interventions would be if they aimed to modify, weaken, or enhance specific subprocesses along various pathways from X to Y. For example, knowing the extent to which sex discrimination affects hiring practices would help us assess whether efforts to eliminate educational disparities have the potential to reduce the earnings disparity between men and women.

Whereas causal notions of “direct effect” (Robins and Greenland, 1992, Pearl, 2001) measure the effects that would be transmitted in the population with all mediating paths (hypothetically) deactivated, the PSDE is defined as the effect transmitted only in those units for whom mediating paths happened to be deactivated in the study. This seemingly mild difference in definition leads to unintended results that stand contrary to common usage of direct effects (Robins, Rotnitzky, and Vansteelandt, 2007, Robins, Richardson, and Spirtes, 2009, VanderWeele, 2008), for it excludes from the analysis all individuals who are both directly and indirectly affected by the causal variable X (Pearl, 2009b). In linear models, as a striking example, a direct effect will be flatly undefined unless the X → Z coefficient is zero, since a nonzero coefficient shifts every unit’s mediator and leaves the stratum $Z_1(u) = Z_0(u)$ empty. In some other cases, the direct effect of the treatment will be deemed to be nil if a small subpopulation exists for which treatment affects neither the outcome nor the mediator (Pearl, 2011). These definitional inadequacies point to a fundamental clash between the Principal Strata Framework and the very notion of mediation.
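The linear-model claim can be checked numerically. The sketch below assumes a hypothetical linear structural model $Z = aX + U_Z$, $Y = cX + bZ + U_Y$ with independent Gaussian disturbances; the coefficient names and the simulation are ours, not taken from the cited papers. Every unit’s mediator shifts by exactly a when X moves from 0 to 1, so for $a \neq 0$ the PSDE stratum $Z_1(u) = Z_0(u)$ is empty, while the natural direct effect, which holds Z at $Z_0(u)$ for every unit, equals c.

```python
import random
from statistics import fmean


def psde_vs_nde(a, b, c, n=1000, seed=0):
    """Hypothetical linear model Z = a*X + U_Z, Y = c*X + b*Z + U_Y."""
    rng = random.Random(seed)
    units = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]  # (U_Z, U_Y)

    def z(x, u_z):
        return a * x + u_z

    def y(x, z_value, u_y):
        return c * x + b * z_value + u_y

    # PSDE stratum: units whose mediator does not respond to X, Z_1(u) = Z_0(u).
    stratum = [(u_z, u_y) for u_z, u_y in units if z(1, u_z) == z(0, u_z)]
    psde = (fmean(y(1, z(1, u_z), u_y) - y(0, z(0, u_z), u_y) for u_z, u_y in stratum)
            if stratum else None)

    # Natural direct effect: X goes from 0 to 1 while Z is held at Z_0(u), for every unit.
    nde = fmean(y(1, z(0, u_z), u_y) - y(0, z(0, u_z), u_y) for u_z, u_y in units)
    return len(stratum), psde, nde


print(psde_vs_nde(a=0.5, b=2.0, c=1.0))  # empty stratum, PSDE None, NDE close to 1.0
print(psde_vs_nde(a=0.0, b=2.0, c=1.0))  # all 1000 units in stratum, PSDE and NDE close to 1.0
```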

Indeed, taking a “principal strata” perspective, Rubin found the concept of mediation to be “ill-defined.” In his words: “The general theme here is that the concepts of direct and indirect causal effects are generally ill-defined and often more deceptive than helpful to clear statistical thinking in real, as opposed to artificial problems” (Rubin, 2004). Conversely, attempts to define and understand mediation using the notion of “principal-strata direct effect” have concluded that “it is not always clear that knowing about the presence of principal stratification effects will be of particular use” (VanderWeele, 2008). It is now becoming widely recognized that the natural direct and indirect effects formulated in Robins and Greenland (1992) and Pearl (2001) are of greater interest, both for the purposes of making treatment decisions and for the purposes of explanation and identifying causal mechanisms (Joffe, Small, and Hsu, 2007, Albert and Nelson, 2011, Mortensen, Diderichsen, Smith, and Andersen, 2009, Imai, Keele, and Yamamoto, 2010, Robins et al., 2007, 2009, Pearl, 2009a, Petersen, Sinisi, and van der Laan, 2006, Hafeman and Schwartz, 2009, Kaufman, 2010, Sjölander, 2009).

This limitation of PSDE stems not from the notion of “principal strata” per se, which is merely a benign classification of units into homogeneously responding classes. Rather, the limitation stems from adhering to an orthodox philosophy that prohibits one from regarding a mediator as a cause unless it is manipulable. This prohibition prevents us from defining the direct effect as it is commonly used in decision making and scientific discourse, namely, an effect transmitted with all mediating paths “deactivated” (Pearl, 2001, Avin, Shpitser, and Pearl, 2005, Albert and Nelson, 2011), and forces us to use statistical conditionalization (on strata) instead. Path deactivation requires counterfactual constructs in which the mediator acts as an antecedent, written $Y_z$, regardless of whether it is physically manipulable. After all, if we aim to uncover causal mechanisms, why should nature’s pathways depend on whether we have the technology to manipulate one variable or another? The whole philosophy of extending potential-outcome analysis from experimental to observational studies (Rubin, 1974) rests on substituting physical manipulations with reasonable assumptions about how treatment variables are naturally chosen by the so-called “treatment assignment mechanism.” Mediating variables are equally deserving of such substitution.
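Path deactivation can be written with the nested counterfactual $Y_{x, Z_{x'}}$: the natural direct effect is $E[Y_{1, Z_0}] - E[Y_{0, Z_0}]$, averaged over the whole population. Under no-confounding assumptions (for example, randomized X and no unmeasured confounding between Z and Y), the mediation formula of Pearl (2001, 2011) expresses it through observable quantities, $\text{NDE} = \sum_z [E(Y \mid x_1, z) - E(Y \mid x_0, z)]\, P(z \mid x_0)$. The sketch below evaluates it, together with the corresponding indirect and total effects, for binary X and Z; the probability tables are hypothetical inputs, not estimates from any study.

```python
from fractions import Fraction as F

# Hypothetical inputs for binary X and Z (not estimates from any study).
P_Z1 = {0: F(1, 5), 1: F(3, 5)}                 # P(Z = 1 | X = x)
E_Y = {(0, 0): F(1, 10), (0, 1): F(3, 10),      # E(Y | X = x, Z = z)
       (1, 0): F(2, 10), (1, 1): F(6, 10)}


def p_z(z, x):
    """P(Z = z | X = x)."""
    return P_Z1[x] if z == 1 else 1 - P_Z1[x]


def natural_direct_effect(x0=0, x1=1):
    """Mediation formula: sum_z [E(Y | x1, z) - E(Y | x0, z)] P(z | x0)."""
    return sum((E_Y[x1, z] - E_Y[x0, z]) * p_z(z, x0) for z in (0, 1))


def natural_indirect_effect(x0=0, x1=1):
    """sum_z E(Y | x0, z) [P(z | x1) - P(z | x0)]."""
    return sum(E_Y[x0, z] * (p_z(z, x1) - p_z(z, x0)) for z in (0, 1))


def total_effect(x0=0, x1=1):
    """E(Y | x1) - E(Y | x0), each obtained by averaging over P(z | x)."""
    return sum(E_Y[x1, z] * p_z(z, x1) - E_Y[x0, z] * p_z(z, x0) for z in (0, 1))


print(natural_direct_effect(), natural_indirect_effect(), total_effect())  # 7/50 2/25 3/10
```

Every unit contributes to the direct effect here; no stratum is discarded. Note also that the three quantities need not add up: with these inputs NDE + NIE = 11/50 while the total effect is 3/10, because the effect of X on Y depends on Z.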

4.2. Principal surrogacy

A second area where a PS-restricted definition falls short of expectations is that of “surrogate endpoints” (Frangakis and Rubin, 2002). At its core, the problem concerns a randomized trial where one seeks an “endpoint surrogate,” namely, a variable Z that would allow good predictability of outcome for both treatment and control (Ellenberg and Hamilton, 1989). More precisely, “knowing the effect of treatment on the surrogate allows prediction of the effect of treatment on the more clinically relevant outcome” (Joffe and Green, 2009).

To meet this requirement, Frangakis and Rubin offered a definition called “principal surrogacy,” which requires that causal effects of X on Y may exist if and only if causal effects of X on Z exist (see Joffe and Green, 2009). Again, their definition rests solely on what transpires in the study, where data are available on both the surrogate (Z) and the endpoint (Y), and does not require good predictions under the new conditions created when data on the surrogate alone are available.[2]

Pearl and Bareinboim (2011) present examples where a post-treatment variable Z passes the “principal surrogacy” test and yet is useless as a predictor under the new conditions. Conversely, Z may be a perfect surrogate (i.e., a robust predictor of effects) and fail the “principal surrogacy” test. In short, a fundamental disconnect exists between the notion of “surrogate endpoint,” which requires robustness against future interventions affecting Z, and the class of definitions that the principal strata framework can articulate, given its resistance to conceptualizing such interventions.[3]

5. Conclusions

The term “principal strata (PS)” is currently used to connote four different interpretations:

1. PS as a partition of units by response type;
2. PS as an approximation to research questions concerning population averages (e.g., bounds and LATE analysis under noncompliance);
3. PS as a genuine focus of a research question (e.g., censorship by death);
4. PS as an intellectual restriction that confines its analysis to the assessment of strata-specific effects (see Addendum).

My purpose in writing this note is to invite investigators using the PS framework to clarify, to their readers as well as to themselves, what role they envision this framework playing in their analysis, and how it captures the problem they truly care about.[4]

I have no reservations regarding interpretations 1–3, though a clear distinction among the three would be a healthy addition to the principal stratification literature. I have strong reservations, however, regarding the fourth: frameworks should serve research questions, not alter them.

The popularization of Frangakis and Rubin’s “Principal Strata Framework” has had a net positive effect on causal research: it attracted researchers to the language of counterfactuals and familiarized them with its derivational power. At the same time, it has created the impression that conditioning on strata somehow bestows legitimacy on one’s results and thus exonerates one from specifying research questions and from carefully examining whether conditioning on strata properly answers those questions. It has further encouraged researchers to abandon policy-relevant questions in favor of a framework that prevents those questions from being asked, let alone answered.

I hope that, by bringing these observations up for discussion, greater clarity will emerge as to the goals, tools, and soundness of causal inference methodologies.

Addendum – Questions, Answers, Discussions

Question to Author

You state that you are concerned that individuals might be using principal stratification ‘as an intellectual restriction that confines its analysis to the assessment of strata-specific effects.’ Can you provide any examples in the literature where you felt that researchers might be using principal stratification in this manner?

Author’s Reply

In retrospect, I feel that way about ALL PS papers that I have read, with the exception of ONE: Sjölander et al. (2010) on hormone replacement therapy, which explicitly justifies why one would be interested in stratum-specific effects.

To substantiate the basis of my perception, I cite the lead articles by Rubin (2004, 2005) where the PSDE is motivated and introduced. What, if not an “intellectual restriction,” could spawn a definition of “Direct Effect” that excludes from the analysis all units for whom X has both a direct and an indirect effect on Y?

According to Rubin (2005), the problem was originally posed by Fisher (1935) and Cochran (1957) in the context of agricultural experiments. Forgiving their mistaken solutions for a moment (they had no graphs for guidance), we find that both Fisher and Cochran were very clear about what their research questions were: To estimate the effect of X on Y after allowance is made for the variations in Y DIRECTLY DUE TO variations in Z itself.

The phrase “due to variations in Z” clearly identifies Z as a secondary CAUSE of Y, for which allowance needed to be made.

What, if not an “intellectual restriction,” could compel us to change the research question from its original intent and replace it with another, in which Z is NOT treated as a secondary cause of Y, but only as a variable affected by X? This is, in essence, a restriction against writing down the counterfactual $Y_{xz}$.

Frangakis and Rubin (2002) state explicitly that they refrain from using “a priori counterfactuals,” namely $Y_z$. In their words: “This [Robins and Greenland’s] framework with its a priori counterfactual estimands, is not compatible with the studies we consider, which do not directly control the post-treatment variable (p. 23).” This resolution to avoid counterfactuals that are not directly controlled in the study is a harsh and unjustifiable “intellectual restriction,” especially when the problem statement calls for an estimand involving $Y_{xz}$, and especially when refraining from considering $Y_{xz}$ leads to unintended conclusions (e.g., that the direct effect of a grandfather’s income on a child’s education can only be defined in those families where that income did not affect the father’s education).

One should note that in the agricultural experiments considered by Fisher and Cochran, the post-treatment variables (e.g., plant density or eelworms) were not controlled either, yet this did not prevent Fisher and Cochran from asking a reasonable, policy-relevant question: To what extent do these post-treatment variables affect the outcome?

But your question highlights an important observation: most PS-authors do not view PS as a restriction. True; they actually view it as a liberating intellectual license: a license to assess quantities with a halo of legitimacy, without telling us why these quantities are the ones that the author cares about, or how relevant they are for policy questions or scientific understanding.

This is the power of the word “framework”; working within a “framework” assures an investigator that someone would surely find a reason to appreciate the quantity estimated, as long as it fits the mold of the “framework.”

But how do we alert researchers to the possibility that they might be solving the wrong problem? One way is to present them with weird conclusions that emerge from their method, and ask them: “Do you really stand behind such conclusions?” This is what I tried to do with “PS direct effects,” and “principal surrogacy.” I hope the examples illuminate what the PS framework computes.

Footnotes

Author Notes: This research was supported in part by NIH #1R01 LM009961-01, NSF #IIS-0914211 and #IIS-1018922, and ONR #N000-14-09-1-0665 and #N00014-10-1-0933.

[1] For example, those who comply in the study may or may not comply under field conditions, where incentives to receive treatment are different.

[2] Note that if conditions remain unaltered, the surrogacy problem is solved trivially by correlation. Therefore, no formal definition of surrogacy is complete without making a change in conditions an integral part of the definition.

[3] The resistance, as in the case of mediation, arises from the prohibition on writing expressions containing the term $Y_z$, in which Z acts as a counterfactual antecedent.

[4] I purposely refrain from discussing the issue of identification, namely, the assumptions needed for estimating principal strata effects in observational studies. Such issues tend to conflate the more fundamental problems of definition and interpretation, which should take priority in methodological discussions. Joffe and Green (2009) compare identification conditions for both principal surrogacy and mediation-based surrogacy.

References

- Albert JM, Nelson S. “Generalized causal mediation analysis.” Biometrics. 2011.

- Angrist J, Imbens G, Rubin D. “Identification of causal effects using instrumental variables (with comments).” Journal of the American Statistical Association. 1996;91:444–472. doi: 10.2307/2291629.

- Avin C, Shpitser I, Pearl J. “Identifiability of path-specific effects.” In: Proceedings of the Nineteenth International Joint Conference on Artificial Intelligence (IJCAI-05). Edinburgh, UK: Morgan Kaufmann; 2005. pp. 357–363.

- Baker SG, Lindeman KS. “The paired availability design: A proposal for evaluating epidural analgesia during labor.” Statistics in Medicine. 1994;13:2269–2278. doi: 10.1002/sim.4780132108.

- Balke A, Pearl J. “Probabilistic evaluation of counterfactual queries.” In: Proceedings of the Twelfth National Conference on Artificial Intelligence. Vol. I. Menlo Park, CA: MIT Press; 1994a. pp. 230–237.

- Balke A, Pearl J. “Counterfactual probabilities: Computational methods, bounds, and applications.” In: de Mantaras RL, Poole D, editors. Uncertainty in Artificial Intelligence 10. San Mateo, CA: Morgan Kaufmann; 1994b. pp. 46–54.

- Balke A, Pearl J. “Bounds on treatment effects from studies with imperfect compliance.” Journal of the American Statistical Association. 1997;92:1172–1176. doi: 10.2307/2965583.

- Chickering D, Pearl J. “A clinician’s tool for analyzing noncompliance.” Computing Science and Statistics. 1997;29:424–431.

- Cochran WG. “Analysis of covariance: Its nature and uses.” Biometrics. 1957;13:261–281. doi: 10.2307/2527916.

- Ellenberg S, Hamilton J. “Surrogate endpoints in clinical trials: Cancer.” Statistics in Medicine. 1989;8:405–413. doi: 10.1002/sim.4780080404.

- Fisher R. The Design of Experiments. Edinburgh: Oliver and Boyd; 1935.

- Frangakis C, Rubin D. “Principal stratification in causal inference.” Biometrics. 2002;58:21–29. doi: 10.1111/j.0006-341X.2002.00021.x.

- Greenland S, Robins J. “Identifiability, exchangeability, and epidemiological confounding.” International Journal of Epidemiology. 1986;15:413–419. doi: 10.1093/ije/15.3.413.

- Greenland S, Robins J. “Conceptual problems in the definition and interpretation of attributable fractions.” American Journal of Epidemiology. 1988;128:1185–1197. doi: 10.1093/oxfordjournals.aje.a115073.

- Hafeman D, Schwartz S. “Opening the black box: A motivation for the assessment of mediation.” International Journal of Epidemiology. 2009;38:838–845. doi: 10.1093/ije/dyn372.

- Heckerman D, Shachter R. “Decision-theoretic foundations for causal reasoning.” Journal of Artificial Intelligence Research. 1995;3:405–430.

- Heckman J. “The scientific model of causality.” Sociological Methodology. 2005;35:1–97. doi: 10.1111/j.0081-1750.2006.00163.x.

- Heckman J, Vytlacil E. “Policy-relevant treatment effects.” The American Economic Review. 2001;91:107–111. doi: 10.1257/aer.91.2.107. Papers and Proceedings of the Hundred Thirteenth Annual Meeting of the American Economic Association.

- Imai K, Keele L, Yamamoto T. “Identification, inference, and sensitivity analysis for causal mediation effects.” Statistical Science. 2010;25:51–71. doi: 10.1214/10-STS321.

- Imbens G, Angrist J. “Identification and estimation of local average treatment effects.” Econometrica. 1994;62:467–475. doi: 10.2307/2951620.

- Imbens G, Rubin D. “Bayesian inference for causal effects in randomized experiments with noncompliance.” Annals of Statistics. 1997;25:305–327. doi: 10.1214/aos/1034276631.

- Joffe M, Green T. “Related causal frameworks for surrogate outcomes.” Biometrics. 2009;65:530–538. doi: 10.1111/j.1541-0420.2008.01106.x.

- Joffe M, Small D, Hsu C-Y. “Defining and estimating intervention effects for groups that will develop an auxiliary outcome.” Statistical Science. 2007;22:74–97. doi: 10.1214/088342306000000655.

- Kaufman J. “Invited commentary: Decomposing with a lot of supposing.” American Journal of Epidemiology. 2010;172:1349–1351. doi: 10.1093/aje/kwq329.

- Lauritzen S. “Discussion on causality.” Scandinavian Journal of Statistics. 2004;31:189–192. doi: 10.1111/j.1467-9469.2004.03-200A.x.

- Mortensen L, Diderichsen F, Smith G, Andersen A. “The social gradient in birthweight at term: Quantification of the mediating role of maternal smoking and body mass index.” Human Reproduction. 2009;24:2629–2635. doi: 10.1093/humrep/dep211.

- Neyman J. “On the application of probability theory to agricultural experiments. Essay on principles. Section 9.” 1923. English translation in Statistical Science. 1990;5:465–480.

- Pearl J. “Aspects of graphical models connected with causality.” In: Proceedings of the 49th Session of the International Statistical Institute. Tome LV, Book 1, Florence, Italy; 1993. pp. 391–401.

- Pearl J. Causality: Models, Reasoning, and Inference. New York: Cambridge University Press; 2000. 2nd edition, 2009.

- Pearl J. “Direct and indirect effects.” In: Proceedings of the Seventeenth Conference on Uncertainty in Artificial Intelligence. San Francisco, CA: Morgan Kaufmann; 2001. pp. 411–420.

- Pearl J. Causality: Models, Reasoning, and Inference. 2nd edition. New York: Cambridge University Press; 2009a.

- Pearl J. “Causal inference in statistics: An overview.” Statistics Surveys. 2009b;3:96–146. doi: 10.1214/09-SS057. <http://ftp.cs.ucla.edu/pub/statser/r350.pdf>.

- Pearl J. “The mediation formula: A guide to the assessment of causal pathways in nonlinear models.” Technical Report R-363, Department of Computer Science, University of California, Los Angeles; 2011. <http://ftp.cs.ucla.edu/pub/statser/r363.pdf>. To appear in C. Berzuini, P. Dawid, and L. Bernardinelli (Eds.), Statistical Causality. Forthcoming, 2011.

- Pearl J, Bareinboim E. “Transportability across studies: A formal approach.” Technical Report R-372, Department of Computer Science, University of California, Los Angeles, CA; 2011. <http://ftp.cs.ucla.edu/pub/statser/r372.pdf>.

- Petersen M, Sinisi S, van der Laan M. “Estimation of direct causal effects.” Epidemiology. 2006;17:276–284. doi: 10.1097/01.ede.0000208475.99429.2d.

- Robins J. “A new approach to causal inference in mortality studies with a sustained exposure period – applications to control of the healthy workers survivor effect.” Mathematical Modelling. 1986;7:1393–1512. doi: 10.1016/0270-0255(86)90088-6.

- Robins J, Greenland S. “Estimability and estimation of excess and etiologic fractions.” Statistics in Medicine. 1989a;8:845–859. doi: 10.1002/sim.4780080709.

- Robins J, Greenland S. “The probability of causation under a stochastic model for individual risk.” Biometrics. 1989b;45:1125–1138. doi: 10.2307/2531765.

- Robins J, Greenland S. “Identifiability and exchangeability for direct and indirect effects.” Epidemiology. 1992;3:143–155. doi: 10.1097/00001648-199203000-00013.

- Robins J, Richardson T, Spirtes P. “On identification and inference for direct effects.” Technical report, Harvard University, MA; 2009.

- Robins J, Rotnitzky A, Vansteelandt S. “Discussion of principal stratification designs to estimate input data missing due to death.” Biometrics. 2007;63:650–654. doi: 10.1111/j.1541-0420.2007.00847_2.x.

- Rubin D. “Estimating causal effects of treatments in randomized and nonrandomized studies.” Journal of Educational Psychology. 1974;66:688–701. doi: 10.1037/h0037350.

- Rubin D. “Direct and indirect causal effects via potential outcomes.” Scandinavian Journal of Statistics. 2004;31:161–170. doi: 10.1111/j.1467-9469.2004.02-123.x.

- Rubin D. “Causal inference using potential outcomes: Design, modeling, decisions.” Journal of the American Statistical Association. 2005;100:322–331. doi: 10.1198/016214504000001880.

- Sjölander A. “Bounds on natural direct effects in the presence of confounded intermediate variables.” Statistics in Medicine. 2009;28:558–571. doi: 10.1002/sim.3493.

- Sjölander A, Humphreys K, Vansteelandt S. “A principal stratification approach to assess the differences in prognosis between cancers caused by hormone replacement therapy and by other factors.” The International Journal of Biostatistics. 2010;6: article 20. doi: 10.2202/1557-4679.1225.

- VanderWeele T. “Simple relations between principal stratification and direct and indirect effects.” Statistics and Probability Letters. 2008;78:2957–2962. doi: 10.1016/j.spl.2008.05.029.