Abstract

Human and nonhuman primates rely almost exclusively on vision for social communication. Therefore, tracking eye movements and examining visual scan paths can provide a wealth of information about many aspects of primate social information processing. While eye-tracking techniques have been used with humans for some time, similar studies in nonhuman primates have been less frequent over recent decades. This has largely been due to the need for invasive manipulations, such as the surgical implantation of devices to limit head movement, which may not be possible in some laboratories or at some universities, or may not be compatible with some experimental aims (e.g., longitudinal studies). It is important for all nonhuman primate researchers interested in visual information processing or operant behavior to realize that such invasive procedures are no longer necessary. Here we briefly describe new methods for fully noninvasive video eye-tracking with adult rhesus monkeys (Macaca mulatta). We also describe training protocols that require only ~30 days to complete and quality control measures that promote reliable data collection. It is our hope that this brief overview will reacquaint nonhuman primate researchers with the benefits of eye-tracking and promote expanded use of this powerful methodology.

Introduction

For diurnal primates, including humans, vision is the dominant sensory modality. As reviewed by Kirk and Kay [2004], the visual acuity of monkeys, apes and humans (i.e., their ability to distinguish between closely spaced objects) is surpassed only by that of large, diurnal birds of prey. In addition, forward-facing eyes with overlapping visual fields and trichromatic photoreceptors provide excellent binocular color vision [Kirk and Kay 2004]. The combination of these attributes enables quick and accurate detection of salient features (e.g., optimal food sources, conspecifics and potential predators) within a complex three-dimensional environment [Kirk and Kay 2004]. It is therefore not surprising that primate species living in large social groups have developed a wide array of visual signals (including facial expressions and body postures) and perceptual abilities that facilitate social communication [Tomasello and Call 1997a]. However, the factors that influence how primates attend to and process the content of these visual social signals remain largely unknown.

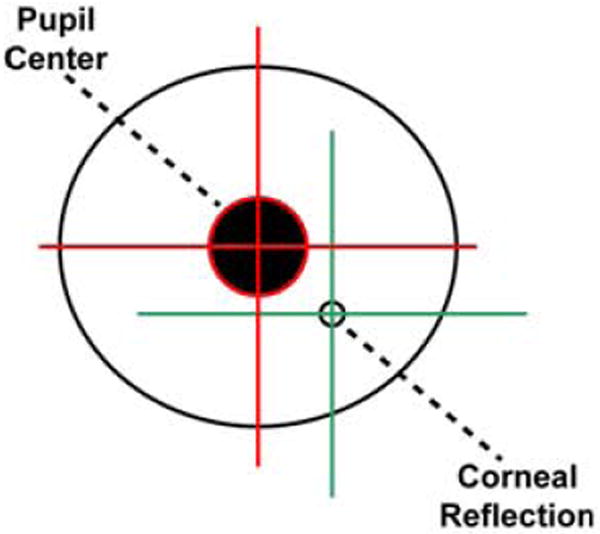

During the past two decades, noninvasive infrared video eye-tracking systems have provided researchers with a powerful tool to objectively and precisely measure multiple aspects of visual attention in humans. After being calibrated for a particular individual, these systems track two critical features of the eye: the pupil center and the corneal reflection [specifically the first Purkinje image; Duchowski 2007; see Figure 1]. The positional difference between these two points is used to identify and measure the viewer’s Point of Gaze (POG) on a computer screen in real time as a sequence of two-dimensional (x, y) coordinates. Depending on the specific system and manufacturer, these coordinates can be sampled at rates from 60 Hz to 1 kHz or higher. POG data can be analyzed offline to characterize visual search patterns, to identify areas of a stimulus that capture the majority of the viewer’s attention, to calculate the probability of shifting gaze from one location to another, and so on. POG can also be used within a testing paradigm as a way for the subject to make an operant choice between two or more stimuli (e.g., in a gambling or visual memory task).
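As a rough illustration of the last kind of offline analysis, the sketch below estimates gaze-transition probabilities from an ordered list of fixated areas of interest. It is a minimal Python sketch under stated assumptions, not the method of any particular eye-tracking package: the function name `transition_probabilities` is hypothetical, and collapsing consecutive fixations within the same area before counting shifts is an assumption made so that only movements between different locations are counted.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def transition_probabilities(aoi_sequence: List[str]) -> Dict[str, Dict[str, float]]:
    """Estimate P(next AOI | current AOI) from an ordered list of fixated AOIs.

    Hypothetical helper for illustration only.
    """
    # Assumption: collapse repeated labels, e.g. ["Eyes", "Eyes", "Mouth"] -> ["Eyes", "Mouth"],
    # so that only shifts of gaze between different locations are counted.
    collapsed = [label for i, label in enumerate(aoi_sequence)
                 if i == 0 or label != aoi_sequence[i - 1]]
    counts: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(collapsed, collapsed[1:]):
        counts[current][nxt] += 1
    # Normalize each row so the outgoing probabilities from one AOI sum to 1.
    return {current: {nxt: n / sum(row.values()) for nxt, n in row.items()}
            for current, row in counts.items()}


sequence = ["Eyes", "Eyes", "Nose", "Eyes", "Mouth", "Eyes", "Nose"]
print(transition_probabilities(sequence))
```

In this example the gaze leaves the eyes three times, twice toward the nose and once toward the mouth, so the estimated probabilities from "Eyes" are 2/3 and 1/3.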

Figure 1.

A diagram of the primate eye showing the two features that modern infrared eye-tracking systems use to identify and measure the viewer’s Point of Gaze (POG) on a computer screen in real time.

The use of eye-tracking technology has provided a wealth of information regarding how humans process visual social signals, such as facial expressions of emotion. For example, typically developing human adults fixate more on the eyes than on any other facial feature, followed by the mouth and nose, respectively [Ekman and Friesen 1975; Walker-Smith et al. 1977]. The direction of gaze and the shape of the eye region are both critical for identifying the target of a particular facial expression, as well as for decoding the specific emotion being conveyed [especially fear; Adolphs et al. 2005]. Eye-tracking technology can also be used to measure pupil dilation during visual information processing, which can indicate autonomic nervous system activation related to the emotional salience of stimuli and/or the cognitive processing load [Silk et al. 2007; Steinhauer et al. 2004]. Despite these consistent findings, the basic pattern of visual attention during face perception can be influenced by many factors, including age [Haith et al. 1977], genotype [Beevers et al. 2010], individual differences in personality [Perlman et al. 2009] and psychiatric illnesses such as autism [Pelphrey et al. 2002] or generalized anxiety disorder [Bradley et al. 2000].

Given these and perhaps other factors that influence eye-gaze patterns during social perception, research with appropriate animal models may be a useful complement to human eye-tracking studies. For example, visual attention in animals can be assessed in highly homogeneous subject pools across multiple experimental paradigms or developmental stages. Animal models also permit the direct manipulation of brain systems responsible for visual social attention via pharmacological inactivation or lesions. Nonhuman primates, especially rhesus monkeys (Macaca mulatta) and chimpanzees (Pan troglodytes), are excellent animal models for human abilities, as they exhibit neural, social and cognitive sophistication comparable to that of humans [Capitanio and Emborg 2008; Semendeferi et al. 2002; Tomasello and Call 1997b], as well as similar genetic polymorphisms relevant to psychiatric illnesses [serotonin transporter: Claw et al. 2010; Lesch et al. 1997; monoamine oxidase A: Newman et al. 2005; Wendland et al. 2006]. Previous studies have shown that when macaques and chimpanzees view social stimuli, such as faces, their visual scan paths closely resemble those of humans. Of all facial features, the eyes draw the most visual attention [Gothard et al. 2004; Hirata et al. 2010; Kano and Tomonaga 2009; Kano and Tomonaga 2010; Keating and Keating 1982; Keating and Keating 1993; Nahm and Amaral 1997], although other facial features, such as the nose, mouth and ears, are also fixated to a lesser extent [Wilson and Goldman-Rakic 1994]. Macaques, like humans, also appear to process faces holistically rather than as collections of independent facial features [Dahl et al. 2010; Dahl et al. 2007; Dahl et al. 2009]. Watson and colleagues [2009] also recently showed that rhesus monkeys carrying the short allele of the serotonin transporter genetic polymorphism spend less time looking at faces, and at the eye region in particular, than monkeys carrying two copies of the long allele.
This finding suggests that factors related to temperament and emotion may affect visual responses to social stimuli similarly in both human and nonhuman primates.

However, one drawback of many previous eye-tracking studies with nonhuman primates has been the need for surgical implantation of scleral search coils to measure POG and/or stainless steel or titanium head-posts to eliminate movement artifacts. These invasive and expensive procedures greatly limit the number of animals that a given investigator can afford to study and also limit the number of institutions that can support such work. Only the chimpanzee eye-tracking studies [Hattori et al. 2010; Hirata et al. 2010; Kano and Tomonaga 2009; Kano and Tomonaga 2010] avoided invasive procedures to position the head or restrict its movement. In these studies, however, the experimenter manually positioned and held the animal’s head in an appropriate location throughout the entire testing session. It is therefore unclear how the presence of the experimenter influenced the results of those studies.

To mitigate some of the complications inherent to nonhuman primate eye-tracking and make this technique more widely accessible, we have developed several new strategies for conducting fully noninvasive eye-tracking studies with adult rhesus monkeys. In particular, we describe here a new technique for noninvasive head restraint using thermoplastic frames of the kind typically used for human neuroimaging. The thermoplastic within each frame can be molded to fit snugly around the animal’s head, forming an individualized helmet. These helmets are then attached to the animal’s testing chair to restrain head movement within reasonable tolerances. We also describe methods for habituating animals to these thermoplastic helmets, techniques for calibrating the eye-tracker software prior to each testing session, and strategies for ensuring that the eye-tracker calibration does not “drift” during a prolonged testing session.

Fitting the animals with thermoplastic helmets

We tested our noninvasive eye-tracking methods with adult male rhesus monkeys (N = 5). Each was born and maternally reared at the California National Primate Research Center (CNPRC) in one of 24 half-acre outdoor enclosures, each containing approximately seventy animals of all age/sex classes. For the current testing, the animals were housed indoors in standard adult macaque laboratory cages (66 cm width × 61 cm length × 81 cm height) and were permitted a minimum of 6 hours of socialization with a neighboring animal on weekdays. The housing room was maintained on a twelve-hour light/dark cycle. All animals were maintained on a diet of fresh fruit, vegetables and monkey chow (Lab Diet #5047, PMI Nutrition International Inc., Brentwood, MO), with water available ad libitum. When we began working with these animals, they ranged in age from 5.8 to 8.7 years and weighed 10 – 14 kg.

All testing occurred while the animals sat in a modified primate chair with a slanted top (Crist Instrument Co., Inc., Damascus, MD; 35 cm wide × 33.5 cm long × 57 – 69.8 cm tall) which was mounted on a rolling base (54.7 cm wide × 54.7 cm long × 15.5 cm tall). This chair consisted of an aluminum frame and adjustable floor grate, enclosed by 1.2 cm thick polycarbonate on the sides and top. A vertical guillotine door on the back of the chair allowed the animals to enter from a modified holding cage without the need for standard pole-and-collar handling.

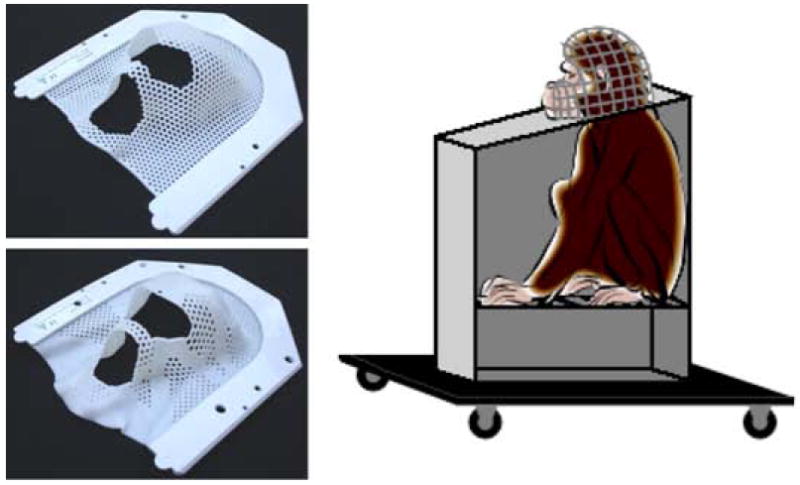

Eye-tracking experiments have, in the past, typically required surgical implantation of a stainless steel or titanium head-post onto the animal’s skull so that all head movement is restricted [see, for example, Gothard et al. 2004; Nahm and Amaral 1997; Watson et al. 2009]. However, other less invasive methods have been developed, such as the technique described by Fairhall and colleagues [2006]. To avoid invasive surgical procedures entirely, we created individualized thermoplastic helmets that could be affixed to the primate chair. The animals were transported individually to a testing room, transferred into the primate chair and lightly sedated with ketamine hydrochloride (5 – 10 mg/kg, IM). Helmets were made using commercially available thermoplastic mesh sheets (Civco Medical Solutions, Kalona, IA; 24.8 cm wide × 28 cm long × 3.2 mm thick). For younger animals (5 – 6 years old), full mesh sheets (model MT-APU) provided adequate rigidity and durability. For older animals (7+ years old), we recommend stronger sheets that combine thicker mesh with strips of solid thermoplastic (model MT-APUI-22-32) to fully immobilize the head. These thermoplastic sheets, regardless of mesh/solid composition, were bordered on three sides by a U-shaped hard plastic frame. The thermoplastic material was made pliable by soaking the entire frame in an 80 °C water bath for 1 minute. The thermoplastic was initially stretched with the experimenter’s fist and then pressed down over the sedated animal’s head, and the frame was quickly attached to the chair top using four wing bolts. As the thermoplastic sheet cooled, it was carefully molded around the animal’s head, paying particular attention to creating a relatively snug fit around the brow ridge, the back of the head, the temples, the nose and the sides of the snout. The thermoplastic cooled and became rigid in ~60 seconds; in some cases, cooling was accelerated using a cool air gun or fan.
Once fully formed and cooled, the helmet was removed and holes were cut out around the eye and snout regions (Figure 2). Once the animals recovered from sedation, they were returned to their home cages. If a helmet became damaged during the training or testing sessions, it was removed and easily repaired by reheating the thermoplastic (under hot tap water or with a hot air gun) and applying a scrap piece of thermoplastic to the damaged area. The fit of each thermoplastic helmet could also be adjusted after the initial mask-making session without additional sedation. For example, if the pitch of the animal’s head when sedated differed from its natural orientation while awake, the helmet could be removed and modified, again using hot water or hot air directed at the appropriate site.

Figure 2.

Photographs showing molded thermoplastic masks made from full mesh sheets (top left) or sheets that included a thicker mesh and strips of solid thermoplastic (bottom left). The right side shows a diagram of a monkey sitting in the testing chair while wearing a thermoplastic helmet.

After the individualized thermoplastic helmets were created, the experimenter progressively habituated each animal to sitting in the primate chair with its helmet on for successively longer periods, up to 90 minutes. Positive reinforcement was provided in the form of small pieces of fruit delivered by hand into the animal’s mouth or fruit juice administered through a mouthpiece attached to the top left of the chair (Crist Instrument Co., Inc.; model # 5-RLD-00A). An animal was considered habituated to wearing its thermoplastic helmet once it 1) did not display any fearful or aggressive facial expressions (i.e., grimaces or open-mouth threats) or vocalizations as the helmet was applied, and 2) did not struggle against the helmet while it was on. These criteria needed to be met on two consecutive days before the animal could advance to the next stage. The experimenter recorded each animal’s behavioral responses during this and all other habituation phases described below on a daily basis to document progress toward habituation goals. We elected not to collect more objective measures of stress (e.g., cortisol levels from blood samples), since those procedures could themselves have introduced an additional physical stressor and substantially slowed this phase of habituation. In total, this phase of helmet acclimation lasted only 3 – 8 days for these animals.
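The two-consecutive-day advancement rule can be expressed as a small check over the experimenter’s daily notes. This is a hypothetical sketch: the `SessionNote` record and the `day_habituated` helper are illustrative names, not part of any software used in this study, and the underlying observations are still made by the experimenter as described above.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class SessionNote:
    """One day's experimenter observations (hypothetical record format)."""
    fearful_or_aggressive: bool  # grimace, open-mouth threat or vocalization as helmet applied
    struggled: bool              # struggled against the helmet while it was on


def day_habituated(notes: Sequence[SessionNote], required_days: int = 2) -> Optional[int]:
    """Return the 1-based day on which both criteria had been met on
    `required_days` consecutive days, or None if that never happened."""
    streak = 0
    for day, note in enumerate(notes, start=1):
        passed = not note.fearful_or_aggressive and not note.struggled
        streak = streak + 1 if passed else 0  # any failure resets the streak
        if streak >= required_days:
            return day
    return None


log = [SessionNote(True, True), SessionNote(False, True), SessionNote(False, False),
       SessionNote(True, False), SessionNote(False, False), SessionNote(False, False)]
print(day_habituated(log))  # criterion first met on day 6
```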

Habituating the animals to the eye-tracking context

Once the animals were acclimated to the helmets, the testing chair was rolled into a sound-attenuating testing chamber (Acoustic Systems, Austin, TX; 2.1 m wide × 2.4 m tall × 1.1 m deep) so the animals could be habituated to the video eye-tracker (Applied Science Laboratories, Bedford, MA; model R-HS-S6). The chamber was equipped with a ventilation fan and overhead lighting, which was turned off during testing. A wide-screen color video monitor (60.96 cm diagonal; Gateway Inc., Irvine, CA; model LP2424) was positioned at the monkey’s eye level (vertical center 93 cm from the floor), 127 cm from the animal’s eyes. The eye-tracking camera was mounted on a tripod 53.3 cm from the animal’s eyes and 63.5 – 71.1 cm above the floor (the optimal height differed for each animal). The animal’s mouthpiece was connected to an automatic juice dispenser (Crist Instrument Co., Inc.; model # 5-RLD-E3) so that rewards could be dispensed throughout the testing session. A white noise generator (60 dB) was used to mask outside auditory distractions.
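Stimulus sizes in this report are given both in centimeters on screen and in degrees of visual angle. A minimal sketch of the standard conversion, θ = 2·arctan(s / 2d), assuming a flat screen, a stimulus centered on the line of sight and the 127 cm viewing distance given above; the function names are illustrative.

```python
import math

VIEWING_DISTANCE_CM = 127.0  # eye-to-monitor distance reported in the text


def visual_angle_deg(size_cm: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Visual angle (degrees) subtended by a stimulus of extent size_cm
    centered on the line of sight at distance_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Inverse conversion: on-screen extent (cm) that subtends angle_deg."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


print(f"{visual_angle_deg(8.89):.2f} deg")  # width of an 8.89 cm calibration video
print(f"{size_for_angle_cm(3.4):.2f} cm")   # width of a 3.4 deg square target
```

Under these assumptions, an 8.89 cm wide calibration video subtends about 4.0°, in line with the 4° quoted for the calibration stimuli, and a 3.4° square target is roughly 7.5 cm wide. Stimuli away from screen center subtend slightly smaller angles, so these values are approximations.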

Visual stimuli were presented to the monkey using a PC running the E-Prime 2.0 Professional software package (Psychology Software Tools, Pittsburgh, PA). All eye-gaze data were collected using the Eye-Trac 6 .NET User Interface program (Applied Science Laboratories) on a separate PC. Infrared luminance level, pupil threshold and corneal reflection threshold were set individually for each animal at the start of each session. The sampling rate of the infrared eye-tracking camera was set to 120 Hz. A standard nine-point calibration (3 × 3 matrix of calibration stimuli) was conducted with each animal prior to testing to ensure accurate POG data collection. Calibration stimuli were small videos (8.89 × 5.72 cm on screen, 4° visual angle) of rhesus monkeys from the outdoor housing enclosures at the CNPRC. Six different calibration sequences were created and rotated pseudorandomly across test days to help maintain attention. The animals readily attended to these calibration stimuli, and all subjects showed reliable calibration (i.e., the animal’s POG cross-hair followed the action in all calibration videos) within 1 – 2 days of starting eye-tracker training.
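The eye-tracking software performs this calibration internally, and its mapping is not described here. For readers unfamiliar with the idea, the sketch below shows one common approach, assumed rather than taken from Applied Science Laboratories: fit a second-order polynomial from the pupil-minus-corneal-reflection vector to screen coordinates by least squares over the nine calibration points. All names are hypothetical, and the example uses only the Python standard library.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _terms(vx: float, vy: float) -> List[float]:
    # Assumed model: second-order polynomial in the pupil-minus-CR vector (vx, vy).
    return [1.0, vx, vy, vx * vy, vx * vx, vy * vy]


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix (copy)
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_calibration(vectors: Sequence[Point], targets: Sequence[Point]) -> List[List[float]]:
    """Least-squares fit (normal equations) of screen x and y on the polynomial terms."""
    rows = [_terms(vx, vy) for vx, vy in vectors]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    coeffs = []
    for axis in (0, 1):  # fit screen x, then screen y
        atb = [sum(r[i] * t[axis] for r, t in zip(rows, targets)) for i in range(k)]
        coeffs.append(_solve(ata, atb))
    return coeffs


def apply_calibration(coeffs: List[List[float]], vx: float, vy: float) -> Point:
    """Map one pupil-minus-CR vector to an estimated screen position (POG)."""
    t = _terms(vx, vy)
    return (sum(c * v for c, v in zip(coeffs[0], t)),
            sum(c * v for c, v in zip(coeffs[1], t)))
```

With nine calibration points and six coefficients per axis the fit is overdetermined, so the residuals at the calibration points themselves offer a quick check of calibration quality.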

Each animal was then trained to fixate color animated GIF images placed at random locations on a 50% gray background. Each animated image was shown for 15 – 30 seconds, and juice rewards were given manually by the experimenter whenever the animal’s eye gaze fell within the boundaries of the animated image. Once the animal demonstrated consistent fixation on these animated images for at least 2 consecutive days (total training time for this step ranged from 2 to 4 days across animals), it advanced to the second training phase, in which it viewed either photographs (color or black and white; 5-second duration) or color video clips (taken from commercial movies and nature documentary DVDs; 30-second duration) on a 50% gray background. Each photograph or movie was separated from the next by four 50% gray screens: 1) blank, 10-second duration; 2) black square target (3.4° visual angle) at center; 3) the same black square target positioned randomly at one of eight points around the screen periphery; and 4) blank, 10-second duration. The animal was required to fixate each black square target for at least 250 ms to obtain a juice reward and move on to the next picture or movie trial. The targets were intended to keep the animal’s attention on the video screen and also allowed the experimenter to detect any appreciable “drift” in eye-tracker calibration during a prolonged testing session. If significant drift occurred (e.g., the animal’s POG no longer reliably fell within the square targets), the testing session was paused and the eye-tracker was recalibrated. The animals completed this final phase of training once they could finish 100 picture trials or 50 movie trials in less than 90 minutes on 3 consecutive days. All animals completed this training phase in 11 – 18 days. Total training time for all animals, including all procedures described up to this point, ranged from 17 to 30 days.
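The target-fixation requirement amounts to a run-length check on the gaze samples. A minimal sketch, assuming POG samples arrive at the 120 Hz rate given above as screen coordinates, with `None` marking frames where the tracker lost the eye; resetting the count on a lost frame is an assumption, and `target_acquired` is a hypothetical helper rather than a function of the stimulus-presentation or eye-tracking software.

```python
import math
from typing import Iterable, Optional, Tuple

SAMPLE_RATE_HZ = 120.0  # camera sampling rate reported in the text
Box = Tuple[float, float, float, float]  # (left, top, right, bottom) in screen coordinates


def target_acquired(gaze: Iterable[Optional[Tuple[float, float]]], target: Box,
                    min_ms: float = 250.0, rate_hz: float = SAMPLE_RATE_HZ) -> bool:
    """True once gaze has stayed inside `target` for at least `min_ms` of
    consecutive samples. `None` marks a frame where the eye was lost (assumption)."""
    needed = math.ceil(min_ms * rate_hz / 1000.0)  # 250 ms at 120 Hz -> 30 samples
    left, top, right, bottom = target
    run = 0
    for sample in gaze:
        inside = (sample is not None
                  and left <= sample[0] <= right and top <= sample[1] <= bottom)
        run = run + 1 if inside else 0  # leaving the target or losing the eye resets the count
        if run >= needed:
            return True
    return False
```

At 120 Hz, the 250 ms training criterion corresponds to 30 consecutive samples, and the 500 ms criterion used later in the face task corresponds to 60.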

Noninvasive head restraint results in predictable face scanning patterns

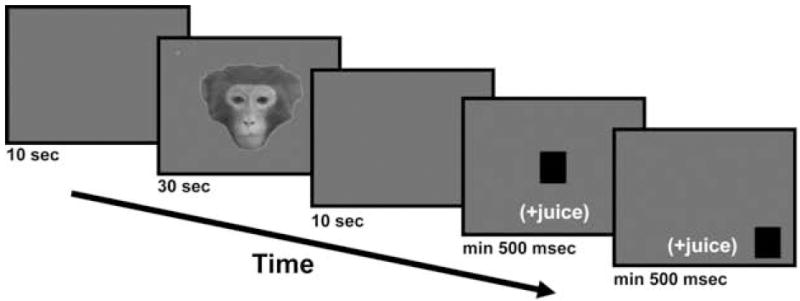

To determine whether the noninvasive thermoplastic head restraints produced reliable and predictable POG data, we conducted a pilot study focused on the perception of faces. Photographs of upright, forward-facing, neutral facial expressions were gathered from six adult male and female rhesus monkeys that were unfamiliar to the animals in this study. The face stimuli were converted to black and white, separated from their original backgrounds using Adobe Photoshop CS software (version 8.0) and placed onto a 50% gray background. The faces spanned 9.1 – 11.1° of visual angle vertically and 10.7 – 11.2° horizontally. The test images (face + background) were all 737 × 983 pixels, displayed in a standard 3:4 aspect ratio. Two circular anchor points (30% gray, 0.23° visual angle, positioned in the top left and bottom right corners) were placed on each image so that POG coordinates could be mapped back onto each image during analysis. Each animal viewed one of these upright face identities per day. The same face was presented during ten consecutive 30-second trials, each separated by the same sequence of blank screens and center/peripheral square targets described above (Figure 3). The animal was required to fixate each target for 500 ms and received a juice reward after each successful fixation (180 ms of juice for the center target, 360 ms for the peripheral targets).
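Mapping POG back onto an image with the two anchor points amounts to a per-axis linear rescaling. The sketch below assumes the image was displayed without rotation and that the anchor centers are known both in eye-tracker coordinates and in image pixels; the anchor coordinates in the example, and the helper names, are hypothetical.

```python
from typing import Callable, Tuple

Point = Tuple[float, float]
IMAGE_W, IMAGE_H = 737, 983  # test image size in pixels, from the text


def make_screen_to_image(tl_screen: Point, br_screen: Point,
                         tl_image: Point, br_image: Point) -> Callable[[Point], Point]:
    """Build a POG -> image-pixel mapping from the top-left and bottom-right anchors.

    Assumes the image was shown unrotated, so x and y can be rescaled independently.
    """
    sx = (br_image[0] - tl_image[0]) / (br_screen[0] - tl_screen[0])
    sy = (br_image[1] - tl_image[1]) / (br_screen[1] - tl_screen[1])

    def to_image(pog: Point) -> Point:
        return (tl_image[0] + (pog[0] - tl_screen[0]) * sx,
                tl_image[1] + (pog[1] - tl_screen[1]) * sy)

    return to_image


def on_image(p: Point) -> bool:
    """True if a mapped point falls inside the 737 x 983 test image."""
    return 0 <= p[0] < IMAGE_W and 0 <= p[1] < IMAGE_H


# Hypothetical values: anchor centers 10 px in from the image corners, and the
# positions at which the eye-tracker (in its own units) recorded them.
to_image = make_screen_to_image((300.0, 55.0), (658.5, 536.5), (10.0, 10.0), (727.0, 973.0))
print(to_image((350.0, 155.0)), on_image(to_image((350.0, 155.0))))
```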

Figure 3.

A schematic of a typical testing trial. A 10-second blank gray screen preceded and followed each 30-second presentation of a neutral facial expression. The animal was also required to fixate a center and a peripheral target for at least 500 ms to receive a juice reward and proceed to the next trial.

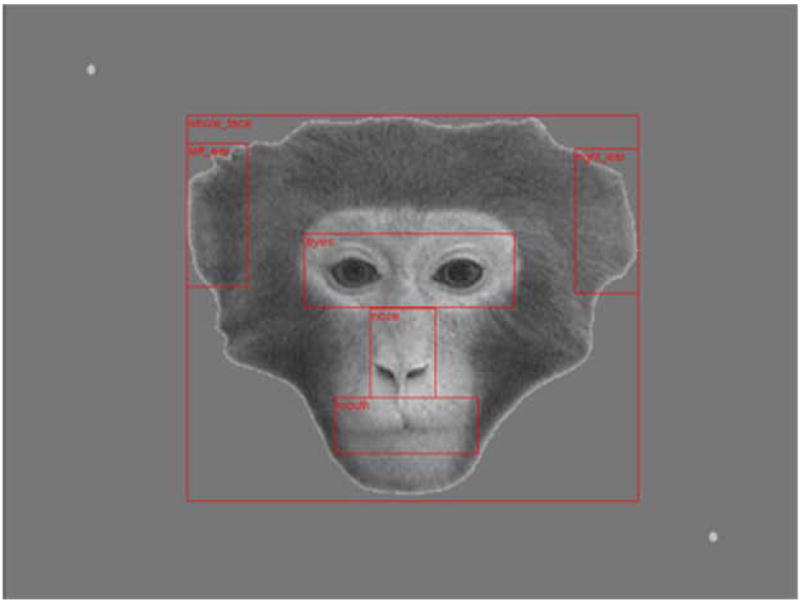

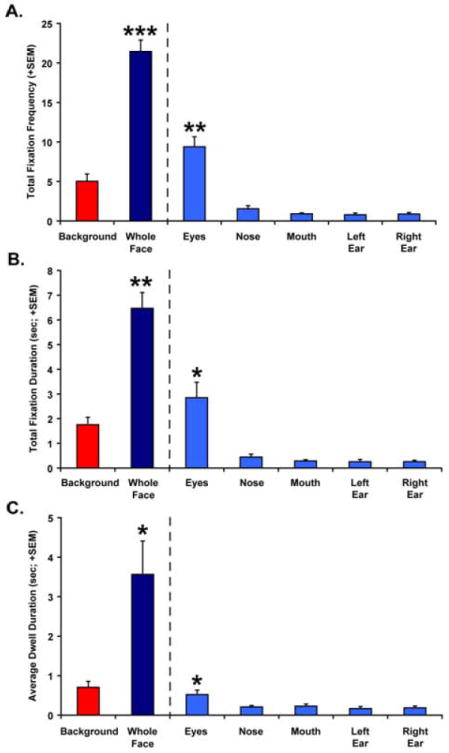

The ASL Results software package (Applied Science Laboratories) was used to analyze all POG data. During preliminary analyses, we found that visual interest in neutral facial expressions waned quickly with successive presentations. Therefore, only data from the first 10 seconds of the first presentation of a given face identity were used to validate the accuracy of video eye-tracking with noninvasive thermoplastic head restraints. We measured each animal’s total fixation duration, total fixation frequency and average gaze dwell duration on a rectangular area of interest (AOI) that encompassed the entire face versus the remaining gray background. We also measured these same variables for smaller rectangular AOIs that encompassed specific areas of the face (eyes, nose, mouth, left ear and right ear). Figure 4 shows each of these AOIs on a sample image. A fixation was recorded if eye-gaze coordinates remained within 1° × 1° of visual angle for 100 ms. A fixation was terminated when eye-gaze coordinates deviated by more than 1° × 1° of visual angle for more than 360 ms. These fixation onset and offset criteria were the ASL Results software default values. Total fixation duration was the cumulative time (maximum = 10 seconds) that the animal spent fixating within the AOI. Similarly, total fixation frequency was the cumulative number of discrete fixations that fell within an AOI during the 10-second analysis window. Finally, average dwell duration was the average amount of time that eye gaze remained within a given AOI without leaving. Data were compared across AOIs (Face vs. Background and Eyes vs. Nose vs. Mouth vs. Left Ear vs. Right Ear) using paired-sample t-tests.
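ASL Results applies its own fixation algorithm, and its internal details (including how the 360 ms offset tolerance is handled) are not reproduced here. As a simpler stand-in, the sketch below implements a dispersion-threshold (I-DT style) detector using the 1° × 1° window and 100 ms minimum duration from the text, with gaze expressed in degrees of visual angle and sampled at 120 Hz. Because it ends a fixation as soon as the window is exceeded, it will generally split some fixations that a detector with an offset tolerance would merge.

```python
from typing import List, Sequence, Tuple


def detect_fixations(samples: Sequence[Tuple[float, float]],
                     rate_hz: float = 120.0,
                     max_extent_deg: float = 1.0,
                     min_duration_ms: float = 100.0) -> List[Tuple[int, int, float, float]]:
    """Dispersion-threshold fixation detection (a stand-in, not the ASL algorithm).

    samples: gaze positions in degrees of visual angle, one per camera frame.
    Returns (start_index, end_index_exclusive, centroid_x, centroid_y) tuples.
    """
    min_len = max(1, round(min_duration_ms * rate_hz / 1000.0))  # 100 ms at 120 Hz -> 12

    def fits(lo: int, hi: int) -> bool:
        # The window must stay within max_extent_deg horizontally and vertically.
        xs = [p[0] for p in samples[lo:hi]]
        ys = [p[1] for p in samples[lo:hi]]
        return max(xs) - min(xs) <= max_extent_deg and max(ys) - min(ys) <= max_extent_deg

    fixations = []
    i, n = 0, len(samples)
    while i + min_len <= n:
        j = i + min_len
        if not fits(i, j):
            i += 1  # no fixation starts here; slide the window forward
            continue
        while j < n and fits(i, j + 1):
            j += 1  # grow the fixation while the 1 deg x 1 deg window still holds
        xs = [p[0] for p in samples[i:j]]
        ys = [p[1] for p in samples[i:j]]
        fixations.append((i, j, sum(xs) / len(xs), sum(ys) / len(ys)))
        i = j
    return fixations
```

Fixation durations in milliseconds follow from the sample counts, e.g. `(end - start) * 1000 / 120` at 120 Hz.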

Figure 4.

A sample facial expression with discrete areas of interest (AOIs) superimposed. AOIs were defined for the Whole Face, Eyes, Nose, Mouth, Right Ear and Left Ear.
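Given a list of fixations already restricted to the 10-second analysis window and mapped into image coordinates, the three AOI measures can be computed as sketched below. The rectangle convention, the `Fixation` fields and the definition of a dwell (a run of consecutive fixations inside the AOI, timed from the first onset to the last offset) are assumptions for illustration and may differ in detail from ASL Results. Each AOI is evaluated independently, so nested AOIs such as Eyes within Whole Face are handled without special cases.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Fixation:
    start_ms: float
    duration_ms: float
    x: float  # image pixel coordinates
    y: float


Rect = Tuple[float, float, float, float]  # (left, top, right, bottom), y increasing downward


def aoi_metrics(fixations: List[Fixation], aois: Dict[str, Rect]) -> Dict[str, Dict[str, float]]:
    """Total fixation duration, fixation count and mean dwell duration per AOI."""
    out = {}
    for name, (left, top, right, bottom) in aois.items():
        inside = [left <= f.x <= right and top <= f.y <= bottom for f in fixations]
        total = sum(f.duration_ms for f, hit in zip(fixations, inside) if hit)
        dwells, run_start = [], None
        for k, (f, hit) in enumerate(zip(fixations, inside)):
            if hit and run_start is None:
                run_start = f.start_ms  # gaze enters the AOI
            if run_start is not None and (not hit or k == len(fixations) - 1):
                last = f if hit else fixations[k - 1]  # last fixation inside the AOI
                dwells.append(last.start_ms + last.duration_ms - run_start)
                run_start = None
        out[name] = {"total_duration_ms": total,
                     "fixation_count": sum(inside),
                     "mean_dwell_ms": sum(dwells) / len(dwells) if dwells else 0.0}
    return out
```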

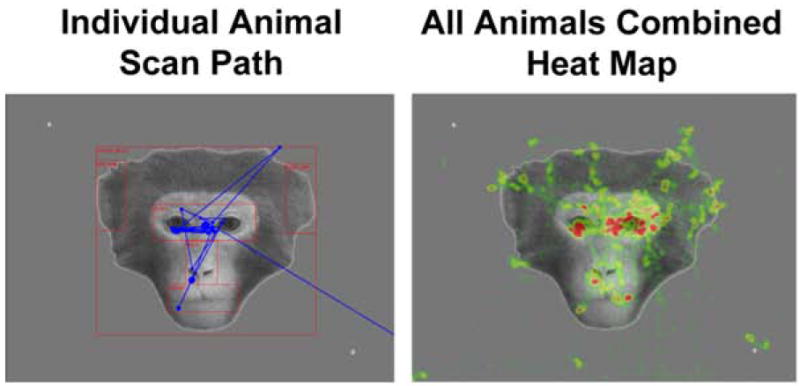

The left image in Figure 5 shows a sample scan path for one animal viewing the first face it saw during the experiment. The combined gaze data for all animals are also shown (as a heat map) for this same face picture in the right image of Figure 5. The animals clearly devoted the majority of their visual attention to the faces relative to the background. This impression was substantiated by analysis of the total fixation frequency, total fixation duration and average dwell duration measures. The animals fixated the Whole Face AOI significantly more often [t(4) = 8.741, P = 0.001, two-tailed] and for a significantly longer total duration [t(4) = 5.756, P < 0.01, two-tailed] than the Background AOI (Figure 6A and B). The Whole Face AOI also held the animals’ attention for significantly longer than the Background AOI [t(4) = 3.034, P < 0.05, two-tailed], as measured by the average dwell duration (Figure 6C).
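For reference, the paired-sample t statistic used in these comparisons is the mean per-animal difference divided by its standard error, with n − 1 degrees of freedom (4 for five animals). A minimal standard-library sketch; it does not compute the P value, which requires the t distribution (SciPy’s `scipy.stats.ttest_rel` returns both the statistic and a two-tailed P value). The helper name `paired_t` is illustrative.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Paired-sample t statistic and degrees of freedom (one score per animal in a and b)."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    sd = statistics.stdev(diffs)  # sample SD, n - 1 denominator
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1
```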

Figure 5.

The scan path for one animal (left image) is shown in blue superimposed over one of the neutral face stimuli to show the typical pattern of gaze during the first 10 seconds of exposure to this stimulus. The combined fixation data for all animals (right image; same 10 second epoch) are also shown for this same face picture. For this map, higher fixation frequencies result in “hotter” colors such as red or yellow. Areas of low fixation frequency are shown in green.

Figure 6.

Total fixation frequency (A), total fixation duration (B) and average dwell duration (C) for all animals during the first 10 seconds of viewing all neutral face pictures. Data are shown for the Background and Whole Face AOIs on the left of each panel. Values for the discrete subregions of the face (Eyes, Nose, Mouth, Left Ear and Right Ear) are shown on the right side of each panel. Vertical bars indicate standard errors of the mean. * P < 0.05, ** P < 0.01, *** P < 0.001.

Comparison of the discrete AOIs within the face also revealed that the animals devoted more visual attention to the Eyes AOI than to any other face component. Total fixation frequency [t(5) = 5.825 – 7.413, all P < 0.01, two-tailed; Figure 6A] and total fixation duration [t(5) = 3.644 – 4.371, all P < 0.05, two-tailed; Figure 6B] for the Eyes AOI were significantly higher than for all other regions of the face. Total fixation frequency and total fixation duration did not differ significantly among the other subregions of the face. The Eyes AOI also held the animals’ attention longer (longer average dwell duration) than all other components of the face, but the difference was significant only for the Eyes vs. Nose comparison [t(5) = 3.040, P < 0.05, two-tailed; Figure 6C]. Dwell duration did not differ appreciably among the other components of the face.

These results are consistent with similar findings from previous studies in humans, chimpanzees and macaques [Ekman and Friesen 1975; Gothard et al. 2004; Hirata et al. 2010; Kano and Tomonaga 2009; Kano and Tomonaga 2010; Keating and Keating 1982; Keating and Keating 1993; Nahm and Amaral 1997; Walker-Smith et al. 1977; Watson et al. 2009; Wilson and Goldman-Rakic 1994] and therefore support the validity of the alternative procedures described here. It is also worth noting that habituating our naïve animals to the thermoplastic helmets and training them to participate in a passive-viewing face perception task took only 17 – 30 days. This is substantially less time than previously published noninvasive methods require [approximately 6 months for the technique described by Fairhall et al. 2006].

Exciting future prospects for noninvasive eye-tracking

Given that many nonhuman primate species rely heavily on vision for social communication, eye-tracking offers a controlled, objective and now fully noninvasive and efficient way to explore the many factors that may influence patterns of social attention, or social cognition in general. For example, visual scan paths could be compared across species of macaques, across groups of nonhuman primates with different mating systems (e.g., monogamous vs. polygamous), or across groups that differ in average group size. Face perception strategies could also be examined across sexes within a given species, or across groups of animals with different genetic polymorphisms linked to neurotransmitter function. The effects of psychological factors, such as the type of rearing (naturalistic, lack of maternal/paternal care, high-stress, etc.) or an individual’s dominance rank, on aspects of social cognition could also be examined with eye-tracking technology. Since eye-tracking can be performed noninvasively, longitudinal studies of developmental changes in visual social attention could be performed with monkeys from infancy through adolescence and into adulthood (a span of ~5 years for rhesus monkeys). Finally, eye-tracking methodology could be combined with other techniques (such as functional neuroimaging or psychophysiological recordings) to examine the neurobiological and physiological substrates of social cognition and emotion. Given all of these possibilities, the future is bright for the use of noninvasive eye-tracking methods in nonhuman primate research.

Acknowledgments

This work was supported by funding from the National Institute of Mental Health (K99-MH083883 to C.J. Machado and R37-MH57502 to Dr. David G. Amaral), as well as by funds from the intramural research program of the National Institute of Mental Health to E.E. Nelson. All work was carried out at the California National Primate Research Center (RR00069), under protocols approved by the University of California, Davis, Institutional Animal Care and Use Committee, and adhered to the American Society of Primatologists (ASP) Principles for the Ethical Treatment of Non-Human Primates. We thank the veterinary and animal services staff of the California National Primate Research Center and the National Institute of Mental Health for helping to refine the animal handling procedures described in this article. We would also like to thank M. Archibeque for technical assistance during eye-tracker set-up and initial animal training, as well as Dr. E. Bliss-Moreau, G. Moadab and K. Henning for assistance with collecting the data described here. Thanks also go to R. Murillo for creating the visual stimuli used for data collection and to two anonymous reviewers who made helpful suggestions after reading a previous draft of this paper.

References

- Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, Damasio AR. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433(7021):68–72. doi: 10.1038/nature03086. [DOI] [PubMed] [Google Scholar]

- Beevers CG, Ellis AJ, Wells TT, McGeary JE. Serotonin transporter gene promoter region polymorphism and selective processing of emotional images. Biological Psychololgy. 2010;83(3):260–5. doi: 10.1016/j.biopsycho.2009.08.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bradley BP, Mogg K, Millar NH. Covert and overt orienting of attention to emotional faces in anxiety. Cognition and Emotion. 2000;14(6):789–808. [Google Scholar]

- Capitanio JP, Emborg ME. Contributions of non-human primates to neuroscience research. Lancet. 2008;371(9618):1126–35. doi: 10.1016/S0140-6736(08)60489-4. [DOI] [PubMed] [Google Scholar]

- Claw KG, Tito RY, Stone AC, Verrelli BC. Haplotype structure and divergence at human and chimpanzee serotonin transporter and receptor genes: implications for behavioral disorder association analyses. Molecular Biology & Evolution. 2010;27(7):1518–29. doi: 10.1093/molbev/msq030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dahl CD, Logothetis NK, Bulthoff HH, Wallraven C. The Thatcher illusion in humans and monkeys. Proceedings of the Royal Society: Biological Sciences. 2010;277(1696):2973–81. doi: 10.1098/rspb.2010.0438.

- Dahl CD, Logothetis NK, Hoffman KL. Individuation and holistic processing of faces in rhesus monkeys. Proceedings of the Royal Society: Biological Sciences. 2007;274(1622):2069–76. doi: 10.1098/rspb.2007.0477.

- Dahl CD, Wallraven C, Bulthoff HH, Logothetis NK. Humans and macaques employ similar face-processing strategies. Current Biology. 2009;19(6):509–13. doi: 10.1016/j.cub.2009.01.061.

- Duchowski AT. Eye tracking methodology: Theory and practice. London: Springer; 2007.

- Ekman P, Friesen WV. Unmasking the face: A guide to recognizing emotions from facial cues. Oxford: Prentice Hall; 1975.

- Fairhall SJ, Dickson CA, Scott L, Pearce PC. A non-invasive method for studying an index of pupil diameter and visual performance in the rhesus monkey. Journal of Medical Primatology. 2006;35(2):67–77. doi: 10.1111/j.1600-0684.2005.00135.x.

- Gothard KM, Erickson CA, Amaral DG. How do rhesus monkeys (Macaca mulatta) scan faces in a visual paired comparison task? Animal Cognition. 2004;7(1):25–36. doi: 10.1007/s10071-003-0179-6.

- Haith MM, Bergman T, Moore MJ. Eye contact and face scanning in early infancy. Science. 1977;198(4319):853–5. doi: 10.1126/science.918670.

- Hattori Y, Kano F, Tomonaga M. Differential sensitivity to conspecific and allospecific cues in chimpanzees and humans: A comparative eye-tracking study. Biology Letters. 2010;6(5):610–13. doi: 10.1098/rsbl.2010.0120.

- Hirata S, Fuwa K, Sugama K, Kusunoki K, Fujita S. Facial perception of conspecifics: chimpanzees (Pan troglodytes) preferentially attend to proper orientation and open eyes. Animal Cognition. 2010;13(5):679–88. doi: 10.1007/s10071-010-0316-y.

- Kano F, Tomonaga M. How chimpanzees look at pictures: a comparative eye-tracking study. Proceedings of the Royal Society: Biological Sciences. 2009;276(1664):1949–55. doi: 10.1098/rspb.2008.1811.

- Kano F, Tomonaga M. Face scanning in chimpanzees and humans: Continuity and discontinuity. Animal Behaviour. 2010;79(1):227–235.

- Keating CF, Keating EG. Visual scan patterns of rhesus monkeys viewing faces. Perception. 1982;11(2):211–9. doi: 10.1068/p110211.

- Keating CF, Keating EG. Monkeys and mug shots: cues used by rhesus monkeys (Macaca mulatta) to recognize a human face. Journal of Comparative Psychology. 1993;107(2):131–9. doi: 10.1037/0735-7036.107.2.131.

- Kirk EC, Kay RF. The evolution of high visual acuity in the Anthropoidea. In: Ross CF, Kay RF, editors. Anthropoid origins: New visions. New York: Kluwer/Plenum Publishing; 2004. pp. 539–602.

- Lesch KP, Meyer J, Glatz K, Flugge G, Hinney A, Hebebrand J, Klauck SM, Poustka A, Poustka F, Bengel D, et al. The 5-HT transporter gene-linked polymorphic region (5-HTTLPR) in evolutionary perspective: alternative biallelic variation in rhesus monkeys. Rapid communication. Journal of Neural Transmission. 1997;104(11-12):1259–66. doi: 10.1007/BF01294726.

- Nahm FKD, Amaral DG. How do monkeys look at faces? Journal of Cognitive Neuroscience. 1997;9(5):611–623. doi: 10.1162/jocn.1997.9.5.611.

- Newman TK, Syagailo YV, Barr CS, Wendland JR, Champoux M, Graessle M, Suomi SJ, Higley JD, Lesch KP. Monoamine oxidase A gene promoter variation and rearing experience influences aggressive behavior in rhesus monkeys. Biological Psychiatry. 2005;57(2):167–72. doi: 10.1016/j.biopsych.2004.10.012.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. Journal of Autism and Developmental Disorders. 2002;32(4):249–61. doi: 10.1023/a:1016374617369.

- Perlman SB, Morris JP, Vander Wyk BC, Green SR, Doyle JL, Pelphrey KA. Individual differences in personality predict how people look at faces. PLoS One. 2009;4(6):e5952. doi: 10.1371/journal.pone.0005952.

- Semendeferi K, Lu A, Schenker N, Damasio H. Humans and great apes share a large frontal cortex. Nature Neuroscience. 2002;5(3):272–276. doi: 10.1038/nn814.

- Silk JS, Dahl RE, Ryan ND, Forbes EE, Axelson DA, Birmaher B, Siegle GJ. Pupillary reactivity to emotional information in child and adolescent depression: links to clinical and ecological measures. American Journal of Psychiatry. 2007;164(12):1873–80. doi: 10.1176/appi.ajp.2007.06111816.

- Steinhauer SR, Siegle GJ, Condray R, Pless M. Sympathetic and parasympathetic innervation of pupillary dilation during sustained processing. International Journal of Psychophysiology. 2004;52(1):77–86. doi: 10.1016/j.ijpsycho.2003.12.005.

- Tomasello M, Call J. Social strategies and communication. In: Tomasello M, Call J, editors. Primate Cognition. New York: Oxford University Press; 1997a. pp. 231–72.

- Tomasello M, Call J. Theories of primate social cognition. In: Tomasello M, Call J, editors. Primate Cognition. New York: Oxford University Press; 1997b. pp. 342–64.

- Walker-Smith GJ, Gale AG, Findlay JM. Eye movement strategies involved in face perception. Perception. 1977;6(3):313–26. doi: 10.1068/p060313.

- Watson KK, Ghodasra JH, Platt ML. Serotonin transporter genotype modulates social reward and punishment in rhesus macaques. PLoS One. 2009;4(1):e4156. doi: 10.1371/journal.pone.0004156.

- Wendland JR, Hampe M, Newman TK, Syagailo Y, Meyer J, Schempp W, Timme A, Suomi SJ, Lesch KP. Structural variation of the monoamine oxidase A gene promoter repeat polymorphism in nonhuman primates. Genes, Brain and Behavior. 2006;5(1):40–5. doi: 10.1111/j.1601-183X.2005.00130.x.

- Wilson FA, Goldman-Rakic PS. Viewing preferences of rhesus monkeys related to memory for complex pictures, colours and faces. Behavioural Brain Research. 1994;60(1):79–89. doi: 10.1016/0166-4328(94)90066-3.