Abstract

Does opportunity make the thief, or are people dispositionally prone to deceive? The interaction between personality and the circumstances surrounding deception is crucial for understanding what promotes dishonesty in our society. Because deception is inherently spontaneous and social, it is hard to reproduce in experimental settings. We developed a novel paradigm in the form of an interactive game where participants can choose whether to lie to another person in situations of loss vs. gain, and of no-reputation-risk vs. reputation-risk linked to the disclosure of their deceptive behaviour to others. Thus, our ecological paradigm allowed subjects to spontaneously decide when to lie and face the challenge of deceiving others. In the case of loss, participants lied to reverse the outcome in their favour. Deception was lower in the reputation-risk condition, where personality traits concerning social interactions also played an important role.

The results suggest that deception is promoted by unfavourable events, and that maintaining one's own reputation encourages honesty, particularly in socially inclined individuals.

Introduction

When we think about political lies, tax evasion or swindlers, we realize that social interactions are very often permeated by deceptive behaviour. Although this behaviour is publicly condemned [1], people keep telling lies. The spread of dishonesty in societies has been addressed by several disciplines, such as psychology [2], [3], [4] and social psychology [5], [6], [7]. Importantly, evolutionary game theory has described the role of defection and cooperation in solving social dilemmas [8], [9], [10]. Yet, little is known about the circumstances under which this behaviour is promoted and how personality dispositions influence situational deception. Studies suggest that deciding whether to deceive involves a motivational conflict between the temptation to dishonestly achieve some benefit and the desire to act in a socially appropriate manner [7]. Experimental research on deception has to face the challenge of devising tasks that can induce such a conflict in a naturalistic way. The majority of the paradigms used thus far in the neuroscientific study of deception instruct subjects when to lie and do not provide a partner to lie to. Thus, these paradigms largely neglect two fundamental aspects of deception, namely intentionality and sociality (for a review see [11]). More ecological paradigms in which subjects could spontaneously deceive [12], [13] other people [14] have been used only recently. Here we devised a novel experimental paradigm able to induce the conflict that people experience when faced with the choice of whether or not to deceive another person, and tested the effect of both situational and dispositional variables on deceptive behaviour.

It is well known, for example, that deciding to be dishonest depends on the circumstances: the same person may decide to cheat during an academic exam but never evade taxes, or may lie about his/her curriculum but never lie to his/her friends. Moreover, circumstances in which people's goals cannot be accomplished non-deceptively promote lying behaviour [2].

It has also been demonstrated that people deceive less when the potential monetary reward for cheating is very high, which makes the circumstance morally challenging, or when cues to their moral standards are provided [6]. In contrast, people deceive more when the monetary reward for cheating is replaced by tokens and the moral implications of the circumstance can be reinterpreted [6].

Among the situations that may change the tendency to lie, reputation management plays an important role. People care so much about how they are regarded by others that acquiring a good reputation activates reward-related brain areas [15]. Moreover, building and maintaining one's own reputation plays an important role in promoting cooperation and prosocial behaviour [16], [17]. In a similar vein, the presence of potentially disapproving people or authority figures (i.e. of external cues to self-regulation) reduces the expression of racial bias [18], [19], [20]. Importantly, in realistic deception situations people risk losing their social capital [21]. Thus, the decision to deceive has to take into account the ability to manage one's own reputation. Despite the importance of this variable, no study has investigated the role of reputation management in deception thus far.

Another important determinant of deception is the dispositional tendency to behave according to one's own personality traits. Studies indicate, for example, that people who reported telling more lies in their everyday life scored high on impression management, manipulativeness and extroversion scales [3]. Likewise, manipulative people, when asked about their lie-telling behaviour in everyday life, do not seem to perceive themselves as frequent liars, and report feeling less guilty about their lies and more comfortable when imagining lying in high-stakes situations [22].

No systematic evidence for the comparative influence of situational and dispositional variables on deceptive behaviour has been provided. In the present study, we investigate how the decision to deceive another individual is influenced by two important situational variables (namely favourable vs. unfavourable reality and reputation risk vs. no reputation risk) and by dispositional individual differences (personality traits). We created an experimental situation in which subjects were free to decide whether to tell the truth or to lie to an opponent player in order to obtain an economic benefit (self-gain lies) or to help another individual (altruistic lies). Moreover, we measured the impact of reputation on this decision. The participants' concern about their reputation was induced by telling them that the other player would be aware of their decision. We also tested whether the perceived reputation risk and the deceptive behaviour were enhanced in subjects who played with the opponent seated in the same room (Presence Group) with respect to those who played with the opponent seated in a different room (No-Presence Group). Specifically, we asked whether facing the event of a loss would promote deception among participants (as measured by the number of self-gain lies) and whether the impact of reputation would decrease self-gain lies and increase altruistic lies.

To investigate the interactions between personality and deceptive behaviour, we examined the participants' personality extensively (by means of the short form of the Temperament and Character Inventory [23]). Moreover, we examined manipulativeness, moral disengagement, impression management and self-deception as individual-difference dimensions that we expected to be important predictors of deception in social interactions.

Methods

Participants and Design

Fifty-two participants (26 females; age range 19 to 29 years, mean = 24.38) were recruited. Two participants were excluded from the analysis because they did not believe they were playing against a real person. Of the remaining fifty, twenty-six (13 females) played in the No-Presence Group and twenty-four (12 females) played in the Presence Group. Subjects were paid 10 euros for their participation and could win up to 30 euros extra during the game. All the subjects signed written informed consent prior to being enrolled. The experimental protocol was approved by the independent Ethics Committee of the Santa Lucia Foundation (Scientific Institute for Research Hospitalization and Health Care) and was carried out in accordance with the principles of the 1964 Declaration of Helsinki.

Experimental Task

Subjects (Ss) played a two-card game in which an ace of hearts was associated with gain and an ace of spades was associated with loss. Each subject was instructed that the opponent player (OP) was the first mover and was supposed to choose one of the two covered decks without knowing the outcome of the choice, which would be communicated by the S. By lying, the S had the chance to reverse the outcome and thus to win when he/she had actually lost (self-gain lie) or to lose when he/she had actually won (altruistic lie). Trials in which the S was supposed to gain or to lose were defined as Favourable and Unfavourable Reality respectively. Ss played the game in two conditions: the Reputation-Risk (R) condition, in which the S knew that the OP was informed about his/her lies, and the No-Reputation-Risk (NR) condition, in which the S knew that the OP was not informed. The order of the two conditions was counterbalanced across participants. The two players had 25 euros each for playing. On each trial, the winner took money from the other player's payoff. Ss were told that the amount of gain/loss on each trial was arbitrarily decided by a computer algorithm. The precise amount of gain/loss was communicated only at the end of the task. This allowed us to rule out the possibility that the subjects' behaviour was based on a trial-by-trial computation of gain/loss.

Materials and Procedure

Subjects were seated comfortably in an acoustically shielded room. Stimulus presentation timing and randomization were controlled using E-prime ver. 1.2 software (Psychology Software Tools Inc., Pittsburgh, PA) running on a PC. Participants sat 57 cm away from a 22-inch LCD monitor where the stimuli, two playing cards consisting of an ace of hearts and an ace of spades, appeared on a white background (see Figure 1). Each trial started with the presentation of a central fixation cross lasting 1000 msec, followed by the presentation of the stimuli. The left/right position of the hearts/spades ace was counterbalanced. After a varying time interval (between 2000 and 3000 msec), one of the two cards became bigger, indicating the OP's choice. This randomized interval was employed for the No-Presence Group to provide tangible evidence that the OP was a real person. For the Presence Group, the actor triggered the stimulus presentation by clicking a mouse. After each OP's choice, participants were asked to press on a keypad either the “V” key to communicate the truth (the Italian word for truth is verità) or the “M” key to lie (the Italian word for lie is menzogna). The stimulus remained visible on the screen until the response was given. Each block contained 80 trials, half of them providing the Unfavourable Reality (the OP won), the other half the Favourable Reality (the OP lost).

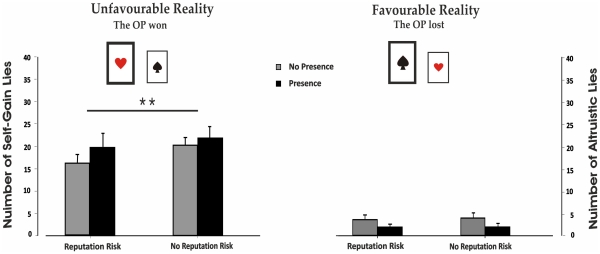

Figure 1. Number of lies.

Self-gain and altruistic lies (mean ± standard error) produced by the two subject groups (No-Presence Group, grey bars; Presence Group, black bars) in the two possible opponent (OP) choice outcomes (favourable/unfavourable) in the two conditions (Reputation Risk/No-Reputation Risk) are reported. The number of Self-Gain lies is significantly reduced in the Reputation Risk Condition (p = .01).

To avoid any spurious influence, in no condition was the experimenter in the testing room.

No-Presence of the OP (Group 1)

Subjects were told that they were going to play an online card game with another participant situated in a different room of the building and that they would meet the other player at the end of the game.

Physical Presence of the OP (Group 2)

After reading the task instructions, subjects were blindfolded while the other participant (the actor) entered the room. Once subjects were seated, they could see only the computer screen in front of them and had no chance to look at the other player. This procedure ensured that the actor's physical features would not influence the subjects' performance. In addition, we informed Ss that they were not allowed to talk and that, thanks to a microphone in the room, the examiner could stop the experiment in the event that someone spoke. That Ss did not actually talk was double-checked by asking the actors in the room. Importantly, unbeknownst to the subjects, the OP's choice was controlled by a computer.

Manipulation Check

After the game, participants were qualitatively debriefed about the interaction. We asked them questions like: “Did you enjoy the game?”; “Has your opponent been lucky?”; “Was he/she luckier in the first or in the second block?”. The two subjects who declared they did not believe or were sceptical about the fact that the OP was a real player were excluded from the analysis.

Personality Measures

After the manipulation check, subjects completed the electronic version of the short form of the Temperament and Character Inventory, designed to assess four temperament dimensions (Novelty Seeking; Harm Avoidance; Reward Dependence; Persistence) and three character dimensions (Self-Directedness; Cooperativeness; Self-Transcendence) [23]. Moreover, subjects were administered the Balanced Inventory of Desirable Responding (BIDR) [24], the Machiavellianism Scale (MACH IV) [25] and the Moral and Civic Disengagement scale [26].

Results

Binomial Analysis (Truth vs. Lie)

The number of lie and truth responses was collected and used as the dependent variable. The percentage of Truth responses in the NR block for both groups (No-Presence: 70%; Presence: 70%) was higher than the percentage of Lie responses (binomial test, No-Presence: Truth/Lie, p<.01; Presence: Truth/Lie, p<.01). In the Reputation-Risk block, too, the percentage of Truth responses was higher for both groups (No-Presence: 75%; Presence: 73%; binomial test, No-Presence: Truth/Lie, p = .01; Presence: Truth/Lie, p = .01) (Table 1, first and second row).

Table 1. Percentage of Truth and Lie responses in each condition.

| Condition | No-Presence Group: Lie | No-Presence Group: Truth | No-Presence Group: p | Presence Group: Lie | Presence Group: Truth | Presence Group: p |
|---|---|---|---|---|---|---|
| Reputation Risk | 25% | 75% | = .01 | 27% | 73% | = .01 |
| No Reputation Risk | 30% | 70% | <.01 | 30% | 70% | <.01 |

The null hypothesis of the binomial test is that the two categories are equally likely to occur. When the test is statistically significant, one category is more likely to occur than the other. Our data show that truth responses are significantly more likely to occur in all conditions except in the Unfavourable Reality, i.e. when OPs won and Ss lost. In this case, lie and truth responses were comparable both in the No-Presence Group (p = .12) and in the Presence Group (p = .12).
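To make the logic of this test concrete, the following is a minimal sketch of an exact two-sided binomial test against equal probabilities, written with the Python standard library only. The function name and the response counts are hypothetical illustrations and are not taken from the study data; how responses were aggregated for the reported tests is not specified here.

```python
from math import comb


def binomial_two_sided_p(successes: int, trials: int) -> float:
    """Exact two-sided binomial test against H0: P(success) = 0.5.

    Under H0 the distribution is symmetric, so the two-sided p-value is
    twice the smaller tail probability, capped at 1.
    """
    if not 0 <= successes <= trials:
        raise ValueError("successes must lie between 0 and trials")
    total = 2 ** trials
    lower = sum(comb(trials, k) for k in range(0, successes + 1)) / total
    upper = sum(comb(trials, k) for k in range(successes, trials + 1)) / total
    return min(1.0, 2.0 * min(lower, upper))


# Hypothetical example: 28 truth responses out of 40 trials (70% truth).
truth_responses, n_trials = 28, 40
p_value = binomial_two_sided_p(truth_responses, n_trials)
print(f"{truth_responses}/{n_trials} truth responses: p = {p_value:.4f}")
```

A small p-value indicates that truth and lie responses are unlikely to be equally probable, whereas counts close to an even split (as in the Unfavourable Reality) yield a non-significant result.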

A higher percentage of Truth than Lie responses was found for both groups in the Favourable Reality condition (No-Presence 90%, Presence 95%; binomial test, No-Presence: Truth/Lie, p<.01; Presence: Truth/Lie, p<.01). By contrast, the percentages of truth and lie responses did not differ in either group in the Unfavourable Reality (binomial test, No-Presence: Truth/Lie, p = .12; Presence: Truth/Lie, p = .12) (Table 1, third and fourth row).

Analysis of Deceptive Responses

A 2×2×2 mixed-model analysis of variance (ANOVA) was run with Group (No-Presence vs. Presence) as a between-subjects factor and Reality (Favourable vs. Unfavourable) and Reputation (Reputation Risk vs. No-Reputation Risk) as within-subject factors. The ANOVA showed no significant main effect of Group, F(1,48) = .03, p = .87, and no significant interaction of Group with Reputation, F(1,48) = 1.22, p = .28, or with Reality, F(1,48) = 2.07, p = .16. Interestingly, a main effect of Reputation was found, F(1,48) = 10.93, p<.01, η² = .19, revealing that subjects told more lies in the NR than in the R block. In addition, the main effect of Reality was significant, F(1,48) = 100.53, p<.001, η² = .68. This effect is accounted for by the fact that subjects lied more in the Unfavourable Reality than in the Favourable Reality, thus producing more self-gain lies than altruistic lies. Crucially, a significant Reputation × Reality interaction was found, F(1,48) = 7.58, p = .01, η² = .14. The Newman-Keuls post-hoc test for multiple comparisons showed that, whereas the number of altruistic lies did not differ between the R block and the NR block, subjects produced more self-gain lies in the NR block than in the R block (Figure 1).
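As a reading aid, the effect sizes above can be related to the F statistics. The sketch below assumes that the reported η² values are partial eta squared, which the text does not state explicitly; under that assumption, each value can be recovered from F and its degrees of freedom. The function name is a hypothetical helper.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and eta squared values as reported in the text, all with df = (1, 48).
reported = {
    "Reputation": (10.93, 0.19),
    "Reality": (100.53, 0.68),
    "Reputation x Reality": (7.58, 0.14),
}

for effect, (f_value, eta_reported) in reported.items():
    eta = partial_eta_squared(f_value, 1, 48)
    print(f"{effect}: computed = {eta:.2f}, reported = {eta_reported:.2f}")
```

Under this assumption the computed values agree with the reported ones to two decimal places.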

Personality Measures

Since the tendency to deceive was comparable in the two experimental groups, correlational analyses between self-gain or altruistic lies and personality traits were performed across all subjects. No significant correlation was found. We then sought to determine whether dispositional personality factors predicted how much reputation influenced deception. To this end, we created a quantitative index of deception by subtracting Altruistic Lies from Self-Gain Lies for each block. Then, we computed the impact of reputation on deception (RoD) as follows:

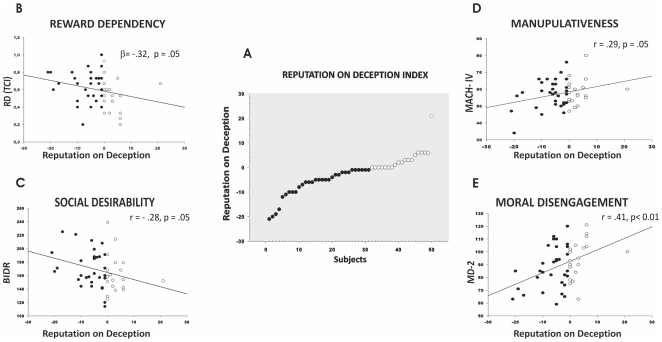

Negative and positive scores indicate more lies in the NR and the R blocks respectively (Figure 2, A).
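The two-step construction of the index can be sketched as follows. The per-block deception index (Self-Gain Lies minus Altruistic Lies) is taken from the text. The combination of the two blocks is an assumption: the published formula is given only in the equation image and may include a normalization, so the plain difference used here is just the simplest form that matches the stated sign convention. All names and counts are hypothetical.

```python
def deception_index(self_gain_lies: int, altruistic_lies: int) -> int:
    """Per-block deception index as described in the text: self-gain minus altruistic lies."""
    return self_gain_lies - altruistic_lies


def reputation_on_deception(r_block: tuple, nr_block: tuple) -> int:
    """ASSUMED form of the RoD index: deception in the R block minus deception in the NR block.

    Negative values mean more deception without reputation risk (NR),
    positive values mean more deception under reputation risk (R).
    The published formula may differ, for example by a normalization term.
    """
    return deception_index(*r_block) - deception_index(*nr_block)


# Hypothetical participant: (self-gain lies, altruistic lies) in each block.
r_block = (10, 2)   # Reputation-Risk block
nr_block = (18, 2)  # No-Reputation-Risk block
rod = reputation_on_deception(r_block, nr_block)
print(f"RoD = {rod}")
```

In this hypothetical case the participant lies less when reputation is at risk, so the index is negative.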

Figure 2. Correlations between personality traits and the impact of reputation on deception (RoD).

A) The panel shows the RoD index for each subject. Black dots indicate subjects in whom deceptive behaviour was influenced by reputation risk. White dots indicate subjects in whom deceptive behaviour was not affected by reputation risk. The left part of the figure shows the negative correlations. B) shows that the TCI Reward Dependence scale is an independent predictor of the RoD index. C) shows the significant negative correlation between RoD and Social Desirability Responding [Impression Management + Self-Deceptive Enhancement (BIDR)]. The right part of the figure shows the positive correlations between RoD and Manipulativeness (MACH IV) (D) and Moral Disengagement (MD 2) (E). The higher the impact of reputation (lower RoD), the higher the reward dependence and socially desirable responding traits; the lower the impact (higher RoD), the higher the manipulativeness and moral disengagement traits.

The seven scales of the TCI were entered as predictors in a standard multiple regression model, with the RoD as the dependent variable. The regression model was significant (R = .52, adjusted R² = .15, F(7,42) = 2.22, p = .05). “Reward Dependence” (β = −.32, t(42) = −2.03, p = .05) was the independent predictor of the impact of reputation on deceiving (Figure 2, B). For regression analyses, we computed Cohen's f² = R²/(1 − R²) as an index of effect size. Cohen's f² was computed on the adjusted R² (f² = .18).
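The regression statistics above are linked by standard formulas, which the following sketch works through. It assumes n = 50 (the participants retained after exclusion) and k = 7 predictors, consistent with the reported F(7,42); the function names are hypothetical helpers.

```python
def adjusted_r_squared(r: float, n: int, k: int) -> float:
    """Adjusted R squared from the multiple correlation R, sample size n and k predictors."""
    r2 = r ** 2
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def f_statistic(r: float, n: int, k: int) -> float:
    """Overall F statistic of the regression, with (k, n - k - 1) degrees of freedom."""
    r2 = r ** 2
    return (r2 / k) / ((1 - r2) / (n - k - 1))


def cohens_f_squared(r2: float) -> float:
    """Cohen's f squared effect size: R squared / (1 - R squared)."""
    return r2 / (1 - r2)


n, k, r = 50, 7, 0.52  # n and k are assumptions inferred from the reported df
print(f"adjusted R^2 = {adjusted_r_squared(r, n, k):.2f}")
print(f"F({k},{n - k - 1}) = {f_statistic(r, n, k):.2f}")
# The text states that f squared was computed on the adjusted R squared (.15).
print(f"Cohen's f^2 = {cohens_f_squared(0.15):.2f}")
```

With these inputs the computed adjusted R², F and f² agree with the reported values to two decimal places.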

In addition, we found a significant negative correlation between RoD and the BIDR Total Score (r = −.28, p = .05), indicating that the higher the impact of reputation, the more the subjects responded according to social desirability (Figure 2, C). Furthermore, RoD correlated positively with MD2 (r = .41, p<.01) and MACH IV (r = .29, p = .05). Thus, subjects who showed high scores in moral disengagement (Figure 2, D) and manipulativeness (Figure 2, E) were less influenced by reputation risk while being deceptive.

Discussion

We devised a novel experimental paradigm in the form of a card game to investigate the effect of situations and dispositions in promoting deceptive behaviour in an ecological context where participants decided when to lie to another individual. Important situational variables were manipulated. Indeed, participants were faced with the moral dilemma of reversing their loss by lying to an opponent who might or might not be in the same room. Moreover, the subjects' deceptive behaviour could or could not be disclosed to others, and participants were thus informed that their reputation could or could not be at risk. Finally, personality measures allowed us to assess the participants' dispositions both in general and towards deception.

At least two key results concerning the situational manipulations were obtained. First, although participants overall told the truth more often (more than 70% of total responses) than they lied (around 30% of total responses) and thus tended not to deceive others, a clear effect of Unfavourable Reality, i.e. of the situation where participants were more tempted to deceive, was found. In this latter situation, participants produced a comparable number of lie and truth responses. Thus, the event of loss did increase deceptive behaviour, although not to the point of inducing more lies than truth responses. That our participants in general did not lie by default is in keeping with an extensive study reporting that only a minority of 791 subjects tended to cheat and suggesting that the range of acceptable dishonesty was limited by the internal reward of being honest [6]. Importantly, although our paradigm did not abolish the participants' tendency to act in a socially appropriate and sensible manner, it was effective in pushing participants towards deception.

The second main result is that the reputation risk situation influenced the production of self-gain but not of altruistic lies. Studies indicate that altruistic lies, i.e. those produced to benefit someone else at one's own cost, are rated as the most acceptable and the most likely to occur. In contrast, self-gain lies, i.e. those involving the liar's benefit at others' cost, are rated as the least acceptable [4]. Different circumstances favour the production of one or the other type of lie. Guilt evoked by positive inequity (e.g. when winning a lottery at the cost of others), for example, makes people deceptively help others. In contrast, envy evoked by negative inequity (e.g. when losing a lottery to others' gain) leads people to deceptively hurt others [5].

According to the Theory of Self-Concept Maintenance, the production of lies co-exists with people's need to regard themselves as honest individuals [6]. Two main strategies allow people to act dishonestly without affecting their positive self-concept. The first has to do with the subjects' tendency to categorize their own behaviour in a malleable way and thus to reinterpret reality in a self-serving manner, finding a rationalization for dishonest actions. A clear reduction of deceptive behaviour is obtained by making the monetary gain that can be obtained by cheating very high. Indeed, in such circumstances, the categorization of one's own behaviour is less malleable and finding rationalizations for deception is much harder [6]. The second strategy is inattention to one's own moral standards, implemented so as to avoid any link between the self and dishonest actions [6]. In this vein, inducing subjects to pay attention to their own moral standards, by asking them to recall the Ten Commandments or to read a code of honour, decreases the subjects' tendency to cheat [6].

In our experimental paradigm, subjects were told the interaction was just a game, no matter whether reputation was or was not at risk. Since categorization malleability is comparable in the two conditions, finding rationalizations for being deceptive might have been equally at play. Also, we reasoned that if reputation enhances attention to people's moral standards, deception might be classified as wrong no matter whether it is produced with the intent to obtain self-gain or to help others. We found that reputation risk decreased self-gain lies but did not increase altruistic lies. This suggests that, at least in our experimental conditions, it is more important for people to appear honest than altruistic if being altruistic implies deception.

Indirect reciprocity, an important mechanism for the evolution of cooperation [27], consists of acts where the return for a favour comes from someone other than the recipient of the benefaction (i.e. “I scratch your back and someone else will scratch mine”). Helping others, even when they cannot directly reciprocate, pays off anyway. Indeed, studies demonstrate that people who help another individual are more likely to receive help from others [16], [17]. It is relevant that indirect reciprocity may be linked to building one's own reputation [27]. Our subjects knew that the other player would not be able to reciprocate their help directly. However, the other player could have spread the word about their honesty. Thus, we posit that a kind of indirect reciprocity might have played a role in the reputation condition of our study.

It is also worth noting that the pattern of deceptive behaviour was very similar whether or not the opponent was physically in the same room as the participant during the interactive game. This is somewhat counterintuitive, because the reputation risk should be higher when the opponent is in the same room. Indeed, reputation management can be easily induced in subjects even by subtle observation cues (e.g. pictures of eyes or eye-like stimuli) [28], [29]. A possible explanation for our result is that not allowing participants to have any physical contact with the other player made the same-room situation very similar to the online interaction. Therefore, it is likely that being aware that other people would know about the subject's behaviour was sufficient to induce a strong concern about the deception-related reputation risk, independently of whether the target of the deceptive behaviour was in the same or in a different room.

An interesting link between deceptive behaviour and dispositional tendencies was also found in our study. Indeed, the impact of reputation on self-gain deceptive behaviour turned out to be associated with a specific personality profile. In particular, the participants who lied less in the reputation-risk situation were also highly reward dependent, i.e. they were very sensitive to signals of social approval [30]. Additionally, these subjects showed high socially desirable responding (BIDR) traits, a measure that includes both the intentional tendency to distort one's self-presentation so as to be perceived more favourably by others (Impression Management) and the unintentional tendency to portray oneself in a positive light (Self-Deception). This result is in line with studies showing that external cues to self-regulation influence the expression of prejudice selectively in people with specific personality traits [20], [31]. It has been shown, for example, that during a stereotype inhibition task performed in a private or in a public condition (where the experimenter was checking for signs of prejudice), a larger error-related positive component was elicited only in subjects who cared about their social image and tried to appear non-prejudiced [32]. Our data show that, also in the case of deception, reputation has an impact only for people highly concerned about their social image. In addition, we found that the impact of reputation was less important for highly manipulative and morally disengaged subjects. Manipulative people admit they cheat to get what they want [25] and morally disengaged people are particularly able to avoid moral self-sanction for their misbehaviours [33].

Taken together, our results have important practical implications. Indeed, in modern societies often permeated by deception, people need to understand under what circumstances this kind of behaviour can be promoted or inhibited. We found that when people face unfavourable situations, the temptation to deceive becomes stronger. This tendency was not influenced by dispositional variables. Importantly, however, we found a clear link between personality dimensions and the reputation risk connected to the public disclosure of the deceptive behaviour. Mere knowledge of this risk seemed to work as an important restraint on deception. It must be noted that in our paradigm subjects did not risk being punished for their behaviour. Tellingly, evolutionary game theory studies [34], [35], [36] have examined the role of reward and punishment in promoting public cooperation and found that, under certain circumstances, reward and not only punishment can promote cooperation. This suggests that promoting a policy of transparency, where the entire decision-making process is carried out publicly and where building one's own reputation works as a social reward, could be a good deterrent for deception in social contexts. It is worth noting, however, that not all people are affected by reputation. Thus, further investigation is needed to understand the complex interaction between dispositional and situational determinants of deception. As a final remark, we want to emphasize that the novel paradigm used in the present study turned out to be effective in inducing a conflict between the temptation to deceive and the desire to act in a socially appropriate manner. Therefore, we propose it as a potentially very useful tool for testing lying and deception in ecological contexts at behavioural and neural levels.

Acknowledgments

Thanks are due to Alessandra Mancini, Manuela Melosini and Mariella Pazzaglia for help and discussion.

Footnotes

Competing Interests: The authors have declared that no competing interests exist.

Funding: S.M.A. is funded by Ministero Istruzione Università e Ricerca (Progetti di Ricerca di Interesse Nazionale, PRIN), Sapienza University of Rome and by IRCCS Fondazione Santa Lucia, Rome. The funders had no role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Nyberg D. The varnished truth: Truth telling and deceiving in ordinary life. Chicago: University of Chicago Press; 1993.

- 2. DePaulo BM, Kashy DA, Kirkendol SE, Wyer MM, Epstein JA. Lying in everyday life. J Pers Soc Psychol. 1996;70:979–995.

- 3. Kashy DA, DePaulo BM. Who lies? J Pers Soc Psychol. 1996;70:1037–1051.

- 4. McLeod BA, Genereux RL. Predicting the acceptability and likelihood of lying: The interaction of personality with type of lie. Pers Indiv Differ. 2008;45:591–596.

- 5. Gino F, Pierce L. Dishonesty in the name of equity. Psychol Sci. 2009;20:1153–1160. doi: 10.1111/j.1467-9280.2009.02421.x.

- 6. Mazar N, Amir O, Ariely D. The Dishonesty of Honest People: A Theory of Self-Concept Maintenance. J Marketing Res. 2008;45:633–644.

- 7. Mead NL, Baumeister RF, Gino F, Schweitzer ME, Ariely D. Too Tired to Tell the Truth: Self-Control Resource Depletion and Dishonesty. J Exp Soc Psychol. 2009;45:594–597. doi: 10.1016/j.jesp.2009.02.004.

- 8. Szabó G, Fáth G. Evolutionary games on graphs. Phys Rep. 2007;446:97–216.

- 9. Perc M, Szolnoki A. Coevolutionary games-a mini review. Biosystems. 2010;99:109–125. doi: 10.1016/j.biosystems.2009.10.003.

- 10. Helbing D, Szolnoki A, Perc M, Szabó G. Evolutionary Establishment of Moral and Double Moral Standards through Spatial Interactions. PLoS Comput Biol. 2010;6(4):e1000758. doi: 10.1371/journal.pcbi.1000758.

- 11. Sip KE, Roepstorff A, McGregor W, Frith CD. Detecting deception: The scope and limits. Trends Cogn Sci. 2008;12:48–53. doi: 10.1016/j.tics.2007.11.008.

- 12. Seth AK, Iversen JR, Edelman GM. Single trial discrimination of truthful from deceptive responses during a game of financial risk using alpha-band MEG signals. Neuroimage. 2006;32:465–476. doi: 10.1016/j.neuroimage.2006.02.050.

- 13. Greene JD, Paxton JM. Patterns of neural activity associated with honest and dishonest moral decisions. Proc Natl Acad Sci USA. 2009;106:12506–12511. doi: 10.1073/pnas.0900152106.

- 14. Sip KE, Lynge M, Wallentin M, McGregor WB, Frith CD, et al. The production and detection of deception in an interactive game. Neuropsychologia. 2010;48:3619–3626. doi: 10.1016/j.neuropsychologia.2010.08.013.

- 15. Izuma K, Saito DN, Sadato N. Processing of social and monetary rewards in the human striatum. Neuron. 2008;58:284–94. doi: 10.1016/j.neuron.2008.03.020.

- 16. Nowak M, Sigmund K. Evolution of indirect reciprocity. Nature. 2005;437:1291–8. doi: 10.1038/nature04131.

- 17. Milinski M, Semmann D, Krambeck HJ. Reputation helps solve the ‘tragedy of the commons’. Nature. 2002;415:424–426. doi: 10.1038/415424a.

- 18. Blanchard EA, Lilly T, Vaughn LA. Reducing the expression of racial prejudice. Psychol Sci. 1991;2:101–5.

- 19. Monteith MJ, Deneen NE, Tooman GD. The effect of social norm activation on the expression of opinions concerning gay men and blacks. Basic Appl Soc Psych. 1996;18:267–87.

- 20. Plant EA, Devine PG. Internal and external motivation to respond without prejudice. J Pers Soc Psychol. 1998;75:811–32. doi: 10.1177/0146167205275304.

- 21. Ganis G, Keenan JP. The cognitive neuroscience of deception. Soc Neurosci. 2009;4:465–472. doi: 10.1080/17470910802507660.

- 22. Gozna LF, Vrij A, Bull R. The impact of individual differences on perceptions of lying in everyday life and in a high stake situation. Pers Indiv Differ. 2001;31:1203–16.

- 23. Cloninger CR. The Temperament and Character Inventory (TCI): a guide to its development and use. Washington University, St Louis, Missouri: Centre for Psychobiology of Personality; 1994.

- 24. Paulhus DL. Paulhus deception scales: Manual of the balanced inventory of social desirable responding (BIDR-7). Buffalo/Toronto: Multi-Health Systems; 1998.

- 25. Christie R, Geis F. Studies in Machiavellianism. New York: Academic Press; 1970.

- 26. Caprara GV, Capanna C. Moral civic disengagement and values. Ricerche di Psicologia. 2005;28:67–84.

- 27. Nowak M. Five rules for the evolution of cooperation. Science. 2006;314(5805):1560–3. doi: 10.1126/science.1133755.

- 28. Haley KJ, Fessler DMT. Nobody's watching? Subtle cues affect generosity in an anonymous economic game. Evol Hum Behav. 2005;26:245–256.

- 29. Kurzban R, DeScioli P, O'Brien E. Audience effects on moralistic punishment. Evol Hum Behav. 2007;28:75–84.

- 30. Cloninger CR. A systematic method for clinical description and classification of personality variants. Arch Gen Psychiat. 1987;44:573–588. doi: 10.1001/archpsyc.1987.01800180093014.

- 31. Dunton BC, Fazio RH. An individual difference measure of motivation to control prejudiced reactions. Pers Soc Psychol Bull. 1997;23:316–26.

- 32. Amodio DM, Kubota JT, Harmon-Jones E, Devine PG. Alternative mechanisms for regulating racial responses according to internal vs. external cues. Soc Cogn Affect Neurosci. 2006;1:26–36. doi: 10.1093/scan/nsl002.

- 33. Bandura A. Social foundations of thought and action: A Social Cognitive Theory. Englewood Cliffs, NJ: Prentice Hall; 1986.

- 34. Szolnoki A, Perc M. Reward and cooperation in the spatial public goods game. EPL. 2010;92(3):38003.

- 35. Helbing D, Szolnoki A. Punish, but not too hard: How costly punishment spreads in the spatial public goods game. New J Phys. 2010;12:083005.

- 36. Sigmund K, Hauert C, Nowak M. Reward and punishment. Proc Natl Acad Sci USA. 2001;98(19):10757–62. doi: 10.1073/pnas.161155698.