Abstract

Evidence remains mixed about whether individuals with autism look less at eyes and whether they look more at mouths. Few studies have examined how spontaneous attention to facial features relates to face processing abilities. The current study tested the ability to discriminate gender from facial features, namely eyes and mouths, by comparing the accuracy of 17 children and 15 adults with autism to that of 17 typically developing children and 15 typically developing adults. Results indicated that all participants, regardless of diagnosis, discriminated gender more accurately from eyes than from mouths. However, adults with autism were significantly worse than adults without autism at discriminating gender from eyes.

Keywords: Autism, Gender Discrimination, Face Perception, Facial Features

Research suggests that individuals with autism process facial information differently than typically developing individuals do, with a particular impairment in processing eye information. The Diagnostic and Statistical Manual of Mental Disorders (DSM-IV-TR) cites impaired eye-to-eye gaze as a symptom of autism (American Psychiatric Association, 2000). Empirically, individuals with autism perform worse than typically developing individuals on the “Reading the Mind in the Eyes” Test (Baron-Cohen, Wheelwright, Hill, Raste, & Plumb, 2001), demonstrating difficulty identifying thoughts and intentions from eyes presented in isolation. Unlike typically developing individuals, individuals with autism process the lower half of faces (mouths) better than or as well as the upper half of faces (eyes) on identity recognition tasks with familiar faces (Langdell, 1978), unfamiliar faces (Riby, Doherty-Sneddon, & Bruce, 2009), and isolated facial features (Joseph & Tanaka, 2003). Additionally, eye tracking studies reveal that during passive viewing, individuals with autism fixate on mouths more than individuals without autism do when viewing social scenes (Fletcher-Watson, Leekam, Benson, Frank, & Findlay, 2009; Klin, Jones, Schultz, Volkmar, & Cohen, 2002; Norbury, et al., 2009), images of facial emotions (Pelphrey, et al., 2002), and photographs of faces where high-spatial frequency information is removed (i.e., the Bubbles technique; Spezio, Adolphs, Hurley, & Piven, 2007b). Eye tracking during emotion recognition tasks likewise reveals that individuals with autism fixate on mouths more than individuals without autism (Neumann, Spezio, Piven, & Adolphs, 2006; Pelphrey, et al., 2002; Spezio, Adolphs, Hurley, & Piven, 2007a). Furthermore, a looking bias toward mouths may appear as early as six months of age in some infants at risk for autism (Merin, Young, Ozonoff, & Rogers, 2007).

Three explanations have been proposed for why individuals with autism have difficulty processing eye information, perhaps resulting in an increased reliance on mouths during face processing. First, researchers suggest that eye avoidance by individuals with autism is due to overarousal caused by the heightened emotional information conveyed by eyes. The intensity of eyes may be disconcerting to individuals with autism; consistent with this view, Dalton et al. (2005) found that amygdala activation was positively associated with eye fixations in individuals with autism. Second, researchers suggest that greater attention to mouths helps individuals with autism obtain verbal information, so that they become accustomed to looking at mouths rather than eyes (e.g., Klin, et al., 2002). Joseph and Tanaka (2003) proposed that language impairments associated with autism may “foster an early and enduring tendency to attend to mouths in an effort to disambiguate speech sounds via lip reading, especially when other communicative cues from the eyes are inaccessible” (p. 538). Finally, researchers suggest that individuals with autism simply cannot process information from eyes well and therefore learn to compensate by relying on mouth information instead (e.g., Joseph & Tanaka, 2003).

Nevertheless, other empirical evidence demonstrates that individuals with autism do attend to and process facial information from eyes better than from mouths, similar to typically developing individuals. Eye tracking studies suggest that, like matched controls, individuals with autism spend significantly more time fixating on the eyes than on any other feature (Hernandez, et al., 2009) and that their initial fixations while passively viewing emotional faces tend to be directed toward the eyes (van der Geest, Kemner, Verbaten, & van Engeland, 2002). Behavioral evidence also suggests that individuals with autism attend more to eyes than to mouths during emotion recognition (Hobson, Ouston, & Lee, 1988). Furthermore, Bar-Haim, Shulman, Lamy, and Reuveni (2006) demonstrated that children with autism did not differ from control children in attention to eyes and mouths during a probe-detection task in which participants detected a dot that appeared on a face. Like children without autism, children with autism were faster at detecting a dot’s onset near the eyes than near the mouth, suggesting that attention was oriented toward the eyes in anticipation of the probe for all children.

Contradictory evidence from research with and without eye tracking raises an important consideration. Although eye tracking tells us where participants look, it does not tell us whether attentional processing occurs. Even if individuals look directly at specific facial features, eye movements alone cannot reveal whether they are processing information about those features. To know whether facial information is attended to and processed, we need to examine performance on behavioral tasks. Therefore, the central question of the current study is whether individuals with autism process facial information from eyes and mouths, and how their accuracy with eye information relative to mouth information compares to that of typically developing individuals. To answer this question, we employed a simple gender discrimination task that relies on perceptual processing without memory demands.

Gender discrimination is an important ability for establishing and maintaining competent social interactions. Previous research demonstrates that individuals with autism have difficulty discriminating gender from whole faces when the faces are not typically masculine or feminine (Newell, Best, Gastgeb, Rump, & Strauss, in press). Adults with autism also show significantly slower reaction times during gender identification tasks (Behrmann, et al., 2006; Newell, et al., in press). Another reason for employing a gender task is that successful gender discrimination relies on eye information (e.g., Schyns, Bonnar, & Gosselin, 2002). Eye regions, especially brow-to-lid distances, provide salient perceptual differences that support gender discrimination (Campbell, Benson, Wallace, Doesbergh, & Coleman, 1999). Brow thickness, the distance between eye and brow, and eye shape and size differ between men and women (Burton, Bruce, & Dench, 1993). McPartland (2005) found that lower accuracy on a gender task was associated with fewer fixations on the eyes by individuals with autism. Limited attention to eyes may reduce how well individuals with autism abstract social information from eyes, thus impairing social interactions. In typical development, individuals learn to discriminate gender by 8 to 12 years of age (Newell, et al., in press). Adults discriminate facial gender accurately within seconds (O'Toole, et al., 1998) and are quite accurate at identifying gender from eyes presented in isolation (Roberts & Bruce, 1988).

Therefore, given evidence that individuals with autism may look less at eyes, this study asks whether, over the course of development, individuals with autism learn enough perceptual information about facial features to successfully discriminate gender from eyes and mouths presented in isolation from whole faces. If individuals with autism look less at eyes, they may have less experiential knowledge about how eyes vary within and between genders. Conversely, if individuals with autism look more at mouths, do they develop enhanced experiential knowledge, relative to typically developing individuals, about how mouths vary within and between genders?

Participants completed two gender discrimination tasks on a computer, one with eyes and one with mouths. It was predicted that the control group would perform better on the eye task than on the mouth task because eyes provide more useful gender cues. In contrast, three outcomes were considered possible for the autism group: it might discriminate gender from eyes and mouths (1) as well as the control group, (2) better on the mouth task relative to the eye task, or (3) more poorly on both tasks relative to the control group.

Method

Participants

The autism group included 17 children and 15 adults. All participants in the autism group were high-functioning and met DSM-IV-TR criteria for autism (American Psychiatric Association, 2000). Autism diagnoses were confirmed with the Autism Diagnostic Interview-Revised (ADI-R; Rutter, LeCouteur, & Lord, 2003) and the Autism Diagnostic Observation Schedule (ADOS; Lord, Rutter, DiLavore, & Risi, 2003). IQs were assessed using the Wechsler Abbreviated Scale of Intelligence (WASI; Wechsler, 1999). The control group included 17 typically developing children and 15 typically developing adults. Groups were matched on gender, ethnicity, chronological age, and verbal, performance, and full scale IQ scores. Table 1 reports diagnostic matching criteria and group demographic information; there were no significant differences between groups on any of the demographic variables. Control participants had a negative family history of autism in first- and second-degree relatives and a negative family history in first-degree relatives of affective, anxiety, or other major psychiatric disorders based on the Family History Screen (Weissman, et al., 2000).

Table 1.

Participant Demographic Characteristics

| Measure | Adult Autism (N = 15) M (SD) | Range | Adult Control (N = 15) M (SD) | Range | p | Child Autism (N = 17) M (SD) | Range | Child Control (N = 17) M (SD) | Range | p |
|---|---|---|---|---|---|---|---|---|---|---|
| CA | 21.3 (6.7) | 16 – 38 | 23.3 (6.4) | 16 – 36 | .41 | 11.6 (1.6) | 8 – 14 | 12.0 (2.0) | 8 – 14 | .51 |
| VIQ | 104.5 (12.7) | 81 – 126 | 107.9 (8.5) | 91 – 123 | .41 | 100.5 (10.8) | 82 – 117 | 104.4 (8.9) | 88 – 121 | .27 |
| PIQ | 104.7 (10.0) | 80 – 118 | 109.5 (9.2) | 86 – 119 | .18 | 108.2 (15.9) | 87 – 139 | 107.3 (8.0) | 88 – 120 | .83 |
| FSIQ | 105.1 (8.6) | 92 – 125 | 109.9 (8.9) | 88 – 120 | .14 | 104.8 (12.2) | 85 – 126 | 106.8 (8.6) | 86 – 121 | .57 |
| M/F | 13/2 | | 13/2 | | | 17/0 | | 17/0 | | |
| Ethnicity | 15 Caucasian | | 15 Caucasian | | | 17 Caucasian | | 17 Caucasian | | |

Note. Adults N = 30; children N = 34. CA = Chronological Age in years; VIQ = Verbal IQ; PIQ = Performance IQ; FSIQ = Full Scale IQ; M/F = number of male/female participants. Ethnicity was obtained by self-report.

Stimuli

Stimuli were color images of 38 adult eye regions and 38 adult mouth regions. Eye stimuli were created by cropping eye regions from faces to show a band approximately 14.7 cm wide × 4.0 cm high displaying the eyes, brows, and temples (see Figures 1 and 2). Mouth stimuli were created by cropping mouth regions from faces to show a band approximately 7.2 cm wide × 2.5 cm high displaying the lips and surrounding skin, but no teeth (see Figures 3 and 4).

Figure 1.

Example stimulus of female eye region.

Figure 2.

Example stimulus of male eye region.

Figure 3.

Example stimulus of female mouth region.

Figure 4.

Example stimulus of male mouth region.

Procedure

Before testing, written informed consent was obtained using procedures approved by the University of Pittsburgh Medical Center Institutional Review Board. All participants were compensated.

Participants were seated in front of a 40 cm computer monitor at a viewing distance of 61 cm. The visual angle was 14° for eye stimuli and 7° for mouth stimuli. These relatively large stimuli were chosen to ensure that any failure to discriminate gender was not due to poor acuity. Testing lasted approximately ten minutes. In each of the two tasks, participants judged whether each feature belonged to a female or a male face by pressing buttons labeled male or female. Stimuli remained visible until participants responded. Task order was not counterbalanced; rather, the eye task was always presented first to orient participants to the seemingly unusual task. A 15-minute delay separated the two tasks.
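As a rough check on the reported visual angles, the sketch below applies the standard formula θ = 2·arctan(s / 2d) to the stimulus sizes from the Stimuli section and the 61 cm viewing distance. It assumes that the reported angles refer to the horizontal extent (width) of each band and that stimuli were viewed centrally at their stated on-screen size; the function name is illustrative only.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by a centrally viewed stimulus:
    theta = 2 * atan(size / (2 * distance))."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

VIEWING_DISTANCE_CM = 61.0  # viewing distance reported in the Procedure

# Assumption: the reported angles refer to the width of each stimulus band.
for label, width_cm in [("eye band", 14.7), ("mouth band", 7.2)]:
    angle = visual_angle_deg(width_cm, VIEWING_DISTANCE_CM)
    print(f"{label}: {angle:.1f} deg")
```

Under these assumptions the bands subtend about 13.7° and 6.8°, which round to the reported 14° and 7°.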

Results

The primary dependent measure was accuracy, calculated as the percentage of correct responses on each task. Although groups did not differ significantly on any demographic variable, correlations between overall accuracy and VIQ, PIQ, and FSIQ were also computed for each group (i.e., children with autism, children without autism, adults with autism, and adults without autism). Accuracy was not significantly associated with any measure of IQ.
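The accuracy measure can be sketched as a simple percentage of matching responses. This is a minimal illustration rather than the study's scoring script: the function name and the "F"/"M" response codes are hypothetical, and the granularity constant assumes that each task presented each of its 38 stimuli exactly once, in which case a single trial would be worth 100/38 ≈ 2.6 percentage points.

```python
from typing import Sequence

def percent_correct(responses: Sequence[str], answers: Sequence[str]) -> float:
    """Percentage of trials on which the gender judgment matches the true
    gender of the face the feature was cropped from.

    The 'F' / 'M' codes are hypothetical placeholders.
    """
    if len(responses) != len(answers):
        raise ValueError("responses and answers must have the same length")
    if not answers:
        raise ValueError("at least one trial is required")
    correct = sum(r == a for r, a in zip(responses, answers))
    return 100.0 * correct / len(answers)

# Assumption: 38 trials per task (one per stimulus), so one trial ~ 2.6 points.
POINTS_PER_TRIAL = 100.0 / 38

# Hypothetical four-trial example, not study data: 3 of 4 correct.
print(percent_correct(["F", "M", "M", "F"], ["F", "M", "F", "F"]))  # 75.0
```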

A three-way mixed ANOVA was performed on mean accuracy scores, with group (autism vs. control) and age (child vs. adult) as between-subjects factors and feature (eyes vs. mouth) as a within-subjects factor. There was a significant main effect of feature, F(1, 60) = 335.15, p < .001, and a significant Group × Age × Feature interaction, F(1, 60) = 4.44, p < .05.
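Because the within-subjects factor (feature) has only two levels, effects involving feature can be re-expressed on each participant's eye-minus-mouth difference score. In particular, the Group × Age × Feature interaction is equivalent to a Group × Age interaction contrast on those difference scores, with the error term pooled across the four between-subjects cells (df = 64 − 4 = 60). The sketch below computes such a single-df contrast F. It is an illustrative re-expression of the analysis, not the authors' code, and the function and cell names are hypothetical.

```python
import math
from statistics import mean, variance

def eye_minus_mouth(eye, mouth):
    """Per-participant difference scores (eye accuracy minus mouth accuracy)."""
    if len(eye) != len(mouth):
        raise ValueError("eye and mouth scores must be paired")
    return [e - m for e, m in zip(eye, mouth)]

def contrast_F(cells, weights):
    """Single-df contrast F on difference scores across between-subjects cells.

    cells   -- dict: cell name -> list of difference scores
    weights -- dict: cell name -> contrast weight (weights must sum to 0)
    Returns (F, df_error); the error term pools within-cell variances.
    """
    if abs(sum(weights[k] for k in cells)) > 1e-12:
        raise ValueError("contrast weights must sum to zero")
    df_error = sum(len(d) - 1 for d in cells.values())
    mse = sum((len(d) - 1) * variance(d) for d in cells.values()) / df_error
    estimate = sum(weights[k] * mean(d) for k, d in cells.items())
    se = math.sqrt(mse * sum(weights[k] ** 2 / len(d) for k, d in cells.items()))
    return (estimate / se) ** 2, df_error

# Group x Age interaction on difference scores (hypothetical cell names);
# this corresponds to the Group x Age x Feature interaction in the mixed ANOVA.
THREE_WAY_WEIGHTS = {"asd_child": 1, "td_child": -1, "asd_adult": -1, "td_adult": 1}
```

With only the two adult cells and weights of +1 and −1, the same function tests a Feature × Group interaction with df = 15 + 15 − 2 = 28, matching the degrees of freedom reported for the adult analysis.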

To interpret this three-way interaction, separate two-way ANOVAs were conducted on mean accuracy scores for children and adults, with group (autism vs. control) as a between-subjects factor and feature (eye vs. mouth) as a within-subjects factor. For children, there was a significant main effect of feature, F(1, 32) = 150.28, p < .001, indicating that accuracy was greater for the eye task (M = 78.76%, SD = 9.08%) than for the mouth task (M = 57.91%, SD = 8.00%); however, there was no significant Feature × Group interaction (see Table 3a). Similarly, for adults, there was a significant main effect of feature, F(1, 28) = 196.17, p < .001, indicating that both groups discriminated gender more accurately from eyes than from mouths (see Table 3b). There was also a significant main effect of group, F(1, 28) = 6.99, p = .01, indicating that control adults were more accurate overall than adults with autism (see Table 3b). More importantly, there was a significant Feature × Group interaction, F(1, 28) = 6.25, p < .05. Post hoc t-tests showed that the groups did not differ in accuracy on the mouth task, t(28) = 0.83, p > .05, whereas the adult control group was significantly more accurate than the adult autism group at discriminating gender from eyes, t(28) = 3.45, p < .01.
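The post hoc comparisons can be roughly checked from the rounded summary statistics in Table 3b. The sketch below assumes pooled-variance independent-samples t-tests, which is consistent with the reported df of 28; the function name is illustrative. Because the table values are rounded, the recomputed statistics (about 3.43 for eyes and 0.83 for mouths) only approximate the reported t(28) = 3.45 and 0.83.

```python
import math

def pooled_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t statistic (pooled variance) from summary statistics.

    Returns (t, df) with df = n1 + n2 - 2.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    t = (m1 - m2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df

# Rounded adult values from Table 3b (control group listed first).
t_eye, df = pooled_t_from_summary(87.8, 5.9, 15, 77.9, 9.5, 15)
t_mouth, _ = pooled_t_from_summary(62.3, 6.9, 15, 60.1, 7.6, 15)
print(f"eye task:   t({df}) = {t_eye:.2f}")
print(f"mouth task: t({df}) = {t_mouth:.2f}")
```

The same function can be applied to the rows of Table 1; for example, the adult chronological-age values give |t(28)| ≈ 0.84, in line with the reported p = .41.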

Table 3.

Table 3a. Children’s Mean Percentage Correct (Standard Deviation) by Group for Eye vs. Mouth Tasks

| Task | Child Autism Group | Child Control Group |
|---|---|---|
| Eye Task | 77.4% (10.2%) | 80.2% (7.9%) |
| Mouth Task | 55.5% (9.6%) | 60.4% (5.1%) |

Table 3b. Adults’ Mean Percentage Correct (Standard Deviation) by Group for Eye vs. Mouth Tasks

| Task | Adult Autism Group | Adult Control Group |
|---|---|---|
| Eye Task | 77.9% (9.5%) | 87.8% (5.9%) |
| Mouth Task | 60.1% (7.6%) | 62.3% (6.9%) |

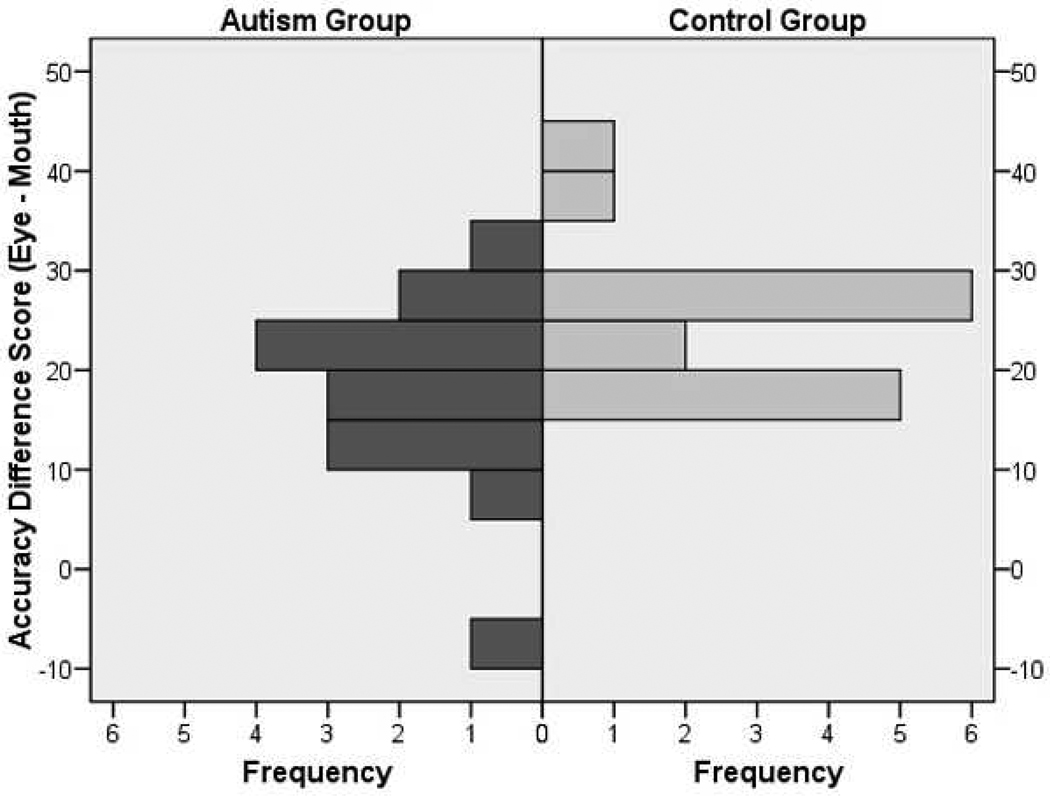

Finally, the significant group difference in adult performance on the eye task was explored further by examining individual differences. The distribution of accuracy difference scores for adults (eye task accuracy minus mouth task accuracy) revealed an interesting pattern. As seen in Figure 5, almost all participants in both the control and autism groups discriminated gender better from eyes than from mouths. However, a subgroup of about 30% of the adults with autism discriminated gender from eyes and mouths with nearly equal accuracy, differing by no more than ±15 percentage points between tasks.
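The difference-score analysis can be sketched as follows. This is illustrative only: the function names are hypothetical, the inclusive 15-point cutoff is one reading of "a difference in accuracy of ±15%" (interpreted as percentage points), and the example values are invented for demonstration rather than taken from the study.

```python
from typing import Sequence

def accuracy_differences(eye_pct: Sequence[float], mouth_pct: Sequence[float]) -> list:
    """Per-participant eye-minus-mouth accuracy difference (percentage points)."""
    if len(eye_pct) != len(mouth_pct):
        raise ValueError("eye and mouth scores must be paired")
    return [e - m for e, m in zip(eye_pct, mouth_pct)]

def share_within_band(diffs: Sequence[float], band: float = 15.0) -> float:
    """Proportion of participants whose |eye - mouth| difference is <= band points."""
    if not diffs:
        raise ValueError("no participants")
    return sum(abs(d) <= band for d in diffs) / len(diffs)

# Hypothetical five-participant example, not study data.
diffs = accuracy_differences([85, 80, 70, 90, 75], [60, 70, 62, 55, 68])
print(diffs)                     # [25, 10, 8, 35, 7]
print(share_within_band(diffs))  # 0.6
```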

Figure 5.

Distribution of accuracy difference scores between the eye and mouth tasks for adult groups.

Discussion

Using a simple gender discrimination task, we conducted the first investigation of gender discrimination from isolated facial features by individuals with autism, examining whether they process gender information from eyes and mouths. Results revealed that individuals with autism, like typically developing individuals, discriminated gender more accurately from eye than from mouth information. However, adults with autism were less accurate than adults without autism at discriminating gender from eyes. This is consistent with previous findings that individuals with autism do not perform as well as typically developing individuals on gender discrimination tasks with whole faces (Newell, et al., in press).

The current findings confirm that gender information is more discernible from eyes than from mouths and, importantly, that this pattern holds regardless of diagnosis. These results may be surprising given that some studies have shown that individuals with autism look at the mouth more than the eyes (Klin, et al., 2002; Langdell, 1978; Pelphrey, et al., 2002). Given their limited attention to eyes in general, individuals with autism might have been expected to perform poorly on the eye task; given their increased attention to mouths, they might have been expected to discriminate gender better from mouths than from eyes. Instead, these results support previous evidence suggesting that individuals with autism can use information from eyes for face processing and do not appear to be superior to typically developing individuals at using mouth information to process faces (e.g., Rutherford, Clements, & Sekuler, 2007). That individuals with autism process gender information more accurately from the eyes than from the mouth suggests that they have attended to the eyes over the course of development.

Children with and without autism did not differ significantly in using eyes or mouths to discriminate gender. One possible explanation is that typically developing children are still developing gender discrimination abilities (Newell, et al., in press). This idea is supported by the finding that typically developing individuals improved across age groups on the eye task, whereas individuals with autism did not.

There was a significant difference between adults with and without autism in using eyes to discriminate gender. One explanation for this group difference is that individuals with autism may be proficient at discriminating gender from eyes when the gender is typical but poor when it is less typical, because the gender information is less obvious. Research shows that individuals with autism have difficulty discriminating gender from whole faces when faces are less typical exemplars of each gender (Newell, et al., in press). Individuals with autism also have difficulty categorizing less typical exemplars of basic emotions (Rump, Giovannelli, Minshew, & Strauss, in press) and of non-social object categories (Gastgeb, Strauss, & Minshew, 2006). Therefore, in the current study, individuals with autism may have been able to discriminate gender from eyes or mouths when exemplars were typical of men or women, but their discrimination may have been limited for less typical exemplars. Future research should investigate more closely whether the gender typicality of facial features influences gender discrimination accuracy in individuals with autism.

With regard to individual differences, the current results suggest that most adults with autism use eye information more than mouth information to discriminate gender. However, a subgroup of adults in the autism group discriminated gender equally well from eyes and mouths. This pattern is interesting given that autism is a spectrum disorder with a high degree of variability in symptom severity, and it suggests that future studies should consider how individual differences in performance within samples of individuals with autism relate to attention to eye versus mouth information.

The current study has several limitations. First, participants included only high-functioning individuals with autism; thus, the results may not generalize to the full autism spectrum. Second, although the sample included children and adults, the developmental findings were based on cross-sectional comparisons rather than a longitudinal approach. Third, because facial features were displayed in isolation, the results cannot be generalized to the processing of whole faces as encountered in the real world. Despite these limitations, the current findings are useful for understanding the development of face processing abilities, such as gender discrimination, in individuals with autism. In addition, these results provide evidence that individuals with autism attend to eyes and learn to extract gender information from them. Future studies of face processing in individuals with autism should consider that the amount of time spent looking at eyes may not reflect the quality of eye information processing. It seems that regardless of the attention paid to eyes in general, adults with autism still process enough information from eyes to abstract gender information successfully, although not quite as successfully as typically developing adults.

Acknowledgements

This research was supported by an NIH Collaborative Program of Excellence in Autism (CPEA) Grant P01-HD35469 to Nancy J. Minshew and Mark S. Strauss. We are grateful to the participants and their families for making this research possible and to the CPEA staff for their efforts in recruiting and scheduling participants. We especially thank Holly Gastgeb and Keiran Rump for their time testing participants.

References

- American Psychiatric Association. Diagnostic and statistical manual of mental disorders. 4th ed. Washington, DC: American Psychiatric Association; 2000.

- Bar-Haim Y, Shulman C, Lamy D, Reuveni A. Attention to Eyes and Mouth in High-Functioning Children with Autism. Journal of Autism and Developmental Disorders. 2006;36(1):131–137. doi: 10.1007/s10803-005-0046-1.

- Baron-Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I. The "Reading the mind in the eyes" Test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism. Journal of Child Psychology and Psychiatry. 2001;42(2):241–251.

- Behrmann M, Avidan G, Leonard GL, Kimchi R, Luna B, Humphreys K, et al. Configural processing in autism and its relationship to face processing. Neuropsychologia. 2006;44(1):110–129. doi: 10.1016/j.neuropsychologia.2005.04.002.

- Burton AM, Bruce V, Dench N. What's the difference between men and women? Evidence from facial measurement. Perception. 1993;22(2):153–176. doi: 10.1068/p220153.

- Campbell R, Benson PJ, Wallace SB, Doesbergh S, Coleman M. More about brows: How poses that change brow position affect perceptions of gender. Perception. 1999;28:489–504. doi: 10.1068/p2784.

- Dalton KM, Nacewicz BM, Johnstone T, Schaefer HS, Gernsbacher MA, Goldsmith HH, et al. Gaze fixation and the neural circuitry of face processing in autism. Nature Neuroscience. 2005;8(4):519–526. doi: 10.1038/nn1421.

- Fletcher-Watson S, Leekam SR, Benson V, Frank MC, Findlay JM. Eye-movements reveal attention to social information in autism spectrum disorder. Neuropsychologia. 2009. doi: 10.1016/j.neuropsychologia.2008.07.016.

- Gastgeb HZ, Strauss MS, Minshew NJ. Do Individuals With Autism Process Categories Differently? The Effect of Typicality and Development. Child Development. 2006;77(6):1717–1729. doi: 10.1111/j.1467-8624.2006.00969.x.

- Hernandez N, Metzger A, Magné R, Bonnet-Brilhault F, Roux S, Barthelemy C, et al. Exploration of core features of a human face by healthy and autistic adults analyzed by visual scanning. Neuropsychologia. 2009;47(4):1004–1012. doi: 10.1016/j.neuropsychologia.2008.10.023.

- Hobson RP, Ouston J, Lee A. What's in a face? The case of autism. British Journal of Psychology. 1988;79(4):441–453. doi: 10.1111/j.2044-8295.1988.tb02745.x.

- Joseph RM, Tanaka J. Holistic and part-based face recognition in children with autism. Journal of Child Psychology and Psychiatry. 2003;44(4):529–542. doi: 10.1111/1469-7610.00142.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry. 2002;59(9):809–816. doi: 10.1001/archpsyc.59.9.809.

- Langdell T. Recognition of faces: An approach to the study of autism. Journal of Child Psychology and Psychiatry. 1978;19(3):255–268. doi: 10.1111/j.1469-7610.1978.tb00468.x.

- Lord C, Rutter M, DiLavore PC, Risi S. Autism diagnostic observation schedule (ADOS). Los Angeles, CA: Western Psychological Services; 2003.

- McPartland JC. Face perception and recognition processes in Asperger syndrome as revealed by patterns of visual attention. Dissertation Abstracts International: Section B: The Sciences and Engineering. 2005;66.

- Merin N, Young G, Ozonoff S, Rogers S. Visual fixation patterns during reciprocal social interaction distinguish a subgroup of 6-month-old infants at-risk for autism from comparison infants. Journal of Autism and Developmental Disorders. 2007;37(1):108–121. doi: 10.1007/s10803-006-0342-4.

- Neumann D, Spezio ML, Piven J, Adolphs R. Looking you in the mouth: abnormal gaze in autism resulting from impaired top-down modulation of visual attention. Social Cognitive and Affective Neuroscience. 2006;1(3):194–202. doi: 10.1093/scan/nsl030.

- Newell LC, Best CA, Gastgeb H, Rump KM, Strauss MS. The development of categorization and facial knowledge: Implications for the study of autism. In: Oakes LM, Cashon CH, Casasola M, Rakison DH, editors. Early Perceptual and Cognitive Development. New York: Oxford Press; in press.

- Norbury CF, Brock J, Cragg L, Einav S, Griffiths H, Nelson K. Eye-movement patterns are associated with communicative competence in autistic spectrum disorders. Journal of Child Psychology and Psychiatry. 2009;50:834–842. doi: 10.1111/j.1469-7610.2009.02073.x.

- O'Toole AJ, Deffenbacher KA, Valentin D, McKee K, Huff D, Abdi H. The perception of face gender: The role of stimulus structure in recognition and classification. Memory & Cognition. 1998;26(1):146–160. doi: 10.3758/bf03211378.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. Journal of Autism and Developmental Disorders. 2002;32(4):249–261. doi: 10.1023/a:1016374617369.

- Riby DM, Doherty-Sneddon G, Bruce V. The eyes or the mouth? Feature salience and unfamiliar face processing in Williams syndrome and autism. The Quarterly Journal of Experimental Psychology. 2009;62(1):189–203. doi: 10.1080/17470210701855629.

- Roberts T, Bruce V. Feature saliency in judging the sex and familiarity of faces. Perception. 1988;17(4):475–481. doi: 10.1068/p170475.

- Rump KM, Giovannelli JL, Minshew NJ, Strauss MS. The Development of Emotion Recognition in Individuals with Autism: An Expertise Approach. Child Development. In press. doi: 10.1111/j.1467-8624.2009.01343.x.

- Rutherford MD, Clements KA, Sekuler AB. Differences in discrimination of eye and mouth displacement in autism spectrum disorders. Vision Research. 2007;47(15):2099–2110. doi: 10.1016/j.visres.2007.01.029.

- Rutter M, LeCouteur A, Lord C. Autism diagnostic interview, revised (ADI-R). Los Angeles, CA: Western Psychological Services; 2003.

- Schyns PG, Bonnar L, Gosselin F. Show Me the Features! Understanding Recognition From the Use of Visual Information. Psychological Science. 2002;13(5):402–409. doi: 10.1111/1467-9280.00472.

- Spezio ML, Adolphs R, Hurley RSE, Piven J. Abnormal use of facial information in high-functioning autism. Journal of Autism and Developmental Disorders. 2007a;37(5):929–939. doi: 10.1007/s10803-006-0232-9.

- Spezio ML, Adolphs R, Hurley RSE, Piven J. Analysis of face gaze in autism using "Bubbles". Neuropsychologia. 2007b;45(1):144–151. doi: 10.1016/j.neuropsychologia.2006.04.027.

- van der Geest JN, Kemner C, Verbaten MN, van Engeland H. Gaze behavior of children with pervasive developmental disorder toward human faces: A fixation time study. Journal of Child Psychology and Psychiatry. 2002;43(5):669–678. doi: 10.1111/1469-7610.00055.

- Wechsler D. Wechsler abbreviated scale of intelligence (WASI). San Antonio: The Psychological Corporation; 1999.

- Weissman MM, Wickramaratne P, Adams P, Wolk, Verdeli, Olfson. Brief screening for family psychiatric history. Archives of General Psychiatry. 2000;57:675–682. doi: 10.1001/archpsyc.57.7.675.