Abstract

Emotion recognition was investigated in typically developing individuals and individuals with autism. Experiment 1 tested children (5 to 7 years, n = 37) with brief video displays of facial expressions that varied in subtlety. Children with autism performed worse than the control children. In Experiment 2, three age groups (8 to 12 years, n = 49; 13 to 17 years, n = 49; and adults n = 45) were tested on the same stimuli. Whereas the performance of control individuals was best in the adult group, the performance of individuals with autism was similar in all age groups. Results are discussed with respect to underlying cognitive processes that may be affecting the development of emotion recognition in individuals with autism.

When Kanner first articulated his description of the autistic child in 1943, he viewed “[their] inability to form the usual, biologically provided affective contact with people,” as a primary feature of the disorder. Since that time, numerous studies have further elucidated the components of this complex syndrome. Kanner’s original conceptualization has now been somewhat modified, but “disturbances in affective contact” are still cited as a central feature of autism. Relating emotionally to others presents a major challenge to individuals with autism as they maneuver through the social world. Both clinicians (Attwood, 1998; Hobson, 2004) and parents (Capps, Kasari, Yirmiya, & Sigman, 1993) note that for individuals with autism, one of the difficulties they face in their understanding of self and others is in the understanding of emotion. Individuals with autism frequently fail to react appropriately to the emotions of others, and researchers have suggested that an impairment in emotional expression recognition may contribute to these inappropriate reactions and to their understanding of emotion in general.

Emotion Recognition in Typically Developing Individuals

The ability to recognize facial expressions of emotion is crucial to establishing interpersonal connections early in life. Facial expressions are among the primary signals used to understand the feelings and intentions of others, and it has been argued that the ability to recognize basic emotional expressions may be universal (Darwin, 1872; Ekman, 2003). Research has shown that typically developing infants can discriminate static displays of happy, sad, and surprised faces as early as three or four months of age (Young-Browne, Rosenfeld, & Horowitz, 1977), and dynamic displays of happy and angry faces by seven months of age (Soken & Pick, 1992). By the age of four years, typically developing children can freely label prototypical (full or exaggerated) displays of happiness, sadness, and anger with almost perfect accuracy, and are also becoming more adept at recognizing fear and surprise (Widen & Russell, 2003). While some research suggests the ability to recognize most emotional expressions reaches adult levels by 10 years of age (e.g., Bruce, et al., 2000; Durand, Gallay, Seigneuric, Robichon, & Baudouin, 2007; Mondloch, Geldart, Maurer, & Le Grand, 2003), this appears to be dependent on task demands. While by this age children are able to identify prototypical emotional expressions at levels comparable to adults, studies indicate that even by adolescence, individuals may still exhibit some difficulty with recognizing less intense emotions (Herba, Landau, Russell, Ecker, & Phillips, 2006; Thomas, De Bellis, Graham, & LaBar, 2007). This skill, along with the speed with which individuals process emotions, appears to continue to develop through adolescence before reaching its peak in adulthood (De Sonneville, et al., 2002; Thomas et al., 2007). By adulthood, individuals are not only highly proficient and very fast at perceiving prototypical expressions of emotions in others (De Sonneville, et al., 2002; Ekman, 2003), but they are also able to identify even very subtle expressions of emotion (Calder, Young, Perrett, Etcoff, & Rowland, 1996).

Emotion Recognition in Individuals with Autism

Over 35 studies have examined the ability of both children and adults with autism to recognize common categories of facial expression, yet no studies to date have clearly delineated the developmental course of this skill in this population. Indeed, it remains unclear if individuals with autism truly have a deficit in recognizing emotional expression in faces. Some studies suggest the ability is intact (e.g., Capps, Yirmiya, & Sigman, 1992; Gepner, Deruelle, & Grynfeltt, 2001; Ozonoff, Pennington, & Rogers, 1990), while others suggest it is impaired, relative to controls (Celani, Battacchi, & Arcidiacono, 1999; Hobson, Ouston, & Lee, 1989; Lindner & Rosen, 2006; Macdonald, et al., 1989). Given the plethora of methodologies applied to this question and the wide age ranges of the samples used, it is difficult to pinpoint exactly what accounts for these equivocal findings. Researchers appear to be more focused on how individuals with autism perform relative to controls, and are failing to consider the developmental course of this ability and how the age of the participants and the methodology employed might affect their results. When the literature is reframed in this light, a somewhat more consistent trend begins to emerge.

Studies using perceptually oriented tasks (e.g., matching, “same or different,” and sorting) to test emotion recognition suggest that when individuals with autism of all ages are given unambiguous, relatively prototypic stimuli and sufficient processing time, they are capable of performing at levels similar to control groups (e.g., Gepner, et al., 2001; Hobson, Ouston, & Lee, 1988; Humphreys, Minshew, Leonard, & Behrmann, 2007; Ozonoff, et al., 1990; Piggot, et al., 2004). Findings from studies that require participants to produce a label for or match a label to a facial expression are more equivocal. Only when close attention is paid to the age of the participants involved does a clearer picture emerge. Findings suggest that by 10 years of age, both low and high functioning children with autism are worse than controls at labeling basic, prototypic expressions (Lindner & Rosen, 2006; Tantam, Monaghan, Nicholson, & Stirling, 1989). By 12 years of age, however, high functioning children with autism are typically no different from controls at recognizing basic, prototypic expressions, and this lack of a difference appears to be consistent in the vast majority of studies examining high functioning individuals from 12 years through to adulthood (e.g., Capps, et al., 1992; Grossman, Klin, Carter, & Volkmar, 2000). Although these studies show limited impairment for basic, prototypical emotions, other research shows that individuals with autism have difficulty when the stimuli are either shown only briefly (Critchley, et al., 2000; Mazefsky & Oswald, 2007; Pelphrey, Sasson, Reznick, Goldman, & Piven, 2002), or when the stimuli are more subtle (Humphreys, et al., 2007). While not directly tested, these studies suggest that individuals with autism become more adept at recognizing basic, prototypic emotional expressions as they become older, but that even adults may still struggle with more fleeting or subtle emotions. 
In essence, individuals with autism may never reach the level of proficiency demonstrated by typically developing adults.

To date, studies of emotion recognition in individuals with autism have not adequately examined the participants’ true level of proficiency at recognizing expressions. When examining studies of emotion recognition in autism, it is apparent that the majority of studies allow participants to examine the stimuli for as long as necessary before providing a response (e.g., Hobson, et al., 1989; Humphreys, et al., 2007; Macdonald, et al., 1989). This may not reflect the demands and processes required when recognizing expressions in natural settings.

The degree of exaggeration of the expression that must be recognized is also important to consider. Many expressions portrayed in everyday settings are subtle. Thus, only the ability to process the nuances of less intense emotions will allow for successful recognition of that emotion. The prototypic emotion stimuli which are most commonly presented in studies with individuals with autism may not test the limits of their abilities and hence may mask existing difficulties.

The current study sought to examine the development of emotion recognition skills in individuals with autism using a paradigm that examined more closely their true level of proficiency. Given that in typically developing individuals, proficiency at emotion recognition seems to continue to develop through adulthood, this study is unique in addressing the development of emotion recognition in individuals with autism by using well matched groups of children, adolescents, and adults with the goal of determining how individuals at various ages performed on the same tasks. In addition, stimuli varied in subtlety and were presented very briefly in order to test the limits of our participants’ abilities. Finally, dynamic video stimuli were presented because dynamic emotion displays facilitate recognition, particularly for more subtle facial expressions (Ambadar, Schooler, & Cohn, 2005).

Experiment 1

Method

Participants

Participants were 19 children with high-functioning autism and 18 matched controls recruited from an educational organization that specializes in educating children with autism. Children with autism were previously diagnosed at this institute by child psychologists who had expertise in autism. In addition, at the time of the study these children were administered a diagnostic evaluation consisting of the Autism Diagnostic Observation Schedule-General (ADOS-G; Lord et al., 2000); only those children meeting the cut-off scores for autism on the ADOS-G algorithm were included. Children with Asperger’s disorder or PDD-NOS were excluded (see Table 1 for mean ADOS scores). The 18 control participants were matched on chronological age and a standard score equivalent of Verbal Mental Age (VMA) obtained using the Peabody Picture Vocabulary Test Revised (PPVT-R; Dunn & Dunn, 1981). No significant differences were found between groups in terms of chronological age and VMA (see Table 1).

Table 1.

Demographic Characteristics of Autism and Control Groups for Experiment 1

| | Autism Group (N=19) | | Control Group (N=18) | |
|---|---|---|---|---|
| | M | SD | M | SD |
| Age | 6.4 | 0.8 | 6.0 | 0.8 |
| VMA | 97.89 | 15.86 | 105.61 | 11.99 |
| ADOS | | | | |
| Comm | 5.68 | 1.80 | | |
| Soc | 9.79 | 2.12 | | |
| Total | 15.47 | 3.47 | | |
| Gender (M:F) | 14:5 | | 11:7 | |
| Ethnicity | 19 Caucasian | | 18 Caucasian | |

Note: Age is indicated in years. VMA = Verbal Mental Age Standard Score (where M = 100 and SD = 15). ADOS Comm = Score on the Communication subscale; ADOS Soc = Score on the Reciprocal Social Interaction subscale; ADOS Total = Comm + Soc. Ethnicity was obtained by self report.

Apparatus

Both control and autism participants were tested either at home or in the laboratory, depending on the parents’ preference. Participants sat in front of a 43-cm monitor controlled by a laptop computer.

Stimuli

For the pretest, 12 colored photographs of prototypical facial expressions were selected from the NimStim face stimulus set (three each of happy, sad, angry, and afraid) (Tottenham, et al., in press). Each model only appeared once within the stimulus set.

To create dynamic stimuli for the test phase, approximately 60 digital videos were made of volunteer male and female adults ranging in age from 18–30 years. Volunteers all wore a black robe to hide clothing and were filmed in front of a black background so that the videos provided a dynamic display of just the face. Each volunteer was instructed to model the facial expressions of “happy,” “sad,” “angry,” and “afraid.” All videos were viewed by four individuals (one of whom had training and experience in the Facial Action Coding System [Ekman & Friesen, 1975]), and the one best example of each emotion was chosen by consensus. Thus, four videos, each exhibiting a different emotional expression, were chosen to be used for the final test stimuli. Each emotional expression was posed by a different model. An additional video of happy was selected to serve as the sample video for the test phase; this model was different from those selected for the test phase.

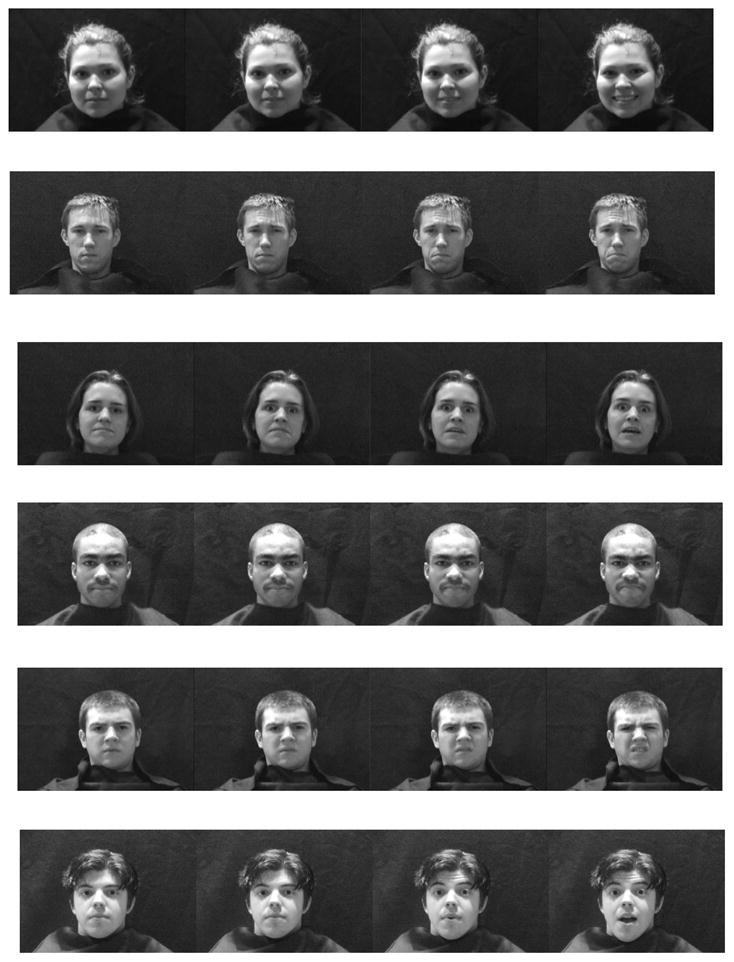

Each of the selected videos (four for the test phase and one sample) was edited so that it progressed from a neutral expression to the prototypical pose of that expression. This was accomplished by careful examination of the videos in a frame-by-frame fashion. Following this editing, each video was then divided into four film clips, each moving from either neutral to 25%, 50%, 75%, or 100% of the expression (to be referred to as Levels I, II, III and IV, respectively). This allowed for a total of 16 trial stimuli (4 clips of each of the 4 emotions). Static images of the end point for each of these stimuli can be seen in Figure 1.

Figure 1.

Figure 1a. Stimuli used for “happy”; levels 1 through 4

Figure 1b. Stimuli used for “sad”; levels 1 through 4

Figure 1c. Stimuli used for “afraid”; levels 1 through 4

Figure 1d. Stimuli used for “angry”; levels 1 through 4

Figure 1e. Stimuli used for “disgusted”; levels 1 through 4 (used only in Experiment 2)

Figure 1f. Stimuli used for “surprised”; levels 1 through 4 (used only in Experiment 2)

Following this procedure, digital videos were edited such that each clip was prefaced by a black screen with a centered yellow “ball” approximately 2.54 centimeters in diameter. This was done to provide a fixation point to ensure that attention was focused on the center of the screen prior to viewing each clip. Thus the video progressed from a fixation point to a neutral expression and then proceeded to Level I, II, III, or IV. After the partial or whole expression was reached, the video would return to the original black screen with fixation point. To ensure that no exposure-time advantage would occur, each clip was edited to run for a length of 500 milliseconds.

Video clips were piloted on undergraduate students (N = 29). After viewing each clip, the screen went blank, and participants were asked to identify the emotion. In addition, they were asked to indicate how confident they were of their judgment on a seven-point Likert scale, with one indicating a “guess” response and seven indicating a “certain” response. The clips were presented level by level from the most difficult (i.e., Level I) to the least difficult (i.e., Level IV). The four different emotions were randomized within level. Pilot ratings indicated that by Level III of each emotion video, the majority of participants were able to make an accurate identification. See Appendix for accuracy percentages and “confidence in decision” ratings.

Procedure

Pretest

To ensure that participants understood and recognized the facial expressions of happy, sad, angry, and fearful, they were presented with the 12 stimuli from the NimStim display set. Participants were first presented with a sheet of paper with iconic face drawings displaying each of the four emotions as well as a neutral expression. These served as the answer choices for both the pretest and test phases. Participants were then presented with three sets of the four target emotions, with the emotions randomized within each set, and were asked to name the displayed emotion. The stimuli were displayed on the screen one at a time, and remained on the screen until the participant verbalized a response or pointed to one of the iconic faces. All participants were able to identify these exaggerated, static expressions with over 90% accuracy.

Test

Participants were read instructions prior to the test phase (see Appendix B). They were then shown the Level I video clips of each emotion in randomized order. After all Level I clips were seen, each of the successive levels was shown, with randomization of expression within each level. After each presentation of a clip, the screen went blank. At that point, participants were asked to identify the emotion they thought they saw on the clip.
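The presentation order described above (levels shown from most to least subtle, with emotions shuffled within each level) can be sketched as follows. This is only an illustration: the function name `build_trial_order`, the `seed` parameter, and the use of Python's `random` module are assumptions, not details of the software actually used in the study.

```python
import random

# The four Experiment 1 emotions and the four subtlety levels
# (Levels I-IV correspond to 25%, 50%, 75%, and 100% of the expression).
EMOTIONS = ["happy", "sad", "angry", "afraid"]
LEVELS = [1, 2, 3, 4]


def build_trial_order(emotions=EMOTIONS, levels=LEVELS, seed=None):
    """Return a list of (level, emotion) trials.

    Levels are presented in ascending order (hardest first);
    the order of emotions is randomized independently within each level.
    `seed` is a hypothetical convenience for reproducible orders.
    """
    rng = random.Random(seed)
    order = []
    for level in levels:
        block = list(emotions)   # copy so the shared list is not mutated
        rng.shuffle(block)       # randomize emotions within this level
        order.extend((level, emotion) for emotion in block)
    return order


if __name__ == "__main__":
    for level, emotion in build_trial_order(seed=0):
        print(f"Level {level}: {emotion}")
```

Every participant sees all 16 clips, and no clip at an easier level is shown before all clips at the harder level have been seen.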

Scoring

For the test phase, there were two possible ways to score the dependent measures. The first system was to count the total number of trials for each emotion where the participant correctly identified the facial expression, from which we derived a total for each of the four emotions. For the second system, the level at which each participant correctly identified the expression and then continued to identify it correctly at every subsequent (i.e., easier) level was recorded. This number was then translated into “number of consecutive levels recognized” for each emotion. For example, if the participant first correctly recognized “happy” at level II, and then identified it correctly again at level III and level IV, a score of 3 was earned (three consecutive levels recognized; the participant was able to accurately identify the emotion from the 50% level onward). If, however, the participant first correctly recognized “happy” at level II, failed to identify it correctly at level III, but then recognized it correctly again at level IV, a score of 1 was earned (only 1 consecutive level recognized; the participant was only able to accurately identify the emotion at the 100% level). This second scoring system was used to obtain a more accurate measure of when participants could identify the expression, since they were less likely to be given credit for randomly guessing the correct response on any particular trial. The minimum score for each emotion was 0, indicating none of the levels of that emotion were recognized, and the highest score for each emotion was 4, indicating that all four levels had been correctly identified.
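The two scoring systems can be illustrated with a short sketch. It assumes each participant's responses for one emotion are stored as a list of four booleans ordered from Level I to Level IV. That representation and the function names are hypothetical and chosen only for illustration.

```python
def total_correct(responses):
    """First system: count of levels at which the emotion was identified."""
    return sum(1 for correct in responses if correct)


def consecutive_levels_recognized(responses):
    """Second system: length of the unbroken run of correct responses
    that ends at the final (easiest) level.

    `responses` is ordered from Level I (most subtle) to Level IV.
    A miss at Level IV therefore yields a score of 0.
    """
    score = 0
    for correct in reversed(responses):
        if not correct:
            break          # run is broken; earlier hits earn no credit
        score += 1
    return score


if __name__ == "__main__":
    # Worked examples from the text for "happy":
    steady = [False, True, True, True]    # correct from Level II onward
    broken = [False, True, False, True]   # missed Level III
    print(consecutive_levels_recognized(steady))  # 3
    print(consecutive_levels_recognized(broken))  # 1
    print(total_correct(broken))                  # 2
```

The second example shows why the first system necessarily gives scores at least as high as the second: the isolated correct response at Level II counts toward the total but not toward the consecutive run.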

Data were analyzed using both scoring systems, and the results were essentially the same (the means under the first system were necessarily higher). Given that our second system provided a more accurate measure of the participants’ performance, “number of consecutive levels recognized” is the variable reported in all subsequent analyses.

Results

A score of each participant’s average performance was created by adding together their “number of consecutive levels recognized” score for each of the four emotions and then dividing the total by four. Higher scores indicated better performance. For both the children with autism and the control children, neither age (autism: r = .33, n = 19, p = .16; control: r = .05, n = 18, p = .86) nor standardized VMA (autism: r = −.1, n = 19, p = .68; control: r = −.25, n = 18, p = .31) was significantly correlated with the average performance score.

A primary measure of interest was how the children’s mean performance scores across all four emotions differed by diagnosis. A t-test compared the average performance score of the children with autism (M = 1.80, SD = .52) with that of the control children (M = 2.42, SD = .59), t(35) = −3.34, p < .01. As a group, the children with autism performed significantly worse across emotions than did the control children, suggesting a general difficulty with emotion recognition.
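As a rough check, the group comparison can be approximated from the reported summary statistics alone. The sketch below assumes a pooled-variance (Student's) independent-samples t-test, which is consistent with the reported 35 degrees of freedom, although the text does not name the variant used. The function name `pooled_t` is hypothetical.

```python
import math


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's t statistic and degrees of freedom from group summaries.

    Assumes equal population variances (pooled-variance test).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / standard_error, df


if __name__ == "__main__":
    # Autism group: M = 1.80, SD = .52, n = 19
    # Control group: M = 2.42, SD = .59, n = 18
    t, df = pooled_t(1.80, 0.52, 19, 2.42, 0.59, 18)
    print(f"t({df}) = {t:.2f}")
```

Because the means and standard deviations are rounded to two decimals, the recomputed statistic (about −3.4) is close to, but not exactly, the reported value of −3.34.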

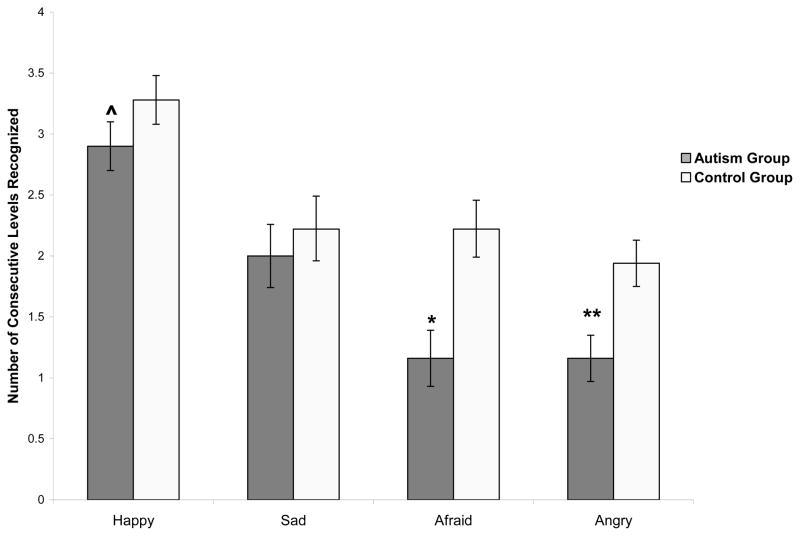

The next measure of interest was how the groups differed on the individual emotions. T-tests (using a Bonferroni-adjusted alpha level of 0.0125) indicated that while typically developing children scored significantly better on “afraid” (t(35) = −3.92, p < .001) and “angry” (t(35) = −2.38, p < .0125), they were only marginally better for “happy” (t(35) = −1.38, p = .09), and showed no difference for “sad” (t(35) = −.60, p = .28) (see Figure 2 for means).

Figure 2.

Experiment 1: Mean “Consecutive Correct” scores for happy, sad, afraid, and angry

Discussion

The results of Experiment 1 demonstrated that although children with autism were able to accurately recognize a number of the dynamic, briefly presented emotion stimuli, they were generally not as proficient as controls. Indeed, typically developing children were, on average, able to correctly identify “angry” and “afraid” stimuli one full level of subtlety earlier than children with autism. Importantly, both groups were less proficient at recognizing the more subtle, briefly displayed emotions, although this difficulty was more pronounced in the children with autism. Aside from “happy,” for which both groups performed relatively well, typically developing children could recognize, on average, only two consecutive levels (stimuli exhibiting 75% and 100% of the emotion) of the other three emotions, while the children with autism could recognize, on average, two consecutive levels of “sad,” and could, on average, only reliably identify “afraid” and “angry” by the final, easiest level (stimuli exhibiting 100% of the emotion).

These findings illustrate that by as early as 5 to 7 years of age, children with autism are less proficient at recognizing some emotional expressions than are typically developing children. This stands in contrast to performance on the pretest, and highlights the benefits of being able to process the stimuli for as long as needed. The results also illustrate that children are not equally proficient at recognizing different emotions. “Happy” appeared to be the easiest emotion for both groups to recognize, which stands to reason given that “happy” is also the first emotional expression that infants learn to discriminate (Bornstein & Arterberry, 2003; Kuchuk, Vibbert, & Bornstein, 1986), and can be recognized primarily by movement of the mouth (Ekman, 2003). The children with autism exhibited significantly more difficulty with “angry” and “afraid.” These two emotions require integration of information from the mouth, eyes, and forehead, and if the children with autism were relying on processing these expressions in a more featural and less holistic manner, this could account for the difficulties they exhibited.

Given the relative difficulty in recognizing the brief, subtle emotions experienced by the children with autism in this experiment, an important question is whether their skills would improve as they enter later childhood, adolescence and adulthood, or if they would never become as proficient as typically developing individuals at recognizing these expressions. To address this, Experiment 2 used the same procedure with additional expressions and with well controlled age groups of older children, adolescents, and adults.

Experiment 2

Method

Participants

Child participants were 26 children with high-functioning autism and 23 typically developing control children between the ages of 8 and 12. Adolescent participants were 24 adolescents with high-functioning autism and 25 typically developing control adolescents (age 13–17 years). Adult participants were 21 adults with high-functioning autism and 24 typically developing controls (age 18 to 53 years). Control participants in each age group (child, adolescent, and adult) were matched with the autism group on age, full scale IQ, verbal IQ, and performance IQ. Table 2 summarizes the participants’ demographic characteristics. No significant differences existed between the autism and control groups on any of the demographic variables.

Table 2a.

Demographic characteristics of child and adolescent autism and control groups for Experiment 2

| Children | Autism Group (N=26) | | Control Group (N=23) | |
|---|---|---|---|---|
| | M | SD | M | SD |
| Age | 10.46 | 1.27 | 10.26 | 1.63 |
| VIQ | 103.12 | 10.23 | 104.09 | 10.10 |
| PIQ | 110.00 | 17.53 | 108.04 | 9.10 |
| FSIQ | 107.15 | 13.39 | 106.87 | 9.74 |
| ADOS | | | | |
| Comm | 4.92 | 1.41 | | |
| Soc | 9.38 | 1.91 | | |
| Total | 14.29 | 2.84 | | |
| Gender (M:F) | 24:2 | | 18:5 | |
| Ethnicity | 26 Caucasian | | 21 Caucasian | |
| | | | 2 African American | |

| Adolescents | Autism Group (N=24) | | Control Group (N=25) | |
|---|---|---|---|---|
| | M | SD | M | SD |
| Age | 14.96 | 1.23 | 14.64 | 1.25 |
| VIQ | 104.42 | 13.16 | 104.88 | 11.45 |
| PIQ | 107.33 | 10.80 | 105.08 | 9.52 |
| FSIQ | 106.21 | 11.78 | 105.84 | 11.23 |
| ADOS | | | | |
| Comm | 5.04 | 1.43 | | |
| Soc | 9.91 | 2.27 | | |
| Total | 14.95 | 3.05 | | |
| Gender (M:F) | 21:3 | | 22:3 | |
| Ethnicity | 19 Caucasian | | 22 Caucasian | |
| | 1 Asian American | | 2 African American | |
| | 4 Other | | 1 Other | |

Note: Age is indicated in years. SD = standard deviation; VIQ = Verbal IQ; PIQ = Performance IQ, FSIQ = Full scale IQ. ADOS Comm = Score on the Communication subscale; ADOS Soc = Score on the Reciprocal Social Interaction subscale; ADOS Total = Comm + Soc. Ethnicity was obtained by parent report.

Participants with autism were administered a diagnostic evaluation consisting of the Autism Diagnostic Observation Schedule-General (ADOS-G; Lord et al., 2000) with confirmation by expert clinical opinion. Additionally, the Autism Diagnostic Interview-Revised (ADI-R; Lord, Rutter, & LeCouteur, 1994) was administered to a parent, except for two adult participants where a close relative was interviewed. Both the ADOS-G and ADI-R were scored using the DSM-IV (American Psychiatric Association, 2000) scoring algorithm for autism (see Table 2 for mean ADOS scores). Children and adolescents with Asperger’s disorder or PDD-NOS were excluded. Participants with autism were also required to be in good medical health, free of seizures, have a negative history of traumatic brain injury, and have an IQ > 80 as determined by the Wechsler Abbreviated Scale of Intelligence (WASI; Wechsler, 1999).

Control participants were volunteers recruited from the community. Parents of potential control participants completed questionnaires of demographic and family information to determine eligibility. Control participants were required to be in good physical health, free of past or current neurologic or psychiatric disorders, have a negative family history of first degree relatives with major psychiatric disorders, and have a negative family history in first and second degree relatives of autism spectrum disorder. Control participants were also excluded if they had a history of poor school attendance or evidence of a disparity between general level of ability and academic achievement suggesting a learning disability. The Wide Range Achievement Test-IV was administered to exclude the presence of a learning disability.

Apparatus

Testing occurred in a quiet laboratory room. Each participant sat in front of a 43-cm monitor controlled by a computer.

Stimuli

Stimuli were exactly the same as those used in Experiment 1, with the addition of two new emotions, “surprise” and “disgust” (using unique models), to both the pretest and test phase (test phase stimuli can be seen in Figure 1). Thus, for Experiment 2 there were a total of 24 trial stimuli (4 clips of each of the 6 emotions).

Procedure

The procedure was similar to Experiment 1 except answer choices appeared on the screen in written format. Instructions were also more age appropriate.

Results

Pretest

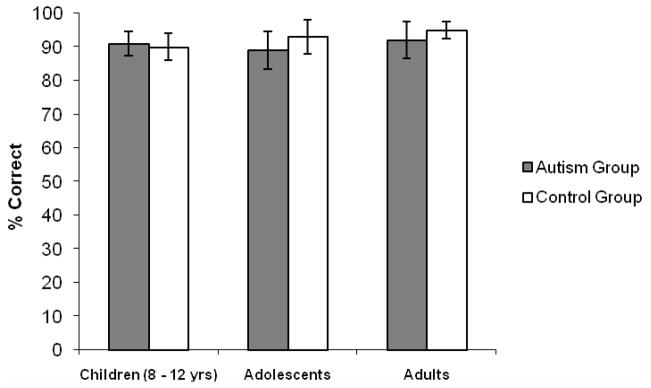

Scores on the pretest, which used the static prototypic faces, varied somewhat more than in Experiment 1. A two-way ANOVA was conducted on the percent correct scores for the pretest data (which included all six emotions as well as neutral) with Diagnosis (autism vs. control) and Age Group (children vs. adolescents vs. adults) as the between-subjects variables. Neither the interaction, F(2, 136) = 1.13, p = .33, nor either of the main effects (Diagnosis, F(1, 136) = 1.27, p = .26; Age Group, F(2, 136) = 1.52, p = .22) was significant, indicating that the groups did not differ in their ability to identify static, exaggerated emotional expressions. Figure 3 illustrates the results from each group.

Figure 3.

Experiment 2: Mean percent correct of pretest exaggerated expressions for all age groups

Test

Several preliminary analyses determined whether any group differences (autism vs. control) varied by emotion or whether the emotions could be combined for further analyses. These analyses indicated that for the test phase, a consistent pattern of results existed for “afraid,” “angry,” “disgusted,” and “surprised,” but not for “happy” or “sad.” Indeed, a Diagnosis by Emotion interaction was found, F(5, 608) = 2.32, p < .05. Bonferroni post-hoc tests indicated that only for “happy” (p = .57) and “sad” (p = .39) were there no significant differences between the diagnostic groups. These findings paralleled Experiment 1, which also showed that both groups performed similarly on these two emotions. When “happy” and “sad” were excluded from the analyses, the Diagnosis by Emotion interaction was no longer significant, F(3, 382) = .88, p = .45. Therefore, the first set of analyses examined data for “afraid,” “angry,” “disgusted,” and “surprised,” but excluded “happy” and “sad.” The results for these two emotions are discussed separately from the others.

Of primary interest was whether there were differences between the groups in their ability to recognize expressions, and whether this differed by age. Thus, a mixed ANOVA was conducted, with Emotion as the within-subject variable, and Diagnosis (autism vs. control) and Age Group (children vs. adolescents vs. adults) as between-subjects variables. Results indicated a significant main effect of Emotion, F(3, 382) = 51.44, p < .001, and the Bonferroni post-hoc test indicated that performance was best for “surprise” (which differed significantly from the other three emotions; all p-values < .001), followed by “disgust” (which differed from “anger” and “fear”; both p-values < .05), followed by “anger” and “fear” (which did not differ significantly from each other; p = 1.0).

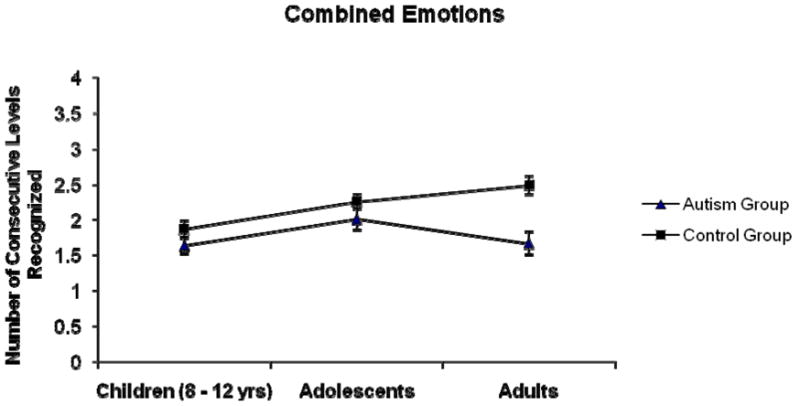

There was also a significant main effect of Diagnosis, F(1, 136) = 16.57, p < .001, and Age Group, F(2, 136) = 5.03, p < .01. More importantly, results indicated a significant interaction between the Diagnosis and Age Group variables, F(2, 136) = 3.30, p < .05. The mean scores, collapsed across the four emotions, are presented in Figure 4.

Figure 4.

Experiment 2: Mean and standard error of “Consecutive Correct” scores by age group collapsed across afraid, angry, disgusted, and surprised

Follow-up analyses of this interaction (Bonferroni post-hoc tests) revealed that the control adults were significantly better at identifying the four emotions than were the adults with autism (p < .001). In contrast, the performance of the children and adolescents with autism did not differ from their matched controls (p = .22 and p = .17, respectively). Additionally, the performance of the control group differed significantly by age, F(2, 69) = 7.11, p < .01, with the post-hoc test indicating that control children performed marginally worse than control adolescents (p = .06) and significantly worse than the adults (p < .001). This difference between age groups, however, was not seen in the participants with autism, F(2, 67) = 2.07, p = .13 (all post-hoc p-values > .2).

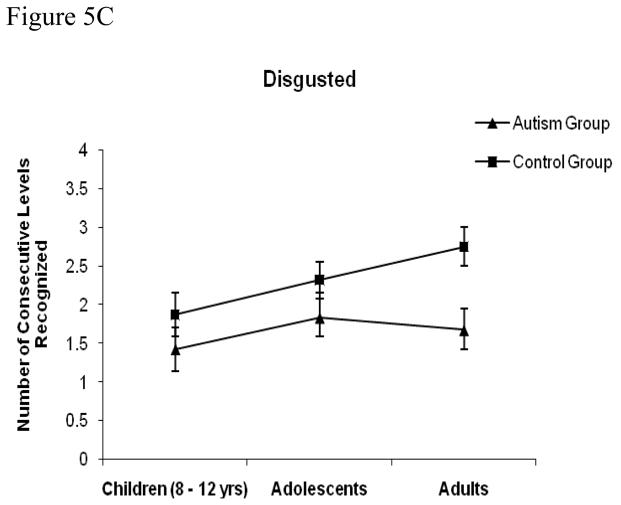

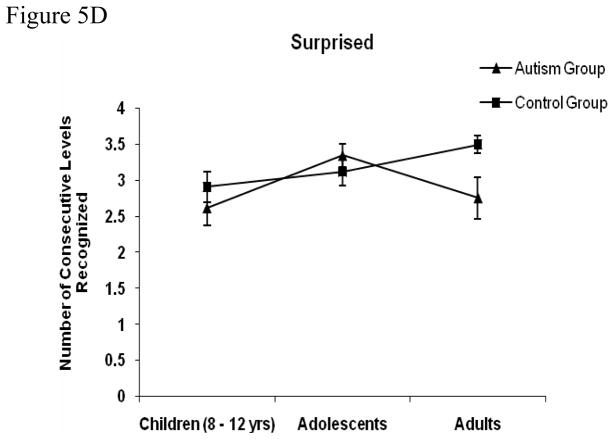

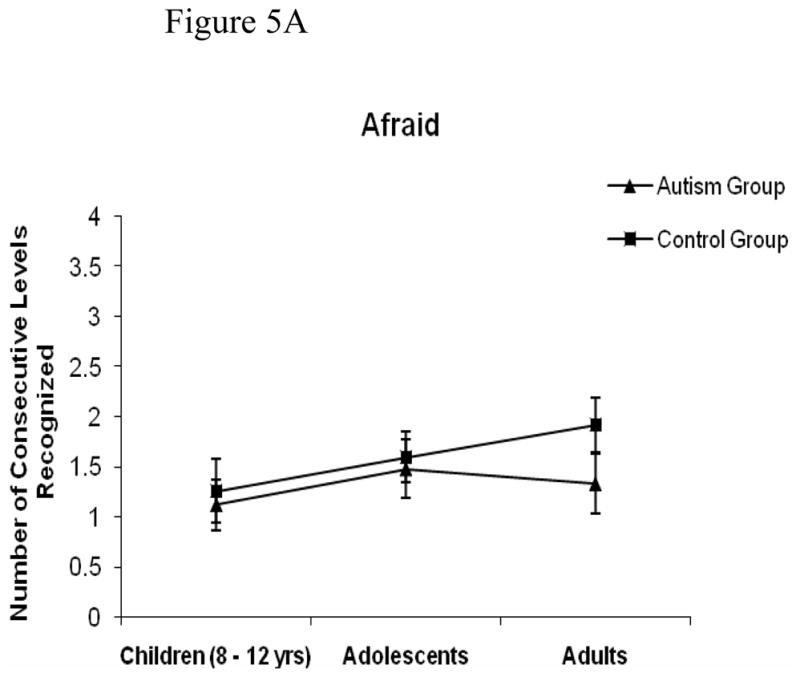

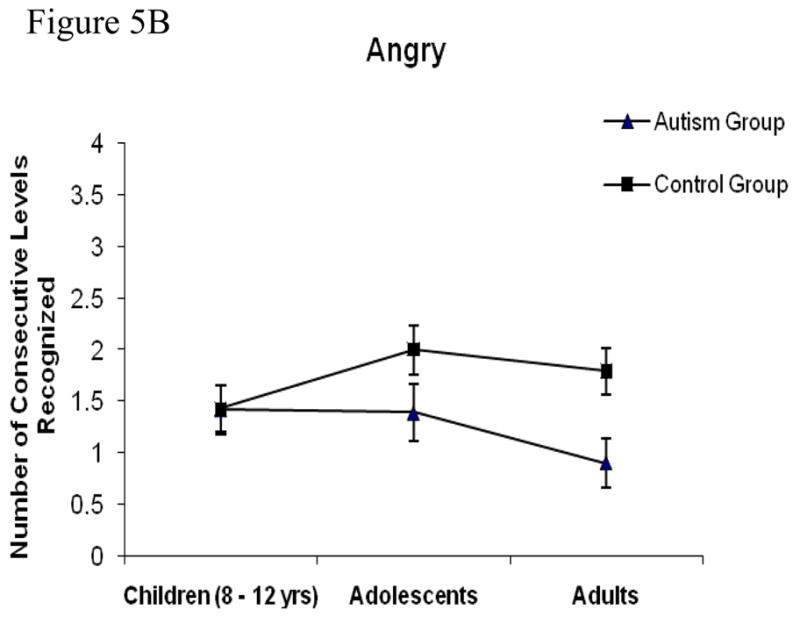

Results for the individual emotions of “afraid,” “angry,” “disgust,” and “surprise” are shown in Figures 5a to 5d. As can be seen, the pattern for the individual emotions reflects the collapsed results in that for each expression, the control adults perform significantly better than do the adults with autism (using a Bonferroni adjusted alpha level of 0.01, all tests are t-tests with p < .01, except for “afraid,” where p = .08). In contrast, the child and adolescent autism and control groups do not differ from each other on any of the individual expressions (children, all p-values > .19; adolescents, all p-values > .11).

Figure 5.

Experiment 2: Mean and standard error of “Consecutive Correct” scores by age group for (a) afraid (b) angry (c) disgusted and (d) surprised

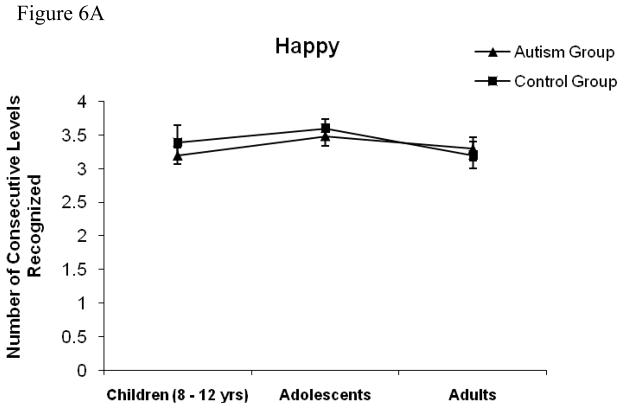

The pattern for “happy” and “sad” diverged from the other four emotions. Most participants were at ceiling for “happy,” thus the means for each group were very similar (see Figure 6a). A two-way ANOVA of Diagnosis (autism vs. control) and Age Group (children versus adolescents versus adults) yielded no significant main effect of Diagnosis (F(1, 136) = .32, p = .57), or Age Group (F(2, 136) = 1.63, p = .2), and no significant interaction (F(2, 136) = .33, p = .72).

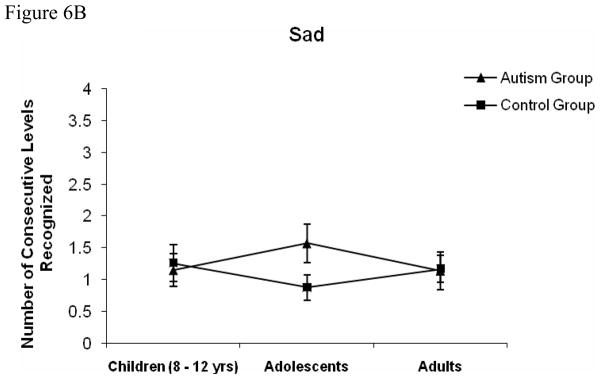

Figure 6.

Experiment 2: Mean and standard error of “Consecutive Correct” scores by age group for (a) happy and (b) sad

Results for “sad” were anomalous (see Figure 6b) in that most participants in all groups performed at or close to floor. A two-way ANOVA showed that the main effects of Diagnosis (F(1, 136) = .75, p = .39) and Age Group (F(2, 136) = .04, p = .97) were not significant, and neither was the interaction (F(2, 136) = 1.40, p = .25).

Discussion

Results of Experiment 2 again emphasize the importance of testing the limits of emotion recognition abilities in individuals with autism. While none of the groups (based on diagnosis or age group) differed in their abilities to recognize prototypic stimuli presented without time limits, all groups exhibited relative difficulty when the stimuli were more subtle and displayed only briefly. Of note was how performance differed by both diagnosis and age group. A general improvement in performance with increasing age was seen in the control group in that the control adults performed significantly better than the control children. This developmental difference, however, was not seen in the individuals with autism. The adults with autism performed no better than the children with autism. It appears that the emotion recognition skills of the children with autism “catch up” to those of their typically developing peers between the ages of 8 and 12 years, and remain relatively comparable through adolescence. However, whereas typically developing children continue to develop proficiency for recognizing emotional expressions through to adulthood, individuals with autism do not seem to be able to refine their skills beyond those present by late childhood.

As with the young children in Experiment 1, “happy” was the easiest expression for all participants in Experiment 2 to discriminate, and many individuals were at ceiling for this emotion. This contributed to the lack of variability within the “happy” results and in turn led us to analyze this emotion separately. It is unclear what contributed to the anomalous results for “sad” in this sample. A number of participants (both individuals with autism and controls) performed at floor for all levels of this emotion, again reducing variability in the results and motivating us to examine it separately from the other emotions. The most likely explanation for this result is that there was something inherent in the particular stimulus used for the study that presented specific difficulty for the individuals in Experiment 2.

General Discussion

This was the first study to look at the ability to recognize facial expressions in young children through to adults in both typically developing individuals and individuals with autism. In contrast to prior research, this study controlled for the subtlety of the expressions and required participants to make judgments of only briefly presented dynamic expressions. As mentioned earlier, such manipulations are extremely important. To date, the majority of research with both typically developing individuals and individuals with autism has presented only prototypic expressions and allowed the participants to view the expressions for as long as necessary before identifying them. Under such conditions, both developmental changes and potential differences between populations may be masked by what are likely ceiling effects in performance. This was clearly evident in our finding of no group differences in the pretests.

When considering the results from the typically developing individuals, it appears that with development, these individuals become more proficient at recognizing subtle facial expressions; there was continuing improvement in the ability to recognize facial expressions from the child group through to the adult group. Although our paradigm was different, these findings are similar to those of Thomas, et al. (2007), who also found an improvement into adulthood. Thus, our results provide further evidence that many previous findings of no improvement in emotion recognition after the age of 10 (e.g., Bruce, et al., 2000; Mondloch, et al., 2003) may have resulted from these studies using methods that lead to performance ceiling effects. That is, by showing participants prototypical expressions and allowing unlimited time to respond, developmental changes that may be occurring past middle childhood have been masked. Given that there does appear to be improvement through to adulthood, at issue is what is changing with development.

Behavioral studies of face processing suggest that typically developing individuals slowly shift from a predominant reliance on more featural processing of faces in young childhood to ultimately having adult expertise in configural processing of faces (Mondloch, Dobson, Parsons, & Maurer, 2004; Mondloch, Le Grand, & Maurer, 2002; Schwarzer, 2000). Configural processing is typically seen as the ability to perceive the spatial distances or relationships among aspects of the faces (e.g., eye separation) as opposed to attending to single non-spatial features such as the nose (Maurer, Le Grand, & Mondloch, 2002). For emotion recognition, however, this distinction between configural and featural aspects of the face may not be as straightforward as is usually depicted in the facial recognition literature. Spatial distances can also vary in how easily they can be discriminated. For example, categorizing or perceiving whether a face is demonstrating fear or surprise requires the ability to pick up muscle movement differences in the eye region (Ekman, 2003). When these expressions are prototypic or exaggerated, the amount of spatial movement from neutral to the full expression is much larger than when these expressions are subtle. Thus, in order to discriminate subtle facial expressions, individuals must be able to perceive very small motoric spatial differences of the muscles. We are suggesting that with development, not only do typical individuals rely more on configural information, but they also get better at making subtle configural discriminations; thus, they may be getting better at picking up very small changes in muscle movements that occur during more subtle displays of emotion.

There is also evidence that with development, typically developing individuals become better at recognizing and categorizing faces (e.g., Carey, Diamond, & Woods, 1980). Most cognitive models of face recognition in adults assume that individuals compare the faces they are viewing to either stored exemplars or to a multidimensional prototype that contains the mean of all of the dimensions that typically vary in faces (e.g., Valentine & Endo, 1992). Valentine and others have called this multidimensional representation the “face space.” It is assumed that the center of this “face space” represents the central tendency of all the features and configurations that vary in faces. As an individual gains experience with faces in their environment, the faces are represented in this face-space according to the values of their features. More typical values (and therefore more typical faces) lie closer to the center of this framework, while less typical faces fall along the outer edges of the framework (Valentine, 1991). The development of a face-space framework depends on experience and necessitates that over time, individuals be able to abstract both central tendencies and the range of values of features from their experience with faces. In essence, as children get older they continue to develop a more refined prototype. Essentially, the “face space” represents the totality of an individual’s experiences with faces and can be viewed as the knowledge base that drives the “top-down” perceptual learning processes that allow individuals to make more subtle facial discriminations as experience and learning increase.
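The core idea of the face-space account, a center defined by the mean of each facial dimension with typicality indexed by distance from that center, can be illustrated with a short sketch. This is a simplified illustration rather than Valentine's model as published: the three dimensions, the feature values, and the function name `centroid` are all hypothetical, and Euclidean distance is assumed as the typicality measure.

```python
import math


def centroid(faces):
    """Mean of each dimension across stored faces (the center of the space)."""
    n = len(faces)
    n_dims = len(faces[0])
    return [sum(face[i] for face in faces) / n for i in range(n_dims)]


# Hypothetical feature values in arbitrary units:
# (eye separation, nose length, mouth width)
experienced_faces = [
    [6.0, 5.0, 5.0],
    [6.4, 5.2, 4.8],
    [5.6, 4.8, 5.2],
    [6.0, 5.0, 5.0],
]
center = centroid(experienced_faces)

typical_face = [6.1, 5.0, 5.0]
atypical_face = [7.5, 6.0, 4.0]

# Smaller distance from the center means a more typical face.
typical_distance = math.dist(typical_face, center)
atypical_distance = math.dist(atypical_face, center)

print(f"typical: {typical_distance:.2f}, atypical: {atypical_distance:.2f}")
```

In this sketch, adding more experienced faces shifts and stabilizes the center, which is one simple way to picture the refinement of the prototype with experience.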

While the face-space model has not previously been applied to recognizing emotional expressions, it is likely that emotional expressions are also discriminated by comparing multiple aspects of the to-be-categorized expression (e.g., the movement of the eye region, mouth, or eyebrows) to separate prototypes of each of the expressions. Essentially, recognizing facial expressions can be considered a type of categorization task (Humphreys et al., 2007). As with faces, facial expression categories may have “typicality structures” where individual instances of an expression vary in typicality. It is likely that prototypical expressions are easiest to categorize, and as expressions become more subtle or less prototypical, comparisons to prototypes become more difficult, and thus the expression is more difficult to categorize. Similar to the development of face recognition, with development and consequently experience, this comparison process may become more sophisticated and precise as individuals develop proficiency at discriminating expressions. Over development, children and adolescents are not necessarily developing new or different underlying processes, but rather they are getting more efficient at processing subtle configural information and comparing the information to a central representation (McKone & Boyer, 2006).
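One way to picture why subtle expressions would be harder to categorize under a prototype-comparison account is a nearest-prototype sketch. This is an illustrative toy model, not a procedure used in the study. It assumes that each expression prototype is a displacement from a neutral face along three hypothetical dimensions, that a subtle expression is the prototype scaled by an intensity between 0 and 1, and that categorization picks the closest prototype. The prototype values and the names `render` and `classify` are invented for illustration.

```python
import math

# Hypothetical prototypes: displacement from a neutral face on
# (brow raise, eye widening, lip-corner pull). Values are illustrative only.
PROTOTYPES = {
    "afraid": (0.6, 0.8, 0.0),
    "surprised": (0.8, 0.6, 0.0),
    "angry": (-1.0, 0.0, 0.0),
    "happy": (0.0, 0.0, 1.0),
}


def render(label, intensity):
    """Scale a prototype toward neutral (the origin) to mimic a subtler display."""
    return tuple(intensity * value for value in PROTOTYPES[label])


def classify(expression):
    """Return the nearest prototype and the margin over the runner-up."""
    ranked = sorted(
        (math.dist(expression, proto), label) for label, proto in PROTOTYPES.items()
    )
    (nearest_dist, nearest_label), (runner_up_dist, _) = ranked[0], ranked[1]
    return nearest_label, runner_up_dist - nearest_dist


intensities = (1.0, 0.75, 0.5, 0.25)
results = [classify(render("afraid", t)) for t in intensities]

for t, (label, margin) in zip(intensities, results):
    print(f"intensity {t:.2f}: classified as {label}, margin {margin:.3f}")
```

In this toy model the label stays correct at every intensity, but the margin separating “afraid” from its nearest competitor shrinks as the display becomes subtler, so any perceptual imprecision would produce more errors at low intensities.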

The results from the individuals with autism showed that while young children with autism did not recognize expressions as well as the typically developing controls, these group differences were not seen with the older children. However, by adulthood, individuals with autism were again performing worse than the typically developing controls. In essence, the adults with autism never seem to attain the levels of proficiency at emotion recognition of typically developing adults. These findings seem to indicate, as suggested earlier, that the lack of a developmental approach and a failure to consider task demands may be contributing to the equivocal findings regarding emotion recognition skills in this population.

Some previous research has shown that individuals with autism have impairments only in recognizing certain emotions. While this has varied from “surprise” (Baron-Cohen, Spitz, & Cross, 1993), to “anger” (Teunisse & De Gelder, 2001), the somewhat more consistent finding has been that they exhibit a specific deficit in the recognition of “fear” (Howard, et al., 2000; Humphreys et al., 2007; Pelphrey et al., 2002). Our findings did not show any specific deficit for one particular emotion. In the youngest age group, the children with autism performed worst on “afraid” and “angry,” but the mean score for “angry” was no lower than that for “afraid.” In all three of our older age groups, there was no one emotion that caused specific difficulty over and above the others for the individuals with autism. It is not clear how to interpret our results in light of the previous findings, since the current study was the only one to simultaneously vary expression intensity, use motion, and limit how long participants had to view the expression. Additionally, when only one exemplar of each expression is used (as was the case in the current study as well as others), results may be idiosyncratic to the particular exemplar used for each expression. The current study was not designed to carefully compare performance on different expressions, and future research designed to do so will require multiple exemplars of each expression and intensity.

At issue, then, is why the adults with autism never reach the levels of proficiency at emotion recognition of typically developing adults. Research on the face recognition abilities of individuals with autism has suggested that they have a tendency to process faces and, hence, probably facial expressions, based more on featural than configural information (see reviews by Dawson, Webb, & McPartland, 2005; Sasson, 2006). It is unclear from this research whether individuals with autism are unable to process configural information or whether they are just less efficient at processing this information. McKone and colleagues (Gilchrist & McKone, 2003; McKone & Boyer, 2006) have found that in typically developing individuals, even young children can process configural facial information provided that information is obvious (e.g., a large degree of eye separation). They suggest that with development, there is not a shift in processing strategies from features to configurations, but rather there is a developing ability to process subtle configural information. Similarly, it may be that individuals with autism are able to process spatial information, but they do not achieve the level of proficiency needed to perceive subtle facial expressions as well as typically developing adults can.

A second contributory factor may be that individuals with autism have poor mental representations of the basic emotions. As discussed, to develop a mature representation of faces (e.g., a face-space) it is necessary to abstract prototypic representations of facial information. With respect to emotion, it is critical to have stored prototypic representations or exemplars of the basic emotional expressions. Research with individuals who have autism suggests that they may have difficulties in abstracting prototypical representations of categorical information (e.g., Gastgeb, Strauss, & Minshew, 2006; Klinger & Dawson, 2001). Thus, it might be expected that individuals with autism would have difficulty comparing a subtle facial expression to their inadequately formed stored prototypic representations of the different basic expressions. Interestingly, recent results have suggested that individuals with autism have difficulty not only with face categories, but seem to have a general difficulty processing and categorizing complex, subordinate stimuli (Behrmann, et al., 2006; Gastgeb, et al., 2006). Thus, the difficulty exhibited with subtle facial expressions may be more general in nature.

While the present study was not designed to test these various possibilities, it is the first study to demonstrate how the ability to recognize emotional expression compares in individuals with autism and typically developing individuals at different ages. More importantly, it demonstrates that while even young children with autism are able to perceive prototypic facial expressions, individuals with autism never achieve a level of proficiency that is comparable to that of typically developing adults. As the underlying causes of these differences are explored in future research, it will be critically important for future studies to carefully control the difficulty of the emotion expression task with respect to both the subtlety of the expressions and the amount of time individuals have to process this information.

Table 2b.

Demographic characteristics of adult autism and control groups for Experiment 2

| Adults | Autism Group (N=21): M | Autism Group (N=21): SD | Control Group (N=24): M | Control Group (N=24): SD |
|---|---|---|---|---|
| Age | 27.00 | 7.92 | 28.29 | 11.8 |
| VIQ | 104.24 | 13.18 | 105.33 | 6.90 |
| PIQ | 105.14 | 17.33 | 105.75 | 6.87 |
| FSIQ | 105.10 | 11.47 | 106.50 | 6.37 |
| ADOS Comm | 4.90 | 1.07 | | |
| ADOS Soc | 9.75 | 2.12 | | |
| ADOS Total | 14.65 | 2.60 | | |
| Gender (M:F) | 20:1 | | 20:4 | |
| Ethnicity | 21 Caucasian | | 19 Caucasian; 2 African American; 1 Other | |

Note: Age is indicated in years. SD = standard deviation; VIQ = Verbal IQ; PIQ = Performance IQ; FSIQ = Full Scale IQ. ADOS Comm = Score on the Communication subscale; ADOS Soc = Score on the Reciprocal Social Interaction subscale; ADOS Total = Comm + Soc. Ethnicity was obtained by parent report.

Acknowledgments

This study was supported by an NICHD Collaborative Program of Excellence in Autism (CPEA) Grant P01-HD35469 and also by a National Alliance for Autism Research Pre-doctoral Mentor Based Fellowship Award in Autism Research. The data in Experiment 1 partially formed the doctoral dissertation of Joyce L. Giovannelli at the University of Pittsburgh. Preliminary versions of the findings were presented at the International Meeting for Autism Research, Boston, and the International Meeting for Autism Research, Montreal, Canada. We are grateful to Catherine Best, Holly Gastgeb, and Desiree Wilkinson for testing participants and also for commenting on prior versions of this manuscript. In addition, we would like to thank the individuals who served as models for our dynamic stimuli.

Appendix A. Pilot Group Ratings of Emotion Stimuli

| Emotion | Stimulus Level | Percentage Correct | Confidence in Rating: M (a) | Confidence in Rating: (SD) |
|---|---|---|---|---|
| Happy | Level 1 | 86.2% | 3.79 | (1.01) |
| Happy | Level 2 | 93.1% | 3.79 | (1.21) |
| Happy | Level 3 | 86.2% | 4.48 | (.91) |
| Happy | Level 4 | 100% | 4.86 | (.52) |
| Sad | Level 1 | 6.9% | 2.79 | (1.24) |
| Sad | Level 2 | 37.9% | 3.21 | (1.24) |
| Sad | Level 3 | 65.5% | 3.48 | (1.18) |
| Sad | Level 4 | 69% | 4.59 | (.63) |
| Angry | Level 1 | 3.4% | 2.97 | (.91) |
| Angry | Level 2 | 31.0% | 3.48 | (1.15) |
| Angry | Level 3 | 51.7% | 3.35 | (1.17) |
| Angry | Level 4 | 79.3% | 4.24 | (.91) |
| Afraid | Level 1 | 17.2% | 2.79 | (1.11) |
| Afraid | Level 2 | 65.5% | 3.79 | (1.08) |
| Afraid | Level 3 | 93.1% | 4.41 | (.63) |
| Afraid | Level 4 | 82.8% | 4.58 | (.63) |

(a) “Confidence in own rating” scale was a 7 point Likert Scale with 1 = “just guessing” to 7 = “completely sure”

Appendix B. Instructions for Test Phase: Experiment 1

“This is a game where you are going to look at people’s faces and try to guess what they are feeling. You are going to see movies of people’s faces, and your job is to tell me how that person is feeling. First you are going to see a yellow ball on the screen and you need to look at the ball very closely, because the movies are going to be really fast, like this (snap fingers), and then you won’t see them anymore. For each face you can choose from these (point to iconic faces): “happy”, “sad”, “angry”, “scared”, or “none.” OK? Let’s practice first. In some of the movies it will be easy to tell what the person is feeling, like this one (present sample “happy” clip, at level IV), and some of them are going to be hard, like this one (present sample “happy” clip at level I). Ready to start?”

Contributor Information

Keiran M. Rump, University of Pittsburgh

Joyce L. Giovannelli, Children’s Advantage, Ravenna, OH

Nancy J. Minshew, University of Pittsburgh School of Medicine

Mark S. Strauss, University of Pittsburgh

References

- Ambadar Z, Schooler JW, Cohn JF. Deciphering the enigmatic face: The importance of facial dynamics in interpreting subtle facial expressions. Psychological Science. 2005;16:403–410. doi: 10.1111/j.0956-7976.2005.01548.x.

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders. 4. Washington, DC: American Psychiatric Association; 2000. Text Revision (DSM-IV-TR)

- Attwood T. Asperger’s syndrome: A guide for parents and professionals. London: Jessica Kingsley; 1998.

- Baron-Cohen S, Spitz A, Cross P. Do children with autism recognize surprise? A research note. Cognition and Emotion. 1993;7:507–516.

- Behrmann M, Avidan G, Leonard GL, Kimchi R, Luna B, Humphreys K, et al. Configural processing in autism and its relationship to face processing. Neuropsychologia. 2006;44:110–129. doi: 10.1016/j.neuropsychologia.2005.04.002.

- Bornstein MH, Arterberry ME. Recognition, categorization, and apperception of the facial expression of smiling by 5-month-old infants. Developmental Science. 2003;6:585–599.

- Bruce V, Campbell RN, Doherty-Sneddon G, Import A, Langton S, McAuley S, et al. Testing face processing skills in children. British Journal of Developmental Psychology. 2000;18:319–333.

- Calder AJ, Young AW, Perrett DI, Etcoff NL, Rowland D. Categorical perception of morphed facial expressions. Visual Cognition. 1996;3:81–117.

- Capps L, Kasari C, Yirmiya N, Sigman M. Parental perception of emotional expressiveness in children with autism. Journal of Consulting and Clinical Psychology. 1993;61:475–484. doi: 10.1037//0022-006x.61.3.475.

- Capps L, Yirmiya N, Sigman M. Understanding of simple and complex emotions in non-retarded children with autism. Journal of Child Psychology and Psychiatry. 1992;33:1169–1182. doi: 10.1111/j.1469-7610.1992.tb00936.x.

- Carey S, Diamond R, Woods B. Development of face recognition: A maturational component? Developmental Psychology. 1980;16:257–269.

- Celani G, Battacchi MW, Arcidiacono L. The understanding of the emotional meaning of facial expressions in people with autism. Journal of Autism and Developmental Disorders. 1999;29:57–66. doi: 10.1023/a:1025970600181.

- Critchley HD, Daly EM, Bullmore ET, Williams SC, Van Amelsvoort T, Robertson DM, et al. The functional neuroanatomy of social behaviour: Changes in cerebral blood flow when people with autistic disorder process facial expressions. Brain: A Journal of Neurology. 2000;123:2203–2212. doi: 10.1093/brain/123.11.2203.

- Darwin C. The Expression of the Emotions in Man and Animals. Oxford: Oxford University Press; 1872.

- Dawson G, Webb SJ, McPartland J. Understanding the nature of face processing impairment in autism: Insights from behavioral and electrophysiological studies. Developmental Neuropsychology. 2005;27:403–424. doi: 10.1207/s15326942dn2703_6.

- De Sonneville LMJ, Verschoor CA, Njiokiktjien C, Op het Veld V, Toorenaar N, Vranken M. Facial identity and facial emotions: Speed, accuracy, and processing strategies in children and adults. Journal of Clinical and Experimental Neuropsychology. 2002;24:200–213. doi: 10.1076/jcen.24.2.200.989.

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test Revised. American Guidance Service; MN: 1981.

- Durand K, Gallay M, Seigneuric A, Robichon F, Baudouin J. The development of facial emotion recognition: The role of configural information. Journal of Experimental Child Psychology. 2007;97:14–27. doi: 10.1016/j.jecp.2006.12.001.

- Ekman P. Emotions Revealed. New York: Owl Books; 2003.

- Ekman P, Friesen WV. Unmasking the Face: A Guide to Recognizing Emotions from Facial Clues. Englewood Cliffs, NJ: Prentice Hall; 1975.

- Gastgeb HZ, Strauss MS, Minshew NJ. Do individuals with autism process categories differently: The effect of typicality and development. Child Development. 2006;77:1717–1729. doi: 10.1111/j.1467-8624.2006.00969.x.

- Gepner B, Deruelle C, Grynfeltt S. Motion and emotion: A novel approach to the study of face processing by young autistic children. Journal of Autism and Developmental Disorders. 2001;31:37–45. doi: 10.1023/a:1005609629218.

- Gilchrist A, McKone E. Early maturity of face processing in children: Local and relational distinctiveness effects in 7-year-olds. Visual Cognition. 2003;10:769–793.

- Grossman JB, Klin A, Carter AS, Volkmar FR. Verbal bias in recognition of facial emotions in children with Asperger syndrome. Journal of Child Psychology and Psychiatry. 2000;41:369–379.

- Herba CM, Landau S, Russell T, Ecker C, Phillips ML. The development of emotion-processing in children: Effects of age, emotion, and intensity. Journal of Child Psychology and Psychiatry. 2006;47:1098–1106. doi: 10.1111/j.1469-7610.2006.01652.x.

- Hobson P. The Cradle of Thought. Oxford: Oxford University Press; 2004.

- Hobson R, Ouston J, Lee A. What’s in a face? The case of autism. British Journal of Psychology. 1988;79:441–453. doi: 10.1111/j.2044-8295.1988.tb02745.x.

- Hobson R, Ouston J, Lee A. Naming emotion in faces and voices: Abilities and disabilities in autism and mental retardation. British Journal of Developmental Psychology. 1989;7:237–250.

- Howard M, Cowell P, Boucher J, Broks P, Mayes A, Farrant A, et al. Convergent neuroanatomical and behavioural evidence of an amygdala hypothesis of autism. Neuroreport. 2000;11:2931–2935. doi: 10.1097/00001756-200009110-00020.

- Humphreys K, Minshew N, Leonard GL, Behrmann M. A fine-grained analysis of facial expression processing in high-functioning adults with autism. Neuropsychologia. 2007;45:685–695. doi: 10.1016/j.neuropsychologia.2006.08.003.

- Kanner L. Autistic disturbances of affective contact. Nervous Child. 1943;2:217–250.

- Klinger LG, Dawson G. Prototype formation in autism. Development and Psychopathology. 2001;13:111–124. doi: 10.1017/s0954579401001080.

- Kuchuk A, Vibbert M, Bornstein MH. The perception of smiling and its experiential correlates in 3-month-old infants. Child Development. 1986;57:1054–1061.

- Lindner JL, Rosen LA. Decoding of emotion through facial expression, prosody, and verbal content in children and adolescents with Asperger’s syndrome. Journal of Autism and Developmental Disorders. 2006;36:769–777. doi: 10.1007/s10803-006-0105-2.

- Lord C, Risi S, Lambrecht L, Cook JE, Leventhal BL, DiLavore PC, et al. The Autism Diagnostic Observation Schedule Generic: A standard measure of social and communication deficits associated with the spectrum of autism. Journal of Autism and Developmental Disorders. 2000;24:659–685.

- Lord C, Rutter M, Le Couteur A. Autism Diagnostic Interview-Revised: A revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. Journal of Autism and Developmental Disorders. 1994;24:659–695. doi: 10.1007/BF02172145.

- Macdonald H, Rutter M, Howlin P, Rios P, Le Couteur A, Evered C, et al. Recognition and expression of emotional cues by autistic and normal adults. Journal of Child Psychology and Psychiatry. 1989;30:865–877. doi: 10.1111/j.1469-7610.1989.tb00288.x.

- Maurer D, Le Grand R, Mondloch CJ. The many faces of configural processing. Trends in Cognitive Sciences. 2002;6:255–260. doi: 10.1016/s1364-6613(02)01903-4.

- Mazefsky CA, Oswald DP. Emotion perception in Asperger’s syndrome and high-functioning autism: The importance of diagnostic criteria and cue intensity. Journal of Autism and Developmental Disorders. 2007;37:1086–1095. doi: 10.1007/s10803-006-0251-6.

- McKone E, Boyer BL. Sensitivity of 4-year-olds to featural and second-order relational changes in face distinctiveness. Journal of Experimental Child Psychology. 2006;94:134–162. doi: 10.1016/j.jecp.2006.01.001.

- Mondloch CJ, Dobson KS, Parsons J, Maurer D. Why 8-year-olds cannot tell the difference between Steve Martin and Paul Newman: Factors contributing to the slow development of sensitivity to the spacing of facial features. Journal of Experimental Child Psychology. 2004;89:159–181. doi: 10.1016/j.jecp.2004.07.002.

- Mondloch CJ, Geldart S, Maurer D, Le Grand R. Developmental changes in face processing skills. Journal of Experimental Child Psychology. 2003;86:67–84. doi: 10.1016/s0022-0965(03)00102-4.

- Mondloch CJ, Le Grand R, Maurer D. Configural face processing develops more slowly than featural face processing. Perception. 2002;31:553–566. doi: 10.1068/p3339.

- Ozonoff S, Pennington BF, Rogers SJ. Are there emotion perception deficits in young autistic children? Journal of Child Psychology and Psychiatry. 1990;31:343–361. doi: 10.1111/j.1469-7610.1990.tb01574.x.

- Pelphrey KA, Sasson NJ, Reznick J, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. Journal of Autism and Developmental Disorders. 2002;32:249–261. doi: 10.1023/a:1016374617369.

- Piggot J, Kwon H, Mobbs D, Blasey C, Lotspeich L, Menon V, et al. Emotional attribution in high-functioning individuals with autistic spectrum disorder: A functional imaging study. Journal of the American Academy of Child & Adolescent Psychiatry. 2004;43:473–480. doi: 10.1097/00004583-200404000-00014.

- Sasson NJ. The development of face processing in autism. Journal of Autism and Developmental Disorders. 2006;36:381–394. doi: 10.1007/s10803-006-0076-3.

- Schwarzer G. Development of face processing: The effect of face inversion. Child Development. 2000;71:391–401. doi: 10.1111/1467-8624.00152.

- Soken NH, Pick AD. Intermodal perception of happy and angry expressive behaviors by seven-month-old infants. Child Development. 1992;63:787–795.

- Tantam D, Monaghan L, Nicholson H, Stirling J. Autistic children’s ability to interpret faces: A research note. Journal of Child Psychology and Psychiatry and Allied Disciplines. 1989;30:623–630. doi: 10.1111/j.1469-7610.1989.tb00274.x.

- Teunisse JP, de Gelder B. Impaired categorical perception of facial expressions in high-functioning adolescents with autism. Child Neuropsychology. 2001;7:1–14. doi: 10.1076/chin.7.1.1.3150.

- Thomas LA, De Bellis MD, Graham R, LaBar KS. Development of emotional facial recognition in late childhood and adolescence. Developmental Science. 2007;10:547–558. doi: 10.1111/j.1467-7687.2007.00614.x.

- Tottenham N, Tanaka J, Leon AC, McCarry T, Nurse M, Hare TA, Marcus DJ, Westerlund A, Casey BJ, Nelson CA. The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Research. doi: 10.1016/j.psychres.2008.05.006. (in press)

- Valentine T. A unified account of the effects of distinctiveness, inversion, and race in face recognition. The Quarterly Journal of Experimental Psychology. 1991;43A:161–204. doi: 10.1080/14640749108400966.

- Valentine T, Endo M. Towards an exemplar model of face processing - the effects of race and distinctiveness. Quarterly Journal of Psychology Section A-Human Experimental Psychology. 1992;44:671–703. doi: 10.1080/14640749208401305.

- Wechsler D. Wechsler Abbreviated Scale of Intelligence. San Antonio: The Psychological Corporation; 1999.

- Widen SC, Russell JA. A closer look at preschoolers’ freely produced labels for facial expressions. Developmental Psychology. 2003;39:114–128. doi: 10.1037//0012-1649.39.1.114.

- Young-Browne G, Rosenfeld HM, Horowitz FD. Infant discrimination of facial expressions. Child Development. 1977;48:555–562.