Abstract

Action requires knowledge of our body location in space. Here we asked if interactions with the external world prior to a reaching action influence how visual location information is used. We investigated if the temporal synchrony between viewing and feeling touch modulates the integration of visual and proprioceptive body location information for action. We manipulated the synchrony between viewing and feeling touch in the Rubber Hand Illusion paradigm prior to participants performing a ballistic reaching task to a visually specified target. When synchronous touch was given, reaching trajectories were significantly shifted compared to asynchronous touch. The direction of this shift suggests that touch influences the encoding of hand position for action. On the basis of this data and previous findings, we propose that the brain uses correlated cues from passive touch and vision to update its own position for action and experience of self-location.

Keywords: Parietal Cortex, Touch, Action, Human Body, Body Location, Rubber Hand Illusion

1. Introduction

Visually-guided action is the basis for the vast majority of our interactions with the world, yet there are many open questions about how visual and somatosensory representations interact to guide action. It is known that the brain combines information about the location of the body from visual and proprioceptive input (Rossetti, Desmurget, & Prablanc, 1995; van Beers, Sittig, & Gon, 1999). Here we ask if interactions with the external world prior to action influence how this visual and proprioceptive information is combined. To this end, we investigated if the temporal synchrony between viewing and feeling touch given prior to action modulates the integration of visual and proprioceptive body location information for action.

Electrophysiology studies in monkeys have found multi-modal neurons in the parietal cortex that respond to, and integrate, visual and somatosensory information. Graziano et al. (2000) manipulated the visual position of an artificial monkey arm and the position of the monkey’s real arm that was hidden. Some parietal neurons combine positional cues from both vision and proprioception (Graziano, Cooke, & Taylor, 2000). Interestingly, correlated cues regarding touch can modulate the influence of the visual information: response rates for some neurons were sensitive to visual information of arm position only after the visible artificial monkey arm and the hidden real monkey arm had been stroked synchronously, but not after asynchronous stroking. This suggests that the temporality between cross-modal information modulates body position information in cortical areas thought to be involved in visually-guided actions (Culham, Cavina-Pratesi, & Singhal, 2006).

It is known that the temporality between viewing and feeling touch affects the perception of body position. In the Rubber Hand Illusion (RHI), an artificial human hand is touched synchronously with a person’s own hidden hand. Participants indicate that the position of their own hand is closer to the artificial hand after synchronous stroking as compared to asynchronous stroking (Botvinick & Cohen, 1998; Tsakiris & Haggard, 2005). Furthermore, synchronous touch may induce a sense of ownership for the rubber hand.

However, it is unclear if the temporality of touch modulates action. In fact, it has been argued that perception and action are processed by distinct systems and that the vision-for-action system resists perceptual (body) illusions such as the RHI (Dijkerman & de Haan, 2007; Goodale, Gonzalez, & Kroliczak, 2008; Goodale & Milner, 1992). Furthermore, previous studies on visual-motor control have frequently placed emphasis on movement and the movement feedback it generates for adaptive calibration of body position for action (Holmes, Snijders, & Spence, 2006; Newport, Pearce, & Preston, 2010; Redding & Wallace, 2002).

Newport et al. (2010) found that the synchrony of viewing and performing a repeated active movement of touching a brush affected reaching performance (also see Kammers, Longo, Tsakiris, Dijkerman, & Haggard, 2009 for a different result). The design of this study, however, combines active movement and touch, thereby making it unclear which factor affects action. It might be possible, as discussed by the authors, that dynamic active movement is necessary for subsequent changes in reaching position. Indeed, it has been reported that the synchrony of prior passive touch without movement does not affect reaching actions but can affect the grip aperture in grasping movements (Kammers, de Vignemont, Verhagen, & Dijkerman, 2009; Kammers, Kootker, Hogendoorn, & Dijkerman, 2010). This suggests that the synchrony of prior passive touch affects selective actions only. However, methodological differences between these two studies might also account for the different results. In the reaching study, for example, actions that involved both hands were implemented to assess the effect of the RHI on the right hand. In contrast, the grasping study employed a task that involved an action with only one hand: the hand for which the RHI was induced.

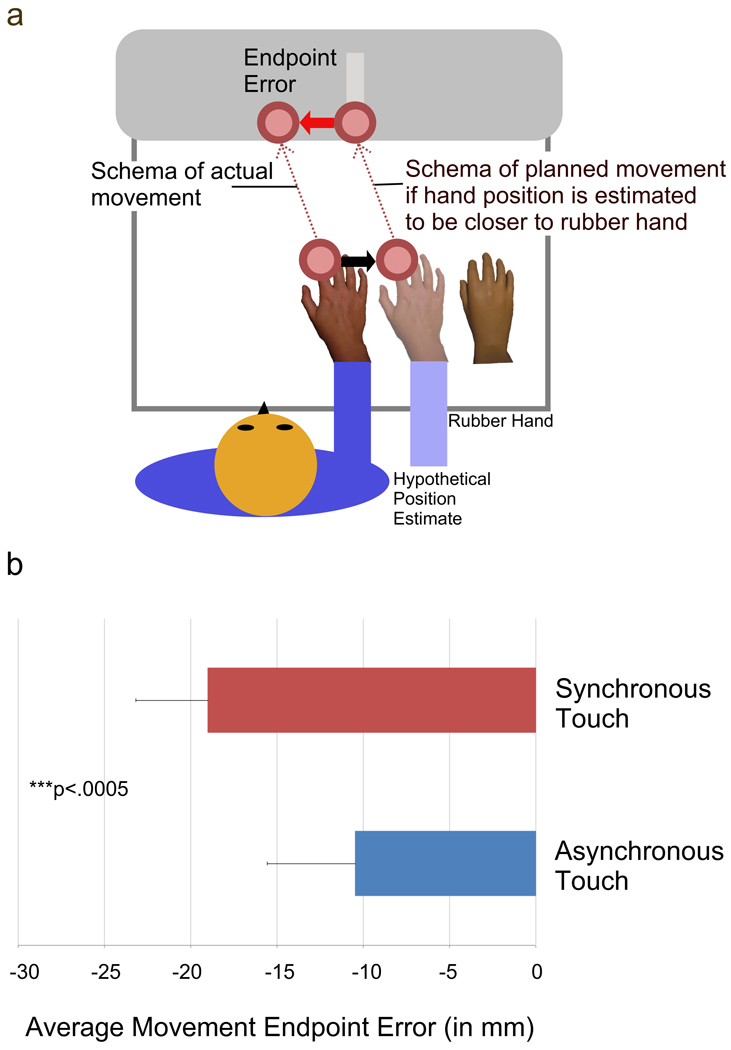

Our study explicitly investigates if reaching actions are affected by the synchrony of prior passive touch for a uni-manual task. Immediately after synchronous or asynchronous touch was applied in a RHI paradigm, participants performed fast ballistic reaching actions towards visual targets. Consistent with the electrophysiological results in monkeys (Graziano et al., 2000), we predicted that the synchrony between viewing and feeling touch in the RHI increases the influence of available visual information regarding body position. If the timing between viewing and feeling touch modulates the weighting of visual information to estimate body position, then hand position should be perceived more towards the visible artificial hand for synchronous touch as compared to asynchronous touch. How would such a shift in hand position affect action? A shift of the hand position towards the visible artificial hand would subsequently lead to misperception of hand position relative to the visible target. During the movement task, the participant should aim towards the target using spatial information from the estimated hand location and not the actual hand location. Therefore the actual performed reaching trajectories would be shifted away from the actual target position towards the opposite side of the visible artificial hand (also see Figure 2a).

Figure 2. Predictions and Results.

(a) Schemata of predictions for planned and actual movement towards the target if reaching is affected by the illusion. If hand position for the planned movement is shifted towards the rubber hand, then the actual executed reaching trajectories will be shifted in opposite direction and result in systematic endpoint errors when reaching toward the target position. Please note that the touch screen is placed vertically in front of the participant. (b) Mean endpoint error (in mm) (N=14) calculated as the difference between the endpoint of the movement and the actual target position in the horizontal direction. The bars indicate one standard error of the mean. The endpoint error was significantly larger when the Rubber Hand Illusion was induced by means of synchronous touch compared with the control condition of asynchronous touch (t[1,13]=−4.92, p<.0005).

2. Materials and Methods

2.1. Participants

Fifteen participants took part in the experiment. The data of one participant had to be discarded, due to a problem with movement recording. All remaining fourteen participants were right handed, had normal or corrected-to-normal vision, and were naïve to the purpose of the experiment (female: 5; age range: 18–32 years, Mean: 21.2 years, SD: 4.0 years). The experiment took approximately 75 min and participants were compensated with $20. All participants gave their informed consent prior to the start of the experiment. The experiment was conducted in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki and was approved by the Macquarie University Ethics Review Committee.

2.2. Apparatus and Setup

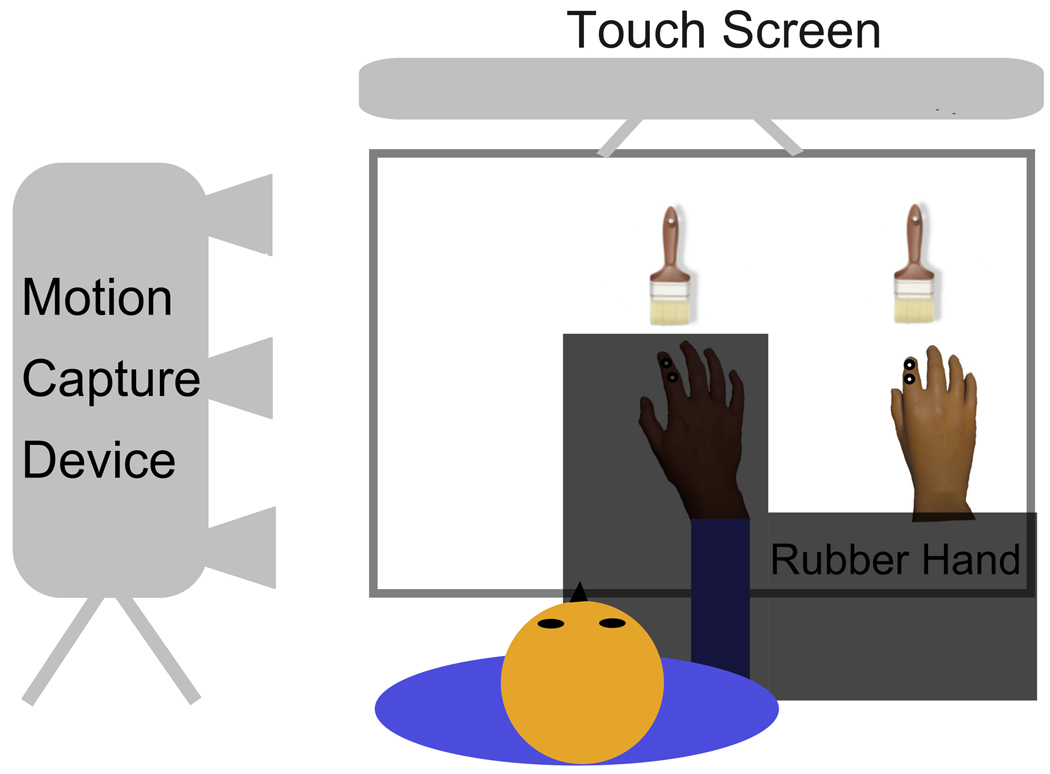

The setup is schematized in Figure 1. Participants sat in front of a table and placed their right hand inside an open frame. The index finger was placed on a slightly elevated knob, which marked the starting position for participants to return to after each trial. A realistic looking right prosthetic rubber hand (Otto Bock Australia Pty Ltd.) was placed to the right of the participant’s hand such that the index fingers of rubber hand and real hand were separated by 20 cm. A touch screen was situated vertically and 50 cm in front of the participant and centered with the participant’s body midline. A black cloth held up by the frame occluded the view of participant’s own hand, but not the rubber hand. Additionally, only the upper half of the screen was visible to all participants, as part of the frame design occluded the lower half. This setup assured that participants could not see their own hand during any time of the experiment. Using an Optotrak Certus system (Northern Digital Inc., USA), hand motion was tracked at 200 Hz via two infrared markers placed on the index finger of the participant’s real right hand. We attached the two markers to the index finger at slightly different angles to minimize the possibility of missing data points due to the obstruction of a sensor during movement. For analysis we chose the marker with the fewest missing data points. Because the required movement in our task was a forward movement, we did not observe any significant data loss for any marker. Likewise, two dummy markers were placed on the index finger of the rubber hand to match the actual hand. All experiments were performed in the dark, with the only illumination coming from the touch screen.

Figure 1. Experimental Setup and Measuring Movement.

A schema of the apparatus and setup is depicted. Participants sat in front of a vertically positioned touch screen. The movement during the reaching task was captured with an optical motion capture system using a camera on the left side of the participant and infrared markers placed on the participant’s right hand. Two dummy markers were placed on the rubber hand. Please note that the participant’s actual hand was not visible at any time during the experiment. The experimenter was situated on the right side of the table in order to apply synchronous or asynchronous brush strokes to both hands using two identical brushes during stroking periods.

2.3. Procedure

Before each movement task, illusory ownership was manipulated using two distinct conditions. In the first condition, both the rubber hand and the real hand were stroked synchronously in order to induce the RHI. In the second condition, the hands were stroked asynchronously (i.e. delay between visual and tactile stimuli) which causes no (or a markedly reduced) RHI (Botvinick & Cohen, 1998; Tsakiris & Haggard, 2005). Participants performed two blocks of each condition, for a total of four blocks, and the order of the blocks was counterbalanced across participants.

Each block started with a two-minute stroking period (induction phase) in which participants were asked to focus their attention on the rubber hand, while both the real and the rubber hand were touched, either synchronously or asynchronously. This was followed by a reaching response. Each subsequent trial consisted of a 22.5 sec top-up stroking period followed by a reaching response. The experimenter was situated on the side of the table and stroked the middle and ring fingers of both the participant’s hand and the rubber hand using two identical brushes. The black cloth of the frame in which both hands were placed occluded the experimenter’s hands from view of the participant. Each stroke started at the base of the knuckles and moved towards the fingertips. The experimenter performed stroking manually at a constant rate such that 80 total strokes were completed in approximately 2 min or 15 strokes in 22.5 sec (0.67 Hz). The consistency and frequency of stroking was maintained by the experimenter with the help of a metronomic beat supplied through headphones from an external source. The same frequency of stroking was maintained in all stroking periods. For asynchronous conditions, visual stimulation preceded tactile stimulation and both were carried out at the equivalent rate of one full stroke of the synchronous stroking such that the quantity and temporality of visual and touch stimuli were kept constant between conditions. During all stroking periods a blank white image on the LCD touch screen provided sufficient illumination to see the stroking of the rubber hand.

At the end of each stroking period, a white fixation cross appeared in the centre of the touch screen, and participants were asked to fixate on it. After 2 sec the fixation cross disappeared and a white target line (height 40 cm, width 0.5 cm) followed. Target location was randomized among 40 possible positions spaced out equally at 0.5 cm intervals over the 20 cm distance between the real and rubber hand. Participants had to respond immediately by making a ballistic movement towards the target and touching the screen collinear with the target line, beneath the viewing field. To ensure that motion was in fact ballistic, participants were given 600 ms to reach the halfway distance to the target and participants could not see their hand. If this midline was not crossed within the given time, feedback was given that reminded participants to respond faster and the trial was terminated. If participants crossed the midline within the allotted time-frame, the target would disappear to reduce any fine adjustments in movement nearing the target. Additional feedback was given if the index finger was not constantly moving towards the target. That is, any backwards motion or hovering in space resulted in the termination of the trial. At the completion of a reaching response, the participant returned to the starting position. Each block consisted of a total of 20 reaching responses.
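The two trial-termination rules described above (the 600 ms deadline for crossing the halfway line, and the requirement of continuous forward motion) can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `classify_trial`, the sample format, the halfway distance used in the example, and the choice to start checking for forward motion only once the finger has begun to move are all assumptions made for illustration.

```python
from typing import Sequence, Tuple

# One sample: (time in s after target onset, distance travelled toward the screen in cm).
# Samples are assumed to end at screen contact.
ForwardSample = Tuple[float, float]


def classify_trial(
    samples: Sequence[ForwardSample],
    halfway_cm: float,
    deadline_s: float = 0.600,  # from the text: 600 ms to reach the halfway distance
) -> str:
    """Return 'valid', 'too_slow', or 'not_moving_forward' for one reach."""
    started = False          # becomes True at the first forward step
    crossed_in_time = False  # halfway line crossed before the deadline
    previous = samples[0][1]
    for time_s, forward_cm in samples[1:]:
        step = forward_cm - previous
        if not started:
            started = step > 0.0
        elif step <= 0.0:
            # Hovering (no progress) or backward motion after movement onset.
            return "not_moving_forward"
        if forward_cm >= halfway_cm and not crossed_in_time:
            if time_s > deadline_s:
                return "too_slow"
            crossed_in_time = True
        previous = forward_cm
    return "valid" if crossed_in_time else "too_slow"


# Hypothetical reaches with an assumed halfway distance of 20 cm.
fast = [(0.0, 0.0), (0.2, 0.0), (0.3, 5.0), (0.4, 15.0), (0.5, 30.0), (0.6, 40.0)]
slow = [(0.0, 0.0), (0.4, 0.0), (0.5, 5.0), (0.6, 15.0), (0.7, 30.0), (0.8, 40.0)]
hover = [(0.0, 0.0), (0.3, 5.0), (0.4, 15.0), (0.5, 15.0), (0.6, 30.0)]
print(classify_trial(fast, 20.0), classify_trial(slow, 20.0), classify_trial(hover, 20.0))
```

In this sketch a trial that never reaches the halfway line is also labelled as too slow, which matches the described feedback but is a simplification of an online check.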

After each block in either the synchronous or asynchronous stroking condition, participants were asked to fill out a questionnaire to assess their subjective experience of the RHI (see Table 1 for wording). The questionnaire consisted of 11 rating scales that were partly based on Botvinick and Cohen’s original rubber hand study (1998). Three additional items regarding the experience of movement control for own hand and rubber hand were also included. Participants responded by rating their answers on a scale from 1 to 10, where 1 corresponded to strongly disagree, and 10 to strongly agree. Furthermore, in two items participants were asked to rate vividness and duration of the illusion on a scale from 1 to 10, where 1 corresponded to not realistic/never, and 10 to very realistic/the whole time (Ehrsson, Spence, & Passingham, 2004).

Table 1. Rating Scale Data.

Wording for individual rating scales and median values for synchronous and asynchronous touch conditions are presented. The interquartile range (IQR) is given in parenthesis. Two-sided paired Wilcoxon Signed-Rank Tests were performed for each rating scale; z-values and Bonferroni corrected P-values are given.

| Rating Scale | Wording | Synchronous Brushing Median (IQR) | Asynchronous Brushing Median (IQR) | z-value | P-value (corrected) |
|---|---|---|---|---|---|
| 1 | It seemed as if I were feeling the touch of the brush in the location where I saw the rubber hand touched. | 8.25 (2.38) | 6.50 (4.38) | −3.05 | .03* |
| 2 | It seemed as though the touch I felt was caused by the brush touching the rubber hand. | 7.50 (2.63) | 3.25 (3.75) | −3.08 | .03* |
| 3 | I felt as if the rubber hand was my hand. | 7.50 (1.88) | 4.25 (2.88) | −2.77 | .07(*) |
| 4 | It seemed as if I might have more than one right hand or arm. | 4.00 (2.00) | 2.75 (3.13) | −1.29 | 1.00 |
| 5 | It felt as if my (real) hand were drifting towards the right (towards the rubber hand). | 4.00 (2.88) | 3.75 (2.75) | −1.74 | 1.00 |
| 6 | It seemed as if the touch I was feeling came from somewhere between my own hand and the rubber hand. | 3.75 (2.13) | 4.75 (3.13) | −.60 | 1.00 |
| 7 | The rubber hand began to resemble my own (real) hand, in terms of shape, skin tone, freckles or some other visual features. | 7.50 (2.25) | 7.25 (3.25) | −2.02 | 0.56 |
| 8 | It felt as if I could have moved the rubber hand if I had wanted. | 5.00 (3.25) | 3.75 (3.50) | −1.58 | 1.00 |
| 9 | It felt as if I was in control of the rubber hand. | 5.00 (2.50) | 3.75 (2.63) | −1.23 | 1.00 |
| 10 | It felt like I was in control of my real hand. | 7.25 (1.38) | 8.00 (1.50) | −1.11 | 1.00 |
| 11 | It felt like I couldn't really tell where my hand was. | 4.25 (3.75) | 4.25 (3.75) | −.98 | 1.00 |
| 12 | Please indicate how realistic the feeling was that the rubber hand is your hand during stroking in this block. | 7.50 (2.25) | 4.50 (2.88) | −3.22 | .02* |
| 13 | Please indicate how much of the time the feeling that the rubber hand is your hand was present during stroking in this block. | 6.75 (2.75) | 4.50 (2.88) | −3.09 | .03* |

4. Results

4.1. Rating Scales

In Table 1 the results for individual rating scales are given. We conducted paired Wilcoxon Signed-Rank Tests (two-sided) to compare illusion conditions for each rating scale; z-values and Bonferroni corrected P-values are given in Table 1. Rating responses for Rating Scales 1, 2, 12 and 13 are significantly higher in the synchronous condition as compared to the asynchronous condition (all P<.05, Bonferroni corrected). Furthermore, we found a trend (P=.07, Bonferroni corrected) for Rating Scale 3. The results for individual rating scales are thus similar to previous studies with a similar number of participants (Botvinick & Cohen, 1998; Ehrsson et al., 2004). These studies also found a significant difference for Rating Scale 3, for which we found a trend. Thus overall the analysis of the rating scales confirmed that participants experienced the RHI in the synchronous condition and not (or to a lesser extent) in the asynchronous condition.
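The relationship between the z-values and the Bonferroni corrected P-values in Table 1 can be illustrated with a short sketch. It assumes that each P-value comes from the normal approximation of the signed-rank statistic and that the correction multiplies by the number of rating scales (13), capped at 1; neither detail is stated in the text, and the function names are hypothetical.

```python
import math

N_RATING_SCALES = 13  # assumption: one comparison per rating scale in Table 1


def two_sided_p_from_z(z: float) -> float:
    """Two-sided P-value for a standard-normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2.0))


def bonferroni(p: float, n_tests: int) -> float:
    """Bonferroni correction: multiply by the number of tests, cap at 1."""
    return min(1.0, p * n_tests)


# z-values copied from Table 1 for Rating Scales 1, 3, 7 and 12.
for scale, z in [(1, -3.05), (3, -2.77), (7, -2.02), (12, -3.22)]:
    corrected = bonferroni(two_sided_p_from_z(z), N_RATING_SCALES)
    print(f"Rating Scale {scale}: z={z:+.2f}, corrected P={corrected:.2f}")
```

Under these assumptions the corrected values agree with the tabulated ones to two decimals for the scales checked, which suggests (but does not prove) that this is how the correction was applied.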

4.2. Results for Reaching Performance

Trials in which participants did not move fast enough as well as trials in which they did not move constantly forward were removed from the analysis. This resulted on average in 36.0 (SEM=.9) valid trials for each participant for the synchronous touch condition and 36.6 (SEM=1.0) trials for the asynchronous condition. Importantly, we did not find a significant difference between the conditions in the number of excluded trials (t=−1.21, p>.05).

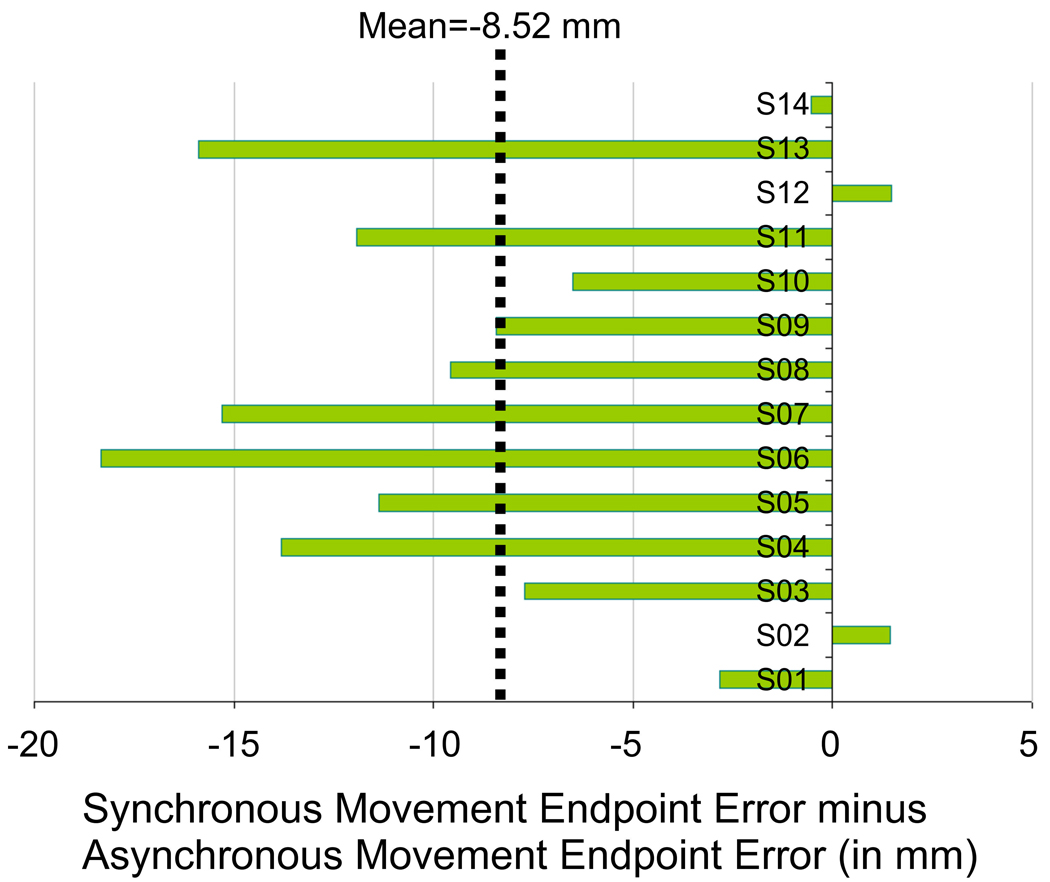

A repeated measures design was used to compare reaching performance during synchronous (RHI) and asynchronous (no RHI) stimulation conditions (order counterbalanced across participants). The averaged data for endpoint errors is depicted in Figure 2b. We found a significant shift of movement endpoint errors in the synchronous condition when compared to the asynchronous condition (synchronous touch: Mean=−19.0 mm, SEM=5.1; asynchronous touch: Mean=−10.5 mm, SEM=4.2; t[1,13]=−4.923, p<.0005, paired t-test, two-sided). The endpoint error was shifted 8.5 mm towards the left of the targets when the RHI was induced compared with the control condition. This shift amounts on average to 4.25% of the distance between real hand and rubber hand. Notably, a shift was found in almost all participants. This can be observed in Figure 3, where the shift in movement endpoint error (synchronous minus asynchronous condition) is depicted for individual participants. In addition, we compared the initial movement direction between conditions. Initial movement direction was defined as the angle of the instantaneous velocity when 10% of the movement distance towards the screen was reached; this occurred on average 90.4 msec (SEM=4.7) after movement onset in the synchronous touch condition and 87.2 msec (SEM=4.1) after movement onset in the asynchronous touch condition (not significantly different, p>.05). The initial movement direction was significantly different between conditions (synchronous touch: Mean=72.5°, SEM=2.1; asynchronous touch: Mean=69.8°, SEM=1.8; t[1,13]=2.366, p<.05). Results from the endpoint movement error and initial movement direction indicate that the reaching trajectory was significantly more shifted to the side of the target opposite the side of the seen rubber hand in the synchronous condition as compared to the asynchronous condition.
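The paired comparison and the conversion of the shift into a percentage of the hand separation can be sketched as follows. The helper names are hypothetical and the per-participant values in the usage example are invented placeholders, since individual data are not reported here; only the group means and the 20 cm (200 mm) separation come from the text.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple

HAND_SEPARATION_MM = 200.0  # from the text: index fingers separated by 20 cm


def paired_t(cond_a: Sequence[float], cond_b: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for per-participant values."""
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    standard_error = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / standard_error, n - 1


def shift_as_percent(shift_mm: float, separation_mm: float = HAND_SEPARATION_MM) -> float:
    """Express an endpoint shift as a percentage of the hand separation."""
    return 100.0 * shift_mm / separation_mm


# Group means reported in the text (mm): synchronous minus asynchronous.
reported_shift_mm = -19.0 - (-10.5)
print(reported_shift_mm, shift_as_percent(abs(reported_shift_mm)))

# Invented placeholder values for three participants, for illustration only.
t_value, df = paired_t([3.0, 5.0, 7.0], [2.0, 3.0, 4.0])
print(round(t_value, 3), df)
```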

Figure 3. Effect of Touch: Individual Endpoint Errors.

Difference in individual endpoint errors (in mm) between synchronous touch (RHI) and asynchronous touch (no RHI – control). The endpoint errors for the asynchronous touch condition were subtracted from the endpoint errors for the synchronous touch condition.

We also analysed other movement parameters such as curvature, mean velocity, movement onset, movement duration, peak velocity and time to peak. Curvature is defined as the maximal path offset (distance perpendicular to the straight line between starting position and end position of the movement) divided by the length of a straight line between starting position and end position of the movement. Mean velocity is the mean velocity across all data points. Peak velocity is the maximal tangential velocity. Time to peak is the time after target onset until maximum velocity was reached. Movement onset is calculated as the time after target onset when the movement velocity amounted to 5% of peak velocity. Movement duration is the time from movement onset until the screen was touched. We found that these other movement parameters were not significantly affected by the illusion manipulation (all p > .10; see Table 2 for statistics).
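The parameter definitions above, together with the initial movement direction defined earlier, can be made concrete with a small sketch. It is an illustration under stated assumptions rather than the analysis code used in the study: the trajectory is reduced to two dimensions (lateral, forward), velocity is approximated by finite differences between consecutive samples, the mean velocity is taken over all sample intervals, and the angle convention (90° meaning straight towards the screen) is chosen arbitrarily. All names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

# One sample: (time in s after target onset, (lateral_mm, forward_mm)).
Sample = Tuple[float, Tuple[float, float]]


def speeds(samples: Sequence[Sample]) -> List[Tuple[float, float]]:
    """Finite-difference tangential speed (mm/s), stamped with the later sample time."""
    return [
        (t1, math.dist(p0, p1) / (t1 - t0))
        for (t0, p0), (t1, p1) in zip(samples, samples[1:])
    ]


def curvature(path: Sequence[Tuple[float, float]]) -> float:
    """Maximal perpendicular path offset divided by the straight start-to-end distance."""
    (sx, sy), (ex, ey) = path[0], path[-1]
    length = math.hypot(ex - sx, ey - sy)
    offsets = [
        abs((px - sx) * (ey - sy) - (py - sy) * (ex - sx)) / length for px, py in path
    ]
    return max(offsets) / length


def initial_direction_deg(samples: Sequence[Sample], fraction: float = 0.10) -> float:
    """Velocity angle at the first sample past `fraction` of the forward distance."""
    start_forward, end_forward = samples[0][1][1], samples[-1][1][1]
    threshold = start_forward + fraction * (end_forward - start_forward)
    for (_, p0), (_, p1) in zip(samples, samples[1:]):
        if p1[1] >= threshold:
            return math.degrees(math.atan2(p1[1] - p0[1], p1[0] - p0[0]))
    raise ValueError("trajectory never reaches the requested fraction")


def movement_parameters(samples: Sequence[Sample], onset_fraction: float = 0.05) -> Dict[str, float]:
    """Kinematic summary of one reach that ends at screen contact."""
    v = speeds(samples)
    time_to_peak, peak_velocity = max(v, key=lambda item: item[1])
    # Movement onset: first time the speed reaches 5% of peak velocity.
    onset = next(t for t, s in v if s >= onset_fraction * peak_velocity)
    return {
        "peak_velocity": peak_velocity,
        "time_to_peak": time_to_peak,
        "movement_onset": onset,
        "movement_duration": samples[-1][0] - onset,
        "mean_velocity": sum(s for _, s in v) / len(v),
        "curvature": curvature([p for _, p in samples]),
    }


# Invented trajectory for illustration (five samples, not recorded data).
reach = [
    (0.0, (0.0, 0.0)),
    (0.1, (0.0, 0.0)),
    (0.2, (0.0, 25.0)),
    (0.3, (30.0, 100.0)),
    (0.4, (0.0, 200.0)),
]
params = movement_parameters(reach)
print(params, initial_direction_deg(reach))
```

Real recordings at 200 Hz would contain many more samples per reach and would usually be filtered before differentiation; that step is omitted here.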

Supplementary Table 2. Results: Movement Parameters.

Mean values across participants and SEM (in parentheses) are given for synchronous and asynchronous touch conditions. Two-sided paired t-tests were performed to compare conditions; t-values and p-values are given.

| Parameter | Unit | Synchronous Brushing Mean (SEM) | Asynchronous Brushing Mean (SEM) | t-value [1,13] | p-value |
|---|---|---|---|---|---|
| Endpoint error | mm | −19.00 (5.07) | −10.48 (4.19) | −4.92 | .00028* |
| Initial movement direction | degree | 72.47 (2.14) | 69.79 (1.80) | 2.37 | .0342* |
| Curvature | ratio (max path offset / distance) | 0.095 (0.009) | 0.093 (0.008) | 0.706 | 0.493 |
| Mean velocity | m/sec | 0.423 (0.016) | 0.425 (0.016) | −0.419 | 0.682 |
| Peak velocity | m/sec | 1.03 (0.052) | 1.06 (0.060) | −1.32 | 0.209 |
| Time to peak | msec | 190.86 (8.71) | 183.67 (9.83) | 1.76 | 0.101 |
| Movement onset | msec | 242.24 (7.82) | 248.38 (7.68) | −1.18 | 0.260 |
| Movement duration | msec | 480.94 (40.26) | 468.33 (37.11) | 1.14 | 0.274 |

We also performed non-parametric Spearman’s rank correlation analyses and correlated the difference in endpoint error (as depicted in Figure 3) and initial movement direction between synchronous and asynchronous condition with the difference in rating scale response between synchronous and asynchronous condition for each rating scale. We found a significant negative correlation between endpoint error difference and rating scale difference for Rating Scale 9 “It felt as if I was in control of the rubber hand” (Spearman’s rho=−.594, P=.025, 2-sided, uncorrected). That is, the stronger the subjective experience was concerning control of the rubber hand in the synchronous condition as compared to the asynchronous condition, the more negative was the endpoint error (and towards the left) in the synchronous condition as compared to the asynchronous condition. This is interesting, as it seems to suggest that the subjective experience of control for the seen rubber hand is related to the amount of shift in estimated body position for action towards the seen hand. Note, however, that we did not find a significant difference between illusion conditions for this rating scale. Furthermore, this correlation is not significant when correcting for multiple comparisons. In addition, we found non-significant trends for endpoint error and Rating Scale 11 “It felt like I couldn’t really tell where my hand was” (Spearman’s rho=−.496, P=.071, 2-sided, uncorrected), Rating Scale 12 indicating vividness of the illusion (Spearman’s rho=−.495, P=.072, 2-sided, uncorrected) and Rating Scale 13 indicating duration of the illusion (Spearman’s rho=−.479, P=.083, 2-sided, uncorrected). We did not find any significant correlations for any of the other rating scales, or for any comparisons regarding initial movement direction (all P>.05).
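For readers unfamiliar with the statistic, Spearman’s rho is the Pearson correlation computed on ranks, with tied values sharing their average rank. The sketch below shows that computation in plain Python; the function names are hypothetical and the example values are invented, since per-participant differences are not listed in the text.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Ranks starting at 1; tied values receive the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return covariance / math.sqrt(var_x * var_y)


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Invented example: rating differences against endpoint-error differences (mm).
rating_diff = [0.5, 1.0, 1.0, 2.5, 3.0]
endpoint_diff = [-2.0, -6.0, -4.0, -9.0, -12.0]
print(spearman_rho(rating_diff, endpoint_diff))
```

A negative value, as in this invented example, corresponds to the reported pattern in which larger rating differences go with more negative (leftward) endpoint error differences.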

5. Discussion

Our results clearly show that action is affected by the temporality of touch in the RHI paradigm. Endpoint movement location and initial movement direction were significantly shifted after synchronous touch as compared to asynchronous touch. Furthermore, other movement parameters were not significantly affected by touch. This indicates that the position of the movement trajectory was shifted whereas other aspects of the movement were not modulated by touch. Our findings are consistent with the assumption that the hand movement is planned from a starting position closer to the visible rubber hand in the synchronous condition. In other words, the synchrony between viewing and feeling touch affects the estimation of body part location for reaching movements.

Previous research suggested that the body representation for reaching actions is not affected by the temporality of passive touch in the RHI paradigm. The RHI was therefore considered to predominantly affect perceptual body representations (Dijkerman & de Haan, 2007). It has also been argued that only actions involving postural information, such as those required for grip size during grasping motion, are influenced by prior passive touch (Kammers et al., 2010). An investigation of the effect of passive touch synchrony on visually guided reaching found no effect (Kammers, de Vignemont et al., 2009). This previous study, however, used bi-manual actions to assess the effect of prior touch. The RHI was induced for the right hand and participants reached either towards their own left hand or towards an external object with both hands. In our design as well as in the grasping study by Kammers et al. (2010), uni-manual tasks were implemented. That is, the required action involved only the hand that had received prior brush strokes. In both of these experiments with a uni-manual task, an effect on action was found. This might mean that action is only affected by the RHI when the hand that received prior touch is used in an action task. However, when both parts of the body (one that received synchronous touch and one that did not) are used in a task, the effect of passive prior touch might be diminished. It is possible that, when using both hands, the brain also uses information regarding body metrics such as the typical distance between the left and right body parts to estimate body position for action.

Other experimental factors might also explain why we found an effect of passive synchronous touch on reaching, in contrast to Kammers, de Vignemont et al. (2009). For example, we stroked not one but two fingers when inducing the RHI. In fact, two fingers were also stroked in the grasping study, which reported an effect of prior touch on action. It is possible that stroking two fingers enhances the effect of prior touch on action. In a previous study on the perceptual drift in the RHI, stroking of one finger as well as of two fingers was implemented (Tsakiris & Haggard, 2005). Their data seem to suggest that the perceptual drift spreads more to non-stroked fingers when two fingers are stroked, in this case to a finger between the two stroked fingers. Further experimental aspects that differed between our study and the previous reaching study using passive touch were that we conducted more trials and did not implement a perceptual task before the motor task.

Kammers, Longo et al. (2009) performed a study in which a video version of the RHI was implemented and one aim was to investigate the effect of synchrony of viewing and performing prior passive or active movement on reaching actions. In this study only the hand which was involved in prior movements was used in the task, only one finger was moved during the induction period, and no perceptual measurement was performed before the motor measurement. The authors did not find an effect for the synchrony of viewing and performing prior movement on reaching performance towards external targets. In contrast, Newport et al. (2010) did find an effect of synchrony on reaching in a video version of the RHI. Several experimental aspects differed between these two studies and could potentially explain the contradictory findings. For example, two hands were visually present to either side of the real hand in the study that reported an effect on reaching (Newport et al., 2010). One of the hands moved synchronously while the other moved asynchronously, or they both moved synchronously. The results suggest that encoding of hand position for action was shifted towards a hand which moved synchronously. It is possible that the presence of two hands simultaneously might enhance the effects on action. One further aspect might be relevant for the discussion of our findings. In the study that did not find an effect, a delay of 100 ms between performed and projected movement was present in the synchronous condition, whereas in the study which found an effect the projection delay amounted to only 17 ms. Thus it might be possible that the effect of prior cues on action is especially sensitive to even small temporal delays (see Shimada, Fukuda, & Hiraki, 2009 for effects of temporal delays in the RHI paradigm on perception). In our study, viewed and felt touch were carefully applied so as to be synchronous.

Here we show that human reaching actions can indeed be affected by the synchrony of seen and felt passive touch. This synchrony also modulates perceived body position (Botvinick & Cohen, 1998; Tsakiris & Haggard, 2005). Newport et al. (2010) found that the synchrony of viewing and performing a repeated active movement touching a brush also affects reaching performance. In this study however, active movement and touch were combined, whereas in our study the influence of passive touch in the classic RHI paradigm was investigated. Together, these results imply that the RHI does affect reaching actions. On the basis of these findings, we propose that the brain may use correlated cues from passive touch to update its own position for action and experience of self-location.

These results could be used in support of a common neural multisensory system to estimate body location both for action and perception. In fact, other factors have been shown to affect body location for both action and perception. One example is the plausibility of a seen artificial hand, in terms of both hand form and hand orientation (Graziano et al., 2000; Holmes et al., 2006; Tsakiris & Haggard, 2005). Furthermore, correlated sensory-motor cues when observing a movement that is synchronous to one’s own movement can also modulate perception of self-location and visually-guided reaching (Holmes & Spence, 2005; Newport et al., 2010; Tsakiris, Prabhu, & Haggard, 2006).

However, on the basis of these data we cannot exclude the possibility that there are two systems to estimate body position, one for action and one for perception, because both might be influenced separately by the synchrony of touch. We found an endpoint error in the synchronous condition of −19 mm, which amounts to 9.5% of the total distance between the two hands. The difference between the synchronous and asynchronous conditions amounted to −8.5 mm (4.25% of total distance). The perceptual position drift reported in previous studies for the synchronous condition has typically been larger, although it varied between 6% and 40% of total distance (Costantini & Haggard, 2007; Kammers, de Vignemont et al., 2009; Makin, Holmes, & Ehrsson, 2008; Zopf, Savage, & Williams, 2010). We did not measure the perceptual position drift in the present study because we were concerned that measuring the perceived hand position before the reaching task might reduce the effect of the synchrony of touch on action, and measuring the perceived hand position after the reaching movement has been shown to reduce the size of the perceived position change (Kammers, Mulder, de Vignemont, & Dijkerman, 2009). It is also possible that, although the reaching movement used in our study is very fast to reduce the amount of on-line corrections, proprioceptive feedback during the movement itself regarding hand position could reduce the effect of the illusion on action to some extent (Desmurget & Grafton, 2000). Ideally, one would measure the effect on action and perception on separate trials and implement similar methods and task requirements. However, in this study we tried to maximize the number of reaching trials. It would be interesting to investigate in future studies how these two measures are related.

Whether there is a common system or two dissociable visual systems for action and perception has been widely debated using findings on the effects of purely visual illusions, such as the Ebbinghaus illusion, on action and perception (Aglioti, DeSouza, & Goodale, 1995; Bruno, 2001; Franz & Gegenfurtner, 2008; Franz, Gegenfurtner, Bulthoff, & Fahle, 2000; Goodale et al., 2008; Goodale & Milner, 1992; Smeets & Brenner, 2006). Interestingly, these findings and discussions highlight that experimental conditions and methods determine the presence and size of illusion effects on action.

Studies in both monkeys and humans indicate that parietal areas are involved in the processing of body position. The parietal cortical network is highly complex, receiving input from multiple areas including the somatosensory cortex, and likewise projecting to various regions, including the premotor and motor cortex (Pandya & Kuypers, 1969; Pearson & Powell, 1985). Graziano et al. (2000) have shown that neurons within a parietal area (area 5) encode the position of both the hidden real arm and a visible artificial arm. Furthermore, touch is able to modulate neural position coding. These findings indicate that the parietal cortex integrates information from multiple modalities to form a configuration of the body, often termed the body schema (Gallagher, 2005). Information about body configuration from the parietal cortex is then thought to be relayed to premotor and motor areas, where movements are planned (Johnson, Ferraina, Bianchi, & Caminiti, 1996; Jones, Coulter, & Hendry, 1978; Pandya & Kuypers, 1969; Pearson & Powell, 1985). In humans, functional imaging studies also suggest that intraparietal areas combine information from proprioception, vision and touch (Lloyd, Shore, Spence, & Calvert, 2003; Makin, Holmes, & Zohary, 2007). For example, Makin et al. (2007) investigated the representation of peripersonal body space, which relies on information about body position. They found that the encoding of an approaching ball was modulated in the anterior intraparietal sulcus by proprioceptive information for hand location as well as visual hand position information. This area was also activated by simple touch. Furthermore, in humans as well, the synchrony of touch applied to an artificial hand and the real hand affects signals from parietal areas (Ehrsson et al., 2004). More specifically, activity in the intraparietal cortex was related to the conditions that are necessary for the RHI to occur, i.e. synchronous touch and congruent position. In fact, Ehrsson et al. (2004) suggested that the pattern of activity in the posterior parietal cortex found when inducing the RHI relates to “the recalibration of proprioceptive representation of the upper limb in the reaching circuit” (p. 877). Parietal lesions in neuropsychological patients may cause problems in visually-guided reaching, as in optic ataxia (Karnath & Perenin, 2005). Furthermore, parietal lesions have also been reported to be related to a disturbed sense of ownership for part of the body (Critchley, 1953). Interestingly, a disturbed sense of ownership in patients has been related to position sense deficits (Vallar & Ronchi, 2009). In sum, results from previous studies indicate that the parietal cortex integrates information from multiple modalities to encode body position. Furthermore, and importantly for the interpretation of our findings, the temporal synchrony between viewing and feeling touch has been related to modulations of neural activity encoding body position.

We provide evidence that the temporal synchrony between viewing and feeling passive touch has direct behavioral effects on human interaction with the environment. Thus, the current study bridges the gap between previous neural findings and observable behavior and opens up many possibilities for future studies. Examples include studies further investigating the conditions and limits of the influence of passive touch on action, as well as studies exploring the neural basis of body representation(s) in the parietal cortex that may modulate perception and action.

In our study and in previous studies (e.g. Botvinick & Cohen, 1998; Ehrsson et al., 2004), participants generally disagree with statements such as “It felt as if my hand were drifting towards the rubber hand” and “It seemed as if the touch I was feeling came from somewhere between my own hand and the rubber hand” after both synchronous and asynchronous touch. Thus, in contrast to changes in body position for perception and action, participants do not seem to consciously perceive body position changes during the rubber hand illusion (a dissociation also noted by Makin et al., 2008). We did, however, find an indication that the subjective experience of control over the seen rubber hand is related to the size of an individual's shift in estimated body position for action towards the seen hand.

In conclusion, the synchrony between viewing and feeling touch is functionally relevant for the encoding of the body position used in action and perception. We propose that the brain analyses correlated multisensory as well as sensory-motor cues to update its own position for action and experience of self-location.

Acknowledgements

We would like to thank Glenn Carruthers for fruitful discussions and Chris Baker, Dwight Kravitz and Anina Rich for their insightful comments on an earlier version of this manuscript. MF is an Australian Research Fellow and MAW is a Queen Elizabeth II Fellow, both funded by the Australian Research Council.

References

- Aglioti S, DeSouza JF, Goodale MA. Size-contrast illusions deceive the eye but not the hand. Curr Biol. 1995;5(6):679–685. doi: 10.1016/s0960-9822(95)00133-3.

- Botvinick M, Cohen J. Rubber hands 'feel' touch that eyes see. Nature. 1998;391(6669):756. doi: 10.1038/35784.

- Bruno N. When does action resist visual illusions? Trends Cogn Sci. 2001;5(9):379–382. doi: 10.1016/s1364-6613(00)01725-3.

- Costantini M, Haggard P. The rubber hand illusion: sensitivity and reference frame for body ownership. Conscious Cogn. 2007;16(2):229–240. doi: 10.1016/j.concog.2007.01.001.

- Critchley M. The parietal lobes. New York: Hafner; 1953.

- Culham JC, Cavina-Pratesi C, Singhal A. The role of parietal cortex in visuomotor control: what have we learned from neuroimaging? Neuropsychologia. 2006;44(13):2668–2684. doi: 10.1016/j.neuropsychologia.2005.11.003.

- Desmurget M, Grafton S. Forward modeling allows feedback control for fast reaching movements. Trends Cogn Sci. 2000;4(11):423–431. doi: 10.1016/s1364-6613(00)01537-0.

- Dijkerman HC, de Haan EH. Somatosensory processes subserving perception and action. Behav Brain Sci. 2007;30(2):189–201. doi: 10.1017/S0140525X07001392. discussion 201-139.

- Ehrsson HH, Spence C, Passingham RE. That's my hand! Activity in premotor cortex reflects feeling of ownership of a limb. Science. 2004;305(5685):875–877. doi: 10.1126/science.1097011.

- Franz VH, Gegenfurtner KR. Grasping visual illusions: consistent data and no dissociation. Cogn Neuropsychol. 2008;25(7–8):920–950.

- Franz VH, Gegenfurtner KR, Bulthoff HH, Fahle M. Grasping visual illusions: no evidence for a dissociation between perception and action. Psychol Sci. 2000;11(1):20–25. doi: 10.1111/1467-9280.00209.

- Gallagher S. How the body shapes the mind. Oxford: Oxford University Press; 2005.

- Goodale MA, Gonzalez CL, Kroliczak G. Action rules: why the visual control of reaching and grasping is not always influenced by perceptual illusions. Perception. 2008;37(3):355–366. doi: 10.1068/p5876.

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15(1):20–25. doi: 10.1016/0166-2236(92)90344-8.

- Graziano MS, Cooke DF, Taylor CS. Coding the location of the arm by sight. Science. 2000;290(5497):1782–1786. doi: 10.1126/science.290.5497.1782.

- Holmes NP, Snijders HJ, Spence C. Reaching with alien limbs: visual exposure to prosthetic hands in a mirror biases proprioception without accompanying illusions of ownership. Percept Psychophys. 2006;68(4):685–701. doi: 10.3758/bf03208768.

- Holmes NP, Spence C. Visual bias of unseen hand position with a mirror: spatial and temporal factors. Exp Brain Res. 2005;166(3–4):489–497. doi: 10.1007/s00221-005-2389-4.

- Johnson PB, Ferraina S, Bianchi L, Caminiti R. Cortical networks for visual reaching: physiological and anatomical organization of frontal and parietal lobe arm regions. Cereb Cortex. 1996;6(2):102–119. doi: 10.1093/cercor/6.2.102.

- Jones EG, Coulter JD, Hendry SH. Intracortical connectivity of architectonic fields in the somatic sensory, motor and parietal cortex of monkeys. J Comp Neurol. 1978;181(2):291–347. doi: 10.1002/cne.901810206.

- Kammers MPM, de Vignemont F, Verhagen L, Dijkerman HC. The rubber hand illusion in action. Neuropsychologia. 2009;47(1):204–211. doi: 10.1016/j.neuropsychologia.2008.07.028.

- Kammers MPM, Kootker JA, Hogendoorn H, Dijkerman HC. How many motoric body representations can we grasp? Exp Brain Res. 2010;202(1):203–212. doi: 10.1007/s00221-009-2124-7.

- Kammers MPM, Longo MR, Tsakiris M, Dijkerman HC, Haggard P. Specificity and coherence of body representations. Perception. 2009;38(12):1804–1820. doi: 10.1068/p6389.

- Kammers MPM, Mulder J, de Vignemont F, Dijkerman HC. The weight of representing the body: addressing the potentially indefinite number of body representations in healthy individuals. Exp Brain Res. 2009. doi: 10.1007/s00221-009-2009-9.

- Karnath HO, Perenin MT. Cortical control of visually guided reaching: evidence from patients with optic ataxia. Cereb Cortex. 2005;15(10):1561–1569. doi: 10.1093/cercor/bhi034.

- Lloyd DM, Shore DI, Spence C, Calvert GA. Multisensory representation of limb position in human premotor cortex. Nature Neuroscience. 2003;6(1):17–18. doi: 10.1038/nn991.

- Makin TR, Holmes NP, Ehrsson HH. On the other hand: dummy hands and peripersonal space. Behav Brain Res. 2008;191(1):1–10. doi: 10.1016/j.bbr.2008.02.041.

- Makin TR, Holmes NP, Zohary E. Is that near my hand? Multisensory representation of peripersonal space in human intraparietal sulcus. J Neurosci. 2007;27(4):731–740. doi: 10.1523/JNEUROSCI.3653-06.2007.

- Newport R, Pearce R, Preston C. Fake hands in action: embodiment and control of supernumerary limbs. Exp Brain Res. 2010;204(3):385–395. doi: 10.1007/s00221-009-2104-y.

- Pandya DN, Kuypers HG. Cortico-cortical connections in the rhesus monkey. Brain Res. 1969;13(1):13–36. doi: 10.1016/0006-8993(69)90141-3.

- Pearson RCA, Powell TPS. The projections of the primary somatosensory cortex upon area 5 in the monkey. Brain Research Reviews. 1985;9:89–107. doi: 10.1016/0165-0173(85)90020-7.

- Redding GM, Wallace B. Strategic calibration and spatial alignment: a model from prism adaptation. J Mot Behav. 2002;34(2):126–138. doi: 10.1080/00222890209601935.

- Rossetti Y, Desmurget M, Prablanc C. Vectorial coding of movement: vision, proprioception, or both? J Neurophysiol. 1995;74(1):457–463. doi: 10.1152/jn.1995.74.1.457.

- Shimada S, Fukuda K, Hiraki K. Rubber hand illusion under delayed visual feedback. PLoS One. 2009;4(7):e6185. doi: 10.1371/journal.pone.0006185.

- Smeets JB, Brenner E. 10 years of illusions. J Exp Psychol Hum Percept Perform. 2006;32(6):1501–1504. doi: 10.1037/0096-1523.32.6.1501.

- Tsakiris M, Haggard P. The rubber hand illusion revisited: visuotactile integration and self-attribution. J Exp Psychol Hum Percept Perform. 2005;31(1):80–91. doi: 10.1037/0096-1523.31.1.80.

- Tsakiris M, Prabhu G, Haggard P. Having a body versus moving your body: How agency structures body-ownership. Conscious Cogn. 2006;15(2):423–432. doi: 10.1016/j.concog.2005.09.004.

- Vallar G, Ronchi R. Somatoparaphrenia: a body delusion. A review of the neuropsychological literature. Exp Brain Res. 2009;192(3):533–551. doi: 10.1007/s00221-008-1562-y.

- van Beers RJ, Sittig AC, Gon JJ. Integration of proprioceptive and visual position-information: An experimentally supported model. J Neurophysiol. 1999;81(3):1355–1364. doi: 10.1152/jn.1999.81.3.1355.

- Zopf R, Savage G, Williams MA. Crossmodal congruency measures of lateral distance effects on the rubber hand illusion. Neuropsychologia. 2010;48(3):713–725. doi: 10.1016/j.neuropsychologia.2009.10.028.