Abstract

The resurgence of temporal patterns of key pecking by pigeons was investigated in two experiments. In Experiment 1, positively accelerated and linear patterns of responding were established on one key under a discrete-trial multiple fixed-interval variable-interval schedule. Subsequently, only responses on a second key produced reinforcers according to a variable-interval schedule. When reinforcement on the second key was discontinued, positively accelerated and linear response patterns resurged on the first key, in the presence of the stimuli previously correlated with the fixed- and variable-interval schedules, respectively. In Experiment 2, resurgence was assessed after temporal patterns were directly reinforced. Initially, responding was reinforced if it approximated an algorithm-defined temporal pattern during trials. Subsequently, reinforcement depended on pausing during trials and, when it was discontinued, resurgence of previously reinforced patterns occurred for each pigeon and for 2 of 3 pigeons during a replication. The results of both experiments demonstrate the resurgence of temporally organized responding and replicate and extend previous findings on resurgence of discrete responses and spatial response sequences.

Keywords: resurgence, temporal patterns of responding, behavioral history, discrete-trial procedure, key peck, pigeons

Resurgence is the recurrence of previously reinforced responding when current responding is no longer reinforced (Epstein, 1983). It has been suggested to play a role in the selection and provenance of operants, in novel behavior and creativity (Epstein, 1996), and in several applied issues. The latter include addiction relapse (Podlesnik, Jimenez-Gomez & Shahan, 2006), failures of treatment integrity in behavioral interventions (Lattal & St. Peter Pipkin, 2009; Lieving, Hagopian, Long & O'Connor, 2004), and even Freudian regression (Keller & Schoenfeld, 1950).

Resurgence has been investigated using a three-phase procedure (Epstein & Skinner, 1980; Leitenberg, Rawson & Mulick, 1975). In the first (Training) phase, some form of responding is reinforced. In the second (Response-Elimination) phase, reinforcement of the first response is discontinued and an alternative response is reinforced. In the third (Resurgence) phase, nonreinforcement of the first response continues and reinforcement of the alternative response is discontinued. Laboratory studies of the resurgence of discrete responses have established basic parameters of this phenomenon (e.g., da Silva, Maxwell & Lattal, 2008; Doughty, da Silva, & Lattal, 2007; Epstein, 1983, 1985; Leitenberg et al., 1975; Lieving & Lattal, 2003; Podlesnik & Shahan, 2009; Winterbauer & Bouton, 2010). Given its theoretical and practical significance, there has been interest in extending the analysis of resurgence beyond discrete responses on a single operandum. These extensions largely have involved the resurgence of spatial patterns of responding, that is, of responses distributed across different operanda.

Sánchez-Carrasco and Nieto (2005), for example, initially trained two groups of rats to emit sequences of three lever presses distributed across two levers. During the Response-Elimination phase, a different sequence was reinforced for rats in each group. In the Resurgence phase, an increase in sequence variability was observed for rats in both groups, but those sequences that were reinforced during training occurred at a higher frequency than did other sequences. Bachá-Mendez, Reid, and Mendoza-Soylovna (2007; see also Reed & Morgan, 2006) replicated these results in two experiments in which rats also were trained to emit sequences of two lever presses distributed between two levers. In each of four phases (Experiments 1 and 2), a given sequence was reinforced while reinforcement for previously trained sequences was discontinued. Resurgence of previously reinforced response sequences was observed for each rat in both experiments.

The extension of resurgence to multielement operants has focused almost exclusively on the analysis of spatially defined response sequences. The resurgence of multielement operants defined as temporal patterns of responding has been investigated in only a single experiment. Carey (1951; see also Keller & Schoenfeld, 1950) assessed the recurrence of temporally defined sequences of lever presses by rats. For one group, reinforcers initially were dependent on the occurrence of two lever presses with interresponse times (IRTs) ≤ 0.25 s (“double” lever press). For the other, IRTs of specific durations were not required and reinforcers were dependent on only one lever press (“single” lever press). In the Response-Elimination phase, only single lever presses by rats in the first group were reinforced and, for the rats in the second group, only lever presses with IRTs ≤ 0.25 s were reinforced. In the Resurgence phase, the frequency of the IRTs reinforced in the first phase increased (i.e., there was a resurgence of double or single lever presses) for rats in both groups, while the frequency of recently reinforced responding decreased. Although primogenial, Carey's experiment is not a convincing demonstration of the resurgence of temporally organized behavior. Because the research was reported only in abstract form, it is not possible to glean the details of his procedure or to assess the validity and reliability of his findings. (Note: The Columbia University library was unable to find the dissertation on which the abstract seems to have been based: Carey, 1953. The dissertation research also is described briefly by Dinsmoor, 1990.)

In comparison to the two-response temporally organized patterns that Carey (1951) arranged, multiple responses also may be organized, or become organized, into units. For example, Zeiler (1977) suggested that fixed-ratio (FR) schedules may organize the responses in ratios into behavioral units, and others have made similar suggestions for fixed-interval (FI) schedules (Dews, 1970; Ferster & Skinner, 1957; Shull, 1970; Shull, Guilkey & Witty, 1972; Zeiler, 1968, 1977). Multiple temporally organized responses also have been reinforced directly (Hawkes & Shimp, 1975, 1998; Wasserman, 1977). Hawkes and Shimp (1975) exposed pigeons to a discrete-trial procedure in which reinforcement was, in different conditions, dependent on positively and negatively accelerated patterns of responding. The temporal patterns generated by Hawkes and Shimp were based on algorithms that specified a constant rate of change in rate of key pecking during each trial. The criterion for reinforcement was based on how much the obtained patterns deviated from the algorithms and, as that criterion was made successively more stringent, the frequency of patterns that approximated the models increased systematically.

Previous experiments have established the resurgence of spatially organized multielement operants (e.g., Reed & Morgan, 2006; Sánchez-Carrasco & Nieto, 2005). The present experiments complement these earlier findings by analyzing the resurgence of temporally organized patterns of responding.

EXPERIMENT 1

In this experiment, a discrete-trial multiple schedule was used to assess whether the different temporal patterns established in each component would resurge differentially, in a manner similar to that observed with discrete (Doughty et al., 2007; Epstein, 1983) and spatially organized (e.g., Sánchez-Carrasco & Nieto, 2005) responses.

Method

Subjects

Three male White Carneau pigeons (775, 847 and 691) were maintained at 80% (± 15 g) of their free-feeding body weights. They were housed individually, with free access to water and health grit, in a colony room with a 12∶12 hr light∶dark cycle. Each had a history of responding under a variety of reinforcement schedules.

Apparatus

Two plywood operant chambers for pigeons (30 cm long × 32 cm wide × 38 cm high) were used. The front wall was an aluminum panel with three 2-cm diameter Gerbrands Co. response keys, 9 cm apart (center to center) and with their lower edge 25 cm from the floor. The center and right keys were used and each was operated by a minimum force of 0.15 N. The center key could be transilluminated white or green. The right key was transilluminated red in one chamber, and blue in the other chamber. General illumination was provided by two 28-V white houselights located in the lower right corner of the aluminum panel for one chamber, and on the ceiling, 12 cm from the midline of the aluminum panel, for the other. A food hopper was located behind a rectangular aperture (5 cm × 4 cm) at the center of the aluminum panel, with its lower edge 8 cm from the floor. When raised, the hopper was illuminated by a 28-V DC white light and provided 3-s access to mixed grain, during which the keylights and houselight were off. White noise and a ventilation fan in each chamber masked extraneous sounds. Programming of conditions and data recording were accomplished by using MED-PC® interfacing and software and an IBM® microcomputer located in an adjacent room.

Procedure

A two-component discrete-trial multiple schedule of reinforcement was used. Throughout the experiment, each session started with a 180-s blackout, during which the keylights and the houselight were off. During trials, the houselight was on and the appropriate response key was transilluminated for 5 s (see the description of the Training phase, below, for an exception). Trials were separated by 10-s intertrial intervals (ITIs), during which the houselight and keylights were off. Responses during the first 5 s of the ITI had no programmed consequences, but a differential-reinforcement-of-other-behavior (DRO) 5-s schedule was in effect during the last 5 s of the ITI to preclude responses from occurring near trial onset. Each schedule component occurred with a .5 probability at the beginning of each trial, with the constraints that the same component could not occur on more than three consecutive trials and that each session contained an equal number of trials of each component. Sessions ended after 90 trials of each schedule component and were conducted 7 days a week, at approximately the same time each day, during the light period of the colony room light/dark cycle. Table 1 (rightmost column) shows the number of sessions that each of the phases described below was in effect. The sequence of phases and the conditions in each were as follows.
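The trial-sequencing constraints above can be illustrated with a short Python sketch. The source does not say how the sequences were generated, so the approach here is hypothetical, as is the function name `make_session_sequence`. Each trial's component is drawn with equal probability from the components still permitted, and the attempt is restarted whenever the constraints leave no legal choice. Note that the .5 probability then holds only when both components are permitted.

```python
import random
from itertools import groupby


def make_session_sequence(n_per_component=90, max_run=3, seed=None, max_attempts=10_000):
    """Return a list of 'FI'/'VI' trial labels for one session (hypothetical sketch).

    A component is excluded when its quota is used up or when it has already
    occurred on max_run consecutive trials. If neither component is permitted,
    the attempt is abandoned and a new sequence is started.
    """
    rng = random.Random(seed)
    total = 2 * n_per_component
    for _ in range(max_attempts):
        remaining = {"FI": n_per_component, "VI": n_per_component}
        sequence = []
        run_length = 0
        while len(sequence) < total:
            allowed = [
                c for c in ("FI", "VI")
                if remaining[c] > 0
                and not (sequence and sequence[-1] == c and run_length >= max_run)
            ]
            if not allowed:
                break  # dead end: remaining quotas cannot be met without a long run
            choice = rng.choice(allowed)  # equal odds when both are permitted
            run_length = run_length + 1 if sequence and sequence[-1] == choice else 1
            sequence.append(choice)
            remaining[choice] -= 1
        else:
            return sequence  # inner loop finished without a dead end
    raise RuntimeError("no valid sequence found")


seq = make_session_sequence(seed=1)
longest_run = max(len(list(group)) for _, group in groupby(seq))
print(len(seq), seq.count("FI"), seq.count("VI"), longest_run <= 3)  # 180 90 90 True
```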

Table 1.

Mean reinforcement rate (standard deviations in parentheses) and total number of sessions for each pigeon in each phase of Experiment 1.

Training

A multiple FI variable-interval (VI) schedule was in effect on the center key. In the presence of a white keylight, responding was reinforced according to a VI 15-s schedule, arranged according to the distribution described by Fleshler and Hoffman (1962). The timer controlling the VI schedule operated only when the keylight was white. Reinforcers made available but not collected in one VI component were carried over to the next VI component. In the presence of a green keylight, an FI schedule was in effect. To equate reinforcement rates between schedule components, on FI trials reinforcers were produced with a probability of .33 by the first response after 5 s elapsed. VI trials always were 5 s in duration and reinforcers could occur at any time within a trial (and, because of the variable interval, some trials were concluded without a reinforcer being arranged or delivered). FI trials ended with the first response after 5 s, and varied slightly in actual duration depending on how soon after this interval elapsed the pigeons actually responded. During FI trials, reinforcer deliveries, when scheduled, always occurred at the end of the trial. This phase lasted for a minimum of 20 sessions and until positively accelerated and linear patterns of responding consistently occurred during FI and VI components, respectively.
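The following is a sketch of the Fleshler and Hoffman (1962) progression, in the form in which it is usually given, for building a VI interval list. It also shows the arithmetic that presumably motivated the .33 probability on FI trials. If the VI timer runs only during 5-s VI trials, at most about 5/15 reinforcers can be arranged per VI trial. The number of intervals in the list (20 here) and their order of presentation are not reported in the source and are assumptions.

```python
import math


def fleshler_hoffman(mean_s, n_intervals):
    """Interval list (s) for a VI schedule following Fleshler and Hoffman (1962)."""

    def x_log_x(x):
        return x * math.log(x) if x > 0 else 0.0  # convention: 0 * ln(0) = 0

    intervals = []
    for n in range(1, n_intervals + 1):
        k = n_intervals - n
        intervals.append(mean_s * (1 + math.log(n_intervals) + x_log_x(k) - x_log_x(k + 1)))
    return intervals


vi_15 = fleshler_hoffman(15.0, 20)  # 20 intervals is an assumption, not reported
print(round(sum(vi_15) / len(vi_15), 6))  # 15.0: the list mean equals the nominal VI value

# The VI timer ran only during 5-s VI trials, so at most about 5/15 reinforcers
# could be arranged per VI trial, consistent with the .33 probability on FI trials.
print(round(5.0 / 15.0, 2))  # 0.33
```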

Response Elimination

The multiple schedule was as described for the Training phase, except that during each 5-s trial both the center and right keylights were transilluminated. The center keylight was white or green, and the color of the right keylight was the same in both components (blue for Pigeon 847 and red for Pigeons 691 and 775). In this phase, reinforcement of pecking the center key was discontinued, and right-key responding was reinforced according to a VI 15-s schedule, arranged as described for the Training phase. A 2-s pause–response changeover delay (Shahan & Lattal, 1998) was in effect such that, during each trial, responding on the right key was never reinforced within 2 s of a response on the center key. This phase lasted for a minimum of 15 sessions and until responding on the right key occurred consistently, and response rates on the center key were less than one response per min in both components, for three consecutive sessions.
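The pause-response changeover delay can be expressed as an eligibility check on each right-key response. This hypothetical helper assumes two details the source does not specify: times are measured within a single trial, and a right-key response exactly 2 s after a center-key peck falls outside the delay.

```python
def right_key_eligible(right_t, center_times, cod_s=2.0):
    """Can a right-key response at right_t (s from trial onset) collect an arranged
    VI reinforcer? Under a pause-response changeover delay it cannot if a center-key
    response occurred within the preceding cod_s seconds of the same trial."""
    earlier = [t for t in center_times if t <= right_t]
    return not earlier or right_t - max(earlier) >= cod_s


center_pecks = [0.5, 1.0]
print(right_key_eligible(2.5, center_pecks))  # False: only 1.5 s after a center peck
print(right_key_eligible(3.2, center_pecks))  # True: 2.2 s after the last center peck
```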

Resurgence

The multiple schedule as described under the Response-Elimination phase was in effect; however, extinction was programmed on the center and right keys in both components. This phase lasted for 15 sessions.

Results

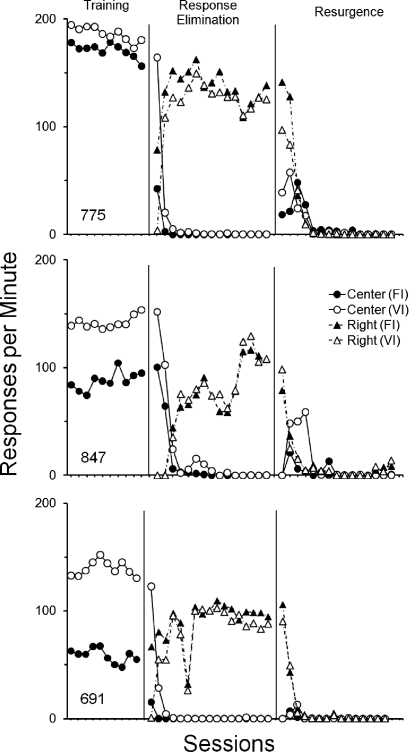

Figure 1 shows response rates in each schedule component during the last 10 sessions of the Training phase and all sessions of the Response-Elimination and Resurgence phases. During Training, response rates on the center key were higher in the VI component for each pigeon, although the response-rate difference between schedule components was less pronounced for Pigeon 775. Across Response-Elimination sessions, responding on the center key eventually ceased as response rates on the right key increased and stabilized. Table 1 (leftmost column) shows that reinforcement rates were similar in both components during the Training and Response-Elimination phases, although slightly higher in the VI component for each pigeon.

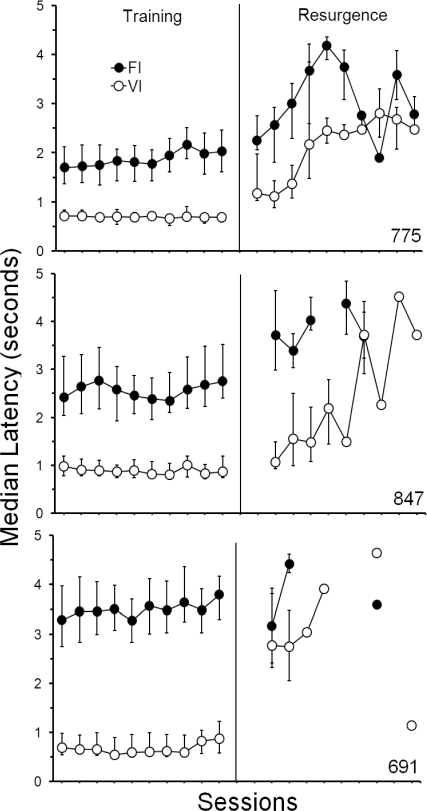

Fig 1.

Responses per minute during each schedule component for the last 10 sessions of the Training phase and all sessions of the Response-Elimination and Resurgence phases of Experiment 1.

Resurgence of key pecking occurred for each pigeon. Relative to the last three sessions of the Response-Elimination phase, response rates on the right key decreased, and responding on the center key increased in both components during the initial sessions of the Resurgence phase. In absolute terms (i.e., responses per minute), more resurgence occurred in the previously VI component. After extended exposure to the Resurgence phase, response rates on both keys fell to zero or near zero for each pigeon.

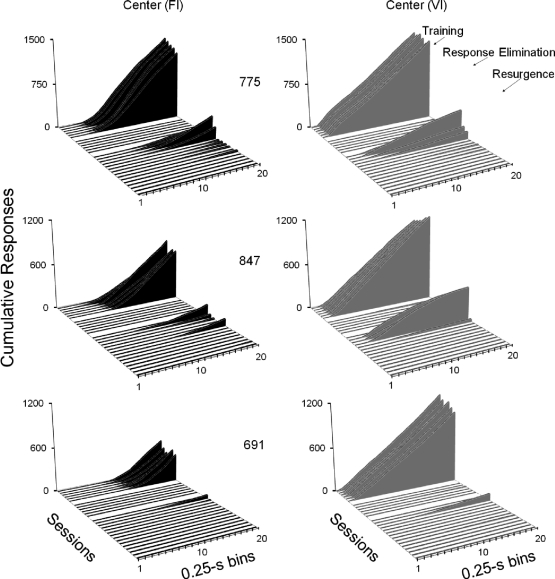

Figure 2 shows center-key cumulative response distributions in 0.25-s bins, accumulated across the 90 trials of each schedule component within a session, for each pigeon. In each graph, the distributions, from upper to lower diagonal, are from the last six sessions of the Training and Response-Elimination phases and from all sessions of the Resurgence phase. The same Resurgence-phase data are shown in Figure 3, using an expanded Y-axis scale to show the resurgence effect in greater detail. During the Training phase, positively accelerated and linear patterns of responding occurred consistently in the FI and VI components, respectively, for each pigeon: pauses followed by positively accelerated responding occurred within the 5-s FI trials, and more linear response distributions occurred within VI trials. For each pigeon, these patterns were absent during the last six sessions of the Response-Elimination phase. For Pigeons 775 and 847, the temporal patterns of responding established in the Training phase resurged. That is, during the first five sessions of the Resurgence phase, positively accelerated and more linear patterns of responding occurred in the former FI and VI components, respectively. Although patterns in the VI component were slightly negatively accelerated for Pigeon 847, resurgence was still differential between the two components for this pigeon. Pigeon 691 exhibited positively accelerated patterns of responding in the presence of both stimuli; although patterning still differed between stimuli, these differences were related primarily to the frequency of responding in each component and not necessarily to differential patterning as observed for Pigeons 775 and 847.

Fig 2.

Cumulative response distributions in 0.25-s bins for each pigeon in Experiment 1. Each graph shows, from upper to lower diagonal, distributions for the last six sessions of the Training and Response-Elimination phases, and all sessions of the Resurgence phase during FI (black) and VI (gray) schedule components. Phases are separated by white lines in the horizontal plane on each graph.

Fig 3.

Cumulative response distributions in 0.25-s bins for each pigeon in Experiment 1. Each graph shows, from upper to lower diagonal, distributions for all sessions of the Resurgence phase. Other details as in Figure 2.

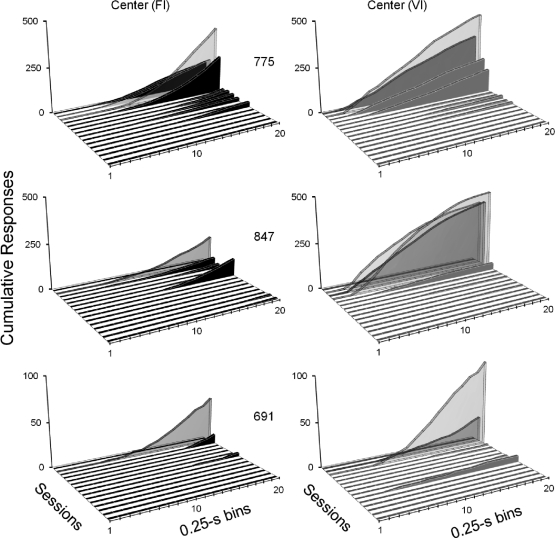

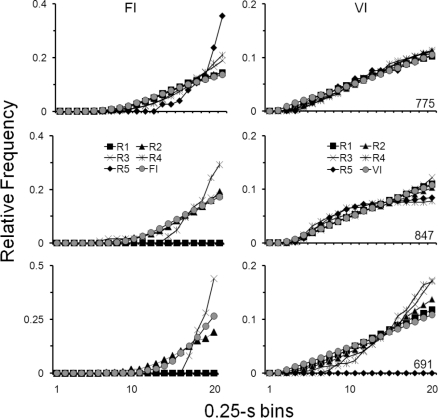

The data in Figure 4 support the previous analyses and allow a direct comparison of responding in each component during the last session of the Training phase and each of the first five sessions of the Resurgence phase. Cumulative response distributions in 0.25-s bins (see Figures 2 and 3) are shown, for each pigeon, as relative frequency distributions in the FI (left graphs) and VI (right graphs) components, respectively. During the initial sessions of the Resurgence phase (e.g., sessions R1, R2 and R3), the patterns of responding in each component were similar to those patterns occurring during the last Training-phase session, for each pigeon. That is, positively accelerated and linear patterns of responding occurred in the FI and VI components, respectively. As with the data in Figures 2 and 3, less differentiation in patterning between schedule components occurred for Pigeon 691 during the Resurgence phase, and for Pigeon 847, patterns in the VI component during sessions four (R4) and five (R5) of the Resurgence phase were slightly negatively accelerated. Thus, the similarity of patterns within components (indicated by the overlap between the distributions of Training and Resurgence-phase sessions in Figure 4), and the differential patterning between components (indicated by the differences in distributions seen between functions in the left and right graphs) during Training and Resurgence phases support the previous descriptions of resurgence of temporal patterns of responding.

Fig 4.

Relative frequency distributions of cumulative responses in 0.25-s bins, for each pigeon in Experiment 1. Left and right graphs show relative frequency distributions in the FI and VI components, respectively, for the last session of the Training phase (gray circles) and for each of the first five sessions of the Resurgence phase (R1 through R5). Distributions were generated for each component by dividing the number of responses in each bin by the total number of responses.
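The cumulative and relative frequency distributions described for Figures 2 through 4 can be sketched as follows, assuming response times are recorded in seconds from trial onset. Two choices are assumptions: responses after 5 s (possible on FI trials) go in the last bin, and the Figure 4 normalization is read as each bin's cumulative count divided by the total count.

```python
from itertools import accumulate


def cumulative_distribution(trials, trial_s=5.0, bin_s=0.25):
    """Cumulative and relative cumulative response distributions across trials.

    `trials` is a list of lists of response times (s from trial onset).
    Returns (cumulative counts per bin, cumulative counts / total responses).
    """
    n_bins = int(round(trial_s / bin_s))
    counts = [0] * n_bins
    for responses in trials:
        for t in responses:
            # Responses after trial_s (possible on FI trials) go in the last bin.
            counts[min(int(t // bin_s), n_bins - 1)] += 1
    cumulative = list(accumulate(counts))
    total = cumulative[-1]
    relative = [c / total for c in cumulative] if total else [0.0] * n_bins
    return cumulative, relative


cum, rel = cumulative_distribution([[4.1, 4.6], [3.9, 4.8, 4.9]])
print(cum[-1], rel[15], rel[-1])  # 5 0.2 1.0
```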

In addition to the visual analysis of cumulative response distributions in each component, the differential patterning receives further support from an analysis of latencies to the first response in a trial. Figure 5 shows, for the last 10 sessions of the Training phase and the first 10 sessions of the Resurgence phase, the median latency (in seconds) to the first response on the center key within a trial in each component. During the Training phase, latencies were longer in FI than in VI components. During the initial sessions of the Resurgence phase, latencies increased in both components for all pigeons but were longer in the former FI component. As the Resurgence phase progressed, latencies in the two components became undifferentiated. This, however, was more a function of a decrease in rate of responding in both components (as seen in Figures 2 and 3) than of the patterns in both components becoming more similar.

Fig 5.

Median latency (in seconds) to the occurrence of the first center-key response within a trial, during each schedule component. Latencies are shown for the last 10 sessions of the Training phase and the first 10 sessions of the Resurgence phase of Experiment 1. Error bars extend from the 25th to the 75th percentile. Missing data, for either component, reflect sessions in which responding did not occur. Data points without error bars indicate sessions in which only one response occurred and, thus, represent the latency for the occurrence of that response.
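The following is a sketch of the latency summary plotted in Figure 5: the median latency to the first center-key response per trial, with 25th and 75th percentiles. The source does not state which percentile method was used, so `statistics.quantiles` with `method="inclusive"` (linear interpolation) is an assumption. Trials without responses are excluded. Sessions with zero or one response are handled as the Figure 5 caption describes.

```python
import statistics


def first_latency(responses):
    """Latency (s) from trial onset to the first response, or None if none occurred."""
    return min(responses) if responses else None


def latency_summary(trials):
    """Median and 25th/75th percentiles of first-response latencies in one session."""
    latencies = sorted(lat for lat in map(first_latency, trials) if lat is not None)
    if not latencies:
        return None  # no responding: plotted as missing data
    if len(latencies) == 1:
        return latencies[0], None, None  # a single response: no error bars
    q1, _, q3 = statistics.quantiles(latencies, n=4, method="inclusive")
    return statistics.median(latencies), q1, q3


session = [[1.0, 2.0], [2.0], [], [3.0, 4.5], [4.0], [5.0]]
print(latency_summary(session))  # (3.0, 2.0, 4.0)
```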

Discussion

The responding maintained by discrete-trial FI and VI schedules resurged in much the same way as does free-operant responding (e.g., Lieving & Lattal, 2003). Furthermore, responding organized into temporal patterns resurged in a similar way as both individual responses (e.g., da Silva et al., 2008; Epstein, 1983) and sequences of responses (e.g., Sánchez-Carrasco & Nieto, 2005; Reed & Morgan, 2006). This was true for both the magnitude and time course of resurgence, and these effects were replicated (cf. Lieving & Lattal).

The differential resurgence of the temporal response patterns is not prima facie evidence of the resurgence of response patterns as conditionable behavioral units. Although not a requirement for reinforcement under such contingencies, positively accelerated and linear patterns of responding typically are established on, respectively, FI and VI schedules of reinforcement (Catania & Reynolds, 1968; Ferster & Skinner, 1957; Shull, 1970; Shull et al., 1972; Zeiler, 1968, 1977). In the present experiment, exposure to FI and VI schedules during the Training phase could have changed the organization of behavior from discrete responses into specific distributions of responses in time. Hawkes and Shimp (1975, 1998) established control over response distributions across 5-s intervals by directly reinforcing such patterns, and Wasserman (1977) obtained similar results within 8-s intervals. Nonetheless, because there was no contingency between a particular pattern and reinforcement in the present experiment, the different patterns that developed were the result of indirect variables operating within FI and VI schedules and not the result of direct reinforcement of a particular pattern of responding. The purpose of the second experiment, therefore, was to further assess the resurgence of temporal patterns of responding when a contingency between specific temporal patterns and reinforcement was in effect during the Training phase.

EXPERIMENT 2

In Experiment 2, resurgence was assessed after specific temporal patterns of responding were directly reinforced during the Training phase using the procedure developed by Hawkes and Shimp (1975, 1998).

Method

Subjects

Three male White Carneau pigeons (617, 955 and 119) were housed as described in Experiment 1 and maintained at 80% (± 15 g) of their free-feeding body weights. Each had a history of responding under different schedules of food reinforcement.

Apparatus

Three operant chambers similar to those described in Experiment 1 were used. The only difference was that the front panel of one chamber contained two 2-cm diameter Gerbrands Co. response keys, separated by 15 cm (center to center). The right key was used in one chamber (Pigeon 119) and the center key in the other two (Pigeons 617 and 955). The keys were transilluminated red, and no houselight was used in this experiment. All other details were as described in Experiment 1.

Procedure

A discrete-trial procedure was used in Experiment 2. During each phase, sessions started after a 60-s blackout, during which the keylight was off. During trials, the keylight remained on for 5 s (see description of Pretraining 1, below, for an exception). As in Experiment 1, trials were followed by 10-s ITIs, during which the keylight was off. Responses during the first 5 s of the ITI had no programmed consequences, but, as in Experiment 1, a DRO 5-s schedule was in effect during the last 5 s of the ITI to preclude responses from occurring near trial onset. Sessions ended after 60 trials, and were conducted 7 days a week at approximately the same time, during the light period of the light/dark cycle. The rightmost column of Table 2 shows the number of sessions that each of the post-pretraining phases was in effect. The sequence of phases and conditions in each were as follows.
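The source states only that a DRO 5-s schedule operated during the last 5 s of each ITI. If that DRO was a resetting one, each response restarts the 5-s interval and so postpones the next trial. Under that assumption, trial onset can be computed as below. The function name and the resetting rule are both assumptions.

```python
def trial_onset(iti_responses, free_s=5.0, dro_s=5.0):
    """Time (s from ITI onset) at which the next trial begins.

    Assumes a resetting DRO: responses during the first free_s seconds have no
    effect; from then on, each response restarts the dro_s interval, and the
    trial begins once dro_s seconds pass without a response.
    """
    dro_start = free_s
    for t in sorted(iti_responses):
        if t >= dro_start + dro_s:
            break  # the trial had already begun before this response
        if t >= dro_start:
            dro_start = t  # response restarts the DRO interval
    return dro_start + dro_s


print(trial_onset([]))           # 10.0: nominal 10-s ITI
print(trial_onset([2.0]))        # 10.0: a response in the first 5 s is ignored
print(trial_onset([7.0, 11.5]))  # 16.5: each response postpones trial onset
```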

Table 2.

Mean proportion of reinforced trials (standard deviations in parentheses) and total number of sessions for each pigeon in each phase of Experiment 2.

Pretraining 1

Each pigeon first received five 60-reinforcer sessions during which an FI 5-s schedule of reinforcement was in effect during each trial to ensure that the pigeons responded consistently when the keylight was on. All procedural details were as described above, except that trials varied slightly in actual duration depending on how soon a response occurred after 5 s elapsed.

Following the above procedure, to directly reinforce positively accelerated patterns of responding, a schedule described by Hawkes and Shimp (1975) was in effect during each trial. Considering a 5-s trial and subintervals of 1 s, the required positively accelerated response pattern was defined based on the function:

$$f(t) = f(t)'\,t, \qquad t = 0, 1, 2, 3, 4 \tag{1}$$

where f(t) is the response rate at time t, the time in seconds from the beginning of a trial, and f(t)′, the first derivative of f(t), specifies the rate of change in rate of responding across successive 1-s subintervals of a 5-s trial. Because positively accelerated patterns were required in the present experiment, f(t)′ was set to +1. Thus, the function specifies the number of responses required to occur during each subinterval of a trial.

The required pattern was the standard against which obtained patterns in each trial were compared, and the deviation of the obtained from the required pattern was calculated as the sum of squared deviations (hereafter, D). Mathematically, it is expressed as (Hawkes & Shimp, 1975, p. 6):

$$D = \sum_{i=1}^{5} \left(f_i - o_i\right)^2 \tag{2}$$

where $f_i$ and $o_i$ are, respectively, the required and obtained numbers of responses in the $i$th 1-s subinterval of a 5-s trial. Thus, the lower the value of D, the better the match between obtained and required patterns. On any trial, D = 0 if the obtained and required response patterns match perfectly, and D = 30 if no responses were emitted.
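The computation of D can be sketched as follows. The required counts (0, 1, 2, 3, 4) are inferred rather than quoted. They follow from the function f(t) with f(t)′ = +1, evaluated at the start of each 1-s subinterval, and they are the only pattern of nonnegative counts rising by one response per subinterval whose squares sum to 30. That is consistent with D = 30 when no responses occur. All function names are hypothetical.

```python
def required_pattern(slope=1, n_subintervals=5):
    """Required responses per 1-s subinterval, f(t) = slope * t for t = 0, 1, ..., 4."""
    return [slope * t for t in range(n_subintervals)]


def obtained_pattern(response_times, n_subintervals=5, sub_s=1.0):
    """Number of responses (times in s from trial onset) in each 1-s subinterval."""
    counts = [0] * n_subintervals
    for t in response_times:
        if 0 <= t < n_subintervals * sub_s:
            counts[int(t // sub_s)] += 1
    return counts


def deviation(required, obtained):
    """Sum of squared deviations D between required and obtained counts."""
    return sum((f - o) ** 2 for f, o in zip(required, obtained))


req = required_pattern()                             # [0, 1, 2, 3, 4]
print(deviation(req, [0, 0, 0, 0, 0]))               # 30: no responses
print(deviation(req, obtained_pattern([4.0, 4.5])))  # 18: only two late responses
```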

To reinforce temporal patterns that did not perfectly match the required pattern of responding, a goodness-of-fit criterion (hereafter, C; cf. Hawkes & Shimp, 1975) was set as an arbitrary value against which the sum of squared deviations (Equation 2) in each trial was compared. The criterion C was defined as an integer greater than zero, and reinforcers were delivered at the end of a trial if responses were emitted (i.e., if D ≠ 30) and if D ≤ C (see Appendix for a detailed description). During Pretraining 2, the pigeons were exposed to sessions in which the value of C changed within sessions based on their performance. This was done to determine a value that, once fixed during the Training phase, would consistently generate and maintain positively accelerated patterns of responding across trials.

Pretraining 2

Within a session, the value of C was decreased by one unit after four consecutive trials ending in reinforcer delivery. Similarly, if four consecutive trials ended without reinforcement, the value of C was increased by one unit (e.g., if C was initially set to 10, after four consecutive reinforced or nonreinforced trials, its value would be 9 or 11, respectively). During the first session, the value of C was set to 20 for each pigeon. Thereafter, the initial value of C in a session was set equal to its terminal value during the immediately preceding session. This was done unless the terminal value of C was greater than its initial value within a session, in which case C was set equal to the lower of the two values (e.g., if during Session 2, the initial and terminal values of C were, respectively, 9 and 15, C was set to 9 at the beginning of Session 3).
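The Pretraining 2 titration rule can be sketched as a per-session loop over the trial-by-trial values of D. Two details are assumptions not stated in the source: the count of consecutive trials restarts after each change in C, and C is kept at 1 or above (the text defines C only as an integer greater than zero). The between-session rule reduces to taking the smaller of a session's initial and terminal values of C.

```python
def run_pretraining_session(d_values, c_initial, step_after=4, c_min=1):
    """Titrate C within one session; returns (terminal C, per-trial reinforced flags).

    A trial is reinforced if responses occurred (D != 30) and D <= C. After
    step_after consecutive reinforced trials C drops by 1; after step_after
    consecutive nonreinforced trials it rises by 1 (streak reset is an assumption).
    """
    c = c_initial
    streak_kind, streak_len = None, 0
    outcomes = []
    for d in d_values:
        reinforced = d != 30 and d <= c
        outcomes.append(reinforced)
        if reinforced == streak_kind:
            streak_len += 1
        else:
            streak_kind, streak_len = reinforced, 1
        if streak_len == step_after:
            c = max(c_min, c - 1) if reinforced else c + 1
            streak_len = 0
    return c, outcomes


def next_initial_c(c_initial, c_terminal):
    """The next session starts at the terminal C unless C ended higher than it began."""
    return min(c_initial, c_terminal)


c, outcomes = run_pretraining_session([0, 0, 0, 30, 0, 0, 0, 0], c_initial=10)
print(c)                      # 9: four consecutive reinforced trials lowered C
print(next_initial_c(9, 15))  # 9: C ended higher than it began, so the lower value is kept
```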

This pretraining phase was in effect for a minimum of 10 sessions, and until responding consistently occurred across trials and the terminal values of C did not increase or decrease systematically across sessions. These criteria were met after 13 sessions for Pigeons 617 and 955, and 60 sessions for Pigeon 119. During the last six sessions of this phase, mean terminal values of C (with standard deviation and range in parentheses) for Pigeons 617, 955 and 119 were, respectively, 6.33 (SD = 2.33; 3–9), 8.16 (SD = 1.32; 6–10) and 9.16 (SD = 1.32; 7–11).

Training

The criterion C was fixed at a constant value across sessions. For each pigeon, C initially was set to 8. This value was maintained for Pigeons 617 and 955, but after six sessions, Pigeon 119's responding ceased completely; for this reason, C was set to 10 for this pigeon. The contingencies of reinforcement in effect, then, established that reinforcers would occur only if D ≤ 8 (Pigeons 617 and 955) or D ≤ 10 (Pigeon 119). This phase was in effect for a minimum of 15 sessions and until positively accelerated patterns of responding occurred consistently across six consecutive sessions.

Response Elimination

The value of C was set at 30, such that reinforcers were presented only if no responses occurred within a trial. The first response within a trial cancelled the programmed reinforcer for that trial, and additional responses were recorded but had no programmed consequences. This phase was in effect for a minimum of 10 sessions, and until positively accelerated patterns of responding were not systematically observed for 6 consecutive sessions.

Resurgence

The procedure in this phase was identical to that of the Training phase, except that reinforcers were never delivered at the end of a trial. This phase was in effect for 30 sessions.

Replication

A second exposure to Training (fixed C value), Response-Elimination and Resurgence phases was conducted, but the schedule of reinforcement during Training permitted more variability in patterning because C was set to 16 for Pigeons 617 and 955, and to 20 for Pigeon 119. Procedural details, the minimum number of sessions and stability criteria for each phase were as previously described, with the exception that Pigeon 119 was exposed to 21, rather than 30, sessions during the replication of the Resurgence phase. The actual number of sessions that each replication phase was in effect is shown in Table 2.

Results

Table 2 shows the mean proportion of reinforced trials per session for the last 10 sessions of the Training and Response-Elimination phases. Although reduced during the first Training phase (especially for Pigeon 119), the obtained proportion of reinforced trials was sufficient to maintain consistent responding across sessions (see Figure 6). During all subsequent phases, the proportion of reinforced trials increased relative to the first Training phase, indicating that responding was meeting the requirements of the reinforcement contingency in effect during each phase. Thus, the procedure successfully established and eliminated responding during the Training and Response-Elimination phases, respectively.

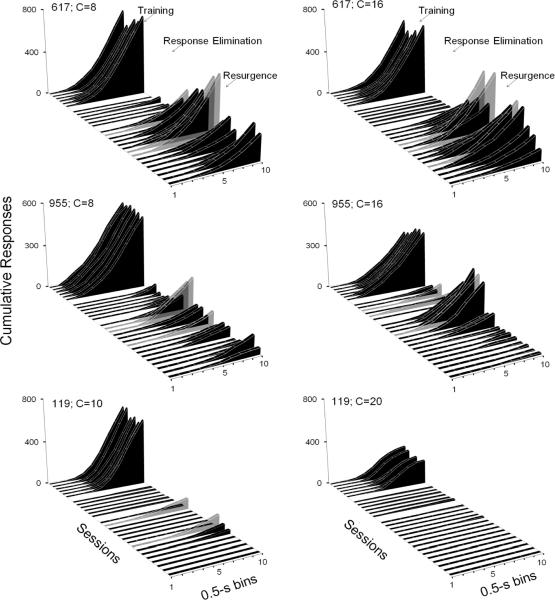

Fig 6.

Cumulative response distributions in 0.5-s bins for each pigeon during the first exposure (left graphs) and replication (right graphs) of each phase of Experiment 2. Each graph shows, from upper to lower diagonal, distributions for the last six sessions of the Training and Response-Elimination phases, and the first 15 sessions of the Resurgence phase. Phases are separated by white lines in the horizontal plane on each graph.

Figure 6 shows, for each pigeon, session cumulative response distributions in 0.5-s bins, accumulated across the 60 trials within a session, during the first exposure (left graphs) and replication (right graphs) of each phase of Experiment 2. Each graph shows, from upper to lower diagonal, distributions for each of the last six sessions of the Training and Response-Elimination phases, and the first 15 sessions of the Resurgence phase. For each pigeon, during the last six sessions of the first and second exposures to the Training phase, positively accelerated response patterns occurred consistently; that is, there were pauses at the beginning of trials followed by positively accelerated responding until the end of trials. During the last six sessions of both Response-Elimination phases, responding was systematically reduced within and across sessions, and previously observed positively accelerated patterns did not occur (Pigeons 617 and 119) or occurred at lower frequencies than during the terminal sessions of both Training phases (Pigeon 955). These data show that the pigeons were pausing, or not pecking, during almost all trials of the Response-Elimination phase sessions. Figure 6 shows that resurgence of positively accelerated patterns occurred for each pigeon during the first exposure, and for 2 of the 3 (Pigeons 617 and 955) during the replication of each phase. Rate of responding (and, consequently, patterning) was reduced systematically across sessions of both Resurgence phases. For Pigeons 617 and 955 (left and right graphs), however, positively accelerated patterns of responding still were observed after 15 Resurgence-phase sessions.

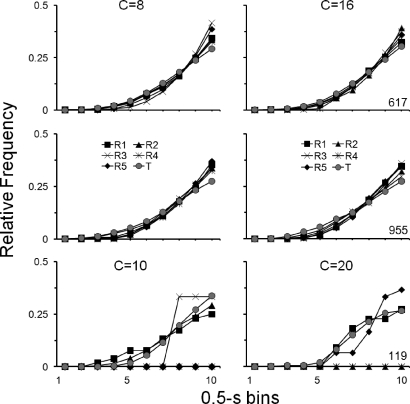

Figure 7 shows that the patterns of responding during the initial sessions of both Resurgence phases were similar to those observed during the Training phases. For each pigeon, cumulative response distributions in 0.5-s bins are shown as relative frequency distributions during the first exposure (left graphs) and replication (right graphs) for the last Training-phase session (T; gray circles) and for each of the first five sessions of the Resurgence phase (R1 through R5). For Pigeons 617 and 955, patterns of responding during the first five sessions of the Resurgence phases were similar to those during the last session of Training (i.e., the Training and Resurgence-phase distributions practically overlap). For Pigeon 119, the overlap between Training and Resurgence-phase distributions was less systematic, but the positively accelerated patterns during the first session of each Resurgence phase (i.e., R1) were similar to those during the last session of the corresponding Training phase.
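Expressing each cumulative distribution as a relative frequency removes differences in overall response rate. This is what allows Training and Resurgence sessions to be compared even though response rate declined across Resurgence sessions. The sketch below assumes (this is our assumption, not a detail stated in the text) that each cumulative bin count is divided by the session's total number of responses, so every curve ends at 1.0. The name `relative_cumulative` is hypothetical, and four bins are used for brevity.

```python
from itertools import accumulate


def relative_cumulative(bin_counts):
    """Hypothetical helper: convert per-bin response counts into a cumulative
    relative frequency distribution (each running total divided by the
    session total). A session with no responses yields all zeros."""
    cumulative = list(accumulate(bin_counts))
    total = cumulative[-1] if cumulative else 0
    if total == 0:
        return [0.0] * len(bin_counts)
    return [c / total for c in cumulative]


# Same temporal shape at one third of the rate: the relative curves coincide.
training = [0, 3, 3, 6]
resurgence = [0, 1, 1, 2]
print(relative_cumulative(training))    # [0.0, 0.25, 0.5, 1.0]
print(relative_cumulative(resurgence))  # [0.0, 0.25, 0.5, 1.0]
```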

Fig 7.

Relative frequency distributions of cumulative responses in 0.5-s bins, for each pigeon in Experiment 2. Left and right graphs show relative frequency distributions in the first exposure and replication, respectively, for the last session of the Training phase (T; gray circles) and for each of the first five sessions of the Resurgence phase (R1 through R5). Other details as in Figure 5.

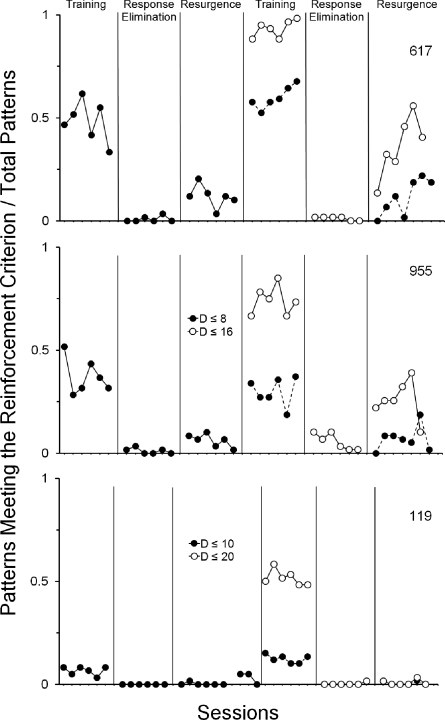

The data in Figure 8 show that the proportion of patterns that were reinforcement-eligible during the terminal sessions of both Training phases decreased, or approached zero, during the last six sessions of both Response-Elimination phases. During the Resurgence phases, the proportion of these patterns increased relative to the Response-Elimination phases. These data complement the visual analysis of the cumulative response distributions in Figures 6 and 7 and indicate that the patterns that occurred (i.e., resurged) during the Resurgence phases were similar to those occurring during the Training phases and, thus, would have produced reinforcement under the contingencies in effect during the Training phases.
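The proportions in Figure 8 amount to classifying each trial's pattern against a fixed criterion. The sketch below is illustrative only and rests on several assumptions: a trial's pattern is summarized as response counts in the five 1-s subintervals; the required pattern is 0, 1, 2, 3, and 4 responses (Appendix); the deviation D is the sum of squared differences between obtained and required counts (an assumption, although it is consistent with the Appendix value D = 30 for a trial with no responses, since 0 + 1 + 4 + 9 + 16 = 30); and a pattern is reinforcement-eligible when D ≤ C. The function names are hypothetical.

```python
REQUIRED = (0, 1, 2, 3, 4)  # required responses per 1-s subinterval (Appendix)


def deviation(obtained, required=REQUIRED):
    """Assumed form of D: sum of squared obtained-minus-required differences."""
    return sum((o - r) ** 2 for o, r in zip(obtained, required))


def proportion_eligible(session, c):
    """Hypothetical helper: fraction of trials whose pattern met, or would
    have met, an assumed criterion of D <= C."""
    if not session:
        return 0.0
    hits = sum(1 for obtained in session if deviation(obtained) <= c)
    return hits / len(session)


session = [
    (0, 1, 2, 3, 4),  # D = 0
    (0, 0, 0, 0, 0),  # no pecks (pausing): D = 30
    (0, 1, 1, 3, 5),  # D = 2
    (1, 1, 2, 2, 4),  # D = 2
]
print(proportion_eligible(session, c=8))  # 0.75
```

Applying the same function to Response-Elimination or Resurgence trials, with the C value from a Training phase, yields "would have met" proportions; under these assumptions, a session consisting only of pausing scores 0.0 for any C below 30.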

Fig 8.

Proportion of patterns meeting the reinforcement criterion for the last six sessions of the Training and Response-Elimination phases, and the first six sessions of the Resurgence phases of Experiment 2. Closed and open circles represent the first exposure and the replication, respectively: the proportion of patterns that met (i.e., during the Training phase) or would have met (i.e., during the Response-Elimination and Resurgence phases) the requirements for reinforcement of the corresponding Training phase. The closed circles connected by a dotted line represent the proportion of patterns in each phase of the replication that would have met the reinforcement criterion in effect during the first Training phase.

Discussion

The results of this experiment replicate and extend those of Experiment 1 by demonstrating the resurgence of temporal patterns when a contingency between positively accelerated patterns of responding and reinforcement was in effect during the Training phases. The Hawkes and Shimp (1975, 1998) procedure allowed temporal patterns to be specified a priori, whereas in Experiment 1 the development of patterns depended on indirect variables operative in interval schedules of reinforcement.

Although the contingencies in effect during both Training phases permitted some variability (within and across classes of patterns), especially during the second exposure to each phase (see Appendix), the procedure was effective in establishing positively accelerated patterns of responding and, thus, the conditions for analyzing their resurgence. These results therefore also replicate those reported by Hawkes and Shimp (1975, 1998; see also Wasserman, 1977): direct reinforcement of patterns during both Training phases (when C = 8 or 10, and when C = 16 or 20) established and maintained positively accelerated patterns of responding.

GENERAL DISCUSSION

The present experiments demonstrate the resurgence of temporal patterns of responding. Resurgence occurred whether the patterns developed as an indirect effect of conventional schedules of reinforcement (VI or FI; Experiment 1) or as the result of an explicit reinforcement contingency (Experiment 2). Although Carey (1951) analyzed the resurgence of response sequences in time, his analysis concerned the temporal spacing of two lever-press responses. By contrast, the present experiments established the resurgence of multiple responses organized in different temporal patterns.

The resurgence of temporal patterns in the present study was qualitatively similar to the resurgence of both single responses (Epstein, 1983; Lieving & Lattal, 2003) and response sequences (e.g., Bachá-Mendez et al., 2007; Reed & Morgan, 2006; Sánchez-Carrasco & Nieto, 2005). That is, resurgence appeared within the first or second session of the Resurgence phase, reached asymptote during the next few sessions, and declined thereafter. In addition, the robust replication of resurgence in 2 of 3 pigeons in Experiment 2 parallels Lieving and Lattal's (2003) replication of single-response resurgence during successive resurgence tests (see also da Silva et al., 2008, Experiment 2).

The present results contribute to an understanding of the variables that affect the occurrence of resurgence. Doughty et al. (2007), for example, found greater resurgence of key pecking by pigeons when the operant response in the Response-Elimination phase differed from, rather than matched, the response in the Training phase. Winterbauer and Bouton (2010) observed resurgence of rats' lever pressing after alternative lever pressing had been reinforced during the Response-Elimination phase under a variety of reinforcement schedules, regardless of the reinforcement differential between the Training and Response-Elimination phases. The present results demonstrate how the conditions of reinforcement of the response in the Training phase affect the qualitative form of subsequent resurgence.

da Silva et al. (2008, Experiment 2) showed that resurgence was related directly to response rates during the Training phase (cf. Reed & Morgan, 2007). By contrast, when response rates were held constant while reinforcement rates were varied during the Training phase, resurgence did not differ systematically as a function of reinforcement rate (da Silva et al., 2008, Experiment 3; but see Podlesnik & Shahan, 2009, 2010). The present results suggest that response rate is only one index of responding that predicts subsequent resurgence; the temporal pattern of responding is another. In the da Silva et al. experiments, response patterns were controlled by using concurrent VI schedules in the Training phases. If different schedules (e.g., FI and VI) were used in the Training phase, resurgence would be an interactive function of both the rate and the pattern of responding. If, for example, response rates were equal under the FI and VI schedules, differential resurgence still might result from the different response patterns.

With respect to responding in time as a factor in resurgence, the present results are related to those of Bruzek, Thompson and Peters (2009), who trained simulated infant-caregiving responses in humans and subsequently observed their resurgence. Although the caregiving responses had to occur in a prescribed order to be effective (e.g., participants first had to hold and manipulate a toy in a specific position to play with the simulated infant), the temporal pattern of responding was important only insofar as it reflected the order of responses. That is, their study imposed no requirements on the temporal distribution of responses. In Experiment 1 of the present study, neither a sequence of topographically different responses nor a sequence of topographically similar discrete responses was specified; however, the different reinforcement schedules established different temporal patterns, which resurged under appropriate conditions. By contrast, in Experiment 2, a sequence of topographically similar responses was specified. Thus, unlike in Bruzek et al., the temporal distribution of responses was critical: more responses had to occur at the end than at the beginning of the interval for the pattern to be reinforced. These results, therefore, complement Bruzek et al.'s analysis of the resurgence of ordered, topographically different responses by showing that topographically similar responses that are temporally organized (Hawkes & Shimp, 1975) also resurge.

When an operant is extinguished, other responses typically occur, a generative effect of extinction (Lattal, St. Peter Pipkin, & Escobar, in press). Extinction-induced responding can consist of discrete, well-defined responses or of combinations of several topographically distinct responses organized sequentially in space and time. An example of the latter is extinction-induced aggression, in which an organism engages in a compound sequence of responses directed toward another organism or a facsimile thereof (Azrin, Hutchinson, & Hake, 1966). Several authors have suggested that these compounds of temporally organized sequences are instances of resurgence (Doughty & Oken, 2008; Epstein, 1985; Lieving & Lattal, 2003; Morgan & Lee, 1996). The difficulty with such an interpretation, however, has been the lack of experimental evidence for the resurgence of compound, temporally organized responses. By providing such evidence, the present results lend support to this interpretation of extinction-induced responding.

Acknowledgments

This research was conducted by the first author in partial fulfillment of the requirements for the degree of Master of Science in Psychology at West Virginia University. The research was supported by a grant to the first author from the Eberly College of Arts and Sciences at West Virginia University, and by an Experimental Analysis of Behavior Fellowship (2009) awarded to him by the Society for the Advancement of Behavior Analysis.

Appendix

According to the function described in Equation 1 (see the Method section of Experiment 2), for a 5-s trial divided into 1-s subintervals and f′(t) = +1, the required number of responses is zero in the interval from 0 s to 1 s and one in the interval from 1 s to 2 s (i.e., f(1) = 0 at t = 1 s, and f(2) = 1 at t = 2 s; Hawkes & Shimp, 1975). In the interval from 4 s to 5 s, the required number of responses is 4, and the total number of required responses within a trial equals 10 (i.e., 0, 1, 2, 3, and 4 responses for t = 1 s, 2 s, 3 s, 4 s, and 5 s, respectively). Although the required number of responses within each 1-s subinterval is specified, no restrictions are imposed on exactly when these responses occur within the subinterval (e.g., as long as one response occurs between 1 s and 2 s, the requirement specified by Equation 1 is met, whether the response occurs at 1.25 s, 1.75 s, or 1.98 s).
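The arithmetic above can be checked with a short sketch. It assumes, consistent with the values given (f(1) = 0, f(2) = 1, slope +1), that Equation 1 reduces to f(t) = t − 1 for a 5-s trial; the exact form given in the Method section may differ. It also illustrates why response timing within a subinterval does not matter: responses are counted only per 1-s subinterval. Both function names are hypothetical.

```python
def required_pattern(trial_s=5, slope=1):
    """Required responses in each 1-s subinterval, assuming f(t) = slope * (t - 1)
    evaluated at the end of each subinterval (t = 1, 2, ..., trial_s)."""
    return [slope * (t - 1) for t in range(1, trial_s + 1)]


def counts_per_subinterval(times, trial_s=5):
    """Count responses (s from trial onset) falling in each 1-s subinterval."""
    counts = [0] * trial_s
    for t in times:
        if 0 <= t < trial_s:
            counts[int(t)] += 1
    return counts


req = required_pattern()
print(req, sum(req))  # [0, 1, 2, 3, 4] 10

# A single peck anywhere between 1 s and 2 s fills the second subinterval.
for t in (1.25, 1.75, 1.98):
    print(counts_per_subinterval([t]))  # [0, 1, 0, 0, 0]
```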

The value of the goodness-of-fit criterion, C, specified the maximum accepted deviation between obtained and required patterns and thus set the conditions for reinforcer delivery. Setting C = 1, for example, establishes a restrictive condition in which only patterns that almost perfectly match the required pattern produce the reinforcer. In contrast, setting C to a higher value (e.g., C = 20) allows more variability in responding, so that patterns that deviate considerably from the required pattern might produce a reinforcer. If the value of C is not controlled, the contingencies in effect would be similar to an FI 5-s schedule of reinforcement. The use of a fixed value of C during the Training phases in Experiment 2 also served as a reference for subsequent analyses of the relative frequency of patterns during the first exposure and the replication of the Training and Resurgence phases.
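The role of C can be illustrated with a minimal sketch. It rests on assumptions not spelled out in this section: D is taken to be the sum of squared deviations from the required counts 0, 1, 2, 3, and 4 (consistent with D = 30 for an empty trial in Figure A1), and a reinforcer is delivered when D ≤ C. The function name `reinforced` is hypothetical.

```python
REQUIRED = (0, 1, 2, 3, 4)  # required responses per 1-s subinterval of a 5-s trial


def reinforced(obtained, c, required=REQUIRED):
    """Assumed contingency: reinforce when D (sum of squared deviations) <= C."""
    d = sum((o - r) ** 2 for o, r in zip(obtained, required))
    return d <= c


near_miss = (0, 1, 2, 4, 2)  # D = 0 + 0 + 0 + 1 + 4 = 5
no_pecks = (0, 0, 0, 0, 0)   # D = 30

for c in (1, 8, 20):
    print(c, reinforced(near_miss, c), reinforced(no_pecks, c))
# 1 False False
# 8 True False
# 20 True False
```

The comparison uses ≤ rather than < because C is described as the maximum accepted deviation.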

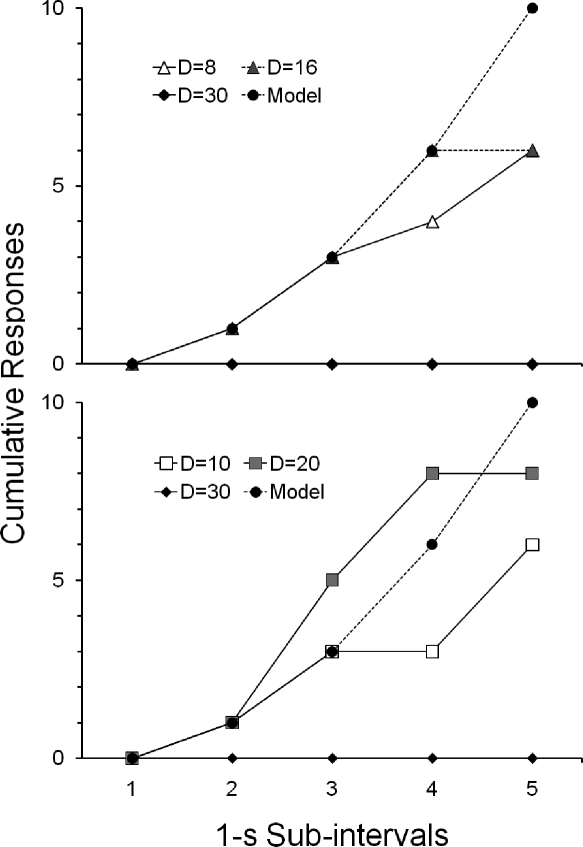

Hypothetical cumulative response distributions in 1-s subintervals of a 5-s trial are shown in Figure A1. The required pattern described by Hawkes and Shimp's (1975) model (i.e., the function in Equation 1) is represented by closed circles in both the upper and lower graphs. Also shown are examples of positively accelerated patterns that deviated from this model (i.e., classes of patterns in which D = 8 or 16 in the upper graph, and D = 10 or 20 in the lower graph). Both graphs also show patterns in which no responses occurred within a trial (i.e., D = 30). These deviations from the model, as described in the Method section of Experiment 2, defined the contingencies of reinforcement in effect for Pigeons 617 and 955 (upper graph) and Pigeon 119 (lower graph) during the first exposure and replication of each phase of Experiment 2.

Fig A1.

Cumulative response distributions (hypothetical data) in 1-s subintervals of a 5-s trial, showing the pattern described by Hawkes and Shimp's (1975) model. Also shown are the deviations from this model that defined reinforcement contingencies for Pigeons 617 and 955 (upper graph) and Pigeon 119 (lower graph) during the first exposure and replication of each phase of Experiment 2.

Two sources of pattern variability can operate under this schedule of reinforcement (Hawkes & Shimp, 1975, 1998). The first arises because the contingencies impose no restriction on when responses occur within each 1-s subinterval of a trial. The second is under the experimenter's control and is defined by the value at which C is set. Note also that a given value of D (e.g., 2) defines not a single response pattern but a response class of which that pattern is a member, because different distributions of responses within a trial can yield the same value of D (see the function in Equation 2 in the Method section of Experiment 2).
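The point that a value of D defines a response class rather than a single pattern can be made concrete by enumeration. This sketch again assumes that D is the sum of squared deviations from the required counts 0, 1, 2, 3, and 4, and it caps counts at 6 per subinterval purely to keep the search finite; both are illustrative assumptions. The name `patterns_with_deviation` is hypothetical.

```python
from itertools import product

REQUIRED = (0, 1, 2, 3, 4)  # required responses per 1-s subinterval


def deviation(obtained, required=REQUIRED):
    """Assumed form of D: sum of squared obtained-minus-required differences."""
    return sum((o - r) ** 2 for o, r in zip(obtained, required))


def patterns_with_deviation(d, max_count=6):
    """All count patterns (one count per subinterval, 0..max_count) whose D equals d."""
    return [p for p in product(range(max_count + 1), repeat=len(REQUIRED))
            if deviation(p) == d]


print(patterns_with_deviation(0))       # [(0, 1, 2, 3, 4)]
print(len(patterns_with_deviation(1)))  # 9
```

Only the exact required pattern has D = 0, whereas nine different count patterns share D = 1 (one extra or one missing response in a single subinterval, except that the first subinterval cannot lose a response). Each count pattern is itself a class, because responses may fall anywhere within their 1-s subinterval, which is the first source of variability described above.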

REFERENCES

- Azrin N.H, Hutchinson R.R, Hake D.F. Extinction-induced aggression. Journal of the Experimental Analysis of Behavior. 1966;9:191–204. doi: 10.1901/jeab.1966.9-191.

- Bachá-Mendez G, Reid A.K, Mendoza-Soylovna A. Resurgence of integrated behavioral units. Journal of the Experimental Analysis of Behavior. 2007;87:5–24. doi: 10.1901/jeab.2007.55-05.

- Bruzek J.L, Thompson R, Peters L. Resurgence of infant caregiving responses. Journal of the Experimental Analysis of Behavior. 2009;92:327–344. doi: 10.1901/jeab.2009-92-327.

- Carey J.P. Reinstatement of previously learned responses under conditions of extinction: A study of “regression” [Abstract]. American Psychologist. 1951;6:284.

- Carey J.P. Reinstatement of learned responses under conditions of extinction: A study of regression. Unpublished doctoral dissertation, Columbia University; 1953.

- Catania A.C, Reynolds G.S. A quantitative analysis of the responding maintained by interval schedules of reinforcement. Journal of the Experimental Analysis of Behavior. 1968;11:327–383. doi: 10.1901/jeab.1968.11-s327.

- da Silva S.P, Maxwell M.E, Lattal K.A. Concurrent resurgence and behavioral history. Journal of the Experimental Analysis of Behavior. 2008;90:313–331. doi: 10.1901/jeab.2008.90-313.

- Dews P.B. The theory of fixed-interval responding. In: Schoenfeld W.N, editor. The theory of reinforcement schedules. New York, NY: Appleton-Century-Crofts; 1970. pp. 43–61.

- Dinsmoor J.A. Academic roots: Columbia University, 1943–1951. Journal of the Experimental Analysis of Behavior. 1990;54:129–149. doi: 10.1901/jeab.1990.54-129.

- Doughty A.H, da Silva S.P, Lattal K.A. Differential resurgence and response elimination. Behavioural Processes. 2007;75:115–128. doi: 10.1016/j.beproc.2007.02.025.

- Doughty A.H, Oken G. Extinction-induced response resurgence: A selective review. The Behavior Analyst Today. 2008;9:27–33. Retrieved from http://www.baojournal.com/VOL-9/BAT%209-1.pdf

- Epstein R. Resurgence of previously reinforced behavior during extinction. Behaviour Analysis Letters. 1983;3:391–397.

- Epstein R. Extinction-induced resurgence: Preliminary investigations and possible applications. Psychological Record. 1985;35:143–153.

- Epstein R. Cognition, creativity & behavior: Selected essays. Westport, CT: Praeger; 1996.

- Epstein R, Skinner B.F. Resurgence of responding after cessation of response-independent reinforcement. Proceedings of the National Academy of Sciences USA. 1980;77:6251–6253. doi: 10.1073/pnas.77.10.6251.

- Ferster C.B, Skinner B.F. Schedules of reinforcement. East Norwalk, CT: Appleton-Century-Crofts; 1957.

- Fleshler M, Hoffman H.S. A progression for generating variable-interval schedules. Journal of the Experimental Analysis of Behavior. 1962;5:529–530. doi: 10.1901/jeab.1962.5-529.

- Hawkes L, Shimp C.P. Reinforcement of behavioral patterns: Shaping a scallop. Journal of the Experimental Analysis of Behavior. 1975;23:3–16. doi: 10.1901/jeab.1975.23-3.

- Hawkes L, Shimp C.P. Linear responses. Behavioural Processes. 1998;44:19–43. doi: 10.1016/s0376-6357(98)00029-1.

- Keller F, Schoenfeld W. Principles of psychology: A systematic text in the science of behavior. East Norwalk, CT: Appleton-Century-Crofts; 1950.

- Lattal K.A, St. Peter Pipkin C. Resurgence of previously reinforced responding: Research and application. The Behavior Analyst Today. 2009;10:254–265. Retrieved from http://www.baojournal.com/VOL-10/BAT%2010-2.pdf

- Lattal K.A, St. Peter Pipkin C, Escobar R. Operant extinction: Elimination and generation of behavior. In: Madden G, editor. Handbook of behavior analysis. Washington, DC: American Psychological Association; in press.

- Leitenberg H, Rawson R.A, Mulick J.A. Extinction and reinforcement of alternative behavior. Journal of Comparative and Physiological Psychology. 1975;88:640–652.

- Lieving G.A, Hagopian L.P, Long E.S, O'Connor S. Response class hierarchies and resurgence of severe problem behavior. Psychological Record. 2004;54:621–634.

- Lieving G.A, Lattal K.A. Recency, repeatability, and reinforcer retrenchment: An experimental analysis of resurgence. Journal of the Experimental Analysis of Behavior. 2003;80:217–233. doi: 10.1901/jeab.2003.80-217.

- Morgan D.L, Lee K. Extinction-induced response variability in humans. Psychological Record. 1996;46:145–160.

- Podlesnik C.A, Jimenez-Gomez C, Shahan T.A. Resurgence of alcohol seeking produced by discontinuing non-drug reinforcement as an animal model of drug relapse. Behavioural Pharmacology. 2006;17:369–374. doi: 10.1097/01.fbp.0000224385.09486.ba.

- Podlesnik C.A, Shahan T.A. Behavioral momentum and relapse of extinguished operant responding. Learning & Behavior. 2009;37:357–364. doi: 10.3758/LB.37.4.357.

- Podlesnik C.A, Shahan T.A. Extinction, relapse, and behavioral momentum. Behavioural Processes. 2010;84:400–411. doi: 10.1016/j.beproc.2010.02.001.

- Reed P, Morgan T.A. Resurgence of response sequences during extinction in rats shows a primacy effect. Journal of the Experimental Analysis of Behavior. 2006;86:307–315. doi: 10.1901/jeab.2006.20-05.

- Reed P, Morgan T.A. Resurgence of behavior during extinction depends on previous rate of response. Learning & Behavior. 2007;35:106–114. doi: 10.3758/bf03193045.

- Sánchez-Carrasco L, Nieto J. Resurgence of three-response sequences in rats. Revista Mexicana de Análisis de la Conducta. 2005;31:215–226.

- Shahan T.A, Lattal K.A. On the functions of the changeover delay. Journal of the Experimental Analysis of Behavior. 1998;69:141–160. doi: 10.1901/jeab.1998.69-141.

- Shull R.L. The response–reinforcement dependency in fixed-interval schedules of reinforcement. Journal of the Experimental Analysis of Behavior. 1970;14:55–60. doi: 10.1901/jeab.1970.14-55.

- Shull R.L, Guilkey M, Witty W. Changing the response unit from a single peck to a fixed number of pecks in fixed-interval schedules. Journal of the Experimental Analysis of Behavior. 1972;17:193–200. doi: 10.1901/jeab.1972.17-193.

- Wasserman E.A. Conditioning of within-trial patterns of key pecking in pigeons. Journal of the Experimental Analysis of Behavior. 1977;28:213–220. doi: 10.1901/jeab.1977.28-213.

- Winterbauer N.E, Bouton M.E. Mechanisms of resurgence of an extinguished instrumental behavior. Journal of Experimental Psychology: Animal Behavior Processes. 2010;36:343–353. doi: 10.1037/a0017365.

- Zeiler M.D. Fixed and variable schedules of response-independent reinforcement. Journal of the Experimental Analysis of Behavior. 1968;11:405–414. doi: 10.1901/jeab.1968.11-405.

- Zeiler M.D. Schedules of reinforcement: The controlling variables. In: Honig W.K, Staddon J.E.R, editors. Handbook of operant behavior. Englewood Cliffs, NJ: Prentice-Hall; 1977. pp. 201–232.