Abstract

Background

The reporting of serious adverse events (SAEs) is a requirement when conducting a clinical trial involving human subjects, necessary for the protection of the participants. The reporting process is a multi-step procedure, involving a number of individuals from initiation to final review, and must be completed in a timely fashion.

Purpose

The purpose of this project was to automate the adverse event reporting process, replacing paper-based processes with computer-based ones, so that the personnel effort and time required for serious adverse event reporting were reduced and the monitoring of reporting performance and adverse event characteristics was facilitated.

Methods

Use case analysis was employed to understand the reporting workflow and generate software requirements. The automation of the workflow was then implemented, employing computer databases, web-based forms, electronic signatures, and email communication.

Results

In the initial year (2007) of full deployment, 588 SAE reports were processed by the automated system, eSAEy™. The median time from initiation to Principal Investigator electronic signature was less than 2 days (mean 7 ± 0.7 days). This was a significant reduction from the prior paper-based system, which had a median time for signature of 24 days (mean of 45 ± 5.7 days). With eSAEy™, reports on adverse event characteristics (type, grade, etc.) were easily obtained and had consistent values based on standard terminologies.

Limitation

The automated system described was designed specifically for the work flow at Thomas Jefferson University. While the methodology for system design and the system requirements derived from common clinical trials adverse event reporting procedures are applicable in general, specific work flow details may not be relevant at other institutions.

Conclusion

The system facilitated analysis of individual investigator reporting performance, as well as the aggregation and analysis of the nature of reported adverse events.

Keywords: adverse event reporting, informatics, data management, data and safety monitoring

Introduction

Adverse events are commonly reported on paper forms. In the typical reporting work flow, the clinical research associate responsible for a study usually initiates the report. He/she enters information on the adverse event: specifically what the event was, when it occurred, when it was reported to the Principal Investigator, whether it is still ongoing, its severity, whether it can be attributed to the clinical trial, the action taken, whether it resulted in hospitalization, whether it was reported to external entities (commercial sponsor, Food & Drug Administration), whether the event is listed in the current study informed consent, and whether other study participants need to be informed of its occurrence.

The central data item – specifically what event occurred – should be precisely and uniformly expressed, using standard nomenclature that will make it possible to directly compare the occurrence with other reported events for the study. Also, precisely identifying the event is necessary for accurately assessing the severity of the occurrence, as the definitions of severity grades are often unique for each adverse event. Consulting manuals for event definitions and corresponding severity grade definitions can be a time consuming task for the originator of the report.

Once a report is initially generated, it must be reviewed and approved by the study’s Principal Investigator and by IRB reviewers, and possibly additional study medical monitors. For paper-based systems this involves either labor-intensive “hand deliveries” or the added delays of intramural mail deliveries. Also, with these systems, it is often difficult to establish exactly where a given report is in the review cycle. Furthermore, with paper-based systems, identifying investigators with overdue report reviews and retrospectively analyzing reporting work efficiency to determine work flow bottlenecks are difficult to accomplish and impose a large administrative burden on clinical research management.

Automating the process for the creation and processing of clinical reports can yield significant advantages over traditional, paper-based systems [1,2]. For the reporting of clinical trial serious adverse events, these advantages could include decreasing clinical staff effort, quicker study conduct review, and better protection of human research participants through more timely and precise reporting. Recognizing this potential area for improving clinical trials conduct, Thomas Jefferson University developed an electronic serious adverse event reporting system, named eSAEy™, to replace its paper-based system. This system has been deployed for all trials conducted at the institution, as well as trials overseen by the Jefferson IRB, but conducted at affiliated hospitals.

Methods

eSAEy™ Design Objectives

By 2004, a clinical trials management system computer application that had initially been developed within the Kimmel Cancer Center at Jefferson for cancer trials had been extended to manage all trials at the University [3,4]. Having all of the University’s clinical trials in one database repository provided a basis for developing a paper-less, completely electronic serious adverse events reporting application for all trials overseen by the Thomas Jefferson University Institutional Review Board. The objectives to be achieved by going “paper-less” were

1) more timely reporting, and

2) the ability to track the status of a report from generation, through signing, to final review.

In particular, identifying specific investigator non-compliance with reporting requirements was desired. Additional objectives were

3) to consistently have precise, standard specification of the adverse event and its associated severity grade, and

4) to facilitate analyses of reported adverse events.

The potential barriers to achieving these objectives included investigator and staff resistance to a new process, and the difficulty of implementing the complex work flow involved in the reporting process, which involves many individuals at different locations, and a sometimes iterative review process.

Work Flow Analysis

Payne and Graham [5] proposed a three-axis model of the life cycle of electronic clinical documents: the stages of the documents, the roles of those involved with the document, and the actions taken by those involved at each document stage. Their model includes the workflow rules that govern which roles can perform which actions at which stage of the document life cycle. They focused on the workflow involved in creating, reviewing, editing, and using electronic clinical documents in the healthcare delivery setting.

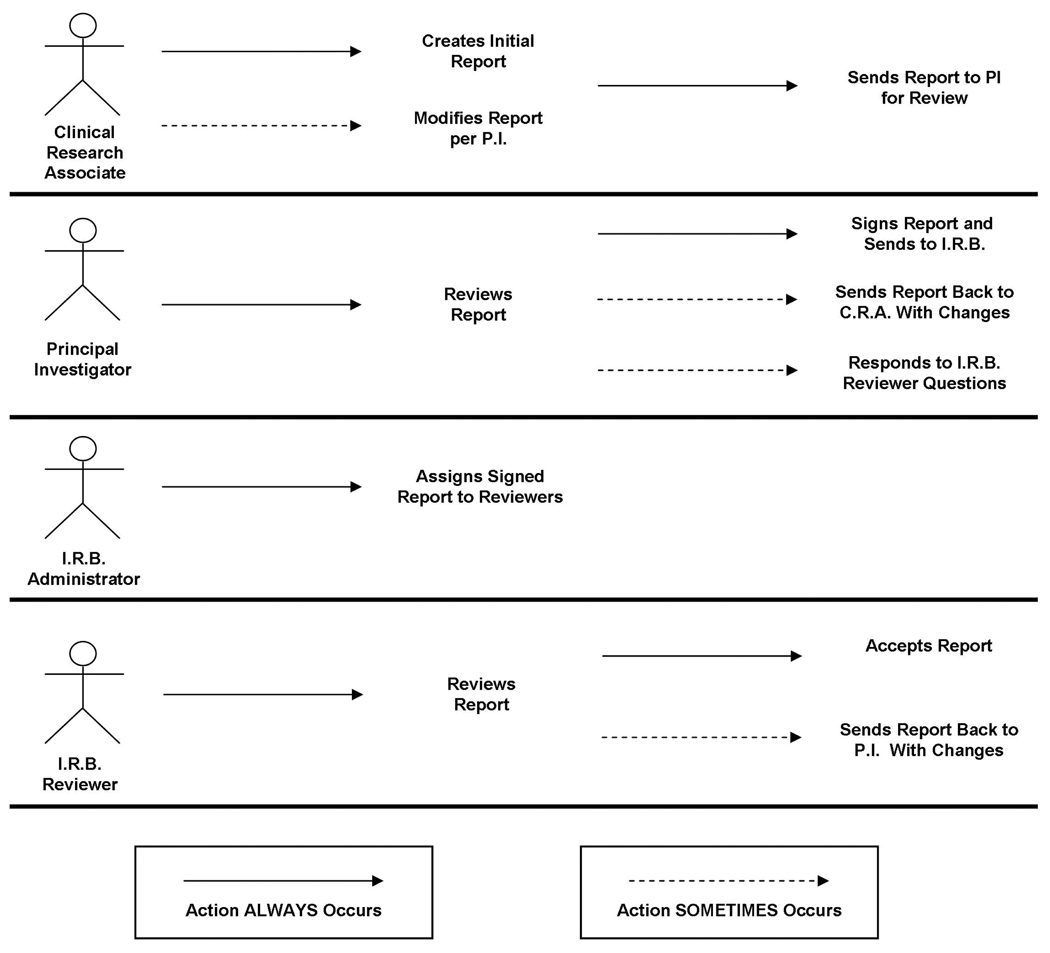

Similarly, the Use Case scenarios diagrammed below in Figure 1 show how the various categories of individuals (i.e., use case “roles”) are involved in the SAE reporting work flow. (These “stick figure” use case diagrams are a type of behavioral diagram used with the Unified Modeling Language™ (UML®) software engineering design tool [6]. These diagrams present a graphical overview of the functionality provided by a system in terms of individuals and their goals.) The SAE reporting work flow diagram, Figure 2, shows how the individuals in Figure 1 interact.

Figure 1.

Use Case Diagram for Serious Adverse Event reporting.

Figure 2.

Serious Adverse Event reporting workflow. (P.I. is the Principal Investigator, C.R.A. is the clinical research associate, and the OHR is the Office of Human Research, with responsibility to the IRB.)

Figure 1 shows that the SAE reporting work flow involves clinical research associates, study Principal Investigators, an Institutional Review Board administrator, and IRB reviewers. The work flow, diagrammatically shown in Figure 2, begins with report creation by the clinical research associate, as the “original author” of the report. (Occasionally the Principal Investigator or an attending physician is the “original author.”) The report then must be brought to the attention of the Principal Investigator, who must always review the entire report for accuracy. For example, the Principal Investigator must concur with the clinical research associate’s attribution for the adverse event (i.e., whether or not it was related to the clinical trial, and if so, to what degree – “possibly, probably, definitely”). The correct attribution may require information uniquely known by the study Principal Investigator. A need therefore exists for iterative review and modification between the clinical research associate and the Principal Investigator during report construction. A means for easily communicating comments and questions, as well as documenting and tracking these communications, is indicated.

It should be noted that just as the Principal Investigator occasionally is the initial originator of an AE report, the Principal Investigator can also choose to make changes to the report him/herself. This rarely occurs. Notice of the modification would be sent via email to the study’s clinical research associate.

When the Principal Investigator is satisfied that the report is accurate, he/she then signs the report. To automate this component of the work flow, an electronic signature compliant with F.D.A. regulations [7] is needed. The IRB administrator must know of the signed approval by the Principal Investigator, so that the report, as submitted by the Principal Investigator, can be assigned to IRB reviewers for further review. The IRB reviewers may have questions for the Principal Investigator about the information in the SAE report before deciding whether the study can continue. Once again, a means for easily communicating comments and questions, as well as documenting and tracking these communications is indicated.

The AE reporting work flow described here is specific to Thomas Jefferson University. However, using the same use case methodology, variations of the work flow that may occur at other institutions can readily be accommodated.
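The work flow described in this section can be read as a small rule table in the spirit of the three-axis model: for each report stage, which role may take which action, and which stage results. The following Python sketch is illustrative only. The stage, role, and action names are assumptions chosen for this example and are not taken from the eSAEy™ implementation; the less common paths (such as a Principal Investigator authoring or editing a report directly) are omitted.

```python
# Hypothetical rule table: (stage, role, action) -> next stage.
# Stage, role, and action names are illustrative assumptions.
RULES = {
    ("draft", "CRA", "submit_to_pi"): "pi_review",
    ("pi_review", "PI", "request_changes"): "draft",
    ("pi_review", "PI", "sign"): "signed",
    ("signed", "IRB_ADMIN", "assign_reviewers"): "irb_review",
    ("irb_review", "IRB_REVIEWER", "query_pi"): "irb_review",
    ("irb_review", "IRB_REVIEWER", "approve"): "reviewed",
}


def next_stage(stage: str, role: str, action: str) -> str:
    """Return the stage that follows, or raise if the rule table forbids it."""
    try:
        return RULES[(stage, role, action)]
    except KeyError:
        raise PermissionError(
            f"{role} may not {action} a report in stage {stage}"
        ) from None


def run(steps, stage="draft"):
    """Apply a sequence of (role, action) steps and return the final stage."""
    for role, action in steps:
        stage = next_stage(stage, role, action)
    return stage
```

Accommodating a different institution's work flow would then amount to editing the rule table rather than the code that applies it.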

System Implementation

The eSAEy™ system has a thin browser-based client. The server-side software is written in HTML and PHP, along with some client-side JavaScript code. eSAEy™ is deployed on a Linux server using Apache web server software. PostgreSQL databases are employed as the data repository. The University’s “campus key” username/password authentication, maintained by the IT and Human Resources departments, is used. This campus key authentication is used not only for application access, but also for electronic signatures. Application database authorization tables are used to grant appropriate functionality to users. Additional authorization to certain specific functionality is also provided. For example, only the individual noted as the trial Principal Investigator in the clinical trials repository database can sign an AE report for that study.
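The signing rule in the preceding paragraph combines two checks: the user must be the Principal Investigator of record for the study, and the user's credentials must be verified again at signing time. A minimal Python sketch of that logic follows. The function name, the `study_pi` mapping (standing in for the clinical trials repository), and the `authenticate` callable (standing in for the campus credential check) are all hypothetical; the production system is written in PHP and its actual interfaces are not described here.

```python
from typing import Callable, Mapping


def can_sign(
    username: str,
    password: str,
    study_id: str,
    study_pi: Mapping[str, str],
    authenticate: Callable[[str, str], bool],
) -> bool:
    """Allow signing only for the study's recorded PI, after re-authentication.

    study_pi maps a study identifier to the PI's username (a stand-in for the
    clinical trials repository); authenticate is a stand-in for the campus
    credential check. Both are hypothetical interfaces.
    """
    if study_pi.get(study_id) != username:
        return False  # not the PI of record for this study
    # Credentials are checked again at signing time, even within a session.
    return authenticate(username, password)
```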

Based on the work flow Use Cases, system requirements were developed that specified email communication between all users, but with application-based message input boxes and application-based email messaging, rather than user email client based communication. For example, when the Principal Investigator reviews a report and has questions for the clinical research associate who entered the report data, he/she does not have to go to their email program, but rather enters the question directly into a text box in the eSAEy application. The eSAEy database has all needed email addresses, including that of the authoring clinical research associate, and will send the email when the Principal Investigator presses the “send email” button.

Since report data is stored in database tables, and Adobe™ PDF reports can be generated “on the fly” when requested, the email messages to the Principal Investigators and reviewers do not contain attachments, but contain web links, uniform resource locators (URLs), to the web server-side application that generates the reports. This enhances system security: no patient data is in the email. The email recipient must log in to the linked program before they can access the generated report.
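The link-instead-of-attachment approach can be sketched with the Python standard library. This is an illustration under stated assumptions, not the eSAEy™ code: the base URL, the `report_id` query parameter, the subject line, and the function name are invented for the example.

```python
from email.message import EmailMessage
from urllib.parse import urlencode

# Hypothetical address of the server-side report generator.
BASE_URL = "https://esaey.example.edu/report"


def build_review_notice(sender: str, recipient: str, report_id: int) -> EmailMessage:
    """Build a notice that carries only a link to the report, never its data."""
    link = f"{BASE_URL}?{urlencode({'report_id': report_id})}"
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = "Adverse event report awaiting your review"
    msg.set_content(
        "A report is awaiting your review. Log in to view it:\n" + link + "\n"
    )
    return msg
```

Because the message body holds only an identifier, intercepting the email discloses no patient information; access control remains with the application's login.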

Audit trail information is maintained for each report, with time stamps indicating when report creation, the Principal Investigator’s signature, and the IRB reviewers’ evaluations occurred. No report database records are ever physically deleted. When a report is modified (as perhaps when the Principal Investigator instructs the clinical research associate to change an attribution), the original report’s “report history” database column is updated to “deleted,” and the modified report record’s history field notes “modification of report xxx,” where xxx is the database record identifier of the original report. Furthermore, all components of the email communications – from, to, subject, body – are stored in a database table, available for auditing.
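The no-physical-delete rule can be illustrated with a small sketch. Python's built-in `sqlite3` module stands in here for the PostgreSQL repository, and the table layout, column names, and function names are assumptions made for the example; only the "deleted" marker and the "modification of report xxx" note come from the description above.

```python
import sqlite3

# Hypothetical, simplified table; the real schema is not described in the text.
SCHEMA = """
CREATE TABLE report (
    id INTEGER PRIMARY KEY,
    attribution TEXT NOT NULL,
    history TEXT NOT NULL DEFAULT '',
    created_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
)
"""


def create_report(db: sqlite3.Connection, attribution: str) -> int:
    """Insert a new report row and return its record identifier."""
    cur = db.execute("INSERT INTO report (attribution) VALUES (?)", (attribution,))
    return cur.lastrowid


def modify_report(db: sqlite3.Connection, report_id: int, attribution: str) -> int:
    """Supersede a report without physically deleting the original row."""
    with db:  # one transaction: both changes commit, or neither does
        db.execute("UPDATE report SET history = 'deleted' WHERE id = ?", (report_id,))
        cur = db.execute(
            "INSERT INTO report (attribution, history) VALUES (?, ?)",
            (attribution, f"modification of report {report_id}"),
        )
    return cur.lastrowid
```

Following the history notes backward from the newest record reconstructs the full chain of revisions for an audit.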

Results

eSAEy™ was deployed in September 2006 for all University investigators and staff. Deployment to the cancer center’s community hospital “Cancer Network” investigators and staff did not begin until November 2007 because of authentication issues associated with providing “campus keys” to non-University employees, and with establishing a secure portal for off-campus access. This paper will therefore only report experiences with main campus users. Also, quantitative measures will be reported for the calendar year 2007. During the first four months of operation (September to December 2006) typical start-up problems were encountered – for example, some Principal Investigators not realizing that they must use eSAEy for SAE reporting instead of the previous paper system – that would skew quantitative measures on reports generated and reporting event intervals.

Problems Encountered

The creation of the eSAEy™ system involved analyzing the existing adverse event reporting work flow and then deciding where technology-assisted improvements could be made. As discussed in the Methods section above, work flow analysis entailed constructing use cases to inform the system design by identifying all the categories of individuals (“roles”) involved, and their activities. However, an accurate and complete definition of all use cases for a role was not always realizable on the first pass, and several iterations would sometimes be required. This caused problems with the system development process, as occasionally a newly discovered use case would demand significant design changes. One subset of work flow tasks that particularly needed detailed analysis was reporting requirements, which sometimes dictated data model specifications that were not apparent from the work flow activities.

The majority of the problems in the first full year of deployment were associated with obtaining the Principal Investigator’s review and approval. Clinical research associates had few problems using the web interface to enter the data elements of the reports, a process they had encountered previously, such as with the Jefferson web-based patient registration application. Clinical research associates appreciated having automatic email messaging replace hand-delivery of reports to the Principal Investigators for review.

The Principal Investigators, on the other hand, experienced a significant alteration in their adverse event reporting activities, which they had to learn and become accustomed to. Previously they were given a printed report to review and sign with a pen, if no modifications were needed. The clinical research associate may even have been present, having hand-delivered the report. With eSAEy™ the Principal Investigators have to respond to an email notice to invoke a web application, log in, and review on-screen displays of reports. To sign the report, the Principal Investigator must re-authenticate him/herself by re-entering their “campus key password.” The re-authentication was criticized by one Principal Investigator as unnecessarily slowing him down, since he had already logged in. In fact, the re-authentication step does slow down the electronic signature process, and intentionally so. The objective was to make sure that the report signing was a deliberate action, and not one that could be accidentally made. An improvement appreciated by investigators is the ability to review reports anywhere, anytime. As access to eSAEy™ is available via a secure hospital portal, the Principal Investigator can review and approve reports at any hour, from any location having an internet-connected computer.

The dependency on internet-connectivity does mean, however, that the ability to use eSAEy™ to report adverse events is lost if there is a loss of internet service. Fortunately no incident of serious network loss has happened since eSAEy™ was deployed. The formerly-used paper forms could always be used for temporary data logging should a prolonged system outage occur (the application is included in Jefferson’s Information Technology Disaster Recovery and Systems Failover plan).

Work Flow Metrics

During calendar year 2007, a total of 588 AE reports were filed using the eSAEy system. As can be seen from Figure 3, the median time interval between report creation and signing by the study Principal Investigator was less than 2 days. This is consistent with the desired reporting objectives of having SAE reports filed within 48 hours. 84% of the reports were signed by the Principal Investigator in less than a week. (Keep in mind that Principal Investigator signing may be delayed by a second iteration of the clinical research associate sending a report to the Principal Investigator for signature, should the Principal Investigator request changes on initial review.) However there was a significant number (94, or 16% of total) of reports for which the Principal Investigator took more than a week to sign. Further analysis showed that four Principal Investigators accounted for two-thirds of these egregiously overdue reports (61, or 65% of the delinquent reports, 10% of all reports).

Figure 3.

Interval between AE report creation and signing by the Principal Investigator.
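The proportions quoted for Principal Investigator signing delays follow directly from the three counts given in the text (588 reports, 94 signed after more than a week, 61 of those from four investigators). The short Python check below recomputes them; the variable and function names are ours.

```python
TOTAL_REPORTS = 588      # reports filed in 2007
OVER_A_WEEK = 94         # reports signed more than a week after creation
FROM_FOUR_PIS = 61       # overdue reports attributable to four investigators


def pct(part: int, whole: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


within_a_week = pct(TOTAL_REPORTS - OVER_A_WEEK, TOTAL_REPORTS)  # 84
overdue = pct(OVER_A_WEEK, TOTAL_REPORTS)                        # 16
four_pi_share_of_overdue = pct(FROM_FOUR_PIS, OVER_A_WEEK)       # 65
four_pi_share_of_all = pct(FROM_FOUR_PIS, TOTAL_REPORTS)         # 10
```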

Upon the Principal Investigator signing a report, a notice is sent via automatic email to the “SAE Administrator.” Among other responsibilities, this individual assigns a signed report to a set of one to three reviewers in various departments, who review the report information. These reviewers receive notification via email of their review assignments and, by clicking on a web link, gain access to a web page that allows them to automatically send inquiries about the report to the Principal Investigator, or approve the report as written. Mining the eSAEy™ database for 2007 showed a median time between Principal Investigator’s signature and completed reviews of only 7 days.

Achievement of Objectives

As discussed above in the Methods section, four objectives motivated and guided the development of the system: 1) more timely reporting; 2) the ability to track the status of a report from generation, through signing, to final review; 3) to consistently have precise, standard specification of the adverse event and its associated severity grade; and 4) to facilitate analyses of reported adverse events.

Assessing the improvement in report timeliness, the first objective, is difficult as the prior paper system was not conducive to obtaining work flow quantitative measures. Nevertheless, paper reports from 2005 and 2006, prior to the September deployment of eSAEy™, were reviewed and the interval between event onset and Principal Investigator report approval was noted. We compared the time intervals in days from report creation to PI signature of the report before and after the eSAEy™ system was adopted. For this analysis a random sample of 50 reports from 2006 and 49 reports from 2005 was used as the pre-eSAEy™ data set. All the reports from year 2007 (n=588) were used for the post-eSAEy™ data set. Neither set of time intervals was normally distributed. Both had a number of high-valued outliers. The mean ± SEM time interval for the pre-eSAEy™ data set was 45 ± 5.7 days. The median interval for the pre-eSAEy™ data set was 24 days and the inter-quartile range was 52. The mean ± SEM time interval for the post-eSAEy™ data set was 7 ± 0.7 days. The median interval for the post-eSAEy™ data set was 1 day and the inter-quartile range was 4. The two samples were compared with a Mann-Whitney Test and the post-eSAEy™ time intervals were significantly less than the pre-eSAEy™ time intervals (P<0.0001). (See Figure 4.)

Figure 4.

The pre- and post- eSAEy™ mean time required for Principal Investigator approval.
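For readers unfamiliar with the Mann-Whitney test used in the comparison above, the following Python sketch shows how the U statistic and an approximate two-sided p-value are obtained. It is a teaching example only: the interval values are invented and are not the study data, the normal approximation omits the tie correction, and the software actually used for the published analysis is not stated in the text.

```python
import math


def mann_whitney_u(x, y):
    """U statistic for x: pairs with x_i > y_j, counting ties as one half."""
    return sum(
        1.0 if xi > yj else 0.5 if xi == yj else 0.0
        for xi in x
        for yj in y
    )


def two_sided_p(u, n_x, n_y):
    """Two-sided p-value from the normal approximation (no tie correction)."""
    mean = n_x * n_y / 2
    sd = math.sqrt(n_x * n_y * (n_x + n_y + 1) / 12)
    z = (u - mean) / sd
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical intervals in days, invented for illustration only.
pre = [12, 24, 30, 45, 60, 90, 120]
post = [0, 1, 1, 2, 3, 5, 40]

u_post = mann_whitney_u(post, pre)
p_value = two_sided_p(u_post, len(post), len(pre))
```

Because the test works on the ordering of values rather than their magnitudes, it suits skewed interval data with high-valued outliers, which is the situation described for both data sets.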

This dramatic improvement in the timeliness of Principal Investigator report approval is partly a result of the instantaneous email communication replacing hand-delivery or intramural mail. But the achievement of the second objective, the ability to track the current work flow status of a report, also has contributed to more timely reporting: any Principal Investigator with outstanding reports receives a daily email reminder, and a list of Principal Investigators with unsigned reports, which includes the number of outstanding reports and their event onset date range, is emailed daily to appropriate clinical trial departmental administrators (e.g., Office of Human Research, Clinical Research Management Office). While a few investigators, as noted above, failed to follow the report review guidelines, the system was able to identify their non-compliance.
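The daily administrator list described above (each Principal Investigator with unsigned reports, the number outstanding, and the range of event onset dates) is a straightforward aggregation. A minimal Python sketch follows; the `Report` record and its field names are hypothetical, and the real system would draw this from its database rather than an in-memory list.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Report:
    pi: str                      # Principal Investigator's username
    onset: date                  # adverse event onset date
    signed_on: Optional[date]    # None until the PI signs


def unsigned_summary(reports):
    """Map each PI with unsigned reports to (count, earliest, latest onset)."""
    onsets = defaultdict(list)
    for r in reports:
        if r.signed_on is None:
            onsets[r.pi].append(r.onset)
    return {pi: (len(d), min(d), max(d)) for pi, d in sorted(onsets.items())}
```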

The third objective for precise, uniform reporting has been accomplished through the application’s user interface, which provides pull-down menus when possible (as when selecting the AE or corresponding severity grade), as opposed to free text entry, and data entry validation algorithms. Accuracy, however, cannot be guaranteed by the software: the IRB reviewers still must judge whether the correct adverse event, grade, and attribution were chosen by the investigator.

The fourth objective was met straightforwardly by this automated system. Reports with systematic adverse event descriptions are easily compiled from the eSAEy™ database, which is linked to the Jefferson clinical trials database. Previously, these metrics would have required a good deal of time and effort of sorting through paper reports to obtain the desired information. eSAEy™ makes this easy: generating a report on serious adverse events reported for a study is a simple matter of a menu report selection followed by a study identifier selection.

In addition to meeting our objectives, which focused on developing a more responsive system than possible with paper, eSAEy™ was designed using current software engineering best practices. The system requirements flowed directly from modeling and use case analysis of the reporting work flow. The use of standard terminology for AE definitions and severity grades assures semantic interoperability with other systems that use accepted standard terminologies; this offers eSAEy™ the possibility of integration with external electronic data capture systems. Semantic interoperability is considered essential for all computer systems used in basic and clinical research by many investigators and by agencies such as the National Institutes of Health, and is evident in the core concepts guiding computer tool development [8]. Also, attention was given to regulatory and legal considerations in our design process, which meant the inclusion of extensive, detailed logging of all system events, and the requirement that no data, once entered, was ever physically deleted. This ability to obtain complete audit trails is necessary not only for adverse event reporting, but in many other clinical applications.

Discussion

Other Studies and Systems

Prior studies of clinical trials adverse event reporting have focused primarily on the monitoring and review infrastructure, rather than on the operational aspects of the reporting process. The published results [9] of a 2000 expert panel on clinical research safety had recommendations for regulatory agencies, IRBs, data monitoring committees, sponsors, and investigators. These recommendations included harmonization of nomenclature and reporting requirements, defining IRB responsibilities, and increased communication of information from sponsors. Of relevance here, a need for better aggregation and summarization of adverse event data was cited. A more recent study of clinical trials was critical of the systems for mandated data collection and reporting, finding them unnecessarily bureaucratic to the point of impeding clinical research [10]. While this study proposed changes to the underlying monitoring and review infrastructure, transferring local IRB responsibilities to centrally sanctioned investigator networks, the potential for automating the tedious and expensive manual processes commonly encountered in the conduct of clinical trials was noted.

Two systems for assisting the SAE reporting process review among remote sites participating in joint trials have been described. The first such system [11], for the Adolescent Trials Network for HIV/AIDS Interventions (ATN), addresses certain aspects of clinical trial management, including a web-based query and notification system for clinical trial participant management, adverse events, and regulatory/IRB requirements. This system facilitates communication using automated emails between site coordinators at the various network institutions and the investigators and regulatory personnel for these trials. Coordinators had web templates for entry of certain basic adverse event information, although no application assistance was provided to the coordinator to correctly choose and describe the adverse event or its severity, and needed information (documentation of whether the event is listed in the informed consent, for example) is not included. The system also did not address the work flow of adverse event reporting, but focused only on notification of an event occurrence.

The Harvard School of Public Health IRB and Information Technology Department have created another system for assisting the SAE reporting process review among remote sites, the “Serious Adverse Events Forum” (SAEF) [12]. This is a web-based content management system that facilitates review of AE reports by authorized individuals. The trials are being conducted with collaborators in disease-endemic countries. These trials typically have numerous reports, often with sparse event descriptions. SAEF is linked to a database that provides a complete copy of protocol information in addition to AE reports, including study design, accrual status, and consent forms. The objective of SAEF is to facilitate study safety assessments of these trials being conducted in developing countries. The web-based forum makes review and communication easier and has greatly reduced the turnaround time for resolution of AE reporting from these remote sites.

The National Cancer Institute’s “cancer Biomedical Informatics Grid” (caBIG®) initiative [13] has the goals of accelerating cancer research and improving cancer patient outcomes by providing an infrastructure for sharing data and informatics tools. One caBIG® application under development is the “cancer Adverse Event Reporting System” (caAERS) [14], which will capture AEs, provide notification alerts, interface with local clinical trial databases, utilize controlled vocabularies and coding systems, and generate reports for submission to external agencies. The current version does not support electronic signatures. A non-production caAERS prototype was delivered in 2006, and the initial production deployments are expected in early 2009.

Some commercial clinical trials management system products (e.g., Velos Inc.’s eResearch) have a comprehensive approach to adverse event reporting, like eSAEy™ and caAERS, focusing on automating the operational aspects to improve reporting timeliness and accuracy, while reducing staff effort. The commonly encountered decision for institutions as to whether to develop system software, such as for automated adverse event reporting, or purchase a vendor solution, can often be resolved by considering local resources and needs:

Are in-house software development resources available for application development and maintenance? If not, a vendor solution is needed.

How much funding is available for application development or purchase? While using in-house resources is not necessarily less costly than purchasing, line item budget allocations for systems purchases may be more difficult to obtain than having existing staff resources focused on a particular application development project.

Is there an existing legacy system involved? If the legacy system will continue to be used, must the new system interface with it? Can the legacy system functionality be expanded to provide the desired services?

Are there important and unique institutional requirements that must be satisfied? What is the cost and timeline for vendor system customizations?

Thomas Jefferson University chose to develop an automated adverse event reporting system in-house, using the staff of the cancer center’s Informatics Shared Resource to design and develop the application. External funding (see Acknowledgements) was obtained for the project. The cancer center team had previously shown the needed experience and expertise to undertake this project by its development of a clinical trials management system for all trials at Thomas Jefferson University. The group was well positioned to interface this legacy application to eSAEy™. The application design was tailored to the Jefferson work flow, and included provisions for adverse event reporting from external hospitals (members of the Jefferson Cancer Network) that use the Jefferson IRB for clinical trials.

Conclusion

The eSAEy system has been in routine use for reporting adverse events at our University for over a year. While there had been some initial resistance by some individuals who experienced significant changes to their work flow, no major problems have been encountered. The automated system has made obtaining metrics on reporting conduct and report content a straightforward process of retrieving database information. Work flow metrics so obtained for 2007 show an acceptable turn-around time for report signing and review, with some exceptions. The ease of aggregating and analyzing the nature of reported adverse events has also been demonstrated. The data obtained can serve as a baseline for future analyses.

Acknowledgements

This project was supported in part by NIH grants # NIH/NCRR S07 RR18208-02 (“Human Subjects Research Enhancements Program”) and NCI P30 CA 56036-08.

References

- 1. Tang PC, LaRosa MP, Gorden SM. Use of computer-based records, completeness of documentation, and appropriateness of documented clinical decisions. J Am Med Inform Assoc. 1999 May–Jun;6(3):245–251. doi: 10.1136/jamia.1999.0060245.

- 2. Haller G, Myles PS, Stoelwinder J, Langley M, Anderson H, McNeil J. Integrating incident reporting into an electronic patient record system. J Am Med Inform Assoc. 2007 Mar–Apr;14(2):175–181. doi: 10.1197/jamia.M2196. Epub 2007 Jan 9.

- 3. London JW, Mervis S. 10th World Congress on Health and Medical Informatics. London: 2001. Cancer Clinical Trials Treatment Management Application.

- 4. London JW, Mervis S, Kalf GF. 11th World Congress on Medical Informatics. San Francisco: 2004. Tools for the Performance of Clinical Trials Research.

- 5. Payne TH, Graham G. Managing the life cycle of electronic clinical documents. J Am Med Inform Assoc. 2006 Jul–Aug;13(4):438–445. doi: 10.1197/jamia.M1988. Epub 2006 Apr 18.

- 6. Object Management Group. Unified Modeling Language. [Accessed February 4, 2009]; Available at: http://www.uml.org/

- 7. Title 21 Code of Federal Regulations (21 CFR Part 11) for Electronic Records and Electronic Signatures.

- 8. caBIG Core Concepts. [Accessed February 4, 2009]; Available at: https://cabig.nci.nih.gov/overview/caBIG_core_concepts.

- 9. Morse MA, Califf RM, Sugarman J. Monitoring and ensuring safety during clinical research. JAMA. 2001 Mar 7;285(9):1201–1205. doi: 10.1001/jama.285.9.1201.

- 10. Califf RM. Clinical trials bureaucracy: unintended consequences of well-intentioned policy. Clin Trials. 2006;3(6):496–502. doi: 10.1177/1740774506073173.

- 11. Mitchell R, Shah M, Ahmad S, Rogers AS, Ellenberg JH. Adolescent Medicine Trials Network for HIV/AIDS Interventions. A unified web-based query and notification system (QNS) for subject management, adverse events, regulatory, and IRB components of clinical trials. Clin Trials. 2005;2(1):61–71. doi: 10.1191/1740774505cn68oa.

- 12. Schlauch M, Smith B, Eastwood D, Putney S, Houman F. SAEF Serious Adverse Events Forum -- A New Approach to IRB Review of Safety Reporting. Annual Human Research Protection Programs Conference; Washington, DC; 2006. Poster abstract.

- 13. caBIG® Community Website. [Accessed January 16, 2008]; Available at: https://cabig.nci.nih.gov/

- 14. Cancer Adverse Event Reporting System (caAERS) [Accessed on January 16, 2008]; Available at: https://cabig.nci.nih.gov/tools/caAERS.