Abstract

Image processing of fundus images can support the early detection of diabetic retinopathy. Several recent studies have proposed morphological filters for extracting hemorrhages from fundus images; however, extraction of hemorrhages using template matching with templates of various shapes has not been reported. In our study, we applied hue saturation value (HSV) brightness correction and contrast-limited adaptive histogram equalization (CLAHE) to fundus images. Candidate hemorrhages were then extracted by template matching with normalized cross-correlation. Region growing subsequently reconstructed the shape of each hemorrhage, which enabled us to calculate its size. To reduce the number of false positives (FPs), compactness and the aspect ratios of bounding boxes were used. We also used the mean intensity under a 5 × 5 kernel centered on the hemorrhage and a foveal filter as additional FP reduction methods. In addition, we analyzed the causes of FPs and false negatives (FNs) in the detection of retinal hemorrhages. By combining templates of various shapes, our program achieved a sensitivity of 85% at 4.0 FPs per image for hemorrhages larger than 100 pixels. Our results may help clinicians diagnose diabetic retinopathy, and the program might be a useful tool for the early detection of diabetic retinopathy progression, especially in telemedicine.

Key words: Template matching, hemorrhage, fundus image

Introduction

Diabetic retinopathy (DR) is a major public health issue. In South Korea, a recent survey indicated that approximately four million patients suffer from DR. Among them, 100,000 patients had a visual acuity worse than 0.1.1 The severity of DR is closely related to the duration of diabetes: 17% of diabetic subjects manifested DR 5 years after the diagnosis of diabetes, and 97% did so 15 years after diagnosis.2 Early detection of DR, tight blood sugar control, and proper treatment in the proliferative stage of DR can prevent blindness in diabetic patients. Therefore, the early detection of DR and proper management of diabetes are important for preserving vision. There are two kinds of fundoscopic findings in patients with DR. One is dark lesions, which include hemorrhages and microaneurysms; the other is bright lesions, such as exudates and cotton wool spots.3 This paper focuses on the detection of hemorrhages because hemorrhages are an important diagnostic criterion for determining the severity of DR. Currently, this assessment is made manually by an ophthalmologist, who detects the hemorrhages and estimates their size. Automating it would help manage DR more efficiently and accurately.

Many researchers have attempted to automate the detection of hemorrhages. Spencer et al. and Frame et al. suggested methods using a top-hat transformation and a matched filter.4,5 Niemeijer et al.6 developed this approach further and introduced a hybrid method. Fleming et al.7 proposed a method for the detection of microaneurysms using a watershed transform in fluorescein angiograms. Wavelet transformation combined with template matching has also been proposed for the detection of microaneurysms.8 Hatanaka et al.9 used density analysis to detect hemorrhages.

Although the approach based on the top-hat transformation and a matched filter seems promising, it uses a fixed kernel size, which restricts detection to hemorrhages of certain sizes and shapes. In contrast, template matching can detect hemorrhages of various sizes and shapes, because the template can be varied. The density analysis by Hatanaka et al. extracts the optic nerve head and then finds hemorrhage candidates; however, its sensitivity is low compared with that of morphological filtering. Furthermore, in the density analysis, hemorrhages connected to blood vessels must be processed twice,9 and the methods based on the watershed transform and wavelet transformation are restricted to small microaneurysms.7,8 Template matching, by contrast, does not require separate processing of blood vessels and is not restricted to microaneurysms.

Another difficulty is that hemorrhages usually have no fixed size or shape, and hemorrhages clustered in a small area are especially difficult to count. In this research, our pre-screening program measures the overlap between each detected region and the hemorrhage region of interest (ROI) drawn by an ophthalmologist, and uses it to automatically classify the results as true positives (TPs), false positives (FPs), or false negatives (FNs). In addition, the images are preprocessed and the hemorrhage candidates are filtered. Small hemorrhages (<100 pixels), which are difficult to discriminate from microaneurysms, are also extracted.

The DR screening program is designed to support ophthalmologists, as well as general physicians working in districts without an ophthalmologist.10 Previously, to assess the severity of DR manually, an ophthalmologist had to measure hemorrhages pixel by pixel. Our program automates this process using template matching. Fifty-two fundus images segmented by an ophthalmologist were used to evaluate its effectiveness.

Methods

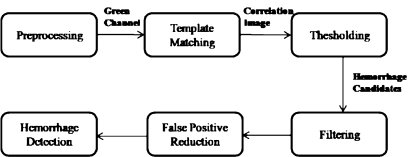

Extraction of hemorrhages from the fundus image was performed as follows. The RGB image first underwent preprocessing. Hemorrhage candidates were then extracted using template matching, after which region growing recovered the shape of each hemorrhage. Finally, FPs were eliminated from the hemorrhage candidates using 2D filtering. This flow is shown in Figure 1.

Fig. 1.

Flowchart of the proposed method.

Figure 2a shows the original fundus image, on which the program performs preprocessing. Figure 2b is the correlation image obtained by template matching on the preprocessed image. Figure 2c shows the hemorrhage candidates on the image preprocessed with hue saturation value (HSV) brightness correction and contrast-limited adaptive histogram equalization (CLAHE). Figure 2d shows the shapes of the hemorrhages obtained by region growing. To identify the true hemorrhages, thresholding and filtering were applied to the hemorrhage candidates shown in Figure 2c, d, which eliminates FPs from the candidates.

Fig. 2.

a Original image. b Result of NCC template matching. c Candidate hemorrhage. d Result after the adaptive seed region growing segmentation.

Preprocessing

An RGB image is composed of red, green, and blue channels. The green channel represents red structures well and has better contrast than the other channels. The red channel is relatively bright, and the vascular structure of the choroid is visible in it; the retinal vessels are also visible but with less contrast than in the green channel. The blue channel is noisy and contains little information.11 Therefore, the green channel was used for preprocessing.

Preprocessing consists of two steps: making the brightness of the image uniform and enhancing the contrast between hemorrhages and the background. To correct non-uniform illumination, the DR screening program can apply either a 3D Gaussian algorithm or HSV brightness correction to the RGB image. To enhance contrast, the program can use either the CLAHE algorithm or a general contrast enhancement algorithm.12 In this paper, HSV brightness correction and CLAHE were applied sequentially for preprocessing.

Brightness Correction Using Gaussian Smoothing (3D Gaussian)

The brightness must be corrected uniformly from the center to the periphery of the fundus image. Variations in illumination can be removed by smoothing the image with a large-scale median filter (15 × 15, σ = 10) and subtracting the filtered image from the original. This leaves only features smaller than the filter scale, such as vessels and small hemorrhages.5,6 The images were then normalized to pixel values between 0 and 255. However, this method can destroy features larger than the filter, so our program used HSV brightness correction instead of the 3D Gaussian method.
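The background-subtraction alternative described above (not the method kept in the final program) can be sketched in plain Python. This is a minimal sketch, assuming a green-channel image stored as a list of rows of integer gray levels; it uses a plain median filter with edge replication, does not model the σ parameter quoted in the text, and the function names are hypothetical.

```python
import statistics

def median_filter(img, k):
    """k x k median filter (k odd); borders are handled by edge replication."""
    h, w = len(img), len(img[0])
    r = k // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-r, r + 1)
                for dx in range(-r, r + 1)
            ]
            out[y][x] = statistics.median(window)
    return out

def normalize_0_255(img):
    """Linearly rescale values to 0..255 (all zeros if the image is flat)."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:
        return [[0] * len(row) for row in img]
    scale = 255.0 / (hi - lo)
    return [[round((v - lo) * scale) for v in row] for row in img]

def subtract_background(green, k=15):
    """Estimate the slowly varying background with a large median filter,
    subtract it, and renormalize; only features smaller than k survive."""
    background = median_filter(green, k)
    diff = [[g - b for g, b in zip(g_row, b_row)]
            for g_row, b_row in zip(green, background)]
    return normalize_0_255(diff)
```

On a flat image with a single dark pixel, the median filter ignores the outlier, so after subtraction and renormalization that pixel maps to 0 and the background to 255. The same property is what makes the method destroy structures larger than the window, as noted above.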

Brightness Correction Using HSV Brightness

Brightness correction was performed in HSV space. First, the brightness (value) component V of the HSV representation was calculated for each pixel. The brightness correction value Bc(i,j) is given by the following equation:9

[Equation 1: brightness correction value Bc(i,j)]

where V(i,j) is the brightness value of the HSV space.

Next, the RGB values were adjusted by Bc(i,j). A fundus image typically shows non-uniform background illumination: the region of the optic disk is bright, and the brightness decreases spherically toward the outer regions. To improve the performance of our system, this irregular illumination was corrected by the method above.
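Because Equation 1 is not reproduced here, the sketch below only illustrates the mechanics of applying a brightness correction in HSV space with Python's standard `colorsys` module. It assumes that Bc(i,j) is an additive offset to V (hue and saturation are kept), and it uses a hypothetical stand-in for Bc: the global mean of V minus a local box mean of V. This stand-in is our assumption, not the paper's Equation 1, and the function names are hypothetical.

```python
import colorsys

def value_channel(image):
    """HSV value V for each pixel of an image of (r, g, b) floats in [0, 1]."""
    return [[colorsys.rgb_to_hsv(*px)[2] for px in row] for row in image]

def hypothetical_bc(v, radius):
    """Hypothetical stand-in for Bc(i,j): global mean of V minus the mean of V
    in a (2*radius+1)^2 box around (i, j). NOT the paper's Equation 1."""
    h, w = len(v), len(v[0])
    global_mean = sum(map(sum, v)) / (h * w)
    bc = []
    for i in range(h):
        row = []
        for j in range(w):
            box = [v[y][x]
                   for y in range(max(i - radius, 0), min(i + radius + 1, h))
                   for x in range(max(j - radius, 0), min(j + radius + 1, w))]
            row.append(global_mean - sum(box) / len(box))
        bc.append(row)
    return bc

def apply_brightness_correction(image, bc):
    """Add bc[i][j] to the V component of each pixel (clipped to [0, 1]),
    keep H and S, and convert back to RGB."""
    out = []
    for row, bc_row in zip(image, bc):
        new_row = []
        for (r, g, b), offset in zip(row, bc_row):
            h, s, v = colorsys.rgb_to_hsv(r, g, b)
            v = min(max(v + offset, 0.0), 1.0)
            new_row.append(colorsys.hsv_to_rgb(h, s, v))
        out.append(new_row)
    return out
```

Working in HSV lets the correction change brightness without shifting hue, which is why a bright disk region and a dim periphery can be brought to a similar level while the reddish color of hemorrhages is preserved.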

Contrast-Limited Adaptive Histogram Equalization

A fundus photograph is rectangular, whereas the imaged eye is round, so the fundus image contains a dark outside region surrounding the eye. In general histogram equalization, the pixels of this dark outside region are included in the histogram, so pixels near this region receive lower values than expected. CLAHE operates on small regions, called tiles, whereas the general algorithm works on the entire image.12 Because the effect of extremely dark and bright regions is restricted to the local tile, a uniform image can be obtained. CLAHE was configured with 8 × 8 tiles and a clip limit of 8. An example of the CLAHE result appears in Figure 2c, d.
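The core of CLAHE, clipping each tile's histogram and redistributing the excess before equalizing, can be sketched in plain Python. This is a simplified sketch, assuming 8-bit integer gray levels and a clip limit expressed as a multiple of the mean bin count (a convention used by some libraries). Unlike full CLAHE, it maps each tile with its own lookup table and omits the bilinear interpolation between neighboring tiles, so tile seams may be visible. The function names and the `tile` parameter (tile side in pixels) are hypothetical.

```python
def clipped_equalization_lut(pixels, clip_limit, levels=256):
    """Lookup table for histogram equalization with a clipped histogram.
    clip_limit is a multiple of the mean bin count (len(pixels) / levels)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    n = len(pixels)
    clip = clip_limit * n / levels
    excess = sum(max(c - clip, 0) for c in hist)
    # Clip every bin and spread the clipped excess uniformly over all bins.
    clipped = [min(c, clip) + excess / levels for c in hist]
    lut, cdf = [], 0.0
    for c in clipped:
        cdf += c
        lut.append(round((levels - 1) * cdf / n))
    return lut

def tiled_clahe(img, tile, clip_limit):
    """Per-tile clipped equalization (no inter-tile interpolation)."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for ty in range(0, h, tile):
        for tx in range(0, w, tile):
            ys = range(ty, min(ty + tile, h))
            xs = range(tx, min(tx + tile, w))
            lut = clipped_equalization_lut([img[y][x] for y in ys for x in xs],
                                           clip_limit)
            for y in ys:
                for x in xs:
                    out[y][x] = lut[img[y][x]]
    return out
```

A low clip limit caps how much a dominant gray level can be stretched, which is what keeps large uniform areas (such as the dark surround) from dominating the mapping; the tiling confines each histogram to its local neighborhood.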

Template Matching

Template matching was performed with circular templates using normalized cross-correlation (NCC). Hemorrhage candidates were extracted using templates of various shapes and sizes, because a single template could not cover the entire set of candidates.

For hemorrhage detection, NCC template matching is more effective than other measures such as the sum of squared differences, because NCC is insensitive to linear changes in local brightness and contrast. NCC is expressed by the following equation:

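$$\mathrm{NCC}=\frac{\sum_{x,y}\bigl(b(x,y)-b^{*}\bigr)\bigl(t(x,y)-t^{*}\bigr)}{\sqrt{\sum_{x,y}\bigl(b(x,y)-b^{*}\bigr)^{2}\,\sum_{x,y}\bigl(t(x,y)-t^{*}\bigr)^{2}}}\qquad(2)$$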

where b(x, y) is the sub-image under the template, t(x, y) is the template, b* and t* are the mean gray levels of b and t, respectively, and the sums run over all pixel positions (x, y) of the template.
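Equation 2 can be sketched directly in plain Python. This is a minimal sketch, assuming gray-level images stored as lists of rows; `ncc` and `correlation_map` are hypothetical names, the map is computed only at positions where the template fits entirely inside the image, and a flat window (zero variance) is assigned a correlation of 0 by our own convention.

```python
import math

def ncc(window, template):
    """Normalized cross-correlation (Equation 2) of two equally sized 2D lists."""
    b = [v for row in window for v in row]
    t = [v for row in template for v in row]
    b_mean = sum(b) / len(b)
    t_mean = sum(t) / len(t)
    num = sum((bv - b_mean) * (tv - t_mean) for bv, tv in zip(b, t))
    den = math.sqrt(sum((bv - b_mean) ** 2 for bv in b)
                    * sum((tv - t_mean) ** 2 for tv in t))
    return num / den if den > 0 else 0.0  # flat window: correlation undefined

def correlation_map(image, template):
    """NCC at every position where the template fits inside the image.
    Entry [y][x] corresponds to the window whose top-left corner is (y, x)."""
    th, tw = len(template), len(template[0])
    h, w = len(image), len(image[0])
    return [
        [ncc([row[x:x + tw] for row in image[y:y + th]], template)
         for x in range(w - tw + 1)]
        for y in range(h - th + 1)
    ]
```

Because both the window and the template are mean-centered and divided by their spread, a window of the form 3·t + 50 scores exactly like t itself, and an inverted window scores −1. This invariance is what makes NCC robust to the residual illumination differences across a fundus image.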

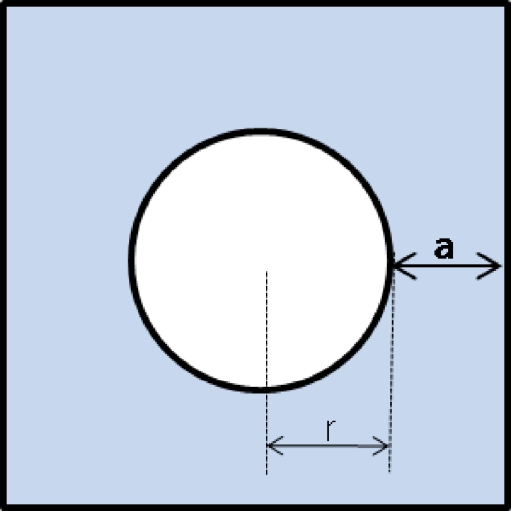

The shape of the template is defined by the radius of the circle (r) and the outside width (a), as shown in Figure 3. The larger the radius of the circle, the larger the hemorrhages detected. The longer the outside width of the template, the more background is included; isolated circular hemorrhages are therefore easily detected, but clustered hemorrhages can be missed. In contrast, a short outside width makes it easy to detect clustered hemorrhages. In this study, the (r, a) values of the four templates were (6, 12), (10, 12), (7, 2), and (15, 2). The former two templates discriminate isolated spots more clearly, and the latter two are more effective for clustered spots. Various circle radii are used to detect spots of various sizes.

Fig. 3.

Shape of template.
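A template with parameters (r, a) might be built as follows. This sketch assumes a square template of side 2(r + a) + 1 whose inner disk of radius r is bright (1) and whose surrounding band of width a is dark (0). With this polarity, a dark hemorrhage on a brighter background gives an NCC close to −1, consistent with the search for values near −1 described below. The exact geometry and polarity of the templates in Figure 3 are our assumptions, and `make_template`, `TEMPLATE_PARAMS`, and `TEMPLATES` are hypothetical names.

```python
def make_template(r, a):
    """Square template: pixels within distance r of the center are 1 (disk);
    the remaining band of width a around the disk is 0 (background)."""
    size = 2 * (r + a) + 1
    c = r + a  # index of the center row/column
    return [[1 if (y - c) ** 2 + (x - c) ** 2 <= r * r else 0
             for x in range(size)]
            for y in range(size)]

# (r, a) values of the four templates used in the study.
TEMPLATE_PARAMS = [(6, 12), (10, 12), (7, 2), (15, 2)]
TEMPLATES = {params: make_template(*params) for params in TEMPLATE_PARAMS}
```

A wide band a places more background in the template, so an isolated spot stands out clearly, whereas a narrow band lets neighboring spots in a cluster each fit their own template position.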

Figure 2b shows the correlation image. The darker a pixel in this image, the more the local shape around it differs from the shape of the template; a value of −1 corresponds to the inverse of the template. The screening program therefore searches for locations whose shape is opposite to that of the template, and appropriate thresholds are applied to the correlation image to find the candidates.

The correlation image takes values between −1 and 1. First, the pixels whose correlation values are closest to −1 are extracted; then, pixels with progressively larger correlation values are extracted in turn. The number of pixels extracted in this way is the selected threshold. Because the sensitivity of a template depends on its shape, this threshold was selected for each template; in this study, 150 and 300 pixels were used. The (15, 2) template is less sensitive than the other templates because its circle radius is large and its outside width is small, so more pixels (300) must be selected for it. The other templates used 150 pixels as the threshold (to adjust for bias, the threshold value selected from the histograms was decreased by 3).
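The threshold selection above can be read as keeping a fixed number of the lowest-correlation pixels per template. The sketch below follows that reading, which is our interpretation: it sorts the correlation map in ascending order and keeps the first N pixels, with N = 300 for the (15, 2) template and 150 otherwise. The bias adjustment of 3 mentioned in the text is not modeled, and the function and dictionary names are hypothetical.

```python
PIXEL_BUDGET = {(15, 2): 300}  # pixels to keep for specific templates
DEFAULT_BUDGET = 150           # pixels to keep for all other templates

def budget_for(template_params):
    """Number of pixels to extract for a template given as (r, a)."""
    return PIXEL_BUDGET.get(template_params, DEFAULT_BUDGET)

def select_candidate_pixels(corr, n_pixels):
    """Positions of the n_pixels correlation-map entries closest to -1,
    plus the largest correlation value kept (the effective threshold)."""
    ranked = sorted((v, y, x)
                    for y, row in enumerate(corr)
                    for x, v in enumerate(row))
    kept = ranked[:n_pixels]
    threshold = kept[-1][0] if kept else None
    return [(y, x) for _, y, x in kept], threshold
```

Fixing the pixel count rather than a correlation value adapts the effective threshold to each image's correlation histogram, which is one way to read why the less sensitive (15, 2) template is given a larger budget.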

Region Growing Segmentation

After template matching, a hemorrhage candidate carries only its center point and presumed size; its exact shape is missing and can be recovered using region growing segmentation. We considered two region growing methods: adaptive seed region growing segmentation (ASRGS) and region growing segmentation using the local threshold (RGSLT). We used RGSLT at first, but because RGSLT requires the user to set the kernel size, ASRGS was used in the final program.

Region Growing Segmentation Using the Local Threshold

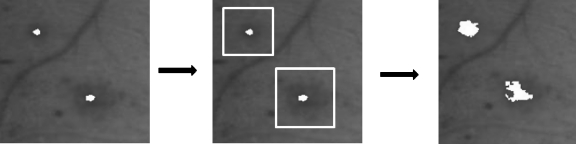

First, a square patch of 30 × 30 to 60 × 60 pixels centered on the hemorrhage is placed on the image, and Otsu's threshold is applied within the patch.13 After the gray-level patch is reduced to a binary image, the hemorrhage can be extracted. This flow is shown in Figure 4. Because the patch must be larger than the hemorrhage, its size must be chosen carefully. Although this method requires the user to set the kernel size, it segments small hemorrhages effectively, and the hemorrhage region does not diverge.

Fig. 4.

Flowchart of the region growing segmentation using the local threshold.
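The RGSLT steps can be sketched as follows: cut a square patch around the candidate center, compute Otsu's threshold on it, and keep the dark pixels connected to the center. This is a minimal sketch under our own assumptions: 8-bit integer gray levels, hemorrhage pixels at or below the threshold, and 8-connectivity for the final connected component (the text does not specify how the hemorrhage is extracted from the binary patch). The function names are hypothetical.

```python
from collections import deque

def otsu_threshold(pixels, levels=256):
    """Otsu's method: threshold t maximizing the between-class variance,
    with class 0 = values <= t and class 1 = values > t."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * c for i, c in enumerate(hist))
    w0, sum0 = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (m0 - m1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t

def segment_local_otsu(image, center, half):
    """Dark region containing `center` inside a (2*half+1)^2 patch,
    using Otsu's threshold computed on that patch only."""
    h, w = len(image), len(image[0])
    cy, cx = center
    y0, y1 = max(cy - half, 0), min(cy + half + 1, h)
    x0, x1 = max(cx - half, 0), min(cx + half + 1, w)
    t = otsu_threshold([image[y][x] for y in range(y0, y1) for x in range(x0, x1)])
    if image[cy][cx] > t:
        return set()  # the center is not dark: no hemorrhage found
    region, queue = {center}, deque([center])
    while queue:
        y, x = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if (y0 <= ny < y1 and x0 <= nx < x1
                        and (ny, nx) not in region and image[ny][nx] <= t):
                    region.add((ny, nx))
                    queue.append((ny, nx))
    return region
```

Because the threshold is computed only inside the patch, the region cannot grow beyond the patch boundary, which matches the text's remark that this method does not diverge; the price is that the patch must be chosen larger than the hemorrhage.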

Adaptive Seed Region Growing Segmentation

An ASRGS algorithm was used for hemorrhage detection. ASRGS identifies similar pixels within a region to determine the location of its boundary. Two adjacent pixels are considered similar if they satisfy a criterion on a property such as gray level or color. In ASRGS, adjacent pixels within the same region are assumed to have fairly homogeneous grayscale properties.

Consider each pixel of the image in raster order. A pixel p at coordinates (x, y) has eight neighbors that share an edge or a corner with it, at coordinates (x ± 1, y), (x, y ± 1), (x ± 1, y ± 1), and (x ± 1, y ∓ 1). Consider an adjacent pixel pi, where i denotes the horizontal, vertical, or diagonal direction relative to p. The first step of the algorithm was to calculate the difference in intensity between p and pi. If the difference was less than or equal to the threshold value T,

[Equation for the threshold T]

where “KM” is the kernel mean value, the subscript is the kernel size, and “d” is the distance between p and pi, then pi was added to the region and became the new p. The process was repeated until every pixel considered for merging, together with the original pixel, formed a region. When the distance between p and pi exceeded 10 pixels, the threshold value was decreased by 1 every 3 pixels to prevent the hemorrhage region from diverging. The result of this method is shown in Figure 2d.
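The growth rule can be sketched as a breadth-first search over 8-neighbors. Because the equation for T is not reproduced here, this sketch takes a caller-supplied base threshold in place of the kernel-mean term. It also interprets d as the distance from the seed (adjacent pixels are always within √2 of each other, so the decay rule only makes sense for distance from the seed) and lowers the threshold by 1 for every started 3 pixels beyond 10 pixels. These choices and the function name are assumptions.

```python
import math
from collections import deque

def grow_region(image, seed, base_threshold, decay_start=10, decay_step=3):
    """Grow a region from `seed` over 8-connected neighbors.

    A neighbor pi of the current pixel p joins the region when
    |I(p) - I(pi)| <= T, where T = base_threshold reduced by 1 for every
    started `decay_step` pixels that pi lies beyond `decay_start` pixels
    from the seed (to keep the region from diverging)."""
    h, w = len(image), len(image[0])
    sy, sx = seed
    region = {seed}
    queue = deque([seed])
    while queue:
        y, x = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if (dy == 0 and dx == 0) or not (0 <= ny < h and 0 <= nx < w):
                    continue
                if (ny, nx) in region:
                    continue
                d = math.hypot(ny - sy, nx - sx)
                t = base_threshold
                if d > decay_start:
                    t -= math.ceil((d - decay_start) / decay_step)
                if abs(image[ny][nx] - image[y][x]) <= t:
                    region.add((ny, nx))  # pi joins and becomes a new p
                    queue.append((ny, nx))
    return region
```

Comparing each neighbor with the current pixel rather than with the seed lets the region follow gradual intensity changes inside a hemorrhage, and the distance-based decay bounds how far such gradual drift can carry it. On a uniform 1 × 30 row with a base threshold of 1, for example, growth stops after 14 pixels, where the decayed threshold becomes negative.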

False Positive Reduction

False positive reduction is performed twice: once after template matching and once after region growing. Because template matching yields the same result as a circular morphological filter, FP reduction does not require as many object features as other algorithms do. To classify each candidate object as either a red lesion or a non-red lesion, six features are used. The first set of filters uses the shape, size, and compactness of the hemorrhages; these are features 1–4 in the list below. The second set of filters uses the 5 × 5 kernel mean value and the foveal filter, that is, features 5 and 6. Because the mean intensities under the kernels are calculated on a reference image, the second set of filters requires that reference image.

1. Area.
2. The aspect ratio r1 = w/h, in which w is the width and h is the height of the bounding box of the hemorrhage.
3. Compactness.
4. The aspect ratio r2 = l1/l2, in which l1 is the long-axis length and l2 is the short-axis length.
5. The mean intensity under the 5 × 5 kernel centered on the hemorrhage.
6. Three mean intensities under the 5 × 5, 40 × 40, and 70 × 70 kernels (foveal filter).

Area

To eliminate FPs from the hemorrhage candidates, the area feature is used twice. Before region growing, hemorrhage candidates are selected according to their size: candidates smaller than the area threshold are excluded, which eliminates small spots wrongly selected by template matching. After region growing recovers the exact shape of each hemorrhage, an area threshold is applied again to remove FPs.

Compactness and the Aspect Ratio

Compactness represents how circular the shape is. The equation of compactness is as follows:

$$C=\frac{p^{2}}{4\pi A}\qquad(3)$$

in which “p” is the perimeter, that is, the length of the boundary, and “A” is the area of the spot. Candidates after template matching were accepted if their compactness was between 0.4 and 2.4. Candidates after region growing were accepted if their compactness was <9, the aspect ratio r1 was between 0.5 and 2.5, and the aspect ratio r2 was lower than 3. This filtering removes elongated spots that originate from vessels.
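Features 1 to 4 and the two acceptance rules above can be sketched for a region given as a set of (row, col) pixels. This is a sketch under our own assumptions: the perimeter is estimated as the number of pixel edges shared with the background, the long and short axes l1 and l2 are taken as the square roots of the eigenvalues of the region's second-order central moments, and compactness uses the p²/(4πA) form. Because the paper's perimeter estimator is not specified, the 0.4 to 2.4 and <9 thresholds may need recalibration with this estimator. The function names are hypothetical.

```python
import math

def region_features(pixels):
    """Area, bounding-box ratio r1 = w/h, axis ratio r2 = l1/l2, and
    compactness p^2 / (4*pi*A) of a set of (row, col) pixels."""
    area = len(pixels)
    rows = [y for y, _ in pixels]
    cols = [x for _, x in pixels]
    height = max(rows) - min(rows) + 1
    width = max(cols) - min(cols) + 1
    # Perimeter estimate: number of pixel edges between region and background.
    perimeter = sum(1 for y, x in pixels
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1))
                    if (y + dy, x + dx) not in pixels)
    # Principal axes from the second-order central moments.
    my, mx = sum(rows) / area, sum(cols) / area
    syy = sum((y - my) ** 2 for y in rows) / area
    sxx = sum((x - mx) ** 2 for x in cols) / area
    sxy = sum((y - my) * (x - mx) for y, x in pixels) / area
    half_diff = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    lam1 = (sxx + syy) / 2 + half_diff
    lam2 = (sxx + syy) / 2 - half_diff
    if lam1 <= 1e-12:
        r2 = 1.0          # single pixel: no elongation
    elif lam2 <= 1e-12:
        r2 = math.inf     # one-pixel-wide line: infinitely elongated
    else:
        r2 = math.sqrt(lam1 / lam2)
    return {"area": area, "r1": width / height, "r2": r2,
            "compactness": perimeter ** 2 / (4 * math.pi * area)}

def accept_after_template_matching(f):
    return 0.4 <= f["compactness"] <= 2.4

def accept_after_region_growing(f):
    return f["compactness"] < 9 and 0.5 <= f["r1"] <= 2.5 and f["r2"] < 3
```

For a 3 × 3 square this gives r1 = r2 = 1 and a compactness of 4/π, so it passes both rules, whereas a one-pixel-wide line such as a vessel segment fails on r1 and r2.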

Mean Intensity Under the 5 × 5 Kernel

This filter is applied to hemorrhage candidates after template matching. If the mean intensity under the 5 × 5 kernel of a hemorrhage candidate lies outside the range 20–140, the candidate is rejected. This filter removes bright variations near the optic disk that are wrongly recognized as hemorrhages. Because CLAHE evens out the brightness of a fundus image, these variations near the optic disk cannot be distinguished by the kernel mean intensity on the CLAHE image. Therefore, a fundus image processed by the general contrast enhancement algorithm is used as the reference image.
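The rule can be sketched as below, where `reference` stands for the image processed by the general contrast enhancement algorithm. The sketch assumes the 20–140 range is inclusive and that the kernel is truncated at the image border; the function names are hypothetical.

```python
def kernel_mean(img, cy, cx, size):
    """Mean gray level in a size x size window centered at (cy, cx),
    truncated at the image border."""
    h, w = len(img), len(img[0])
    y0, x0 = cy - size // 2, cx - size // 2
    vals = [img[y][x]
            for y in range(max(y0, 0), min(y0 + size, h))
            for x in range(max(x0, 0), min(x0 + size, w))]
    return sum(vals) / len(vals)

def passes_mean_intensity_filter(reference, center, low=20, high=140):
    """Keep a candidate only if its 5 x 5 mean on the reference image
    lies within [low, high]."""
    cy, cx = center
    return low <= kernel_mean(reference, cy, cx, 5) <= high
```

A candidate sitting on the bright optic disk has a high 5 × 5 mean on the reference image and is rejected, even though CLAHE would have flattened that brightness difference.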

Foveal Filter

In Figure 5, the mean intensity under kernel 1 represents the intensity of the hemorrhage, while the mean intensity under kernel 3 represents the intensity of the background. Hence, the difference in intensity between kernels 1 and 3 is essentially the difference between the hemorrhage and the background; if this difference is not large, the object is not considered a hemorrhage. However, a large hemorrhage is considered a hemorrhage even if its difference from the background is not large. This is called kernel compensation: if the difference in intensity between kernels 2 and 3 is above a set value, the object is considered a hemorrhage even though the difference between kernels 1 and 3 is small. Judging an object with these three kernels is referred to as the foveal filter.14 The sizes of kernels 1, 2, and 3 are 5 × 5, 40 × 40, and 70 × 70, respectively, and the difference thresholds between kernels 1 and 3 and between kernels 2 and 3 are 18 and 3, respectively. The mean intensity of each kernel is calculated from the reference image processed by the general contrast enhancement algorithm.

Fig. 5.

Foveal filter.
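The foveal filter can be sketched as a small decision function on the three kernel means. This sketch assumes that hemorrhages are darker than the background, so the differences are taken as the kernel 3 mean minus the kernel 1 or kernel 2 mean, that both thresholds are inclusive, and that kernels are truncated at the image border; the function names are hypothetical.

```python
def kernel_mean(img, cy, cx, size):
    """Mean gray level in a size x size window centered at (cy, cx),
    truncated at the image border."""
    h, w = len(img), len(img[0])
    y0, x0 = cy - size // 2, cx - size // 2
    vals = [img[y][x]
            for y in range(max(y0, 0), min(y0 + size, h))
            for x in range(max(x0, 0), min(x0 + size, w))]
    return sum(vals) / len(vals)

def foveal_decision(m1, m2, m3, min_d13=18, min_d23=3):
    """m1, m2, m3: mean intensities under kernels 1, 2, and 3."""
    d13 = m3 - m1  # hemorrhage versus background
    d23 = m3 - m2  # kernel compensation for large hemorrhages
    return d13 >= min_d13 or d23 >= min_d23

def foveal_filter(reference, center, sizes=(5, 40, 70)):
    """Apply the three-kernel test at `center` on the reference image."""
    cy, cx = center
    m1, m2, m3 = (kernel_mean(reference, cy, cx, s) for s in sizes)
    return foveal_decision(m1, m2, m3)
```

The second test captures the kernel-compensation idea: a large, faint hemorrhage darkens the whole 40 × 40 kernel relative to the 70 × 70 surround, so it can pass even when its center contrast is below 18.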

AND Operation of Two Channels

Information from the hue channel of the HSV space can be used together with the information from the green channel by applying an AND operation to the two channels. After hemorrhage candidates are extracted from both the green and hue channels, hemorrhages are extracted at locations where the correlation values of both channels are simultaneously above their respective thresholds. Because this method produces very few FPs, adding its result to the existing detections with an “OR” operation can increase the number of TPs without increasing the number of FPs.
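A sketch of the AND/OR combination, with detections represented as sets of (row, col) pixels. It assumes each channel's score map is oriented so that larger means more hemorrhage-like, as the text's "above a certain threshold" implies; for a raw NCC map in which hemorrhages approach −1, the negated map would be passed instead. The threshold values and function names are hypothetical.

```python
def and_channel_candidates(green_score, hue_score, green_thr, hue_thr):
    """Pixels where both channel scores exceed their thresholds (AND)."""
    return {(y, x)
            for y, row in enumerate(green_score)
            for x, g in enumerate(row)
            if g > green_thr and hue_score[y][x] > hue_thr}

def add_by_or(existing, extra):
    """Union (OR) of existing detections with the AND-channel detections."""
    return existing | extra
```

Requiring agreement between two channels is what keeps the AND result nearly free of FPs, which in turn is why OR-ing it into the existing detections can only add TPs at little cost.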

Results

Fifty-two fundus images were used for testing; their sizes were 1,536 × 1,024, 1,600 × 1,216, 1,158 × 948, and 1,278 × 948 pixels, all with 24-bit color. An ophthalmologist manually segmented the hemorrhage areas. If a region detected by the program overlapped an area segmented by the ophthalmologist, the detected region was considered a valid hemorrhage. Many papers report specificity together with sensitivity.3 Whether the patient in a fundus image has DR can be decided from how many hemorrhages the image contains, and in that case sensitivity and specificity can be reported together. However, our paper concentrates on counting large hemorrhages and measuring their area. Therefore, the sensitivity and the number of FPs per image were used to judge the performance of the system, and the free-response receiver operating characteristic (FROC) curve was used to express the relationship between them.15 In receiver operating characteristic analysis, the area under the curve can serve as a decision criterion because the maximum value of both sensitivity and specificity is 1. However, because the number of FPs per image is not bounded, the area under the curve cannot be calculated in the same way in FROC analysis.
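The overlap rule and the two reported measures can be sketched as follows, with each detection and each manually segmented ROI represented as a set of (row, col) pixels. This sketch assumes that TPs are counted per ROI (an ROI overlapped by at least one detection), so that TP + FN equals the number of ROIs and sensitivity is TP/(TP + FN) as in the tables; a detection that overlaps no ROI is an FP. The function names are hypothetical.

```python
def score_image(detections, rois):
    """Count (TP, FP, FN) for one image.
    detections, rois: lists of sets of (row, col) pixels."""
    tp = sum(1 for roi in rois if any(roi & det for det in detections))
    fn = len(rois) - tp
    fp = sum(1 for det in detections if not any(det & roi for roi in rois))
    return tp, fp, fn

def summarize(per_image_counts):
    """Sensitivity TP/(TP+FN) and mean FPs per image over several images."""
    tp = sum(c[0] for c in per_image_counts)
    fp = sum(c[1] for c in per_image_counts)
    fn = sum(c[2] for c in per_image_counts)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    return sensitivity, fp / len(per_image_counts)
```

Any pixel overlap counts as a hit, so a detection that covers only part of a hemorrhage is still a TP; the TP area and FN area columns of the tables capture how much of the hemorrhage area is actually recovered.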

In Tables 1, 2, 3, and 4, the TP area is the area of the hemorrhages correctly found by the screening program, calculated by summing the areas of the TP hemorrhages; the FN area is the total area of the FN hemorrhages. Both are given as percentages of the total hemorrhage area, so they sum to 100%.

Table 1.

Results of Preprocessing Methods

| Preprocessing | TP per image | FP per image | FN per image | TP area (%) | FN area (%) | Sensitivity |
|---|---|---|---|---|---|---|
| HSV Bc and CLAHE | 12.8 | 5.5 | 6.0 | 72 | 28 | 0.68 |
| 3D Gaussian | 11.6 | 8.1 | 7.2 | 66 | 34 | 0.62 |
| No preprocessing | 11.6 | 7.4 | 7.2 | 68 | 32 | 0.62 |

Table 2.

Results of the 5 × 5 Mean Kernel Filter

| Stage | TP per image | FP per image | FN per image | TP area (%) | FN area (%) | Sensitivity |
|---|---|---|---|---|---|---|
| Before | 13.2 | 8.5 | 5.6 | 73 | 27 | 0.70 |
| After | 12.8 | 5.5 | 6.0 | 72 | 28 | 0.68 |

Table 3.

Results of the Foveal Filter

| Stage | TP per image | FP per image | FN per image | TP area (%) | FN area (%) | Sensitivity |
|---|---|---|---|---|---|---|
| Before | 13.2 | 14.6 | 5.6 | 73 | 27 | 0.70 |
| Intermediate results | 11.8 | 3.9 | 7.0 | 60 | 40 | 0.63 |
| Foveal filter result | 12.8 | 5.5 | 6.0 | 72 | 28 | 0.68 |

Table 4.

Results of Threshold Variations in Template Matching

| Case | TP per image | FP per image | FN per image | TP area (%) | FN area (%) | Sensitivity |
|---|---|---|---|---|---|---|
| Case 1 | 12.5 | 6.6 | 6.3 | 69 | 31 | 0.66 |
| Case 2 | 12.5 | 6.8 | 6.3 | 69 | 31 | 0.66 |
| Original case | 12.8 | 5.5 | 6.0 | 72 | 28 | 0.68 |

Preprocessing Performance

The general contrast enhancement algorithm was compared with the CLAHE algorithm combined with HSV brightness correction. The general contrast enhancement algorithm produced an irregular image because of the optic disk and the dark outside area. CLAHE solved this problem, and, as shown in Table 1, the combination of HSV brightness correction and CLAHE yielded fewer FPs per image (5.5) than the other preprocessing options (8.1 and 7.4).

Filter Performance

Results of the 5 × 5 kernel filter are shown in Table 2. This filter reduced the number of FPs per image by 3.0 (from 8.5 to 5.5), while the sensitivity decreased slightly from 0.70 to 0.68.

Results of the foveal filter are shown in Table 3.

“Before” is the test result before application of the foveal filter, and “Foveal filter result” is the test result after its application. To recover large hemorrhages that had become FNs, the kernel compensation technique was used; “Intermediate results” is the result before kernel compensation was applied. “Intermediate results” showed 3.9 FPs per image, 10.7 fewer than “Before”; however, the TP area decreased by 13 percentage points (from 73% to 60%). After kernel compensation, the TP area recovered to 72%.

Different Applications of Thresholds in Template Matching

In Table 4, case 1 is the setting in which the system classifies the fundus images into three groups according to the amount of hemorrhage and applies a different threshold to each group. Case 2 is the setting in which the system calculates the mean of the thresholds from the 52 correlation images and uses this mean as the threshold. Case 1 shows slightly fewer FPs per image than case 2 (6.6 vs. 6.8). However, the original case shows fewer FPs per image and a higher sensitivity than either of the other cases.

Performance Evaluation

The results shown in “Preprocessing Performance,” “Filter Performance,” and “Different Applications of Thresholds in Template Matching” reveal that the system achieved a sensitivity of 68% at 5.5 FPs per image, with a TP area of 72%. Because the hemorrhages that the system missed included small ones, which contribute little area, the TP area was large relative to the sensitivity. Fleming et al.7 reported a sensitivity lower than 65% for the detection of individual microaneurysms, with more than four FPs per image.

However, a subjective evaluation, in which we compared the detected hemorrhages with the manually segmented hemorrhage ROIs, showed better results than these figures suggest. Because our system focuses on detecting large hemorrhages and calculating their area, small faint hemorrhages can be ignored. Although the human eye has little difficulty identifying most small hemorrhages, disagreements between human experts are inevitable, especially regarding the fainter dots.6 Thus, some small hemorrhages may be missing from the pre-segmented ROIs, which lowers the measured performance of our system. When it was difficult to judge whether an object was a hemorrhage, the ambiguous object was simply judged to be a hemorrhage. When these ambiguous small hemorrhages were excluded from the FPs, the system achieved a sensitivity of 82% at 2.6 FPs per image. When the small hemorrhages missing from the pre-segmented ROIs were excluded from the FNs, the sensitivity rose to 96%. In other words, most large hemorrhages were detected by our system. The mean and standard deviation of the area of microaneurysms have been reported as 69 ± 40 pixels.7 To account for these two complicated cases in the performance evaluation, hemorrhages smaller than 100 pixels were ignored.

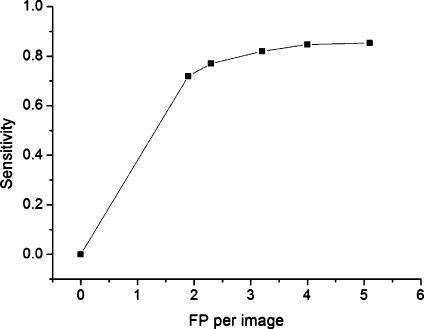

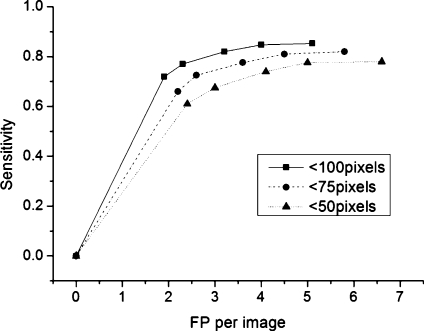

Table 5 shows the results after small hemorrhages were ignored. With an area threshold of 7, the sensitivity increased by 17 percentage points (from 0.68 to 0.85) and the number of FPs per image decreased from 5.5 to 4.0. This area threshold is used in the area filtering after template matching, and the FROC curve is obtained by varying it. Figure 6 shows the FROC curve when candidates smaller than 100 pixels were excluded.

Table 5.

Results of Hemorrhage Extraction by Varying Area Thresholds

| Case | Area threshold | TP per image | FP per image | FN per image | Sensitivity |
|---|---|---|---|---|---|
| 1 | 4 | 12.9 | 5.1 | 2.2 | 0.85 |
| 2 | 7 | 12.8 | 4.0 | 2.3 | 0.85 |
| 3 | 10 | 12.1 | 3.2 | 2.7 | 0.82 |
| 4 | 15 | 10.8 | 2.3 | 3.2 | 0.77 |
| 5 | 20 | 9.6 | 1.9 | 3.7 | 0.72 |

Fig. 6.

Free response receiver operating characteristic (FROC) curve from the data of Table 5.
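The FROC points in Figure 6 can be recomputed from Table 5, since each row gives the per-image TP, FP, and FN counts and sensitivity = TP/(TP + FN). This short sketch only reproduces the table arithmetic; `TABLE5` and `froc_points` are hypothetical names.

```python
# (area threshold, TP per image, FP per image, FN per image) from Table 5.
TABLE5 = [
    (4, 12.9, 5.1, 2.2),
    (7, 12.8, 4.0, 2.3),
    (10, 12.1, 3.2, 2.7),
    (15, 10.8, 2.3, 3.2),
    (20, 9.6, 1.9, 3.7),
]

def froc_points(rows):
    """(FPs per image, sensitivity) pairs, sorted by FPs per image."""
    return sorted((fp, tp / (tp + fn)) for _, tp, fp, fn in rows)
```

Rounded to two decimals, the computed sensitivities match the Sensitivity column of Table 5, and raising the area threshold moves each point toward fewer FPs per image at the cost of sensitivity.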

The size below which hemorrhages were considered difficult to judge was set to 50, 75, and 100 pixels, and the FROC curve for each setting is drawn in Figure 7. The figure shows that hemorrhages between 50 and 100 pixels in size degraded the performance of the system the most. For hemorrhages larger than 100 pixels, the system achieved 85% sensitivity at 4.0 FPs per image.

Fig. 7.

FROC curves with different area thresholds (50, 75, and 100 pixels).

Examples of FP and FN Hemorrhages

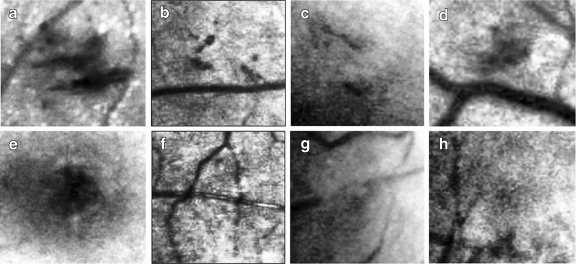

Figure 8 shows examples of FP and FN hemorrhages on the contrast-enhanced green channel image.

Fig. 8.

Missed and wrongly detected hemorrhages. a–d Examples of FNs. e–h Examples of FPs.

Figure 8a–d shows examples of FNs. In Figure 8a, a hemorrhage was missed because it had an elongated, vessel-like shape. In Figure 8b, a hemorrhage was missed because it was small and clustered near a vessel. In Figure 8c, a hemorrhage was missed because it was small and elongated and had low contrast. In Figure 8d, a hemorrhage was missed because it was small and located between vessels.

Figure 8e–h shows examples of FPs. In Figure 8e, the fovea was misclassified as a hemorrhage. In Figure 8f, a crossing point of vessels was misclassified. In Figure 8g, a spot located in the gap of a disconnected vessel was misclassified. In Figure 8h, a faint, wide spot was misclassified.

Some spots had an RGB color similar to that of the background but became visible when the green channel was separated and its contrast enhanced. Because the green channel is closely related to the light reflected by blood, a spot revealed in the green channel may well be a hemorrhage.

Discussion and Conclusions

Several factors make it difficult to extract hemorrhages from a fundus image. First, the intensity of the background is non-uniform. Second, the contrast between the background and hemorrhages is often low. Third, hemorrhages have irregular shapes. Although morphological filters have been proposed for extracting hemorrhages from fundus images, no research has been reported on extracting hemorrhages by template matching with templates of various shapes. In our study, we analyzed the effectiveness and performance of this method.

We found that six of the eight FP and FN examples were related to vessels. Sinthanayothin et al.,16 who reported methods for detecting microaneurysms and other red lesions, located all retinal vessels and excluded them from further analysis. Moreover, the method of Fleming et al.7 can detect a microaneurysm that occurs on a vessel, for example, if it is much darker or wider than the vessel. Although our system can distinguish vessels from circular spots, further study of vessels would help eliminate vessel-related FPs.

The FP examples include the fovea. Applying a fovea detection algorithm could eliminate this FP. The area around the optic disk was cleared by rejecting hemorrhage candidates with high pixel values, as explained in “Mean Intensity Under the 5 × 5 Kernel,” which eliminated FPs near the optic disk. The anatomical structure of the fundus can therefore provide useful information for hemorrhage detection.

In many studies, system performance is judged by sensitivity and specificity. Such systems decide whether a fundus image shows DR based on the detection of microaneurysms or other lesions. Because DR has many grades, the method for deriving a DR diagnosis from the detection of several lesions must be explained,17 but we had difficulty finding a paper that specifies this method exactly. Because this method is generally not described, it may be difficult to compare the sensitivity and specificity of different studies.3

In many studies, although the per-image detection rate exceeds 80%, the per-lesion detection rate is below 80%.3,7,15 In other words, many hemorrhages are missed or wrongly detected. We must calculate the size and number of each hemorrhage in fundus images, because the purpose of this research is to measure changes in the status of patients with DR. Because the per-lesion detection rate is therefore important, we analyzed it in depth. Our study showed that template matching achieved a sensitivity of 85% for large hemorrhages at 4.0 FPs per image, in images containing about 15 such hemorrhages on average. This is an acceptable result for automating the calculation of hemorrhage size.

Acknowledgments

This work was supported by a research grant from Seoul industrial-educational cooperation project (grant ST090841) and the original project of National Cancer Center, Korea (grant 0810122).

References

- 1. The 4th Korea National Health & Nutrition Examination Survey. Ministry of Welfare and Family Affairs, South Korea; 2008.

- 2. Klein R, Klein B, Moss S, Davis M, DeMets D. The Wisconsin epidemiologic study of diabetic retinopathy. Prevalence and risk of diabetic retinopathy when age at diagnosis is less than 30 years. Arch Ophthalmol. 1984;102(4):520–526. doi: 10.1001/archopht.1984.01040030398010.

- 3. Patton N, Aslam TM, MacGillivray T, Deary IJ, Dhillon B, Eikelboom RH, Yogesan K, Constable IJ. Retinal image analysis: concepts, applications and potential. Prog Retin Eye Res. 2006;25:99–127. doi: 10.1016/j.preteyeres.2005.07.001.

- 4. Spencer T, Olson J, McHardy K, Sharp P, Forrester J. An image-processing strategy for the segmentation and quantification in fluorescein angiograms of the ocular fundus. Comput Biomed Res. 1996;29:284–302. doi: 10.1006/cbmr.1996.0021.

- 5. Frame A, Undrill P, Cree M, Olson J, McHardy K, Sharp P, Forrester J. A comparison of computer based classification methods applied to the detection of microaneurysms in ophthalmic fluorescein angiograms. Comput Biol Med. 1998;28:225–231. doi: 10.1016/S0010-4825(98)00011-0.

- 6. Niemeijer M, van Ginneken B, Staal J, Suttorp-Schulten MS, Abramoff MD. Automatic detection of red lesions in digital color fundus photographs. IEEE Trans Med Imaging. 2005;24(5):584–592. doi: 10.1109/TMI.2005.843738.

- 7. Fleming AD, Philip S, Goatman KA, et al. Automated microaneurysm detection using local contrast normalization and local vessel detection. IEEE Trans Med Imaging. 2006;25(9):1223–1232. doi: 10.1109/TMI.2006.879953.

- 8. Quellec G, Lamard M, Josselin PM, Cazuguel G, Cochener B, Roux C. Optimal wavelet transform for the detection of microaneurysms in retina photographs. IEEE Trans Med Imaging. 2008;27(9):1230–1241. doi: 10.1109/TMI.2008.920619.

- 9. Hatanaka Y, Nakagawa T, Hayashi Y, Hara T, Fujita H. Improvement of automated detection method of hemorrhages in fundus images. In: IEEE EMBS Conference; Vancouver, Canada; 2008. pp. 5429–5432.

- 10. Abramoff MD, Viergever MA, Niemeijer M, Russell SR, Suttorp-Schulten MSA, van Ginneken B. Evaluation of a system for automatic detection of diabetic retinopathy from color fundus photographs in a large population of patients with diabetes. Diabetes Care. 2008;31(2):193–198. doi: 10.2337/dc07-1312.

- 11. Suri JS, Wilson DL, Laxminarayan S. Handbook of Biomedical Image Analysis. Vol. II. 2005. pp. 320–323.

- 12. Zuiderveld K. Contrast limited adaptive histogram equalization. San Diego: Academic Press Professional Inc.; 1994.

- 13. Otsu N. A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern. 1979;9:62–66. doi: 10.1109/TSMC.1979.4310076.

- 14. Heucke L, Knaak M, Orglmeister R. A new image segmentation method based on human brightness perception and foveal adaptation. IEEE Signal Process Lett. 2000;7(6):129–131. doi: 10.1109/97.844629.

- 15. Zhang X, Chutatape O. A SVM approach for detection of hemorrhages in background diabetic retinopathy. In: Proceedings of the 2005 IEEE International Joint Conference on Neural Networks (IJCNN ’05); 2005.

- 16. Sinthanayothin C, Boyce JF, Williamson TH, Cook HK, Mensah E, Lal S, Usher D. Automatic detection of diabetic retinopathy on digital fundus images. Diabet Med. 2002;19(2):105–112. doi: 10.1046/j.1464-5491.2002.00613.x.

- 17. Bouhaimed M, Gibbins R, Owens D. Automated detection of diabetic retinopathy: results of a screening study. Diabetes Technol Ther. 2008;10(2):142–148. doi: 10.1089/dia.2007.0239.