Abstract

Strategies specifically designed to facilitate the training of mental health practitioners in evidence-based practices (EBPs) have lagged behind the development of the interventions themselves. The current paper draws from an interdisciplinary literature (including medical training, adult education, and teacher training) to identify useful training and support approaches as well as important conceptual frameworks that may be applied to training in mental health. Theory and research findings are reviewed that highlight the importance of continued consultation/support following training workshops, congruence between the training content and practitioner experience, and a focus on motivational issues. In addition, six individual approaches are presented with careful attention to their empirical foundations and potential applications. Common techniques are highlighted, and applications and future directions for mental health workforce training and research are discussed.

Keywords: Training, Uptake, Workforce development, Implementation, Dissemination

Over the past decades, the mental health field has seen a surge in the development and testing of evidence-based practices (EBPs) for the treatment of a wide variety of adult and youth psychosocial problems. Unfortunately, the advances in EBPs have largely outpaced the development of technologies designed to support their implementation by practitioners in real-world contexts (Fixsen et al. 2005; Ganju 2003; Gotham 2006). One result of this lag is a shortage of treatment providers who are adequately trained and supported to provide EBPs (Kazdin 2008; Weissman et al. 2006). Although reviews of implementation science identify practitioner training as a core implementation component (e.g., Fixsen et al. 2005), research has been limited and trainers in behavioral health repeatedly fail to make use of the existing strategies that have received empirical support (Stuart et al. 2004). The general lack of attention to evidence-based training and implementation methods has been cited as a major contributor to the “research-to-practice gap” commonly described in the mental health literature (Kazdin 2008; McHugh and Barlow 2010; Wandersman et al. 2008). Consequently, efforts to develop or identify the most effective methods and strategies for training existing mental health practitioners in EBPs and/or the core skills underlying many EBPs (e.g., cognitive behavioral strategies, behavioral parenting strategies) have received increasing attention in the literature (e.g., Dimeff et al. 2009; Long 2008; Stirman et al. 2010).

Not surprisingly, a disconnect between the scientific literature and the behavior of community professionals is not unique to mental health. Multiple disciplines, including the fields of medicine and education, grapple with how to train their workforces to implement practices that have received empirical support (e.g., Grimshaw et al. 2002; Speck 1996). Although there are no simple answers or “magic bullets” to ensure effective training (Oxman et al. 1995), different fields have emphasized unique training methods and conceptualizations that may contribute to mental health professionals' and service researchers' efforts to provide training in EBPs. For instance, Stuart et al. (2004) discussed the applicability of a model of evidence-based teaching practice to training behavioral health professionals and argued that such an interdisciplinary knowledge exchange has the potential to dramatically improve training efforts.

In the adult education literature, Thompson (1976) distinguished training from activities such as teaching and education by referring to it as the “mastery of action” (p. 153). According to Thompson, teaching and education focus primarily on the understanding of principles that, unlike training, are not necessarily accompanied by specific behaviors or an intended purpose. These two workforce development activities (initial education and training or “retraining”) certainly overlap and share a variety of methods. Nevertheless, a full education and training review is beyond the scope of the current paper. Instead, we will focus primarily on methods that can be used to train an existing workforce, one that has already received its initial education, in order to bring its professional behaviors into closer alignment with EBPs. Across disciplines, methods for training an existing workforce have been referred to using terms such as inservice training, professional development, continuing education, and retraining.

In the current paper, training is conceptualized as skill acquisition and implementation support for providers currently in practice. Our discussion of training will include two complementary processes that have been previously identified as essential to effective implementation (Fixsen et al. 2005; Joyce and Showers 2002): (1) methods through which professionals learn new skills and behaviors and (2) systems of ongoing feedback or support to refine and further develop those skills. Although organizational factors have been found to be an important element in training success (Beidas and Kendall 2010), our emphasis will be on in-person or “face-to-face” models of changing behavior. (For detailed discussion of how systems can interfere with or support program implementation, see Kelleher 2010; McCarthy and Kerman 2010.)

Below, we first describe general findings and themes from the teacher training, adult education, and medical training literatures and relate them to the process of workforce training/retraining. Theories with less empirical support, from fields such as adult education, are noted where appropriate to highlight considerations that have been discussed in theory and research from other fields. Next, we detail what is known empirically about specific training approaches. Drawing on the interdisciplinary literature, we will discuss approaches that have been found effective or appear promising in other workforce development research and consider how these strategies may apply to inservice training in the mental health field. A search for reviews, meta-analyses, and original research on theories of training and education and on approaches that have been the subject of prior research was conducted using a snowballing methodology (i.e., identification of articles and review of their reference sections) and the common bibliographic databases of a variety of disciplines (e.g., MEDLINE, ERIC). The general training themes and specific approaches included were selected on the basis of (a) the strength of their empirical foundation, (b) their potential applicability to the training of mental health practitioners, or (c) a combination of the two.

General Themes from Training Theory and Research

Existing training literatures often make use of sophisticated and detailed theoretical frameworks for instructing professionals in the use of complicated interactive processes (e.g., Knowles 1968; Meverach 1995). Here, we briefly discuss general themes from the theoretical and empirical literature on training that are relevant to the training of mental health professionals regardless of the approach selected.

Extended Contact

Perhaps the most robust and consistent finding across disciplines is that single-exposure training models (i.e., “train and hope,” Stokes and Baer 1977) or the simple provision of information (e.g., through provision of a manual or set of guidelines) are ineffective methods of producing practitioner behavior change (e.g., Fixsen et al. 2005; Grimshaw et al. 2001; Hoge and Morris 2004; Joyce and Showers 2002; Speck 1996). Historically, “single-shot” classroom-based workshops, lasting somewhere between a few hours and a few days, have been the most widely used method of training practitioners (Hoge et al. 2007; Joyce and Showers 2002). These types of interventions rely heavily on didactic presentation of information and may or may not be coupled with interactive strategies, such as modeling and role plays. Didactic workshop training models can be effective for the dissemination of information and can yield increases in provider knowledge (Fixsen et al. 2005), but are limited in the extent to which they produce consistent or sustained behavior change (e.g., Beidas and Kendall 2010; Miller et al. 2004; Sholomskas et al. 2005). For instance, Lopez and colleagues (this issue) found that exposure to a one-shot, state-mandated EBP training was only associated with practice-related behavioral change for clinicians who had received a previous training on the EBP.

In addition to didactics, ongoing contact (through coaching, follow-up visits, etc.) appears to be important, in part, because of the length of time required to build proficiency in a new practice and the opportunity for active learning (Birman et al. 2000). Within the education literature, Joyce, Showers, and colleagues (Joyce and Showers 2002; Showers et al. 1987) have estimated that it requires 20–25 implementation attempts in order to achieve consistent professional behavior change. The medical and mental health literatures also support the importance of continued contact (e.g., Bruns et al. 2006; Soumerai and Avorn 1987).

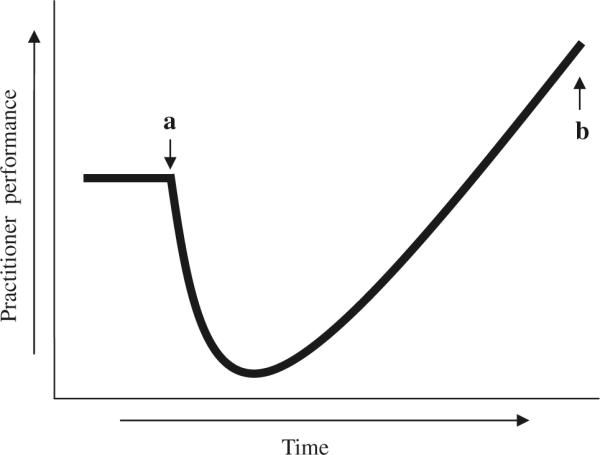

Some insight into why ongoing contact is important comes from teacher education and the notion that newly-learned skills and behavior are particularly fragile. Meverach (1995) conceptualizes professional development as beginning with a “survival” phase and ultimately reaching a state in which new knowledge and skills are consistently and successfully applied and adapted (see Fig. 1). The survival phase is characterized by practitioners questioning new techniques, feeling ambivalent about their use, and experiencing a disruption in their existing knowledge. Meverach has suggested that, during this phase, even experienced practitioners may behave like novices; for example, they may be highly dependent on consultants and inflexible or mechanical in their practice. Because new skills interfere with the use of old ones, this phase involves an initial decline in performance. Indirect support for this process can be found in the mental health literature, which sometimes shows initial decreases in skills following training (Beidas and Kendall 2010).

Fig. 1. Theoretical U-curve process of professional development (adapted from Meverach 1995). (a) New material introduced; beginning of “survival” phase. (b) Independent, adaptive implementation.

Research demonstrating that participants can first experience a decline in skill level before eventually surpassing their baseline competence has a number of important implications. First, it suggests that training models in which practitioner use of new behaviors is touted to result in relatively rapid success may be overly optimistic. In many models, use of newly-introduced skills is believed to result in observed improvements in service recipients (e.g., clients, patients, students) which, in turn, reinforce implementation (e.g., Han and Weiss 2005). Second, Meverach's curve brings to light potential concerns about trainee morale and engagement. Multiple barriers already exist to interfere with practitioner participation in trainings (e.g., limited time), and the added possibility that participation could result in short-term worsening of overall professional practice may be difficult to justify. Intuitively, it seems that these phases could be a time to use a disproportionate amount of coaching or consultation resources in order to overcome the anticipated decline and support the practitioner. Regardless, further research is necessary in order to determine the extent of any short-term deleterious effects of training as well as to develop ways to overcome them.

Provider Motivation

Level of practitioner engagement in training is an under-explored but essential area of research, as it may impact the success of specific training approaches. In the nursing literature, Puetz (1980) found that practitioners who sought out continuing education opportunities were already more likely to be motivated and competent. Likewise, other research has established that more competent practitioners often tend to get more out of training initiatives, demonstrating greater improvement than their peers (e.g., Siqueland et al. 2000). These findings are disheartening because they indicate that providers with the lowest competence are also those least likely to engage in or benefit from trainings designed to improve their practice. In recognition of this research, Fixsen et al. (2005) described the importance of staff selection for training among their core components and suggested that there may be certain characteristics that would make particular staff suitable (or unsuitable) for EBP training. However, selection of only the most competent or motivated practitioners is unlikely to result in the large-scale changes in standards of care that are the purported goal of many dissemination and implementation researchers (e.g., Daleiden and Chorpita 2005; Ganju 2003). Instead, it is precisely those practitioners who are less likely to be reached by trainings that ideally are the target of training initiatives. It is important to examine the determinants of provider motivation and engagement in training opportunities in order to understand the extent to which these factors are malleable and can be used to boost participation and foster widespread adoption of EBPs.

Existing motivational enhancement strategies often take direction from contemporary theories of motivation (e.g., Fishbein et al. 2001), identifying specific high-concern or low-performance areas for improvement in order to build provider commitment (e.g., Feifer et al. 2006). Soumerai and Avorn (1990) stated that training is unlikely to be successful unless clear problems indicate that existing practices are inadequate. Within the mental health field, the popularity of newer interventions that demonstrate efficacy for cases where existing treatments are largely ineffective (e.g., Dialectical Behavior Therapy; Linehan 1993) might be an example of the importance of this phenomenon. Pre-training interventions to provide information and boost provider motivation may be a relatively low-cost solution for motivation problems. Unfortunately, studies specifically investigating the effectiveness of clinician engagement interventions are virtually nonexistent.

Congruence with Existing Experience

Without exception, adult learners come to new learning situations armed with attitudes and prior knowledge that frame their expectations (Moll 1990). For example, Aarons and Palinkas (2007) have identified coherence between an EBP and providers' existing experience as an important motivator that will likely impact expression of newly taught behaviors. In general, the interaction between existing knowledge and new skills has important implications for training success or failure (Meverach 1995; Ripple and Drinkwater 1982). In this vein, when discussing teacher professional development, Guskey (1994) has observed that “the magnitude of change persons are asked to make is inversely related to their likelihood of making it” (p. 119). If a training program involves skills that are far outside of a practitioner's knowledge base, it is likely that greater planning and precision will be necessary on the part of the training's organizers (Joyce and Showers 2002).

Simultaneously, training programs for busy professionals have to provide skills that are different enough from existing practices to justify the training effort. The interface between existing skills and new behavior likely becomes more difficult when behaviors presented in training are perceived to be minor variations on existing practice. An example from the mental health literature comes from Motivational Interviewing (MI). Because MI includes techniques that are common components of many respected treatment models and philosophies, some clinicians may believe that they already use MI in their practice even when they are not implementing it with fidelity (e.g., Baer et al. 2004; Miller et al. 2004). Similarly, recent work by Garland et al. (2010) has revealed that many community clinicians routinely use evidence-based elements in their treatment of child disruptive behavior, but that the level of use is typically superficial. In the absence of information about how the content of training will advance their current practice, clinicians may decline participation or enter into the training without the necessary commitment to achieve sustained implementation. Participation in a training that includes concepts that are highly congruent with existing knowledge could be perceived as invalidating of practitioners' expertise, an outcome that may be particularly likely if participation is mandated.

Specific Approaches

The research findings described above provide important context and considerations for the design of specific training approaches. Existing approaches vary in the extent to which they integrate these findings. Nearly all recognize the need for ongoing contact with trainers (e.g., in the form of extended coaching or feedback) and many consider the influence of trainees' prior experiences. Far fewer have built in explicit attention to maximizing provider motivation, although the majority of approaches incorporate techniques that may serve that function (e.g., goal identification).

In this section, different training approaches, and the techniques that comprise them, are described using a common framework. Approaches represent sets of specific techniques that are frequently implemented together with the intention of promoting the acquisition and/or implementation of skills. Approaches often carry “brand names,” which typically reflect a particular line of training research (e.g., academic detailing). Techniques are the specific actions taken by trainers and professional development staff in the course of implementing the approach (e.g., providing didactic information, conducting a baseline assessment). As described below and presented in Table 1, a number of techniques are common across different training approaches. Table 2 contains a more comprehensive summary of techniques and literature linking each approach with professional outcomes (i.e., changes in professionals' knowledge, understanding, or skill implementation). Classification of professional outcomes is modeled after Hammick et al.'s (2007) review of inter-professional education. Using the framework of approaches, techniques, and professional outcomes, we provide detailed descriptions of six training approaches (academic detailing, interprofessional education, problem-based learning, coaching, point-of-care reminders, and self-regulated learning) and the evidence for their effectiveness. Each is followed by discussion of its applicability to mental health providers.

Table 1. Techniques shared among different training approaches

| Technique | Academic detailing | Interprofessional education | Problem-based learning | Coaching | Reminders | Self-regulated learning |
|---|---|---|---|---|---|---|
| Interactive didactics (inclusion of active learning strategies, e.g., Socratic questioning, role-plays) | X | X | X | | | |
| Goal identification (clear development or selection of learning or performance goals) | X | X | X | X | X | |
| Small group discussion (communication with subgroups of professionals about training topics) | X | X | | | | |
| Critical thinking (promotion of deductive reasoning, etc.) | X | X | | | | |
| Self-reflection (promotion of increased self-awareness of professional behaviors) | X | X | | | | |
| Peer collaboration (clear cooperation among a group of professional learners) | X | X | X<sup>a</sup> | X | X | |
| Independent access to information (prompts to access high-quality information, e.g., from literature, experts) | X | X | | | | |
| Direct feedback (feedback given to learners about their performance) | X | X | X | X | | |
| Follow-up (ongoing contact and/or assessment) | X | X | X | X | | |

<sup>a</sup> Peer coaching

Table 2. Training approaches and their associated techniques and research-supported professional outcomes

| Approach | Techniques | Professional outcomes with research support<sup>a</sup> |
|---|---|---|
| Academic detailing | Pre-training assessment and feedback | Modification of perceptions and/or attitudes (Markey and Schattner 2001); Acquisition of knowledge and/or skills (Markey and Schattner 2001); Behavioral change: Self-reported implementation of indicated procedures (Bloom et al. 2007); Reduced implementation of contraindicated practice (Nardella et al. 1995); Increased compliance with clinical guidelines (Soumerai et al. 1993) |
| | Interactive didactics | |
| | Presentation of both sides of controversies | |
| | Delivery of a small number of key messages | |
| | Concise graphic reference materials | |
| | Repetition of key messages | |
| | Goal identification/specific change plans | |
| | Follow-up | |
| | Reinforcement of behavior change | |
| Interprofessional education | Interactive didactics | Modification of perceptions and/or attitudes (Hammick et al. 2007); Acquisition of knowledge and/or skills (Hammick et al. 2007); Behavioral changes: Self-reported clinician behavior change (Hammick et al. 2007); Benefits to patients/clients (Hammick et al. 2007) |
| | Small group discussion | |
| | Electronic/online learning | |
| | Peer collaboration | |
| Problem-based learning | Goal identification | Acquisition of knowledge and/or skills (Gijbels et al. 2005; Cohen-Schotanus et al. 2008) |
| | Use of clinical examples | |
| | Peer collaboration | |
| | Deductive reasoning | |
| | Small group discussion | |
| | Prompts to integrate existing knowledge | |
| | Independent access to information | |
| | Follow-up | |
| Coaching | Goal identification | Acquisition of knowledge and/or skills (Miller et al. 2004; Showers et al. 1987; van den Hombergh et al. 1999); Behavioral changes: Sustained implementation (Showers et al. 1987); Changes in organizational practice: Increased collaborative learning and changes in organizational culture (Wenger 1998); Benefits to patients/clients (Schoenwald et al. 2004) |
| | Direct feedback | |
| | Self-reflection | |
| | Prompts to generate alternative solutions | |
| Reminders | Goal identification | Behavioral changes: Increased implementation of indicated practice (Austin et al. 1994; Tierney et al. 1986; Shea et al. 1996); Reduced implementation of non-indicated practice (Buntinx et al. 1993); Changes in organizational practice: Improved team communication and functioning (Haynes et al. 2009) |
| | Peer collaboration | |
| | Direct feedback | |
| | Follow-up | |
| Self-regulated learning | Goal identification | Acquisition of knowledge and/or skills (Coomarasamy and Khan 2004; study of evidence-based medicine) |
| | Critical thinking | |
| | Self-reflection | |
| | Independent access to information | |
| | Evaluation of available evidence/literature | |
| | Outcome evaluation | |

<sup>a</sup> Classification of professional outcomes was modeled after Hammick et al. (2007)

Academic Detailing (AD)

Definition

Academic detailing (AD) (also known as “public interest detailing,” “educational outreach,” “peer education,” or “educational visiting”) involves in-person visits from trained personnel who meet with clinicians in their work settings to discuss the use of specific practices with the aim of changing performance (Tan 2002).

Techniques

Targeted pre-training assessment and feedback about the knowledge and current practices of individuals and groups is a common component of AD, often with the intent of increasing motivation. This may include survey evaluations of providers' understanding of particular concepts, tracking specific practices (e.g., indicated referrals), or direct audit of client outcomes (e.g., symptom reductions, functional outcomes, return for services) using administrative data. Following assessment, AD facilitators typically provide didactic information; present both sides of relevant professional controversies in order to establish their credibility; encourage learner participation through active, open-ended questioning; deliver a small number of key empirically-derived messages; distribute concise graphic reference materials; repeat key messages; develop specific plans for individual or group behavior change; return for follow-up visits; and reinforce behavior changes throughout their repeated contacts with clinicians (e.g., through public praise). Often, AD trainers target the most problematic, ineffective, or costly provider behaviors, such as poor adherence to child immunization schedules (Bloom et al. 2007). Visits are typically few in number, usually one or two (O'Brien et al. 2008).

Evidence

Relative to some of the other approaches described below, AD has received substantial research attention and empirical support. Since its inception, research on AD has demonstrated positive outcomes either in isolation or when combined with other strategies to influence clinician knowledge, prescribing practices, or the adoption of clinical guidelines (Davis and Taylor-Vaisey 1997; Grindrod et al. 2006). Some data suggest that AD is most effective when it consistently includes techniques such as “audit and feedback” procedures (Grimshaw et al. 2001). Although many studies of AD have focused exclusively on its effects on knowledge and attitudes (e.g., Markey and Schattner 2001), an increasing number of rigorous studies have investigated its impact on a wider range of clinician behavior. Recently, O'Brien et al. (2008) conducted a meta-analysis of 69 randomized controlled trials examining the effects of AD on providers' performance in healthcare settings. Findings generally indicated small to moderate effects. Most of the reviewed trials targeted behaviors relevant to provision of treatments such as smoking cessation counseling and evidence-based residential care, with fewer than half examining prescribing practices. Greater variability in outcomes was found among non-prescribing practices, suggesting that, while the strategy can be used effectively to impact the complex behaviors most relevant to mental health practitioners (e.g., therapy techniques), there is simultaneously a greater likelihood of failure. This finding seems to underscore the importance of the proper selection and execution of practitioner change strategies and further investigation into which factors influence their effectiveness.

Applications

Although a number of AD interventions have been implemented to change psychiatric prescribing practices (Soumerai 1998), very few exist in the literature on psychosocial interventions for mental health problems. Nonetheless, there is potential for multiple applications, especially when focusing on relatively specific practitioner behaviors. For example, AD could be used to increase clinician use of standardized assessment tools, which is often not routine in community practice (Garland et al. 2003). Kerns et al. (2010) describe a model in which child psychologists who are experts in EBP provide training plus telephone-based consultation to child welfare case workers. The goal is to help the workers use mental health screening data more effectively so that they can refer youth on their caseloads to appropriate EBPs in their communities. A preliminary evaluation of this model found high levels of feasibility and acceptability of the practice.

Interprofessional Education (IPE)

Definition

Interprofessional education (IPE), also known as team-based learning and interprofessional learning, is an approach in which members of two or more professions “learn from, with, and about each other to improve collaboration and the quality of care” (Hammick et al. 2007, p. 736).

Techniques

The unique feature of IPE is its emphasis on learning by bringing together multiple disciplines for multiple contacts and exercises. Techniques used in IPE are based on principles of adult learning. Typically, IPE involves a combination of didactics (e.g., seminars or workshops), role plays and other simulation-based learning, action-based learning exercises (e.g., problem-based learning), small group discussion, e-learning (e.g., web-based resources), and facilitator support (Reeves 2009). Furthermore, consultation and peer-to-peer networks are utilized to share information and overcome clinical barriers.

Evidence

Evidence of the effectiveness of IPE is somewhat limited. Much of the research to date has been conducted with undergraduate learners. Hammick et al. (2007) reviewed 21 studies of IPE that evaluated a range of outcomes: knowledge, attitude change, behavior change, change in organizational practice, and benefits to patients. Only six of the studies examined IPE among post-graduate professionals. The most commonly reported outcomes were knowledge and attitude change; the few that reported on behavior change utilized self-reports. Four studies reported on patient- or system-level outcomes for quality improvement or screening activities that utilized IPE. The majority of these studies reported positive findings on reactions, attitudes, and knowledge. Approximately half of the studies also reported positive changes in behaviors, service delivery, and patient-care outcomes. Despite these promising findings, more rigorous research methodologies and assessment strategies are needed.

Randomized controlled studies comparing IPE to no-education controls have only recently emerged in the literature, and no RCTs to date have compared IPE to uniprofessional education models or alternative strategies (Reeves 2010). Reeves (2010) reviewed six studies of IPE with professional groups that used a randomized controlled trial design or a controlled before-and-after design. The three studies that assessed teamwork or communication found improvements in those variables after IPE, and those that compared IPE to a control found IPE to be superior. One study examined patient depression outcomes and another examined patient satisfaction scores but did not find significant differences in outcomes. One study found evidence of more inquiries regarding domestic violence after training in their intervention group, but case findings and quality of domestic violence did not differ between the intervention and control group. Taken together, research on IPE to date suggests some impact on practitioner attitudes and organizational behaviors, but there is little evidence that it results in improvements in practice behaviors or outcomes.

Applications

IPE has been used to facilitate quality improvement programs and prevention and screening initiatives (Hammick et al. 2007; Reeves 2010). It may also be a promising approach to apply to multicomponent mental health interventions for which collaboration of multiple professionals is necessary. Based on this hypothesis, the California Institute for Mental Health (CiMH) has developed an application of IPE called the Community Development Team (CDT) model to bridge the gap between research-based mental health treatments for youth and usual care practice. The CDT model brokers a relationship between developers of EBPs and a diverse array of staff and stakeholders to improve the effectiveness of clinical training and consultation, and forms peer-to-peer networks to support exchange of information about implementation challenges and solutions (Bruns et al. 2008). Treatments to which this model has been applied include multicomponent models such as Multidimensional Treatment Foster Care (MTFC; Fisher and Chamberlain 2000), Multisystemic Therapy (MST; Henggeler 1999), and the wraparound process (Walker and Bruns 2006). Currently, a randomized trial is underway to test whether providing implementation support through the CDT model improves adoption of MTFC (Tseng and Senior Program Team 2007).

Problem-Based Learning (PBL)

Definition

Problem-based learning (PBL) is a method of learning (or teaching) that uses real-world examples to guide students to develop knowledge that they can flexibly apply to problems, and extend and improve upon throughout their careers (Barrows 1984; Glaser 1990). It was originally intended to be used exclusively, in place of structured didactic approaches (Taylor and Mifflin 2008).

Techniques

The concept of PBL has evolved and expanded over the years (Taylor and Mifflin 2008), but the approach emphasizes the use of clinical examples and facilitation by an instructor to promote participants' use of deductive reasoning, self-directed learning, and collaborative work to arrive at new knowledge (Gijbels et al. 2005). PBL occurs in three phases, which build in extended contact. In the first, trainees are presented with a problem and work in small groups to analyze the problem, identify and synthesize their relevant existing knowledge, recognize what they need to assess, and clarify their learning objectives. Through its emphasis on trainees' existing knowledge, PBL is the only approach to explicitly address the congruence between the training and professionals' existing experiences. In the second phase, trainees are directed to work individually to access resources (e.g., experts, journals). In the third phase, they meet again as a group to review new knowledge.

Evidence

Several reviews and meta-analyses have been conducted to examine the effectiveness of PBL over more traditional didactic approaches (Albanese and Mitchell 1993; Berkson 1993; Colliver 2000; Dochy et al. 2003; Gijbels et al. 2005; Smits et al. 2002; Vernon and Blake 1993). Results of these meta-analyses have been somewhat mixed in terms of the relative benefits of PBL with respect to knowledge or understanding, with some studies showing that PBL fared worse on basic reasoning or tests of knowledge (Albanese and Mitchell 1993; Vernon and Blake 1993), while other studies showed no differences on knowledge outcomes (Berkson 1993; Colliver 2000; Smits et al. 2002). With the exception of Berkson's review, which suggested that PBL is more stressful for trainees, most of the studies concluded that PBL tends to be more enjoyable and motivating for trainees than traditional didactic approaches. Most recently, Gijbels et al. (2005) conducted a meta-analysis to examine the impact of PBL on three levels of outcomes: understanding of concepts, understanding of principles, and links from concepts and principles to conditions and procedures for application. They concluded that the effects of PBL over traditional educational approaches differ across levels of outcomes, with the strongest effects favoring PBL at the level of linking concepts and principles to application. Finally, Cohen-Schotanus et al. (2008) compared two consecutive cohorts of medical students, the first of which (n = 175) received a conventional curriculum and the second of which (n = 169) participated in a PBL curriculum. While PBL students rated themselves more highly on clinical competence, they did not score more highly on appreciation for the curriculum or clinical competence, and they were not more likely to read journals than students in the conventional curriculum group. Due in part to lack of evidence of superiority to traditional teaching practices, many medical education programs have now integrated PBL with more structured, didactic learning approaches (Taylor and Mifflin 2008).

Applications

PBL was originally developed for medical education, but has been applied across a number of disciplines and educational contexts. Gijbels and colleagues' findings that PBL is effective in training professionals to link concepts to application of skills and procedures have implications for the training of mental health professionals. As the application of knowledge in actual practice is an important, but often elusive goal of training in EBPs, opportunities for clinicians to apply principles to increasingly complex clinical cases may help them solidify knowledge and allow them to plan for implementing the EBP in practice. In addition, PBL may be more engaging and motivating for clinicians than traditional didactic approaches. However, as few studies have examined the impact of PBL on actual behaviors in practice, PBL strategies may best be integrated with observation or coaching to ensure that the knowledge is being translated into practice.

Coaching

Definition

Coaching is one of the most common forms of extended contact following training. Two main types of coaching are described in the literature, expert coaching and peer coaching. Expert coaching involves a person with expertise in the content of a particular intervention or clinical process providing direct feedback and advice. Often, the purpose of expert coaching is to emulate program developers and provide feedback enabling close approximation to high-fidelity implementation (Schoenwald et al. 2004). Peer coaching, employed most extensively within the education field, describes professional peer-to-peer feedback and dialogue (Showers 1984). According to Ackland (1991), “peer coaching programs are non-evaluative, based on the observation of classroom teaching followed by constructive feedback, and aimed to improve instruction techniques” (p. 23).

Techniques

Coaching can occur through provision of direct feedback following observation (van Oorsouw et al. 2009) or through prompting professionals to systematically reflect on their own practice (Veenman and Denessen 2001). Coaching may also be conducted by content experts, following a didactic workshop, or within a reciprocal peer-coaching paradigm (Zwart et al. 2007). Specific coaching skills that have been demonstrated to impact learning include working toward agreement on learning goals, strategies designed to develop autonomy (e.g., prompting trainees to generate alternative behaviors that are in line with professional goals), providing feedback, and remaining on task during coaching (Veenman and Denessen 2001; Veenman et al. 1998).

Evidence

A comprehensive review by Showers et al. (1987) summarized the research on teacher coaching (expert or peer) and its relation to the uptake and sustainability of knowledge transfer. Reviewed studies typically yielded large effect sizes of 1.0 or greater. Regardless of the specific approach, coaching of some sort has been found to be essential to ensure transfer of training (Herschell et al. 2010; Joyce and Showers 2002; Showers et al. 1987). Other identified benefits may be less directly tangible, such as organizational cultural shifts and increases in collaborative learning (e.g., Wenger 1998).

Expert Coaching

Expert coaching has received increasing empirical attention from a range of purveyors of psychosocial interventions. Miller et al. (2004) found that coaching or feedback from experts in MI (Miller and Rollnick 2002) increased the retention of clinically significant changes in therapist proficiency. A study of expert coaching within the context of MST found that high-quality coaching was related to clinician adherence to the intervention protocol and, indirectly via the therapists, to youth outcomes (Schoenwald et al. 2004). A study of Dialectical Behavior Therapy (Linehan 1993) found that the timing of the coaching is important: practitioners received maximum benefit from coaching once they had achieved higher levels of intervention knowledge (Hawkins and Sinha 1998).

Peer Coaching

The few experimental studies examining variants of peer coaching provide encouraging data for several different approaches. Veenman and Denessen (2001) summarized five studies of teacher coaching and found that provision of specific training on how to be an effective coach was related to increased coaching quality. In addition, peer coaching in medicine has been found to lead to greater improvements in practice than coaching from assistants (van den Hombergh et al. 1999). The process of using peers as a mechanism for feedback also appears to be generally feasible and acceptable to professionals (Murray et al. 2008). As a strategy to support continued use of new programs or therapies, peer coaching has the potential to be an alternative to costly expert coaching models. However, before this recommendation can be made, further research is required to determine how to provide the most valuable and structured experiences for learners. Although research has identified increased challenges when peer coaching is relatively unstructured (e.g., Murray et al. 2008), more research is needed to identify the most effective ways to provide support and consider organizational variables.

Applications

Expert Coaching

Within behavioral health interventions, it has been most common to use an expert feedback model (e.g., MST; Schoenwald et al. 2000). Other interventions such as Trauma-Focused Cognitive Behavioral Therapy (Cohen et al. 2006) and Parent–Child Interaction Therapy (Eyberg et al. 1995) use strategies whereby practitioners submit videotapes and receive feedback from trained consultants.

Peer Coaching

Few evidence-based interventions in mental health explicitly utilize peer coaching strategies. One example of an EBP that strongly encourages, but does not require, a peer coaching model is the Triple P Positive Parenting Program (Sanders 1999). In this program, practitioners form peer support groups. Guidance, typically provided during program training and/or consultation with agencies, is given regarding formation of supportive peer groups, development of effective agendas, and use of feedback that is consistent with the model. Triple P also extends peer supports to include an international network of providers who can interact and provide online coaching through a secure website. At the time of this writing, there is at least one ongoing Triple P study of the advantages and potential challenges of peer support.

Reminders

Definition

Point-of-care reminders are prompts given to practitioners (either written or electronic) to engage in specific clinical behaviors under predefined circumstances. Reminders may be displayed on screen or placed as stickers or printouts in clinical charts and may also occur in the form of clinical checklists.

Techniques

Of the approaches discussed in the current paper, reminders are among the least complex. Following initial training stages, reminders represent one basic way to provide ongoing follow-up support to training recipients. Reminders are typically administered to practitioners in the context of their routine activities, when targeted clinical presentations occur. Practitioners may be prompted to deliver a specific service (e.g., administer a test) or collaborate with a colleague regarding a case. Although the majority of reminders are received automatically, a few examples have required practitioners to request them in relevant clinical situations (Shojania et al. 2010). Reminders frequently require a response from practitioners (e.g., acknowledging receipt or indicating that the necessary clinical service has been delivered).
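The logic of a point-of-care reminder, as described above, can be illustrated with a minimal Python sketch. Everything in it is hypothetical and introduced only for illustration: the names `Reminder`, `ReminderLog`, `reminders_for`, and `acknowledge`, the `sessions_since_screen` field, and the threshold of four sessions are assumptions, not features of any system described in the studies reviewed here. The sketch shows a prompt that fires automatically under a predefined circumstance and records a practitioner response.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Reminder:
    """A hypothetical prompt tied to a predefined clinical circumstance."""
    message: str
    applies_to: Callable[[Dict], bool]  # returns True when the prompt should fire
    requires_response: bool = True      # whether the practitioner must acknowledge it


@dataclass
class ReminderLog:
    """Records which prompts were shown and which were acknowledged."""
    shown: List[str] = field(default_factory=list)
    acknowledged: List[str] = field(default_factory=list)


def reminders_for(encounter: Dict, rules: List[Reminder], log: ReminderLog) -> List[Reminder]:
    """Return the reminders whose trigger condition matches this encounter."""
    due = [rule for rule in rules if rule.applies_to(encounter)]
    log.shown.extend(rule.message for rule in due)
    return due


def acknowledge(reminder: Reminder, log: ReminderLog) -> None:
    """Record that the practitioner responded to the prompt."""
    log.acknowledged.append(reminder.message)


# Illustrative rule only: the field name and threshold are invented.
rules = [
    Reminder(
        message="Administer the follow-up screening measure",
        applies_to=lambda e: e.get("sessions_since_screen", 0) >= 4,
    )
]

log = ReminderLog()
due = reminders_for({"sessions_since_screen": 5}, rules, log)
for reminder in due:
    if reminder.requires_response:
        acknowledge(reminder, log)

print(log.shown, log.acknowledged)
```

Separating the trigger condition from the response record mirrors the distinction drawn in the text between reminders that are simply delivered and those that require the practitioner to acknowledge them.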

Evidence

In the medical literature, reminders have been used to support a variety of clinician activities ranging from specific prescribing behavior to more complex clinical decision making. A considerable body of research supports the use of reminders and checklists for highly specific behaviors (Davis et al. 1995). For example, reminders have been found to be more effective than traditional feedback for reducing the use of non-indicated diagnostic tests (Buntinx et al. 1993) and increasing compliance with preventive care (Austin et al. 1994; Tierney et al. 1986). Recently, use of reminders in the form of a 19-item surgical safety checklist was found to significantly and substantially reduce complications (from 11% to 7%) and deaths (from 1.5% to 0.8%) from surgery. Researchers hypothesized that use of these relatively straightforward checklists improved surgical outcomes by improving both the behavior of individual surgical team members and surgical team communication (Haynes et al. 2009).
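To make the size of the checklist effects concrete, the short Python sketch below converts the before-and-after percentages quoted above into absolute and relative risk reductions. The function name `risk_reduction` is hypothetical and introduced only for illustration, and the calculation uses only the rounded figures given in the text, so the results are approximate.

```python
def risk_reduction(before_pct, after_pct):
    """Return (absolute reduction in percentage points, relative reduction as a fraction)."""
    absolute = before_pct - after_pct
    relative = absolute / before_pct
    return absolute, relative


# Rounded rates quoted in the text (percent of surgical patients).
complications = risk_reduction(11.0, 7.0)
deaths = risk_reduction(1.5, 0.8)

for label, (abs_pp, rel) in (("complications", complications), ("deaths", deaths)):
    print(f"{label}: {abs_pp:.1f} percentage points absolute, {rel:.0%} relative")
```

On these rounded figures, the relative reductions are roughly 36% for complications and 47% for deaths, even though the absolute changes are only a few percentage points or less.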

In a meta-analysis of RCTs of reminder systems for preventive medical procedures, Shea et al. (1996) found that both computerized and manual reminders significantly increased practitioner use of vaccinations and some types of cancer screening. Reminders were not found to significantly impact screening for cervical cancer, which the authors speculated might have been a result of the time required (more than the other screening procedures) and patient resistance. A recent systematic review found a nonsignificant trend toward greater compliance when a practitioner response was required (Shojania et al. 2010). Few differences have been found between electronic and paper formats (Dexheimer et al. 2008; Shojania et al. 2010).

Applications

Although reminders have been found to be one of the most effective training support strategies in the medical literature (Buntinx et al. 1993; Davis et al. 1995; Haynes et al. 2009), their effectiveness for training in mental health may be enhanced if combined with other methods (Ockene and Zapka 2000). Reminders can be used to support the implementation of specific practices following training with more intensive methods (e.g., workshops, coaching). Although a few examples of point-of-care reminders already exist within the mental health literature, the practice appears to have been used sparingly. Furthermore, some results from the mental health literature contrast with those described above; for example, computerized reminders for depression screening may be superior to paper reminders, rather than equivalent (Cannon and Allen 2000).

Self-Regulated Learning (SRL)

Definition

Self-regulated learning (SRL) is a process whereby practitioners guide their own goal-setting and self-monitoring for skill acquisition by determining whether changes are required in their approach and then evaluating the effectiveness of any changes made (Pintrich 2004; Zimmerman 2000). SRL is similar to the adult education concept of self-directed learning, but some self-directed learning conceptualizations also include examining broader socio-political forces and engaging in social action (Merriam and Caffarella 1999).

Techniques

SRL is designed to increase the uptake of information that has been disseminated in the scientific literature (Haynes et al. 1995). Bennett and Bennett (2000) describe an SRL process to improve use of EBPs in occupational therapy. This includes framing the clinical question that practitioners are seeking to answer, searching the extant literature for evidence, understanding the quality and features/limitations of the evidence, conducting a critical appraisal of the evidence, applying the new knowledge to clinical decision-making, and then evaluating the effectiveness of the approach. Of note, these techniques are very similar to those espoused by “evidence-based medicine” (Sackett 1997) or “evidence-based practice” (Hoge et al. 2003), approaches physicians utilize when making clinical decisions. In this way, SRL also represents a general framework for ongoing learning and problem solving throughout one's career.

One important component of self-regulated learning is critical thinking/critical reflection (Phan 2010). The key tenets of critical reflection include taking experiences or observations and purposefully considering multiple perspectives and factors. Critical reflection can be applied both within the initial learning process and within specific skill acquisition. Furthermore, it is essential that SRL not be misconstrued as “minimal guidance” or an unguided framework. There is evidence to suggest that unguided instruction is likely less effective and efficient (Kirschner et al. 2006). Instead, SRL should be considered an intentional workforce development strategy that may follow direct instruction.

Evidence

The self-regulatory process has been discussed most extensively within the medical (e.g., Bennett and Bennett 2000; Tulinius and Hølge-Hazelton 2010), leadership development (e.g., Hannah et al. 2009), motivational enhancement (e.g., Sansone and Thoman 2006), and education (e.g., Pintrich 2000; Phan 2010) literatures. Still, the evidence base for SRL is generally weaker than that for the other strategies discussed above. A systematic review of studies examining training strategies for evidence-based medicine, an approach with strong similarities to SRL, found that training practitioners on how to use evidence-based medicine was effective for increasing their knowledge of the approach, but for attitudes, skills, and behaviors to change, training must be integrated into clinical practice (Coomarasamy and Khan 2004).

Applications

Within the mental health field, applications of SRL have been attempted in a more limited capacity (e.g., Belar et al. 2001; Bennett-Levy and Padesky 2010; Sanders and Murphy-Brennan 2010) and empirical support is only just emerging. Bennett-Levy and Padesky (2010) recently presented preliminary evidence that a component of the SRL model may be effective within EBPs for mental health. They found that prompting clinicians to reflect on their use of cognitive-behavioral therapy after a workshop increased training uptake.

Discussion

The workforce development themes and approaches reviewed above represent an illustrative subset of options for consideration as the mental health field endeavors to improve professional competence and the adoption of EBPs. It appears unlikely that the use of traditional workshop models or any single strategy will result in success. As described above and displayed in Table 1, the specific approaches actually comprise multiple overlapping techniques. Based on our review, we identified nine techniques (interactive didactics, goal identification, small group discussion, critical thinking, self-reflection, peer collaboration, independent access to information, direct feedback, and follow-up) that were shared by at least two of the selected approaches. Additionally, multiple approaches reviewed above (e.g., AD, Reminders) have been found to be most effective when combined with other approaches. In their classic study, Oxman et al. (1995) reviewed 102 training studies and found that individual approaches typically demonstrate modest effects, but that the effects increase when they are used in combination. In developing training programs, combinations of approaches and techniques should be carefully selected in order to match the content of the intervention or practice being trained, the individual practitioner, and the service setting (including organizational context and the client population served; Beidas and Kendall 2010). Issues of trainee motivation and engagement should also be carefully considered.

With these overarching recommendations in hand, the primary question becomes: which approaches should be considered when developing a training and workforce development plan? As stated previously, the concepts discussed demonstrate a wide range of research support with regard to their effects on provider behavior. Further, the studies themselves display a wide range of methodological rigor. Some studies, particularly those within the medical field, provide relatively convincing results that substantiate the use of particular approaches (e.g., academic detailing, reminders) to support the acquisition and application of new behaviors. Simultaneously, other approaches (e.g., SRL) have received little rigorous study. Very few studies have been conducted that provide “head-to-head” comparisons of different approaches, making it difficult to lay out clear recommendations for use. Furthermore, because each of the training approaches reviewed combines several techniques (e.g., assessment, feedback, discussion of specific cases), tightly controlled comparisons are difficult to implement and no outcome data are available at the technique level. Meta-analyses of training components help to fill this gap, but the lack of direct comparisons limits conclusions that can be drawn about the most effective training models (Grimshaw et al. 2001). Future research should begin to “unpack” and systematically vary the use of different techniques in training studies.

In addition, most evidence has been generated in contexts and settings that are quite distinct from those in which mental health practitioners are asked to implement EBPs. Nonetheless, certain approaches have been relatively well-established and should be considered. For example, despite the significant demand on the consultant (and resulting high costs), intensive coaching or mentoring of some form will likely be necessary to bring about the complex behavior change required when implementing new therapies. Coaching could be augmented by the use of reminders or checklists, which are moderately effective and can be implemented at a low cost. As expert coaching is gradually tapered, a transition to peer coaching and reminders could continue to support sustained provider implementation of new practices.

Even the approaches with less of an empirical foundation may have utility. This is especially true given the consistent finding that “training-as-usual” (i.e., single-shot training methods) is largely ineffective. In light of this, existing methods could likely be replaced with little risk of reducing the impact of training. Depending on the context and the EBP, the approaches with stronger support may be enhanced by making the training interdisciplinary (i.e., IPE), emphasizing real-world clinical examples (i.e., PBL), and promoting self-regulated learning as a means of monitoring and facilitating fidelity and effectiveness.

Looking beyond the level of research support for different training approaches, the mental health service delivery field must also consider the question of fit and how those approaches function in the context of different trainees, EBPs, settings, and client populations.

For example, although academic detailing may not be very appropriate as a first-line method to reinforce a large number of complex skills that are expected of a practitioner learning a new EBP, it may be highly effective at reinforcing some key decisions that must be made in the process of evidence-based service delivery, such as considering placement options for an individual child or youth, encouraging staff and supervisors to look carefully at assessment data, or ensuring that an individual consumer is matched to an appropriate EBP. Academic detailing may also be highly important during the exploration and initial installation stages of EBP implementation (Fixsen et al. 2005), when opinion leaders must be mobilized to motivate practitioners to apply new techniques. Similarly, interprofessional or team-based training approaches may be most effective for complex, multicomponent interventions that involve team-based implementation or otherwise require contributions from collaborators across many disciplines and/or systems, such as Assertive Community Treatment (Stein and Santos 1998) or the wraparound process (Bruns et al. 2008b). As described in vivid terms in the Haynes et al. (2009) study on reducing surgical complications and deaths, reminders and checklists also have been found to be highly effective at reinforcing both practitioner competence and team functioning in complex interventions, and should be considered in behavioral health interventions that share these characteristics. As a final example, problem-based learning may be a highly appropriate training component to include in the new array of modularized intervention approaches that, rather than demanding a standardized sequence of steps or activities, ask practitioners to link a complex set of concepts, principles, and clinical techniques to a diverse range of clinical presentations (e.g., Chorpita et al. 2009).

A surprisingly consistent aspect of the different literatures reviewed here is that studies have largely neglected to include outcome data for service recipients (Garet et al. 2001; Muijs and Lindsay 2008), a point that is reflected in Table 2. Although intervention fidelity represents an essential outcome for training and implementation studies, more research in the mental health field should include client-level variables (e.g., symptom change) as additional outcome data. Not surprisingly, effects of provider training on service recipients are typically smaller than its effects on provider behavior (Forsetlund et al. 2009). Transfer of training or implementation fidelity could be included as key mediator variables when examining the relationship between training and client outcomes.

Finally, even with mounting evidence to support the use of various training approaches, virtually no research has attended to the mechanisms responsible for the documented links between their use and observed professional outcomes. In much the same way that the field of psychotherapy has become increasingly focused on identifying mediators of treatment outcome, it will be important for training research to identify its own set of mediating variables. Potential training mediators may include improved team functioning as a result of IPE, use of deductive reasoning following PBL and SRL, or increased self-awareness after coaching.

Conclusion

No amount of research will convince or equip practitioners to spontaneously adopt new EBPs (Blase et al. 2010). Instead, comprehensive training programs are essential to achieve implementation. Successful trainings in complex psychotherapy practices are likely to be time- and resource-intensive, involve careful attention to clinician engagement, utilize active methods of promoting initial skill acquisition, and provide ongoing supports to solidify skills and strengthen training transfer. Many of the techniques and findings from the education and medical literatures can likely be applied to training mental health practitioners in EBPs. Nonetheless, considerable work remains to identify how the themes, approaches, and techniques presented above can be best adapted and packaged, which will be most feasible in what contexts, and how effective they can be in producing meaningful and sustained change among both mental health workers and service recipients.

Acknowledgments

This publication was made possible in part by grant number F32 MH086978 from the National Institute of Mental Health (NIMH), awarded to the first author.

References

- Aarons GA, Palinkas LA. Implementation of evidence-based practice in child welfare: Service provider perspectives. Administration and Policy in Mental Health and Mental Health Services Research. 2007;34:411–419. doi: 10.1007/s10488-007-0121-3.

- Ackland R. A review of the peer coaching literature. Journal of Staff Development. 1991;12:22–27.

- Albanese MA, Mitchell S. Problem-based learning: A review of literature on its outcomes and implementation issues. Academic Medicine. 1993;68:52–81. doi: 10.1097/00001888-199301000-00012.

- Austin SM, Balas EA, Mitchell JA, Ewigman BG. Effect of physician reminders on preventive care: Meta-analysis of randomized clinical trials. The annual symposium on computer application in medical care; 1994. pp. 121–124.

- Baer JS, Rosengren DB, Dunn CW, Wells EA, Ogle RL, Hartzler B. An evaluation of workshop training in motivational interviewing for addiction and mental health clinicians. Drug and Alcohol Dependence. 2004;73:99–106. doi: 10.1016/j.drugalcdep.2003.10.001.

- Barrows HS. A specific, problem-based, self-directed learning method designed to teach medical problem-solving skills, self-learning skills and enhance knowledge retention and recall. In: Schmidt HG, de Volder ML, editors. Tutorials in problem-based learning. A new direction in teaching the health profession. Van Gorcum; Assen: 1984.

- Beidas RS, Kendall PC. Training therapists in evidence-based practice: A critical review of studies from a systems-contextual perspective. Clinical Psychology: Science & Practice. 2010;17:1–30. doi: 10.1111/j.1468-2850.2009.01187.x.

- Belar CD, Brown RA, Hersch LE, Hornyak LM, Rozensky RH, Sheridan EP, et al. Self-assessment in clinical health psychology: A model for ethical expansion of practice. Professional Psychology: Research and Practice. 2001;32:135–141. doi: 10.1037/0735-7028.32.2.135.

- Bennett S, Bennett JW. The process of evidence-based practice in occupational therapy: Informing clinical decisions. Australian Occupational Therapy Journal. 2000;47:171–180.

- Bennett-Levy J, Padesky C. Reflective practice: Water for the seeds of learning? In: McManus J, editor. Let a hundred flowers blossom: A variety of effective training practices in CBT; The 6th World Congress of Behavioral and Cognitive Therapies; Boston, MA. 2010, June.

- Berkson L. Problem-based learning: Have the expectations been met? Academic Medicine. 1993;68:S79–S88. doi: 10.1097/00001888-199310000-00053.

- Birman BF, Desimone L, Porter AC, Garet MS. Designing professional development that works. Educational Leadership. 2000;57:28–33.

- Blase KA, Fixsen DL, Duda MA, Metz AJ, Naoom SF, Van Dyke MK. Real scale up: Improving access to evidence-based programs. The Blueprints Conference; San Antonio, Texas. 2010, April.

- Bloom JA, Nelson CS, Laufman LE, Kohrt AE, Kozinetz CA. Improvement in provider immunization knowledge and behavior following a peer education intervention. Clinical Pediatrics. 2007;46:706–714. doi: 10.1177/0009922807301484.

- Bruns EJ, Hoagwood KE, Rivard JC, Wotring J, Marsenich L, Carter B. State implementation of evidence-based practice for youths, part II: Recommendations for research and policy. Journal of the American Academy of Child and Adolescent Psychiatry. 2008a;47:499–504. doi: 10.1097/CHI.0b013e3181684557.

- Bruns EJ, Rast J, Walker JS, Peterson CR, Bosworth J. Spreadsheets, service providers, and the statehouse: Using data and the wraparound process to reform systems for children and families. American Journal of Community Psychology. 2006;38:201–212. doi: 10.1007/s10464-006-9074-z.

- Bruns EJ, Walker JS, Penn M. Individualized services in systems of care: The wraparound process. In: Stroul B, Blau G, editors. The system of care handbook: Transforming mental health services for children, youth, and families. Brookes; Baltimore: 2008b.

- Buntinx F, Winkens R, Grol R, Knottnerus JA. Influencing diagnostic and preventive performance in ambulatory care by feedback and reminders. Family Practice. 1993;10:219–228. doi: 10.1093/fampra/10.2.219.

- Cannon DS, Allen SN. A comparison of the effects of computer and manual reminders on compliance with a mental health clinical practice guideline. Journal of the American Medical Informatics Association. 2000;7:196–203. doi: 10.1136/jamia.2000.0070196.

- Chorpita BF, Bernstein A, Daleiden EL, The Research Network on Youth Mental Health. Driving with roadmaps and dashboards: Using information resources to structure the decision models in service organizations. Administration and Policy in Mental Health. 2009;35:114–123. doi: 10.1007/s10488-007-0151-x.

- Cohen JA, Mannarino AP, Deblinger E. Treating trauma and traumatic grief in children and adolescents. Guilford; New York: 2006.

- Cohen-Schotanus J, Muijtjens AMM, Schönrock-Adema J, Geertsma J, van der Vleuten CPM. Effects of conventional and problem-based learning on clinical and general competencies and career development. Medical Education. 2008;42:256–265. doi: 10.1111/j.1365-2923.2007.02959.x.

- Colliver JA. Effectiveness of problem-based learning curricula: Research and theory. Academic Medicine. 2000;75:259–266. doi: 10.1097/00001888-200003000-00017.

- Coomarasamy A, Khan KS. What is the evidence that postgraduate teaching in evidence based medicine changes anything? A systematic review. British Medical Journal. 2004;329:1017. doi: 10.1136/bmj.329.7473.1017.

- Daleiden EL, Chorpita BF. From data to wisdom: Quality improvement strategies supporting large-scale implementation of evidence-based services. Child and Adolescent Psychiatric Clinics of North America. 2005;14:329–349. doi: 10.1016/j.chc.2004.11.002.

- Davis DA, Taylor-Vaisey A. Translating guidelines into practice: A systematic review of theoretic concepts, practice experience and research evidence in the adoption of clinical practice guidelines. Canadian Medical Association Journal. 1997;157:408–416.

- Davis DA, Thomson MA, Oxman AD, Haynes RB. Changing physician performance: A systematic review of the effect of continuing medical education strategies. Journal of the American Medical Association. 1995;274:700–705. doi: 10.1001/jama.274.9.700.

- Dexheimer JW, Talbot TR, Sanders DL, Rosenbloom ST, Aronsky D. Prompting clinicians about preventive care measures: A systematic review of randomized controlled trials. Journal of the American Medical Informatics Association. 2008;15:311–320. doi: 10.1197/jamia.M2555.

- Dimeff LA, Koerner K, Woodcock EA, Beadnell B, Brown MZ, Skutch JM, et al. Which training method works best? A randomized controlled trial comparing three methods of training clinicians in dialectical behavior therapy skills. Behaviour Research and Therapy. 2009;47:921–930. doi: 10.1016/j.brat.2009.07.011.

- Dochy F, Segers M, van den Bossche P, Gijbels D. Effects of problem based learning: A meta-analysis. Learning and Instruction. 2003;13:533–568.

- Eyberg SM, Boggs SR, Algina J. Parent-child interaction therapy: A psychosocial model for the treatment of young children with conduct problem behavior and their families. Psychopharmacology Bulletin. 1995;31:83–91.

- Feifer C, Ornstein SM, Jenkins RG, Wessell A, Corley ST, Nemeth LS, et al. The logic behind a multimethod intervention to improve adherence to clinical practice guidelines in a nationwide network of primary care practices. Evaluation and the Health Professions. 2006;29:65–88. doi: 10.1177/0163278705284443.

- Fishbein M, Triandis H, Kanfer F, Becker M, Middlestadt S, Eichler A. Factors influencing behavior and behavior change. In: Baum A, Revenson T, Singer J, editors. Handbook of health psychology. Erlbaum; Mahwah, NJ: 2001.

- Fisher PA, Chamberlain P. Multidimensional treatment foster care: A program for intensive parenting, family support, and skill building. Journal of Emotional and Behavioral Disorders. 2000;8:155–164.

- Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network; Tampa, FL: 2005.

- Forsetlund L, Bjørndal A, Rashidian A, Jamtvedt G, O'Brien MA, Wolf F, et al. Cochrane Database of Systematic Reviews. Issue 2. John Wiley & Sons; 2009. Continuing education meetings and workshops: Effects on professional practice and health care outcomes.

- Ganju V. Implementation of evidence-based practices in state mental health systems: Implications for research and effectiveness studies. Schizophrenia Bulletin. 2003;29:125–131. doi: 10.1093/oxfordjournals.schbul.a006982.

- Garet MS, Porter AC, Desimone L, Birman BF, Yoon KS. What makes professional development effective? Results from a national sample of teachers. American Educational Research Journal. 2001;38:915–945.

- Garland AF, Brookman-Frazee L, Hurlburt MS, Accurso EC, Zoffness RJ, Haine-Schlagel R, et al. Mental health care for children with disruptive behavior problems: A view inside therapists' offices. Psychiatric Services. 2010;61:788–795. doi: 10.1176/appi.ps.61.8.788.

- Garland AF, Kruse M, Aarons GA. Clinicians and outcome measurement: What's the use? Journal of Behavioral Health Services & Research. 2003;30:393–405. doi: 10.1007/BF02287427.

- Gijbels D, Dochy F, van den Bossche P, Segers M. Effects of problem-based learning: A meta-analysis from the angle of assessment. Review of Educational Research. 2005;75:27–61.

- Glaser R. Toward new models for assessment. International Journal of Educational Research. 1990;14:475–483.

- Gotham HJ. Advancing the implementation of evidence-based practices into clinical practice: How do we get there from here? Professional Psychology: Research and Practice. 2006;37:606–613.

- Grimshaw JM, Eccles MP, Walker AE. Changing physicians' behavior: What works and thoughts on getting more things to work. Journal of Continuing Education in the Health Professions. 2002;22:237–243. doi: 10.1002/chp.1340220408.

- Grimshaw JM, Shirran L, Thomas R, Mowatt G, Fraser C, Bero L, et al. Changing provider behavior: An overview of systematic reviews of interventions. Medical Care. 2001;39:II-2–II-45.

- Grindrod KA, Patel P, Martin JE. What interventions should pharmacists employ to impact health practitioners' prescribing practices? Annals of Pharmacotherapy. 2006;40:1546–1557. doi: 10.1345/aph.1G300.

- Guskey TR. Results-oriented professional development: In search of an optimal mix of effective practices. Journal of Staff Development. 1994;15:42–50.

- Hammick M, Freeth D, Koppel I, Reeves S, Barr H. A best evidence systematic review of interprofessional education. Medical Teacher. 2007;29:735–751. doi: 10.1080/01421590701682576.

- Han SS, Weiss B. Sustainability of teacher implementation of school-based mental health programs. Journal of Abnormal Child Psychology. 2005;33:665–679. doi: 10.1007/s10802-005-7646-2.

- Hannah ST, Woolfolk RL, Lord RG. Leader self-structure: A framework for positive leadership. Journal of Organizational Behavior. 2009;30:269–290.

- Hawkins KA, Sinha R. Can front-line clinicians master the conceptual complexities of dialectical behavior therapy? An evaluation of a State Department on Mental Health training program. Journal of Psychiatric Research. 1998;32:379–384. doi: 10.1016/s0022-3956(98)00030-2.

- Haynes RB, Hayward RS, Lomas J. Bridges between health care research evidence and clinical practice. Journal of the American Medical Informatics Association. 1995;2:342–350. doi: 10.1136/jamia.1995.96157827.

- Haynes AB, Weiser TG, Berry WR, Lipsitz SR, Breizat AH, Dellinger EP, et al. A surgical safety checklist to reduce morbidity and mortality in a global population. New England Journal of Medicine. 2009;360:491–499. doi: 10.1056/NEJMsa0810119.

- Henggeler SW. Multisystemic therapy: An overview of clinical procedures, outcomes, and policy implications. Child Psychology and Psychiatry Review. 1999;4:2–10.

- Herschell AD, Kolko DJ, Baumann BL, Davis AC. The role of therapist training in the implementation of psychosocial treatments: A review and critique with recommendations. Clinical Psychology Review. 2010;30:448–466. doi: 10.1016/j.cpr.2010.02.005.

- Hoge MA, Morris JA, editors. Administration and Policy in Mental Health. Vol. 32. 2004. Implementing best practices in behavioral health workforce education: Building a change agenda; pp. 85–89. Special issue.

- Hoge MA, Morris JA, Daniels AS, Stuart GW, Huey LY, Adams N. An action plan for behavioral health workforce development. Annapolis Coalition on the Behavioral Health Workforce; Cincinnati, OH: 2007.

- Hoge MA, Tondora J, Stuart GW. Training in evidence-based practice. Psychiatric Clinics of North America. 2003;26:851–865. doi: 10.1016/s0193-953x(03)00066-2.

- Joyce BR, Showers B. Student achievement through staff development. 3rd ed. Association for Supervision and Curriculum Development; Alexandria, VA: 2002.

- Kazdin AE. Evidence-based treatment and practice: New opportunities to bridge clinical research and practice, enhance the knowledge base, and improve patient care. American Psychologist. 2008;63:146–159. doi: 10.1037/0003-066X.63.3.146.

- Kelleher K. Organizational capacity to deliver effective treatments for children and adolescents. Administration and Policy in Mental Health and Mental Health Services Research. 2010;37:89–94. doi: 10.1007/s10488-010-0284-1.

- Kerns SEU, Dorsey S, Trupin EW, Berliner L. Project focus: Promoting emotional health and wellbeing for youth in foster care through connections to evidence-based practices. Report on Emotional and Behavioral Disorders in Youth. 2010;10:30–38.

- Kirschner PA, Sweller J, Clark RE. Why minimal guidance during instruction does not work: An analysis of the failure of constructivist, discovery, problem-based, experiential, and inquiry-based teaching. Educational Psychologist. 2006;41:75–86.

- Knowles MS. Andragogy, not pedagogy. Adult Leadership. 1968;16:350–352.

- Linehan M. Cognitive-behavioral treatment of borderline personality disorder. Guilford Press; New York: 1993.

- Long N. Closing the gap between research and practice: The importance of practitioner training. Clinical Child Psychology and Psychiatry. 2008;13:187–190. doi: 10.1177/1359104507088341.

- Markey P, Schattner P. Promoting evidence-based medicine in general practice—the impact of academic detailing. Family Practice. 2001;18:364–366. doi: 10.1093/fampra/18.4.364.

- McCarthy P, Kerman B. Inside the belly of the beast: How bad systems trump good programs. Administration and Policy in Mental Health and Mental Health Services Research. 2010;37:167–172. doi: 10.1007/s10488-010-0273-4.

- McHugh RK, Barlow DH. The dissemination and implementation of evidence-based psychological treatments: A review of current efforts. American Psychologist. 2010;65:73–84. doi: 10.1037/a0018121.

- Merriam SB, Caffarella RS. Learning in adulthood: A comprehensive guide. Jossey-Bass Publishers; San Francisco, CA: 1999.

- Mevarech ZR. Teachers' paths on the way to and from the professional development forum. In: Guskey TR, Huberman M, editors. Professional development in education: New paradigms & practices. Teachers College Press; New York: 1995. pp. 151–170.

- Miller WR, Rollnick S. Motivational Interviewing. 2nd ed. Guilford Press; New York: 2002.

- Miller WR, Yahne CE, Moyers TB, Martinez J, Pirritano M. A randomized trial of methods to help clinicians learn motivational interviewing. Journal of Consulting and Clinical Psychology. 2004;72:1050–1062. doi: 10.1037/0022-006X.72.6.1050.

- Moll LC, editor. Vygotsky and education: Instructional implications of sociohistorical psychology. Cambridge University Press; Cambridge, England: 1990.

- Muijs D, Lindsay G. Where are we at? An empirical study of levels and methods of evaluating continuing professional development. British Educational Research Journal. 2008;34:195–211.

- Murray S, Ma X, Mazer J. Effects of peer coaching on teachers' collaborative interaction and students' mathematics achievement. The Journal of Educational Research. 2008;102:203–212.

- Nardella A, Pechet L, Snyder LM. Continuous improvement, quality control, and cost containment in clinical laboratory testing: Effects of establishing and implementing guidelines for preoperative tests. Archives of Pathology & Laboratory Medicine. 1995;119:518–522.

- O'Brien MA, Rogers S, Jamtvedt G, Oxman AD, Odgaard-Jensen J, Kristoffersen DT, et al. Cochrane Database of Systematic Reviews. Issue 4. Wiley; 2008. Educational outreach visits: Effects on professional practice and health care outcomes.

- Ockene JK, Zapka JG. Provider education to promote implementation of clinical practice guidelines. Chest. 2000;118:33S–39S. doi: 10.1378/chest.118.2_suppl.33s.

- Oxman AD, Thomson MA, Davis DA, Haynes RB. No magic bullets: A systematic review of 102 trials of interventions to improve professional practice. Canadian Medical Association Journal. 1995;153:1423–1431.

- Phan HP. Critical thinking as a self-regulatory process component in teaching and learning. Psicothema. 2010;22:284–292.

- Pintrich PR. Multiple goals, multiple pathways: The role of goal orientations in learning and achievement. Journal of Educational Psychology. 2000;92:544–555.

- Pintrich PR. A conceptual framework for assessing motivation and self-regulated learning in college students. Educational Psychology Review. 2004;16:385–407.

- Puetz BE. Differences between Indiana registered nurse attenders and nonattenders in continuing education in nursing activities. Journal of Continuing Education in Nursing. 1980;11:19–26. doi: 10.3928/0022-0124-19800301-06.