Abstract

Using lensfree holography we demonstrate optofluidic tomography on a chip. A partially coherent light source is utilized to illuminate the objects flowing within a microfluidic channel placed directly on a digital sensor array. The light source is rotated to record lensfree holograms of the objects at different viewing directions. By capturing multiple frames at each illumination angle, pixel super-resolution techniques are utilized to reconstruct high-resolution transmission images at each angle. Tomograms of flowing objects are then computed through filtered back-projection of these reconstructed lensfree images, thereby enabling optical sectioning on-a-chip. The proof-of-concept is demonstrated by lensfree tomographic imaging of C. elegans.

Optofluidics is a rapidly growing field, which aims to complement microfluidic systems with optical functionality toward highly integrated lab-on-a-chip devices.1, 2 Optofluidic chips offer significant advantages over conventional optical instruments by providing increased sensitivity and higher throughput in a compact and cost-effective platform. Despite being a relatively new field of research, numerous optofluidic platforms have been demonstrated so far such as sensors,3, 4, 5 tunable dye lasers,6 and flow cytometers.7

In the meantime, there has been a significant interest in lensfree imaging modalities toward the development of microscopes that can be integrated on a chip.8, 9 These lensfree imaging modalities can potentially allow replacing conventional bulky light microscopes with their compact and on-chip counterparts for use in microfluidic systems. For the same purpose, conventional optofluidic microscopy10 utilizes microfluidic channels to deliver the sample onto the imaging system, enabling integration of lensfree imaging with microfluidics. However, optofluidic microscopy modalities currently lack the ability to perform sectional imaging of the specimen. While numerous systems have been demonstrated for sectional imaging in the optical domain,11, 12, 13, 14, 15, 16 these modalities are based on lenses and employ bulky and complex architectures, impeding their integration with microfluidic platforms.

To address this important need in optofluidics, here we present the demonstration of an optofluidic tomographic microscope, which can perform three-dimensional (3D) imaging of specimens flowing within a microfluidic channel. In this optofluidic lensfree imaging modality, using a spatially incoherent light source (600 nm center wavelength with ∼10 nm spectral bandwidth, filtered by an aperture of diameter ∼0.05–0.1 mm) placed ∼50 mm away from the sensor, digital in-line holograms8 of the sample are recorded by an optoelectronic sensor array (Aptina MT9P031STC, Aptina, San Jose, 5 megapixels, 2.2 μm pixel size). While the holograms are being acquired, the objects are driven by electrokinetic flow along a microchannel, which is placed directly on the sensor with a slight tilt in the x-y plane (see Fig. 1). The exact value of this tilt angle is not critical and need not be known a priori; it simply ensures that the flow of the object along the microchannel generates a shift component in both x and y, enabling digital synthesis of higher resolution holograms through pixel super-resolution (SR). Owing to its unique hologram recording geometry with unit fringe magnification,8, 9 our holographic optofluidic microscopy platform permits imaging of the flowing objects using multiple illumination angles as shown in Fig. 1, which is the key to achieving optical computed tomography. Multiangle illumination for tomographic imaging would not be feasible with conventional optofluidic microscopy architectures10 since at higher illumination angles the projection images of different cross-sections of the same object would start to lose resolution due to increased distance and diffraction between the object and the aperture/sensor planes.

Figure 1.

A schematic diagram of the optofluidic tomography setup is shown (not to scale). The sample to be imaged flows within a microfluidic channel with <1 mm distance to the active area of the digital sensor array, and is illuminated from multiple angles by partially coherent light emanating from a large aperture (e.g., 0.05–0.1 mm) placed ∼50 mm away from the sample.

In our optofluidic tomography platform, at each illumination angle (spanning, e.g., θ=−50°:50°) we record several projection holograms (e.g., 15 frames) while the sample flows rigidly above the sensor array. These lower-resolution (LR) lensfree holograms are then digitally synthesized into a single super-resolved (SR) hologram by using pixel SR techniques9 to achieve a lateral resolution of <1 μm for each projection hologram corresponding to a given illumination direction. These SR projection holograms are digitally reconstructed to obtain complex projection images of the same object, which can then be back-projected using a filtered back-projection algorithm17 to compute tomograms of the objects.
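The idea of combining several subpixel-shifted LR frames into one finer-sampled hologram can be illustrated with a minimal shift-and-add sketch. This is a simplified stand-in, not necessarily the pixel SR method of Ref. 9. It assumes that the frames have already been registered to the nearest whole pixel, that the remaining fractional shifts are known, and that each LR pixel acts as a point sample. The function name `shift_and_add_sr` and its parameters are hypothetical.

```python
import numpy as np

def shift_and_add_sr(frames, shifts, factor):
    """Interleave LR frames onto a grid `factor` times finer.

    frames : list of 2D arrays of identical shape (LR holograms)
    shifts : list of (dy, dx) fractional sampling offsets in LR-pixel units,
             assumed known and in [0, 1)
    factor : integer upsampling factor
    Returns the averaged HR grid and a boolean map of the cells that were filled.
    """
    h, w = frames[0].shape
    acc = np.zeros((h * factor, w * factor))
    cnt = np.zeros_like(acc)
    for frame, (dy, dx) in zip(frames, shifts):
        # Snap the fractional shift to the nearest HR-grid offset
        oy = int(round(dy * factor)) % factor
        ox = int(round(dx * factor)) % factor
        acc[oy::factor, ox::factor] += frame
        cnt[oy::factor, ox::factor] += 1
    filled = cnt > 0
    out = np.zeros_like(acc)
    out[filled] = acc[filled] / cnt[filled]  # average frames sharing an offset
    return out, filled
```

With ∼15 frames per angle, most HR cells for a modest `factor` would typically be visited at least once; cells left unfilled would need interpolation or a regularized solver, which this sketch omits.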

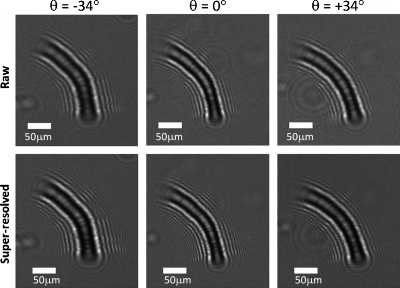

As a proof of concept for optofluidic tomography, we have conducted an experiment where a wild-type C. elegans worm was sequentially imaged during its flow within a microfluidic channel at various illumination angles spanning θ=−50°:50° in discrete increments of 2°. In our experiments, the design of the complementary metal-oxide semiconductor (CMOS) sensor chip ultimately limits the maximum useful angle of illumination. Most digital sensors are designed to work in lens-based imaging systems and therefore holograms recorded at illumination angles larger than ±50° exhibit artifacts. For this reason, we have limited our angular range to ±50°. For each illumination angle, we captured ∼15 holographic frames of the flowing object (in <3 s), resulting in a total imaging time of ∼2.5 min per tomogram under the electrokinetic flow condition. These illumination angles are automatically created by a computer-controlled rotation stage holding the light source, and they define rotation of the source within the x-z plane with respect to the detector array, which is located at the x-y plane. Some exemplary LR holograms recorded with this setup are illustrated in Fig. 2. As expected, for the tilted illuminations (θ=±34°) the extent of the holograms along x is wider compared to the θ=0° case. By using the subpixel shifts of the worm during its flow within the microchannel, we have synthesized SR holograms9 of the sample at each illumination angle as also illustrated in Fig. 2. These SR holograms exhibit finer fringes which are undersampled in the raw holograms.

Figure 2.

(Top) Lensfree raw holograms of a C. elegans sample at three different illumination angles (θ=0°, 34°, and −34°) are presented. (Bottom) SR holograms for the same illumination angles are shown. As expected, lensfree holograms are wider for the tilted illumination angles when compared to the vertical illumination case.

To obtain complex projection images of the sample through digital holographic reconstruction, the synthesized SR holograms are digitally multiplied with a tilted reference wave. The tilt angle of this reconstruction wave is not equal to the tilt of the light source because of the refraction of light in the microfluidic chamber. Instead, the digital reconstruction angle (θ) for each projection hologram is determined by calculating the inverse tangent of the ratio Δd/z2, where Δd denotes the lateral shift of the hologram of an object with respect to its position in the vertical projection image, and z2 can be either experimentally known, or determined by the digital reconstruction distance of the vertical projection hologram. It should be noted that despite the use of tilted illumination angles, the recorded holograms are still in-line holograms since the reference wave and the object wave propagate coaxially. As a result, an iterative phase recovery algorithm8 based on object-support constraint is utilized to reconstruct the complex field transmitted through the object. Throughout these iterations, the optical field is propagated back and forth between the parallel hologram and object planes. Once the iterations converge, the projection of the complex field in the plane normal to the illumination angle is obtained by interpolating the recovered field on a grid whose dimension along the tilt direction is rescaled by cos(θ). To give an example, some of these reconstructions are shown in Fig. 3 for θ=±34° and 0°, which demonstrates the multiangle SR imaging performance of our holographic optofluidic microscopy platform. The entire process of calculating an SR hologram and iteratively reconstructing the image within ∼15 iterations takes less than 0.25 s using a parallel compute unified device architecture (CUDA)-based implementation on a graphics processing unit (GPU) (NVidia Geforce GTX 480, NVidia, Santa Clara, CA).
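The reconstruction steps described above can be sketched as follows. This is a minimal illustration for the vertical-illumination case only (the multiplication with a tilted reference wave and the cos(θ) resampling are omitted), not the CUDA implementation used for the reported timings. It assumes a hologram normalized to unit background intensity, a known object support mask, and angular-spectrum propagation. All function names and parameters are hypothetical.

```python
import numpy as np

def reconstruction_angle(delta_d, z2):
    """Digital reconstruction angle in degrees: theta = arctan(delta_d / z2)."""
    return np.degrees(np.arctan2(delta_d, z2))

def propagate(field, dz, wavelength, pixel):
    """Angular-spectrum propagation of a complex field over distance dz.

    dz, wavelength and pixel must share the same length unit.
    """
    ny, nx = field.shape
    fy = np.fft.fftfreq(ny, d=pixel)[:, None]
    fx = np.fft.fftfreq(nx, d=pixel)[None, :]
    arg = 1.0 / wavelength**2 - fx**2 - fy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.exp(1j * kz * dz) * (arg > 0)  # discard evanescent components
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def recover_field(hologram, support, z2, wavelength, pixel, n_iter=15):
    """Support-constrained iterative phase recovery (illustrative sketch)."""
    amp = np.sqrt(hologram)          # measured amplitude at the sensor plane
    field = amp.astype(complex)      # initial guess: zero phase
    for _ in range(n_iter):
        obj = propagate(field, -z2, wavelength, pixel)
        # Object-plane constraint: outside the support, assume an
        # unobstructed unit-amplitude background (an assumption of this sketch)
        obj = np.where(support, obj, 1.0 + 0j)
        field = propagate(obj, z2, wavelength, pixel)
        # Sensor-plane constraint: keep the phase, restore measured amplitude
        field = amp * np.exp(1j * np.angle(field))
    return propagate(field, -z2, wavelength, pixel)
```

For instance, a hologram shifted laterally by Δd equal to z2 would give `reconstruction_angle(delta_d, z2)` of 45°.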

Figure 3.

Reconstruction results of tilted angle illumination (θ=−34°,0°,+34°) in holographic optofluidic microscopy are shown, in both amplitude and phase, along with a 40× objective-lens microscope comparison image corresponding to the vertical cross-section of the worm. Raw and SR holograms of the same sample are illustrated in Fig. 2.

For weakly scattering objects, the complex field obtained through digital holographic reconstruction (as shown in Fig. 3) represents the projection of the object’s complex transmission function (phase, absorption, or scattering potential) along the direction of illumination.11 Therefore, the 3D transmission function of the object can be computed in the form of a tomogram using a filtered back-projection algorithm17 where all the complex projection images (i.e., 51 SR images for θ=−50°:2°:50°) are used as input. To illustrate its performance, a lensfree optofluidic tomogram of a C. elegans sample is shown in Fig. 4 and in the Supplementary Movie (enhanced online), where several depth sections of the worm are provided. Such a tomographic imaging scheme mitigates the well-known depth-of-focus problem inherent in holographic reconstruction modalities,18 and allows optofluidic tomographic imaging with significantly improved axial resolution. This entire tomographic reconstruction process (including the synthesis of the SR holograms and the filtered back-projection) takes less than 3.5 min using a single GPU, which could be significantly shortened by using several GPUs in parallel. Based on these tomographic reconstruction results, the full-width-half-maximum (FWHM) of the axial line-profile of the amplitude of the worm’s transmission was measured as ∼30 μm, which agrees well with the typical thickness of a C. elegans sample. Without computing tomograms, the same axial FWHM using a single SR vertical lensfree hologram (θ=0°) would have been ∼1 mm, which clearly demonstrates the depth-of-focus improvement using multiple projections. We would also like to emphasize that the long depth-of-focus inherent to our lensfree holograms indeed helps to satisfy the projection approximation11 for an extended depth-of-field, permitting tomographic imaging of weakly scattering samples such as C. elegans.
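A limited-angle filtered back-projection of one x-z slice can be sketched as below. This is a generic textbook-style illustration rather than the weighted back-projection implementation of Ref. 17. It assumes parallel projections, a plain ramp filter, the sign convention s = x cos θ + z sin θ for the detector coordinate, and an arbitrary overall normalization. The function names are hypothetical.

```python
import numpy as np

def ramp_filter(sinogram):
    """Apply a ramp (|f|) filter along the detector axis of each projection."""
    n = sinogram.shape[1]
    ramp = np.abs(np.fft.fftfreq(n))
    return np.fft.ifft(np.fft.fft(sinogram, axis=1) * ramp, axis=1)

def back_project(sinogram, angles_deg, size):
    """Back-project filtered complex projections onto a size x size (z, x) slice.

    sinogram   : array of shape (n_angles, n_detector), real or complex
    angles_deg : illumination angles in degrees, one per projection
    """
    filtered = ramp_filter(sinogram)
    n = sinogram.shape[1]
    c = (size - 1) / 2.0
    z, x = np.mgrid[0:size, 0:size] - c        # slice coordinates, centered
    s_axis = np.arange(n) - (n - 1) / 2.0      # detector coordinates, centered
    recon = np.zeros((size, size), dtype=complex)
    for proj, theta in zip(filtered, np.radians(angles_deg)):
        # Detector position hit by each slice pixel (assumed sign convention)
        s = (x * np.cos(theta) + z * np.sin(theta)).ravel()
        re = np.interp(s, s_axis, proj.real)
        im = np.interp(s, s_axis, proj.imag)
        recon += (re + 1j * im).reshape(size, size)
    return recon / len(angles_deg)             # arbitrary normalization

# The 51 illumination angles used here: -50 deg to +50 deg in 2 deg steps
angles = np.arange(-50, 51, 2)
```

Repeating this for every position along the remaining lateral axis would stack the slices into a 3D tomogram.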

Figure 4.

Tomographic optofluidic imaging of C. elegans is illustrated at different depth slices (z=−6 to +6 μm). The FWHM of the amplitude of the line profile of the worm along the z direction is ∼30 μm, which roughly agrees with the thickness of its body. The colorbar applies only to the tomographic images. Scale bars: 50 μm. For the other depth slices, the readers can refer to the Supplementary Movie (enhanced online).

We should also note that due to the limited angular range of holograms that can be recorded, there is a missing region in the Fourier space of the object, commonly known as the “missing wedge.”19 The most significant effect of the missing wedge is the elongation of the point spread function (PSF) in the axial direction, which limits the axial resolution, which we estimate to be ∼3 μm in our case, to a value larger than the lateral resolution. Although not implemented in this manuscript, reduction of such artifacts can be achieved by implementing iterative constraint algorithms based either on the 3D support of the object or on a priori information about the transmission function of the object, which enables iteratively filling the missing region in the 3D Fourier space of the object function.20
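The size of the missing wedge can be estimated with a short sketch. Under the projection approximation, each projection at angle θ fills one line through the origin of the (kx, kz) Fourier plane, so an angular range of ±50° covers roughly 100°/180° ≈ 56% of that plane. The code below counts this on a discrete grid; the slice orientation convention (angle measured from the kx axis) and the function names are assumptions of this illustration.

```python
import numpy as np

def wedge_mask(n, max_angle_deg):
    """Boolean maps on an n x n (kz, kx) grid: covered samples and a centered disc."""
    k = np.fft.fftshift(np.fft.fftfreq(n))
    kz, kx = np.meshgrid(k, k, indexing="ij")
    # Angle between each frequency sample's line through the origin and the kx axis
    slice_angle = np.degrees(np.arctan2(np.abs(kz), np.abs(kx)))
    covered = slice_angle <= max_angle_deg
    in_disc = np.hypot(kx, kz) <= 0.5   # restrict to an isotropic region
    return covered, in_disc

def covered_fraction(n, max_angle_deg):
    """Fraction of the disc in Fourier space sampled by the projections."""
    covered, in_disc = wedge_mask(n, max_angle_deg)
    return covered[in_disc].mean()

fraction = covered_fraction(256, 50.0)  # close to 100/180 for a ±50° range
```

The uncovered region lies around the kz axis, which is consistent with the axial elongation of the PSF described above.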

In summary, we have presented the demonstration of optofluidic tomographic microscopy using partially coherent lensfree digital in-line holography. This on-chip tomographic imaging modality would be quite valuable for microfluidic lab-on-a-chip platforms where high-throughput 3D imaging of the specimen is needed.

Acknowledgments

A. Ozcan gratefully acknowledges the support of NSF (CAREER Award on BioPhotonics), the Office of Naval Research (ONR) under the Young Investigator Award 2009, and the NIH Director’s New Innovator Award (Award No. DP2OD006427) from the Office of The Director, National Institutes of Health. The authors also acknowledge the support of the Bill & Melinda Gates Foundation, Vodafone Americas Foundation, and NSF BISH program (under Award Nos. 0754880 and 0930501). S.O.I. and W.B. contributed equally to the manuscript.

References

- Psaltis D., Quake S. R., and Yang C., Nature (London) 442, 381 (2006). doi:10.1038/nature05060

- Monat C., Domachuk P., and Eggleton B. J., Nat. Photonics 1, 106 (2007). doi:10.1038/nphoton.2006.96

- Mandal S. and Erickson D., Opt. Express 16, 1623 (2008). doi:10.1364/OE.16.001623

- Domachuk P., Littler I. C. M., Golomb M. C., and Eggleton B. J., Appl. Phys. Lett. 88, 093513 (2006). doi:10.1063/1.2181204

- De Leebeeck A., Kumar L. K. S., de Lange V., Sinton D., Gordon R., and Brolo A. G., Anal. Chem. 79, 4094 (2007). doi:10.1021/ac070001a

- Song W. and Psaltis D., Appl. Phys. Lett. 96, 081101 (2010). doi:10.1063/1.3324885

- Godin J., Chen C. H., Cho S. H., Qiao W., Tsai F., and Lo Y. H., J. Biophoton. 1, 355 (2008). doi:10.1002/jbio.200810018

- Mudanyali O., Tseng D., Oh C., Isikman S. O., Sencan I., Bishara W., Oztoprak C., Seo S., Khademhosseini B., and Ozcan A., Lab Chip 10, 1417 (2010). doi:10.1039/c000453g

- Bishara W., Su T., Coskun A. F., and Ozcan A., Opt. Express 18, 11181 (2010). doi:10.1364/OE.18.011181

- Heng X., Erickson D., Baugh L. R., Yaqoob Z., Sternberg P. W., Psaltis D., and Yang C., Lab Chip 6, 1274 (2006). doi:10.1039/b604676b

- Walls J. R., Sled J. G., Sharpe J., and Henkelman R. M., Phys. Med. Biol. 50, 4645 (2005). doi:10.1088/0031-9155/50/19/015

- Choi W., Fang-Yen C., Badizadegan K., Oh S., Lue N., Dasari R. R., and Feld M. S., Nat. Methods 4, 717 (2007). doi:10.1038/nmeth1078

- Debailleul M., Simon B., Georges V., Haeberle O., and Lauer V., Meas. Sci. Technol. 19, 074009 (2008). doi:10.1088/0957-0233/19/7/074009

- Charrière F., Pavillon N., Colomb T., Depeursinge C., Heger T. J., Mitchell E. A. D., Marquet P., and Rappaz B., Opt. Express 14, 7005 (2006). doi:10.1364/OE.14.007005

- Zysk A. M., Nguyen F. T., Oldenburg A. L., Marks D. L., and Boppart S. A., J. Biomed. Opt. 12, 051403 (2007). doi:10.1117/1.2793736

- Huisken J., Swoger J., del Bene F., Wittbrodt J., and Stelzer E. H. K., Science 305, 1007 (2004). doi:10.1126/science.1100035

- Radermacher M., Weighted Back-Projection Methods. Electron Tomography: Methods for Three Dimensional Visualization of Structures in the Cell, 2nd ed. (Springer, New York, 2006).

- Meng H. and Hussain F., Appl. Opt. 34, 1827 (1995). doi:10.1364/AO.34.001827

- Mastronarde D. N., J. Struct. Biol. 120, 343 (1997). doi:10.1006/jsbi.1997.3919

- Verhoeven D., Appl. Opt. 32, 3736 (1993). doi:10.1364/AO.32.003736