Abstract

Purpose

Children with speech sound disorder (SSD) and reading disability (RD) have poor phonological awareness, a problem believed to arise largely from deficits in processing the sensory information in speech, specifically individual acoustic cues. However, such cues are details of acoustic structure. Recent theories suggest that listeners also need to be able to integrate those details to perceive linguistically relevant form. This study examined abilities of children with SSD, RD, and SSD+RD not only to process acoustic cues but also to recover linguistically relevant form from the speech signal.

Method

Ten- to 11-year-olds with SSD (n = 17), RD (n = 16), SSD+RD (n = 17), and Controls (n = 16) were tested to examine their sensitivity to (1) voice onset times (VOT); (2) spectral structure in fricative-vowel syllables; and (3) vocoded sentences.

Results

Children in all groups performed similarly with VOT stimuli, but children with disorders showed delays on other tasks, although the specifics of their performance varied.

Conclusion

Children with poor phonemic awareness not only lack sensitivity to acoustic details, but are also less able to recover linguistically relevant forms. This is contrary to one of the main current theories of the relation between spoken and written language development.

Keywords: speech sound disorder, reading disability, speech perception

1. Introduction

The overall goal of this study was to test the similarity of deficits in phonological representations in three groups of 10- to 11-year-old children who have different clinical outcomes (Speech Sound Disorder1, Reading Disability, and Speech Sound Disorder +Reading Disability: SSD, RD, and SSD+RD), despite sharing a current or past underlying deficit in phonemic awareness (PA). The choice of these three contrasting groups allowed us to address several theoretically important questions: (1) How similar are the deficits in phonological representations that disrupt speech production in SSD to those that disrupt the decoding of printed words and nonwords in RD? (2) Do these deficits in SSD persist after the speech production problem has resolved? (3) If these deficits in phonological representations in SSD and RD are similar, how do we explain differences in clinical presentation, especially SSD without later RD and RD without earlier SSD? And (4) Are the deficits in these three groups exclusively at the “segmental” level, as is widely believed?

Questions (1), (3), and (4) bear on the widely accepted phonological theory of RD (Fowler, 1991; Snowling, 2000; Stanovich & Siegel, 1994). If, as the phonological theory of RD holds, a deficit in phonemic awareness (PA) is sufficient to cause RD, there should not be children with an early PA deficit who develop normal reading. But some children with SSD who have demonstrable PA deficits go on to learn to read normally (Peterson, Pennington, Shriberg & Boada, 2009; Stothard, Snowling, Bishop, Chipchase, & Kaplan, 1998). This finding seriously threatens the phonological theory of RD, unless the underlying problem in phonological development in children with SSD without later RD is different from that found in children with early PA deficits who later become RD (RD only). Finally, if children with RD or SSD+RD have deficits in phonological representations beyond the segmental level, the phonological theory of RD is also threatened because this theory assumes that the problem in phonological representations is restricted to phonemic representations (e.g. Fowler, 1991).

The current study builds on our earlier work with a large longitudinal study of children with speech sound disorder (SSD), followed from age 5 until age 8 in order to study their reading development. In that study, we have found that deficits on measures of PA and other phonological tasks were pervasive among the children with SSD before formal literacy instruction commenced (Raitano, Pennington, Tunick, Boada, & Shriberg, 2004), but that only a minority (less than 25%) of the children developed later RD (Peterson, Pennington, Shriberg, & Boada, 2009). Specifically, in Raitano et al. (2004) we found that all four subgroups of SSD children (defined by crossing two dichotomies: language impaired (LI) vs. not LI and persisting vs. resolved speech production problems) had PA deficits relative to controls, and that even the least affected subgroup (without LI or a current problem in speech production) had a moderate PA deficit relative to controls without LI or SSD (Cohen’s d = .78). However, this same group did not differ significantly from controls on letter knowledge, which was significantly impacted in the overall SSD group by the presence of comorbid LI, but not by the persistence of speech problems. So, a disassociation between PA (and speech) deficits and intact literacy development was already apparent at age 5, with literacy development more related to language skill than to the presence of speech or PA problems. These dissociations were maintained at follow-up at age 8 (Peterson, et al. 2009), when reading data were collected. We found that reading at time 2 was predicted by time 1 language but not by time 1 speech, but that both factors predicted time 2 PA. So, at time 2, there were SSD children with persisting PA deficits who did not develop RD, contrary to the phonological hypothesis. In a separate paper (Treiman, Pennington, Shriberg, and Boada, 2008), we probed this dissociation between PA and early literacy further and found that even SSD children with chance performance on PA tasks were using letter names to learn letter sounds just as typical readers do. Taken together, these results indicate that children with SSD and PA deficits can nonetheless use letter names and sounds to learn to read, sometimes normally. In contrast, children at family risk for RD who later become RD also have early PA deficits of a similar magnitude at age 5, but only a minority of them have SSD (Pennington & Lefly, 2001). So, across these two longitudinal studies in our lab, we have found dissociations among SSD, PA deficits, and later RD that question the phonological theory of RD. To better understand these dissociations, we conducted the present study.

Specifically, the present study extends the longitudinal study of our SSD sample to a third time point in order to study phonological development in SSD and RD in more detail. In the present study, we tested a representative subset of each of two groups of SSD children (SSD only and SSD+RD) and our control group at roughly age 11 years. We also added an RD group without history of earlier SSD or language problems, who would also be expected to have a PA deficit, in order to address Question (1) concerning whether the deficits in phonological representations found in RD and SSD are similar. To answer this question, we examined sensitivity to several kinds of structure in the speech signal using different speech perception tasks.

1.1 Historical perspectives on phonological and literacy development and disorder

It is well known that early speech and language difficulties often predict the development of a reading disability once a child reaches school age (Bishop & Adams, 1990; Catts, Fey, Tomblin, & Zhang, 2002; Hall & Tomblin, 1978). While the basis for this relationship is still being explored, mounting evidence suggests that the disorders share several deficits, primarily one in phonemic awareness, thus implicating phonological development in both SSD and RD, as well as in language impairment (Bird, Bishop, & Freeman, 1995; Clarke-Klein & Hodson, 1995; Leonard, 1982; Lewis & Freebairn, 1992). Phonological structure can be considered at a number of levels, including words, syllables, demisyllables (onset and rhyme), and phonemes. However, reading and language researchers commonly measure a child’s phonological development with tasks involving PA (see Swanson, Trainin, Necoechea, and Hammill, 2003). These tasks require awareness explicitly of the phonetic structure of words so that phonemes can be separately recognized and manipulated. Reading researchers have focused on the phonemic level because of the importance of the phoneme-grapheme correspondence in learning to read an alphabetic orthography, as we have in English. Nonetheless, the “phoneme” itself is not a discrete temporal segment; instead it is conceptually defined by several different aspects of the acoustic structure of the speech signal. As a result, each child must discover anew that relation between acoustic and phonetic structure in the native language being learned.

The label SSD refers to difficulties usually arising during the preschool years in the production of intelligible spoken language (Shriberg, 2003), which led earlier clinicians and researchers to assign a primary sensorimotor deficit to the disorder, calling it “functional articulation disorder” (see discussion in Bishop, 1997). However, this view of SSD is called into question by several lines of evidence, including speech error patterns and the performance of groups with SSD on various phonological tasks, including those that do not require a spoken output. Children with SSD sometimes produce a sound correctly in one context but not in another, and most of their errors are substitutions or omissions of phonemes, not the distortions we would expect if they had an imprecise motor program (Leonard, 1995). In addition, children with SSD have reliably been shown to have difficulty recognizing and manipulating phonetic structure, (e.g., Bird, Bishop, & Freeman, 1995; Rvachew, 2007; Rvachew, Ohberg, Grawburg, & Heyding, 2003), although some studies have found that the severity of the sensorimotor and phonological deficits are not well correlated (e.g., Larrivee & Catts, 1999; Rvachew & Grawburg, 2006). Moreover, there is some evidence that children with SSD are not as sensitive to all parts of the acoustic speech signal as are typically developing children (Rvachew & Jamieson, 1989).

In comparison, RD, or dyslexia, generally does not become obvious until school age, when difficulties learning written language appear. These children typically have age-appropriate speech intelligibility, but problems are seen in learning both to recognize printed words and to spell (Dickman, 2003). Reading disability is strongly associated with phonological difficulties, so much so that a core phonological deficit has been proposed as the cause of RD (Fowler, 1991; Stanovich & Siegel, 1994; Snowling, 2000). While the relation between reading and sensitivity to phonological structure is likely bidirectional (Wagner, Torgesen, & Rashotte, 1994), there is wide consensus that RD stems from a phonological deficit, independent of the type or amount of reading instruction (Vellutino, Fletcher, Snowling, & Scanlon, 2004). As a result, children and adults with dyslexia have difficulty constructing, maintaining, and retrieving explicitly phonetic representations, causing a range of problems at the behavioral level on tasks such as verbal short-term memory, non-word repetition, learning of new phonological forms, word retrieval, and rapid naming. In particular, the phonological deficit associated with RD is observed in PA tasks, on which children and adults with RD perform especially poorly (e.g., Mann & Liberman, 1984; Nittrouer & Miller, 1999; Pennington, Van Orden, Smith, Green, & Haith, 1990; Pratt & Brady, 1988; Wagner & Torgesen, 1987). In sum, deficits on PA tasks precede and predict later RD and are among the most persistent deficits in adults with RD (e.g. Pennington et al., 1990). Moreover, deficits in PA are genetically correlated with RD (rg = .67 in Tiu, Wadsworth, Olson, & DeFries, 2001). This high genetic correlation means that about two thirds of the genetic influences acting on either PA or RD deficits are shared by both deficits. But nonetheless, the genetic correlation does not specify the nature of the relation, which could run from RD to PA instead of the reverse, and may not be specific to PA.

The phonological theory of RD has considerable empirical support, but questions about it remain (Byrne, 2009; Castles & Coltheart, 2004; Pennington, 2006; Scarborough, 2005; Treiman et al., 2008). These questions arise because 1) letter knowledge is more predictive of later reading than is PA (Scarborough, 2005); 2) pre-dyslexic children have deficits in language, letter knowledge, and learning rate in addition to their deficits in PA (Scarborough, 2005); 3) we lack definitive evidence that selectively manipulating PA in preschool improves later reading because experimental training studies purporting to demonstrate a causal link between PA and reading skill have frequently not restricted the training to PA (Castles & Coltheart, 2004); 4) the genetic influences shared by PA and later reading skill are not specific to PA, but are shared with general verbal ability, print knowledge, and rapid serial naming (Byrne, 2009); and 5) as mentioned previously, children lacking PA can still use letter names to learn letter sounds to begin to read (Treiman et al., 2008). Thus, it may be more appropriate to view a PA deficit as an important risk factor for later RD among others, but not as a sufficient cause of later RD, as the phonological theory holds. By studying children for whom there is a disassociation between earlier PA deficits and later RD, as is true for the majority of SSD children in the current Denver SSD sample, we can shed light on other risk and protective factors affecting literacy outcome.

Moreover, although children with both SSD and RD exhibit deficits in PA, the question has yet to be answered regarding whether the deficit in phonological representations underlying the PA deficit is the same for both disorders. One reason to suspect that it may be the same is that the two diagnoses are frequently given to the same children. Comorbidity of SSD with RD is estimated to be 25-30 percent (Gallagher, Frith, & Snowling, 2000; Pennington & Lefly, 2001; Snowling, 1990), suggesting that the two disorders may be related to the same underlying deficit, and yet present slightly differently. Several possibilities can be entertained for why that might be. Perhaps children with SSD and RD share the same phonological deficit, but it differs in severity.

One version of this severity hypothesis was proposed by Harm & Seidenberg (1999) based on connectionist simulations of speech perception and reading development. This theory holds that the severity of deficits in phonological representations determines whether problems are observed in both speech perception and reading (associated with more severe deficits in phonological representations) or only in reading (associated with less severe deficits). Extending their theory to children with SSD and/or RD, Pennington (2006) reasoned that the severity hypothesis would predict that children with SSD and later RD must have a more severe deficit because it affects speech perception, production, and later reading, whereas children with RD without earlier SSD have a milder deficit in phonological representations that only affects reading development. This theory also predicts that SSD children without later RD must have a different form of SSD (without deficits in phonological representations or at least milder deficits than are found in RD only children) compared to children with SSD+RD. Because 70-75 percent of children with SSD in preschool do not go on to be diagnosed with RD, this theory must also hold that most children with SSD have this different form of SSD, not associated with problems in speech perception and PA (see Rvachew, 2007, which provides some evidence for this subtype hypothesis). However, the previously discussed findings from the Denver SSD longitudinal study (Peterson et al., 2009; Raitano et al., 2004), which was explicitly designed to test this severity hypothesis, are not consistent with the subtype hypothesis; PA and other phonological deficits were pervasive in our large SSD sample, but only a minority developed later RD. Even children who did not develop later RD had persisting deficits in PA. These findings lead us to postulate that additional cognitive risk or protective factors in addition to a PA deficit determine literacy outcome in SSD. Another possibility, tested in the present study, is that the nature of the phonological deficit in SSD without RD is distinct from that found in SSD+RD or RD only. So, the current study sought to further explore the nature of the deficits underlying these disorders by employing multiple measures of speech perception in children with SSD, RD, and SSD+RD.

While there is agreement that phonological development is impaired in SSD and RD, there are different theories regarding exactly how it is impaired. These competing theories can be distinguished by how they regard the role of the phonetic segment in speech, language, and reading development. Most reading researchers have embraced a segmental theory, exemplified by the segmentation hypothesis proposed by Fowler (1991) as an elaboration of the phonological model of RD. In turn, that hypothesis was based on the work of others, such as Ferguson and Farwell (1975), Menn (1983a, 1983b), Nittrouer and Studdert-Kennedy (1987), and Waterson (1971) regarding typical speech development. The segmentation hypothesis proposes that as children’s vocabularies develop, lexical representations become increasingly segmented, such that first they are in the form of whole word shapes, then syllables, followed by onsets and rhymes, and finally by individual phonemes. Eventual PA difficulties stem from phonological representations that fail to keep pace with typical developmental changes in specificity. In the current study, two of the speech perception tasks examined children’s sensitivity for signal components clearly affiliated with phoneme representations. The third speech perception task was designed to explore relatively recent hypotheses concerning speech perception, phoneme representations, and the source of PA difficulties. Until recently, primary hypotheses concerning the source of PA difficulties have focused on acoustic properties clearly affiliated with phonetic segments. However, perhaps being sensitive to such properties is not sufficient to support the formation of well-defined phonological representations. Perhaps children must also be sensitive to speech-relevant acoustic structure that extends over ranges broader than isolated phonetic segments and perhaps it is important for listeners to be able to integrate across all kinds of acoustic structure, those obviously affiliated with phonemic segments as well as those more broad in nature, in order to derive linguistic structure.

1.2 Speech Perception and Phoneme Representation: Bottom-up effects

Phoneme representations can be thought of as learned functions that, over the course of development and using information from a variety of contexts, map acoustic structure onto the abstract categories we call phonemes. The precision with which individuals accomplish this mapping varies cross-linguistically (Caravolas and Landerl, 2010) and across ages (Treiman and Zukowski, 1991). As a result, the distinctiveness in representation of separate phonemes varies. For instance, on tasks requiring the manipulation of a single phoneme in an utterance, Chinese adults who only read logographic characters perform similarly to illiterate Portuguese adults, and are significantly worse than Chinese adults who learned to read both the traditional logographic characters and an alphabetic system (Read, Zhang, Nie, & Ding, 1986; Morais, Cary, Alegria, & Bertelson, 1979). It has also been found that illiterate adults, like pre-reading children (Treiman and Zukowski, 1991) have the ability to analyze utterances into syllables, and to identify rhyming, despite lacking skill with more segmented phonemic analysis (de Santos Loureiro et al., 2004). Normally developing children refine their phoneme representations only once they begin to learn how to read (e.g., Olson, Wise, & Forsberg, 1996), possibly due to necessary refinement of the phoneme representation in order to associate the phoneme with a particular letter, or grapheme (Castles and Coltheart, 2004). The development of this phoneme-grapheme correspondence may drive the strong correlation between the acquisition of the alphabetic system, and the ability to perform explicit manipulations of phonetic segments. Because precise representations are needed in order to comprehend alphabetic orthographies, it would be useful to have a way of evaluating the precision of those representations.

While theoretically appealing, it is difficult to develop methods of quantifying the precision of individuals’ phonemic representation, or of how well they perform the mapping of acoustic to phonetic structure. In the literature on speech perception in children with developmental language disorders or RD, many studies have focused on the labeling of phonemes based on manipulation of individual acoustic cues. For example, researchers designed stimuli in which all acoustic properties were held constant except for one, such as voice onset time, which was varied continuously. Voice onset time (VOT) is defined as the time between the release of a supralaryngeal closure and subsequent laryngeal pulsing (Lisker & Abramson, 1964). It is, so to speak, the quintessential acoustic cue. In English, short lags in the onset of voicing (laryngeal pulsing) are perceived as voiced stops [b, d, g] and long lags in VOT are perceived as voiceless stops [p, t, k]. Stimuli can be designed in which VOT varies from values signaling voiced initial stops to values signaling voiceless initial stops. When typical participants are presented with stimuli in which VOT changes across stimuli in steps of equal size, but with the rest of the cues remaining constant, they do not demonstrate continuous labeling functions. That is, there are not equivalent changes in perception across VOT steps. Instead there are regions at either end of the continuum where labeling is fairly stable. The region of ambiguous labeling is restricted to rather brief sections at the middle of the continuum. In other words, perception is categorical (e.g., Lisker & Abramson, 1970).

Studies have shown that infants can discriminate between syllables forming minimal pairs that are not part of the child’s language. Only with exposure over the first year of life do children begin to lose their abilities to discriminate minimal pairs not found in their native language (Jusczyk, Houston, & Newsome, 1999; Werker & Tees, 1983). But although responses from one-year-olds suggest recognition of adult phonemic categories, perception remains immature for many years to come. In addition to the location of the VOT phoneme boundary shifting for some contrasts as children get older (Ohde & Sharf, 1988), the slope of the VOT labeling function becomes steeper with age (Hazan & Barret, 2000; Holden-Pitt, Hazan, Revoile, Edward, & Droge, 1995; Walley & Flege, 1999). Even at the age of 12 years, typically developing children are not demonstrating labeling functions as sharp as those of adults (Hazan & Barret, 2000).

When the performance of children with RD is compared to age-matched children, some studies have reported that labeling functions are even shallower in children with RD (e.g., Bogliotti, Serniclaes, Messaoud-Galusi, & Sprenger-Charolles, 2008; Chiappe & Chiappe, 2001; Godfrey, Syrdal-Lasky, Millay, & Knox, 1981). However, other studies have only found very weak evidence or found that only a subset of RD participants had abnormal labeling performance (Adlard & Hazan, 1998; Joannise, Manis, Keating, & Seidenberg, 2000; Manis et al., 1997; McBride-Chang, 1996; Werker & Tees, 1987). Far fewer studies have examined how children with SSD label stimuli varying along acoustic continua, in spite of the fact that reduced PA abilities and high rates of comorbid language impairment are observed for these children. Those that have, however, have found that these children show poor sensitivity to the acoustic cue manipulated (e.g., Hoffman, Daniloff, Bengoa, & Schuckers, 1985; Rvachew & Jamison, 1989). Other studies have examined how well children with SSD can identify natural members of minimal pairs likely to create difficulty in production, such as /r/ vs. /w/, /s/ vs. /∫/, and /s/ vs. /ts/ (Ohde & Sharf, 1988; Raaymakers & Crul, 1988). Looking across studies it has been found that children who could not produce the difference between the phonemes or consonant clusters also could not perceive the differences.

It has become clear that there is a need to examine speech perception in these populations from other perspectives. One reason is that the studies demonstrating categorical perception of acoustic cues underlying native phonetic categories typically manipulate only one cue. In fact, several acoustic properties generally define phonetic categories, and the way that perceptual attention is distributed across those properties cannot be examined with traditional one-cue methods. The Developmental Weighting Shift theory (DWS; Nittrouer, Manning, & Meyer, 1993) suggests that the kinds of acoustic properties to which a child attends change as the child gains experience with a native language. Methods developed based on this theory attempt to present speech cues in a more natural manner, examining preference for one cue over another, instead of manipulating only one phonetic cue. Results from a series of studies using such methodology (e.g., Nittrouer, 1992; 1996a; Nittrouer & Miller, 1997; Nittrouer & Studdert-Kennedy, 1987) have shown that children initially attend to dynamic acoustic properties related to vocal tract movement from one constriction into another: that is, the formant transition. As a child becomes more skilled with the native language, between 3.5 and 7.5 years of age, attention shifts to more specific kinds of cues, such as silent gaps (indicating periods of vocal tract closure), durations of units such as the vowels preceding final stops, or the spectral distribution of fricative noises. Accordingly, the DWS theory has been supported by studies of acoustic cues such as syllable duration and formant onset frequency (Hicks & Ohde, 2005; Nittrouer, 2004; Ohde & Haley, 1997; Walley & Carrell, 1983).

The DWS has been studied in groups with varying speech and language difficulties. For example, Nittrouer (1996b) found that children with both chronic otitis media (ear infections) and low socio-economic status (SES) showed delays in the acquisition of mature weighting strategies and PA, even with the contribution of nonverbal IQ removed. Nittrouer (1999) found similar results for children with RD, such that the phonologically impaired RD group used more immature weighting strategies. Similarly, Boada and Pennington (2006) reported that children with RD relied more on dynamic formant transition cues than both chronological-age-matched controls and reading-age-matched controls. Consistent with the hypothesis that the weighting of acoustic cues may be related to the organization of phonological representations, Boada and Pennington (2006) found significant correlations between speech perception strategies and measures of PA. Again, these results indicate that skill in all three domains (speech perception, phonemic awareness, and reading) are related and reduce the likelihood that reading experience alone drives PA or the precision of phoneme representations.

1.3 Global structure analysis

Another reason to suggest that the focus of speech perception research with children who have RD or SSD needs to be broadened is that the kind of acoustic structure examined heretofore has typically involved signal detail. Following basic studies of human speech perception, investigations into the deficits underlying RD and/or SSD have most often manipulated acoustic properties that are temporally brief and spectrally isolated. These are the properties that fit the original definition of acoustic cues (Repp, 1982). However, there is structure in the speech signal that is broader spectrally and longer temporally that also supports recovery of phonological representations. This level of structure has been termed “global,” and refers to the kind of structure that can only be resolved from signal stretches covering at least several syllables (Nittrouer, Lowenstein, & Packer, 2009). This kind of structure impacts the amplitude of a signal and arises from general postural settings of the vocal tract, and from the slow modulations in vocal tract shape and size.

Such structure can be examined in three key ways: through the amplitude envelope, through amplitude modulated, and through sine-wave speech. Different components of speech production affect signal amplitude differently, creating variations in the overall amplitude, or intensity, of the speech signal across time. These variations together comprise the amplitude envelope, or temporal envelope, as it is also known. The current study examined children’s abilities to use this amplitude envelope in their speech recognition Previous experiments looking at amplitude structure in speech perception divided the signal into several frequency bands, half-wave rectifying each band to derive the amplitude envelope, and multiplying noise bands by those envelopes. Using noise as the modulated signal has the intended purpose of largely removing spectral structure. Some of the first investigators to use this signal processing technique, a kind of vocoding, were Shannon, Zeng, Wygonski, Kamath, and Ekelid (1995), and they found that adults listening to their native language had sufficient information for accurate sentence recognition with roughly four bands. This experimental manipulation was ground-breaking because it demonstrated that listeners – at least adults hearing their first language – could recover linguistic structure, even when the acoustic details studied in traditional speech perception experiments, as well as spectral structure, were greatly reduced. Thus, global amplitude structure may be important to linguistic processing of heard speech signals, something that had never been studied in typical children, let alone in children with language disabilities.

Eisenberg, Shannon, Schaefer Martinez, Wygonski, and Boothroyd (2000) were the first to use this vocoding processing scheme to examine speech perception in children. Comparing the numbers of words recognized in the sentences presented, Eisenberg et al. found that children between 5 and 7 years old were significantly less accurate than adults and older children when fewer than eight channels were presented; all listeners were quite accurate with eight or more channels. This result was later replicated by Nittrouer et al. (2009), so it may be concluded that younger children are less skilled at using both the details of the signal (acoustic cues) as well as the global structure that provides information about syllabic and word structure.

A different line of research, exploring amplitude modulation, has indicated that insensitivity to amplitude changes in non-linguistic stimuli is associated with RD and language impairment. To study this phenomenon, researchers have modulated amplitude in non-speech tasks (Corriveau, Pasquini, & Goswami, 2007; Hamalainen et al., 2005; Lorenzi, Dumont, & Fullgrabe, 2000; Muneaux, Ziegler, Truc, Thomson, & Goswami, 2004; Richardson, Thomson, Scott, & Goswami, 2004; Witton, Stein, Stoodley, Rosner, & Talcott, 2002). For example, Goswami et al. (2002) asked children with or without RD to judge whether stimuli were comprised of a single element fluctuating in loudness, or two different elements, a distinct beat and a background sound. Very slow rise times (> 250 ms) of amplitude modulation are generally perceived as a continuous sound that varies in loudness. The sharper the rise time, the more likely people are to perceive two separate sounds. Children with RD continued to hear stimuli as consisting of two sounds at longer rise times than did normally developing 11-year-old children, leading the authors to speculate that children with RD have difficulty perceiving amplitude modulations in general. Follow-up studies indicated that the difficulty with rise time detection is not associated with the controversial disordered processing of rapid transient information tested in temporal order judgment tasks (e.g., Tallal, 1980). These results suggest instead that accurate detection of relatively slow amplitude changes indicating syllable structure are more important than the detection of rapid or transient cues for the development of phonological representations and literacy (Muneaux et al., 2004; Richardson et al., 2004).

More recently, research in this area has turned to measuring neurological processing of amplitude modulations. Rapid features in speech appear to be lateralized to left-hemisphere auditory areas (Joanisse and Gati, 2003; Abrams, Nicol, Zecker, and Kraus, 2006), while information associated with amplitude is lateralized to the right hemisphere (Abrams, Nicol, Zecker, and Kraus, 2008). Examining modulated amplitude processing in young students with a range of reading abilities, Abrams and colleagues (2009) found strong correlations between several variables related to the amplitude processing, including the degree of lateralization, and standardized measures of reading and PA, indicating a biological connection between impaired perception for slow amplitude signals and abnormal neurophysiological responses in weaker readers.

While vocoded speech preserves global amplitude structure, there is a way to process speech signals to largely eliminate traditional acoustic cues but preserve global spectral structure: It is known as sine wave speech. In this processing scheme, sine waves representing the center frequencies of the first three formants are generated from the speech signal. One study investigated speech perception in adults with RD using sine wave speech (Rosner et al., 2003) and found that adults with RD were significantly less accurate than adults without RD. Because those stimuli provided only formant trajectories, and labeling experiments have frequently shown that children with RD respond differently to those signal properties than do children with typical reading abilities (e.g., Boada & Pennington, 2006; Godfrey et al., 1981; Nittrouer, 1999; Werker & Tees, 1987), it is important to explore how children with RD would respond to signals that preserve global structure, but not by preserving formant transitions. For that reason, we elected to use vocoded stimuli in this study.

1.8 Top-down context effects

Rosner et al. (2003) attributed the poorer recognition for sine wave sentences exhibited by adults with RD, compared to adults without RD, to deficits in the ability to use linguistic context effects for speech recognition. However, without specifically quantifying the extent of these top-down linguistic effects, it is not possible to conclusively attribute the differences between groups to this phenomenon. In other words, the difference in recognition between groups was attributed to variability in how well listeners in the two groups could recover the original message from these impoverished signals using their familiarity with phonotactic, syntactic, and semantic regularities rather than from their abilities to perceptually organize the signals themselves. In designing this current study, it was hypothesized that any differences that might be found among groups would be attributable to differences in children’s abilities to recover coherent, linguistically relevant form from the vocoded stimuli. Therefore, we needed to be able to quantify top-down linguistic context effects in order to rule them out as the source of group differences, if any were observed.

Fortunately, metrics for quantifying these effects have been developed, and here we use one that was proposed by Boothroyd (e.g., 1968; Boothroyd & Nittrouer, 1988). This metric, known as the j factor, derives from the fact that the probability of recognizing a complete sentence is dependent on the probabilities of recognizing the separate words that comprise the sentence. If sentence context played no role in recognition, then the probability of recognizing a whole sentence correctly would be directly related to the probability of recognizing each of the words such that:

| (1) |

where ps is the probability of recognizing the complete sentence, pw is the probability of recognizing each word, and n is the number of words in the sentence. Words, however, can be recognized at poorer SNRs when presented in sentences rather than in isolation, and so sentence context itself must influence the perception of individual words. Therefore we can change (1) to:

| (2) |

where j is the number of independent channels of information, and is between 1 and n. We now have a way of solving for the effective number of information channels in the sentence:

| (3) |

In this formulation, the independent channels indexed by j are not appropriately viewed as actual words. Rather, j is a dimensionless factor that serves as an index of how strongly sentence context influences recognition. The smaller j is, the greater the effect of sentence context on recognition.

Nittrouer and Boothroyd (1990) investigated top-down linguistic contributions to sentence recognition for adults and children 4 to 6 years of age. Two kinds of sentences were developed for that study: sentences that provided clear syntactic structure, but no useful semantic information (syntax-only sentences) and sentences that provided clear syntactic structure, as well as strong semantic constraints (syntax + semantics sentences). All sentences were constructed from four monosyllabic words, and were embedded in noise at several signal-to-noise levels. In that study, mean j factors for the syntax-only sentences were 3.08 for children and 3.43 for adults. This difference was not significant, and indicates that children and adults effectively required slightly more than 3 independent channels of information to understand these sentences. On the other hand, evidence was obtained from the syntax + semantics sentences that children may not use semantic constraints as effectively as adults in sentence recognition. For that reason, sentences that provided clear syntactic structure, but no useful semantic information were used in this study. The participants in this study, all 10 or 11 years old at the time of testing, should be able to use these syntactic constraints, even if their language development was somewhat delayed. In addition, the sentences in this study consisted of four words, all monosyllables. The shorter sentences should help minimize memory demands, which would have been greater for the Rosner et al. (2003) study that used five to nine word sentences, including many multisyllabic words.

1.9 Current Study

In summary, this study investigated the abilities of four groups of children (SSD, RD, SSD+RD, and typical controls) to use three kinds of acoustic structure in speech perception: temporal fine structure (VOT), spectral fine structure (fricative noise spectra and onset formant transitions), and global structure (amplitude envelopes derived from vocoded sentences). These three sets of stimuli were well-distinguished by whether acoustic structure supported recovery specifically of phonetic segments (VOT and fricative stimuli), or broader, linguistically relevant form (vocoded sentences). This latter manipulation permitted us to inquire as to whether children with poor PA have difficulty only recovering phonetic structure, or if their problems extend to other kinds of linguistically relevant structures, as well, a question that has not been adequately addressed in past work. Including children with two distinct, but perhaps related disorders (as well as a comorbid group) allowed us to examine more closely if a single deficit underlies both disorders.

For the current study, the decision to include a VOT continuum was made partly because VOT is readily responded to in a categorical manner by almost all listeners, including infants (e.g., Eimas, Siqueland, Jusczyk, & Vigorito, 1971) and even animals of other species (e.g., Kluender, Diehl, & Killeen, 1987; Kuhl & Miller, 1978). This property is known to have a clear physiological correlate in the auditory periphery (e.g., Sinex, McDonald, & Mott, 1991), and even children with hearing loss who demonstrate other problems with speech perception are able to label these stimuli (Nittrouer & Burton, 2002). For these reasons it was suspected that if children with SDD and/or RD would be able to label any stimuli in a typical fashion, it would be these that varied in VOT. Having a demonstration of typical labeling would be useful because it would indicate that when delayed or deviant labeling functions are observed for these children it is not for reasons unrelated to their actual perception of the stimuli, such as attentional or cognitive factors.

The other two speech perception tasks were included in order to specifically address our main research questions, including: (1) How similar are the deficits in phonological representations that disrupt speech production in SSD to those that disrupt the decoding of printed words and nonwords in RD? (2) Do these deficits in SSD persist after the speech production problem has resolved? (3) If these deficits in phonological representations in SSD and RD are similar, how do we explain differences in clinical presentation, especially SSD without later RD and RD without earlier SSD? And (4) Are the deficits in these three groups exclusively at the “segmental” level, as is widely believed?

If a single deficit underlies both SSD and RD, children in all groups should perform similarly. If different deficits underlie these disorders, different patterns of performance across the tasks should be observed. In particular, if the phonological theory of RD is accurate, children with a history of SSD who do not develop a reading disability must have a different pattern of performance on speech perception tasks, indicating a different cause for their poor performance on PA tasks in the past, than children with RD. Additionally, inclusion of the comorbid group of children with SSD+RD allows us to examine the subtype hypothesis by directly comparing children diagnosed with SSD in preschool who go on to be diagnosed with RD in the early grades, to those children with SSD who do not later receive an RD diagnosis.

Based on previous findings within our lab, the prediction was that these groups that share PA deficits, but differ in RD outcome, also share similar problems detecting structure in the speech signal, thus challenging the phonological theory of RD. If these problems in speech perception extend beyond the segmental level, then the phonological theory of RD faces an even more serious challenge because the segmental theory of phonological development upon which it rests will need to be revised.

2. Material and Methods

2.1 Participants

Children in three of the four groups (SSD, SSD+RD, and Control) were drawn from a longitudinal study of SSD in which testing took place when children were 5 to 6 years of age, 7 to 8 years of age, and then for this report when children were 10 to 11 years of age. An additional group of children with RD were recruited from the Neuropsychology Clinic at the University of Denver, local reading tutors, and the Colorado Learning Disabilities Research Center (CLDRC) twins study. For most children, SSD diagnosis was completed by certified speech pathologists prior to participating in our study. For those that had not previously been diagnosed, diagnosis was performed by Richard Boada, the project co-PI and a certified speech pathologist. At the time of the current study children were not re-tested for persistence of SSD because so few children had persistent speech problems at age 8; however no obvious speech problems were noted by testers.

To qualify as having RD, children had to meet two criteria. First, they either had to have been previously diagnosed with a reading disorder or had to have a history of reading intervention. Second, they had to demonstrate a positive history of reading problems based on parental responses to the Learning Disability Questionnaire, an in-house instrument that is currently being developed within the Neuropsychology Clinic at the University of Denver. This questionnaire includes 7 items specific to reading, and responses range from 1 (“not at all”) to 5 (“always”). A child is considered to have a positive finding on the questionnaire if the mean score of answers to the seven questions is three or above. As important as it was to verify that the children in this RD group had histories of reading problems, it was equally as important to verify that there was no evidence of a comorbid SSD. To do that, parents completed another questionnaire, designed by the first author, asking detailed questions about the child’s development of speech production skills. All children in this group were found to have reached developmental milestones for speech production at typical ages and had not received any speech or language therapy. Children with RD were matched as closely as possible in age to participants in the other three groups.

To participate, all children had to pass audiometric screenings of the frequencies 500, 1,000, 2,000, and 4,000 Hz presented at 25 dB HL to each ear separately.

Table 1 shows demographic data for children in the four groups, including relevant non-speech perception tasks, age and an environmental variable associated with socio-economic status, parental years of education. One-way analyses of variance (ANOVAs) were performed on scores for each independent variable shown in Table 1. As can be seen, children’s ages at the time of testing and parental years of education were similar across groups. One-way ANOVAs were not significant for either of these variables.

Table 1.

Demographic data for children. Perceptual reasoning scores are from the WISC-IV. PPVT = Peabody Picture Vocabulary Test. TOWRE = Test of Word Reading Efficiency. Scores for those three tasks are given as standard scores. G-F Speech Errors = Number of errors on the Goldman-Fristoe Test of Articulation, administered at 5 years of age. PA raw = sum of items correct across the tasks of phoneme reversal and pig Latin. Standard deviations are shown in parentheses.

| N = | Control 16 |

SSD 17 |

RD 16 |

SSD+RD 17 |

One-way ANOVA result |

|---|---|---|---|---|---|

|

Age

(months) |

130.8 (6.0) |

132.7 (7.6) |

133.9 (9.1) |

129.1 (6.4) |

F (3, 62) = 1.34 |

|

Parental

Education (yrs) |

16.5 (1.0) |

16.7 (1.8) |

15.9 (2.1) |

15.4 (1.7) |

F (3, 62) = 2.23 |

|

Perceptual

Reasoning |

114.2 (9.3) |

109.9 (14.6) |

101.6 (11.1) |

94.8 (8.8) |

F (3, 62) = 9.85, p < .001 |

|

Processing

Speed |

107.5 (9.4) |

108.7 (12.8) |

94.4 (11.2) |

98.4 (14.3) |

F (3, 62) = 5.40, p < .01 |

| PPVT | 117.3 (10.6) |

109.5 (9.6) |

102.1 (6.0) |

97.2 (6.4) |

F (3, 62) = 18.04, p < .001 |

| TOWRE | 107.8 (13.4) |

103.1 (10.9) |

81.4 (9.5) |

83.9 (9.6) |

F (3, 62) = 24.34, p < .001 |

|

G-F

Speech Errors at Age 5 |

3.8 (2.6) |

20.4 (11.2) |

-- | 23.6 (13.3) |

|

| PA raw | 39.2 (7.6) |

37.8 (9.9) |

25.4 (9.7) |

24.7 (11.4) |

F (3, 62) = 10.49, p < .001 |

2.2 Cognitive Measures

2.2.1 Perceptual reasoning

In order to verify that no child was significantly impaired in non-verbal abilities, three subtests of the WISC-IV (Wechsler, 2003) making up the Perceptual Reasoning Index (the Block Design, Picture Concepts, and Matrix Reasoning tasks) were administered to all children. Means for all groups were well within normal limits, but analysis indicated a main effect of group, F (3, 62) = 9.85, p < .001, and post hoc comparisons with Bonferroni corrections (p < .05) showed that children in the Control group performed better than children in the other three groups, children in the SSD and RD groups performed similarly to each other, and children in the SSD group performed better than those in the SSD+RD group.

2.2.2 Processing Speed

The Coding and Symbol Search tasks of the WISC-IV were used to assess non-verbal processing speed for these children. Again, means for all groups were within normal limits, but analyses indicated a main effect of group, F (3, 62) = 5.40, p = .002, and post hoc comparisons with Bonferroni corrections (p < .05) showed that only children in the RD group had significantly poorer scores than children in the Control and SSD groups.

2.2.3 PPVT

All children were given the Peabody Picture Vocabulary Test, 3rd edition (PPVT; Dunn & Dunn, 1997). The PPVT-III is a receptive vocabulary task and does not require any reading or phonological output. Children simply point to the picture (out of a set of four) that illustrates the word spoken by the examiner. Although this task measures only receptive vocabulary, it often serves as a general measure of linguistic competency. Again, means for all groups were well within the normal range. Nonetheless, there was a significant group effect, F (3, 62) = 18.04, p < .001. Post hoc comparisons with Bonferroni corrections (p < .05) showed a steady worsening of scores from the Control to the SSD to the RD to the SSD+RD groups, such that children in the Control and SSD groups performed similarly, children in the SSD and RD groups performed similarly but the RD group performed worse than the Control group, and children in the RD and SSD+RD groups performed similarly but the SSD+RD group performed worse than both the Control and SSD groups.

2.2.4 Phoneme awareness (PA)

Two tasks measured PA. The first was a phoneme reversal task (Pennington & Lefly, 2001) consisting of 24 words with two or three phonemic segments. Children were asked to verbally produce the phonemic reversal of words presented orally by the examiner. They were told that they must reverse the sounds in words, not just the letters. Four training words were provided, with feedback, and 24 test stimuli formed this first part of the composite PA variable. These stimuli are provided in the appendix.

For the second part of the composite PA score, the pig Latin task, children heard 26 words that they needed to convert into pig Latin; no feedback was provided during testing. Practice was provided using eight words prior to testing, with feedback. Accuracy data from the 26 testing stimuli was added to accuracy data from the phoneme reversal task to create a PA composite score to be used as the dependent variable. These stimuli are also provided in the appendix.

Total numbers of items completed correctly are shown in Table 1. A significant group effect was found, F (3, 62) = 10.49, p < .001. Post hoc comparisons with Bonferroni corrections (p < .05) revealed that the two groups of children with RD performed similarly: The post hoc comparison was not significant for these two groups. Children in the Control and SSD groups performed similarly to each other (i.e., no significant post hoc comparison), and better than both groups of children with RD. For the comparisons of each of the Control and SSD groups with each of the RD and SSD+RD groups results were significant.

At the time that the data reported here were collected, children in the SSD group showed no PA deficits. Relevant to the current study, however, is the fact that they had a history of mild deficits. The top two rows of Table 2 show mean scores for the same PA tasks described above when these children were tested at the two earlier times. (Children in the RD group are not included because they were not tested previously.) At 5-6 years of age, children in the SSD group performed more poorly than children in the Control group, t(31) = 2.0, p = .054, but by 7-8 years of age there was no difference between those groups. Thus, over the course of two years and during early reading instruction, these children managed to acquire sufficient sensitivity to phonetic structure to perform PA tasks as do typically developing children. This finding differs from that of some others, who have shown that children with SSD who had poor PA in kindergarten continued to have poor PA at the end of first grade (Rvachew, 2007).

Table 2.

Mean PA scores for the three groups of children tested at 5-6 and 7-8 years of age are shown in the top two rows. Mean percentages of phonemes repeated correctly in a non-word (NW) repetition task at the same ages are shown in the bottom two rows. Standard deviations are shown in parentheses.

| Control | SSD | SSD+RD | |

|---|---|---|---|

|

PA score

5-6 years |

11.3 (4.9) |

8.7 (5.1) |

4.9 (2.5) |

|

PA score

7-8 years |

28.4 (4.3) |

25.7 (1.8) |

18.7 (6.6) |

|

| |||

|

NW repetition

5-6 years |

85.30 (7.08) |

71.47 (7.55) |

58.74 (7.97) |

|

NW repetition

7-8 years |

91.18 (4.24) |

82.35 (6.63) |

76.19 (6.42) |

In addition to those PA scores, a non-word repetition task had been administered to the children in the Control, SSD, and SSD+RD groups at earlier test times. Mean percentages of phonemes repeated correctly are shown in the bottom two rows of Table 2. One-way ANOVAs showed significant group effects at both the first test time, F(2, 45) = 48.18, p < .001, as well as the second, F(2, 45) = 25.05, p < .001, and post hoc comparisons with Bonferroni corrections (p < .05) revealed differences between the Control and SSD groups at both of those times. So, on this task children with SSD continued to show deficits, at least through age 7 to 8 years. Consequently, it is fair to conclude that children in the SSD group had delayed development of PA. However, it is clear from the continuum of scores represented on these tasks that these children were less delayed than the children in the comorbid SSD+RD group.

2.3 Grouping variables

2.3.1 TOWRE

In order to verify reading ability, all children were given the Test of Word Reading Efficiency (TOWRE; Torgesen, Wagner, & Rashotte, 1999). The TOWRE measures how many single words and non-words are accurately read within 45 seconds, giving an estimate of the child’s reading fluency. As expected, there was a significant main effect of group, F (3, 62) = 24.35, p <.001. Children in both the RD and SSD+RD groups performed more poorly than children in the SSD and Control groups, and scored more than one standard deviation below the typical mean on this test of reading ability.

2.3.2 G-F Speech Errors

SSD is a disorder that is generally diagnosed during the preschool years. Symptoms often attenuate during the early elementary grades. For that reason, scores from the Goldman-Fristoe Test of Articulation (Goldman & Fristoe, 1986) administered when these children were 5 years of age are shown on Table 1. Raw scores are shown here because they are more precise than standard scores, allowing more accurate comparison across groups. As can be seen, the two groups of children with SSD at the time of the test made more errors than did children in the Control group, F (1, 49) = 8.65, p < .01. Children in the RD group were not tested because they were not in an experimental protocol at 5 years of age.

2.4 Speech Perception Stimuli

Three sets of stimuli were generated. The VOT and fricative-vowel stimuli both used a 10-kHz sampling rate, with low-pass filtering below 4.8 kHz.

2.4.1 VOT

These stimuli were taken from a study examining labeling of syllable-initial stops with varying VOT by children with and without hearing loss (Nittrouer & Burton, 2002). The stimuli were constructed with natural burst noises and synthetic vocalic portions. Ten milliseconds of burst noise was excised from natural tokens of a male speaker saying /da/ and /ta/, and used in the construction of these stimuli. Because /d/ and /t/ share the same place of closure, the spectra of these noises do not differ greatly: A /t/ noise just has a little more energy in the high-frequency regions. The nine vocalic portions were 270 ms long. The first formant (F1) transition took place over the first 40 ms, and changed during that time from 200 Hz to its steady-state frequency of 650 Hz. The second and third formants (F2 and F3) changed over the first 70 ms of the vocalic portions. F2 started at 1800 Hz, and fell to its steady-state frequency of 1130 Hz. F3 started at 3000 Hz, and fell to its steady-state frequency of 2500 Hz. F4 and F5 were held constant at their default frequencies of 3250 Hz and 3700 Hz, respectively. The f0 was constant at 120 Hz for the first 70 ms, and then fell linearly through the rest of the vocalic portion to an ending frequency of 100 Hz. The onset of voicing was cut back in 5-ms steps from 0 ms to 40 ms, making a total of nine vocalic portions. With the 10 ms of burst noise, this means that VOT actually varied from 10 to 50 ms. There was no source provided to F1 before the onset of voicing. Aspiration noise was the source to the formants higher than F1 before the onset of voicing. Each burst noise was combined with each vocalic portion, making 18 stimuli in all.

2.4.2 Fricative-vowel syllables

These stimuli were used previously by Nittrouer (1992; 1996a; 1996b; Nittrouer & Lowenstein, 2009), and consisted of synthetic fricative noises concatenated with natural vocalic portions. The noises were single pole, and 150 ms in duration. The center frequencies of these noises varied from 2.2 kHz to 3.8 kHz in 200-Hz steps, making a total of nine such noises. The vocalic portions were taken from an adult, male speaker saying Sue and shoe. The fricative noises were removed from those samples. Here we indicate which context the vocalic portion came from by placing the original fricative label in parentheses. Five portions from each fricative context were used that matched in terms of duration and intonation contour. The five /(∫)u/ portions had a mean duration of 348 ms, and a mean f0 of 97 Hz. The five /(s)u/ portions had a mean duration of 347 ms, and a mean f0 of 99 Hz. The five /(∫)u/ portions had formant transitions appropriate for a preceding /∫/, and the five /(s)u/ portions had formant transitions appropriate for a preceding /s/. Each of these ten vocalic portions was concatenated with each of the nine synthetic fricative noises, making a total of 90 stimuli: five tokens each of 18 stimuli (9 noises × 2 formant transitions).

2.4.3 Vocoded sentences

Thirty-six four-word sentences from Nittrouer et al. (2009) were used. All sentences consisted entirely of monosyllabic words, were syntactically appropriate for English, but semantically anomalous. Most of these sentences had some version of a subject-verb structure (e.g., Dumb shoes will sing. Knees talk with mice.), although five had a command structure (e.g., Paint your belt warm.) Furthermore, all words were selected to be within the vocabulary of the typically developing children between 4 and 6 years of age, and so the 10 to 11-year-olds in this study should have been familiar with them. Finally, memory load was reduced by having sentences that consisted of just four monosyllabic words. Six sentences were used for practice, and thirty were used for testing. Sentences were recorded by an adult male speaker of American English at a 44.1-kHz sampling rate with 16-bit digitization. All sentences were equalized for mean RMS amplitude across sentences before any processing was done.

To create the vocoded stimuli, a MATLAB routine was written. Both four- and eight-channel stimuli were created. All signals were first low-pass filtered with an upper cut-off frequency of 8,000 Hz. For the four-channel stimuli, cut-off frequencies between bands were 800, 1,600, and 3,200 Hz; for the eight-channel stimuli, cut-off frequencies were 400, 800, 1,200, 1,800, 2,400, 3,000, and 4,500 Hz. Each channel was half-wave rectified, and results used to modulate white noise limited by the same band-pass filters as those used to divide the speech signal into channels. Resulting bands of modulated noise were low-pass filtered using a 160-Hz high-frequency cut-off, and combined. These stimuli were used previously by Nittrouer et al. (2009), and a complete list of the sentences can be found there.

2.5 Procedures

Children were first given the hearing screening, and administered the tasks from the WISC-IV, TOWRE, PPVT, and the two PA tasks. The three speech perception tasks (VOT, fricative-vowel syllables, and vocoded sentences) were then given, in random order across participants. The entire session took between two and two and a half hours. Breaks were given as needed to help maintain attention to the tasks and children were regularly rewarded with play money that could be exchanged for real money at the end of the testing session.

2.5.1 VOT and Fricative-Vowel Syllables

Procedures for the VOT and fricative-vowel syllables were identical. Stimuli were presented auditorily one at a time, and children had to assign one of two response labels to each. They indicated their choice by pointing to one of two pictures, and saying the “name” of that picture. The label sue corresponded to a picture of a girl, and shoe corresponded to a shoe. Pictures of a horse and a sea serpent were used for da and ta. Children were told that these were the names of the animals. The experimenter entered children’s responses into the computer.

Two kinds of training were provided. Initial training on responding to the words sue/shoe or da/ta was done with natural tokens produced by the experimenter in which the child was asked to point to the picture that matched the given name while simultaneously saying the name of that picture. Having listeners both point to and say their response served first as a check that they were appropriately associating the correct picture with each label. Later, during testing, it was an indication that they were consistently paying attention. After successful training with live voice, the participant put on headphones attached to the computer and he or she heard the best exemplars of the two categories involved in the test. For sue, for example, the best exemplar was the 3.8 kHz noise combined with the /(s)u/ vocalic portion. Again the child was asked to point to one of two pictures while saying the name of the picture. For this training, each end point was presented five times each, with order randomized. The child had to respond correctly 9 out of 10 times in this training phase to proceed to the testing phase. In this way it was assured that children were able to associate each label with the correct picture reliably.

During testing, stimuli were presented in five blocks of 18, consisting of all stimuli for the set. In order to maintain motivation, all participants were rewarded with 50 cents after each block of 18 and notified as to how many blocks they completed and how many they had remaining. As a check on whether children maintained general attention or not, the numbers of correct responses to the endpoint stimuli throughout testing were examined. These stimuli were distributed among all other stimuli during testing. Therefore, finding that children continued to respond accurately to these endpoints, which they had shown they could do during practice, indicated that they paid attention throughout the entire session. Children had to continue responding with 90 percent or greater accuracy to these endpoint stimuli during testing in order for their data to be included in analysis.

After testing, probit functions were fit to the resulting data. Closely related to logit functions, these functions are effectively z-transformations, only with 5 added to each z-score so that no value is negative. From this distribution, a mean (i.e., the point on the function where the probability of either response is the same) and a slope are derived. The mean is generally termed the phoneme boundary, as it is the point at which responses change from being primarily one category to the other. The separation between functions is defined at these phoneme boundaries, and serves to estimate the extent to which the listener based responses on the property defining the two separate functions. For the VOT stimuli, that property was whether the noise burst was appropriate for a /d/ or a /t/. For the fricative-vowel syllables, that property was whether the voiced formant transitions were appropriate for a syllable initial /s/ or /∫/. The greater the separation between functions, the greater the perceptual weight assigned to that acoustic property.

The slope of the functions serves as an estimate of the perceptual weight assigned to the acoustic property that varied across the continua. For the VOT stimuli, that was VOT. For the fricative-vowel syllables, that property was the center frequency of the fricative noise.

2.5.2 Vocoded sentences

For this task participants were asked to repeat all the words that they heard in a given sentence. For training purposes, children heard the same sentence twice in a row, first in a natural form and then in the processed form. After each presentation the child was asked to repeat the sentence. During testing, the child heard each processed sentence, twice in a row. After each presentation, the child repeated the sentence as best as possible. Every child heard all thirty sentences in a random order. Half of the sentences were presented as 8-channel vocoded signals, and half were presented as 4-channel vocoded signals. The software randomly chose which sentences to provide as 8- and 4-channel signals at the start of testing for each participant. Following the experimental task, all sentences were presented one more time in their non-vocoded format in order to verify that the children heard the words correctly under normal circumstances.

For statistical analyses, the mean numbers of words repeated correctly across the two trials served as the dependent variable. Because there were 15 sentences in each of the 4- and 8-channel conditions, maximum correct was 60 words. In addition, j factors were computed for each child. This analysis informed us about top-down linguistic context effects across groups.

3. Results

This study seeks to explore the similarities and differences in phonological representations, as measured by different speech perception tasks, in three different clinical groups: SSD only, RD only, and SSD+RD (individuals with a history of SSD and current RD). In order to explore these potential differences among clinical groups and a control group, each speech perception task is analyzed independently.

Prior to conducting inferential statistics, all dependent variables were checked for skewness, kurtosis, and outliers. All variables met criteria for normality. One child in the SSD+RD group was found to have an extraordinarily high number of errors on the sentence recognition post test (32 errors, compared to an average of 5 for the rest of the group). Her data for that task were removed. Data were lost for one child in the SSD-only group on the fricative labeling task due to computer error. Her data were removed for that one task only.

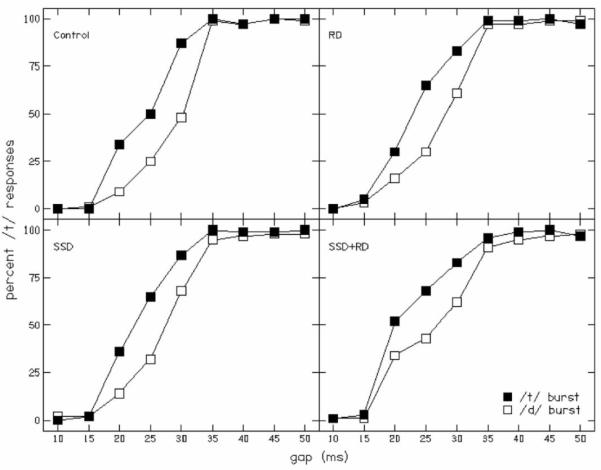

3.1 VOT

Figure 1 illustrates mean labeling functions for each group for the VOT stimuli. Table 3 shows mean phoneme boundaries and slopes for each group, as well as mean separation between phoneme boundaries. It is apparent that outcomes were similar across groups, and statistical analyses did not reveal a significant group effect either for mean slope or for the separation between the labeling functions.

FIGURE 1.

Labeling functions for the VOT task. Statistical analyses did not reveal a significant group effect either for mean slope or for the separation between the labeling functions. RD = Reading Disability, SSD = Speech Sound Disorder.

Table 3.

Outcomes for the VOT labeling task. Mean phoneme boundaries (in ms of VOT), mean separation between those phoneme boundaries (again in ms of VOT) and slopes (in probit units per ms of change in VOT) for each group are shown, with standard deviations in parentheses.

| Control | SSD | RD | SSD+RD | |

|---|---|---|---|---|

| Phoneme boundaries | ||||

| /d/ burst | 28.6 (2.3) |

27.2 (3.4) |

27.6 (3.3) |

26.4 (4.6) |

| /t/ burst | 24.2 (2.7) |

23.2 (2.7) |

23.9 (3.9) |

22.7 (4.2) |

| Separation |

4.4

(1.9) |

4.1

(2.2) |

3.7

(2.5) |

4.5

(3.6) |

|

| ||||

| Slope | ||||

| /d/ burst | 0.25 (0.11) |

0.24 (0.10) |

0.21 (0.09) |

0.18 (0.08) |

| /t/ burst | 0.23 (0.06) |

0.23 (0.08) |

0.23 (0.08) |

0.23 (0.11) |

| Mean Slope |

0.24

(0.07) |

0.23

(0.07) |

0.22

(0.07) |

0.21

(0.08) |

3.2 Fricative-vowel syllables

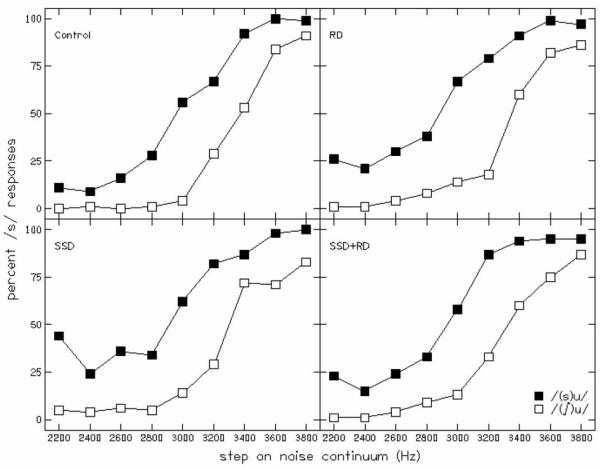

Figure 2 shows mean labeling functions for each group, and Table 4 provides mean phoneme boundaries and slopes. Regarding the separation between phoneme boundaries, it appears as if these separations are greater for all three clinical groups than for the Control group. Surprisingly, however, a one-way ANOVA failed to reveal a significant group effect [F(3, 61) = 2.07, p = .11], an outcome that differs from results of earlier investigators (Boada & Pennington, 2006; Nittrouer, 1999) who found delays in the DWS for children with RD. In those studies, children with RD, and so with poor PA, weighted formant transitions more in these very same fricative-vowel stimuli than did children with age-appropriate PA, leading to greater separations between functions based on formant transitions. But although the effect was not significant, there appears to be a trend for children in the clinical groups to have more widely separated labeling functions, suggesting greater weighting of those formant transitions.

FIGURE 2.

Labeling functions for fricative-vowel syllables. Children in the SSD group and RD group weighted formant transitions more than children in the Control group. Mean slope for the Control group was significantly different from those for all three clinical groups. RD = Reading Disability, SSD = Speech Sound Disorder.

Table 4.

Outcomes for the fricative labeling task. Mean phoneme boundaries (in Hz of fricative noise), mean separation between those phoneme boundaries (again in Hz) and slope (in probit units per kHz of fricative noise) for each group are shown. Standard deviations are in parentheses.

| Control | SSD | RD | SSD+RD | |

|---|---|---|---|---|

| Phoneme boundaries | ||||

| /(∫)u/ | 3381 (133) |

3404 (264) |

3421 (260) |

3359 (162) |

| (s)u/ | 2916 (263) |

2676 (272) |

2720 (311) |

2825 (253) |

| Separation |

474

(238) |

728

(409) |

701

(395) |

534

(320) |

|

| ||||

| Slope | ||||

| /(∫)u/ | 5.21 (2.28) |

3.47 (1.81) |

3.72 (1.62) |

3.57 (2.27) |

| /(s)u/ | 3.93 (2.00) |

1.88 (0.80) |

2.85 (2.06) |

2.77 (1.73) |

| Mean slope |

4.57

(1.85) |

2.68

(1.03) |

3.28

(1.41) |

3.16

(1.92) |

Additionally, we were mindful of the fact that significant group differences were observed for three cognitive and linguistic factors: perceptual reasoning, processing speed, and PPVT scores. Therefore, we computed Pearson product-moment correlation coefficients between each of those measures and the measure of separation between phoneme boundaries. None of those correlation coefficients was significant (p > .1).

Turning our attention to the slopes of the functions, which indicate the amount of weight assigned to the fricative-noise spectra, it is apparent that mean slope across the two labeling functions did show a significant group effect, F(3,61) = 4.25, p = .009. Post hoc t tests (with a Bonferroni correction) revealed that mean slope for the Control group was significantly different from the SSD group (p < .05) and was approaching significance for a comparison with the SSD+RD group (p < .1). Cohen’s ds (Cohen, 1988) were computed on these differences, and these values were 1.26 and 0.75, respectively, for the two comparisons.

Again, we computed Pearson product-moment correlation coefficients for each of the measures of perceptual reasoning, processing speed, and PPVT against mean slopes of the labeling functions. Only the correlation coefficient between mean slopes and PPVT scores was significant, r(65) = .38, p = .008. Thus, these two measures have roughly 11 percent of their variability in common. It’s difficult to assume any cause and effect relationship with this result since the only words associated with the task were “sue” and “shoe”.

3.3 Vocoded sentences

Table 5 provides the mean numbers of words (out of 120) repeated correctly by each group in the post-test presentation of unprocessed sentences. A one-way ANOVA performed on these numbers showed a significant group effect, F(3,60) = 5.59, p = .002, but post hoc comparisons revealed a significant difference only between children in the Control and SSD+RD groups. Cohen’s d for this contrast was 1.23.

Table 5.

Mean numbers of words repeated correctly in a post-test of sentence recognition with unprocessed signals. The numbers in parentheses are the standard deviations.

| Control | SSD | RD | SSD+RD | |

|---|---|---|---|---|

| Words repeated correctly |

117.25 (1.34) |

116.12 (1.45) |

115.38 (1.89) |

114.47 (2.90) |

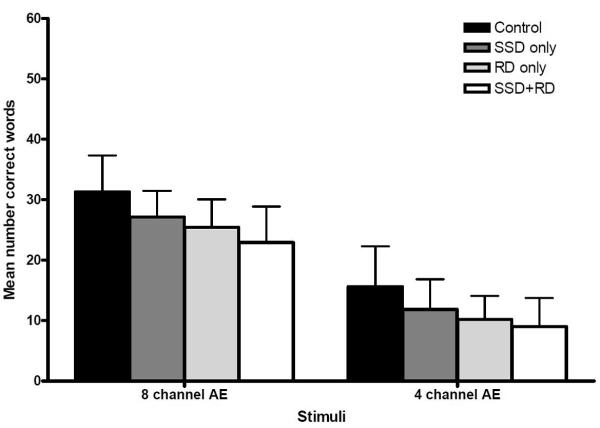

Figure 3 shows mean correct word recognition for the 8- and 4-channel vocoded sentences. This information is also provided in Table 6. It is apparent that children in all three experimental groups repeated fewer words correctly in both vocoded conditions than did children in the Control group. A two-way ANOVA with condition as the within-groups factor and group as the between-groups factor showed significant effects of condition, F(1,60) = 440.32, p < .001, and group, F(3,60) = 8.30, p < .001. However, the Condition × Group interaction was not significant. This analysis was also performed using the numbers of words correctly repeated in the post test as a covariate, and the group effect was still significant, F(3,59) = 4.42, p = .007. That finding supports the suggestion that variation across groups in how well children could repeat these sentences was explained by something other than the factors that are typically viewed as supporting sentence recall abilities in general, such as syntactic knowledge and working memory. Presumably much of the reason that we did not find effects of these latter factors is because we explicitly tried to control for them by keeping syntactic structure simple and sentences short.

FIGURE 3.

Percent words recognized correctly (out of 60 possible) in 8- and 4-channel stimuli. Error bars are standard deviations. Children in the three experimental groups performed more poorly than children in the Control group, with the SSD vs Control effect lost after accounting for multiple comparisons. RD = Reading Disability, SSD = Speech Sound Disorder.

Table 6.

Outcomes for the sentence recognition task. Mean percent correct word recognition (out of 60 possible) for each trial in each condition, as well as means across the two trials for the amplitude envelope sentences are shown. Standard deviations are in parentheses.

| Condition and trial | Control | SSD | RD | SSD+RD |

|---|---|---|---|---|

| 8-channel | ||||

| Trial 1 | 28.88 (7.09) |

25.35 (5.04) |

24.00 (5.66) |

21.27 (5.75) |

| Trial 2 | 33.62 (5.66) |

28.94 (3.99) |

26.94 (3.75) |

24.6 (6.42) |

| Mean |

31.25

(6.04) |

27.15

(4.31) |

25.46

(4.60) |

22.93

(5.95) |

|

| ||||

| 4-channel | ||||

| Trial 1 | 13.75 (6.79) |

10.41 (5.06) |

9.13 (3.82) |

8.27 (4.70) |

| Trial 2 | 17.50 (6.83) |

13.29 (5.19) |

11.31 (4.57) |

9.80 (4.95) |

| Mean |

15.63

(6.68) |

11.85

(5.00) |

10.22

(3.89) |

9.03

(4.71) |

Looking at each condition separately, the effect of group was significant for both: 8- channel F(3,60) = 6.90, p < .001; 4-channel F(3,60) = 4.84, p = .004. In both conditions, post hoc t-tests also revealed that children in the three experimental groups performed more poorly than children in the Control group, but these comparisons were only significant for the contrasts of Control vs. RD and Control vs. SSD+RD when Bonferroni corrections (p = .05) were applied. Thus, children in the SSD group performed more similarly to children in the Control group than did children in the other two clinical groups. Cohen’s ds were computed between Control group and each of the other groups for sentence recognition scores in the 8- and 4-channel conditions. These are shown in Table 7.

Table 7.

Cohen’s ds between the Control group and each of the other groups for sentence recognition with the 8- and 4-channel vocoded stimuli.

| SSD | RD | SSD+RD | |

|---|---|---|---|

| 8-channel | 0.78 | 1.00 | 1.14 |

|

| |||

| 4-channel | 0.64 | 1.08 | 1.39 |

Again, we wanted to examine whether cognitive and linguistic factors may have explained any proportion of the variance in group differences, and so Pearson product moment correlation coefficients were computed between perceptual reasoning, processing speed and PPVT scores vs. numbers of words repeated correctly for the 8-channel and 4-channel stimuli. The PPVT scores were significantly correlated with each of the dependent measures: 8-channel r(64) = .51, p < .001; 4-channel r(64) = .44, p < .001. Therefore, it may be concluded that these scores shared 20 to 25 percent of their variance. Again, however, it is difficult to assign any kind of cause-and-effect relationship between these variables because receptive vocabulary is such a general measure of language ability and the words in these sentences were explicitly selected to be within the vocabularies of typically developing 4- to 6-year-olds.