This article synthesizes discussions held during an international meeting, “Surveillance for Decision Making: The Example of 2009 Pandemic Influenza A/H1N1,” held at Harvard School of Public Health in June 2010. It defines the needs for surveillance in terms of the key decisions that must be made in response to a pandemic: how large a response to mount and which control measures to implement, for whom, and when. The article describes the sources of surveillance and other population-based data that can form the basis for such evidence, and the interpretive tools needed to process raw surveillance data. It concludes with key lessons of the 2009 pandemic for designing and planning surveillance in the future.

Abstract

This article synthesizes and extends discussions held during an international meeting on “Surveillance for Decision Making: The Example of 2009 Pandemic Influenza A/H1N1,” held at the Center for Communicable Disease Dynamics (CCDD), Harvard School of Public Health, on June 14 and 15, 2010. The meeting involved local, national, and global health authorities and academics representing 7 countries on 4 continents. We define the needs for surveillance in terms of the key decisions that must be made in response to a pandemic: how large a response to mount and which control measures to implement, for whom, and when. In doing so, we specify the quantitative evidence required to make informed decisions. We then describe the sources of surveillance and other population-based data that can presently—or in the future—form the basis for such evidence, and the interpretive tools needed to process raw surveillance data. We describe other inputs to decision making besides epidemiologic and surveillance data, and we conclude with key lessons of the 2009 pandemic for designing and planning surveillance in the future.

The first year of the 2009-10 influenza A/H1N1 pandemic was the first test of the local, national, and global pandemic response plans developed since the reemergence of human cases of avian influenza H5N1 in 2003. The plans specified that response decisions be based on estimates of the transmissibility and, in some cases, the severity of the novel infection. Although public health surveillance provided a critical evidence base for many decisions made during this phase, its implementation also revealed the practical challenges of gathering representative data during an emerging pandemic1 and showed that such decisions, with their significant public health and economic consequences, must often be made before many key data are available.2

In this article, we reflect on the nature and timing of decisions made during the first year of the pandemic and on the corresponding urgent need to gather surveillance data and process them into useful evidence. Our goal is to suggest how surveillance systems can be improved to provide better, more timely data for estimating key parameters of a future pandemic, and thereby to improve management of that pandemic. We also attempt to identify the human and technical capabilities needed to process and interpret these data to maximize their value for decision makers. While we cite examples from many countries, we focus in particular on the U.S. experience. We have also made no attempt to cite the literature exhaustively; it includes more than 2,000 references matching just the keywords “H1N1 AND surveillance” between the start of the pandemic and the end of 2010.

We use the term surveillance here to encompass ongoing monitoring of disease activity and gathering of clinical and epidemiologic data that define the disease, such as comparative severity in different risk groups. In section 1 we describe the key decisions made during the 2009 pandemic, emphasizing early decisions about matters of broad strategy and tactics,3 and their evidentiary inputs. Section 2 identifies sources of surveillance data. In section 3 we discuss methods of processing surveillance data into usable evidence, and in section 4 we examine inputs, other than surveillance or epidemiologic inputs, that can inform, or indeed disrupt, public health decisions. Section 5 concludes with lessons learned for future pandemics.

1. Pandemic Response Decisions and Their Evidentiary Inputs

1.1 Decision to Respond

As a novel influenza virus emerges, the first set of decisions required of global, national, and local authorities involves whether to progress beyond routine monitoring and devote resources to a large-scale response. The World Health Organization (WHO) and ProMED-mail together report more than 3,000 alerts of human infectious diseases annually.4 Consequently, devoting extraordinary public health resources to tracking and preparing a response must depend on the estimated risk that the outbreak will reach a given jurisdiction and cause widespread, serious illness. Unfortunately, the extent of transmission—and therefore the severity of the disease—may be unclear during the early stages of a pandemic. For example, infection in Mexico was already widespread by late April 2009 when the link was made between the unusual cases of pneumonia reported in March and April and a novel strain of influenza.5,6 Specialized laboratory tests and epidemiologic follow-up of individual cases ultimately provided the critical information that confirmed the novelty of the H1N1 virus and its presence in Mexico and U.S. border states.

1.2 Overall Scale of Response

Once a novel strain of influenza establishes widespread human-to-human transmission, global spread will be rapid, warranting an escalated response. This may include deploying or reassigning public health workers to pandemic-related activities, acquiring and deploying supplies (such as antivirals, antibiotics, vaccines, ventilators, and personal protective equipment), and commencing nonpharmaceutical interventions (eg, school closures, border screenings). For each of these measures, decision makers must balance the costs against the likely benefits, both of which depend on the epidemiologic characteristics of the novel infection and on the expected scale of damage arising from an unmitigated pandemic—estimated as the number of individuals infected multiplied by the probability that each infection will lead to severe illness, hospitalization, or death. Predicting the timing of such events could also inform decision making. Control measures may be cost-effective, for example, if they avert a sharp, acute increase in demand on healthcare services in a population, but less so if an unmitigated pandemic were less peaked and hence less disruptive.

Rapidly generated transmissibility and severity estimates are essential for predicting the scale and time course of a pandemic.7 With influenza, the most important of these are measures of severity per infected individual, that is, the probability of death, hospitalization, or another severe outcome. Historically, influenza pandemics have caused symptomatic infection in 15% to 40% of the population.8 This variability is minor compared with the variation in severity per symptomatic case, measured, for example, by the case-fatality ratio, which differs by orders of magnitude among pandemics. Thus, a key task for surveillance early in a pandemic is to estimate the per-infection severity of the new pandemic strain precisely enough to define the appropriate scale of response. In 2009 this proved challenging until late summer, because early estimates spanned a range from the mildest pandemic envisaged in preparedness planning to a level of severity requiring highly stringent interventions.5,9–12 As we discuss further in section 4.1, a second form of uncertainty was whether the severity, drug susceptibility, or other characteristics of the infection might change as the pandemic progressed; unlike the first, this uncertainty could not be resolved by better data.
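The scale calculation described above (the number of people infected multiplied by the probability that an infection leads to a severe outcome) can be made concrete with a short sketch. It assumes a hypothetical jurisdiction of 1,000,000 people and hypothetical case-fatality ratios spanning two orders of magnitude; only the 15% to 40% range of symptomatic attack rates comes from the text.

```python
from itertools import product

def expected_deaths(population: int, symptomatic_attack_rate: float,
                    case_fatality_ratio: float) -> float:
    """Expected deaths = symptomatic cases x probability of death per symptomatic case."""
    return population * symptomatic_attack_rate * case_fatality_ratio

POPULATION = 1_000_000                      # hypothetical jurisdiction
ATTACK_RATES = (0.15, 0.40)                 # historical range cited in the text
CASE_FATALITY_RATIOS = (1e-4, 1e-3, 1e-2)   # hypothetical, spanning 2 orders of magnitude

for attack_rate, cfr in product(ATTACK_RATES, CASE_FATALITY_RATIOS):
    deaths = expected_deaths(POPULATION, attack_rate, cfr)
    print(f"attack rate {attack_rate:.0%}, CFR {cfr:.2%}: ~{deaths:,.0f} deaths")

# How much each input alone can move the answer (max / min)
attack_rate_spread = max(ATTACK_RATES) / min(ATTACK_RATES)
cfr_spread = max(CASE_FATALITY_RATIOS) / min(CASE_FATALITY_RATIOS)
print(f"spread due to attack rate: {attack_rate_spread:.1f}x")   # about 2.7x
print(f"spread due to severity:    {cfr_spread:.0f}x")           # about 100x
```

Under these assumptions, uncertainty in severity moves the projected toll far more than uncertainty in the attack rate, which is why early severity estimates largely determine the appropriate scale of response.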

As a pandemic unfolds, surveillance data and epidemiologic studies may provide early indications of the impact of public health interventions. Ideally, data on the economic costs (including indirect costs for socially disruptive measures such as school dismissals) and the public health and economic benefits of interventions would be formally weighed within a cost-benefit or cost-effectiveness framework to inform policy decisions. Yet, we know of no such evaluations having been undertaken during a pandemic. Instead, more informal comparisons have typically informed decision making, including in 2009.

1.3 Measures to Protect Individuals

Another set of decisions involves interventions to protect individuals against infection and against severe morbidity or mortality following infection. Guidelines or policies are needed to help clinicians and health systems determine whom to test for infection, whom to treat and under what circumstances, whom to advise on early treatment when a high risk of complications exists, and whether to make antiviral drugs more readily available for such individuals.13 Decisions about how much vaccine to purchase are based in part on the severity of infection in population subgroups and on ensuring adequate supplies for at least those in greatest need.

Comparative severity of infection is estimated from risk factor studies, in which the frequency of particular demographic characteristics or particular comorbidities in the general population is compared with the frequency among people with severe outcomes (death, ICU admission, hospitalization).14–16 Ideally, one would also have estimates of the effectiveness of prevention and treatment in the high-risk groups, since prioritizing groups for intervention presupposes that the interventions will help them. In practice, data on intervention effectiveness in high-risk groups may be difficult to obtain in time, but may be inferred from experience with seasonal influenza,14,17 despite epidemiologic differences between pandemic and seasonal disease.
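As a rough illustration of such a risk factor study, the frequency of a characteristic among severe cases and in the general population can be converted into a relative risk of a severe outcome. The sketch assumes that the severe cases arise from the same population whose frequency is known, and it makes no adjustment for confounders such as age; the 10% and 40% frequencies are hypothetical.

```python
def relative_risk(freq_in_severe: float, freq_in_population: float) -> float:
    """Relative risk of a severe outcome, with vs. without a characteristic.

    Per-capita risk with the characteristic is proportional to
    freq_in_severe / freq_in_population; without it, to
    (1 - freq_in_severe) / (1 - freq_in_population). The common factor
    (severe cases / population size) cancels in the ratio.
    """
    if not (0 < freq_in_population < 1 and 0 <= freq_in_severe < 1):
        raise ValueError("frequencies must be proportions in the valid range")
    risk_with = freq_in_severe / freq_in_population
    risk_without = (1 - freq_in_severe) / (1 - freq_in_population)
    return risk_with / risk_without

# Hypothetical comorbidity: present in 10% of the population, 40% of hospitalized cases
print(f"relative risk of hospitalization: {relative_risk(0.40, 0.10):.1f}")  # 6.0
```

A characteristic that is no more common among severe cases than in the population yields a relative risk of 1, as expected.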

1.4 Measures to Slow Transmission

Decisions also must be made about interventions to prevent transmission. In the early phases of a pandemic, these may include screening travelers as they enter or leave a jurisdiction and isolating those who are symptomatic. Mathematical models indicate that such measures are unlikely to delay global spread of influenza by more than about 2 weeks.18–20 In 2009, some jurisdictions judged this delay adequate to justify stringent border controls, and evidence shows that the controls measurably delayed the start of local transmission of the H1N1 virus in several regions.21 Once community transmission is established, however, the measures are less useful, so a key role for surveillance is to inform decision makers about the extent of infection within their jurisdictions.

Other nonpharmaceutical interventions to slow transmission in a community include school dismissals and cancellation of public gatherings; these measures are often undertaken reactively based on transmission in an individual school or district. These decisions specifically rely on fine-grained local data (see sidebar: Local Surveillance Data).

Local Surveillance Data: When Is It Necessary?

In an ideal situation, every public health authority could access its own high-quality, local data to synthesize into evidence relevant for its disease control decisions. Gathering, processing, and interpreting data, however, cost money, time, and expertise, and few jurisdictions worldwide can undertake these activities independently. Decision makers must instead rely on a heterogeneous mix of local, regional, and global data sources.

Local authorities, particularly elected officials, may want data on the progress of the local epidemic for a variety of reasons. In addition, from a public health and evidence-based decision-making perspective, 3 basic arguments favor geographically distributed surveillance.

First, infection may remain geographically focal for weeks to months. In 2009, transmission remained focal within Mexico for at least 1 month, probably longer. Once the virus spread to other countries, transmission was again initially confined to certain areas. When early transmission is geographically circumscribed, the first estimates of key parameters that serve to inform decision makers worldwide must rely on data from areas of ongoing transmission. In the UK, even by the end of the summer 2009 wave of transmission, seroprevalence was higher in London and the West Midlands than elsewhere.65

Second, even when infection spreads virtually everywhere, its characteristics may differ across regions, resulting in different local priorities for control. In 2009, many risk factors for severe outcome—race/ethnicity,45,135 income,35 comorbidities,14,135 healthcare access,14,135 and exposure to bacterial co-infections76—varied geographically on scales ranging from neighborhood to continent. Global awareness of these and other risk factors depends on having some form of surveillance available in populations where risk factors are concentrated. Awareness of local disparities in infection rates or severe disease35,71 within a jurisdiction can also improve resource allocation.

Third, some decisions, such as school dismissals (and reopenings) in response to within-school transmission, require very detailed, real-time local data. During the 2009 pandemic, these decisions had to be made based on limited data with known biases. One example is school absences, which can be due either to influenza infection or to fear of acquiring influenza at school. More generally, decisions about specific tactics to control transmission in particular settings—such as schools, hospitals, or other institutions, or aircraft or other vehicles—necessarily rely on local data. Surveillance to trigger and guide such interventions has been called “control-focused” surveillance, in contrast to the “strategy-focused” surveillance3 that is discussed throughout most of this article.

These factors favoring local data must be balanced against resource limitations and competing public health priorities. For many questions—specifically, those for estimating overall severity—high-quality data from within a country or a group of countries with broadly similar health systems are likely adequate and often more reliable than local data from an individual jurisdiction. As noted in section 3.1, sharing of data across hemispheres can be particularly valuable, given the seasonality of transmission.86,87

To avoid making misleading comparisons, consumers of local surveillance data should also know the factors that differ among jurisdictions. In the spring-summer of 2009, the city of Milwaukee, Wisconsin, confirmed about 3 times as many cases as did New York City, even though New York has about 8 times the population. This was due not to a greater incidence in Milwaukee, but to different decisions regarding whom to test. Milwaukee tested many mildly ill individuals, while New York City focused on hospitalized cases.44 Clearly, without knowledge of surveillance differences, it is easy to misinterpret differences in case numbers.
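The size of the distortion in this example can be checked with simple arithmetic that uses only the ratios given above (about 3 times the confirmed cases, about one-eighth the population); no absolute counts are assumed. If true incidence had been similar in the two cities, the result would imply a roughly 24-fold difference in the fraction of infections confirmed.

```python
def per_capita_ratio(cases_a: float, pop_a: float, cases_b: float, pop_b: float) -> float:
    """Ratio of confirmed cases per capita in jurisdiction A vs. jurisdiction B."""
    return (cases_a / pop_a) / (cases_b / pop_b)

# Relative values from the text: Milwaukee had ~3x New York City's confirmed cases,
# and New York City has ~8x Milwaukee's population.
ratio = per_capita_ratio(cases_a=3.0, pop_a=1.0, cases_b=1.0, pop_b=8.0)
print(f"Milwaukee vs. NYC, confirmed cases per capita: ~{ratio:.0f}x")  # ~24x
```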

The above considerations suggest that, to prepare for future pandemics:

- decision makers should be educated about the limitations of local data and the cost-benefit trade-off of gathering high-quality data at the local level;
- national public health agencies should maintain the epidemiologic and laboratory capacity to study focal outbreaks, including those in areas that lack high-quality routine surveillance or local capacity for such investigations; and
- high-quality routine surveillance systems should be geographically distributed within and among countries to improve the likelihood that some systems will be in place in populations that experience early waves of infection. This approach should improve the timeliness of estimates of key parameters for national or international use.

Vaccination can also be used to slow transmission and protect nonvaccinated people. Appropriately targeting vaccines for this purpose requires identifying the groups most likely to become infected and then infect others in the population.22,23 Although school-age children play a key role in this process, their importance often declines as a pandemic wave progresses. Changes in the age distribution of cases can help track this trend.22

1.5 Investment Allocation

Decision makers must allocate limited resources between measures to protect individuals and measures to reduce transmission. In addition to money, resource limitations can involve public health personnel, public attention, and supplies. For example, the supply of antiviral drugs is limited, as is the virus's susceptibility to them, which can also be considered a limited “resource.”24 Consequently, using antivirals to slow transmission may hinder their future use for treatment.19,25

Limited vaccine supplies create a trade-off between vaccinating those at highest risk and those most likely to transmit infection (see sidebar: Prioritizing Vaccination). The key questions for prioritization are: Who is at highest risk, and how readily can they be identified? On the other hand, who are the transmitters? When vaccine becomes available in substantial quantities, will transmission be ongoing at a high level? Will the group driving transmission early in the pandemic (eg, schoolchildren) still be the key driver, or will susceptible members of that group have been largely exhausted, making their vaccination less effective?22

Prioritizing Vaccination.

Vaccines have 2 effects: direct protection of the vaccinated individual against infection and its consequences and indirect protection of the population, in which certain individuals are vaccinated to reduce their risk of becoming infected and subsequently passing on the infection. The ability of immune individuals to protect others against infection is often called herd immunity.

When vaccine availability is limited, the choice of whom to vaccinate first is partly a strategic decision to focus on either direct protection or herd immunity. Table 1 describes key differences in these strategies and in the information required to implement them.

Predictions of the likely timing and magnitude of the peak (or peaks) of disease incidence would also facilitate response planning and resource allocation by anticipating likely periods of intense stress on healthcare providers. Optimally targeting vaccination depends on the number of doses available before the peak of transmission (see sidebar: Prioritizing Vaccination). Raw surveillance data, by definition, describe the past; they do not predict the future course of the pandemic. We argue in section 3.4, however, that carefully designed surveillance programs, combined with mathematical and statistical modeling to infer the number of infected individuals, could provide considerable insight into that course and help decision makers decide which scenarios to prioritize.
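To illustrate how modeling can turn assumptions into projected peak timing, the sketch below integrates a basic SIR (susceptible-infectious-recovered) model for several hypothetical reproduction numbers. The population size, 3-day infectious period, initial seed, and R0 values are all assumptions chosen for illustration; a real analysis would fit such quantities to surveillance data and account for age structure, interventions, and reporting.

```python
def sir_peak(r0: float, infectious_days: float, population: float,
             initial_infected: float, days: int = 365, dt: float = 0.1):
    """Forward-Euler SIR model; returns (day of peak prevalence, peak number infectious)."""
    gamma = 1.0 / infectious_days      # recovery rate per day
    beta = r0 * gamma                  # transmission rate per day
    s, i = population - initial_infected, initial_infected
    peak_day, peak_i = 0.0, i
    for step in range(1, int(days / dt) + 1):
        new_infections = beta * s * i / population * dt
        new_recoveries = gamma * i * dt
        s -= new_infections
        i += new_infections - new_recoveries
        if i > peak_i:
            peak_day, peak_i = step * dt, i
    return peak_day, peak_i

# Hypothetical scenarios for a population of 1,000,000 seeded with 10 infections
results = {r0: sir_peak(r0, infectious_days=3.0, population=1_000_000, initial_infected=10)
           for r0 in (1.3, 1.5, 1.8)}
for r0, (day, peak) in results.items():
    print(f"R0 = {r0}: peak near day {day:.0f} with ~{peak:,.0f} people infectious")
```

Higher assumed transmissibility gives an earlier and taller peak. For a closed SIR model the peak prevalence also has the closed form N(1 - (1 + ln R0)/R0), about 118,000 here for R0 = 1.8, which offers a check on the numerics.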

Decisions about prioritizing interventions will need to balance projected benefits of prioritizing particular groups against important considerations of logistics and public acceptance. As in all matters of public health prioritization, the calculation of projected benefits depends on one's assumptions about the relative value of preventing morbidity versus mortality, and about the relative value of preventing mortality in various groups. This question has been heavily debated,26 and, in the case of pandemic influenza, it is clear that unless vaccines are so plentiful that transmission can be completely or nearly halted,27 policies to minimize total mortality may differ from those to minimize years of life lost or disability-adjusted years of life lost.28–30 Moreover, efforts to target particular groups may result in underuse of available supplies, be difficult to implement, or provoke negative public response if some individuals disagree with the choice of whom to prioritize. In the U.S., a public engagement process in 2006 documented public preferences for groups that should be prioritized in the event of a pandemic.31
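A toy calculation shows how the choice of objective can change which group is prioritized. The two groups, their deaths averted per 1,000 doses, and their remaining life expectancy are hypothetical, and the sketch counts only direct protection, ignoring the indirect (herd) effects discussed in the Prioritizing Vaccination sidebar.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Group:
    name: str
    deaths_averted_per_1000_doses: float    # hypothetical direct effect
    remaining_life_years_per_death: float   # hypothetical mean remaining life expectancy

    @property
    def life_years_saved_per_1000_doses(self) -> float:
        return self.deaths_averted_per_1000_doses * self.remaining_life_years_per_death

groups = [
    Group("older adults (hypothetical)", 0.50, 12.0),
    Group("younger adults with comorbidities (hypothetical)", 0.30, 45.0),
]

first_by_deaths = max(groups, key=lambda g: g.deaths_averted_per_1000_doses)
first_by_life_years = max(groups, key=lambda g: g.life_years_saved_per_1000_doses)
print("first priority, minimizing deaths:            ", first_by_deaths.name)
print("first priority, minimizing years of life lost:", first_by_life_years.name)
```

With these inputs the first group averts more deaths per dose (0.50 vs. 0.30), but the second saves more life-years (13.5 vs. 6.0), so the two objectives point to different first priorities.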

1.6 Timing of Responses

Finally, decision makers must determine when to set policies in motion, when to change existing policies, and which decisions to delay. A key lesson of the 1976 swine flu outbreak was that certain decisions can and should be delayed until evidence accumulates.32 Decisions that cannot wait can be revised in light of new evidence on severity and intervention effectiveness. During the 2009 pandemic, for example, early decisions to close schools in the U.S. in April were quickly revised as evidence grew to suggest an illness severity in the U.S. lower than that first reported in Mexico. (Later, it became clear that the severity in Mexico was also lower than it initially appeared.33)

Table 1.

Considerations in the Use of Vaccines for Direct Protection of Vulnerable People vs. Herd Immunity

| Strategy | Direct Protection | Herd Immunity |
|---|---|---|
| Goal | To protect the vaccinated directly against infection, illness, hospitalization, or death | To protect the population (including the nonvaccinated) against infection (and its consequences) by reducing transmission |
| Criteria for who should receive priority for vaccination | Individuals who will benefit most from the vaccine's effects: groups at high per capita risk of severe outcomes (infection risk × severity) | Individuals at high risk of becoming infected and transmitting infection to others (initially, schoolchildren; also, certain healthcare workers) |
| Data used to identify priority groups | Predictors of high risk of severe outcome (eg, risk factors for death or hospitalization, compared with the general population); evidence that the vaccine is effective in the high-risk groups (difficult to obtain in the pandemic setting, but possible to extrapolate from seasonal vaccines) | Incidence rate and/or force of infection by age group;22 estimates of potentially infectious contacts per day in different groups136,137 |
| Factors favoring the strategy | Convincing data on who is at highest risk (Note: Predictors of high risk need not be causal, only reliable markers of high risk.); good immunogenicity of the vaccine in the high-risk groups; limited quantities of vaccine; late availability of vaccine | High-risk groups are unknown or vaccine has limited effectiveness in them (eg, the elderly during seasonal influenza17); large supplies of vaccine available in time to significantly reduce transmission;7,22,23 evidence available on the key transmitters22 (Note: This may change over time, as most affected groups become increasingly immune and contribute less to transmission as the epidemic progresses.) |

2. Sources of Surveillance and Epidemiologic Data

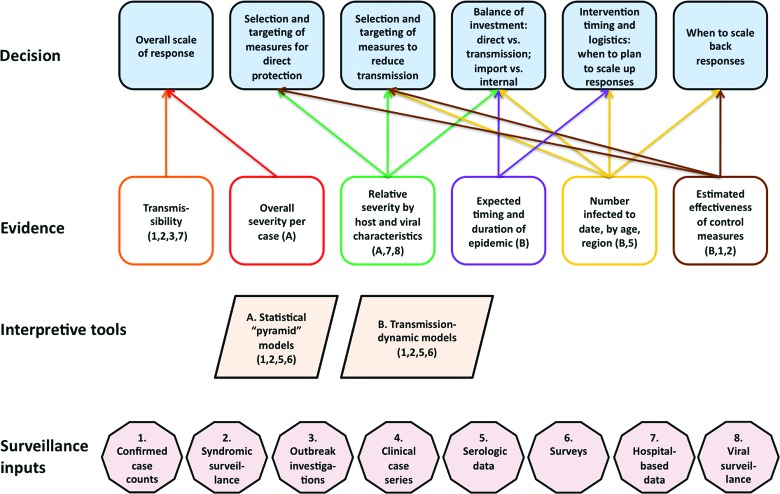

This section describes data sources—currently or potentially available—that provide the evidentiary basis for the decisions outlined in section 1 and shown in Figure 1.

Figure 1.

A schematic view of the public health decisions required in a pandemic response, the evidence needed to make these decisions in an informed fashion, and the sources of data and interpretive tools necessary to generate this evidence. The numbers under “surveillance inputs” follow the order of section 2. Color images available online at www.liebertonline.com/bsp

2.1. Confirmed Cases

Awareness of the novel influenza strain first arose from its detection in young patients in California who presented with influenzalike illness (ILI) and were tested for influenza as part of routine surveillance. Soon after, laboratory data on cases of severe atypical pneumonia in young adults in Mexico confirmed the presence of the pandemic strain of H1N1 (pH1N1). These early cases confirmed that pH1N1 could cause severe lower respiratory tract infection in young adults, a characteristic of previous pandemics.

The earliest quantitative surveillance data specific to 2009 H1N1 were daily reports of laboratory-confirmed or probable cases of the infection. As public awareness spread and more laboratories acquired the capacity to test for the new virus, testing became increasingly common in many jurisdictions, including the U.S., and was extended to mild cases. By one estimate, the fraction of cases detected in the U.S. was increasing by 10% per day from late April to early May.34
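When the fraction of cases detected is itself rising, case counts grow faster than infections, and a growth rate estimated from counts is biased upward by the growth rate of ascertainment. The sketch below assumes a hypothetical true growth of 5% per day and uses the 10%-per-day increase in detection cited above; fitting a log-linear trend to the observed counts recovers the sum of the two (log-scale) rates rather than the true rate.

```python
import math

def loglinear_growth_rate(counts: list[float]) -> float:
    """Least-squares slope of log(count) against day index (per-day exponential growth rate)."""
    n = len(counts)
    ys = [math.log(c) for c in counts]
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den

TRUE_GROWTH = math.log(1.05)           # hypothetical: infections grow 5% per day
ASCERTAINMENT_GROWTH = math.log(1.10)  # detected fraction grows 10% per day, as estimated in the text
DAYS = 14

true_cases = [1000 * math.exp(TRUE_GROWTH * t) for t in range(DAYS)]
detected_fraction = [0.01 * math.exp(ASCERTAINMENT_GROWTH * t) for t in range(DAYS)]
observed_cases = [c * f for c, f in zip(true_cases, detected_fraction)]

r_obs = loglinear_growth_rate(observed_cases)
print(f"true growth rate:     {TRUE_GROWTH:.3f}/day, doubling every {math.log(2) / TRUE_GROWTH:.1f} days")
print(f"apparent growth rate: {r_obs:.3f}/day, doubling every {math.log(2) / r_obs:.1f} days")
```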

Case reports to public health officials in some countries included age, gender, comorbidities, outcome (ie, hospitalization, ICU admission, recovery, or death), date of symptom onset, geographic location, and the like. By mid-May, however, the proportion of cases tested had declined in the U.S. because of testing fatigue and a lack of resources to test a growing case burden consistently.1 Similar changes occurred worldwide, prompting WHO to recommend ending routine testing of all suspect cases. Counting confirmed cases of hospitalized, ICU-admitted, or fatal H1N1 infection remained more feasible and thus became the focus of some systems, including the U.S. Emerging Infections Program (hospitalizations), New York City,35,36 and the later phases of surveillance in Hong Kong.35–37

As case counts grew, aggregate reporting replaced individual case reports in most jurisdictions, so details of individual patients were often no longer available. Furthermore, most symptomatic cases were not tested, confirmed, or reported,38 and the proportion tested varied geographically and over time.

Early in the 2009 pandemic, population-wide case count data were useful for estimating transmissibility5,34,39–43 and served as a key input for WHO's declaration of Pandemic Phase 5 and the decision by many countries to undertake a large-scale response. These data were also used to make initial severity estimates,11,44,45 although biases were recognized, leading to considerable uncertainty in the estimates. Combining case counts among travelers to Mexico returning to their home countries with estimates of travel volume and assumptions about their exposure led to early estimates that the number of confirmed cases in Mexico was several orders of magnitude lower than the total number of infections.5,6 This showed that severity was considerably lower than a simple ratio of deaths to confirmed cases would have suggested. Overall, however, the varied rates of testing and reporting reduced the usefulness of case count data alone to estimate key parameters.
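The logic of the traveler-based estimate can be sketched as follows: the infection risk per person-day observed among returning travelers is scaled up to the resident population. Every number below is hypothetical, and the approach rests on the assumptions noted above: that travelers' exposure relative to residents is known (here set by a hypothetical `relative_exposure` parameter) and that infections among returning travelers are detected far more completely than infections among residents.

```python
def infections_from_travelers(traveler_cases: float, traveler_person_days: float,
                              resident_population: float, exposure_days: float,
                              relative_exposure: float = 1.0) -> float:
    """Estimate resident infections from the per-person-day attack rate in travelers.

    relative_exposure: travelers' daily infection risk relative to residents'
    (1.0 means equal risk; values > 1 mean travelers were more exposed).
    """
    traveler_daily_risk = traveler_cases / traveler_person_days
    resident_daily_risk = traveler_daily_risk / relative_exposure
    return resident_daily_risk * resident_population * exposure_days

# Hypothetical inputs, for illustration only
estimated = infections_from_travelers(traveler_cases=40, traveler_person_days=1_000_000,
                                      resident_population=100_000_000, exposure_days=30)
confirmed_locally = 500   # hypothetical count of confirmed cases among residents
print(f"estimated infections: ~{estimated:,.0f}")
print(f"infections per confirmed case: ~{estimated / confirmed_locally:,.0f}")
```

Dividing deaths by the estimated number of infections, rather than by confirmed cases, is what pulls the severity estimate down when confirmation is this incomplete.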

2.2. Syndromic Surveillance

Perhaps the most widely used sources of data for monitoring the course of the 2009 pandemic were syndromic surveillance systems, which track visits to primary care providers or emergency departments for a defined syndrome, such as influenzalike illness or acute respiratory illness.

Some systems, including ILINet in the U.S. and similar systems elsewhere, routinely track seasonal and pandemic influenza. Others, such as emergency department surveillance systems, were originally designed to detect natural disease outbreaks and acts of bioterrorism. To our knowledge, the earliest appearance of the pandemic did not trigger a quantitative alert in any of these systems, although 4 of the earliest cases in the U.S. presented at providers who were members of ILINet and so were tested and flagged for attention. At a later stage of pandemic spread, both the purpose-built influenza syndromic surveillance systems and the more general, detection-oriented systems proved very valuable for tracking the relative level of ILI over time, across age groups, and across geographic areas—data that were important for many of the decisions described in section 1. With several assumptions, ILI surveillance can be transformed into symptomatic influenza case estimates (see sidebar: From Syndromic Surveillance to Estimates of Symptomatic Influenza).

From Syndromic Surveillance to Estimates of Symptomatic Influenza.

Can syndromic data be used to make reliable estimates of influenza-attributable symptomatic disease? The answer depends on one's ability to accurately estimate several key quantities that define the relationship between syndromic surveillance outputs and the underlying number of pandemic influenza-attributable symptomatic infections. These quantities may change over time, so a system that provides reliable estimates in one situation may or may not remain reliable from month to month.

Here we make these relationships explicit and identify the key quantities that must be estimated to convert syndromic data into estimates of pandemic influenza-attributable symptomatic illness. In this box, the term symptomatic is used to mean “meeting the definition of influenzalike illness (ILI): fever, and either cough or sore throat.”

We define the following quantities for week $w$:

$C_w$ = the number of ILI consultations per 100,000 population per week

$F_w$ = the number of people with ILI whose symptoms are caused by pandemic influenza per 100,000 persons per week

$P_w$ = the probability that an individual with ILI caused by pandemic influenza seeks medical attention and is diagnosed with ILI

$N_w$ = the number of individuals with ILI seeking care per 100,000 population per week, whose symptoms are not caused by pandemic influenza

The relationship among these quantities is:

$$C_w = F_w P_w + N_w$$

where $F_w$ is the number we would like to estimate, since this represents the true incidence of ILI due to pandemic influenza.
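Rearranging the relationship gives $F_w = (C_w - N_w)/P_w$. The sketch below applies it to one hypothetical week ($C_w = 120$, $N_w = 20$ per 100,000) and shows how strongly the estimate depends on $P_w$, using the 40% and 60% bounds of the U.S. range and the 5.5% New Zealand value cited later in this sidebar. Clamping a negative numerator to zero is a design choice of this sketch, not part of the method described in the text.

```python
def pandemic_ili_incidence(c_w: float, p_w: float, n_w: float) -> float:
    """Solve C_w = F_w * P_w + N_w for F_w (cases per 100,000 per week).

    c_w: ILI consultations per 100,000 per week (observed)
    p_w: probability a pandemic-influenza ILI case seeks care and is diagnosed
    n_w: ILI consultations per 100,000 per week not caused by pandemic influenza
    """
    if not 0 < p_w <= 1:
        raise ValueError("p_w must be a probability in (0, 1]")
    # Clamp at zero: if n_w is estimated above c_w, attribute nothing to the pandemic.
    return max(c_w - n_w, 0.0) / p_w

C_W, N_W = 120.0, 20.0   # hypothetical week: 120 consultations, 20 not pandemic-related
for p_w in (0.6, 0.4, 0.055):
    f_w = pandemic_ili_incidence(C_W, p_w, N_W)
    print(f"P_w = {p_w:.1%}: F_w = {f_w:,.0f} pandemic ILI cases per 100,000")
```

The same consultation data yield estimates differing more than tenfold across these values of $P_w$, which illustrates why borrowing $P_w$ from another setting can mislead.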

$C_w$ can be measured using data from a variety of sources, such as the sentinel systems in France,138 the United Kingdom,139 and New Zealand,45 and other general-purpose systems, such as those based in health maintenance organizations in the U.S. Weekly data on the proportion of ILI among primary care consultations can be obtained from systems like ILINet in the U.S.; unlike the population-based systems mentioned above, this proportion is not an incidence rate.

In settings where an incidence rate $C_w$ of ILI consultations is available, the challenge in determining $F_w$ is to estimate $P_w$ and $N_w$.

$N_w$ may be negligible, especially in adults, if the pandemic occurs outside the normal winter respiratory infection season, as happened in the northern hemisphere in 2009. However, this need not be the case: in Mexico, the start of the pandemic overlapped with the end of seasonal influenza, and in the southern hemisphere, pandemic H1N1 transmission coincided with the normal winter season.

$P_w$ measures the probability that a symptomatic individual in week $w$ who is truly infected with pandemic influenza seeks care and is diagnosed with ILI. $P_w$ appears to vary geographically and possibly over time, perhaps in response to levels of public concern. In the U.S., $P_w$ has been consistently estimated at about 40% to 60%;38 during the 2009 pandemic in New Zealand, by contrast, it was estimated at 5.5%.45

However, at times of greater public concern about influenza, both $P_w$ and $N_w$ (the incidence of consultations for ILI caused by something other than pandemic influenza) tend to rise. New York City, for example, saw spikes in consultations by the worried ill, and sometimes by the worried well, following news reports about outbreaks or deaths. Specific case definitions, such as requiring measured fever to define ILI, should reduce variability due to the worried well, but they do not prevent changes in syndromic counts caused by an increase in the worried ill, that is, increases in $P_w$ and $N_w$. Even more specific case definitions, such as emergency department consultation resulting in hospital admission for ILI, should also reduce the impact of the worried ill; New York City used this approach in 2009.

One approach to estimating $F_w$ is to make assumptions about $P_w$ and $N_w$, based on telephone or web surveys and knowledge of other causes of ILI.

Another approach is to estimate, each week, the proportion of all medically attended ILI caused by pandemic influenza. In our notation, this proportion is $F_w P_w / C_w$. It can be estimated by testing a representative subset of symptomatic individuals meeting the syndromic case definition (here, medically attended ILI) for pandemic influenza infection.1 Such an estimate gives a consistent relative measure of symptomatic pandemic influenza infection, but not a rate per population, since it does not directly estimate $P_w$. To limit the laboratory burden, the number of specimens tested must be capped even as case numbers grow (for example, by testing a fixed number of random samples weekly). Even so, such testing is possible only in populations with sufficient laboratory capacity.
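The second approach can be sketched as follows. Multiplying $C_w$ by the proportion of tested specimens that are positive estimates $F_w P_w$, the rate of medically attended pandemic ILI; recovering $F_w$ still requires an assumed $P_w$. Because a fixed number of specimens is tested each week, the sketch also reports a Wilson score interval for the proportion positive, one standard interval for a binomial proportion (our choice, not one specified in the text). All weekly values, and the assumed $P_w$, are hypothetical.

```python
import math

def wilson_interval(positives: int, tested: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (approx. 95% for z = 1.96)."""
    p = positives / tested
    denom = 1 + z**2 / tested
    center = (p + z**2 / (2 * tested)) / denom
    half_width = z * math.sqrt(p * (1 - p) / tested + z**2 / (4 * tested**2)) / denom
    return center - half_width, center + half_width

def attended_pandemic_ili(c_w: float, positives: int, tested: int) -> float:
    """Estimate F_w * P_w: medically attended pandemic ILI per 100,000 per week."""
    return c_w * positives / tested

TESTS_PER_WEEK = 50                              # fixed weekly sample, regardless of case load
weeks = [(40.0, 10), (80.0, 25), (150.0, 35)]    # hypothetical (C_w, positives)
ASSUMED_P_W = 0.5                                # hypothetical care-seeking probability

for c_w, positives in weeks:
    fp = attended_pandemic_ili(c_w, positives, TESTS_PER_WEEK)
    lo, hi = wilson_interval(positives, TESTS_PER_WEEK)
    print(f"C_w={c_w:>5.0f}  positive {positives}/{TESTS_PER_WEEK} "
          f"(95% CI {lo:.0%}-{hi:.0%})  F_w*P_w={fp:.0f}  F_w~{fp / ASSUMED_P_W:.0f}")
```

The trend in $F_w P_w$ is usable on its own as a relative measure; the final column changes proportionally with whatever value of $P_w$ is assumed.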

Syndromic measures of ILI consultations are easy to understand, relatively inexpensive and scalable. In the U.S., coverage of emergency department surveillance increased significantly during the pandemic because multiple jurisdictions contributed age-stratified data to the Distribute Network,46 which was updated in nearly real-time on the Web. Syndromic surveillance also was used to compare trends in medically attended ILI cases in 2 neighboring jurisdictions, one of which implemented school dismissal while the other did not, to make a nearly real-time assessment of whether school dismissal affected transmission.47

The 2009 experience did provide 2 clear examples where syndromic data were misleading. The first was a brief surge in ILI encounters observed in many syndromic systems in the U.S. during weeks 17 and 18, coinciding with intense media coverage of early cases and outbreaks. Syndromic data are sensitive to changes in healthcare-seeking behavior, and the tendency of mildly ill patients—the so-called worried ill or worried well—to seek care during periods of heightened concern can trigger false signals.48 In addition to being subject to misinterpretation, these false alarms perturb natural baseline patterns in the data, making it more difficult to detect subsequent increases representing real illness. In some jurisdictions during spring 2009, the initial surge in worried well visits was still subsiding just as ILI activity began accelerating, making these systems less useful for detecting the onset of community-wide illness.

A second example was the temporary slowing in the growth of ILI activity in the U.S. around week 38 (mid-September).49 Data gathered from most U.S. regions for that time showed the trend in consultations for ILI becoming almost flat over a 2- to 3-week period before accelerating to reach a true peak about 4 weeks later. This false peak remains unexplained, but it was credible at the time because of its replication throughout the country.

2.3. Outbreak Investigations

Investigations of outbreaks in defined populations are a classic tool of public health practice, usually designed to discover the cause of an outbreak, identify risk factors, and assess intervention effectiveness. In an influenza pandemic, however, outbreak investigations offer key advantages over routine, population-based surveillance in defining characteristics of the new infection. They can identify focal pockets of infectious transmission weeks or months before the eventual global spread of the infection, since initial seeding into particular geographic areas occurs with the arrival of 1 or a few infected people, and transmission may be concentrated in particular groups, such as schools or universities. In the 2009 pandemic, for example, early outbreaks were observed in contained settings such as schools,50–53 military camps,54 and universities.55

Outbreak investigations in sufficiently large but localized populations can also provide relatively unbiased estimates of case severity, because severe outcomes (hospitalizations, deaths: the numerators for severity estimates) can be determined with high reliability, while symptomatic attack rates (the denominator) can be estimated using surveys. One of the earliest compelling indications of the relatively low symptomatic case-fatality and case-hospitalization ratios in young adults in 2009 came from the University of Delaware outbreak.55 Such studies provide rapid, reliable data, although estimates may be limited to certain demographic groups.

Another advantage of studying outbreaks, particularly in nonresidential settings, is that once a case is identified, household contacts can be tested for infection (through virus detection and serology), monitored for symptoms and outcomes, and later tested again serologically. Viral shedding or serologic data, or both, can be combined with symptom data to estimate the proportion of infectious or infected individuals with particular symptom profiles, including asymptomatic infection, and thus aid in evaluating case definitions. These prospective studies can also estimate the distribution of shedding times.56,57 As noted earlier, confirmed cases identified as a result of people having sought medical care often represent a biased sample skewed toward severe cases. Identifying possible cases through exposure, such as by household contact tracing, yields a less biased sample. In 2009, a study of household contacts of cases from a Pennsylvania school outbreak provided estimates of household transmission rates and further evidence on severity.58

Outbreak investigations could be improved through advance planning of study designs and by having epidemiologically trained personnel conduct the studies. Since not every outbreak can be fully investigated, planners should concentrate resources on investigations likely to generate the most useful and generalizable data. Investigation plans should also be adaptable to the location judged most likely to be informative, which cannot be predicted until the pandemic is under way. Collaborations between public health authorities and experts in statistical analysis of outbreak data58 can help quantify epidemiologic parameters of the pandemic and define how they are used to model the future spread of the infection.

2.4. Clinical Case Series

Descriptive data on mild, hospitalized, and fatal cases are valuable for many of the evidentiary needs described in section 1. These data comprise line lists, with each line describing at least an individual's demographics (age, sex, place of residence), preexisting medical conditions, and outcome, and ideally including data on the course of the illness and its treatment. Data for severe cases are collected by state and local health departments and often come from hospital records,14,35 while data for milder cases could be obtained from primary care providers, albeit with more difficulty in a setting facing intense healthcare demands.

Assessing risk factors requires data on the frequency of the same preexisting conditions and demographic traits in the general population. Remarkably, comorbidity frequencies in the general population are often hard to obtain, even in resource-rich countries, particularly for rare diseases (such as neurologic disorders) or for co-occurrence of more than one condition.59 Nonetheless, combining comorbidity data from a few hundred cases with estimates of population frequencies can suggest the factors associated with large excess risks. In addition, these hospitalized case series provide one element of the severity “pyramid” (section 3.1) by defining the proportion of hospitalized cases that require ICU admission or mechanical ventilation, and the proportion that are fatal.

2.5. Serologic Data

As described in section 1.3, measures of severity per infected individual are extremely valuable for informing decisions about the scale and targeting of response to an emerging pandemic. To estimate severity per infected individual, it is of course necessary to estimate the number of infected people. The gold standard for detecting infection is testing paired serum samples (preexposure and convalescent on the same person) for virus neutralization or, more often, hemagglutination inhibition.60 The rapid initiation of prospective studies required to collect paired samples is challenging in a pandemic. Therefore, cross-sectional studies of convalescent sera provide a viable alternative. Although other kinds of data—estimates of the number of symptomatic, medically attended, or virologically confirmed infections in a population—are useful for estimating the number of infections, these measures are difficult to interpret because the “multiplier” relating any of them to true infections likely varies by time, population, and characteristics (eg, age) within a population. All the sources of uncertainty noted in the sidebar on syndromic surveillance contribute to uncertainty in the ratio between serological infections and symptomatic or medically attended infections. If serologic data are not available, these sources of uncertainty combine with uncertainty about the proportion of symptomatic infections to result in wide confidence bounds on the ratio of severe outcomes to infection.61

Another potentially highly valuable use of serologic data is to estimate parameters for transmission-dynamic models to project the course of an epidemic.62,63 Transmissibility can be estimated from the early growth rate of case numbers, which does not depend on the proportion of cases reported (as long as that proportion stays constant or changes are accounted for34,37). To determine the timing and magnitude of the peak in incidence, the estimated transmissibility must be combined with an estimate of the absolute number of individuals in age groups who have been infected at a particular time. If sufficient resources are available, the number infected can be measured continuously in almost real time by an ongoing serologic study.64 More economically, one could estimate, at one point for a defined population, the proportion of true infections (serologically determined) that result in medically attended illness, hospitalization, or other more convenient surveillance measure. Syndromic or hospitalization surveillance can thereafter be a proxy for ongoing serological surveillance.
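To illustrate why the early growth rate does not depend on a constant reporting fraction, the sketch below fits a log-linear regression to hypothetical daily counts, converts the growth rate r into a reproduction number using the Wallinga and Lipsitch relation for a gamma-distributed generation interval, R = (1 + r·θ)^k (shape k, scale θ), and compares an assumed seroprevalence with 1 − 1/R, the immune fraction beyond which the number currently infected declines in a simple homogeneous-mixing model. The generation-interval parameters, counts, and seroprevalence are assumptions chosen for illustration, not estimates for 2009.

```python
import math


def growth_rate(counts: list[float]) -> float:
    """Least-squares slope of log(daily counts) against day: the exponential growth rate r per day."""
    days = range(len(counts))
    logs = [math.log(c) for c in counts]
    n = len(counts)
    mean_t = sum(days) / n
    mean_y = sum(logs) / n
    cov = sum((t - mean_t) * (y - mean_y) for t, y in zip(days, logs))
    var = sum((t - mean_t) ** 2 for t in days)
    return cov / var


def reproduction_number(r: float, shape: float, scale: float) -> float:
    """R = (1 + r * scale) ** shape for a gamma-distributed generation interval."""
    return (1 + r * scale) ** shape


# Hypothetical counts growing at 0.1/day; only 5% are reported, constantly over time
true_counts = [10 * math.exp(0.1 * d) for d in range(21)]
reported = [0.05 * c for c in true_counts]
r = growth_rate(reported)  # the reporting fraction only shifts the intercept, not the slope
R = reproduction_number(r, shape=3.0, scale=1.0)  # assumed mean generation interval of 3 days
threshold = 1 - 1 / R
seroprevalence = 0.15  # hypothetical serosurvey result
print(f"r = {r:.3f}/day, R = {R:.2f}, threshold immune fraction = {threshold:.0%}")
print("past threshold" if seroprevalence >= threshold else "still below threshold")
```

The growth rate alone fixes R, but only the serologic (or otherwise absolute) estimate of how many have been infected says how close the population is to the turning point.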

The 2009 pandemic illustrated the challenges of using serology to detect infection. Development of specific serologic markers of pandemic H1N1 infection was hampered by cross-reactions with antibodies resulting from prior seasonal influenza infection. In addition, the experts needed to develop and optimize such assays were in demand for other tasks, including developing assays for vaccine candidate evaluation. Nevertheless, serologic data were obtained on statistically meaningful samples of the population in the United Kingdom65 and Hong Kong.56,64 Workers in Hong Kong gathered symptomatic and serologic data within the same defined population, in the context of a household study56 that yielded a multiplier between symptomatic and serologic cases. More detailed quasi-population-based serologic studies have been described from Hong Kong in recent meetings.64 The value of improving the capacity to conduct large-scale serosurveillance in many populations for future pandemics engendered lively debate at the Symposium, as it has in other recent influenza meetings (see sidebar: Debating the Value of Large-Scale Serosurveillance). For early estimates of seroprevalence, serosurveys may be needed in populations where laboratory capacity for processing samples is absent or inadequate. Collaborative arrangements may be needed (and ideally should be set up in advance) to ensure timely processing of samples and dissemination of results.

Debating the Value of Large-Scale Serosurveillance.

Perhaps the most spirited debate at the CCDD Symposium and, indeed, among the authors of this report, concerned the priority and feasibility of conducting serosurveillance during a pandemic. Both sides agree on the importance of estimating the cumulative incidence of infection over time as the pandemic unfolds, for reasons described in sections 1 and 2. The argument in favor of serosurveillance61 emphasizes that serologic testing is the gold standard for such estimates and that all other approaches suffer from considerable uncertainty. The argument against serosurveillance emphasizes the expense and logistical difficulty of wide-scale serologic testing, the unavailability and limitations of early serologic tests (which add uncertainty to estimates), and the possibility of obtaining estimates of infection from nonserologic sources. On this view, a large-scale prepandemic investment would be needed to improve current influenza serologic assay technology enough that valid serologic tests could be developed quickly at the start of the next pandemic.

As noted, challenges in serologic testing include: the need to obtain ethical approval for serologic testing in some locations; poor sensitivity and specificity of tests for some novel viruses (including many early tests for 2009 H1N1); the variable time to seroconversion, which adds variance to estimates of the proportion positive at any one time; and the labor involved in extensive testing, especially repeat testing. Proponents of serosurveillance note that statistical models can be used to adjust for the sensitivity, specificity, and variation in time to seroconversion; in fact, work is under way on such models. They also stress that tests with low sensitivity and specificity, which might be poor tools for clinical diagnosis of an individual, can still provide valuable information about the proportion of the population infected, given the appropriate statistical adjustment.
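One standard adjustment of this kind (named here as an illustration; the text does not say which models are under development) is the Rogan-Gladen estimator, which converts the apparent proportion seropositive into an estimate of true prevalence given the assay's sensitivity and specificity. The assay characteristics and survey result below are hypothetical.

```python
def rogan_gladen(apparent: float, sensitivity: float, specificity: float) -> float:
    """True prevalence = (apparent + specificity - 1) / (sensitivity + specificity - 1), clipped to [0, 1]."""
    youden = sensitivity + specificity - 1
    if youden <= 0:
        raise ValueError("assay must be better than chance (sensitivity + specificity > 1)")
    estimate = (apparent + specificity - 1) / youden
    return min(max(estimate, 0.0), 1.0)


# Hypothetical serosurvey: 20% apparently seropositive on an assay with 80% sensitivity
# and 90% specificity (cross-reacting antibodies from seasonal infection lower specificity)
print(f"corrected prevalence: {rogan_gladen(0.20, sensitivity=0.80, specificity=0.90):.1%}")
```

Here an apparent 20% falls to about 14% once false positives are removed. The correction adjusts only the point estimate; a full analysis would also propagate uncertainty in sensitivity and specificity and model the variable time to seroconversion.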

With regard to using nonserologic approaches to estimating the fraction of the population already infected (eg, data on patient visits), supporters of serosurveillance note that in 2009 such efforts resulted in broad uncertainty spanning several orders of magnitude and lasting until just before the peak of transmission, at which point predicting the peak was no longer very useful.62,63 However, those skeptical of committing resources to serosurveillance argue that these calculations could have had narrower ranges of uncertainty. They note that in pandemic and seasonal flu, the proportion of infected people who are asymptomatic or whose symptoms fall below the standard definition of ILI has been repeatedly estimated at between 25% and 75%,140–147 thereby defining a limited range for the conversion factor between estimates of population-based, influenza-attributable ILI incidence (cases per capita) and estimates of infection incidence. In the U.S., estimates of the incidence of symptomatic pandemic influenza had a range of uncertainty of about 2.5-fold.81 Combining this with an uncertainty of about 3-fold in the multiplier between symptomatic cases and infections, one obtains about a 4-fold uncertainty in the number of infections.
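The text does not spell out how a 2.5-fold and a 3-fold uncertainty combine into about 4-fold. One calculation that reproduces that figure (our assumption about the method) treats each fold-range as an independent error on the log scale and adds the log-widths in quadrature; multiplying the two ranges end to end instead gives a worst case of 7.5-fold. The 3-fold figure follows from the quoted range: if 25% to 75% of infections are asymptomatic or sub-ILI, infections per symptomatic case range from about 1.33 to 4.

```python
import math


def combined_fold(*folds: float) -> float:
    """Combine independent fold-uncertainties by adding their log-widths in quadrature."""
    return math.exp(math.sqrt(sum(math.log(f) ** 2 for f in folds)))


# Multiplier range implied by 25% to 75% of infections being asymptomatic or sub-ILI
low_mult, high_mult = 1 / 0.75, 1 / 0.25
multiplier_fold = high_mult / low_mult  # about 3-fold
incidence_fold = 2.5                    # U.S. symptomatic-incidence range quoted in the text

print(f"multiplier fold: {multiplier_fold:.1f}")
print(f"worst case (product): {incidence_fold * multiplier_fold:.1f}-fold")
print(f"quadrature on log scale: {combined_fold(incidence_fold, multiplier_fold):.1f}-fold")
```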

Further work is needed, perhaps based on data from the 2009 pandemic, to assess how well such proxies can approximate retrospectively collected serologic data, as well as how nonserologic data sources could be improved to optimize the measurement of absolute incidence. In addition to the immediate benefits of serologic surveillance, the benefits to future epidemiologic studies of being able to retrospectively track the spread of infection in a population should be considered. The costs and benefits of enhanced nonserologic surveillance providing such proxies could then be compared against those for serologic surveillance.

2.6. Telephone and Web-Based Surveys

Telephone surveys, including the U.S. Centers for Disease Control and Prevention's (CDC's) nationwide Behavioral Risk Factor Surveillance System (BRFSS),66 and a more limited telephone survey focused on ILI in New York City,67 provided estimates of several quantities of interest to decision makers, including symptomatic infection incidence over a month-long recall window, the probability that a symptomatic individual would seek medical attention, and vaccine coverage. Such a telephone survey led to one of the earliest robust estimates of the symptomatic case-fatality ratio.67

Telephone surveys can be performed rapidly and at a reasonable cost that scales with the number of individuals sampled, and standard methods exist to adjust such surveys to reflect the population as a whole.66 However, with questions that cover long recall periods (eg, “Have you had this symptom in the past month?”), the concern is how accurately individuals can recall their illness history. While the estimate of 12% of New Yorkers reporting ILI during peak spring transmission is plausible,67 a similar study performed outside the influenza season indicated 18% to 20% of New Yorkers with self-reported ILI.68 Clearly, statistical adjustment for recall bias is required, as is more work to ascertain how well these surveys reflect true symptomatic incidence. If these issues were addressed, surveys of illness in populations with well-ascertained severe outcomes could become a very valuable tool for rapid severity assessment that should be incorporated into pandemic plans.

Web-based surveys are a novel way to obtain estimates of symptomatic incidence. Influenzanet,69 a Web cohort survey that tracks influenza, is used in 5 countries.70 Individuals are invited to join—and invite friends to join—a cohort surveyed weekly for influenza symptoms. The Web cohort design has several advantages: The low marginal cost of including more subjects and more frequent queries can yield a large sample. Better recall results are likely, since individuals describe symptoms from a shorter time period, such as the prior week. Furthermore, repeatedly surveying the cohort is a cost-effective way to collect fairly detailed demographic and other data for comparing risk profiles of symptomatic and asymptomatic individuals.

Since raw data from a Web cohort are unrepresentative of the overall regional population, it will be important to assess how well incidence estimates from Web cohorts track true population-based estimates. As with many other surveys, it should be possible to develop techniques that weight raw data from Web surveys to create nationally representative estimates, but these corrections will be population-specific and may be difficult in jurisdictions with less extensive demographic data.
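A common way to do this (one option among several, such as raking) is post-stratification: compute the incidence within each demographic cell and reweight by that cell's share of the target population. The age groups, population shares, and weekly counts below are hypothetical.

```python
def poststratified_rate(cells: dict[str, tuple[int, int]], population_share: dict[str, float]) -> float:
    """Weight cell-specific ILI rates (cases, respondents) by each cell's share of the population."""
    if abs(sum(population_share.values()) - 1) > 1e-9:
        raise ValueError("population shares must sum to 1")
    return sum(
        population_share[cell] * cases / respondents
        for cell, (cases, respondents) in cells.items()
    )


# Hypothetical weekly Web-cohort responses in which working-age adults are over-represented
cells = {"0-17": (12, 100), "18-64": (90, 1500), "65+": (4, 200)}
shares = {"0-17": 0.24, "18-64": 0.63, "65+": 0.13}
raw = sum(c for c, _ in cells.values()) / sum(n for _, n in cells.values())
print(f"raw: {raw:.2%}, post-stratified: {poststratified_rate(cells, shares):.2%}")
```

Weighting corrects only for the variables used to define the cells; self-selection within a cell (for example, unusually health-conscious participants) remains.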

2.7. Hospital- and ICU-Based Data

Hospitalized patients can be characterized in greater detail than most patients with mild influenza, offering the opportunity to identify risk factors and perform detailed clinical studies. Some jurisdictions, such as New York City, focused much of their surveillance effort on hospitalized patients—having judged that the smaller volume of case reports and relatively consistent case definition over time would allow careful characterization of a defined subset of more severe cases. High ascertainment of hospitalized cases enabled the calculation of population-based hospitalization rates, which could be compared across populations to reveal important epidemiologic features of the pandemic, including the disproportionate impact of the pandemic on high-poverty neighborhoods in New York City35 and on racial/ethnic minorities in Wisconsin.71
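With high ascertainment, a rate per 100,000 can be computed for each area and compared. The sketch below computes a rate ratio with an approximate 95% confidence interval on the log scale (a standard Poisson approximation); the area labels, counts, and populations are hypothetical and are not the New York City or Wisconsin figures.

```python
import math


def rate_per_100k(cases: int, population: int) -> float:
    return 1e5 * cases / population


def rate_ratio(cases_a: int, pop_a: int, cases_b: int, pop_b: int, z: float = 1.96) -> tuple[float, float, float]:
    """Rate ratio of area A versus area B with a log-scale Poisson confidence interval."""
    rr = (cases_a / pop_a) / (cases_b / pop_b)
    se = math.sqrt(1 / cases_a + 1 / cases_b)  # standard error of log(rate ratio)
    return rr, rr * math.exp(-z * se), rr * math.exp(z * se)


# Hypothetical hospitalizations: high-poverty versus low-poverty neighborhoods
high = (300, 1_000_000)
low = (150, 1_200_000)
print(f"{rate_per_100k(*high):.1f} vs {rate_per_100k(*low):.1f} per 100,000")
rr, lo, hi = rate_ratio(*high, *low)
print(f"rate ratio {rr:.2f} (95% CI {lo:.2f} to {hi:.2f})")
```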

In 2009, several jurisdictions decided that hospitalizations were the most reliable basis for estimating both cumulative case numbers and weekly trends.37,38 This decision reflects the judgment that the rate of hospitalizations is less affected by changing levels of concern in the population than other measures (such as physician consultation for ILI). As with ILI data (see sidebar: Syndromic Surveillance), hospitalization data can be converted into estimates of total cases only by making assumptions about the fraction of cases hospitalized.

Combining Syndromic Surveillance with Viral Testing.

The value of syndromic surveillance increases when combined with viral testing of a representative subset of individuals with a particular case definition,1 as this can generate a data-based estimate of the proportion of consultations caused by H1N1 influenza. Although not necessary for every case, this testing should be performed according to an algorithm that minimizes clinician discretion to ensure that cases tested represent a defined syndromic population.1

To limit the laboratory burden of testing, the total number of cases tested must be limited even as case numbers grow (perhaps by testing a fixed number or fixed proportion of random samples per week). Even so, testing will be limited to certain populations where sufficient laboratory capacity is available.

This approach was applied in the outpatient setting as a special study, in which patients with ILI in 8 states and approximately 40 practices were tested with influenza rapid antigen tests and PCR to determine the proportion of outpatient ILI visits caused by influenza.148 To determine the incidence of influenza-associated hospitalizations, patients with ILI admitted to the 200 hospitals in the U.S. Emerging Infections Program were also tested with RT-PCR.49

Even without assumptions about how many infections each hospitalization represents, the number and rate of hospitalizations in various age groups can measure the relative burden of severe influenza disease during a pandemic. Some jurisdictions maintain hospitalization surveillance during seasonal influenza. In the U.S. during 2009, age-specific influenza hospitalization rates reported by the Emerging Infections Program (EIP) Influenza Network were an important indicator of pandemic severity72,73 because they could be compared against estimates from prior nonpandemic seasons.74,75

The use of hospital-based data can present several challenges, the most important being the variation across hospitals in the automation and timeliness of computerized medical records. Also, in many settings, considerable effort is required to extract and analyze data on hospitalized patients, and protocols for testing for influenza infection can vary and depend on clinician discretion. The sensitivity and specificity of even “gold standard” PCR-based tests may be suboptimal.76 Many clinicians initially lacked access to these tests and were limited to using less sensitive rapid tests,77 leading to underestimation of influenza-positive cases. Once the rapid tests were known to have low sensitivity, their utility in clinical decisions was reduced, lowering the incentives for clinicians to test at all and further hampering ascertainment.38,44 Despite these caveats, hospital-based surveillance proved useful for many purposes in the 2009 pandemic.

2.8. Virologic Surveillance

In the U.S., virologic surveillance takes 2 forms: submission of influenza viral specimens and submission of data on respiratory samples tested for respiratory viruses, along with the number and percent positive for influenza. Worldwide, specimens are collected and tested by WHO Collaborating Centers.

In a pandemic setting, enhanced diagnostic sample collection makes many more viruses available for testing within such systems. Other sources of virus strains include U.S. hospital- and outpatient-based surveillance systems, such as the Emerging Infections Program (EIP) Influenza Project and ILINet, whereby virus samples taken for clinical care are further characterized for surveillance purposes.

Since the method of choosing isolates for testing is not standardized, the representativeness of the tested strains is uncertain.1 Nonetheless, these samples can generally illustrate the proportion of cases that, at a given level of severity, are positive for pandemic influenza infection (or for other strains of influenza), while further testing of selected strains can characterize genetic and phenotypic changes that perhaps involve drug susceptibility, antigenicity, and virulence.

Each week in the U.S., WHO collaborating laboratories and National Respiratory and Enteric Virus Surveillance System (NREVSS) laboratories submit respiratory virus testing data to CDC, with each laboratory reporting the number of samples submitted for respiratory virus testing and the number testing positive for influenza. During the influenza season and the 2009 pandemic, the percentage of samples positive for influenza provided information about the location and intensity of influenza circulation. Data from more than 900,000 tests were reported during the pandemic, almost 4 times as many test results as CDC receives during an average influenza season.

Global virologic surveillance and national systems outside the U.S. have similar objectives and in many cases use similar systems. WHO's FluNet reports weekly data from National Influenza Centers on the number of specimens positive for influenza A (by subtype and sometimes lineage within subtype) and for influenza B.78

2.9. Surveillance in Resource-Limited Settings

The challenges of conducting surveillance during a pandemic are magnified in settings with limited (and uneven) access to health care and limited surveillance infrastructure. As this summary was being written, WHO was attempting to estimate the total burden of the pandemic worldwide. Unfortunately, for many parts of the world, representative data are simply unavailable, even after the fact.

Several approaches should be considered for future planning. In middle-income countries with significant public health infrastructure, it should be feasible to expand population-based surveillance for respiratory illness that provides a baseline for comparison with an emerging pandemic.79,80 It should also be possible to plan rapid outbreak investigations and hospital-based surveillance to characterize a pandemic's severity, clinical course, and risk groups. Whether such preparations would be an efficient use of public health resources in middle-income areas remains to be determined. In some cases, it may be preferable to invest in different priorities and depend on other jurisdictions and WHO for guidance.

In the developing world, building comprehensive national surveillance is difficult and may be a poor use of limited public health funds. However, most countries have access to influenza laboratory capacity, either in country in the form of a national influenza center or in a nearby country. Many low-income countries also currently perform hospital- and clinic-based surveillance for mild and severe respiratory disease in large urban centers and can provide valuable data on relative severity. It would be valuable to set up a network that combines data from areas in the developing world with unusually good surveillance resources, which could include demographic surveillance system (DSS) sites as well as medical study sites funded by institutions like the U.S. CDC, the UK Medical Research Council, the Wellcome Trust, and the Pasteur Institute's RESPARI network. Such a network could also provide timely evidence on the characteristics of a novel influenza strain in developing-country settings, including those with a greater burden of other infections such as HIV, TB, and pneumococcal disease.

3. Interpreting Surveillance Data for Decision Making

Raw surveillance data on a novel influenza strain, especially from established systems with background data on nonpandemic influenza, can broadly illustrate the trajectory of symptomatic infections in time and space. To inform many decisions, however, surveillance data must be processed to estimate particular quantities—for example, transmissibility and severity measures or the cumulative proportion of the population infected to date—and to define the pandemic's possible course through formal prediction or plausible planning scenarios.

3.1. Estimating Severity and Disease Burden

Severity estimation and disease burden estimation are different approaches to answering interrelated questions: How many cases? How many deaths (burden)? What is the ratio of deaths to cases (severity)?

As described in section 1, case-fatality or other case-severity ratios are probably the most important quantitative inputs for early decision making. However, estimating both the numerator and denominator of these ratios is challenging. For discussion purposes here, we focus on symptomatic case-fatality ratios, where the numerator is fatalities and the denominator is symptomatic cases.

Symptomatic case number estimates can come from surveys (with some correction for the proportion of symptomatic cases truly due to pandemic virus infection67,81,82) or from data at other levels of the “severity and reporting pyramid,”38 such as confirmed cases38 or hospitalizations81 combined with an estimate of the proportion of symptomatic cases hospitalized.81 If one made assumptions about the proportion of infections that are asymptomatic, these estimates could be converted into estimates per infection (see sidebar: Debating the Value of Large-Scale Serosurveillance).

Case ascertainment will always be less than 100% and will vary over space and time in the pandemic. If unaccounted for, incomplete and variable ascertainment can bias severity estimates. In the earliest phases of a pandemic, symptomatic case numbers can be biased by the preferential detection of the most severe cases, leading to substantial overestimates of severity, since these cases are more likely than typical cases to be fatal.

For example, as of May 5, 2009, Mexico had reported almost 1,100 confirmed H1N1 cases and 42 deaths from H1N1,10 a crude case-fatality proportion of about 4%. In hindsight, this high apparent severity was largely, or entirely, attributable to the underascertainment of mild cases, of which there were probably several orders of magnitude more than the number confirmed.5,6 As public health efforts scaled up and an increasing number of milder cases were detected, this bias declined,34 but it did not disappear, showing how even with the most intense efforts, only a minority of symptomatic cases may be virologically confirmed.38
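The crude figure is simply deaths divided by confirmed cases. If, purely hypothetically, nearly all deaths but only a small fraction of symptomatic cases had been confirmed, the symptomatic case-fatality proportion would shrink in direct proportion to that fraction. The ascertainment fractions below are illustrative, not estimates for Mexico.

```python
deaths, confirmed = 42, 1_100
crude = deaths / confirmed
print(f"crude case-fatality proportion: {crude:.1%}")

# Hypothetical fractions of symptomatic cases that were confirmed (deaths assumed fully ascertained)
for ascertained in (1e-1, 1e-2, 1e-3):
    symptomatic_cases = confirmed / ascertained
    print(f"ascertainment {ascertained:g}: {deaths / symptomatic_cases:.4%}")
```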

The numerator, fatalities, can be directly estimated in jurisdictions with routine viral testing of fatal cases. Experience in 2009 showed that some fatal cases are diagnosed only on autopsy,36,76 posing a risk of underestimating the numerator. A second source of variability is differences between jurisdictions in the definition of influenza deaths, which may include all fatalities in individuals in whom the virus was detected or only those in whom the virus was judged to have caused the death. Another potential source of error in estimating the number of deaths is the delay from symptom onset to death from pandemic influenza, which can be a week or longer.11,82,83 For this reason, deaths counted at time t may not correspond to all the cases up to time t but to the cases that had occurred up to a week or more before t. In the exponentially growing phase of the pandemic, there may be many recently infected individuals who will die but have not yet died; they are counted in the denominator but not the numerator. If unaccounted for, this “censoring bias” can lead to an underestimate of severity by as much as 3-fold to 6-fold during the growing phase of a flu pandemic.11 Two basic approaches can address this bias. One is to correct for it based on the growth rate in disease incidence and the lag time between case reporting and death reporting.11 Another is to perform analyses after transmission has subsided in a population, by which time most deaths will have been registered in the data set.44
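A minimal sketch of the first approach, in the spirit of delay-adjusted estimators but not reproducing any specific published method: weight each day's case count by the probability that a fatal case with onset that day would already have died by the analysis date, and use that weighted total as the denominator. The exponential delay distribution, the growth rate, and the true case-fatality proportion are all hypothetical; a real analysis would estimate the delay from data and propagate its uncertainty.

```python
import math


def delay_cdf(days: float, mean_delay: float = 10.0) -> float:
    """Hypothetical onset-to-death delay distribution: exponential with the given mean (days)."""
    return 1 - math.exp(-days / mean_delay) if days > 0 else 0.0


def naive_and_adjusted_cfr(daily_cases: list[float], deaths_to_date: float) -> tuple[float, float]:
    """Naive CFR (deaths / all cases) and delay-adjusted CFR (deaths / cases whose outcome is expected to be known)."""
    t = len(daily_cases)
    total = sum(daily_cases)
    # Expected share of each onset day's eventual deaths that have already occurred by day t
    resolved = sum(c * delay_cdf(t - day) for day, c in enumerate(daily_cases))
    return deaths_to_date / total, deaths_to_date / resolved


true_cfr = 0.005
cases = [20 * math.exp(0.15 * d) for d in range(30)]  # hypothetical exponential growth
# Deaths expected by day 30 if the true CFR were 0.5% and deaths followed delay_cdf
deaths = true_cfr * sum(c * delay_cdf(len(cases) - d) for d, c in enumerate(cases))
naive, adjusted = naive_and_adjusted_cfr(cases, deaths)
print(f"naive {naive:.3%}, adjusted {adjusted:.3%}, true {true_cfr:.3%}")
```

With these assumed values the naive ratio is well under half the true value during growth, while the adjusted ratio recovers it because the simulated deaths were generated from the same delay distribution.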

Notwithstanding these sources of bias, it is particularly challenging to estimate the case-fatality proportion precisely when the true proportion is low. To observe a statistically robust number of deaths (eg, 10 or more) in a population with a symptomatic case-fatality proportion of 1 in 10,000 (0.01%), one would need about 100,000 documented symptomatic cases (or a correspondingly large number of confirmed cases) to estimate the ratio directly, an impractical approach.1 The 2009 pandemic highlighted the need for other approaches.
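The arithmetic behind this example, together with an exact (Garwood) Poisson confidence interval showing how imprecise even 10 deaths remain, can be sketched with the standard library alone. The bisection approach and function names are ours, not from the text.

```python
import math


def poisson_cdf(k: int, mu: float) -> float:
    """P(X <= k) for X ~ Poisson(mu)."""
    term, total = math.exp(-mu), 0.0
    for i in range(k + 1):
        total += term
        term *= mu / (i + 1)
    return total


def exact_poisson_ci(k: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact two-sided confidence interval for a Poisson count k, found by bisection."""
    def solve(target: float, count: int) -> float:
        # Find mu with poisson_cdf(count, mu) == target; the CDF decreases as mu grows
        lo, hi = 0.0, 10.0 * (k + 10)
        for _ in range(200):
            mid = (lo + hi) / 2
            if poisson_cdf(count, mid) > target:
                lo = mid
            else:
                hi = mid
        return (lo + hi) / 2

    lower = 0.0 if k == 0 else solve(1 - alpha / 2, k - 1)
    upper = solve(alpha / 2, k)
    return lower, upper


target_deaths, one_in = 10, 10_000  # 10 deaths at a CFR of 1 in 10,000
print(f"symptomatic cases needed: {target_deaths * one_in:,}")
lo, hi = exact_poisson_ci(target_deaths)
print(f"95% CI for 10 observed deaths: {lo:.1f} to {hi:.1f}")
```

Even with 10 deaths, the true count consistent with the data spans roughly a 4-fold range, so the case-fatality proportion is only loosely pinned down.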

One alternative is to conduct surveys within defined outbreak populations to estimate the number who are ill and relate this number to the directly measured number of severe outcomes. For example, in an early H1N1 outbreak at the University of Delaware, 10% of student respondents and 5% of faculty and staff on a campus of 29,000 reported ILI that resulted in 4 hospitalizations but no deaths.55 While this could not yield a precise estimate of the symptomatic case-fatality or case-hospitalization proportions, it provided useful upper bounds. A telephone survey yielded similar estimates in New York City.67
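When no deaths are observed among n symptomatic cases, an exact one-sided 95% upper bound on the case-fatality proportion is 1 − 0.05^(1/n), close to the familiar "rule of three" (3/n). The text does not give the number of ill students and staff, so n below is a hypothetical round figure, not the Delaware count.

```python
def upper_bound_zero_events(n: int, confidence: float = 0.95) -> float:
    """Largest p for which (1 - p) ** n, the chance of zero events in n cases, is at least 1 - confidence."""
    return 1 - (1 - confidence) ** (1 / n)


n_ill = 2_000  # hypothetical number of symptomatic cases in an outbreak population
exact = upper_bound_zero_events(n_ill)
print(f"exact: {exact:.5f}, rule of three: {3 / n_ill:.5f}")
```

This bound applies when zero events are seen; for the 4 hospitalizations, an exact binomial interval would be used instead.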

Another approach to estimating the case-fatality proportion is to decompose the severity “pyramid,” relying on some types of surveillance to estimate the ratio of deaths to hospitalizations and on other types to estimate the ratio of hospitalizations to symptomatic cases.44 Bayesian evidence synthesis methods84 are a natural framework for combining the uncertainty in the inputs to such estimates into a single estimate of uncertainty in severity measures.44
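A much simplified stand-in for full Bayesian evidence synthesis is to draw each ratio of the pyramid from a distribution describing its uncertainty and multiply the draws. Everything below is hypothetical: the Beta distributions for deaths per hospitalization and hospitalizations per symptomatic case are invented for illustration, and a real synthesis would derive them jointly from the surveillance data.

```python
import random
import statistics


def pyramid_cfr_draws(n_draws: int = 20_000, seed: int = 1) -> list[float]:
    """Monte Carlo draws of deaths/symptomatic = (deaths/hospitalizations) * (hospitalizations/symptomatic)."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        deaths_per_hosp = rng.betavariate(40, 960)      # hypothetical: about 4% of hospitalized cases die
        hosp_per_symptomatic = rng.betavariate(5, 995)  # hypothetical: about 0.5% of symptomatic cases hospitalized
        draws.append(deaths_per_hosp * hosp_per_symptomatic)
    return draws


draws = sorted(pyramid_cfr_draws())
median = statistics.median(draws)
lo, hi = draws[int(0.025 * len(draws))], draws[int(0.975 * len(draws))]
print(f"symptomatic CFR per 100,000: median {median * 1e5:.1f} (95% interval {lo * 1e5:.1f} to {hi * 1e5:.1f})")
```

The wider of the two input distributions dominates the output interval, which indicates where better surveillance data would most reduce uncertainty.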

Overall, the presence of countervailing biases (underascertainment of both numerator and denominator) made initial severity assessment challenging in the 2009 pandemic. Although both biases were recognized, it was difficult at the time to determine which was larger. In retrospect, censoring bias was minor compared to the underascertainment of mild cases, so early estimates of severity were higher than current estimates based on more complete data. There was also important uncertainty about whether estimates differed between populations (eg, U.S. versus Mexico) because severity was truly different or because ascertainment patterns differed. These conclusions are outbreak-specific; in SARS, for example, ascertainment was relatively complete, but censoring bias, perhaps more acute than in 2009 because of the longer delay from symptom onset to death, led to substantial underestimates of severity until it was corrected for.85

All of these considerations, described in the context of attempting to estimate overall risk of mortality, are relevant as well to more complex measures of severity, such as years of life lost.29

Because influenza is seasonal, experiences in one hemisphere can—and did—inform planners and decision makers in the other. In 2009, the southern hemisphere was the first to have a full, uninterrupted winter season with the novel H1N1 virus. Rapid reviews of the experience in the southern hemisphere's winter season86,87 provided evidence for northern hemisphere planners that the capacity of intensive care units would likely be adequate overall, though local shortages might occur.

3.2. Interpreting Clinical Data

Data on the characteristics of severe clinical cases are directly relevant to decisions about prioritizing prevention (eg, vaccination) and using scarce resources to treat those most likely to benefit. Choosing priority groups for preventive measures such as vaccination should depend in part on the per capita relative risk of severe outcomes in various groups in the absence of vaccination.30 For a particular group (pregnant women, for example), this risk can be estimated by dividing the proportion of pregnant women among individuals with severe outcomes by the proportion of pregnant women in the general population.
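The ratio described above is simple to compute. The following sketch uses hypothetical counts (60 pregnant women among 1,000 severe cases; 10,000 pregnant women in a population of 1,000,000); the function name is illustrative only.

```python
def per_capita_relative_risk(group_severe: int, total_severe: int,
                             group_population: int,
                             total_population: int) -> float:
    """Share of the group among severe cases divided by its share
    of the general population."""
    share_of_severe = group_severe / total_severe
    share_of_population = group_population / total_population
    return share_of_severe / share_of_population


rr = per_capita_relative_risk(60, 1_000, 10_000, 1_000_000)
```

Here the group makes up 6% of severe cases but 1% of the population, giving a per capita relative risk of 6.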

The same measure of comparative severity applies to prioritizing other measures that are distributed to uninfected people. In 2009, it was proposed that certain groups might benefit from predispensed antiviral drugs (or easier access to them) to aid in early treatment. The potential benefits of such a policy depend mainly on the per capita risk of severe outcomes in the priority groups compared to the general population.88

To prioritize treatment of symptomatic individuals, the relevant measure of comparative risk is severity per case, not per capita, since the decision involves a person with presumed or known infection, not a randomly chosen group member. The distinction between these 2 measures is that per capita severity is equal to per case severity times the risk of becoming a case. For example, in most countries, people over age 50 showed considerably higher severity per case, but only modestly higher per capita severity, because they were less likely than younger people to be infected.
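The relation between the two measures (per capita severity equals per case severity times the risk of becoming a case) can be illustrated with hypothetical numbers that are not drawn from 2009 data: suppose older adults have 4 times the per case risk of a severe outcome but an attack rate of 6% versus 20% in younger people.

```python
def per_capita_severity(per_case_severity: float, attack_rate: float) -> float:
    """Risk of a severe outcome for a randomly chosen group member:
    risk given infection times the risk of becoming a case."""
    return per_case_severity * attack_rate


# Hypothetical values for illustration only.
younger = per_capita_severity(per_case_severity=1e-4, attack_rate=0.20)
older = per_capita_severity(per_case_severity=4e-4, attack_rate=0.06)
ratio_per_case = 4e-4 / 1e-4          # 4-fold higher per case
ratio_per_capita = older / younger    # only 1.2-fold higher per capita
```

The same group can therefore rank high for treatment priority (per case) while ranking only modestly higher for prevention priority (per capita).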

The goal for all the purposes outlined above is to identify predictors of severe outcomes, not to understand why the predictors are associated with those outcomes.88 In the 2009 pandemic, morbid obesity was identified in some case series as a predictor of hospitalization and death,89 prompting much discussion about whether morbid obesity itself caused the outcomes or whether it was a marker of other conditions that did so. This question of etiology is unimportant when allocating resources; if high-risk individuals can be identified, they can receive priority for prevention or treatment and the benefit will be the same, regardless of whether the identifying factor is causal or only a marker.

3.3. Estimating Transmissibility

A standard summary measure of transmission intensity is the reproductive number, the mean number of secondary cases caused per primary case. When it exceeds 1, incidence grows; when it is below 1, incidence declines. An estimate of a strain's reproductive number in the absence of mitigation measures can inform how much transmission must be reduced to slow or stop the growth in the number of cases.90 To halt growth, the critical proportion of transmission events that must be blocked is 1 minus the reciprocal of the reproduction number (1 − 1/R). Estimates of changes in the reproduction number over time91,92 can indicate the impact of control measures37 or of intrinsic changes in transmissibility due to depletion of susceptible individuals, seasonality, or other factors.93
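The threshold 1 − 1/R follows because blocking a fraction f of transmission events leaves an effective reproduction number of R(1 − f), which falls to 1 exactly when f = 1 − 1/R. A minimal sketch, with a hypothetical function name:

```python
def critical_blocking_fraction(r: float) -> float:
    """Fraction of transmission events that must be blocked so that
    R * (1 - f) <= 1. Returns 0 when R is already at or below 1."""
    if r <= 1:
        return 0.0
    return 1 - 1 / r


for r in (0.8, 1.5, 2.0):
    print(r, critical_blocking_fraction(r))
```

For example, a reproduction number of 1.5 requires blocking one-third of transmission events, and a reproduction number of 2 requires blocking half.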

A common approach to estimating the transmissibility of a newly emerging infection relies on estimates of 2 quantities: the exponential growth rate of the number of cases and the distribution of the generation time (the interval between infection of a primary case and infection of the secondary cases it causes), usually approximated by the serial interval between their symptom onsets.94 The minimal data required to derive the reproductive number from these 2 estimates are a time series of the number of new cases (ideally, a daily time series) and an estimate of the serial interval distribution,92 which can come from early outbreak investigations.5,95 The assumption is that the distribution of intervals between symptom onsets approximates that of the intervals between times of infection.96 Remarkably, under certain assumptions, one can infer both the serial interval distribution and the reproductive number using only the time series of new cases.34,97

Key challenges in estimating reproduction numbers from epidemic curve time series include changes over time in the fraction of cases ascertained, which can affect apparent growth rates and therefore bias reproductive number estimates, and reporting delays, which can make incidence appear to fall in the most recent days even in a growing epidemic because recent cases have not yet been reported.7 There is a growing methodological and applied literature on addressing these challenges34,37,94,96,98 and obtaining corrected estimates or bounds for the reproductive number. Analyzing viral sequence data, discussed below, can provide a partially independent estimate of transmissibility and validate conclusions drawn from purely case-based estimates.
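One simple way to reduce the artificial drop-off from reporting delays is to inflate recent counts by the probability that a case with onset on that day would already have been reported. The sketch below assumes the reporting-delay distribution is known and stable; the delay probabilities and case counts are hypothetical. Published nowcasting methods also model uncertainty in these quantities, which this deterministic sketch ignores.

```python
def nowcast(observed_by_onset_day: list[float],
            report_cdf: list[float]) -> list[float]:
    """Inflate counts for reporting delay.

    observed_by_onset_day: counts in chronological order; the last entry
        is today's onset date.
    report_cdf[d]: probability that a case is reported within d days of
        onset. Delays beyond the list are assumed fully reported.
    """
    n = len(observed_by_onset_day)
    corrected = []
    for day, count in enumerate(observed_by_onset_day):
        days_elapsed = n - 1 - day
        p_reported = report_cdf[days_elapsed] if days_elapsed < len(report_cdf) else 1.0
        if p_reported <= 0:
            raise ValueError("reporting probability must be positive")
        corrected.append(count / p_reported)
    return corrected


# Hypothetical observed counts that appear to decline at the end.
estimates = nowcast([100, 110, 90, 60, 20], report_cdf=[0.2, 0.5, 0.8, 0.95])
```

In this hypothetical example the apparent fall from 90 to 20 cases largely disappears after correction, because most of the recent cases are simply not yet reported.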

3.4. Real-Time Predictive Modeling

Transmission-dynamic models can be used to predict the possible future course of an epidemic (eg, the number of infections per day in various groups) given certain assumptions. These assumptions, or model inputs, include such quantities as the reproductive number of the infection, the relative susceptibility and infectiousness of different groups in the population, the natural history of infectiousness, and the nature and timing of possible interventions. Transmission-dynamic models have been widely used as planning tools to assess the likely effectiveness of interventions for pandemic influenza19,25,99–101 and many other infections.102,103 In these cases, the models are applied to hypothetical epidemics, and the input assumptions are taken from past epidemics of similar viruses. In this section, we consider a distinct though related application of transmission-dynamic models: predicting the dynamics of a pandemic as it unfolds by using real-time data on the incidence and prevalence of infection to date in various population subgroups.
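The simplest transmission-dynamic model of this kind is a homogeneously mixed susceptible-infectious-recovered (SIR) model. The sketch below is a generic textbook SIR model integrated with small Euler steps; it is not one of the published models cited here. Its only inputs are the reproductive number, the mean infectious period, and initial conditions. The group structure, natural-history detail, and interventions mentioned above are deliberately omitted, and the parameter values in the example are hypothetical.

```python
def simulate_sir(r0: float, infectious_days: float, population: float,
                 initial_infected: float, days: int,
                 steps_per_day: int = 10) -> list[float]:
    """Deterministic SIR model with homogeneous mixing.

    Returns the number of new infections on each day.
    """
    gamma = 1 / infectious_days   # recovery rate per day
    beta = r0 * gamma             # transmission rate per day
    s = population - initial_infected
    i = initial_infected
    r = 0.0
    dt = 1 / steps_per_day
    daily_incidence = []
    for _ in range(days):
        new_today = 0.0
        for _ in range(steps_per_day):
            infections = beta * s * i / population * dt
            recoveries = gamma * i * dt
            s -= infections
            i += infections - recoveries
            r += recoveries
            new_today += infections
        daily_incidence.append(new_today)
    return daily_incidence


incidence = simulate_sir(r0=1.5, infectious_days=3.0, population=1_000_000,
                         initial_infected=100, days=365)
attack_rate = sum(incidence) / 1_000_000
```

For a reproductive number of 1.5 with no interventions, the simulated attack rate is close to the value of about 58% given by the classical final-size relation for this model.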

Since reliable predictions of a pandemic's time course are tremendously helpful for response planning and decision making (sections 1.5–1.6), it would be valuable if transmission-dynamic models were employed in real time to make and update predictions of the course of transmission. This would require 3 ingredients: (a) a sufficiently accurate mathematical model of the key processes that influence transmission; (b) data on the current and past incidence of infection with the pandemic strain, population immunity, and other parameters needed to set initial model conditions; and (c) an assumption that the biological properties of the influenza virus would not change within the time scale of prediction.

Transmission-dynamic models are now computationally capable of including virtually unlimited amounts of detail in the transmission process,104 but knowledge of some of their inputs is limited. For example, while much is known about the factors that affect influenza transmission, areas of uncertainty remain, including the exact contributions of household, school, and community transmission;104 the contribution of school terms, climatic factors, and other drivers to transmission seasonality;93,105–107 and the role of long- and short-term immune responses to infection with other strains in susceptibility to pandemic infection.108 Our understanding of behavioral responses to pandemics is also at an early stage.109

During a pandemic, incidence data are imperfect and subject to substantial uncertainty in the “multiplier” between observed measures of incidence and true infection (see sidebars: Syndromic Surveillance and Large-Scale Serosurveillance). Since infection and resulting immunity drive the growth, peaking, and decline of epidemics, this conversion factor is crucial to setting model parameters. Finally, changes in the antigenicity, virulence, or drug resistance of a circulating strain could invalidate otherwise reliable model predictions.
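Why the multiplier matters so much can be seen from a back-of-envelope calculation. In a homogeneously mixed model with no pre-existing immunity, the effective reproduction number is R0 times the fraction still susceptible. Given the same observed count, different assumed multipliers therefore imply different conclusions about whether an epidemic is still growing. All numbers and names below are hypothetical.

```python
def effective_r(r0: float, cumulative_observed: float, multiplier: float,
                population: float) -> float:
    """Effective reproduction number implied by an assumed multiplier.

    Assumes homogeneous mixing, immunity only from pandemic infection,
    and infections = observed cases * multiplier (capped at population).
    """
    infected_fraction = min(cumulative_observed * multiplier / population, 1.0)
    return r0 * (1 - infected_fraction)


# Hypothetical: 20,000 consultations so far in a population of 1,000,000.
for m in (5, 10, 20):
    print(m, effective_r(1.5, 20_000, m, 1_000_000))
```

Here multipliers of 5, 10, and 20 imply that 10%, 20%, and 40% of the population has been infected, and effective reproduction numbers of 1.35, 1.2, and 0.9. In other words, the same surveillance data are consistent with either continued growth or decline.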

Efforts at real-time modeling in the 2009 pandemic showed that uncertainty in the number of individuals infected in various age groups at any given time hampered efforts to forecast the pandemic using transmission-dynamic models. Despite this limitation, the 2009 experience illustrated the potential of predictive models to provide policy guidance by generating plausible scenarios and, as important, by showing that certain scenarios are less plausible and thus of lower priority for planning.

In one published case study, Ong and colleagues set up an ad hoc monitoring network among general practitioners in Singapore for influenza-like illness and used the reported numbers of daily visits for ILI to estimate, in real time, the parameters of a simple, homogeneously mixed transmission-dynamic model, which they then used to predict the course of the outbreak.62 Early predictions of this model were extremely uncertain and included the possibility of an epidemic much larger than the one that occurred. This uncertainty reflected the limitation of the input data (here, physician consultations): without a known multiplier, it was impossible to scale the number of infections anticipated by the model to the number of consultations. By late July, the growth in the incidence of new cases had slowed, providing the information needed to scale the observed data to the dynamics of infection and allowing more accurate and more precise predictions.62

Data on healthcare-seeking behavior were used in a similar effort in the United Kingdom, but with a more detailed, age-stratified transmission-dynamic model. Here, too, the timing and magnitude of the peak were difficult to predict because of uncertainty in the conversion factor between observed consultations and true infections, although in this case the authors, by their own description, had guessed a value that proved roughly accurate63 when tested against serologic data.65

A third effort was made in late 2009 to assess the likelihood of a winter wave in U.S. regions. Estimates of the rate of decline of influenza cases detected by CDC surveillance in November and December, combined with estimates of the possible boost in transmissibility from declining absolute humidity,107 suggested that any winter wave would be modest and likely geographically limited. Subsequent analysis showed that the southeastern U.S. was the region most likely to experience further transmission due to a seasonal boost in transmissibility, a finding consistent with observations.93

These experiences indicate that real-time predictive modeling is possible but, if undertaken responsibly, will convey considerable uncertainty. For transmission-dynamic modeling, the most important source of uncertainty lies in the multiplier between cases observed in surveillance systems and infections. Modelers must therefore seek out the best data to estimate model parameters, both by paying careful attention to publicly available data and by building relationships with those who conduct surveillance before pandemics occur, so that information transfer is facilitated in the midst of an event. As noted by the authors of the studies described above, and in reviews by several consortia of transmission modelers,7,61 the factor that could most improve the reliability of real-time models is having nearly real-time estimates of cumulative incidence, whether through serosurveillance or other means.

The real-time, predictive modeling described above is one of the developing frontiers within the broader scope of transmission-dynamic modeling. These approaches differ from scenario-based modeling, which may be performed before or during a pandemic to provide robust estimates of the possible effects of interventions under particular assumptions rather than predict the short-term dynamics of the infection. Scenario-based modeling18,19,23,25,90,104,110 has significantly improved our understanding of epidemic dynamics and the likely responses to interventions, but it is most helpful when predictions are robust to variations in assumptions that may be difficult to pin down during an epidemic.

3.5. Interpreting Virologic Data

Simple virologic confirmation of pandemic H1N1 infection was an integral part of case-based surveillance, especially early on and for severe cases. The proportion of viral samples that are positive, alone or in combination with ILI data, provides a measure of incidence (with the caveats described in previous sections). A more novel approach is to use viral sequence data to time the origin, rate of growth, and other characteristics of a pandemic,5 applying methods that rely on the coalescent theory developed in recent decades in population genetics, in which the quantitative history of a population can be inferred from the pattern of branching in a phylogenetic tree.111
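One simple way to combine the proportion of specimens testing positive with ILI data is to multiply the ILI rate by the fraction positive. This yields a proxy that tracks incidence of influenza-associated ILI, not of all infections, and it inherits the multiplier caveats described earlier. The product form, the function name, and the numbers below are illustrative assumptions rather than a method specified in the text.

```python
def ili_positive_proxy(ili_rate_per_100k: float, specimens_positive: int,
                       specimens_tested: int) -> float:
    """ILI rate scaled by the fraction of specimens positive for the
    pandemic strain; a relative incidence proxy, not an infection count."""
    if specimens_tested == 0:
        raise ValueError("no specimens tested")
    return ili_rate_per_100k * specimens_positive / specimens_tested


# Hypothetical week: 200 ILI visits per 100,000 people, 30 of 120 specimens positive.
proxy = ili_positive_proxy(200, 30, 120)
```

In this hypothetical week, the proxy is 50 per 100,000. Week-to-week changes in this proxy, rather than its absolute level, are usually the more robust signal.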