Abstract

Instrumental responses are hypothesized to be of two kinds: habitual and goal-directed, mediated by the sensorimotor and the associative cortico-basal ganglia circuits, respectively. The existence of the two heterogeneous associative learning mechanisms can be hypothesized to arise from the comparative advantages that they have at different stages of learning. In this paper, we assume that the goal-directed system is behaviourally flexible, but slow in choice selection. The habitual system, in contrast, is fast in responding, but inflexible in adapting its behavioural strategy to new conditions. Based on these assumptions and using the computational theory of reinforcement learning, we propose a normative model for arbitration between the two processes that strikes an approximately optimal balance between search-time and accuracy in decision making. Behaviourally, the model can explain experimental evidence on behavioural sensitivity to outcome at the early stages of learning, but insensitivity at the later stages. It also explains why, when two choices with equal incentive values are available concurrently, the behaviour remains outcome-sensitive, even after extensive training. Moreover, the model can explain choice reaction time variations during the course of learning, as well as the experimental observation that as the number of choices increases, the reaction time also increases. Neurobiologically, by assuming that phasic and tonic activities of midbrain dopamine neurons carry the reward prediction error and the average reward signals used by the model, respectively, the model predicts that whereas phasic dopamine indirectly affects behaviour through reinforcing stimulus-response associations, tonic dopamine can directly affect behaviour through manipulating the competition between the habitual and the goal-directed systems, and thus affect reaction time.

Author Summary

When confronted with different alternatives, animals can respond either based on their pre-established habits, or by considering the short- and long-term consequences of each option. Whereas habitual decision making is fast, goal-directed thinking is time-consuming. Conversely, habits are inflexible once consolidated, whereas goal-directed decision making can rapidly adapt the animal's strategy after a change in environmental conditions. Based on these features of the two decision making systems, we suggest a computational model, using the reinforcement learning framework, that strikes a balance between the speed of decision making and behavioural flexibility. The behaviour of the model is consistent with the observation that at the early stages of learning, animals behave in a goal-directed way (flexible, but slow), but after extensive learning, their responses become habitual (inflexible, but fast). Moreover, the model explains why the animal's reaction time must decrease through the course of learning, as the habitual system takes control over behaviour. The model also attributes a functional role to the tonic activity of dopamine neurons in balancing the competition between the habitual and the goal-directed systems.

Introduction

A very basic assumption in theories of animal decision making is that animals possess a complicated learning machinery that aims for maximizing rewards and minimizing threats to homeostasis [1]. The primary question within this framework is then how the brain, constrained by computational limitations, uses past experiences to predict rewarding and punishing consequences of possible responses.

The dual-process theory of decision making proposes that two distinct brain mechanisms are involved in instrumental responding: the “habitual” and the “goal-directed” systems [2]. The habitual system is behaviourally defined as being insensitive to outcome-devaluation, as well as contingency-degradation. For example, in the experimental paradigm of outcome-devaluation, the animal is first trained for an extensive period to perform a sequence of actions for gaining access to a particular outcome. The outcome is then devaluated by being paired with an aversive stimulus (conditioned taste-aversion), or by over-consumption of that outcome (sensory-specific satiety). The critical observation is that in the test phase, which is performed in extinction, the animal continues responding for the outcome, even though it is devaluated. The goal-directed process, on the other hand, is defined as being sensitive to outcome-devaluation and contingency-degradation. This behavioural sensitivity is shown to emerge when the pre-devaluation training phase is limited, rather than extensive [3].

Based on these behavioural patterns, two different types of associative memory structures are proposed for the two systems. The behavioural autonomy demonstrated by the habitual system is hypothesized to be based on the establishment of associations between contextual stimuli and responses (S-R), whereas representational flexibility of the goal-directed system is suggested to rely on associations between actions and outcomes (A-O).

A wide range of electrophysiological, brain imaging, and lesion studies indicate that different, topographically segregated cortico-striato-pallido-thalamo-cortical loops underlie the two learning mechanisms discussed above (see [4] for review). The sensorimotor loop, comprising glutamatergic projections from infralimbic cortices to dorsolateral striatum, is shown to be involved in habitual responding. In addition, phasic activity of dopamine (DA) neurons, originating from midbrain and projecting to different areas of the striatum, is hypothesized to carry a reinforcement signal that is shown to play an essential role in the formation of S-R associations. The associative loop, on the other hand, is proposed to underlie goal-directed responding. Some critical components of this loop include dorsomedial striatum and paralimbic cortex.

The existence of two parallel neuronal circuits involved in decision making raises the question of how the two systems compete for control over behaviour. Daw and colleagues proposed a reinforcement learning model in which the competition between the two systems is based on the relative uncertainty of the systems in estimating the value of different actions [5]. Their model can explain some behavioural aspects of the interaction between the two systems. A critical analysis of their model is provided in the Discussion section.

In this paper, based on the model proposed in [5], and using the idea that reward maximization is the performance measure of the decision making system of animals, we propose a novel, normative arbitration mechanism between the two systems that can explain a wider range of behavioural data. The basic assumption of the model is that the habitual system is fast in responding, but inflexible in adapting its behavioural strategy to new conditions. The goal-directed system, in contrast, can rapidly adapt its instrumental knowledge, but is considerably slower than the habitual system in making decisions. In the proposed model, not only do the two systems seek to maximize the accrual of reward (by different algorithms), but the arbitration mechanism between them is also designed to exploit the comparative advantages of the two systems in value estimation.

As direct experimental support for the assumptions of the model, it has been classically reported that when rats traverse a T-maze to obtain access to an outcome, they pause at the choice points and vicariously sample the alternative choices before committing to a decision [6]–[8]. This behaviour, called “vicarious trial-and-error” (VTE), is defined by head movements from one stimulus to another at a choice point, during simultaneous discrimination learning [9]. This hesitation- and conflict-like behaviour is suggested to be indicative of deliberation or active processing by a planning system [6], [7], [10], [11]. Importantly for our discussion, it has been shown that after extensive learning, VTE frequency declines significantly [6], [12], [13]. This observation is interpreted as a transition of behavioural control from the planning system to the habitual one, and shows a difference in decision time between habitual and goal-directed responding [14].

Besides being supported by the VTE behaviour, the assumption about the relative speed and flexibility of the two systems allows the model to explain some behavioural data on choice reaction time. The model also predicts that whereas phasic activity of DA neurons indirectly affects the arbitration through intervening in habit formation, tonic activity of DA neurons can directly influence the competition by modulating the cost of goal-directed deliberation.

Model

The Preliminaries

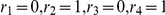

Reinforcement learning (RL) is learning how to establish different types if instrumental associations for the purpose of maximizing the accrual of rewards [15]. In the RL framework, stimuli and responses are referred to as states and actions, respectively. An RL agent perceives its surrounding environment in the form of a finite set of states,  , in each of which, one action among a finite set of actions,

, in each of which, one action among a finite set of actions,  , can be taken. The dynamics of the environment can be formulated by a transition function and a reward function. The transition function, denoted by

, can be taken. The dynamics of the environment can be formulated by a transition function and a reward function. The transition function, denoted by  , represents the probability of reaching state

, represents the probability of reaching state  after taking action

after taking action  at state

at state  . The reward function,

. The reward function,  , indicates the probability of receiving reward

, indicates the probability of receiving reward  , by executing action

, by executing action  at state

at state  . This structure, known as the Markov Decision Process (MDP), can be demonstrated by a 4-tuple,

. This structure, known as the Markov Decision Process (MDP), can be demonstrated by a 4-tuple,  . At each time-step,

. At each time-step,  , the agent is in a certain state, say

, the agent is in a certain state, say  , and makes a choice, say

, and makes a choice, say  , from several alternatives on the basis of subjective values that it has assigned to them through its past experiences in the environment. This value, denoted by

, from several alternatives on the basis of subjective values that it has assigned to them through its past experiences in the environment. This value, denoted by  , is aimed to be proportional to the sum of discounted rewards that are expected to be received after taking action

, is aimed to be proportional to the sum of discounted rewards that are expected to be received after taking action  onward:

onward:

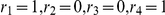

|

(1) |

is the discount factor, which indicates the relative incentive value of delayed rewards compared to immediate ones.

is the discount factor, which indicates the relative incentive value of delayed rewards compared to immediate ones.
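As a small illustration of the quantity inside the expectation of equation 1, the sketch below computes the discounted sum for one observed reward sequence. The function name `discounted_return` and the example numbers are illustrative assumptions, not part of the model's specification.

```python
def discounted_return(rewards, gamma):
    """Return sum_i gamma**i * rewards[i] for one sampled reward sequence.

    Hypothetical helper: `rewards` lists the rewards received from the
    current time-step onward, `gamma` is the discount factor (0 <= gamma < 1).
    """
    total = 0.0
    # Accumulate from the last reward backwards so that each step
    # applies one more factor of gamma to everything that follows it.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


if __name__ == "__main__":
    # A reward delayed by two steps is worth gamma**2 of an immediate one.
    print(discounted_return([0.0, 0.0, 1.0], gamma=0.9))
```

Averaging this quantity over many sampled sequences that start with the same state and action would approximate the value that equation 1 defines.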

Model-free and model-based RL are two variants of reinforcement learning with behavioural characteristics similar to the habitual and goal-directed systems, respectively [5]. These two variants are in fact two different mechanisms for estimating the $Q$-function of equation 1, based on the feedback, $r_t$, that the animal receives from the environment through learning.

In temporal difference RL (TDRL), which is an implementation of model-free RL, a prediction error signal,  , is calculated each time the agent takes an action and receives a reward from the environment. This prediction error is calculated by comparing the prior expected value of taking that action,

, is calculated each time the agent takes an action and receives a reward from the environment. This prediction error is calculated by comparing the prior expected value of taking that action,  , with its realized value after receiving reward,

, with its realized value after receiving reward,  :

:

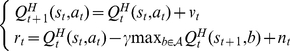

| (2) |

is the maximum value of all feasible actions available at

is the maximum value of all feasible actions available at  . The prediction error signal is hypothesized to be carried by the burst firing of midbrain dopamine neurons. This signal can be used to update the estimated value of actions:

. The prediction error signal is hypothesized to be carried by the burst firing of midbrain dopamine neurons. This signal can be used to update the estimated value of actions:

| (3) |

is the learning rate, representing the degree to which the prediction error adjusts the

is the learning rate, representing the degree to which the prediction error adjusts the  -values of the habitual system. Assuming that the reward and transition functions of the environment are stationary, equations 2 and 3 will lead the

-values of the habitual system. Assuming that the reward and transition functions of the environment are stationary, equations 2 and 3 will lead the  -values to eventually converge through learning to the expected sum of discounted rewards. Therefore, after a sufficiently long learning period, the habitual system will be equipped with the instrumental knowledge required for taking the optimal behavioural strategy. This optimal decision making is achievable without the agent knowing the dynamics of the environment. This is why this mechanism is known as model-free reinforcement learning. The gradual convergence of

-values to eventually converge through learning to the expected sum of discounted rewards. Therefore, after a sufficiently long learning period, the habitual system will be equipped with the instrumental knowledge required for taking the optimal behavioural strategy. This optimal decision making is achievable without the agent knowing the dynamics of the environment. This is why this mechanism is known as model-free reinforcement learning. The gradual convergence of  -values to their steady levels, leads the habitual system toward being insensitive to sudden changes in the environment's dynamics, such as outcome-devaluation and contingency degradation. Instead, as all the information required for making a choice between several alternatives is cached in S-R associations through the course of learning, the habitual responses can be made within a short interval after the stimulus is presented.

-values to their steady levels, leads the habitual system toward being insensitive to sudden changes in the environment's dynamics, such as outcome-devaluation and contingency degradation. Instead, as all the information required for making a choice between several alternatives is cached in S-R associations through the course of learning, the habitual responses can be made within a short interval after the stimulus is presented.
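The temporal difference update described above can be sketched in a few lines. This is a minimal tabular illustration that keeps point estimates only; the function name `td_update`, the dictionary-based table, and the default parameter values are assumptions made for the example.

```python
def td_update(q, state, action, reward, next_state, actions,
              alpha=0.1, gamma=0.95):
    """Apply one temporal difference update to the table `q` in place.

    `q` maps (state, action) pairs to cached values; missing entries are
    treated as 0. Returns the prediction error for the experienced step.
    """
    # Value of the next state: the best cached value among its actions.
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    # Prediction error: realized value minus prior expectation.
    old_value = q.get((state, action), 0.0)
    delta = reward + gamma * best_next - old_value
    # Move the cached value a fraction alpha toward the realized value.
    q[(state, action)] = old_value + alpha * delta
    return delta


if __name__ == "__main__":
    q = {}
    for _ in range(3):
        err = td_update(q, "lever", "press", 1.0, "end", ["press", "enter"],
                        alpha=0.5, gamma=0.9)
        print(err, q[("lever", "press")])
```

Because each experience moves the cached value by only a fraction of the prediction error, a sudden change in reward takes many further experiences to be reflected in the table, which is the inflexibility the text attributes to the habitual system.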

Instead of keeping and updating point estimates, the habitual system in our model uses Kalman reinforcement learning [16] to keep probability distributions for the $Q$-values of each state-action pair (see Methods for mathematical details). These probability distributions contain substantial information that will later be used for arbitration between the habitual and the goal-directed systems.

In contrast to the habitual process, the value estimation mechanism in a model-based RL is based on the transition and reward functions that the agent has learned through past experiences [5], [15]. In fact, through the course of learning, the animal is hypothesized to learn the causal relationship between various actions and their outcomes, as well as the incentive value of different outcomes. Based on the former component of the environment's dynamics, the goal-directed system can deliberate the short-term and long-term consequences of each sequence of actions. Then by using the learned reward function, calculating the expected value for each action sequence will be possible.

Letting $\hat{Q}(s, a)$ denote the value of each action calculated by this method, the recursive value-iteration algorithm below can compute it (see Methods for algorithmic details):

$$\hat{Q}(s, a) = E[r \mid s, a] + \gamma \sum_{s'} T(s, a, s') \max_{a'} \hat{Q}(s', a') \tag{4}$$

Here $E[r \mid s, a]$ is the expected immediate reward under the learned reward function, $T(s, a, s')$ is the learned transition probability, and $\gamma$ is the discount factor.
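To make the recursive computation concrete, the following is a minimal sketch of value iteration over a learned model. It is not the algorithm given in the paper's Methods; the function name, the dictionary encoding of the transition and reward functions, and the fixed number of sweeps are all assumptions chosen for illustration.

```python
def value_iteration(states, actions, transitions, rewards,
                    gamma=0.95, sweeps=100):
    """Estimate model-based action values by repeated backups.

    `transitions[(s, a)]` is a dict {next_state: probability};
    `rewards[(s, a)]` is the expected immediate reward.
    Pairs missing from either dict are treated as terminal / unrewarded.
    """
    q = {(s, a): 0.0 for s in states for a in actions}
    for _ in range(sweeps):
        new_q = {}
        for s in states:
            for a in actions:
                # Expected value of the best action in each successor state.
                future = sum(
                    prob * max(q[(s2, a2)] for a2 in actions)
                    for s2, prob in transitions.get((s, a), {}).items()
                )
                new_q[(s, a)] = rewards.get((s, a), 0.0) + gamma * future
        q = new_q
    return q


if __name__ == "__main__":
    states = ["lever", "magazine", "end"]
    actions = ["press", "enter"]
    transitions = {("lever", "press"): {"magazine": 1.0},
                   ("magazine", "enter"): {"end": 1.0}}
    rewards = {("magazine", "enter"): 1.0}
    print(value_iteration(states, actions, transitions, rewards, gamma=0.9))
    # Devaluing the outcome changes the computed values immediately.
    rewards[("magazine", "enter")] = 0.0
    print(value_iteration(states, actions, transitions, rewards, gamma=0.9))
```

The second call illustrates the flexibility discussed in the text: once the learned reward function changes, recomputed values change at once, at the price of running the full computation again.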

Due to employing the estimated model of the environment for value estimation, the goal-directed system can rapidly revise the estimated values after an environmental change, as soon as the transition and reward functions are adapted to the new conditions. This can explain why the goal-directed system is sensitive to outcome-devaluation and contingency-degradation [5]. But according to this computational mechanism, one would expect the value estimation by the goal-directed system to take a considerable amount of time, as compared to the habit-based decision time. The difference in speed and accuracy of value estimation by the habitual and goal-directed processes is the core assumption of the arbitration mechanism proposed in this paper, that allows the model to explain a set of behavioural and neurobiological data.

Speed/Accuracy Trade-off

If we assume for simplicity that the goal-directed system is always perfectly aware of the environment's dynamics, then it can be concluded that this system has perfect information about the value of different choices at each state. This is a valid assumption in most of the experimental paradigms considered in this paper. For example, in outcome-devaluation experiments, due to the existence of a re-exposure phase between training and test phases, the subjects have the opportunity to learn new incentive values for the outcomes. Although the goal-directed system, due to its flexible nature, will always have “more accurate” value estimations compared to the habitual system, the assumption of having “perfect” information might be violated under some conditions (like reversal learning tasks). This violation will naturally lead to some irrational arbitrations between the systems.

Thus, the advantage of using the goal-directed system can be approximated by the advantage of having perfect information about the value of actions. But this perfect information can be extracted from the transition and reward functions only at the cost of losing time; time which could instead be used for taking rapid habitual actions and thus receiving rewards that are smaller in magnitude, but more frequent. This trade-off is the essence of the arbitration rule between the two systems that we propose here. In other words, we hypothesize that animals balance the benefit of deliberation against its cost. The benefit is proportional to the value of having perfect information, and the cost is equal to the potential reward that could be acquired during the time that the organism is waiting for the goal-directed system to deliberate.

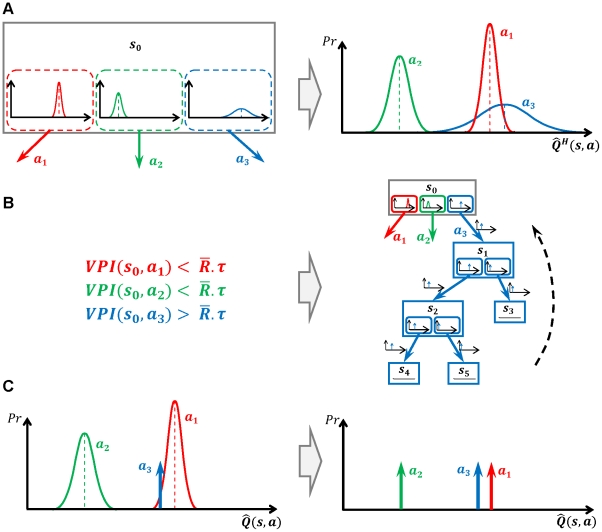

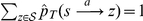

As illustrated schematically in Figure 1, at each time-step, the habitual system has an imperfect estimate for the value of each action in the form of a distribution function. Using these distribution functions, the expected benefit of estimating the value of each action $a$ by the goal-directed system is computed (see below). This benefit, called “value of perfect information”, is denoted by $VPI(s, a)$. The cost of deliberation, denoted by $\bar{R}\tau$, is also computed separately (see below). Given the cost and benefit of deliberation for each action, if the benefit is greater than the cost, i.e. $VPI(s, a) > \bar{R}\tau$, the arbitrator will decide to run the goal-directed system for estimating the value of action $a$; otherwise, the value of action $a$ that will be used for action selection will be equal to the mean of the distribution function cached in the habitual system for that action. Finally, based on the estimated values of different actions that have been derived from either of the two instrumental systems, a softmax action selection rule, in which the probability of choosing each action increases exponentially with its estimated value, can be used (see Methods). Upon executing the selected action and consequently receiving a reward and entering a new state, both the habitual and goal-directed systems will update their instrumental knowledge for future exploitation.
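The arbitration step described above can be sketched as follows. This is an illustrative outline rather than the authors' implementation: the function names, the `deliberate` callback standing in for the goal-directed system, and the inverse-temperature parameter `beta` of the softmax rule are assumptions introduced for the example.

```python
import math
import random


def arbitrate(habit_means, vpi, deliberate, avg_reward, tau):
    """Choose, per action, between the cached mean and a deliberated value.

    `habit_means[a]` is the mean of the habitual distribution for action a,
    `vpi[a]` its value of perfect information, `deliberate(a)` a (slow)
    goal-directed estimate, and `avg_reward * tau` the cost of deliberating.
    Returns the values used for selection and the deliberated actions.
    """
    cost = avg_reward * tau
    values, deliberated = {}, []
    for action, mean in habit_means.items():
        if vpi[action] > cost:
            values[action] = deliberate(action)  # benefit exceeds cost
            deliberated.append(action)
        else:
            values[action] = mean                # rely on the cached habit
    return values, deliberated


def softmax_choice(values, beta=1.0, rng=random):
    """Sample an action with probability proportional to exp(beta * value)."""
    actions = list(values)
    top = max(values.values())  # subtract the maximum for numerical stability
    weights = [math.exp(beta * (values[a] - top)) for a in actions]
    return rng.choices(actions, weights=weights, k=1)[0]


if __name__ == "__main__":
    means = {"a1": 1.0, "a2": 0.8, "a3": 0.1}
    vpi = {"a1": 0.3, "a2": 0.05, "a3": 0.0}
    values, deliberated = arbitrate(means, vpi, lambda a: 0.5,
                                    avg_reward=0.2, tau=1.0)
    print(values, deliberated, softmax_choice(values, beta=5.0))
```

Under the fixed-deliberation-time assumption, the length of `deliberated` multiplied by `tau` gives a rough proxy for the decision time on that trial.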

Figure 1. An example for showing the proposed arbitration mechanism between the two processes.

(A) The agent is at state $s$ and three actions are available. The habitual system, as shown, has an estimate for the value of each action in the form of probability distribution functions, based on its previous experiences. These uncertain estimated values are then compared to each other in order to calculate the expected gain of having the exact value of each action ($VPI$). In this example, one action has the highest mean value according to the uncertain knowledge in the habitual system. However, it is probable that the exact value of this action is less than the mean value of another action, in which case the best strategy would be to choose that other action instead. Thus, it is worth knowing the exact value of the action with the highest mean (its $VPI$ has a high value). (B) The exact value of actions is assumed to be attainable if the goal-directed system performs a search in the decision tree. However, the benefit of search must be higher than its cost. The benefit of deliberation for each action is equal to its $VPI$ signal, whereas the cost of deliberation is equal to $\bar{R}\tau$, which is the total reward that could potentially be acquired during the deliberation time, $\tau$ ($\bar{R}$ is the average over rewards acquired during some past actions). Since the benefit of deliberation exceeds its cost for one of the actions, the goal-directed system is engaged in estimating the value of that action. (C) Finally, action selection is carried out based on the estimated values for actions, which have come from either the habitual system (for the two actions that were not deliberated) or the goal-directed system (for the deliberated action). For those actions that are not deliberated, the mean value of their distribution function is used for action selection.

Based on the decision theoretic idea of “value of information” [17], a measure has been proposed in [18] for information value in the form of the expected gain in performance resulting from the improved policy that perfect information would allow. This measure, which is computed from probability distributions over the $Q$-values of choices, is used in the original paper for proposing an optimal solution to the exploration/exploitation trade-off. Here, we use the same measure for estimating the benefit of goal-directed search.

To see how this measure can be computed, assume that the animal is in state $s$, and one of the available actions is $a$, with the estimated value $Q(s, a)$ assigned to it by the habitual system. At this stage, we are interested to know how much the animal will benefit if it learns that the true value of action $a$ is equal to $x$, rather than $Q(s, a)$. Obviously, any new information about the exact value of an action is valuable only if it improves the previous policy of the animal that was based on $Q(s, a)$. This can happen in two scenarios: (a) when knowing the exact value signifies that an action previously considered to be sub-optimal is revealed to be the best choice, and (b) when the new knowledge shows that the action which was considered to be the best is actually inferior to some other action. Therefore, the gain of knowing that the true value of $a$ is $x$ can be defined as [18]:

$$\mathrm{Gain}(s, a, x) = \begin{cases} Q(s, a_2) - x & \text{if } a = a_1 \text{ and } x < Q(s, a_2) \\ x - Q(s, a_1) & \text{if } a \neq a_1 \text{ and } x > Q(s, a_1) \\ 0 & \text{otherwise} \end{cases} \tag{5}$$

$a_1$ and $a_2$ are the actions with the best and second best expected values, respectively. In the definition of the gain function, the first and the second rules correspond to the second and the first scenarios discussed above, respectively.

According to this definition, calculating the gain function for each choice requires knowing the true value of that state-action pair, $x$, which is unavailable. But, as the habitual system is assumed to keep a probability distribution function for the value of actions, the agent has access to the probability of possible values of $x$. Using this probability distribution of $x$, the animal can take the expectation over the gain function to estimate the value of perfect information ($VPI$):

$$VPI(s, a) = E\left[\mathrm{Gain}(s, a, x)\right] = \int_{-\infty}^{\infty} \mathrm{Gain}(s, a, x) \, \Pr\left(Q(s, a) = x\right) dx \tag{6}$$

Intuitively, and crudely speaking, the value of perfect information for an action is roughly proportional to the overlap between the distribution function of that action and the distribution function of the expectedly best action. The exception is the expectedly best action itself, for which the $VPI$ signal is proportional to the overlap between its distribution function and the distribution function of the expectedly second best action. It is worth emphasizing that the goal-directed system is in no way involved in the calculation of the $VPI$ signals; instead, all the necessary information is provided by the habitual process. The $VPI$ signal for an action expresses the degree to which having perfect information about that action, i.e. knowing its true value, results in policy improvement, and thus $VPI$ is indicative of the benefit of deliberation.

It is worth mentioning that the $VPI$ integral proposed in equation 6 is shown to have a closed form [18], so the integral does not need to be evaluated numerically. Therefore, it is plausible to assume that the time needed for evaluating $VPI$ is considerably less than that of running the goal-directed system.
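As an illustration of why a closed form makes this computation cheap, the sketch below evaluates the value of perfect information under the assumption that the habitual system's belief about each action value is Gaussian. The Gaussian assumption, the function names, and the dictionary layout are choices made for this example; the closed form used in [18] and in the paper's Methods may differ in its distributional details.

```python
import math


def _normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def _normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def vpi_gaussian(means, stds, action):
    """Expected gain from learning the true value of `action`.

    Assumes the belief about each action value is Normal(means[a], stds[a]**2)
    and that at least two actions are available.
    """
    ranked = sorted(means, key=means.get, reverse=True)
    best, second = ranked[0], ranked[1]
    mu, sd = means[action], stds[action]
    if action == best:
        # Gain arises only if the true value falls below the runner-up's mean.
        gap = means[second] - mu
    else:
        # Gain arises only if the true value exceeds the current best mean.
        gap = mu - means[best]
    if sd == 0.0:
        return max(gap, 0.0)
    z = gap / sd
    # Closed form of E[max(gap + sd * Z, 0)] for a standard normal Z.
    return gap * _normal_cdf(z) + sd * _normal_pdf(z)


if __name__ == "__main__":
    means = {"a1": 1.0, "a2": 0.9}
    print(vpi_gaussian(means, {"a1": 0.5, "a2": 0.5}, "a1"))    # early learning
    print(vpi_gaussian(means, {"a1": 0.01, "a2": 0.01}, "a1"))  # after training
```

In this sketch the value of perfect information shrinks as the distributions narrow and separate, but stays comparatively large when two actions have equal means, which matches the qualitative pattern the text describes in terms of overlapping distributions.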

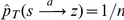

For computing the cost of deliberation, on the other hand, assuming that deliberation about the value of each action takes a fixed time,  , the cost of deliberation can be quantified as

, the cost of deliberation can be quantified as  ; where

; where  is the average rate of reward per time unit. Average reward can be interpreted as the opportunity cost of latency in responding to the environmental stimuli [19]. It means that when the average reward has a high value, every second in which a reward is not obtained is costly. Average reward can be computed as an exponentially-weighted moving average of obtained rewards:

is the average rate of reward per time unit. Average reward can be interpreted as the opportunity cost of latency in responding to the environmental stimuli [19]. It means that when the average reward has a high value, every second in which a reward is not obtained is costly. Average reward can be computed as an exponentially-weighted moving average of obtained rewards:

| $\bar{R}_t = (1-\sigma)\,\bar{R}_{t-1} + \sigma\, r_t$ | (7) |
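As a rough illustration of how the opportunity cost of deliberation could be tracked, the sketch below maintains an exponentially-weighted moving average of rewards and multiplies it by the per-action deliberation time. The function names and the parameter name `rate` are hypothetical, and the numerical values are arbitrary.

```python
def update_average_reward(avg_reward, reward, rate):
    """One exponentially-weighted moving-average step.

    rate in (0, 1] controls how quickly old rewards are forgotten
    (hypothetical parameter name).
    """
    return (1.0 - rate) * avg_reward + rate * reward

def deliberation_cost(avg_reward, tau):
    """Opportunity cost of spending tau time units deliberating on one action."""
    return avg_reward * tau

# Usage: the cost of deliberation grows as rewards accumulate.
avg = 0.0
costs = []
for r in [1.0, 1.0, 0.0, 1.0]:
    avg = update_average_reward(avg, r, rate=0.1)
    costs.append(deliberation_cost(avg, tau=0.5))
```

In a rich environment the average reward is high, so the same deliberation time becomes more expensive.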

The arbitration mechanism proposed above is an approximately optimal trade-off between speed and accuracy of responding. This means that, given that the assumptions hold, the arbitration mechanism calls or doesn't call the goal-directed system based on the criterion that the sum of discounted rewards, as defined in equation 1, should be maximized (see Methods for the optimality proof). The most challenging assumption, as mentioned before, is that the goal-directed system has perfect information on the value of choices. This assumption is challenged by cases where only the goal-directed system is affected (for example, after the subject receives verbal instructions). Clearly, the cached values in the habitual system, and thus the $VPI$ signal, will not be affected under such treatments, though the real accuracy of the goal-directed system in estimating values has changed.
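The arbitration rule described above can be sketched as follows. This is a simplified illustration, not the full model: the function name `arbitrate`, the callback `deliberate`, and the assumption that deliberation times simply add up across actions are introduced here for the example.

```python
def arbitrate(cached_values, vpi_signals, avg_reward, tau, deliberate):
    """Choose, per action, between the cached and the deliberated value.

    cached_values: habitual value estimates, one per action.
    vpi_signals:   benefit of deliberating about each action.
    avg_reward:    average reward per time unit.
    tau:           time needed to deliberate about one action.
    deliberate:    callable(action) -> goal-directed value estimate
                   (hypothetical stand-in for the goal-directed system).
    Returns the values used for action selection and the total deliberation time.
    """
    cost = avg_reward * tau
    values = []
    deliberation_time = 0.0
    for action, (cached, benefit) in enumerate(zip(cached_values, vpi_signals)):
        if benefit > cost:
            # Deliberation is worth its opportunity cost for this action.
            values.append(deliberate(action))
            deliberation_time += tau
        else:
            # Fall back on the fast habitual estimate.
            values.append(cached)
    return values, deliberation_time

# Usage: only the first action is deliberated here (0.5 > 0.1 >= 0.01).
values, time_spent = arbitrate(
    cached_values=[0.2, 0.8],
    vpi_signals=[0.5, 0.01],
    avg_reward=0.5,
    tau=0.2,
    deliberate=lambda action: 1.0,
)
```

Under this additive assumption, the total deliberation time grows with the number of actions whose benefit exceeds the cost.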

Results

Outcome-Sensitivity after Moderate vs. Extensive Training

First discovered by Adams [3] and later replicated in a series of studies [20]–[23], the effect that outcome devaluation exerts on the animal's responses depends upon the extent of pre-devaluation training; i.e. responses are sensitive to outcome devaluation after moderate training, whereas overtraining makes responding insensitive to devaluation.

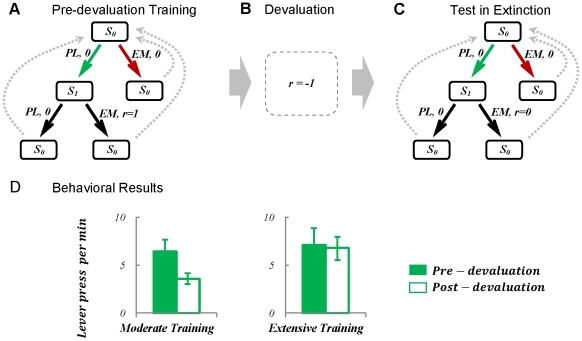

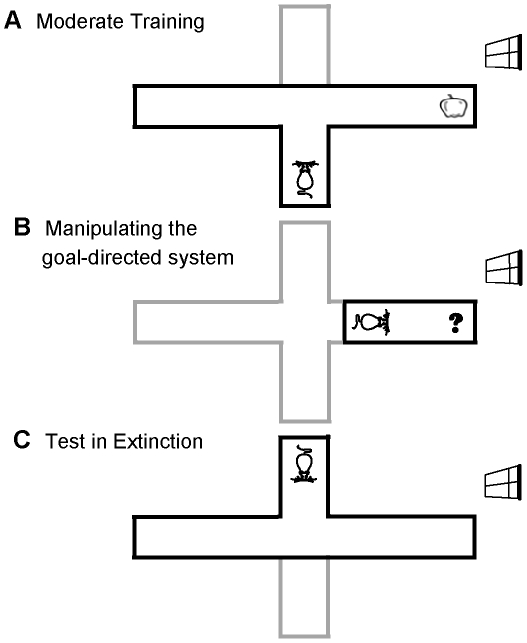

To check the validity of the proposed model, it has been simulated in a schedule analogous to those used in the above-mentioned experiments. The formal representation of the task, which was first suggested in [5], is illustrated in Figure 2. As the figure shows, the procedure is composed of three phases. The agent is first placed in an environment where pressing the lever ($PL$) followed by entering the food magazine ($EM$) results in obtaining a reward with the magnitude of one; but magazine entry before lever press, or pressing the lever and not entering the magazine, leads to no reward. As the task is supposed to be cyclic, after performing each chain of actions, the agent returns to the initial state and starts afresh (Figure 2:A). After a certain amount of training in this phase, the food outcome is devalued by being paired with poison, which is aversive with magnitude of one (equivalently, its reward is equal to -1) (Figure 2:B). Finally, to assess the effect of devaluation, the performance of the agent is measured in extinction, i.e. in the absence of any outcome (neither appetitive, nor aversive), in order to prevent the instrumental associations acquired during training from being affected in the test phase (Figure 2:C).
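The three-phase schedule described above can be written down as a small transition function. This is only a sketch: the state labels `"s0"` and `"s1"`, the action labels `"PL"` and `"EM"`, and the phase names are hypothetical identifiers chosen for the example.

```python
# Value of the food outcome in each phase of the schedule.
OUTCOME_VALUE = {
    "training": 1.0,      # food is rewarding
    "devaluation": -1.0,  # food has been paired with poison
    "extinction": 0.0,    # no outcome is delivered during the test
}

def step(state, action, phase):
    """Return (next_state, reward) for the cyclic lever-press task.

    "s0" is the initial state; "s1" is the state reached after a lever press.
    """
    if state == "s0" and action == "PL":
        return "s1", 0.0                   # lever pressed, nothing delivered yet
    if state == "s1" and action == "EM":
        return "s0", OUTCOME_VALUE[phase]  # magazine entry after lever press
    return "s0", 0.0                       # any other sequence: no reward, start afresh

# Usage: the rewarded chain during training.
s, r_first = step("s0", "PL", "training")
s, r_second = step(s, "EM", "training")
```

Because every action sequence returns the agent to the initial state, the same function describes the cyclic structure of all three phases.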

Figure 2. Formal representation of the devaluation experiment with one lever and one outcome, and behavioural results.

(A) In the training phase, the animal is put in a Skinner box where pressing the lever ($PL$) followed by a nose-poke entry in the food magazine (enter-magazine: $EM$) leads to obtaining the food reward. Other action sequences, like entering the magazine before pressing the lever, result in no reward. As the task is supposed to be cyclic, the agent will return to the initial state, $s_0$, after taking each sequence of responses. (B) In the second phase, the devaluation phase, the food outcome which used to be acquired during the training period is devalued by being paired with illness. (C) The animal's behaviour is then tested in the same Skinner box used for training, with the difference that no outcome is delivered to the animal anymore, in order to avoid changes in behaviour due to new reinforcement. (D) Behavioural results (adapted from ref [22]) show that the rate of pressing the lever decreases significantly after devaluation for the case of moderate pre-devaluation training. In contrast, it doesn't show a significant change when the training period has been extensive. Error bars represent SEM (standard error of the mean).

The behavioural results, as illustrated in Figure 2:D, show that behavioural sensitivity to goal-devaluation depends on the extent of pre-devaluation training. In the moderate training case, the rate of responding has significantly decreased after devaluation, which is an indicator of goal-directed responding. However, after extensive training, no significant sensitivity to devaluation of the outcome is observed, implying that responding has become habitual.

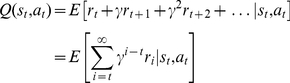

Through numerical simulation, homogeneous agents, i.e. agents with identical values of the model's free parameters, have carried out the experimental procedure under two scenarios: moderate vs. extensive pre-devaluation training. The only difference between the two scenarios is in the number of training trials in the first phase of the schedule: 40 trials for the moderate, and 240 trials for the extensive training scenario. The results are illustrated separately for these two scenarios in Figure 3. It must be noted that since neither the “lever-press” nor the “enter-magazine” action is performed by the animal during the devaluation phase, the habitual knowledge remains intact in this period; i.e. the habitual system is not simulated during the devaluation period. Devaluation is assumed to affect only the reward function used by the goal-directed system.

Figure 3. Simulation results of the model in the schedule depicted in Figure 2.

The model is simulated under two scenarios: moderate training (left column), and extensive training (right column). In the moderate training scenario, the agent has experienced the environment for 40 trials before devaluation treatment, whereas in the extensive training scenario, 240 pre-devaluation training trials have been provided. In sum, the figure shows that after extensive training, but not moderate training, the $VPI$ signal is below $\bar{R}\tau$ at the time of devaluation (Plot G against A). Thus, the behaviour in the second scenario, but not the first, doesn't change right after devaluation (Plot H against B; also, plot L against F). The low value of the $VPI$ signal at the time of devaluation for the second scenario is because there is little overlap between the distribution functions of the values of the two available choices (Plots J and K). The opposite is true for the first scenario (Plots D and E). Numbers along the horizontal axis in plots A to D, and G to J, represent trial numbers. Each “trial” ends when the simulated agent receives a reward; e.g. in the schedule of Figure 2, each time the agent chooses $EM$ at the state reached after pressing the lever, the trial number is counted up. Plots E and K show the distribution functions of the habitual system over its estimated $Q$-values, at one trial before devaluation. Bar charts F and L show the average probability of performing $PL$ at 10 trials before (filled bars) and 10 trials after (empty bars) devaluation. All data reported are means over 3000 runs. The SEM for all bar charts is close to zero and thus, not illustrated.

Figure 3:A and G show that at the early stages of learning, the $VPI$ signal has a high value for both of the actions, $PL$ and $EM$, at the initial state, $s_0$. This indicates that due to initial ignorance of the habitual system, knowing the exact value of both of the actions will greatly improve the agent's behavioural strategy. Hence, the benefit of deliberation is more than its cost, $\bar{R}\tau$. As rewards are obtained, the $\bar{R}\tau$ signal rises gradually. Concurrently, as the $Q$-values estimated by the habitual process for the two actions converge to their real values through learning, the difference between them increases (Figure 3:D and J). This increase makes the overlap between the distribution functions over the two actions shrink progressively (Figure 3:E and K) and consequently, the $VPI$ signal decreases gradually.

Focusing now on the moderate training scenario, it is clear that when devaluation occurs at trial number 40, the $VPI$ signals have not yet fallen below $\bar{R}\tau$ (Figure 3:A). Thus, the actions are goal-directed at the time of devaluation and hence, the agent's responses show a great sensitivity to devaluation at the very early stages after devaluation; i.e. the probability of choosing action $PL$ sharply decreases to 50%, which is equal to that of action $EM$ (Figure 3:B and F). Figure 3:C also shows that in the moderate training scenario, deliberation time has always been high, indicating that actions have always been deliberated using the goal-directed system.

In contrast to the moderate training scenario, the $VPI$ signal is below $\bar{R}\tau$ at the time of devaluation in the extensive training scenario (Figure 3:G). This means that at this point in time, the cost of deliberation has exceeded its benefit and hence, actions are chosen habitually. This can be seen in Figure 3:I, where deliberation time has reached zero after almost 100 training trials. As a consequence, the agent's responses have not sharply changed after devaluation (Figure 3:H and L). Because the test has been performed in extinction, the average reward signal has gradually decreased to zero after devaluation and concurrently, the $VPI$ signal has slowly risen again, due to the reduction of the difference between the $Q$-values of the two choices (Figure 3:J) and so, the growing overlap between their distribution functions. At the point where $VPI$ exceeds $\bar{R}\tau$, the agent's responses have become goal-directed again and so, deliberation time has increased (Figure 3:I). Consistently, the rate of selection of each of the two choices has adapted to the post-devaluation conditions (Figure 3:H).

In a nutshell, the simulation of the model in these two scenarios is consistent with the behavioural observation that moderately trained behaviours are sensitive to outcome devaluation, but extensively trained behaviours are not. Moreover, the model predicts that after extensive training, deliberation time declines; a prediction that is consistent with the VTE behaviour observed in rats [6]. Furthermore, the model predicts that deliberation time increases with a lag after devaluation in the extensive training scenario, whereas it remains unchanged before and after devaluation in the moderate training scenario.

For clarification, the mean value of $Q(s_0, EM)$ in Figures 3:E and K is above zero because of the cyclic nature of the task: by taking action $EM$ at state $s_0$, the agent goes back to the same state, which might have a positive value.
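This point can be made explicit with a one-step backup. The expression below is a sketch that assumes a discount factor $\gamma$ and a greedy backup; the immediate reward of entering the magazine at the initial state is zero, so the value of that action is inherited entirely from the state the agent returns to.

```latex
Q(s_0, EM) \;=\; \underbrace{r(s_0, EM)}_{=\,0} \;+\; \gamma \max_{a} Q(s_0, a)
\;=\; \gamma \max_{a} Q(s_0, a) \;>\; 0
\quad \text{whenever } \max_{a} Q(s_0, a) > 0 .
```

During training, pressing the lever at the initial state eventually leads to reward, so the maximum on the right-hand side is positive and the unrewarded action acquires a positive, though smaller, value.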

Outcome-Sensitivity in a Concurrent Schedule

The focus of the previous section was on simple tasks with only one response for each outcome. In another class of experiments, the development of behavioural autonomy has been assessed in more complex tasks where two different responses produce two different outcomes [21], [24]–[26]. Among those experiments, to the best of our knowledge, it is only in the experiment in [26] that the two different choices (pressing lever one and pressing lever two) are concurrently available and hence, the animal is given a choice between the two responses (Figure 4:A). In the others, the two different responses are trained and also tested in separate sessions and so, their schedules are not compatible with the requirements of the reinforcement learning framework that is used in our model.

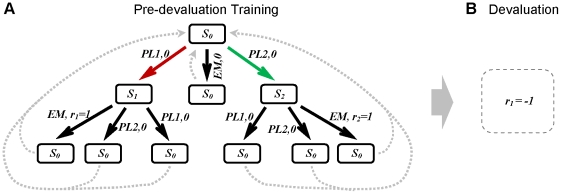

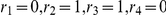

Figure 4. Tree representation of the devaluation experiment with two levers available concurrently.

(A) In the training phase, either pressing lever one or pressing lever two, if followed by entering the magazine, results in acquiring one unit of the first or the second reward, respectively. The reinforcing value of each of the two rewards is equal to one. Other action sequences lead to no reward. As in the task of Figure 2, this task is also assumed to be cyclic. (B) In the devaluation phase, the outcome of one of the responses is devalued, whereas the rewarding value of the outcome of the other response remains unchanged. After the devaluation phase, the animal's behaviour is tested in extinction (for space considerations, this phase is not illustrated). Similar to the task of Figure 2, neither of the two outcomes is delivered to the animal in the test phase.

In [26], rats received extensive concurrent instrumental training in a task where pressing the two different levers produces different types of outcomes: food pellets and sucrose solution. Although the outcomes are different, they have equal reinforcing strength, in terms of the response rates supported by them. A task similar to that used in their experiment is formally depicted in Figure 4.

After extensively reinforcing the two responses, one of the outcomes was devalued by flavour aversion conditioning, as illustrated in Figure 4:B. Subsequently, given a choice between the two responses, the sensitivity of instrumental performance to this devaluation was assessed in extinction tests. The results of their experiment showed that devaluation reduced the relative performance of the response associated with the devalued outcome at the very early stage of the test phase, even after extensive training. Thus, it can be concluded that whatever the amount of instrumental training, S-R habits do not overcome goal-directed decision making when two responses with equal affective values are concurrently available.

Simulating the proposed model in the task of Figure 4 has replicated this behavioural observation. As illustrated in Figure 5:A, initially, the $VPI$ signal for the two responses has a high value, which gradually decreases over time as the variance of the distribution functions over the estimated values of the two responses decreases; meaning that the habitual process becomes more and more certain about the estimated values. However, due to the forgetting effect, i.e. the habitual system forgets very old samples and does not use them in approximating the distribution function, the variance of the distribution functions over the values of actions doesn't converge to zero, but instead converges to a level higher than zero. Moreover, because the strength of the two reinforcers is equal, as revealed in Figure 5:D, the distribution functions do not separate (Figure 5:E). As a result of these two facts, the $VPI$ signal has converged to a level higher than $\bar{R}\tau$ (Figure 5:A). This has led to the performance remaining goal-directed (Figure 5:C) and sensitive to devaluation of one of the outcomes; i.e. after devaluing the outcome of one of the actions, its rate of selection has sharply decreased and instead, the probability of selecting the other action has increased (Figure 5:B and F).

Figure 5. Simulation results for the task of Figure 4.

The results show that since the reinforcing value of the two outcomes is equal, there is a huge overlap between the distribution functions over the $Q$-values of the two lever-press actions at the initial state, even after extensive training (240 trials) (Plots D and E). Accordingly, the $VPI$ signals (benefit of goal-directed deliberation) for these two actions remain higher than the $\bar{R}\tau$ signal (cost of deliberation) (Plot A) and thus, the goal-directed system is always engaged in value estimation for these two choices. The behaviourally observable result is that responding remains sensitive to revaluation of outcomes, even though devaluation has happened after a prolonged training period (Plots B and F).

As is clear from the above discussion, the relative strength of the reinforcers critically affects the arbitration mechanism in our model. In fact, the model predicts that when the affective values of the two outcomes are close enough to each other, the $VPI$ signal will not decline and hence, the behaviour will remain goal-directed and sensitive to devaluation, even after extensive training. But if the two outcomes have different reinforcing strengths, then their corresponding distribution functions will gradually separate and thus, the $VPI$ signal will converge to zero. This leads to the habitual process taking control of behaviour and the performance becoming insensitive to outcome devaluation. This prediction is in contrast to the model proposed in [5], in which the arbitration between the two systems is independent of the relative incentive values of the two outcomes. In fact, in that model, whether the value of an action comes from the habitual or the goal-directed system depends only on the uncertainty of the two systems about their estimated values and thus, the arbitration between the two systems is independent of the estimated value for other actions.

Reaction-Time in a Reversal Learning Task

Using a classical reversal learning task, Pessiglione and colleagues have measured human subjects' reaction time by temporal decoupling of deliberation and execution processes [27]. Reaction time, in their experiment, is defined as the interval between stimulus presentation and the subsequent response initiation. Subjects are required to choose between two alternative responses (“go” and “no-go”), as soon as one of two stimuli appears on the screen. As shown in Figure 6:A, at each trial, one of the two stimuli appears at random, and after the presentation of each stimulus, only one of the two actions results in a gain, whereas the other action results in a loss. The rule governing the appropriate response must be learned by the subject through trial and error. After several learning trials, the reward function changes without warning. This second phase is called the reversal phase. Finally, during the extinction phase, the “go” action never leads to a gain, and the appropriate action is to always choose the “no-go” response.

Figure 6. Tree representation of the reversal learning task, used in [27], and the behavioural results.

(A) When each trial begins, one of the two stimuli is presented at random on a screen. The subject can then choose whether to touch the screen (go action) or not (no-go action). The task is performed in three phases: training, reversal, and extinction. During the training phase, the subject will receive a reward if one of the stimuli is presented and the go action is performed, or if the other stimulus is presented and the no-go action is selected. During the reversal phase, the reward function is reversed, meaning that the no-go action must be chosen when the stimulus previously paired with the go action is presented, and vice versa. Finally, during the extinction phase, regardless of the presented stimulus, only the no-go action leads to a reward. (B) During both the training and reversal phases, subjects' reaction time is high at the early stages, when they don't have enough experience with the new conditions yet. However, after some trials, the reaction time declines significantly. Error bars represent SEM.

To analyse the results of the experiments, the authors have divided each phase into two sequential periods: a “searching” period during which the subjects learn the reward function by trial and error, and an “applying” period during which the learned rule is applied. The results show that in the searching period of each phase, the subjects might choose either the right or the wrong choice, whereas during the applying period, they almost always choose the appropriate action. Moreover, as shown in Figure 6:B, the subjects' reaction time is significantly lower during the applying period, compared to the searching period.

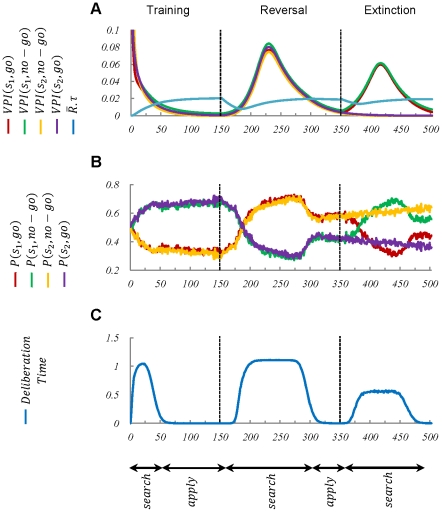

Figure 7 shows that our model captures the essence of the experimental results reported in [27]. In fact, the model predicts that during the searching period, the goal-directed process is involved in decision making, whereas during the applying period, the arbitration mechanism doesn't ask for its help in value estimation. It should be noted that the reaction time reported in [27] is presumably the sum of stimulus-recognition time, deliberation time, etc. Thus, a fixed value, which accounts for all the other processes involved in choice selection, must be added to the deliberation time computed by our model.

Figure 7. Simulation results of the model in the reversal learning task depicted in Figure 6.

Since the $VPI$ signals have high values at the early stages of learning, the goal-directed system is active and thus, the deliberation time is relatively high. After further training, the habitual system takes control over behaviour and as a result, the model's reaction time decreases. After reversal, it takes some trials for the habitual system to realize that the cached $Q$-values are not precise anymore (equivalent to an increase in the variance of the $Q$-values). Thus, some trials after reversal, the $VPI$ signal increases again, which results in re-activation of the goal-directed system. As a result, the model's reaction time increases again. A similar explanation holds for the rest of the trials. In sum, consistent with the experimental data, the reaction time is higher during the searching period than during the applying period.

One might argue that variations in reaction time in the mentioned experiment could also be explained by a single habitual system, by assuming that lack of sufficient learning induces a hesitation-like behaviour. For example, high uncertainty in the habitual system at the early stages of learning a task, or after a change is recognized, can result in a higher-than-normal rate of exploration [18]. Thus, assuming that exploration takes more time than exploitation, reaction time will be higher when the uncertainty of $Q$-values is high. However, as emphasized by the authors in [27], uncertainty does not have any effect on the subject's movement time, but only on the reaction time. In fact, movement time, defined as the interval between response initiation and submission of the choice, remains constant through the course of the experiment. Since movement time is unaffected by the extent of learning, it is unlikely that variations in reaction time are due to a hesitation-like effect; as an alternative, they can be attributed to the involvement of deliberative processes. Moreover, such an explanation lacks a normative rationale for the assumption that exploration takes more time than exploitation.

Reaction-Time as a Function of the Number of Choices

According to the classical literature in behavioural psychology, choice reaction time (CRT) is fastest when only one possible response is available, and as the number of alternatives increases, so does the response latency. Originally, Hick [28] found that in choice reaction time experiments, CRT increases in proportion to the logarithm of the number of alternatives. Later on, a wealth of evidence validated his finding (e.g., [29]–[35]), such that it became known as “Hick's law”.

Other researchers [36], [37] found that Hick's law holds only for unpracticed subjects, and that training shortens CRT. They also found that in well-trained subjects, there is no difference in CRT as the number of choices varies.

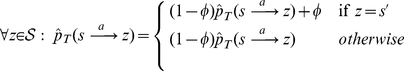

In a typical CRT experiment, a certain number of stimuli and the same number of responses are used in each session. Figure 8 shows the tree representation of an example task with four stimuli and four alternatives. In each trial, one of the four stimuli appears at random, and only one of the four responses results in a reward. Because subjects in CRT experiments are told in advance which response is appropriate for each stimulus, we assume that this declarative knowledge can be fed into and used by the goal-directed system in the form of transition and reward functions. Furthermore, subjects are asked to respond correctly and, at the same time, as fast as possible. Hence, since subjects know the structure of the task in advance, they show very high performance (as defined by the rate of correct responses) in the task.

Figure 8. The tree representation of the task for testing Hick's law.

In this example, at each trial, one of the four stimuli is presented with equal probability. After observing the stimulus, only one of the four available choices leads to a reward. The task structure is verbally instructed to the subjects before they start performing the task. The interval between the appearance of the stimulus and the initiation of a response is measured as “reaction time”. The experiment is performed under different numbers of stimulus-response pairs; e.g. some subjects perform the task when only one stimulus-response pair is available, whereas for other subjects the number of stimulus-response pairs might be different.

As demonstrated in Figure 9, the behaviour of the model replicates the results of CRT experiments: at the early stages of learning, the deliberation time increases as the number of choices increases, whereas after sufficient training, no difference in deliberation time can be seen. It must be mentioned that, in contrast to behavioural data, our model predicts a linear relationship between the CRT and the number of alternatives, rather than a logarithmic one. Again, a fixed value characterizing stimulus-identification time must be added to the deliberation time computed by our model in order to reach the reaction time reported in the CRT literature.

Figure 9. Simulation results for the task of Figure 8.

Consistent with the behavioural data, the results show that as the number of stimulus-response pairs increases, the reaction time also increases. Moreover, if extensive training is provided to the subjects, the reaction time decreases and becomes independent of the number of choices.
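The difference between a logarithmic and a linear dependence of CRT on the number of alternatives can be illustrated with a small numerical sketch. It is not the simulation behind Figure 9: the function names, the use of log2(n) as one common statement of Hick's law, and all coefficient values (`base`, `slope`, `per_choice`) are assumptions chosen only for illustration. Here `base` stands for the fixed non-deliberative component (e.g. stimulus identification) that has to be added to the deliberation time.

```python
import math

def hick_crt(n, base=0.2, slope=0.15):
    """Logarithmic dependence: CRT = base + slope * log2(n).

    `base` and `slope` are hypothetical values in seconds.
    """
    return base + slope * math.log2(n)

def linear_crt(n, base=0.2, per_choice=0.05):
    """Linear dependence: each extra alternative adds a constant
    amount of deliberation time (hypothetical `per_choice`)."""
    return base + per_choice * n

def trained_crt(n, base=0.2):
    """After extensive training: CRT no longer depends on n."""
    return base

for n in (1, 2, 4, 8):
    print(n, round(hick_crt(n), 3), round(linear_crt(n), 3), trained_crt(n))
```

Under the logarithmic form, the extra time per added alternative shrinks as the number of alternatives grows, whereas under the linear form it stays constant; this is the point on which the two predictions can be told apart in data.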

Since in CRT experiments declarative knowledge about appropriate responses is provided to the subjects, they have a relatively high performance from the very beginning of the experiment. The proposed model can explain this behavioural characteristic because at the early stages of the experiment, when the habitual system is totally ignorant about the task structure, the goal-directed system controls the behaviour and exploits the prior knowledge fed into it. Thus, a single habitual system cannot explain the performance profile of subjects, even though it might be able to replicate the reaction-time profile. For example, a habitual system that uses a winner-take-all neural mechanism for the $Q$-values of different choices to compete [38], [39] also predicts that at the early stages of learning, where the $Q$-values are close to each other, reaching a state in which one action overcomes the others takes longer, compared to the later stages where the best choice has a markedly higher $Q$-value than other actions. Such a mechanism also predicts that at the early stages, if the number of choices increases, the reaction time will also increase. However, since feeding the subject's declarative knowledge into the habitual system is not consistent with the nature of this system, a single habitual system cannot explain the performance of subjects in Hick's experiment.
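The claim that a winner-take-all competition resolves more slowly when values are close can be made concrete with a toy simulation. The sketch below is a generic mutual-inhibition model, not the mechanism of [38], [39]: the function name, the update equation, and every parameter (`inhibition`, `leak`, `margin`, `dt`) are assumptions made for illustration. It illustrates only the effect of value separation, not the effect of the number of choices.

```python
def wta_decision_steps(inputs, inhibition=1.0, leak=0.5, margin=0.5,
                       dt=0.01, max_steps=100_000):
    """Count Euler steps until one unit leads the runner-up by `margin`.

    Each unit is driven by its input (standing in for a cached value),
    inhibited by the summed activity of the other units, and leaks.
    Expects at least two inputs; returns None if no winner emerges.
    """
    x = [0.0] * len(inputs)
    for step in range(1, max_steps + 1):
        total = sum(x)
        x = [max(0.0, xi + dt * (inp - inhibition * (total - xi) - leak * xi))
             for xi, inp in zip(x, inputs)]
        ranked = sorted(x, reverse=True)
        if ranked[0] - ranked[1] >= margin:
            return step
    return None

# Early in learning: values are close, so the competition is slow.
early = wta_decision_steps([0.55, 0.50])
# Late in learning: the best action stands out, so a winner emerges quickly.
late = wta_decision_steps([1.0, 0.2])
print(early, late)
```

Such a mechanism reproduces the latency pattern but, as argued above, has no natural way to start from instructed knowledge about the correct response.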

Discussion

Neural Implications

As mentioned, training-induced neuroplasticity in cortico-basal ganglia circuits is suggested to be mediated by dopamine (DA), a key neuromodulator in the brain reward circuitry. Whereas phasic activity of midbrain DA neurons is hypothesized to carry the prediction error signal [40], [41], and thus exerts an indirect effect on behaviour through its role in learning the value of actions, the tonic activity of DA has been shown to have a direct effect on behaviour. For example, DA agonists have been demonstrated to have an invigorating effect on a range of behaviours [42]–[46]. It has also been shown that higher levels of intrastriatal DA concentration are correlated with higher rates of responding [47], [48], whereas DA antagonists or DA depletion result in reduced responsivity [49]–[53].

Based on this evidence, it has been suggested in previous RL models that tonic DA might report the average reward signal ($\bar{R}$) [19]. By adopting the same assumption, our model also provides a normative explanation for the experimental results mentioned above, in terms of tonic DA-based variations in deliberation time.

Rationality of Type II

In the economic literature of decision theory, rational individuals make optimal choices based on their desires and goals [54], without taking into account the time needed to find the optimal action. In contrast, models of bounded rationality are concerned with the information and computational limitations imposed on individuals when they are confronted with alternative choices. Normative models of rational choice that take into account the time and effort required for decision making are known as rationality of type II. This notion emphasizes that computing the optimal answer is feasible, but not economical in complex domains.

The notion was first introduced by Herbert Simon, who argued that agents have limited computational power and that they must react within a reasonable amount of time [55], [56]. To capture this concept, [57] used the Scottish word “satisficing”, which means satisfying, to refer to a decision making mechanism that searches until an alternative that meets the agent's aspiration level criterion is found. In other words, the search process continues until a satisfactory solution is found. Borrowed from psychology, aspiration level denotes a solution evaluation criterion that can be either static or context-dependent and acquired by experience. A similar idea has been adopted by neuroscientists to explain the speed/accuracy trade-off, using signal detection theory (see [58] for review). In this framework, the information accumulated over a sequence of noisy observations must reach a certain threshold before the animal converts it into a categorical choice. If the threshold goes up, the accuracy increases. Since more information must then be gathered to satisfy that increased level of accuracy, response latency also increases.
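The threshold account of the speed/accuracy trade-off can be illustrated with a minimal random-walk simulation. This is a sketch under stated assumptions rather than a model taken from [58]: each noisy observation is reduced to a unit step that favours the correct alternative with probability `p_correct`, and the function names and parameter values are hypothetical.

```python
import random

def accumulate_to_threshold(threshold, p_correct=0.6, rng=None):
    """Add +1/-1 evidence steps until |evidence| reaches `threshold`.

    Returns (choice_is_correct, number_of_observations).
    """
    if rng is None:
        rng = random.Random()
    evidence, steps = 0, 0
    while abs(evidence) < threshold:
        evidence += 1 if rng.random() < p_correct else -1
        steps += 1
    return evidence > 0, steps

def summarize(threshold, n_trials=2000, seed=0):
    """Estimate accuracy and mean latency for a given threshold."""
    rng = random.Random(seed)
    results = [accumulate_to_threshold(threshold, rng=rng)
               for _ in range(n_trials)]
    accuracy = sum(correct for correct, _ in results) / n_trials
    mean_latency = sum(steps for _, steps in results) / n_trials
    return accuracy, mean_latency

for threshold in (2, 6):
    print(threshold, summarize(threshold))
```

Raising the threshold from 2 to 6 in this toy setting yields both a higher proportion of correct choices and a larger mean number of observations, which is the trade-off described above.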

Simon's initial proposal has prompted many attempts in both social science and computer science to develop models that sacrifice optimality in favor of fast responding. The focus has been on complex uncertain environments, where the agent must respond in a limited amount of time. The answer given to this dilemma in social science is often based on a variety of domain-specific heuristic methods [59], [60] in which, rather than employing a general-purpose optimizer, animals use a set of simple and hard-coded rules to make their decisions in each particular situation. In the artificial intelligence literature, on the other hand, the answer is often based on approximate reasoning. In this approach, details of a complex problem are ignored in order to build a simpler representation of the original problem. Finding the optimal solution of this simpler problem is then feasible in an admissible amount of time [61].

To capture the concept of time limitation and to incorporate it into models of decision making, we have used the dual-process theory of decision making. The model we have proposed is based on the assumption that the habitual process is fast in responding to environmental stimuli, but slow in adapting its behavioural strategies, particularly in environments with low stability. The goal-directed system, in contrast, needs time to deliberate on the value of different alternatives by tracing down the decision tree, but is flexible in behavioural adaptation. The rule for arbitrating between these two systems assumes that animals balance decision quality against the computational requirements of decision-making.
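One way to read this balance is as a cost-benefit comparison made before each choice. The sketch below assumes that the comparison is between the expected gain from deliberating and the reward forgone while deliberating, with the latter taken as the average reward rate multiplied by the deliberation time. The function name, the argument names, and the numerical values are hypothetical and serve only to illustrate the idea.

```python
def choose_system(information_gain, average_reward_rate, deliberation_time):
    """Pick which system should evaluate an action.

    information_gain: expected improvement in decision quality from
        deliberating (hypothetical units of reward).
    average_reward_rate: reward obtained per unit time on average.
    deliberation_time: time the goal-directed search would take.
    """
    # Assumed opportunity cost: reward forgone while deliberating.
    opportunity_cost = average_reward_rate * deliberation_time
    if information_gain > opportunity_cost:
        return "goal-directed"
    return "habitual"

# Early in learning: cached values are uncertain, so deliberation pays off.
print(choose_system(0.8, average_reward_rate=0.5, deliberation_time=1.0))
# After extensive training: little left to gain, so the habit is used.
print(choose_system(0.1, average_reward_rate=0.5, deliberation_time=1.0))
# A higher average reward rate makes time more costly and favours the habit.
print(choose_system(0.8, average_reward_rate=2.0, deliberation_time=1.0))
```

Under this reading, anything that raises the average reward signal makes deliberation relatively more expensive and shifts control toward the faster habitual system.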

However, the optimality of the arbitration rule is based on the strong assumption that the goal-directed decision process has perfectly learned the environmental contingencies. This assumption might be violated at some points, particularly at the very early stages of learning a new task. When both systems are totally ignorant of the task structure, although the habitual system is in desperate need of perfect information (high $VPI$ signal), the goal-directed system does not have any information to provide. Thus, deliberation not only fails to improve the animal's strategy, but also wastes time that could be used for blind exploration. However, since the goal-directed system is very efficient in exploiting the experienced contingencies, this sub-optimal behaviour of the model does not last long. More importantly, in real world situations, the goal-directed process seems to always have considerably more accurate information than the habitual system, even in environments that have never been explored before. This is because many environmental contingencies can be discovered by mere visual observation (e.g. searching for food in an open field) or verbal instruction (as in Hick's task discussed before), without any experience being required.