Abstract

Purpose

The purpose of this study was to investigate musical timbre perception in cochlear-implant (CI) listeners using a multidimensional scaling technique to derive a timbre space.

Methods

Sixteen synthesized stimuli imitating Western musical instruments were used [S. McAdams et al., Psychol. Res. 58, 177–192 (1995)]. Eight CI listeners and 15 normal-hearing (NH) listeners participated. Each listener made judgments of dissimilarity between stimulus pairs. Acoustical analyses that characterized the temporal and spectral characteristics of each stimulus were performed to examine the psychophysical nature of each perceptual dimension.

Results

For NH listeners, the timbre space was best represented in three dimensions, one correlated with the temporal envelope (log-attack time) of the stimuli, one correlated with the spectral envelope (spectral centroid), and one correlated with the spectral fine structure (spectral irregularity) of the stimuli. The timbre space from CI listeners, however, was best represented by two dimensions, one correlated with temporal envelope features and the other weakly correlated with spectral envelope features of the stimuli.

Conclusion

Temporal envelope was a dominant cue for timbre perception in CI listeners. Compared to NH listeners, CI listeners showed reduced reliance on both spectral envelope and spectral fine structure cues for timbre perception.

I. INTRODUCTION

Cochlear implants (CIs) were originally designed to aid speech recognition for severely hard-of-hearing individuals. Recently implanted CI patients can achieve average sentence recognition scores above 80% correct in quiet (see Dorman and Wilson, 2008). While the temporal-envelope-based coding strategies can support speech recognition, it has been well-documented that these strategies do not provide sufficient information for pitch perception or music appreciation (e.g., Gfeller and Lansing, 1991; Gfeller et al., 2002a; McDermott, 2004; Kong, Cruz, Jones, & Zeng, 2004; Galvin, Fu, & Nogaki, 2007).

The spectral and temporal properties of music are complex and dynamic. Both categories of cues contribute to the recognition of familiar tunes and the appraisal of the characteristics of music. The aesthetic appreciation of music, however, is multi-faceted. It does not solely depend on listeners’ ability to follow the melody or pitch contours. Anecdotally, some CI listeners can enjoy music by following the musical rhythms or the lyrics, despite their inability to track many of the melodic and pitch-based characteristics. Kong et al. (2004) systematically studied CI listeners’ ability to discriminate tempos, rhythmic patterns, and melodies. They reported that CI listeners were able to follow rhythmic cues for music perception, but their ability to perceive melodies (pitch cues) was poor, similar to normal-hearing listeners listening to stimuli processed with a one-channel vocoder.

Another important characteristic of musical sounds is timbre. By definition, timbre is the perceptual attribute that distinguishes two sounds that have the same pitch, loudness, and duration (ANSI, 1973). For musical timbre, this perceptual attribute distinguishes instruments (e.g., guitar vs. piano) playing the same note with the same loudness. One approach to studying timbre perception is to evaluate listeners’ ability to discriminate among or recognize musical instruments. This approach has been used extensively to investigate musical timbre perception in CI listeners (e.g., Dorman, Basham, McCandless, & Dove, 1991; Gfeller and Lansing, 1991; Gfeller, Knutson, Woodworth, Witt, & DeBus, 1998; Gfeller, Witt, Woodworth, Mehr, & Knutson, 2002b; Gfeller et al., 2002c; Schulz and Kerber, 1994; Leal et al., 2003; McDermott and Looi, 2004; Nimmons et al., 2008). In these studies, musical notes or songs played by different instruments (e.g., violin, piano, flute) were presented to CI listeners, who were asked to identify the instruments in either an open-set or a closed-set task. Across studies, CI listeners’ performance was generally poorer than that of normal-hearing (NH) listeners, with average identification scores of about 40–60% correct, depending on the number of possible alternatives in the closed set, compared to 90–100% correct identification by NH listeners (Schulz and Kerber, 1994; Gfeller et al., 1998; 2002b; McDermott and Looi, 2004; Kang et al., 2009). Another approach to studying timbre perception is to evaluate listeners’ subjective ratings or overall appraisal (i.e., liking) of sounds; this includes subjective characteristics such as “pleasantness” or “naturalness” (e.g., Gfeller and Lansing, 1991; Schulz and Kerber, 1994; Looi, McDermott, McKay, & Hickson, 2007), or sound quality on different perceptual dimensions, such as dull/brilliant, compact/scattered, and full/empty (Gfeller et al., 2002b).
Results from these studies showed that, in general, CI listeners rated the sound quality lower than NH listeners (Schulz and Kerber, 1994; Gfeller and Lansing, 1991; Gfeller et al., 1998; 2000; 2002b). Gfeller et al. (2002b) reported that CI listeners rated string instruments and instruments played in the higher-frequency range as more scattered, less full, and duller when compared with NH listeners’ ratings.

The present study takes another approach to investigate timbre perception in CI listeners (i.e., exploring the timbre space). The timbre space for NH listeners has been studied extensively (e.g., McAdams, Winsberg, Donnadieu, De Soete, & Krimphoff, 1995; Marozeau, de Cheveigné, McAdams, & Winsberg, 2003; Caclin, McAdams, Smith, & Winsberg, 2005). However, it has not been thoroughly examined in listeners with significant auditory impairments. In this approach, we aim to (1) derive a multidimensional musical timbre space for CI listeners to compare with the timbre space for NH listeners, and (2) determine the psychophysical nature of the dimensions in the CI timbre space on the basis of the acoustic parameters (temporal and spectral features of the musical instruments) described in previous studies (Krimphoff, McAdams, & Winsberg, 1994; McAdams et al., 1995; Marozeau et al., 2003; Caclin et al., 2005; Peeters, 2004). Because timbre is a multidimensional perceptual attribute, the perception of musical timbre can be represented in a multidimensional Euclidean space using a Multidimensional Scaling (MDS) technique, such that the distances among the stimuli reflect their relative dissimilarities. These perceptual dimensions are often described by terms like “brightness,” “roughness,” “nasality,” “naturalness,” or “impulsiveness.” Recent studies have tried to better define “timbre” by varying different physical parameters of acoustic signals and examining changes in perceived timbre (McAdams et al., 1995; Marozeau et al., 2003; Caclin et al., 2005; Marozeau and de Cheveigné, 2007). McAdams et al. (1995) tested a large group of NH listeners (n = 98) with 18 digitally synthesized instruments. The goal of their study was to investigate the physical correlates of the most salient dimensions of timbre. Listeners were asked to make dissimilarity ratings between stimulus pairs.
This study indicated that the NH timbre space can be represented by three dimensions (3D), with each dimension associated with an acoustic feature. The first timbre dimension was highly correlated with the “log-attack time” of the stimuli and the second dimension was highly correlated with the “spectral centroid” (i.e., the spectral center of gravity) of the stimuli. The third dimension, however, was only weakly correlated with “spectral flux” (i.e., the fluctuation of the spectrum over time). A more recent study by the same group of researchers (Caclin et al., 2005) investigated the psychophysical nature of timbre dimensions by varying individual acoustic properties (i.e., log-attack time, spectral centroid, and spectral flux or spectral irregularity) of harmonic complexes independently. They concluded that when attack time, spectral centroid, and spectral flux were manipulated independently in the stimulus set, the NH timbre space was best fit with a two-dimensional (2D) model. While spectral flux alone contributes to timbre perception, it was not a robust cue for NH listeners to perceive differences in timbre when all three cues were present in the stimulus set. On the other hand, when attack time, spectral centroid, and spectral irregularity (differences in amplitude between adjacent harmonics) were manipulated independently in the stimulus set, the NH timbre space was best fit with a 3D model with one dimension significantly correlated with the attack time, one dimension correlated with the spectral centroid, and one dimension correlated with the spectral irregularity. Based on these results, timbre can be described as a characteristic or quality of sound that depends on both temporal and spectral aspects of sound, both independently and interdependently.

While the relative contribution of different spectral and temporal acoustic features to musical timbre perception in NH listeners is still not fully understood, it is generally agreed that the temporal envelope (e.g., attack time) and spectral envelope (e.g., spectral centroid) are the dominant cues for timbre perception. Previous research has examined CI listeners’ ability to discriminate (i.e., by measuring just-noticeable differences) independent temporal and spectral acoustic features (attack time and spectral centroid) of the stimuli that contribute to timbre perception and found that CI listeners performed similarly to NH controls (Pressnitzer, Bestel, & Fraysse, 2005). In the present study, we replicated the experimental procedure performed by McAdams et al. (1995) to investigate the relative contribution of temporal and spectral cues to the perception of musical timbre in CI listeners. To our knowledge, our study is the first to derive a timbre space for CI listeners. Based on previous findings on the ability of CI listeners to perceive temporal and spectral cues for music perception (see McDermott, 2004; Won, Drennan, Kang, and Rubinstein, 2010 for review), we hypothesized that CI listeners are able to use temporal cues to perceive musical timbre, but their ability to use spectral cues is reduced; thus the timbre space in CI listeners should differ from that obtained from NH listeners.

II. METHODS

1. Listeners

Fifteen NH listeners (4 males, 11 females), ages 19 to 32 years, served as a control group. All listeners had hearing thresholds no greater than 20 dB HL from 250 to 8000 Hz bilaterally. One listener had extensive musical training for over 15 years, one received some piano training as a child, and the remaining listeners did not have any formal musical training.

Eight CI listeners (C1–C8, 5 males, 3 females), ages 15 to 63 years (mean = 36.75 years), participated in the study. Table I shows detailed demographic information for each listener, including age, onset of hearing loss, etiology of hearing loss, duration of severe-to-profound hearing loss prior to implantation, the CI processor used, and phoneme (consonant and vowel) identification scores. Half of the listeners were under the age of 30, close to the age range of the NH listeners. The remaining listeners were between 46 and 63 years old. All listeners, except for C1, were pre-lingually or peri-lingually hard-of-hearing bilaterally. For these listeners, hearing loss was progressive from mild to severe-to-profound in three listeners (C4, C5, C8). The other four listeners had a severe hearing loss at birth or at a very young age (C2, C3, C6, C7). Three listeners (C1, C5, C6) used a hearing aid (HA) in the non-implanted ear. Subject C8 was implanted in both ears at the time of the study, and the ear that received the first implant, which also yielded better speech recognition, was tested in this study. This listener was implanted with a five-channel Ineraid device and used a Geneva processor in the ear that was tested in this experiment. None of the CI listeners had received formal musical training.

Table I.

Detailed demographic information for the CI subjects.

| Subject | Age | Gender | CI Ear | Onset HL* (CI ear) | Etiology (CI ear) | Yrs severe HL prior CI** | CI Processor | Yrs of CI use | Consonant | Vowel |
|---|---|---|---|---|---|---|---|---|---|---|
| C1 | 46 | M | L | mid-20s | Unknown | 5 | Harmony | 1.5 | 41% | DNT |
| C2 | 23 | M | R | Birth | Congenital | 16 | Harmony | 7 | 39% | 64% |
| C3 | 21 | M | L | Birth | Meningitis | 17 | ESPrit 3G | 4 | DNT | 60% |
| C4 | 53 | F | L | Birth | Genetic (progressive) | 30 | Harmony | 4 | 52% | 77% |
| C5 | 57 | M | L | 5 | Premature Birth | 6 | Harmony | 5 | 53% | 79% |
| C6 | 16 | F | R | Birth | Congenital | 3 | Freedom | 1.5 | 81% | 83% |
| C7 | 15 | F | R | Birth | Congenital | 1 | ESPrit 3G | 14 | 92% | 90% |
| C8 | 63 | M | R | 5 | Unknown (progressive) | 3 | Geneva | 23 | 63% | 78% |

\* Age of onset of hearing loss in the cochlear-implant ear.

\*\* Duration of severe-to-profound hearing loss >1000 Hz in the cochlear-implant ear prior to implantation.

DNT = did not test

Phoneme recognition was evaluated for each CI listener as part of our overall assessment routine. Due to time constraints, listener C1 was tested only on consonant recognition and listener C3 was tested only on vowel recognition. Consonant recognition consisted of 16 consonants /p, t, k, b, d, g, f, θ, s, ʃ, v, ð, z, ʒ, m, n/ recorded by three male and three female talkers (Shannon, Jensvold, Padilla, Robert, & Wang, 1999) in the /aCa/ context. Vowel recognition consisted of nine monophthongs /i, ɪ, ɛ, æ, ɝ, ʌ, u, ʊ, ɔ/ in the /hVd/ context recorded from three male and three female talkers in our laboratory. Nine blocks of testing were performed on each listener for each task, yielding a total of 54 trials per stimulus per task per listener, except for listener C1, who was tested with a total of only 42 trials per stimulus per task due to time constraints. In our subject group, consonant and vowel identification scores ranged from 39–92% (mean = 60%) and 60–90% (mean = 76%), respectively (see Table I). This range of performance indicates that our sample represents a typical CI population (see Wilson and Dorman, 2008).

2. Stimuli

The stimuli were the digitally synthesized musical instruments used in McAdams et al. (1995), which were developed by Wessel, Bristow, and Settel (1987). These sounds were synthesized on a Yamaha TX802 FM Tone Generator. To reduce the session duration, only 16 of the original 18 stimuli were selected. These 16 stimuli were bassoon (bsn), English horn (ehn), guitar (gtr), guitarnet (gtn), harpsichord (hcd), French horn (hrn), harp (hrp), obochord (obc), piano (pno), striano (sno), bowed string (stg), trombone (tbn), trumpar (tpr), trumpet (tpt), vibrone (vbn), and vibraphone (vbs). Eleven of the instruments were designed to imitate traditional Western instruments (e.g., piano, guitar) and five were hybrids of two instruments (e.g., vibrone), designed to contain the perceptual characteristics of both instruments (see Table II and descriptions in McAdams et al., 1995). The hybrid instruments were designed by McAdams et al. (1995) to investigate whether an instrument that is physically a mixture of two other instruments appears in the perceptual space somewhere between the two instruments of which it is composed. All stimuli had the same fundamental frequency (F0) of 311 Hz (E-flat 4). The durations of these sounds ranged from 495 ms to 1096 ms. According to McAdams et al. (1995), these physical differences in duration across stimuli were required to produce similar perceptual durations for NH listeners. The stimuli were scaled to have equal maximum level. This specific set of stimuli was selected for the present study because it has been tested extensively with NH listeners. To validate our derived timbre space, it was important to use the same stimuli and methods to facilitate comparisons with previous studies.

Table II.

Names of the musical instruments, 3-letter label for each instrument, and total durations for each of the 16 stimuli.

| Name of the instrument (origins of hybrids in parentheses) | Label | Total duration (ms)* |
|---|---|---|
| Bassoon | bsn | 495 |
| English horn | ehn | 507 |
| Guitar | gtr | 569 |
| Guitarnet (guitar/clarinet) | gtn | 557 |
| Harpsichord | hcd | 521 |
| French horn | hrn | 569 |
| Harp | hrp | 707 |
| Obochord (oboe/harpsichord) | obc | 544 |
| Piano | pno | 1008 |
| Striano (bowed string/piano) | sno | 775 |
| Bowed string | stg | 1071 |
| Trombone | tbn | 563 |
| Trumpar (trumpet/guitar) | tpr | 635 |
| Trumpet | tpt | 520 |
| Vibrone (vibraphone/trombone) | vbn | 1096 |
| Vibraphone | vbs | 770 |
| Mean | | 682 |
| Standard deviation | | 207 |

\* Values taken from McAdams et al. (1995).

3. Procedure

All listeners were tested in a double-walled sound-attenuating booth (8′6″ × 8′6″). Stimuli were presented from a loudspeaker (M-Audio Studiophile BX8a Deluxe) one meter in front of the listener. This loudspeaker has a flat frequency response (±2 dB) from 40 to 22000 Hz. First, each listener adjusted the level of the individual sounds so that all were equally loud at the listener’s most comfortable listening level. During this loudness-balancing procedure, the bassoon stimulus was chosen as the reference sound, and the listeners were asked to adjust the level of this sound to reach their most comfortable listening level. Once the level of this reference sound was determined, the listeners adjusted the level of each of the remaining stimuli to match the loudness of the reference sound. After adjusting each individual stimulus, the listeners played the sounds one at a time and made further adjustments as necessary if any of the stimuli sounded noticeably louder or softer than the rest. Thus, the presentation levels differed across stimuli and across listeners. Cochlear-implant listeners used their own normal CI settings during the entire experiment. Those who wore a hearing aid in the non-implanted ear (C1, C5, C6) were instructed to turn off their HA but leave the ear mold in place in that ear, and the listener who had two CIs (C8) was instructed to turn off the non-test implant. A foam earplug was inserted in the implanted ear(s) or in the non-implanted ear for non-HA users during testing to prevent any potential acoustic stimulation in case residual hearing was preserved.

Prior to the experiment, each listener was instructed that the goal of the study was to estimate the similarity of sound quality between sounds. They were told that each sound used in the experiment was the same musical note and that the loudness should be roughly the same across sounds given that they already adjusted the level of each sound to produce equal loudness prior to the experiment.

For the experiment, listeners were first familiarized with the 16 stimuli by listening to each stimulus one at a time as many times as they wanted. Subsequently, all possible pairs of the 16 stimuli (16 × 16 = 256 pairs) were presented in random order. Subjects were instructed to judge the dissimilarity of the two sounds in each pair by adjusting the sliding bar on a computer screen. The sliding bar was marked with numbers from 1 to 10 in steps of 1, and with labels “most similar” next to the number “1” and “most different” next to the number “10.” Subjects were encouraged to use the whole range of the scale. For each trial, the listener could play the pair as many times as they wanted before making a dissimilarity rating. Each listener was allowed to take a break at any time during the experiment. Before data collection, each listener received a training session that consisted of 20 randomly selected pairs to ensure that they understood the task and to practice making the dissimilarity rating. Each NH listener performed the experiment once and each CI listener performed the experiment twice or three times depending on the availability of the listener. Overall, each pair of stimuli was rated 30 times by the NH group and 42 times by the CI group.
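As an illustration of the trial structure described above, the following minimal Python sketch enumerates the 256 ordered stimulus pairs and checks the reported rating counts. The function name `make_trial_list` and the `seed` parameter are hypothetical and are not taken from the original experiment software. The figure of 21 CI runs is an inference from the reported 42 ratings per pair, assuming that each run contributes both presentation orders of a pair.

```python
import itertools
import random

# Three-letter stimulus labels from Table II.
LABELS = ["bsn", "ehn", "gtr", "gtn", "hcd", "hrn", "hrp", "obc",
          "pno", "sno", "stg", "tbn", "tpr", "tpt", "vbn", "vbs"]


def make_trial_list(labels, seed=None):
    """Return every ordered pair of stimuli (identical pairs included) in random order.

    Hypothetical helper: the original presentation software is not described
    at this level of detail.
    """
    trials = list(itertools.product(labels, repeat=2))
    random.Random(seed).shuffle(trials)
    return trials


trials = make_trial_list(LABELS, seed=0)
n_trials = len(trials)  # 16 x 16 ordered pairs

# Within one run, each pair of different stimuli is heard in both orders
# (e.g., gtn-tpt and tpt-gtn), so one run yields 2 ratings per pair.
ratings_per_pair_nh = 15 * 1 * 2  # 15 NH listeners, one run each
total_ci_runs = 42 // 2           # inferred: 42 CI ratings per pair / 2 orders
```

Under these assumptions, the 30 NH ratings per pair follow directly from 15 listeners completing one run each, and the 42 CI ratings correspond to 21 runs spread over the eight CI listeners.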

4. Acoustical analyses

One of the goals of this study was to investigate the psychophysical nature of the dimensions of the CI timbre space. To achieve this goal, acoustical analyses were performed on the 16 stimuli used in this study, based on the descriptions in Krumhansl (1989), Krimphoff et al. (1994), McAdams et al. (1995), and Peeters, McAdams, & Herrera (2000) for harmonic signals, and on the mathematical formulae (see Appendix) provided by Peeters (2004). These analyses examined the following temporal and spectral characteristics of the stimuli, which have been shown in various reports (Krimphoff et al., 1994; McAdams et al., 1995; Marozeau et al., 2003) to contribute to the perception of timbre for the stimuli employed in this study:

Log-Attack Time: defined as the logarithm of the duration from the time at which the amplitude of the stimulus reaches its threshold (i.e., 10% of its maximum value in our calculation) to the time at which the amplitude reaches its maximum.

Spectral Centroid: defined as the spectral center of gravity, which is calculated as the amplitude-weighted mean of the harmonic peaks averaged over the sound duration.

Spectral Spread: defined as the spread of the spectrum around its mean, which is calculated as the amplitude-weighted standard deviation of the harmonic peaks averaged over the sound duration.

Spectral Flux: defined as the amount of variation of the spectrum over time, which is calculated as the normalized cross-correlation between the amplitude spectra of two successive time frames.

Spectral Irregularity: defined as the deviation of the amplitudes of the harmonic peaks from a global spectral envelope derived from a running mean of the amplitudes of three adjacent harmonics, averaged over the sound duration.
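The definitions above can be sketched in Python for a single analysis frame. This is a minimal illustration under stated assumptions, not the analysis code used in the study: the function names are hypothetical, the logarithm is assumed to be base 10, harmonic amplitudes are assumed to be on a linear scale, and the averaging over the sound duration is omitted.

```python
import math


def log_attack_time(envelope, sample_rate, threshold=0.10):
    """log10 of the time (s) from first reaching threshold*max to reaching the max.

    Base 10 is an assumption; the text only says "the logarithm".
    """
    peak = max(envelope)
    i_peak = envelope.index(peak)
    i_start = next(i for i, a in enumerate(envelope) if a >= threshold * peak)
    attack_s = (i_peak - i_start) / sample_rate
    if attack_s <= 0:
        raise ValueError("attack shorter than one sample; use a finer envelope")
    return math.log10(attack_s)


def spectral_centroid(freqs, amps):
    """Amplitude-weighted mean frequency of the harmonic peaks (one frame)."""
    return sum(f * a for f, a in zip(freqs, amps)) / sum(amps)


def spectral_spread(freqs, amps):
    """Amplitude-weighted standard deviation around the centroid (one frame)."""
    c = spectral_centroid(freqs, amps)
    return math.sqrt(sum(a * (f - c) ** 2 for f, a in zip(freqs, amps)) / sum(amps))


def frame_cross_correlation(prev_amps, cur_amps):
    """Normalized cross-correlation between two successive amplitude spectra."""
    num = sum(p * c for p, c in zip(prev_amps, cur_amps))
    den = math.sqrt(sum(p * p for p in prev_amps)) * math.sqrt(sum(c * c for c in cur_amps))
    return num / den


def spectral_irregularity(amps):
    """Summed deviation of each interior harmonic from its 3-harmonic running mean."""
    return sum(abs(amps[k] - (amps[k - 1] + amps[k] + amps[k + 1]) / 3.0)
               for k in range(1, len(amps) - 1))


# Toy example: four harmonics of the 311-Hz fundamental used in the study.
F0 = 311.0
freqs = [F0 * k for k in range(1, 5)]
amps = [1.0, 0.5, 0.25, 0.25]           # made-up amplitudes, not measured data
centroid = spectral_centroid(freqs, amps)
spread = spectral_spread(freqs, amps)
lat = log_attack_time([0.0, 0.05, 0.2, 0.6, 1.0, 0.8], sample_rate=1000)
```

A full analysis would apply the spectral functions to each time frame and average the results over the sound duration, as the definitions specify.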

In addition, the temporal feature impulsiveness and the spectral features spectral centroid and spectral spread were computed using a perceptually based model described by Marozeau et al. (2003). In this model, “impulsiveness” is calculated as one minus the ratio of the duration during which the smoothed power (instantaneous power smoothed by convolution with a 3.2-ms square window) is above 50% of its maximum value to the duration during which the smoothed power is above 10% of its maximum value. Spectral centroid and spectral spread are defined as above, but the computation takes into account middle-ear filtering and a logarithmic band conversion of the spectrum that mimics the perceptual critical bands. The details of these computations can be found in Marozeau et al. (2003).
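The impulsiveness measure described above can be sketched as follows. This is a simplified illustration, not the implementation of Marozeau et al. (2003): the function names are hypothetical, the square window is applied as a centered moving average with zeros assumed beyond the signal edges, and both thresholds are taken relative to the maximum of the smoothed power.

```python
def smoothed_power(signal, sample_rate, window_s=0.0032):
    """Instantaneous power smoothed with a square (moving-average) window."""
    n = max(1, round(window_s * sample_rate))  # window length in samples
    power = [x * x for x in signal]
    cumulative = [0.0]
    for p in power:
        cumulative.append(cumulative[-1] + p)
    half = n // 2
    out = []
    for i in range(len(power)):
        lo = max(0, i - half)
        hi = min(len(power), i - half + n)
        out.append((cumulative[hi] - cumulative[lo]) / n)
    return out


def impulsiveness(smoothed, hi_frac=0.5, lo_frac=0.1):
    """One minus (time above 50% of max) / (time above 10% of max).

    Sample counts stand in for durations because the sample rate cancels
    in the ratio.
    """
    peak = max(smoothed)
    n_hi = sum(1 for p in smoothed if p > hi_frac * peak)
    n_lo = sum(1 for p in smoothed if p > lo_frac * peak)
    return 1.0 - n_hi / n_lo


# Toy smoothed-power contours (made-up values, not measured data).
sharp_decay = impulsiveness([1.0, 0.4, 0.2, 0.15, 0.05])
sustained = impulsiveness([2.0, 2.0, 2.0])
```

With this definition, a sound whose power stays near its maximum for most of its audible duration scores close to zero, while a sound with a brief peak followed by a long decay scores closer to one.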

While log-attack time and impulsiveness represent the temporal characteristics (the temporal envelope in particular) of the stimuli, the remaining parameters represent the spectral characteristics. The spectral information can be further divided into two categories: spectral envelope and spectral fine structure. The spectral centroid and spectral spread characterize the overall spectral shape (i.e., spectral envelope) of the stimuli averaged over time. The spectral irregularity (spectral fine structure), on the other hand, describes the relationship between individual harmonics and the global spectral envelope. Thus, to use this cue to perceive timbre, listeners need to be able to encode the variations in amplitude between neighboring harmonics. The last acoustic parameter, spectral flux, characterizes the change of the overall spectrum over time and can be classified as a “spectro-temporal” parameter.

III. RESULTS

1. NH timbre space and acoustic correlates

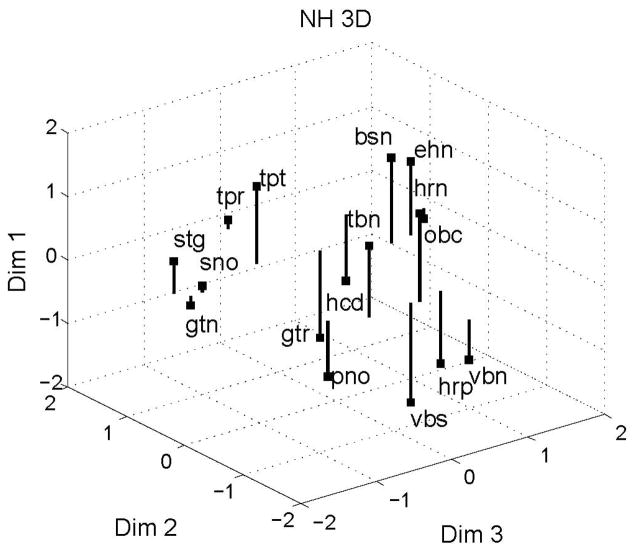

The dissimilarity matrices from the 15 NH listeners were analyzed using a weighted Euclidean model, the Individual Differences MDS (INDSCAL) analysis (Carroll and Chang, 1970), in SPSS version 17.0. In this model, the salience of each resulting dimension in the perceptual map is weighted differently for each listener. The dissimilarity matrices were first examined in different numbers of dimensions for interpretation. Stress, a goodness-of-fit measure for MDS analysis that decreases as the number of dimensions increases, was calculated as a function of the number of dimensions, from two to five. An optimal fit is usually indicated by the presence of a kneepoint in the stress function. Our results show a decrease in stress as the number of dimensions increased, but no clear “kneepoint” was identified. Subsequently, we fit the data with a 3D solution based on previous experiments with the same stimuli (Krumhansl, 1989; McAdams et al., 1995). The 3D timbre perceptual map is shown in Fig. 1. This map displays the relative dissimilarity distances among the 16 stimuli used in the experiment. The overall weight for each dimension was derived from the INDSCAL analysis. Dim 1 received the greatest weight (0.29), followed by Dim 2 (0.12) and Dim 3 (0.10), which received weights of about one-half and one-third of that of Dim 1, respectively. These weights indicate the relative salience of each perceptual dimension for the perception of timbre: the higher the weight, the more salient the perceptual dimension.
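To make the weighted Euclidean model and the notion of stress concrete, the sketch below computes a listener-specific distance between two stimuli and a stress value for a given configuration. It is only an illustration: the function names are hypothetical, and Kruskal's stress-1 computed on raw dissimilarities is used as an assumed stand-in, since the exact stress formula reported by SPSS is not specified in the text.

```python
import math


def weighted_distance(x_i, x_j, weights):
    """Weighted Euclidean distance: sqrt(sum_r w_r * (x_ir - x_jr)^2).

    In the INDSCAL model each listener has their own weight per dimension.
    """
    return math.sqrt(sum(w * (a - b) ** 2 for w, a, b in zip(weights, x_i, x_j)))


def stress1(dissim, coords, weights=None):
    """Kruskal's stress-1 between dissimilarities and configuration distances.

    Assumption: raw dissimilarities are compared directly with distances
    (metric case); this is not necessarily the measure used in the study.
    """
    n = len(coords)
    if weights is None:
        weights = [1.0] * len(coords[0])
    num = 0.0
    den = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            d = weighted_distance(coords[i], coords[j], weights)
            num += (dissim[i][j] - d) ** 2
            den += d ** 2
    return math.sqrt(num / den)


# Toy example: three points on a line reproduce their dissimilarities exactly.
coords = [[0.0], [1.0], [3.0]]
dissim = [[0.0, 1.0, 3.0],
          [1.0, 0.0, 2.0],
          [3.0, 2.0, 0.0]]
perfect_fit = stress1(dissim, coords)

# A listener who weights the first dimension heavily and ignores the second.
d_weighted = weighted_distance([0.0, 0.0], [3.0, 4.0], [4.0, 0.0])
```

Repeating the stress computation for solutions with two to five dimensions gives the stress function in which a kneepoint would be sought.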

Figure 1.

Normal-hearing listeners’ three-dimensional timbre space.

The results of the acoustical analyses for each of the temporal and spectral acoustic parameters described above were correlated with the coordinates of the 16 stimuli on each MDS dimension of the 3D solution provided by the INDSCAL analysis (Table III). This was done to determine the psychophysical nature of each dimension. Because some of the acoustical parameters are significantly correlated with each other in our stimuli (e.g., spectral centroid and spectral spread: r = 0.58, p = 0.02), and because of the number of correlations examined, an α-level of 0.01 was selected for all correlational analyses between perceptual dimensions and acoustic parameters. This value helps to reduce the chance of overinterpreting weak correlations when the selected acoustic parameters merely covary with the true underlying parameters (e.g., see discussion in Caclin et al., 2005).
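The correlational step can be sketched as a plain Pearson correlation between the 16 stimulus coordinates on one dimension and the corresponding values of one acoustic parameter. The helper names below are hypothetical, and the sketch stops at the t statistic; obtaining a p-value additionally requires the t distribution with n − 2 degrees of freedom (for example, from a statistics library), which is not reproduced here.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))


# Toy data (made up): coordinates on one dimension vs. one acoustic parameter.
dim_coords = [-1.2, -0.4, 0.1, 0.6, 0.9]
parameter = [-2.1, -1.5, -1.6, -0.9, -0.7]
r = pearson_r(dim_coords, parameter)
t = t_statistic(r, len(dim_coords))
```

In the study, n is 16 (one value per stimulus), and each correlation would be judged against the α-level of 0.01.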

Table III.

Correlations between acoustic parameters and the coordinates of 16 stimuli for each dimension in the NH 3D perceptual space.

| Acoustic parameters | Dim 1 | Dim 2 | Dim 3 |
|---|---|---|---|
| Log-attack time | 0.79†† | 0.07 | −0.37 |
| Spectral centroid | −0.07 | 0.82†† | 0.32 |
| Spectral spread | −0.64** | 0.34 | −0.15 |
| Spectral flux | −0.15 | −0.13 | 0.12 |
| Spectral irregularity | 0.22 | 0.27 | 0.60** |

Significant correlations are bolded in this table

\*\* p ≤ 0.01,

p ≤ 0.005,

†† p ≤ 0.001

Dim 1 was significantly correlated with log-attack time (temporal envelope cue) (r = 0.79, p ≤ 0.0005); Dim 2 was significantly correlated with spectral centroid (spectral envelope cue) (r = 0.82, p ≤ 0.0001); and Dim 3 was significantly correlated with spectral irregularity (spectral fine structure) (r = 0.60, p ≤ 0.01). Spectral flux was not correlated (p > 0.05) with any of the dimensions. It is worth noting that spectral spread was also significantly correlated with Dim 1 (r = −0.64, p ≤ 0.01), which seems surprising given that Dim 1 was strongly correlated with the temporal characteristics of the stimuli (log-attack time) and that the literature has repeatedly reported that one of the dimensions in a 3D timbre space is a temporal dimension. Careful examination of the stimuli and the acoustic parameters revealed that spectral spread covaried to some extent with the temporal envelope of the stimuli, such as the impulsiveness of the sound (r = −0.55, p ≤ 0.05), and that the impulsiveness feature was highly correlated with Dim 1 (r = 0.83, p ≤ 0.001). The other spectral parameters (spectral centroid, spectral irregularity, and spectral flux) were not correlated with the temporal envelope of the stimuli, and they were not correlated with each other.

Patterns of results remained the same when the temporal and spectral acoustic parameters were computed with the perceptual-based model described by Marozeau et al. (2003). Figure 2 displays the relationships between the physical dimension and the perceptual timbre dimension that best represents the acoustic parameter. The solid line on some of the panels represents a regression line indicating significant correlation between the acoustic parameter and the perceptual dimension.

Figure 2.

Scatter plots relating each acoustic parameter to the MDS dimensions that best represent it for normal-hearing data: (a) log-attack time versus Dim 1; (b) spectral centroid versus Dim 2; (c)–(d) spectral spread versus Dim 1 and Dim 3; (e) spectral variation (spectral flux) versus Dim 3; (f) spectral deviation (spectral irregularity) versus Dim 3. Solid lines represent regression lines. Note that no regression line was plotted in panels (d) and (e) due to the lack of correlation between spectral spread and Dim 3, and between spectral variation and Dim 3, respectively.

2. CI timbre space and acoustic correlates

Multidimensional scaling (MDS) analyses were performed for the CI group data using INDSCAL analysis and for individual listeners using the traditional MDSCAL procedure, implemented in Matlab (R2009a) according to the SMACOF algorithm (Borg and Groenen, 1997). For individual listeners, an average dissimilarity matrix was created by averaging the matrices from different runs (2–3 runs depending on the listener) and by folding the matrix (for example, the pair gtn-tpt was averaged with the pair tpt-gtn), resulting in 4 to 6 estimates per pair of instruments. MDSCAL analysis was then performed on the averaged, folded matrix for each listener.
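The averaging and folding step can be illustrated with a short Python sketch. The function names are hypothetical, the toy ratings are made up, and the original analysis was carried out in Matlab, so this shows only the arithmetic, not the authors' code.

```python
def average_runs(runs):
    """Element-wise mean of several square dissimilarity matrices (one per run)."""
    n = len(runs[0])
    return [[sum(run[i][j] for run in runs) / len(runs) for j in range(n)]
            for i in range(n)]


def fold(matrix):
    """Average each (i, j) entry with its mirror (j, i) to get a symmetric matrix."""
    n = len(matrix)
    return [[(matrix[i][j] + matrix[j][i]) / 2.0 for j in range(n)]
            for i in range(n)]


# Toy example with two stimuli and two runs (made-up ratings on the 1-10 scale).
run1 = [[1, 4],
        [6, 1]]
run2 = [[1, 6],
        [8, 1]]
averaged = average_runs([run1, run2])
folded = fold(averaged)
estimates_per_pair = 2 * 2  # 2 runs x 2 presentation orders
```

With two runs, each off-diagonal entry of the folded matrix is the mean of four ratings; with three runs it is the mean of six, which matches the reported range of 4 to 6 estimates per pair.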

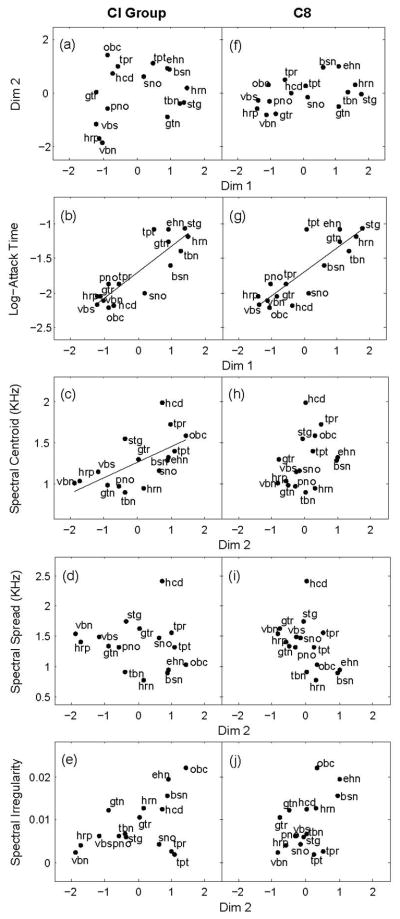

For the CI group data, the INDSCAL analysis showed no clear kneepoint in the stress function relative to the number of dimensions. Thus, a CI timbre space map was first derived using a 3D solution. Similar to the NH data, the overall weight was highest for Dim 1 (0.35), followed by Dim 2 (0.12) and Dim 3 (0.11), suggesting that, like their NH counterparts, CI listeners relied greatly on temporal envelope cues to perceive timbre. Unlike the NH results, in which each of the three MDS dimensions was associated with one or two acoustic features, Dim 2 and Dim 3 in the CI 3D space did not significantly correlate with any of the temporal or spectral parameters under investigation. The temporal envelope feature log-attack time was strongly correlated with Dim 1 (r = 0.88, p ≤ 0.0001) (see Table IV). The spectral envelope and fine structure features, however, did not significantly correlate with any of the perceptual dimensions. These patterns of results differed considerably from those obtained in NH listeners, which suggests that spectral cues are less salient or less reliable for CI listeners than for NH listeners in timbre perception. To further investigate the psychophysical relations, the CI group data were fit with a 2D solution. In this 2D solution, (1) Dim 1 was strongly and significantly correlated with log-attack time (r = 0.88, p ≤ 0.001), and (2) Dim 2 was significantly correlated with spectral centroid (r = 0.62, p ≤ 0.01). The overall weight for Dim 1 (0.40) was considerably higher than that for Dim 2 (0.17), suggesting that perceptual salience was higher for temporal envelope cues than for spectral envelope cues for CI listeners (see Table V for perceptual weights for individual listeners). Note that the correlation between spectral centroid and Dim 2 improved from 0.50 in the 3D solution to 0.62 in the 2D solution, whereas the strength of the correlation between log-attack time and Dim 1 remained unchanged in the 2D solution.
This shows that the fit of the model to the relevant acoustic parameters was enhanced by reducing the number of dimensions to 2D.

Table IV.

Correlations between acoustic parameters and the coordinates of 16 stimuli for each dimension in the CI 3D and 2D timbre space.

Three-dimensional (3D) space

| Acoustic parameters | Dim 1 | Dim 2 | Dim 3 |
|---|---|---|---|
| Log-attack time | 0.88†† | 0.15 | 0.09 |
| Spectral centroid | 0.17 | 0.50 | 0.49 |
| Spectral spread | 0.47 | −0.33 | 0.41 |
| Spectral flux | 0.02 | −0.07 | −0.23 |
| Spectral irregularity | −0.20 | 0.49 | −0.07 |

Two-dimensional (2D) space

| Acoustic parameters | Dim 1 | Dim 2 |
|---|---|---|
| Log-attack time | 0.88†† | −0.18 |
| Spectral centroid | 0.18 | 0.62** |
| Spectral spread | 0.48 | 0.14 |
| Spectral flux | 0.02 | 0.16 |
| Spectral irregularity | −0.19 | −0.47 |

Significant correlations are marked as follows: ** p ≤ 0.01, † p ≤ 0.005, †† p ≤ 0.001.
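The significance markers in Table IV follow from the size of the correlation coefficient and the number of stimuli (16). The sketch below is a minimal illustration, assuming a two-tailed t test on Pearson's r with n − 2 degrees of freedom; the text does not state which test was used, and the function names are illustrative only. The p value is obtained by numerically integrating the Student t density so that the example needs only the standard library.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def t_pdf(t, df):
    """Density of Student's t distribution with df degrees of freedom."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1 + t * t / df) ** (-(df + 1) / 2)

def two_tailed_p(r, n, steps=2000):
    """Two-tailed p value for a correlation r observed on n items."""
    if abs(r) >= 1.0:
        return 0.0
    df = n - 2
    t = abs(r) * math.sqrt(df / (1 - r * r))
    # Simpson's rule for the area under the density between 0 and t
    # (steps must be even).
    h = t / steps
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return max(0.0, 1 - 2 * area)

p_strong = two_tailed_p(0.88, 16)  # log-attack time vs. Dim 1
p_weak = two_tailed_p(0.50, 16)    # spectral centroid vs. Dim 2 (3D solution)
```

Under these assumptions, r = 0.88 with 16 stimuli gives a p value well below 0.001, whereas r = 0.50 does not reach the 0.01 criterion, in line with the markers in the table.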

Table V.

Perceptual weight for each dimension in the 2D solution for each CI listener.

| Subject | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | Overall |
|---|---|---|---|---|---|---|---|---|---|
| Dim 1 | 0.49 | 0.59 | 0.68 | 0.56 | 0.49 | 0.86 | 0.30 | 0.90 | 0.40 |
| Dim 2 | 0.44 | 0.63 | 0.44 | 0.55 | 0.40 | 0.18 | 0.24 | 0.16 | 0.17 |
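The perceptual weights in Table V can be read through the weighted Euclidean distance of the INDSCAL model (Carroll and Chang, 1970), in which each listener stretches or shrinks the shared dimensions by his or her own weights. The sketch below uses the Dim 1 and Dim 2 weights of listeners C6 and C2 from Table V. The two stimulus coordinates are hypothetical, and the sketch assumes that the tabulated weights multiply the squared coordinate differences.

```python
import math

def indscal_distance(x_i, x_j, weights):
    """Listener-specific distance: sqrt(sum_r w_r * (x_ir - x_jr)^2)."""
    return math.sqrt(sum(w * (a - b) ** 2 for w, a, b in zip(weights, x_i, x_j)))

# Hypothetical group-space coordinates of two stimuli that differ only on Dim 2.
stim_a = (0.0, 0.0)
stim_b = (0.0, 1.0)

w_c6 = (0.86, 0.18)  # Table V: large Dim 1 weight, small Dim 2 weight
w_c2 = (0.59, 0.63)  # Table V: comparable weights on both dimensions

d_c6 = indscal_distance(stim_a, stim_b, w_c6)
d_c2 = indscal_distance(stim_a, stim_b, w_c2)
```

For a pair of stimuli that differ only along Dim 2, the modeled distance is smaller for C6 than for C2, which is how a low Dim 2 weight expresses low salience of that dimension for a listener.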

We then performed MDS analysis on individual CI data using the MDSCAL model. Half of the listeners (C2, C4, C6, C8) showed a clear knee point at two dimensions, and the other half (C1, C3, C5, C7) did not. Preliminary analyses using a 3D solution for the four CI listeners who did not exhibit a clear knee point showed a lack of psychophysical correlates for the third dimension, and their patterns of results were similar to the CI group data. We subsequently fit the data with a 2D model for all CI listeners. In the 2D solution, after procrustean rotation toward the overall INDSCAL solution for the CI group data, one of the dimensions was significantly (p ≤ 0.01) correlated with log-attack time for all subjects except C7 (see Table VI for individual data). The other dimension was significantly correlated with the spectral envelope parameters (spectral centroid or spectral spread) in only three listeners (C3: Dim 2 and spectral centroid; C6: Dim 2 and spectral spread; C7: Dim 1 and spectral spread). For listener C7, the significant correlation between Dim 1 and spectral spread, together with the lack of correlation with any temporal parameter (e.g., log-attack time and impulsiveness), suggests that Dim 1 is a spectral dimension for this listener. Dim 2 for listener C6, which was significantly correlated with spectral spread, was also significantly correlated with impulsiveness (r = 0.69, p ≤ 0.005). Given that spectral spread and impulsiveness covary, Dim 2 could also be a temporal dimension for C6. Two listeners (C1, C2) showed a significant correlation between Dim 2 and spectral irregularity. The remaining three listeners (C4, C5, and C8) did not show any significant correlation between Dim 2 and any of the spectral parameters, suggesting that these three listeners relied mainly on temporal envelope cues to perceive timbre differences.
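The procrustean rotation step can be sketched as follows. This is a minimal illustration, not the procedure used in the study, whose details are not given in the text: it assumes 2D configurations, centering of both configurations, rigid rotation with an optional axis reflection, and no rescaling. All function names are hypothetical.

```python
import math

def center(points):
    """Translate a 2D configuration so that its centroid is at the origin."""
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    return [(x - cx, y - cy) for x, y in points]

def best_rotation(source, target):
    """Angle that rotates source onto target in the least-squares sense."""
    num = sum(sx * ty - sy * tx for (sx, sy), (tx, ty) in zip(source, target))
    den = sum(sx * tx + sy * ty for (sx, sy), (tx, ty) in zip(source, target))
    return math.atan2(num, den)

def rotate(points, theta):
    """Rotate every point counterclockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y, s * x + c * y) for x, y in points]

def sq_error(a, b):
    """Sum of squared distances between matching points."""
    return sum((ax - bx) ** 2 + (ay - by) ** 2 for (ax, ay), (bx, by) in zip(a, b))

def procrustes_2d(source, target):
    """Rotate (and, if it fits better, reflect) source toward target."""
    src, tgt = center(source), center(target)
    candidates = []
    for config in (src, [(x, -y) for x, y in src]):
        fitted = rotate(config, best_rotation(config, tgt))
        candidates.append((sq_error(fitted, tgt), fitted))
    return min(candidates, key=lambda c: c[0])[1]
```

Because an individual MDS solution is only defined up to rotation and reflection, aligning it to the group space in this way is what allows Dim 1 and Dim 2 to be compared across listeners.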

Table VI.

Correlations between acoustic parameters and the coordinates of 16 stimuli for each dimension (in a 2D solution) for each CI subject.

| Subject | Dimension | Log-attack time | Spectral centroid | Spectral spread | Spectral flux | Spectral irregularity |
|---|---|---|---|---|---|---|
| C1 | Dim 1 | 0.63** | 0.13 | −0.21 | −0.01 | −0.12 |
| | Dim 2 | 0.47 | 0.31 | −0.39 | −0.35 | 0.63** |
| C2 | Dim 1 | 0.82†† | −0.20 | 0.47 | −0.04 | 0.06 |
| | Dim 2 | 0.14 | 0.55 | −0.22 | −0.04 | 0.66** |
| C3 | Dim 1 | 0.81†† | −0.24 | −0.44 | 0.02 | 0.06 |
| | Dim 2 | 0.20 | 0.74†† | −0.02 | 0.04 | 0.55 |
| C4 | Dim 1 | 0.70† | 0.39 | −0.22 | −0.02 | 0.24 |
| | Dim 2 | 0.47 | 0.38 | −0.40 | −0.06 | 0.55 |
| C5 | Dim 1 | 0.79†† | −0.17 | −0.42 | 0.01 | 0.16 |
| | Dim 2 | 0.33 | 0.54 | 0.23 | −0.31 | 0.05 |
| C6 | Dim 1 | 0.88†† | −0.17 | −0.50 | −0.15 | 0.24 |
| | Dim 2 | 0.45 | −0.49 | −0.66** | −0.09 | 0.12 |
| C7 | Dim 1 | 0.24 | −0.26 | −0.70† | 0.15 | 0.26 |
| | Dim 2 | 0.18 | 0.57 | −0.06 | −0.20 | 0.39 |
| C8 | Dim 1 | 0.86†† | −0.10 | −0.35 | 0.06 | 0.22 |
| | Dim 2 | 0.42 | 0.36 | −0.45 | −0.04 | 0.49 |

Significant correlations are marked as follows: ** p ≤ 0.01, † p ≤ 0.005, †† p ≤ 0.001.

In summary, the CI data were best described by a 2D perceptual model, with one dimension representing the temporal envelope characteristics and the other representing the spectral characteristics of the stimuli. With only one exception (C7), all CI listeners showed a significant correlation between one dimension and log-attack time. Only half of the listeners showed a significant correlation between one dimension and the spectral parameters. It is noted that while the correlation with log-attack time was strong and comparable between NH and CI listeners, the correlation with spectral envelope was considerably lower in the CI group data (r = 0.62) than in the NH data (r = 0.82), suggesting that spectral envelope cues were less reliable or salient in the CI group.

The left panels of Figure 3 show the 2D space for the CI group data (Fig. 3a) and the acoustic correlates of each dimension (Fig. 3b–3e). It is apparent that the correlation between Dim 1 and log-attack time is considerably higher than the correlation between Dim 2 and spectral centroid. The right panels show the 2D space (Fig. 3f) and the acoustic correlates (or lack thereof) for each perceptual dimension (Fig. 3g–3j) for one listener. They illustrate that some CI listeners (e.g., C8 in the right panels of Fig. 3) relied primarily on temporal envelope cues to perceive differences in timbre, while others (e.g., the CI group data in the left panels of Fig. 3) used both temporal and spectral envelope cues. It is clear from this figure that the perceptual distance between stimuli along Dim 2 was very small for listener C8 compared with the NH and CI group spaces.

Figure 3.

Cochlear implant (CI) two-dimensional timbre space (upper panels) and acoustic correlates for Dim 1 and Dim 2 (lower panels). Left panels show the CI group data and right panels show results from listener C8.

III. DISCUSSION

1. NH timbre space and acoustic correlates

Our results show that NH listeners used temporal envelope, spectral envelope, and spectral fine structure cues to perceive differences in musical timbre, consistent with previous findings (Krimphoff et al., 1994; Caclin et al., 2005). The third dimension, however, did not correlate with the acoustic parameter spectral flux, as had been reported by McAdams et al. (1995). It should be noted that although spectral flux was significantly correlated with Dim 3 in McAdams et al. (1995), the correlation was weak (r = 0.54). Our NH Dim 3 was instead significantly correlated with spectral irregularity, consistent with results reported by Krimphoff et al. (1994). This difference between our results and those of McAdams et al. could be due to a number of factors, including: (1) the use of a loudspeaker in this study instead of headphones; (2) the number of stimuli used (16 stimuli in this study as opposed to 18 stimuli in McAdams et al.); and (3) differences in analysis models (INDSCAL in this study and CLASCAL in McAdams et al.). Indeed, the lack of correlation between Dim 3 and spectral flux was also found more recently by McAdams and colleagues (Caclin et al., 2005). Caclin et al. (2005) found that a 2D MDS solution provided a better fit to their dissimilarity data and that spectral flux was not correlated with any of the perceptual dimensions when attack time, spectral centroid, and spectral flux were the varying parameters in the stimulus set. They concluded that either their model of spectral flux is not perceptually relevant or spectral flux is a less salient parameter than the other two (log-attack time and spectral centroid).

2. Temporal and spectral cues for timbre perception in CI listeners

Unlike the NH results, the CI results were best represented in a 2D timbre space. The reduced number of dimensions for CI listeners could be interpreted in two ways: (1) the relative significance of temporal and spectral cues to timbre perception differs between NH and CI listeners; (2) CI listeners may not have detected smaller perceptual differences between instruments, particularly in the spectral domain. In general, one of the perceptual dimensions in the CI 2D space can be regarded as a temporal dimension, because it was highly correlated with the temporal envelope characteristics of the stimuli. The other dimension can be considered a spectral dimension, because it was correlated with the spectral envelope characteristics of the stimuli. However, the weak or absent correlation between spectral centroid or spectral irregularity and the second dimension in the CI group data suggests that spectral cues were less reliable for timbre perception in CI listeners than in their NH counterparts. Indeed, the patterns of results in the CI group data are supported by the findings from individual CI listeners, where only half of the CI listeners showed a significant correlation between a perceptual dimension and spectral envelope or spectral fine structure parameters. The other half of the listeners relied mainly on temporal envelope cues to perceive differences in musical timbre.

The contribution of temporal and spectral envelope cues to musical timbre perception has also been reported previously (Gfeller et al., 1998; 2002b; 2002c; McDermott, 2004; Pressnitzer et al., 2005). McDermott (2004) showed a confusion matrix from a group of CI listeners tested on a musical instrument identification task. The 16 instruments used in the study were categorized into two groups: non-percussive single instruments and percussive single instruments. More confusions were made among instruments within the same category (i.e., percussive or non-percussive) than between categories. Based on these patterns of confusions, it was concluded that temporal envelope cues are salient cues for musical timbre perception. Pressnitzer et al. (2005) created bandpass-filtered harmonic complexes that varied in attack time and spectral centroid independently to obtain just-noticeable difference (JND) measures from a group of CI listeners. They reported that performance was good for both attack-time and spectral centroid discrimination tasks, suggesting that temporal and spectral envelope cues contributed to timbre perception in CI listeners. Unlike Pressnitzer et al.’s study, we derived a timbre space and investigated the relative salience of cues for timbre perception in CI listeners. The fact that CI listeners were able to discriminate differences in spectral centroid, as reported in Pressnitzer et al. (2005), does not necessarily imply that spectral centroid is a reliable or salient cue for timbre perception in CI listeners, especially when other cues (e.g., temporal envelope cues) are also available in the stimulus set. Although our findings are consistent with previous reports on musical instrument identification tasks, it is unclear whether the patterns of results in this study generalize to stimuli that have higher F0s. At higher F0s, the spacing between harmonics is larger, so the harmonics are encoded in CI channels that are farther apart than for stimuli with lower F0s. This would affect the reliability and salience of spectral cues for timbre and pitch perception in CI listeners. Singh, Kong, and Zeng (2009) found that melody recognition improved when the set of stimuli was in a higher frequency range compared with lower frequency ranges. In addition, Gfeller et al. (2002b) reported significant differences in the perception of timbre at different frequency ranges.

Unlike pitch perception, for which temporal and spectral envelope cues elicit only non-salient pitch, temporal and spectral envelope cues are robust for musical timbre perception (McAdams et al., 1995). This difference could explain the relatively good performance (40–60%) of CI listeners in musical instrument identification compared with their close-to-chance performance in melody recognition when the frequency difference between notes was a few semitones (see McDermott, 2004; Kong et al., 2004), the latter being due to mis- or under-representation of temporal and/or spectral fine structure cues. The reduced ability of CI listeners to utilize fine structure cues could be attributed to both biological and device-related factors, including electrode-to-frequency mismatch, reduced neural survival resulting from a long duration of deafness, and the removal of the fine structure of the acoustic signal in current envelope-based speech processing strategies.

3. Timbre and speech perception in electric hearing

Because temporal and spectral envelopes contribute considerably to both speech perception (Shannon, Zeng, Kamath, Wygonski, & Ekelid, 1995) and timbre perception, the relationship in performance between these two measures warrants some discussion. In particular, in quiet, consonant recognition relies heavily on temporal envelope cues and vowel recognition requires a coarse spectral representation. The relationship between speech recognition and timbre perception has been investigated previously. Kang et al. (2009) found a significant correlation between CNC word recognition (both in quiet and in noise) and musical instrument identification scores in a large group of CI listeners (n = 42). Gfeller et al. (1998), on the other hand, showed a lack of association between musical instrument identification scores and speech recognition scores in a group of 28 CI listeners.

In this study, consonant and vowel recognition scores (see results in Table I) were obtained from each CI listener. Correlational analyses were performed between phoneme recognition scores and the correlation values of log-attack time with Dim 1 (temporal dimension), and between phoneme recognition scores and the correlation values of spectral centroid with Dim 2 (spectral dimension). Similar to the findings reported by Gfeller et al. (1998), we did not find any significant correlation (p > 0.05) between phoneme (consonant and vowel) recognition and timbre perception on the temporal or spectral dimension. That is, listeners who achieved a high level of speech recognition (>80%) were not necessarily able to use temporal (e.g., C7) or spectral (e.g., C6) envelope cues to perceive timbre differences. In addition, the lack of correlation between timbre and speech perception in our study is consistent with the absence of a relationship between rhythmic pattern recognition, melody recognition, and speech recognition reported previously (e.g., Kong et al., 2004). The difference between the findings of Kang et al. (2009), which showed a significant correlation between instrument identification and speech recognition, and the findings of this study could be attributed to (1) the small sample size in our data and (2) differences in stimuli (both speech and musical) and tasks (subjective distance vs. identification).

IV. CONCLUSIONS

The present study was designed to investigate timbre perception ability in CI listeners and to derive a musical timbre space from CI listeners. This space was examined for correlations with known acoustic parameters that contribute to musical timbre perception as reported in the literature. Results showed that CI listeners were less able than their NH counterparts to use spectral cues to distinguish differences in musical timbre. In addition, no reliable relationship was found between phoneme recognition and the salience of temporal and spectral cues for timbre perception in our CI listeners.

Although we have demonstrated that CI listeners can use temporal and spectral envelope cues for timbre perception, it is still unclear how their ability to perceive differences in musical timbre affects their perception of the “pleasantness,” “naturalness,” “richness,” or other perceptual descriptors of sounds. In order to bridge between the present work and these perceptual characteristics, future studies could examine the relationship between timbre space and subjective ratings or appraisal of sound quality.

Acknowledgments

We are grateful to all listeners for their participation in the experiment. We would like to thank Prof. Stephen McAdams for providing the stimuli. We also thank two anonymous reviewers and the Associate Editor Prof. Chris Turner for their helpful comments and suggestions. This work was supported by NIH-NIDCD (R03 DC009684-02) to YYK and Northeastern University Provost Faculty Development Research Grant to YYK and ME.

Appendix

Mathematical formulae used to calculate the five acoustic temporal and spectral parameters for our stimuli:

- Log-Attack Time (LAT): [equation not reproduced]

- Harmonic Spectral Centroid (HSC): [equation not reproduced]

- Harmonic Spectral Spread (HSS): [equation not reproduced]

- Harmonic Spectral Flux (HSF): [equation not reproduced]

- Harmonic Spectral Irregularity (HSI): [equation not reproduced]

In these formulae, A is the amplitude of the kth harmonic peak in the tth frame, f is the frequency (Hz) of the kth harmonic, K is the total number of harmonic peaks, and T is the total number of frames.
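As an illustration of how two of these parameters are commonly computed, the sketch below follows the widely used MPEG-7 style definitions: log-attack time as the base-10 logarithm of the attack duration, and harmonic spectral centroid as the amplitude-weighted mean harmonic frequency averaged over frames. These are assumptions and may differ in detail from the formulae used in this study. In particular, the 10% and 90% envelope thresholds that delimit the attack, and all function and argument names, are hypothetical choices made for this example.

```python
import math

def log_attack_time(envelope, sample_rate, start_frac=0.1, end_frac=0.9):
    """log10 of the time (s) the envelope takes to rise between two thresholds.

    envelope    -- amplitude envelope samples (non-negative numbers)
    sample_rate -- envelope sampling rate in Hz
    start_frac, end_frac -- assumed threshold fractions of the peak value
    """
    peak = max(envelope)
    start = next(i for i, v in enumerate(envelope) if v >= start_frac * peak)
    end = next(i for i, v in enumerate(envelope) if v >= end_frac * peak)
    # Guard against a zero-length attack, for which the logarithm is undefined.
    return math.log10(max(end - start, 1) / sample_rate)

def harmonic_spectral_centroid(amps, freqs):
    """Mean over frames of the amplitude-weighted mean harmonic frequency.

    amps  -- amps[t][k]: amplitude of the kth harmonic peak in the tth frame
    freqs -- freqs[k]: frequency (Hz) of the kth harmonic
    """
    per_frame = []
    for frame in amps:
        total = sum(frame)
        if total > 0:  # skip silent frames
            per_frame.append(sum(f * a for f, a in zip(freqs, frame)) / total)
    return sum(per_frame) / len(per_frame)

# Toy input: three harmonics of 100 Hz over two frames.
hsc = harmonic_spectral_centroid([[1.0, 1.0, 1.0], [1.0, 0.0, 0.0]],
                                 [100.0, 200.0, 300.0])
```

In the toy input, the first frame has a centroid of 200 Hz and the second a centroid of 100 Hz, so the frame average is 150 Hz.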

Footnotes

Publisher's Disclaimer: This is an author-produced manuscript that has been peer reviewed and accepted for publication in the Journal of Speech, Language, and Hearing Research (JSLHR). As the “Papers in Press” version of the manuscript, it has not yet undergone copyediting, proofreading, or other quality controls associated with final published articles. As the publisher and copyright holder, the American Speech-Language-Hearing Association (ASHA) disclaims any liability resulting from use of inaccurate or misleading data or information contained herein. Further, the authors have disclosed that permission has been obtained for use of any copyrighted material and that, if applicable, conflicts of interest have been noted in the manuscript.

References

- ANSI. American National Standard – Psychoacoustical Terminology S3.20. New York: American National Standards Institute; 1973.

- Borg I, Groenen PJF. Modern Multidimensional Scaling: Theory and Applications. New York: Springer; 1997.

- Caclin A, McAdams S, Smith BK, Winsberg S. Acoustic correlates of timbre space dimensions: a confirmatory study using synthetic tones. Journal of the Acoustical Society of America. 2005;118:471–482. doi: 10.1121/1.1929229.

- Carroll JD, Chang JJ. Analysis of individual differences in multidimensional scaling via an n-way generalization of Eckart-Young decomposition. Psychometrika. 1970;35:283–319.

- Dorman M, Basham K, McCandless G, Dove H. Speech understanding and music appreciation with the Ineraid cochlear implant. The Hearing Journal. 1991;44:32–37.

- Galvin J, Fu QJ, Nogaki G. Melodic contour identification by cochlear implant listeners. Ear and Hearing. 2007;28:302–319. doi: 10.1097/01.aud.0000261689.35445.20.

- Gfeller K, Lansing CR. Melodic, rhythmic, and timbral perception of adult cochlear implant users. Journal of Speech and Hearing Research. 1991;34:916–920. doi: 10.1044/jshr.3404.916.

- Gfeller K, Christ A, Knutson JF, Witt S, Murray KT, Tyler RS. Musical backgrounds, listening habits, and aesthetic enjoyment of adult cochlear implant recipients. Journal of American Academy of Audiology. 2000;11:390–406.

- Gfeller K, Knutson JF, Woodworth G, Witt S, DeBus B. Timbral recognition and appraisal by adult cochlear implant users and normal-hearing adults. Journal of American Academy of Audiology. 1998;9:1–19.

- Gfeller K, Turner C, Mehr M, Woodworth G, Fearn R, Knutson JF, Witt S, Stordahl J. Recognition of familiar melodies by adult cochlear implant recipients and normal-hearing adults. Cochlear Implants International. 2002a;3:29–53. doi: 10.1179/cim.2002.3.1.29.

- Gfeller K, Witt S, Woodworth G, Mehr M, Knutson J. Effects of frequency, instrumental family, and cochlear implant type on timbre recognition and appraisal. Annals of Otology, Rhinology and Laryngology. 2002b;111:349–356. doi: 10.1177/000348940211100412.

- Gfeller K, Witt S, Adamek M, Mehr M, Rogers J, Stordahl J, Ringgenberg S. Effects of training on timbre recognition and appraisal by postlingually deafened cochlear implant recipients. Journal of American Academy of Audiology. 2002c;13:132–145.

- Kang R, Nimmons GL, Drennan W, Longnion J, Ruffin C, Nie K, Won JH, Worman T, Yueh B, Rubinstein J. Development and validation of the University of Washington Clinical Assessment of Music Perception test. Ear and Hearing. 2009;30:411–418. doi: 10.1097/AUD.0b013e3181a61bc0.

- Kong YY, Cruz R, Jones JA, Zeng FG. Music perception with temporal cues in acoustic and electric hearing. Ear and Hearing. 2004;25:173–185. doi: 10.1097/01.aud.0000120365.97792.2f.

- Krimphoff J, McAdams S, Winsberg S. Caracterisation du timbre des sons complexes. II: Analyses acoustiques et quantification psychophysique. Journal de Physique. 1994;4:625–628.

- Krumhansl CL. Why is musical timbre so hard to understand? In: Nielsen S, Olsson O, editors. Structure and Perception of Electroacoustic Sound and Music. Amsterdam: Elsevier; 1989. pp. 43–53.

- Kruskal JB. Multidimensional scaling by optimizing goodness of fit to a nonmetric hypothesis. Psychometrika. 1964;29:115–129.

- Leal MC, Shin YJ, Laborde M, Calmels MN, Verges S, Lugardon S, Andrieu S, Deguine O, Fraysse B. Music perception in adult cochlear implant recipients. Acta Otolaryngologica. 2003;123:826–835. doi: 10.1080/00016480310000386.

- Looi V, McDermott H, McKay C, Hickson L. Comparisons of quality ratings for music by cochlear implant and hearing aid users. Ear and Hearing. 2007;28:59S–61S. doi: 10.1097/AUD.0b013e31803150cb.

- Marozeau J, de Cheveigne A. The effect of fundamental frequency on the brightness dimension of timbre. Journal of the Acoustical Society of America. 2007;121:383–387. doi: 10.1121/1.2384910.

- Marozeau J, de Cheveigne A, McAdams S, Winsberg S. The dependency of timbre on fundamental frequency. Journal of the Acoustical Society of America. 2003;114:2946–2957. doi: 10.1121/1.1618239.

- McAdams S, Winsberg S, Donnadieu S, De Soete G, Krimphoff J. Perceptual scaling of synthesized musical timbres: common dimensions, specificities, and latent subject classes. Psychological Research. 1995;58:177–192. doi: 10.1007/BF00419633.

- McDermott HJ. Music perception with cochlear implants: a review. Trends in Amplification. 2004;8:49–82. doi: 10.1177/108471380400800203.

- Nimmons GL, Kang RS, Drennan WR, Longnion J, Ruffin C, Worman T, Yueh B, Rubinstein J. Clinical assessment of music perception in cochlear implant listeners. Otology and Neurotology. 2008;29:149–155. doi: 10.1097/mao.0b013e31812f7244.

- Peeters G. A large set of audio features for sound description (similarity and classification) in the CUIDADO project. CUIDADO I.S.T. Project Report; 2004.

- Peeters G, McAdams S, Herrera P. Instrument sound description in the context of MPEG-7. Proceedings of International Conference in Computer Music; 2000. pp. 166–169.

- Pressnitzer D, Bestel J, Fraysse B. Music to electric ears: pitch and timbre perception by cochlear implant patients. Annals of the New York Academy of Sciences. 2005;1060:343–345. doi: 10.1196/annals.1360.050.

- Shannon RV, Zeng FG, Kamath V, Wygonski J, Ekelid M. Speech recognition with primarily temporal cues. Science. 1995;270:303–304. doi: 10.1126/science.270.5234.303.

- Shannon RV, Jensvold A, Padilla M, Robert ME, Wang X. Consonant recordings for speech testing. Journal of the Acoustical Society of America. 1999;106:L71–74. doi: 10.1121/1.428150.

- Schulz E, Kerber M. Music perception with the Med-El implants. In: Hochmair-Desoyer IJ, Hochmair ES, editors. Advances in Cochlear Implants. Vienna: Manz; 1994. pp. 326–332.

- Singh S, Kong YY, Zeng FG. Cochlear implant melody recognition as a function of melody frequency range, harmonicity, and number of electrodes. Ear and Hearing. 2009;30:160–168. doi: 10.1097/AUD.0b013e31819342b9.

- Won JH, Drennan WR, Kang RS, Rubinstein JT. Psychoacoustic abilities associated with music perception in cochlear implant users. Ear and Hearing. 2010. doi: 10.1097/AUD.0b013e3181e8b7bd. [Epub ahead of print]

- Wessel DL, Bristow D, Settel Z. Control of phrasing and articulation in synthesis. Proceedings of the International Computer Music Conference; 1987. pp. 108–116.

- Wilson BS, Dorman MF. Cochlear implants: A remarkable past and a brilliant future. Hearing Research. 2008;242:3–21. doi: 10.1016/j.heares.2008.06.005.