Abstract

The present, subjective value of a reinforcer typically decreases as a function of the delay to its receipt, a phenomenon termed delay discounting. Delay discounting, which is assumed to reflect impulsivity, is hypothesized to play an important role in drug abuse. The present study examined delay discounting of cocaine injections by rhesus monkeys. Subjects were studied on a discrete-trials task in which they chose between 2 doses of cocaine: a smaller, immediate dose and a larger, delayed dose. The immediate dose varied between 0.012 and 0.4 mg/kg/injection, whereas the delayed dose was always 0.2 mg/kg/injection and was delivered after a delay that varied between 0 and 300 s in different conditions. At each delay, the point at which a monkey chose the immediate and delayed doses equally often (i.e., the ED50) provided a measure of the present, subjective value of the delayed dose. Dose–response functions for the immediate dose shifted to the left as delay increased. The amount of the immediate dose predicted to be equal in subjective value to the delayed dose decreased as a function of the delay, and hyperbolic discounting functions provided good fits to the data (median R² = .86). The current approach may provide the basis for an animal model of the effect of delay on the subjective value of drugs of abuse.

Keywords: delay discounting, cocaine, impulsivity, choice, monkeys

Much of behavior, with drug abuse being a particularly relevant example, may be usefully conceptualized as involving a choice among available alternatives. The effects of delay on choice have been widely studied, and a number of quantitative models have been proposed that describe how the present, or subjective, value of a reinforcer changes as a function of the delay to its receipt (e.g., Ainslie, 1974; Mazur, 1987; Myerson & Green, 1995; Rachlin, 2006; Rachlin & Green, 1972). The model that has the most currency at the present time is based on the hyperboloid discounting function (for a recent review, see Green & Myerson, 2004):

$$V = \frac{A}{(1 + kD)^{s}} \tag{1}$$

where V represents the present, subjective value of a reinforcer of a specified amount (A), which is to be delivered after a delay (D). The parameter k reflects the degree to which individuals discount the value of the delayed reinforcer (a larger k indicates steeper discounting), and the parameter s reflects the nonlinearity with which individuals scale amount and time. Note that when s = 1.0, Equation 1 reduces to a simple hyperbola. A major strength of discounting models such as Equation 1 is that they represent precise, quantitative descriptions of the relationship between delay and reinforcer value (Kagel, Battalio, & Green, 1995).
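As a minimal sketch, the discounting function can be written out in Python. This assumes Equation 1 has the standard hyperboloid form V = A / (1 + kD)^s, which is what the parameter definitions in the text describe. The function name and the parameter values used in the loop are illustrative only and are not estimates for any subject.

```python
def hyperboloid_value(amount, delay, k, s=1.0):
    """Present value V = A / (1 + k*D)**s; s = 1.0 gives the simple hyperbola."""
    if delay < 0 or k < 0:
        raise ValueError("delay and k must be non-negative")
    return amount / (1.0 + k * delay) ** s


# Illustrative values only: a 0.2 mg/kg/injection reinforcer and an assumed k.
A = 0.2
for D in (0, 30, 120, 300):
    print(D, round(hyperboloid_value(A, D, k=0.01), 4))
```

With s fixed at 1.0 the value falls from A at zero delay toward zero as the delay grows. For any positive delay, an exponent below 1.0 gives a larger value than the simple hyperbola with the same k.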

The hyperboloid discounting model accurately describes discounting by human and nonhuman subjects for a variety of reinforcers (Green & Myerson, 2004), and it has assumed an increasingly prominent role in the study of drug abuse (for a recent review, see Reynolds, 2006). Many studies have shown that substance abusers discount delayed reinforcers at a greater rate than nonabusing control subjects (for a review, see Bickel & Marsch, 2001). This finding is frequently interpreted as indicating greater impulsivity on the part of substance abusers, which would help account for their choosing drug self-administration, a source of relatively immediate reinforcement, over more delayed, nondrug reinforcers.

Much of the existing data on the discounting of delayed drug reinforcers comes from studies that examined hypothetical choices between a drug and a nondrug reinforcer in human subjects. However, the extent to which the results of such studies may be applicable to actual drugs as reinforcers is unknown. A few studies have examined the effects of delay on behavior maintained by drug injections in nonhuman primates (e.g., Beardsley & Balster, 1993; Stretch, Gerber, & Lane, 1976). Studies of the effects of delay on the choice between different doses of cocaine (Anderson & Woolverton, 2003; Woolverton & Anderson, 2006) and between cocaine and food (Woolverton & Anderson, 2006) have demonstrated that choice between cocaine and another reinforcer, whether drug or nondrug, can be systematically manipulated by varying the delay between response and drug injection. However, there is no previous nonhuman primate study that has examined the form of the discounting function when drug injection is the delayed reinforcer.

Accordingly, the present study was designed to examine the delay discounting of cocaine injections. Rhesus monkeys were implanted with iv catheters and allowed to choose between an immediate and a delayed injection of cocaine. The dose of the delayed injection was fixed at 0.2 mg/kg but the delay varied across conditions. The dose of the immediate injection was varied across conditions to establish the dose–response relationship at each delay, and the point at which a monkey would be predicted to choose the immediate and delayed doses equally often (i.e., the ED50) provided a measure of the present, subjective value of the delayed dose. Discounting functions then were fit to these indifference points to assess how well the hyperboloid model (Equation 1) describes the discounting of drugs in primates.

Materials and Methods

Animals and Apparatus

Seven male rhesus monkeys (Macaca mulatta) weighing between 8.2 and 11.2 kg served as subjects. All had a history of drug self-administration under choice conditions. Monkeys AV40, M439, and AV27 previously had chosen between cocaine and food with and without delays under conditions similar to those in the present study (Woolverton & Anderson, 2006). The other monkeys previously had chosen between drug injections and food under concurrent variable-ratio schedules (in an unpublished study by Woolverton). Shortly after the end of each session, each monkey was fed a sufficient amount of monkey chow (Teklad 25% Monkey Diet; Harlan/Teklad, Madison, Wisconsin) to maintain stable body weight at about 95% of its original weight. Each monkey also was given fresh fruit and a chewable vitamin tablet daily. All monkeys were weighed approximately every 2 months, and original body weight was maintained (±5%) over the course of the experiment by adjusting feeding. Water was available continuously.

Each monkey was fitted with a stainless steel restraint harness and tether (Lomir Biomedical, Malone, New York) that was attached to the rear of a 1.0-m³ experimental cubicle (Plas-Labs, Lansing, Michigan) in which the monkey lived for the duration of the experiment. Exhaust fans mounted on the back of each cubicle provided ventilation and masking noise. Two response levers (PRL-001; BRS/LVE, Beltsville, Maryland) were mounted in steel boxes on the inside of the transparent front of each experimental cubicle, 10 cm above the floor and 47 cm apart. Four jeweled stimulus lights, two white and two red, were mounted directly above each lever. Drug injections were delivered by peristaltic injection pumps (7540X; Cole-Parmer Company, Chicago, Illinois) located outside the cubicle. Programming and recording of experimental events were accomplished with a Macintosh computer and associated interfaces located in an adjacent room.

For two monkeys (AGA and AV27), only two dose–response functions could be obtained before all veins that could be catheterized were used. As a consequence, discounting functions could not be fit to their data, and the results for these subjects are not reported.

Procedure

Each monkey had a double lumen iv catheter implanted according to the following protocol. The monkey was injected with a combination of atropine sulfate (0.04 mg/kg im) and ketamine hydrochloride (10 mg/kg im) followed 20–30 min later by inhaled isoflurane. When anesthesia was adequate, the catheter was surgically implanted into a major vein with the tip terminating near the right atrium. The distal end of the catheter was passed subcutaneously to the mid-scapular region, where it exited the subject’s back. After surgery, the monkey was returned to the experimental cubicle. The catheter was threaded through the spring arm and out the back of the cubicle and was connected to the injection pump. An antibiotic (Kefzol; Eli Lilly & Company, Indianapolis, Indiana) was administered (22.2 mg/kg im) twice daily for 7 days postsurgery to prevent infection. If a catheter became nonfunctional during the experiment, it was removed, and the monkey was removed from the experiment for a 1–2 week period to allow any infection to clear. After health was verified, a new catheter was implanted as before. The catheter was filled between sessions with a solution of at least 40 units/ml heparin to prevent clotting at the catheter tip.

Experimental sessions began at 11:00 a.m. each day, 7 days per week, and were signaled by illumination of the white lever lights. Each session consisted of two 10-trial blocks in which a single response on either lever initiated a cocaine injection. Two forced-choice (sampling) trials were presented at the start of each block and were followed by eight free-choice trials. During the forced-choice trials, only one lever was active, signaled by the illumination of the corresponding set of white lever lights. Forced-choice trials in each block consisted of one exposure to the consequences associated with the left lever and one exposure to the consequences associated with the right lever. This ensured that the subject had exposure to the contingencies programmed for each lever before being allowed to choose between them. Responding on the inactive lever had no programmed consequence.

During free-choice trials, both levers were active and both sets of white lever lights were illuminated. After a lever press, all lights were extinguished, and both the delay until reinforcement and a 10-min interval to the beginning of the next trial began timing. In this way, reinforcement rate did not vary with the delay until reinforcement. At the end of the delay, a reinforcer was presented. The red lights above the lever whose press resulted in reinforcer presentation were illuminated during the 10-s cocaine injection.
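The timing arrangement can be sketched as follows. The sketch assumes that the delay timer and the 10-min intertrial timer start together at the lever press and run concurrently, as the description suggests. The function name and the overrun check are hypothetical additions for illustration.

```python
INTERTRIAL_S = 600  # 10-min interval to the next trial, timed from the lever press
INJECTION_S = 10    # duration of the cocaine injection


def trial_timeline(press_time_s, delay_s):
    """Return (injection_start, injection_end, next_trial_start) in seconds."""
    if delay_s + INJECTION_S > INTERTRIAL_S:
        # Assumed safeguard: the injection must finish before the next trial.
        raise ValueError("delay plus injection would overrun the intertrial interval")
    injection_start = press_time_s + delay_s
    return injection_start, injection_start + INJECTION_S, press_time_s + INTERTRIAL_S


# The next trial starts at the same time whether the delay is 0 s or 300 s.
print(trial_timeline(0, 0))
print(trial_timeline(0, 300))
```

Because the next trial is scheduled from the lever press rather than from the injection, choosing the delayed option does not lengthen the session or lower the overall rate of reinforcement.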

The options on free-choice trials were a 0.2 mg/kg/injection of cocaine, injected after a delay, and another dose of cocaine injected immediately. Delays to the 0.2 mg/kg/injection ranged between 0 (no delay) and 300 s. The immediate dose of cocaine ranged between 0.012 and 0.4 mg/kg/injection. A given set of conditions was in effect until choice was stable. Two stability criteria had to be met: The number of choices of the immediate dose on free-choice trials was within ±2 of the running three-session mean for three consecutive sessions, and there was no upward or downward trend over the past three sessions. Stability usually was obtained within 4–10 sessions. Once stability was achieved, the outcomes associated with the two levers were reversed, and stable preference was redetermined. Conditions were studied in an irregular order both within and across monkeys.
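One possible reading of the two stability criteria is sketched below. It assumes that the mean is taken over the three most recent sessions, that each of those sessions must fall within ±2 choices of that mean, and that a "trend" means strictly increasing or strictly decreasing counts across the three sessions. The text does not spell out these details, so the function is an illustration rather than the procedure actually used.

```python
def is_stable(immediate_choices, window=3, tolerance=2):
    """Check stability from per-session counts of immediate-dose choices."""
    if len(immediate_choices) < window:
        return False
    last = immediate_choices[-window:]
    mean = sum(last) / window
    # Criterion 1 (assumed form): every recent session within +/- tolerance of the mean.
    within = all(abs(x - mean) <= tolerance for x in last)
    # Criterion 2 (assumed form): counts are not strictly rising or strictly falling.
    rising = all(b > a for a, b in zip(last, last[1:]))
    falling = all(b < a for a, b in zip(last, last[1:]))
    return within and not (rising or falling)


# Hypothetical session counts out of 16 free-choice trials (two blocks of eight).
print(is_stable([3, 9, 12, 13, 12]))
print(is_stable([10, 11, 12]))
```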

Data Analysis

For a given pair of lever-reversal conditions, the mean number of choices of the immediate dose from the last three sessions of each condition (i.e., six sessions of stable responding) was used as the measure of preference. Once a dose–response function (preference as a function of immediate dose) for the immediate injection was determined at a given delay, indifference points (50% choice for each alternative) were calculated on the basis of fits of a logistic dose–response function to the data (GraphPad Software, 2004). Hyperboloid discounting functions (Equation 1 with A = 0.2 mg/kg/injection) were then fit to the indifference points for each monkey.
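The indifference-point step can be illustrated with a small, dependency-free sketch. The fits in the study were done in GraphPad Prism; the code below is a stand-in that assumes a two-parameter logistic in log dose (0% to 100% choice) and finds the least-squares ED50 and slope by grid search. The function names, the grid ranges, the intermediate doses, and the percentages are all hypothetical.

```python
import math


def logistic_percent(dose, ed50, slope):
    """Percent choice of the immediate dose; equals 50 when dose == ed50."""
    return 100.0 / (1.0 + (ed50 / dose) ** slope)


def fit_ed50(doses, percents, slopes=None, n_grid=400):
    """Least-squares grid search for (ED50, slope) within the tested dose range."""
    lo, hi = math.log10(min(doses)), math.log10(max(doses))
    slopes = slopes or [0.5 * i for i in range(1, 41)]  # candidate slopes 0.5 to 20
    best = None
    for i in range(n_grid + 1):
        ed50 = 10 ** (lo + (hi - lo) * i / n_grid)
        for h in slopes:
            sse = sum((p - logistic_percent(d, ed50, h)) ** 2
                      for d, p in zip(doses, percents))
            if best is None or sse < best[0]:
                best = (sse, ed50, h)
    return best[1], best[2]


# Hypothetical dose-response data (mg/kg/injection, % immediate choice).
doses = [0.012, 0.025, 0.05, 0.1, 0.2, 0.4]
percents = [0, 6, 31, 75, 100, 100]
ed50, slope = fit_ed50(doses, percents)
print(f"ED50 = {ed50:.3f} mg/kg/injection, slope = {slope}")
```

A grid search is used here only because it needs no external libraries; a nonlinear least-squares routine would normally be preferred for real data.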

Drugs

Cocaine HCl (National Institute on Drug Abuse, Rockville, Maryland) was dissolved in 0.9% saline. Injection volumes were approximately 1.5 ml per injection. Doses are expressed as the salt.

Results

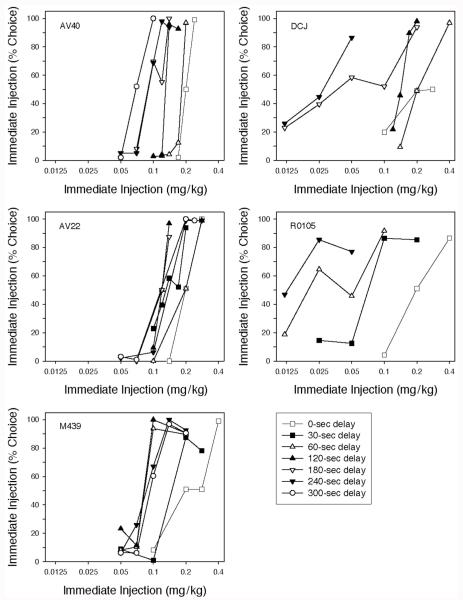

All of the monkeys completed all of the trials in every session over the course of the experiment. As may be seen in Figure 1, choice of the immediate injection increased as a function of dose in all monkeys in every delay condition. In the condition where choice of either lever produced an immediate 0.2 mg/kg/injection (represented by the open squares in Figure 1), monkeys typically exhibited an exclusive preference for one lever that continued after reversal of the lever–injection pairing (i.e., a lever or position bias). Thus, 50% choice levels under these conditions were the result of averaging 0% and 100% immediate dose choices. Lever or position biases also were observed at immediate drug doses intermediate between those resulting in (near) exclusive preference for the delayed injection and (near) exclusive preference for the immediate injection (e.g., see the data for AV40 in the 300-s delayed injection shown in Figure 1A).

Figure 1.

Percentage choice of an immediate injection of cocaine. The dose of the immediate injection is indicated on the horizontal axis; the dose of the alternative was always 0.2 mg/kg/injection, delivered after the delay indicated by the symbol (see legend). Each point is the mean of six sessions, three in which responses on the left lever produced the immediate injection and three in which it was produced by responses on the right lever.

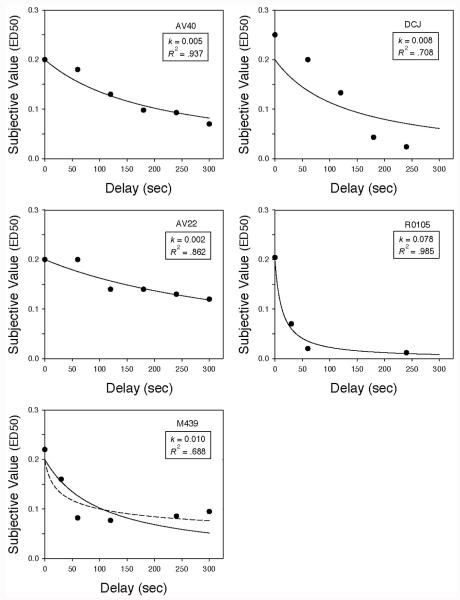

The dose–response relationship for cocaine shifted systematically to the left as the delay to the 0.2 mg/kg/injection was increased, indicating that the present value of a delayed drug reinforcer decreased as the delay to its receipt increased. Figure 2 replots the ED50 values estimated from the data in Figure 1 (i.e., the immediate dose judged equal in value to the delayed 0.2 mg/kg/injection) as a function of delay. As may be seen, a simple hyperbolic discounting function (Equation 1 with s = 1.0), represented by the solid curves, provided good fits to the data (median R² = .862).

Figure 2.

Delay discounting functions. Subjective value was calculated as the immediate dose at which a monkey would be predicted to choose the immediate and delayed injections equally often (i.e., the ED50 in mg/kg/injection). ED50 values were calculated from the dose–response relationships shown in Figure 1. The solid curves represent hyperbolic discounting functions (i.e., Equation 1 with s = 1.0), and the corresponding estimates of the k parameter and proportions of variance accounted for are shown. For M439, the dashed curve represents Equation 1 with s as a free parameter (see text).
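The curve-fitting step behind such discounting functions can be sketched as follows. The sketch assumes the simple hyperbola V = A / (1 + kD) with A fixed at 0.2 mg/kg/injection, a least-squares grid search over k, and R² computed as 1 − SSE/SST. The function names, the intermediate delays, and the indifference points are hypothetical and are not data from the study.

```python
def sse(delays, values, k, amount):
    """Sum of squared errors between observed values and A / (1 + k*D)."""
    return sum((v - amount / (1.0 + k * d)) ** 2 for d, v in zip(delays, values))


def fit_k(delays, values, amount=0.2, n_grid=5000):
    """Least-squares k on a log-spaced grid from 1e-5 to 1 (per second)."""
    candidates = (10 ** (-5 + 5 * i / n_grid) for i in range(n_grid + 1))
    return min(candidates, key=lambda k: sse(delays, values, k, amount))


def r_squared(delays, values, k, amount=0.2):
    """Proportion of variance accounted for, taken here as 1 - SSE/SST."""
    mean = sum(values) / len(values)
    ss_tot = sum((v - mean) ** 2 for v in values)
    return 1.0 - sse(delays, values, k, amount) / ss_tot


# Hypothetical indifference points (delay in s, ED50 in mg/kg/injection).
delays = [0, 30, 60, 120, 300]
ed50s = [0.20, 0.16, 0.13, 0.10, 0.06]
k_hat = fit_k(delays, ed50s)
print(f"k = {k_hat:.4f}, R^2 = {r_squared(delays, ed50s, k_hat):.3f}")
```

Fitting the hyperboloid with s free would add a second search dimension over the exponent; the one-parameter case is shown to keep the sketch short.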

Estimates of the discounting rate parameter (k) ranged between 0.002 and 0.078, with a median of 0.008. For one monkey (M439), the fit was substantially improved by allowing the exponent (s) to be a free parameter (see the dashed curve in the lower left panel of Figure 2). The estimated value of s (0.259) for this subject was significantly less than 1.0, t(4) = 3.70, p < .05, as is frequently observed in human discounting data (Green & Myerson, 2004). When s was a free parameter, the R² for this subject increased to .827 from only .688 when s was fixed at 1.0.
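One way to read these k values is through the delay at which a reinforcer loses half its value. For the simple hyperbola (s = 1.0), V = A/2 exactly when D = 1/k. The sketch below assumes that k is expressed per second, because delays in this study are reported in seconds. The helper name is hypothetical.

```python
def half_value_delay(k):
    """Delay at which A / (1 + k*D) equals A / 2, i.e. D = 1 / k (s = 1.0 only)."""
    if k <= 0:
        raise ValueError("k must be positive")
    return 1.0 / k


for label, k in (("smallest k", 0.002), ("median k", 0.008), ("largest k", 0.078)):
    print(f"{label}: k = {k}, value halves at about {half_value_delay(k):.0f} s")
```

Under these assumptions the reported range corresponds to half-value delays of roughly 500 s, 125 s, and 13 s for the smallest, median, and largest k. The relation does not apply to fits in which s is a free parameter.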

Discussion

Consistent with previous reports (Anderson & Woolverton, 2003; Johanson & Schuster, 1975), the frequency with which immediate cocaine was chosen increased with the dose. Also as reported previously (Anderson & Woolverton, 2003; Woolverton & Anderson, 2006), the introduction of a delay between lever press and injection decreased the frequency with which the delayed dose was chosen. Increasing the delay shifted the dose–response function for choice of the immediate dose to the left, consistent with the hypothesis that the present value of a delayed drug reinforcer decreases as the delay to its receipt increases. These effects were observed in all 5 monkeys.

The important new finding in the present study is that the relationship between the subjective value of a cocaine injection and the delay until its receipt was well described by a hyperbolic discounting function (median R² = .86). Values of R² for individual subjects were comparable with those reported previously for rats and pigeons when food and water were used as reinforcers (Green, Myerson, Holt, Slevin, & Estle, 2004; Mazur, 2000; Richards, Mitchell, de Wit, & Seiden, 1997). Thus, when the alternative to a delayed dose of cocaine is another cocaine injection, a simple hyperbolic discounting function provides an account of choice between immediate and delayed reinforcers that is as accurate as when nondrug reinforcers maintain behavior. In addition, the present results are similar to findings with humans given hypothetical choices involving drug reinforcers (e.g., Bickel, Odum, & Madden, 1999; Madden, Begotka, Raiff, & Kastern, 2003; Petry, 2003) as well as findings involving hypothetical monetary rewards (for a recent review, see Green & Myerson, 2004).

The discounting rates for the delayed 0.2 mg/kg/injection cocaine, as indexed by the k parameter in Equation 1, were for the most part lower than the rates found in experiments with rats and pigeons discounting nondrug reinforcers. In the present study, 4 of the 5 monkeys studied had k values that were less than 0.010 (the median k for the group was 0.008). Comparison of these discounting rates with those observed in previous research with nonhumans, in which k values have typically been greater than 0.10, suggests that monkeys discount the value of a relatively high dose of cocaine at a relatively low rate, at least when the alternative is a lower dose of cocaine. It is unclear, however, whether this is because we are comparing drugs to nondrug reinforcers or because we are comparing monkeys to nonprimates (Green & Estle, 2003; Tobin, Logue, Chelonis, Ackerman, & May, 1996). Nevertheless, the present results at least raise the possibility that drug choice is not impulsive in this situation. Said another way, when a high dose of cocaine, available after a delay, was the alternative to an immediate low dose, the monkeys in the present study showed significant self-control in obtaining the higher dose. This conclusion is consistent with the proposition of Heyman (1996) that drug taking can be a well organized and planned behavior. It will be of considerable interest to determine whether low discounting rates also are observed in monkeys when the choice is between a drug and a nondrug reinforcer.

The monkeys studied in the present experiment showed substantial individual variability in their k values. The reasons for these individual differences are, at this point, unclear. Studies with human subjects have reported that drug abusers show higher k values than controls (e.g., Bickel & Marsch, 2001; Bretteville-Jensen, 1999; Kirby & Petry, 2004; Vuchinich & Simpson, 1998). It is not known, however, whether the higher discounting rates of substance abusers are a cause or an effect of drug abuse, and this issue is difficult to address in human studies. With this in mind, we examined the history of the monkey who showed by far the largest k value (and thus the steepest discounting) of any of the subjects in this study. This monkey, R0105, turned out also to have had relatively little experience with cocaine self-administration prior to the beginning of the present study (193 days), compared with some of the other subjects. However, the monkey with the briefest drug self-administration history (DCJ, 112 days) and the monkey with the longest history (AV40, 783 days) both showed much lower k values (and much shallower discounting) than R0105. Thus, the data provide little support for the idea that a simple history of administering a particular drug (e.g., cocaine) leads to an increase in the rate at which the drug is discounted. Alternatively, individual differences in k may be pre-existing. Animal research that examines the source of individual differences in discounting rate (by systematically varying prior drug history, for example) provides an excellent approach to addressing this important issue.

Animal models permit the experimental analysis of the discounting of actual drug reinforcers. Such models make it possible to study the behavioral and pharmacological conditions that determine the rate at which the value of drugs is discounted. For example, it will be of interest to study the effect of the magnitude (dose) of the delayed reinforcer on discounting. In humans, larger delayed reinforcers are discounted less steeply than smaller delayed reinforcers (e.g., Kirby, 1997; Raineri & Rachlin, 1993; Thaler, 1981), and such magnitude effects have been reported with nonmonetary as well as monetary rewards (Estle, Green, Myerson, & Holt, 2007). In contrast, magnitude effects have not been observed with nonhuman subjects (Green et al., 2004; Richards et al., 1997), but monkeys have not yet been studied in this regard. If monkeys, like humans, discount smaller amounts of nondrug reinforcers more steeply than larger amounts, then it would be of interest to know whether drug choice is more impulsive when smaller amounts (doses) are involved.

Another interesting question is whether discounting rate varies across drugs in a manner that predicts their relative liability for abuse. For example, are drugs that are discounted more steeply when injections are delayed more or less liable to be abused than drugs that are discounted less steeply? Alternatively, the abuse liability of a drug might be predictable on the basis of choice between an immediate drug injection and a delayed alternative reinforcer (either another drug or a nondrug reinforcer, such as food). That is, drugs with greater abuse liability may lead to steeper discounting of delayed alternative reinforcers. Clearly, a nonhuman primate model that involves both drug self-administration and the discounting of real delayed reinforcers—rather than the discounting of hypothetical outcomes, as is the case in most human discounting studies—would be extremely useful in the investigation of these and related issues. Although it took more than a year to establish the individual discounting functions in the present study, research in our own and other laboratories suggests that it may be possible to greatly reduce the amount of time required (Negus, 2003; Paronis, Gasior, & Bergman, 2002), thereby facilitating further research on discounting and drugs of abuse.

Acknowledgments

We gratefully acknowledge the technical assistance of Emily Mathis, Theresa Vasterling, and Amanda Ledbetter in conducting this experiment. This research was supported by National Institutes of Health Grant DA08731 to William L. Woolverton. Preparation of the article also was supported in part by National Institutes of Health Grant MH55308 to Leonard Green and Joel Myerson.

References

- Ainslie GW. Impulse control in pigeons. Journal of the Experimental Analysis of Behavior. 1974;21:485–489. doi: 10.1901/jeab.1974.21-485.

- Anderson KG, Woolverton WL. Effects of dose and infusion delay on cocaine self-administration choice in rhesus monkeys. Psychopharmacology. 2003;167:424–430. doi: 10.1007/s00213-003-1435-9.

- Beardsley PM, Balster RL. The effects of delay of reinforcement and dose on the self-administration of cocaine and procaine in rhesus monkeys. Drug and Alcohol Dependence. 1993;34:37–43. doi: 10.1016/0376-8716(93)90044-q.

- Bickel WK, Marsch LA. Toward a behavioral economic understanding of drug dependence: Delay discounting processes. Addiction. 2001;96:73–86. doi: 10.1046/j.1360-0443.2001.961736.x.

- Bickel WK, Odum AL, Madden GJ. Impulsivity and cigarette smoking: Delay discounting in current, never, and ex-smokers. Psychopharmacology. 1999;146:447–454. doi: 10.1007/pl00005490.

- Bretteville-Jensen AL. Addiction and discounting. Journal of Health Economics. 1999;18:393–407. doi: 10.1016/s0167-6296(98)00057-5.

- Estle SJ, Green L, Myerson J, Holt DD. Discounting of monetary and directly consumable rewards. Psychological Science. 2007;18:58–63. doi: 10.1111/j.1467-9280.2007.01849.x.

- GraphPad Prism [Computer software] GraphPad Software; San Diego, CA: 2004.

- Green L, Estle SJ. Preference reversals with food and water reinforcers in rats. Journal of the Experimental Analysis of Behavior. 2003;79:233–242. doi: 10.1901/jeab.2003.79-233.

- Green L, Myerson J. A discounting framework for choice with delayed and probabilistic rewards. Psychological Bulletin. 2004;130:769–792. doi: 10.1037/0033-2909.130.5.769.

- Green L, Myerson J, Holt DD, Slevin JR, Estle SJ. Discounting of delayed food rewards in pigeons and rats: Is there a magnitude effect? Journal of the Experimental Analysis of Behavior. 2004;81:39–50. doi: 10.1901/jeab.2004.81-39.

- Heyman GM. Resolving the contradictions of addiction. Behavioral and Brain Sciences. 1996;19:561.

- Johanson CE, Schuster CR. A choice procedure for drug reinforcers: Cocaine and methylphenidate in the rhesus monkey. Journal of Pharmacology and Experimental Therapeutics. 1975;193:676–688.

- Kagel JH, Battalio RC, Green L. Economic choice theory: An experimental analysis of animal behavior. Cambridge University Press; New York: 1995.

- Kirby KN. Bidding on the future: Evidence against normative discounting of delayed rewards. Journal of Experimental Psychology: General. 1997;126:54–70.

- Kirby KN, Petry NM. Heroin and cocaine abusers have higher discount rates for delayed rewards than non-drug-using controls. Addiction. 2004;99:461–471. doi: 10.1111/j.1360-0443.2003.00669.x.

- Madden GJ, Begotka AM, Raiff BR, Kastern LL. Delay discounting of real and hypothetical rewards. Experimental and Clinical Psychopharmacology. 2003;11:139–145. doi: 10.1037/1064-1297.11.2.139.

- Mazur JE. An adjusting procedure for studying delayed reinforcement. In: Commons ML, Mazur JE, Nevin JA, Rachlin H, editors. Quantitative analyses of behavior: Vol. 5. The effect of delay and of intervening events on reinforcement value. Erlbaum; Hillsdale, NJ: 1987. pp. 55–73.

- Mazur JE. Tradeoffs among delay, rate, and amount of reinforcement. Behavioral Processes. 2000;49:1–10. doi: 10.1016/s0376-6357(00)00070-x.

- Myerson J, Green L. Discounting of delayed rewards: Models of individual choice. Journal of the Experimental Analysis of Behavior. 1995;64:263–276. doi: 10.1901/jeab.1995.64-263.

- Negus SS. Rapid assessment of choice between cocaine and food in rhesus monkeys: Effects of environmental manipulations and treatment with d-amphetamine and flupenthixol. Neuropsychopharmacology. 2003;28:919–931. doi: 10.1038/sj.npp.1300096.

- Paronis CA, Gasior M, Bergman J. Effects of cocaine under concurrent fixed-ratio schedules of food and IV drug availability: A novel choice procedure in monkeys. Psychopharmacology. 2002;163:283–291. doi: 10.1007/s00213-002-1180-5.

- Petry NM. Discounting of money, health, and freedom in substance abusers and controls. Drug and Alcohol Dependence. 2003;71:133–141. doi: 10.1016/s0376-8716(03)00090-5.

- Rachlin H. Notes on discounting. Journal of the Experimental Analysis of Behavior. 2006;85:425–435. doi: 10.1901/jeab.2006.85-05.

- Rachlin H, Green L. Commitment, choice and self-control. Journal of the Experimental Analysis of Behavior. 1972;17:15–22. doi: 10.1901/jeab.1972.17-15.

- Raineri A, Rachlin H. The effect of temporal constraints on the value of money and other commodities. Journal of Behavioral Decision Making. 1993;6:77–94.

- Reynolds B. A review of delay-discounting research with humans: Relations to drug use and gambling. Behavioral Pharmacology. 2006;17:651–667. doi: 10.1097/FBP.0b013e3280115f99.

- Richards JB, Mitchell SH, de Wit H, Seiden LS. Determination of discount functions in rats with an adjusting-amount procedure. Journal of the Experimental Analysis of Behavior. 1997;67:353–366. doi: 10.1901/jeab.1997.67-353.

- Stretch R, Gerber GJ, Lane E. Cocaine self-injection behaviour under schedules of delayed reinforcement in monkeys. Canadian Journal of Physiology and Pharmacology. 1976;54:632–638. doi: 10.1139/y76-089.

- Thaler R. Some empirical evidence on dynamic inconsistency. Economics Letters. 1981;8:201–207.

- Tobin H, Logue AW, Chelonis JJ, Ackerman KT, May JG., III Self-control across species (Columba livia, Homo sapiens, and Rattus norvegicus) Journal of Comparative Psychology. 1996;108:126–133. doi: 10.1037/0735-7036.108.2.126.

- Vuchinich RE, Simpson CA. Hyperbolic temporal discounting in social drinkers and problem drinkers. Experimental and Clinical Psychopharmacology. 1998;6:292–305. doi: 10.1037//1064-1297.6.3.292.

- Woolverton WL, Anderson KG. Effects of delay to reinforcement on the choice between cocaine and food in rhesus monkeys. Psychopharmacology. 2006;186:99–106. doi: 10.1007/s00213-006-0355-x.