Abstract

A survey was distributed, using a sequential mixed-mode approach, to a national sample of obstetrician-gynecologists. Respondents to the web-based mode were compared with respondents to the paper mode to determine whether there were systematic differences between them. Only two differences between respondents to the two modes were identified. University-based physicians were more likely than private practice physicians to complete the web-based mode. Mail respondents reported a greater volume of endometrial ablations than online respondents. The web-based mode yielded better data quality than the mailed paper mode, with fewer missing and inappropriate responses. Together, these findings suggest that, although a few differences were identified, the web-based survey mode attained adequate representativeness and improved data quality. Given the metrics examined for this study, exclusive use of web-based data collection may be appropriate for physician surveys with a minimal reduction in sample coverage and without a reduction in data quality.

Keywords: survey, online, physician, distribution mode, web-based, internet, questionnaire

Introduction

The internet is becoming a prime location for physician communication; in 2002, 92.2% of physicians reported having email accounts and 85% reported checking email several times a week or daily (Bennett et al., 2004). The internet is also becoming a significant resource for information about physicians, and web-based surveys are increasingly becoming a mainstream alternative to mail-based surveys. Advantages of web-based surveys are ease of implementation, rapid attainment of responses, and elimination of the cost of stationery (Wyatt, 2000). Additionally, many of the available web-based survey development tools facilitate data entry and cleaning and allow control over question order and skip patterns, leading to potentially improved data quality.

Given the importance of physician surveys to determine knowledge and practice patterns, nearly universal access of physicians to the internet, and the potential advantages of web-based surveys, continued assessment of web-based survey distribution could facilitate survey research about physicians. High response rates are a key component of survey research. While some web-based studies have noted exceptional response rates among physicians (Potts & Wyatt, 2002), others have noted poor response rates, which can jeopardize validity and generalizability of the data obtained (VanGeest, Johnson, & Welch, 2001; Braithwaite, Emery, deLusignan, & Sutton, 2003; Kim et al., 2000; Hallowell, Patel, Bales, & Gerber, 2000).

To evaluate these issues, we conducted this cross-sectional study [as part of a larger study to evaluate physician practice patterns and knowledge pertaining to abnormal uterine bleeding (AUB)] to assess the feasibility of a web-based approach to surveying a large national sample of obstetrician-gynecologists. We surveyed members of The American College of Obstetricians and Gynecologists (ACOG) because this society represents 90% of practicing obstetrician-gynecologists in the United States. As part of the larger project, we chose a sequential mixed-mode approach (using web-based and postal mail modes) because ACOG fellows were accustomed to receiving surveys by postal mail and we thought this would optimize our response rate in this particular population. For this study, we compared respondent characteristics and responses between the web-based and paper versions of the survey. Respondents who completed the mail survey served as “proxies” for individuals who would have been “non-respondents” if only the web-based version had been offered. We hypothesized, based on previous studies, that there would be differences in physician characteristics between participants who completed the web-based survey and those who completed the survey by mail.

Methods

Sample

We conducted a cross-sectional survey of members of the ACOG from October 2008 through May 2009. The study was approved by the Women and Infants Hospital Institutional Review Board (IRB # 08-0093).

Questionnaires were sent to 802 ACOG members. Six hundred two recipients were members of the Collaborative Ambulatory Research Network (CARN), a group of practicing obstetrician-gynecologists who volunteer to participate in survey research (Hill, Erickson, Holzman, Power, & Schulkin, 2001). The other 200 recipients were randomly selected ACOG members who had not received a survey from ACOG in the previous 2 years. The sample pool of 602 potential participants from CARN and 200 potential participants from ACOG members was chosen by the Department of Research at ACOG based on their previous experience with physician surveys within their organization. The random selection of potential participants was not influenced by available email or mailing addresses. ACOG surveys typically achieve a 30–50% response rate. With at least 120 online responses and 90 mail responses, we had the ability to detect at least a 20% difference in characteristics between modes with alpha 0.05 and power of 80%.
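The detectable difference stated above can be checked with a rough normal-approximation power calculation. The sketch below is an illustration only: the source does not state which method or baseline proportions were used, so the 50% versus 70% proportions and the function name `two_proportion_power` are assumptions.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_power(p1, p2, n1, n2, alpha=0.05):
    """Approximate power of a two-sided z-test comparing two proportions.

    Uses the unpooled normal approximation and ignores the negligible
    probability of rejecting in the wrong direction.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se = sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return NormalDist().cdf(abs(p1 - p2) / se - z_crit)


# Assumed proportions (not from the paper): 50% online vs. 70% mail.
# Group sizes are the minimums quoted in the text.
power = two_proportion_power(0.50, 0.70, n1=120, n2=90)
print(f"approximate power: {power:.2f}")
```

Under these assumed proportions the approximate power comes out above the 80% target, which is consistent with the statement in the text; other baseline proportions would give somewhat different values.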

Survey

We developed a questionnaire on AUB to be distributed to ACOG members. With this questionnaire, we aimed to evaluate physicians’ knowledge about AUB, their practice patterns for treating AUB, and the content and characteristics that physicians would require of an instrument for incorporation into routine screening and evaluation of women with AUB. Several drafts of the questionnaire were developed and subsequently revised and the final questionnaire was reviewed by experts in heavy menstrual bleeding and survey methodology.

We distributed the surveys using a sequential mixed-mode approach. All potential participants with email addresses received the web-based version, and then all potential participants without an email address or who did not respond to the web-based version received the mail-based version. We chose this approach because it has been described as a way to reduce non-response error, especially among web-based surveys (Shaefer & Dillman, 1998; De Leeuw, 2005). Although physicians should have high internet access rates, ACOG members are accustomed to receiving mail-based surveys and not all members provided ACOG with an email address. Employing a mixed-mode approach specifically allowed us to investigate whether responses differed between physicians completing the web-based mode and physicians choosing to complete the mail rather than web-based mode.

For the web-based version of the survey, we used DatStat Illume (DatStat Illume is a trademark of DatStat, Incorporated, Seattle, Washington) and designed the web-based survey using standards suggested by Crawford et al. (Crawford, McCabe, & Pope, 2005). DatStat Illume is a sophisticated computer software package with excellent data security (using Secure Sockets Layer and providing public key encryption and server authentication) and capacity to track participant responses in a password protected database on the web. The software also allows for complex skip patterns, allowing respondents to complete the survey in a time-efficient manner. The paper survey was developed as a Microsoft Word document. The content of the survey was the same for the web and paper-based surveys and included multiple-choice questions about the physician and his/her practice, practice patterns for the evaluation and treatment of heavy menstrual bleeding, and inquiries about the concepts that should be included in a comprehensive heavy menstrual bleeding questionnaire. Strict attention was paid to recommended web-based survey design and paper-based survey formatting. Both the web-based and the paper-based survey were reviewed by experts and critiqued by colleagues of the Principal Investigator.

Initially, we conducted a pilot survey with 25 physicians at Women & Infants Hospital (Providence, RI). Participants received an e-mail invitation including a hyperlink to the web-based questionnaire. Three e-mail reminders, one reminder per week, were sent out following the e-mail invitation. The questionnaire and protocol for participant contact were revised based on feedback from the pilot participants.

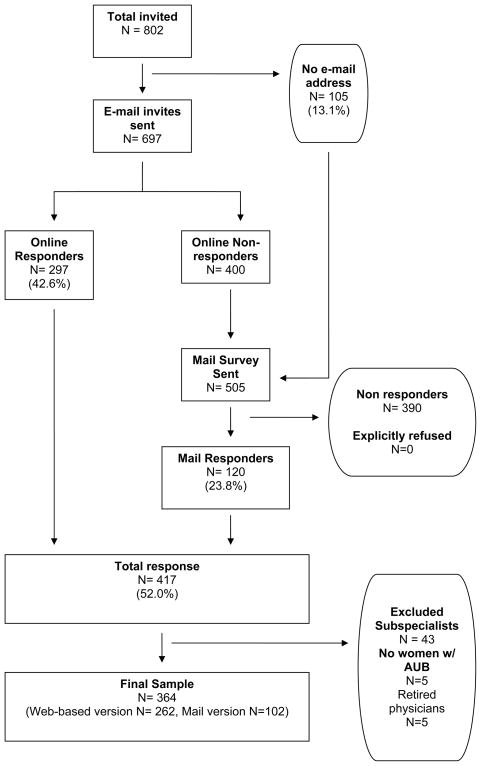

We followed principles of data collection recommended by Dillman to maximize response rate (Shaefer & Dillman, 1998). First, pre-notification letters about the survey were mailed (n=802; see Figure 1). Ten days after the pre-notification letter, all study participants with a valid email address were sent an email invitation to participate in the web-based survey, which included an embedded link to a page that explained informed consent and included an embedded link to the secure and confidential website for survey completion. The first e-mail reminder was sent to non-responders approximately one week after the e-mail invitation. Three e-mail reminders followed and were sent in five-day increments. For non-responders to the web-based survey as well as physicians without an available e-mail address, a paper-based version of the survey was sent by mail six weeks after the pre-notification letters, which was ten days after the last email reminder was sent.

Figure 1.

Participant flow diagram

The informed consent page of the web survey included information about the study, including the risks and benefits. Participants were asked to click a “button” at the end of the page if they understood the study and consented to participate. For the paper survey, a letter containing information on informed consent was attached to the survey. The end of the letter addressed informed consent with the following statement: “By reading this letter, completing the paper survey, and submitting the paper survey, you are consenting to participate in this study.”

Data Analyses

Web-based responses were automatically entered into the DatStat Illume Web database and surveys returned by mail were manually entered by a research assistant into the same DatStat software program that was used by study participants. The research assistant recorded “events” where mail respondents either skipped or answered a question inappropriately or checked off more responses than were indicated. The DatStat interface was not configured in a way that allowed double data entry. To verify the data entered by the research assistant, the Principal Investigator reviewed every mail survey and the associated entered and recorded data to verify that the data were accurate. All discrepancies were confirmed by a third reviewer.

For the substantive component of the research project, we were interested in obtaining information about how physicians care for women with AUB. Because of this, we excluded subspecialists (Maternal Fetal Medicine, Gynecologic Oncology, and Urogynecology), who were unlikely to provide care to a substantial number of women with this problem, and physicians who responded that they did not provide care for women with heavy or irregular menstrual bleeding. However, general obstetrician-gynecologists who reported that they also did urogynecology remained in the sample.

We compared respondents who used the web-based version (online respondents) with those who used the mailed version (mail respondents) of the survey. Respondents who completed the mail survey served as “proxies” for individuals who would have been “non-respondents” if only the web-based version had been offered. We compared these two groups of respondents in terms of demographics, volume of patients with AUB, and volume of AUB-related surgery. Additionally, for analyses about mode of data collection, we randomly selected one representative measure from each of the following sections of the survey: typical discussions with patients with AUB, usage of questionnaires in clinical practice, and knowledge about treatment of AUB. We chose only one item from each section to avoid replicating the full analyses being performed for our main study on physician knowledge and practice patterns. These comparisons allowed us to determine whether there were systematic differences between online and mail responders and whether these differences could be controlled for in analyses. Additional analyses were performed to compare the “quality” of the data obtained from respondents to the web-based and the mail versions. To do this, we calculated the number of “unusable answers” for each survey returned and compared the mean number of “unusable answers” between the web-based and mail modes. “Unusable answers” included questions inappropriately left unanswered and questions inappropriately answered (questions that should have been skipped as part of a skip pattern, and questions for which the respondent checked off more or fewer responses than requested). Categorical variables were compared using Fisher’s exact tests and two-sided p-values were calculated, with p<0.05 considered statistically significant.

Odds ratios and adjusted odds ratios were calculated, using logistic regression, to determine the “odds of completing the survey by mail”. These odds ratio calculations were made for several physician characteristics and practice pattern variables (listed above) and were adjusted for potential confounders including type of practice, years since completing residency, type of subspecialty, and number of patients seen with heavy or irregular menstrual bleeding. These factors were controlled for individually and then entered into a full model. Data were analyzed using SAS 9.1 (Cary, NC).

Results

Of the 802 physicians in the study sample, 697 (87%) had email addresses on file and were sent the web-based version of the survey (Figure 1). In the initial phase of distribution of the survey by email, only 1% (N=1 of 100) of emails “bounced back” as undeliverable. Throughout all phases of both email and mail survey distribution, no physicians who were contacted for participation (either by email or mail) returned a response that they refused to participate. Five physicians returned blank surveys with a note that they were “retired”. Two hundred ninety-seven physicians completed the web-based version (42.6%). The physicians who did not respond to the web-based survey request and the physicians who had no email address on file received mail versions of the survey (n=505), and 120 physicians responded (23.8%). In total, 417 questionnaires (either web-based or mail) were returned, resulting in a response rate of 52.0%. Because the intent of the survey was to examine obstetrician-gynecologists’ knowledge and practice patterns relating to heavy or irregular menstrual bleeding, we excluded subspecialists, physicians who responded that they did not provide care for women with heavy or irregular menstrual bleeding, and physicians who returned the mail survey with a note that they were “retired” (n=53). A total of 364 surveys from obstetrician-gynecologists remained in our sample.
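The response-rate arithmetic in this paragraph can be retraced with a few lines of code. This is a minimal sketch that uses only the counts reported in the text; the variable names and the helper `pct` are illustrative choices, not part of the study's analysis code.

```python
# Counts reported in the Results text
sample = 802            # physicians in the study sample
emailed = 697           # had an email address on file
web_completed = 297     # completed the web-based version
mailed = 505            # received the mail version
mail_completed = 120    # completed the mail version
excluded = 53           # subspecialists, non-providers, and retired physicians


def pct(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


total_returned = web_completed + mail_completed
rates = {
    "email coverage": pct(emailed, sample),
    "web response": pct(web_completed, emailed),
    "mail response": pct(mail_completed, mailed),
    "overall response": pct(total_returned, sample),
}
analytic_sample = total_returned - excluded

for label, value in rates.items():
    print(f"{label}: {value}%")
print("analytic sample:", analytic_sample)
```

Note that the mail denominator (505) equals the full sample minus the web completers (802 − 297), because both web non-responders and physicians without an email address received the paper version.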

For this study, we were primarily interested in the differences in physician characteristics between those completing the web-based mode and those completing the mail mode (Table 1). Overall, representation was obtained from all geographical districts and the sample was equally divided in terms of gender (49% male, 51% female). Of respondents, 42% completed residency more than 20 years ago and 81% reported that more than 75% of their work time is spent providing direct patient care.

Table 1.

Comparison of demographic characteristics between participants who responded using the web-based version (n=262) and participants who responded using the mail version (n=102)

| | N (%) N=364 | Web-based version N=262* | Mail version N=102* | P-value |
|---|---|---|---|---|
| Type of sub-specialty† | | | | |
| General Ob-Gyn | 337(94) | 243(72) | 94(28) | P=0.46 |
| Reproductive Endocrinology Subspecialty | 15(4) | 10(67) | 5(33) | P=0.77 |
| Urogynecology | 9(3) | 4(44) | 5(56) | P=0.12 |
| Minimally invasive gynecology/laparoscopy | 35(10) | 22(63) | 13(37) | P=0.24 |
| Clinical Research | 16(4) | 15(94) | 1(6) | P=0.05 |
| Geographical Districts | | | | |
| Midwest | 76(23) | 48(63) | 28(37) | P=0.15 |
| Northeast | 69(21) | 54(78) | 15(21) | |
| South | 116(36) | 87(75) | 29(25) | |
| West | 65(20) | 50(77) | 15(23) | |
| Gender | | | | |
| Male | 167(49) | 118(70) | 49(29) | P=0.21 |
| Female | 173(51) | 133(77) | 40(23) | |
| Years since completing residency‡ | | | | |
| ≤ 20 years | 201(58) | 153(76) | 48(24) | P=0.32 |
| > 20 years | 143(42) | 102(71) | 41(29) | |
| Type of Practice | | | | |
| Private practice | 283(82) | 201(71) | 82(29) | P=0.01 |
| Community hospital faculty | 16(5) | 12(75) | 4(25) | |
| University hospital faculty | 37(11) | 35(95) | 2(5) | |
| Other type of practice§ | 8(2) | 7(88) | 1(12) | |
| Proportion of time providing direct patient care | | | | |
| 0–50% | 24(7) | 22(92) | 2(8) | P=0.12 |
| 51–75% | 40(12) | 29(73) | 11(27) | |
| 76–100% | 276(81) | 201(73) | 75(27) | |

*Missing data: 5 online respondents logged in and did not answer any questions. Thirteen mail respondents answered only the first three screening questions. Other columns that do not add up to the total number of respondents represent random missing responses to individual questions.

†May add to > 100% because multiple choices could be selected.

‡Also compared <5 years, 5–10 years, 11–20 years, and >20 years and found no difference.

§Other type of practice included military and government practice, “other”.

There were no mode differences in physician reports of geographic districts, gender, years since completing residency, and proportion of time providing direct patient care. University hospital faculty were more likely to complete the web-based version than private practice physicians (95% vs. 71%, p=0.01).
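To illustrate the kind of test behind this comparison, the sketch below implements a two-sided Fisher's exact test for a 2×2 table in plain Python and applies it to the university and private practice rows of Table 1. This is a simplification made for illustration: the reported p=0.01 appears to come from the comparison across all four practice types, so the value here is not expected to match it. The function name `fisher_exact_2x2` is hypothetical.

```python
from math import comb


def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher's exact p-value for the table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more likely than the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Probability that the top-left cell equals x, given fixed margins
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    p_obs = prob(a)
    # Small relative tolerance guards against floating-point ties
    return sum(
        prob(x) for x in range(lowest, highest + 1)
        if prob(x) <= p_obs * (1 + 1e-9)
    )


# Table 1 counts (web, mail): university faculty 35 vs. 2, private practice 201 vs. 82
p_value = fisher_exact_2x2(35, 2, 201, 82)
print(f"two-sided p = {p_value:.4f}")
```

For tables larger than 2×2, such as the full four-category practice-type comparison, a statistical package would normally be used rather than a hand-written routine.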

We evaluated whether there were differences in terms of clinical practice and knowledge between physicians who completed the web-based and mail versions because this is important in determining survey distribution and analyses for future studies in this population (Table 2). Physicians completing the mail version, on average, reported more surgical volume in terms of ablations and hysterectomies than their web-based-version counterparts. Whereas 17% (n=16) of the mail-version physicians performed 6 or more ablations per month, only 5% (n=14) of physicians completing the web-based version reported this level of volume (p=0.002). Similarly, 92% of the physicians completing the mail version but only 83% of physicians completing the web-based version performed at least one hysterectomy per month, although this difference did not reach statistical significance (p=0.06). There was no difference in other physician characteristics or knowledge about AUB between web-based and mail-version respondents.

Table 2.

Comparison of web-based (n=262) and mail (n=102) responses.

| | Web-based version (n=262) N (%) | Mail version (n=102) N (%) | P-value |
|---|---|---|---|
| Average number of patients evaluated per month who report heavy or irregular menstrual bleeding | | | |
| 1–10 | 123(48) | 42(44) | P=0.55 |
| 11 or more | 131(52) | 53(56) | |
| Average number of endometrial ablations performed per month | | | |
| None | 53(21) | 12(13) | P=0.002 |
| 1–5 | 188(74) | 66(70) | |
| 6 or more | 14(5) | 16(17) | |
| Average number of hysterectomies performed per month | | | |
| None | 44(17) | 7(7) | P=0.06 |
| 1–5 | 193(75) | 79(84) | |
| 6 or more | 19(7) | 8(9) | |
| Respondents who replied that they always ask the patient which treatments she has tried for her bleeding† | 247(97) | 82(92) | P=0.07 |
| Respondents who reported that they use questionnaires routinely in their practice | 178(70) | 57(64) | P=0.29 |
| Respondents who answered at least 2 of 3 of the knowledge questions correctly | 67(26) | 19(21) | P=0.39 |

Missing data: 5 online respondents logged in and did not answer any questions. Thirteen mail respondents answered only the first three screening questions. Other columns that do not add up to the total number of respondents represent random missing responses to individual questions.

†We randomly selected one representative measure from each of the following sections of the survey: typical discussions with patients with AUB, usage of questionnaires in clinical practice, and knowledge about treatment for AUB.

Because these differences in characteristics of respondents between the two modes could potentially be accounted for by several physician characteristic variables such as years in practice, type of clinical practice, type of subspecialty, and number of patients with heavy or irregular menstrual bleeding, we analyzed the data controlling for these variables (Table 3). In these analyses we found that the odds of completing the survey by mail were increased among respondents with a higher volume of endometrial ablations, even when adjusting for physician characteristics (OR=3.02, 95% CI 1.33–6.84). After adjusting for potential confounders, other characteristics including volume of hysterectomies, average number of patients who report heavy or irregular menstrual bleeding, asking about previous treatments for AUB, and answering knowledge questions correctly were not associated with increased odds of responding by mail.
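The unadjusted odds ratio for high ablation volume can be recomputed directly from the counts in Table 2. The sketch below uses the standard Wald interval on the log odds ratio. It covers only the unadjusted estimate, since the adjusted models require the respondent-level data and logistic regression. The function name `odds_ratio_ci` is a hypothetical helper, not the authors' SAS code.

```python
from math import exp, log, sqrt


def odds_ratio_ci(exposed_mail, exposed_web, ref_mail, ref_web, z=1.96):
    """Odds ratio of responding by mail (exposed vs. reference group)
    with a Wald confidence interval computed on the log scale."""
    or_ = (exposed_mail / exposed_web) / (ref_mail / ref_web)
    se = sqrt(1 / exposed_mail + 1 / exposed_web + 1 / ref_mail + 1 / ref_web)
    lower = exp(log(or_) - z * se)
    upper = exp(log(or_) + z * se)
    return or_, lower, upper


# Table 2 counts: "6 or more" ablations (mail 16, web 14)
# versus the reference group "1-5" ablations (mail 66, web 188)
or_, lower, upper = odds_ratio_ci(16, 14, 66, 188)
print(f"OR = {or_:.2f} (95% CI {lower:.2f}-{upper:.2f})")
```

With these counts the calculation gives an odds ratio of 3.26 with a 95% CI of 1.51 to 7.03, which agrees with the unadjusted estimate reported in Table 3.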

Table 3.

Odds of completing the survey by mail, as compared to completing the survey online. Odds of completing the survey by mail served as a “proxy” for odds of not responding to the web-based survey

| | Unadjusted Odds ratio (95% CI) | Model 1* | Model 2* | Model 3* | Model 4* | Full model* |
|---|---|---|---|---|---|---|
| Average number of patients per month who report heavy or irregular menstrual bleeding | | | | | | |
| 1–10 | Ref | Ref | Ref | Ref | --- | Ref |
| 11 or more | 1.19 (0.74–1.90) | 0.97 (0.59–1.59) | 1.06 (0.64–1.73) | 1.14 (0.71–1.84) | --- | 0.89 (0.53–1.49) |
| Average number of endometrial ablations per month | | | | | | |
| None | 0.65 (0.33–1.28) | 0.77 (0.37–1.61) | 0.61 (0.29–1.25) | 0.69 (0.34–1.38) | 0.65 (0.33–1.31) | 0.78 (0.36–1.68) |
| 1–5 | Ref | Ref | Ref | Ref | Ref | Ref |
| 6 or more | 3.26 (1.51–7.03) | 2.96 (1.34–6.51) | 3.00 (1.36–6.64) | 3.29 (1.52–7.11) | 3.26 (1.49–7.13) | 3.02 (1.33–6.84) |
| Average number of hysterectomies per month | | | | | | |
| None | 0.39 (0.17–0.90) | 0.51 (0.22–1.22) | 0.36 (0.15–0.85) | 0.42 (0.18–0.99) | 0.41 (0.17–0.94) | 0.49 (0.19–1.23) |
| 1–5 | Ref | Ref | Ref | Ref | Ref | Ref |
| 6 or more | 1.03 (0.43–2.45) | 0.89 (0.36–2.23) | 0.89 (0.35–2.22) | 1.06 (0.44–2.54) | 0.99 (0.41–2.39) | 0.87 (0.34–2.23) |
| How often ask patient which treatments she has tried for her bleeding | | | | | | |
| Always | Ref | Ref | Ref | Ref | Ref | Ref |
| Sometimes | 2.64 (0.93–7.49) | 2.25 (0.79–6.43) | 2.80 (0.97–8.13) | 2.70 (0.94–7.74) | 2.81 (0.97–8.16) | 2.38 (0.79–7.15) |
| Respondents' use of questionnaires in practice | | | | | | |
| Used questionnaires | Ref | Ref | Ref | Ref | Ref | Ref |
| No questionnaires | 1.32 (0.79–2.19) | 1.28 (0.76–2.14) | 1.36 (0.81–2.28) | 1.28 (0.77–2.13) | 1.30 (0.78–2.17) | 1.29 (0.76–2.20) |
| Answered at least 2 of the knowledge questions correctly | | | | | | |
| 2 or more correct | Ref | Ref | Ref | Ref | Ref | Ref |
| < 2 correct | 1.31 (0.74–2.34) | 1.15 (0.64–2.09) | 1.26 (0.70–2.26) | 1.32 (0.74–2.35) | 1.31 (0.73–2.33) | 1.09 (0.60–2.00) |

Model 1: Odds ratios adjusted for type of current practice

Model 2: Odds ratios adjusted for years since completing residency

Model 3: Odds ratios adjusted for type of subspecialty

Model 4: Odds ratios adjusted for average number of patients evaluated per month who report heavy or irregular menstrual bleeding

Model 5: Full model. Odds ratios adjusted for all four characteristics (type of current practice, number of years since completing residency, type of sub-specialty, average number of patients evaluated per month who report heavy or irregular menstrual bleeding)

Item non-response and inappropriate responses to questions (marking more than one answer when not directed to do so, writing in answers in the margins, answering questions which should have been skipped, not answering questions which should have been answered) pose a challenge for data analyses (Table 4). We found a significantly higher number of missed questions and “unusable items” per respondent for the mail mode when compared to the web-based mode (7.4 vs. 1.3, p<0.0001 and 8.5 vs. 1.3, p<0.0001, respectively). Online respondents had an average of 1 “unusable item” (range 0–45 items), which was attributable to missed/skipped questions.

Table 4.

Mean number of unusable responses obtained from the web-based and mail versions of the survey

| | N | # Missed questions per respondent, mean (range) | # Failed skip patterns per respondent, mean (range) | # of inappropriate responses per respondent, mean (range) | # of unusable responses total per respondent, mean (range) |
|---|---|---|---|---|---|
| Online | 262 | 1.3 (0–45) | NA | NA | 1.3 (0–45) |
| Mail | 102 | 7.4 (0–55) | 0.4 (0–2) | 0.85 (0–6) | 8.5 (0–55) |

Discussion

This study assessed differences between obstetrician-gynecologists who responded online and those who responded on paper to a survey distributed with a sequential mixed-mode approach. We found that 1) there was no difference in gender or number of years since residency between the respondents to the two modes; 2) university-based physicians were more likely than private practice physicians to complete the survey online; 3) after adjusting for potential confounders, mail respondents reported a greater volume of endometrial ablations than online respondents; and 4) there was a significantly higher number of missed questions and “unusable items” per respondent for the mail mode than for the web-based mode.

A major concern of previous web-based studies of physicians has been that respondents may not be representative of the population as a whole. “Coverage error”, which occurs when there is a mismatch between the population the investigator wants to sample and the population who actually has access to the internet and the survey, is often a problem for web-based surveys. However, web-based surveys may be especially useful for professional associations like ACOG, given very high access to the internet and the fact that 87% of our target population provided email addresses. “Nonresponse error” could be introduced when members of the study population are unable or unwilling to complete the survey. For our study, we considered “mail respondents” proxies for “non-respondents”. We found that online and mail respondents were similar in terms of geographic districts in which they practice, years since completing residency, and gender, which suggests that demographic representativeness may be less of an issue now than it was in the 1990s and early 2000s, when access to the internet and comfortable usage of the internet were not universal. For example, Braithwaite et al. found that respondents to a web-based questionnaire were more likely to be male, in practice less than 10 years, and report using web-based decision support (Braithwaite et al., 2003). With increasing access to the internet, this may no longer be as much of a problem. Still, with a web-only approach, physicians who do not have email addresses, do not have working email addresses, or do not check their email regularly may be underrepresented.

One important finding, which is relevant to those considering web-based research with physician samples, was that university-based physicians were more likely than private practice physicians to complete the survey online. This could be attributed to the fact that university-based physicians may spend more time at computers working on research, administrative duties, and education and were therefore more likely to complete the survey online. Although university-based physicians were more likely to complete the survey online than on paper, 79% of respondents to the online version were private practice physicians, indicating that they were well represented in terms of survey response. Based on this finding, we would suggest collecting data on practice type in future web-based surveys so that it is possible to control for this factor in data analyses.

We randomly selected one representative measure from several sections of the survey to determine if responses to the web-based and mail versions differed. Although we found no difference in typical discussions with patients with AUB, usage of questionnaires in clinical practice, and knowledge about treatment of AUB, we did find that mail respondents reported higher AUB-related surgical volume, in particular endometrial ablation, than online respondents. We are not completely sure what to make of the difference in numbers of ablations and why this was not explained by other related factors such as practice type and volume of patients. Unfortunately, it is not possible to determine the motivators for deciding to complete the mail version with the available data. There are at least two potential explanations. First, based on the study design there is a 5% chance of type I error. Second, physicians receiving the mail survey were able to easily scan through the entire survey to determine whether or not it was interesting to them. It is possible that physicians who performed more ablations were more interested in AUB and therefore more likely to complete the survey by mail because they were able to initially review the entire survey and determine that it interested them. In contrast, web-based respondents were shown one question at a time.

Improved quality of data is considered one potential advantage of web-based survey data collection. Our study showed that the mail version respondents provided more “unusable answers”. In survey research, obtaining fewer “unusable answers” improves the ease of data analysis and could potentially improve the validity of study results and conclusions. Likely facilitating the quality of data in our survey was our use of a sophisticated web-based survey administration program which allowed elaborate skip pattern incorporation and flexible options for survey formatting. DatStat, the administration program we used for this study, provides systems for detailed participant tracking, participant authentication, and advanced options for survey formatting. Researchers should be aware when selecting from the numerous available web-based survey products that each system has relative advantages and disadvantages. These different products vary widely in terms of data security, authentication of respondents, data quality (ability to program skip logic), and formatting options. Increasing sophistication of the survey tool capabilities typically translates into increased cost, though products exist for most research budgets.

Our study had several strengths. First, the data represent responses from a national sample of gynecologists with good representation from all geographic districts and even distribution of male and female respondents. Second, adequate “coverage” of the intended study population was achieved, given that email addresses were available for 87% of the study sample. In initial testing of the email list, we received only one “bounce-back” email out of 100 sent. Additionally, we received no surveys returned by mail as undeliverable. However, it is possible that surveys were delivered to incorrect addresses by email or mail and no “bounce-back” or “return-to-sender” was generated.

One limitation of our study was that we used a sequential mixed mode approach, rather than randomizing physicians to the modes of survey administration. Because respondents were not randomly allocated to the web-based or mail mode, there may be unmeasured characteristics that differ between those who completed the survey by mail versus web. Despite this limitation, we chose the sequential mixed mode approach for this study based on its successful use in several previous physician survey studies. (Kim et al., 2000; Hallowell et al., 2000; Kroth et al., 2009) Also, this approach allowed us to obtain a robust amount of data from the physicians who chose not to respond to the web-based version. These specific data on the “web-based nonrespondents” will be useful in the planning of future web-based physician surveys.

Physician surveys provide important information about knowledge, practice patterns, and attitudes. Information obtained can help tailor educational materials and clinical practice guidelines. Based on our findings of adequate representativeness and improved data quality, we would suggest that using a web-based data collection approach can be appropriate for physician surveys.

Acknowledgments

This research was supported by (1) National Institutes of Health-funded K23-HD057957 Career Development Award (Matteson) and (2) The Centers for Disease Control and Prevention and Grant #R60 MC 05674 from the Maternal and Child Health Bureau, Health Resources and Services Administration, Department of Health and Human Services (Schulkin).

Contributor Information

Kristen A. Matteson, Department of Obstetrics and Gynecology, Women and Infants Hospital, Alpert Medical School of Brown University, Providence, RI.

Britta L. Anderson, The American College of Obstetricians and Gynecologists, Washington D.C.

Stephanie B. Pinto, Department of Obstetrics and Gynecology, Women and Infants Hospital, Alpert Medical School of Brown University, Providence, RI.

Vrishali Lopes, Department of Obstetrics and Gynecology, Women and Infants Hospital, Alpert Medical School of Brown University, Providence, RI.

Jay Schulkin, The American College of Obstetricians and Gynecologists, Washington D.C.

Melissa A. Clark, Department of Obstetrics and Gynecology, Women and Infants Hospital, Alpert Medical School of Brown University, Providence, RI, Department of Community Health and Obstetrics and Gynecology, Alpert Medical School of Brown University, Providence, RI.

References

- Bennett NL, Casebeer L, Kristofco R, Strasser SM. Physicians’ internet health-seeking behaviors. Journal of Continuing Education in the Health Professions. 2004;24:31–38. doi: 10.1002/chp.1340240106.

- Braithwaite D, Emery J, deLusignan S, Sutton S. Using the internet to conduct surveys of health professionals: a valid alternative? Family Practice. 2003;20(5):545–551. doi: 10.1093/fampra/cmg509.

- Crawford S, McCabe SE, Pope D. Applying web-based survey design standards. Journal of Prevention and Intervention in the Community. 2005;29(1/2):43–66.

- De Leeuw ED. To mix or not to mix data collection modes in surveys. Journal of Official Statistics. 2005;21(2):233–255.

- Hill LD, Erickson K, Holzman GB, Power ML, Schulkin J. Practice trends in outpatient obstetrics and gynecology: findings of the Collaborative Ambulatory Research Network, 1995–2000. Obstetrical & Gynecological Survey. 2001;56(8):505–516. doi: 10.1097/00006254-200108000-00024.

- Hollowell CMP, Patel RV, Bales GT, Gerber GS. Internet and postal survey of endourologic practice patterns among American urologists. The Journal of Urology. 2000;163(6):1779–1782.

- Kim HL, Hollowell CMP, Patel RV, Bales GT, Clayman RV, Gerber GS. Use of new technology in endourology and laparoscopy by American urologists: internet and postal survey. Urology. 2000;56(5):760–765. doi: 10.1016/s0090-4295(00)00731-7.

- Kroth PJ, McPherson L, Leverence R, Pace W, Daniels E, Rhyne RL, Williams RL. Combining web-based and mail surveys improves response rates: A PBRN study from PRIME Net. Annals of Family Medicine. 2009;7:245–248. doi: 10.1370/afm.944.

- Potts HW, Wyatt JC. Survey of doctors’ experience of patients using the Internet. Journal of Medical Internet Research. 2002;4(1):e5. doi: 10.2196/jmir.4.1.e5.

- Schaefer DR, Dillman DA. Development of a standard email methodology: Results of an experiment. Public Opinion Quarterly. 1998;62:378–397.

- VanGeest JB, Johnson TP, Welch VL. Methodologies for improving response rates in surveys of physicians: A systematic review. Evaluation and the Health Professions. 2007;30(4):303–321. doi: 10.1177/0163278707307899.

- Wyatt JC. When to use web-based surveys. Journal of the American Medical Informatics Association. 2000;7:426–429. doi: 10.1136/jamia.2000.0070426.