Abstract

Neuroscientists have often described cognition and emotion as separable processes implemented by different regions of the brain, such as the amygdala for emotion and the prefrontal cortex for cognition. In this framework, functional interactions between the amygdala and prefrontal cortex mediate emotional influences on cognitive processes such as decision-making, as well as the cognitive regulation of emotion. However, neurons in these structures often have entangled representations, whereby single neurons encode multiple cognitive and emotional variables. Here we review studies using anatomical, lesion, and neurophysiological approaches to investigate the representation and utilization of cognitive and emotional parameters. We propose that these mental state parameters are inextricably linked and represented in dynamic neural networks composed of interconnected prefrontal and limbic brain structures. Future theoretical and experimental work is required to understand how these mental state representations form and how shifts between mental states occur, a critical feature of adaptive cognitive and emotional behavior.

Keywords: neurophysiology, orbitofrontal cortex, value, reward, aversive, reinforcement learning

Introduction

The past century has witnessed a debate concerning the nature of emotion. When the brain is confronted with a stimulus that evokes emotion, does it first respond by activating a range of visceral and behavioral responses, which are only then followed by the conscious experience of emotion? For example, when we encounter a threatening snake, do autonomic reactivity and behaviors such as freezing or fleeing emerge before the feeling of fear? This view, championed by the psychologists William James and Carl Lange around the turn of the twentieth century (James 1884, 1894; Lange 1922), has attracted renewed interest because of the influential work of Damasio and colleagues (Damasio 1994). Alternatively, do visceral and behavioral responses occur as a result of central processing in the brain, processing that gives rise to emotional feelings and then regulates or controls a variety of bodily responses [a possibility raised decades ago by Walter Cannon (1927) and Philip Bard (1928)]?

Neuroscientists have often sidestepped this debate by operationally defining a particular aspect of emotion—e.g., learning about fear—and using a specific behavioral or physiological assay—e.g., freezing—to investigate the neural basis of the process (Salzman et al. 2005). This approach is agnostic about which response comes first: the visceral and behavioral expression of emotion or the feeling of emotion. But it has proven powerful in helping to identify and characterize the neural circuitry responsible for specific aspects of emotional expression and regulation. These investigations have shown that one brain area, the amygdala, plays a vital role in many emotional processes (Baxter & Murray 2002, Lang & Davis 2006, LeDoux 2000, Phelps & LeDoux 2005) and that the amygdala and its interconnections with the prefrontal cortex (PFC) likely underlie many aspects of the interactions between emotion and cognition (Barbas & Zikopoulos 2007, Murray & Izquierdo 2007, Pessoa 2008, Price 2007).

Today, we still lack a resolution to the original debate concerning the relationship between emotional feelings and the bodily expression of emotions, in large part because both viewpoints appear to be supported in some circumstances. Emotional feelings do not necessarily involve visceral and behavioral components and vice versa (Lang 1994). But neurobiological advances—in particular, emerging data on the intimate relationship between the PFC and limbic areas such as the amygdala—begin to suggest a solution. As discussed below, the amygdala is essential for many of the visceral and behavioral expressions of emotion; meanwhile, the PFC—especially its medial and orbital regions—appears to be responsible for many of the cognitive aspects of emotional responses. However, recent studies suggest that both the functional and the electrophysiological characteristics of the amygdala and the PFC overlap and intimately depend on each other. Thus, the neural circuits mediating cognitive, emotional, physiological, and behavioral responses may not truly be separable and instead are inextricably linked. Moreover, we lack a unifying conceptual framework for understanding how the brain links these processes and how these processes change in unison.

Mental States: Synthesizing Cognition and Emotion

Here, we propose a theoretical foundation for understanding emotion in the context of its intimate relation to the cognitive, physiological, and behavioral responses that constitute emotional expression. We review recent neurobiological data concerning the amygdala and the PFC and discuss how these data fit into a proposed framework for understanding interactions between emotion and cognition.

The concept of a mental state plays a central role in our theoretical framework. We define a mental state as a disposition to action, i.e., every aspect of an organism's inner state that could contribute to its behavior or other responses. A mental state may comprise all the thoughts, feelings, beliefs, intentions, active memories, and perceptions that are present at a given moment. Thus mental states can be described by a large number of variables, and the set of all mental state variables could provide a quantitative description of one's disposition to behavior. Of note, the identification of mental state variables is constrained by the language we use to describe them. Consequently, mental state variables are not necessarily unique, and they are not necessarily independent of each other. Mental state variables may be either conscious or unconscious, because both types of variables can predispose one to action. Overall, an organism's mental state incorporates internal variables, such as hunger or fear, as well as the representation of the set of environmental stimuli present at a given moment and the temporal context of stimuli and events. Any given mental state predisposes an organism to respond in certain ways; these actions may be cognitive (e.g., making a decision), behavioral (e.g., freezing or fleeing), or physiological (e.g., increasing heart rate). Mental state variables are useful theoretical constructs because they provide quantitative metrics for analyzing and understanding behavioral and brain processes.

The concept of a mental state is intimately related to, but distinct from, what we call a brain state. Each mental state corresponds to one or more states of the dynamic variables—firing rates, synaptic weights, etc.—that describe the neural circuits of the brain; the full set of values of these variables constitutes a brain state. How are the variables characterizing a mental state represented at the neural circuit level—i.e., the current brain state? This is one way to phrase a fundamental and long-standing question for neuroscientists. At one end of the spectrum is the possibility that each neuron encodes only one variable. For example, a neuron may respond only to the pleasantness of a sensory stimulus, and not to its identity, to its meaning, or to the context in which the stimulus appears. When neurons encode only one variable, other neurons may easily read out the information represented, and the representation can, in principle, be modified without affecting other mental state variables.

One of the disadvantages of the type of representation described immediately above is well illustrated by what is known as the “binding problem” (Malsburg 1999). If each neuron represents only one mental state variable, then it is difficult to construct representations of complex situations. For example, consider a scene with two visual stimuli, one associated with reward and the other with punishment. The brain state should contain the information that pleasantness is associated with the first stimulus and not with the other. If neurons represent only one mental state variable at a time, such as stimulus identity or stimulus valence, then “binding” the information about different variables becomes a substantial challenge. In this case, there must be an additional mechanism that links the activation of the neuron representing pleasantness to the activation of the neuron representing the first stimulus. One simple and efficient way to solve this problem is to introduce neurons with mixed selectivity to conjunctions of events, such as a neuron that responds only when the first stimulus is pleasant. In this scheme, the representations of pleasantness and stimulus identity would be entangled and more difficult to decode, but the number of situations that could be represented would be significantly larger. As discussed below, different brain areas may contain representations with different degrees of entanglement.
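The contrast between single-variable and mixed-selectivity codes can be made concrete with a toy sketch. Everything here is an illustrative assumption rather than a model from the studies reviewed: binary features, two stimuli (A and B), one hypothetical unit per feature in the "pure" code, and one hypothetical unit per stimulus-valence conjunction in the "mixed" code.

```python
from itertools import product

STIMULI = ("A", "B")
VALENCES = ("pleasant", "unpleasant")


def pure_population(scene):
    """Hypothetical pure code: one unit per stimulus identity and one per valence.

    Each unit's activity counts the scene items carrying its feature, so the
    code is a superposition that ignores which valence goes with which stimulus.
    """
    units = list(STIMULI) + list(VALENCES)
    return tuple(
        sum(1 for stim, val in scene.items() if unit in (stim, val))
        for unit in units
    )


def mixed_population(scene):
    """Hypothetical mixed code: one unit per (stimulus, valence) conjunction."""
    return tuple(
        1 if scene.get(stim) == val else 0
        for stim, val in product(STIMULI, VALENCES)
    )


# Two scenes with the same stimuli but swapped reward/punishment pairings.
scene_1 = {"A": "pleasant", "B": "unpleasant"}
scene_2 = {"A": "unpleasant", "B": "pleasant"}

print(pure_population(scene_1), pure_population(scene_2))
print(mixed_population(scene_1), mixed_population(scene_2))
```

Both scenes produce the same pure-selectivity vector, (1, 1, 1, 1), so a downstream reader cannot tell which stimulus is pleasant; the conjunctive units produce (1, 0, 0, 1) versus (0, 1, 1, 0). The price is that valence can no longer be read from a single dedicated unit.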

How do emotions fit into the conceptual framework of mental states arising from brain states? One influential schema for characterizing emotion posits that emotions can vary along two axes: valence (pleasant versus unpleasant or positive versus negative) and intensity (or arousal) (Lang et al. 1990, Russell 1980). These two variables can simply be conceived as components of the current mental state. Two mental states correspond to different emotions when at least one of the two mental state variables—valence or intensity—is significantly different. Thus, variables describing emotions have the same ontological status as do variables that describe cognitive processes such as memory, attention, decision-making, language, and rule-based problem-solving. Below, we describe neurophysiological data documenting that variables such as valence and arousal are strongly encoded in the amygdala–prefrontal circuit, along with variables related to other cognitive processes. We suggest that neural representations in the amygdala may be more biased toward encoding mental state variables characterizing emotions (valence and intensity). PFC neurons may encode a broader range of variables in an entangled fashion, reflecting the complexity of the behavior and cognition that are its putative outputs.
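Treating valence and intensity as two components of the mental state makes the criterion for "different emotions" a simple comparison. The sketch below is hypothetical: the class and function names, the numeric scales, and the `tolerance` standing in for "significantly different" are assumptions, since the text does not specify how significance would be assessed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmotionComponents:
    """Two emotion-related components of a mental state."""

    valence: float    # signed: negative = unpleasant, positive = pleasant
    intensity: float  # arousal; assumed non-negative here


def are_different_emotions(a: EmotionComponents, b: EmotionComponents,
                           tolerance: float = 0.2) -> bool:
    """Return True if at least one component differs by more than `tolerance`.

    `tolerance` is a hypothetical stand-in for "significantly different".
    """
    return (abs(a.valence - b.valence) > tolerance
            or abs(a.intensity - b.intensity) > tolerance)


# Invented example states, for illustration only.
contentment = EmotionComponents(valence=0.6, intensity=0.2)
elation = EmotionComponents(valence=0.6, intensity=0.9)
fear = EmotionComponents(valence=-0.8, intensity=0.9)
similar_fear = EmotionComponents(valence=-0.75, intensity=0.85)

print(are_different_emotions(contentment, elation))  # True: intensity differs
print(are_different_emotions(fear, similar_fear))    # False: both within tolerance
```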

The concept of a mental state unites cognition and emotion as part of a common framework. How does this framework contribute to the debate about the relationship between emotions and bodily responses? We argue that the issues raised in the debate essentially dissolve when one conceptualizes emotions as part of mental states: Neither emotional feelings nor bodily responses necessarily come first or second. Rather, both of these aspects of emotion are outputs of the neural networks that represent mental states. Furthermore, all the thoughts, physiological responses, and behaviors that constitute emotion are part of an ongoing feedback loop that alters the dynamic, ever-fluctuating brain state and generates new mental states from moment to moment.

How do mental states that integrate emotion and cognition arise from the activity of neural circuits? Below, we describe a potential anatomical substrate for emotional-cognitive interactions in the brain, the amygdala–prefrontal circuit, and how neurons in these areas could dynamically contribute to a subject's mental state. First, we review the bidirectional connections between the amygdala and the PFC that could form the basis of many interactions between cognition and emotion. Second, we review neurobiological studies that used lesions and pharmacological inactivation to investigate the function of the amygdala–PFC circuitry. Third, we review neurophysiological data from the amygdala and the PFC that reveal encoding of variables critical for the representation of mental states and for learning algorithms, specifically reinforcement learning (RL), that emphasize the importance of encoding these parameters for adaptive behavior. For all these topics, we focus on data collected from nonhuman primates, which are much more similar to humans than rodents are in terms of both behavioral repertoire and anatomical development.

Finally, we describe a theoretical proposal that explains how mental states might emerge in the brain and how the amygdala and the PFC could play an integral role in this process. In particular, we propose that the interactions between emotion and cognition may be understood in the context of mental states that can be switched or gated by internal or external events.

An Anatomical Substrate for Interactions Between Emotion and Cognition

This review focuses on interactions between the amygdala and the PFC because of their long-established roles in mediating emotional and cognitive processes (Holland & Gallagher 2004, Lang & Davis 2006, LeDoux 2000, Miller & Cohen 2001, Ochsner & Gross 2005, Wallis 2007). The amygdala is most often discussed in the context of emotional processes; yet it is extensively interconnected with the PFC, especially the posterior orbitofrontal cortex (OFC), and the anterior cingulate cortex (ACC). Here we provide a brief overview of amygdala and PFC anatomy, with an emphasis on the potential anatomical basis of interactions between cognitive and emotional processes.

Amygdala

The amygdala is a structurally and functionally heterogeneous collection of nuclei lying in the anterior medial portion of each temporal lobe. Sensory information enters the amygdala from advanced levels of visual, auditory, and somatosensory cortices, from the olfactory system, and from polysensory brain areas such as the perirhinal cortex and the parahippocampal gyrus (Amaral et al. 1992, McDonald 1998, Stefanacci & Amaral 2002). Within the lateral nucleus, the primary target of projections from unimodal sensory cortices, different sensory modalities are segregated anatomically. But, owing in part to intrinsic connections, multimodal encoding subsequently emerges in the lateral, basal, accessory basal, and other nuclei of the amygdala (Pitkanen & Amaral 1998, Stefanacci & Amaral 2000). Output from the amygdala is directed to a wide range of target structures, including the PFC, the striatum, sensory cortices (including primary sensory cortices, connections which are probably unique to primates), the hippocampus, the perirhinal cortex, the entorhinal cortex, and the basal forebrain, and to subcortical structures responsible for aspects of physiological responses related to emotion, such as autonomic responses, hormonal responses, and startle (Davis 2000). In general, subcortical projections originate from the central nucleus, and projections to cortex and the striatum originate from the basal, accessory basal, and in some cases the lateral nuclei (Amaral et al. 1992, 2003; Amaral & Dent 1981; Carmichael & Price 1995a; Freese & Amaral 2005; Ghashghaei et al. 2007; Stefanacci et al. 1996; Stefanacci & Amaral 2002; Suzuki & Amaral 1994).

Prefrontal Cortex

The PFC, located in the anterior portion of the cerebral cortex and defined by projections from the mediodorsal nucleus of the thalamus (Fuster 2008), is composed of a group of interconnected brain areas. The distinctive feature of primate PFC is the emergence of dysgranular and granular cortices, which are absent in rodents, whose prefrontal cortex is entirely agranular (Murray 2008, Preuss 1995, Price 2007, Wise 2008). Therefore, much of the primate PFC does not have a clear-cut homolog in rodents. The PFC is often grouped into different subregions; Petrides & Pandya (1994) have described these as dorsal and lateral areas (Walker areas 9, 46, and 9/46), ventrolateral areas (47/12 and 45), medial areas (32 and 24), and orbitofrontal areas (10, 11, 13, 14, and 47/12). Of note, there are extensive interconnections between different PFC areas, allowing information to be shared within local networks (Barbas & Pandya 1989, Carmichael & Price 1996, Cavada et al. 2000), and information also converges from sensory cortices in multiple modalities (Barbas et al. 2002). In general, dorsolateral areas receive input from earlier stages of sensory processing (Barbas et al. 2002), whereas orbitofrontal areas receive inputs from advanced stages of sensory processing in every modality, including gustation and olfaction (Carmichael & Price 1995b, Cavada et al. 2000, Romanski et al. 1999). Thus, extrinsic and intrinsic connections make the PFC a site of multimodal convergence of information about the external environment.

In addition, the PFC receives inputs that could inform it about internal mental state variables, such as motivation and emotions. Orbital and medial PFC are closely connected with limbic structures such as the amygdala (see below) and also have direct and indirect connections with the hippocampus and rhinal cortices (Barbas & Blatt 1995, Carmichael & Price 1995a, Cavada et al. 2000, Kondo et al. 2005, Morecraft et al. 1992). Medial PFC and part of orbital PFC have connections to the hypothalamus and other subcortical targets that could mediate autonomic responses (Ongur et al. 1998). Neuromodulatory input to the PFC from dopaminergic, serotonergic, noradrenergic, and cholinergic systems could also convey information about internal state (Robbins & Arnsten 2009). Finally, outputs from the PFC, especially from dorsolateral PFC, are directed to motor systems, consistent with the notion that the PFC may form, represent, and/or transmit motor plans (Bates & Goldman-Rakic 1993, Lu et al. 1994). Altogether, the PFC receives inputs that provide information about many external and internal variables, including those related to emotions and to cognitive plans, providing a potential anatomical substrate for the representation of mental states.

Anatomical Interactions Between the PFC and Amygdala

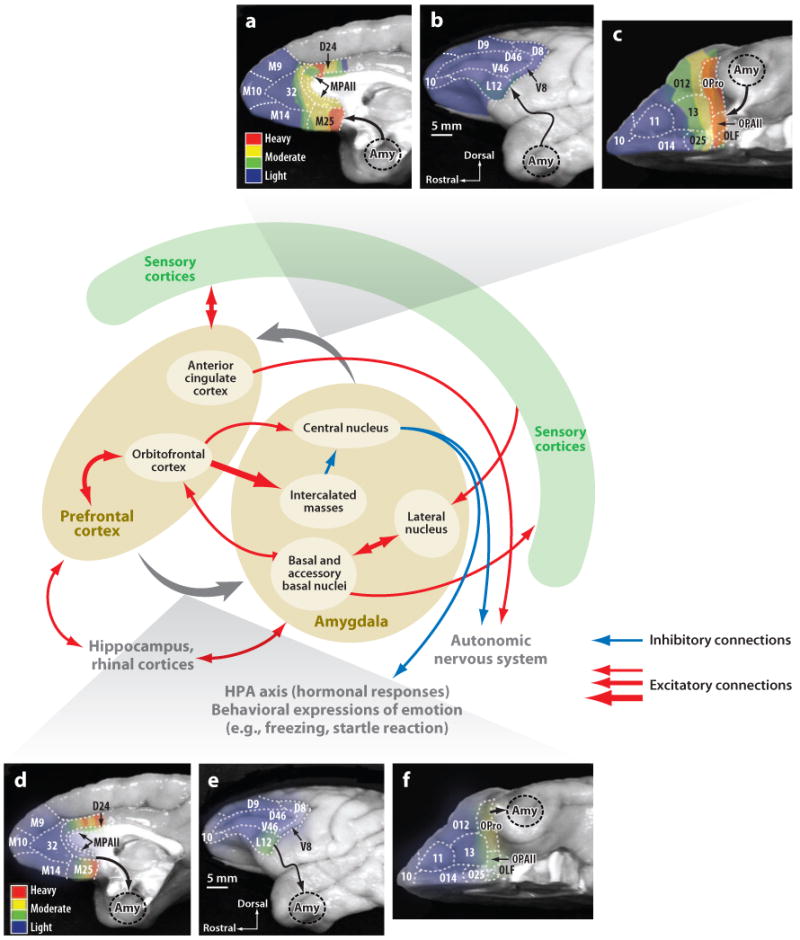

Although there are diffuse bidirectional projections between amygdala and much of the PFC [see, e.g., figure 4 of Ghashghaei et al. (2007)], the densest interconnections are between the amygdala and orbital areas (e.g., caudal area 13) and medial areas (e.g., areas 24 and 25). The extensive anatomical connections among the amygdala, the PFC, and related structures are summarized in Figure 1. Amygdala input to the PFC often terminates in both superficial and deep layers. OFC output to the amygdala originates in deep layers, and in some cases also in superficial layers, suggesting both feedforward and feedback modes of information transmission (Ghashghaei et al. 2007).

Figure 1.

Overview of anatomical connections of the amygdala and the prefrontal cortex (PFC). Schematic showing some (but not all) of the main projections of the amygdala and the PFC. The interconnections of the amygdala and the PFC (and especially the OFC) are emphasized. (a–c) Summary of projections from the amygdala to the PFC (density of projections is color coded). (d–f) Summary of projections from the PFC to the amygdala (projection density is color coded). The complex circuitry between the amygdala and the OFC is also highlighted (red arrows connect the structures). Medial amygdala nuclei are not shown. Many additional connections of both the amygdala and the PFC are not shown. Panels a–f were adapted with permission from figures 5 and 6 of Ghashghaei et al. (2007).

Previous work has established that the OFC output to the amygdala is complex and segregated, targeting multiple systems in the amygdala (Ghashghaei & Barbas 2002). Some OFC output is directed to the intercalated masses, a ribbon of inhibitory neurons in the amygdala that inhibits activity in the central nucleus (Ghashghaei et al. 2007, Pare et al. 2003). In addition, the OFC projects directly to the central nucleus, providing a means by which the OFC can activate this output structure in addition to inhibiting it (Ghashghaei & Barbas 2002, Stefanacci & Amaral 2000, 2002). Finally, the OFC projects to the basal, accessory basal, and lateral nuclei, where it may influence computations occurring within the amygdala (Ghashghaei & Barbas 2002, Stefanacci & Amaral 2000, 2002). Overall, the bidirectional communication between the amygdala and the OFC, as well as the connections with the rest of the PFC, provides a potential basis for the integration of cognitive, emotional, and physiological processes into a unified representation of mental states.

The Role of the Amygdala and the PFC in Representing Mental States: Lesion Studies

Recent studies using lesions or pharmacological inactivation combined with behavioral studies in monkeys have begun to reveal the specific roles of the primate amygdala and various regions of the PFC in cognitive and emotional processes. We focus here on studies that have helped demonstrate the roles of these brain structures in processes such as valuation, rule-based actions, emotional processes, attention, goal-directed behavior, and working memory—processes that are likely to set some of the variables that constitute a subject's mental state.

Amygdala

Historically, lesions of the primate amygdala produced a wide range of behavioral and emotional effects (Aggleton & Passingham 1981, Jones & Mishkin 1972, Kluver & Bucy 1939, Spiegler & Mishkin 1981, Weiskrantz 1956), but in recent years scientists have increasingly recognized the importance of using anatomically precise lesions that spare fibers of passage. Many older studies employed aspiration or radiofrequency lesions, which destroy both gray and white matter. By contrast, recent studies using excitotoxic chemical injections, which specifically kill cell bodies, have revised our understanding of the cognitive and emotional functions that require the amygdala (Baxter & Murray 2000, Izquierdo & Murray 2007). Some conclusions, however, have been confirmed over many studies using both old and new techniques. In particular, scientists have most prominently used two types of behavioral tasks to establish the amygdala's role in forming or updating associations between sensory stimuli and reinforcement. First, consistent with findings from rodents, the primate amygdala is required for fear learning induced by Pavlovian conditioning (Antoniadis et al. 2009). Second, the amygdala is required for updating the value of a rewarding reinforcer during a devaluation procedure (Machado & Bachevalier 2007, Malkova et al. 1997, Murray & Izquierdo 2007). In this type of task, experimenters satiate an animal on a particular type of reward and test whether satiation changes subsequent choice behavior such that the animal chooses the satiated food type less often; amygdala lesions eliminate this effect of satiation. Pharmacological inactivation of the amygdala has confirmed its role in updating a representation of a reinforcer's value; however, once this updating process is complete, the amygdala does not appear to be required (Wellman et al. 2005). In addition, the amygdala is important for other aspects of appetitive conditioned reinforcement (Parkinson et al. 2001) and for behavioral and physiological responses to emotional stimuli such as snakes and intruders, in a manner consistent with a role in processing both emotional valence and intensity (Izquierdo et al. 2005, Kalin et al. 2004, Machado et al. 2009). Finally, experiments using excitotoxic (ibotenic acid) lesions instead of aspiration lesions of the amygdala have revised our understanding of its role in reversal learning, in which stimulus-reinforcement contingencies are reversed. Contrary to previous accounts, recent evidence indicates that the amygdala is not required for reversal learning on tasks involving only rewards (Izquierdo & Murray 2007). Overall, these data link the amygdala to functions that rely on neural processing related to both emotional valence and intensity.
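The logic of the devaluation test can be sketched with a toy choice model. The proportional choice rule, the satiation factor, the food labels, and the function names are all hypothetical. The sketch only illustrates what it means for satiation to shift choices and for a lesion to abolish that shift; it is not a model of how the amygdala computes value.

```python
def choice_fraction(values, option):
    """Fraction of choices for `option` under a hypothetical proportional rule."""
    return values[option] / sum(values.values())


def after_satiation(values, sated_food, factor, can_update_value):
    """Return food values after satiation on `sated_food`.

    can_update_value=False is a hypothetical stand-in for a subject that
    cannot update the sated reinforcer's value (e.g., after a lesion).
    """
    updated = dict(values)
    if can_update_value:
        updated[sated_food] *= factor
    return updated


baseline = {"food_1": 1.0, "food_2": 1.0}
for can_update in (True, False):
    sated = after_satiation(baseline, "food_1", factor=0.25,
                            can_update_value=can_update)
    # Devaluation effect: drop in preference for the sated food.
    effect = choice_fraction(baseline, "food_1") - choice_fraction(sated, "food_1")
    print(f"can_update={can_update}: devaluation effect = {effect:.2f}")
```

The intact case prints a devaluation effect of 0.30 (choices of the sated food fall from 0.5 to 0.2), and the no-update case prints 0.00, mirroring the loss of the satiation effect described above.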

Prefrontal Cortex

A long history of studies has used lesions to establish the importance of the PFC in goal-directed behavior, rule-guided behavior, and executive functioning more generally (Fuster 2008, Miller & Cohen 2001, Wallis 2007). These complex cognitive processes form an integral part of our mental state. In addition, lesions of the OFC cause many emotional and cognitive deficits reminiscent of amygdala lesions, including deficits in reinforcer devaluation and in behavioral and hormonal responses to emotional stimuli (Izquierdo et al. 2004, 2005; Kalin et al. 2007; Machado et al. 2009; Murray & Izquierdo 2007). Recently, investigators have employed detailed trial-by-trial data analysis to enhance the understanding of the effects of lesions; this work led investigators to propose that the ACC and the OFC are more involved in the valuation of actions and stimuli, respectively (Kennerley et al. 2006, Rudebeck et al. 2008, Rushworth & Behrens 2008).

In addition, a recent study examined the effects of separate lesions of the dorsolateral PFC, the ventrolateral PFC, the principal sulcus (PS), the ACC, and the OFC on a task (Buckley et al. 2009) analogous to the Wisconsin Card Sorting Test, which is used to assay PFC function in humans (Stuss et al. 2000). In the authors' version of the task, monkeys had to discover by trial and error the rule currently in effect; to do so, they needed to hold the rule in working memory and to use information about recent reward history to guide behavior. Lesions in different PFC regions caused distinct deficit profiles: Deficits in working memory, reward-based updating of value representations, and active utilization of recent choice-outcome values were ascribed primarily to PS, OFC, and ACC lesions, respectively (Buckley et al. 2009). Of note, this study used aspiration lesions of the targeted brain regions, which almost certainly damaged nearby fibers of passage.
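The two demands of such a task, holding the current rule in memory and using the last outcome to revise it, can be caricatured with a win-stay/lose-shift agent. This is a hypothetical sketch, not a model of the monkeys' behavior or of Buckley and colleagues' task: it assumes only two candidate rules (labeled "color" and "shape" here) and a one-trial memory of outcomes.

```python
RULES = ("color", "shape")  # hypothetical pair of candidate sorting rules


def run_session(rule_in_effect, start_rule="color"):
    """Simulate a hypothetical win-stay/lose-shift agent on a rule-switch task.

    rule_in_effect: the rule that is rewarded on each trial; it may change
    without warning. Returns a list of (trial, rule_used, rewarded) tuples.
    """
    held_rule = start_rule  # working memory for the currently assumed rule
    log = []
    for trial, correct_rule in enumerate(rule_in_effect):
        rewarded = held_rule == correct_rule
        log.append((trial, held_rule, rewarded))
        if not rewarded:
            # Lose-shift: use the last outcome to switch to the other rule.
            held_rule = RULES[1 - RULES.index(held_rule)]
        # Win-stay: after a reward, the held rule is kept unchanged.
    return log


schedule = ["color"] * 4 + ["shape"] * 4  # unannounced rule change at trial 4
for trial, rule, rewarded in run_session(schedule):
    print(trial, rule, rewarded)
```

The agent makes a single error at trial 4, when the rule changes, and follows the new rule thereafter. Disrupting either ingredient (forgetting the held rule, or ignoring the last outcome) degrades performance in a different way.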

A classic finding following aspiration lesions of the OFC is a deficit in learning about reversals of stimulus-reward contingencies (Jones & Mishkin 1972). However, a recent study used ibotenic acid to place a discrete lesion in OFC areas 11 and 13 (Kazama & Bachevalier 2009) and failed to find a deficit in reversal learning. We therefore may need to revise our understanding of how OFC contributes to reversal learning (similar to revisions made with reference to amygdala function, see above); however, the lack of an effect in the recent study may have been due to the anatomically restricted nature of the lesion. This issue will require further investigation.

Prefrontal-Amygdala Interactions

The amygdala is reciprocally connected with the PFC, primarily the OFC and ACC, and more diffusely with other parts of the PFC (Figure 1). Studies have begun to examine possible functional interactions between the amygdala and the OFC in mediating different aspects of reinforcement-based and emotional behavior. In one powerful set of experiments, Baxter and colleagues (2000) performed a crossed surgical disconnection of the amygdala and the OFC by lesioning the amygdala in one hemisphere and the OFC in the other [connections between the amygdala and the OFC are ipsilateral (Ghashghaei & Barbas 2002)]. As noted above, bilateral lesions of the monkey amygdala or OFC impair reinforcer devaluation; consistent with this finding, the authors found that surgical disconnection also impaired reinforcer devaluation, indicating that the amygdala and the OFC must interact to update the value of a reinforcer. Notably, in humans, neuroimaging studies of rare patients with focal amygdala lesions have revealed that the BOLD signal related to reward expectation in the ventromedial PFC depends on a functioning amygdala (Hampton et al. 2007). Investigators have also described functional interactions between the amygdala and the OFC in rodents (Saddoris et al. 2005, Schoenbaum et al. 2003); however, as noted above, rodent OFC may not correspond to any part of the primate granular/dysgranular PFC (Murray 2008, Preuss 1995, Wise 2008).
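The logic of the crossed-disconnection design can be expressed as a small check. The set-of-pairs representation and the function name are hypothetical, and treating the connections as strictly ipsilateral is a simplifying assumption.

```python
HEMISPHERES = ("left", "right")


def hemispheres_with_intact_circuit(lesions):
    """Return hemispheres in which both the amygdala and the OFC are spared.

    lesions: set of (hemisphere, structure) pairs.
    Assumes, for illustration, purely ipsilateral amygdala-OFC connections,
    so the two structures can interact only within a hemisphere.
    """
    return [
        h for h in HEMISPHERES
        if (h, "amygdala") not in lesions and (h, "OFC") not in lesions
    ]


crossed = {("left", "amygdala"), ("right", "OFC")}
same_side = {("left", "amygdala"), ("left", "OFC")}

print(hemispheres_with_intact_circuit(crossed))    # []
print(hemispheres_with_intact_circuit(same_side))  # ['right']
```

After a crossed lesion, one amygdala and one OFC survive, yet no hemisphere contains both, so any function that requires their interaction should fail. A same-hemisphere lesion of the same two structures, shown for contrast, leaves an intact circuit on the other side.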

The lesion studies described above support the notion that the PFC and the amygdala, often in concert with each other, participate in executive functions such as attention, rule representation, working memory, planning, and valuation of stimuli and actions. In addition, these structures mediate aspects of emotional processing, including processing related to emotional valence and intensity. Together these variables form an integral part of what we have termed a mental state. However, one must exercise some caution when interpreting the results of lesion studies: Owing to potential redundancy in neural coding among brain circuits, a negative result does not necessarily imply that the lesioned area is not normally involved in the function in question. As discussed in the next section, neurons in many parts of the PFC have complex, entangled physiological properties. Given redundancy in encoding, it is therefore not surprising that lesions in these parts of the PFC often do not impair functioning related to the full range of response properties.
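Why redundancy can mask lesion effects can be illustrated with a deliberately simple readout. The area names, activity values, and sign-based decoder are hypothetical; real codes are noisy and entangled.

```python
def decode_valence(population, lesioned=frozenset()):
    """Read out valence as the sign of summed activity in unlesioned areas.

    population: maps a hypothetical area name to unit activities, where
    positive activity signals "pleasant" and negative signals "unpleasant".
    """
    total = 0.0
    for area, activities in population.items():
        if area not in lesioned:
            total += sum(activities)
    if total > 0:
        return "pleasant"
    if total < 0:
        return "unpleasant"
    return "undetermined"


# Two hypothetical areas carrying the same (redundant) valence signal.
pleasant_trial = {"area_X": [0.8, 0.5], "area_Y": [0.6, 0.7]}

print(decode_valence(pleasant_trial))                        # pleasant
print(decode_valence(pleasant_trial, {"area_X"}))            # pleasant
print(decode_valence(pleasant_trial, {"area_X", "area_Y"}))  # undetermined
```

Removing either area alone leaves the readout intact, so a single-area lesion would show no deficit even though that area normally carries the signal.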

Neurophysiological Components of Mental States

We have defined mental states as action dispositions, where actions are broadly defined to include cognitive, physiological, or behavioral responses. Here, we focus on neural signals in the PFC and the amygdala that may encode key cognitive and emotional features of a mental state: the valuation of stimuli, the valence and intensity of emotional reactions to stimuli, our knowledge of the context of sensory stimuli and the requisite rules in that context, and our plans for interacting with stimuli in the environment. We review recent neurophysiological recordings from behaving nonhuman primates that demonstrate coding of all these variables, often with multiple variables encoded in an entangled fashion.

Neural Representations of Emotional Valence and Arousal in the Amygdala and the OFC

In recent years, a number of physiological experiments have been directed at understanding the coding properties of neurons in the amygdala and the OFC. The amygdala has long been investigated with respect to aversive processing and its prominent role in fear conditioning, primarily in rodents (Davis 2000, LeDoux 2000, Maren 2005). However, a number of scientists have recognized that the amygdala also plays a role in appetitive processing (Baxter & Murray 2002). Early neurophysiological experiments in monkeys established the amygdala as a potential locus for encoding the affective properties of stimuli (Fuster & Uyeda 1971; Nishijo et al. 1988a, b; Sanghera et al. 1979; Sugase-Miyamoto & Richmond 2005).

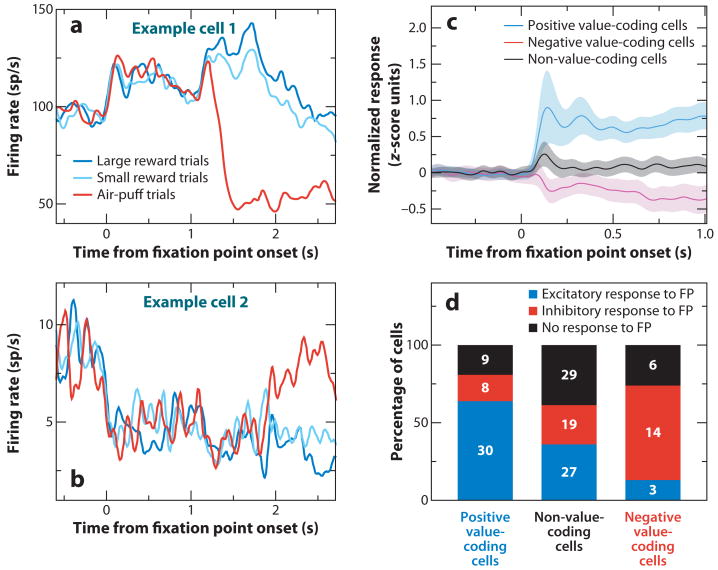

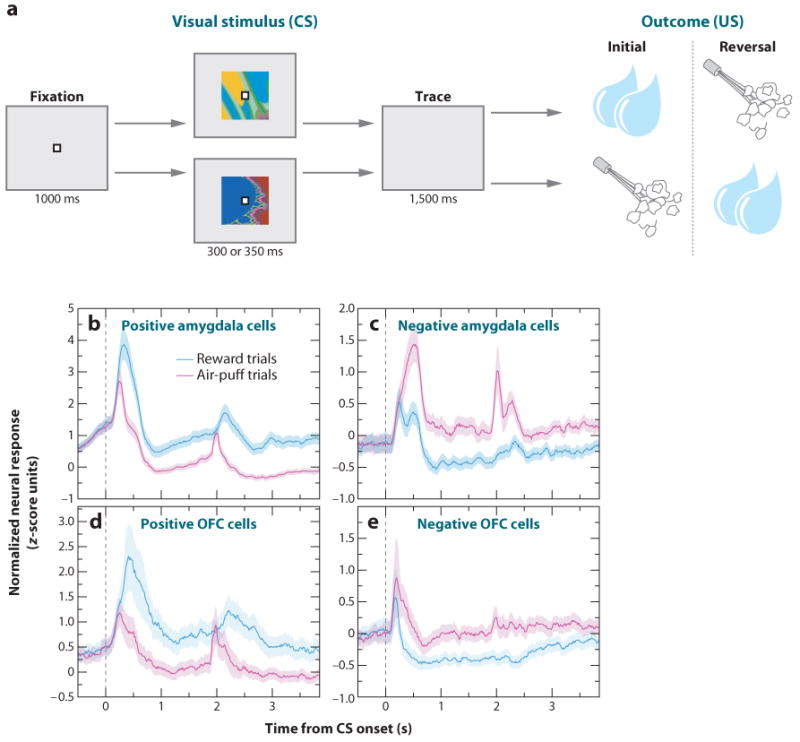

To determine whether neurons in the primate amygdala preferentially encode rewarding or aversive associations, Paton and colleagues (2006) recorded single-neuron activity while monkeys learned that visual stimuli (novel abstract fractal images) predicted either liquid rewards or aversive air puffs directed at the face. The experiments employed a Pavlovian procedure called trace conditioning, in which there is a brief temporal gap (the trace interval) between the presentation of a conditioned stimulus (CS) and an unconditioned stimulus (US) (Figure 2a). Monkeys exhibited two behaviors that demonstrated their learning of the stimulus-outcome contingencies: anticipatory licking (an approach behavior) and anticipatory blinking (a defensive behavior). After monkeys learned the initial CS-US associations, the reinforcement contingencies were reversed. Neurophysiological recordings revealed that some amygdala neurons responded more strongly when a CS was paired with a reward (positive value-coding neurons), whereas other neurons responded more strongly when the same CS was paired with an aversive stimulus (negative value-coding neurons). Although individual neurons exhibited this differential response during different time intervals (e.g., during the CS interval or parts of the trace interval), across the population of neurons the value-related signal extended across the entire trial (Figure 2b,c). Positive and negative value-coding neurons appeared to be intermingled in the amygdala; both types were dispersed within (and perhaps beyond) the basolateral complex (Belova et al. 2008, Paton et al. 2006).
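Labeling a neuron as positive or negative value-coding rests on comparing its responses to the same CS when that CS predicts reward versus an air puff, which removes any dependence on the image itself. A simplified sketch follows. The function name, the fixed threshold, and the firing rates are hypothetical; the threshold stands in for the statistical analyses used in the original studies, which are not reproduced here.

```python
from statistics import mean


def classify_value_coding(rates_cs_rewarded, rates_cs_punished, min_diff=2.0):
    """Label a neuron by comparing its responses to one CS across contingencies.

    rates_cs_rewarded / rates_cs_punished: firing rates (spikes/s) on trials
    when the same CS predicted reward or an air puff, respectively.
    min_diff is a hypothetical threshold standing in for a statistical test.
    """
    diff = mean(rates_cs_rewarded) - mean(rates_cs_punished)
    if diff > min_diff:
        return "positive value-coding"
    if diff < -min_diff:
        return "negative value-coding"
    return "not value-selective"


# Invented example rates, for illustration only.
print(classify_value_coding([12, 14, 13], [6, 5, 7]))  # positive value-coding
print(classify_value_coding([4, 5, 3], [10, 9, 11]))   # negative value-coding
print(classify_value_coding([8, 9], [8.5, 8.5]))       # not value-selective
```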

Figure 2.

Neural representation of positive and negative valence in the amygdala and the OFC. (a) Trace-conditioning task involving both appetitive and aversive conditioning. Monkeys first centered gaze at a fixation point. Each experiment used novel abstract images as conditioned stimuli (CS). After fixating for 1 s, monkeys viewed a CS briefly, and following a 1.5-s trace interval, the unconditioned stimulus (US) was delivered. One CS predicted liquid reward, and a second CS predicted an aversive air puff directed at the face. After monkeys learned these initial associations, as indicated by anticipatory licking and blinking, the reinforcement contingencies were reversed. A third CS appeared on one-third of the trials, and it predicted either nothing or a much smaller reward throughout the experiment (not depicted in the figure). (b–e) Normalized and averaged population peri-stimulus time histograms (PSTHs) for positive- and negative-encoding amygdala (b,c) and OFC (d,e) neurons.

Theoretical accounts of reinforcement learning often posit a neural representation of the value of the current situation as a whole (state value). Data from Belova et al. (2008) suggest that the amygdala could encode the value of the state instantiated by the CS presentation. Neural responses to the fixation point, which appeared at the beginning of trials, were consistent with a role of the amygdala in encoding state value. One can argue that the fixation point is a mildly positive stimulus because monkeys choose to look at it to initiate trials; indeed, positive value-coding neurons tend to increase their firing in response to fixation point presentation, and negative value-coding neurons tend to decrease their firing (Figure 3). Neural signaling after reward or air-puff presentation also indicates that amygdala neurons track state value: At the population level, differential activity extends well beyond the termination of the USs (Figure 2b,c). All these signals related to reinforcement contingencies could be used to coordinate physiological and behavioral responses specific to appetitive and aversive systems; therefore, they form a potential neural substrate for positive and negative emotional variables.
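The notion of state value can be made concrete with temporal-difference (TD) learning, a standard reinforcement-learning algorithm. The sketch below is illustrative only; nothing in the studies above commits the amygdala to this algorithm. It assumes a trial chain of fixation, CS, trace, and US. It also assumes three equiprobable CSs with hypothetical outcome values: +1 for juice, -1 for air puff, and +0.1 for the CS predicting little or nothing. Because the fixation state precedes an unpredictable CS, its learned value becomes the average value of the upcoming CS states, which comes out mildly positive under these assumptions. Step sizes of 1/(visit count) are a choice made so that the estimates settle rather than fluctuate from trial to trial.

```python
import numpy as np

# Hypothetical outcome values for the three CSs (A: juice, B: air puff, C: small juice).
OUTCOME = {"A": 1.0, "B": -1.0, "C": 0.1}


def learn_state_values(n_trials=20000, seed=0):
    """TD(0) over the chain fixation -> CS -> trace -> US (terminal), discount 1.

    Each state uses step size 1 / (number of visits), so its estimate converges
    to the average of its TD targets.
    """
    rng = np.random.default_rng(seed)
    cs_names = list(OUTCOME)
    v = {"fix": 0.0}
    v.update({(cs, epoch): 0.0 for cs in cs_names for epoch in ("cs", "trace")})
    visits = {state: 0 for state in v}

    def update(state, target):
        visits[state] += 1
        v[state] += (target - v[state]) / visits[state]

    for _ in range(n_trials):
        cs = cs_names[rng.integers(len(cs_names))]  # CS identity is unpredictable
        update("fix", v[(cs, "cs")])                # no reinforcement at CS onset
        update((cs, "cs"), v[(cs, "trace")])        # no reinforcement at trace onset
        update((cs, "trace"), OUTCOME[cs])          # US delivered; trial ends
    return v


values = learn_state_values()
print(round(values["fix"], 2))        # small positive number: average of the CS states
print(round(values[("A", "cs")], 2))  # close to +1
print(round(values[("B", "cs")], 2))  # close to -1
```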

Figure 3.

Amygdala neurons track state value during the fixation interval. (a,b) PSTHs aligned on fixation point onset from two example amygdala neurons (a, positive encoding; b, negative encoding), revealing responses to the fixation point consistent with the encoding of state value. (c) Averaged and normalized responses to the fixation point for positive, negative, and non-value-coding amygdala neurons. (d) Histograms showing the number of cells that increased, decreased, or did not change their firing rates as a function of the valence each neuron encoded. Note that the fixation point may be understood as a mildly positive stimulus, so positive neurons tend to increase their response to it and negative neurons tend to decrease their response. Adapted with permission from Belova et al. (2008, figure 2).

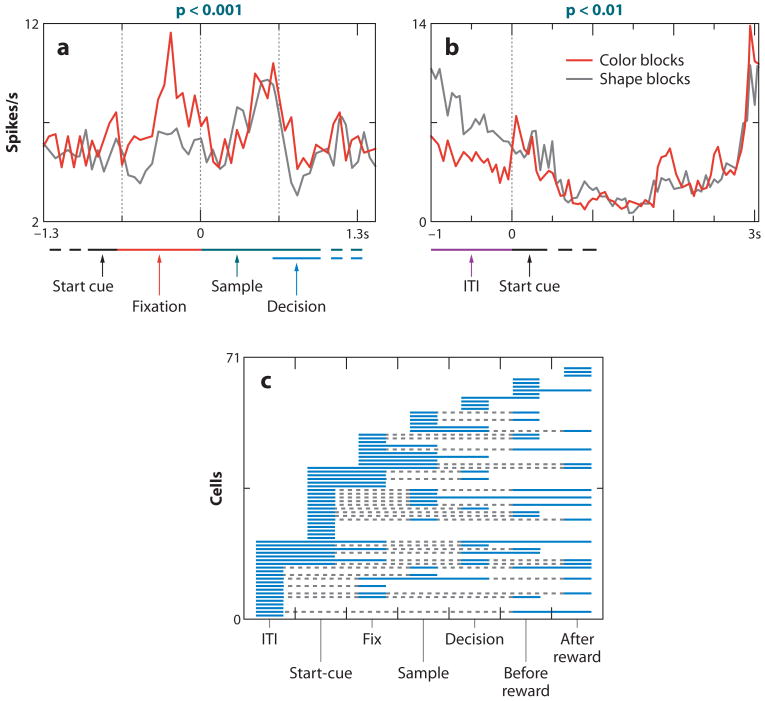

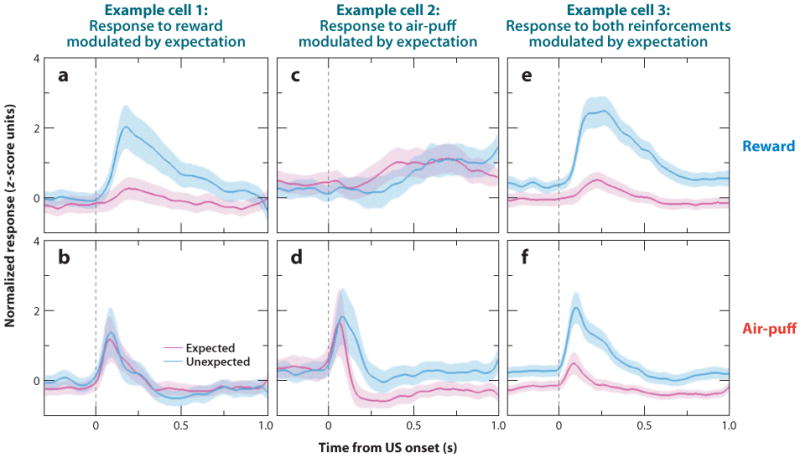

As discussed earlier, however, valence is only one dimension of emotion; a second dimension is emotional intensity, or arousal. Recent data also link the amygdala to this second dimension. Belova and colleagues (2007) measured responses to rewards and aversive air puffs when they were either expected or unexpected. Surprising reinforcement is generally experienced as more arousing than the same reinforcement delivered predictably. Consistent with this notion, expectation often modulated responses to reinforcement in the amygdala, and in general, neural responses were enhanced when reinforcement was surprising. For some neurons, this modulation occurred only for rewards or only for air puffs, but not for both (Figure 4a–d). These neurons therefore could participate in valence-specific emotional and cognitive processes. However, many neurons modulated their responses to both rewards and air puffs (Figure 4e,f). These neurons could underlie processes such as arousal or enhanced attention, which occur in response to intense emotional stimuli of both valences. Consistent with this role, neural correlates of skin conductance responses, which are mediated by the sympathetic nervous system, have been reported in the amygdala (Laine et al. 2009). Moreover, this type of valence-insensitive modulation of reinforcement responses by expectation could be appropriate for driving reinforcement learning through attention-based learning algorithms (Pearce & Hall 1980).
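In the Pearce-Hall (1980) family of models, the associability of a cue, which sets how fast it is learned about, is driven by the *unsigned* prediction error. As a result, a surprising reward and a surprising air puff raise it equally, which matches the valence-insensitive modulation described above. The sketch below uses a commonly used hybrid variant that smooths associability with a weight `gamma`. It is an assumption made for illustration, not the form used in the original 1980 paper or in the recordings. The parameter values `kappa`, `gamma`, and `alpha0` are hypothetical.

```python
def pearce_hall(outcomes, kappa=0.3, gamma=0.5, alpha0=1.0):
    """Track one CS across trials with a hybrid Pearce-Hall rule (assumed variant).

    outcomes: reinforcement on each trial (+1 reward, -1 air puff, 0 nothing).
    Returns a list of (value, associability, prediction_error) tuples, one per trial.
    """
    value, alpha = 0.0, alpha0
    history = []
    for outcome in outcomes:
        delta = outcome - value                            # signed prediction error
        value += kappa * alpha * delta                     # learning gated by associability
        alpha = gamma * abs(delta) + (1 - gamma) * alpha   # unsigned surprise: valence-blind
        history.append((value, alpha, delta))
    return history


# Reward on 30 trials, then an unexpected switch to air puffs.
history = pearce_hall([1.0] * 30 + [-1.0] * 5)
print(round(history[29][1], 2), round(history[30][1], 2))  # low after learning, high after surprise

# A surprising reward and a surprising air puff raise associability by the same amount.
after_reward = pearce_hall([0.0] * 10 + [1.0])[-1][1]
after_puff = pearce_hall([0.0] * 10 + [-1.0])[-1][1]
print(after_reward == after_puff)  # True
```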

Figure 4.

Valence-specific and valence-nonspecific encoding in the amygdala. (a–f) Normalized and averaged neural responses to reinforcement when it was expected (magenta) and unexpected (cyan) for reward (a,c,e) and air puff (b,d,f). Expectation modulated reinforcement responses for only one valence of reinforcement in some cells (a–d) but modulated reinforcement responses for both valences in many cells (e,f). These responses are consistent with a role of the amygdala in valence-specific processes, as well as valence-nonspecific processes, such as attention, arousal, and motivation. Adapted with permission from Belova et al. (2007, figure 3).

Of course, the amygdala does not operate in isolation. In particular, its close anatomical connectivity and functional overlap with the OFC raise the question of how OFC processing compares with and interacts with amygdala processing. Using a paradigm similar to that described above, Morrison and Salzman discovered that the OFC contains neurons that prefer rewarding or aversive associations, as in the amygdala. They also found that, across the population, the signals extend from shortly after CS onset until well after US offset (Figure 2d,e; data largely collected from area 13) (Morrison & Salzman 2009, Salzman et al. 2007). OFC responses to the fixation point are also modulated according to whether a cell has a positive or negative preference, in a manner similar to the amygdala (S. Morrison & C.D. Salzman, unpublished data). Together, these data suggest that the OFC could also participate in a representation of state value.

Both positive and negative valences are represented in the amygdala and the OFC, but how might OFC and amygdala interact with each other? Unpublished data indicate that the appetitive system—composed of cells that prefer positive associations—updates more quickly in the OFC, adapting to changes in reinforcement contingencies faster than the appetitive system in the amygdala (S. Morrison & C.D. Salzman, personal communication, 2009). However, the opposite is true for the aversive system: Negative-preferring amygdala neurons adapt to changes in reinforcement contingencies more rapidly than do their counterparts in the OFC. Thus, the computational steps that update representations in appetitive and aversive systems are not the same in the amygdala and the OFC, even though the neurons appear to be anatomically interspersed in both structures. In contrast, after reinforcement contingencies are well learned in this task, the OFC signals upcoming reinforcement more rapidly than does the amygdala in both appetitive and aversive cells. This finding is consistent with a role for the OFC in rapidly signaling stimulus values and/or expected outcomes once learning is complete—a signal that could be used to exert prefrontal control over limbic structures such as the amygdala or to direct behavioral responding more generally.
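One simple way to make "updates more quickly" concrete is to give each population its own learning rate in a delta-rule (Rescorla-Wagner) update. We can then count how many trials after a reversal it takes for the learned value of a CS to change sign. The rates 0.3 and 0.1 below are hypothetical, not estimates from the recordings. The sketch only shows how a difference in a single parameter could produce the kind of asymmetry described, for example a faster appetitive system in the OFC and a faster aversive system in the amygdala.

```python
def trials_to_reverse(learning_rate, n_pre=50, n_post=50):
    """Delta-rule value of one CS that is rewarded (+1) for n_pre trials and
    then punished (-1). Returns the number of post-reversal trials needed for
    the value estimate to change sign, or None if it does not within n_post."""
    value = 0.0
    for _ in range(n_pre):
        value += learning_rate * (1.0 - value)
    for trial in range(1, n_post + 1):
        value += learning_rate * (-1.0 - value)
        if value < 0.0:
            return trial
    return None


# Hypothetical learning rates for a "fast" and a "slow" population.
for label, lr in [("fast", 0.3), ("slow", 0.1)]:
    print(label, trials_to_reverse(lr))  # fast 2, slow 7
```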

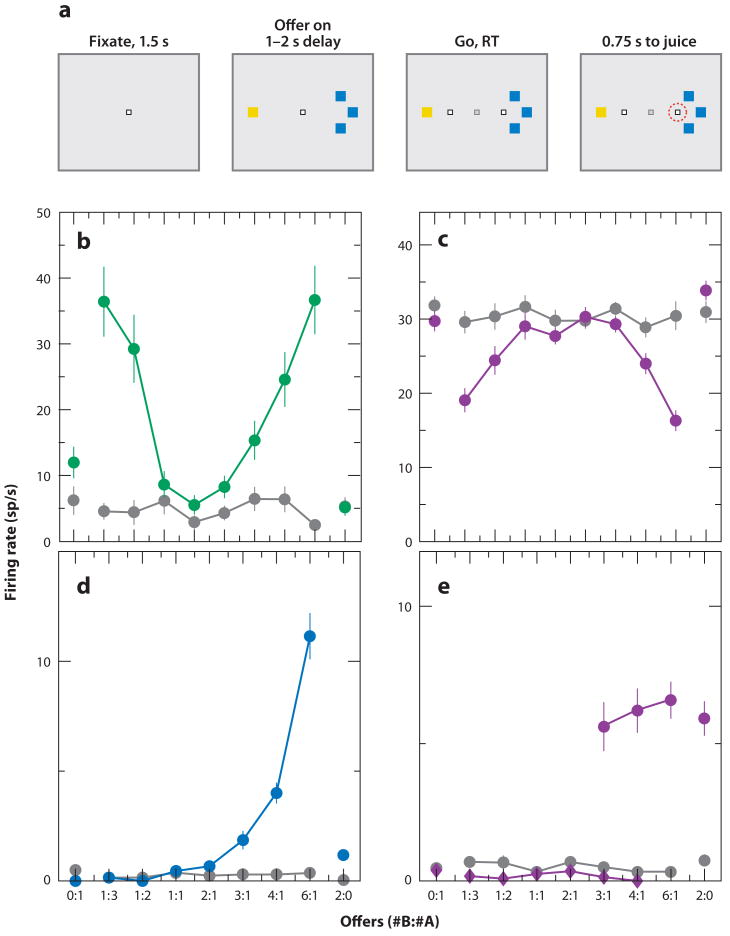

The studies described above used Pavlovian conditioning (a procedure in which no action is required of the subject to receive reinforcement) to characterize neural response properties in relation to appetitive and aversive processing. However, many other studies have used decision-making tasks to quantify the extent to which neural response properties are related to reward values (Dorris & Glimcher 2004; Kennerley et al. 2008; Kim et al. 2008; Lau & Glimcher 2008; McCoy & Platt 2005; Padoa-Schioppa & Assad 2006, 2008; Platt & Glimcher 1999; Roesch & Olson 2004; Samejima et al. 2005; Sugrue et al. 2004; Wallis 2007; Wallis & Miller 2003). Moreover, similar tasks are often used to examine human valuation processes using fMRI (Breiter et al. 2001, Gottfried et al. 2003, Kable & Glimcher 2007, Knutson et al. 2001, Knutson & Cooper 2005, McClure et al. 2004, Montague et al. 2006, O'Doherty et al. 2001, Rangel et al. 2008, Seymour et al. 2004). The strength of decision-making tasks is that the investigator can directly compare the subjects' preferences, on a fine scale, with neural signaling. For example, Padoa-Schioppa & Assad (2006) trained monkeys to indicate which of two possible juice rewards they wanted. The juices were offered in different amounts, signaled by visual tokens that indicated both juice type and juice amount (Figure 5a). Using this task, they discovered that multiple signals were present in different populations of neurons in the OFC. Some OFC neurons encoded what the authors termed “chosen value”: Firing was correlated with the value of the chosen reward, with some neurons firing more for higher values and others for lower values (Figure 5b,c). These cell populations are reminiscent of the positive and negative value-coding neurons uncovered using the Pavlovian procedure described above. However, because negative valences were not explored in these experiments, these neurons may represent motivation, arousal, or attention, which are correlated with reward value (Maunsell 2004, Roesch & Olson 2004). Other OFC neurons encoded the value of one of the rewards offered (offer value cells; Figure 5d), and still others simply encoded the type of juice offered (taste neurons; Figure 5e). This is consistent with previous identification of taste-selective neurons in the OFC (Pritchard et al. 2007, Wilson & Rolls 2005). Further data suggested that OFC responses were menu-invariant, i.e., a neuron's response to a given offer did not depend on which other juice was offered alongside it (Padoa-Schioppa & Assad 2008). Menu invariance is consistent with transitivity (if A is preferred to B, and B to C, then A is preferred to C). It implies that value could be represented as a context-independent economic currency that supports decision-making. However, this finding may depend on the exact design of the task. Other studies, focusing on partially overlapping regions of the OFC, have reported neural responses that reflect relative reward preferences, i.e., responses that vary with context and do not meet the standard of transitivity (Tremblay & Schultz 1999).
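The three variables named above can be stated compactly: *offer value* (the value of each option on the menu), *chosen value* (the value of the option actually taken), and juice identity. The sketch below assumes each juice has a fixed, hypothetical value per drop on a single scalar scale, and uses a deterministic max rule. Real choices are probabilistic near indifference and are usually fit with a sigmoid. Because every juice maps onto one scale, preferences derived from it are automatically transitive. Relative, context-dependent coding of the kind reported by Tremblay & Schultz (1999) would not be captured by this scheme.

```python
from itertools import permutations

# Hypothetical per-drop values, in units of juice C.
UNIT_VALUE = {"A": 3.0, "B": 1.5, "C": 1.0}


def offer_value(juice, quantity):
    """Offer value: quantity times the assumed value of one drop of that juice."""
    return quantity * UNIT_VALUE[juice]


def choose(offer1, offer2):
    """Each offer is (juice, quantity). Returns (chosen offer, chosen value, offer values).
    Deterministic max rule; ties go to offer1 (an arbitrary convention)."""
    v1, v2 = offer_value(*offer1), offer_value(*offer2)
    chosen = offer1 if v1 >= v2 else offer2
    return chosen, max(v1, v2), (v1, v2)


def prefers(x, y):
    """Is one drop of juice x chosen over one drop of juice y?"""
    return choose((x, 1), (y, 1))[0][0] == x


# With a single scalar value scale, x > y and y > z always imply x > z.
transitive = all(
    prefers(x, z)
    for x, y, z in permutations(UNIT_VALUE, 3)
    if prefers(x, y) and prefers(y, z)
)
print(choose(("A", 1), ("B", 3)))  # 1A (3.0) vs 3B (4.5): B chosen, chosen value 4.5
print(transitive)                  # True
```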

Figure 5.

OFC neural responses during economic decision-making. (a) Behavioral task. Monkeys centered gaze at a fixation point and then viewed two visual tokens (yellow and blue squares) that indicated the type and quantity of juice reward offered for a saccade to each location. After fixation point extinction, the monkey was free to choose which reward it wanted by making a saccade to one of the targets. The amounts of each juice offered were titrated against each other to develop a full psychometric characterization of the monkey's preferences as a function of the two juice types offered. (b–e) Activity of four neurons revealing different types of response profiles. The x-axis shows the quantity of each offer type. Chosen value neurons increased (b) or decreased (c) their firing as the value of the chosen option increased. Offer value neurons (d) increased their firing when the value of one of the juices offered increased. Juice neurons (e) increased their firing on trials in which a particular juice type was offered, independent of the amount of juice offered. Adapted from Padoa-Schioppa & Assad (2006, figures 1 and 3) with permission.

This rich variety of response properties in the OFC and the amygdala still represents only a subset of the types of encoding that have been observed in these brain areas. For example, amygdala neurons recorded during trace conditioning often exhibited image selectivity (Paton et al. 2006), and similar signals have been observed in the OFC (S. Morrison & C.D. Salzman, personal communication). Investigators have also described amygdala neural responses to faces, vocal calls, and combinations of faces and vocal calls (Gothard et al. 2007, Kuraoka & Nakamura 2007, Leonard et al. 1985). OFC neurons, meanwhile, encode gustatory working memory and modulate their responses depending on reward magnitude, reward probability, and the time and effort required to obtain a reward (Kennerley et al. 2008). Overall, in addition to encoding variables related to valence and arousal/intensity (two variables central to the representation of emotion), amygdala and OFC neurons encode a variety of other variables in an entangled fashion (Paton et al. 2006, Rigotti et al. 2010a).

Neural Representations of Cognitive Processes in the PFC

We have reviewed briefly the encoding of valence and arousal in the amygdala and the OFC, and now we turn our attention to the encoding of other mental state variables in the PFC. Our goal is not to discuss systematically every aspect of PFC neurophysiology, but instead to highlight response properties that may play an especially vital role in setting the variables that constitute a mental state: encoding of rules, which are essential for appropriately contextualizing environmental stimuli and other variables; flexible encoding of stimulus-stimulus associations across time and sensory modality; and encoding of complex motor plans.

Encoding of rules in the PFC

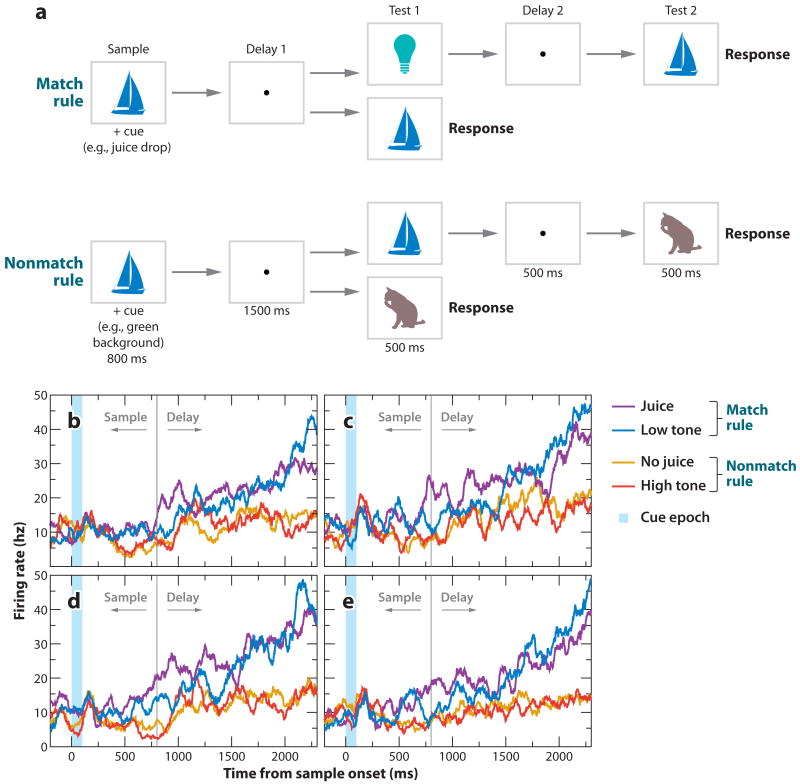

Understanding rules for behavior forms the basis for much of our social interaction; therefore, rules must routinely be represented in our brains. A critical feature of human cognition is the ability to apply abstract, as opposed to concrete, rules, i.e., rules that can be generalized and flexibly applied to new situations. In a striking demonstration of this type of rule encoding in the PFC, Wallis and Miller recorded from three parts of the PFC (dorsolateral, ventrolateral, and the OFC) while monkeys performed a task requiring them to switch flexibly between two abstract rules (Figure 6) (Wallis et al. 2001). In this task, monkeys viewed two sequentially presented visual cues that could be either matching or nonmatching. On different trials, monkeys had to apply either a match rule or a nonmatch rule to guide their responding, indicated by an additional cue presented together with the sample object. The visual stimuli used under the two rules were identical. Thus, the only difference between trial types was the rule in effect, and this information must be part of the monkey's mental state. Many neurons in all three parts of the PFC exhibited selective activity depending on the rule in effect; some neurons preferred match and others nonmatch (Figure 6). Of note, rule-selective activity was only one type of selectivity that was present: Neurons often responded selectively to the stimuli themselves, as well as to interactions between the stimuli and the rules. Therefore, it appears that these neurons represent abstract rules along with other variables in an entangled manner.
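Two points from this task can be stated precisely. First, the correct action is a function of both the rule and the stimuli, so identical stimuli call for opposite responses. Second, an "entangled" neuron can mix rule, stimulus, and interaction terms and still carry rule information. The sketch below is a toy, not the authors' analysis. The stimulus names `"bird"` and `"flower"` and all firing-rate weights are hypothetical.

```python
def correct_release(rule, sample, test):
    """Correct action in the match/nonmatch task: release the lever when the test
    matches the sample under the match rule, or differs from it under the nonmatch rule."""
    return (test == sample) == (rule == "match")


def firing_rate(rule, sample):
    """Hypothetical entangled neuron (spikes/s): a rule term, a sample-stimulus
    term, and a rule x stimulus interaction. All weights are invented."""
    rate = 10.0
    if rule == "match":
        rate += 6.0   # prefers the match rule
    if sample == "bird":
        rate += 3.0   # prefers one sample picture
    if rule == "nonmatch" and sample == "bird":
        rate += 2.0   # rule-by-stimulus interaction
    return rate


# Identical stimuli, opposite correct responses: only the rule differs.
print(correct_release("match", "bird", "bird"), correct_release("nonmatch", "bird", "bird"))  # True False
# The rule can still be read from this neuron whatever the sample is.
for sample in ("bird", "flower"):
    print(sample, firing_rate("match", sample), firing_rate("nonmatch", sample))
```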

Figure 6.

Single neurons encode rules in PFC. (a) Behavioral task. Monkeys grasped a lever to initiate a trial. They then had to center gaze at a fixation point while viewing a sample object, wait during a brief delay, and then view a test object. Two types of trials are depicted (double horizontal arrows). On match rule trials, monkeys had to release the lever if the test object matched the sample object. On nonmatch rule trials, monkeys had to release the lever if the test object did not match the sample. Otherwise, they had to hold the lever until a third object appeared that always required lever release. The rules in effect varied trial-by-trial by virtue of a different sensory cue (e.g., tones or juice) presented during viewing of the sample object. (b,c) PFC neurons encoding match (b) or nonmatch (c) rules. Activity was higher in relation to the rule in effect regardless of the stimuli shown. Adapted with permission from Wallis et al. (2001, figures 1 and 2).

In the work by Wallis and Miller, the rule in effect was cued on every trial, and the monkeys switched from one rule to the other on a trial-by-trial basis. In contrast, Mansouri and colleagues (2006) used a task in which the rule switched in an uncued manner on a block-by-block basis, and monkeys had to discover the rule in effect in a given block (an analog of the Wisconsin Card Sorting Test). In one block of trials, monkeys had to apply a color-match rule to match two stimuli, and in the other block, monkeys had to apply a shape-match rule. The authors discovered that neural activity in the dorsolateral PFC encoded the rule in effect; different neurons encoded color and shape rules (Figure 7a,b). Rule encoding occurred during the trial itself but also during the fixation interval, and even during the intertrial interval (ITI) (Figure 7c). This observation implies that a neural signature of the rule in effect was maintained throughout a block of trials, even when the monkey was not performing a trial, as if the monkey had to keep the rule in mind. We suggest that this representation of rules therefore constitutes a distinctive component of a mental state.
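The computational requirement behind ITI rule coding can be illustrated with a minimal win-stay/lose-shift agent. Its only memory is the variable `belief`, which must survive from one trial to the next, the counterpart of a rule signature that persists through the intertrial interval. The strategy is a hypothetical stand-in, not a claim about how the monkeys solved the task; real animals typically need more than one error to switch. Because one choice object matches the sample in color and the other in shape, choosing by the wrong dimension is always an error.

```python
def simulate_wsls(block_rules, trials_per_block=20, start_rule="color"):
    """Win-stay/lose-shift agent for an uncued color/shape rule task.

    block_rules: the (uncued) rule in force in each successive block.
    Returns the number of errors made in each block.
    """
    belief = start_rule  # carried across trials, including intertrial intervals
    errors_per_block = []
    for true_rule in block_rules:
        errors = 0
        for _ in range(trials_per_block):
            if belief != true_rule:  # choosing by the wrong dimension is an error
                errors += 1
                belief = "shape" if belief == "color" else "color"  # lose-shift
            # on correct trials the belief is kept (win-stay)
        errors_per_block.append(errors)
    return errors_per_block


print(simulate_wsls(["color", "shape", "color", "shape"]))  # [0, 1, 1, 1]
```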

Figure 7.

PFC neurons encode rules in effect across time within a trial. Monkeys performed a task in which they had to match either the shape or color of two simultaneously presented objects with a sample object viewed earlier in the trial. Monkeys learned by trial and error whether a shape or color rule was in effect within a block of trials, and block switches were uncued to the monkey. (a,b) Two PFC cells that fired differentially depending on the rule in effect; activity differences emerged during the fixation (a) and intertrial intervals (ITI) (b). Activity is aligned on a start cue, which occurs before fixation on every trial. During the sample interval, one stimulus is presented over the fovea. During the decision interval, two stimuli are presented to the left and right; one matches the sample stimulus in color, and the other matches it in shape. The correct choice can be made only if the monkey has learned the rule in effect for the current block. (c) Distribution of activity differences between shape and color rules for each cell studied in each time interval of a trial. Each line corresponds to a single cell, and the solid parts of a line indicate when the cell fired differentially between color and shape blocks. Encoding of rules occurred in all time epochs, indicating that PFC neurons encode the rule in effect across time within a trial. Adapted with permission from Mansouri et al. (2006, figures 2 and 3).

Temporal integration of sensory stimuli and actions

One's current situation is defined not only by the stimuli currently present, but also by the temporal context in which those stimuli appear and by the associations those stimuli have with other stimuli. Fuster (2008) proposed that a cardinal function of the PFC is to provide a representation that reflects the temporal integration of relevant sensory information. Indeed, Fuster and colleagues (2000) demonstrated this type of encoding in areas 6, 8, and 9/46 of the dorsolateral PFC. In this study, monkeys performed a task in which they had to associate an auditory tone (high or low) with a subsequently presented colored target (red or green). The authors discovered cells that responded selectively to associated tones and colors, e.g., cells that fired strongly only for the high tone and its associated target. Moreover, failures to represent the correct association were correlated with behavioral errors. Thus, the ability of PFC neurons to form and represent cross-temporal and cross-modal associations was linked to subsequent actions.

The integration of sensory stimuli in the environment, as described above, is key for setting mental state variables; moreover, if we recall that a mental state can be defined as a disposition to action, any representation of planned actions must clearly be an important element of our mental state. Neural signals related to planned actions have been reported in numerous parts of the PFC in several tasks (Fuster 2008, Miller & Cohen 2001). In recent years, scientists have used more complex motor tasks to explore encoding of sequential movement plans. In the dorsolateral PFC, Tanji and colleagues have described activity related to cursor movements that will result from a series of planned arm movements (Mushiake et al. 2006). This activity therefore reflects future events that occur as a result of planned movements. Other studies of PFC neurons have discovered neural ensembles that predict a sequence of planned movements (Averbeck et al. 2006); when the required sequence of movements changes from block to block, the neural ensemble coding changes, too. In a manner reminiscent of rule encoding, this coding of planned movements was also present during the ITI, as if these cells were keeping note of the planned movement sequence throughout the block of trials (Averbeck & Lee 2007). Thus, the PFC not only tracks stimuli across time, but also represents the temporal integration of planned actions and the events that hinge on them. Dorsolateral PFC may well interact with the OFC and the ACC, and, via these areas, the amygdala, to make decisions based on the values of both environmental stimuli and internal variables and then to execute these decisions via planned action sequences.

Neural Networks and Mental States: A Conceptual and Theoretical Framework for Understanding Interactions between Cognition and Emotion

We have so far reviewed how neurons in the amygdala and the PFC may encode neural signals representing variables—some more closely tied to emotional processes, and others to cognitive processes—that are components of mental states and how these representations are often entangled (i.e., more than one variable is encoded by a single neuron). But how do these neurons interact within a network to represent mental states in their entirety? Moreover, how can cognitive processes regulate emotional processes?

A central element of emotional regulation involves developing the ability to alter one's emotional response to a stimulus. In general, one can consider at least two basic ways in which this can occur. First, learning mechanisms may operate to change the representation of the emotional meaning of a stimulus. Indeed, one could simply forget or overwrite a previously stored association. Moreover, consider a stimulus previously associated with a particular reinforcement, such that the stimulus elicits an emotional response. Re-experiencing that stimulus in the absence of the associated reinforcement can induce extinction. Extinction is thought to be a learning process whereby previously acquired responses are inhibited. In the case of fear extinction, scientists currently believe that original CS-US associations continue to be stored in the brain (so that they are not forgotten or overwritten), and inhibitory mechanisms develop that suppress the fear response (Quirk & Mueller 2008). Second, mechanisms must exist that can change or switch one's emotional responses depending on one's knowledge of his/her context or situation. A simple example of this phenomenology occurs in the game of blackjack. Here, the same card, such as a jack of clubs, can be rewarding if it brings a player's hand to a total of 21, or upsetting if it makes the player go bust. Emotional responses to the jack of clubs can thereby vary on a moment-to-moment basis depending on the player's knowledge of the situation (e.g., his/her understanding of the rules of the game and of the cards already dealt). Emotional variables here depend critically on the cognitive variables representing one's understanding of the game and one's current hand of cards. Although the mechanisms for this type of emotional regulation remain poorly understood, they presumably involve PFC-amygdala neural circuitry.
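The "inhibition, not erasure" view of extinction can be illustrated with a toy model that keeps two weights for a CS. An excitatory CS-US weight is learned during acquisition. An inhibitory weight is learned during extinction. The conditioned response is the rectified difference. This two-weight formulation, the delta-rule updates, and the learning rate are assumptions for illustration, not a model taken from Quirk & Mueller (2008). The point is only that responding can fall to near zero while the original association remains intact.

```python
def acquire_then_extinguish(n_acq=50, n_ext=50, lr=0.2):
    """Toy extinction model: an excitatory weight learned during acquisition and
    an inhibitory weight learned during extinction. Response = max(0, excit - inhib)."""
    excit, inhib = 0.0, 0.0
    for _ in range(n_acq):                  # CS paired with US (target 1)
        excit += lr * (1.0 - (excit - inhib))
    for _ in range(n_ext):                  # CS alone (target 0)
        prediction = excit - inhib
        inhib += lr * (prediction - 0.0)    # only the inhibitory weight changes
    return excit, inhib, max(0.0, excit - inhib)


excit, inhib, response = acquire_then_extinguish()
print(round(excit, 3), round(response, 3))  # original association intact, response near zero
```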

What type of theoretical framework could describe these different types of emotional regulation? Are there qualitative differences between the neural mechanisms that underlie them? Here we briefly describe one possible approach for explaining this phenomenology. Our proposal is built on the assumption that each mental state corresponds to a large number of states of dynamic variables that describe neurons, synapses, and other constituents of neural circuits. These components must interact such that neural circuit dynamics can actively maintain a representation of the current disposition of behavior, i.e., the current mental state. Complex interactions between these components must therefore correspond to the interactions between mental state variables such as emotional and cognitive parameters. Indeed, when brain states change, these changes typically and inherently involve correlated modifications of multiple mental state variables. In this section, we discuss how a class of neural mechanisms could underlie the representation of mental states and the potential interaction between cognition and emotion. We construct a conceptual framework whereby cognition-emotion interactions can occur via two sorts of mechanisms: associative learning and switching between mental states representing different contexts or situations.

A natural candidate mechanism for representing mental states is the reverberating activity that has been observed at the single neuron level in the form of selective persistent firing rates, such as that described in the PFC (e.g., Figure 7) and other structures (Miyashita & Chang 1988, Yakovlev et al. 1998). Each mental state could be represented by a self-sustained, stable pattern of reverberating activity. Small perturbations of these activity patterns are damped by the interactions between neurons, so that the state of the network is attracted toward the closest pattern of persistent activity representing a particular mental state. For this reason, these patterns are called attractors of the neural dynamics. Attractor networks have been proposed as models for associative and working memory (Amit 1989, Hopfield 1982), for decision-making (Wang 2002), and for rule-based behavior (O'Reilly & Munakata 2000, Rolls & Deco 2002). Here, we suggest a scenario in which attractors represent stable mental states and every external or internal event encountered by an organism may steer the activity from one attractor to a different one. This type of mechanism could provide stable yet modifiable representations for the mental states, just like the on and off states of a switch. Thus, mental states could be maintained over relatively long timescales but could also rapidly change in response to brief events.
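The damping of small perturbations can be seen in a classic Hopfield (1982) network. Binary units store patterns through the Hebbian outer-product rule and are updated asynchronously, each unit aligning with its local input. In the sketch below, three random patterns stand in for hypothetical mental states. Perturbing one of them by flipping 15% of the units and letting the network settle restores the stored pattern. The network size, number of patterns, and perturbation size are arbitrary choices, and the model is not meant as a description of PFC circuitry.

```python
import numpy as np


def train_hopfield(patterns):
    """Hebbian weights for +/-1 patterns (Hopfield 1982), with no self-connections."""
    p = np.asarray(patterns, dtype=float)
    n = p.shape[1]
    w = p.T @ p / n
    np.fill_diagonal(w, 0.0)
    return w


def settle(w, state, n_sweeps=10, seed=0):
    """Asynchronous updates: each unit takes the sign of its local field."""
    rng = np.random.default_rng(seed)
    s = np.array(state, dtype=float)
    for _ in range(n_sweeps):
        for i in rng.permutation(len(s)):
            s[i] = 1.0 if w[i] @ s >= 0 else -1.0
    return s


rng = np.random.default_rng(1)
n_neurons, n_states = 200, 3
patterns = rng.choice([-1.0, 1.0], size=(n_states, n_neurons))  # hypothetical "mental states"
w = train_hopfield(patterns)

noisy = patterns[0].copy()
flip = rng.choice(n_neurons, size=30, replace=False)  # perturb 15% of the units
noisy[flip] *= -1
recovered = settle(w, noisy)
print(np.mean(recovered == patterns[0]))  # fraction of units back in the stored state
```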

Attractor networks can be used to model associative learning. Consider again the experiment performed by Paton and colleagues (2006), described in the section on neural representation of emotional variables. In a simple model, one can assume that learning involves modifying connections from neurons representing the CS (for simplicity, called external neurons) to some of the neurons representing the mental state, in particular those that represent the value of the CS in relation to reinforcement (called internal valence neurons). When the CSs are novel, a monkey does not know what to expect (reward or air puff). The monkey may, however, know that the outcome will be one of the two possibilities. Therefore, the CS in that particular context could induce a transition into one of the preexisting attractors representing the possible states. Some of these states correspond to the expectation of positive or negative reinforcement, and other states could correspond to neutral valence states. The external input starts a biased competition between all these different states. If the reinforcement received differs from the expected one, then the synapses connecting external and internal neurons will be modified such that the competition between mental states will generate a bias toward the correct association (see, e.g., Fusi et al. 2007). This learning process is typical of situations in which there are one-to-one associations, for example, when the same CS always has the same value. The monkey can simply learn the stimulus-value associations by trial and error; with appropriate synaptic learning rules, the external connections are modified as needed.
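The learning scheme just described can be caricatured in a few lines. In the sketch, each CS drives three internal valence states through external weights, and a noisy argmax stands in for the biased competition between attractors. When the selected state does not match the reinforcement, the weight to the correct state is strengthened and the weight to the wrongly selected state is weakened. This error-driven rule, the noise level, and the learning rate are hypothetical simplifications. They are not the stochastic synaptic rule of Fusi et al. (2007).

```python
import numpy as np

STATES = ["positive", "neutral", "negative"]       # internal valence attractors
TRUE_OUTCOME = {"A": "positive", "B": "negative"}  # hypothetical CS-US pairings


def train_external(n_trials=200, lr=0.1, noise=0.05, seed=0):
    """Learn CS -> internal-state weights with error-driven updates.

    The noisy argmax stands in for the biased competition between attractors.
    """
    rng = np.random.default_rng(seed)
    cs_names = list(TRUE_OUTCOME)
    w = {cs: np.zeros(len(STATES)) for cs in cs_names}  # novel CSs carry no bias
    for _ in range(n_trials):
        cs = cs_names[rng.integers(len(cs_names))]
        drive = w[cs] + noise * rng.standard_normal(len(STATES))
        chosen = int(np.argmax(drive))                   # winning mental state
        correct = STATES.index(TRUE_OUTCOME[cs])
        if chosen != correct:                            # reinforcement differs from expectation
            w[cs][correct] += lr                         # strengthen CS -> correct state
            w[cs][chosen] -= lr                          # weaken CS -> wrongly selected state
    return w


weights = train_external()
for cs, w_cs in weights.items():
    print(cs, STATES[int(np.argmax(w_cs))])  # A positive, B negative
```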

In the situation described above, one CS always predicts reward, and the other punishment. But such conditions do not always exist. For example, Paton and colleagues reversed reinforcement contingencies after learning had occurred. In principle, learning these reversed contingencies could involve modifying the external connections to the neural circuit, thereby having new associations overwrite or override the previous associations. However, reversal tasks may not simply erase or unlearn associations; instead, reversal tasks may rely on processes similar to those invoked during extinction (Bouton 2002, Myers & Davis 2007). Increasing evidence implicates the amygdala-PFC circuit as playing a fundamental role in extinction (Gottfried & Dolan 2004, Izquierdo & Murray 2005, Likhtik et al. 2005, Milad & Quirk 2002, Olsson & Phelps 2004, Pare et al. 2004, Quirk et al. 2000).

For the second type of emotional regulation, during which emotional responses to stimuli depend on knowledge of one's situation or context, we need a qualitatively different learning mechanism. Consider a hypothetical variant of the experiment by Paton et al. (2006), in which the associations are reversed and changed multiple times. For example, stimulus A may initially be associated with a small reward and B with a small punishment. Then, in a second block of trials, A becomes associated with a large reward, and B with a large punishment. Assume that as the experiment proceeds, subjects go back and forth between these two types of blocks of trials, so that the two contexts are alternated many times. In this case, if we can store a representation of both alternating contexts, we can adopt a significantly more efficient computational strategy. Instead of learning and forgetting associations, we can simply switch from one context to the other. For example, on the first trial of a block, if a large punishment follows B, the monkey can predict that seeing A on subsequent trials will result in a large reward. Overall, in the first context, A and B lead only to small rewards and punishments, respectively. In the second context, A and B always predict large rewards and punishments. To implement this switching type of computational strategy, internal synaptic connections within the neural network must be modified to create the neural representations of the mental states corresponding to the two contexts (Rigotti et al. 2010a).
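The advantage of switching over relearning can be quantified in a toy simulation of the hypothetical two-context task. A relearner updates a single value per CS with a delta rule and so must overwrite its associations at every block change. A switcher is assumed to already hold both value tables, which is the outcome of the internal-connection learning described above. After a surprising outcome, it simply adopts the stored context that best explains that outcome. The reinforcement magnitudes (±1 and ±3), the error tolerance `TOL`, the block length, and the learning rate are all hypothetical.

```python
import numpy as np

# Hypothetical values for the two contexts described above.
CONTEXTS = {
    "small": {"A": 1.0, "B": -1.0},
    "large": {"A": 3.0, "B": -3.0},
}
TOL = 0.5  # a prediction counts as wrong if it misses the outcome by at least TOL


def make_trials(n_blocks=6, trials_per_block=20, seed=0):
    """Alternating blocks of the two contexts; the CS on each trial is random."""
    rng = np.random.default_rng(seed)
    order = ["small", "large"] * (n_blocks // 2)
    return [(ctx, "AB"[rng.integers(2)]) for ctx in order for _ in range(trials_per_block)]


def relearner_errors(trials, lr=0.3):
    """One value per CS, overwritten by a delta rule whenever the context changes."""
    v = dict(CONTEXTS["small"])  # starts with the first context already learned
    errors = 0
    for ctx, cs in trials:
        outcome = CONTEXTS[ctx][cs]
        errors += abs(v[cs] - outcome) >= TOL
        v[cs] += lr * (outcome - v[cs])
    return errors


def switcher_errors(trials):
    """Both contexts are stored; a surprising outcome triggers a context switch."""
    current = "small"
    errors = 0
    for ctx, cs in trials:
        outcome = CONTEXTS[ctx][cs]
        if abs(CONTEXTS[current][cs] - outcome) >= TOL:
            errors += 1
            current = min(CONTEXTS, key=lambda c: abs(CONTEXTS[c][cs] - outcome))
    return errors


trials = make_trials()
print(relearner_errors(trials), switcher_errors(trials))  # switcher: one error per block change
```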

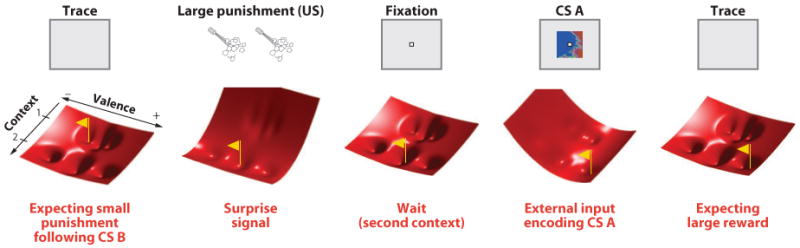

To illustrate how a model employing an attractor neural network can describe the case of mental state switching described above, we employ an energy landscape metaphor [see e.g., Amit (1989) and Figure 8]. Each network state can be described as a vector containing the activation states of all neurons, and it can be characterized by its energy value. If we know the energy for each state, then we can predict the network behavior because the network state will evolve downhill toward the minimum of the energy at the bottom of the valley in which it starts. In Figure 8, we represent each network state as a point on a plane and the corresponding energy as a surface that resembles a hilly landscape. To describe the hypothetical experiment under discussion, which involves switching between contexts, we assume that two variables, context and valence (with two contexts and five different valences represented), characterize each mental state and that only one brain state corresponds to each mental state. As a consequence, for each point on the context-valence plane there is only one energy value (Figure 8, red surface). The network naturally relaxes toward the bottom of the valleys (minima of the energy), which represent different mental states. At the neural level, each of these points corresponds to a particular pattern of persistent activity. The six valleys in Figure 8 correspond to six potential mental states created after modifying internal connections to represent the two different contexts and the related CS-US associations. As a result of the interactions due to recurrent connections, the cognitive variable corresponding to context constrains the set of accessible emotional states. In both contexts, interactions with external neurons representing CS A or B can temporarily tilt the energy surface and bring the neural network into a different valley, corresponding to a different mental state. The final destination depends on the initial mental state representing the context. The valences of the states differ in the two contexts because valences associated with large rewards and punishments exist only in the second context.
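The energy-landscape picture can be made concrete with a minimal Hopfield-style network. The sketch below is a generic textbook model of the kind treated in Amit (1989), not the model of Rigotti et al. (2010b), and it rests on stated assumptions: binary (±1) neurons, symmetric Hebbian weights with no self-connections, no bias terms, and the energy E(s) = -½ sᵀWs. The six random stored patterns are a hypothetical stand-in for the six valleys of Figure 8; context and valence are not modeled explicitly.

```python
import numpy as np

def train_hopfield(patterns):
    """Hebbian weights for +/-1 patterns (one per row); no self-connections."""
    n = patterns.shape[1]
    w = patterns.T @ patterns / n
    np.fill_diagonal(w, 0.0)
    return w

def energy(w, s):
    """Hopfield energy E(s) = -1/2 s^T W s (no bias terms)."""
    return -0.5 * s @ w @ s

def relax(w, s, rng, max_sweeps=50):
    """Asynchronous updates in random order; record the energy after each sweep."""
    s = s.copy()
    energies = [energy(w, s)]
    for _ in range(max_sweeps):
        changed = False
        for i in rng.permutation(len(s)):
            new = 1.0 if w[i] @ s >= 0 else -1.0  # align neuron with its local field
            if new != s[i]:
                s[i] = new
                changed = True
        energies.append(energy(w, s))
        if not changed:  # a fixed point: the bottom of a valley
            break
    return s, energies

rng = np.random.default_rng(0)
n_neurons, n_states = 200, 6
patterns = rng.choice([-1.0, 1.0], size=(n_states, n_neurons))  # hypothetical "mental states"
w = train_hopfield(patterns)

# Start from a corrupted version of stored state 2 (40 of 200 neurons flipped).
cue = patterns[2].copy()
flip = rng.choice(n_neurons, size=40, replace=False)
cue[flip] *= -1
final, energies = relax(w, cue, rng)
overlaps = patterns @ final / n_neurons  # similarity of the final state to each stored state
print(int(np.argmax(overlaps)), round(float(overlaps.max()), 3))
```

Because the weights are symmetric with a zero diagonal, each single-neuron update can only lower the energy or leave it unchanged, which is why the network settles into a valley instead of wandering.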

Figure 8.

The dynamics of context-dependent values. We consider a hypothetical variation of the experiment by Paton et al. (2006) in which there are two contexts. In the first context, CS A and B predict small rewards and punishments, respectively. In the second context, CS A and B are associated with large rewards and punishments. Six mental states now correspond to six valleys in the energy landscape. In panel 1, we consider the first trial of context 2, immediately after switching from context 1. Stimulus B has just been presented (not shown), and it is believed to predict a small punishment. However, a large punishment is delivered (not shown), and a surprise signal tilts the energy function (panel 2), inducing a transition to the neutral mental state of context 2 at the beginning of the next trial (panel 3; we assume for this example that the fixation interval has a neutral value). Now the system has already registered that it is in context 2. Consequently, the appearance of CS A tilts the energy landscape, and the mental state settles at a large positive value (panel 4). After the CS disappears, the network relaxes into the high positive value mental state of context 2. Thus the network does not need to relearn that CS A predicts a large reward because the network has already formed a representation for all the mental states contained within this simple experiment. Just knowing that the context has changed is sufficient for subjects to make an accurate prediction about impending reinforcement. For a detailed attractor model implementing this form of context dependency see Rigotti et al. (2010b).
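The tilting described in the caption can be illustrated with a one-dimensional toy landscape. The sketch below assumes, purely for illustration, an energy E(x) = x²(x² − 1)² with three valleys at x = −1, 0, and +1 (read as punishment, neutral, and reward within a single context), and it models an external input such as a CS as a linear tilt, E(x) − h·x, applied for a fixed time and then removed. The function names, the barrier shape, and the parameter values are hypothetical.

```python
def grad_energy(x):
    """dE/dx for E(x) = x^2 (x^2 - 1)^2, which has minima at -1, 0, and +1."""
    return 2 * x * (x**2 - 1) * (3 * x**2 - 1)

def simulate(x0, tilt, t_tilt=20.0, t_relax=20.0, dt=0.01):
    """Euler gradient descent on E(x) - tilt * x, then on E(x) alone."""
    x = x0
    for _ in range(int(t_tilt / dt)):
        x -= dt * (grad_energy(x) - tilt)  # external input tilts the landscape
    for _ in range(int(t_relax / dt)):
        x -= dt * grad_energy(x)           # input removed; the state relaxes
    return x

strong = simulate(0.0, tilt=0.8)  # tilt large enough to remove the barrier near x = 0.3
weak = simulate(0.0, tilt=0.2)    # tilt too small; the state is only displaced
print(round(strong, 3), round(weak, 3))
```

A strong enough tilt carries the state over the barrier, and it stays in the new valley after the input is removed; a weak tilt only displaces it temporarily, and it relaxes back to the neutral valley.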

This example illustrates the cognitive regulation of emotion because changes in a cognitive variable (context) cause a change in the possible associated emotional parameters (valence). Analogous mechanisms could underlie how other cognitive variables can influence emotional responses. For example, different social situations can demand different emotional responses to similar sensory stimuli, and knowledge of the social situation (essentially a context variable) can thereby constrain the possible emotional responses.

We based the foregoing discussion on the assumption that mental states are represented by attractors of the neural dynamics. Alternative and complementary solutions are based on neural representations of mental states that change in time (Buonomano & Maass 2009, Jaeger & Haas 2004). For these neural systems, every trajectory or set of trajectories in the space of all possible brain states represents a particular mental state. These dynamic systems can generate complex temporal sequences that are important for motor planning (Sussillo & Abbott 2009). However, they cannot instantaneously generalize to situations in which events are timed differently, and they can be difficult to decode. Generally speaking, all known models of mental states provide a useful conceptual framework for understanding the principles of the dynamics of neural circuits, but they fall short of capturing the richness and complexity of real biological neural networks. For example, brain states are not encoded solely in neuronal spiking activity. Investigators have only now begun to study interactions among dynamic variables operating on diverse timescales, all contributing to a particular brain state [see e.g., Mongillo et al. (2008) for a working memory model based on short-term synaptic facilitation].
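Trajectory-based representations of the kind discussed by Buonomano & Maass (2009) and Jaeger & Haas (2004) are often illustrated with an echo state network: a fixed random recurrent network driven by input, whose transient trajectory is read out by a trained linear decoder. The sketch below is a generic example rather than a model from this review; the network size, the 0.9 gain, the delayed-recall task, and the ridge penalty are illustrative assumptions. For simplicity it rescales the largest singular value of the recurrent matrix, which guarantees that trajectories started from different states converge under the same input; common practice instead rescales the spectral radius.

```python
import numpy as np

def make_reservoir(n, gain, rng):
    """Random recurrent weights rescaled so the largest singular value equals gain.
    With gain < 1 the tanh update is a contraction (echo state property)."""
    w = rng.normal(size=(n, n))
    return gain * w / np.linalg.norm(w, 2)

def run(w, w_in, u, x0):
    """Drive the reservoir with the input sequence u; return states (T x n)."""
    x = x0.copy()
    states = np.empty((len(u), len(x)))
    for t, u_t in enumerate(u):
        x = np.tanh(w @ x + w_in * u_t)
        states[t] = x
    return states

rng = np.random.default_rng(1)
n, T, delay, washout = 100, 2000, 3, 100
w = make_reservoir(n, gain=0.9, rng=rng)
w_in = rng.uniform(-0.5, 0.5, size=n)
u = rng.uniform(-0.5, 0.5, size=T)

# Two runs from different initial states end on the same trajectory.
s_a = run(w, w_in, u, np.zeros(n))
s_b = run(w, w_in, u, rng.uniform(-1, 1, size=n))
gap = np.abs(s_a[-1] - s_b[-1]).max()

# A linear readout (ridge regression) recovers the input from `delay` steps ago.
X, y = s_a[washout:], u[washout - delay:T - delay]
split = len(X) // 2
A = X[:split]
w_out = np.linalg.solve(A.T @ A + 1e-6 * np.eye(n), A.T @ y[:split])
pred = X[split:] @ w_out  # evaluate on held-out time steps
r2 = 1 - np.mean((pred - y[split:]) ** 2) / np.var(y[split:])
print(gap, round(float(r2), 3))
```

The readout is trained for one fixed delay; recovering the input at a different lag would require training a different readout.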

The theoretical framework we propose provides a means for representing mental states in the distributed activity of networks of neurons encoding entangled representations. Because we have defined mental states as action dispositions, it is natural to wonder how mental states are linked to the selection and execution of actions. In recent years, reinforcement learning (RL) algorithms have provided an elegant framework for understanding both how subjects choose their actions to maximize reward and minimize punishment and how the brain may represent modeled parameters during this process (Daw et al. 2006, Dayan & Abbott 2001, Sutton & Barto 1998). In particular, scientists have attempted to map two types of RL algorithms onto specific neural structures: a model-based algorithm and a model-free algorithm (Daw et al. 2005). Model-based algorithms are suited to goal-directed actions and likely involve the PFC, whereas model-free algorithms could mediate the generation of habitual behavior (Graybiel 2008) and may involve the striatum. Of note, both types of RL algorithms require an already formed representation of states (i.e., of the relevant variables of one's current situation) so that values can be assigned to them to guide action selection. If one is driving through an unfamiliar neighborhood packed with restaurants and needs to choose one, one must first build a mental map of the environment as it is experienced before assigning values to possible destinations. RL algorithms do not provide for this process of creating mental state representations; they only assign and update values for states that already exist.
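The point that RL assigns values only to states that already exist can be seen in a minimal model-free learner. The sketch below uses tabular TD(0) (Sutton & Barto 1998) on a hypothetical Pavlovian trial: a fixation state followed by CS A (reward +1) or CS B (reward −1), with the two CSs equally likely. The state names, reward magnitudes, and learning rate are assumptions made for illustration.

```python
import random

def td0(episodes, states, alpha=0.02, gamma=1.0):
    """Tabular TD(0). The set of states is fixed in advance; only values change."""
    V = {s: 0.0 for s in states}
    for episode in episodes:
        for s, r, s_next in episode:
            v_next = 0.0 if s_next is None else V[s_next]  # None marks the end of a trial
            V[s] += alpha * (r + gamma * v_next - V[s])     # TD error drives the update
    return V

def pavlovian_episodes(n, rng):
    """Hypothetical trials: fixation -> CS_A (+1) or CS_B (-1), 50/50."""
    for _ in range(n):
        cs, reward = rng.choice([("CS_A", 1.0), ("CS_B", -1.0)])
        yield [("fixation", 0.0, cs), (cs, reward, None)]

rng = random.Random(0)
V = td0(pavlovian_episodes(20000, rng), states=["fixation", "CS_A", "CS_B"])
print({s: round(v, 2) for s, v in V.items()})

# A state that was never represented cannot be valued.
try:
    td0([[("CS_C", 1.0, None)]], states=["fixation", "CS_A", "CS_B"])
except KeyError as err:
    print("no representation for", err)
```

The value table is built from the state list passed in, so an episode that visits an unlisted state fails with a KeyError: the algorithm updates values but has no mechanism for creating the state.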

Creating a representation of mental states involves forging links between the many mental state variables that neurons represent. Recent work on the neural basis of object perception represents an initial step toward understanding how variables may be combined to support the formation of a mental state representation. The perception of objects requires one to develop a representation that is invariant across many viewing conditions, such as the precise retinal position of the object; its size, scale, or pose; or the amount of clutter in which it appears within the visual field. DiCarlo and colleagues have now provided evidence that unsupervised learning arising from the temporal contiguity of stimuli experienced during natural viewing leads to the formation of an invariant representation of a visual object (Li & DiCarlo 2008). Object perception corresponds to only one component of a mental state, but the scientific approach pioneered by DiCarlo's group may provide a path for understanding how other mental state variables also become linked to create a unified representation of a particular state. Indeed, some theoreticians have proposed that simple mechanisms such as temporal contiguity might underlie new mental state formation (O'Reilly & Munakata 2000, Rigotti et al. 2010a).
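Temporal contiguity as a learning signal can be illustrated with linear slow feature analysis (SFA), a standard unsupervised method that extracts the component of an input stream that changes most slowly. This is a swapped-in technique chosen for illustration, not the procedure of Li & DiCarlo (2008), whose study was experimental. The sketch assumes a toy input in which one slow variable (object identity, constant within 50-frame blocks) and three fast nuisance variables (standing in for position, size, and clutter) are linearly mixed into four observed channels; the mixing matrix and block length are hypothetical.

```python
import numpy as np

def linear_sfa(x):
    """Linear slow feature analysis: return the unit-variance projection of
    x (T x d) whose temporal derivative has the smallest variance."""
    x = x - x.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(x, rowvar=False))
    z = x @ (evecs / np.sqrt(evals))        # whitened signals: identity covariance
    dz = np.diff(z, axis=0)                 # frame-to-frame changes
    _, d_evecs = np.linalg.eigh(np.cov(dz, rowvar=False))
    return z @ d_evecs[:, 0]                # eigh sorts ascending: slowest direction first

rng = np.random.default_rng(2)
T, block = 5000, 50
identity = np.repeat(rng.choice([-1.0, 1.0], size=T // block), block)  # slow variable
nuisance = rng.normal(size=(T, 3))          # fast variables, redrawn every frame
sources = np.column_stack([identity, nuisance])
mixing = rng.normal(size=(4, 4))            # hypothetical sensory mixing
x = sources @ mixing.T                      # what the learner actually observes

slow = linear_sfa(x)
corr = abs(np.corrcoef(slow, identity)[0, 1])
print(round(float(corr), 3))
```

No labels are used: identity is recovered only because it persists across consecutive frames while the nuisance variables do not.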

Conclusions

The conceptual framework we have put forth posits that mental states are composed of many variables that together correspond to an action disposition. These variables include parameters, such as valence and arousal, which are often ascribed to emotional processes, as well as parameters ascribed to cognitive processes, such as perceptions, memories, and plans. Of course, mental state parameters also include variables encoding our visceral state. Much in the way that Wittgenstein argued that philosophical controversies dissolve once one carefully disentangles the different ways in which language is being used (Wittgenstein 1958), we argue that the debate between scientists about the origin of emotional feelings—whether visceral processes precede or follow emotional feeling—dissolves. Instead, all these parameters may be linked and together form the representation of our mental state.

This conceptual framework has broad implications for understanding interactions between cognition and emotion in the brain. On the one hand, emotional processes can influence cognitive processes; on the other hand, cognitive processes can regulate or modify our emotions. Both of these interactions can be implemented by changing mental state variables (either emotional or cognitive ones); emotions and thoughts shift together, corresponding to the new mental state. Of course, different mechanisms may exist for implementing these interactions between cognition and emotion, such as mechanisms involving learning and extinction, as well as mechanisms that support the creation of new mental state representations, such as when one learns a new rule. The process of understanding the complex encoding properties of the amygdala, the PFC, and related brain structures, as well as understanding their functional interactions, is in its infancy. Somehow the intricate connectivity of these brain structures gives rise to mental states and accounts for interactions between cognition and emotion that are fundamental to our well-being and our existence.

Acknowledgments

We thank B. Lau and S. Morrison for helpful discussion, as well as comments on the manuscript, and H. Cline for invaluable support. C.D.S. gratefully acknowledges funding support from NIH (R01 MH082017, R01 DA020656, and RC1 MH088458) and the James S. McDonnell Foundation. S.F. receives support from DARPA SyNAPSE, the Gatsby Foundation, and the Sloan-Swartz Foundation.

Glossary

- PFC: prefrontal cortex
- ACC: anterior cingulate cortex
- OFC: orbitofrontal cortex
- CS: conditioned stimulus
- US: unconditioned stimulus

Footnotes

Errata: An online log of corrections to Annual Review of Neuroscience articles may be found at http://neuro.annualreviews.org/

Disclosure Statement: The authors are not aware of any affiliations, memberships, funding, or financial holdings that might be perceived as affecting the objectivity of this review.

Contributor Information

C. Daniel Salzman, Email: cds2005@columbia.edu.

Stefano Fusi, Email: sf2237@columbia.edu.

Literature Cited

- Aggleton J, editor. The Amygdala: A Functional Analysis. Oxford: Oxford Univ. Press; 2000.

- Aggleton JP, Passingham RE. Syndrome produced by lesions of the amygdala in monkeys (Macaca mulatta). J Comp Physiol Psychol. 1981;95:961–77. doi: 10.1037/h0077848.

- Amaral D, Price J, Pitkanen A, Carmichael S. Anatomical organization of the primate amygdaloid complex. In: Aggleton J, editor. The Amygdala: Neurobiological Aspects of Emotion, Memory, and Mental Dysfunction. New York: Wiley-Liss; 1992. pp. 1–66.

- Amaral DG, Behniea H, Kelly JL. Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience. 2003;118:1099–120. doi: 10.1016/s0306-4522(02)01001-1.

- Amaral DG, Dent JA. Development of the mossy fibers of the dentate gyrus: I. A light and electron microscopic study of the mossy fibers and their expansions. J Comp Neurol. 1981;195:51–86. doi: 10.1002/cne.901950106.

- Amit D. Modeling Brain Function: The World of Attractor Neural Networks. New York: Cambridge Univ. Press; 1989.

- Antoniadis EA, Winslow JT, Davis M, Amaral DG. The nonhuman primate amygdala is necessary for the acquisition but not the retention of fear-potentiated startle. Biol Psychiatry. 2009;65:241–48. doi: 10.1016/j.biopsych.2008.07.007.

- Averbeck BB, Lee D. Prefrontal neural correlates of memory for sequences. J Neurosci. 2007;27:2204–11. doi: 10.1523/JNEUROSCI.4483-06.2007.

- Averbeck BB, Sohn JW, Lee D. Activity in prefrontal cortex during dynamic selection of action sequences. Nat Neurosci. 2006;9:276–82. doi: 10.1038/nn1634.

- Barbas H, Blatt GJ. Topographically specific hippocampal projections target functionally distinct prefrontal areas in the rhesus monkey. Hippocampus. 1995;5:511–33. doi: 10.1002/hipo.450050604.

- Barbas H, Ghashghaei H, Rempel-Clower N, Xiao D. Anatomic basis of functional specialization in prefrontal cortices in primates. In: Grafman J, editor. Handbook of Neuropsychology. Amsterdam: Elsevier Science B.V.; 2002. pp. 1–27.

- Barbas H, Pandya DN. Architecture and intrinsic connections of the prefrontal cortex in the rhesus monkey. J Comp Neurol. 1989;286:353–75. doi: 10.1002/cne.902860306.

- Barbas H, Zikopoulos B. The prefrontal cortex and flexible behavior. Neuroscientist. 2007;13:532–45. doi: 10.1177/1073858407301369.

- Bard P. A diencephalic mechanism for the expression of rage with special reference to the sympathetic nervous system. Am J Physiol. 1928;84:490–515.

- Bates JF, Goldman-Rakic PS. Prefrontal connections of medial motor areas in the rhesus monkey. J Comp Neurol. 1993;336:211–28. doi: 10.1002/cne.903360205.

- Baxter M, Murray E. Reinterpreting the behavioural effects of amygdala lesions in nonhuman primates. See Aggleton. 2000:545–68.

- Baxter M, Murray EA. The amygdala and reward. Nat Rev Neurosci. 2002;3:563–73. doi: 10.1038/nrn875.

- Baxter M, Parker A, Lindner CC, Izquierdo AD, Murray EA. Control of response selection by reinforcer value requires interaction of amygdala and orbital prefrontal cortex. J Neurosci. 2000;20:4311–19. doi: 10.1523/JNEUROSCI.20-11-04311.2000.

- Belova MA, Paton JJ, Morrison SE, Salzman CD. Expectation modulates neural responses to pleasant and aversive stimuli in primate amygdala. Neuron. 2007;55:970–84. doi: 10.1016/j.neuron.2007.08.004.

- Belova MA, Paton JJ, Salzman CD. Moment-to-moment tracking of state value in the amygdala. J Neurosci. 2008;28:10023–30. doi: 10.1523/JNEUROSCI.1400-08.2008.

- Bouton ME. Context, ambiguity, and unlearning: sources of relapse after behavioral extinction. Biol Psychiatry. 2002;52:976–86. doi: 10.1016/s0006-3223(02)01546-9.

- Breiter H, Aharon I, Kahneman D, Dale A, Shizgal P. Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron. 2001;30:619–39. doi: 10.1016/s0896-6273(01)00303-8.