Abstract

Objective

To measure third-year pharmacy students' level of motivation while completing the Pharmacy Curriculum Outcomes Assessment (PCOA) administered as a low-stakes test to better understand use of the PCOA as a measure of student content knowledge.

Methods

Student motivation was manipulated through an incentive (ie, personal letter from the dean) and a process of statistical motivation filtering. Data were analyzed to determine any differences between the experimental and control groups in PCOA test performance, motivation to perform well, and test performance after filtering for low motivation-effort.

Results

Incentivizing students diminished the need for filtering PCOA scores for low effort. Where filtering was used, performance scores improved, providing a more realistic measure of aggregate student performance.

Conclusions

To ensure that PCOA scores are an accurate reflection of student knowledge, incentivizing and/or filtering for low motivation-effort among pharmacy students should be considered fundamental best practice when the PCOA is administered as a low-stakes test.

Key words: Pharmacy Curriculum Outcomes Assessment (PCOA), testing, motivation, examination

INTRODUCTION

Postsecondary professional programs use standardized tests to measure students' knowledge of curriculum content. Standardized tests usually have consequences for the test taker in that they often affect grades, progression in an educational program, and/or licensure. In such cases, the tests are referred to as “consequential” or “high-stakes” tests. Conversely, tests that have minimal or no consequences for the test taker are referred to as “non-consequential” or “low-stakes” tests.

Performance scores on high-stakes tests are meant to be valid measures of students' ability. The importance of achieving a positive outcome on the test implies that the student will take a high-stakes test seriously and will be motivated to do well. This is not necessarily the case with low-stakes tests. Although some students give their best effort regardless of the consequences, under low-stakes testing situations, some students are not motivated to give their best effort.1,2 When students do not give their best effort, the resulting scores will not adequately represent what they know.3 Because of this phenomenon, the validity of low-stakes test results, and thus their use as a measure of content knowledge, is threatened.1,3-5

When colleges and schools of pharmacy changed their curricula to meet the demands of the doctor of pharmacy (PharmD) degree, they struggled to find reliable methods to assess curricular and student learning outcomes. The lack of standardized assessment methods led programs to develop “homegrown” assessment tools for progression and outcomes assessment. Some believed that standardized instruments would lessen the burden on individual programs, be more cost effective, and provide a means for sharing and comparing data among institutions.6 Others cautioned that standardized, high-stakes progress examinations could inhibit creativity in curricular development at a time when this was desirable.7

The PCOA is a national standardized assessment tool developed by the National Association of Boards of Pharmacy (NABP) in cooperation with the Accreditation Council for Pharmacy Education (ACPE) and the American Association of Colleges of Pharmacy (AACP). Its purpose is to provide colleges and schools of pharmacy with data to assess critical factors in the curriculum and identify individual students' strengths and limitations in content knowledge. Pilot tested in 2008, the PCOA is administered as a norm-referenced standardized curricular assessment tool. Although the need for reliable methods to assess curricular and student learning outcomes remains high, fewer than 20% of colleges and schools of pharmacy administer the PCOA.

The PCOA is a 3-hour, multiple-choice, 220-item examination. The test items are scored and collapsed into the content knowledge areas outlined by ACPE: basic biomedical sciences, pharmaceutical sciences, social/behavioral/administrative sciences, and clinical sciences. This alignment with the accreditation requirements for pharmacy curricula allows for a more focused analysis of strengths and limitations at the program level, as well as for individual students. Wilkes University uses the PCOA as a low-stakes test administered in the third year (P3) of the PharmD program solely for the purpose of providing feedback to students about their strengths and limitations in content knowledge prior to entering advanced pharmacy practice experiences. The test also is used to inform the school's curriculum committee regarding potential areas for concern. However, because Wilkes has chosen to administer the PCOA as a low-stakes test, students' motivation for doing well and, thus, the validity of the data to inform students and the curriculum come into question.

In studies conducted to identify and evaluate factors that influence students' performance in low-stakes testing situations, student motivation consistently emerges as a major issue.3,8,9 As a concept, motivation has its roots in the expectancy-value models of achievement motivation.10-12 These models define expectancy as a student's belief that he or she can complete a task successfully and value as a student's beliefs about why he or she should complete a task. Students' expectancy beliefs regarding their potential for success are influenced by their competence and how difficult they believe the task to be. Students' value beliefs are influenced by: how important they think it is to do well (consequences); how enjoyable the task is believed to be; how useful the task is to their overall plan; and how the cost to engage in the task is weighed against engaging in something more worth their time and effort.

Together, expectancy and value beliefs impact students' achievement behavior. Test-taking effort can be defined as the extent to which students expend energy toward achieving optimum performance in a testing situation.3(p2) In low-stakes testing situations, students with weak expectancy-value belief systems usually exhibit low student effort. As a result, test performance will not reflect best effort and test results will not reflect actual content knowledge or ability.1,3,4

Experts have expressed concern about the validity of data gathered from tests that have no consequences and its usefulness as a measure of student ability.3-4,8,13 Early studies showed poorer performance on low-stakes tests compared with performance on high-stakes tests. Moreover, motivation scores were considerably lower under low-stakes conditions compared with high-stakes conditions.14 A synthesized analysis of 25 studies that looked at the relationship between test-taker motivation and test performance across all test types showed that, in nearly all cases, highly motivated test-takers performed better than test-takers with low motivation. Furthermore, test-takers exhibit higher motivation to perform on high-stakes tests versus low-stakes tests.3 When motivation was manipulated (by declaring the test high-stakes, incentivizing test takers, or manipulating the statistics), test-taker performance improved, suggesting those tests were a more realistic measure of test-taker ability. Studies that sought to determine whether there was a relationship between motivation and ability concluded that, in the absence of some form of manipulation, there was either no significant correlation or only a weak one.14-16

In low-stakes testing conditions, having a way to manage the bias resulting from low-motivation effort is desirable. Studies have attempted to increase test validity in low-stakes testing situations by focusing on ways to improve motivation.1,4,8,17 This is good news for educational institutions that rely on results from low-stakes testing to inform decisions regarding curricular and program offerings, as well as the interests of stakeholders such as accreditors and funders. Understanding better ways to interpret low-stakes test results to arrive at realistic measures of what students have learned is critical for colleges and schools of pharmacy.

There are 2 broad categories of strategies for improving motivation. First, testing methods can be altered to increase students' interest in and belief in the importance and utility of the test, eg, raising the testing stakes, providing incentives, increasing intrinsic motivation by reducing the “boring” factor, and providing feedback. These suggestions may work well in some testing situations but implementation could prove problematic when standardized tests are involved, and an attempt to manipulate the testing conditions might be challenged. The second method is data manipulation, of which there are 3 types. Rapid response filtering and effort-moderated item-response testing are most often associated with computer-based testing. The third type, motivation filtering, is suitable for both computer-based and paper-and-pencil tests and is particularly useful in situations where motivation can be proven to be unrelated to ability.2 The purpose behind motivation filtering is to systematically remove the test scores of unmotivated test takers to reduce bias.

METHODS

The purpose of this exploratory study was to determine the level of motivation of P3 students during the administration of the PCOA as a low-stakes test to better understand the validity of the PCOA to measure student content knowledge. Student motivation was manipulated in 2 ways: by providing students with an incentive to do well, and through statistical manipulation using scores on the Student Opinion Scale.

Student Opinion Scale

The Student Opinion Scale is a 10-item Likert-type instrument that was developed to measure motivation as a construct using the expectancy-value theory as its basis. It was designed for use with instruments that measure other constructs (eg, PCOA) in that it quantifies the level of participants' motivation related to the other instrument. According to the test developers, the scale is particularly useful when administered with low-stakes tests.

The scale comprises 2 subscales: importance and effort. The importance subscale is similar to the “value” portion of the expectancy-value theory and is operationally defined in the scale as how important doing well on a test is to a student. Effort is defined as how much mental effort or sense of duty was involved in the test taker answering a test item.18 The Student Opinion Scale has been moderately correlated with several achievement tests including Information Literacy and Scholastic Aptitude Test (SAT) total score. However, scores on the effort portion of the scale have not correlated with SAT total scores, confirming other researchers' conclusion that motivation is not correlated with ability.

To date, reliability studies have been conducted on undergraduate students only. This study provides data on P3 students, the academic equivalent of first-year graduate students. Because the purpose of the Student Opinion Scale is to provide information about student motivation during a testing situation, the scale should be administered at the end of a test or series of tests. In this way, the testing conditions for proctored, standardized tests are not altered. As students complete the scheduled test or tests, the proctor directs them to complete the 10-item Student Opinion Scale. Either paper-and-pencil or computer-based administration is acceptable.

Each of the subscales, importance and effort, has 5 items worth 5 points each. The subscale scores are reported separately as the test is not meant to yield a combined importance-effort score (ie, 50 points). The test uses a 5-point Likert scale on which 1 = strongly disagree, 3 = neutral, and 5 = strongly agree. Two test items in each subscale are reverse scored.
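The scoring rules described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the scale's official scoring procedure: the function name `score_subscale` and the reversed item positions in `EFFORT_REVERSED` are hypothetical, because the text does not state which 2 items in each subscale are reverse scored.

```python
def score_subscale(responses, reverse_items, scale_max=5):
    """Sum one subscale, flipping reverse-scored items on a 1..scale_max scale."""
    total = 0
    for position, answer in enumerate(responses):
        if not 1 <= answer <= scale_max:
            raise ValueError(f"response {answer} is outside 1..{scale_max}")
        # A reverse-scored item maps 1->5, 2->4, 3->3, 4->2, 5->1.
        total += (scale_max + 1 - answer) if position in reverse_items else answer
    return total


# Hypothetical positions (0-based) of the 2 reverse-scored effort items;
# the source does not identify which items are reversed.
EFFORT_REVERSED = {1, 3}

# Made-up responses from one student to the 5 effort items.
effort_score = score_subscale([4, 2, 5, 1, 4], EFFORT_REVERSED)
print(effort_score)
```

Under these rules each subscale total falls between 5 and 25, and the importance and effort totals are kept separate rather than summed.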

Motivation Filtering

To address the questionable validity of data from low-stakes assessments, motivation filtering was first introduced by Wise and colleagues in 2003.5 In this and subsequent studies, the researchers used the motivation-effort subscales of the Student Opinion Scale as the filter.2-3,17 “The logic underlying motivation filtering is that the data from those giving low effort are untrustworthy, and by deleting these data, the remaining data will better represent the proficiency levels of the target group of students.”2(p66)

The key assumption made when using motivation filtering is that motivation does not correlate with ability. As stated previously, students' value beliefs related to levels of effort and importance impact motivation in test situations but may not coincide with a student's ability to perform well. That is, students with low ability may be equally as likely to exhibit varying levels of motivation as students with high ability. Another assumption is that the Student Opinion Scale is administered immediately following the test.

According to Wise and colleagues, there are 2 criteria that must be met before motivation filtering can be applied to test scores. There must be a significant correlation between test scores (performance on the test) and motivation-effort subscale scores. There also must be a nonsignificant or low correlation between motivation (effort) and student ability. Student ability is characterized by scores on tests such as the SAT, grade point average (GPA), or similarly acceptable measurements of aptitude.
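As a rough illustration, the 2 criteria can be expressed as a single check. The sketch below assumes Pearson correlations and a two-tailed t test with a caller-supplied critical value; the function names, the sample data, and the choice of test are assumptions for illustration, not details taken from the source.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length, at least 3 values each")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / math.sqrt(sxx * syy)


def is_significant(r, n, t_critical):
    """Two-tailed t test of r (df = n - 2) against a supplied critical value."""
    remainder = 1 - r * r
    if remainder <= 0:  # perfect correlation
        return True
    return abs(r) * math.sqrt((n - 2) / remainder) > t_critical


def meets_filtering_criteria(effort, test_scores, ability, t_critical):
    """True when effort correlates with test scores but with no ability measure."""
    n = len(effort)
    effort_tracks_test = is_significant(pearson_r(effort, test_scores), n, t_critical)
    effort_tracks_ability = any(
        is_significant(pearson_r(effort, scores), n, t_critical)
        for scores in ability.values()
    )
    return effort_tracks_test and not effort_tracks_ability


# Made-up data for 6 students (not from the study).
effort = [10, 12, 14, 16, 18, 20]
pcoa = [300, 310, 335, 350, 360, 380]
ability = {"gpa": [3.0, 3.5, 3.1, 3.4, 3.0, 3.5]}
T_CRITICAL_DF4 = 2.776  # two-tailed, alpha = 0.05, df = 4 (standard t table)

ok = meets_filtering_criteria(effort, pcoa, ability, T_CRITICAL_DF4)
print(ok)
```

Passing the critical value in explicitly keeps the sketch free of external dependencies; a statistics package that reports p values directly would serve the same purpose.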

Once both the criteria are met, the filtering process can proceed. Ability scores are identified and matched to each student. Then the mean for each ability score is calculated. These mean scores represent the mean ability scores of the total group and are used as the baseline for comparison at each filtered level. For example, students taking the PCOA were matched on their SAT-Math, SAT-Verbal, and GPA scores. The group means for each of these ability scores were calculated for the experimental and control groups, creating a baseline of ability scores for each group.

Students then are ranked from lowest to highest by their motivation-effort score on the Student Opinion Scale. Students are filtered systematically from the group based on their effort scores. Each time a filter is applied, the mean ability scores of the remaining students are calculated and compared with baseline ability scores. As long as the filtered ability scores remain similar to the ability scores of the total group, the filtering process can continue. Optimum filtering occurs at the motivation-effort score level immediately preceding the point at which the filtered ability scores and the baseline ability scores show considerable variability.2(p72-73) For their study, Wise and colleagues chose a filtered mean SAT score that exceeded 3 points from baseline as an indication that overfiltering had taken place.
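The filtering loop described above might look like the following sketch. It is an illustration under stated assumptions rather than the procedure used by Wise and colleagues: the function name `motivation_filter`, the record layout, the per-measure `tolerances` argument, and the sample data are all hypothetical, and a fixed numeric tolerance stands in for the judgment of when ability scores "show considerable variability."

```python
from statistics import mean


def motivation_filter(students, tolerances):
    """Remove low-effort students level by level while mean ability stays near baseline.

    `tolerances` maps each ability measure to the largest acceptable drift
    from the baseline mean. Returns (cutoff, kept), where `cutoff` is the
    highest effort score that was removed (None if nothing was removed).
    """
    baseline = {key: mean(s[key] for s in students) for key in tolerances}
    kept, cutoff = list(students), None
    for level in sorted({s["effort"] for s in students}):
        # Candidate pool after dropping everyone at or below this effort level.
        candidate = [s for s in students if s["effort"] > level]
        if not candidate:
            break
        overfiltered = any(
            abs(mean(s[key] for s in candidate) - baseline[key]) > limit
            for key, limit in tolerances.items()
        )
        if overfiltered:
            break  # keep the result from the preceding level
        kept, cutoff = candidate, level
    return cutoff, kept


# Made-up records for 6 students (not from the study).
students = [
    {"effort": 10, "sat": 527, "pcoa": 300},
    {"effort": 12, "sat": 533, "pcoa": 320},
    {"effort": 16, "sat": 528, "pcoa": 360},
    {"effort": 18, "sat": 542, "pcoa": 370},
    {"effort": 20, "sat": 536, "pcoa": 380},
    {"effort": 22, "sat": 544, "pcoa": 390},
]

cutoff, kept = motivation_filter(students, {"sat": 3.0})
filtered_mean_pcoa = mean(s["pcoa"] for s in kept)
print(cutoff, len(kept), filtered_mean_pcoa)
```

In this made-up example the loop stops once removing the next effort level would move the mean SAT score more than 3 points from baseline, so the reported test mean is computed only from the students retained at the preceding level.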

Study Procedures

This study used an experimental design and was approved by the Wilkes University Human Subjects Review Board. Prior to administration of the 2010 PCOA, the researchers randomly assigned P3 students to an experimental or control group. The NABP gave permission for the researchers to place a sealed envelope at each student's assigned seat prior to their arrival to take the test. Immediately before taking the PCOA, students were instructed to open their envelope, which contained 1 of 2 different letters from the dean of the school of pharmacy (Appendix 1). The experimental group's letter was personalized and highlighted the importance of the students giving their best effort on the PCOA so that the results would provide students with quality information about their content knowledge and provide the pharmacy program with data to use in making decisions regarding the curriculum. The control group's letter was not personalized (eg, the greeting line read “Dear P3 Student”). It briefly described the PCOA and how students could use their results to identify limitations in their content knowledge. A letter from the dean was determined to be an appropriate incentive.

A proctor administered the PCOA, following the standardized procedures required by the NABP. Immediately after the students completed the PCOA, the proctor administered the Student Opinion Scale.

Data were analyzed to determine whether there were any significant differences between the experimental and control groups in PCOA test performance, motivation to perform well, or test performance after filtering for low motivation-effort. As per Human Subjects Review Board-approved protocol, students were given the option to include their data in the study and told that the findings would be included in a paper submitted for publication. Four students declined (3 in the experimental group and 1 in the control group) to have their data included. Additionally, 1 student's SAT scores were not available, so his/her PCOA and Student Opinion Scale data were removed from the analysis. The total number of students included in the analyses was 65 (32 in the experimental group and 33 in the control group).

Data Analyses

Experimental and control group data were examined individually to determine whether they met the criteria for filtering. A correlation matrix was created for the motivation-effort scores, PCOA scores, SAT-Math, SAT-Verbal, and GPA. In order to proceed with the filtering process, the results had to indicate a significant correlation between motivation-effort and PCOA scores and no correlation between motivation-effort and ability scores.

Motivation filtering was accomplished by systematically removing students' PCOA scores based on their motivation-effort scores. The filtering process began with eliminating the lowest motivation-effort scores and continued in rank order until the mean ability scores of students at a particular level ceased to be similar to the mean ability scores of the total group. The PCOA scores of the students who remained after optimal filtering was achieved were expected to more closely represent the actual content knowledge of the group.

RESULTS

Criteria for Filtering

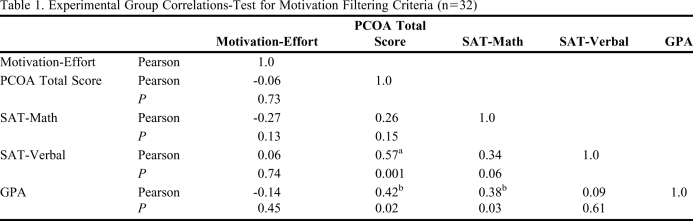

A correlation matrix was produced to determine whether the data from students in the experimental group (motivational letter) met the criteria for motivation filtering. As Table 1 indicates, no significant relationship was found between motivation-effort scores and performance on the PCOA, or between motivation-effort scores and any of the ability scores. As only half of the criteria for motivation filtering were met, proceeding to motivation filtering with the experimental group was not appropriate.

Table 1.

Experimental Group Correlations-Test for Motivation Filtering Criteria (n=32)

Abbreviations: Sig = significance, PCOA = Pharmacy Curriculum Outcomes Assessment, SAT = Scholastic Aptitude Test, GPA = grade point average.

Correlation is significant at the 0.01 level (2-tailed).

Correlation is significant at the 0.05 level (2-tailed).

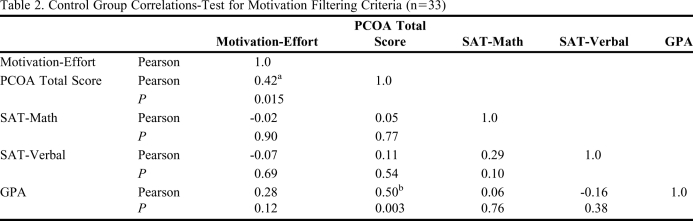

The correlation matrix produced to address the criteria for motivation filtering (Table 2) indicated that data from the students in the control group (form letter) met both criteria and that motivation filtering could be applied. A significant correlation was found between motivation-effort scores and PCOA scores, but no relationship was found between motivation-effort scores and any of the ability scores.

Table 2.

Control Group Correlations-Test for Motivation Filtering Criteria (n=33)

Abbreviations: Sig =significance, PCOA= Pharmacy Curriculum Outcomes Assessment, SAT = Scholastic Aptitude Test, GPA= grade point average.

Correlation is significant at the 0.05 level (2-tailed).

Correlation is significant at the 0.01 level (2-tailed).

Motivation Filtering (Control Only)

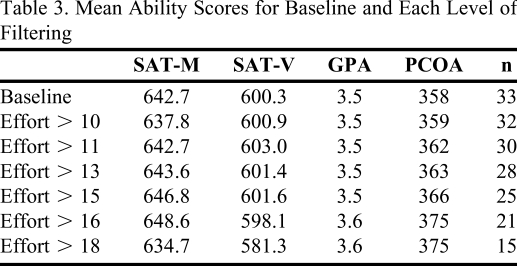

The filtering process began with the lowest motivation-effort score and continued until a marked difference in mean ability scores from baseline was observed. The scores ranged from a low of 10 to a high of 24, with a maximum possible score of 25. As each filter was applied, the recalculated mean ability scores for the remaining students in the control group were compared to the ability scores for the total control group (baseline). As stated above, Wise and colleagues used a differential of >3 points from SAT baseline to determine optimum filtering. The data in this study suggested that optimum filtering occurred at a motivation-effort score of 16, which was 4.1 points above baseline for SAT-Math, 1.3 points above baseline for SAT-Verbal, and 0.1 points above baseline for GPA. The mean ability and PCOA scores for the total group and at each level of filtering are provided in Table 3 along with the number of students remaining in the unfiltered pool. Eight students' scores were filtered, leaving 25 students in the control group at the point where optimum filtering seemed to have occurred.

Table 3.

Mean Ability Scores for Baseline and Each Level of Filtering

Abbreviations: SAT-M=Scholastic Aptitude Test-Math Portion, SAT-V=Scholastic Aptitude Test-Verbal Portion, GPA=grade point average, PCOA= Pharmacy Curriculum Outcomes Assessment

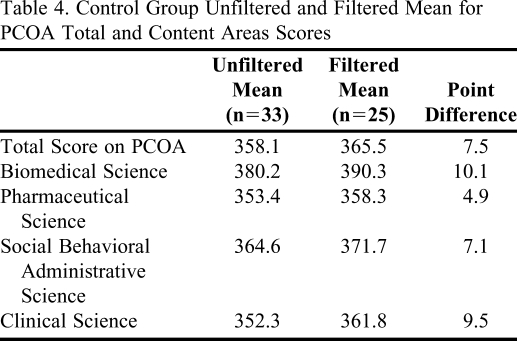

Applying the filter caused the total mean PCOA score for the control group to increase 7.5 points and the aggregate mean score for each of the 4 PCOA content areas to increase between 4.9 and 10.1 points (Table 4). Furthermore, the correlation between the control group's motivation-effort and the PCOA scores was no longer significant (unfiltered r = 0.421; filtered r = 0.36, p > 0.05). This absence of a statistical relationship between motivation-effort and performance on the PCOA as low-stakes at the point of optimum filtering suggests that filtering has achieved what it was intended to achieve, ie, to reduce or remove the impact of the level of motivation-effort on the PCOA scores. The remaining (filtered) scores should be a better representation of student content knowledge and therefore a better gauge of curricular success.
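The change in significance can be checked with a short worked calculation. This is a sketch under assumptions not stated in the text: that the reported coefficients are Pearson correlations, that they are based on the 33 unfiltered and 25 filtered control group students, and that a two-tailed test at the 0.05 level was used. The critical values are taken from a standard t table.

```latex
\begin{align*}
t &= r\sqrt{\frac{n-2}{1-r^{2}}}, \qquad df = n-2 \\[4pt]
\text{Unfiltered } (n=33,\ r=0.421):\quad
t &= 0.421\sqrt{\frac{31}{1-0.421^{2}}} \approx 2.58 \;>\; t_{0.05,\,31} \approx 2.04 \\[4pt]
\text{Filtered } (n=25,\ r=0.36):\quad
t &= 0.36\sqrt{\frac{23}{1-0.36^{2}}} \approx 1.85 \;<\; t_{0.05,\,23} \approx 2.07
\end{align*}
```

Under these assumptions the unfiltered correlation exceeds the critical value and the filtered correlation does not. Part of that change reflects the smaller filtered sample as well as the lower coefficient.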

Table 4.

Control Group Unfiltered and Filtered Mean for PCOA Total and Content Areas Scores

Abbreviations: PCOA= Pharmacy Curriculum Outcomes Assessment

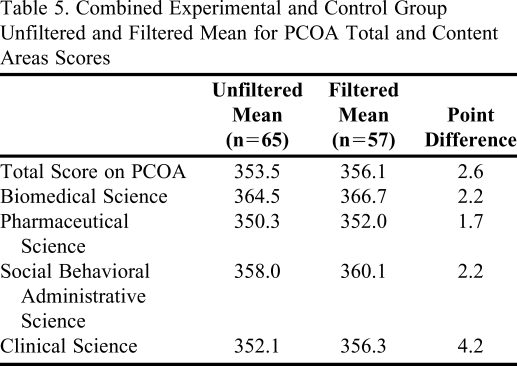

To complete the process, the 25 filtered control group PCOA total and content area scores were added to the experimental group database, creating a filtered database of 57 students' scores that more closely represented the actual content knowledge of the P3 class. Using this new data set, the PCOA filtered mean scores for the total test and for each of the 4 content areas (n = 57) were compared with the original unfiltered scores of the experimental and control groups combined (n = 65). For each comparison, the filtered mean scores were between 1.7 and 4.2 points higher than the unfiltered scores (Table 5).

Table 5.

Combined Experimental and Control Group Unfiltered and Filtered Mean for PCOA Total and Content Areas Scores

Abbreviations: PCOA= Pharmacy Curriculum Outcomes Assessment

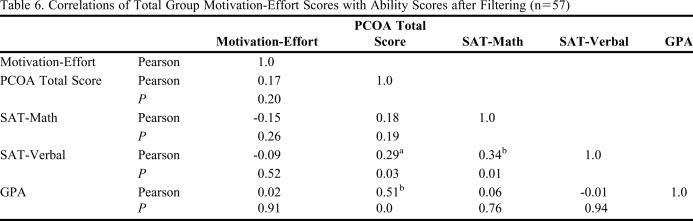

The relationships between motivation-effort and the PCOA and ability scores of the 57 remaining students were recalculated. Motivation-effort was no longer correlated with PCOA scores, nor was there any relationship between effort and any of the ability scores (Table 6). As with the initial correlations calculated for the experimental group, PCOA scores correlated with SAT-Verbal and GPA.

Table 6.

Correlations of Total Group Motivation-Effort Scores with Ability Scores after Filtering (n=57)

Abbreviations: Sig = significance, PCOA = Pharmacy Curriculum Outcomes Assessment, SAT = Scholastic Aptitude Test, GPA = grade point average.

Correlation is significant at the 0.05 level (2-tailed).

Correlation is significant at the 0.01 level (2-tailed).

DISCUSSION

Wilkes University School of Pharmacy has participated in the PCOA since 2008. We purposefully chose to administer the PCOA to P3 students as a low-stakes test, ie, there were no consequences to students' grades or progression to the P4 year. Students and their advisors have been encouraged to use the results of the PCOA to provide valuable information about individual strengths and limitations in students' content knowledge. Although faculty members and administration always have assumed that the usefulness of the PCOA scores to individual students is directly related to how much effort they put into doing well on the examination, they have questioned the validity of the test results because of its low-stakes classification. This has led to a general concern among the program's decision-makers regarding the validity of the data to inform the curriculum as a whole.

Because the school does not want to change the test from low to high stakes, the dilemma of how best to garner useful data needed to be addressed and this exploratory study attempted to do that. The research on motivation in low-stakes testing offers a variety of ways to manage the legitimacy of test scores as measures of content knowledge. These fall into 2 broad categories: incentivizing and statistical manipulation. This study drew from both categories. The first expectation was that incentivizing would impact students' effort to perform well on the PCOA, and the second was that if the experimental or control group's motivation-effort scores met the criteria for filtering, the resulting filtered PCOA scores would be a better representation of the content knowledge of the P3 students who took the examination.

The letters from the dean were written with the intent of manipulating motivation through incentivizing. The personalized incentive appears to have positively impacted the experimental group's motivation to give their best effort on the PCOA, as the criteria for filtering the experimental group's PCOA scores based on reported motivation-effort were not met. In other words, the experimental group's PCOA scores were more closely correlated with ability than with motivation, implying that the PCOA scores of the experimental group were likely a fair representation of those students' content knowledge. Conversely, the control group did meet the criteria for motivation filtering. Their scores did not correlate with ability, suggesting their scores did not represent their actual content knowledge.

Motivation filtering appears to provide a relatively easy way to minimize the impact of low effort on the aggregate results of low-stakes tests. The types of data needed to establish baseline ability data are readily available and the calculations required for the filtering process are straightforward. Perhaps more important than the ease of filtering for low motivation-effort, the potential to calculate a more accurate and statistically valid representation of students' aggregate content knowledge is enormously helpful to those making decisions about the efficacy of the curriculum.

Limitations

The Student Opinion Scale is a self-report measure of motivation and as such is limited in its reliability and usefulness by how truthfully a student responds to the 10 Likert-type scaled items. Students may have indicated that they gave low or high effort when in fact the opposite was true. This limitation was somewhat controlled by using the ability scores to establish an acceptable ability baseline. In this way, the tendency for over- or underfiltering was managed; however, the limitation remains.

The intended use of the PCOA is to inform programs about the effectiveness of their curriculum and to inform students about their content knowledge. This study was limited in its value to individual students because it focused primarily on aggregate outcomes. Applying a filtering process is meant to improve the validity of the data for programmatic use; however, as part of the filtering process, an individual student's results may be eliminated from the aggregate results, diminishing the personal worth and applicability of the scores.

This study was limited to P3 students at 1 school. Although students were randomly assigned to the experimental or control group, further application and testing of the treatment to other pharmacy programs is needed before the results can be generalized. That said, the design is easily replicated.

Finally, some may reject the motivation filtering process used because there is no set cut point that identifies optimum filtering. The process for deciding when optimum filtering has occurred is potentially problematic.

CONCLUSIONS

As Wilkes School of Pharmacy wishes to continue to administer the PCOA as a low-stakes test for content knowledge, it is important for the program to determine best practices for obtaining optimum aggregate data. This study found that using a meaningful incentive for students to give their best effort on the test should be a primary consideration. In addition, the study suggested that test scores should be screened and, if necessary, filtered based on motivation scores. Combining incentivizing to improve motivation with filtering the PCOA scores of students who also have low motivation scores is a promising best practice for programs that want to administer the PCOA as low stakes.

ACKNOWLEDGMENTS

The author would like to thank Dr. Vernon Harper for introducing her to the Student Opinion Scale and for his encouragement in using the instrument to improve programmatic understanding of low-stakes performance.

Appendix 1. Dean's Letters to Experimental and Control Groups Prior to Taking the PCOA

Experimental Group Letter

Dear (Student's First Name)

Please give your very best effort on this exam.

Scores that are truly reflective of what you know will provide you with an excellent assessment of your strengths and weaknesses in content knowledge. The collective results, from you and your classmates, will help us to better understand the Wilkes Pharmacy Program and assist us in making decisions about the curriculum.

The Pharmacy Curriculum Outcomes Assessment exam you are about to take is administered annually to all P-3 students in our program. The PCOA is a 220 question, standardized, multiple-choice test designed to measure knowledge in the following content areas:

Basic Biomedical Sciences

Pharmaceutical Sciences

Social, Behavioral, & Administrative Pharmacy Sciences

Clinical Sciences

You will receive your scores via email in about 6 weeks.

Thank you and good luck!

Sincerely

Control Group Letter

Dear P-3 student

The Pharmacy Curriculum Outcomes Assessment exam you are about to take is administered annually to all P-3 students in our program. The PCOA is a 220 question, standardized, multiple-choice test designed to measure knowledge in the following content areas:

Basic Biomedical Sciences

Pharmaceutical Sciences

Social, Behavioral, & Administrative Pharmacy Sciences

Clinical Sciences

You will receive your scores via email in about 6 weeks. We encourage you to use them to help you identify areas where you have limitations in content knowledge in preparation for your P-4 year.

Thank you and good luck.

Sincerely

REFERENCES

- 1. Cole JS, Bergin DA, Whittaker TA. Predicting student achievement for low stakes tests with effort and task value. Contemp Educ Psychol. 2008;33(4):609–624.

- 2. Wise VL, Wise SL, Bhola DS. The generalizability of motivation filtering in improving test score validity. Educ Assess. 2006;11:65–83.

- 3. Wise SL, DeMars CE. Low examinee effort in low-stakes assessment: problems and potential solutions. Educ Assess. 2005;10:1–17.

- 4. Wise SL, Owens KM, Yang ST, Weiss B, Kissel HL, Kong X, Horst SJ. An investigation of the effects of self-adapted testing on examinee effort and performance in a low-stakes achievement test. Paper presented at annual meeting of the National Council on Measurement in Education. Montreal; 2005.

- 5. Sundre DL, Wise SL. Motivation filtering: an exploration of the impact of low examinee motivation on the psychometric quality of tests. Paper presented at the annual meeting of the National Council on Measurement in Education. Chicago, IL; 2003.

- 6. Kirschenbaum HL, Brown ME, Kalis MM. Programmatic curricular outcomes assessment at colleges and schools of pharmacy in the United States and Puerto Rico. Am J Pharm Educ. 2006;(705):70. doi: 10.5688/aj700108. Article 103.

- 7. Plaza CM. Progress examinations in pharmacy education. Am J Pharm Educ. 2007;(714):71. doi: 10.5688/aj710466. Article 66.

- 8. Sundre DL, Kitsantas A. An exploration of the psychology of the examinee: can examinee self-regulation and test-taking motivation predict consequential and non-consequential test performance? Contemp Educ Psychol. 2004;29(1):6–26.

- 9. Wise SL. An investigation of the differential effort received by items on a low-stakes computer-based test. Appl Meas Educ. 2006;19(2):95–114.

- 10. Pintrich PR, Schunk DH. Motivation in Education: Theory, Research, and Applications. 2nd ed. Upper Saddle River, NJ: Merrill Prentice Hall; 2002. 5.

- 11. Wigfield A, Eccles J. Expectancy-value theory of achievement motivation. Contemp Educ Psychol. 2000;25(1):68–81. doi: 10.1006/ceps.1999.1015.

- 12. Eccles JS, Wigfield A. Motivational beliefs, values, and goals. Ann Rev Psychol. 2002;53:109–132. doi: 10.1146/annurev.psych.53.100901.135153.

- 13. Banta TW. Building a Scholarship of Assessment. San Francisco, CA: Jossey-Bass; 2002.

- 14. DeMars C. Does the relationship between motivation and performance differ with ability? Paper presented at the annual meeting of National Council on Measurement in Education. Montreal 1999. Retrieved ERIC ED: 430 037:1–23.

- 15. Abdelfattah F. The relationship between motivation and achievement in low-stakes examinations. Soc Behav Pers. 2010;38(2):159–168.

- 16. Steedle JT. Incentives, motivation, and performance on a low-stakes test of college learning. Collegiate Learning Assessment. http://www.collegiatelearningassessment.org/files/Steedle_2010_Incentives_Motivation_and_Performance_on_a_Low-Stakes_Test_of_College_Learning.pdf Accessed March 25, 2011.

- 17. Thelk AD, Sundre DL, Horst SJ, Finney SJ. Motivation matters: using the student opinion scale to make valid inferences about student performance. J Gen Educ. 2009;58(3):129–151.

- 18. Sundre DL. The Student Opinion Scale: SOS. Test Manual. Harrisonburg, VA: The Center for Assessment & Research Studies.