Abstract

The complexity of semiparametric models poses new challenges to statistical inference and model selection that frequently arise from real applications. In this work, we propose new estimation and variable selection procedures for the semiparametric varying-coefficient partially linear model. We first study quantile regression estimates for the nonparametric varying-coefficient functions and the parametric regression coefficients. To achieve nice efficiency properties, we further develop a semiparametric composite quantile regression procedure. We establish the asymptotic normality of proposed estimators for both the parametric and nonparametric parts and show that the estimators achieve the best convergence rate. Moreover, we show that the proposed method is much more efficient than the least-squares-based method for many non-normal errors and that it only loses a small amount of efficiency for normal errors. In addition, it is shown that the loss in efficiency is at most 11.1% for estimating varying coefficient functions and is no greater than 13.6% for estimating parametric components. To achieve sparsity with high-dimensional covariates, we propose adaptive penalization methods for variable selection in the semiparametric varying-coefficient partially linear model and prove that the methods possess the oracle property. Extensive Monte Carlo simulation studies are conducted to examine the finite-sample performance of the proposed procedures. Finally, we apply the new methods to analyze the plasma beta-carotene level data.

Key words and phrases: Asymptotic relative efficiency, composite quantile regression, semiparametric varying-coefficient partially linear model, oracle properties, variable selection

1. Introduction

Semiparametric regression modeling has recently become popular in the statistics literature because it keeps the flexibility of nonparametric models while maintaining the explanatory power of parametric models. The partially linear model, the most commonly used semiparametric regression model, has received a lot of attention in the literature; see Härdle, Liang and Gao [9], Yatchew [32] and references therein for theory and applications of partially linear models. Various extensions of the partially linear model have been proposed in the literature; see Ruppert, Wand and Carroll [26] for applications and theoretical developments of semiparametric regression models. The semiparametric varying-coefficient partially linear model, as an important extension of the partially linear model, is becoming popular in the literature. Let Y be a response variable and {U, X, Z} its covariates. The semiparametric varying-coefficient partially linear model is defined to be

$$Y = \alpha_0(U) + X^{T}\alpha(U) + Z^{T}\beta + \varepsilon, \tag{1.1}$$

where α0(U) is a baseline function, α(U) = {α1(U),…, αd1(U)}T consists of d1 unknown varying coefficient functions, β = (β1,…, βd2)T is a d2-dimensional coefficient vector and ε is random error. In this paper, we will focus on univariate U only, although the proposed procedure is directly applicable for multivariate U. Zhang, Lee and Song [33] proposed an estimation procedure for the model (1.1), based on local polynomial regression techniques. Xia, Zhang and Tong [31] proposed a semilocal estimation procedure to further reduce the bias of the estimator for β suggested in Zhang, Lee and Song [33]. Fan and Huang [5] proposed a profile least-squares estimator for model (1.1) and developed statistical inference procedures. As an extension of Fan and Huang [5], a profile likelihood estimation procedure was developed in Lam and Fan [18], under the generalized linear model framework with a diverging number of covariates.

Existing estimation procedures for model (1.1) were built on either least-squares- or likelihood-based methods. Thus, the existing procedures are expected to be sensitive to outliers and their efficiency may be significantly improved for many commonly used non-normal errors. In this paper, we propose new estimation procedures for model (1.1). This paper contains three major developments: (a) semiparametric quantile regression; (b) semiparametric composite quantile regression; (c) adaptive penalization methods for achieving sparsity in semiparametric composite quantile regression.

Quantile regression is often considered as an alternative to least-squares in the literature. For a complete review on quantile regression, see Koenker [17]. Quantile-regression-based inference procedures have been considered in the literature; see, for example, Cai and Xu [2], He and Shi [10], He, Zhu and Fung [11], Lee [19], among others. In Section 2, we propose a new semiparametric quantile regression procedure for model (1.1). We investigate the sampling properties of the proposed estimators and establish their asymptotic normality. When applying semiparametric quantile regression to model (1.1), we observe that all quantile regression estimators can estimate α(u) and β with the optimal rate of convergence. This fact motivates us to combine the information across multiple quantile estimates to obtain improved estimates of α(u) and β. Such an idea has been studied for the parametric regression model in Zou and Yuan [35] and it leads to the composite quantile regression (CQR) estimator that is shown to enjoy nice asymptotic efficiency properties compared with the classical least-squares estimator. In Section 3, we propose the semiparametric composite quantile regression (semi-CQR) estimators for estimating both nonparametric and parametric parts in model (1.1). We show that the semi-CQR estimators achieve the best convergence rates. We also prove the asymptotic normality of the semi-CQR estimators. The asymptotic theory shows that, compared with the semiparametric least-squares estimators, the semi-CQR estimators can have substantial efficiency gain for many non-normal errors and only lose a small amount of efficiency for normal errors. Moreover, the relative efficiency is at least 88.9% for estimating varying-coefficient functions and is at least 86.4% for estimating parametric components.

In practice, there are often many covariates in the parametric part of model (1.1). With high-dimensional covariates, sparse modeling is often considered superior, owing to enhanced model predictability and interpretability [7]. Variable selection for model (1.1) is challenging because it involves both nonparametric and parametric parts. Traditional variable selection methods, such as stepwise regression or best subset variable selection, do not work effectively for the semiparametric model because they need to choose smoothing parameters for each sub-model and cannot cope with high-dimensionality. In Section 4, we develop an effective variable selection procedure to select significant parametric components in model (1.1). We demonstrate that the proposed procedure possesses the oracle property, in the sense of Fan and Li [6].

In Section 5, we conduct simulation studies to examine the finite-sample performance of the proposed procedures. The proposed methods are illustrated with the plasma beta-carotene level data. Regularity conditions and technical proofs are given in Section 6.

2. Semiparametric quantile regression

In this section, we develop the semiparametric quantile regression method and theory. Let ρτ (r) = τr − rI (r < 0) be the check loss function at τ ∈ (0, 1). Quantile regression is often used to estimate the conditional quantile functions of Y,

$$Q_\tau(u, x, z) = \arg\min_{a} E\{\rho_\tau(Y - a) \mid (U, X, Z) = (u, x, z)\}.$$

The semiparametric varying-coefficient partially linear model assumes that the conditional quantile function is expressed as Qτ (u, x, z) = α0,τ (u) + xT ατ (u) + zT βτ.
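As a small illustration of the check loss defined above, the following Python sketch evaluates ρτ and confirms numerically that minimizing the empirical check loss over the sample points recovers a sample quantile. The function names and the simulated data are assumptions made for this illustration; they are not part of the proposed procedure.

```python
import random


def check_loss(r, tau):
    """Check loss rho_tau(r) = tau * r - r * I(r < 0)."""
    return tau * r - (r if r < 0 else 0.0)


def sample_quantile_by_loss(y, tau):
    """Return the sample point that minimizes the empirical check loss."""
    return min(y, key=lambda a: sum(check_loss(yi - a, tau) for yi in y))


random.seed(0)  # hypothetical data, for illustration only
y = [random.gauss(0.0, 1.0) for _ in range(201)]
median_by_loss = sample_quantile_by_loss(y, 0.5)
print(median_by_loss == sorted(y)[100])
```

With an odd sample size the minimizer at τ = 0.5 is the usual sample median, which is why the comparison against the middle order statistic is meaningful here.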

Suppose that {Ui,Xi,Zi,Yi}, i = 1, …, n, is an independent and identically distributed sample from the model

$$Y = \alpha_{0,\tau}(U) + X^{T}\alpha_\tau(U) + Z^{T}\beta_\tau + \varepsilon_\tau, \tag{2.1}$$

where ετ is random error with conditional τ th quantile being zero. We obtain quantile regression estimates of α0,τ (·), ατ (·) and βτ by minimizing the quantile loss function

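$$\sum_{i=1}^{n} \rho_\tau\{Y_i - \alpha_{0,\tau}(U_i) - X_i^{T}\alpha_\tau(U_i) - Z_i^{T}\beta_\tau\}. \tag{2.2}$$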

Because (2.2) involves both nonparametric and parametric components, which can be estimated at different rates of convergence, we propose a three-stage estimation procedure. In the first stage, we employ local linear regression techniques to derive initial estimates of α0,τ (·), ατ (·) and βτ. Then, in the second and third stages, we further improve the efficiency of the initial estimates of βτ and (α0,τ (·),ατ (·)), respectively.

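For U in the neighborhood of u, we use a local linear approximation

$$\alpha_{j,\tau}(U) \approx \alpha_{j,\tau}(u) + \alpha_{j,\tau}'(u)(U - u) \equiv a_j + b_j(U - u)$$

for j = 0,…, d1. Let {ã0,τ, b̃0,τ, ãτ, b̃τ, β̃τ} be the minimizer of the local weighted quantile loss function

$$\sum_{i=1}^{n} \rho_\tau\bigl[Y_i - a_0 - b_0(U_i - u) - X_i^{T}\{a + b(U_i - u)\} - Z_i^{T}\beta\bigr] K_h(U_i - u),$$

where a = (a1,…, ad1)T, b = (b1,…, bd1)T, K(·) is a given kernel function and Kh(·) = K(·/h)/h with a bandwidth h. Then,

$$\tilde\alpha_{0,\tau}(u) = \tilde a_{0,\tau}, \qquad \tilde\alpha_\tau(u) = \tilde a_\tau.$$

We take {α̃0,τ (u), α̃τ (u), β̃τ} as the initial estimates.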
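To make the first-stage objective concrete, here is a minimal Python sketch that evaluates a local linear, kernel-weighted check loss at a point u for given candidate values of (a0, b0, a, b, β). It only evaluates the objective and does not minimize it. The function names, the data layout (tuples of Ui, Xi, Zi, Yi) and the default choice of the Epanechnikov kernel are assumptions made for this illustration.

```python
def check_loss(r, tau):
    """Check loss rho_tau(r) = tau * r - r * I(r < 0)."""
    return tau * r - (r if r < 0 else 0.0)


def epanechnikov(t):
    """Epanechnikov kernel K(t) = 0.75 * (1 - t^2) on [-1, 1]."""
    return 0.75 * (1.0 - t * t) if abs(t) <= 1.0 else 0.0


def local_qr_objective(data, u, h, tau, a0, b0, a, b, beta, kernel=epanechnikov):
    """Kernel-weighted check loss of a local linear fit around u.

    data is a list of (U_i, X_i, Z_i, Y_i) with X_i and Z_i as sequences.
    """
    total = 0.0
    for ui, xi, zi, yi in data:
        weight = kernel((ui - u) / h) / h  # K_h(U_i - u) = K((U_i - u)/h)/h
        if weight == 0.0:
            continue  # observation lies outside the local window
        du = ui - u
        fit = a0 + b0 * du
        fit += sum(x * (aj + bj * du) for x, aj, bj in zip(xi, a, b))
        fit += sum(z * bj for z, bj in zip(zi, beta))
        total += check_loss(yi - fit, tau) * weight
    return total


# Hypothetical noise-free data generated from an exactly linear local model.
data = [
    (k / 10, (1.0,), (2.0,),
     1.0 + 0.5 * (k / 10 - 0.5) + 1.0 * (2.0 + 1.0 * (k / 10 - 0.5)) + 2.0 * 3.0)
    for k in range(11)
]
at_truth = local_qr_objective(data, 0.5, 0.3, 0.5, 1.0, 0.5, [2.0], [1.0], [3.0])
shifted = local_qr_objective(data, 0.5, 0.3, 0.5, 1.0, 0.5, [2.0], [1.0], [2.5])
print(at_truth, shifted)
```

In practice the minimization would be carried out by a linear programming solver; the sketch only shows how the local weights and the local linear expansion enter the loss.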

We now provide theoretical justifications for the initial estimates. First, we give some notation. Let fτ (·|u, x, z) and Fτ (·|u, x, z) be the density function and cumulative distribution function of the error conditional on (U, X, Z) = (u, x, z), respectively. Denote by fU(·) the marginal density function of the covariate U. The kernel K(·) is chosen as a symmetric density function and we let

We then have the following result.

THEOREM 2.1. Under the regularity conditions given in Section 7, if h →0 and nh → ∞ as n → ∞, then

| (2.3) |

where A1(u) = E[fτ (0|U, X, Z) (1, XT, ZT)T (1, XT, ZT)|U = u] and B1(u) = E[(1, XT, ZT)T (1, XT, ZT)|U = u].

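Theorem 2.1 implies that β̃τ is only a √(nh)-consistent estimator, because we use only data in a local neighborhood of u to estimate βτ. We therefore compute an improved estimator of βτ by plugging the initial estimates of the nonparametric part into the global quantile loss:

$$\hat\beta_\tau = \arg\min_{\beta} \sum_{i=1}^{n} \rho_\tau\{Y_i - \tilde\alpha_{0,\tau}(U_i) - X_i^{T}\tilde\alpha_\tau(U_i) - Z_i^{T}\beta\}. \tag{2.4}$$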

We call it the semi-QR estimator of βτ. The next theorem shows the asymptotic properties of β̂τ.

THEOREM 2.2. Let . Under the regularity conditions given in Section 7, if nh⁴ → 0 and nh²/log(1/h) → ∞ as n → ∞, then the asymptotic distribution of β̂τ is given by

$$\sqrt{n}(\hat\beta_\tau - \beta_\tau) \xrightarrow{D} N(0, S_\tau^{-1}\Xi_\tau S_\tau^{-1}), \tag{2.5}$$

where Sτ = E[fτ (0|U,X,Z)ZZT] and Ξτ = τ(1 − τ)E[{Z − ξτ (U,X,Z)}{Z − ξτ (U,X,Z)}T].

The optimal bandwidth in Theorem 2.1 is h ∼ n^(−1/5). This bandwidth does not satisfy the condition nh⁴ → 0 in Theorem 2.2, since it gives nh⁴ = n^(1/5) → ∞. Hence, in order to obtain the root-n consistency and asymptotic normality for β̂τ, undersmoothing for α̃0,τ (u) and α̃τ (u) is necessary. This is a common requirement in semiparametric models; see Carroll et al. [3] for a detailed discussion.
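The bandwidth conditions can be checked numerically. The sketch below assumes a bandwidth of the form h = n^(−a) (an assumption made for this illustration) and evaluates the two quantities in the conditions of Theorem 2.2. For this family, nh⁴ = n^(1−4a) tends to zero only if a > 1/4, and nh²/log(1/h) = n^(1−2a)/(a log n) diverges only if a < 1/2, so a = 1/5 fails while, for example, a = 1/3 satisfies both conditions.

```python
import math


def rate_conditions(n, a):
    """For h = n**(-a), return (n * h^4, n * h^2 / log(1/h))."""
    h = n ** (-a)
    return n * h ** 4, n * h ** 2 / math.log(1.0 / h)


for n in (10 ** 3, 10 ** 6):
    # a = 1/5 is the optimal smoothing rate; a = 1/3 is an undersmoothing rate.
    print(n, rate_conditions(n, 0.2), rate_conditions(n, 1.0 / 3.0))
```

The first component grows with n when a = 1/5 and shrinks when a = 1/3, which is the sense in which undersmoothing is required.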

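After obtaining the root-n consistent estimator β̂τ, we can further improve the efficiency of α̃0,τ (u) and α̃τ (u). To this end, let {â0,τ, b̂0,τ, âτ, b̂τ} be the minimizer of

$$\sum_{i=1}^{n} \rho_\tau\bigl[Y_i - Z_i^{T}\hat\beta_\tau - a_0 - b_0(U_i - u) - X_i^{T}\{a + b(U_i - u)\}\bigr] K_h(U_i - u).$$

We define

$$\hat\alpha_{0,\tau}(u) = \hat a_{0,\tau}, \qquad \hat\alpha_\tau(u) = \hat a_\tau. \tag{2.6}$$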

THEOREM 2.3. Under the regularity conditions given in Section 7, if h→0 and nh → ∞ as n → ∞, then

| (2.7) |

where A2(u) = E[fτ (0|U,X,Z)(1,XT)T (1, XT)|U = u] and B2(u) = E[(1, XT)T (1, XT)|U = u].

Theorem 2.3 shows that α̂0,τ (u) and α̂τ (u) have the same conditional asymptotic biases as α̃0,τ (u) and α̃τ (u), while they have smaller conditional asymptotic variances than α̃0,τ (u) and α̃τ (u), respectively. Hence, they are asymptotically more efficient than α̃0,τ (u) and α̃τ (u).

3. Semiparametric composite quantile regression

The analysis of semiparametric quantile regression in Section 2 provides a solid foundation for developing the semiparametric composite quantile regression (CQR) estimates. We consider the connection between the quantile regression model (2.1) and model (1.1) in the situations where the random error ε is independent of (U,X, Z). Let us assume that Y = α0(U) + XT α(U) + ZT β + ε, where ε follows a distribution F with mean zero. In such situations, Qτ (u, x, z) = α0(u) + cτ + xT α(u) + zT β, where cτ = F−1(τ). Thus, all quantile regression estimates [α̂τ (u) and β̂τ for all τ] estimate the same target quantities [α(u) and β] with the optimal rate of convergence. Therefore, we can consider combining the information across multiple quantile estimates to obtain improved estimates of α(u) and β. Such an idea has been studied for the parametric regression model, in Zou and Yuan [35], and it leads to the CQR estimator that is shown to enjoy nice asymptotic efficiency properties compared with the classical least-squares estimator. Kai, Li and Zou [13] proposed the local polynomial CQR estimator for estimating the nonparametric regression function and its derivative. It is shown that the local CQR method can significantly improve the estimation efficiency of the local least-squares estimator for commonly used non-normal error distributions. Inspired by these nice results, we study semiparametric CQR estimates for model (1.1).

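Suppose {Ui,Xi,Zi,Yi, i = 1, …, n} is an independent and identically distributed sample from model (1.1) and ε has mean zero. For a given q, let τk = k/(q + 1) for k = 1, 2, …, q. The CQR procedure estimates α0(·), α(·) and β by minimizing the CQR loss function,

$$\sum_{k=1}^{q}\sum_{i=1}^{n} \rho_{\tau_k}\{Y_i - \alpha_{0k}(U_i) - X_i^{T}\alpha(U_i) - Z_i^{T}\beta\},$$

where α0k(u) = α0(u) + cτk is the baseline of the τk-th conditional quantile function. To this end, we adapt the three-stage estimation procedure from Section 2.

First, we derive good initial semi-CQR estimates. Let {ã0, b̃0, ã, b̃, β̃} be the minimizer of the local CQR loss function

$$\sum_{k=1}^{q}\sum_{i=1}^{n} \rho_{\tau_k}\bigl[Y_i - a_{0k} - b_0(U_i - u) - X_i^{T}\{a + b(U_i - u)\} - Z_i^{T}\beta\bigr] K_h(U_i - u),$$

where a0 = (a01, …, a0q)T, a = (a1, …, ad1)T and b = (b1, …, bd1)T. Initial estimates of α0(u) and α (u) are then given by

$$\tilde\alpha_0(u) = \frac{1}{q}\sum_{k=1}^{q} \tilde a_{0k}, \qquad \tilde\alpha(u) = \tilde a. \tag{3.1}$$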
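The composite structure can be sketched in a few lines of Python: the quantile levels are equally spaced, each level has its own intercept, and all levels share the same slope parameters. In the sketch below, the shared part of the fit is assumed to have been subtracted already, so the function receives partial residuals ri (the response minus the covariate contributions) and the q intercepts. The function names and this simplification are assumptions made for illustration.

```python
def check_loss(r, tau):
    """Check loss rho_tau(r) = tau * r - r * I(r < 0)."""
    return tau * r - (r if r < 0 else 0.0)


def quantile_levels(q):
    """Equally spaced quantile levels tau_k = k / (q + 1), k = 1, ..., q."""
    return [k / (q + 1) for k in range(1, q + 1)]


def cqr_loss(partial_residuals, intercepts):
    """Composite check loss: sum over k and i of rho_{tau_k}(r_i - a_{0k}).

    partial_residuals holds r_i, the response with the shared covariate
    contributions removed; intercepts holds one a_{0k} per quantile level.
    """
    taus = quantile_levels(len(intercepts))
    return sum(
        check_loss(r - a0k, tau)
        for tau, a0k in zip(taus, intercepts)
        for r in partial_residuals
    )


print(quantile_levels(9))
print(cqr_loss([-1.0, 0.0, 1.0], [0.0]))  # q = 1 reduces to median regression
```

Averaging the q fitted intercepts is what produces the baseline estimate, while the slope parameters are common to all levels and so pool information across quantiles.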

To investigate asymptotic behaviors of α̃0(u), α̃(u) and β̃, let us begin with some new notation. Denote by f (·) and F(·) the density function and cumulative distribution function of the error, respectively. Let ck = F−1(τk) and C be a q × q diagonal matrix with Cjj = f (cj). Write c = C1, c = 1T C1 and

Let τkk′ = τk ∧ τk′ − τkτk′ and let T be a q × q matrix with the (k, k′) element being τkk′. Write t = T1, t = 1T T1 and

The following theorem describes the asymptotic sampling distribution of {ã0, b̃0, ã, b̃, β̃}.

THEOREM 3.1. Under the regularity conditions given in Section 7, if h → 0 and nh → ∞ as n → ∞, then

where α0(u) = (α0(u)+c1,…, α0(u) + cq)T and β0 is the true value of β.

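With the initial estimates in hand, we are now ready to derive a √n-consistent estimator of β by

$$\hat\beta = \arg\min_{\beta} \sum_{k=1}^{q}\sum_{i=1}^{n} \rho_{\tau_k}\{Y_i - \tilde a_{0k}(U_i) - X_i^{T}\tilde\alpha(U_i) - Z_i^{T}\beta\}, \tag{3.2}$$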

which is called the semi-CQR estimator of β.

THEOREM 3.2. Under the regularity conditions given in Section 7, if nh⁴ → 0 and nh²/log(1/h) → ∞ as n → ∞, then the asymptotic distribution of β̂ is given by

| (3.3) |

where S = E(ZZT) and , with δk(u, x, z) being the kth column of the d2 × q matrix

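Finally, β̂ can also be used to further refine the estimates for the nonparametric part. Let {â0, b̂0, â, b̂} be the minimizer of

$$\sum_{k=1}^{q}\sum_{i=1}^{n} \rho_{\tau_k}\bigl[Y_i - Z_i^{T}\hat\beta - a_{0k} - b_0(U_i - u) - X_i^{T}\{a + b(U_i - u)\}\bigr] K_h(U_i - u),$$

where a0 = (a01, …, a0q)T. We then define the semi-CQR estimators for α0(u) and α(u) as

$$\hat\alpha_0(u) = \frac{1}{q}\sum_{k=1}^{q} \hat a_{0k}, \qquad \hat\alpha(u) = \hat a. \tag{3.4}$$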

We now study the asymptotic properties of α̂0(u) and α̂(u). Let

THEOREM 3.3. Under the regularity conditions given in Section 7, if h → 0 and nh → ∞ as n → ∞, the asymptotic distributions of α̂0(u) and α̂(u) are given by

and

where [·]11 denotes the upper-left q × q submatrix and [·]22 denotes the lower-right d1 × d1 submatrix.

REMARK 1. α(u) and β represent the contributions of covariates. They are the central quantities of interest in semiparametric inference. Li and Liang [21] studied the least-squares-based semiparametric estimation, which we will refer to as “semi-LS” in this work. The major advantage of semi-CQR over the classical semi-LS is that semi-CQR has competitive asymptotic efficiency. Furthermore, semi-CQR is also more stable and robust. Intuitively speaking, these advantages come from the fact that semi-CQR utilizes information shared across multiple quantile functions, whereas semi-LS only uses the information contained in the mean function.

To elaborate on Remark 1, we discuss the relative efficiency of semi-CQR relative to semi-LS. Note that E(Y|U) = α0(U) + E(X|U)T α(U) + E(Z|U)T β. It then follows that Y = E(Y|U) + {X − E(X|U)}T α(U) + {Z − E(Z|U)}T β + ε. Without loss of generality, let us consider the situation in which E(X|U) = 0 and E(Z|U) = 0. Then, all D1(u),D2(u),Σ1(u) and Σ2(u) become block diagonal matrices. Thus, from Theorem 3.3, we have

and

where

and

Note that

with all columns of δ(u, x, z) the same. Thus, Δ = tΔ0 with Δ0 = E[{Z − δ1(U,X,Z)}{Z − δ1(U,X,Z)}T]. It is easy to show that E{δ1(U,X,Z)ZT} = 0 and we then have

Therefore,

| (3.5) |

If we replace R2(q) with 1 in equations (23) and (24), we end up with the asymptotic normal distributions of the semi-LS estimators, as studied in Li and Liang [21]. Thus, R2(q) determines the asymptotic relative efficiency (ARE) of semi-CQR relative to semi-LS. By direct calculations, we see that the ARE for estimating α(u) is R2(q)^(−4/5) and the ARE for estimating β is R2(q)^(−1). It is interesting to see that the same factor, R2(q), also appears in the asymptotic efficiency analysis of parametric CQR [35] and nonparametric local CQR smoothing [13]. The basic message is that, with a relatively large q (q ≥ 9), R2(q) is very close to 1 for the normal errors, but can be much smaller than 1, meaning a huge gain in efficiency, for the commonly seen non-normal errors. It is also shown in [13] that lim q→∞ R2(q)^(−1) ≥ 0.864 and hence lim q→∞ R2(q)^(−4/5) ≥ 0.8896, which implies that when a large q is used, the ARE is at least 88.9% for estimating varying-coefficient functions and at least 86.4% for estimating parametric components.
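As a numerical illustration of these efficiency statements, the sketch below computes the factor for standard normal errors. It assumes that R2(q) equals t/c², with t = 1ᵀT1 and c = 1ᵀC1 as in the notation of this section; that explicit form is an assumption here, since the defining display is not reproduced above. The function name is also hypothetical.

```python
from statistics import NormalDist


def r2_normal(q):
    """Assumed form R2(q) = t / c^2 for N(0, 1) errors.

    t sums tau_k ^ tau_k' - tau_k * tau_k' over all pairs (k, k'), and
    c sums the error density evaluated at the tau_k-th error quantiles.
    """
    nd = NormalDist()
    taus = [k / (q + 1) for k in range(1, q + 1)]
    t = sum(min(s, u) - s * u for s in taus for u in taus)
    c = sum(nd.pdf(nd.inv_cdf(tau)) for tau in taus)
    return t / c ** 2


r2 = r2_normal(9)
are_beta = r2 ** -1.0     # ARE for the parametric part, per the text
are_alpha = r2 ** -0.8    # ARE for the varying coefficients, per the text
print(r2, are_beta, are_alpha)
```

Under this assumed form, q = 9 gives a value of R2 only slightly above 1 for normal errors, which matches the statement that little efficiency is lost in the normal case.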

REMARK 2. The baseline function estimator α̂0(u) converges to α0(u) plus the average of uniform quantiles of the error distribution. Therefore, the bias term is zero when the error distribution is symmetric. Even for asymmetric distributions, the additional bias term converges, as q becomes large, to the mean of the error, which is zero. Nevertheless, its asymptotic variance differs from that of the semi-LS estimator by a factor of R1(q). The study in Kai, Li and Zou [13] shows that R1(q) approaches 1 as q becomes large and R1(q) could be much smaller than 1 with a smaller q (q ≤ 9) for commonly used non-normal distributions.
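The behavior of the additional bias term in Remark 2 can be illustrated numerically. The sketch below averages the error quantiles at the levels τk = k/(q + 1) for a symmetric error (standard normal) and for an asymmetric mean-zero error. The centered exponential distribution used here is a hypothetical choice made only for this illustration, as are the function names.

```python
import math
from statistics import NormalDist


def average_quantile(q, inv_cdf):
    """Average of the error quantiles at tau_k = k / (q + 1), k = 1, ..., q."""
    return sum(inv_cdf(k / (q + 1)) for k in range(1, q + 1)) / q


def centered_exp_inv_cdf(tau):
    """Quantile function of Exp(1) - 1, an asymmetric error with mean zero."""
    return -math.log(1.0 - tau) - 1.0


symmetric_shift = average_quantile(9, NormalDist().inv_cdf)
shift_q9 = average_quantile(9, centered_exp_inv_cdf)
shift_q99 = average_quantile(99, centered_exp_inv_cdf)
print(symmetric_shift, shift_q9, shift_q99)
```

The symmetric case gives an average of essentially zero for any q, while the asymmetric case gives a nonzero shift that shrinks toward the error mean of zero as q increases.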

REMARK 3. The factors R1(q) and R2(q) only depend on the error distribution. We have observed from our simulation study that, as a function of q, the maximum of R2(q) is often closely approximated by R2 (q = 9). Hence, if we only care about the inference of α(u) and β, then q = 9 seems to be a good default value. On the other hand, R1 (q = 5) is often close to the maximum of R1(q) based on our numerical study and hence q = 5 is a good default value for estimating the baseline function. If prediction accuracy is the primary interest, then we should use a proper q to maximize the total contributions from R1(q) and R2(q). Practically speaking, one can choose a q from the interval [5, 9] by some popular tuning methods such as K-fold cross-validation. However, we do not expect these CQR models to have significant differences in terms of model fitting and prediction because, in many cases, R1(q) and R2(q) vary little in the interval [5, 9].

4. Variable selection

Variable selection is a crucial step in high-dimensional modeling. Various powerful penalization methods have been developed for variable selection in parametric models; see Fan and Li [7] for a good review. In the literature, there are only a few papers on variable selection in semiparametric regression models. Li and Liang [21] proposed the nonconcave penalized quasi-likelihood method for variable selection in semiparametric varying-coefficient models. In this section, we study the penalized semiparametric CQR estimator.

Let pλn(·) be a pre-specified penalty function with regularization parameter λn. We consider the penalized CQR loss

| (4.1) |

By minimizing the above objective function with a proper penalty parameter λn, we can get a sparse estimator of β and hence conduct variable selection.

Fan and Li [6] suggested using a concave penalty function since it is able to produce an oracular estimator, that is, the penalized estimator performs as well as if the subset model were known in advance. However, optimizing (4.1) with a concave penalty function is very challenging because the objective function is nonconvex and both loss and penalty parts are nondifferentiable. Various numerical algorithms have been proposed to address the computational difficulties. Fan and Li [6] suggested using local quadratic approximation (LQA) to substitute for the penalty function and then optimizing using the Newton–Raphson algorithm. Hunter and Li [12] further proposed a perturbed version of LQA to alleviate one drawback of LQA. Recently, Zou and Li [34] proposed a new unified algorithm based on local linear approximation (LLA) and further suggested using the one-step LLA estimator because the one-step LLA automatically adopts a sparse representation and is as efficient as the fully iterated LLA estimator. Thus, the one-step LLA estimator is computationally and statistically efficient.

We propose to follow the one-step sparse estimate scheme in Zou and Li [34] to derive a one-step sparse semi-CQR estimator, as follows. First, we compute the unpenalized semi-CQR estimate β̂(0), as described in Section 3. We then define

We define β̂OSE = argminβ Gn(β) and call this the one-step sparse semi-CQR estimator. Indeed, this is a weighted L1 regularization procedure.
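The weighted L1 structure of the one-step estimator can be sketched as follows. In a one-step LLA scheme, the weight on |βj| is the derivative of the penalty evaluated at the initial estimate. The code below uses the SCAD derivative of Fan and Li [6] with the conventional constant a = 3.7 and shows only the penalty part; any scaling constant in front of the penalty and the loss part of Gn(β) are omitted. The function names and the numerical inputs are assumptions made for this illustration.

```python
def scad_derivative(theta, lam, a=3.7):
    """Derivative of the SCAD penalty at |theta|.

    Equals lam for |theta| <= lam, decays linearly on (lam, a * lam],
    and is zero beyond a * lam.
    """
    theta = abs(theta)
    if theta <= lam:
        return lam
    return max(a * lam - theta, 0.0) / (a - 1.0)


def one_step_weights(beta_init, lam):
    """Weights p'_lam(|beta_init_j|) applied to |beta_j| in the one-step penalty."""
    return [scad_derivative(b, lam) for b in beta_init]


def weighted_l1_penalty(beta, beta_init, lam):
    """Weighted L1 penalty sum_j p'_lam(|beta_init_j|) * |beta_j|."""
    return sum(w * abs(b) for w, b in zip(one_step_weights(beta_init, lam), beta))


# Hypothetical unpenalized initial estimate and tuning parameter.
beta_init = [3.0, 1.5, 0.02]
weights = one_step_weights(beta_init, lam=0.3)
print(weights)
```

Large initial coefficients receive zero weight and are therefore left unpenalized, while coefficients near zero receive the full weight λ, which is what drives the sparsity of the one-step estimator.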

We now show that the one-step sparse semi-CQR estimator β̂OSE enjoys the oracle property. This property holds for a wide class of concave penalties. To fix ideas, we focus on the SCAD penalty from Fan and Li [6], which is perhaps the most popular concave penalty in the literature. Let β0 = (β10ᵀ, β20ᵀ)ᵀ denote the true value of β, where β10 is an s-vector. Without loss of generality, we assume that β20 = 0 and that β10 contains all nonzero components of β0. Furthermore, let Z1 be the first s elements of Z and define

THEOREM 4.1 (Oracle property). Let pλ(·) be the SCAD penalty. Assume that the regularity conditions (B1)–(B6) given in the Appendix hold. If √n λn → ∞, λn → 0, nh⁴ → 0 and nh²/log(1/h) → ∞ as n → ∞, then the one-step semi-CQR estimator β̂OSE must satisfy:

- sparsity, that is, β̂OSE,2 = 0, with probability tending to one, where β̂OSE,2 denotes the subvector of β̂OSE corresponding to β20;

- asymptotic normality, that is,

where with λk(u, x, z) being the kth column of the matrix λ(u, x, z).(4.2)

Theorem 4.1 shows the asymptotic magnitude of the optimal λn. For a given data set with finite sample, it is practically important to have a data-driven method to select a good λn. Various techniques have been proposed in previous studies, such as the generalized cross-validation selector [6] and the BIC selector [27]. In this work, we use a BIC-like criterion to select the penalization parameter. The BIC criterion is defined as

where dfλ is the number of nonzero coefficients in the parametric part of the fitted model. We let λ̂BIC = argmin BIC(λ). The performance of λ̂BIC will be examined in our simulation studies in the next section.

REMARK 4. Variable selection in linear quantile regression has been considered in several papers; see Li and Zhu [22] and Wu and Liu [30]. The method developed for sparse semiparametric CQR can be easily adapted for variable selection in semiparametric quantile regression. Consider the penalized check loss

| (4.3) |

For its one-step version, we use

| (4.4) |

where β̂(0) denotes the unpenalized semiparametric quantile regression estimator defined in Section 2. We can also prove the oracle property of the one-step sparse semiparametric quantile regression estimator by following the lines of proof for Theorem 4.1. For reasons of brevity, we omit the details here.

5. Numerical studies

In this section, we conduct simulation studies to assess the finite-sample performance of the proposed procedures and illustrate the proposed methodology on a real-world data set in a health study. In all examples, we fix the kernel function to be the Epanechnikov kernel, that is, K(u) = 0.75(1 − u²)₊, where (·)₊ denotes the positive part, and we use the SCAD penalty function for variable selection. Note that all proposed estimators, including semi-QR, semi-CQR and one-step sparse semi-CQR, can be formulated as linear programming (LP) problems. In our study, we computed these estimators by using LP tools.

EXAMPLE 1. In this example, we generate 400 random samples, each consisting of n = 200 observations, from the model

where α1(U) = sin(6πU), α2(U) = sin(2πU), β1 = 2, β2 = 1 and β3 = 0.5. The covariate U is from the uniform distribution on [0, 1]. The covariates X1,X2,Z1,Z2 are jointly normally distributed with mean 0, variance 1 and correlation 2/3. The covariate Z3 is Bernoulli with Pr(Z3 = 1) = 0.4. Furthermore, U and (X1,X2,Z1,Z2,Z3) are independent. In our simulation, we considered the following error distributions: N(0, 1), logistic, standard Cauchy, t-distribution with 3 degrees of freedom, mixture of normals 0.9N(0, 1) + 0.1N(0, 10²) and log-normal distribution. Because the error is independent of the covariates, the least-squares (LS), quantile regression (QR) and composite quantile regression (CQR) procedures provide estimates for the same quantity and hence are directly comparable.

Performance of β̂τ and β̂

To examine the performance of the proposed procedures with a wide range of bandwidths, three bandwidths for LS were considered, h = 0.085, 0.128, 0.192, which correspond to undersmoothing, appropriate smoothing and oversmoothing, respectively. By straightforward calculation, as in Kai, Li and Zou [13], we can produce two simple formulas for the asymptotic optimal bandwidths for QR and CQR: hCQR = hLS · R2(q)^(1/5) and hQR,τ = hLS · {τ(1 − τ)/f[F⁻¹(τ)]}^(1/5), where hLS is the asymptotic optimal bandwidth for LS. We considered only the case of normal error. The bias and standard deviation based on 400 simulations are reported in Table 1. First, we see that the estimators are not very sensitive to the choice of bandwidth. As for the estimation accuracy, all three estimators have comparable bias and the differences are shown in standard deviation. The LS estimates have the smallest standard deviation, as expected. The CQR estimates are slightly worse than the LS estimates.

Table 1.

Summary of the bias and standard deviation over 400 simulations

| h | Method | β̂1: Bias (SD) | β̂2: Bias (SD) | β̂3: Bias (SD) |
|---|---|---|---|---|
| 0.085 | LSE | −0.012 (0.128) | 0.008 (0.121) | −0.009 (0.171) |
| 0.085 | CQR9 | −0.009 (0.131) | 0.009 (0.125) | −0.007 (0.172) |
| 0.085 | QR0.25 | −0.017 (0.163) | 0.009 (0.161) | −0.151 (0.237) |
| 0.085 | QR0.50 | −0.012 (0.155) | 0.011 (0.151) | −0.007 (0.198) |
| 0.085 | QR0.75 | −0.007 (0.165) | 0.005 (0.158) | 0.122 (0.216) |
| 0.128 | LSE | −0.009 (0.121) | 0.005 (0.117) | −0.008 (0.164) |
| 0.128 | CQR9 | −0.010 (0.127) | 0.008 (0.121) | −0.005 (0.163) |
| 0.128 | QR0.25 | −0.010 (0.159) | 0.003 (0.152) | −0.082 (0.227) |
| 0.128 | QR0.50 | −0.008 (0.154) | 0.011 (0.147) | −0.004 (0.193) |
| 0.128 | QR0.75 | −0.012 (0.163) | 0.003 (0.161) | 0.071 (0.207) |
| 0.192 | LSE | −0.007 (0.128) | 0.001 (0.123) | −0.008 (0.169) |
| 0.192 | CQR9 | −0.009 (0.131) | 0.005 (0.127) | −0.005 (0.169) |
| 0.192 | QR0.25 | −0.006 (0.169) | −0.004 (0.169) | −0.061 (0.230) |
| 0.192 | QR0.50 | −0.005 (0.153) | 0.006 (0.152) | −0.007 (0.191) |
| 0.192 | QR0.75 | −0.012 (0.170) | 0.007 (0.171) | 0.049 (0.225) |

In the second study, we fixed h = 0.128 and compared the efficiency of QR and CQR relative to LS. Reported in Table 2 are RMSEs, the ratios of the MSE of the LS estimator to the MSEs of the QR and CQR estimators for different error distributions, so that a value greater than 1 indicates an efficiency gain over LS. Several observations can be made from Table 2. When the error follows the normal distribution, the RMSEs of CQR are close to 1 (0.920, 0.932 and 1.011). For the non-normal distributions in the table, the RMSEs of CQR can be much greater than 1, indicating a huge gain in efficiency. These findings agree with the asymptotic theory. For QR estimators, their performance varies and depends heavily on the level of quantile and the error distribution. Overall, CQR outperforms both QR and LS.

Table 2.

Summary of the ratio of MSE over 400 simulations

| Error distribution | Method | β̂1: RMSE | β̂2: RMSE | β̂3: RMSE |
|---|---|---|---|---|
| Standard normal | CQR9 | 0.920 | 0.932 | 1.011 |
| Standard normal | QR0.25 | 0.585 | 0.594 | 0.460 |
| Standard normal | QR0.50 | 0.621 | 0.631 | 0.724 |
| Standard normal | QR0.75 | 0.554 | 0.528 | 0.561 |
| Logistic | CQR9 | 1.044 | 1.083 | 1.016 |
| Logistic | QR0.25 | 0.651 | 0.664 | 0.502 |
| Logistic | QR0.50 | 0.826 | 0.871 | 0.799 |
| Logistic | QR0.75 | 0.661 | 0.732 | 0.527 |
| Standard Cauchy | CQR9 | 15,246 | 106,710 | 52,544 |
| Standard Cauchy | QR0.25 | 8894 | 56,704 | 24,359 |
| Standard Cauchy | QR0.50 | 19,556 | 137,109 | 66,560 |
| Standard Cauchy | QR0.75 | 8223 | 62,282 | 26,210 |
| t-distribution with df = 3 | CQR9 | 1.554 | 1.546 | 1.683 |
| t-distribution with df = 3 | QR0.25 | 1.000 | 0.948 | 0.819 |
| t-distribution with df = 3 | QR0.50 | 1.354 | 1.333 | 1.451 |
| t-distribution with df = 3 | QR0.75 | 0.935 | 1.059 | 0.859 |
| 0.9N(0, 1) + 0.1N(0, 10²) | CQR9 | 5.752 | 4.860 | 5.152 |
| 0.9N(0, 1) + 0.1N(0, 10²) | QR0.25 | 3.239 | 3.096 | 2.300 |
| 0.9N(0, 1) + 0.1N(0, 10²) | QR0.50 | 5.430 | 4.730 | 4.994 |
| 0.9N(0, 1) + 0.1N(0, 10²) | QR0.75 | 3.790 | 2.952 | 2.515 |
| Log-normal | CQR9 | 3.079 | 3.369 | 3.732 |
| Log-normal | QR0.25 | 5.198 | 5.361 | 3.006 |
| Log-normal | QR0.50 | 2.787 | 2.829 | 3.139 |
| Log-normal | QR0.75 | 0.819 | 0.868 | 0.823 |

Performance of α̂τ and α̂

We now compare the LS, QR and CQR estimates for α by using the ratio of average squared errors (RASE). We first compute

where {uk: k = 1, …, ngrid} is a set of grid points uniformly placed on [0, 1] with ngrid = 200. RASE is then defined to be

$$\mathrm{RASE}(\hat g) = \frac{\mathrm{ASE}(\hat g_{\mathrm{LS}})}{\mathrm{ASE}(\hat g)} \tag{5.1}$$

for an estimator ĝ, where ĝLS is the least-squares-based estimator.
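The computation of this criterion can be sketched as follows. The sketch assumes that the average squared error of an estimated function is the mean of its squared errors over the grid, and that RASE divides the ASE of the least-squares-based estimator by the ASE of the estimator under study, so that values above 1 favor the latter. Both forms, the endpoint-inclusive grid and the toy estimators are assumptions made for this illustration.

```python
import math


def ase(g_hat, g_true, grid):
    """Average squared error of g_hat against g_true over the grid points."""
    return sum((g_hat(u) - g_true(u)) ** 2 for u in grid) / len(grid)


def rase(g_hat, g_ls, g_true, grid):
    """Ratio of average squared errors: ASE(LS estimator) / ASE(g_hat)."""
    return ase(g_ls, g_true, grid) / ase(g_hat, g_true, grid)


# 200 equally spaced grid points on [0, 1], endpoints included (an assumption).
grid = [k / 199 for k in range(200)]


def g_true(u):
    return math.sin(2.0 * math.pi * u)


def g_hat(u):
    return g_true(u) + 0.1  # hypothetical estimator with constant error 0.1


def g_ls(u):
    return g_true(u) + 0.2  # hypothetical LS estimator with constant error 0.2


ratio = rase(g_hat, g_ls, g_true, grid)
print(ratio)
```

In this toy case the LS error is twice as large at every grid point, so the ratio is close to 4.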

The sample mean and standard deviation of the RASEs over 400 simulations are presented in Table 3, where the values in the parentheses are the standard deviations. The findings are quite similar to those in Table 2. We see that CQR performs almost as well as LS when the error is normally distributed. Also, its RASEs are much larger than 1 for other non-normal error distributions. The efficiency gain can be substantial. Note that for the Cauchy distribution, RASEs of QR and CQR are huge—this is because LS fails when the error variance is infinite.

Table 3.

Summary of the RASE over 400 simulations

| Method | Normal | Logistic | Cauchy | t3 | Mixture | Log-normal |
|---|---|---|---|---|---|---|
| CQR9 | 0.968 (0.104) | 1.040 (0.134) | 12,872 (176719) | 1.428 (1.299) | 3.292 (1.405) | 2.455 (1.498) |
| QR0.25 | 0.666 (0.160) | 0.720 (0.203) | 7621 (110692) | 0.958 (0.647) | 2.029 (1.003) | 3.490 (3.224) |
| QR0.50 | 0.771 (0.184) | 0.881 (0.206) | 13,720 (187298) | 1.274 (1.166) | 3.155 (1.323) | 2.155 (1.674) |
| QR0.75 | 0.681 (0.191) | 0.713 (0.201) | 5781 (87909) | 0.896 (0.325) | 1.953 (0.905) | 0.824 (0.679) |

EXAMPLE 2. The goal is to compare the proposed one-step sparse semi-CQR estimator with the one-step sparse semi-LS estimator. In this example, 400 random samples, each consisting of n = 200 observations, were generated from the varying-coefficient partially linear model

where β = (3, 1.5, 0, 0, 2, 0, 0, 0)T and the covariate vector (X1, X2, ZT)T is normally distributed with mean 0, variance 1 and correlation 0.5^|i−j| (i, j = 1,…, 10). Other model settings are exactly the same as those in Example 1. We use the generalized mean square error (GMSE), as defined in [21],

$$\mathrm{GMSE}(\hat\beta) = (\hat\beta - \beta)^{T} E(ZZ^{T})(\hat\beta - \beta), \tag{5.2}$$

to assess the performance of variable selection procedures for the parametric component. For each procedure, we calculate the relative GMSE (RGMSE), which is defined to be the ratio of the GMSE of a selected final model to that of the unpenalized least-squares estimate under the full model.

The results over 400 simulations are summarized in Table 4, where the column “RGMSE” reports both the median and MAD of 400 RGMSEs. Both columns “C” and “IC” are measures of model complexity. Column “C” shows the average number of zero coefficients correctly estimated to be zero and column “IC” presents the average number of nonzero coefficients incorrectly estimated to be zero. In the column labeled “U-fit” (short for “under-fit”), we present the proportion of the 400 replications in which at least one nonzero coefficient was excluded. Likewise, we report the proportion of trials selecting the exact subset model and the proportion of trials including all three significant variables and some noise variables in the columns “C-fit” (“correct-fit”) and “O-fit” (“over-fit”), respectively.
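The selection measures described above can be computed per replication as in the following sketch, which counts correctly and incorrectly zeroed coefficients and classifies the fit as under-fit, correct-fit or over-fit. The function name, the returned dictionary layout and the example estimates are assumptions made for this illustration.

```python
def selection_summary(beta_hat, beta_true):
    """Summarize variable selection for one fitted model.

    C counts true zeros estimated as zero, IC counts true nonzeros estimated
    as zero, and fit is 'under' if any true nonzero is dropped, 'correct' if
    the selected set equals the true set, and 'over' otherwise.
    """
    c = sum(1 for bh, bt in zip(beta_hat, beta_true) if bt == 0 and bh == 0)
    ic = sum(1 for bh, bt in zip(beta_hat, beta_true) if bt != 0 and bh == 0)
    n_true_zeros = sum(1 for bt in beta_true if bt == 0)
    if ic > 0:
        fit = "under"
    elif c == n_true_zeros:
        fit = "correct"
    else:
        fit = "over"
    return {"C": c, "IC": ic, "fit": fit}


beta_true = [3, 1.5, 0, 0, 2, 0, 0, 0]  # true coefficients in Example 2
# Hypothetical fitted coefficient vectors from three replications.
exact = selection_summary([2.9, 1.4, 0, 0, 2.1, 0, 0, 0], beta_true)
under = selection_summary([2.9, 0, 0, 0, 2.1, 0, 0.3, 0], beta_true)
over = selection_summary([2.9, 1.4, 0.1, 0, 2.0, 0, 0, 0], beta_true)
print(exact, under, over)
```

Averaging C and IC over replications and tabulating the three fit labels gives the kind of summary reported in the columns of Table 4.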

Table 4.

One-step estimates for variable selection in semiparametric models

| Error distribution | Method | RGMSE: Median (MAD) | No. of zeros: C | No. of zeros: IC | Proportion of fits: U-fit | Proportion of fits: C-fit | Proportion of fits: O-fit |
|---|---|---|---|---|---|---|---|
| Standard normal | One-step LS | 0.335 (0.194) | 4.825 | 0.000 | 0.000 | 0.867 | 0.133 |
| Standard normal | One-step CQR | 0.288 (0.213) | 4.990 | 0.000 | 0.000 | 0.990 | 0.010 |
| Logistic | One-step LS | 0.352 (0.197) | 4.805 | 0.000 | 0.000 | 0.870 | 0.130 |
| Logistic | One-step CQR | 0.289 (0.206) | 4.975 | 0.000 | 0.000 | 0.975 | 0.025 |
| Standard Cauchy | One-step LS | 0.956 (0.249) | 2.920 | 0.795 | 0.595 | 0.108 | 0.297 |
| Standard Cauchy | One-step CQR | 0.005 (0.021) | 5.000 | 0.295 | 0.210 | 0.790 | 0.000 |
| t-distribution with df = 3 | One-step LS | 0.346 (0.179) | 4.803 | 0.000 | 0.000 | 0.860 | 0.140 |
| t-distribution with df = 3 | One-step CQR | 0.183 (0.177) | 4.987 | 0.000 | 0.000 | 0.988 | 0.013 |
| 0.9N(0, 1) + 0.1N(0, 10²) | One-step LS | 0.331 (0.190) | 4.848 | 0.000 | 0.000 | 0.883 | 0.117 |
| 0.9N(0, 1) + 0.1N(0, 10²) | One-step CQR | 0.060 (0.083) | 4.997 | 0.000 | 0.000 | 0.998 | 0.003 |
| Log-normal | One-step LS | 0.303 (0.182) | 4.845 | 0.000 | 0.000 | 0.887 | 0.113 |
| Log-normal | One-step CQR | 0.111 (0.118) | 4.990 | 0.000 | 0.000 | 0.990 | 0.010 |

From Table 4, we first see that both variable selection procedures dramatically reduce model errors, which clearly shows the virtue of variable selection. Second, the one-step CQR performs better than the one-step LS in terms of all of the criteria (RGMSE, number of zeros and proportion of fits) and for all of the error distributions in Table 4. It is also interesting to see that in the normal error case, the one-step CQR seems to perform no worse than the one-step LS (or even slightly better). We performed the Mann–Whitney test to compare their RGMSEs and the corresponding p-value is 0.0495. This observation appears to be contradictory to the asymptotic theory. However, this “contradiction” can be explained by observing that the one-step CQR has better variable selection performance. Note that the one-step CQR has a significantly higher probability of correct selection than the one-step LS, which also tends to overselect. Thus, the one-step LS needs to estimate a larger model than the truth, compared to the one-step CQR.

EXAMPLE 3. As an illustration, we apply the proposed procedures to analyze the plasma beta-carotene level data set collected in a cross-sectional study [24]. This data set consists of 273 samples. Of interest are the relationships between the plasma beta-carotene level and the following covariates: age, smoking status, Quetelet index (BMI), vitamin use, number of calories, grams of fat, grams of fiber, number of alcoholic drinks, cholesterol and dietary beta-carotene. The complete description of the data can be found in the StatLib database via the link lib.stat.cmu.edu/datasets/Plasma_Retinol.

We fit the data by using a partially linear model with U being “dietary beta-carotene.” The covariates “smoking status” and “vitamin use” are categorical and are thus replaced with dummy variables. All of the other covariates are standardized. We applied the one-step sparse CQR and LS estimators to fit the partially linear regression model. Five-fold cross-validation was used to select the bandwidths for LS and CQR. We used the first 200 observations as a training data set to fit the model and to select significant variables, then used the remaining 73 observations to evaluate the predictive ability of the selected model.

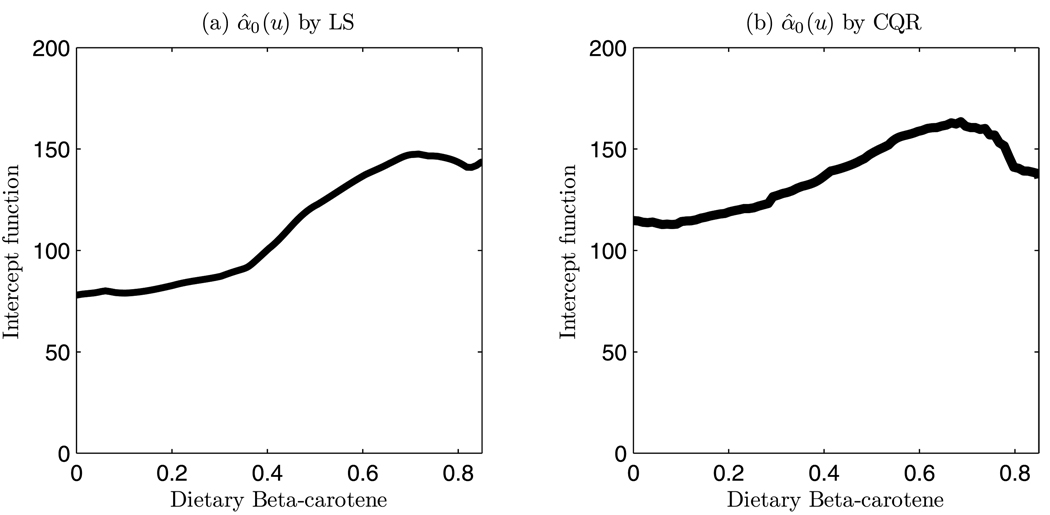

The prediction performance is measured by the median absolute prediction error (MAPE), which is the median of {|yi − ŷi|, i = 1, 2,…, 73}. To see the effect of q on the CQR estimate, we tried q = 5, 7, 9. We found that the selected Z-variables are the same for these three values of q and their MAPEs are 58.52, 58.11, 62.43, respectively. Thus, the effect of q is minor. The resulting model with q = 7 is given in Table 5 and the estimated intercept function is depicted in Figure 1. From Table 5, it can be seen that the CQR model is much sparser than the LS model. Only two covariates, “fiber consumption per day” and “fairly often use of vitamin” are included in the parametric part of the CQR model. Meanwhile, the CQR model has much better prediction performance than the LS model, whose MAPE is 111.28.
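The MAPE criterion described above is simple to compute. The sketch below uses a hypothetical function name and made-up responses and predictions; it only illustrates the definition as the median of the absolute prediction errors on the test set.

```python
from statistics import median


def mape(y_true, y_pred):
    """Median absolute prediction error: median of |y_i - yhat_i|."""
    return median(abs(y - yhat) for y, yhat in zip(y_true, y_pred))


# Hypothetical test-set responses and predictions, for illustration only.
y_test = [120.0, 85.0, 310.0, 42.0, 190.0]
y_hat = [100.0, 90.0, 250.0, 60.0, 185.0]
print(mape(y_test, y_hat))
```

Because it is a median rather than a mean, this criterion is not dominated by a few very large prediction errors.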

Table 5.

Selected parametric components for plasma beta-carotene level data

| Covariate | LS | CQR |
|---|---|---|
| Age | 0 | 0 |
| Quetelet index | 0 | 0 |
| Calories | −100.47 | 0 |
| Fat | 52.60 | 0 |
| Fiber | 87.51 | 29.89 |
| Alcohol | 44.61 | 0 |
| Cholesterol | 0 | 0 |
| Smoking status (never) | 51.71 | 0 |
| Smoking status (former) | 72.48 | 0 |
| Vitamin use (yes, fairly often) | 130.39 | 30.21 |
| Vitamin use (yes, not often) | 0 | 0 |
| MAPE | 111.28 | 58.11 |

Fig. 1.

Plot of estimated intercept function of dietary beta-carotene: (a) the estimated intercept function by LS method; (b) the estimated intercept function by CQR method with q = 7.

6. Discussion

We discuss some directions in which this work could be further extended. We have focused on using uniform weights in composite quantile regression. In theory, we can use nonuniform weights, which may provide an even more efficient estimator when a reliable estimate of the error distribution is available. Koenker [16] discussed the theoretically optimal weights. Bradic, Fan and Wang [1] suggested a data-driven weighted CQR for parametric linear regression, in which the weights mimic the optimal weights. The idea in Bradic, Fan and Wang [1] can be easily extended to the semi-CQR estimator, which will be investigated in detail in a future paper.
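To make the role of the weights concrete, the following sketch evaluates a weighted composite quantile loss for an ordinary parametric linear model, not the local semi-CQR objective of this paper. It assumes equally spaced quantile levels τk = k/(q + 1), a common choice in the CQR literature; the function names are hypothetical. Passing no weights gives the uniform-weight case.

```python
def check_loss(r, tau):
    """Quantile check loss rho_tau(r) = r * (tau - I(r < 0))."""
    return r * (tau - (1.0 if r < 0 else 0.0))


def cqr_objective(y, X, intercepts, beta, weights=None):
    """Weighted composite quantile loss for a linear model.

    intercepts[k] is the intercept attached to the k-th quantile level;
    beta is shared across all levels. weights=None means uniform weights.
    """
    q = len(intercepts)
    taus = [(k + 1) / (q + 1) for k in range(q)]  # assumed equally spaced levels
    if weights is None:
        weights = [1.0] * q
    total = 0.0
    for w, tau, a in zip(weights, taus, intercepts):
        for yi, xi in zip(y, X):
            fit = a + sum(b * x for b, x in zip(beta, xi))
            total += w * check_loss(yi - fit, tau)
    return total


# Tiny made-up data set: two observations, one covariate.
y = [1.0, 3.0]
X = [[0.0], [0.0]]
print(cqr_objective(y, X, intercepts=[2.0], beta=[0.0]))                 # uniform weights
print(cqr_objective(y, X, intercepts=[2.0], beta=[0.0], weights=[2.0]))  # nonuniform weight
```

A data-driven weighting scheme would replace the `weights` argument with values estimated from the error distribution; how to choose them is outside the scope of this sketch.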

Penalized Wilcoxon rank regression has been considered independently in Leng [20] and Wang and Li [29] and found to achieve an efficiency property similar to that of CQR for variable selection in parametric linear regression. We could also generalize rank regression to handle semiparametric varying-coefficient partially linear models. In a working paper, we show that rank regression is exactly equivalent to CQR using q = n − 1 quantiles with uniform weights. This result indicates that CQR is more flexible than rank regression because we can easily use flexible nonuniform weights in CQR to further improve efficiency, as in Bradic, Fan and Wang [1]. Obviously, CQR is also computationally more efficient than rank regression. We note that in parametric linear regression models, rank regression has no efficiency gain over least-squares for estimating the intercept. This result is expected to hold for estimating the baseline function in the semiparametric varying-coefficient partially linear model.

When the number of varying coefficient components is large, it is also desirable to consider selecting a few important components. This problem was studied in Wang and Xia [28], where a LASSO-type penalized local least-squares estimator was proposed. It would be interesting to apply CQR to their method to further improve the estimation efficiency.

7. Proofs

To establish the asymptotic properties of the proposed estimators, the following regularity conditions are imposed:

- (C1) the random variable U has bounded support Ω and its density function fU(·) is positive and has a continuous second derivative;

- (C2) the varying coefficients α0(·) and α(·) have continuous second derivatives in u ∈ Ω;

- (C3) K(·) is a symmetric density function with bounded support and satisfies a Lipschitz condition;

- (C4) the random vector Z has bounded support;

- (C5) for the semi-QR procedure,

  - Fτ(0|u, x, z) = τ for all (u, x, z), and fτ(·|u, x, z) is bounded away from zero and has a continuous and uniformly bounded derivative;

  - A1(u) defined in Theorem 2.1 and A2(u) defined in Theorem 2.3 are nonsingular for all u ∈ Ω;

- (C6) for the semi-CQR procedure,

  - f(·) is bounded away from zero and has a continuous and uniformly bounded derivative;

  - D1(u) defined in Theorem 3.1 and D2(u) defined in Theorem 3.3 are nonsingular for all u ∈ Ω.

Although the proposed semi-QR and semi-CQR procedures require different regularity conditions, the proofs follow similar strategies. For brevity, we only present the detailed proofs for the semi-CQR procedure. The detailed proofs for the semi-QR procedure were given in the earlier version of this paper. Lemma 7.1 below, which is a direct result of Mack and Silverman [23], will be used repeatedly in our proofs. Throughout the proofs, identities of the form G(u) = O_p(a_n) always stand for sup_{u∈Ω} |G(u)| = O_p(a_n).

LEMMA 7.1. Let (X1, Y1),…, (Xn, Yn) be i.i.d. random vectors, where the Yi’s are scalar random variables. Assume, further, that E|Y|^r < ∞ and that sup_x ∫ |y|^r f(x, y) dy < ∞, where f denotes the joint density of (X, Y). Let K be a bounded positive function with bounded support, satisfying a Lipschitz condition. Then,

provided that n^{2ε−1}h → ∞ for some ε < 1 − r^{−1}.

Let ηi,k = I (εi ≤ ck)−τk and , where . Furthermore, let , where ek is a q-vector with 1 at the kth position and 0 elsewhere.

In the proof of Theorem 3.1, we will first show the following asymptotic representation of {ã0, b̃0, ã, b̃, β̃}:

| (7.1) |

where S* (u) = diag{D1(u), cµ2B2(u)} and

The asymptotic normality of {ã0, b̃0, ã, b̃, β̃} then follows by demonstrating the asymptotic normality of .

PROOF OF THEOREM 3.1. Recall that {ã0, ã, β̃, b̃0, b̃} minimizes

We write , where . Then, θ̃* is also the minimizer of

where Ki(u) = K{(Ui − u)/h}. By applying the identity [14]

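$$\rho_\tau(x - y) - \rho_\tau(x) = y\{I(x \le 0) - \tau\} + \int_0^y \{I(x \le z) - I(x \le 0)\}\,dz, \tag{7.2}$$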

we have

where

Since is a summation of i.i.d. random variables of the kernel form, it follows, by Lemma 7.1, that

The conditional expectation of can be calculated as

Then,

It can be shown that . Therefore, we can write Ln(θ*) as

By applying the convexity lemma [25] and the quadratic approximation lemma [4], the minimizer of can be expressed as

| (7.3) |

which holds uniformly for u ∈ Ω. Meanwhile, for any point u ∈ Ω, we have

| (7.4) |

Note that S* (u) = diag{D1(u), cµ2B2(u)} is a quasi-diagonal matrix. So,

| (7.5) |

where . Let

Note that

By some calculations, we have that . By the Cramér–Wold theorem, the central limit theorem for Wn,1(u) holds. Therefore,

Moreover, we have , thus

So, by Slutsky’s theorem, conditioning on {U,X,Z}, we have

| (7.6) |

We now calculate the conditional mean of :

| (7.7) |

The proof is completed by combining (7.5), (7.6) and (7.7).
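The identity (7.2) attributed to [14] is commonly stated as ρτ(x − y) − ρτ(x) = y{I(x ≤ 0) − τ} + ∫₀^y {I(x ≤ z) − I(x ≤ 0)} dz, where ρτ(r) = r{τ − I(r < 0)} is the check loss. Assuming this form, a quick numerical sanity check can be sketched as follows; the function names are hypothetical and the integral is approximated by a midpoint rule.

```python
def check_loss(r, tau):
    """Quantile check loss rho_tau(r) = r * (tau - I(r < 0))."""
    return r * (tau - (1.0 if r < 0 else 0.0))


def knight_lhs(x, y, tau):
    """Left-hand side: rho_tau(x - y) - rho_tau(x)."""
    return check_loss(x - y, tau) - check_loss(x, tau)


def knight_rhs(x, y, tau, steps=20000):
    """Right-hand side, with the integral from 0 to y done by the midpoint rule."""
    ind0 = 1.0 if x <= 0 else 0.0
    h = y / steps  # signed step, so negative y is handled as well
    integral = 0.0
    for i in range(steps):
        z = (i + 0.5) * h
        integral += ((1.0 if x <= z else 0.0) - ind0) * h
    return y * (ind0 - tau) + integral


cases = [(1.0, 2.0, 0.5), (-1.0, -2.0, 0.3), (0.4, -1.5, 0.8), (-0.7, 0.2, 0.1)]
for x, y, tau in cases:
    gap = abs(knight_lhs(x, y, tau) - knight_rhs(x, y, tau))
    print(x, y, tau, gap < 1e-3)
```

The first term on the right is linear in y and produces the score-type quantities built from ηi,k = I(εi ≤ ck) − τk, while the integral term is nonnegative and yields the quadratic part of the expansion.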

PROOF OF THEOREM 3.2. Let . Then,

where . Then,

is also the minimizer of

By applying the identity (7.2), we can rewrite Ln(θ) as follows:

where . Let us now calculate the conditional expectation of Bn(θ):

Define Rn(θ) = Bn(θ) − E[Bn(θ)|U,X,Z]. It can be shown that Rn(θ) = op(1). Hence,

where . By (7.3), the third term in the previous expression can be expressed as

where

Therefore,

It can be shown that Sn = E(Sn)+op(1) = cS + op(1). Hence,

Since the convex function converges in probability to the convex function , it follows, by the convexity lemma [25], that the quadratic approximation to Ln(θ) holds uniformly for θ in any compact set Θ. Thus, it follows that

| (7.8) |

By the Cramér–Wold theorem, the central limit theorem for Wn holds and . Therefore, the asymptotic normality of β̂ follows from

This completes the proof.

PROOF OF THEOREM 3.3. The asymptotic normality of α̂0(u) and α̂(u) can be obtained by following the ideas in the proof of Theorem 3.1.

PROOF OF THEOREM 4.1. Use the same notation as in the proof of Theorem 3.2. Minimizing

is equivalent to minimizing

where . Similar to the derivation in the proof of Theorem 5 in Zou and Li [34], the third term above can be expressed as

| (7.9) |

Therefore, by the epiconvergence results [8, 15], we have and the asymptotic results for hold.

To prove sparsity, we only need to show that with probability tending to 1. It suffices to prove that if β0j = 0, then . By using the fact that , if , then we must have . Thus, we have . However, under the assumptions, we have . Therefore, . This completes the proof.

Contributor Information

Bo Kai, Department of Mathematics, College of Charleston, Charleston, South Carolina 29424, USA, kaib@cofc.edu.

Runze Li, Department of Statistics, Pennsylvania State University, University Park, Pennsylvania 16802, USA, rli@stat.psu.edu.

Hui Zou, School of Statistics, University of Minnesota, Minneapolis, Minnesota 55455, USA, hzou@stat.umn.edu.

REFERENCES

- 1. Bradic J, Fan J, Wang W. Penalized composite quasi-likelihood for ultrahigh-dimensional variable selection. 2010. doi: 10.1111/j.1467-9868.2010.00764.x. Available at arXiv:0912.5200v1.

- 2. Cai Z, Xu X. Nonparametric quantile estimations for dynamic smooth coefficient models. J. Amer. Statist. Assoc. 2009;104:371–383. MR2504383.

- 3. Carroll R, Fan J, Gijbels I, Wand M. Generalized partially linear single-index models. J. Amer. Statist. Assoc. 1997;92:477–489. MR1467842.

- 4. Fan J, Gijbels I. Local Polynomial Modelling and Its Applications. London: Chapman & Hall; 1996. MR1383587.

- 5. Fan J, Huang T. Profile likelihood inferences on semiparametric varying-coefficient partially linear models. Bernoulli. 2005;11:1031–1057. MR2189080.

- 6. Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Amer. Statist. Assoc. 2001;96:1348–1361. MR1946581.

- 7. Fan J, Li R. Statistical challenges with high dimensionality: Feature selection in knowledge discovery. In: Sanz-Sole M, Soria J, Varona J, Verdera J, editors. Proceedings of the International Congress of Mathematicians. III. 2006. pp. 595–622. Eur. Math. Soc., Zürich. MR2275698.

- 8. Geyer C. On the asymptotics of constrained M-estimation. Ann. Statist. 1994;22:1993–2010. MR1329179.

- 9. Härdle W, Liang H, Gao J. Partially Linear Models. Heidelberg: Physica Verlag; 2000. MR1787637.

- 10. He X, Shi P. Bivariate tensor-product B-splines in a partly linear model. J. Multivariate Anal. 1996;58:162–181. MR1405586.

- 11. He X, Zhu Z, Fung W. Estimation in a semiparametric model for longitudinal data with unspecified dependence structure. Biometrika. 2002;89:579–590. MR1929164.

- 12. Hunter D, Li R. Variable selection using MM algorithms. Ann. Statist. 2005;33:1617–1642. doi: 10.1214/009053605000000200. MR2166557.

- 13. Kai B, Li R, Zou H. Local composite quantile regression smoothing: An efficient and safe alternative to local polynomial regression. J. Roy. Statist. Soc. Ser. B. 2010;72:49–69. doi: 10.1111/j.1467-9868.2009.00725.x.

- 14. Knight K. Limiting distributions for L1 regression estimators under general conditions. Ann. Statist. 1998;26:755–770. MR1626024.

- 15. Knight K, Fu W. Asymptotics for lasso-type estimators. Ann. Statist. 2000;28:1356–1378. MR1805787.

- 16. Koenker R. A note on L-estimates for linear models. Statist. Probab. Lett. 1984;2:323–325. MR0782652.

- 17. Koenker R. Quantile Regression. Cambridge: Cambridge Univ. Press; 2005. MR2268657.

- 18. Lam C, Fan J. Profile-kernel likelihood inference with diverging number of parameters. Ann. Statist. 2008;36:2232–2260. doi: 10.1214/07-AOS544. MR2458186.

- 19. Lee S. Efficient semiparametric estimation of a partially linear quantile regression model. Econometric Theory. 2003;19:1–31. MR1965840.

- 20. Leng C. Variable selection and coefficient estimation via regularized rank regression. Statist. Sinica. 2010;20:167–181. MR2640661.

- 21. Li R, Liang H. Variable selection in semiparametric regression modeling. Ann. Statist. 2008;36:261–286. doi: 10.1214/009053607000000604. MR2387971.

- 22. Li Y, Zhu J. L1-norm quantile regression. J. Comput. Graph. Statist. 2007;17:163–185. MR2424800.

- 23. Mack Y, Silverman B. Weak and strong uniform consistency of kernel regression estimates. Probab. Theory Related Fields. 1982;61:405–415. MR0679685.

- 24. Nierenberg D, Stukel T, Baron J, Dain B, Greenberg E. Determinants of plasma levels of beta-carotene and retinol. American Journal of Epidemiology. 1989;130:511–521. doi: 10.1093/oxfordjournals.aje.a115365.

- 25. Pollard D. Asymptotics for least absolute deviation regression estimators. Econometric Theory. 1991;7:186–199. MR1128411.

- 26. Ruppert D, Wand M, Carroll R. Semiparametric Regression. Cambridge: Cambridge Univ. Press; 2003. MR1998720.

- 27. Wang H, Li R, Tsai C. Tuning parameter selectors for the smoothly clipped absolute deviation method. Biometrika. 2007;94:553–568. doi: 10.1093/biomet/asm053. MR2410008.

- 28. Wang H, Xia Y. Shrinkage estimation of the varying coefficient model. J. Amer. Statist. Assoc. 2009;104:747–757. MR2541592.

- 29. Wang L, Li R. Weighted Wilcoxon-type smoothly clipped absolute deviation method. Biometrics. 2009;65:564–571. doi: 10.1111/j.1541-0420.2008.01099.x.

- 30. Wu Y, Liu Y. Variable selection in quantile regression. Statist. Sinica. 2009;19:801–817. MR2514189.

- 31. Xia Y, Zhang W, Tong H. Efficient estimation for semivarying-coefficient models. Biometrika. 2004;91:661–681. MR2090629.

- 32. Yatchew A. Semiparametric Regression for the Applied Econometrician. Cambridge: Cambridge Univ. Press; 2003.

- 33. Zhang W, Lee S, Song X. Local polynomial fitting in semivarying coefficient model. J. Multivariate Anal. 2002;82:166–188. MR1918619.

- 34. Zou H, Li R. One-step sparse estimates in nonconcave penalized likelihood models (with discussion). Ann. Statist. 2008;36:1509–1533. doi: 10.1214/009053607000000802. MR2435443.

- 35. Zou H, Yuan M. Composite quantile regression and the oracle model selection theory. Ann. Statist. 2008;36:1108–1126. MR2418651.