Abstract

Gender differences are an important factor regulating our daily interactions. Using functional magnetic resonance imaging we show that brain areas involved in processing social signals are activated differently by threatening signals sent from male and female facial and bodily expressions and that their activation patterns are different for male and female observers. Male participants pay more attention to the female face as shown by increased amygdala activity. But a host of other areas show selective sensitivity for male observers attending to male threatening bodily expressions (extrastriate body area, superior temporal sulcus, fusiform gyrus, pre-supplementary motor area, and premotor cortex). This is the first study investigating gender differences in processing dynamic female and male facial and bodily expressions and it illustrates the importance of gender differences in affective communication.

Keywords: fMRI, emotion, social threat, faces, bodies, gender differences, gender of actor

Introduction

Facial and bodily expressions are among the most salient affective signals regulating our daily interactions and they have a strong biological basis (de Gelder, 2006, 2010). Therefore it stands to reason that gender figures prominently among factors that determine affective communication. Previous studies have already reported gender differences in how the brain processes facial emotions. But it is not known whether gender differences also influence how emotional expressions of the whole body are processed. It is also unclear whether there is a relation between the gender of the observer and that of the image shown. The goal of this study was to address both issues. We first give a systematic overview of the core areas that underlie the perception of facial and bodily expressions of emotion (Kret et al., 2011) and then outline the implications for gender differences.

The cortical network underlying face perception is well known and includes the fusiform face area (FFA; Kanwisher et al., 1997), the occipital face area (Puce et al., 1996; Gauthier et al., 2000), the superior temporal sulcus (STS) and the amygdala (AMG; Haxby et al., 2000). Recent studies show that the brain areas involved in whole body perception overlap with the face network and confirm the involvement of AMG, fusiform gyrus (FG), and STS in face and body perception (Hadjikhani and de Gelder, 2003; de Gelder et al., 2004; Peelen and Downing, 2007; Meeren et al., 2008; van de Riet et al., 2009; Kret et al., 2011). Two areas in the body perception network have been the targets of categorical selectivity research. The one reported first is an area at the junction of the middle temporal and middle occipital gyrus, labeled the extrastriate body area (EBA; Downing et al., 2001). A later added one is in the FG, at least partly overlapping with FFA (Kanwisher et al., 1997) and termed the fusiform body area (FBA; Peelen and Downing, 2005). Recent evidence suggests that these areas are particularly responsive to bodily expressions of emotion (Grèzes et al., 2007; Peelen et al., 2007; Pichon et al., 2008).

Yet so far the relation between categorization by the visual system and emotion perception is not clear. Furthermore, photographs of bodily expressions also trigger areas involved in action perception (de Gelder et al., 2004). Recent studies with dynamic stimuli have proven useful for better understanding the respective contribution of action and emotion-related components. A study by Grosbras and Paus (2006) showed that video clips of angry hands trigger activations that largely overlap with those reported for facial expressions in the FG. Increased responses in the STS and the temporo-parietal junction (TPJ) have been reported for dynamic threatening body expressions (Grèzes et al., 2007; Pichon et al., 2008, 2009). Different studies have demonstrated a role for TPJ in “theory of mind”, the ability to represent and reason about mental states, such as thoughts and beliefs (Saxe and Kanwisher, 2003; Samson et al., 2004). Other functions of this area involve reorienting attention to salient stimuli, sense of agency, and multisensory body-related information processing, as well as the processing of phenomenological and cognitive aspects of the self (Blanke and Arzy, 2005). Whereas TPJ is implicated in higher level social cognitive processing (for a meta-analysis, see Decety and Lamm, 2007), STS has been frequently highlighted in biological motion studies (Allison et al., 2000) and shows specific activity for goal-directed actions and configural and kinematic information from body movements (Perrett et al., 1989; Bonda et al., 1996; Grossman and Blake, 2002; Thompson et al., 2005). Observing threatening actions (as compared to neutral or joyful actions) increases activity in regions involved in action preparation: the pre-supplementary motor area (pre-SMA; de Gelder et al., 2004; Grosbras and Paus, 2006; Grèzes et al., 2007) and premotor cortex (PM; Grosbras and Paus, 2006; Grèzes et al., 2007; Pichon et al., 2008, 2009).
To our knowledge, it is still unclear whether the regions described above show gender differences.

Common sense intuitions view women as more emotional than men. Yet research suggests this presumed difference is based more on an expressive and less on an experiential difference (Kring and Gordon, 1998). For example, Moore (1966) found that males reported more violent scenes than females during binocular rivalry, possibly because of cultural influences that socialize males to act more violently than females. A growing body of research demonstrates gender differences in the neural network involved in processing emotions (Kemp et al., 2004; Hofer et al., 2006; Dickie and Armony, 2008). Two observations are a stronger right-hemispheric lateralization and higher activation levels in males than in females (Killgore and Cupp, 2002; Schienle et al., 2005; Fine et al., 2009).

A different issue is whether the influence of the observed person's gender on our percept depends on our own gender. Evidence suggests that pictures of males expressing anger tend to be more effective as conditioned stimuli than pictures of angry females (Öhman and Dimberg, 1978). Previous behavioral studies indicate enhanced physiological arousal in men but not in women during exposure to angry male as opposed to female faces (Mazurski et al., 1996). Fischer et al. (2004) observed that exposure to angry male as opposed to angry female faces activated the visual cortex and the anterior cingulate gyrus significantly more in men than in women. A similar sex-differential brain activation pattern was present during exposure to fearful but not neutral faces. Aleman and Swart (2008) report stronger activation in the STS in men than women in response to faces denoting interpersonal superiority. These studies suggest a defensive response in men during a confrontation with threatening males.

Evolutionary theorists suggest that ancestral males formed status hierarchies, and that dominant males were more likely to attract females. Men's position within these hierarchies could be challenged, possibly explaining why men use physical aggression more often than females (Bosson et al., 2009). While socialization of aggressiveness might involve learning to control and inhibit angry behavior, pressures for this may be stronger on females than on males (Eron and Huesmann, 1984). Moreover, there are many studies reporting a relationship between high levels of testosterone and increased readiness to respond vigorously and assertively to provocations and threats (Olweus et al., 1988). A physically strong male expressing threat with his body is likely to represent a large threat and may be more relevant for the observer. It is thus conceivable that the perception of and reactivity to emotional expressions depends on the gender of the observer and observed.

Taken together, there are strong indications that males and females differ in the recruitment of cerebral networks following female and male emotional expressions. We tested this hypothesis here by measuring female and male participants’ hemodynamic brain activity while they watched videos showing threatening (fearful or angry) or neutral facial or bodily expressions of female or male actors. First, we expected male observers to react more strongly to signals of threat than females. Second, since threatening male body expressions are potentially harmful, we expected the male as compared to female videos to trigger more activation in regions involved in processing affective signals (AMG), body-related information (EBA, FG, STS, and TPJ), and motor preparation (pre-SMA and PM; de Gelder et al., 2010).

Materials and Methods

Participants

Twenty-eight participants (14 females, mean age 19.8 years, range 18–27 years; 14 males, mean age 21.6 years, range 18–32 years) took part in the experiment. Half of them viewed neutral and angry expressions and the other half neutral and fearful expressions. Participants had no neurological or psychiatric history, were right-handed and had normal or corrected-to-normal vision. All gave informed consent. The study was performed in accordance with the Declaration of Helsinki and was approved by the local medical ethical committee. Two participants were excluded from the analysis because of task miscomprehension and neurological abnormalities.

Materials

Video recordings were made of 26 actors expressing six different facial and bodily emotions. For the body video sessions all actors were dressed in black and filmed against a green background. For the facial videos, actors wore a green shirt, similar to the background color. Recordings used a digital video camera under controlled and standardized lighting conditions. To coach the actors to achieve a natural expression, pictures of emotional scenes were projected on the wall in front of them and a short emotion-inducing story was read out by the experimenter. The actors were free to act the emotions in a naturalistic way in response to the situation described by the experimenter and were not put under time restrictions. Fearful body movements included stretching the arms as if to protect the upper body while leaning backward. Angry body movements included movements in which the body was bent slightly forward; some actors showed their fists, whereas others stamped their feet and made resolute hand gestures. Additionally, the stimulus set included neutral face and body movements (such as pulling up the nose, coughing, fixing one's hair or clothes). Distance to the projection screen was 600 mm. All video clips were computer-edited using Ulead and After Effects, to a uniform length of 2 s (50 frames). The faces of the body videos were masked with Gaussian masks so that only information of the body was perceived. Based on a separate validation study, 10 actors were included in the current experiment. To check for quantitative differences in movement between the movies, we estimated the amount of movement per video clip by quantifying the variation of light intensity (luminance) between pairs of frames for each pixel (Peelen et al., 2007). For each frame, these absolute differences were averaged across pixels that scored (on a scale reaching a maximum of 255) higher than 10, a value which corresponds to the noise level of the camera.
These were then averaged for each movie. Angry and fearful expressions did not differ in the amount of movement (M = 30.64, SD 11.99 versus M = 25.41, SD 8.71) [t(19) = 0.776, ns] but both contained more movement than neutral expressions (M = 10.17, SD 6.00) [t(19) = 3.78, p < 0.005 and t(19) = 4.093, p < 0.005]. Threatening male and female video clips did not differ in the amount of movement (M = 31.48, SD 10.89 versus M = 29.16, SD 11.05) [t(19) = 2.07, ns] and neutral male versus female videos were also equal in terms of movement (M = 26.70, SD 9.68 versus M = 24.11, SD 7.92) [t(9) = 1.26, ns]. In addition, we generated scrambled movies by applying a Fourier-based algorithm to each movie. This technique scrambles the phase spectra of each movie's frames; the resulting video clips served as low-level visual controls and prevented habituation.
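The movement estimate and the phase scrambling described above can be sketched as follows. This is a minimal illustration in Python with NumPy, not the code used in the study. The function names, the array layout (frames × height × width), the handling of frame pairs without supra-threshold pixels, and the way the random phase field is generated are all assumptions made for the example.

```python
import numpy as np

NOISE_LEVEL = 10  # camera noise threshold on the 0-255 scale, as stated in the text


def movement_score(frames, noise_level=NOISE_LEVEL):
    """Mean supra-threshold luminance change between consecutive frames.

    frames: array of shape (n_frames, height, width), luminance in 0-255.
    """
    frames = np.asarray(frames, dtype=np.float64)
    # Absolute luminance difference per pixel for each pair of consecutive frames.
    diffs = np.abs(np.diff(frames, axis=0))
    per_pair = []
    for d in diffs:
        moving = d[d > noise_level]  # keep only pixels above the noise level
        # Assumption: a frame pair without supra-threshold pixels counts as zero movement.
        per_pair.append(moving.mean() if moving.size else 0.0)
    # Average the per-pair values over the whole clip.
    return float(np.mean(per_pair))


def phase_scramble(frame, rng):
    """Randomize the Fourier phase of one frame while keeping its amplitude spectrum."""
    frame = np.asarray(frame, dtype=np.float64)
    spectrum = np.fft.fft2(frame)
    # The phase of the FFT of real-valued noise is conjugate-antisymmetric,
    # so adding it to the original phase keeps the inverse transform real.
    random_phase = np.angle(np.fft.fft2(rng.random(frame.shape)))
    scrambled = np.abs(spectrum) * np.exp(1j * (np.angle(spectrum) + random_phase))
    return np.real(np.fft.ifft2(scrambled))
```

Applying `phase_scramble` to every frame of a clip (with one random generator, e.g. `np.random.default_rng(0)`) gives a control video with the same amplitude spectrum per frame but no recognizable face or body.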

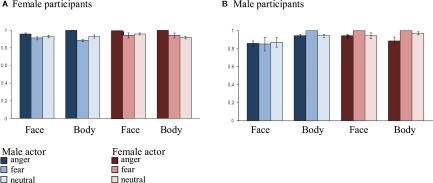

For a full description of the validation, see Kret et al. (2011). Importantly, in order to ascertain that the participants of our study could also recognize the emotional expressions they had seen in the scanner, they completed a small validation study shortly after the scanning session. They were guided to a quiet room where they were seated in front of a computer and categorized the non-scrambled stimuli they had previously seen in the scanner. They were instructed to withhold their response until the end of the video, when a question mark appeared in the middle of the screen, and were then required to respond as accurately as possible. Two emotion labels were pasted on two keys of the computer keyboard and participants could choose either between a neutral and an angry label or between a neutral and a fearful label. Three practice trials were included, showing emotions from actors other than the ones used in the experiment. A trial started with a central fixation cross (800 ms), after which the video was presented (2 s), followed by a blank screen with a central question mark, with a duration of 1–3 s. Main and interaction effects were tested in SPSS in an ANOVA with three within-subject variables [emotion (threat and neutral), category (face and body), gender of actor (female and male)] and one between-subject variable [gender of observer (female and male)]. One male participant pressed the same button for all stimuli and was excluded from analysis. There were no main or interaction effects. See Figure 1.

Figure 1.

Recognition rates per condition and gender of the observer in the validation after scanning. (A) Mean recognition rate across conditions in the female observers. (B) Mean recognition rate across conditions in the male observers. Accuracy rates did not differ significantly between male and female participants, nor between male and female actors.

Experimental design

The experiment consisted of 176 trials {80 non-scrambled [10 actors (five males) × two expressions (threat, neutral) × two categories × two repetitions], 80 scrambled videos, and 16 oddballs (inverted videos)} which were presented in two runs. There were 80 null events (blank, green screen) with a duration of 2000 ms. These 176 stimuli and 80 null events were randomized within each run (see Table 1). A trial started with a fixation cross (500 ms), followed by a video (2000 ms) and a blank screen (2450 ms). An oddball task was used to control for attention and required participants to press a button each time an inverted video appeared so that trials of interest were uncontaminated by motor responses. Stimuli were back-projected onto a screen positioned behind the subject's head and viewed through a mirror attached to the head coil. Stimuli were centered on the display screen and subtended 11.4° of visual angle vertically for the body stimuli, and 7.9° of visual angle vertically for the face stimuli.
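The trial counts follow from crossing the design factors, which a short enumeration makes explicit. The sketch below is only an arithmetic check in Python; the actor labels are hypothetical placeholders, and the scrambled and oddball counts are taken directly from the text rather than derived.

```python
from itertools import product

# Hypothetical labels for the 10 actors (five male, five female).
actors = [f"{sex}_{i}" for sex in ("male", "female") for i in range(1, 6)]
expressions = ("threat", "neutral")
categories = ("face", "body")
repetitions = (1, 2)

# Every combination of actor x expression x category x repetition.
non_scrambled = list(product(actors, expressions, categories, repetitions))

n_scrambled = 80  # stated in the text
n_oddballs = 16   # stated in the text
n_trials = len(non_scrambled) + n_scrambled + n_oddballs

# Duration of one trial: fixation cross + video + blank screen, in ms.
trial_ms = 500 + 2000 + 2450

print(len(non_scrambled), n_trials, trial_ms)
```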

Table 1.

Experimental design.

| Version 1. anger–neutral (N = 14) | ||||||||

|---|---|---|---|---|---|---|---|---|

| Non-scrambled | Scrambled | Oddball* | Blank screen | |||||

| Anger | Neutral | Anger | Neutral | Anger | Neutral | 80 | ||

| RUN 1 | ||||||||

| Faces | Male | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | |

| Female | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | ||

| Bodies | Male | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | |

| Female | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | ||

| Total | 40 | 40 | 40 | 40 | 8 | 8 | ||

| RUN 2 | ||||||||

| Faces | Male | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | |

| Female | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | ||

| Bodies | Male | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | |

| Female | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | ||

| Total | 40 | 40 | 40 | 40 | 8 | 8 | ||

| Version 2. fear–neutral (N = 14) | ||||||||

| Non-scrambled | Scrambled | Oddball | Blank screen | |||||

| Fear | Neutral | Fear | Neutral | Fear | Neutral | 80 | ||

| RUN 1 | ||||||||

| Faces | Male | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | |

| Female | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | ||

| Bodies | Male | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | |

| Female | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | ||

| Total | 40 | 40 | 40 | 40 | 8 | 8 | ||

| RUN 2 | ||||||||

| Faces | Male | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | |

| Female | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | ||

| Bodies | Male | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | |

| Female | 5 * 2 | 5 * 2 | 5 * 2 | 5 * 2 | 2 | 2 | ||

| Total | 40 | 40 | 40 | 40 | 8 | 8 | ||

The oddball condition consisted of videos that were presented upside down. 5 * 2: five unique identities that were presented twice. All stimuli were presented in random order.

Procedure

Participants’ head movements were minimized by an adjustable padded head-holder. Responses were recorded by a keypad, positioned on the right side of the participant's abdomen. After the two experimental runs, participants were given a functional localizer. Stimulus presentation was controlled by using Presentation software (Neurobehavioral Systems, San Francisco, CA, USA). After the scanning session participants were given a 10-min break. They were then guided to a quiet room where they were seated in front of a computer and categorized the non-scrambled stimuli they had previously seen in the scanner.

fMRI data acquisition

Parameters of the Functional Scans

Functional images were acquired using a 3.0-T Magnetom scanner (Siemens, Erlangen, Germany). Blood oxygenation level dependent (BOLD) sensitive functional images were acquired using a gradient echo-planar imaging (EPI) sequence (TR = 2000 ms, TE = 30 ms, 32 transversal slices, descending interleaved acquisition, 3.5 mm slice thickness, with no interslice gap, FA = 90°, FOV = 224 mm, matrix size = 64 × 64). An automatic shimming procedure was performed before each scanning session. A total of 644 functional volumes were collected for each participant [total scan time = 10 min per run (two runs with the anatomical scan in between)].
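As a quick consistency check on these parameters, the in-plane voxel size follows from the field of view divided by the matrix size, and the acquisition time from the number of volumes times the TR. The short Python sketch below assumes that the 644 volumes were split evenly across the two runs, which the text does not state explicitly.

```python
fov_mm = 224.0   # field of view
matrix = 64      # in-plane matrix size (voxels per side)
tr_s = 2.0       # repetition time in seconds
n_volumes = 644  # functional volumes per participant
n_runs = 2       # assumption: volumes are split evenly across runs

in_plane_voxel_mm = fov_mm / matrix       # 3.5 mm, matching the slice thickness
total_min = n_volumes * tr_s / 60.0       # total functional acquisition time
per_run_min = total_min / n_runs          # close to the reported 10 min per run

print(in_plane_voxel_mm, round(total_min, 2), round(per_run_min, 2))
```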

The localizer scan parameters were: TR = 2000 ms, TE = 30 ms, FA = 90°, 28 slices, matrix size = 256 × 256, FOV = 256 mm, slice thickness = 2 mm (no gap), number of volumes = 328 (total scan time = 11 min).

Parameters of the Structural Scan

A high-resolution T1-weighted anatomical scan was acquired for each participant (TR = 2250 ms, TE = 2.6 ms, inversion time (TI) = 900 ms, FA = 9°, 192 sagittal slices, voxel size = 1 × 1 × 1 mm, FOV = 256 mm × 256 mm, slice thickness = 1 mm, no gap, total scan time = 8 min).

Statistical parametric mapping

Functional images were processed using SPM2 software (Wellcome Department of Imaging Neuroscience)1. The first five volumes of each functional run were discarded to allow for T1 equilibration effects. The remaining 639 functional images were reoriented to the anterior/posterior commissures plane, slice time corrected to the middle slice and spatially realigned to the first volume, subsampled at an isotropic voxel size of 2 mm, normalized to the standard MNI space using the EPI reference brain and spatially smoothed with a 6 mm full width at half maximum (FWHM) isotropic Gaussian kernel. Statistical analysis was carried out using the general linear model framework (Friston et al., 1995) implemented in SPM2.
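To make the smoothing step concrete: a Gaussian kernel specified by its FWHM has a standard deviation of FWHM / (2·sqrt(2·ln 2)), so a 6 mm kernel on 2 mm voxels corresponds to roughly 1.27 voxels. The following NumPy sketch illustrates separable smoothing under those values. It is not SPM2's implementation; the function names, the kernel truncation at four standard deviations, and the zero-padded edges are assumptions of this example.

```python
import numpy as np


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian full width at half maximum to a standard deviation."""
    return fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))


def gaussian_kernel_1d(fwhm_mm, voxel_mm, truncate=4.0):
    """Normalized 1-D Gaussian kernel sampled on the voxel grid."""
    sigma_vox = fwhm_to_sigma(fwhm_mm) / voxel_mm
    radius = int(np.ceil(truncate * sigma_vox))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma_vox) ** 2)
    return kernel / kernel.sum()


def smooth_volume(volume, fwhm_mm=6.0, voxel_mm=2.0):
    """Smooth a 3-D volume by convolving each axis in turn (zero-padded edges).

    Each axis must be at least as long as the kernel.
    """
    kernel = gaussian_kernel_1d(fwhm_mm, voxel_mm)
    out = np.asarray(volume, dtype=np.float64)
    for axis in range(out.ndim):
        out = np.apply_along_axis(
            lambda v: np.convolve(v, kernel, mode="same"), axis, out
        )
    return out
```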

At the first level analysis, eight effects of interest were modeled: face threat (fear or anger, depending on the version of the experiment) female, face neutral female, body threat female, body neutral female, face threat male, face neutral male, body threat male, body neutral male. Null events were modeled implicitly. The BOLD response to the stimulus onset for each event type was convolved with the canonical hemodynamic response function over a duration of 2000 ms. For each subject's session, six covariates were included in order to capture residual movement-related artifacts (three rigid-body translations and three rotations determined from initial spatial registration), and a single covariate representing the mean (constant) over scans. To remove low frequency drifts from the data, we applied a high-pass filter using a cut-off frequency of 1/128 Hz. Statistical maps were overlaid on the SPM's single subject brain compliant with MNI space, i.e., Colin27 (Holmes et al., 1998) in the anatomy toolbox2 (see Eickhoff et al., 2005 for a description). The atlas of Duvernoy was used for macroscopical labeling (Duvernoy, 1999).
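The structure of such a first-level design matrix can be sketched in a few lines of Python. This is a simplified illustration, not SPM2 code. The double-gamma response (peak shape 6, undershoot shape 16, ratio 1/6) is a commonly used approximation of the canonical hemodynamic response and is assumed here, as are the function names, the 0.1 s sampling grid, and the rule used for the number of discrete cosine regressors in the high-pass filter.

```python
import math
import numpy as np

TR = 2.0            # s, repetition time
STIM_DUR = 2.0      # s, video duration
HPF_CUTOFF = 128.0  # s, i.e. a cut-off frequency of 1/128 Hz


def gamma_pdf(t, shape):
    """Gamma density with unit scale."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = np.exp((shape - 1) * np.log(t[pos]) - t[pos] - math.lgamma(shape))
    return out


def double_gamma_hrf(dt, length=32.0):
    """Assumed double-gamma response sampled every dt seconds."""
    t = np.arange(0, length, dt)
    hrf = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return hrf / hrf.sum()


def condition_regressor(onsets, n_scans, dt=0.1):
    """Boxcar of STIM_DUR per onset, convolved with the HRF, sampled once per TR."""
    n_fine = int(round(n_scans * TR / dt))
    boxcar = np.zeros(n_fine)
    for onset in onsets:
        start = int(round(onset / dt))
        boxcar[start:start + int(round(STIM_DUR / dt))] = 1.0
    conv = np.convolve(boxcar, double_gamma_hrf(dt))[:n_fine]
    return conv[::int(round(TR / dt))][:n_scans]


def dct_highpass_basis(n_scans):
    """Low-frequency cosine regressors below the cut-off (constant term excluded)."""
    k = int(2 * n_scans * TR / HPF_CUTOFF + 1)
    n = np.arange(n_scans)
    cols = [np.cos(np.pi * (2 * n + 1) * j / (2 * n_scans)) for j in range(1, k)]
    return np.column_stack(cols) if cols else np.empty((n_scans, 0))


def design_matrix(onsets_by_condition, motion, n_scans):
    """Task regressors, six motion covariates, and a constant column."""
    task = [condition_regressor(o, n_scans) for o in onsets_by_condition.values()]
    return np.column_stack(task + [motion, np.ones(n_scans)])
```

With eight condition regressors, six motion covariates, and the constant, the matrix has 15 columns per session; null events need no regressor of their own because they form the implicit baseline. High-pass filtering then amounts to regressing the cosine basis out of both the data and the design.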

The beta values of the ROIs (see next paragraph) were extracted for the following conditions: face threat male and female, body threat male and female, face neutral male and female, and body neutral male and female. We combined the two studies into one analysis because the overall pattern of responses to these two types of threat stimuli was similar. Since there were almost no differences across the two hemispheric ROIs and our interest does not concern hemispheric lateralization, we pooled bilateral ROIs to reduce the total number of areas. Main and interaction effects were tested in SPSS in an ANOVA with three within-subject variables [emotion (threat and neutral), category (face and body), gender of actor (female and male)] and one between-subject variable (gender of observer) and were further investigated with Bonferroni-corrected paired comparisons and Bonferroni-corrected two-tailed t-tests.
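Two of the steps above are simple enough to illustrate in code: pooling the left and right ROI of an area, and Bonferroni correction of a family of comparisons. The Python sketch below treats pooling as averaging the two hemispheres, which is an assumption since the text only says that bilateral ROIs were pooled; the function names are likewise illustrative.

```python
import numpy as np


def pool_bilateral(left_betas, right_betas):
    """Average left- and right-hemisphere beta values (assumed pooling rule)."""
    left = np.asarray(left_betas, dtype=float)
    right = np.asarray(right_betas, dtype=float)
    return (left + right) / 2.0


def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```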

Localization of face- and body-sensitive regions

Face- and body-sensitive voxels in EBA, FG, STS, and AMG were identified using a separate localizer scan session in which participants performed a one-back task on face, body, house, and tool stimuli. The localizer consisted of 20 blocks of 12 trials of faces, bodies (neutral expressions, 10 male and 10 female actors), objects, and houses (20 tools and 20 houses). Body pictures were selected from our large database of body expressions and we only included the stimuli that were recognized as being absolutely neutral. To read more about the validation procedure, we refer the reader to the article of van de Riet et al. (2009). The tools (for example pincers, a hairdryer etc.) and houses were selected from the Internet. All pictures were equal in size and were presented in grayscale on a gray background. Stimuli were presented in a randomized blocked design and were presented for 800 ms with an ISI of 600 ms.

Preprocessing was similar to that of the main experiment. At the first level analysis, four effects of interest were modeled: faces, bodies, houses, and tools. For each subject's session, six covariates were included in order to capture residual movement-related artifacts (three rigid-body translations and three rotations determined from initial spatial registration), and a single covariate representing the mean (constant) over scans. To remove low frequency drifts from the data, we applied a high-pass filter using a cut-off frequency of 1/128 Hz. We smoothed the images of parameter estimates of the contrasts of interest with a 6-mm FWHM isotropic Gaussian kernel. At the group level, the following t-tests were performed: face > house, body > house, and subsequently a conjunction analysis [body > house AND face > house]. The resulting images were thresholded liberally (p < 0.05, uncorrected) to identify the following face- and body-sensitive regions: FG, AMG, STS, EBA (see Table 2). ROIs were defined using a sphere with a radius of 5 mm centered onto the group peak activation. All chosen areas appeared in the whole brain analysis (faces and bodies versus scrambles) and are well known to process facial and bodily expressions (de Gelder, 2006; de Gelder and Hadjikhani, 2006; Grèzes et al., 2007; Meeren et al., 2008; Pichon et al., 2008, 2009; van de Riet et al., 2009; for a recent review, see de Gelder et al., 2010). We were also interested in investigating gender differences in TPJ (Van Overwalle and Baetens, 2009), which is involved in higher social cognition, and in pre-SMA and PM, which are involved in the preparation of movement and environmentally triggered actions (Hoshi and Tanji, 2004; Nachev et al., 2008). These three areas could not be located with our localizer.
However, since there are arguments against using the same data set of the main experiment for the localization of specific areas (Kriegeskorte et al., 2009), we defined TPJ, PM, and pre-SMA by using coordinates from our former studies (Grèzes et al., 2007; Pichon et al., 2008, 2009). In Kret et al. (2011), we revealed that FG was equally responsive to emotional faces and bodies, and therefore we chose to pool FFA and FBA. Also, our localizer showed a considerable overlap between the two areas and our whole brain analysis on the main experiment revealed that the FG as a whole responded much more to dynamic bodies than to dynamic faces.
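A spherical ROI of this kind can be illustrated by listing all grid points within 5 mm of a peak coordinate. The Python sketch below works directly in millimetre space and assumes a 2 mm isotropic grid on which the peak coordinate lies exactly; the function name and these grid assumptions are illustrative and do not describe the toolbox used in the study.

```python
import numpy as np


def sphere_roi_coords(center_mm, radius_mm=5.0, voxel_mm=2.0):
    """Coordinates (in mm) of all grid points within radius_mm of center_mm."""
    center = np.asarray(center_mm, dtype=float)
    n = int(np.ceil(radius_mm / voxel_mm))
    offsets = np.arange(-n, n + 1) * voxel_mm
    # All candidate offsets in a cube around the center.
    grid = np.stack(
        np.meshgrid(offsets, offsets, offsets, indexing="ij"), axis=-1
    ).reshape(-1, 3)
    # Keep only offsets whose Euclidean distance to the center is within the radius.
    inside = np.linalg.norm(grid, axis=1) <= radius_mm
    return grid[inside] + center


# Example: right fusiform peak from Table 2.
roi = sphere_roi_coords((42, -46, -22))
print(len(roi))
```

Under these assumptions each sphere contains 81 grid points; the mean beta value across those voxels would then serve as the ROI estimate for a given condition.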

Table 2.

Coordinates used to create regions of interest.

| Hemisphere | Anatomical region | x | y | z | Reference | Contrast |
|---|---|---|---|---|---|---|
| R | Fusiform face/body area | 42 | −46 | −22 | Localizer | [Body > house AND face > house] |
| L | | −42 | −46 | −22 | » | |
| R | Amygdala | 18 | −4 | −16 | Localizer | Face > house |
| L | | −18 | −8 | −20 | Localizer | Face > house |
| R | Superior temporal sulcus | 54 | −52 | 18 | Localizer | [Body > house AND face > house] |
| L | | −54 | −52 | 18 | » | |
| R | Extrastriate body area | 52 | −70 | −2 | Localizer | Body > house |
| L | | −50 | −76 | 6 | Localizer | Body > house |
| R | Temporo-parietal junction | 62 | −40 | 26 | 1 + 2 + 3* | |
| L | | −60 | −40 | 24 | 2 + 3* | |
| R | Premotor cortex | 42 | 2 | 44 | 2 | |
| L | | −46 | 10 | 54 | 2 | |
| R | Pre-supplementary motor area | 8 | 18 | 66 | 1 | |
| L | | −8 | 18 | 66 | » | |

The localization of functional regions may differ between participants and there are arguments for defining a functional ROI (fROI) per participant rather than basing its location on the group level. However, the fROIs that we chose are very different from one another in terms of location, activity level, and specific function. We opted for the group level for a number of reasons. First, group localization may be preferable for testing the behavior of a functional ROI if the region is small or if the criterion response is weak (for example in the case of the AMG; Downing et al., 2001). Second, not all the ROIs were detectable in each participant. Localization at the individual level risks that one fails to identify an ROI in some individuals. Third, participants often show multiple peaks in one area and it is sometimes arbitrary to decide which one to take if the stronger peak lies further away from the group peak than the weaker peak. Fourth, not all our fROIs were easy to localize with our localizer and for some areas we therefore used coordinates from the literature (see Table 2). Our definition of the ROIs has the advantage that their anatomy is easy to report. In the tables, we specify exactly around which peak the sphere was drawn, which may be meaningful for meta-analyses.

Results

In order to examine gender differences in face- and body-responsive areas, we extracted the beta values of the pre-defined ROIs separately for each gender of actor and of observer. We checked the patterns of both fear and anger to ensure that both contributed in the same way to the common effects that are reported below (see Figure 3).

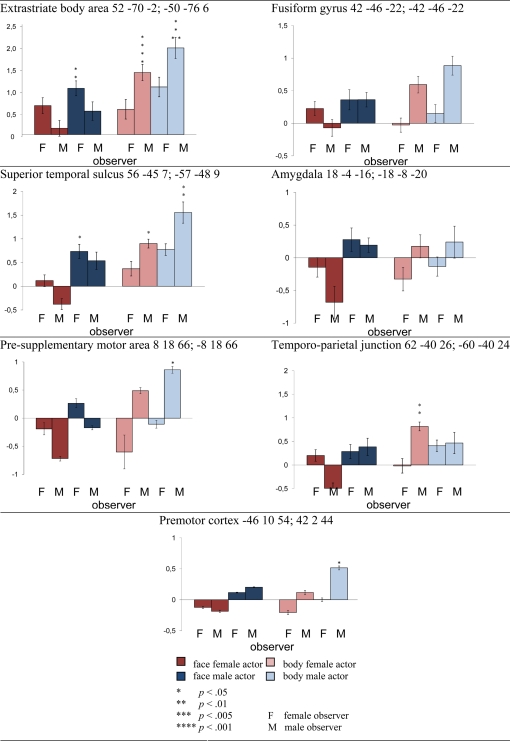

Figure 3.

Threatening facial and bodily expressions as a function of gender. Difference scores between threatening and neutral videos. t-Tests are two-tailed, Bonferroni-corrected. Planned comparisons are described in the text and are not indicated in this figure. Regions are followed by the MNI coordinate. EBA, STS, pre-SMA, and PM were active following bodily expressions, especially when threatening, even more so when expressed by a male actor and above all when observed by a male participant. FG was equally responsive to faces and bodies but the interaction between category, emotion, and observer revealed more activation for threatening than neutral male bodies in male participants. AMG was more active for faces than bodies, specifically for male observers watching female faces. TPJ showed an effect of emotion and was more responsive to bodies than faces.


1. Extrastriate body area showed a main effect of emotion (F(1,24) = 44.597, p < 0.001) and category (F(1,24) = 147.764, p < 0.001) and was more active for threatening versus neutral and for bodily versus facial expressions (p < 0.001). An interaction between actor and emotion was found (F(1,24) = 4.706, p < 0.05). Both male and female actors induced more activity when expressing threatening versus neutral emotions (female actors: t(25) = 4.206, p < 0.001; male actors: t(25) = 6.412, p < 0.001). Since we expected more brain activity in response to threatening male than threatening female actors, we conducted two planned comparison t-tests. First, we compared the difference between threatening and neutral expressions in male actors versus female actors and found a significant difference (t(25) = 2.216, p < 0.05, one-tailed). Second, to ascertain that the interaction was mainly driven by male threat, we compared threatening male versus threatening female expressions, which yielded a difference (t(25) = 1.760, p < 0.05, one-tailed). EBA showed an interaction between category and emotion (F(1,24) = 8.775, p < 0.01). Faces and bodies induced more activity when expressing a threatening versus neutral emotion (faces: t(25) = 3.362, p < 0.05; bodies: t(25) = 6.349, p < 0.001) yet this difference was larger in bodies versus faces (t(25) = 11.501, p < 0.001). An interaction between category, emotion, and observer was found (F(1,24) = 9.499, p < 0.005). Male observers showed more activity for threatening than neutral bodies (t(11) = 7.481, p < 0.001), threatening bodies than faces (t(11) = 8.662, p < 0.001), and neutral bodies than faces (t(11) = 7.661, p < 0.001). Female observers showed more activity for threatening than neutral faces (t(13) = 3.987, p < 0.05), threatening bodies than faces (t(13) = 7.688, p < 0.001), and neutral bodies than faces (t(13) = 8.353, p < 0.001). 
The interaction was partly driven by female observers’ enhanced activity for threatening versus neutral faces but this difference was not significantly larger in female than male observers (p = 0.227).

Since we expected more brain activity in response to threatening male body expressions in male observers, we conducted two planned comparisons. First, we compared male observers’ response to threatening versus neutral male body expressions (t(11) = 5.601, p < 0.001, one-tailed). Second, we expected this difference to be larger in male than female observers and therefore we compared the difference between threatening minus neutral male body expressions between male and female observers, which yielded a difference (t(24) = 1.716, p < 0.05, one-tailed).
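The second planned comparison contrasts difference scores (threatening minus neutral) between two independent groups. The reported degrees of freedom are consistent with a pooled-variance t-test on 12 male and 14 female observers (12 + 14 − 2 = 24), although the text does not name the exact test. A minimal Python sketch of that statistic, with an illustrative function name and no data from the study, is:

```python
import numpy as np


def pooled_t(group_a, group_b):
    """Independent-samples t statistic with pooled variance, plus degrees of freedom."""
    a = np.asarray(group_a, dtype=float)
    b = np.asarray(group_b, dtype=float)
    na, nb = a.size, b.size
    df = na + nb - 2
    # Pooled variance weights each group's sample variance by its degrees of freedom.
    pooled_var = ((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / df
    t = (a.mean() - b.mean()) / np.sqrt(pooled_var * (1.0 / na + 1.0 / nb))
    return float(t), df
```

Here `group_a` and `group_b` would hold one difference score per male and per female observer, respectively; a one-tailed p-value is then read from the t distribution with `df` degrees of freedom.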

In summary, EBA not only processes bodies but is also sensitive to emotion. Moreover, highest activity was observed in male participants while watching threatening male body expressions.


2. Fusiform gyrus showed a main effect of emotion (F(1,24) = 10.430, p < 0.005) and was more active for threatening than neutral expressions (p < 0.005). There was an interaction between category, emotion, and observer (F(1,24) = 4.695, p < 0.05). Male observers showed more activity for threatening than neutral bodies (t(11) = 5.106, p < 0.001).

Since we expected more brain activity in response to threatening male body expressions in male observers, we conducted two planned comparisons. First, we compared male observers’ response to threatening versus neutral male body expressions (t(11) = 3.054, p < 0.05, one-tailed). Second, we expected this difference to be larger in male than female observers and therefore we compared the difference between threatening minus neutral male body expressions between male and female observers, which yielded a difference (t(24) = 1.835, p < 0.05, one-tailed).

Fusiform gyrus was responsive to emotional expressions from faces and bodies and most responsive to threatening male bodies in male observers.


3. Superior temporal sulcus showed main effects of emotion (F(1,24) = 21.191, p < 0.001) and category (F(1,24) = 6.846, p < 0.05) and was more active for bodies than faces (p < 0.05) and for emotional than neutral videos (p < 0.001). An interaction between emotion and category was observed (F(1,24) = 10.799, p < 0.005). Whereas STS did not differentially respond to emotional versus neutral faces (p = 0.371), activity was higher for emotional versus neutral bodies (t(25) = 4.571, p < 0.005). The gender of the actor interacted with emotion (F(1,24) = 7.632, p < 0.05). STS was more active following male threatening than neutral expressions (t(25) = 4.669, p < 0.001) and threatening male versus female stimuli (t(25) = 2.881, p < 0.05). An interaction between category, emotion, and observer was observed (F(1,24) = 6.498, p < 0.05). Male observers showed more activity for threatening versus neutral bodies (t(11) = 5.536, p < 0.001) and for threatening bodies versus faces (t(11) = 4.178, p < 0.05), whereas female observers did not (p = 0.420 and p = 0.882, respectively). The interaction was partly driven by female observers’ enhanced activity for threatening versus neutral faces, but this enhancement was not significant (p = 0.230). The difference between threatening versus neutral faces was not significantly larger in female versus male observers (p = 0.810).

Since we expected more brain activity in response to threatening male body expressions in male observers, we conducted two planned comparisons. First, we compared male observers’ response to threatening versus neutral male body expressions (t(11) = 4.251, p < 0.001, one-tailed). Second, we expected this difference to be larger in male than female observers and therefore compared the difference between threatening minus neutral male body expressions between male and female observers, which yielded a marginally significant difference (t(24) = 1.454, p = 0.079, one-tailed).

Similar to EBA and FG, STS was responsive to emotional expressions from faces and bodies and was most responsive to threatening male bodies in male observers.


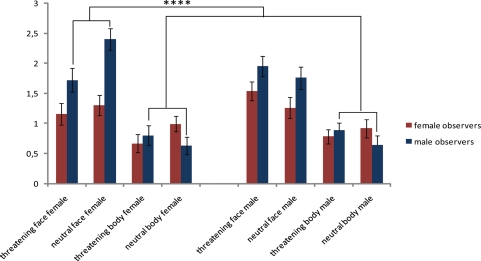

4. Amygdala showed a main effect of category (F(1,24) = 22.402, p < 0.001) and was more active for faces than bodies (p < 0.001). An interaction between category and observer was found (F(1,24) = 4.325, p < 0.05). Only male observers showed more activity for faces than bodies (male observers: t(11) = 4.914, p < 0.001; female observers: p = 0.294). Inspecting the graph (see Figure 2) gives more insight into this effect. The enhanced response to facial expressions in male observers was significant only for female faces (female faces versus all bodies: t(11) = 5.185, p < 0.001; male faces: p = 0.813).

Contrary to our expectations, and unlike the regions described above and TPJ, the AMG was not more responsive to emotional than neutral stimuli. Instead, it showed enhanced activity for faces, particularly in male observers, and only when they observed female faces.

5. Temporo-parietal junction showed a main effect of category (F(1,24) = 16.227, p < 0.001) and emotion (F(1,24) = 4.374, p < 0.05) and was more active for threatening than neutral expressions (p < 0.05) and for bodies than faces (p < 0.001). There was an interaction between gender of the actor and observer (F(1,24) = 4.351, p < 0.05). Follow-up comparisons did not yield any significant effects.


6. Premotor cortex showed a main effect of category (F(1,24) = 4.670, p < 0.05) and responded more to bodies than to faces (p < 0.05). There was an interaction between gender of the actor and emotion (F(1,24) = 5.764, p < 0.05) but follow-up t-tests did not yield significant differences. Since we expected more brain activity in response to threatening male than threatening female actors, we conducted two planned comparison t-tests. First, we compared the difference between threatening and neutral expressions in male actors versus female actors and found a marginally significant difference (t(25) = 1.514, p = 0.07, one-tailed). Second, to ascertain that the interaction was mainly driven by male threat, we compared threatening male versus threatening female expressions, which yielded a difference (t(25) = 1.720, p < 0.05, one-tailed). Moreover, an interaction between gender of the actor and gender of the observer was found (F(1,24) = 4.670, p < 0.05) but follow-up t-tests did not yield significant differences. Since we expected more brain activity in response to threatening male body expressions in male observers, we conducted two planned comparisons. First, we compared the difference in brain activity between threatening minus neutral male body expressions versus this difference for female actors which yielded a significant difference in the male observers (t(11) = 1.853, p < 0.05, one-tailed). Second, we compared the difference between threatening minus neutral male body expressions in male versus female observers which yielded a difference (t(24) = 1.827, p < 0.05, one-tailed).

Even though there was no significant interaction between category, observer, and emotion, the activity pattern in PM looked very similar to that in EBA, FG, and STS: PM again responded most strongly to threatening male body expressions in male observers.


7. Pre-supplementary motor area showed a main effect of gender of the actor (F(1,24) = 9.215, p < 0.01) and responded more to male than female expressions (p < 0.01). There was an interaction between gender of the actor and emotion (F(1,24) = 6.438, p < 0.05). Pre-SMA was more responsive to threatening expressions from males than females (t(25) = 3.555, p < 0.05). Moreover, an interaction between gender of the actor and gender of the observer was found (F(1,24) = 6.157, p < 0.05). Although female observers were as responsive as male observers, they showed more activity for male versus female actors, irrespective of emotion (t(25) = 3.982, p < 0.05). There was an interaction between category, emotion, and gender of the observer (F(1,24) = 6.239, p < 0.05). In contrast to female observers, male observers responded more to threatening than neutral bodies (female observers: p = 0.236; male observers: t(11) = 4.170, p < 0.05).

Further analysis revealed that this effect in male observers derived from threatening male versus neutral male bodies (t(11) = 3.130, p < 0.005, one-tailed). We expected this difference to be larger in male than female observers and consequently compared the difference between threatening minus neutral male body expressions between male and female observers, which yielded a significant difference (t(24) = 1.944, p < 0.05, one-tailed).

Pre-supplementary motor area responded mostly to male threatening body expressions in male observers.

Figure 2.

The amygdala showed more activity for faces than bodies, as shown by a main effect of category. However, an interaction between category and observer revealed that this increased activity for faces was specific to male observers. Although there was no interaction with gender of the actor, the enhanced response to facial expressions in male observers was present only for female faces. The amygdala was not more responsive to emotional than neutral stimuli but showed enhanced activity for faces, in particular in male observers, especially when they observed female faces.

Discussion

Previous studies showed gender differences in how the brain processes facial emotions. The present study has three innovative aspects. First, we used facial expressions but also whole body images. Second, all stimuli consisted of video clips. Third, we investigated the role of the gender of the observer as well as that of the stimulus displays.

Overall, we found a higher BOLD response in STS, EBA, and pre-SMA when participants observed male versus female actors expressing threat. But interestingly, in these regions, as well as in FG, there was an interaction between category, emotion, and observer. There was more activation for male threatening versus neutral body stimuli in the male participants. Threatening bodies and not faces triggered highest activity in STS, especially in the male participants.

Whereas male observers responded more to threatening body expressions than females did, the opposite tendency was observed in the EBA for female observers. Females were not more responsive to faces than males, but the difference in brain activity following a threatening versus neutral face was significant in this region in female observers only. However, this difference score between threatening minus neutral faces was not significantly larger in female than in male observers. So, the three-way interaction between category, emotion, and observer in EBA was mainly driven by male observers’ response to threatening body expressions. Ishizu et al. (2009) found that males showed a greater response in EBA than females when imagining hand movement. Our male subjects possibly imagined themselves reacting to the threatening male actor more than females did. Alternatively, there was an automatic, increased response to threatening body expressions (Tamietto and de Gelder, 2010). This latter explanation is plausible because the male observers additionally showed a clear motor preparation response in the PM and the pre-SMA toward threatening male body expressions.

Our results are consistent with previous studies showing that male observers are more reactive to threatening signals than female observers (Aleman and Swart, 2008; Fine et al., 2009). Hess et al. (2004) found that facial cues linked to perceived dominance (square jaw, heavy eyebrows, high forehead) are more typical for men, who are generally perceived as more dominant than women. So far, nothing is known about bodily cues and perceived dominance in humans, but the physical differences between men and women may be important for interpreting our results. If there is a significant difference in power or status between men and women, then threat from an anger expression can elicit different responses depending on the status or power of the angry person.

The AMG was more active for facial than bodily expressions, independent of emotion. This effect was specific to male observers watching female faces, which is consistent with earlier findings. Fischer et al. (2004) reported an increased response in the left AMG and adjacent anterior temporal regions in men, but not in women, during exposure to faces of the opposite versus the same sex. Moreover, AMG activity in male observers was increased when viewing female faces with relatively large pupils, an index of interest (Demos et al., 2008). Possibly, female faces provide more relevant information to males than male faces do, whereas the distinction between male and female faces is less important for female observers. Other studies have reported that the AMG is face specific but not emotion specific (Van der Gaag et al., 2007; see Kret et al., 2011 for further discussion). The striking fact here is that all the other areas that reflect sensitivity of the male observers are emotion sensitive, in contrast with the AMG results. This dissociation between AMG sensitivity to face gender and the gender–emotion sensitivity of STS, EBA, PM, and pre-SMA indicates that the role of the AMG is not limited to emotion encoding, and fits with the notion that it encodes salience and modulates recognition and social judgment (Tsuchiya et al., 2009).

In our study, males showed a clear motor preparation response to threatening male body language, and females did not. In males, the fight-or-flight response is characterized by the release of vasopressin. The effects of vasopressin are enhanced by the presence of testosterone and influence the defense behavior of male animals (Taylor et al., 2002). Testosterone level is a good predictor of aggressive behavior and dominance (van Honk and Schutter, 2007) and of AMG activity to angry as well as fearful faces in men (Derntl et al., 2009). In contrast, oxytocin has been reported to cause relaxation and sedation as well as reduced fearfulness and reduced sensitivity to pain (Uvnas-Moberg, 1997). Testosterone inhibits the release of oxytocin, as shown by Jezova et al. (1996; for a discussion, see Taylor et al., 2000). In addition to the increased quantity of oxytocin released in females as compared to males, McCarthy (1995) found that estrogen enhances the effects of oxytocin. Therefore, oxytocin may be vital in the reduction of the fight-or-flight response in females. Although we cannot report any measure that could support such an interpretation, it is well known that the endocrine system plays an important role in modulating behavior. Comparing the levels of these hormones with specific neuronal responses may give more insight into these gender effects.

Conclusion

Increased activation in FG, STS, EBA, PM, and pre-SMA was observed for threatening versus neutral male body stimuli in male participants. AMG was more active for facial than bodily expressions, independent of emotion, yet specifically for male observers watching female faces. Human emotion perception depends to an important extent on whether the stimulus is a face or a body and whether observers and observed are male or female. These factors, which have not been at the forefront of emotion research, are nevertheless important for human emotion theories.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This study was partly supported by Human Frontiers Science Program RGP54/2004, NWO Nederlandse Organisatie voor Wetenschappelijk Onderzoek 400.04081 to Beatrice de Gelder, and EU FP6-NEST-COBOL043403 and FP7 TANGO to Beatrice de Gelder. We thank the two reviewers for their helpful and insightful comments and suggestions.


References

- Aleman A., Swart M. (2008). Sex differences in neural activation to facial expressions denoting contempt and disgust. PLoS ONE 3, e3622. doi: 10.1371/journal.pone.0003622

- Allison T., Puce A., McCarthy G. (2000). Social perception from visual cues: role of the STS region. Trends Cogn. Sci. 4, 267–278.

- Blanke O., Arzy S. (2005). The out-of-body experience: disturbed self-processing at the temporo-parietal junction. Neuroscientist 11, 16–24. doi: 10.1177/1073858404270885

- Bonda E., Petrides M., Ostry D., Evans A. (1996). Specific involvement of human parietal systems and the amygdala in the perception of biological motion. J. Neurosci. 16, 3737–3744.

- Bosson J. K., Vandello J. A., Burnaford R. M., Weaver J. R., Arzu Wasti S. (2009). Precarious manhood and displays of physical aggression. Pers. Soc. Psychol. Bull. 35, 623–634.

- de Gelder B. (2006). Towards the neurobiology of emotional body language. Nat. Rev. Neurosci. 7, 242–249.

- de Gelder B., Hadjikhani N. (2006). Non-conscious recognition of emotional body language. Neuroreport 17, 583–586. doi: 10.1097/00001756-200604240-00006

- de Gelder B., Snyder J., Greve D., Gerard G., Hadjikhani N. (2004). Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proc. Natl. Acad. Sci. U.S.A. 101, 16701–16706.

- de Gelder B., Van den Stock J., Meeren H. K. M., Sinke C. B. A., Kret M. E., Tamietto M. (2010). Standing up for the body. Recent progress in uncovering the networks involved in processing bodies and bodily expressions. Neurosci. Biobehav. Rev. 34, 513–527.

- Decety J., Lamm C. (2007). The role of the right temporoparietal junction in social interaction: how low-level computational processes contribute to meta-cognition. Neuroscientist 13, 580–593. doi: 10.1177/1073858407304654

- Demos K. E., Kelley W. M., Ryan S. L., Davis F. C., Whalen P. J. (2008). Human amygdala sensitivity to the pupil size of others. Cereb. Cortex 18, 2729–2734. doi: 10.1093/cercor/bhn034

- Derntl B., Windischberger C., Robinson S., Kryspin-Exner I., Gur R. C., Moser E. (2009). Amygdala activity to fear and anger in healthy young males is associated with testosterone. Psychoneuroendocrinology 34, 687–693. doi: 10.1016/j.psyneuen.2008.11.007

- Dickie E. W., Armony J. L. (2008). Amygdala responses to unattended fearful faces: interaction between sex and trait anxiety. Psychiatry Res. 162, 51–57. doi: 10.1016/j.pscychresns.2007.08.002

- Downing P. E., Jiang Y., Shuman M., Kanwisher N. (2001). A cortical area selective for visual processing of the human body. Science 293, 2470–2473. doi: 10.1126/science.1063414

- Duvernoy H. M. (1999). The Human Brain: Surface, Three-Dimensional Sectional Anatomy with MRI, and Blood Supply. Wien, NY: Springer Verlag.

- Eickhoff S. B., Stephan K. E., Mohlberg H., Grefkes C., Fink G. R., Amunts K., Zilles K. (2005). A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage 25, 1325–1335. doi: 10.1016/j.neuroimage.2004.12.034

- Eron L. D., Huesmann L. R. (1984). “The control of aggressive behavior by changes in attitudes, values, and the conditions of learning,” in Advances in the Study of Aggression: Vol. I, eds Blanchard R. T., Blanchard C. (Orlando, FL: Academic Press), 139–171.

- Fine J. G., Semrud-Clikeman M., Zhu D. C. (2009). Gender differences in BOLD activation to face photographs and video vignettes. Behav. Brain Res. 201, 137–146.

- Fischer H., Sandblom J., Herlitz A., Fransson P., Wright C. I., Backman L. (2004). Sex-differential brain activation during exposure to female and male faces. Neuroreport 15, 235–238. doi: 10.1097/00001756-200402090-00004

- Friston K., Holmes A. P., Worsley K., Poline J., Frith C., Frackowiak R. (1995). Statistical parametric maps in functional imaging: a general linear approach. Hum. Brain Mapp. 2, 189–210. doi: 10.1002/hbm.460020402

- Gauthier I., Skudlarski P., Gore J. C., Anderson A. W. (2000). Expertise for cars and birds recruits brain areas involved in face recognition. Nat. Neurosci. 3, 191–197.

- Grèzes J., Pichon S., de Gelder B. (2007). Perceiving fear in dynamic body expressions. NeuroImage 35, 959–967. doi: 10.1016/j.neuroimage.2006.11.030

- Grosbras M. H., Paus T. (2006). Brain networks involved in viewing angry hands or faces. Cereb. Cortex 16, 1087–1096.

- Grossman E., Blake R. (2002). Brain areas active during visual perception of biological motion. Neuron 35, 1167–1175. doi: 10.1016/S0896-6273(02)00897-8

- Hadjikhani N., de Gelder B. (2003). Seeing fearful body expressions activates the fusiform cortex and amygdala. Curr. Biol. 13, 2201–2205.

- Haxby J. V., Hoffman E. A., Gobbini M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233.

- Hess U., Adams R. B., Jr., Kleck R. E. (2004). Facial appearance, gender, and emotion expression. Emotion 4, 378–388. doi: 10.1037/1528-3542.4.4.378

- Hofer A., Siedentopf C. M., Ischebeck A., Rettenbacher M. A., Verius M., Felber S. (2006). Gender differences in regional cerebral activity during the perception of emotion: a functional MRI study. NeuroImage 32, 854–862. doi: 10.1016/j.neuroimage.2006.03.053

- Holmes C. J., Hoge R., Collins L., Woods R., Toga A. W., Evans A. C. (1998). Enhancement of MR images using registration for signal averaging. J. Comput. Assist. Tomogr. 22, 324–333. doi: 10.1097/00004728-199803000-00032

- Hoshi E., Tanji J. (2004). Functional specialization in dorsal and ventral premotor areas. Prog. Brain Res. 143, 507–511. doi: 10.1016/S0079-6123(03)43047-1

- Ishizu T., Noguchi A., Ito Y., Ayabe T., Kojima S. (2009). Motor activity and imagery modulate the body-selective region in the occipital-temporal area: a near-infrared spectroscopy study. Neurosci. Lett. 465, 85–89. doi: 10.1016/j.neulet.2009.08.079

- Jezova D., Jurankova E., Mosnarova A., Kriska M., Skultetyova I. (1996). Neuroendocrine response during stress with relation to gender differences. Acta Neurobiol. Exp. 56, 779–785.

- Kanwisher N., McDermott J., Chun M. M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311.

- Kemp A. H., Silberstein R. B., Armstrong S. M., Nathan P. J. (2004). Gender differences in the cortical electrophysiological processing of visual emotional stimuli. NeuroImage 21, 632–646. doi: 10.1016/j.neuroimage.2003.09.055

- Killgore W. D., Cupp D. W. (2002). Mood and sex of participant in perception of happy faces. Percept. Mot. Skills 95, 279–288.

- Kret M. E., Pichon S., Grèzes J., de Gelder B. (2011). Similarities and differences in perceiving threat from dynamic faces and bodies. An fMRI study. NeuroImage 54, 1755–1762.

- Kriegeskorte N., Simmons W. K., Bellgowan P. S. F., Baker C. I. (2009). Circular analysis in systems neuroscience: the dangers of double dipping. Nat. Neurosci. 12, 535–540.

- Kring A. M., Gordon A. H. (1998). Sex differences in emotion: expression, experience, and physiology. J. Pers. Soc. Psychol. 74, 686–703.

- Mazurski E. J., Bond N. W., Siddle D. A. T., Lovibond P. F. (1996). Conditioning with facial expressions of emotion: effects of CS sex and age. Psychophysiology 33, 416–425. doi: 10.1111/j.1469-8986.1996.tb01067.x

- McCarthy M. M. (1995). “Estrogen modulation of oxytocin and its relation to behavior,” in Oxytocin: Cellular and Molecular Approaches in Medicine and Research, eds Ivell R., Russell J. (New York: Plenum), 235–242.

- Meeren H. K., Hadjikhani N., Ahlfors S. P., Hamalainen M. S., de Gelder B. (2008). Early category-specific cortical activation revealed by visual stimulus inversion. PLoS ONE 3, e3503. doi: 10.1371/journal.pone.0003503

- Moore M. (1966). Aggression themes in a binocular rivalry situation. J. Pers. Soc. Psychol. 3, 685–688.

- Nachev P., Kennard C., Husain M. (2008). Functional role of the supplementary and pre-supplementary motor areas. Nat. Rev. Neurosci. 9, 856–869.

- Öhman A., Dimberg U. (1978). Facial expressions as conditioned stimuli for electrodermal responses: a case of preparedness? J. Pers. Soc. Psychol. 36, 1251–1258.

- Olweus D., Mattson A., Schalling D., Low H. (1988). Circulating testosterone levels and aggression in adolescent males: a causal analysis. Psychosom. Med. 50, 261–272.

- Peelen M. V., Atkinson A. P., Andersson F., Vuilleumier P. (2007). Emotional modulation of body-selective visual areas. Soc. Cogn. Affect. Neurosci. 2, 274–283.

- Peelen M. V., Downing P. E. (2005). Selectivity for the human body in the fusiform gyrus. J. Neurophysiol. 93, 603–608. doi: 10.1152/jn.00513.2004

- Peelen M. V., Downing P. E. (2007). The neural basis of visual body perception. Nat. Rev. Neurosci. 8, 636–648.

- Perrett D. I., Harries M. H., Bevan R., Thomas S., Benson P. J., Mistlin A. J., Chitty A. J., Hietanen J. K., Ortega J. E. (1989). Frameworks of analysis for the neural representation of animate objects and actions. J. Exp. Biol. 146, 87–113.

- Pichon S., de Gelder B., Grèzes J. (2008). Emotional modulation of visual and motor areas by still and dynamic body expressions of anger. Soc. Neurosci. 3, 199–212.

- Pichon S., de Gelder B., Grèzes J. (2009). Two different faces of threat. Comparing the neural systems for recognizing fear and anger in dynamic body expressions. NeuroImage 47, 1873–1883. doi: 10.1016/j.neuroimage.2009.03.084

- Puce A., Allison T., Asgari M., Gore J. C., McCarthy G. (1996). Differential sensitivity of human visual cortex to faces, letterstrings, and textures: a functional magnetic resonance imaging study. J. Neurosci. 16, 5205–5215.

- Samson D., Apperly I. A., Chiavarino C., Humphreys G. W. (2004). Left temporoparietal junction is necessary for representing someone else's belief. Nat. Neurosci. 7, 499–500.

- Saxe R., Kanwisher N. (2003). People thinking about thinking people. The role of the temporo-parietal junction in “theory of mind.” NeuroImage 19, 1835–1842. doi: 10.1016/S1053-8119(03)00230-1

- Schienle A., Schafer A., Stark R., Walter B., Vaitl D. (2005). Gender differences in the processing of disgust- and fear-inducing pictures: an fMRI study. Neuroreport 16, 277–280. doi: 10.1097/00001756-200502280-00015

- Tamietto M., de Gelder B. (2010). Neural bases of the non-conscious perception of emotional signals. Nat. Rev. Neurosci. 11, 697–709.

- Taylor S. E., Klein L. C., Lewis B. P., Gruenewald T. L., Gurung R. A. R., Updegraff J. A. (2000). Biobehavioral responses to stress in females: tend-and-befriend, not fight-or-flight. Psychol. Rev. 107, 411–429.

- Taylor S. E., Klein L. C., Lewis B. P., Gruenewald T. L., Gurung R. A. R., Updegraff J. A. (2002). Sex differences in biobehavioral responses to threat: reply to Geary and Flinn (2002). Psychol. Rev. 109, 751–753.

- Thompson J. C., Clarke M., Stewart T., Puce A. (2005). Configural processing of biological motion in human superior temporal sulcus. J. Neurosci. 25, 9059–9066. doi: 10.1523/JNEUROSCI.2129-05.2005

- Tsuchiya N., Moradi F., Felsen C., Yamazaki M., Adolphs R. (2009). Intact rapid detection of fearful faces in the absence of the amygdala. Nat. Neurosci. 12, 1224–1225.

- Uvnas-Moberg K. (1997). Oxytocin linked antistress effects: the relaxation and growth response. Acta Physiol. Scand. 640(Suppl.), 38–42.

- van de Riet W. A. C., Grèzes J., de Gelder B. (2009). Specific and common brain regions involved in the perception of faces and bodies and the representation of their emotional expressions. Soc. Neurosci. 4, 101–120.

- Van der Gaag C., Minderaa R. B., Keysers C. (2007). Facial expressions: what the mirror neuron system can and cannot tell us. Soc. Neurosci. 2, 179–222.

- van Honk J., Schutter D. J. L. G. (2007). Testosterone reduces conscious detection of signals serving social correction implications for antisocial behavior. Psychol. Sci. 18, 663–667.

- Van Overwalle F., Baetens K. (2009). Understanding others’ actions and goals by mirror and mentalizing systems: a meta-analysis. NeuroImage 48, 564–584. doi: 10.1016/j.neuroimage.2009.06.009