Abstract

Folk psychology advocates the existence of gender differences in socio-cognitive functions such as ‘reading’ the mental states of others or discerning subtle differences in body-language. A female advantage has been demonstrated for emotion recognition from facial expressions, but virtually nothing is known about gender differences in recognizing bodily stimuli or body language. The aim of the present study was to investigate potential gender differences in a series of tasks, involving the recognition of distinct features from point light displays (PLDs) depicting bodily movements of a male and female actor. Although recognition scores were considerably high at the overall group level, female participants were more accurate than males in recognizing the depicted actions from PLDs. Response times were significantly higher for males compared to females on PLD recognition tasks involving (i) the general recognition of ‘biological motion’ versus ‘non-biological’ (or ‘scrambled’ motion); or (ii) the recognition of the ‘emotional state’ of the PLD-figures. No gender differences were revealed for a control test (involving the identification of a color change in one of the dots) and for recognizing the gender of the PLD-figure. In addition, previous findings of a female advantage on a facial emotion recognition test (the ‘Reading the Mind in the Eyes Test’ (Baron-Cohen, 2001)) were replicated in this study. Interestingly, a strong correlation was revealed between emotion recognition from bodily PLDs versus facial cues. This relationship indicates that inter-individual or gender-dependent differences in recognizing emotions are relatively generalized across facial and bodily emotion perception. Moreover, the tight correlation between a subject's ability to discern subtle emotional cues from PLDs and the subject's ability to basically discriminate biological from non-biological motion provides indications that differences in emotion recognition may - at least to some degree – be related to more basic differences in processing biological motion per se.

Introduction

In everyday life, humans are constantly observing and interpreting the movements of others in an attempt to deduce their moods and emotional states. Folk psychology advocates the existence of gender differences in a number of these socio-cognitive functions such as ‘reading’ the mental states of others or discerning subtle differences in others body language. However, from an empirical perspective, the issue of gender differences in recognizing emotions is still a topic of debate. Studies using self-report questionnaires have revealed that females have higher empathy scores than males [1]–[4]. However, it is possible that the answers of participants might have been associated with indices of social desirability, potentially leading to biased results [3], [5]. Next to self-reports, the majority of studies addressing gender differences in the socio-cognitive domain employed paradigms involving emotion recognition from facial expressions. A meta-analysis on this topic revealed that 80% of studies show a female advantage [6], [7], however with relatively small effect sizes. Other studies even showed no gender differences in the recognition of facially expressed emotions [8], [9]. Recently it has been suggested that this inconsistency across studies can be explained by differences in the nature of stimuli, such that studies using emotional expressions of high intensity show fewer differences between male and female decoders than those using subtle expressions with less intensity [10].

However, facial expressions are not the only source for conveying emotionally relevant information. In every-day situations, other sources - such as the communicator's body language or “bodily kinematics” - might be important as well, especially when facial expressions are inconsistent or unavailable to the observer.

Concerning this topic, neuroscience and social cognition research are increasingly focusing on the role of the observer's own motor system in understanding or ‘reading’ others bodily kinematics [11]–[14]. Already within the framework of the social-cognitive simulation theory [15], [16] and the ideomotor theory [17], it was posited that the ‘understanding of other's actions and behavior’ may be essentially motor, rather than sensory in nature. The ideomotor principle - as first contended by James (1890) - assumed that “every representation of a movement awakens in some degree the actual movement which is its object” [18] and that common perceptual-motor representations are formed by the correlated experience of executing and perceiving actions. Accordingly, simulating other's actions by matching perceived movements onto the observers' own motor system has been proposed to be the general mechanism by which observers can read or understand the actions, intentions or emotions of others [11]–[14].

First neurophysiological evidence for the ‘embodied simulation theory’ was provided by the discovery of mirror neurons in the macaque monkey brain. Using single neuron recordings, the group of Rizzolatti et al., identified the existence of a particular class of neurons in the ventral premotor [19] and later in parietal cortices [20], which fire when the monkey performs a specific action, and also when it observes the same action performed by another individual. First indications of the existence of a mirror neuron system (MNS) in the human brain emerged from transcranial magnetic stimulation (TMS) studies showing ‘resonating’ activity in the observer's motor system when movements of others are observed [21]–[23], and similar findings on resonant activation of the motor cortex during mere observation of actions were provided from studies using electroencephalography (EEG) [24], [25] and magneto-encephalo-graphy (MEG) [26]. To date, also a large number of brain imaging studies have explored which brain regions become increasingly activated during the observation of other's actions and both the inferior frontal gyrus (IFG) and the inferior parietal lobule (IPL) have consistently been identified to be key areas of the human mirror system [27]–[29]. Based on its remarkable properties, the human MNS is hypothesized to be the neural mechanism by which observed movements are simulated or matched onto the observer's own motor system in order to read or understand the actions, intentions or emotions of others (i.e., embodied simulation) [13], [30]. Interestingly, several neurophysiological studies addressed the issue of gender differences in the MNS, and all reported mirror activations to be generally stronger in female compared to male participants [31]–[33]. Also voxel-based morphometry studies demonstrated that female participants display larger gray matter volumes than male participants in regions of the mirror system [34], [35]. To date, however, the issue of behavioral gender differences in action or emotion understanding remains fairly unexplored.

Prompted by findings of neurophysiological gender differences in mirror system functioning, the present study aimed to investigate whether gender differences are also quantifiable on behavioral tasks involving the understanding or reading of other's bodily kinematics. In our paradigm, point light displays (PLD) were used, in which biological motions are depicted solely by the kinematics of light points placed on the joints of an actor [36]. We employed these highly simplified versions of biological motion to exclude that the results were influenced by differences in stimulus intensity as reported for complex and more natural stimuli such as facial expressions [10]. Although PLDs lack visual properties such as color, shading, and contours, they are easily recognized as depicting biological motion [37] and have repeatedly been shown to activate the human MNS [38]–[41]. Using fMRI, Saygin et al. (2004) reported point-light biological motion animations to yield activity in frontal areas of the action observation network [38]. Similar patterns of results were found using EEG during the perception of PLDs depicting bodily actions [41], intentions or emotions [40]. In both studies, mu rhythm suppression – indicative of resonant mirror activity - was found during the perception of point-light induced biological motion but not during observation of non-biological motion displays. Also recent data from a lesion study showed that the ability to recognize PL biological motion directly relies upon and requires neuronal resources that are part of the action observation or mirror neuron system (MNS) [39].

Biological motion as depicted by PLDs, has been shown to contain all sorts of socially relevant information about human agents such as the kind of action they are executing [42], their gender [43], [44], their intentions and even their emotional state [45], [46]. In a series of experiments, we explored whether gender differences exist for the recognition of several ‘social’ features from PLDs. In a first experiment, we explored potential gender differences in the recognition of some basic aspects, such as (i) the ‘displayed actions’ or (ii) the point light figures' ‘gender’. In a second experiment, recognition of more subtle socially relevant cues, such as the ‘emotional state’ of the displayed point light figures was explored. An additional aim of the second experiment was to investigate whether potential gender differences in discerning socially relevant information from PLDs pertained to tasks involving the basic discrimination of ‘biological motion’ from ‘non-biological’ motion. In this task, subjects were required to indicate whether or not they recognized ‘a person’ in a series of human PLDs (‘biological motion’) or phase scrambled versions of the same PLD (‘non-biological motion’).

Based on the previously described stronger mirror system activation in females compared to males, we hypothesized the existence of behavioral gender differences (better performance in females) for (i) discerning subtle cues on the emotional state of the point light figure, and (potentially to a lesser extent) for (ii) recognizing the displayed actions and (ii) gender of the PLDs. Moreover, we additionally hypothesized that gender differences would pertain to the basic discrimination of ‘biological’ from ‘non-biological’ motion. No group differences were expected for a control PLD perception task, involving the identification of color changes in the moving point-light dots.

In experiment 2, we additionally tested whether the ability to recognize emotions from bodily PLD kinematics is correlated to the ability to recognize emotions from facial cues, as assessed by the ‘Reading the Mind in the Eyes Test’ (revised version) [47]. This test was previously developed as a measure of adult ‘mentalizing’ and has been shown to be a standardized and sensitive test to reveal subtle individual differences in emotion recognition from the eye region of different faces.

Materials and Methods

Ethics Statement

Written informed consent was obtained from all participants prior to the experiment. Consent forms and study design were approved by the local Ethics Committee for Biomedical Research at the Katholieke Universiteit Leuven in accordance to The Code of Ethics of the World Medical Association (Declaration of Helsinki) [48].

Participants

Experiment 1

Performance on the ‘Action Recognition Test’ and the ‘Gender Recognition Test’ was assessed in 12 males (mean age 25.1, S.D. 1.8 years) and 16 females (mean age 25.1, S.D. 3.1 years). Three out of the 28 participants were left-handed (self-reported).

Experiment 2

Performance on the ‘Biological Motion Recognition Test’, the ‘Emotion Recognition Test’, the ‘Control Color Test’ and the ‘Reading the Mind in the Eyes Test’ [47] was assessed for 15 males (mean age 27.1, S.D. 5.8 years) and 22 females (mean age 22.2, S.D. 4.4 years), who had not participated in Experiment 1. Due to technical problems, data on the ‘Emotion Recognition Test’ was lost for 2 male and 3 female participants.

All subjects were students at the K. U. Leuven, naive as to the purpose of the study and had no previous experience with point light displays.

Point light displays - Motion Capturing

One male and one female actor were selected to create the point light displays (PLD). Each actor was asked to perform 5 actions, each carried out in 4 different ‘emotional states’. The 5 actions were: (i) walking; (ii) jumping on the spot; (iii) kicking a ball using the right leg; (iv) drinking from a bottle of water, and (v) wiping the table. The 4 emotional states were: (i) neutral; (ii) happy; (iii) sad, and (iv) angry. After two or more practice trials, all actions/emotions were recorded three times for each actor. One of the three recordings was selected based on the visibility of the reflective markers, leaving a grand total of 40 recorded motion scenes (‘2 actors’ב5 actions’ב4 emotions’).

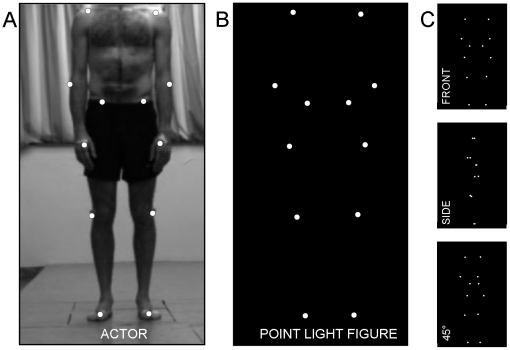

To obtain the captured motion data, an eight-camera VICON system was used (capturing system measuring at 100 Hz, Oxford Metrics, Oxford, UK). Twelve reflective markers were attached to the joints of the ankles, the knees, the hips, the wrists, the elbows, and the shoulders of the actor (Figure 1A, 1B). After the capture session, the 2-D data from all camera units (8) were processed off line to calculate the 3-D coordinates of the markers (Vicon Motion Systems, Oxford, UK). To create the actual movie files, in house made scripts were used, created with Matlab software (MathWorks, Massachusetts, U.S.A.). For each time point, 3-D coordinates of the 12 marker dots were converted as white spheres on a black background. Frames of the captured scene were rendered as audio-video interleaved (avi) movie files at a frame rate of 20 Hz. For each recorded scene, movie files with a duration of 3 s were created from three different viewpoints (front view (0°), side view (90°), intermediate view (45°) (Figure 1C) resulting in 120 PLDs in total (‘2 actors’ב5 actions’ב4 emotions’, ‘3 perspectives’).

Figure 1. To create the point light displays, twelve reflective markers were attached to an actor's shoulders, elbows, wrists, hips, knees and ankles, and were tracked using a Vicon motion-capture-system.

(A) An exemplary photograph of the male actor with the 12 markers attached to the body. (B) The corresponding point light figure. (C) Examples of point light figures, viewed from different perspectives i.e., the front, the side, and the 45° view.

In addition, for each of the PLDs, a scrambled version was created which consisted of the same individual dots, undergoing the same local trajectories as in the normal PLDs, however with the position permutated between the 12 individual trajectories. This ‘scrambling’ resulted in 120 PLDs showing non-biological ‘scrambled’ motions.

In all the presented PLDs; the dots appeared white against a black background subtending 11×12 degrees visual angle at an approximate viewing distance of 50 cm (note that subjects were free to make small trunk movements). Each dot subtended 0.25 degrees (for example movies, see Video S1, S2 and S3).

Experiment 1: Stimuli & Procedure

Experiment 1 consisted of 2 tests: the ‘Action Recognition Test’ and the ‘Gender Recognition Test’. Both tests were assessed in a quiet room, on the same computer monitor. Instructions were provided verbally and on the monitor at the start of each test.

Participants had to watch a series of short movies (duration of 3 s), representing PLDs of white dots against a black background. For the ‘Action Recognition Test’, participants were asked to indicate as fast as possible the displayed actions in the point light animations by pressing different buttons on a keyboard. The five response options (walking, jumping, kicking, drinking, wiping) were indicated on the respective response buttons. For the ‘Gender Recognition Test’, participants were asked to indicate as fast as possible the gender of the point light figure by pressing different buttons on a keyboard. The two response options (male, female) were indicated on the respective response buttons.

Reaction times (RT) to indicate the action or gender (from the start of the movie, until a response button was pressed), as well as accuracy rates (% correct answers) were assessed for all subjects. E-Prime software (Psychological Software Tools) was used for stimulus presentation and RT/response logging. Half of the participants started the experiment with the ‘Action Recognition Test’, the other half with the ‘Gender Recognition Test’. For each test, subjects were presented with the same set of 120 movies showing 2 genders (male and female); 5 actions (walking, jumping, kicking, drinking, wiping); and 4 emotional states (neutral, happy, sad, angry) from 3 different viewing perspectives (front view, side view and 45° view) (see Table S1). Movies were presented in a random order to each subject.

Experiment 2: Stimuli & Procedure

Experiment 2 consisted of the ‘Biological Motion Recognition Test’, the ‘Control Color Test’, and the ‘Emotion Recognition Test’ The principal setup was identical to experiment 1.

‘Biological Motion Recognition Test’

Subjects had to watch a series of PLDs (144) that either ‘moved like a person’ (‘biological motion’ PLDs (72)) or ‘moved not like a person’ (‘scrambled’ PLDs' (72)). Participants were asked to indicate as fast as possible whether the presented PLD represented “a person” or “not a person” by pressing different buttons on a keyboard. The two response options (person, no person) were indicated on the respective response buttons. The set of 72 ‘biological motion’ PLDs was obtained by a combination of 4 factors, i.e., 2 genders (male and female); 3 actions (walking, jumping, kicking); 4 emotional states (neutral, happy, sad, mad) and 3 different viewing perspectives (front view, side view and 45° view). Movies were presented in a random order to each subject.

‘Control Color Test’

For this test, the same set of 144 PLDs (72 ‘biological motion’ PLDs, 72 ‘scrambled’ PLDs) was presented to the subjects. However, here participants were asked to indicate as fast as possible whether one of the moving white dots changes color to either ‘red’ or ‘green’ by pressing different buttons on a keyboard. The two response options (red, green) were indicated on the respective response buttons.

‘Emotion Recognition Test’

In this test, participants were presented with a series of 144 movie trials. Each trial consisted of a ‘prime’ PLD, followed by a ‘target’ PLD. Participants were asked to indicate as fast as possible whether the presented point light figure in the ‘target’ movie performed the displayed action in a different ‘emotional state’ compared to the point light figure in the ‘prime’ movie. The emotional state of the target could either be indicated as (i) happier, (ii) sadder, (iii) angrier, or (iv) not different, from the prime. The four response options (happier, sadder, angrier, no difference) were indicated on the respective response buttons on the keyboard. Prime and target movies remained constant with respect to (i) the presented model (e.g. if the prime was male, also the target was male) and (ii) the type of action displayed (e.g., if the prime was a walking point light figure, also the target was a walking point light figure). On the other hand, the viewing perspective was always different between prime and target movies (e.g., if the prime was viewed from the front view, the target was presented either from the side view or the 45° view). The prime movie always showed a point light figure in the ‘neutral emotional state’, whereas the emotional state of the target point light figure could either be (i) neutral, (ii) happy, (iii) sad, or (iv) angry. The viewing perspective was changed between prime and target movies to increase task-difficulty and, thus, to ensure that subjects had to perceive and interpret movement kinematics rather than comparing lower-order visual properties. (e.g. the dot movement would be identical when a ‘neutral’ prime is followed by a ‘neutral’ target which could be solved by applying a visual memory strategy). The above design resulted in a grand total of 144 possible prime-target sequences, i.e., 18 prime movies (2 actors (male female)×3 actions (walking, jumping, kicking)×3 perspectives) each followed by 8 possible target movies (4 emotions (neutral, happy, sad, mad)×2 perspectives).

All participants completed the different tests in the same fixed order, starting with the ‘Biological Motion Recognition Test’, followed by the ‘Control Color Test, and finishing with the ‘Emotion Recognition Test’. This order was kept fixed such that all subjects were comparably ‘naive’ on the nature of ‘biological’ versus ‘scrambled’ PLDs in the ‘Biological Motion Recognition Test’.

Reaction times and accuracy rates were assessed for each test using E-Prime software (Psychological Software Tools).

In addition to the above tests, performance on the ‘Reading the Mind in the Eyes Test’ (revised version) was also administered for participants of experiment 2. This test was developed by the group of Baron Cohen et al. (2001) as a measure of adult ‘mentalizing’ and was shown to be a standardized and sensitive test to reveal subtle individual differences in facial emotion recognition. A detailed description of this test is provided elsewhere [47]. A computerized Dutch version of this test was adopted in the present study (created with Question Writer software, Central Question Ltd., Manchester, UK). In short, participants were presented with a series of 36 photographs of the eye region of the face of different actors and actresses, and were asked to indicate (by mouse clicking) which of four words best describes what the person in the photograph is thinking or feeling. Accuracy rates were assessed.

Normative data analysis and statistics

Normative data analyses were performed to assess the overall ‘recognizability’ of the distinct features in the presented PLDs. The percentage of participants who correctly identified the displayed PLD (% correct classification score) was calculated for all PLD movies (separately for each test). In experiment 2, only PLDs of ‘walking, ‘jumping’ and ‘kicking’ were used. To make analyses and interpretations of experiment 1 and 2 comparable, only % correct classification data on these three actions were included in the analyses of experiment 1 (i.e., leaving out recorded data on the point light animations displaying the ‘drinking’ and ‘wiping’ actions). Since % correct classification scores were not normally distributed, nonparametric tests were used for the normative analyses [Shapiro-Wilk tests: all, W<.9, p<.001]. One Sample Wilcoxon Signed Rank tests were used to determine whether the group of subjects performed significantly above chance for the different tests. Additionally, non-parametric Kruskal-Wallis ANOVA tests were performed on the % correct classification scores to explore whether the recognizability of the PLDs was influenced by the (i) model's gender, (ii) the displayed action, (iii) emotion, or (iv) viewing perspective.

Gender analyses and statistics

To explore potential differences between male and female participants in recognition performance, ANOVAs with the categorical between-group factor ‘subject's gender’ were conducted on the % correct answers and RT data, separately for all tests. Only % correct answers and RT data on the walking, ‘jumping’ and ‘kicking’ actions were included in the analyses of experiment 1 (i.e., leaving out recorded data on the point light animations displaying the ‘drinking’ and ‘wiping’ actions). Reaction times recorded from the correct trials were considered as outliers and removed from the analysis when they exceeded Q3±1.5×(Q3-Q1) with Q1 and Q3 denoting the first and third quartile over the whole set of correct trials for each subject (Electronic Statistics Textbook, 2007, StatSoft, Inc. Tulsa). Following these criteria, only a few trials were discarded from the RT analyses [‘Action Recognition Test’: 1.01%] [Gender Recognition Test’: 0.43%] [‘Biological Motion Recognition Test’: 0.88%] [‘Control Color Test’: 1.35%] [‘Emotion Recognition Test’: 0.88%]. Percentage correct answers were normally distributed for all tests [Shapiro-Wilk tests; all, W>.96, p>.1], except for the ‘Action Recognition Test’ [W = .75, p<.001] and the ‘Control Color Test’ [W = .9, p<.01]. For these variables, nonparametric Kolmogorov-Smirnov tests were adopted to compare female versus male subjects. RT data were normally distributed for all tests [W>.93, p<.001].

Correlation analysis

Performance (% correct answers and reaction times) on the ‘Emotion Recognition Test’ was correlated to performance on the ‘Biological Motion Recognition Test’ to test whether a subject's ability to discern ‘emotional’ information from PLDs was related to a subject's ability to discriminate ‘biological’ from ‘non-biological’ motion.

In addition, correlation analysis was performed between the % correct answers of the ‘Emotion Recognition Test’ and the ‘Reading the Mind in the Eyes Test’. This was done to test the relationship between the ability to recognize emotions from bodily PLD kinematics as compared to photographs statically showing the eye region. As a control, correlations with the aforementioned tests and the ‘Color test’ were performed.

All statistics were calculated with Statistica 9.0 (StatSoft. Inc. Tulsa, USA).

Results

Normative Data analysis

For all the presented PLDs (72), Table S1 reports the % correct classification scores, separately for all tests.

‘Action Recognition Test’

Participants were able to identify each of the actions (walking, jumping, and kicking) reliably and far above chance level [% correct above chance for ‘walking’: 78.9%; ‘jumping’: 77.5%; and ‘kicking’: 77.3%] as confirmed by one Sample Wilcoxon Signed Rank tests [all, Z(23)>4.2; p<.001]. Kruskal-Wallis ANOVA analyses only revealed a significant main effect of ‘Emotion’ [H(3,72) = 13.5, p = .004], which indicated that slightly more subjects recognized the displayed action from the ‘angry’ point light figure [99.6%, SEM 0.3], than from the ‘happy’ [96.2%, SEM 0.8] point light figure. The effects of ‘Model's gender’ [H(1,72) = .23, p = .63], ‘Action’ [H(2,72) = 3.1, p = .21], and ‘Perspective’ [H(2,72) = 4.2, p = .12] were not significant, indicating that action recognition was not influenced by these factors.

‘Gender Recognition Test’

Participants were able to identify the gender of the point light figure only slightly above chance level [7.5%] [Z(71) = 3.0; p = .003].

Kruskal-Wallis ANOVA analyses revealed no significant effects [all, H<5.4, p>.05], indicating that gender identification was not influenced by the (i) model's gender, (ii) action, (iii) emotion, or (iv) viewing perspective.

‘Biological Motion Recognition Test’

Participants were able to identify the ‘biological motion’ versus ‘scrambled’ PLDs reliably and above chance level [% correct above chance for ‘biological’: 42.6%; and ‘scrambled’: 36.9] [both, Z(71)>7.3; p<.001].

Kruskal-Wallis ANOVA analyses were performed only for the % correct classification scores of the ‘biological motion’ PLD (72) (not for the ‘scrambled’ PLD). A significant main effect for the factor ‘Emotion’ [H(3,72) = 9.2, p = .03] indicated that slightly fewer subjects recognized ‘biological motion’ from the ‘sad’ PL figure [87.7%, SEM 2.1], than from the ‘angry’ PL figure [93.4%, SEM 2.2]. Additionally, a main effect of ‘Perspective’ [H(2,72) = 21.9 p<.001] indicated that ‘biological motion’ recognition was the most difficult from the side view [87.5%, SEM 1.9], intermediate for the 45° view [92.8%, SEM 1.5], and the least difficult from the front view [97.6%, SEM 0.6]. The effects of ‘Model's gender’ [H(1,72) = 3.3, p = .07] and ‘Action’ [H(2,72) = 4.5, p = .12] were not significant.

‘Emotion Recognition Test’

Participants were able to identify each of the emotions (neutral, happy, sad, angry) reliably and above chance level [% correct above chance for ‘neutral’: 54.3%; ‘happy’: 44.2%; ‘sad’: 45.8%; and ‘angry’: 58.6%] [all, Z(35)>4.9; p<.001].

Kruskal-Wallis ANOVA analyses revealed no significant effects [all, H<6.9, p>.05], indicating that emotion recognition was not influenced by the (i) model's gender, (ii) action, (iii) emotion, or (iv) viewing perspective.

Female versus male differences in recognizing bodily kinematics

Potential gender differences in recognition performance were assessed for all tests.

‘Action Recognition Test’ (Experiment 1)

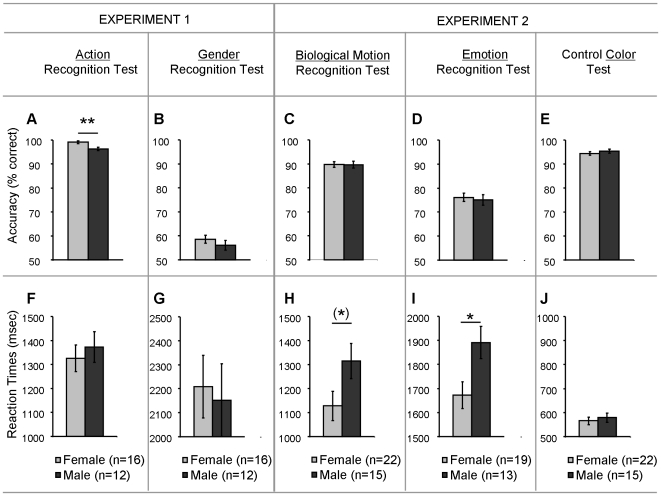

A Kolmogorov-Smirnov test on the % correct answers revealed a significant effect of gender [p<.05], indicating better action recognition for the female compared to the male participants (Figure 2A). Reaction times were comparable for both genders [F(1, 26) = .32, p = .58] (Figure 2F).

Figure 2. Gender differences in test performance.

Accuracy (% correct scores) (A–E) and Reaction times (F–J) are displayed as a function of participant group (male, female) separately for each test of experiment 1 [Action Recognition Test (A, F); Gender Recognition Test (B, G)] and experiment 2 [Biological motion Recognition Test (C, H); Emotion Recognition Test (D, I); Control Color Test (E, J)]. Vertical lines denote ±standard error. [(*) p = .05; * p<.05; ** p<.01].

‘Gender Recognition Test’ (Experiment 1)

Gender recognition from the PLDs was shown to be comparable for female and male participants, both in terms of % correct answers [F(1, 26) = .96, p = .34] (Figure 2B) and reaction times [F(1, 26) = .07, p = .78] (Figure 2G).

‘Biological Motion Recognition Test’ (Experiment 2)

As a group, females were shown to be tentatively faster compared to males in recognizing a ‘biological actor’ or ‘person’ from the PLDs. This was revealed by a significant effect of gender from the one-way ANOVA analysis on the RT data [F(1, 35) = 3.8, p = .05] (Figure 2H). Percentage correct answers were shown to be comparable for both groups [F(1, 26) = .007, p = .93] (Figure 2C).

‘Emotion Recognition Test’ (Experiment 2)

Similar to findings from the ‘Biological Motion Recognition Test’, females were shown to be significantly faster compared to males in recognizing the displayed emotions from the presented PLDs. This was revealed by a significant effect of gender from the one-way ANOVA analysis on the RT data [F(1, 30) = 6.2, p = .017] (Figure 2I). Percentage correct answers were shown to be comparable for both groups [F(1, 30) = .14, p = .72] (Figure 2D). We additionally explored the potential interaction between ‘Emotion’ and ‘Subjects' gender’ with a repeated measures ANOVA on the % correct answers and RT data (with the within factor ‘Emotion’ and the between factor ‘Subjects' gender’). However, no significant interaction effects were revealed [Acc: F(3,90) = .09, p = .96] [RT: F(3,90) = .4, p = .76], indicating that gender effects were similar for all types of emotion.

‘Control Color Test’ (Experiment 2)

Reaction times to indicate the color change of the dot, as well as % correct answers were shown to be comparable for both genders [RT: F(1, 35) = .29, p = .59] (Figure 2J) [Acc: p>.1] (Figure 2E).

‘Reading the Mind in the Eyes Test’ (Experiment 2)

One-way ANOVA analysis on the % correct answers revealed a significant effect of gender [F(1, 35) = 6.3, p = .016], replicating previous findings of a female superiority on the ‘Reading the Mind in the Eyes Test’ [47], [49].

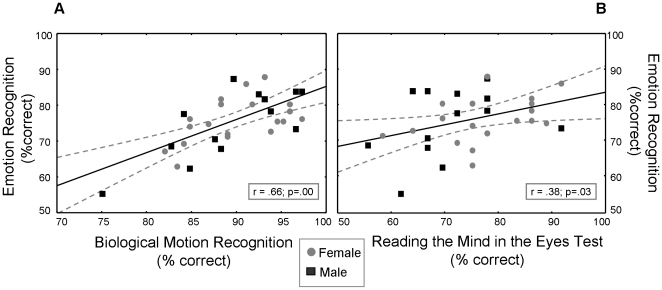

Correlated performance

Performance on the ‘Emotion Recognition Test’ was shown to correlate significantly with performance on the ‘Biological Motion Recognition Test’, both in terms of accuracy [r = .66; p<.001] (Figure 3A) and reaction times [r = .56; p<.001] (not shown in figure). These correlations indicate that a subject's ability to discern emotional information from PLDs was related to the subject's ability to basically discriminate ‘biological’ from ‘non-biological’ motion.

Figure 3. Performance on the ‘Emotion Recognition Test’ correlates with performance on the ‘Biological Motion Recognition Test’ and the ‘Reading the Mind in the Eyes Test’.

Figure 3 shows linear fits for correlated performance (% correct answers) on the ‘Emotion Recognition Test’ and respectively the ‘Biological Motion Recognition Test’ (A) and the ‘Reading the Mind in the Eyes Test’ (B) of experiment 2. Dotted lines denote 0.95 confidence intervals.

A significant correlation was also obtained between performance on the ‘Emotion Recognition Test’ and the ‘Reading the Mind in the Eyes’ Test [r = .38; p = .03] (Figure 3B), i.e., indicating that subjects scoring high on the ‘Reading the Mind in the Eyes Test’ were also good at recognizing bodily emotions from PLDs. No correlations were found between performance on the ‘Control Color Test’ and all other tests [all, r<.2; p>.1].

Discussion

The aim of the present study was to provide an objective quantification of potential gender differences in a set of socio-cognitive tasks involving the recognition of distinct features from point light displays (PLDs). Females were shown to perform better than male participants on PLD perception tasks involving bodily action recognition (experiment 1) and bodily emotion recognition (experiment 2). Interestingly, gender differences even pertained to tasks involving the basic recognition of ‘biological’ from ‘non-biological’ PLD motion (experiment 2). Moreover, previous findings of a female superiority on the ‘Reading the Mind in the Eyes test’ were replicated in the present study. No gender differences were revealed for the control test (indicate color change in one of the moving dots) and for recognizing the gender of the PLD figure. Interestingly, accuracy scores on the ‘Reading the Mind in the Eyes test’ were shown to predict the subjects' accuracy in recognizing emotions from bodily kinematics depicted by PLDs.

In experiment 1, gender differences were revealed for the ‘Action Recognition Test’ in terms of accuracy scores, such that females produced more correct answers compared to males, with comparable or even tentatively faster reaction times. The finding that performance was comparable for males and females on the ‘Gender Recognition Test’ suggests that the observed gender effect in action recognition is not related to general gender differences in reaction times or response selection abilities (i.e., selecting the correct finger to the correct response button). Overall, the lack of differences between male and female participants on the ‘Gender Recognition Test’ accords to some previous studies also showing no gender differences in gender recognition from PLDs [50], [51]. Moreover, overall gender recognition accuracy was only slightly above chance, potentially because the actors shown in the PLDs provided only subtle gender specific cues. A recent study exploring gaze patterns during gender recognition from PLDs suggested that the primary cues to gender are found in the shoulder and pelvis or ‘hip-shoulder ratio’ of a point light walker [52]. Results from our and previous studies suggest that the processing of these gender-specific cues (i.e., relating to body structure, and in particular to the relative width of shoulders and hips) may be comparable between genders.

In experiment 2, male participants appeared to adopt a more ‘time consuming’ strategy to accomplish the task compared to females both in the ‘Biological Motion’, and ‘Emotion Recognition Tests’, (as revealed by higher reaction times for males compared to females). Also here, this male-female difference seems task-specific, since reaction times on the ‘Control Color Test’ were comparable for the same male and female participants. It should be noted that the control task was relatively easy such that, theoretically, there might have been a ceiling effect in reaction times. However, even this relatively simple choice reaction time task is sufficient to control for potential gender differences in generating fast motor responses. Moreover, in light of the data from experiment 1 that revealed no gender differences on the (rather demanding) Gender recognition test, it seems unlikely that the gender effects observed in experiment 2 are solely driven by differences in response time or response selection abilities.

Overall, the finding of sex differences on bodily emotion recognition from point light animations is consistent with previous studies reporting gender differences in emotion recognition from facial expressions [6], [7], [53], [54]. A standardized and sensitive test that has been developed in this context is the ‘Reading the Mind in the Eyes Test’, in which subjects are presented with a series of photographs of the eye region of the face of different persons, and are asked to indicate which of four words best describes what the person in the photograph is thinking or feeling [47]. In the present study we were able to replicate previous findings of a female superiority in facial emotion recognition [47], [49]. Moreover, performance on this ‘facial’ emotion recognition test was shown to correlate significantly with performance on our newly developed ‘bodily’ emotion recognition test. Together, these findings indicate that gender differences in visually recognizing emotions are relatively generalized across facial and bodily emotion perception (i.e., irrespective of whether the task involves the ‘reading’ of fine movements in facial muscles, or the ‘embodiment’ of whole body emotional states). In addition, results from our Action and Biological Motion Recognition Tests provide indications that these gender differences are not restricted to the emotional domain, but are also manifest in tasks involving more ‘general’ biological motion processing.

Although substantially different in methodological approach, some recent studies explored differences between males and females in understanding actions and intentions from a visual event arrangement task. In this task, participants have to organize a set of cards depicting an event as a series of snapshots in a comic-strip fashion (i.e., requiring the understanding of the intentions and dispositions of the characters involved in the events) [55], [56]. In contrast to our results, these studies found no difference in action and intention understanding between female and male participants. However, the neural mechanisms of action and intention understanding from such reconstruction tasks (using static frames) might substantially differ from our dynamic PLD perception paradigms.

Our findings are consistent with previous studies reporting gender differences at the neurophysiological level for areas that are part of the action observation system and that respond to biological motion perception from PLDs [31]–[35]. In this respect, the presently reported differences between males and females in PLD recognition tasks may provide first indications that these neurophysiological differences are also relevant and quantifiable at the behavioral level. Interestingly, abundant reports exist on gender-related differences on empathy scores as indexed by standard questionnaires [1], [1]–[4], [57]–[60], [60,61] and also MNS activity has been shown to correlate to empathy indices [35], [62], [63]. In this respect, although rather speculative, it can be envisaged that inter-individual or gender-dependent differences in emotional processing may - at least to some degree – be related to more basic differences in processing biological motion per se. Results from our correlation analyses speak in favor of this notion by showing a tight relationship between a subject's ability to discern subtle emotional cues from PLDs and the subject's ability to basically discriminate biological from non-biological motion. Similar findings were revealed from a previous study examining a link between explicit detection of human gait from PLDs and emotion recognition [64]. Here however, only significant correlations with gait detection performance were observed for detecting ‘angry’ emotional states but not for ‘happiness’ detection.

Contrary to the notion of gender differences in the ‘biological motion processing network’, a recent magneto-encephalography study showed that gender-dependent differences in acquiring social information are related to differences in regions of the perceptual decision making network, namely, when the presence of social interactions had to be judged based on animated motions of geometric shapes [65]. Overall, it is not surprising that these non-biological stimuli produced effects outside the MNS, namely within the left prefrontal cortex, a region implicated in perceptual decision making. In addition, results indicated that females judged the presence of social interaction more rapidly than males who seemed to require more sensory evidence for social decisions [65]. Despite the apparent difference in adopted stimuli between this and our study, it should be interesting for future research to address whether the presently reported gender differences on PLD perception tasks are related to differences in the biological motion processing network, the perceptual decision making network, or in both systems. In general, such investigations may also shed light on gender-related vulnerabilities to neuropsychiatric disorders that are characterized by social cognition problems, such as autism spectrum disorders (ASD). Impairments in PLD biological motion perception have been reported in ASD [66]–[69] and males are known to be more commonly affected by the disorder than females with a ratio of about 4∶1 [70]. In this respect, it should be interesting to explore whether the normal gender difference in response to our point light action, biological motion and emotion recognition tasks is even more pronounced in ASD.

Supporting Information

Reported values refer to the percentage of participants who correctly identified each feature of the presented point light displays (N = 72) i.e., (i) the ‘type of action’ in the Action Recognition test, (ii) the ‘model's gender’ in the Gender Recognition test, (iii) a ‘person’ in the Biological Motion Recognition test, and (iv) the ‘type of emotion’ in the Emotion Recognition test. [*Sample of 28 participants; **Sample of 37 participants; ***Sample of 32 participants].

(PDF)

Exemplary movie of a point light display consisting of 12 moving white dots against a black background. The point light display shows the female actor walking on the spot (neutral emotional state, side view).

(WMV)

Scrambled version of the point light display showed in Video S1. It consists of the same individual dots, undergoing the same local trajectories as in the normal point light display, however with the position permutated between the 12 individual trajectories.

(WMV)

Exemplary movie of a ‘sad’ point light figure (female actor walking on the spot, front view).

(WMV)

Acknowledgments

We are grateful to all the subjects who participated in this research, and to Pieter Van Noten, Thomas Van Gemert, Marieke Durnez and Annelien Mestdagh, for their help in the development of the point light animations and the assessment process. We would also like to thank Johan Wagemans for his insightful feedback on the design of the distinct point light recognition tests.

Footnotes

Competing Interests: The authors have declared that no competing interests exist.

Funding: Support for this study was provided through grants from the Flanders Fund for Scientific Research (Project Grant G.0749.09 and Fellowship Grant 1.2.060.10.N.00)(http://www.fwo.be). This work was also supported by Grant P6/29 from the Interuniversity Attraction Poles program of the Belgian federal government. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Baron-Cohen S, Wheelwright S. The empathy quotient: An investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. J Autism Dev Disord. 2004;34:163–175. doi: 10.1023/b:jadd.0000022607.19833.00. [DOI] [PubMed] [Google Scholar]

- 2.Davis MH. Measuring Individual-Differences in Empathy - Evidence for A Multidimensional Approach. J Pers Soc Psychol. 1983;44:113–126. [Google Scholar]

- 3.Eisenberg N, Fabes RA, Miller PA, Fultz J, Shell R, et al. Relation of Sympathy and Personal Distress to Pro-Social Behavior - A Multimethod Study. J Pers Soc Psychol. 1989;57:55–66. doi: 10.1037//0022-3514.57.1.55. [DOI] [PubMed] [Google Scholar]

- 4.Eisenberg N, Lennon R. Sex-Differences in Empathy and Related Capacities. Psychol Bull. 1983;94:100–131. [Google Scholar]

- 5.Cialdini RB, Schaller M, Houlihan D, Arps K, Fultz J, et al. Empathy-Based Helping - Is It Selflessly Or Selfishly Motivated. J Pers Soc Psychol. 1987;52:749–758. doi: 10.1037//0022-3514.52.4.749. [DOI] [PubMed] [Google Scholar]

- 6.Hall JA. Non-verbal sex differences. 1984. John Hopkins University Press, Baltimore.

- 7.Hall JA, Carter J, Horgan T. Fischer A, editor. Gender differences in the nonverbal communication of emotion. In Gender and emotion: Social psychological perspectives. 2000. Cambridge University Press, Paris.

- 8.Grimshaw GM, Bulman-Fleming MB, Ngo C. A signal-detection analysis of sex differences in the perception of emotional faces. Brain Cogn. 2004;54:248–250. doi: 10.1016/j.bandc.2004.02.029. [DOI] [PubMed] [Google Scholar]

- 9.Kessler H, Hoffmann H, Bayerl P, Neumann H, Basic A, et al. Measuring emotion recognition with computer morphing: New methods for research and clinical practice. Nervenheilkunde. 2005;24:611–614. [Google Scholar]

- 10.Hoffmann H, Kessler H, Eppel T, Rukavina S, Traue HC. Expression intensity, gender and facial emotion recognition: Women recognize only subtle facial emotions better than men. Acta Psychol. 2010 doi: 10.1016/j.actpsy.2010.07.012. In Press. [DOI] [PubMed] [Google Scholar]

- 11.Gallese V, Goldman A. Mirror neurons and the simulation theory of mind-reading. Trends Cogn Sci. 1998;2:493–501. doi: 10.1016/s1364-6613(98)01262-5. [DOI] [PubMed] [Google Scholar]

- 12.Gallese V, Keysers C, Rizzolatti G. A unifying view of the basis of social cognition. Trends Cogn Sci. 2004;8:396–403. doi: 10.1016/j.tics.2004.07.002. [DOI] [PubMed] [Google Scholar]

- 13.Gallese V. Mirror Neurons, Embodied Simulation, and the Neural Basis of Social Identification. Psychoanalytic Dialogues. 2009;19:519–536. [Google Scholar]

- 14.Sinigaglia C, Sparaci L. Emotions in action through the looking glass. J Anal Psychol. 2010;55:3–29. doi: 10.1111/j.1468-5922.2009.01821.x. [DOI] [PubMed] [Google Scholar]

- 15.Davis N, Stone T. Mental simulation. 1995. Blackwell. Oxford, UK.

- 16.Carruthers P, Smith P. Theories of theories of mind. 1996. Cambridge University Press. Cambridge, UK.

- 17.Prince W. Hurley S, Chater N, editors. An ideomotor approach to imitation. In Perspective on imitation: From neuroscience to social science. 2005. pp. 141–156. MIT Press. Cambridge, MA.

- 18.James W. The principles of psychologiy. 1890. Dover Publications, New York.

- 19.Di Pellegrino G, Fadiga L, Fogassin L, Gallese V, Rizzolatti G. Understanding motor events: a neurophysiological study. Exp Brain Res. 1992;91:176–180. doi: 10.1007/BF00230027. [DOI] [PubMed] [Google Scholar]

- 20.Fogassi L, Ferrari PF, Gesierich B, Rozzi S, Chersi F, et al. Parietal lobe: From action organization to intention understanding. Science. 2005;308:662–667. doi: 10.1126/science.1106138. [DOI] [PubMed] [Google Scholar]

- 21.Fadiga L, Fogassi L, Pavesi G, Rizzolatti G. Motor facilitation during action observation: a magnetic stimulation study. J Neurophysiol. 1995;73:2608–2611. doi: 10.1152/jn.1995.73.6.2608. [DOI] [PubMed] [Google Scholar]

- 22.Strafella AP, Paus T. Modulation of cortical excitability during action observation: a transcranial magnetic stimulation study. Neuroreport. 2000;11:2289–2292. doi: 10.1097/00001756-200007140-00044. [DOI] [PubMed] [Google Scholar]

- 23.Fadiga L, Craighero L, Olivier E. Human motor cortex excitability during the perception of others' action. Curr Opin Neurobiol. 2005;15:213–218. doi: 10.1016/j.conb.2005.03.013. [DOI] [PubMed] [Google Scholar]

- 24.Cochin S, Barthelemy C, Martineau L. Changes in quantified EEG with observation and execution of movements. Eur J Neurosci. 1998;10:254. doi: 10.1046/j.1460-9568.1999.00598.x. [DOI] [PubMed] [Google Scholar]

- 25.Cochin S, Barthelemy C, Lejeune B, Roux S, Martineau J. Perception of motion and qEEG activity in human adults. Electroencephalogr Clin Neurophysiol. 1998;107:287–295. doi: 10.1016/s0013-4694(98)00071-6. [DOI] [PubMed] [Google Scholar]

- 26.Hari R, Forss N, Avikainen S, Kirveskari E, Salenius S, et al. Activation of human primary motor cortex during action observation: A neuromagnetic study. Proc Natl Acad Sci U S A. 1998;95:15061–15065. doi: 10.1073/pnas.95.25.15061. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Buccino G, Binkofski F, Fink GR, Fadiga L, Fogassi L, et al. Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. Eur J Neurosci. 2001;13:400–404. [PubMed] [Google Scholar]

- 28.Iacoboni M, Molnar-Szakacs I, Gallese V, Buccino G, Mazziotta JC, et al. Grasping the intentions of others with one's own mirror neuron system. PLoS Biol. 2005;3:529–535. doi: 10.1371/journal.pbio.0030079. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Calvo-Merino B, Glaser DE, Grezes J, Passingham RE, Haggard P. Action observation and acquired motor skills: An fMRI study with expert dancers. Cerebral Cortex. 2005;15:1243–1249. doi: 10.1093/cercor/bhi007. [DOI] [PubMed] [Google Scholar]

- 30.Rizzolatti G, Sinigaglia C. The functional role of the parieto-frontal mirror circuit: interpretations and misinterpretations. Nat Rev Neurosci. 2010;11:264–274. doi: 10.1038/nrn2805. [DOI] [PubMed] [Google Scholar]

- 31.Cheng YW, Tzeng OJL, Decety J, Imada T, Hsieh JC. Gender differences in the human mirror system: a magnetoencephalograpy study. Neuroreport. 2006;17:1115–1119. doi: 10.1097/01.wnr.0000223393.59328.21. [DOI] [PubMed] [Google Scholar]

- 32.Cheng YW, Decety J, Lin CP, Hsieh JC, Hung D, et al. Sex differences in spinal excitability during observation of bipedal locomotion. Neuroreport. 2007;18:887–890. doi: 10.1097/WNR.0b013e3280ebb486. [DOI] [PubMed] [Google Scholar]

- 33.Cheng Y, Lee PL, Yang CY, Lin CP, Hung D, et al. Gender differences in the mu rhythm of the human mirror-neuron system. PLoS One. 2008;3:e2113. doi: 10.1371/journal.pone.0002113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Yamasue H, Abe O, Suga M, Yamada H, Rogers MA, et al. Sex-linked neuroanatomical basis of human altruistic cooperativeness. Cereb Cortex. 2008;18:2331–2340. doi: 10.1093/cercor/bhm254. [DOI] [PubMed] [Google Scholar]

- 35.Cheng Y, Chou KH, Decety J, Chen IY, Hung D, et al. Sex differences in the neuroanatomy of human mirror-neuron system: A voxel-based morphometric investigation. Neuroscience. 2009;158:713–720. doi: 10.1016/j.neuroscience.2008.10.026. [DOI] [PubMed] [Google Scholar]

- 36.Johansson G. Visual-Perception of Biological Motion and A Model for Its Analysis. Percept Psychophys. 1973;14:201–211. [Google Scholar]

- 37.Cutting JE, Kozlowski LT. Recognizing Friends by Their Walk - Gait Perception Without Familiarity Cues. Bull Psychon Soc. 1977;9:353–356. [Google Scholar]

- 38.Saygin AP, Wilson SM, Hagler DJ, Bates E, Jr, Sereno MI. Point-light biological motion perception activates human premotor cortex. J Neurosci. 2004;24:6181–6188. doi: 10.1523/JNEUROSCI.0504-04.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Saygin AP. Superior temporal and premotor brain areas necessary for biological motion perception. Brain. 2007;130:2452–2461. doi: 10.1093/brain/awm162. [DOI] [PubMed] [Google Scholar]

- 40.Perry A, Troje NF, Bentin S. Exploring motor system contributions to the perception of social information: Evidence from EEG activity in the mu/alpha frequency range. Soc Neurosci. 2010;5:272–284. doi: 10.1080/17470910903395767. [DOI] [PubMed] [Google Scholar]

- 41.Ulloa ER, Pineda JA. Recognition of point-light biological motion: mu rhythms and mirror neuron activity. Behav. Brain Res. 2007;183:188–194. doi: 10.1016/j.bbr.2007.06.007. [DOI] [PubMed] [Google Scholar]

- 42.Dittrich WH. Action categories and the perception of biological motion. Perception. 1993;22:15–22. doi: 10.1068/p220015. [DOI] [PubMed] [Google Scholar]

- 43.Cutting JE. Program to Generate Synthetic Walkers As Dynamic Point-Light Displays. Behav Res Meth Instrum. 1978;10:91–94. [Google Scholar]

- 44.Troje NF. Decomposing biological motion: A framework for analysis and synthesis of human gait patterns. J Vis. 2002;2:371–387. doi: 10.1167/2.5.2. [DOI] [PubMed] [Google Scholar]

- 45.Dittrich WH, Troscianko T, Lea SEG, Morgan D. Perception of emotion from dynamic point-light displays represented in dance. Perception. 1996;25:727–738. doi: 10.1068/p250727. [DOI] [PubMed] [Google Scholar]

- 46.Pollick FE, Hill H, Calder A, Paterson H. Recognising facial expression from spatially and temporally modified movements. Perception. 2003;32:813–826. doi: 10.1068/p3319. [DOI] [PubMed] [Google Scholar]

- 47.Baron-Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I. The “Reading the Mind in the Eyes” test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism. J Child Psychol Psychiatry. 2001;42:241–251. [PubMed] [Google Scholar]

- 48.Rickham PP. Human experimentation. Code of Ethics of the World Medical Association. Declaration of Helsinki. Br Med J. 1964;2:177. doi: 10.1136/bmj.2.5402.177. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Baron-Cohen S, Wheelwright S, Jolliffe T. Is There a “Language of the Eyes”? Evidence from Normal Adults, and Adults with Autism or Asperger Syndrome. Vis cogn. 1997;4:311–331. [Google Scholar]

- 50.Bidet-Ildei C, Chauvin A, Coello Y. Observing or producing a motor action improves later perception of biological motion: Evidence for a gender effect. Acta Psychol. 2010;134:215–224. doi: 10.1016/j.actpsy.2010.02.002. [DOI] [PubMed] [Google Scholar]

- 51.Schouten B, Troje NE, Brooks A, van der Zwan R, Verfaillie K. The facing bias in biological motion perception: Effects of stimulus gender and observer sex. Atten Percept Psychophys. 2010;72:1256–1260. doi: 10.3758/APP.72.5.1256. [DOI] [PubMed] [Google Scholar]

- 52.Saunders DR, Williamson DK, Troje NF. Gaze patterns during perception of direction and gender from biological motion. J Vis. 2010;10 doi: 10.1167/10.11.9. [DOI] [PubMed] [Google Scholar]

- 53.Erwin RJ, Gur RC, Gur RE, Skolnick B, Mawhinney-Hee M, et al. Facial emotion discrimination: I. Task construction and behavioral findings in normal subjects. Psychiatry Res. 1992;42:231–240. doi: 10.1016/0165-1781(92)90115-j. [DOI] [PubMed] [Google Scholar]

- 54.Rahman Q, Wilson GD, Abrahams S. Sex, sexual orientation, and identification of positive and negative facial affect. Brain Cogn. 2004;54:179–185. doi: 10.1016/j.bandc.2004.01.002. [DOI] [PubMed] [Google Scholar]

- 55.Pavlova MA, Wecker M, Krombholz K, Sokolov AA. Perception of intentions and actions: Gender stereotype susceptibility. Brain Res. 2010;1311:81–85. doi: 10.1016/j.brainres.2009.11.046. [DOI] [PubMed] [Google Scholar]

- 56.Pavlova M. Perception and understanding of intentions and actions: Does gender matter? Neurosci Lett. 2009;449:133–136. doi: 10.1016/j.neulet.2008.10.102. [DOI] [PubMed] [Google Scholar]

- 57.Davis MH, Franzoi SL. Stability and Change in Adolescent Self-Consciousness and Empathy. J Res Pers. 1991;25:70–87. [Google Scholar]

- 58.Eisenberg N, Lennon R. Sex-Differences in Empathy and Related Capacities. Psychol Bull. 1983;94:100–131. [Google Scholar]

- 59.Hoffman ML. Sex-Differences in Empathy and Related Behaviors. Psychol Bull. 1977;84:712–722. [PubMed] [Google Scholar]

- 60.Lawrence EJ, Shaw P, Baker D, Baron-Cohen S, David AS. Measuring empathy: reliability and validity of the Empathy Quotient. Psychol Med. 2004;34:911–919. doi: 10.1017/s0033291703001624. [DOI] [PubMed] [Google Scholar]

- 61.Baron-Cohen S, Richler J, Bisarya D, Gurunathan N, Wheelwright S. The systemizing quotient: an investigation of adults with Asperger syndrome or high-functioning autism, and normal sex differences. Philos Trans R Soc Lond B Biol Sci. 2003;358:361–374. doi: 10.1098/rstb.2002.1206. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Gazzola V, Aziz-Zadeh L, Keysers C. Empathy and the somatotopic auditory mirror system in humans. Curr Biol. 2006;16:1824–1829. doi: 10.1016/j.cub.2006.07.072. [DOI] [PubMed] [Google Scholar]

- 63.Schulte-Ruther M, Markowitsch HJ, Fink GR, Piefke M. Mirror neuron and theory of mind mechanisms involved in face-to-face interactions: A functional magnetic resonance imaging approach to empathy. J Cogn Neurosci. 2007;19:1354–1372. doi: 10.1162/jocn.2007.19.8.1354. [DOI] [PubMed] [Google Scholar]

- 64.Ikeda H, Watanabe K. Anger and happiness are linked differently to the explicit detection of biological motion. Perception. 2009;38:1002–11. doi: 10.1068/p6250. [DOI] [PubMed] [Google Scholar]

- 65.Pavlova M, Guerreschi M, Lutzenberger W, Sokolov AN, Krageloh-Mann I. Cortical response to social interaction is affected by gender. Neuroimage. 2010;50:1327–1332. doi: 10.1016/j.neuroimage.2009.12.096. [DOI] [PubMed] [Google Scholar]

- 66.Parron C, Da Fonseca D, Santosn A, Moore DG, Monfardini E, et al. Recognition of biological motion in children with autistic spectrum disorders. Autism. 2008;12:261–274. doi: 10.1177/1362361307089520. [DOI] [PubMed] [Google Scholar]

- 67.Moore DG, Hobson RP, Lee A. Components of person perception: An investigation with autistic, non-autistic retarded and typically developing children and adolescents. Br J Dev Psychol. 1997;15:401–423. [Google Scholar]

- 68.Hubert B, Wicker B, Moore DG, Monfardini E, Duverger H, et al. Brief report: Recognition of emotional and non-emotional biological motion in individuals with autistic spectrum disorders. J Autism Dev Disord. 2007;37:1386–1392. doi: 10.1007/s10803-006-0275-y. [DOI] [PubMed] [Google Scholar]

- 69.Blake R, Turner LM, Smoski MJ, Pozdol SL, Stone WL. Visual recognition of biological motion is impaired in children with autism. Psychol Sci. 2003;14:151–157. doi: 10.1111/1467-9280.01434. [DOI] [PubMed] [Google Scholar]

- 70.Newschaffer CJ, Croen LA, Daniels J, Giarelli E, Grether JK, et al. The epidemiology of autism spectrum disorders. Annu Rev Public Health. 2007;28:235–258. doi: 10.1146/annurev.publhealth.28.021406.144007. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Reported values refer to the percentage of participants who correctly identified each feature of the presented point light displays (N = 72) i.e., (i) the ‘type of action’ in the Action Recognition test, (ii) the ‘model's gender’ in the Gender Recognition test, (iii) a ‘person’ in the Biological Motion Recognition test, and (iv) the ‘type of emotion’ in the Emotion Recognition test. [*Sample of 28 participants; **Sample of 37 participants; ***Sample of 32 participants].

(PDF)

Exemplary movie of a point light display consisting of 12 moving white dots against a black background. The point light display shows the female actor walking on the spot (neutral emotional state, side view).

(WMV)

Scrambled version of the point light display showed in Video S1. It consists of the same individual dots, undergoing the same local trajectories as in the normal point light display, however with the position permutated between the 12 individual trajectories.

(WMV)

Exemplary movie of a ‘sad’ point light figure (female actor walking on the spot, front view).

(WMV)