Abstract

Ever since learning and memory have been studied experimentally, the relationship between operant and classical conditioning has been controversial. Operant conditioning is any form of conditioning that essentially depends on the animal's behavior. It relies on operant behavior. A motor output is called operant if it controls a sensory variable. The Drosophila flight simulator, in which the relevant behavior is a single motor variable (yaw torque), fully separates the operant and classical components of a complex conditioning task. In this paradigm a tethered fly learns, operantly or classically, to prefer and avoid certain flight orientations in relation to the surrounding panorama. Yaw torque is recorded and, in the operant mode, controls the panorama. Using a yoked control, we show that classical pattern learning necessitates more extensive training than operant pattern learning. We compare in detail the microstructure of yaw torque after classical and operant training but find no evidence for acquired behavioral traits after operant conditioning that might explain this difference. We therefore conclude that the operant behavior has a facilitating effect on the classical training. In addition, we show that an operantly learned stimulus is successfully transferred from the behavior of the training to a different behavior. This result unequivocally demonstrates that during operant conditioning classical associations can be formed.

INTRODUCTION

Ambulatory organisms are faced with the task of surviving in a changing environment (world). As a consequence, they have acquired the ability to learn. Learning ability is an evolutionary adaptation to transient order not lasting long enough for a direct evolutionary adaptation. The order is of two types: relations in the world (classical or Pavlovian conditioning) and the consequences of one's own actions in the world (operant or instrumental conditioning). Hence, both operant and classical conditioning can be conceptualized as detection, evaluation, and storage of temporal relationships. Most learning situations comprise operant and classical components and, more often than not, it is impossible to discern the associations the animal has produced when it shows the conditioned behavior. A recurrent concern in learning and memory research, therefore, has been the question of whether for operant and classical conditioning a common formalism can be derived or whether they constitute two basically different processes (Gormezano and Tait 1976). Both one- (e.g., Guthrie 1952; Hebb 1956; Sheffield 1965) and two-process (e.g., Skinner 1935, 1937; Konorski and Miller 1937a,b; Rescorla and Solomon 1967; Trapold and Overmier 1972) theories have been proposed from early on, yet the issue remains unsolved, despite further insights and approaches (e.g., Trapold and Winokur 1967; Trapold et al. 1968; Hellige and Grant 1974; Gormezano and Tait 1976; Donahoe et al. 1993, 1997; Hoffmann 1993; Balleine 1994; Rescorla 1994; Donahoe 1997). In a recent study, Rescorla (1994) notes: “…one is unlikely to achieve a stimulus that bears a purely Pavlovian or purely instrumental relation to an outcome.” With Drosophila at the torque meter (Heisenberg and Wolf 1984, 1988), this “pure” separation has been achieved. Classical and operant learning can be compared directly in very similar stimulus situations and, at the same time, are separated for the first time with the necessary experimental rigor to show how they are related.

Operant and Classical Conditioning

Classical conditioning is often described as the transfer of the response-eliciting property of a biologically significant stimulus (US) to a new stimulus (CS) without that property (Pavlov 1927; Hawkins et al. 1983; Kandel et al. 1983; Carew and Sahley 1986; Hammer 1993). This transfer is thought to occur only if the CS can serve as a predictor for the US (e.g., Rescorla and Wagner 1972; Pearce 1987, 1994; Sutton and Barto 1990). Thus, classical conditioning can be understood as learning about the temporal (or causal, Denniston et al. 1996) relationships between external stimuli to allow for appropriate preparatory behavior before biologically significant events (“signalization”; Pavlov 1927). Much progress has been made in elucidating the neuronal and molecular events that take place during acquisition and consolidation of the memory trace in classical conditioning (e.g., Kandel et al. 1983; Tully et al. 1990, 1994; Tully 1991; Glanzman 1995; Menzel and Müller 1996; Fanselow 1998; Kim et al. 1998).

In contrast to classical conditioning, the processes underlying operant conditioning may be diverse and are still poorly understood. Technically speaking, the feedback loop between the animal's behavior and the reinforcer (US) is closed (for a general model, see Wolf and Heisenberg 1991). All operant conditioning paradigms have in common that the animal first has to “find” the motor output controlling the US (operant behavior). Analysis of operant conditioning on a neuronal and molecular level is in progress (see, e.g., Horridge 1962; Hoyle 1979; Nargeot et al. 1997, 1999a,b; Wolpaw 1997; Spencer et al. 1999) but still far from a stage comparable to that in classical conditioning.

Thus, whereas in classical conditioning a CS–US association is thought to be responsible for the learning effect, in operant conditioning a behavior–reinforcer (B–US) association is regarded as the primary process. A thorough comparison at all levels from molecules to behavior will be necessary to elucidate the processes that are shared and those that are distinct between operant and classical conditioning. In the present study, only the behavioral level is addressed.

Operant and Classical Conditioning at the Flight Simulator

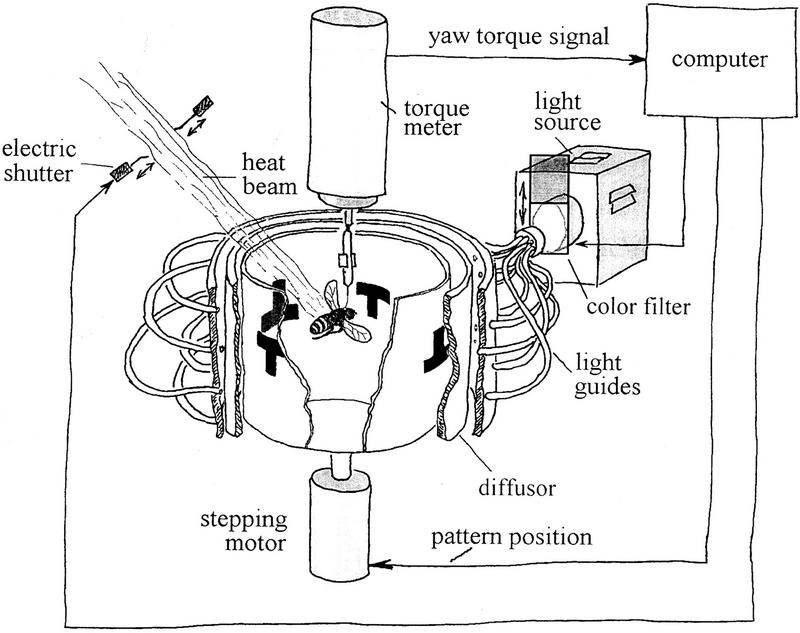

In visual learning of Drosophila at the flight simulator (Fig. 1; Wolf and Heisenberg 1991, 1997; Wolf et al. 1998) the fly's yaw torque is the only motor output recorded. With it, the fly can control the angular velocity and orientation of a circular arena surrounding it. The arena carries visual patterns on its wall, allowing the fly to choose its flight direction relative to these patterns. The fly can be trained to avoid certain flight directions (i.e., angular orientations of the arena) and to prefer others. Learning success (memory) is assessed by recording the fly's choice of flight direction once the training is over. This apparatus is well suited to compare classical and operant conditioning procedures because during training the sequence of conditioned and unconditioned stimuli can either be controlled by the fly itself as described above (closed-loop, operant training) or by the experimenter with the fly having no possibility to interfere (open-loop, classical training).

Figure 1.

Flight simulator setup. The fly is flying stationarily in a cylindrical arena homogeneously illuminated from behind. The fly's tendency to perform left or right turns (yaw torque) is measured continuously and fed into the computer. The computer controls pattern position, shutter closure, and color of illumination according to the conditioning rules.

Since operant pattern learning at the torque meter (Wolf and Heisenberg 1991) was first reported, the method has been used to investigate pattern recognition (Dill et al. 1993; Dill and Heisenberg 1995; Heisenberg 1995; Ernst and Heisenberg 1999) and structure–function relationships in the brain (Weidtmann 1993; Wolf et al. 1998; Liu et al. 1999). Dill et al. (1995) have started a behavioral analysis of the learning/memory process and others (Eyding 1993; Guo et al. 1996; Guo and Götz 1997; Wolf and Heisenberg 1997; Xia et al. 1997a,b, 1999; Wang et al. 1998) have continued. Yet, a formal description of how the operant behavior is involved in the learning task is still lacking.

More recently, Wolf et al. (1998) have described classical pattern learning at the flight simulator. In contrast to operant pattern learning, the formal description here seems rather straightforward: To show the appropriate avoidance in a subsequent closed-loop test without heat the fly has to transfer during training the avoidance-eliciting properties of the heat (US+) to the punished pattern orientation (CS+), and/or the safety-signaling property of the ambient temperature (US−) to the alternative pattern orientation (CS−). As the fly receives no confirmation of which behavior will save it from the heat, it is not able to associate a particularly successful behavior with the reinforcement schedule. In other words, we assume that classical conditioning is solely based on a property transfer from the US to the CS (i.e., a CS–US association) and not on any kind of motor learning or learning of a behavioral strategy. Learning success is assessed in closed loop, precisely as after operant pattern learning.

As both kinds of training lead to an associatively conditioned differential pattern preference, it is clear that also during operant training a CS–US association must form. Knowing that this association can be acquired independently of behavioral modifications, one is inclined to interpret the operant procedure as classical conditioning taking place during an operant behavior (pseudo-operant). However, Wolf and Heisenberg (1991) have shown that operant pattern learning at the flight simulator is not entirely reducible to classical conditioning. In a yoked control in which the precise sequence of pattern movements and heating episodes of an operant learning experiment (closed loop) was presented to a second fly as a classical training (open loop, replay), no learning was observed.

Two interesting questions arise from these findings. (1) Why does the classical conditioning experiment show pattern learning (Wolf et al. 1998) whereas the yoked control of the operant experiment does not (Wolf and Heisenberg 1991)? (2) Why does the same stimulus sequence lead to an associative after-effect if the sequence is generated by the fly itself (operant training), but not if it is generated by a different fly (replay training; yoked control)? What makes the operant training more effective?

In the present study we show that the first question has a simple answer: A more extensive yoked control leads to pattern learning. For the second question two possible answers are investigated. For one, the operant and the classical component might form an additive process. In other words, during operant conditioning the fly might learn, in addition to the pattern–heat association, a strategy such as “Stop turning when you come out of the heat.” The operantly improved avoidance behavior would then amplify the effect of the CS–US association on recall in the memory test. One would expect careful analysis of the fly's behavior after operant training to reveal such a behavioral modification. Alternatively, the coincidence of the sensory events with the fly's own behavioral activity (operant behavior) may facilitate acquisition of the CS–US association. In this case, there would be no operant component stored in the memory trace and the CS–US association would be qualitatively the same as in classical conditioning. A transfer of this CS–US association learned in one behavior to a new behavior would be compatible with such a hypothesis.

RESULTS

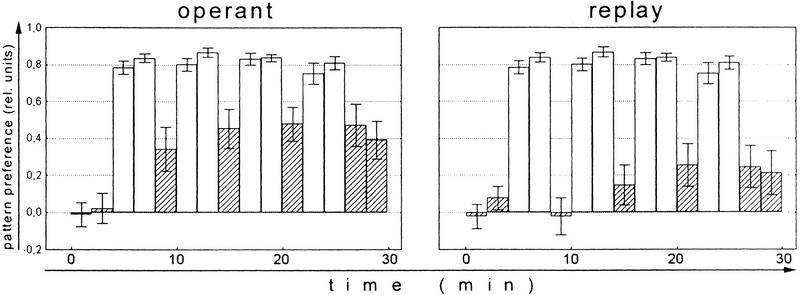

Does Classical Conditioning Occur in the Yoked Control?

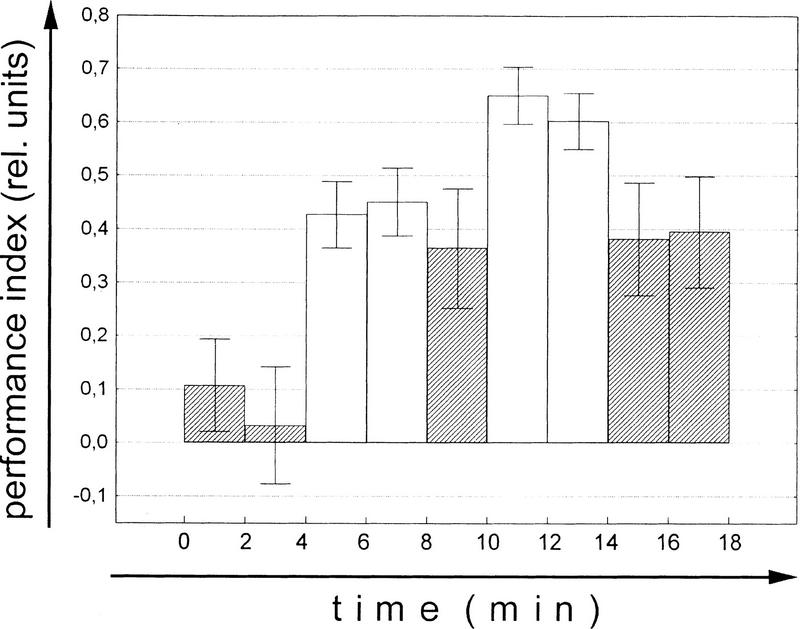

Wolf and Heisenberg (1991) have shown earlier (see Introduction) that operant conditioning at the torque meter is more effective than a classical training procedure consisting of the same sequence of pattern orientations and heat/no-heat alternations (replay; yoked control). On the other hand, classical training with stationary pattern orientations yields normal learning scores (Wolf et al. 1998). The different effect of the two classical procedures requires an explanation. In the latter experiments reinforcement is applied in a 3 sec hot/3 sec cold cycle, implying that the fly is heated during 50% of the training period. In the operant experiment the amount of heat the fly receives is controlled by the fly. In the experiment of Figure 2 (operant), for example, the fly manages to keep out of the heat for 80%–90% of the time. If the amount of heat is taken as a measure of reinforcement, the flies in the replay experiment receive substantially less reinforcement than the flies in the classical conditioning described above. The failure to learn under replay conditions may therefore be merely a matter of too little reinforcement. If this assumption is correct, prolonging the replay procedure should overcome this shortcoming. Figure 2 shows that this apparently is the case. The first test after the final replay training shows a significant learning score (P < 0.04, Wilcoxon matched-pairs test). Moreover, single learning scores cease to differ between the operant and the replay group already after three 4-min training blocks (Test 1: P < 0.01; Test 2: P < 0.05; Test 3: P < 0.14; Test 4: P < 0.10; Test 5: P < 0.30; Mann-Whitney U-test). Nevertheless, a significant difference between master and replay flies remains if all five learning scores are compared (P < 0.02; repeated-measures ANOVA). In other words, it is possible for a true classical (i.e., behavior-independent) component to be involved during operant conditioning, although without the operant behavior it is small. The fact that this classical component was not detected in Wolf and Heisenberg (1991) is due to the low level of reinforcement (2 × 4 min) in that study. In the present replay experiment (Fig. 2) the memory score after the second 4-min training block is not significantly different from that measured by Wolf and Heisenberg (1991).

Figure 2.

Comparison of the mean (±s.e.m.) learning scores of operant master flies and classical replay flies. N = 30. (Open bars) Training; (hatched bars) test.

Do Flies Learn Motor Patterns or Rules in Operant Conditioning?

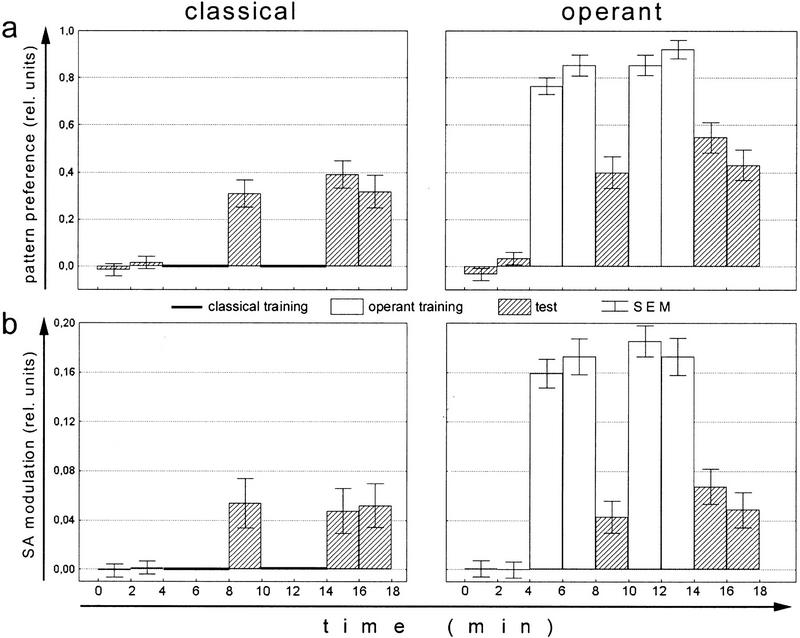

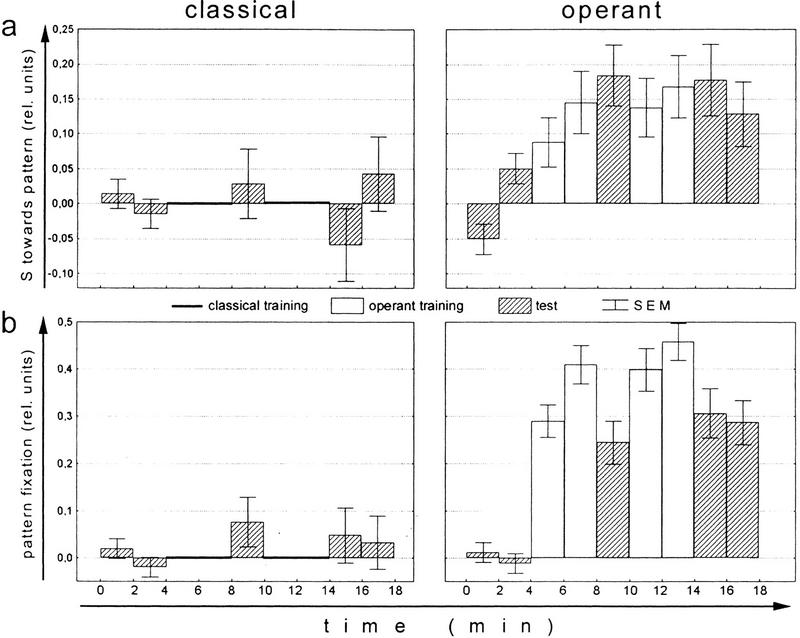

Whereas in the yoked control experiment we keep the stimulus presentation constant between groups and compare learning performance, we now keep learning performance constant by increasing the amount of reinforcement in the classical group (Figs. 3–5). Classical conditioning with stationary patterns and 50% heating time leads to about the same associative pattern preference after two training blocks of 4 min as the standard operant procedure with 10%–20% heating time (Fig. 3a). Why is operant learning more effective than classical learning? Comparing the fine structure of the flies' yaw torque after these two types of conditioning should tell us whether the flies have learned some kind of rule during the operant procedure and whether such rules can account for the smaller amount of reinforcement needed. A classically trained fly associating a flight direction towards a particular visual pattern with heat might use the same behavioral strategy to avoid this flight direction as a naive fly expressing a spontaneous pattern avoidance. If during operant training flies acquire a decisively more effective way to avoid the heat their motor output should be different from that of the naive and the classically conditioned flies.

Figure 3.

Comparing performance indices and spike amplitude of operantly and classically trained flies. For each fly, the mean of the 4-min preference test has been subtracted prior to averaging. (Open bars) Training; (hatched bars) test. (a) Mean (±s.e.m.) performance indices of classically and operantly conditioned flies. N = 100. (b) Mean (±s.e.m.) spike amplitude (SA) indices of classically and operantly conditioned flies. SA indices were calculated as (a1 − a2)/(a1 + a2) where a1 denotes the mean SA in the quadrants containing the pattern orientation associated with heat and a2 the mean SA in the other quadrants. Noperant = 97; Nclassical = 94.

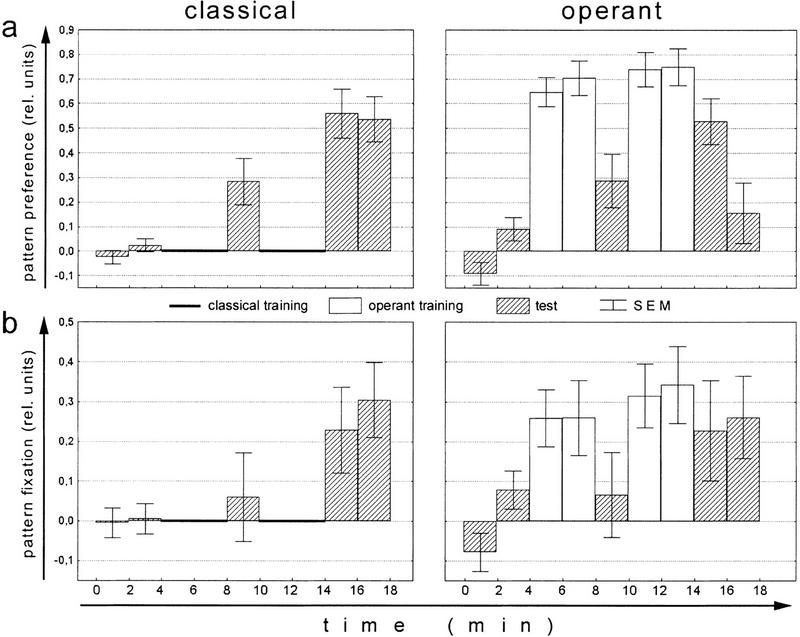

Figure 5.

Learning performance and pattern fixation of flies trained either operantly or classically with slowly rotating patterns. (a) Mean (±s.e.m.) performance indices of classically (rotating patterns) and operantly trained flies. (b) Mean (±s.e.m.) fixation indices in the cold sectors. For each fly, the mean of the 4-min preference test has been subtracted prior to averaging. Noperant = 18; Nclassical = 23. (Open bars) Training; (hatched bars) test.

To analyze the flies' yaw torque behavior after the two kinds of conditioning, 100 flies were recorded in each of the set-ups. Flies from one group could control the appearance of the reinforcer (i.e., the beam of infrared light) by their choice of flight direction with respect to the angular positions of visual patterns at the arena wall (closed loop). Flies from the second group were trained in the same arena but with stationary patterns in alternating orientations (open loop). In both instances, the identical behavioral test was used to assess learning success: The fly's pattern preference was measured in closed loop without reinforcement. Both groups received the same temporal sequence of training and test periods: Four minutes of preference test without reinforcement were followed by an 8-min training phase that was interrupted by a 2-min test period after 4 min. Learning was then tested in two test periods of 2 min (Fig. 3a).

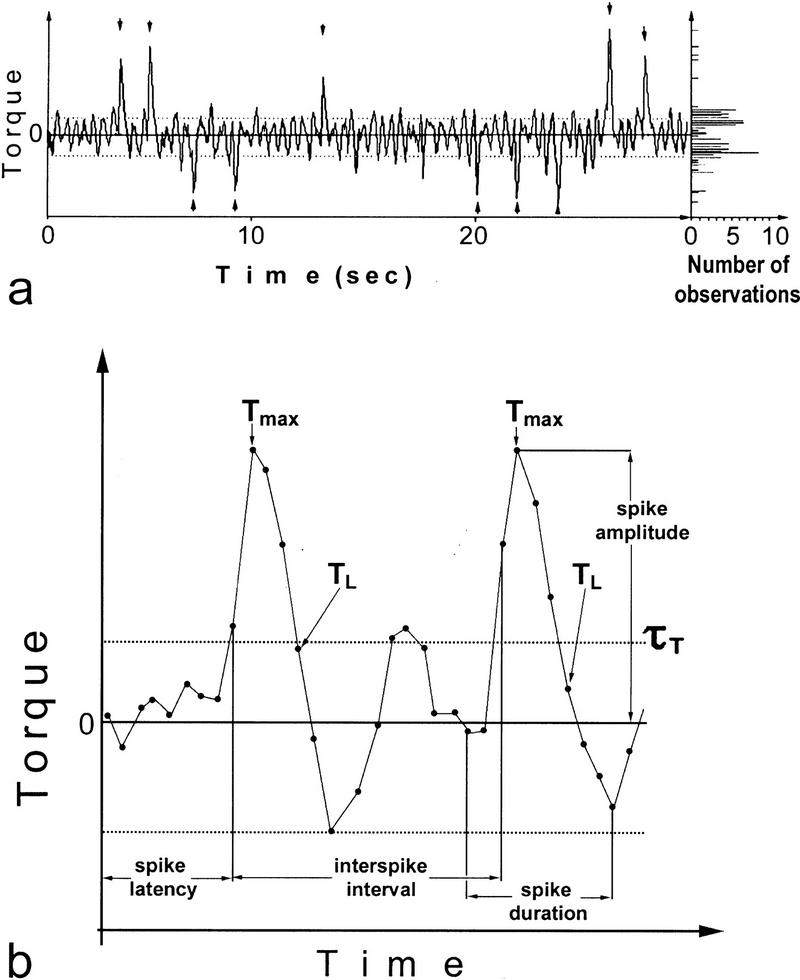

In the flight simulator, it is striking that the fly neither keeps the cylinder immobilized nor rotates it continuously: Phases of fairly straight flight are interrupted by large turns at high angular velocity (Fig. 8a, Materials and Methods). These sudden turns (body saccades) can be observed also in freely flying Drosophila. The turns are due to short pulses of torque (torque spikes). Results from previous studies suggest that the spikes are the primary behavior by which the fly adjusts its orientation in the panorama (Heisenberg and Wolf 1979, 1984, 1993; Mayer et al. 1988). Therefore, in the search for different behavioral strategies acquired during conditioning it seemed reasonable to concentrate on spiking behavior.

Figure 8.

(a) Typical 30-sec yaw torque flight trace (left) with a frequency distribution of the torque maxima and minima (right; rotated by 90°). Broken lines denote the detection thresholds. Detected spikes are indicated by arrowheads. (b) Enlarged stretch of the yaw torque flight trace with 33 data points (i.e., 1.6 sec) showing two spikes. Data points are connected by lines for better illustration. Broken lines indicate detection thresholds. (See Materials and Methods for details.)

Dynamics, timing, and polarity of torque spikes were subjected to close scrutiny (see Materials and Methods). However, most of the observed parameters were indistinguishable: Flies produced more and larger spikes when flying towards an unpreferred pattern compared with the alternative orientation, irrespective of whether this preference was spontaneous or trained operantly or classically (for detailed results, see Brembs 1996; see Fig. 3b as an example). Only the variable spike polarity revealed a statistically significant difference between the operantly and the classically trained flies (Fig. 4a). Flies trained operantly produced more spikes towards the unpunished pattern orientation than the classically trained flies (P < 0.008, repeated-measures ANOVA over all three test periods). As expected, this effect can also be seen in the fixation index for the unpunished pattern orientations, which is larger after operant than after classical training (Fig. 4b; P < 0.001, repeated-measures ANOVA over all three test periods). If indeed the operant situation during the training were causing the narrower fixation during the test the fly could have learned a rule such as “If you are out of the heat, head towards the nearest landmark.” However, this explanation probably does not apply, as will be shown in the next experiment.

Figure 4.

Spike polarity and cold pattern fixation of operantly and classically trained flies. For each fly, the mean of the 4-min preference test has been subtracted prior to averaging. (Open bars) Training; (hatched bars) test. (a) Mean (±s.e.m.) polarity indices in the cold sectors. The polarity of a spike is defined as towards pattern if it leads to a rotation of the arena that brings the center of the nearest pattern closer to the very front, which is delineated by the longitudinal axis of the fly. Accordingly, the spike polarity from pattern brings the nearest quadrant border closer to the most frontal position. The polarity index yields the fraction of spikes towards the pattern. It is defined as (st − sf)/(st + sf) with st being the number of spikes towards the pattern and sf the number of spikes away from the pattern. Noperant = 92; Nclassical = 83. (b) Mean (±s.e.m.) pattern fixation in the cold sectors. N = 100.
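The SA index of Figure 3b and the polarity index defined above share one form: a normalized difference between two non-negative quantities. The following Python sketch is an illustration only, not the analysis code used in the study; the function names `contrast_index` and `fraction_towards` are hypothetical. It also shows how an index value on the −1 to +1 scale relates to the fraction of spikes directed towards the pattern.

```python
def contrast_index(a, b):
    """Normalized difference (a - b) / (a + b), ranging from -1 to +1.

    With a = st and b = sf this is the polarity index;
    with a = a1 and b = a2 it is the spike amplitude (SA) index.
    """
    if a < 0 or b < 0:
        raise ValueError("both quantities must be non-negative")
    if a + b == 0:
        raise ValueError("index is undefined when both quantities are zero")
    return (a - b) / (a + b)


def fraction_towards(polarity_index):
    """Fraction of spikes towards the pattern implied by a polarity index."""
    return (polarity_index + 1) / 2


# Hypothetical counts: 30 spikes towards the pattern, 10 away from it.
index = contrast_index(30, 10)      # 0.5
fraction = fraction_towards(index)  # 0.75
```

An index of 0 thus corresponds to balanced spike polarity (half of the spikes towards the pattern), and +1 to all spikes being directed towards the pattern.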

During classical conditioning flies were reinforced with the four patterns in fixed positions (0°, 90°, 180°, 270°). They could not obtain information about where exactly in the space between the patterns the heat was switched on or off. Operantly trained flies, however, experienced the entire 360° of angular arena orientations and thus could have obtained information about the width of the sectors (90°) for which flight directions were associated with heat. Choosing flight directions as far away as possible from the heat-associated ones would then lead to a narrow fixation peak for the unpunished pattern orientations. Dill et al. (1995) have shown that flies trained in an arena with random dot patterns that do not elicit fixation tend to choose flight directions as far away from the heat as possible. Therefore, the low fixation index and balanced spike polarity after classical training might be a consequence of the static pattern presentation.

The simplest way to provide the flies with the information about the width of the hot and cold sectors during classical training is to rotate the arena during training. Thus, for the following experiment, the panorama was slowly rotated in open loop. The learning scores of the operant control group and the classical group are nearly identical (P < 0.23, repeated-measures ANOVA over all three test periods; Fig. 5a). As can be seen in Figure 5b, classical training with rotating panorama increases the fixation index for the unpunished pattern orientations (P < 0.02; within-groups effect) and no difference between the two training procedures remains (P < 0.20; between-groups effect). In this case only pattern fixation was evaluated, as it is the direct consequence of the appropriate production of directed torque spikes.

To estimate the amount of arena rotation caused by spikes (i.e., the contribution of spikes to turning behavior) we have calculated the rotation index (see Materials and Methods). This index yielded an estimate of ∼65% (Brembs 1996) of the total arena rotation to be caused by spikes. As only 12%–25% of the experimental time is consumed by spikes (Brembs 1996), this analysis confirms the view that most arena rotation is caused by sudden turns. The remaining 35% is hidden in the torque baseline. Most of this arena rotation is likely to be caused by the “waggle” the fly is producing when flying straight, that is, resulting in zero net rotation. Thus, we have no indication of a major contribution of “slow turns” to the choice of flight direction in the flight simulator. Although these are all admittedly negative results, we tentatively conclude that the operantly trained flies have not developed a more efficient strategy of avoiding “dangerous” flight directions.

Flies Can Transfer their Visual Memory to a New Behavior

So far, the experiments strongly suggest that the operant conditioning does not modify the fly's behavioral strategies or motor patterns and thus no additive operant component could be found. To gather positive evidence for the other option, namely the independence of the CS–US association formed during operant conditioning from any motor or rule learning, we investigated whether flies could be trained in one operant learning paradigm and would subsequently display the pattern preference in a different one.

In addition to the standard operant procedure at the flight simulator (fs mode) we used a new operant paradigm at the torque meter to be called switch (sw) mode. It is based on an experiment described previously (Wolf and Heisenberg 1991) in which the fly's spontaneous range of yaw torque is divided into a left and a right domain and the fly is conditioned by heat to restrict its range to one of the two domains. In the sw mode two stationary orientations of the panorama (or two colors of the illumination) are coupled to the two domains. For instance, if the fly generates a yaw torque value that falls into the left domain heat is on and the upright T is in frontal position; if the yaw torque changes to a value in the right domain heat goes off and the arena is quickly rotated by 90°, shifting the inverted T to the front (for further details, see Materials and Methods). The original experiment without visual cues is a case of pure motor learning (see Discussion). In the sw mode additionally a CS–US association may form because of the pairing of the visual cues with heat and no heat during training. Time course and 2-min performance indices of a representative experiment are shown in Figure 6. Note that avoidance scores are around PI = 0.5, corresponding to a heating time of only 25%.
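The coupling rule of the sw mode and the stated correspondence between PI = 0.5 and 25% heating time can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the control software of the experiment: the function names, the sign convention (torque below the boundary counts as the left domain), the equally spaced samples, and the definition of the performance index as the normalized difference between unheated and heated time are all assumptions. That PI definition is not given in this section; it is used here because it reproduces the correspondence quoted in the text.

```python
def sw_mode_state(yaw_torque, boundary=0.0, heated_domain="left"):
    """Return (heat_on, pattern_in_front) for one yaw torque sample."""
    # Assumption: torque values below the boundary fall into the left domain.
    domain = "left" if yaw_torque < boundary else "right"
    heat_on = domain == heated_domain
    # Example coupling from the text: heat on with the upright T in front,
    # heat off with the inverted T in front.
    pattern = "upright T" if heat_on else "inverted T"
    return heat_on, pattern


def performance_index(torque_trace, boundary=0.0, heated_domain="left"):
    """Assumed PI: (unheated samples - heated samples) / all samples."""
    if not torque_trace:
        raise ValueError("torque trace is empty")
    heated = sum(
        sw_mode_state(t, boundary, heated_domain)[0] for t in torque_trace
    )
    unheated = len(torque_trace) - heated
    return (unheated - heated) / len(torque_trace)


def heating_fraction(pi):
    """Fraction of time in the heat implied by the assumed PI definition."""
    return (1 - pi) / 2


# Hypothetical trace: 2 of 8 equally spaced samples lie in the heated domain.
trace = [-1.0, 2.0, 3.0, 1.5, -0.5, 2.5, 0.5, 1.0]
pi = performance_index(trace)   # 0.5
heated = heating_fraction(pi)   # 0.25
```

Under the same assumption, the 10%–20% heating time of the standard operant procedure corresponds to avoidance scores between 0.6 and 0.8.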

Figure 6.

Mean (±s.e.m.) performance indices in a representative sw mode experiment, using color as visual cue. (Open bars) Training; (hatched bars) test.

With sw and fs mode as two different behavioral paradigms we asked whether it is possible to train the fly in one mode and make it display the pattern preference in the other one. A significant learning score after a behavioral transfer would corroborate our hypothesis that the CS–US association formed during operant conditioning in the fs mode does not rely on any motor or rule learning, but instead is a true classical (i.e., behavior-independent) association, the acquisition of which is facilitated by operant behavior.

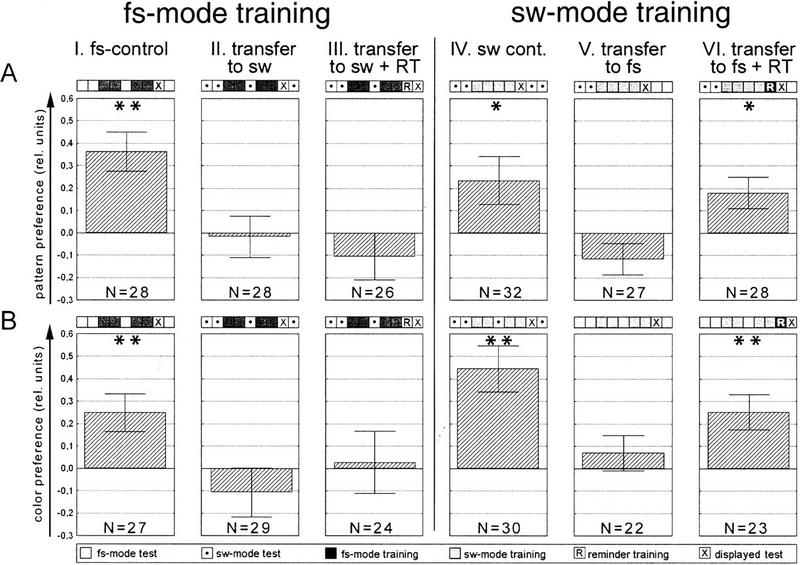

We tested the two forms of visual discrimination learning not only with patterns (upright and inverted T) but, in a second series of experiments, also with colors as described by Wolf and Heisenberg (1997; Materials and Methods). In each series six groups of flies were tested (Fig. 7): (1) training and test in fs mode; (2) training in fs mode followed by test in sw mode; (3) training in fs mode followed by reminder training and test in sw mode; (4) training and test in sw mode; (5) training in sw mode followed by test in fs mode; and (6) training in sw mode followed by reminder training and test in fs mode.

Figure 7.

Summary table presenting the results of all transfer experiments. (A) Patterns as visual cues; (B) colors as visual cues. Experimental design is schematized by the nine squares above each performance index. All experiments are divided in 2-min test or training periods, except in A, IV-VI, where 1-min periods are used. Reminder training is always 60 sec. Statistics were performed as a Wilcoxon matched pairs test against zero. (*) P < 0.05; (**) P < 0.01.
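For readers unfamiliar with the test named in the caption, the following Python sketch shows one way a Wilcoxon matched-pairs (signed-rank) test of performance indices against zero can be computed. It is an illustration under assumptions, not the procedure used in the study: the function name is hypothetical, zero scores are dropped, ties receive midranks, and the two-sided P value is taken from the exact distribution of the rank sum over all sign assignments. Whether the original analysis used an exact or an approximated P value is not stated.

```python
def signed_rank_test_against_zero(scores):
    """Return (W_plus, two-sided exact P) for scores tested against zero."""
    diffs = [s for s in scores if s != 0]  # zeros carry no sign information
    n = len(diffs)
    if n == 0:
        raise ValueError("need at least one non-zero score")

    # Midranks of the absolute values, stored doubled to stay in integers.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    doubled_ranks = [0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            doubled_ranks[order[k]] = i + j + 2  # 2 * mean of ranks i+1..j+1
        i = j + 1

    w_plus = sum(r for r, d in zip(doubled_ranks, diffs) if d > 0)
    total = sum(doubled_ranks)

    # counts[s]: number of sign assignments whose positive rank sum equals s.
    counts = [0] * (total + 1)
    counts[0] = 1
    for r in doubled_ranks:
        for s in range(total, r - 1, -1):
            counts[s] += counts[s - r]

    assignments = 2 ** n
    p_low = sum(counts[: w_plus + 1]) / assignments
    p_high = sum(counts[w_plus:]) / assignments
    return w_plus / 2, min(1.0, 2 * min(p_low, p_high))


# Hypothetical scores: six flies, all with positive performance indices.
w, p = signed_rank_test_against_zero([0.1, 0.2, 0.3, 0.4, 0.5, 0.6])
```

With six scores that are all positive, only one of the 64 sign assignments is as extreme in each direction, so the two-sided P value is 2/64.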

No direct transfer was observed when fs and sw modes were interchanged between training and test, either with patterns or with colors as visual cues (columns II and V in Fig. 7). Therefore, we included a short reminder training, venturing that the flies might not easily generalize across behavioral contexts [a similar effect was recently reported by Liu et al. (1999), who showed that flies in the fs mode are unable to generalize between two monochromatic colors of illumination]. Control experiments verified that the reminder training alone is too short to sufficiently condition the fly (data not shown). With this modification significant transfer was found from sw mode training to fs mode test for pattern as well as for color preferences (Fig. 7, column VI: P < 0.04 pattern; P < 0.005 color, Wilcoxon matched-pairs test) but not in the opposite direction (Fig. 7, column III: P < 0.37 pattern; P < 0.78 color, Wilcoxon matched-pairs test). This asymmetry is no surprise. The life-threatening heat in sw mode training enforces a behavioral modification that under natural conditions would be useless in expressing pattern, color, or temperature preferences. After training in fs mode the conditioned pattern or color preference does not have sufficient impact to also induce this strange restriction of the yaw torque range. We consider it more important that the memory template acquired during training in the sw mode is sufficiently independent of the operant behavior by which it was mediated to still be measurable in an entirely different behavior. Likely, the same process as in classical conditioning is at work in the operant sw mode procedure. They both result in one or two memory templates with different ratings on an attraction/avoidance scale. The orientation behavior at the flight simulator has access to these templates. This result holds across different sensory cues (CSs: colors and patterns) and across slightly different training procedures (4 min of pattern vs. 8 min of color sw mode training).

DISCUSSION

In visual orientation at the flight simulator flies display pattern and color preferences previously acquired by associative conditioning. We have applied operant and classical conditioning procedures and have subsequently always compared the flies in the same orientation task (Table 1). The three classical procedures are very similar. They differ only in the total amount of heating and the temporal structure of the changes in pattern orientation and temperature. Learning roughly parallels energy uptake during training (Table 1). If flies are heated for a total of 4 min (stationary or slowly rotating patterns), learning scores reach about the same value found after operant training (PI = 0.4). In the extended yoked control total heating is applied for 1.5 min and learning is weak (PI = 0.2). Finally, after the normal two training phases of 4 min in the yoked control, heating time is about 40 sec and no significant learning index is observed. Compared with the total amount of heat, the distribution and duration of hot and cold periods as well as the dynamics of pattern motion seem to be of minor importance for the learning success. The facilitating effect of operant behavior has been shown only for the fs mode (Fig. 2). A replay experiment for the switch mode is still in progress. Research in this direction has been hampered by the condition of our present fly stocks. For unknown reasons, the flies in our department currently show weakened classical learning whereas operant conditioning seems unaffected. In this study, only the flies used in the transfer experiments showed this strange deficit.

Table 1.

Reinforcement Times and Estimated Energy Uptake in Operant and Classical Conditioning at the Flight Simulator

| | | Heat time (min) | Est. energy (rel. units) | PI | N |
|---|---|---|---|---|---|
| Operant | fs mode | 0.7 | 41.1 | 0.45 | 30 |
| | ext. fs mode | 1.5 | 85.7 | 0.47 | 30 |
| Classical | yoked control | 0.7 | 41.1 | 0.15 | 30 |
| | ext. yoked control | 1.5 | 85.7 | 0.25 | 30 |
| | rotating classical | 4 | 168.0 | 0.43 | 36 |

In the operant training, the time each fly spent in the heat was calculated from the individual avoidance scores. The amount of energy taken up by each fly (in relative units) was estimated using the temperature measured at the point of the fly and multiplying it with the time the fly spent in the heat. PI is the learning test after the last training. N is the number of flies.
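The estimate described in this legend is a product of temperature and time in the heat. The Python sketch below is an illustration, not the original calculation: the function names are hypothetical, and the conversion from avoidance score to heat time assumes that the score is the normalized difference between unheated and heated time, which this section does not state. The measured temperatures are not given here, so the sketch only recovers the relative temperature factor implied by the rounded entries of Table 1.

```python
def heat_minutes_from_score(avoidance_score, training_minutes):
    """Time in the heat, assuming score = (unheated - heated) / total time."""
    return training_minutes * (1 - avoidance_score) / 2


def estimated_energy(relative_temperature, heat_minutes):
    """Energy uptake in relative units: temperature factor times heat time."""
    return relative_temperature * heat_minutes


def implied_temperature(energy, heat_minutes):
    """Relative temperature factor implied by one row of Table 1."""
    return energy / heat_minutes


# (heat time in min, estimated energy) as listed in Table 1.
table_1 = {
    "fs mode / yoked control": (0.7, 41.1),
    "ext. fs mode / ext. yoked control": (1.5, 85.7),
    "rotating classical": (4.0, 168.0),
}

implied = {
    name: round(implied_temperature(energy, minutes), 1)
    for name, (minutes, energy) in table_1.items()
}
```

Because the heat times in Table 1 are rounded, the implied factors are approximate. They differ between the rotating classical procedure and the other procedures, which is why comparing energy estimates is not the same as comparing heating times.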

The main task of this study is to determine what makes the operant training more effective than the classical one. We have considered two options. In principle, the operant training may either facilitate the formation of the CS–US association (acquisition) or support memory recall. We explicitly addressed the second option and investigated whether during operant training, in addition to the CS–US association, the fly might learn a behavioral strategy that in the subsequent test would improve pattern discrimination. Our results provide no support for this alternative. We have searched extensively for behavioral differences in the learning test between classically and operantly trained flies. If found, such differences would favor the idea that flies had modified some motor program or behavioral strategy as part of what they had learned. No evidence for acquired motor programs or rule learning during operant conditioning was found. Admittedly, these negative results do not entirely preclude that such differences still exist, hidden in the temporal fine structure of yaw torque modulations. However, as the advantage of the operant training is large (Fig. 2), one would expect the behavioral strategy providing it to show saliently in the torque traces.

From the way the learning test works it is apparent that during classical and operant training heat-associated pattern orientations become aversive, the others attractive. In other words, attraction and repulsion of the respective pattern orientation may be shifted to a behavior-independent central state (memory template). An alternative interpretation, linking the CS–US association directly to a behavior without central states, would be to assume that, irrespective of open- or closed-loop, high temperature triggers an escape behavior that works without any spatial information in the arena and that this escape behavior is transferred from temperature to the respective pattern orientations (or colors) during association formation. In this case, the behavior after classical and operant conditioning should also be identical. Experiments to decide whether there is an escape behavior that can be linked to the heated pattern orientation showed no such effect (R. Wolf, pers. comm.).

For the other option—facilitation of acquisition of the CS–US association—however, we did obtain positive evidence in the transfer experiments (Fig. 7). Our finding that the fly establishes pattern and color preferences while engaged in one behavior (sw mode) and later displays them by a different behavior (fs mode) supports the notion that conditioned preferences are behavioral dispositions (central states) rather than motor patterns (for a general discussion of behavioral dispositions, see Heisenberg 1994). The necessity of a reminder training slightly weakens this conclusion. In principle, the 60-sec reminder training in the particular situation after the switch mode could be sufficient to generate the preferences anew, despite the fact that without the preceding sw mode training it is not. We consider this interpretation unlikely and, instead, favor the view that recall of the memorized classical association is dependent not only on the sensory but also the behavioral context. In other words, an association might be easier to recall in the behavioral state in which it was acquired than in a different behavioral situation. The asymmetry in the transfer results between sw and fs modes deserves special attention. Obviously, although both sw and fs mode take place at the torque meter in the same arena and involve operant behavior, they are entirely different. Whereas in fs mode the choice of flight direction and between the two temperatures depends on the ability to fly straight and, above that, on a sequence of discrete, well-timed orienting maneuvers (i.e., torque spikes), in sw mode it is the actual value of the fly's yaw torque that controls this choice. Moreover, whereas in fs mode the fly receives instantaneous feedback on the effect its behavior has on its stimulus situation, in sw mode it can only get this feedback at the point where the experimenter decides to invert the sign of the torque trace (i.e., defines the yaw torque as zero). 
Evidently, fs mode is a lot less artificial than sw mode.

It is thus easily appreciated that the CS–US association formed in classical pattern learning can be expressed in the fs mode test without reminder training (Wolf et al. 1998). Judging from the transfer experiments (Fig. 7), one would predict this to be more difficult when the test after classical conditioning was in sw mode. In fact, we have independent evidence that the fly is reluctant to reduce its yaw torque range if not forced (e.g., by life-threatening heat) to do so (B. Brembs and M. Heisenberg, in prep.). Wolf and Heisenberg (1991) have shown operant behavior to flexibly and very quickly adjust the fly's stimulus situation according to its desired state. Reducing its behavioral options more permanently in anticipation of reinforcement may be an animal's last resort.

Rescorla (1994) suggested that the behavior of the animal might compete with the sensory signals in the animal's search for a predictor of the reinforcer. Unsuccessfully searching for temporal contingencies between motor output and the reinforcer could reduce the efficiency of the CS–US association in classical conditioning. Conversely, successful behavioral control of the CS and the reinforcer may increase the efficiency of the acquisition process.

We would like to propose that two basic types of operant conditioning, pure and facilitating, encompass all known examples of operant conditioning. The first one is pure operant conditioning, in which only an association between a behavior and the reinforcer (B–US) is formed. This can be described as an after-effect of operant behavior. It has been well documented before (see Introduction and, for Drosophila yaw torque motor learning, Wolf and Heisenberg 1991) and probably occurs abundantly in postural adaptation and other basic tuning processes without being much noticed.

The second type is facilitating operant conditioning. We entertain the view that as soon as the organism is enabled to form associations between other sensory stimuli and the reinforcer, operant behavior facilitates the formation of such CS–US associations. In the present study, for the first time the facilitating effect of operant behavior during acquisition of a CS–US relationship has been clearly demonstrated. Most laboratory experiments of operant conditioning such as the Skinner box or the Morris water maze consist of a multitude of sensory and motor processes in a complex sequence. Once segregated into elementary steps, the conditioning events involved would probably all be either classical, facilitating operant, or pure operant.

MATERIALS AND METHODS

Flies

The animals are kept on standard corn meal/molasses medium (for recipe, see Guo et al. 1996) at 25°C and 60% humidity with a 16-hr light/8-hr dark regime. Female flies (24–48 hr old) are briefly immobilized by cold-anesthesia and glued (Loctite UV glass glue) with head and thorax to a triangle-shaped copper hook (0.05 mm diameter) the day before the experiment. The animals are then kept individually in small vials and fed a sucrose solution until the experiment.

Apparatus

The core device of the set-up is the torque meter. Originally devised by Götz (1964) and repeatedly improved by Heisenberg and Wolf (1984), it measures a fly's yaw torque, that is, the torque around its vertical body axis. The fly, glued to the hook as described above, is attached to the torque meter via a clamp to accomplish stationary flight in the center of a cylindrical panorama (arena, 58 mm diameter), homogeneously illuminated from behind (Fig. 1). For blue and green illumination of the arena, the light is passed through monochromatic broad-band Kodak Wratten gelatin filters (nos. 47 and 99, respectively). Filters can be exchanged by a fast magnet within 0.1 sec.

The angular position of an arbitrarily chosen point of reference on the arena wall delineates a relative flight direction of 0-360°. Flight direction (arena position) is recorded continuously via a circular potentiometer and stored in the computer memory together with yaw torque (sampling frequency 20 Hz) for later analysis. The reinforcer is a light beam (4 mm diameter at the position of the fly), generated by a 6 V, 15 W Zeiss microscope lamp, filtered by an infrared filter (Schott RG780, 3 mm thick), and focused from above on the fly. The strength of the reinforcer is determined empirically by adjusting the voltage to attain maximum learning. In all experiments the heat is life-threatening for the fly: More than 30 sec of continuous irradiation is fatal. Heat at the position of the fly is switched on and off by a computer-controlled shutter intercepting the beam (Fig. 1).

Classical Conditioning (Stationary or Rotating Patterns)

Four black, T-shaped patterns of alternating orientation (i.e., two upright and two inverted) are evenly spaced on the arena wall (pattern width ϑ = 40°, height θ = 40°, width of bars = 14°). For stationary pattern presentation, the animals are trained with patterns at 0°, 90°, 180°, and 270°. Every 3 sec the arena is quickly rotated by 90°, thus bringing the alternative pattern orientation into the frontal position. For rotating pattern presentation, the panorama is rotated in open loop (alternatingly 720° clockwise and 720° counterclockwise) at an angular velocity of 30°/sec. Reinforcement (input voltage 4.5 V) is made to be contiguous with the appearance of one of the two pattern orientations in the frontal quadrant of the fly's visual field.
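The rotating open-loop presentation can be sketched as a simple schedule. This is an illustration under stated assumptions, not the original control program: the arena is assumed to start at 0° with a punished pattern in front, punished patterns are assumed to sit at 0° and 180°, and the function names are hypothetical.

```python
def arena_angle(t_sec, speed=30.0, sweep=720.0):
    """Arena angle (deg) at time t for alternating 720-degree sweeps.

    The arena turns one way for sweep/speed seconds, then back for the same
    time, and so on; angle 0 at t = 0 is an assumed starting condition.
    """
    half = sweep / speed                   # duration of one sweep (24 s here)
    phase = t_sec % (2.0 * half)
    if phase < half:
        travelled = speed * phase
    else:
        travelled = sweep - speed * (phase - half)
    return travelled % 360.0


def heat_on_open_loop(angle_deg, punished_centres=(0.0, 180.0)):
    """True while a punished pattern lies in the frontal 90-degree quadrant."""
    for centre in punished_centres:
        # Signed angular distance to the pattern centre, in [-180, 180).
        diff = (angle_deg - centre + 180.0) % 360.0 - 180.0
        if -45.0 <= diff < 45.0:
            return True
    return False
```

With four evenly spaced patterns of alternating orientation, such a schedule heats the fly for half of the training time regardless of its behavior.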

Switch (sw) Mode

The fly is heated (input voltage 6.0 V) whenever the fly's yaw torque is in one half of its range (approximately corresponding to either left or right turns; for a justification of this assumption, see Heisenberg and Wolf 1993), and heat is switched off when the torque passes into the other half (henceforth yaw torque sign inversion). During yaw torque sign inversion not only temperature but also a visual cue is exchanged. Visual cues can be either colors (blue/green) or pattern orientations (upright/inverted T in front). For color as visual cue, the panorama consists of 20 evenly spaced stripes (pattern wavelength λ = 18°) and the illumination of the arena is changed from green to blue or vice versa. For pattern orientation as visual cue, one of the pattern orientations is presented stationarily in front of the fly, the other at 90° and 270° (as described in the classical set-up). Whenever the range of the fly's yaw torque passes into the other half, the arena is turned by 90° to bring the other pattern orientation in front. For technical reasons, a hysteresis is programmed into the switching procedure: Whereas pattern orientation requires a ±5.9 × 10⁻¹⁰ Nm hysteresis during yaw torque sign inversion, a ±2.0 × 10⁻¹⁰ Nm hysteresis is sufficient for color as visual cue.
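The switching rule with hysteresis can be sketched as a two-state machine. This is a minimal illustration that assumes the hysteresis acts as a symmetric dead band around zero torque; the function name, the starting state, and the choice of the punished side are hypothetical.

```python
def switch_mode_states(torques, hysteresis, heated_side=+1, start_side=-1):
    """Return, for each torque sample, whether heat is on.

    torques      -- yaw torque samples (same unit as hysteresis, e.g. 1e-10 Nm)
    hysteresis   -- half-width of the assumed dead band around zero torque
    heated_side  -- +1 if positive torque is punished, -1 if negative
    start_side   -- side the fly is assumed to be on before the first sample
    """
    side = start_side
    heat = []
    for t in torques:
        # Switch only once the torque has left the dead band on the far side.
        if side < 0 and t > hysteresis:
            side = +1
        elif side > 0 and t < -hysteresis:
            side = -1
        heat.append(side == heated_side)
    return heat


# Torque in units of 1e-10 Nm, with the 5.9 hysteresis quoted for patterns.
states = switch_mode_states([0, 3, 7, 2, -3, -7, -2, 3], hysteresis=5.9)
```

In this sketch, small torque fluctuations around zero do not toggle heat and visual cue; only a clear excursion into the other half of the range does.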

Flight Simulator (fs) Mode

Closing the feedback loop to make the rotational speed of the arena proportional to the fly's yaw torque (coupling factor k = −11°/(sec · 10⁻¹⁰ Nm)) enables the fly to stabilize the rotational movements of the panorama and to control its angular orientation (flight direction). For color as visual cue (see Wolf and Heisenberg 1997) the arena is a striped drum (λ = 18°). A computer program divides the 360° of the arena into four virtual 90° quadrants. The color of the illumination of the whole arena is changed whenever one of the virtual quadrant borders passes a point in front of the fly. If pattern orientation is used as visual cue (Wolf and Heisenberg 1991), alternating upright and inverted T-shaped patterns are placed in the centers of the quadrants as described in the classical set-up above. Heat reinforcement (input voltage 6.0 V) is made contiguous either with the appearance of one of the pattern orientations in the frontal visual field or with either green or blue illumination of the arena.
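The closed loop can be sketched as one update per torque sample. This is an illustration only: it assumes torque is expressed in units of 10⁻¹⁰ Nm, that patterns sit at 0°, 90°, 180°, and 270° so that quadrant borders lie at 45°, 135°, 225°, and 315°, and that quadrants 0 and 2 are the punished ones. All names are hypothetical.

```python
SAMPLE_RATE_HZ = 20.0   # sampling frequency given in the Apparatus section
K = -11.0               # coupling factor in deg/sec per 1e-10 Nm


def step_arena(position_deg, torque, dt=1.0 / SAMPLE_RATE_HZ, k=K):
    """Advance the arena position by one sample; torque is in units of 1e-10 Nm."""
    return (position_deg + k * torque * dt) % 360.0


def quadrant(position_deg):
    """Index (0-3) of the 90-degree quadrant in front of the fly.

    Assumes quadrant centres at 0, 90, 180 and 270 degrees.
    """
    return int(((position_deg + 45.0) % 360.0) // 90.0)


def heat_on(position_deg, punished_quadrants=(0, 2)):
    """True when a punished quadrant (an assumed choice) faces the fly."""
    return quadrant(position_deg) in punished_quadrants
```

Calling `step_arena` once per sample and then `heat_on` on the new position reproduces the contingency described above: the fly's torque moves the panorama, and the panorama position decides whether the shutter is open.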

Analysis of Data

Yaw Torque Evaluation

Yaw torque is the only direct behavioral variable that is recorded in our set-up. Flies use predominantly “body saccades” to accomplish rapid changes in flight direction (Heisenberg and Wolf 1979, 1984, 1993; Mayer et al. 1988). The torque meter reveals these body saccades as sudden bursts of torque (torque spikes). The amplitudes of the torque baseline and torque spikes show considerable inter- and intraindividual variation (Heisenberg and Wolf 1984). In this study, we use the amplitude and the dynamics of spikes to distinguish them from the baseline in an automated spike detector (see Fig. 8 and the following explanation). Detection thresholds are computed for every 600 data points (i.e., 30 sec of flight). Maxima and minima in the torque traces of these periods are extracted. Their frequency distribution has two peaks delineating the interval inside which the torque baseline is assumed to lie (Fig. 8a). Once a data point exceeds this threshold, the following points must follow a simple set of rules for the detector to count a spike. An array of data points containing two typical spikes is depicted in Figure 8b.

A continuous array of 2 < n < 17 data points (corresponding to 850 msec) of equal sign, the first of which exceeds the torque baseline, is considered a spike if it fulfills the following empirically derived criteria (see Fig. 8b):

Tmax > |1.4 τT + [1.22 · (data resolution)]/τT|, where Tmax denotes the largest absolute value in the array and τT the corresponding one of the two thresholds delineating the torque baseline, that is, the threshold on the side of the array's sign. Data resolution is 12 bit, that is, 4096 levels.

|TL| < |0.2 Tmax| or τ1 < TL < τ2, where TL denotes the last of the n data points and τ1 and τ2 denote the two thresholds with τ1 < 0 < τ2.

The fairly large time window of 850 msec was chosen to also detect the occasional double spike or spikes that are immediately followed by optomotor “waggle”. For analyzing spiking behavior, the following parameters are extracted: spike amplitude, frequency, polarity, duration, latency, and interspike interval (see Fig. 8b).
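The two spike criteria can be sketched as a single test on a candidate array. This is a minimal reading of the rules stated above, not the original detector: torque values are assumed to be raw A/D units, the baseline thresholds are taken as given (their estimation from the frequency distribution of extrema is not shown), and the function name is hypothetical.

```python
def is_spike(run, tau1, tau2, resolution=4096):
    """Apply the two stated criteria to a run of equal-sign torque samples.

    run        -- consecutive samples of equal sign, in raw A/D units
    tau1, tau2 -- lower (< 0) and upper (> 0) baseline thresholds
    """
    n = len(run)
    if not 2 < n < 17:
        return False
    positive = run[0] > 0
    if any((x > 0) != positive for x in run):
        return False                      # the run must not change sign
    if tau1 <= run[0] <= tau2:
        return False                      # first point must leave the baseline
    tau_t = tau2 if positive else tau1    # threshold on the side of the run
    t_max = max(abs(x) for x in run)
    # Criterion 1: the peak must clearly exceed the baseline threshold.
    if not t_max > abs(1.4 * tau_t + 1.22 * resolution / tau_t):
        return False
    # Criterion 2: the run must end near zero or back inside the baseline.
    t_last = run[-1]
    return abs(t_last) < 0.2 * t_max or tau1 < t_last < tau2
```

For thresholds of ±200 units, for example, the first criterion requires a peak above roughly 305 units, so small excursions just beyond the baseline are not counted as spikes.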

During classical training, the lack of contingency between behavioral output and sensory input leads to drifting of the torque baseline over most of the torque range of the fly. Therefore, no spike detection is possible in these phases. For this reason, all comparative studies of spiking behavior in classical and operant conditioning were restricted to the (closed-loop) test periods.

Arena Position Evaluation

The pattern preference of individual flies is calculated as the performance index: PI = (ta − tb)/(ta + tb). During training, tb indicates the time the fly was exposed to the reinforcer and ta the time without reinforcement. During tests, ta and tb refer to the times when the fly chose the formerly unpunished or punished situation, respectively.
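As a small worked example, the performance index can be computed from per-sample flags of a test or training period. This is a sketch: the 0.05-sec sample interval follows from the 20 Hz sampling frequency, whereas the function names and the boolean input format are assumptions.

```python
def performance_index(t_a: float, t_b: float) -> float:
    """PI = (t_a - t_b) / (t_a + t_b); +1 means the heat was avoided throughout."""
    total = t_a + t_b
    if total == 0:
        raise ValueError("no time recorded")
    return (t_a - t_b) / total


def pi_from_samples(in_punished, dt=0.05):
    """PI for one period from per-sample flags (True = punished situation)."""
    n_b = sum(1 for flag in in_punished if flag)
    n_a = len(in_punished) - n_b
    return performance_index(n_a * dt, n_b * dt)
```

A fly that spends 90 sec of a 2-min period in the unpunished situation and 30 sec in the punished one thus scores PI = 0.5, and a fly without preference scores PI = 0.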

The fly's ability to stay on target, that is, to keep one pattern directly in front is assessed as the fixation index: FI = (f1 − f2)/(f1 + f2), with f1 referring to the time the fly kept the patterns in the frontal octant of its visual field, compared to the time the quadrant borders were in this position (f2).

As a quantitative measure for the subjective impression of sudden arena rotations accounted for by spikes, the sum of angular displacements during spikes is compared to the sum of interspike displacement for each 2-min period in a rotation index: RI = (rs − ri)/(rs + ri), where rs denotes the sum of angular displacement during the spikes and ri the sum of arena displacements between two spikes.
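The fixation and rotation indices share the same normalized form and can be sketched together. This is an illustration under assumptions: patterns are taken to sit at 0°, 90°, 180°, and 270°, the frontal octant is taken as ±22.5° around the front, and spikes are supplied as per-step flags from a separate detector. All names are hypothetical.

```python
def contrast_index(x: float, y: float) -> float:
    """Shared form (x - y) / (x + y) of the fixation and rotation indices."""
    if x + y == 0:
        raise ValueError("both terms are zero")
    return (x - y) / (x + y)


def fixation_index(positions_deg):
    """FI from arena positions, assuming patterns at 0, 90, 180, 270 degrees.

    f1 counts samples with a pattern within 22.5 degrees of the front,
    f2 counts samples with a quadrant border (45, 135, ...) in that octant.
    """
    f1 = sum(1 for p in positions_deg if (p + 22.5) % 90.0 < 45.0)
    f2 = len(positions_deg) - f1
    return contrast_index(f1, f2)


def rotation_index(positions_deg, spike_flags):
    """RI from successive arena positions and per-step spike flags.

    spike_flags[i] marks whether the step from sample i to i + 1 fell
    within a spike; it therefore has one entry fewer than positions_deg.
    """
    r_s = r_i = 0.0
    for i in range(1, len(positions_deg)):
        # Shortest angular distance between successive positions.
        step = abs((positions_deg[i] - positions_deg[i - 1] + 180.0) % 360.0 - 180.0)
        if spike_flags[i - 1]:
            r_s += step
        else:
            r_i += step
    return contrast_index(r_s, r_i)
```

Both indices range from −1 to +1; an RI close to +1 would indicate that nearly all arena displacement occurs during spikes.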

Statistics

Between-group analyses were performed with repeated-measures ANOVAs whenever more than two periods of 2 min were compared at a time. Where necessary, the mean value of the two preference tests was subtracted to homogenize data between groups. This procedure transforms the data to normality so that a parametric ANOVA is justified. Between-group comparisons of single 2-min periods were carried out with the Mann–Whitney U-test. Wilcoxon matched-pairs tests were used to test single scores against zero. In both latter cases neither transformation nor preference subtraction was carried out.
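For orientation, the statistic underlying the Mann–Whitney U-test can be sketched in a few lines. This shows only how U is obtained from two groups of scores; it does not compute a P value, for which tables or a statistics package would be needed, and it is not the software used for the analyses reported here. The function name and the example scores are hypothetical.

```python
def mann_whitney_u(a, b):
    """U statistics for two independent samples (ties count one half).

    u_a counts the pairs in which a value from a exceeds a value from b;
    u_a + u_b always equals len(a) * len(b).
    """
    u_a = 0.0
    for x in a:
        for y in b:
            if x > y:
                u_a += 1.0
            elif x == y:
                u_a += 0.5
    u_b = len(a) * len(b) - u_a
    return u_a, u_b


# Hypothetical PIs of two small groups in a single 2-min test period.
u_values = mann_whitney_u([0.1, 0.2, 0.3], [0.4, 0.5, 0.6])
```

The smaller of the two U values is the one usually compared against the critical value for the given group sizes.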

Acknowledgments

We thank Reinhard Wolf for many invaluable discussions and his unselfish assistance in designing the numerous evaluation algorithms and solving technical difficulties. Without him, this study would not have been possible. We are also indebted to Bertram Gerber whose rigorous critique significantly improved the clarity of the manuscript. The helpful comments of two anonymous referees are gratefully acknowledged as well. The work was supported by a grant (He 986/10) of the Deutsche Forschungsgemeinschaft.

The publication costs of this article were defrayed in part by payment of page charges. This article must therefore be hereby marked “advertisement” in accordance with 18 USC section 1734 solely to indicate this fact.

REFERENCES

- Balleine B. Asymmetrical interactions between thirst and hunger in Pavlovian-instrumental transfer. Q J Exp Psychol B. 1994;47:211–231.

- Brembs B. “Classical and operant conditioning in Drosophila at the flight simulator.” Diploma Thesis. Germany: Julius-Maximilians-Universität Würzburg; 1996.

- Carew TJ, Sahley CL. Invertebrate learning and memory: From behavior to molecules. Annu Rev Neurosci. 1986;9:435–487. doi: 10.1146/annurev.ne.09.030186.002251.

- Denniston JC, Miller RR, Matute H. Biological significance as determinant of cue competition. Psych Sci. 1996;7:325–331.

- Dill M, Wolf R, Heisenberg M. Visual pattern memory without shape recognition. Phil Trans R Soc Lond B. 1995;349:143–152. doi: 10.1098/rstb.1995.0100.

- Dill M, Wolf R, Heisenberg M. Visual pattern recognition in Drosophila involves retinotopic matching. Nature. 1993;365:751–753. doi: 10.1038/365751a0.

- Dill M, Wolf R, Heisenberg M. Behavioral analysis of Drosophila landmark learning in the flight simulator. Learn & Mem. 1995;2:152–160. doi: 10.1101/lm.2.3-4.152.

- Donahoe JW. Selection networks: Simulation of plasticity through reinforcement learning. In: Packard Dorsel V, Donahoe JW, editors. Neural-networks models of cognition. Amsterdam, The Netherlands: Elsevier Science B. V.; 1997. pp. 336–357.

- Donahoe JW, Burgos JE, Palmer DC. A selectionist approach to reinforcement. J Exp Anal Behav. 1993;60:17–40. doi: 10.1901/jeab.1993.60-17.

- Donahoe JW, Palmer DC, Burgos JE. The S-R issue: Its status in behavior analysis and in Donahoe and Palmer's “Learning and complex behavior” (with commentaries and reply). J Exp Anal Behav. 1997;67:193–273. doi: 10.1901/jeab.1997.67-193.

- Ernst R, Heisenberg M. The memory template in Drosophila pattern vision at the flight simulator. Vis Res. 1999;39:3920–3933. doi: 10.1016/s0042-6989(99)00114-5.

- Eyding D. “Lernen und Kurzzeitgedächtnis beim operanten Konditionieren auf visuelle Muster bei strukturellen und bei chemischen Lernmutanten von Drosophila melanogaster.” Diploma Thesis. Germany: Julius-Maximilians-Universität Würzburg; 1993.

- Fanselow MS. Pavlovian conditioning, negative feedback, and blocking: Mechanisms that regulate association formation. Neuron. 1998;20:625–627. doi: 10.1016/s0896-6273(00)81002-8.

- Glanzman DL. The cellular basis of classical conditioning in Aplysia californica—it's less simple than you think. Trends Neurosci. 1995;18:30–36. doi: 10.1016/0166-2236(95)93947-v.

- Gormezano I, Tait RW. The Pavlovian analysis of instrumental conditioning. Pavlov J Biol Sci. 1976;11:37–55. doi: 10.1007/BF03000536.

- Götz KG. Optomotorische Untersuchung des visuellen Systems einiger Augenmutanten der Fruchtfliege Drosophila. Kybernetik. 1964;2:77–92. doi: 10.1007/BF00288561.

- Guo A, Götz KG. Association of visual objects and olfactory cues in Drosophila. Learn & Mem. 1997;4:192–204. doi: 10.1101/lm.4.2.192.

- Guo A, Liu L, Xia S-Z, Feng C-H, Wolf R, Heisenberg M. Conditioned visual flight orientation in Drosophila: Dependence on age, practice, and diet. Learn & Mem. 1996;3:49–59. doi: 10.1101/lm.3.1.49.

- Guthrie ER. The psychology of learning. New York, NY: Harper; 1952.

- Hammer M. An identified neuron mediates the unconditioned stimulus in associative learning in honeybees. Nature. 1993;366:59–63. doi: 10.1038/366059a0.

- Hawkins RD, Abrams TW, Carew TJ, Kandel ER. A cellular mechanism of classical conditioning in Aplysia: Activity-dependent amplification of presynaptic facilitation. Science. 1983;219:400–405. doi: 10.1126/science.6294833.

- Hebb DO. The distinction between “classical” and “instrumental”. Can J Psychol. 1956;10:165–166. doi: 10.1037/h0083677.

- Heisenberg M. Voluntariness (Willkürfähigkeit) and the general organization of behavior. Life Sci Res Rep. 1994;55:147–156.

- Heisenberg M. Pattern recognition in insects. Curr Opin Neurobiol. 1995;5:475–481. doi: 10.1016/0959-4388(95)80008-5.

- Heisenberg M, Wolf R. On the fine structure of yaw torque in visual flight orientation of Drosophila melanogaster. J Comp Physiol. 1979;130:113–130.

- Heisenberg M, Wolf R. Vision in Drosophila. Genetics of microbehavior. New York, NY: Springer; 1984.

- Heisenberg M, Wolf R. Reafferent control of optomotor yaw torque in Drosophila melanogaster. J Comp Physiol A. 1988;163:373–388.

- Heisenberg M, Wolf R. The sensory-motor link in motion-dependent flight control of flies. Rev Oculomot Res. 1993;5:265–283.

- Hellige JB, Grant DA. Eyelid conditioning performance when the mode of reinforcement is changed from classical to instrumental avoidance and vice versa. J Exp Psychol. 1974;102:710–719. doi: 10.1037/h0036341.

- Hoffmann J. Vorhersage und Erkenntnis: Die Funktion von Antizipationen in der menschlichen Verhaltenssteuerung und Wahrnehmung. Göttingen, Germany: Hogrefe; 1993.

- Horridge GA. Learning of leg position by headless insects. Nature. 1962;193:697–698. doi: 10.1038/193697a0.

- Hoyle G. Mechanisms of simple motor learning. Trends Neurosci. 1979;2:153–155.

- Kandel ER, Abrams T, Bernier L, Carew TJ, Hawkins RD, Schwartz JH. Classical conditioning and sensitization share aspects of the same molecular cascade in Aplysia. Cold Spring Harb Symp Quant Biol. 1983;48:821–830. doi: 10.1101/sqb.1983.048.01.085.

- Kim JJ, Krupa DJ, Thompson RF. Inhibitory cerebello-olivary projections and blocking effect in classical conditioning. Science. 1998;279:570–573. doi: 10.1126/science.279.5350.570.

- Konorski J, Miller S. On two types of conditioned reflex. J Gen Psychol. 1937a;16:264–272.

- Konorski J, Miller S. Further remarks on two types of conditioned reflex. J Gen Psychol. 1937b;17:405–407.

- Liu L, Ernst R, Wolf R, Heisenberg M. Context generalization in Drosophila requires the mushroom bodies. Nature. 1999;400:753–756. doi: 10.1038/23456.

- Mayer M, Vogtmann K, Bausenwein B, Wolf R, Heisenberg M. Flight control during 'free yaw turns' in Drosophila melanogaster. J Comp Physiol A. 1988;163:389–399.

- Menzel R, Müller U. Learning and memory in honeybees: From behavior to neural substrates. Annu Rev Neurosci. 1996;19:379–404. doi: 10.1146/annurev.ne.19.030196.002115.

- Nargeot R, Baxter DA, Byrne JH. Contingent-dependent enhancement of rhythmic motor patterns: an in vitro analog of operant conditioning. J Neurosci. 1997;17:8093–8105. doi: 10.1523/JNEUROSCI.17-21-08093.1997.

- Nargeot R, Baxter DA, Byrne JH. In vitro analog of operant conditioning in Aplysia. I. Contingent reinforcement modifies the functional dynamics of an identified neuron. J Neurosci. 1999a;19:2247–2260. doi: 10.1523/JNEUROSCI.19-06-02247.1999.

- Nargeot R, Baxter DA, Byrne JH. In vitro analog of operant conditioning in Aplysia. II. Modifications of the functional dynamics of an identified neuron contribute to motor pattern selection. J Neurosci. 1999b;19:2261–2272. doi: 10.1523/JNEUROSCI.19-06-02261.1999.

- Pavlov IP. Conditioned reflexes. Oxford, UK: Oxford University Press; 1927.

- Pearce JM. A model of stimulus generalization for Pavlovian conditioning. Psych Rev. 1987;94:61–73.

- Pearce JM. Similarity and discrimination: A selective review and a connectionist model. Psych Rev. 1994;101:587–607. doi: 10.1037/0033-295x.101.4.587.

- Rescorla RA. Control of instrumental performance by Pavlovian and instrumental stimuli. J Exp Psychol Anim Behav Process. 1994;20:44–50.

- Rescorla RA, Solomon RL. Two-process learning theory: Relationships between Pavlovian conditioning and instrumental learning. Psychol Rev. 1967;74:151–182. doi: 10.1037/h0024475.

- Rescorla RA, Wagner AR. A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and nonreinforcement. In: Black A, Prokasy WF, editors. Classical conditioning II: Current research and theory. New York, NY: Appleton-Century-Crofts; 1972. pp. 64–99.

- Sheffield FD. Relation of classical conditioning and instrumental learning. In: Prokasy WF, editor. Classical conditioning. New York, NY: Appleton-Century-Crofts; 1965. pp. 302–322.

- Skinner BF. Two types of conditioned reflex and a pseudo type. J Gen Psychol. 1935;12:66–77.

- Skinner BF. Two types of conditioned reflex: A reply to Konorski and Miller. J Gen Psychol. 1937;16:272–279.

- Spencer GE, Syed NI, Lukowiak K. Neural changes after operant conditioning of the aerial respiratory behavior in Lymnaea stagnalis. J Neurosci. 1999;19:1836–1843. doi: 10.1523/JNEUROSCI.19-05-01836.1999.

- Sutton RS, Barto AG. Time-derivative models of Pavlovian reinforcement. In: Gabriel M, Moore J, editors. Learning and computational neuroscience: Foundations of adaptive networks. Boston, MA: MIT Press; 1990. pp. 497–537.

- Trapold MA, Overmier JB. The second learning process in instrumental conditioning. In: Black AH, Prokasy WF, editors. Classical conditioning. New York, NY: Appleton-Century-Crofts; 1972.

- Trapold MA, Winokur S. Transfer from classical conditioning and extinction to acquisition, extinction, and stimulus generalization of a positively reinforced instrumental response. J Exp Psychol. 1967;73:517–525. doi: 10.1037/h0024374.

- Trapold MA, Lawton GW, Dick RA, Gross DM. Transfer of training from differential classical to differential instrumental conditioning. J Exp Psychol. 1968;76:568–573. doi: 10.1037/h0025709.

- Tully T. Of mutations affecting learning and memory in Drosophila–the missing link between gene product and behavior. Trends Neurosci. 1991;14:163–164. doi: 10.1016/0166-2236(91)90096-d.

- Tully T, Boynton S, Brandes C, Dura JM, Mihalek R, Preat T, Villella A. Genetic dissection of memory formation in Drosophila. Cold Spring Harb Symp Quant Biol. 1990;55:203–211. doi: 10.1101/sqb.1990.055.01.022.

- Tully T, Preat T, Boynton SC, Del-Vecchio M. Genetic dissection of consolidated memory in Drosophila. Cell. 1994;79:35–47. doi: 10.1016/0092-8674(94)90398-0.

- Wang X, Liu L, Xia SZ, Feng CH, Guo A. Relationship between visual learning/memory ability and brain cAMP level in Drosophila. Sci China C Life Sci. 1998;41:503–511. doi: 10.1007/BF02882888.

- Weidtmann N. “Visuelle Flugsteuerung und Verhaltensplastizität bei Zentralkomplex-Mutanten von Drosophila melanogaster.” Diploma Thesis. Germany: Julius-Maximilians-Universität Würzburg; 1993.

- Wolf R, Heisenberg M. Basic organization of operant behavior as revealed in Drosophila flight orientation. J Comp Physiol A. 1991;169:699–705. doi: 10.1007/BF00194898.

- Wolf R, Heisenberg M. Visual space from visual motion: Turn integration in tethered flying Drosophila. Learn & Mem. 1997;4:318–327. doi: 10.1101/lm.4.4.318.

- Wolf R, Wittig T, Liu L, Wustmann G, Eyding D, Heisenberg M. Drosophila mushroom bodies are dispensable for visual, tactile, and motor learning. Learn & Mem. 1998;5:166–178.

- Wolpaw JR. The complex structure of a simple memory. Trends Neurosci. 1997;20:588–594. doi: 10.1016/s0166-2236(97)01133-8.

- Xia SZ, Feng CH, Guo AK. Temporary amnesia induced by cold anesthesia and hypoxia in Drosophila. Physiol Behav. 1999;65:617–623. doi: 10.1016/s0031-9384(98)00191-7.

- Xia SZ, Liu L, Feng CH, Guo AK. Memory consolidation in Drosophila operant visual learning. Learn & Mem. 1997a;4:205–218. doi: 10.1101/lm.4.2.205.

- Xia SZ, Liu L, Feng CH, Guo AK. Nutritional effects on operant visual learning in Drosophila melanogaster. Physiol Behav. 1997b;62:263–271. doi: 10.1016/s0031-9384(97)00113-3.