Abstract

Background:

Over the years, surgical training has been changing, and long-standing traditions are being challenged by legal and ethical concerns for patient safety, work-hour restrictions, and the cost of operating room time. Surgical simulation and skill training offer an opportunity to teach and practice advanced techniques before attempting them on patients. Simulation training can be as straightforward as using real instruments and video equipment to manipulate simulated “tissue” in a box trainer. More advanced virtual reality (VR) simulators are now available and ready for widespread use. Early systems have demonstrated their effectiveness and discriminative ability. Newer systems enable the development of comprehensive curricula and full procedural simulations.

Methods:

A PubMed review of the literature was performed using the MeSH terms “Virtual Reality”, “Augmented Reality”, “Simulation”, “Training”, and “Neurosurgery”. Relevant articles were retrieved and reviewed for the history and current status of VR simulation in neurosurgery.

Results:

Surgical organizations are calling for methods to ensure the maintenance of skills, advance surgical training, and credential surgeons as technically competent. Over the last decade, the published literature on VR simulation in neurosurgical training has evolved from data visualization, including stereoscopic evaluation, to more complex augmented reality models. With the rapid growth in computational power, fully immersive VR models are now available for neurosurgical training. Simulators for ventriculostomy catheter insertion and for endoscopic and endovascular procedures are used in neurosurgical residency training centers across the world. Recent studies have shown a correlation between proficiency on these simulators and levels of real-world experience.

Conclusion:

Fully immersive technology is starting to be applied to the practice of neurosurgery. In the near future, detailed VR neurosurgical modules will become an essential part of neurosurgical training curricula.

Keywords: Haptics, simulation, training, virtual reality

INTRODUCTION

Learning through observation has been a cornerstone of surgical education in the United States for over a hundred years. This practice is increasingly being challenged by legal and ethical concerns for patient safety, 80-hour resident work-week restrictions, and the cost of operating room (OR) time. The emerging field of surgical simulation and virtual training offers an opportunity to teach and practice neurosurgical procedures outside the OR. More efficient and effective training methods have enormous potential to address patient safety, risk management, OR management, and work-hour requirements.[4] The current goal of simulator training is to help trainees acquire the skills needed to perform complex surgical procedures before performing them on patients. Virtual reality (VR) simulators in their current form have been shown to improve the OR performance of surgical residents in some fields, such as laparoscopic and endovascular surgery.[5,47] In this paper, we discuss the evolution of VR simulators for neurosurgical training, the different types of simulators, and current and future applications of this technology.

Definition

Many terms are used to describe virtual environments (VEs), including “artificial reality”, “cyberspace”, “VR”, “virtual worlds”, and “synthetic environment”. All these terms refer to an application that allows the participant to see and interact with distant, expensive, hazardous, or otherwise inaccessible three-dimensional (3D) environments. An important goal in developing these virtual systems is for the sensory and interactive user experience to approach a believable simulation of reality. A computer-generated 3D spatial VR environment can offer full immersion in a virtual world, augmentation (overlay) of the real world, or “through-the-window” (non-immersive) worlds. The technology for “seeing” is real-time, interactive 3D computer graphics, whereas the technology for “interacting” is still evolving and varied.[50]

Immersion and presence

These are two closely intertwined terms in VR. Immersion refers to the experience of being surrounded by a virtual world: the extent to which the user perceives one or more elements of the experience (e.g., tactile, spatial, or sensory) as part of a convincing reality. Presence best describes the user's interactions with the virtual world. The term “telepresence” is often used to describe the performance of a task or set of tasks in a remote, interconnected virtual world. There are two kinds of telepresence: real-time and delayed. In real-time telepresence, interactions are reflected in the movement of real-world objects; for example, moving a data glove simultaneously moves a robotic hand. In delayed telepresence, interactions are first recorded in a visual, virtual world and are transmitted across the network once the user is satisfied with the results.

Virtual reality technique

In order to utilize VR simulators for training, planning, and performing treatment and therapy, these computer-based models must create visualizations of data (usually anatomical) and model interactions with the visualized data. There are two types of data visualization: surface rendering and volume rendering. The latter is limited by a need for greater computational processing power.[2]
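To illustrate why volume rendering is the more computationally demanding option: rather than drawing a precomputed surface, it combines every sample along every viewing ray. The following is a minimal sketch of front-to-back emission-absorption compositing for a single ray, assuming a hypothetical transfer function (`ramp_transfer`) that maps a scalar sample to a gray level and an opacity; it is not taken from any of the cited systems.

```python
import numpy as np

def ramp_transfer(value):
    """Hypothetical transfer function: constant gray level, opacity rising with value."""
    opacity = float(np.clip(value / 1000.0, 0.0, 1.0))
    return 1.0, opacity

def composite_ray(samples, transfer=ramp_transfer, cutoff=0.99):
    """Front-to-back compositing of scalar samples along one viewing ray.

    `samples` are ordered from the eye into the volume. Returns the accumulated
    (intensity, opacity) for the pixel this ray belongs to.
    """
    intensity, alpha = 0.0, 0.0
    for value in samples:
        c, a = transfer(value)
        # Each sample contributes only through the light not yet absorbed in front of it.
        intensity += (1.0 - alpha) * a * c
        alpha += (1.0 - alpha) * a
        if alpha >= cutoff:  # early ray termination: the rest of the ray is hidden
            break
    return intensity, alpha

# A full image repeats this for every pixel's ray, which is where the cost comes from.
print(composite_ray([0.0, 500.0, 1000.0]))
```

Surface rendering, by contrast, extracts a polygonal boundary once and then only draws that geometry, which is far cheaper per frame.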

In terms of modeling interactions, physically-based modeling can be used to predict how objects behave (e.g., catheter simulation). This is partially accomplished by incorporating sound, touch, and/or other forces into the simulation. These additional interactions result in simulated behavior that more closely reflects real behavior. An important advantage of behavior simulation is that it allows prediction of therapeutic outcomes, in addition to intervention planning.
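One common way to realize physically based modeling is a mass-spring system. The sketch below is a generic illustration, not the method of any system cited here: it treats a catheter segment as a one-dimensional chain of point masses joined by springs, anchors one end, pulls the other with a constant force, and integrates the motion with semi-implicit Euler steps. All parameter values are arbitrary assumptions.

```python
import numpy as np

def simulate_chain(n=5, k=50.0, c=0.5, mass=0.01, rest=1.0,
                   dt=1e-3, steps=2000, pull=0.5):
    """Mass-spring chain along x: node 0 anchored, constant `pull` on the last node."""
    x = np.arange(n, dtype=float) * rest   # node positions, springs start at rest length
    v = np.zeros(n)
    for _ in range(steps):
        f = np.zeros(n)
        stretch = np.diff(x) - rest        # extension of each spring
        spring = k * stretch               # Hooke's law tension in each spring
        f[:-1] += spring                   # a stretched spring pulls its left node forward
        f[1:] -= spring                    # ...and its right node backward
        f -= c * v                         # simple velocity damping
        f[-1] += pull                      # external force on the free end
        v += dt * f / mass                 # semi-implicit Euler: velocity first
        v[0] = 0.0                         # anchored node does not move
        x += dt * v                        # then position with the new velocity
    return x

x = simulate_chain()
print(x[-1] - x[0])
```

At equilibrium every spring carries the applied force, so the chain should lengthen by (n - 1) * pull / k, which is what the damped simulation settles to.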

ROLE OF SIMULATION IN NEUROSURGICAL TRAINING

Neurosurgeons must frequently practice and refine their skills. Practice in a controlled environment gives the performer the opportunity to make mistakes without consequences; however, providing such practice opportunities presents several challenges. Surgical mistakes can have catastrophic consequences, and teaching during surgery lengthens operating times and increases the overall risk to the patient. Every patient deserves a competent physician every time. Additionally, learning new techniques requires one-on-one instruction, yet instructors, cases, and time are often limited.

The Accreditation Council for Graduate Medical Education (ACGME) has recognized the need for simulation scenarios as a way to circumvent these obstacles. Simulations will be part of the new system of graduate medical education. Such simulations will encompass procedural tasks, crisis management, and the introduction of learners to clinical situations. For surgical training, plastic, animal, and cadaveric models have been developed. However, they are all less than ideal. Plastic and cadaveric models do not have the same characteristics as live tissue, and the anatomy of animal models is different. The expense of animal and cadaveric models is also prohibitive.

VR training simulators provide a promising alternative. These simulators are analogous to flight simulators, on which trainee pilots log hours of experience before taking a real plane to the skies. Surgeons can practice difficult procedures under computer control without putting a patient at risk. In addition, surgeons can practice on these simulators at any time, immune from case-volume or location limitations. Moreover, VR provides a unique resource for education about anatomical structure. One of the main challenges in medical education is to provide a realistic sense of the inter-relation of anatomical structures in 3D space. With VR, the learner can repeatedly explore the structures of interest, take them apart, put them together, and view them from almost any 3D perspective.

VR simulation is mostly used for training by combining registered patient data with anatomical information from an atlas for case-by-case visualization of known structures.[58,67,73,79] It may be used for routine training or to focus on particularly difficult cases and new surgical techniques. Possible applications include endoscopy, complex data visualization, radiosurgery, augmented reality surgery, and robotic surgery.[6,24,34,63,66] The ultimate goals include training residents and planning and performing surgeries on simulators. A potential limitation of VR training simulators is the uncertain transfer of skills from the simulation to the actual patient. To realistically simulate an operation, the method of interaction should be the same as in the real case. Even when this ideal is not achievable, VR can still serve as an anatomy education system.

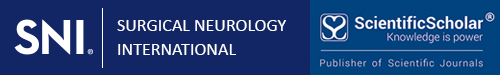

Another important factor in surgical training is the transfer of information between surgeons when evaluating a given set of data. Systems have been developed that pair 3D data manipulation with a large stereoscopic projection system, so that an instructor can manipulate the image data while sharing important information with a larger audience.[43] Such systems have been noted to significantly enhance the teaching of procedural strategies and neurosurgical anatomy. The virtual environments discussed in this paper are listed in Table 1; each system is identified by its trademarked name or by the author or group who developed it.

Table 1.

A list of the virtual environments discussed in this paper. Each system is identified by its trademarked name or by the author or group who developed it

APPLICATION OF VIRTUAL REALITY SIMULATION IN NEUROSURGERY

Planning: Complex data visualization

Neurosurgeons are increasingly interested in computer-based surgical planning systems, which allow them to visualize and quantify the three-dimensional information available in medical images. By giving the surgeon quick and intuitive access to this three-dimensional information, computer-based visualization and planning systems may lower the cost of care, increase confidence in the OR, and improve patient outcomes.[68] Neurosurgery is an inherently three-dimensional activity; it deals with complex, overlapping structures in the brain and spine that are not easily visualized. To formulate the most effective surgical plan, the surgeon must be able to visualize these structures and understand the consequences of a proposed surgical intervention, both for the intended surgical targets and for the surrounding viable tissue.

Props interface

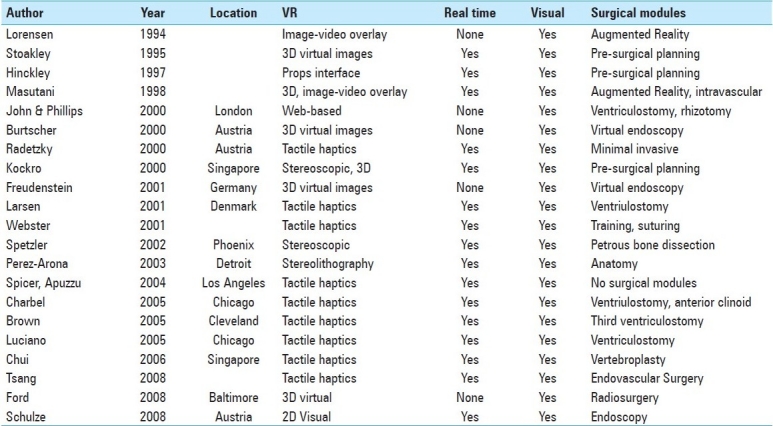

In preoperative planning, the main focus is to explore the patient data as fully as possible and to evaluate possible interventions against those data, rather than to reproduce the actual operation. This is accomplished by first creating 3D images from the patient's own diagnostic images, such as computed tomography (CT) scans and magnetic resonance images (MRI). A variety of interfaces then allow the surgeon to interact with these images. The interaction method need not be entirely realistic, and it generally is not. One such example is the University of Virginia “Props” interface[28,38] [Figure 1]. Note that this interface allows for interaction with a mannequin's head without any suggestion that the surgeon will ever interact with a patient's head in the same way. Another example is the computer-based neurosurgical planning system Netra, which incorporates a 3D user interface based on the two-handed physical manipulation of handheld tools. In one hand, the user holds a miniature physical head prop that controls the virtual head.[38] In the other hand, the user interacts with the head using a variety of tools, such as a cross-sectioning plane or stylus.

Figure 1.

A user selects a cutting plane through a mannequin head with the Props interface; the corresponding MRI cross-section is displayed as part of preoperative surgical planning.[38] Permission for use obtained from IOS Press BV

The virtual representation of the cutting-plane prop mirrors all six degrees of freedom of the physical tool: linear motion along, and rotation about, the x, y, and z axes. However, not every degree of freedom changes the resulting cross-section: rotation about the axis normal to the cutting plane and linear motion within the plane leave the cross-sectional data unchanged. In this regard, the cutting-plane prop acts a bit like a flashlight. The virtual plane is much larger than the physical cutting-plane prop, so when the user holds the input device to the side of the mannequin's head, the plane on the screen still intersects the virtual brain, even though the two input devices do not physically intersect.[28,39]
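The invariance of the cross-section under some prop motions can be checked with a little vector algebra. In this sketch, which is an illustration rather than the Props implementation, the prop's pose is a position plus a rotation matrix whose third column is taken as the plane normal (an assumption), and the cross-section is identified by the plane equation n · x = d. The helper names `axis_angle`, `plane_equation`, and `cutting_plane` are hypothetical. Spinning the prop about its normal or sliding it within the plane leaves (n, d), and therefore the displayed slice, unchanged; pushing it along the normal does not.

```python
import numpy as np

def axis_angle(axis, angle):
    """Rotation matrix for `angle` radians about `axis` (Rodrigues' formula)."""
    k = np.asarray(axis, dtype=float)
    k = k / np.linalg.norm(k)
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def plane_equation(point, normal):
    """Return (n, d) with unit normal n such that n . x = d for points on the plane."""
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    return n, float(n @ np.asarray(point, dtype=float))

def cutting_plane(position, R):
    """Plane of a prop at `position` with orientation R; local z-axis is the normal (assumed)."""
    return plane_equation(position, R[:, 2])

p = np.array([10.0, 20.0, 30.0])
R = axis_angle([1.0, 1.0, 0.0], 0.4)                              # arbitrary tilt of the prop
base = cutting_plane(p, R)
spun = cutting_plane(p, R @ axis_angle([0.0, 0.0, 1.0], 0.7))     # rotate about its own normal
slid = cutting_plane(p + 5.0 * R[:, 0], R)                        # slide within the plane
pushed = cutting_plane(p + 5.0 * R[:, 2], R)                      # move along the normal
print(base[1], spun[1], slid[1], pushed[1])
```

Only the three remaining motions (translation along the normal and the two tilts) select a different slice, which is why the prop behaves like a flashlight sweeping through the data.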

Worlds in miniature

Stoakley et al. introduced the Worlds in Miniature (WIM) metaphor, a user interface technique that augments an immersive head-tracked display with a hand-held miniature copy of the virtual environment.[37,77] This system offers a second, dynamic viewport onto the virtual environment in addition to the first-person perspective offered by the virtual reality system. Objects may be directly manipulated either through the immersive viewport or through the 3D viewport offered by the WIM. Moving an object in the miniature moves the corresponding life-size representation of that object, and vice versa.

Users interact with the WIM using props and a two-handed technique. The non-preferred hand holds a clipboard, while the preferred hand holds a ball instrumented with input buttons. By rotating the clipboard, the user can view objects and parts of the environment that may be obscured from the initial line of sight. The ball is used to manipulate objects: reaching into the WIM with the ball allows manipulation of objects at a distance, while reaching into the space within arm's reach allows manipulation at life-size scale.
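The correspondence between the miniature and the life-size world can be modeled as a similarity transform that follows the hand-held clipboard. The class below is a hypothetical sketch, not Stoakley et al.'s implementation: `scale` is the miniature-to-world size ratio, and `rotation` and `translation` stand for the tracked clipboard pose. Moving an object in the miniature is mapped back to the world with the inverse transform, which is what lets either representation drive the other.

```python
import numpy as np

class WorldInMiniature:
    """Hypothetical WIM mapping: mini = translation + scale * rotation @ world."""

    def __init__(self, scale, rotation, translation):
        self.s = float(scale)
        self.R = np.asarray(rotation, dtype=float)
        self.t = np.asarray(translation, dtype=float)

    def to_miniature(self, world_pt):
        """Where a life-size point appears in the hand-held miniature."""
        return self.t + self.s * self.R @ np.asarray(world_pt, dtype=float)

    def to_world(self, mini_pt):
        """Life-size position for a point placed in the miniature (inverse map)."""
        return self.R.T @ (np.asarray(mini_pt, dtype=float) - self.t) / self.s

# A 1:50 miniature held at an assumed clipboard position (meters).
wim = WorldInMiniature(0.02, np.eye(3), [0.3, 1.0, -0.4])
mini = wim.to_miniature([5.0, 0.0, 2.0])
# Dragging the miniature object 1 cm moves the life-size object 50 cm.
world_after = wim.to_world(mini + np.array([0.01, 0.0, 0.0]))
print(mini, world_after)
```

Because rotating the clipboard changes only `rotation` and `translation`, the user can turn the miniature to look behind occluding objects without moving anything in the life-size world.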

Planning for stereotactic surgery – StereoPlan

Radionics introduced StereoPlan, a three-dimensional software package for planning stereotactic frame-based functional neurosurgery.[9,45] This software localizes the entire image volume, supports multiple image sets simultaneously, and displays multiplanar reformatted data and three-dimensional views of the patient data. The system provides target coordinates for the standard stereotactic frames used to guide the surgical trajectory. Multiple measurement lines allow streamlined measurement of anatomical landmarks. The clinician can follow the entire trajectory, take a virtual path through the anatomy, and view all structures traversed at any given depth. This allows a more complete examination of the patient data, and multiple possible routes of intervention can be explored.
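The “virtual path” idea can be sketched as sampling a labeled (segmented) volume along the straight line from the entry point to the target. This is a hypothetical illustration, not StereoPlan's algorithm: `labels` is assumed to be an integer volume of structure IDs with isotropic voxels, coordinates are in voxel units, and nearest-neighbor lookup is used for simplicity.

```python
import numpy as np

def structures_along_path(labels, entry, target, step=1.0):
    """Return (depth, label) pairs sampled every `step` voxels from entry to target."""
    entry = np.asarray(entry, dtype=float)
    target = np.asarray(target, dtype=float)
    length = np.linalg.norm(target - entry)
    direction = (target - entry) / length
    path = []
    for depth in np.arange(0.0, length + 1e-9, step):
        # Nearest-neighbor lookup of the structure ID at this depth.
        voxel = np.rint(entry + depth * direction).astype(int)
        path.append((float(depth), int(labels[tuple(voxel)])))
    return path

# Hypothetical segmentation: 0 = background, 2 = cortex, 3 = deep structure.
vol = np.zeros((10, 10, 10), dtype=int)
vol[:, :, 5:] = 2
vol[:, :, 8:] = 3
path = structures_along_path(vol, (5, 5, 0), (5, 5, 9))
print(path)
```

Listing the label at each depth is the programmatic analogue of scrolling along the planned trajectory and noting every structure the probe would cross.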

The Virtual Workbench

The Virtual Workbench[64] is a VR workstation modeled on the binocular microscope, which is more familiar to medical workers than the immersion paradigm, but it is ‘hands-on’ in a way that a microscope is not. Using a cathode ray tube (CRT) monitor, a pair of stereoscopic CrystalEyes™ glasses, and a transparent mirror, the user perceives his or her hands and the volumetric 3D image of the medical data as co-located in real time. Typical tasks on the Virtual Workbench include rotating the display, specifying a cutting plane that exposes a particular cross-section of brain data, and specifying a point within a suspected tumor so that the system displays the connected region of similar data values to which that point appears to belong. To facilitate exploration of volume data sets, a general interface handles dynamic 3D medical data. It lets the user turn an object by reaching in and dragging it around, select an arbitrary cutting plane with the stylus, and crop the volume to a region of interest and zoom in on it.
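The request to display the “connected region of similar data values” around a chosen point is essentially seeded region growing. A minimal sketch, assuming a 3D NumPy intensity volume, 6-connectivity, and a fixed intensity tolerance around the seed value (all illustrative choices, not the Virtual Workbench's documented method):

```python
from collections import deque

import numpy as np

def grow_region(volume, seed, tolerance):
    """Boolean mask of voxels 6-connected to `seed` whose values lie within
    `tolerance` of the seed value (breadth-first flood fill)."""
    vol = np.asarray(volume, dtype=float)
    seed = tuple(int(s) for s in seed)
    ref = vol[seed]
    mask = np.zeros(vol.shape, dtype=bool)
    mask[seed] = True
    queue = deque([seed])
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    while queue:
        i, j, k = queue.popleft()
        for di, dj, dk in steps:
            n = (i + di, j + dj, k + dk)
            inside = all(0 <= n[a] < vol.shape[a] for a in range(3))
            # Bounds are checked first so mask[n] and vol[n] are always valid.
            if inside and not mask[n] and abs(vol[n] - ref) <= tolerance:
                mask[n] = True
                queue.append(n)
    return mask

# Hypothetical volume: a bright 3x3x3 "lesion" plus a separate, unconnected bright blob.
vol = np.zeros((8, 8, 8))
vol[2:5, 2:5, 2:5] = 100.0
vol[6:8, 6:8, 6:8] = 100.0
region = grow_region(vol, (3, 3, 3), tolerance=10.0)
print(region.sum())
```

The separate blob has similar values but is not connected to the seed, so it is excluded; this connectivity constraint is what distinguishes region growing from a plain threshold.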

The dextroscope and virtual intracranial visualization and navigation

The Dextroscope, developed by Volume Interactions Ltd., has become a standard tool for case-specific surgical planning. Similar to the Virtual Workbench, this device allows the user to ‘reach in’ to a 3D display, where hand-eye coordination allows careful manipulation of 3D objects. Patient-specific data sets from multiple imaging techniques (magnetic resonance imaging, magnetic resonance angiography, magnetic resonance venography, and computed tomography) can be coregistered, fused, and displayed as a stereoscopic 3D object.[8] Physicians have noted that this device successfully simulates the real operating environment and is a unique and powerful tool for appreciating spatial neurovascular relationships on a patient-by-patient basis.[21,84] Around the world, the Dextroscope has been used to plan various neurosurgical procedures, including excision of meningiomas, ependymomas, and cerebral arteriovenous malformations (AVMs), microvascular decompression, and dozens of other lesion studies.[7,21,29,54,61,76,85]

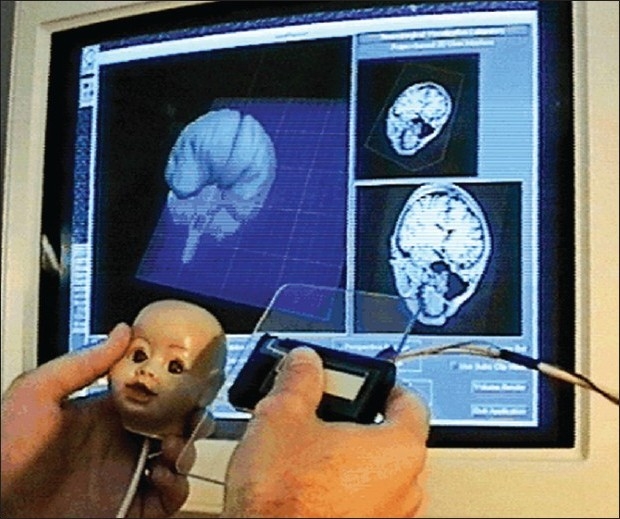

A 3D interface called Virtual Intracranial Visualization and Navigation (VIVIAN) is one of the suites of 3D tools developed for use with the Dextroscope. The VIVIAN workspace enables users to coregister data, selectively segment images, obtain measurements, and simulate intraoperative viewpoints for the removal of bone and soft tissue. This has been applied to planning tumor neurosurgery, a field in which volumetric visualization of the relevant structures (pathology, blood vessels, skull) is essential[12,42,72] [Figure 2].

Figure 2.

Computed tomography/magnetic resonance imaging/magnetic resonance angiography volume complex before (a) and after (b) registration for the Virtual Workbench. The registration landmarks can be seen as lines crossing the volume from left to right.[30] Figure appears on page 7. Permission for use obtained from IEEE Intellectual Property Rights Office
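Coregistration from paired landmarks, such as those visible in Figure 2, is often solved as a least-squares rigid fit. The sketch below uses the standard SVD-based (Kabsch/Arun) solution; it assumes corresponding landmark lists in millimeters and a purely rigid relationship between modalities, and it is not a description of how VIVIAN or the Virtual Workbench performs registration. The landmark coordinates are invented for illustration.

```python
import numpy as np

def rigid_register(moving, fixed):
    """Least-squares rigid transform (R, t) such that fixed ≈ R @ moving + t.

    moving, fixed: (N, 3) arrays of corresponding landmarks, N >= 3, not collinear.
    """
    P = np.asarray(moving, dtype=float)
    Q = np.asarray(fixed, dtype=float)
    p0, q0 = P.mean(axis=0), Q.mean(axis=0)
    H = (P - p0).T @ (Q - q0)                      # 3x3 cross-covariance of centered points
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so the result is a rotation, not a reflection.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = q0 - R @ p0
    return R, t

# Synthetic check: MRI landmarks rotated 30 degrees about z and shifted into CT space.
theta = np.deg2rad(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta),  np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([5.0, -2.0, 10.0])
mri_pts = np.array([[0.0, 0.0, 0.0], [40.0, 0.0, 0.0], [0.0, 55.0, 0.0], [10.0, 20.0, 35.0]])
ct_pts = mri_pts @ R_true.T + t_true
R, t = rigid_register(mri_pts, ct_pts)
print(np.round(R, 6), np.round(t, 6))
```

With noisy real landmarks the fit is no longer exact, and the residual distance between transformed and target landmarks is a useful sanity check on registration quality.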

Radiosurgery

In radiosurgery, finely collimated beams of ionizing radiation, such as X-rays from a linear accelerator or gamma rays from cobalt-60 sources, are accurately aimed at a lesion. Popular products for performing radiosurgery include the Radionics X-Knife and Elekta's Gamma Knife. Radiosurgery planning is well suited to VR because it requires a detailed understanding of 3D structure.[36] Ford et al. introduced a method for virtually framing patient data, with superimposed fiducial markers, within a multiview planning module for the Gamma Knife system.[24] Giller and Fiedler demonstrated that a virtual framing system allows a more thorough exploration of radiosurgical preplanning strategies.[27] They emphasize the value of having ample time to plan a procedure and to test multiple approaches before the patient is fixed in the stereotactic frame.

Performing: Augmented reality surgery

In augmented reality surgery, patient data must be integrated very accurately with the real patient. Lorensen et al.[10] developed a system that claims to “enhance reality in the operating room.” In this system, a computerized segmentation process classifies tissues within the 3D volume of processed imaging data, differentiating between brain surface, cerebrospinal fluid, edema (fluid), tumor, and skin.[11] The surgical plan can be generated the night before the operation. Before the patient is prepared for the operation, the video and computer-generated surfaces of the patient are aligned and combined. During the operation, this fused video signal helps the surgeon localize the tumor. The surgeon can then assess the location of surgical tools, such as a needle tip, relative to the desired location or to the location of a lesion in 3D space. This can lead to accurate localization and placement during neurosurgical procedures.[60]
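The classification method used by the cited system is not described here, so as a stand-in, the sketch below shows one of the simplest supervised approaches: assigning each voxel to the tissue class whose mean multichannel intensity is nearest. The class names, the two co-registered intensity channels, and all mean values are hypothetical; in practice the class means would be estimated from operator-selected training voxels.

```python
import numpy as np

def classify_voxels(features, class_means):
    """Label each voxel with the tissue class whose mean feature vector is nearest.

    features:    (N, C) array of C co-registered intensities per voxel
    class_means: dict mapping tissue name -> length-C mean vector
    """
    names = list(class_means)
    means = np.array([class_means[n] for n in names], dtype=float)    # (K, C)
    x = np.asarray(features, dtype=float)                             # (N, C)
    d2 = ((x[:, None, :] - means[None, :, :]) ** 2).sum(axis=2)       # (N, K) squared distances
    return [names[i] for i in d2.argmin(axis=1)]

# Hypothetical two-channel class means in arbitrary units.
means = {"csf": [900.0, 200.0], "brain": [500.0, 600.0], "tumor": [700.0, 800.0]}
labels = classify_voxels([[880.0, 210.0], [510.0, 590.0], [690.0, 790.0]], means)
print(labels)
```

Real segmentation pipelines typically add spatial smoothing or statistical models on top of a per-voxel rule like this, since neighboring voxels usually belong to the same tissue.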

Tele (remote) medicine

Another application of augmented reality surgery is in tele (remote) medicine. The Artma Virtual Patient (ARTMA Inc.) is a system that uses augmented reality to merge a video image with 3D data, specifically for stereoendoscopic modules.[53] In this system, the fused data are superimposed at the appropriate location. Calibration is achieved with fiducial markers and surface fitting, and 3D sensors actively track the position of the stereotactic instruments. The 3D graphics are extracted from CT data or X-ray images. During the operation, the surgeon sees the endoscopic image combined with the acquired data, which indicate predefined reference points that specify important anatomic structures. This allows increased precision in the localization and trajectory of incisions.

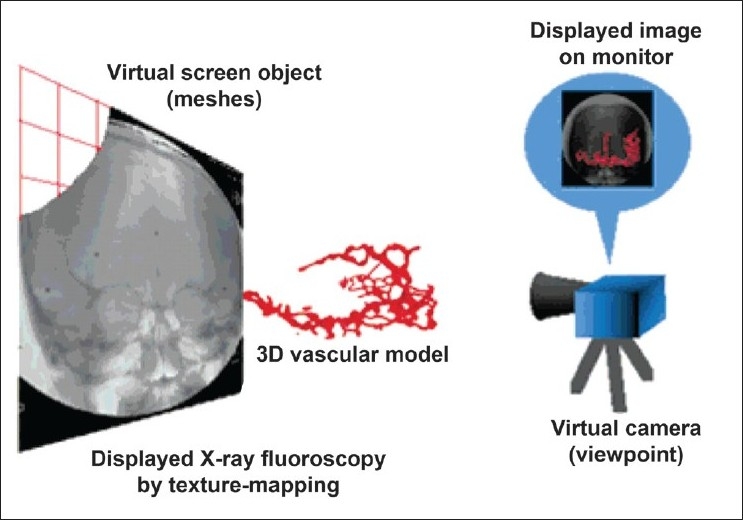

Augmented reality in intravascular neurosurgery

Masutani et al. constructed an augmented reality-based visualization system to support intravascular neurosurgery and evaluated it in clinical environments.[57] 3D vascular models are overlaid on motion pictures from X-ray fluoroscopy by 2D/3D registration using fiducial markers. The models are reconstructed from 3D data obtained by X-ray CT angiography or magnetic resonance angiography using the “Marching Cubes” algorithm. Intraoperative X-ray images are mapped as texture patterns onto a screen object that is displayed with the vascular models: the screen object is constructed behind (or in front of) a 3D vascular model in the virtual space of the system, and intraoperative live X-ray fluoroscopy images are displayed on it in succession by texture mapping. The position and direction of a virtual camera (the viewpoint) are calculated from the coordinates of the fiducial markers so that the projective view geometry of the 3D computer graphics corresponds to that of the X-ray fluoroscopy system. The technique thus integrates all the elements needed for the augmented reality visualization system: vascular modeling, motion picture display, real-time distortion correction of X-ray images, and 2D/3D registration [Figure 3].

Figure 3.

Augmented Reality visualization of a 3D vascular model with X-ray fluoroscopy. On the virtual screen, intraoperative live video images from X-ray fluoroscopy are displayed by texture mapping. The positions and orientations of all the objects and the viewpoint are registered using fiducial markers.[57] Figure appears on page 240. Permission for use obtained from John Wiley and Sons Inc. Permissions Dept
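Computing a virtual camera whose projection matches the fluoroscope, from fiducials seen in both the 3D model and the X-ray image, is a camera-calibration problem. One textbook way to solve it is the direct linear transform (DLT), sketched below under the assumptions of a distortion-free pinhole projection (the distortion correction mentioned above is taken as already applied) and at least six non-coplanar fiducials. Whether Masutani et al. used the DLT specifically is not stated here; in practice, coordinates are usually normalized before the SVD for numerical stability. The camera parameters and fiducial layout are invented for the synthetic check.

```python
import numpy as np

def estimate_projection(world_pts, image_pts):
    """Direct linear transform: 3x4 projection matrix from >= 6 point pairs."""
    rows = []
    for (X, Y, Z), (u, v) in zip(world_pts, image_pts):
        Xh = [X, Y, Z, 1.0]
        # u = (p1 . Xh) / (p3 . Xh) and v = (p2 . Xh) / (p3 . Xh), rearranged to be linear in P.
        rows.append(Xh + [0.0] * 4 + [-u * c for c in Xh])
        rows.append([0.0] * 4 + Xh + [-v * c for c in Xh])
    _, _, Vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return Vt[-1].reshape(3, 4)  # null-space vector; P is only defined up to scale

def project(P, pts):
    """Project (N, 3) points to (N, 2) image coordinates with a 3x4 matrix P."""
    pts = np.asarray(pts, dtype=float)
    h = np.hstack([pts, np.ones((len(pts), 1))]) @ P.T
    return h[:, :2] / h[:, 2:3]

# Synthetic check: a hypothetical fluoroscope 1000 mm from a cube of fiducials.
K = np.array([[1000.0, 0.0, 256.0], [0.0, 1000.0, 256.0], [0.0, 0.0, 1.0]])
P_true = K @ np.hstack([np.eye(3), [[0.0], [0.0], [1000.0]]])
fiducials = np.array([[x, y, z] for x in (0.0, 50.0) for y in (0.0, 50.0) for z in (0.0, 50.0)])
P_est = estimate_projection(fiducials, project(P_true, fiducials))
vessel_point = [[20.0, 30.0, 10.0]]  # a point on the 3D vascular model
print(project(P_est, vessel_point), project(P_true, vessel_point))
```

Once P is known, every vertex of the vascular model can be projected into the fluoroscopic image, which is the geometric core of the overlay.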

Immersive virtual reality simulators

Need for immersive virtual reality in neurosurgery simulation

Manipulation in virtual reality has focused heavily on visual feedback techniques (such as highlighting objects when the selection cursor passes through them) and generic input devices (such as the data glove). Such virtual manipulations lack many qualities of physical manipulation of objects in the real world that users might expect or unconsciously depend upon. Thus, there is a need for immersive VR, in which the system provides maximal primary sensory input and output, including haptic and kinesthetic modalities, as well as cognitive interaction and assessment. While VR technology has the potential to give users a better understanding of the space they inhabit and can improve performance in some tasks, it can just as easily present a virtual world that is as confusing, limiting, and ambiguous as the real world. We have grown accustomed to real-world constraints: things we cannot reach, things hidden from view, and things beyond our sight or behind us. VR needs to address these constraints and, in these respects, should be “better than the real world.” A key target is to go beyond rehearsal of basic manipulative skills and enable training of procedural skills such as decision making and problem solving. In this respect, the sense of presence plays an important role in the achievable training effect. To enable user immersion in the training environment, the surroundings and interaction metaphors should be the same as during the real intervention.

Requirements for immersive virtual reality simulation

Construction of a virtual reality surgical model begins with acquisition of imaging data and may involve a combination of magnetic resonance imaging, computed tomography, and digital subtraction angiography. These data are captured and stored in the DICOM (Digital Imaging and Communications in Medicine) format, which is widely accepted throughout the radiology community. This format allows raw image data from several imaging modalities to be combined, separated, and mathematically manipulated by processing algorithms that are independent of the specific imaging modality from which the data were derived. Thus, digitized plain films (X-ray and dye-contrast angiography), as well as MRI, magnetic resonance angiography, magnetic resonance venography, CT, digital subtraction angiography, and diffusion tensor imaging data, may be processed with a small number of software tools for computational modeling.
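Part of what makes modality-independent processing possible is that DICOM stores enough metadata to convert stored pixel values into meaningful units. The sketch below applies the linear modality rescale defined by the Rescale Slope (0028,1053) and Rescale Intercept (0028,1052) attributes, which for CT yields Hounsfield units, followed by a simplified linear display window. It assumes the pixel array and attribute values have already been read from the file (for example with a DICOM library such as pydicom); the `window` function is a simplification of the standard's VOI LUT formula, and the example values are illustrative.

```python
import numpy as np

def to_modality_units(stored, slope=1.0, intercept=0.0):
    """Linear modality rescale: output = slope * stored + intercept.

    For CT, slope and intercept come from Rescale Slope (0028,1053) and
    Rescale Intercept (0028,1052); the result is in Hounsfield units.
    """
    return np.asarray(stored, dtype=np.float64) * slope + intercept

def window(values, center, width):
    """Simplified linear display window: [center - width/2, center + width/2] -> [0, 1]."""
    low = center - width / 2.0
    return np.clip((np.asarray(values, dtype=np.float64) - low) / width, 0.0, 1.0)

# Hypothetical stored values from a CT slice with the common intercept of -1024.
hu = to_modality_units([0, 1024, 2048], slope=1.0, intercept=-1024.0)
display = window(hu, center=40.0, width=80.0)  # a typical brain window
print(hu, display)
```

Once every series is expressed in physical units like this, the same downstream segmentation and modeling code can be reused regardless of which scanner produced the data.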

To be useful, these data must be used in the construction of a model with which the surgeon may interact. The technique used to produce a model depends on the intended use and desired complexity of the model. For example, in some cases, all that is required is an anatomically accurate three-dimensional visual representation of the image data.

At the other end of the spectrum is the need for a model that is not only anatomically accurate but can also be physically manipulated by an operator and respond to that manipulation. Furthermore, such a model would provide tactile (i.e., haptic) as well as visual feedback. This requires a computational model. Computational models can be generated in many ways, the vast majority of which use mathematically complex engineering solutions. One such model was created by Wang et al. at the University of Nottingham. Their surgical simulator introduced boundary element (BE) technology to model the deformable properties of the brain during surgical interaction. Combined with force feedback and stereoscopics, this system represented a distinctive step in the development of computational surgical models.[81]

Haptics

Haptics refers to the feedback of proprioceptive, vestibular, kinesthetic, or tactile sensory information to the user from the simulated environment. Purely visual or auditory representations of applied forces, which give the user no feedback through these other sensory modalities, are not haptic. Haptic systems remain relatively underdeveloped compared with visual and auditory systems, but they are an essential component of many simulation systems aimed at training physical tasks. This is especially true of simulated surgical training environments, where highly discriminative tactile feedback is crucial for safe and accurate manipulation of the surgical situation. Whereas real-time graphics simulations require a refresh rate of approximately 30 to 60 frames per second, highly discriminative haptic devices require an update rate of approximately 1000 per second; the exact value varies with the stiffness of the material and the speed of motion. This very high update rate, and the resulting need for faster feedback and greater processing power, has held back the development of haptic technologies in surgical simulation relative to visual technologies.
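The rates quoted above translate directly into a time budget per update. Assuming the 30 to 60 frames per second and roughly 1000 updates per second given in the text:

```latex
\begin{aligned}
\Delta t_{\text{graphics}} &= \tfrac{1}{60\,\text{Hz}} \approx 16.7\ \text{ms} \;\text{ to }\; \tfrac{1}{30\,\text{Hz}} \approx 33.3\ \text{ms},\\
\Delta t_{\text{haptic}} &= \tfrac{1}{1000\,\text{Hz}} = 1\ \text{ms},\\
\Delta t_{\text{graphics}} / \Delta t_{\text{haptic}} &\approx 17 \text{ to } 33 \text{ haptic updates per rendered frame.}
\end{aligned}
```

This is why haptic rendering is commonly run in its own high-priority loop, decoupled from the slower graphics loop, with collision detection and force computation for each update fitting into about a millisecond.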

For haptic interaction to take place, the simulator must be able to determine when two solid objects have contacted one another (known as collision detection, or CD) and where that point of contact has occurred. Contact or restoring forces must be generated to prevent penetration of the virtual object. An important challenge in the development of faithful virtual reality surgical simulation is the implementation of real-time interactive CD. This involves determining whether virtual objects touch one another by occupying intersecting volumes of virtual space simultaneously.
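Real tissue geometry calls for more elaborate collision detection (for example, against triangle meshes), but the two steps described above, detecting interpenetration and then generating a restoring force, can be shown with the simplest possible case: a spherical instrument tip against a flat surface. This sketch uses a penalty (spring) force proportional to penetration depth, which is one common choice rather than the method of any particular simulator cited here; the function name, stiffness value, and millimeter/newton units are assumptions.

```python
import numpy as np

def sphere_plane_contact(tip_center, tip_radius, plane_point, plane_normal, stiffness):
    """Collision detection plus penalty force for a spherical tip against a plane.

    Returns (in_contact, force). The force pushes the tip back out along the
    plane normal in proportion to penetration depth (Hooke's-law penalty).
    """
    n = np.asarray(plane_normal, dtype=float)
    n = n / np.linalg.norm(n)
    offset = np.asarray(tip_center, dtype=float) - np.asarray(plane_point, dtype=float)
    signed_dist = float(n @ offset)          # distance of the tip center above the surface
    depth = tip_radius - signed_dist         # > 0 means the volumes intersect
    if depth <= 0.0:
        return False, np.zeros(3)
    return True, stiffness * depth * n

# 1 mm tip pressed 0.5 mm into a surface with an assumed stiffness of 0.2 N/mm.
hit, force = sphere_plane_contact([0.0, 0.0, 0.5], 1.0, [0.0, 0.0, 0.0], [0.0, 0.0, 1.0], 0.2)
miss, no_force = sphere_plane_contact([0.0, 0.0, 2.0], 1.0, [0.0, 0.0, 0.0], [0.0, 0.0, 1.0], 0.2)
print(hit, force, miss, no_force)
```

In a running simulator this check and force computation would be repeated at roughly the haptic update rate, with the force sent to the device on every cycle.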

The resulting movement, deformation, or fracture of the contacted surface is rendered visually and haptically in real time. A system that combines these elements can be used in surgical training. For example, one group simulated burr hole creation during a virtual craniotomy in an effort to augment conventional surgical training.[1] Webster et al. developed a suturing simulator using a haptic device, featuring real-time deformation modeling of tissues and suture material.[82] Haptic devices can also be used to enhance performance during real surgeries. For example, tools for endovascular neurosurgery have been developed that provide haptic feedback representing the forces encountered by a stent, catheter, or guide wire against endovascular surfaces.[71] Brain deformation by a spatula has also been modeled, with the aim of transferring the mentor's “sense of pressure” to the trainee in an attempt to avoid significant brain injury from retraction.[32,33,48]

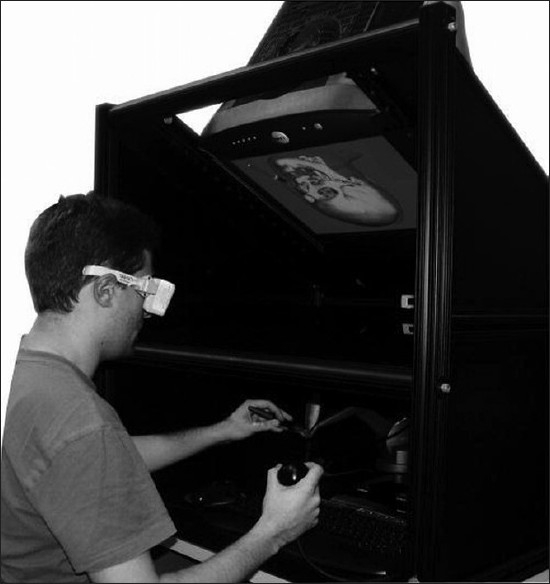

Ventriculostomy

ImmersiveTouch is an augmented virtual reality system reported to be the first to integrate a haptic device with a high-resolution, high-pixel-density stereoscopic display.[55] The system also includes head and hand tracking, and its haptic device is collocated with the displayed visual data. It is designed to allow easy development of VR simulation and training applications that appeal to multiple sensory modalities, including visual, aural, tactile, and kinesthetic[55] [Figure 4].

Figure 4.

Photograph showing the ImmersiveTouch™ system in operation.[13] Figure appears on page 517. Permission for use obtained from The Journal of Neurosurgery, Permissions

Raw DICOM data can be reconstructed after import into a software package that allows slice-by-slice analysis and image filtering to reduce noise and artifacts. A volume of intracranial contents extending from the cortical surface to the midline can be segmented into two objects with subvoxel accuracy: the parenchyma, consisting of cortical gray matter, underlying white matter, and deep nuclei; and the lateral ventricle, consisting of cerebrospinal fluid (CSF) with an infinitely thin ependymal lining.[75]

Given the assumption that the thickness of the ependymal lining is vanishingly small, its effect on the deformation characteristics at the boundary could be ignored (except for the application of a boundary condition), and the physical properties of water were assigned throughout the volume of the ventricle to account for the presence of CSF.[49]

The first generation of haptic ventriculostomy simulators included a novel haptic feedback mechanism based on the physical properties of the regions described above but had poor graphics-haptics collocation. The second-generation simulator developed for ImmersiveTouch introduced a head-tracking system and a high-resolution stereoscopic display in an effort to provide perfect graphics-haptics collocation and enhance the realism of the surgical simulation.[56]

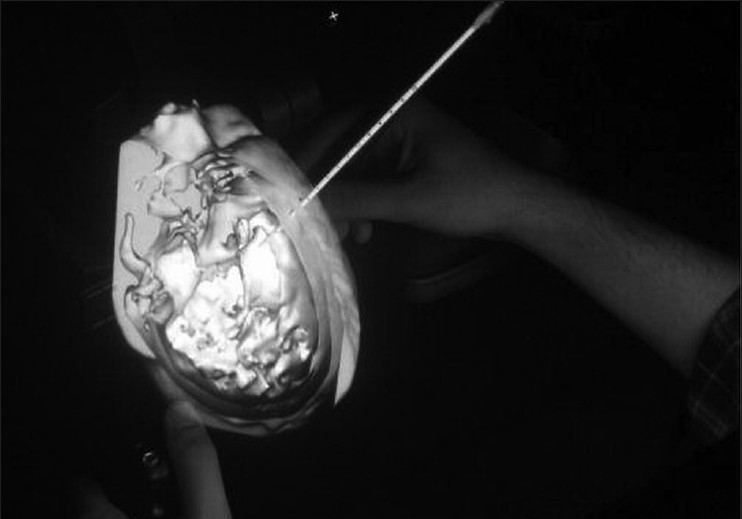

The creators of the system recognized surgeries as being composed of individual modules, which can be deconstructed and simulated individually. The proof-of-concept module for the system was a ventriculostomy catheter placement. Neurosurgical faculty members, as well as residents and medical students, found the simulation platform to have realistic visual, tactile, and handling characteristics, and saw it as a viable alternative to traditional training methods[49] [Figure 5].

Figure 5.

Photograph demonstrating catheter insertion in the ImmersiveTouch system.[13] Figure appears on page 517. Permission for use obtained from The Journal of Neurosurgery, Permissions.

Banerjee et al. used the ventriculostomy module to compare the accuracy achieved on this system with that of free-hand ventriculostomy. Surgical fellows and residents used the ImmersiveTouch system to simulate catheter placement into the foramen of Monro. Placement accuracy, measured as the mean distance of the catheter tip from the foramen of Monro, was comparable to the accuracy of free-hand ventriculostomy placement reported in a retrospective evaluation.[13] These results suggest that the system reproduces the real procedure faithfully. Subsequently, Lemole et al. applied the same procedure to simulated abnormal anatomy. Image data from a patient with a shifted ventricle were imported into the system, and surgical residents attempted to cannulate the ventricular system. With repeated attempts, participants placed the catheter closer to the target location, and the cannulation success rate increased in all groups tested.[51] This suggests that use of the ImmersiveTouch system improves understanding of abnormal ventricular anatomy and provides an effective ‘jump-start’ in the learning curve for the procedure.
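The accuracy metric described above is a Euclidean distance averaged over placements. The short Python sketch below computes it per attempt number, which is also how a learning-curve trend across repeated attempts could be summarized. Coordinates are assumed to be in millimetres in a common image space, the target point stands in for the foramen of Monro, and the sample data are hypothetical.

```python
import math
from statistics import mean
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def tip_error_mm(tip: Point, target: Point) -> float:
    """Euclidean distance (mm) from the catheter tip to the target point."""
    return math.dist(tip, target)


def mean_error_by_attempt(sessions: Sequence[Sequence[Point]], target: Point) -> List[float]:
    """Mean tip error for each attempt number across trainees.

    sessions[i][k] is trainee i's tip position on attempt k; every trainee is
    assumed to have made the same number of attempts.
    """
    n_attempts = len(sessions[0])
    return [mean(tip_error_mm(s[k], target) for s in sessions) for k in range(n_attempts)]


# Hypothetical data: two trainees, two attempts each, target at the origin.
target = (0.0, 0.0, 0.0)
sessions = [[(6.0, 8.0, 0.0), (3.0, 4.0, 0.0)],
            [(0.0, 0.0, 10.0), (0.0, 0.0, 4.0)]]
print(mean_error_by_attempt(sessions, target))  # [10.0, 4.5]
```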

Vertebroplasty

In vertebroplasty, the physician relies on both sight and feel to place the bone needle correctly through tissues of varying type and density, and to monitor the injection of polymethylmethacrylate (PMMA) bone cement into the vertebra. Incorrect injection, or reflux of PMMA into unintended areas, can cause serious clinical complications. Chui et al. focused on the human-computer interaction involved in simulating PMMA injection in a virtual spine workstation. Simulated fluoroscopic images are generated from the patient's CT volume data and the simulated volumetric cement flow using a time-varying 4D volume rendering algorithm. The user's finger movement is captured by a data glove, and an Immersion CyberGrasp device provides the variable resistance felt during injection by constraining the user's thumb [Figure 6]. In preliminary experiments with this interface, which combines simulated fluoroscopic imaging and haptic interaction, the authors found that the simulated fluoroscopy had a larger impact on the user's control during injection than the haptic interaction.[17]
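The cited time-varying 4D rendering algorithm is not described in detail here. As a simpler, commonly used stand-in, fluoroscopy-like images can be produced from CT as a digitally reconstructed radiograph: Hounsfield units are converted to attenuation coefficients and integrated along each ray with the Beer-Lambert law. The Python sketch below uses parallel rays (real fluoroscopy is cone-beam), an approximate water attenuation value, and a hypothetical cement HU value; re-projecting after each `inject` step yields a time-varying image sequence.

```python
import math
from typing import List, Sequence, Tuple

Volume = List[List[List[float]]]  # volume[z][y][x] in Hounsfield units (HU)

MU_WATER_PER_MM = 0.02  # approximate attenuation of water at diagnostic energies; illustrative
CEMENT_HU = 2000.0      # hypothetical HU for radiopaque cement


def hu_to_mu(hu: float) -> float:
    """Convert HU to a linear attenuation coefficient (1/mm), clamped at zero."""
    return max(0.0, MU_WATER_PER_MM * (1.0 + hu / 1000.0))


def project(volume: Volume, voxel_mm: float) -> List[List[float]]:
    """Parallel-beam projection along z; returns transmitted fraction I/I0 per (y, x) pixel."""
    ny, nx = len(volume[0]), len(volume[0][0])
    image = [[0.0] * nx for _ in range(ny)]
    for y in range(ny):
        for x in range(nx):
            path = sum(hu_to_mu(sl[y][x]) for sl in volume) * voxel_mm
            image[y][x] = math.exp(-path)  # Beer-Lambert attenuation
    return image


def inject(volume: Volume, voxels: Sequence[Tuple[int, int, int]]) -> Volume:
    """Return a copy of the volume with the given (z, y, x) voxels filled with cement."""
    out = [[row[:] for row in sl] for sl in volume]
    for z, y, x in voxels:
        out[z][y][x] = CEMENT_HU
    return out
```

Pixels along rays that pass through cement transmit less, so they darken on a simulated fluoroscopy frame as the cement spreads.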

Figure 6.

Overview of haptic and visual interaction for simulation of PMMA injection using CyberGrasp device.[17] Figure appears on page 98. Permission for use obtained from IOS Press BV.

Endoscopy

Endoscopic surgery is increasing in popularity because of the distinct advantages it offers. It has also become one of the most popular surgical applications of VR, in part because VR can supplement the limited view of the operative field with additional data. In addition, endoscopic surgery is relatively easy to simulate, because tactile feedback is already restricted and instrument movement is limited in these procedures. For simulation and training, a surgeon can perform a virtual endoscopy: a technique in which imaging data are combined into a virtual model that the surgeon explores as if through a real endoscope. Endoscopic simulators are produced by many of the major medical VR companies, often with a focus on training.
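The core of virtual endoscopy, viewing a volume from a virtual endoscope tip, can be sketched as ray casting: one ray per pixel is marched from the tip until it reaches a wall sample, producing a depth image that a renderer would then shade. In this Python sketch `is_wall` is a hypothetical stand-in for a thresholded CT or MR volume, the camera is a simple pinhole looking along +z, and no shading or surface extraction is attempted.

```python
import math
from typing import Callable, List, Tuple

Vec = Tuple[float, float, float]


def normalize(v: Vec) -> Vec:
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def cast_ray(is_wall: Callable[[Vec], bool], eye: Vec, direction: Vec,
             step: float = 0.1, max_dist: float = 100.0) -> float:
    """March from the virtual endoscope tip; return distance to the first wall sample.

    Returns math.inf if no wall is found within max_dist.
    """
    d = normalize(direction)
    for i in range(1, int(max_dist / step) + 1):
        t = i * step  # multiply rather than accumulate to limit rounding drift
        p = (eye[0] + t * d[0], eye[1] + t * d[1], eye[2] + t * d[2])
        if is_wall(p):
            return t
    return math.inf


def render_depth(is_wall: Callable[[Vec], bool], eye: Vec,
                 width: int, height: int, fov_deg: float) -> List[List[float]]:
    """Pinhole camera looking along +z; one ray per pixel gives a depth image."""
    f = 0.5 / math.tan(math.radians(fov_deg) / 2.0)  # focal length for a unit-wide image plane
    aspect = height / width
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            u = (i + 0.5) / width - 0.5
            v = ((j + 0.5) / height - 0.5) * aspect
            row.append(cast_ray(is_wall, eye, (u, v, f)))
        image.append(row)
    return image
```

For example, placing the eye at the centre of a toy spherical cavity of radius 10 mm gives depths of about 10 mm in every pixel.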

Most of the current literature on surgical simulation concerns some form of endoscopic procedure.[14,15,18,22,23,31,44,59,62,65,83] This is largely because endoscopy offers a simplified engineering solution to the difficult problem of haptic feedback. The difficulty can be partially overcome because endoscopic instruments pass through ports that can be equipped with feedback devices, placing the simulator in direct contact with the operator and delivering haptic feedback directly to the surgeon. In addition, these instruments have only a limited number of degrees of freedom, which further simplifies the engineering of this type of feedback.[20,35,69,74,78]
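The degree-of-freedom argument can be made concrete. A straight instrument passing through a fixed port is usually described by two angles about the port, an insertion depth, and a roll about its own axis (plus jaw actuation). Because roll does not move the tip of a straight shaft, tip position follows from just three values. The Python sketch below shows this and the associated fulcrum effect, where the handle moves opposite to the tip. The angle convention and function names are illustrative assumptions.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def shaft_direction(tilt_rad: float, azimuth_rad: float) -> Vec:
    """Unit vector along the shaft, pointing into the patient (-z when tilt is zero)."""
    return (math.sin(tilt_rad) * math.cos(azimuth_rad),
            math.sin(tilt_rad) * math.sin(azimuth_rad),
            -math.cos(tilt_rad))


def tip_position(pivot: Vec, tilt_rad: float, azimuth_rad: float, insertion_mm: float) -> Vec:
    """Tip lies insertion_mm beyond the port along the shaft.

    Roll about the shaft axis does not move the tip of a straight instrument,
    so it is not an input here.
    """
    d = shaft_direction(tilt_rad, azimuth_rad)
    return tuple(p + insertion_mm * c for p, c in zip(pivot, d))


def handle_position(pivot: Vec, tilt_rad: float, azimuth_rad: float, outside_mm: float) -> Vec:
    """The handle sits on the opposite side of the pivot (fulcrum effect)."""
    d = shaft_direction(tilt_rad, azimuth_rad)
    return tuple(p - outside_mm * c for p, c in zip(pivot, d))
```

A simulator built on this constraint only needs to sense these few values and apply forces along them, instead of tracking and resisting free 6-DOF motion.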

Schulze et al. developed and tested a system that adapted a virtual endoscopy planning system for intra-operative navigation during endonasal transsphenoidal pituitary surgery. They reported that adding patient data from the virtual system was both feasible and beneficial for this procedure, and likely for others.[70]

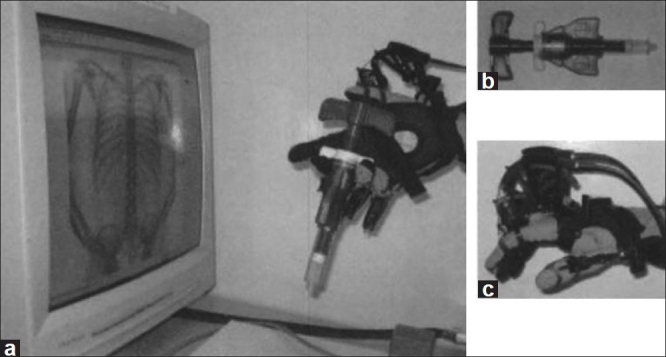

Endovascular simulation

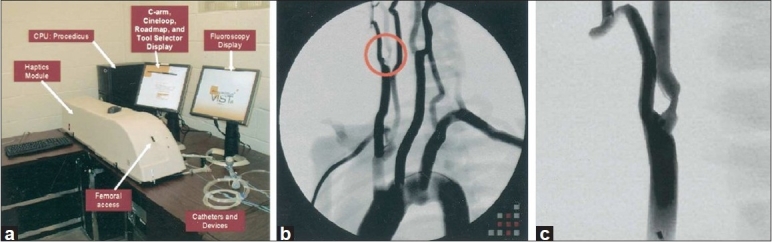

Endovascular surgery shares many of the limitations of endoscopic surgery, such as reduced tactile sensation and limited freedom of movement.[25,26] Several high-fidelity endovascular simulators are commercially available, all of which provide haptic, aural, and visual interfaces. They include the ANGIO Mentor from Simbionix, the VIST training simulator from Mentice AB, and SimSuite from the Medical Simulation Corporation. Training modules provided by these devices include angioplasty and stenting of many major vessels[80] [Figure 7].

Figure 7.

(a) Vascular Intervention System Training simulator (VIST). (b) Simulated aortic arch angiogram screen capture with right internal carotid artery stenosis circled for clarification. (c) Close-up of circled lesion.[40] Figure appears on page 1119. Permission for use obtained from Elsevier Ltd. Global Rights Department.

These simulators have been used in training for carotid artery stenting (CAS). Hsu et al. conducted a training study for this procedure with the VIST simulator and found that practice on the simulator shortened the time to successful completion for both novice and advanced participants.[40] The study also confirmed the construct validity of the system: the advanced group outperformed the untrained group on the simulated procedure. In addition, 75% of the participants felt the simulator was both realistic and valuable for improving their surgical skills.
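Construct validity means the simulator's metrics separate groups with different levels of experience. One common way to test a single metric, such as completion time, is a two-sample comparison like Welch's t-test. The Python sketch below computes the statistic and its Welch-Satterthwaite degrees of freedom; it is a generic illustration, not the analysis used by Hsu et al., and the completion times are hypothetical. A p-value would then be read from the t distribution, for example with `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for mean(a) - mean(b)."""
    va, vb = variance(a) / len(a), variance(b) / len(b)  # squared standard errors
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical completion times in minutes; not data from the cited study.
novice_min = [10.0, 12.0, 14.0]
advanced_min = [6.0, 7.0, 8.0]
t, df = welch_t(novice_min, advanced_min)
print(f"t = {t:.2f}, df = {df:.1f}")  # prints: t = 3.87, df = 2.9
```

Welch's form is used here because it does not assume equal variances, which is often unrealistic when novices are compared with experienced operators.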

Aggarwal et al. tested the transfer of skills between different simulated tasks. They found that after training on a renal angioplasty task, participants performed as well on an iliac angioplasty task as those who had trained on the iliac task.[3] Using a similar training method, Dawson et al. found significant improvements in case completion time, fluoroscopy time, and volume of contrast used after two days of intensive simulator training.[19]

Chaer et al. demonstrated that endovascular skills acquired through simulator-based training transfer to the clinical environment. Compared with a control group of residents who received no simulator training, residents who trained on the simulator for up to two hours scored significantly higher on a global rating scale when performing two supervised interventions for occlusive disease in patients.[16]

DISCUSSION

Virtual environments are being increasingly used by neurosurgeons to simulate a wide array of procedural modules. In the development of immersive and effective systems, some challenges and limitations arise.

Limitations of immersive virtual reality in neurosurgery

Open cranial operations pose a particular challenge, because several tissue types may be present simultaneously in the surgical field. These tissues are tightly packed in three dimensions and have complex relationships to the scalp, skull, and intracranial vessels. Adjacent structures must be visually distinguishable and must also be differentiated by their often very different physical properties. Although the adult human brain is anatomically extremely complex, the physical properties of normal parenchyma are fairly uniform throughout the tissue. The brain, its relationships to the vascular supply and the skull, and its white matter tracts can now be imaged with high spatial and anatomic precision by noninvasive modalities, including diffusion tensor imaging, which improves differentiation between tissue types during diagnosis and tumor excision.[52] Integrating these data into a VR environment will require complex manipulation of raw and post-processed imaging data, as well as accurate co-registration of each imaged anatomic subsystem. Texture maps and geometric forms can represent the visual appearance of dissimilar tissues, and dynamic finite element analyses must be used to account for the forces encountered during tissue manipulation. Moreover, tissue deformation is complex and depends on the extent of retraction or resection.[81]
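To show what a finite element analysis does at the smallest scale, the Python sketch below models a column of tissue as a chain of linear bar elements (element stiffness k = EA/L), fixes one end, applies a retraction force at the other, assembles the global stiffness matrix, and solves for nodal displacements. This is a static, one-dimensional simplification; dynamic simulators add mass and damping matrices and integrate over time. The element stiffness values are hypothetical, and this is not the method of the cited cutting-and-retraction simulator.

```python
from typing import List


def element_stiffness(youngs_modulus: float, area: float, length: float) -> float:
    """Axial stiffness k = E*A/L of one linear bar element."""
    return youngs_modulus * area / length


def assemble_stiffness(element_k: List[float]) -> List[List[float]]:
    """Assemble the global stiffness matrix for a chain of bar elements."""
    n = len(element_k) + 1  # one more node than elements
    K = [[0.0] * n for _ in range(n)]
    for e, k in enumerate(element_k):
        i, j = e, e + 1
        K[i][i] += k
        K[i][j] -= k
        K[j][i] -= k
        K[j][j] += k
    return K


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination without pivoting; adequate for this symmetric positive-definite system."""
    n = len(b)
    A = [row[:] for row in A]
    b = b[:]
    for col in range(n):
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= factor * A[col][c]
            b[r] -= factor * b[col]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (b[r] - sum(A[r][c] * x[c] for c in range(r + 1, n))) / A[r][r]
    return x


def bar_displacements(element_k: List[float], tip_force: float) -> List[float]:
    """Nodal displacements with node 0 fixed and tip_force applied at the last node."""
    K = assemble_stiffness(element_k)
    K_free = [row[1:] for row in K[1:]]  # drop the fixed node's row and column
    f = [0.0] * (len(element_k) - 1) + [tip_force]
    return [0.0] + solve(K_free, f)
```

With a soft element next to a stiff one, for example `bar_displacements([1.0, 4.0], 1.0)`, most of the displacement accumulates in the soft element, which is the kind of heterogeneity between adjacent tissues described above.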

CONCLUSIONS

Virtual environments represent a key step toward enhancing the experience of performing and learning neurosurgical techniques. Virtual systems are currently used to train surgeons, prepare the surgical team for procedures, and provide invaluable intraoperative data. Users have responded positively to these systems and are optimistic about future applications of these technologies. An fMRI study using a tactile virtual reality interface with a data glove showed the anticipated modulation of activity in motor, somatosensory, and parietal cortex, supporting the idea that tactile feedback enhances the realism of virtual hand-object interactions.[46] It is, therefore, essential for users to be involved in the design of these systems and in applied trials of their prospective uses.

An important question now is whether human performance can be improved through the use of a neurosurgical virtual environment, and whether that improvement can be measured. Few studies have attempted to establish statistical evidence that a virtual system enhances neurosurgical performance over traditional planning or intra-operative systems. A group at the University of Tokyo, however, has recently shown that an interactive visualization system and a virtual workstation offered significantly better diagnostic value than traditional radiological images for detecting the offending vessels in a sample of patients (n = 17) with neurovascular compression syndrome.[41] These results are promising, but more groups must statistically examine the efficacy of new virtual systems to establish that this technology leads to genuine improvements in outcomes and advances the field.

Contributor Information

Ali Alaraj, Email: alaraj@uic.edu.

Michael G. Lemole, Email: mlemole@surgery.arizona.edu.

Joshua H. Finkle, Email: josh.finkle@gmail.com.

Rachel Yudkowsky, Email: rachely@uic.edu.

Adam Wallace, Email: adam.n.wallace@gmail.com.

Cristian Luciano, Email: clucia1@uic.edu.

P. Pat Banerjee, Email: banerjee1995@gmail.com.

Silvio H. Rizzi, Email: srizzi2@uic.edu.

Fady T. Charbel, Email: fcharbel@uic.edu.

REFERENCES

- 1. Acosta E, Liu A, Armonda R, Fiorill M, Haluck R, Lake C, et al. Burrhole simulation for an intracranial hematoma simulator. Stud Health Technol Inform. 2007;125:1–6.

- 2. Acosta E, Muniz G, Armonda R, Bowyer M, Liu A. Collaborative voxel-based surgical virtual environments. Stud Health Technol Inform. 2008;132:1–3.

- 3. Aggarwal R, Black SA, Hance JR, Darzi A, Cheshire NJ. Virtual reality simulation training can improve inexperienced surgeons’ endovascular skills. Eur J Vasc Endovasc Surg. 2006;31:588–93. doi: 10.1016/j.ejvs.2005.11.009.

- 4. Aggarwal R, Darzi A. Organising a surgical skills centre. Minim Invasive Ther Allied Technol. 2005;14:275–9. doi: 10.1080/13645700500236822.

- 5. Aggarwal R, Hance J, Darzi A. The development of a surgical education program. Cir Esp. 2005;77:1–2. doi: 10.1016/s0009-739x(05)70794-6.

- 6. Albani JM, Lee DI. Virtual reality-assisted robotic surgery simulation. J Endourol. 2007;21:285–7. doi: 10.1089/end.2007.9978.

- 7. Anil SM, Kato Y, Hayakawa M, Yoshida K, Nagahisha S, Kanno T. Virtual 3-dimensional preoperative planning with the dextroscope for excision of a 4th ventricular ependymoma. Minim Invasive Neurosurg. 2007;50:65–70. doi: 10.1055/s-2007-982508.

- 8. [Last cited on 2010 Dec 20]. Available from: http://www.dextroscope.com/interactivity.html

- 9. [Last cited on 2010 Dec 20]. Available from: http://www.radionics.com/products/functional/stereoplan.shtml

- 10. [Last cited on 2010 Dec 20]. Available from: http://evlweb.eecs.uic.edu/EVL/VR/

- 11. [Last cited on 2010 Dec 20]. Available from: http://www.cre.com/acoust.html

- 12. [Last cited on 2010 Dec 20]. Available from: http://www.iss.nus.sg/medical/virtualworkbench. TVWWP

- 13. Banerjee PP, Luciano CJ, Lemole GM Jr, Charbel FT, Oh MY. Accuracy of ventriculostomy catheter placement using a head- and hand-tracked high-resolution virtual reality simulator with haptic feedback. J Neurosurg. 2007;107:515–21. doi: 10.3171/JNS-07/09/0515.

- 14. Burtscher J, Dessl A, Maurer H, Seiwald M, Felber S. Virtual neuroendoscopy, a comparative magnetic resonance and anatomical study. Minim Invasive Neurosurg. 1999;42:113–7. doi: 10.1055/s-2008-1053381.

- 15. Buxton N, Cartmill M. Neuroendoscopy combined with frameless neuronavigation. Br J Neurosurg. 2000;14:600–1. doi: 10.1080/02688690050206882.

- 16. Chaer RA, Derubertis BG, Lin SC, Bush HL, Karwowski JK, Birk D, et al. Simulation improves resident performance in catheter-based intervention: Results of a randomized, controlled study. Ann Surg. 2006;244:343–52. doi: 10.1097/01.sla.0000234932.88487.75.

- 17. Chui CK, Teo J, Wang Z, Ong J, Zhang J, Si-Hoe KM, et al. Integrative haptic and visual interaction for simulation of PMMA injection during vertebroplasty. Stud Health Technol Inform. 2006;119:96–8.

- 18. Dang T, Annaswamy TM, Srinivasan MA. Development and evaluation of an epidural injection simulator with force feedback for medical training. Stud Health Technol Inform. 2001;81:97–102.

- 19. Dawson DL, Meyer J, Lee ES, Pevec WC. Training with simulation improves residents’ endovascular procedure skills. J Vasc Surg. 2007;45:149–54. doi: 10.1016/j.jvs.2006.09.003.

- 20. Devarajan V, Scott D, Jones D, Rege R, Eberhart R, Lindahl C, et al. Bimanual haptic workstation for laparoscopic surgery simulation. Stud Health Technol Inform. 2001;81:126–8.

- 21. Du ZY, Gao X, Zhang XL, Wang ZQ, Tang WJ. Preoperative evaluation of neurovascular relationships for microvascular decompression in the cerebellopontine angle in a virtual reality environment. J Neurosurg. 2010;113:479–85. doi: 10.3171/2009.9.JNS091012.

- 22. Dumay AC, Jense GJ. Endoscopic surgery simulation in a virtual environment. Comput Biol Med. 1995;25:139–48. doi: 10.1016/0010-4825(94)00041-n.

- 23. Edmond CV Jr, Heskamp D, Sluis D, Stredney D, Sessanna D, Wiet G, et al. ENT endoscopic surgical training simulator. Stud Health Technol Inform. 1997;39:518–28.

- 24. Ford E, Purger D, Tryggestad E, McNutt T, Christodouleas J, Rigamonti D, et al. A virtual frame system for stereotactic radiosurgery planning. Int J Radiat Oncol Biol Phys. 2008;72:1244–9. doi: 10.1016/j.ijrobp.2008.06.1934.

- 25. Gallagher AG, Cates CU. Approval of virtual reality training for carotid stenting: What this means for procedural-based medicine. JAMA. 2004;292:3024–6. doi: 10.1001/jama.292.24.3024.

- 26. Gallagher AG, McClure N, McGuigan J, Ritchie K, Sheehy NP. An ergonomic analysis of the fulcrum effect in the acquisition of endoscopic skills. Endoscopy. 1998;30:617–20. doi: 10.1055/s-2007-1001366.

- 27. Giller CA, Fiedler JA. Virtual framing: The feasibility of frameless radiosurgical planning for the gamma knife. J Neurosurg. 2008;109:25–33. doi: 10.3171/JNS/2008/109/12/S6.

- 28. Goble J, Hinckley K, Snell J, Pausch R, Kassell N. Two-handed spatial interface tools for neurosurgical planning. IEEE Comput. 1995:20–6.

- 29. Gonzalez Sanchez JJ, Ensenat Nora J, Candela Canto S, Rumia Arboix J, Caral Pons LA, Oliver D, et al. New stereoscopic virtual reality system application to cranial nerve microvascular decompression. Acta Neurochir (Wien). 2010;152:355–60. doi: 10.1007/s00701-009-0569-x.

- 30. Guan CG, Serra L, Kockro RA, Hern N, Nowinski WL, Chan C. Volume-based tumor neurosurgery planning in the virtual workbench. Proceedings of VRAIS’98; March 1998; Atlanta, Georgia.

- 31. Gumprecht H, Trost HA, Lumenta CB. Neuroendoscopy combined with frameless neuronavigation. Br J Neurosurg. 2000;14:129–31. doi: 10.1080/02688690050004552.

- 32. Haase J, Boisen E. Neurosurgical training: More hours needed or a new learning culture? Surg Neurol. 2009;72:89–95. doi: 10.1016/j.surneu.2009.02.005.

- 33. Hansen KV, Brix L, Pedersen CF, Haase JP, Larsen OV. Modelling of interaction between a spatula and a human brain. Med Image Anal. 2004;8:23–33. doi: 10.1016/j.media.2003.07.001.

- 34. Hassan I, Gerdes B, Bin Dayna K, Danila R, Osei-Agyemang T, Dominguez E. Simulation of endoscopic procedures--an innovation to improve laparoscopic technical skills. Tunis Med. 2008;86:419–26.

- 35. Henkel TO, Potempa DM, Rassweiler J, Manegold BC, Alken P. Lap simulator, animal studies, and the laptent. Bridging the gap between open and laparoscopic surgery. Surg Endosc. 1993;7:539–43. doi: 10.1007/BF00316700.

- 36. Hevezi JM. Emerging technology in cancer treatment: Radiotherapy modalities. Oncology (Williston Park). 2003;17:1445–56.

- 37. Hinckley K, Conway M, Pausch R, Proffitt D, Stoakley R, Kassell N. Revisiting haptic issues for virtual manipulation. [Last cited on 2010 Dec 20]. Available from: http://www.cs.virginia.edu/papers/manip.pdf

- 38. Hinckley K, Pausch R, Downs JH, Proffitt D, Kassell NF. The props-based interface for neurosurgical visualization. Stud Health Technol Inform. 1997;39:552–62.

- 39. Hinckley K, Pausch R, Goble J, Kassell N. Passive real-world interface props for neurosurgical visualization. ACM CHI’94 Conference on Human Factors in Computing Systems. 1994:452–8.

- 40. Hsu JH, Younan D, Pandalai S, Gillespie BT, Jain RA, Schippert DW, et al. Use of computer simulation for determining endovascular skill levels in a carotid stenting model. J Vasc Surg. 2004;40:1118–25. doi: 10.1016/j.jvs.2004.08.026.

- 41. Kin T, Oyama H, Kamada K, Aoki S, Ohtomo K, Saito N. Prediction of surgical view of neurovascular decompression using interactive computer graphics. Neurosurgery. 2009;65:121–8. doi: 10.1227/01.NEU.0000347890.19718.0A.

- 42. Kockro RA, Serra L, Tseng-Tsai Y, Chan C, Yih-Yian S, Gim-Guan C, et al. Planning and simulation of neurosurgery in a virtual reality environment. Neurosurgery. 2000;46:118–35.

- 43. Kockro RA, Stadie A, Schwandt E, Reisch R, Charalampaki C, Ng I, et al. A collaborative virtual reality environment for neurosurgical planning and training. Neurosurgery. 2007;61:379–91. doi: 10.1227/01.neu.0000303997.12645.26.

- 44. Krombach A, Rohde V, Haage P, Struffert T, Kilbinger M, Thron A. Virtual endoscopy combined with intraoperative neuronavigation for planning of endoscopic surgery in patients with occlusive hydrocephalus and intracranial cysts. Neuroradiology. 2002;44:279–85. doi: 10.1007/s00234-001-0731-5.

- 45. Krupa P, Novak Z. Advances in the diagnosis of tumours by imaging methods (possibilities of three-dimensional imaging and application to volumetric resections of brain tumours with evaluation in virtual reality and subsequent stereotactically navigated demarcation. Vnitr Lek. 2001;47:527–31.

- 46. Ku J, Mraz R, Baker N, Zakzanis KK, Lee JH, Kim IY, et al. A data glove with tactile feedback for fMRI of virtual reality experiments. Cyberpsychol Behav. 2003;6:497–508. doi: 10.1089/109493103769710523.

- 47. Laguna MP, de Reijke TM, Wijkstra H, de la Rosette J. Training in laparoscopic urology. Curr Opin Urol. 2006;16:65–70. doi: 10.1097/01.mou.0000193377.14694.7f.

- 48. Larsen O, Haase J, Hansen KV, Brix L, Pedersen CF. Training brain retraction in a virtual reality environment. Stud Health Technol Inform. 2003;94:174–80.

- 49. Lemole GM Jr, Banerjee PP, Luciano C, Neckrysh S, Charbel FT. Virtual reality in neurosurgical education: Part-task ventriculostomy simulation with dynamic visual and haptic feedback. Neurosurgery. 2007;61:142–8. doi: 10.1227/01.neu.0000279734.22931.21.

- 50. Lemole M. Virtual reality and simulation in neurosurgical education. XIV World Congress of Neurological Surgery. 2009.

- 51. Lemole M, Banerjee PP, Luciano C, Charbel F, Oh M. Virtual ventriculostomy with ‘shifted ventricle’: Neurosurgery resident surgical skill assessment using a high-fidelity haptic/graphic virtual reality simulator. Neurol Res. 2009;31:430–1. doi: 10.1179/174313208X353695.

- 52. Lo CY, Chao YP, Chou KH, Guo WY, Su JL, Lin CP. DTI-based virtual reality system for neurosurgery. Conf Proc IEEE Eng Med Biol Soc. 2007;2007:1326–9. doi: 10.1109/IEMBS.2007.4352542.

- 53. Lorensen WE, Cline H, Nafis C, Kikinis R, Altobelli D, Gleason L. Enhancing reality in the operating room. SIGGRAPH 94, Course Notes, Course 03. 1994:331–6.

- 54. Low D, Lee CK, Dip LL, Ng WH, Ang BT, Ng I. Augmented reality neurosurgical planning and navigation for surgical excision of parasagittal, falcine and convexity meningiomas. Br J Neurosurg. 2010;24:69–74. doi: 10.3109/02688690903506093.

- 55. Luciano C, Banerjee P, Florea L, Dawe G. Design of the ImmersiveTouch: A high-performance haptic augmented virtual reality system. 11th International Conference on Human-Computer Interaction. 2005.

- 56. Luciano C, Banerjee P, Lemole GM Jr, Charbel F. Second generation haptic ventriculostomy simulator using the ImmersiveTouch system. Stud Health Technol Inform. 2006;119:343–8.

- 57. Masutani Y, Dohi T, Yamane F, Iseki H, Takakura K. Augmented reality visualization system for intravascular neurosurgery. Comput Aided Surg. 1998;3:239–47. doi: 10.1002/(SICI)1097-0150(1998)3:5<239::AID-IGS3>3.0.CO;2-B.

- 58. McCracken TO, Spurgeon TL. The Vesalius project: Interactive computers in anatomical instruction. J Biocommun. 1991;18:40–4.

- 59. McGregor JM. Enhancing neurosurgical endoscopy with the use of ‘virtual reality’ headgear. Minim Invasive Neurosurg. 1997;40:47–9. doi: 10.1055/s-2008-1053414.

- 60. Meng FG, Wu CY, Liu YG, Liu L. Virtual reality imaging technique in percutaneous radiofrequency rhizotomy for intractable trigeminal neuralgia. J Clin Neurosci. 2009;16:449–51. doi: 10.1016/j.jocn.2008.03.019.

- 61. Ng I, Hwang PY, Kumar D, Lee CK, Kockro RA, Sitoh YY. Surgical planning for microsurgical excision of cerebral arterio-venous malformations using virtual reality technology. Acta Neurochir (Wien). 2009;151:453–63. doi: 10.1007/s00701-009-0278-5.

- 62. Noar MD. The next generation of endoscopy simulation: Minimally invasive surgical skills simulation. Endoscopy. 1995;27:81–5. doi: 10.1055/s-2007-1005639.

- 63. Peters TM. Image-guidance for surgical procedures. Phys Med Biol. 2006;51:R505–40. doi: 10.1088/0031-9155/51/14/R01.

- 64. Poston TL. The virtual workbench: Dextrous VR. ACM VRST’94: Virtual Reality Software and Technology. 1994:111–22.

- 65. Preminger GM, Babayan RK, Merril GL, Raju R, Millman A, Merril JR. Virtual reality surgical simulation in endoscopic urologic surgery. Stud Health Technol Inform. 1996;29:157–63.

- 66. Riva G. Applications of virtual environments in medicine. Methods Inf Med. 2003;42:524–34.

- 67. Rolland JP, Wright DL, Kancherla AR. Towards a novel augmented-reality tool to visualize dynamic 3-D anatomy. Stud Health Technol Inform. 1997;39:337–48.

- 68. Rosahl SK, Gharabaghi A, Hubbe U, Shahidi R, Samii M. Virtual reality augmentation in skull base surgery. Skull Base. 2006;16:59–66. doi: 10.1055/s-2006-931620.

- 69. Satava RM. Virtual reality surgical simulator. The first steps. Surg Endosc. 1993;7:203–5. doi: 10.1007/BF00594110.

- 70. Schulze F, Buhler K, Neubauer A, Kanitsar A, Holton L, Wolfsberger S. Intra-operative virtual endoscopy for image guided endonasal transsphenoidal pituitary surgery. Int J Comput Assist Radiol Surg. 2010;5:143–54. doi: 10.1007/s11548-009-0397-8.

- 71. Sengupta A, Kesavadas T, Hoffmann KR, Baier RE, Schafer S. Evaluating tool-artery interaction force during endovascular neurosurgery for developing haptic engine. Stud Health Technol Inform. 2007;125:418–20.

- 72. Serra L, Nowinski WL, Poston T, Hern N, Meng LC, Guan CG, et al. The brain bench: Virtual tools for stereotactic frame neurosurgery. Med Image Anal. 1997;1:317–29. doi: 10.1016/s1361-8415(97)85004-9.

- 73. Shuhaiber JH. Augmented reality in surgery. Arch Surg. 2004;139:170–4. doi: 10.1001/archsurg.139.2.170.

- 74. Spicer MA, Apuzzo ML. Virtual reality surgery: Neurosurgery and the contemporary landscape. Neurosurgery. 2003;52:489–97. doi: 10.1227/01.neu.0000047812.42726.56.

- 75. Spicer MA, van Velsen M, Caffrey JP, Apuzzo ML. Virtual reality neurosurgery: A simulator blueprint. Neurosurgery. 2004;54:783–97. doi: 10.1227/01.neu.0000114139.16118.f2.

- 76. Stadie AT, Kockro RA, Reisch R, Tropine A, Boor S, Stoeter P, et al. Virtual reality system for planning minimally invasive neurosurgery. Technical note. J Neurosurg. 2008;108:382–94. doi: 10.3171/JNS/2008/108/2/0382.

- 77. Stoakley R, Conway M, Pausch R, Hinckley K, Kassell N. Virtual reality on a WIM: Interactive worlds in miniature. CHI’95. 1995:265–72.

- 78. Surgical simulators reproduce experience of laparoscopic surgery. Minim Invasive Surg Nurs. 1995;9:2–4.

- 79. Thomas RG, John NW, Delieu JM. Augmented reality for anatomical education. J Vis Commun Med. 2010;33:6–15. doi: 10.3109/17453050903557359.

- 80. Tsang JS, Naughton PA, Leong S, Hill AD, Kelly CJ, Leahy AL. Virtual reality simulation in endovascular surgical training. Surgeon. 2008;6:214–20. doi: 10.1016/s1479-666x(08)80031-5.

- 81. Wang P, Becker AA, Jones IA, Glover AT, Benford SD, Greenhalgh CM, et al. A virtual reality surgery simulation of cutting and retraction in neurosurgery with force-feedback. Comput Methods Programs Biomed. 2006;84:11–8. doi: 10.1016/j.cmpb.2006.07.006.

- 82. Webster RW, Zimmerman DI, Mohler BJ, Melkonian MG, Haluck RS. A prototype haptic suturing simulator. Stud Health Technol Inform. 2001;81:567–9.

- 83. Wiet GJ, Yagel R, Stredney D, Schmalbrock P, Sessanna DJ, Kurzion Y, et al. A volumetric approach to virtual simulation of functional endoscopic sinus surgery. Stud Health Technol Inform. 1997;39:167–79.

- 84. Wong GK, Zhu CX, Ahuja AT, Poon WS. Craniotomy and clipping of intracranial aneurysm in a stereoscopic virtual reality environment. Neurosurgery. 2007;61:564–8. doi: 10.1227/01.NEU.0000290904.46061.0D.

- 85. Wong GK, Zhu CX, Ahuja AT, Poon WS. Stereoscopic virtual reality simulation for microsurgical excision of cerebral arteriovenous malformation: Case illustrations. Surg Neurol. 2009;72:69–72. doi: 10.1016/j.surneu.2008.01.049.