Abstract

Families of infants who are congenitally deaf now have the option of cochlear implantation at a very young age. In order to assess the effectiveness of early cochlear implantation, however, new behavioral procedures are needed to measure speech perception and language skills during infancy. One important component of language development is word learning, a complex skill that involves learning arbitrary relations between words and their referents. A precursor to word learning is the ability to perceive and encode intersensory relations between co-occurring auditory and visual events. Recent studies in infants with normal hearing have shown that intersensory redundancies, such as temporal synchrony, can facilitate the ability to learn arbitrary pairings between speech sounds and objects (Gogate & Bahrick, 1998). To investigate the early stages of learning arbitrary pairings of sounds and objects after cochlear implantation, we used the Preferential Looking Paradigm (PLP) to assess infants’ ability to associate speech sounds with objects that moved in temporal synchrony with the onsets and offsets of the signals. Children with normal hearing aged 6, 9, 18, and 30 months served as controls and demonstrated the ability to learn arbitrary pairings between temporally synchronous speech sounds and dynamic visual events. Infants who received their cochlear implants (CIs) at earlier ages (7–15 months of age) performed similarly to the infants with normal hearing after about 2–6 months of CI experience. In contrast, infants who received their implants at later ages (16–25 months of age) did not demonstrate learning of the associations within the context of this experiment. Possible implications of these findings are discussed.

Introduction

Over the past two decades, cochlear implantation has become a successful treatment for children and adults diagnosed with profound hearing loss. Cochlear implantation has facilitated the acquisition of spoken language for thousands of children who were prelingually deaf. However, though many children with prelingual deafness acquire language after receiving a cochlear implant (CI), there is enormous variability in outcomes and benefit. Not all children succeed in acquiring spoken language after implantation. Several demographic, audiological, and cognitive factors have been found to contribute to individual variability in speech and language outcomes (Pisoni, 2000; Pisoni, Cleary, Geers, & Tobey, 2000; Sarant, Blamey, Dowell, Clark, & Gibson, 2001).

Mounting evidence suggests that age at implantation is a strong predictor of language outcomes. Children who receive cochlear implants at younger ages tend to outperform children who receive cochlear implants later in speech perception, speech production, and language measures (Fryauf-Bertschy, Tyler, Kelsay, & Gantz, 1997; Kirk, Miyamoto, Ying, Perdew, & Zuganelis, 2002; Tobey, Pancamo, Staller, Brimacombe, & Beiter, 1991; Waltzman & Cohen, 1998). Because of findings showing better outcomes with earlier implantation, the U.S. Food and Drug Administration (FDA) has expanded the criteria for implantation to include children as young as 12 months of age. At some CI centers, children are receiving implants at even younger ages when the CI team determines that the infant is not receiving sufficient benefit from conventional amplification. Also, the average age of identifying hearing loss is dropping as increasingly more hospitals adopt universal newborn hearing screening (UNHS) policies (White, 2003). Because of these recent developments, cochlear implantation is now a viable option for many families who have young infants with profound hearing loss.

As cochlear implantation during infancy becomes more common, it is important to assess the speech perception and language skills that infants develop after implantation. However, the current clinical tests for assessing children’s speech perception and language skills were designed for children who are old enough to follow verbal instructions—typically by 2 years of age. Thus, it is important to develop new behavioral methodologies that will allow clinicians and researchers to assess the speech perception and language skills of children who are deaf or hard of hearing, who are under 2 years of age, and cannot follow verbal instructions. These types of assessment tools would be useful for determining the effectiveness of cochlear implantation during infancy and could help clinicians and families decide whether very early implantation is worth possible additional risks associated with surgery on infants, such as increased anesthetic risk (Young, 2002). Developing new behavioral measures that can track speech perception and language during infancy also will enable clinicians and researchers to measure very early the spoken language development of infants with cochlear implants and compare these results with the typical language development of infants with normal hearing. Early identification of basic speech perception skills is also important because delays in the development of these skills may have cascading effects throughout the course of language acquisition.

In a recent study, Houston, Pisoni, Kirk, Ying, and Miyamoto (2003a) measured attention to speech and speech discrimination skills of infants with normal hearing and infants with profound hearing loss before and after cochlear implantation. The authors used a modified version of the Visual Habituation (VH) procedure, which has been used extensively by developmental scientists to study speech discrimination in infants with normal hearing (Best, McRoberts, & Sithole, 1988; Polka & Werker, 1994; Werker, Shi et al., 1998). During a habituation phase, infants were presented with a visual display of a checkerboard pattern on a TV monitor and a repeating speech sound (e.g., “hop, hop, hop”) on half of the trials (“sound trials”) and the same visual display with no sound during the other trials (“silent trials”). The amount of time the infants looked to the visual display was recorded for each trial. After infants decreased their looking time to the two types of trials and reached a pre-determined habituation criterion, they were presented with one trial in which the visual display was paired with the old sound (“old trial”) and one trial in which the same visual display was paired with a novel sound (“novel trial”). Houston et al. (2003a) predicted that infants would look longer during the sound trials than the silent trials if the speech sound engaged their attention, and that they would look longer during the novel trial than the old trial if they could discriminate the sounds and, thus, detect the presentation of the novel auditory stimulus.

Houston et al. (2003a) found that 6- and 9-month-old infants with normal hearing looked significantly longer during the sound trials than the silent trials and looked significantly longer during the novel trial than the old trial. Infants who were 8.7–29.9 months of age and who had 1–6 months of CI experience also looked longer during the novel trial than the old trial, suggesting that they could detect the change in speech sounds. However, the infants with cochlear implants did not look significantly longer during the sound trials than the silent trials, even after 6 months of CI experience. In other words, during the habituation phase, infants with cochlear implants were as interested in a novel checkerboard pattern with no sound as they were in the same checkerboard pattern with a repeating speech sound. In contrast, after habituating to the checkerboard pattern and speech sound, they dishabituated (i.e., looked longer) upon the introduction of a novel sound. Thus, though the infants with cochlear implants demonstrated the ability to detect and respond to novelty, their overall attention to the stimulus materials was not significantly increased by the presence of a repeating speech sound, in contrast to the results found with infants with normal hearing. This latter finding suggests that attention to speech was reduced in the infants with cochlear implants relative to infants with normal hearing.

Although infants in the Houston et al. (2003a) study demonstrated discrimination of gross-level differences in speech sounds, we know very little about the speech perception skills of infants with cochlear implants. It is possible that the reduced attention to speech sounds they exhibited may have cascading effects on other speech perception and language skills, such as the ability to learn about the organization of speech sounds in the ambient language, to segment words from fluent speech, and to learn the meanings of spoken words (Houston et al., 2003a).

One aspect of language acquisition that the infants with cochlear implants might have difficulty with is word learning. If a language learner’s attentional system is not highly engaged by listening to speech sounds, then he or she might be delayed or require more repetitions to learn pairings of speech sounds and objects as compared with a language learner who attends well to speech sounds and seeks out their significance. In the present study, we investigated the ability of infants with normal hearing and infants with cochlear implants to associate repeating speech sounds with objects whose motions were temporally synchronized with the repeating speech sounds. By testing infants’ ability to learn arbitrary pairings when there is intersensory redundancy, we may tap early stages of association skills that are important for word learning.

Word learning involves a sophisticated array of skills in which speech sounds are arbitrarily related to objects and events and then to more abstract concepts (Golinkoff et al., 2000). The ability to learn arbitrary relations between speech sounds and visual events and objects is typically not evidenced until about 12–14 months of age (Golinkoff, Hirsh-Pasek, Cauley, & Gordon, 1987; Hollich, Hirsh-Pasek, & Golinkoff, 2000; Schafer & Plunkett, 1998; Werker, Cohen, Lloyd, Casasola, & Stager, 1998; Woodward & Markman, 1997), but the skills needed to perceive some correspondences between auditory and visual events appear to be in place much earlier in development in infants who have intact sensory systems.

In a well-known study, Spelke (1979) investigated 4-month-olds’ ability to perceive temporal synchrony in bimodally specified events (i.e., events involving the auditory and visual modalities). Infants were presented with a repeating sound, a video of an object bouncing in temporal synchrony with the sound, and, at the same time, another video of an object that was not bouncing in synchrony with the sound. Spelke (1979) found that infants displayed more first looks to the object bouncing in temporal synchrony with the sound, suggesting that infants can perceive bimodal correspondences by 4 months of age. Using similar procedures, several other investigators also have found that infants with normal hearing are quite good at perceiving temporally and spatially based auditory-visual redundancies (Bahrick, 1983, 1987; Lawson, 1980; Lewkowicz, 1992; Pickens, 1994; Spelke, 1979; Walker-Andrews & Lennon, 1985).

Infants with normal hearing also are very adept at perceiving correspondences between acoustic-phonetic information and visual articulatory information. In a recent study, Patterson and Werker (2003) investigated intermodal correspondences in 2-month-olds. On each trial, they presented the infants with one vowel sound (either /a/ or /i/) and two videos, side-by-side, of a person articulating the two vowels. The infants looked longer and more often at the video that corresponded with the vowel they heard, suggesting that at a very young age infants with normal hearing perceive intersensory relations between vowel sounds and their articulations. Likewise, Barker and Tomblin (in press) investigated this same perceptual skill in infants with hearing loss before and at several intervals following cochlear implantation. Preliminary findings suggest that some of the infants showed evidence of matching phonetic information in the lips and voice post-implantation. The authors also found considerable variability between participants.

Some developmental scientists have hypothesized that the perception of intersensory redundancies may provide the foundation for the development of early word-learning skills (Gogate, Walker-Andrews, & Bahrick, 2001; Sullivan & Horowitz, 1983; Zukow-Goldring, 1997). Gogate et al. (2001) proposed that infants’ detection of redundant information across sensory modalities helps guide their detection of arbitrary relations needed for word learning. Indeed, there is evidence that temporal synchrony can facilitate the learning of some arbitrary pairings between speech sounds and objects.

In a recent study, Gogate and Bahrick (1998) used a habituation/switch task to investigate 7-month-olds’ use of temporal synchrony to associate spoken labels with objects. During a habituation phase, infants were presented with several repetitions of one vowel paired with one object and another vowel paired with another object. After the infants habituated to the pairings, they were presented with a sequence of test trials that consisted of two trials with the same pairings that were used during habituation and two trials with the pairings switched. It was predicted that if the infants learned the pairings of the vowels with the objects during the habituation phase, then during the test phase they would look longer when presented with the switched pairings than when presented with the original pairings. In one condition, the objects were presented using back and forth movements in temporal synchrony with the vowel sounds. In a second condition, the objects were presented out-of-sync with the vowel sounds. In a third condition, the objects were presented stationary without any movement. Infants looked longer to the switch trials than to the control trials in the condition with temporal synchrony, suggesting that they learned the associations between the vowels and the objects. However, under the experimental conditions where the objects were still or moved out-of-sync with the vowels, the infants failed to demonstrate that they learned the vowel-object associations.

The findings by Gogate and Bahrick (1998) suggest that infants are able to use intersensory redundancy to learn arbitrary pairings between object-in-motion and speech sounds by about 7 months of age—much earlier than what is found when infants are tested on their ability to learn arbitrary word-object associations without any intersensory redundancy (Golinkoff et al., 1987; Hollich et al., 2000; Schafer & Plunkett, 1998; Werker, Cohen et al., 1998; Woodward & Markman, 1997). Thus, infants’ ability to learn pairings between auditory and visual events when there is intersensory redundancy between the pairings may mark a very early stage of word-learning skills. Moreover, assessing infants’ ability to learn such pairings may be predictive of later word-learning skills.

Although intersensory perception and word-learning skills may develop very early in infants with normal hearing, infants who are profoundly deaf and then receive cochlear implants may not develop these skills in the same way or at the same rate. First of all, intersensory perception cannot begin to develop in infants with profound hearing loss until they have access to sound. But even after cochlear implantation, the development of intersensory speech perception and word-learning skills may be delayed for several reasons. They may continue to have a reduced attention to speech post-implantation. Also, the auditory input from an implant is not as rich as it is from a normally hearing ear. Both of these factors may lead to atypical speech perception skills, which may in turn affect infants’ intersensory perception and word-learning skills. In the present investigation, we assessed the ability to associate speech sounds to visual events in infants with profound hearing loss at several intervals post-implantation and compared these results to several groups of infants with normal hearing. The infants with normal hearing ranged in ages from 6 to 30 months in order to have comparisons with younger infants who have a similar amount of experience with hearing and with older infants who are similar in chronological age.

Infants were tested using the split-screen version of the Preferential Looking Paradigm (Hollich et al., 2000). The PLP was originally developed to investigate word-learning skills in infants with normal hearing, and has been used extensively to test infants’ ability to learn novel words under a variety of conditions (Golinkoff et al., 1987; Hollich et al., 2000; Hollich, Rocroi, Hirsh-Pasek, & Golinkoff, 1999; Naigles, 1998; Schafer & Plunkett, 1998; Swingley, Pinto, & Fernald, 1999). The theoretical basis for the PLP is grounded on findings from the behavioral sciences that when an association is formed between two perceptual cues, the presence of one will trigger increased attention to the other (Allport, 1989; James, 1890). Thus, this experimental paradigm differs from the habituation/switch paradigm used by Gogate and Bahrick (1998) and leads to a different set of predictions. In a habituation/switch experiment, it is predicted that after habituating to stimulus pairs, the infant will dishabituate and look longer to the novel combination. By contrast, with the PLP, it is predicted that when presented with a sound and two objects, infants will look longer to the object that was previously associated with that sound than to another object.

In the present investigation, the PLP was used to present infants with two pairings of videos showing objects in motion and repeating speech sounds, which were temporally synchronous with the motion of the objects. Each speech sound–object pairing was presented on one side of a split-screen display TV monitor. During testing, both videos were displayed along with one of the repeating speech sounds. We predicted that if infants were able to learn to associate the visual event with the repeating speech sound, then during the test trials they should look longer to the video associated with the speech sound than to the other video.

Method

Participants

Twenty-one infants with prelingual hearing loss (8 female, 13 male) who received a cochlear implant at the Indiana University Medical Center participated in this study. Inclusion criteria were the presence of profound, bilateral hearing loss, cochlear implantation prior to 2 years of age, and evidence of a CI pure-tone average threshold of 50 dB HL or better at 3 months after initial stimulation of the device (measured by visual reinforcement audiometry). Testing was conducted prior to the 3-month interval, but the data were subsequently excluded if an infant did not meet the audiological criteria at the 3-month post-stimulation interval. The data from two infants (1 female, 1 male) were excluded for this reason. Data from one of the infants (female) could not be used in the repeated-measures analyses because only one data point was obtained from that infant.

Because we were interested in investigating how age at implantation would affect performance, the remaining 18 infants were divided into two groups based on the mean age of initial CI activation (mean = 16.05 months). The “earlier implanted” group consisted of 8 infants whose implants were activated between 7 and 15 months of age (mean = 11.88 months). The “later implanted” group consisted of infants whose implants were activated between 16 and 25 months of age (mean = 19.41 months). The chronological ages of the subjects at all of the testing sessions ranged from 10.8 to 30.5 months for the “earlier implanted” group and from 16.2 to 35.7 months for the “later implanted” group. Table 1 provides a summary of information about the age at implantation, type of implant, processing strategy, and communication mode. The table also displays each of the stimulus conditions the infants completed or did not complete (15 due to crying and 1 due to falling asleep) within each interval period. In the table, “none” indicates that no testing was attempted during that interval period because the infant had either not yet reached that interval period when the data were analyzed (E05, E06, E08, L06–L10), had already passed that interval period before the study began (E01, E03, L05), or discontinued participation (L02). Finally, for comparison purposes, we also tested 4 groups of infants with normal hearing: 25 6-month-olds, 26 9-month-olds, 24 18-month-olds, and 12 30-month-olds. Twenty-eight additional infants were tested but did not complete testing due to crying (20), experimenter error (3), extreme side bias (3), parental interference (1), and falling asleep (1).

Table 1.

Demographic Information of the Infants With Cochlear Implants

The last three columns list the conditions completed and not completed within each interval period (Month 1, Mos. 2–6, Mos. 9–18).

| Group | ID (Male/Female) | Age of Initial Stimulation (mos.) | Internal CI Device | Processing Strategy | Communication Mode | Month 1 | Mos. 2–6 | Mos. 9–18 |
|---|---|---|---|---|---|---|---|---|
| Earlier Implanted | E01 (m) | 7.55 | N24 Contour | ACE | OC | None | A/H, R/F | A/H (×2) |
| | E02 (f) | 12.17 | N24 Contour | ACE | OC | A/H, R/F A/H | A/H, R/F | A/R R/F R/F |
| | E03 (f) | 11.12 | N24 K | ACE | TC | None | A/H R/F | A/H, R/F |
| | E04 (m) | 10.77 | N24 Contour | ACE | OC | R/F A/H (×2) | A/H, R/F A/H | R/F A/H |
| | E05 (m) | 13.424 | N24 K | CIS | TC | R/F A/H | A/H, R/F | None |
| | E06 (m) | 13.786 | N24 Contour | ACE | OC | A/H, R/F (×2) | A/H, R/F | None |
| | E07 (m) | 14.345 | N24 Contour | ACE | OC | A/H, R/F A/H | A/H, R/F A/H | R/F |
| | E08 (m) | 11.84 | N24 Contour | ACE | OC | A/H R/F A/H | A/H, R/F A/H, R/F | None |
| | Mean | 11.88 | | | | | | |
| Later Implanted | L01 (f) | 17.27 | N24 Contour | ACE | OC | A/H R/F | R/F A/H | A/H |
| | L02 (f) | 23.67 | CII HiFocus 1 | MPS | TC | A/H, R/F | A/H (×2), R/F | None |
| | L03 (f) | 17.47 | N24 K | ACE | OC | A/H (×2), R/F | A/H, R/F (×2) | A/H, R/F |
| | L04 (f) | 19.08 | N24 Contour | ACE | OC | None | A/H A/H | A/H |
| | L05 (m) | 16.97 | N24 Contour | ACE | TC | A/H (×2), R/F | R/F | A/H, R/F |
| | L06 (m) | 22.47 | N24 Contour | ACE | OC | A/H, R/F | None | None |
| | L07 (m) | 24.18 | N24 Contour | ACE | OC | A/H (×2), R/F | A/H | None |
| | L08 (m) | 20.07 | Combi40+ | CIS+ | TC | A/H, R/F | None | None |
| | L09 (m) | 16.12 | N24 K | ACE | OC | A/H (×2), R/F | None | None |
| | L10 (m) | 16.81 | N24 Contour | ACE | TC | A/H, R/F | None | None |
| | Mean | 19.41 | | | | | | |

A/H = /a/ vs. repeating /hap/ (“hop”)

OC = oral communication

R/F = rising /i/ vs. falling /i/

TC = Total Communication
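The split into "earlier implanted" and "later implanted" groups can be retraced from the ages of initial stimulation listed in Table 1. The following is a minimal sketch, not the authors' procedure: it assumes the split was made at the overall mean of the 18 tabulated ages, and the variable names are illustrative. Because the tabulated ages are rounded, the overall mean computed here may differ slightly from the reported 16.05 months.

```python
from statistics import mean

# Ages at initial stimulation (months), copied from Table 1.
ages = {
    "E01": 7.55, "E02": 12.17, "E03": 11.12, "E04": 10.77,
    "E05": 13.424, "E06": 13.786, "E07": 14.345, "E08": 11.84,
    "L01": 17.27, "L02": 23.67, "L03": 17.47, "L04": 19.08, "L05": 16.97,
    "L06": 22.47, "L07": 24.18, "L08": 20.07, "L09": 16.12, "L10": 16.81,
}

# Assumption: the cut point is the overall mean age of initial activation.
overall_mean = mean(ages.values())

earlier = {k: v for k, v in ages.items() if v < overall_mean}
later = {k: v for k, v in ages.items() if v >= overall_mean}

print(f"overall mean: {overall_mean:.2f} months")
print(f"earlier implanted: n={len(earlier)}, mean={mean(earlier.values()):.2f}")
print(f"later implanted:   n={len(later)}, mean={mean(later.values()):.2f}")
```

Under this assumption the split reproduces the group membership and the group means given in Table 1.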

Apparatus

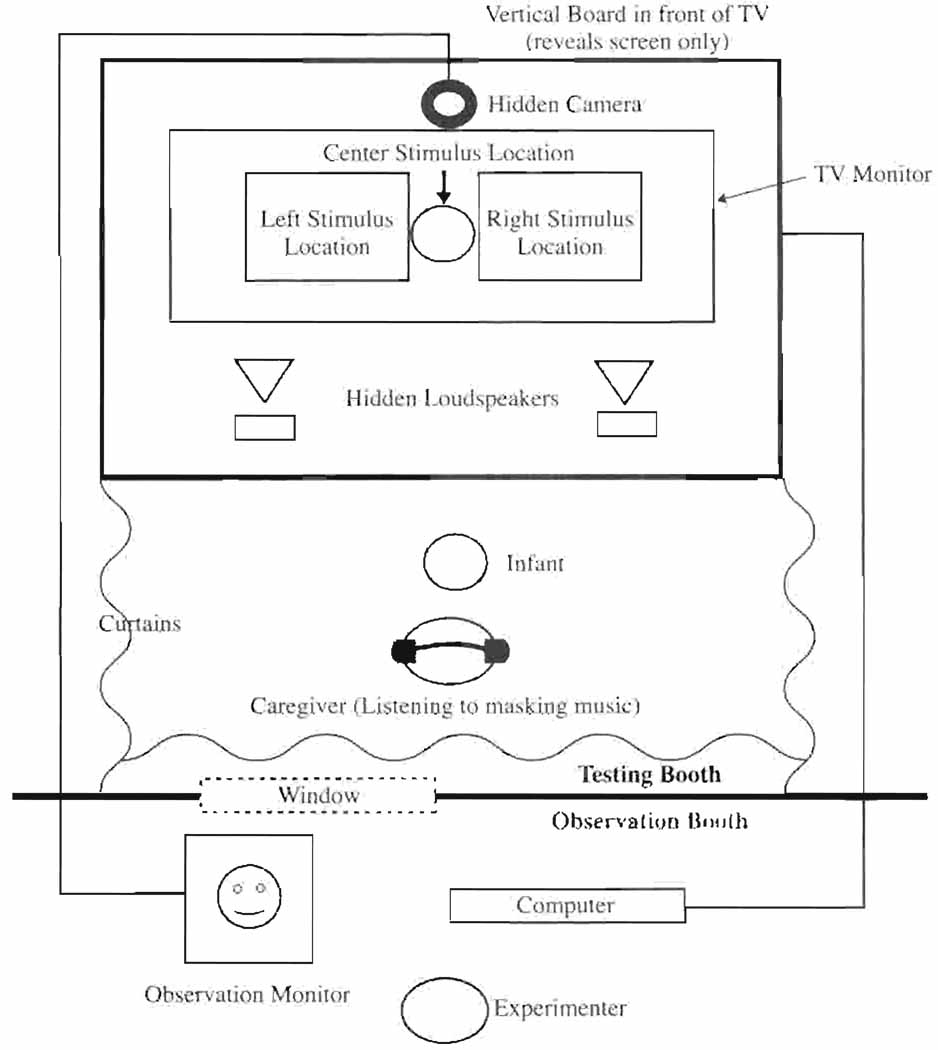

The testing was conducted in a custom-designed double-walled IAC sound booth. As shown in Figure 1, infants sat on their caregiver’s lap in front of a large 55-inch wide-aspect TV monitor. The visual stimuli were displayed as left and right picture-in-picture (PIP) displays on the TV monitor at approximately eye level to the infant, and the auditory stimuli were presented through both the left and right loudspeakers of the TV monitor. The experimenter observed the infant from a separate room via a hidden, closed-circuit TV camera and controlled the experiment using the Habit software package (Cohen, Atkinson, & Chaput, 2000) running on a Macintosh® G4 desktop computer.

Figure 1.

Apparatus: During the Preferential Looking Paradigm (PLP), the caregiver wears headphones playing masking music. The visual stimuli appear at the left and right stimulus locations. The “attention getter” appears at the center. All auditory stimuli are presented through both the left and the right loudspeakers in the monitor.

Stimulus Materials

Speech Stimuli

The purpose of the present experiment was to assess infants’ ability to learn to associate speech patterns with visual events. Infants would not be able to associate different speech sounds to different events if they were unable to discriminate the speech sounds. The stimuli consisted of highly contrastive speech sounds that are used clinically and have been found to be discriminable by infants with profound deafness after only 1 month of CI experience (Houston et al., 2003a). One stimulus contrast consisted of 4 seconds of the continuous steady-state vowel /a/ (“ahhh”) versus 8 repetitions over 4 seconds of the CVC pattern /hap/ (“hop”). The second contrast was 4 seconds of the vowel /i/ (“ee”) with a rising intonation versus 4 seconds of the same vowel /i/ with a falling intonation. At each testing session, the infant was presented with one of the two contrast pairs. All of the stimuli were produced by a female talker and recorded digitally into sound files. The stimuli were presented to the infants at 70±5 dB SPL via loudspeakers on the TV monitor.

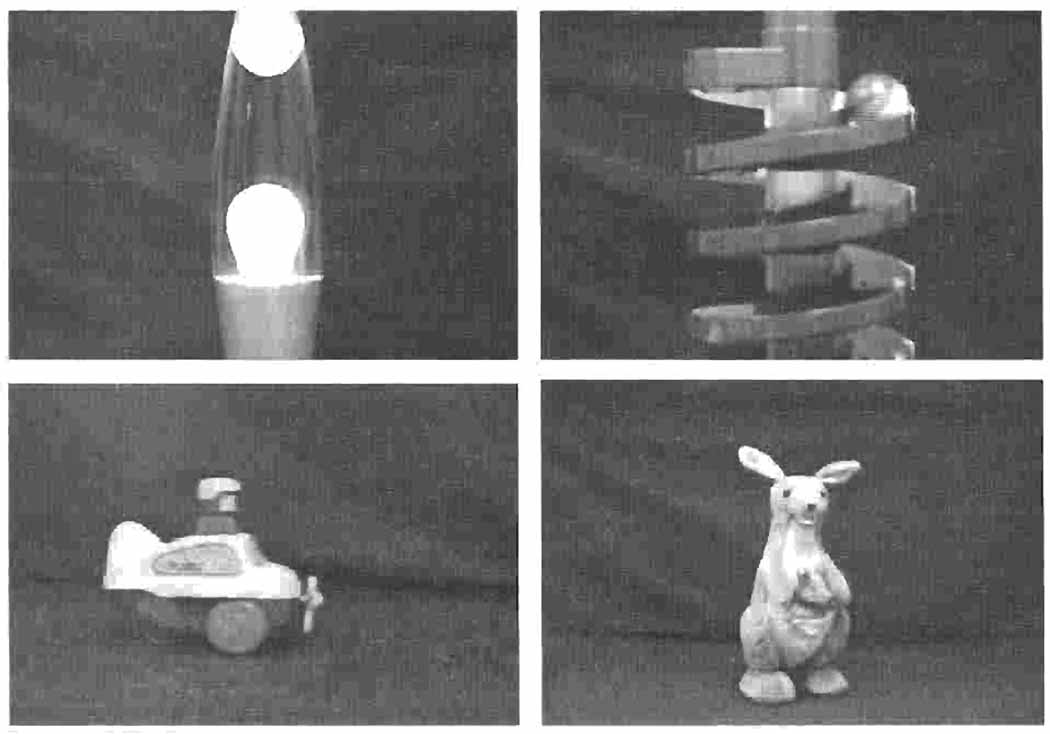

Visual Stimuli

Each speech sound was paired with a dynamically changing visual event. Examples of the visual events are displayed in Figure 2. The steady-state /a/ was paired with a 4-second video of a toy airplane moving horizontally from left to right across a table. The repetitions of /hap/ were paired with a 4-second video of a toy kangaroo hopping up and down. The rising /i/ was paired with a 4-second video of a bubble rising in a lava lamp. The falling /i/ was paired with a 4-second video of a ball rolling down a spiral ramp.

Figure 2.

Visual stimuli: (a) Rising bubble on the left and ball rolling down on the right; (b) airplane moving horizontally across on the left and kangaroo bouncing up and down on the right.

The videos were created by first recording the events using a digital video camera. Then with a digital editing software package (EditDV), the videos were edited to be temporally synchronous with the speech sounds and appear as PIP displays within a blue background. In the toy airplane video, the airplane began at the left edge and moved to the right edge. The objects in the three remaining videos were centered in the PIP display.

Each video and sound file was duplicated and the two copies were pasted together so that they were presented twice during each trial. The speech sounds were temporally synchronous with the videos such that the onset of the speech sounds co-occurred with the start of the motion of the objects, and the offsets of the videos and speech sounds were also simultaneous. For all trial types except the familiarization trials, a 1-second still-frame picture of the first frame of the video was inserted at the beginning of the video. The still image was presented to provide the infants a chance to briefly become acquainted with the visual information before hearing the speech sounds in order to avoid overloading them with information all at once.

Procedure

During the testing sessions, we measured the infants’ ability to learn two contrasting pairings of speech sounds and visual events. The infants with normal hearing were tested only once and were presented with only one set of the stimuli (the horizontally moving airplane with steady-state /a/ and the bouncing kangaroo with repeating /hap/ or the bubble rising with rising /i/ and the ball rolling down with falling /i/). The infants with cochlear implants were presented with one set of stimuli at each test session, which alternated from set to set across sessions. Before each trial, the infants’ attention was initially drawn to the TV monitor by using an “attention getter” (i.e., a small dynamic video display of a laughing baby’s face).

Infants with cochlear implants were tested on the same days they were scheduled to have their implants re-programmed after the initial stimulation of the implants (1 day, 1 week, 1 month, 2 months, 3 months, 6 months, 9 months, 12 months, and 18 months). To program the devices as best as possible for hearing, audiologists used a combination of behavioral observation audiometry and visual reinforcement audiometry to set the threshold and comfort levels. In many cases, neural response telemetry was also measured to confirm the settings. The exact method used for each infant at each appointment depended on the types of responses the audiologists could obtain from the infant. For the first few testing sessions, it was difficult to know for sure how much, if any, auditory information infants were receiving via their implants. This possibility is considered further in the Discussion section. Prior to testing, parents were encouraged to set their infants’ cochlear implants to the settings they felt would be best for enabling their child to hear speech sounds. The experimenter and parents checked the device prior to testing to make sure it was turned on and properly attached throughout the testing session.

The experiment consisted of three types of trials: familiarization trials, training trials, and test trials. The experiment began with two familiarization trials. On each familiarization trial, the two videos were presented simultaneously on the split-screen display without any sound. One PIP display appeared on the left side and the other appeared on the right. This arrangement gave the infants an opportunity to become familiarized with the visual displays before the presentation of the speech sounds.

The infants were then presented with eight training trials: four of each of the two video-speech sound pairings. Each training trial consisted of a presentation of one of the two pairings. At a given testing session, one pairing was always presented on the left side and the other pairing was always presented on the right.

After the infants completed the training phase, their ability to associate the speech sounds to the visual events was assessed with four blocks of four test trials. On each test trial, both videos were presented simultaneously on the split-screen display, with each video on the same side as during the familiarization and training phases. Infants also were presented with one of the speech sounds during two of the test trials and with the other speech sound during the other two test trials, in random order. During the final second of each trial, the video that was associated with the speech sound (i.e., the “target”) moved up and down to reinforce infants’ looking to the target video rather than to the non-target video. It was predicted that if infants were able to learn the associations between the visual displays and the speech sounds during the training, then they would, on average, look longer to the target than to the non-target on the test trials.

Between each block of test trials, infants were presented with two additional training trials, which provided additional reinforcement to the infants as to which speech sounds were paired with which videos. Infants were presented with one of each of the two types of training trials.
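The session structure described above can be summarized as a trial list. The sketch below is illustrative only: the function name, the pairing labels, and the dictionary layout are hypothetical, and the order of the training trials (which the text does not specify) is assumed to be random. Only the test-trial order is stated to be random in the procedure.

```python
import random


def build_session(pairings=("pairing_1", "pairing_2"), n_blocks=4, seed=None):
    """Return the ordered list of trials for one testing session."""
    rng = random.Random(seed)
    trials = []

    # Two familiarization trials: both videos shown, no sound.
    for _ in range(2):
        trials.append({"phase": "familiarization", "sound": None})

    # Eight training trials: four of each video-speech sound pairing.
    # Assumption: presentation order is shuffled (not stated in the text).
    training = [p for p in pairings for _ in range(4)]
    rng.shuffle(training)
    trials += [{"phase": "training", "sound": p} for p in training]

    for block in range(n_blocks):
        # Four test trials per block: each speech sound twice, in random order.
        sounds = list(pairings) * 2
        rng.shuffle(sounds)
        trials += [{"phase": "test", "sound": s} for s in sounds]

        # Between blocks: one additional training trial of each pairing.
        if block < n_blocks - 1:
            refresher = list(pairings)
            rng.shuffle(refresher)  # assumption: order not stated in the text
            trials += [{"phase": "training", "sound": p} for p in refresher]

    return trials


session = build_session(seed=0)
counts = {}
for trial in session:
    counts[trial["phase"]] = counts.get(trial["phase"], 0) + 1
print(len(session), counts)
```

With four blocks, a session contains 2 familiarization trials, 14 training trials (8 initial plus 2 after each of the first three blocks), and 16 test trials.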

Data Collection

A digital video camera was used to record the infants’ looking behaviors during testing. An assistant manned the camera so that the recording was as close-up as possible in order to see the eye gazes of the infants. The digital video recordings were used for frame-by-frame analyses of the infants’ looking behavior. These analyses were performed offline by research assistants who were blind to the experimental condition and to the location of the target and non-target videos. The digital video recordings were transferred to a Macintosh® G4 computer and converted into QuickTime™ 4.0 videos. The beginning of the trials, the initiation of the left and right looks, and the initiation of looking away from the left and the right were determined by the research assistants, who recorded the frame numbers onto an electronic spreadsheet using a software program designed for that purpose (Hollich, 2001). The assistant was able to determine the exact frame of the trial beginning by noticing the sudden onset of light in the testing room radiating from the TV monitor onto the infant’s face. After the frame numbers of the infant’s looks and trial onsets throughout the experimental session were entered into a spreadsheet, the order of stimulus presentation was entered into the spreadsheet. From this information, the mean looking times to the target and non-target videos were calculated using macros written to take into account each trial type, the duration of each trial, and the location of the target stimulus on each trial. The macros also discarded the looks that occurred during the first second (when there was a still frame and no sound) and the last second (when the target video moved up and down) of each trial. The mean looking times to the target and non-target videos were calculated separately for each infant and then compiled in a separate spreadsheet that was used for statistical analyses.
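The frame-based scoring described above can be sketched as follows. This is a hypothetical reconstruction, not the macros used in the study: the frame rate of 30 frames per second, the function names, and the example frame numbers are all assumptions made for illustration. Each look is represented as a side with a start frame and an end frame, and only the portion of a look that falls between the end of the first second and the start of the last second of the trial is counted.

```python
FPS = 30  # assumed frame rate of the digitized video


def looking_time_in_window(looks, trial_start, trial_frames, fps=FPS):
    """Seconds of looking to each side, excluding the first and last second.

    looks: list of (side, start_frame, end_frame), side is "left" or "right".
    trial_start: frame number at which the trial began.
    trial_frames: total length of the trial in frames.
    """
    window_start = trial_start + fps                 # discard first second
    window_end = trial_start + trial_frames - fps    # discard last second
    totals = {"left": 0.0, "right": 0.0}
    for side, start, end in looks:
        overlap = min(end, window_end) - max(start, window_start)
        if overlap > 0:
            totals[side] += overlap / fps
    return totals


def score_trial(looks, trial_start, trial_frames, target_side, fps=FPS):
    """Return (target_seconds, non_target_seconds) for one test trial."""
    totals = looking_time_in_window(looks, trial_start, trial_frames, fps)
    non_target_side = "left" if target_side == "right" else "right"
    return totals[target_side], totals[non_target_side]


# Hypothetical trial: starts at frame 100 and lasts 270 frames (9 s at 30 fps).
example_looks = [("left", 100, 190), ("right", 200, 260), ("left", 280, 370)]
target, non_target = score_trial(example_looks, 100, 270, target_side="left")
print(target, non_target, target - non_target)
```

The difference between target and non-target looking time, averaged over test trials for each infant, corresponds to the learning measure used in the Results.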

Results

The average looking times across blocks of test trials to the target and to the non-target were calculated for each infant. The ability to learn the association between the speech sounds and the videos was measured as the difference in the infants’ looking times to the target versus the non-target videos. Of the 77 infants with normal hearing, 67 were tested only once and 10 participated in two testing sessions at different ages. For the infants with cochlear implants, data were grouped into three post-stimulation intervals: 0–1 months (1-day, 2-week, and 1-month post-stimulation intervals), 2–6 months (2-, 3-, and 6-month post-stimulation intervals), and 9–18 months (9-, 12-, and 18-month post-stimulation intervals). Eighteen infants who received implants were tested successfully at least twice after initial stimulation. Within any interval, an infant may have been tested anywhere from zero to three times under the same or different stimulus conditions.
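As a rough illustration of the two derived quantities described above, the following sketch maps a nominal test interval onto one of the three post-stimulation bins and computes a target-minus-non-target difference score. The function names and the example values are hypothetical and are not taken from the study's spreadsheets.

```python
from statistics import mean


def interval_group(months_post_stimulation):
    """Map a nominal test interval (in months) onto one of the three bins."""
    if 0 <= months_post_stimulation <= 1:
        return "0-1 months"   # 1-day, 2-week, and 1-month sessions
    if 2 <= months_post_stimulation <= 6:
        return "2-6 months"   # 2-, 3-, and 6-month sessions
    if 9 <= months_post_stimulation <= 18:
        return "9-18 months"  # 9-, 12-, and 18-month sessions
    raise ValueError("interval is not one of the scheduled test points")


def preference_score(target_secs, non_target_secs):
    """Mean looking time to the target minus mean looking time to the non-target."""
    return mean(target_secs) - mean(non_target_secs)


# Hypothetical looking times (seconds) from two blocks of test trials.
print(interval_group(0.5), round(preference_score([3.0, 3.4], [2.8, 3.0]), 2))
```

A positive score indicates longer looking to the target video; a score near zero indicates no preference.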

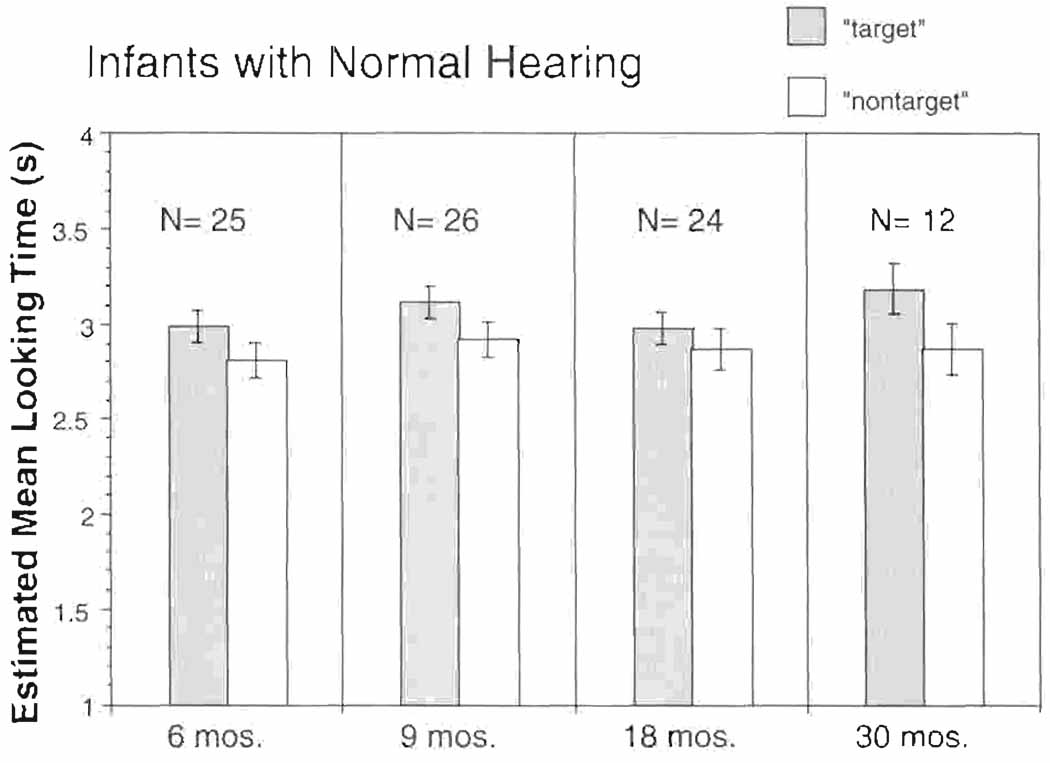

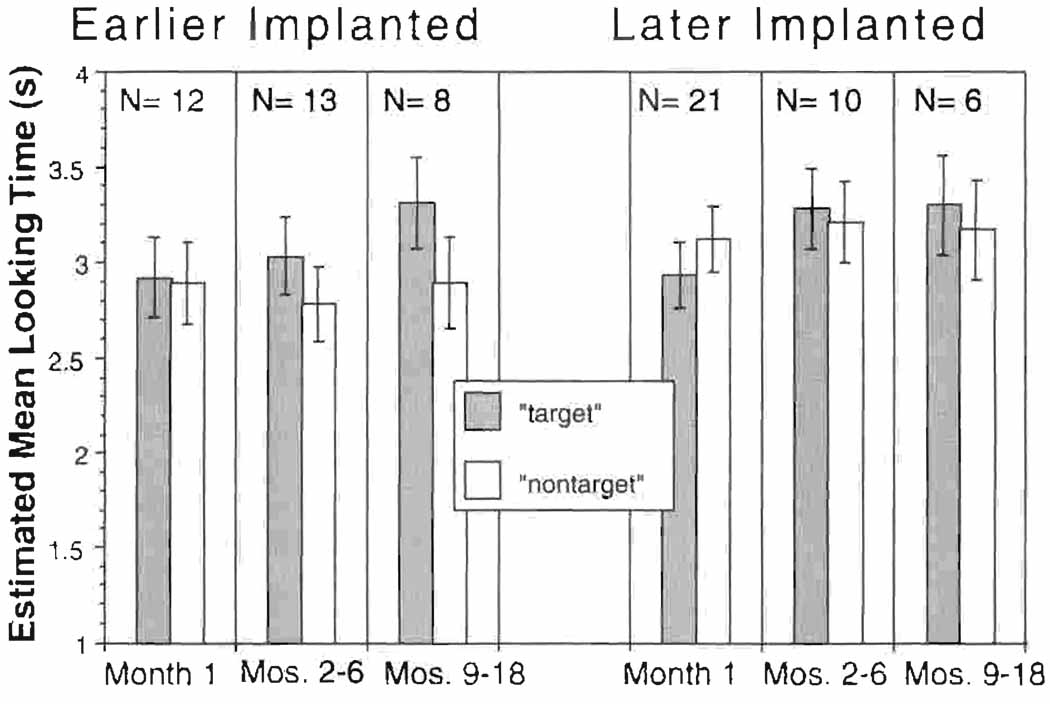

The data from the infants with normal hearing and the data from the infants with cochlear implants were analyzed separately because the infants with normal hearing were not tested across the same intervals as the infants with cochlear implants and also because the factors of communication mode and processing strategy could not be applied to them. One challenge in analyzing the data is that the participants were not tested the same number of times for each condition at each interval. This creates a problem of missing data points. A traditional repeated-measures analysis of variance (ANOVA) eliminates all of the data of participants who have any missing data points. To avoid throwing out data, we used a mixed model with repeated measurements in SAS 8.02. A mixed model is similar to a repeated-measures ANOVA, but the mixed model is able to deal with missing values without eliminating data (Wolfinger & Chang, 1995). The mixed model calculates estimated (or least-squares) means from the variability in the data. Thus, the looking time data presented in Figures 3 and 4 represent estimated means rather than actual means of looking times. In all analyses, the alpha level was set at 0.05. Because the hypothesis under test was directional (that infants show a looking preference for the target over the non-target video), all p-values were adjusted to be one-sided.
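The cost of listwise deletion can be made concrete with a small sketch. The records below are invented for illustration, and `complete_cases` is a hypothetical helper, not part of SAS or of the study's analysis. It identifies the participants a traditional repeated-measures ANOVA would retain, that is, those with an observation in every interval.

```python
def complete_cases(records, required_cells):
    """Return the subjects that have at least one observation in every cell."""
    observed = {}
    for subject, cell, _value in records:
        observed.setdefault(subject, set()).add(cell)
    required = set(required_cells)
    return {s for s, cells in observed.items() if required <= cells}


INTERVALS = ["0-1 months", "2-6 months", "9-18 months"]

# Hypothetical (subject, interval, target-minus-non-target seconds) rows.
records = [
    ("S1", "0-1 months", 0.05), ("S1", "2-6 months", 0.20), ("S1", "9-18 months", 0.30),
    ("S2", "2-6 months", 0.15), ("S2", "9-18 months", 0.25),
    ("S3", "0-1 months", -0.10),
]

kept = complete_cases(records, INTERVALS)
used_by_listwise = [r for r in records if r[0] in kept]
print(sorted(kept), len(used_by_listwise), "of", len(records))
```

In this invented data set only one of three participants has complete data, so listwise deletion would discard half of the observations, whereas a mixed model can use all six.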

Figure 3.

Estimated mean looking times (and standard error) for the infants with normal hearing. Mean looking times to the target are represented by solid bars, and mean looking times to the non-target are represented by striped bars. Looking times were averaged across stimulus conditions.

Figure 4.

Estimated mean looking times (and standard error) for infants with cochlear implants. Bars on the left represent infants with very early implantation. Bars on the right represent infants with later implantation. Mean looking times to the target are represented by solid bars, and mean looking times to the non-target are represented by striped bars.

Infants with Normal Hearing

The data were subjected to a mixed model in SAS 8.02 with target condition (target vs. non-target), stimulus condition (/a/ vs. repeating /hap/ [A/H] and rising /i/ vs. falling /i/ [R/F]), and age group (6, 9, 18, and 30 months) as fixed factors and looking time as the outcome, and with repeated measurements on the subjects tested twice. There were no significant two-way or three-way interactions. Among the three main factors, only target condition was significant (p < 0.001). Stimulus condition (p = 0.1) and age group (p = 0.44) were not significant. After adjusting for the two non-significant main factors, the difference in the infants’ looking time to the target vs. the non-target video was 0.20 sec, which was significantly greater than 0 (one-tailed t-test, adjusted p < 0.001). These results suggest that the infants with normal hearing displayed a consistent looking preference for the target over the non-target video across stimulus conditions and across different ages. Figure 3 displays the estimated mean looking times to the target and to the non-target, adjusted by stimulus conditions for each age group of infants with normal hearing; the preference for the target is apparent at every age.

Infants with Cochlear Implants

Of the 18 infants with cochlear implants, 8 received their implants at an earlier age (7–15 months of age) while 10 received their implants at later ages (17–24 months of age). The data were subjected to mixed-effects models with repeated measurements in SAS 8.02. As in the models for the infants with normal hearing, looking time was the dependent variable. Target condition (target vs. non-target), age-at-implantation group (earlier vs. later), stimulus condition (A/H vs. R/F), processing strategy, communication mode, and post-stimulation interval (0–1 months, 2–6 months, 9–18 months) were treated as fixed factors, and subject was treated as a random factor with a compound symmetry variance-covariance structure within each subject over the post-stimulation intervals.
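For readers unfamiliar with the compound symmetry structure, one common way to write such a model is sketched below. The notation is an assumption introduced here for illustration and does not appear in the original analysis: $y_{ij}$ is the looking time for infant $i$ on observation $j$, $\mathbf{x}_{ij}$ collects the fixed factors, $\boldsymbol{\beta}$ their coefficients, $b_i$ a subject-level random intercept, and $\varepsilon_{ij}$ the residual.

$$
y_{ij} = \mathbf{x}_{ij}^{\top}\boldsymbol{\beta} + b_i + \varepsilon_{ij}, \qquad b_i \sim N(0, \sigma_b^2), \quad \varepsilon_{ij} \sim N(0, \sigma^2)
$$

$$
\operatorname{Var}(y_{ij}) = \sigma_b^2 + \sigma^2, \qquad \operatorname{Cov}(y_{ij}, y_{ik}) = \sigma_b^2 \quad (j \neq k)
$$

Under this formulation every pair of observations from the same infant shares the same covariance, regardless of how far apart the post-stimulation intervals are, and observations from different infants are uncorrelated.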

The first model included all main effects (target condition, age-at-implantation group, post-stimulation interval, stimulus condition, processing strategy, and communication mode), a three-way interaction (target condition × age-at-implantation group × post-stimulation interval) and a two-way interaction (target condition × stimulus condition). The three-way interaction was not significant (p = 0.48) after adjusting for processing strategy, communication mode, and the interaction of stimulus condition and target condition. Figure 4 displays the estimated mean looking times to the target and the non-target at each post-stimulation interval, separated by age-at-implantation group. The two-way interactions that were not statistically significant (i.e., age-at-implantation group × interval [p = 0.29]; target condition × interval [p = 0.15]; and target condition × stimulus condition [p = 0.102]) were excluded from the model one at a time to systematically eliminate noise in the model. In the reduced model with the same structure, only one two-way interaction (target condition × age-at-implantation group) was marginally significant (p = 0.06) after adjusting for all other main effects (interval, stimulus condition, processing strategy, communication mode), representing a marginally significant difference in patterns of looking to the target and the non-target between the earlier- and later-implanted groups.

The third step we took was to build mixed models with the same structure, stratified by age at implantation, to test for any difference in looking time between target conditions within each group. For the later-implanted group, none of the main effects (target condition, stimulus condition, processing strategy, communication mode, and interval) reached statistical significance. In particular, the difference in the infants’ looking time to the target vs. the non-target videos was not significantly different from 0 (p = 0.31). By contrast, target condition for the earlier-implanted group was significant (p < 0.05), but stimulus condition (p = 0.38), processing strategy (p = 0.14), communication mode (p = 0.16), and interval (p = 0.20) did not reach statistical significance. A one-tailed t-test on the difference in the looking time to target vs. non-target for the earlier-implanted group was performed. Adjusting for stimulus condition, processing strategy, communication mode, and interval, the difference in the looking time to target vs. non-target for the earlier-implanted group was 0.21 sec, which was significantly greater than 0 (adjusted p < 0.05).

Because the testing was conducted over several intervals for the infants with cochlear implants but not for the infants with normal hearing, it was impossible to build a model to compare the two groups directly. However, after adjusting for all other main factors, relevant interactions, and time intervals, the overall difference in the looking time to the target vs. the non-target videos for the earlier-implanted group (0.21 sec) was almost identical to the difference for the infants with normal hearing (0.20 sec), suggesting that both groups of infants learned the correct pairings of sounds and objects. In contrast, the infants who received their implants at older ages did not look longer to the target than the non-target, suggesting that this group of infants did not learn the correct pairings within the context of the present experiment.

Discussion

In this investigation of pre-word-learning skills, infants with normal hearing and infants with cochlear implants were tested on their ability to learn associations between speech sounds and objects that moved in temporal synchrony with the speech sounds. This type of task is easier than a typical word-learning task in which there is no intersensory redundancy to help link the speech sounds to objects. Thus, this task may serve as an initial benchmark to assess the foundational skills that are needed for later word learning.

The results obtained with the infants with normal hearing showed that they looked longer to the target video than to the non-target. This finding suggests that infants with normal hearing are able to learn the associations between the speech sounds and the objects-in-motion. The results did not differ significantly between age groups, suggesting that this version of the PLP is a promising tool for assessing intermodal learning and other fundamental skills related to word learning in infants from 6 to 30 months of age.

We also tested infants with cochlear implants whose age range was similar to the range of the infants with normal hearing. These infants were tested at several intervals after receiving their implant. Difference in performance across testing intervals did not reach statistical significance. However, a non-significant trend is present in Figure 4, suggesting larger differences in looking times after more experience with a cochlear implant for the infants in the earlier-implanted group. As noted in the Method section above, it was difficult to know if during the first few testing sessions the infants were receiving any auditory benefit from their implants. Thus, any differences in performance between the earlier and later test periods could be due to differences in how well the implants were programmed rather than due to amount of experience with the implants.

Like the infants with normal hearing, the infants in this study who received their cochlear implants at a very early age demonstrated an ability to learn the correct pairings between the speech sounds and the objects when intersensory redundancy was present. In contrast to the infants with normal hearing and to the earlier-implanted infants, the infants who did not receive their implants until they were older did not look significantly longer to the target than the non-target video, even after 9–18 months experience with their implants. Taken together, the findings suggest that the infants who received their cochlear implants later had more difficulty learning the associations between the speech sounds and the objects-in-motion.

Why did the infants who received their implants at a later age have more difficulty with this task? One possibility is that the differences in chronological ages may have played a role. Across all of the testing intervals, the infants who received their cochlear implants later were older than the infants who received their cochlear implants earlier. Perhaps the older infants were bored with the task and performed poorly for that reason rather than because they failed to learn. Although chronological age cannot be ruled out entirely, we feel this account is unlikely because the oldest infants with normal hearing (30-month-olds) displayed a significant looking preference for the target video in this task. Moreover, the infants with cochlear implants were between 10.8 and 35.7 months of age when they completed their testing sessions, and only 4 of the 70 testing sessions occurred when a child was older than 30 months.

Another possible reason why the infants who received implants at 12 months of age or younger demonstrated the ability to learn associations in this particular task and the infants who received their cochlear implants later did not is that auditory-visual association may be influenced by the age at which infants have access to both types of sensory information as well as the duration of auditory deprivation. The effects of early auditory deprivation due to profound hearing loss may affect auditory system development at many different levels. There is some evidence that auditory deprivation leads to degeneration of spiral ganglion cells in the cochlea (Leake & Hradek, 1988; Rebscher, Snyder, & Leake, 2001) and to reorganization of the sensory cortices (Neville & Bruer, 2001; Rauschecker & Korte, 1993; Teoh, Pisoni, & Miyamoto, 2003, in press). There also is evidence that early auditory deprivation impairs the development of neural pathways connecting the auditory cortex to other cortices (Kral, Hartmann, Tillein, Held, & Klinke, 2000; Ponton & Eggermont, 2001). Although it is not yet clear how these sensory and neurological reorganizations affect hearing, speech perception, and cognition, there is converging evidence with children older than in the present study that children who receive cochlear implants earlier perform better on speech perception and language tasks than infants who receive their implants later (Fryauf-Bertschy et al., 1997; Kirk et al., 2002; Tobey et al., 1991; Waltzman & Cohen, 1998). The findings from the present study suggest that it would be worthwhile to further investigate effects of age at implantation within the population of children who are all currently considered as early candidates for cochlear implants.

At present, little is known about how a period of auditory deprivation may influence perception and attention in different sensory modalities. It is possible that poor connections between the auditory and visual cortices due to auditory deprivation may affect perception of intersensory redundancy and/or the ability to learn arbitrary pairings between sound and vision. Also, poor connectivity between the auditory cortex and other cortices may influence how well infants are able to integrate auditory information with their general cognitive and attentional processes. Indeed, Houston et al. (2003a) found that though infants with profound hearing loss who received implants demonstrated detection of gross-level differences of speech sounds, they also exhibited less overall sustained attention to repeating speech sounds than infants with normal hearing. It is possible that reduced attention to speech at an early age may delay the development of mechanisms used in the perception of intersensory redundancies and ability to learn arbitrary pairings.

Although it is possible that length of auditory deprivation may play a role in the development of speech perception and language skills after implantation, there are other factors that may play a role in any differences found between groups of infants with cochlear implants. It is important to take into consideration that the nature of the input from cochlear implants is significantly different from what is conducted through a normally functioning ear. Also, it is difficult to obtain precise information about the auditory acuity of infants since their behavioral responses to auditory stimuli are often inconsistent. In the present investigation, although the speech stimuli were presented at levels that were above the pure-tone average threshold of the infants (measured at 3 months post-stimulation), the auditory information that each infant perceived through his or her device may have varied considerably. Thus, any differences found between infants or groups of infants may have been due to differences in the quality of auditory input or another factor rather than to differences in length of auditory deprivation. More accurate and reliable measures of infants’ auditory capacities would be invaluable for helping to understand the nature and causes of any difficulties infants might have with perceiving speech and learning language.

The present findings have several implications for understanding differences in the development of later language skills among children who use cochlear implants. If the infants in this study who received their implants when they were older had more difficulty learning the associations than the infants who received cochlear implants earlier, then they also might have more difficulty learning words at a later stage. In a recent study, Houston, Carter, Pisoni, Kirk, and Ying (2003b, submitted) investigated name learning in children with cochlear implants. All of the children in that study received cochlear implants after 1 year of age. Results showed that many of the children had difficulty rapidly learning names for stuffed animals relative to children with normal hearing. However, though some children may exhibit difficulty perceiving intersensory redundancy or learning arbitrary associations, neither the present study nor Houston et al. (2003b, submitted) suggest that children who receive an implant after 1 year of age will not be able to do so with sufficient experience or repetition. Of course, many of these children go on to develop good vocabularies. However, the results of the two studies do suggest that children with implants might need a great deal of experience and repetition to encode new speech patterns into memory and then link them to concrete objects in their immediate environment. The present findings further suggest that very early cochlear implantation (i.e., at or before 1 year of age) may facilitate perception of intersensory redundancy and the rapid mapping of speech sounds to visual events in infants who are congenitally deaf.

The present study represents a first attempt to assess auditory-visual association skills that may be important prerequisites for word learning in infants with cochlear implants. While these initial findings are of interest in their own right, they also raise more questions than they answer. First, it is not at all clear why infants who received implants later did not demonstrate the ability to learn the pairings between the speech sounds and the objects. One possible reason is that they failed to detect the intersensory redundancy between the movement of the objects and the patterning of the speech sounds they heard through their implants, and thus the intersensory redundancy did not facilitate learning the pairings. Another possibility is that the infants did perceive the intersensory redundancy but were unable to encode the correct pairings into memory, perhaps because of an insufficient number of training trials. Further work exploring their intersensory perception and ability to learn arbitrary associations independently would provide valuable information about the nature of the delay or deficit. Fortunately, the PLP can be used as a tool for studying these skills in infants. The results of the present study demonstrate that this paradigm can be used successfully with a wide age range of infants with cochlear implants and infants with normal hearing. It would also be worthwhile to use the PLP to assess these skills in infants who use conventional amplification.

Another question that must be addressed is whether infants’ ability to learn arbitrary relations when there is intersensory redundancy will be predictive of later word-learning and language skills. Currently, we are following the children in this study and tracking their auditory, perceptual, linguistic, and cognitive skills. We will compare their performance in the present assessment to their performance on vocabulary measures, such as the MacArthur Communicative Development Inventories (Fenson et al., 1993) and the Peabody Picture Vocabulary Test (Dunn & Dunn, 1997). These comparisons will help determine whether the present methodology using the PLP is a valid measure of early word-learning skills and a predictor of later vocabulary development.

Acknowledgments

Research was supported by the Deafness Research Foundation, Indiana University Intercampus Research Grant, the David and Mary Jane Sursa Perception Laboratory Fund, NIH-NIDCD Training Grant DC00012, and an NIH-NIDCD Research Grant (R01 DC006235) to Indiana University. We would like to thank Carrie Hansel, Marissa Schumacher, Riann Mohar, Jennifer Phan, Tanya Jacob, and Steve Fountain for assistance with recruiting and testing infants and for analyzing looking times; Rong Qi and Sujuan Gao for helping conduct the statistical analyses; the faculty and staff at Methodist Hospital, Indiana University Hospital, Wishard Memorial Hospital, and many pediatrician offices for their help in recruiting participants; and the infants and their parents for their participation in this project.

Contributor Information

Derek M. Houston is an assistant professor of otolaryngology-head and neck surgery and the Philip F. Holton Scholar at the Indiana University School of Medicine in Indianapolis, IN.

Elizabeth A. Ying is a private practitioner who provides auditory-based, oral speech and language training for children with hearing loss. She is also the aural rehabilitation consultant for the Cochlear Implant Program at the Indiana University School of Medicine in Indianapolis, IN.

David B. Pisoni is Chancellors’ Professor of psychology and cognitive science at Indiana University in Bloomington, IN, and an adjunct professor of otolaryngology-head and neck surgery at the Indiana University School of Medicine in Indianapolis, IN.

Karen Iler Kirk is an associate professor and Psi Iota Xi Scholar at the Indiana University School of Medicine in Indianapolis, IN.

References

- Allport A. Visual attention. In: Posner MI, editor. Foundations of cognitive science. Cambridge, MA: MIT Press; 1989. pp. 631–682.

- Bahrick LE. Infants’ perception of substance and temporal synchrony in multimodal events. Infant Behavior and Development. 1983;6:429–451.

- Bahrick LE. Infants’ intermodal perception of two levels of temporal structure in natural events. Infant Behavior and Development. 1987;10:387–416.

- Barker BA, Tomblin JB. Bimodal speech perception in infant hearing-aid and cochlear-implant users. Archives of Otolaryngology. doi: 10.1001/archotol.130.5.582. (in press)

- Best CT, McRoberts GW, Sithole NM. Examination of the perceptual re-organization for speech contrasts: Zulu click discrimination by English-speaking adults and infants. Journal of Experimental Psychology: Human Perception and Performance. 1988;14:345–360. doi: 10.1037//0096-1523.14.3.345.

- Cohen LB, Atkinson DJ, Chaput HH. Habit 2000: A new program for testing infant perception and cognition. (Version 1.0) Austin, TX: The University of Texas; 2000.

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test. 3rd ed. Circle Pines, MN: American Guidance Service Inc; 1997.

- Fenson L, Dale PS, Reznick JS, Thal D, Bates E, Hartung JP, et al. The MacArthur Communicative Development Inventories: User’s guide and technical manual. San Diego, CA: Singular Publishing Group; 1993.

- Fryauf-Bertschy H, Tyler RS, Kelsay DMR, Gantz BJ. Cochlear implant use by prelingually deafened children: The influences of age at implant use and length of device use. Journal of Speech and Hearing Research. 1997;40:183–199. doi: 10.1044/jslhr.4001.183.

- Gogate LJ, Bahrick LE. Intersensory redundancy facilitates learning of arbitrary relations between vowel sounds and objects in seven-month-old infants. Journal of Experimental Child Psychology. 1998;69:133–149. doi: 10.1006/jecp.1998.2438.

- Gogate LJ, Walker-Andrews AS, Bahrick LE. The intersensory origins of word comprehension: An ecological-dynamic systems view. Developmental Science. 2001;4:1–37.

- Golinkoff R, Hirsh-Pasek K, Bloom L, Smith LB, Woodward AL, Akhtar N, et al., editors. Becoming a word learner: A debate on lexical acquisition. Oxford: Oxford University Press; 2000.

- Golinkoff R, Hirsh-Pasek K, Cauley K, Gordon L. The eyes have it: Lexical and syntactic comprehension in a new paradigm. Journal of Child Language. 1987;14:23–45. doi: 10.1017/s030500090001271x.

- Hollich G. System 9 coding scripts. 2001. Available: http://hincapie.psych.purdue.edu/Splitscreen/index.html [2001, May 17]

- Hollich G, Hirsh-Pasek K, Golinkoff R. Breaking the language barrier: An emergentist coalition model of word learning. Monographs of the Society for Research in Child Development. 2000;65 (3, Serial No. 262).

- Hollich G, Rocroi C, Hirsh-Pasek K, Golinkoff R. Testing language comprehension in infants: Introducing the split-screen preferential looking paradigm; Paper presented at the Society for Research in Child Development Biennial Meeting; Albuquerque, NM. 1999.

- Houston DM, Pisoni DB, Kirk KI, Ying E, Miyamoto RT. Speech perception skills of deaf infants following cochlear implantation: A first report. International Journal of Pediatric Otorhinolaryngology. 2003a;67:479–495. doi: 10.1016/s0165-5876(03)00005-3.

- Houston DM, Pisoni DB, Kirk KI, Ying EA. Name learning in children following cochlear implantation: A first report. 2003b. Manuscript submitted for publication.

- James W. The principles of psychology. New York: H. Holt & Co.; 1890.

- Kirk KI, Miyamoto RT, Ying EA, Perdew AE, Zuganelis. Cochlear implantation in young children: Effects of age at implantation and communication mode. The Volta Review. 2002;102(4):127–144. monograph.

- Kral A, Hartmann R, Tillein J, Held S, Klinke R. Congenital auditory deprivation reduces synaptic activity within the auditory cortex in a layer-specific manner. Cerebral Cortex. 2000;10:714–726. doi: 10.1093/cercor/10.7.714.

- Lawson KR. Spatial and temporal congruity and auditory-visual integration in infants. Developmental Psychology. 1980;16:185–192.

- Leake PA, Hradek GT. Cochlear pathology of long term neomycin induced deafness in cats. Hearing Research. 1988;33:11–34. doi: 10.1016/0378-5955(88)90018-4.

- Lewkowicz DJ. Infants’ response to temporally based intersensory equivalence: The effect of synchronous sounds on visual preference for moving stimuli. Infant Behavior & Development. 1992;15:297–324.

- Naigles LR. Developmental changes in the use of structure in verb learning: Evidence from preferential looking. In: Rovee-Collier C, Lipsitt LP, Hayne H, editors. Advances in infancy research. Vol. 12. Stamford, CT: Ablex; 1998. pp. 298–318.

- Neville HJ, Bruer JT. Language processing: How experience affects brain organization. In: Bailey JDB, Bruer JT, editors. Critical thinking about critical periods. Baltimore: Paul H. Brookes; 2001. pp. 151–172.

- Patterson ML, Werker JF. Two-month-old infants match phonetic information in lips and voice. Developmental Science. 2003;6(2):191–196.

- Pickens J. Perception of auditory-visual distance relations by 5-month-olds. Developmental Psychology. 1994;30:537–544.

- Pisoni DB. Cognitive factors and cochlear implants: Some thoughts on perception, learning, and memory in speech perception. Ear and Hearing. 2000;21:70–78. doi: 10.1097/00003446-200002000-00010.

- Pisoni DB, Cleary M, Geers AE, Tobey EA. Individual differences in effectiveness of cochlear implants in children who are prelingually deaf: New process measures of performance. The Volta Review. 2000;101:111–164.

- Polka L, Werker JF. Developmental changes in perception of non-native vowel contrasts. Journal of Experimental Psychology: Human Perception and Performance. 1994;20:421–435. doi: 10.1037//0096-1523.20.2.421.

- Ponton CW, Eggermont JJ. Of kittens and kids: Altered cortical maturation following profound deafness and cochlear implant use. Audiology & Neuro-Otology. 2001;6:363–380. doi: 10.1159/000046846.

- Rauschecker JP, Korte M. Auditory compensation for early blindness in cat cerebral cortex. Journal of Neuroscience. 1993;13:4538–4548. doi: 10.1523/JNEUROSCI.13-10-04538.1993.

- Rebscher SJ, Snyder RL, Leake PA. The effect of electrode configuration and duration of deafness on threshold and selectivity of responses to intracochlear electrical stimulation. Journal of the Acoustical Society of America. 2001;109(5):2035–2048. doi: 10.1121/1.1365115.

- Sarant JZ, Blamey PJ, Dowell RC, Clark GM, Gibson WPR. Variation in speech perception scores among children with cochlear implants. Ear and Hearing. 2001;22:18–28. doi: 10.1097/00003446-200102000-00003.

- Schafer C, Plunkett K. Rapid word learning by fifteen-month-olds under tightly controlled conditions. Child Development. 1998;69:309–320.

- Spelke ES. Perceiving bimodally specified events in infancy. Developmental Psychology. 1979;15:626–636.

- Sullivan JW, Horowitz FD. The effects of intonation on infant attention: The role of rising intonation contour. Journal of Child Language. 1983;10:521–534. doi: 10.1017/s0305000900005341.

- Swingley D, Pinto JP, Fernald A. Continuous processing in word recognition at 24 months. Cognition. 1999;71(2):73–108. doi: 10.1016/s0010-0277(99)00021-9.

- Teoh SW, Pisoni DB, Miyamoto RT. Cochlear implantation in adults with prelingual deafness: Clinical results and underlying constraints that affect audiological outcomes. The Laryngoscope. 2003. doi: 10.1097/00005537-200410000-00007. (In press)

- Tobey E, Pancamo S, Staller S, Brimacombe J, Beiter A. Consonant production in children receiving a multichannel cochlear implant. Ear and Hearing. 1991;12:23–31. doi: 10.1097/00003446-199102000-00003.

- Walker-Andrews AS, Lennon EM. Auditory-visual perception of changing distance by human infants. Child Development. 1985;56:544–548.

- Waltzman SB, Cohen NL. Cochlear implantation in children younger than 2 years old. The American Journal of Otology. 1998;19:158–162.

- Werker JF, Cohen LB, Lloyd VL, Casasola M, Stager CL. Acquisition of word-object associations by 14-month-old infants. Developmental Psychology. 1998. doi: 10.1037//0012-1649.34.6.1289.

- Werker JF, Shi R, Desjardins R, Pegg JE, Polka L, Patterson M. Three methods for testing infant speech perception. In: Slater A, editor. Perceptual development: Visual, auditory, and speech perception in infancy. East Sussex, UK: Psychology Press; 1998. pp. 389–420.

- White KR. The current status of EHDI programs in the United States. Mental Retardation and Developmental Disabilities Research Reviews. 2003;9:79–88. doi: 10.1002/mrdd.10063.

- Wolfinger RD, Chang M. Comparing the SAS GLM and MIXED Procedures for Repeated Measures; Paper presented at the Twentieth Annual SAS Users Group International Conference; Orlando, FL. 1995.

- Woodward AL, Markman EM. Early word learning. In: Damon W, Kuhn D, Siegler R, editors. Handbook of Child Psychology. 5th ed. Vol. 2. New York: Wiley; 1997. pp. 371–420.

- Young NM. Infant cochlear implantation and anesthetic risk. Annals of Otology, Rhinology and Laryngology Supplement. 2002;189:49–51. doi: 10.1177/00034894021110s510.

- Zukow-Goldring P. A social ecological realist approach to the emergence of the lexicon: Educating attention to amodal invariants in gesture and speech. In: Dent-Read C, Zukow-Goldring P, editors. Evolving explanations of development: Ecological approaches to organism-environment systems. Washington, DC: American Psychological Association; 1997. pp. 199–252.