Abstract

In many Western science systems, funding structures increasingly stimulate academic research to contribute to practical applications, but at the same time the rise of bibliometric performance assessments has strengthened the pressure on academics to conduct excellent basic research that can be published in the scholarly literature. We analyze the interplay between these two developments in a set of three case studies of fields of chemistry in the Netherlands. First, we describe how the conditions under which academic chemists work have changed since 1975. Second, we investigate whether practical applications have become a source of credibility for individual researchers. This turns out to be the case in catalysis, where connecting with industrial applications helps in many steps of the credibility cycle. Practical applications yield much less credibility in environmental chemistry, where application-oriented research agendas help to acquire funding, but not to publish prestigious papers or to earn peer recognition. In biochemistry, practical applications hardly help in gaining credibility, as this field is still strongly oriented towards fundamental questions. The differences between the fields can be explained by the presence or absence of powerful upstream end-users who can afford to invest in academic research with promising long-term benefits.

Keywords: Credibility cycle, Mode 2 knowledge production, Funding, Evaluations, Chemistry

Introduction

This paper explores how changes in the governance of academic research shape research practices in different scientific fields. Under labels such as ‘entrepreneurial science’ (Etzkowitz 1998), ‘Post-Academic Science’ (Ziman 2000), and ‘Mode 2’ knowledge production (Gibbons et al. 1994; Nowotny et al. 2003), influential scholars have reported an increasing intertwinement of university research with practical applications. However, these diagnoses have been criticized for their theoretical shortcomings and for their lack of empirical support (Pestre 2003; Hessels and van Lente 2008). Our starting point in this paper is that two major developments can be discerned in the governance of academic research, which may partly contradict each other. First, the pressure on academic research to contribute to practical applications of the knowledge it produces has grown. Public support for university research has shifted from block-grant funding to earmarked funding for specific projects and programs (Lepori et al. 2007; Morris 2000). University researchers are increasingly encouraged to engage in for-profit activities such as patenting and the resulting royalty and licensing agreements, spin-off companies and university-industry partnerships (Slaughter and Leslie 1997; Geuna and Nesta 2006). At the same time, however, the rise of quantitative performance evaluations has increased the demand for scientific accountability, which may intensify the pressure to publish in academic journals (Wouters 1997; Hicks 2009). Although it seems crucial for the future of academic science, the interplay between these two developments has received little attention. In particular, our understanding of how changes in the governance of science affect different scientific fields differently remains limited.
Labels like entrepreneurial science or ‘Mode 2’ tend to obscure the diversity of science, treating it as a monolithic system moving from one state to the other (Hessels and van Lente 2008; Heimeriks et al. 2008). However, changes in the governance of science may have different implications for different research fields, due to their cognitive and organizational differences (Whitley 2000; Albert 2003; Bonaccorsi 2008).

The current paper aims to fill these two gaps by analyzing the effect of institutional changes on research practices in different scientific fields. Its central question is: have the changes in the science-society relationship made practical applications into a source of credibility for academic scientists in three fields of chemistry? Our analysis consists of two steps. First, we give a detailed analysis of the changing relationship between Dutch academic chemistry and society using the framework of a science-society contract. Second, we systematically investigate the role of practical applications in the research practices of three fields of chemistry by analyzing all steps of the credibility cycle.

Theoretical Framework

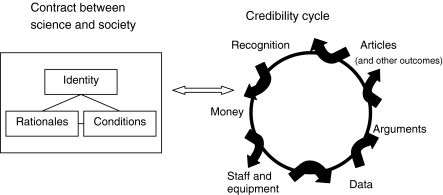

In order to address our research question, a heuristic framework is needed to conceptualize both the (macro-level) relationship between science and society and actual research practices on the micro-level. The theoretical framework of this paper contains two main elements: the science-society contract and the credibility cycle. In two earlier case studies, this combination has proven a valuable tool for investigating interactions between macro-level developments and actual research practices (Hessels et al. 2009, 2010). Our theoretical starting point is that scientists and their organizations are not isolated entities; they interact with their environments to achieve their objectives. They depend on their environments for critical resources like funding and legitimacy (Leišytė et al. 2008; Pfeffer and Salancik 1978). From this perspective, the macro-level relationship of academic research with society can be conceived as a ‘contract’ (Guston and Keniston 1994; Elzinga 1997; Martin 2003). Such a contract is not a physical entity, but a representation of moral positions that encompasses all implicit and explicit agreements between academic science and governmental departments, NGOs, firms and other societal parties. The contract between science and society regulates the delegation of a particular task, doing research, and is as a rule tacit and implicit. Yet it is meaningful and traceable, as it constitutes a resource for arguments, rights and obligations. The idea of a contract can be used as a metaphor for the (changing) societal position of science, but it can also serve as a heuristic framework to focus on its specific content, that is, the terms and conditions under which the task of science is delegated. We developed the concept of the science-society contract in an earlier study (Hessels et al. 2009). As shown in Fig. 1, it has three elements, specifying what science should do (identity), why it should do this (rationale), and the appropriate conditions for science to function well (conditions). According to this contractual perspective, the very identity of science is connected to the provision of a valuable public good. Science’s task is to produce knowledge and to deliver it in forms like papers, patents, artifacts or educated people. The precise type of expected knowledge and the degree to which science should be involved with practical applications vary over time and across disciplines. The contract, that is, the set of implicit and explicit agreements, also describes why science deserves support. Academic research is often regarded as a necessary condition for sustaining a system of higher education, for commercial product development and innovation, and for informing complex decisions. The third element of the contract, which will receive most attention in this paper, contains agreements about the conditions under which scientists work, including expectations regarding the social structure of the research community, the allocation of research funds, and incentives for generating practical applications. The changing science-society contract of Dutch academic chemistry research will be analyzed in section “The Changing Societal Contract of Dutch Academic Chemistry, 1975–2010”.

Fig. 1.

Our framework for studying the changing relevance of academic chemistry (based on Hessels et al. 2009)

The position of individual researchers, on the other hand, can be expressed by the ‘credibility cycle’ (Latour and Woolgar 1986). This model (see Fig. 1) explains how struggles for reputation steer the behaviour of individual scientists. Its starting assumption, underpinned by many sociological studies of science, is that a major motivation for a scientist’s actions is the quest for credibility. Similar to Whitley’s notion of reputation (Whitley 2000), credibility refers to the ability ‘actually to do science’ (Latour and Woolgar 1986, p. 198). Note that credibility is broader than recognition or rewards for scientific achievements. It is a resource coming in various forms (see Table 1), which can be earned as a return on earlier investments. Scientists invest time and money expecting to acquire data that can support arguments. These are written down in articles, which may yield recognition from colleagues. Based on this, scientists hope to be able to receive new funding, from which they buy new equipment (or hire staff) which will help to gather data again, etc. Conceived in this way, the research process can be depicted as a repetitive cycle in which conversions take place between money, staff, data, arguments, articles, recognition, and so on. Practical applications, the topic of this paper, can have various functions in the credibility cycle; they may act as a source of credibility (in the form of recognition or money), but they could also influence particular credibility conversions as a catalyst or an inhibitor. In section “Academic Research and Practical Applications: A Credibility Cycle Analysis”, we will analyze the role of practical applications in the credibility cycle of three fields of Dutch chemistry. Given the strong influence of incentive and reward structures, an analysis of the credibility cycle can reveal the forces under which academic researchers have to operate. 
It enables us to identify how the new science-society contract shapes the behaviour of individual researchers.

Table 1.

Definitions of the various forms of credibility

| Form of credibility | Definition |
|---|---|
| Money | Funding that can be spent on research activities |
| Staff and equipment | Human and technical capital for research activities |
| Data | Raw findings |
| Arguments | Contributions to scientific debates based on interpreted findings |
| Articles (and other outcomes) | Publications and other concrete products of research activities |
| Recognition | A scientist’s (informal) reputation based on his/her achievements and scores in formal quality assessments such as research evaluations and performance interviews |

Methods: A Case Study of Chemistry in the Netherlands

The general research strategy of this study is a case study approach (Yin 2003). This strategy seems appropriate because our aim is to contribute to the understanding of changing science systems. To explore the effects of institutional changes on academic research practices, we use a set of case studies of three chemical fields in the Netherlands¹. Investigating the potential tension between pressures for academic publications and pressures for practical impact requires a discipline with both a strong publication tradition and possibilities to turn research outcomes into practical applications. Chemistry fulfills both conditions well, especially in the Netherlands. In Dutch chemistry there is a long tradition of relationships between university researchers and companies (Rip 1997; Homburg 2003). The relationship between universities and industry in this field is exceptionally cooperative, extending even to collective lobbying for public money (A07, R06). Moreover, chemistry has a strong academic tradition, and Dutch chemists have an excellent reputation for their scientific publications (Moed and Hesselink 1996).

Still, connections with industry are not equally intense across all chemical fields. Because we aim to explore the diversity of science, our case studies deal with three different fields. Our theoretical sampling (Eisenhardt and Graebner 2007) of fields is based on their different relationships to industry and other societal stakeholders. Biochemistry is a relatively fundamental field and has traditionally had relatively few interactions with societal organizations. Its main applications are in the medical domain and are mediated by medical researchers. Environmental chemistry contributes directly and indirectly to environmental policy. It also delivers knowledge and tools related to the risk assessment of industrial chemicals to industry and non-governmental organizations. Catalysis is strongly connected to the chemical industry. Its knowledge can help firms to enhance the efficiency of their production processes and to decrease their environmental impact.

Our analysis of the changing science-society contract of Dutch chemistry (section “The Changing Societal Contract of Dutch Academic Chemistry, 1975–2010”) is based on the documents listed in “Appendix A”, in combination with interviews with four scholarly experts² on Dutch chemistry, R&D officers of five chemical companies, and representatives of the research council NWO, the association of the Dutch chemical industry (VNCI), the Dutch chemical association (KNCV) and the department of science policy of the Ministry of Education, Culture and Science. The documents were collected based on the authors’ prior knowledge, tips from interviewees, and the ‘snowball method’. The selection includes governmental policy documents, reports and strategic plans of research councils, foresight studies, evaluations and other important publications about Dutch academic chemistry. The findings from these documents were triangulated in interviews with the experts and stakeholders mentioned above. In this paper we refer to these documents using the abbreviations presented in the appendix. The contract analysis covers the period from 1975 to 2010. The starting year of 1975 marks the beginning of governmental science policy in the Netherlands (M74), which is generally regarded as a landmark event in the growing societal demand for application-oriented research.

For the credibility cycle analysis we carried out semi-structured in-depth interviews with 20 academic researchers in catalysis, environmental chemistry and biochemistry. We chose interviews rather than a survey in order to gain in-depth insight into the behaviour and motivations of individual scientists. The respondents’ ranks ranged from PhD-student to full professor, and they were employed at six different universities in the Netherlands (see Table 2). We pursued a purposive sampling strategy in order to achieve a reasonable degree of diversity in age, academic rank and affiliation. All interviews were carried out in 2007 and 2008. This implies that, in contrast with the longitudinal nature of our contract analysis, the interviews provide the richest view of the situation just before the end of the period studied, and give more anecdotal and fragmented insight into the past. Our flexible interview protocol allowed us to explore a wide variety of possible relationships between practical applications and credibility. The scientists were asked about their current and past research activities, their personal motivation, and their experiences and strategies concerning funding acquisition, publishing, scientific reputation, and performance evaluations. These questions provided access both to personal behaviour and to cultural characteristics of the scientific field, that is, the norms, values and criteria guiding the credibility cycle. CVs and publication lists of all scientists were collected in advance, to prepare the interviews and to provide additional insights and empirical support. Using NVivo (qualitative analysis software), we coded the interview transcripts according to the different steps of the credibility cycle. In the interview analysis, special attention was given to differences among the three scientific fields.
In section “Academic Research and Practical Applications: A Credibility Cycle Analysis” some interview quotes will be presented to illustrate and clarify our findings.

Table 2.

Distribution of 20 respondents over fields, universities and academic ranks

| Category | Distribution of respondents (n = 20) |
|---|---|
| Field | Catalysis (9); Biochemistry (6); Environmental chemistry (5) |
| University | University of Amsterdam (8); Utrecht University (6); VU University Amsterdam (3); Radboud University Nijmegen (1); Eindhoven University of Technology (1); Leiden University (1) |
| Academic rank | Full professor (6); Retired full professor (5); Associate professor (5); PhD-student (3); Post-doc researcher (1) |

The Changing Societal Contract of Dutch Academic Chemistry, 1975–2010

In the following we empirically address our question about practical applications as a source of credibility in two parts: a historical analysis of the changing science-society contract and a sociological analysis of the position of practical applications in today’s credibility cycle. This section reconstructs the way the science-society contract has changed for chemistry. The main focus is on the conditions, as this part of the contract is most directly connected to the resources of research practices and the institutional environment of academic research.

Over the period studied, the identity of academic chemistry has shifted from basic research to the production of strategic knowledge. Although some professors already had strong ties with chemical companies in the 1950s and 1960s (Homburg and Palm 2004), in those years direct contributions to practical applications were not regarded as the main task of academic chemists. Since the introduction of science policy in the 1970s and innovation policy in the 1980s, however, the government has increasingly expected academic chemistry to address societal needs and to produce applicable knowledge as well³. During the 1990s the idea of ‘strategic research’ gained ground, which concerns the development of fundamental insights in domains of high relevance for economy or society (Irvine and Martin 1984). The strategic identity of academic chemistry has endured into the new millennium, as it is compatible with the most recent innovation concepts, in which the university is seen as a supplier of basic knowledge that can be valorized by other actors in the innovation system. However, since 2005 active participation in commercialization activities (often called ‘valorization’ in Dutch policy discourse) has also become an increasingly central aspect of its identity (R06, M07, N10).

In the rationales for funding academic chemistry, the emphasis has shifted from education and cultural value to the need for innovation and sustainability. In the first post-war decades, the two dominant rationales were the necessity of chemical research for the training of new R&D workers and (less importantly) the cultural value of basic research (Homburg 2003; Hutter 2004). In the 1970s, the rise of environmental awareness and the start of science policy together shifted the rationales from industry’s need for educated workers to society’s need for chemical expertise in a wider sense (Rip and Boeker 1975). The budget cuts on basic research in industry in the 1980s increased the importance of the rationale that academic chemistry can contribute to technological innovations⁴ (de Wit et al. 2007; Van Helvoort 2005). In the 1990s, the notion of sustainable development became increasingly significant in rationales for funding (chemical) research⁵. Around the turn of the century, the funding of university research was increasingly framed as support for the national innovation system. In this perspective, support for university researchers is accompanied by the expectation that they actively interact with other actors in the innovation system and contribute to the process of “valorization”, for instance by filing patents or starting spin-off companies.

Regarding the institutional conditions specified in the contract, chemical scientists had a high degree of autonomy during the 1950s and 1960s. The most important types of funding (the so-called first and second money streams) were distributed without any conditions attached, based on considerations of academic quality and reputation. The first money stream was direct funding from the government to universities. The second money stream was supplied by SON, the Dutch research council for chemical research founded in 1956, whose mission was to stimulate and coordinate basic chemical research⁶. SON’s resources were distributed by ‘working communities’, thematic research networks that allocated funds based on considerations of innovativeness but had little steering power (Hutter 2004). As a third stream of funding, a small proportion of professors engaged in contract research for industry (Homburg 2003).

In the 1970s, scientists were increasingly held accountable for their work. Although they were not yet directly affected by policy measures, chemical researchers needed to put more effort into explaining to society what they were doing. Governmental science policy explicitly aimed at bringing research agendas into closer agreement with societal demands (M74). Initially, this was attempted simply by facilitating interactions between scientists and societal actors.

From 1980 onwards, however, the funding of chemical science changed considerably, shifting the emphasis towards applicability (van der Meulen and Rip 1998). This change was due to three intertwined developments. First, in 1983, science policy minister Deetman implemented the system of ‘conditional funding’, in which part of the first money stream became subject to selection based on criteria of scientific quality and societal significance (M84). This served as an occasion both to cut budgets and to increase governmental steering of research directions⁷. Second, informed by foresight studies on Dutch chemistry (K82, VS80), an increasing share of the second money stream was dedicated to application-oriented research. In 1980, SON started a program for applied chemical research together with the new technology foundation STW; the share of this program in SON’s total budget grew steadily to approximately 20% in 1995 (S95). In 1988, SON’s parent organization ZWO was drastically reorganized into the new NWO, which resulted in increased funding for application-oriented research (Kersten 1996; van der Meulen and Rip 1998). Third, and most significantly, the third money stream, which was often application-oriented, grew fivefold (AC91). During the 1980s chemical companies became more willing to sponsor academic research, because cuts in their in-house basic research made them more dependent on basic research conducted elsewhere (de Wit et al. 2007; Van Helvoort 2005; K84). The Ministry of Economic Affairs also entered the scene and started the IOP programs⁸ to fund university research, in order to enhance the innovation capacity of the Netherlands.

These developments continued in the 1990s. The second money stream continued to broaden its mission beyond basic research. The minister of science policy adopted the recommendation from the 1995 foresight study (O95) to strive for increasing the share of industrially steered chemical research from 50% to 75% (M97).

Another significant change in the 1990s was the institutionalization of performance evaluations. Governmental policy-makers, research councils and university managers, who had all gained steering power in funding allocation, developed a need for transparency regarding research outcomes. In 1996, the first nationwide quality assessment of chemical science was conducted (V96), and the second followed in 2002 (V02). In the absence of a binding protocol, the members of the evaluation committees were free to choose to what extent they took societal relevance or applications into account (van der Meulen 2008; van der Meulen and Rip 2000). In practice, they generally ignored this criterion and focused strongly on traditional indicators of scientific quality, like the number of publications in high-quality scientific journals. Relevance was one of the four major criteria used (the others being quality, productivity and viability), but it was mainly conceived as ‘scientific relevance’. The societal or economic impact of the research was only assessed if this suited the group’s (self-defined) mission.

After 2000 chemistry faced a further diversification of funding sources. Thanks to their continued growth, the European Framework Programmes have become a substantial source of income for academic chemists. Moreover, consortium-based funding emerged: large sums of governmental money supplied to collaborative programs of university scientists, which are monitored by (industrial) user committees. Significant examples are the NanoNed program (2004) and the TTI ‘Dutch Polymer Institute’ (1997). In 2002, the ACTS program was founded, for ‘Advanced Chemical Technologies for Sustainability’⁹. This program is funded by several ministries and chemical companies, but it is managed by NWO. In 2005, its volume was about half of all second money stream funding available for chemical research in the Netherlands: the program amounted to 11.3 million euros, compared with 14.5 million for NWO-CW (CW06). In addition, there is currently a major effort to increase the thematic division of tasks among the Dutch universities active in chemical research. Thanks to a successful lobby by the Regiegroep Chemie (R06), chemistry has been designated by the Dutch Innovation Platform as one of the key areas seen as major drivers of future economic competitiveness. This has helped to acquire about 20 million euros of additional governmental funding annually for chemistry and physics, which will be spent mainly on strengthening strategic focus areas (B10).

A new evaluation protocol, in which ‘societal relevance’ has a more prominent position, was implemented in 2009 (V09). A recent set of pilot projects has demonstrated that this aspect can be measured with relatively robust and valid indicators in a wide range of fields (E10). The new protocol has already been applied in an evaluation of chemical engineering research (Q09), but it has yet to prove its usefulness in the evaluation of (general) chemistry.

Summary

Table 3 provides an overview of the changes in the science-society contract of academic chemistry discussed in this section. Four important changes can be identified in the conditions under which chemists work. First, the funding now available for university research provides room for considerable efforts in application-oriented domains, whereas in the first post-war decades there was a general consensus that universities should restrict themselves to ‘pure science’. Second, the current contract demands more intensive interactions with industry. Because the main products of university research are no longer only people but also knowledge, industrial steering of the content of research has become justified. Since the rise of innovation policy, industrial representatives have had a say in the design of most major chemical research programs. Third, universities are challenged to play an active role in the valorization of research outcomes. Merely providing knowledge is no longer considered sufficient. The national government actively stimulates academic patenting and the creation of spin-off companies. Although there is little evidence of actual success, all Dutch universities provide facilities to support researchers in translating their knowledge into commercial activities. Fourth, systematic performance evaluations have become a powerful institution governing academic research. Every research group is subject to regular assessments, which tend to focus most strongly on bibliometric quality indicators. In sum, the new contract seems ambivalent with regard to practical applications of academic chemistry. On the one hand, the available funding stimulates application-oriented research; on the other hand, the applications actually generated are not rewarded in the increasingly powerful performance evaluations.

Table 3.

Overview of the changing contract for academic chemistry (adapted from Hessels et al. 2009)

| Period | Summary of identity | Most dominant rationales | Most important conditions |
|---|---|---|---|
| 1950s and 1960s | Basic research | Education; Cultural value | Autonomy; Unconditional funding; SON communities |
| 1970s | + Useful knowledge | + Problem solving potential | + Social accountability |
| 1980s | Applicable knowledge | Technological innovation | + Conditional funding; + Application-oriented funding (STW, IOP, contract research); + Foresight; + Scarcity of resources; Reorganization NWO |
| 1990s | Strategic knowledge | + Sustainable development | Further prioritization; + Performance assessments |
| 2000+ | + Valorization | + Innovation system | + Consortia (ACTS, TTI, BSIK); + European FPs |

+ signs indicate that these elements complement rather than replace existing elements

ACTS: Advanced Chemical Technologies for Sustainability; BSIK: Besluit Subsidies Investeringen Kennisinfrastructuur Programs; IOP: Innovation Oriented Program; NWO: Netherlands Organisation for Scientific Research; SON: Chemical Research Netherlands; STW: Technology Foundation; TTI: Technological Top Institutes

Academic Research and Practical Applications: A Credibility Cycle Analysis

How do the changes and ambivalences in the contract play out in the daily practice of academic researchers in the various fields of chemistry? Do contributions to practical applications add to their credibility? In this section we closely analyze the six steps of the credibility cycle, with special attention to the differences across the three fields of chemistry we investigated.

From Recognition to Money

Our analysis of the changing societal contract of Dutch academic chemistry in the previous section has shown that the palette of available funding sources has changed dramatically.

The three fields we have studied use a variety of funding sources (see Table 4). Do (promised) practical applications help researchers to acquire funding?

Table 4.

Overview of the most significant external funding sources in the three sub-disciplines

| | Biochemistry | Catalysis | Environmental chemistry |
|---|---|---|---|
| Funding sources | NWO; EU FPs | NWO; STW; EU FPs; industry; consortia; entrepreneurship | NWO; STW; EU FPs; government; industry; NGOs |

Based on our interviews

In catalysis, promising a contribution to practical applications is a requirement for most types of funding. The procedures for acquiring funding vary. To get money from an individual firm, a very short description can suffice to convince the firm of the quality and relevance of the proposed project. Research councils and hybrid consortia, however, demand extensive proposals addressing a number of predefined issues like innovativeness, scientific relevance, societal impact, research methods, expected outcomes and deliverables. Except for NWO and the ‘National Research School Combination Catalysis’, all funding sources require that the research they fund be both relevant for industry and excellent in scientific terms. Several of our interviewees in catalysis have founded small firms based on patented inventions. Currently these are still too young to make a profit, but in the future they may serve as sources of research funding. If such a company is acquired by a larger firm, a significant sum will flow to the research department, to be spent freely on research activities. The increased industrial influence on the research agenda creates incentives to pay closer attention to possible practical applications, but it does not imply a shift from basic to applied research. As the following quote illustrates, both public and private funding sources are willing to support research on fundamental questions, granting researchers considerable autonomy to choose the specific compounds and reactions to focus on:

‘And it is of course the case, that they seldom really let us do a research project in order to get that specific catalyst after four years for sure. They are rather interested in having you work in a particular area of research, of which we see together: this is promising. And then the innovations come automatically and if they really want to apply it, they pick it up themselves’ (full professor, catalysis).

For environmental researchers, however, there are significant differences among the various possible sources of funding. The national research council NWO and the European Framework Programmes (FPs), on the one hand, strongly focus on academic quality and reputation. Governmental bodies, industry and NGOs, on the other hand, have specific questions that can be answered by applied research. These funders tend to look for the researcher who can answer them at the best price-quality ratio, creating competition between academic researchers and (semi-)commercial research institutes. The projects for industry and other application-oriented funding sources are often quite short. They can threaten the continuity of research activities, they involve little basic research, and they are not suitable as the basis for a PhD degree. However, environmental chemists need to take them on to remain financially healthy, and they pay better than research councils10.

In biochemistry, scientists only use funding from sources oriented to basic research. The various grants and programs of NWO are most significant; in addition, the European FPs are gaining importance. Acquiring money from industry seems hardly possible because ‘the time horizon of companies, also of big companies, has become very limited’11. The academic quality of research proposals and of research groups is still the most important criterion for getting money from NWO and the FPs. The rise of thematic priority programs does not seem to have serious consequences. In many programs, referring to potential practical applications enhances the chances of success, but this can usually be dealt with by rather loose and unrestrictive statements. This is nicely summarized by a respondent who explained that he simply looks around to see which thematic programs are available and then thinks up a link with his own competencies and existing research plans:

‘You try to make your expertise fit, we have become pretty good at that. You write it down in such a way that it fits the program’ (full professor, biochemistry).12

Overall, the changes in the funding structures do not seem to have strong implications for the degree of practical orientation of the research directions biochemists choose.

Another possible way in which practical applications can be used to acquire money is consultancy. In catalysis and in environmental chemistry it is common to conduct small consultancy projects for public-sector or private organizations alongside the (bigger) research projects. Consultancy is not common in biochemistry.

It must also be noted that the scores on official performance evaluations increasingly contribute to the funding available to a research group. University managers take them into account when faced with the need for budget cuts13. The scores are also used as a quality indicator in the review of NWO proposals. Groups with a good score advertise it when seeking contract research as well.

To conclude, in all fields an increasing share of funding requires researchers to articulate possible practical applications of the proposed project in industry or society. In catalysis and environmental chemistry, however, the promises about practical applications tend to be much more explicit and specific than in biochemistry.

From Money to Equipment and Staff

Once a scientist has acquired research funding, he or she can use it to buy equipment or to hire one or more people to carry out the work. What criteria are used in the selection of candidates?14 Do (realized or promised) practical applications play a role? Asked what characteristics they look at when selecting candidates for academic positions, senior researchers mention research quality, the ability to attract funding, and management and collaboration skills. Publication lists stand out as the most important quality indicator. This is confirmed by all respondents, both junior and senior, for example:

‘When we hire new staff, their publication list is the most important criterion, possibly in combination with a Hirsch-index or something similar. The same goes for contract extension and for promotion to associate or full professor’ (full professor, environmental chemistry).

‘You have got to publish a lot. Imagine I have a post-doc position and there are 60 candidates. First I look at the people who publish 10 articles a year. Why? Because they will also publish 10 articles for me’ (associate professor, catalysis).

PhD-students do not believe that practical applications of their research will enhance their prospects for an academic career. However, in catalysis some professors report that they do look at the number of patents a candidate has developed, or at the amount of interest industry shows in the candidate’s research (in terms of industrial funding). But practical applications are never seen as a necessary requirement for qualifying for a particular academic position.

Another major aspect taken into account by many seniors is the candidate’s proven ability to acquire funding.

‘Today it is important that you have acquired a European project once, or at least paid a significant contribution to it. That you show that you can do that as well. But I would say that publications are really number 1 and this is a good number 2’ (full professor, environmental chemistry).

Since practical applications help to acquire funding in some fields, they can make an indirect contribution to career prospects. Indeed, in catalysis researchers at various levels expect that contacts with industry will be valuable in academic job applications.

To conclude, in the selection of candidates for academic positions, academic criteria tend to rule. In catalysis, career perspectives may be slightly enhanced by industrially relevant work or commercial activities, but one’s patents are still far less helpful than one’s publications and citations.

From Equipment and Staff to Data

What is the role of practical applications in the production of data? In general, different kinds of research projects offer varying degrees of operational autonomy. A personal grant from NWO, for example, is described as ‘reasonably free money’15, and is therefore highly appreciated. The same goes for university funding. Money from industry or other third parties typically involves more communication with the funding source, but the degree to which this reduces the researchers’ flexibility to deviate from the original plans varies across fields.

In catalysis, firms providing (co-)funding obviously aspire to benefit from it, but they do not steer the experimental work in detail. In principle, they do not predefine all details of the research to be conducted, but only the type of system, class of compounds, or type of reactions to be studied. Companies hope to benefit from obtaining more background knowledge in the field they are working in, which can serve as a source of inspiration for more applied innovation projects conducted in-house. Industrially (co-)funded projects usually have a supervising committee which receives progress updates three to four times a year and which can suggest particular directions, but these suggestions are only followed if they do not hinder the academic development of the PhD-student involved. According to both our industrial and academic respondents, few disagreements on this point occur. In cases in which companies steer a project in specific directions, this often has few implications for the academic question that is addressed, since the same catalytic mechanism can be studied using different substances.

The situation is different in environmental chemistry. Here the projects for industry, the government or NGOs tend to be short and serve specific goals. In this domain specific actors often have a strong stake in particular outcomes, which can complicate the collection of data16. Researchers have an interest in having the assent of all organizations involved (government, industry and interest groups), because this increases the impact of their outcomes. However, this may challenge their independence, as some parties may try to influence the outcomes to their own benefit.

Most biochemists do not have any contacts with possible users of their knowledge. Only one professor we have interviewed regularly meets with medical researchers in the context of a research project with medical relevance, but others do not report any interaction outside their own field influencing their work.

To conclude, practical applications can have various functions in the actual research process. Biochemists are not concerned with practical applications during data collection, but researchers in catalysis and environmental chemistry tend to interact frequently with industry or other users that (co-)fund the research. In environmental chemistry such interactions sometimes disturb the data collection; in catalysis this happens less, and they are often perceived as a source of inspiration and motivation.

From Data to Arguments

Although the conversion of data into arguments is relatively straightforward in chemistry, it is still an active step with significant degrees of freedom. To what extent do practical applications influence this process? For academic chemists the main consideration in this step seems to be contributing to scientific debates. Researchers use their data to construct claims that fit in a particular scientific discourse in which they are participating. The arguments they develop are their tool for positioning themselves within a particular research community (Latour and Woolgar 1986).

In catalysis we found no evidence of practical applications influencing the arguments researchers produce, apart from an emphasis on either environmental or economic benefits. In the other two fields, however, the funding source of the research does influence the production of arguments. In biochemistry, emerging practical applications can steer the arguments in a particular direction. The results of biochemical experiments paid for by a patient organization need not be of a different kind than those from experiments funded by NWO, but the former are more likely to be converted into medical arguments while the latter may be used only to contribute to more fundamental biochemical debates17. In the case of contract research, the sponsor (or ‘client’) may also influence the (types of) arguments produced. The environmental chemists in our sample report that they sometimes have difficulty defending their academic ‘objectivity’ against unwanted interference from the companies or environmental agencies that have a stake in the research. Industry often hopes that the data will be turned into good news about the safety of chemical substances.

To conclude, in most cases researchers are relatively autonomous in developing their data into arguments, but in applied research projects the funding party sometimes succeeds in influencing the conversion of data into arguments, thereby compromising the objectivity of academic scientists.

From Arguments to Articles (and Other Outcomes)

Publishing in scientific journals is of vital importance in all three fields of chemistry. Many scientists try to get their work published in the best journals possible, often defined as those with the highest impact factor. The following two quotes illustrate the utmost importance individual researchers attribute to scientific publications:

‘Yes, it is important, for two reasons. First, of course, one wants to make one’s findings publicly known. This is a way to receive recognition of your peers. Second, it is also dire necessity, in order to secure the continuity of funding. Because if you do not have publications… it is the way for the outside world to assess you’ (associate professor, biochemistry).

‘I think it is the only way to show what you have done. And I think that if something is not publishable in a scientific journal, it is not worth much’ (PhD-student, biochemistry).

How do practical applications influence the publication endeavour? In principle, application-oriented research can also be published. In both environmental chemistry and catalysis, scientists also publish the results of research commissioned by industry or other users in prestigious journals. However, this is not always as easy as with research funded by the first or second money stream. Companies sponsoring catalytic research are protective of commercially relevant outcomes. Research contracts usually specify a period during which a company has exclusive access to the results, to explore the feasibility of the developed technology and to consider applying for a patent, before the academics are allowed to make them public. This hardly ever leads to complete bans on publication, but it does complicate early-stage communication such as poster presentations. There are also exceptional cases in which a research project is completely secret and no publications are allowed at all.

In environmental chemistry, the small size of many assigned projects complicates the publication of academic papers.

‘Yes, then you almost always face the situation that it is just too little to make a good scientific publication about it’ (associate professor, environmental chemistry).

Moreover, writing a paper in addition to the project report requires extra work that cannot be accommodated within the projects themselves.

In their evaluation of manuscripts, journal editors and reviewers hardly assess the (possible) practical applications of the research. In all three fields, the main criteria in the selection of papers are the novelty, accuracy and the scientific relevance of the research. In environmental chemistry, it may help if one manages to link one’s research to an important societal issue like global warming. Often, however, such framing is downplayed as well, as the following quote nicely illustrates:

‘Climate change is of course very hot. So in the piece we are currently working on, we try to steer it a bit in that direction. So that the result is useful. And sustainability. That you try to associate with the fashion terms. […] On the work itself it does not really have an influence. But it does on your introduction, how you stage your story, sketch the framework, there you include a couple of words’ (PhD-student, environmental chemistry).

In the other fields, it is a matter of personal style whether one refers to the societal context or the possible applications of the research. In both biochemistry and catalysis some researchers make an effort to do this, but it does not particularly help them to get their papers published.

Although scientific publications are the most important type of research outcome, chemists deliver other products, too. In environmental chemistry it is common to write scientific reports for the organizations commissioning the research. Catalytic chemists are frequently involved in patent applications. Some senior researchers have contributed to tens of patents. PhD research commissioned by industry often leads to patents, for which the companies sometimes even pay a bonus. In other cases academics write patents themselves and start a company to make a profit from them, which can partly be used as research funding. Although less common, this phenomenon is starting to occur in biochemistry, too18.

In conclusion, journal publications remain the most important form of output in academic chemistry, but under the new science-society contract researchers also produce patents and write scientific reports. In environmental chemistry, application-oriented research seems more difficult to publish due to the small project size. In catalysis, practical applications only cause a delay and do not prevent the eventual publication of results. In any case, we found no evidence that practical applications help to get one’s work published; the selection of journal manuscripts is based solely on academic criteria.

From Articles (and Other Outcomes) to Recognition

Do practical applications contribute to an academic reputation? One can distinguish a formal and an informal component of recognition. The formal component is the result of official research group evaluations and individual performance interviews. Informal recognition is based on colleagues’ assessment of one’s qualities, as expressed in conference contributions, (informal) discussions, and publications. The two types of recognition influence each other. A good score on official evaluations will be known to one’s colleagues and taken into account in their informal recognition of one’s work. In turn, informal recognition also contributes to the score on formal performance evaluations.

Within one’s own small subfield one can earn recognition for the content of one’s papers and lectures. These are valued for their innovative content and for being published in prestigious journals. Beyond one’s own specialty, one’s reputation is based more on quantitative indicators like publication and citation scores and formal performance evaluations. Journal impact factors, for instance, are taken very seriously, and compared up to three digits.

Since research grants have become scarce, the amount of funding attracted has also become a factor contributing to one’s reputation. In environmental chemistry especially, the more academic grants, like those from the research council NWO, are appreciated, as they are generally regarded as more prestigious than funds from the ‘third money stream’.

Practical applications of academic chemistry contribute little to recognition. Some catalytic researchers have respect for contributions to industrial innovations, especially in engineering subfields. However, these contributions often remain invisible to academic colleagues. The number of patents scientists hold does not contribute much to their academic reputation; they can even have a negative effect. One professor argued that his long list of patents tends to distract people from his academic success and makes them forget that he also has an impressive list of scientific publications. In biochemistry societal contributions do not play any role in getting academic recognition. Promises about applications (most often in the medical domain) can contribute only indirectly to one’s reputation if they help to start big research programs or consortia. Practical applications of environmental research also contribute little to one’s academic reputation19.

Besides the issues discussed so far, other aspects that may contribute to informal recognition are management skills, collaborations with well-respected scientists, educational work, and presentation skills. However, our interviews indicate that these are all of far less importance than journal publications.

With regard to formal recognition, all scientists have a performance interview with their direct superior once a year. Practical applications receive very little attention20; productivity in terms of publications is the most important issue on the agenda. In environmental chemistry, non-academic output, the so-called ‘grey’ publications, is also taken into account. In catalysis and biochemistry these are not regarded as valuable output. For senior researchers, contributions to education, funding acquisition and other management tasks are also discussed.

The scores on performance assessments count both for internal university policy and for the acquisition of additional funding in the second and third money streams. In the official protocol used in most evaluations so far, one of the four main criteria is ‘relevance’ (V03). In practice, however, the evaluation committee is free to choose its own interpretation of this criterion. In biochemistry, the committees typically define it as scientific relevance, because this is considered most appropriate in a field of basic research. In catalysis and environmental chemistry, however, the reports do not clearly define their concept of relevance and do not indicate the extent to which it also concerns societal relevance (V96, U01, V02). This situation may change soon, since the 2009 evaluation protocol contains more compelling criteria for societal relevance (V09) and a recent set of pilot studies has indicated the possibility of measuring societal impact in various scientific fields (E10).

To conclude, in none of the three fields do practical applications seem to make a significant contribution to recognition. Recognition is mainly based on academic publications. Besides informal recognition, formal processes like performance interviews and performance assessments also contribute to one’s reputation, but all focus on the same quality indicators: publications and citations.

Conclusion: The Importance of Stakeholders

This paper has explored the differential effects of changing scientific governance across scientific fields. Our analysis has shown that the relationship between academic chemistry and society has undergone some major changes since 1975. Under the current societal contract, academic chemists are expected to deliver strategic knowledge and to participate actively in the valorization of research outcomes. Due to the changing demands of both public and private funding sources, academic researchers are increasingly challenged to contribute to practical applications. At the same time, however, they are subjected to systematic evaluations which hardly reward applicable knowledge, spin-offs or patents, but mainly publications in academic journals. In the performance assessments of 1996 and 2002 the societal dimensions of academic chemistry received little attention. Although installed to increase the social accountability of scientists, evaluations in practice mainly enhance the need for peer recognition. Bibliometric quality indicators strengthen the pressure to publish in scientific journals and enhance the ‘publish or perish’ norm (Weingart 2005; Wouters 1997). As a result, there is a potential contradiction between research agendas that are fruitful with regard to funding acquisition and research agendas promising peer recognition and high evaluation scores.

Have practical applications become a source of credibility in Dutch academic chemistry? We found considerable differences among the three fields under study. In catalysis, practical applications constitute a rich source of credibility. Promising a contribution to industrial processes is a requirement for acquiring most research funding. The intensive interactions with firms during the research process stimulate rather than inhibit data collection and publications. Moreover, commercially viable outcomes can be turned into new research funding by selling patents or exploiting them in a spin-off firm. In biochemistry, practical applications do not help a lot in gaining credibility. The available funding sources provide incentives to articulate possible practical applications, but this has a modest effect, as subtle cosmetic adaptations of existing research plans usually suffice. Due to the rise of bibliometric performance evaluations, biochemists experience a much stronger pressure to publish than to contribute to practical applications. For environmental chemists, practical applications have a positive effect on some parts of the credibility cycle, but a negative effect on others. The funding structure provides strong incentives to conduct application-oriented research and to contribute even more directly to practical solutions than before. However, relatively short, application-oriented projects are not the most fruitful in terms of scientific publications, evaluation scores and academic recognition.

A partial explanation of the different effects of the changing contract on these three fields can be found in their socio-organizational characteristics. In environmental chemistry, the task uncertainty (Whitley 2000) is higher than in the other two fields. Catalysis and biochemistry have developed a convergent research agenda, in which there is considerable agreement about problem definitions and theoretical goals. In the young field of environmental chemistry, however, procedures are less standardized and intellectual priorities more uncertain. In the terminology of Becher and Trowler (2001), this can be characterized as a ‘rural’ field, with a low people-to-problems-ratio and with no sharply demarcated or delineated problems. Catalysis and biochemistry are more ‘urban’, in the sense that there are many researchers working on a narrow area of study, and there is strong mutual competition for priority of discoveries. The weaker competition and higher task uncertainty make it more difficult for environmental chemists to publish in prestigious journals. Moreover, they make this field more sensitive to external steering of its research agenda.

However, the difference between biochemistry and catalysis cannot easily be explained by their social organization. Both fields have high mutual dependence and low task uncertainty (Whitley 2000), they are both urban, and composed of ‘tightly knit’ communities (Becher and Trowler 2001). The crucial difference between the two seems to be the type of stakeholders they have outside the university. Catalysis, on the one hand, has a strong relationship with a homogeneous set of ‘upstream end-users’ (Lyall et al. 2004), namely chemical firms (see Table 5). Due to the high investments these firms make in their industrial facilities, they have a long-term perspective. They make enough profit to be able to make substantial investments in relatively fundamental research. Moreover, the relationship between chemical industry and academic catalysis is characterized by high cognitive and social proximity (Tijssen and Korevaar 1997), which facilitates the knowledge transfer and alignment of research activities.

Table 5.

The different categories of end-users of each field

| | Catalysis | Biochemistry | Environmental chemistry |
|---|---|---|---|
| Upstream end-users | Industry | - | Policy-makers, industry, NGOs |
| Collaborators | (Other catalytic chemists) | Medical researchers | Other (more applied) environmental scientists |
| Intermediaries | Research councils | Research councils, medical charities, patient organizations | Research councils |
| Downstream end-users | Industry | Health care practitioners, patients | Policy-makers, industry, NGOs |

Biochemistry, on the other hand, hardly has any ‘upstream end-users’ (Lyall et al. 2004). As its main applications are in the medical domain, its main stakeholders are health care practitioners and patients. But both figure more as ‘downstream end-users’, as they do not directly interact with biochemical researchers and they do not have any formal channels to influence academic research activities. Biochemical researchers only interact with other scientists and with intermediaries that represent the stakes of these downstream users. These categories of stakeholders, however, provide little funding for academic biochemistry, compared to the more fundamental research councils. The lack of upstream end-users explains why practical applications do not form an important source of credibility for academic biochemists.

Environmental chemistry has a heterogeneous set of upstream end-users. Environmental policy-makers, firms and environmental NGOs all have a stake in this research, and all provide a share of the research funding. However, the time horizon of these users is relatively short. The knowledge needs of firms and NGOs with respect to environmental chemistry usually relate to short-term problems, dealing with the regulation of specific chemicals. Policy-makers typically have a longer-term perspective, as they invest in generic models for the regulation of different classes of chemical compounds. Still, their time horizon is much shorter than that of the companies taking an interest in catalytic research. Although environmental policy itself could benefit from a perspective of up to several decades, in practice the time horizon of policy-makers is often limited by election cycles.

This study shows that practical applications have not (yet) acquired a central position in the credibility cycle in all chemical fields. This suggests that the science system is more inert than influential writings about Mode 2 and Post-Academic Science would have us believe. A powerful development, which receives relatively little coverage in the debate about changing science systems, is the rise of bibliometric quality indicators. Installed to enhance the social accountability of science as a (largely) publicly funded enterprise, such indicators have been embraced by various actors as instruments of management and control (Gläser and Laudel 2007). Systematic research evaluations can be seen as a tool for ‘principals’ (policy-makers, research councils, university managers) to enhance their power over ‘agents’ (researchers), as they help to overcome problems of delegation (Braun 2003). Possibly their dominant position in the credibility cycle will change in the near future, as indicators of societal impact are gaining ground as a regular element of systematic evaluations. However, their advance is challenged by a growing need for (quasi-)straightforward and unequivocal indicators, as illustrated by the growing attention to university rankings, excellence policies and metric-based university management tools.

To conclude, in this paper we have explored how the interplay between shifting funding sources and the rise of performance evaluations affects different scientific fields. The degree to which these developments stimulate scientists to contribute to practical applications turned out to vary strongly across fields. Using the concepts of ‘task uncertainty’ and ‘upstream end-users’, we have explored possible explanations for these differences which may be useful for understanding the dynamics of other disciplines in various national contexts. Further research is needed, however, before drawing generic conclusions on the relative importance of these characteristics in relation to other factors.

Acknowledgements

The authors thank Arie Rip, Ernst Homburg, Barend van der Meulen and Jan de Wit for sharing their expertise and for their valuable comments on earlier versions of this paper. All interviewees are gratefully acknowledged for their cooperation. We thank Ruud Smits and John Grin for their helpful comments on earlier drafts and for their contribution to the research design and Floor van der Wind for his assistance in the data collection.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Appendix

Appendix A.

Documents studied

| Abbreviation | Publisher/Author^a | Year | Title | City |
|---|---|---|---|---|
| S73-S95 | SON | 1973-1995 | Jaarverslag | Den Haag |
| M74 | Ministry of Science and Education | 1974 | Nota Wetenschapsbeleid | Den Haag |
| M76-M97 | Ministry of Science and Education | 1976-1997 | Wetenschapsbudget | Den Haag |
| AR79 | Academische Raad | 1979 | Beleidsnota Universitair Onderzoek | Den Haag |
| K80 | KNCV | 1980 | Tien Researchdoelen | |
| VS80 | Verkenningscommissie Scheikunde | 1980 | Chemie, nu en straks: een verkenning van het door de overheid gefinancierde chemisch onderzoek in Nederland | Den Haag |
| K84 | KNCV & VNCI | 1984 | Toekomstig Chemisch Onderzoek: Een uitwerking van het rapport Wagner I voor de Chemie | |
| AC91 | ACC-evaluatiecommissie | 1991 | Evaluatie van de universitaire chemie in de jaren ‘80 | Amsterdam |
| S91, S93, S94, S96 | SON | 1991, 1993, 1994, 1996 | Meerjarenplan | Den Haag |
| K94 | KNCV & VNCI | 1994 | Toekomstig chemisch onderzoek: Universitair fundament voor industriële meerwaarde | |
| O95 | OCV | 1995 | Chemie in Perspectief: een verkenning van vraag en aanbod in het chemisch onderzoek | Amsterdam |
| V96 | VSNU | 1996 | Quality Assessment of Research: Chemistry: past performances and future perspectives | |
| M00 | Ministry of Education, Culture and Science | 2000 | Wie oogsten wil, moet zaaien: Wetenschapsbudget 2000 | Den Haag |
| N00 | NVBMB | 2000 | Verder met Biochemie en Moleculaire Biologie: Beleid voor een Vitale Wetenschap | Nijmegen |
| U01 | Chemistry - Utrecht University | 2001 | Assessment of research quality | Utrecht |
| CW01 | NWO-CW | 2001 | Strategienota 2002-2005: Chemie, Duurzaam en Verweven | Den Haag |
| V02 | VSNU | 2002 | Assessment of Research Quality: Chemistry and Chemical Engineering | |
| VI03 | VNCI | 2003 | Vijfentachtig jaar VNCI in vogelvlucht | Leidschendam |
| V03 | VSNU, NWO and KNAW | 2003 | Standard Evaluation Protocol 2003-2009 for Public Research Organisations | |
| CW03-CW05 | NWO-CW | 2003-2005 | CW-Jaarverslag | Den Haag |
| M04 | Ministry of Education, Culture and Science | 2004 | Focus op Excellentie en meer waarde: Wetenschapsbudget 2004 | Den Haag |
| N04 | VNO-NCW, VSNU & NFU | 2004 | Beschermde kennis is bruikbare kennis: Innovation Charter bedrijfsleven en kennisinstellingen | |
| V05 | VSNU | 2005 | Onderzoek van Waarde: Activiteiten van Universiteiten gericht op Kennisvalorisatie | Den Haag |
| CW06 | NWO-CW and ACTS | 2006 | Chemie@NWO: Naar een Environment of Excellence; Strategische koers 2007-2010 | Den Haag |
| R06 | Regiegroep Chemie | 2006 | Businessplan: Sleutelgebied Chemie zorgt voor groei | Leidschendam |
| A07 | ACTS | 2007 | ACTS Means Business: Second Phase ACTS Plan 2007-2011 | Den Haag |
| M07 | Ministry of Education, Culture and Science | 2007 | Voortgangsrapportage Wetenschapsbeleid | Den Haag |
| R07 | Regiegroep Chemie | 2007 | De perfecte chemie tussen onderwijs en onderzoek | |
| Q09 | QANU | 2009 | QANU Research Review Chemical Engineering 3TU | Utrecht |
| V09 | VSNU, KNAW and NWO | 2009 | Standard Evaluation Protocol 2009-2015: Protocol for research assessment in the Netherlands | |
| B10 | Commissie Breimer | 2010 | Advies inzake Implementatie Sectorplan Natuur- en Scheikunde | Utrecht |
| E10 | Evaluating Research in Context | 2010 | Handreiking: evaluatie van maatschappelijke relevantie van wetenschappelijk onderzoek | |
| N10 | NWO | 2010 | Strategienota 2011-2014: Groeien met Kennis | Den Haag |

^a ACC: Academische Commissie voor de Chemie (the committee for chemistry of the KNAW)

ACTS: Advanced Chemical Technologies for Sustainability (a research program)

CW: Chemische Wetenschappen (division for chemical sciences)

KNAW: Koninklijke Nederlandse Akademie van Wetenschappen (Royal Netherlands Academy of Arts and Sciences)

KNCV: Koninklijke Nederlandse Chemische Vereniging (royal Dutch chemical association)

NVBMB: Nederlandse Vereniging voor Biochemie en Moleculaire Biologie (Dutch association for biochemistry and molecular biology)

NFU: Nederlandse Federatie van Universitair Medische Centra (federation of Dutch academic medical centres)

NWO: Nederlandse organisatie voor Wetenschappelijk Onderzoek (Dutch organization for scientific research)

OCV: Overlegcommissie Verkenningen (committee for foresight studies)

SON: Scheikundig Onderzoek Nederland (research council for chemistry in the Netherlands)

VNCI: Vereniging van de Nederlandse Chemische Industrie (association of the Dutch chemical industry)

VNO-NCW: Verbond van Nederlandse Ondernemingen en het Nederlands Christelijk Werkgeversverbond (Dutch employers’ association)

VSNU: Vereniging van Samenwerkende Nederlandse Universiteiten (association of Dutch universities)

Footnotes

These case studies have also been used as a brief empirical illustration in an earlier, more theoretical paper (Hessels et al. 2009). The current paper presents a more detailed analysis of the material, focusing in particular on the differences between the three fields.

Scholars in the field of Science, Technology and Innovation Studies with expertise on chemistry: Prof. Dr. Ernst Homburg (Maastricht University), Dr. Barend van der Meulen (University of Twente and Rathenau Institute), Prof. Dr. Arie Rip (University of Twente), Prof. Dr. Jan de Wit (Radboud University Nijmegen).

In the first policy paper (M74), the primary mission of science policy is defined as improving the alignment of research agendas with societal demands.

The first foresight study of chemistry (VS80), commissioned by the minister of science policy, concludes that academic chemistry should define its research goals more sharply and that contacts with industry should be intensified.

‘For all new scientific and industrial activities in the field of chemistry, the striving for sustainability and the minimization of environmental pressure have become important boundary conditions’ (original emphasis; O95, p. 2). NWO’s Strategy Note on Chemistry for 2002-2005 is even titled ‘Chemistry, Sustainable and Interwoven’ (CW01).

The aims of SON are ‘the enhancement of fundamental research at universities, colleges and other institutes in the area of chemistry in the broadest sense and the development of cooperation among researchers who carry out such scientific research’ (Hutter 2004).

Deetman explicitly stated that the connection between chemical research and societal needs should be improved (M83, M84). In contrast to other fields, ‘social relevance’ was used as an important criterion in the assessment of chemical research proposals (Blume and Spaapen 1988).

‘Innovatiegerichte onderzoeksprogramma’s’ (innovation-oriented research programs), for example ‘Membranes’ (1983), ‘Carbohydrates’ (1985) and ‘Catalysis’ (1989).

The program was initially defined more narrowly as ‘Advanced Catalytic Technologies for Sustainability’, but it was soon widened to a generic program for chemical technology.

Researchers aim for a diverse range of funding sources, in order not to be dependent on one client (interview 11).

Full professor, biochemistry. Indeed, our interviews with industry representatives confirm that many multinational firms have closed their more fundamental corporate R&D facilities; their research is now funded by individual business units and mainly directed at product development.

This reasoning is in line with Morris’ observation that biologists tend to adapt their proposals to fit the priorities and initiatives of funding bodies (Morris 2000, p. 433).

This is the experience of most senior researchers we have interviewed.

Purchasing equipment has not been investigated in this study.

Full professor, catalysis.

An associate professor, for example, told us that in a project about the risks of a particular class of compounds his work was complicated by the sensitivity of the required information.

This specific difference was visible in the research of a full professor in biochemistry.

The interviewee involved (associate professor in biochemistry) claims that he was stimulated by NWO policy.

Two out of five respondents perceive practical applications as not contributing to their reputation at all. The three others claim that applications can contribute to one’s reputation, but that this is complementary to one’s scientific impact. ‘Everything with climate change of course is an example. If you find important new things there, you will receive many invitations to talk about it somewhere, both at scientific and at more societally organized conferences. But, on the other hand, you are invited just as often for scientific conferences if you simply have produced sound research and you have shown that you can give a nice talk about it’ (Associate professor, environmental chemistry).

One professor reports that his boss appreciates his publications in popular media and some of his additional functions because they contribute to the visibility and the impact of his institute.

Contributor Information

Laurens K. Hessels, Phone: +31-70-3421548, Email: l.hessels@rathenau.nl

Harro van Lente, Email: h.vanlente@geo.uu.nl

References

- Albert, Mathieu. 2003. Universities and the market economy: The differential impact on knowledge production in sociology and economics. Higher Education 45(2): 147–182. doi:10.1023/A:1022428802287.

- Becher, Tony, and Paul R. Trowler. 2001. Academic Tribes and Territories, 2nd ed. Maidenhead, Berkshire: SRHE and Open University Press.

- Blume, Stuart, and Jack Spaapen. 1988. External assessment and “conditional financing” of research in Dutch universities. Minerva 26(1): 1–30. doi:10.1007/BF01096698.

- Bonaccorsi, Andrea. 2008. Search regimes and the industrial dynamics of science. Minerva 46(3): 285–315. doi:10.1007/s11024-008-9101-3.

- Braun, Dietmar. 2003. Lasting tensions in research policy-making—a delegation problem. Science and Public Policy 30(5): 309–321. doi:10.3152/147154303781780353.

- de Wit, Jan, Ben Dankbaar, and Geert Vissers. 2007. Open innovation: The new way of knowledge transfer? Journal of Business Chemistry 4(1): 11–19.

- Eisenhardt, Kathleen M., and Melissa E. Graebner. 2007. Theory building from cases: Opportunities and challenges. Academy of Management Journal 50(1): 25–32.

- Elzinga, Aant. 1997. The science-society contract in historical transformation: With special reference to “epistemic drift”. Social Science Information 36(3): 411–445. doi:10.1177/053901897036003002.

- Etzkowitz, Henry. 1998. The norms of entrepreneurial science: Cognitive effects of the new university-industry linkages. Research Policy 27: 823–833. doi:10.1016/S0048-7333(98)00093-6.

- Geuna, Aldo, and Lionel J.J. Nesta. 2006. University patenting and its effects on academic research: The emerging European evidence. Research Policy 35: 790–807. doi:10.1016/j.respol.2006.04.005.

- Gibbons, Michael, Camille Limoges, Helga Nowotny, Simon Schwartzman, Peter Scott, and Martin Trow. 1994. The New Production of Knowledge: The Dynamics of Science and Research in Contemporary Societies. London: SAGE.

- Gläser, Jochen, and Grit Laudel. 2007. The social construction of bibliometric evaluations. In The Changing Governance of the Sciences, eds. R. Whitley, and J. Gläser, 101–123. Dordrecht: Springer.

- Guston, David H., and Kenneth Keniston. 1994. The Fragile Contract: University Science and the Federal Government. Cambridge, MA: MIT Press.

- Heimeriks, Gaston, Peter van den Besselaar, and Koen Frenken. 2008. Digital disciplinary differences: An analysis of computer-mediated science and ‘Mode 2’ knowledge production. Research Policy, in press.

- Hessels, Laurens K., and Harro van Lente. 2008. Re-thinking new knowledge production: A literature review and a research agenda. Research Policy 37: 740–760. doi:10.1016/j.respol.2008.01.008.

- Hessels, Laurens K., Harro van Lente, and Ruud E.H.M. Smits. 2009. In search of relevance: The changing contract between science and society. Science and Public Policy 36(5): 387–401. doi:10.3152/030234209X442034.

- Hessels, Laurens K., Stefan de Jong, and Harro van Lente. 2010. Multidisciplinary collaborations in toxicology and paleo-ecology: Equal means to different ends. In Collaboration in the New Life Sciences, eds. J. N. Parker, N. Vermeulen, and B. Penders, 37–62. London: Ashgate.

- Hicks, Diana. 2009. Evolving regimes of multi-university research evaluation. Higher Education 57: 393–404. doi:10.1007/s10734-008-9154-0.

- Homburg, Ernst. 2003. Speuren op de tast: Een historische kijk op industriële en universitaire research. Maastricht: Universiteit Maastricht.

- Homburg, Ernst, and Lodewijk Palm. 2004. Grenzen aan de groei - groei aan de grenzen: Enkele ontwikkelingslijnen van de na-oorlogse chemie. In De Geschiedenis van de Scheikunde in Nederland 3, eds. E. Homburg, and L. Palm, 3–18. Delft: Delft University Press.

- Hutter, Wim. 2004. Chemie, chemici en wetenschapsbeleid. In De Geschiedenis van de Scheikunde in Nederland 3, eds. E. Homburg, and L. Palm, 19–36. Delft: Delft University Press.

- Irvine, John, and Ben R. Martin. 1984. Foresight in Science: Picking the Winners. London: Frances Pinter.

- Kersten, Albert E. 1996. Een organisatie van en voor onderzoekers: ZWO 1947–1988. Assen: Van Gorcum.

- Latour, Bruno, and Steve Woolgar. 1986. Laboratory Life: The Construction of Scientific Facts, 2nd ed. London: Sage.

- Leišytė, Liudvika, Jürgen Enders, and Harry de Boer. 2008. The freedom to set research agendas: Illusion and reality of the research units in the Dutch universities. Higher Education Policy 21: 377–391. doi:10.1057/hep.2008.14.

- Lepori, Benedetto, Peter van den Besselaar, Michael Dinges, Bianca Potì, Emanuela Reale, Stig Slipsaeter, Jean Thèves, and Barend van der Meulen. 2007. Comparing the evolution of national research policies: What patterns of change? Science and Public Policy 34(6): 372–388. doi:10.3152/030234207X234578.

- Lyall, Catherine, Ann Bruce, John Firn, Marion Firn, and Joyce Tait. 2004. Assessing end-use relevance of public sector research organisations. Research Policy 33(1): 73–87. doi:10.1016/S0048-7333(03)00090-8.

- Martin, Ben R. 2003. The changing social contract for science and the evolution of the university. In Science and Innovation: Rethinking the Rationales for Funding and Governance, eds. A. Geuna, A. Salter, and W.E. Steinmueller, 7–29. Cheltenham: Edward Elgar.

- Moed, Henk F., and F.Th. Hesselink. 1996. The publication output and impact of academic chemistry research in the Netherlands during the 1980s: Bibliometric analyses and policy implications. Research Policy 25: 819–836. doi:10.1016/0048-7333(96)00881-5.

- Morris, Norma. 2000. Science policy in action: Policy and the researcher. Minerva 38: 425–451. doi:10.1023/A:1004873100189.

- Nowotny, Helga, Peter Scott, and Michael Gibbons. 2003. ‘Mode 2’ revisited: The new production of knowledge. Minerva 41: 179–194. doi:10.1023/A:1025505528250.