Abstract

The efficacy of cochlear implants in children who are deaf has been firmly established in the literature. However, the effectiveness of cochlear implants varies widely and is influenced by demographic and experiential factors. Several key findings suggest new directions for research on central auditory factors that underlie the effectiveness of cochlear implants. First, enormous individual differences have been observed in both adults and children on a wide range of audiological outcome measures. Some patients show large increases in speech perception scores after implantation, whereas others display only modest gains on standardized tests. Second, age of implantation and length of deafness affect all outcome measures. Children implanted at younger ages do better than children implanted at older ages, and children who have been deaf for shorter periods do better than children who have been deaf for longer periods. Third, communication mode affects outcome measures. Children from “oral-only” environments do much better on standardized tests that assess phonological processing skills than children who use Total Communication. Fourth, at the present time there are no preimplant predictors of outcome performance in young children. The underlying perceptual, cognitive, and linguistic abilities and skills emerge after implantation and improve over time. Finally, there are no significant differences in audiological outcome measures among current implant devices or processing strategies. This finding suggests that the major source of variance in outcome measures lies in the neural and cognitive information processing operations that the user applies to the signal provided by the implant. Taken together, this overall pattern of results suggests that higher-level central processes such as perception, attention, learning, and memory may play important roles in explaining the large individual differences observed among users of cochlear implants. 
Investigations of the content and flow of information in the central nervous system and interactions between sensory input and stored knowledge may provide important new insights into the basis of individual differences. Knowledge about the underlying basis of individual differences may also help in developing new intervention strategies to improve the effectiveness of cochlear implants in children who show relatively poor development of oral/aural language skills.

Introduction

One of the most consistent findings reported in the literature on pediatric cochlear implants is the enormous variability in outcome performance observed on a wide range of behavioral measures. Anyone who begins working on cochlear implants will be immediately struck by the enormous variation and the large individual differences in performance. Some children do very well with their cochlear implants and other children do poorly. At the present time, we do not have a good understanding or explanation for these large individual differences in performance on standardized tests of speech and language. As a consequence, the study of individual differences and variability in performance represents a major challenge for clinicians and researchers working in the field of cochlear implants.

The National Institute on Deafness and Other Communication Disorders (NIDCD) considers the problem of individual differences in cochlear implant “effectiveness” to be an important area of research. The 1995 Consensus Statement on Cochlear Implants in Adults and Children identified this topic as one of the major new directions for research. The study of variation in performance and individual differences is also a goal of the research program at the Indiana University School of Medicine. We have focused our research in a number of new directions in order to understand the sensory, perceptual, and cognitive bases for these differences. In this article, we give a summary of our most recent findings and discuss some implications for future research on individual differences.

At present, there are few questions about the efficacy of cochlear implants in children who are profoundly deaf. Cochlear implants work and, for some children, they work well enough to permit them to develop spoken language through the auditory modality (Waltzman & Cohen, 2000). However, one of the most difficult problems with cochlear implants in children who are deaf is the “clinical effectiveness” of these devices. Cochlear implants work well in some children but not others, and no good explanation of why this happens has been proposed. If we eliminate differences due to the number of active electrodes that provide the initial sensory information, there are few additional factors to investigate other than demographics and device characteristics. Psychophysical differences in frequency and intensity resolution may play an important role in setting initial constraints on how the sensory information is encoded at the auditory periphery, but this is not the whole story. Something else is happening at more central levels of processing in the nervous system beyond the auditory nerve. We believe individual variation in performance on outcome measures might be related to processing information at more central levels of analysis that reflect the operation of cognitive processes such as perception, attention, learning, memory, and language. But there is very little, if any, research on these factors yet. The new findings reported so far are encouraging and suggest this is a good direction to pursue.

Almost all of the past research on cochlear implants has focused on demographic variables and traditional outcome measures using assessment tools developed by clinical audiologists and speech pathologists. All outcome measures of performance are the final product of a large number of complex sensory, perceptual, cognitive, and linguistic processes that may be responsible for the observed variation among cochlear implant users. Until our recent studies reported below, no research had focused on “process” or examined the underlying mechanisms used to perceive and produce spoken language. Understanding these intermediate processes may provide some new insights into the basis of these individual differences.

In addition to the enormous individual differences and variation in outcome measures, several other findings have been consistently reported in the literature on cochlear implants in children. Age of implantation has also been shown to affect outcome measures. Children who receive an implant at a young age after short periods of auditory deprivation do much better on a whole range of performance measures than children who are implanted at an older age after longer periods of sensory deprivation. Length of deprivation or length of deafness is also related to outcome. Children who have been deaf for shorter periods do much better on a variety of performance measures than children who have been deaf for longer periods. Both findings demonstrate the important contribution of sensitive periods in sensory and perceptual development and the close links between neural development and behavior, especially hearing, speech, and language development (Ball & Hulse, 1998; Konishi, 1985; Konishi & Nottebohm, 1969; Marler & Peters, 1988).

Communication mode also affects performance on a wide range of outcome measures. Implanted children who are immersed in oral-only communication environments do much better on standardized tests of speech and language development than implanted children who are placed in Total Communication programs (Kirk, Pisoni, & Miyamoto, 2000). The differences in performance between these two groups of children are seen most prominently in both receptive and expressive language tasks that involve phonological coding and phonological processing skills such as open-set spoken word recognition, comprehension, and measures of speech production, especially speech intelligibility and expressive language.

Until recently, researchers were unable to identify reliable preimplant predictors of outcome and success with a cochlear implant. This is an important finding because it demonstrates the existence and operation of complex interactions between the newly acquired sensory capabilities of a child after a period of sensory deprivation, attributes of the language-learning environment, and the interactions with parents and caregivers that the child is exposed to early after receiving a cochlear implant. More seriously, however, the lack of any preimplant predictors of outcome also makes it difficult for both researchers and clinicians to identify those children who are doing poorly at a time in development when changes can be made to improve their language processing skills.

Finally, when all of the outcome and demographic measures are considered together, the evidence strongly suggests that the underlying sensory and perceptual abilities for speech and language emerge after implantation and that performance with a cochlear implant improves over time. Success with a cochlear implant, therefore, appears to be due to several kinds of learning processes and exposure to the target language in the environment. Because outcome with a cochlear implant cannot be predicted reliably from traditional behavioral measures obtained before implantation, any improvement in performance observed after implantation must be the result of perceptual learning that is related in complex ways to maturational changes in neural and perceptual development.

Taken together, these five key findings suggest several general conclusions about the way cochlear implants work in facilitating the acquisition and development of spoken language. These key findings also point to several underlying factors that affect performance on various outcome measures. Our current hypothesis about the source of individual differences is that while some proportion of the total variance in performance is clearly caused by peripheral factors related to audibility and the initial sensory encoding of the speech signal into information-bearing sensory channels in the auditory nerve, an additional source of variance may also come from more central cognitive factors that are related to psychological processes such as perception, attention, learning, memory, and language. This source of variance is related to information processing operations and cognitive demands — that is, how the child uses the initial sensory input he or she receives from the cochlear implant and how the environment modulates, shapes, and facilitates this learning process. Of course, processes such as perception, attention, language, and memory are topics considered the “meat and potatoes” of what cognitive psychologists and cognitive scientists study, namely the encoding, rehearsal, storage, and retrieval of information and the transformation and manipulation of memory codes and neural representations of the initial sensory input in a wide range of language processing tasks.

Several years ago, we began analyzing a set of data from a longitudinal project on cochlear implants in children to get a better handle on the issue of individual differences and variation in outcome (Pisoni, Svirsky, Kirk, & Miyamoto, 1997). We began by looking at the exceptionally good users of cochlear implants — the so-called “Stars.” These are the children who did extraordinarily well with their cochlear implants after only two years of implantation. The “Stars” are the children who were able to acquire spoken language relatively quickly and easily and seemed to be on a developmental trajectory that parallels children with normal hearing. In many ways, at first glance, they look like normally hearing and normally developing children who simply have language delays (Svirsky, Robbins, Kirk, Pisoni, & Miyamoto, 2000).

Our initial interest and motivation in studying the “Stars” came, in part, from an extensive body of research in the field of cognitive psychology over the last 25 years on “expertise” and “expert systems” theory (Ericsson & Pennington, 1993; Ericsson & Smith, 1991). Many important insights have come from studying expert chess players, radiologists, and other people who have highly developed skills in specific knowledge domains such as computer programming, spectrogram reading, and even chicken sexing! The rationale underlying our approach to the problem of individual differences was that if we could learn something about the “Stars” and the reasons why they do so well with their cochlear implants by adopting the orientation of expert systems theory, perhaps we could use this information to develop new intervention techniques with children who are not doing as well with their implants. Knowledge and understanding of the “Stars” might also be very useful in developing new preimplant predictors of performance, in modifying current criteria for candidacy, and in creating better and more precise methods of assessing performance and measuring outcome over time. Thus, there may be several important benefits from learning more about the basis of the large individual differences in performance in these children.

An initial report describing our findings on the “Stars” was presented in New York City in 1997 at the Vth International Cochlear Implant Conference (Waltzman & Cohen, 2000). At that time, longitudinal data collected over a three-year period postimplantation were presented. We now have additional data on these children over six years, which are summarized below. Since that report, our research on individual differences has continued and expanded into several new directions as we try to understand the nature of these underlying factors. Several studies have been carried out at Central Institute for the Deaf (CID) to obtain some new data on working memory from 8- and 9-year-old children who have used their cochlear implants for at least four years. These working memory data, obtained using digit spans, provided an opportunity to test a critical hypothesis about differences in information processing in children with cochlear implants. The research on digit spans then led to other analyses, development of new methodologies to measure working memory, and several additional experiments on coding and rehearsal strategies that will be summarized below. We have also developed a new experimental methodology to study verbal and spatial coding in children with cochlear implants. Our initial findings are encouraging, and the results have provided some new insights into the underlying basis for the large individual differences observed in children with cochlear implants.

Theoretical Approach

Before we present the results of these studies, it is appropriate to say a few words about the theoretical motivation that underlies our work on individual differences. Previous research on cochlear implants has relied heavily on traditional outcome measures of performance that were developed within the field of clinical audiology. Historically, this research orientation focused on static assessment measures based on accuracy, device characteristics, and various demographic variables. In the past, there has been little, if any, concern or interest in “process” or a description of the underlying perceptual, cognitive, or linguistic mechanisms that mediate performance. Researchers working on cochlear implants are interested in measuring change in performance over time, but they have not studied change with an interest in describing the underlying neural and cognitive mechanisms or the flow and contents of information in the nervous system.

In contrast to this traditional approach, our research program on individual differences is motivated by several general theoretical principles that come from the field of human information processing and cognitive science (Pisoni, 2000). We are interested in describing and understanding the nature of the sensory and perceptual information that a child gets from his or her cochlear implant. We investigate and try to describe the phonetic, phonological, and lexical representations that the child creates and how these are used in various language processing tasks. In adopting the information processing approach, our goal is to describe the “stages of processing” and to trace out the “time-course” of the various transformations this information takes from stimulus input to an observer’s overt response in a specific task. This theoretical perspective is very different from the approach used in clinical research on patients with cochlear implants that has focused almost exclusively on assessment and outcome measures. We hope our approach will provide new knowledge about the underlying source of the individual differences observed among children and identify some of the factors that affect performance on traditional outcome measures.

Analysis of the “Stars”

First, we turn to a summary of the major findings we obtained in our analyses of the “Stars.” We analyzed data obtained from several outcome measures over a six-year period from the time of implantation in order to examine changes in performance over time. Before we present these results, however, it is necessary to describe how we originally identified and selected the “Stars” and the control or comparison group for our analyses.

The criterion used to identify the “Stars,” the exceptionally good users of their cochlear implants, was based on performance on a particular perceptual test: the Phonetically Balanced Kindergarten Words (PBK) test (Haskins, 1949). This is an open-set test of spoken word recognition (also see Meyer & Pisoni, 1999). Among clinicians, the PBK test is considered to be very difficult for children who are prelingually deaf compared to other closed-set perceptual tests that are routinely included in a standard assessment battery (Zwolan, Zimmerman-Phillips, Ashbaugh, Hieber, Kileny, & Telian, 1997). The children who do moderately well on the PBK test frequently display ceiling levels of performance on all of the other closed-set speech perception tests that measure speech pattern discrimination. In contrast, open-set tests such as the PBK measure word recognition and lexical discrimination and require the child to search and retrieve the phonological representations of the test words from lexical memory. Open-set tests of word recognition are extremely difficult for children with hearing loss and adults with cochlear implants because the procedure and task demands require the listener to perceive and encode fine phonetic differences based entirely on information present in the speech signal without the aid of any external context or retrieval cues. The listener must then discriminate and select a unique pattern, a phonological representation, from a large number of equivalence classes in lexical memory (Luce & Pisoni, 1998). Recognizing isolated words in an open-set test format might seem like a simple task at first glance, but it is very difficult for a child with a hearing loss who has a cochlear implant. Children with normal hearing have little difficulty with open-set tests such as the PBK and they routinely display ceiling levels of performance in recognizing words under these presentation conditions (Kluck, Pisoni, & Kirk, 1997).

To learn more about the “Stars,” we analyzed outcome data from pediatric cochlear implant users who scored exceptionally well on the PBK test two years after implantation. The PBK score was used as the “criterial variable” to initially identify and select two groups of subjects for subsequent analysis using an extreme groups design. After these subjects were selected and sorted into groups, we examined their performance on a variety of outcome measures already obtained from these children as part of the large-scale longitudinal study at Indiana University. These measures included tests of speech perception, comprehension, word recognition, receptive vocabulary knowledge, receptive and expressive language development, as well as speech intelligibility.

Methods

Subjects

Scores for the two groups of pediatric cochlear implant users were obtained from a large longitudinal database containing a variety of demographic and outcome measures from 160 children who are deaf. Subjects in both groups were all prelingually deafened (mean = 0.4 years of age at onset). Each child received a cochlear implant because he or she was profoundly deaf and was unable to derive any benefit from conventional hearing aids. The criterion used to identify the “Stars” was based entirely on word recognition scores from the PBK test. This group consisted of 27 children who scored in the upper 20% of all children tested on the PBK test two years postimplant. A “comparison” group of subjects consisting of 23 children who scored in the bottom 20% on the PBK test two years postimplant was also created for the analysis. The mean percentage of words correctly recognized on the PBK test was 25.6 for the “Stars” and 0.0 for the comparison (“Controls”) group.
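The extreme groups selection described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `extreme_groups`, the subject IDs, and the scores are all hypothetical, and the sketch takes equal-sized tails of the ranked sample, so it does not reproduce the unequal group sizes (27 and 23) reported here, the basis for which is not described in the text.

```python
from typing import Dict, List, Tuple


def extreme_groups(
    scores: Dict[str, float], fraction: float = 0.20
) -> Tuple[List[str], List[str]]:
    """Split subjects into top and bottom groups on a criterial variable.

    Returns (top_ids, bottom_ids), each holding roughly `fraction` of the
    sample. Subjects in the middle of the distribution are left out.
    """
    if not 0 < fraction <= 0.5:
        raise ValueError("fraction must be in (0, 0.5]")
    # Rank subject IDs from highest to lowest score.
    ranked = sorted(scores, key=scores.get, reverse=True)
    # Number of subjects in each tail (at least one).
    k = max(1, round(len(ranked) * fraction))
    return ranked[:k], ranked[-k:]


# Hypothetical percent-correct scores for ten children (not data from the study).
demo_scores = {
    "s01": 48.0, "s02": 0.0, "s03": 12.0, "s04": 36.0, "s05": 4.0,
    "s06": 0.0, "s07": 20.0, "s08": 8.0, "s09": 28.0, "s10": 16.0,
}
stars, controls = extreme_groups(demo_scores)
print("Top 20%:", stars)
print("Bottom 20%:", controls)
```

Because the groups are defined by the criterial variable itself, any later comparison on that same variable is circular; the design is informative only for the other outcome measures examined after the groups are formed.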

A summary of the demographic characteristics of the two groups is shown in Table I. No attempt was made to match the subjects on any demographic variable other than length of implant use, which was uniform at two years postimplantation at the time these analyses were carried out. As a result of this selection procedure, the two groups turned out to be roughly comparable in terms of age at onset of deafness and length of implant use. In addition, scores on several psychological tests that were originally used as clinical data for determining implant candidacy were also obtained for each subject. These measures included nonverbal intelligence test (P-IQ) scores, results on the Developmental Test of Visual–Motor Integration (VMI) (Beery, 1989), a test of visual–motor coordination, as well as several measures of visual attention and vigilance taken from the Gordon Diagnostic System (Gordon, 1987), a continuous performance test used to diagnose Attention Deficit Hyperactivity Disorder (American Psychiatric Association, 1994). The two groups did not differ on any of these clinical measures. However, as shown in Table I, the two groups differed in terms of age at time of implantation, length of deprivation, and communication mode.

Table I.

Summary of Demographic Information

| | Stars (n = 27) | Controls (n = 23) |
|---|---|---|
| Mean Age at Onset (Years) | .3 | .8 |
| Mean Age at Implantation (FIT) (Years) | 5.8 | 4.4 |
| Mean Length of Deprivation (Years) | 5.5 | 3.6 |
| Communication Mode: | | |
| Oral Communication | n = 19 | n = 8 |
| Total Communication | n = 8 | n = 15 |

For ease of exposition, the results of these analyses will be presented in three sections: receptive measures, language development, and speech intelligibility. The interrelations among these various measures using correlational methods are then summarized.

Outcome Measures of Performance

Receptive Measures: Speech Perception and Spoken Word Recognition

Minimal Pairs Test

Measures of speech feature discrimination for both consonants and vowels were obtained for both groups of subjects with the Minimal Pairs Test (Robbins, Renshaw, Miyamoto, Osberger, & Pope, 1988). This test uses a two-alternative, forced-choice, picture-pointing procedure. The child hears a single word spoken in isolation on each trial by the examiner using live voice presentation and is required to select the one of two pictures that corresponds to the test item.

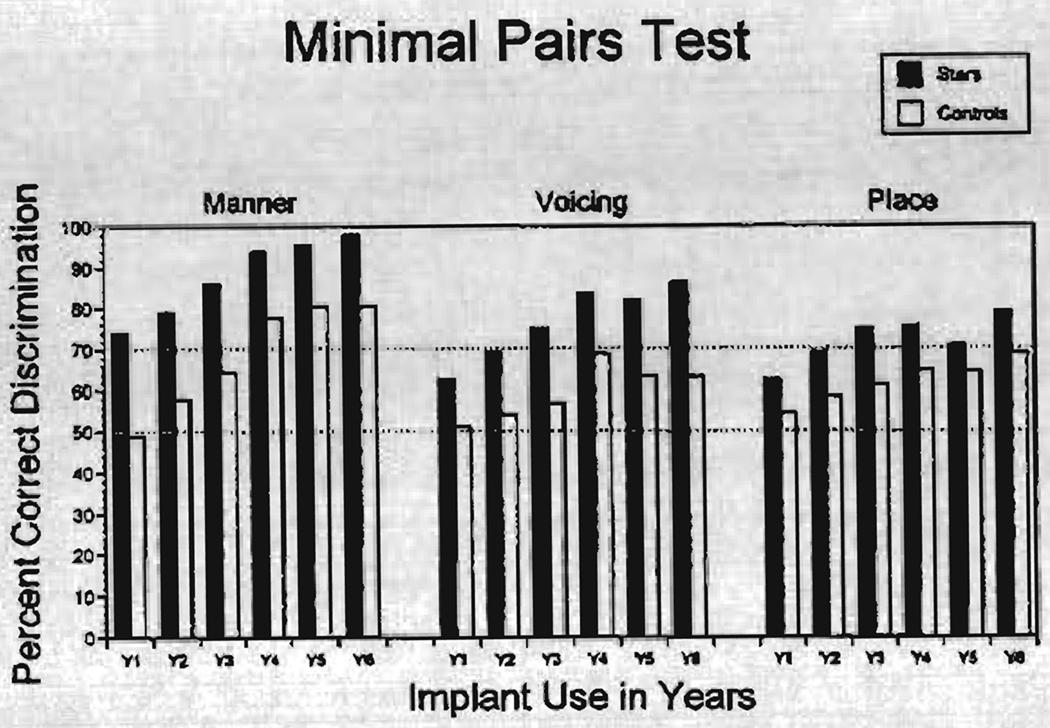

A summary of the consonant discrimination results for both groups of subjects is shown in Figure 1. Percent correct discrimination is displayed separately for the distinctive features of manner, voicing, and place of articulation as a function of implant use in years. Data for the “Stars” are shown by the filled bars; data for the “Controls” are shown by the open bars in this figure. Chance performance on this two-alternative task is 50% correct, as shown by a dotted horizontal line. A second dotted horizontal line is also shown in this figure at 70% correct, corresponding to scores that are significantly above chance using the binomial distribution.

Figure 1.

Percent correct discrimination on the Minimal Pairs Test (MPT) for manner, voicing, and place as a function of implant use. The “Stars” are shown by filled bars; the “Controls” are shown by open bars.
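The upper dotted line in Figure 1 marks scores that exceed chance according to the binomial distribution. A minimal sketch of how such a criterion can be derived is given below, assuming a one-tailed exact binomial test at alpha = .05 against the chance level of a two-alternative task (p = .5). The function names are hypothetical, and the number of trials per feature is not stated in the text, so the value of `n_trials` used here is an assumption; the resulting criterion therefore need not match the 70% line in the figure.

```python
from math import comb
from typing import Optional


def binomial_upper_tail(n: int, k: int, p: float = 0.5) -> float:
    """Probability of k or more correct responses in n trials by guessing."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def min_correct_above_chance(
    n: int, p: float = 0.5, alpha: float = 0.05
) -> Optional[int]:
    """Smallest number correct whose upper-tail probability is below alpha.

    Returns None when even a perfect score is not significant (very small n).
    """
    for k in range(n + 1):
        if binomial_upper_tail(n, k, p) < alpha:
            return k
    return None


n_trials = 20  # ASSUMPTION: illustrative only, not taken from the study
criterion = min_correct_above_chance(n_trials)
if criterion is not None:
    print(f"{criterion}/{n_trials} correct "
          f"({100 * criterion / n_trials:.0f}%) exceeds chance at alpha = .05")
```

The criterion expressed as a percentage moves closer to 50% as the number of trials grows, which is why the trial count matters when reading a fixed criterion line.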

Inspection of the results for the Minimal Pairs Test obtained over a six-year period of implant use reveals several findings. First, performance of the “Stars” was consistently better than the “Controls” for every comparison across all three consonant features. Second, discrimination performance improved over time with implant use for both groups, although the increases were due primarily to improvements in discrimination of manner and voicing by the “Stars.” At no interval did the mean scores of the control group significantly exceed chance performance on discrimination of voicing and place features. Although increases in performance were observed over time for the control group, their discrimination scores never reached the levels observed with the “Stars,” even for the manner contrasts that eventually exceeded chance performance in years 4, 5, and 6.

The results of the Minimal Pairs Test indicate that both groups of children have difficulty perceiving, encoding, and discriminating fine phonetic details of isolated spoken words even in a two-choice, closed-set testing format. The “Stars” were able to discriminate differences in manner of articulation after one year of implant use and they showed consistent improvements in performance over time for both manner and voicing contrasts; still, they had difficulty reliably discriminating differences in place of articulation, even after five years of experience with their implants. In contrast, the “Controls” were just barely able to discriminate differences in manner of articulation after four years of implant use and these children still had serious problems perceiving differences in voicing and place of articulation even after five or six years of use.

The pattern of speech feature discrimination results shown here suggests that both groups of children are encoding spoken words using “coarse” phonetic representations that contain much less fine-grained acoustic-phonetic detail than is typically used by children with normal hearing. The “Stars” are able to reliably discriminate manner and, to some extent, voicing much sooner after implantation than the “Controls.” They also display consistent improvements in speech feature discrimination over time. These speech feature discrimination skills are assumed to place initial constraints on the basic sensory information that can be used for subsequent word learning and lexical development. It is very likely that if a child cannot reliably discriminate differences between pairs of spoken words that are acoustically similar under these relatively easy forced-choice test conditions, they will subsequently have great difficulty recognizing words in isolation with no context or retrieving the phonological representations of these sound patterns from memory for use in simple speech production tasks such as imitation or immediate repetition.

Common Phrases Test

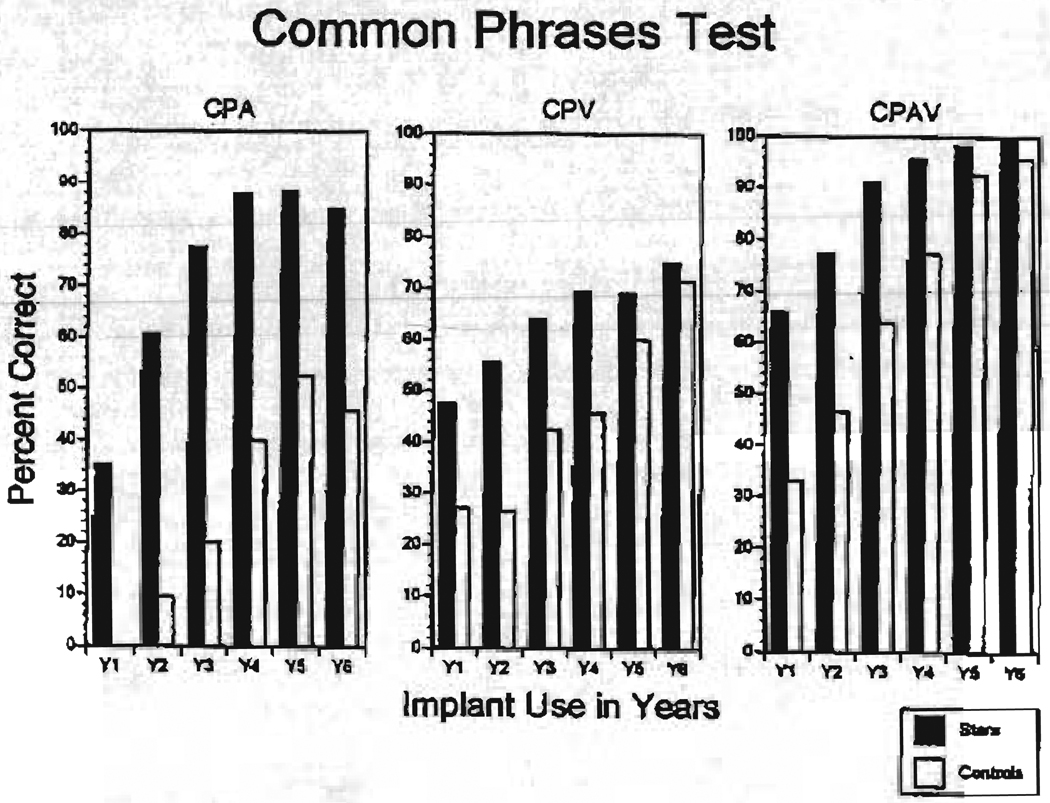

Spoken language comprehension performance was measured using the Common Phrases Test (Osberger, Miyamoto, Zimmerman-Phillips, et al., 1991). This is an open-set test that uses three presentation formats: auditory-only (CPA), visual-only (CPV), and combined auditory plus visual (CPAV). Children are asked questions or given commands to follow under these three conditions. The results of the Common Phrases Test are shown in Figure 2 for both groups of subjects (“Stars” and “Controls”) as a function of implant use for the three different presentation formats. Inspection of this figure shows that the “Stars” performed consistently better than the “Controls” in all three presentation conditions and across all six years of implant use, although performance begins to approach ceiling levels for both groups in the CPAV condition after five years of implant use. CPAV conditions were always better than either the CPA or CPV conditions. This pattern was observed for both groups of subjects. In addition, both groups displayed improvements in performance over time in all three presentation conditions. Not surprisingly, the largest differences in performance between the two groups occurred in the CPA conditions. Even after three years of implant use, the “Controls” were barely able to perform this comprehension task above 25% correct when they had to rely entirely on auditory cues in the speech signal to carry out the task.

Figure 2.

Percent correct performance on the Common Phrases Test (CPT) for auditory-only (CPA), visual-only (CPV), and combined auditory plus visual presentation modes (CPAV) as a function of implant use. The “Stars” are shown by filled bars; the “Controls” are shown by open bars.

Word Recognition Tests

Two word recognition tests, the Lexical Neighborhood Test (LNT) and the Multi-syllabic Lexical Neighborhood Test (MLNT), were used to measure open-set word recognition skills in both groups of subjects (Kirk, Pisoni, & Osberger, 1995). Both tests use words that are familiar to preschool-age children. The LNT contains short monosyllabic words; the MLNT contains longer polysyllabic words. Both of these tests use two different sets of items to measure lexical discrimination and provide details about how the lexical selection process is being carried out. Half of the items in each test consist of lexically “easy” words and half consist of lexically “hard” words. The differences in performance on these two sets of items in each test provide an index of how well a listener is able to make fine phonetic discriminations among acoustically similar words in his or her lexicon. Differences in performance between the LNT and the MLNT provide a measure of the extent to which the listener is able to make use of word length cues to recognize and access words from the mental lexicon. The items on both tests are presented in isolation one at a time by the examiner using auditory-only format. The child is required to imitate and immediately repeat back the test item after it is presented by the examiner on each trial.
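The two difference scores described above can be written out explicitly. The sketch below is an illustration only: the class and function names are hypothetical, the percent-correct values are invented for the example and are not data from this study, and the overall score is taken as the simple mean of the “easy” and “hard” scores on the grounds that the two item sets make up equal halves of each test.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WordListScores:
    """Percent correct on the two item sets of one test (LNT or MLNT)."""

    easy: float  # lexically "easy" words
    hard: float  # lexically "hard" words

    @property
    def overall(self) -> float:
        # Equal numbers of easy and hard items, so the mean is the test score.
        return (self.easy + self.hard) / 2


def lexical_difficulty_effect(scores: WordListScores) -> float:
    """Easy minus hard: positive values indicate sensitivity to lexical competition."""
    return scores.easy - scores.hard


def word_length_effect(lnt: WordListScores, mlnt: WordListScores) -> float:
    """MLNT minus LNT: positive values indicate an advantage for longer words."""
    return mlnt.overall - lnt.overall


# Hypothetical scores for one child at one testing interval.
lnt = WordListScores(easy=40.0, hard=30.0)
mlnt = WordListScores(easy=60.0, hard=40.0)

print("LNT easy-hard difference:", lexical_difficulty_effect(lnt))
print("MLNT easy-hard difference:", lexical_difficulty_effect(mlnt))
print("Word length effect:", word_length_effect(lnt, mlnt))
```

Both indices are uninformative when scores sit at the floor, since a child who recognizes almost no words cannot show a difference between item sets or between tests.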

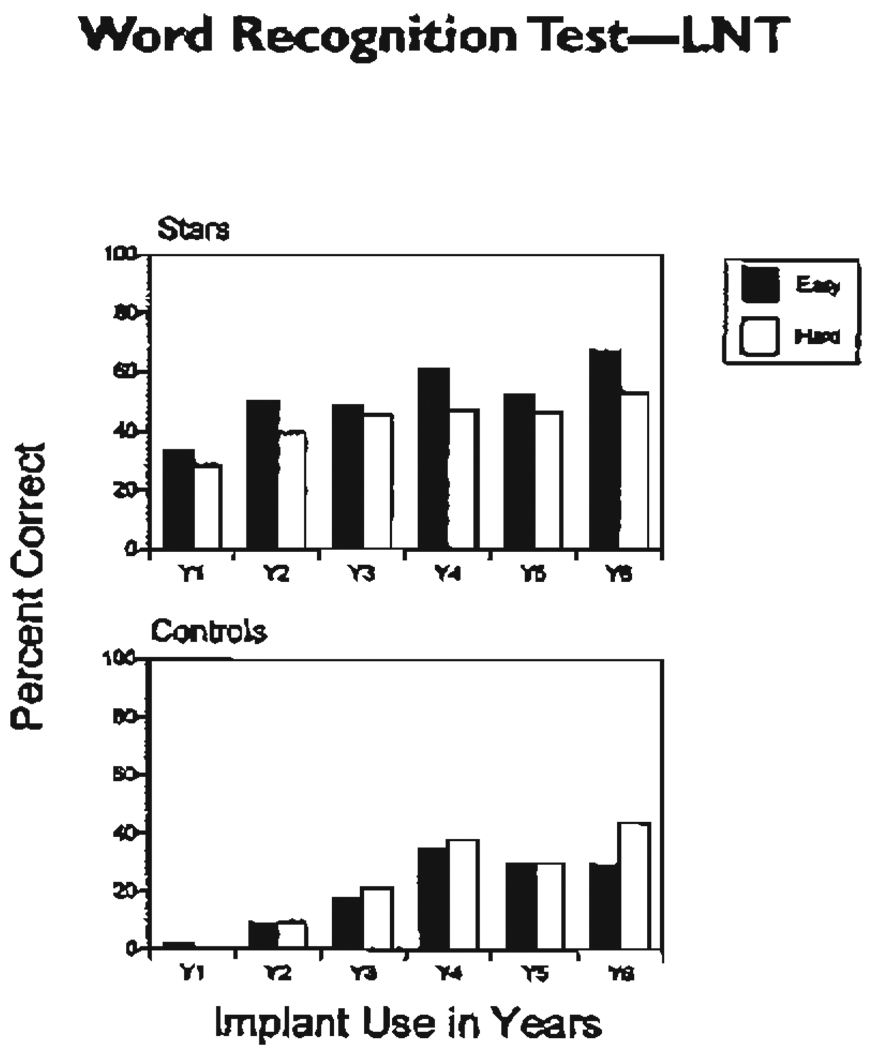

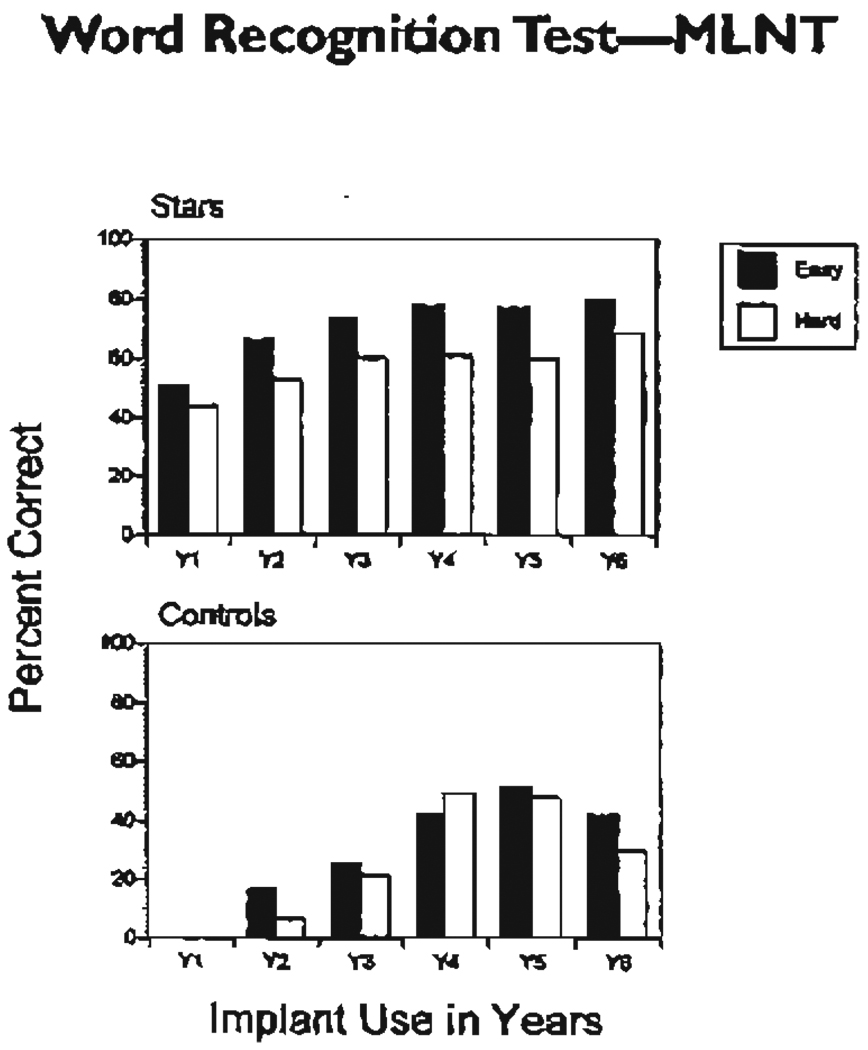

Figures 3 and 4 show the results, expressed as percent correct word recognition, obtained on the LNT and the MLNT for both groups of subjects as a function of implant use. The data for the “Stars” are shown in the top panel of each figure; the data for the “Controls” are shown in the bottom panels. Scores for the “easy” and “hard” words are shown within each panel. Shown in these two figures are several important differences in performance that provide some insight into the task demands and processing operations used in open-set tests. First, the “Stars” consistently demonstrate higher levels of word recognition performance on both the LNT and the MLNT than the “Controls.” These differences are present across all six years, but they are most prominent during the first three years postimplantation. Word recognition scores for the “Controls” on both the LNT and the MLNT are very low and close to the floor compared with the performance observed for the “Stars,” who did moderately well on these tests although they never reached ceiling levels of performance on either the LNT or MLNT even after six years of implant use. Children with normal hearing typically display ceiling levels of performance on both of these tests by age 4 (Kluck, Pisoni, & Kirk, 1997).

Figure 3.

Percent correct word recognition performance for the Lexical Neighborhood Test (LNT) monosyllabic word lists as a function of implant use and lexical difficulty. “Easy Words” are shown by filled bars; “Hard Words” are shown by open bars. Data for the “Stars” are displayed in the top panel; “Controls” are displayed in the bottom panel.

Figure 4.

Percent correct word recognition performance for the Multi-syllabic Lexical Neighborhood Test (MLNT) word lists as a function of implant use and lexical difficulty. “Easy Words” are shown by filled bars; “Hard Words” are shown by open bars. Data for the “Stars” are displayed in the top panel; “Controls” are displayed in the bottom panel.

Another theoretically important finding is also shown in these figures. The “Stars” displayed evidence of a word length effect at each testing interval. Recognition was always better for the long words on the MLNT test than the short words on the LNT test. This pattern is obscured by a floor effect for the “Controls” who were unable to do this open-set task at all during the first three years. The presence of a word length effect for the “Stars” on these two tests suggests that they are recognizing words “relationally” in the context of other words that they have in their lexicon (Luce & Pisoni, 1998). If these listeners were just recognizing words in isolation, feature-by-feature, or segment-by-segment, without reference to words they already know and can access from lexical memory, we would expect that performance would be worse for longer words than shorter words because longer words simply contain more information. The pattern of findings observed here is exactly the opposite of this prediction and parallels earlier results obtained with adults and children with normal hearing (Luce & Pisoni, 1998; Kirk, Pisoni, & Osberger, 1995; Kluck, Pisoni, & Kirk, 1997). Longer words are easier to recognize than shorter words because they are more distinctive and discriminable and are, therefore, less confused with other phonetically similar words. The present findings suggest that the “Stars” are recognizing words based on their knowledge of other words in the language using processing strategies that are similar to those used by listeners with normal hearing.

Another finding shown in both figures provides additional support for the role of the lexicon and the use of lexical knowledge in open-set word recognition. The “Stars” showed a consistent effect of lexical discrimination for the words on both tests. Examination of Figures 3 and 4 reveals that the “Stars” recognize lexically “easy” words better than lexically “hard” words. The difference in performance between “easy” words and “hard” words is present for both the LNT and the MLNT vocabularies, but it is larger and more consistent over time for the words on the MLNT test. Because of floor effects, the “Controls” did not show the same consistent pattern of performance or sensitivity to lexical competition among the test words.

The differences in performance observed between these two groups of children on both open-set word recognition tests are not at all surprising and were expected because these two extreme groups were initially created based on their PBK scores. But the overall pattern of the results is theoretically important because the findings obtained with these two open-set word recognition tests, the LNT and the MLNT, demonstrate that the skills and abilities used to recognize isolated spoken words are not specific to the test items used on the PBK test or the experimental procedures used in open-set tests of spoken word recognition. The initial differences between the two groups readily generalized to other open-set word recognition tests using different words.

The pattern of results strongly suggests the operation and use of some common underlying set of cognitive and linguistic processes that are used in recognizing, imitating, and immediately repeating spoken words presented in isolation. As suggested below, identifying and understanding the processing mechanisms that are used in these kinds of tasks may provide some new insights into the underlying basis of the large individual differences observed in outcome measures in children with cochlear implants. It is probably no accident that the PBK test has had some important diagnostic utility in identifying the exceptionally good users of cochlear implants over the years (Kirk, Pisoni, & Osberger, 1995; Meyer & Pisoni, 1999). The PBK test is clearly measuring several important language-processing skills that may generalize well beyond the specific repetition task used in open-set tests. The most important conceptual issue now is to explain why this happens to be the case and to begin to identify the underlying cognitive and linguistic mechanisms being used in open-set word recognition tasks as well as other tasks that draw on the same set of processing resources and operations.

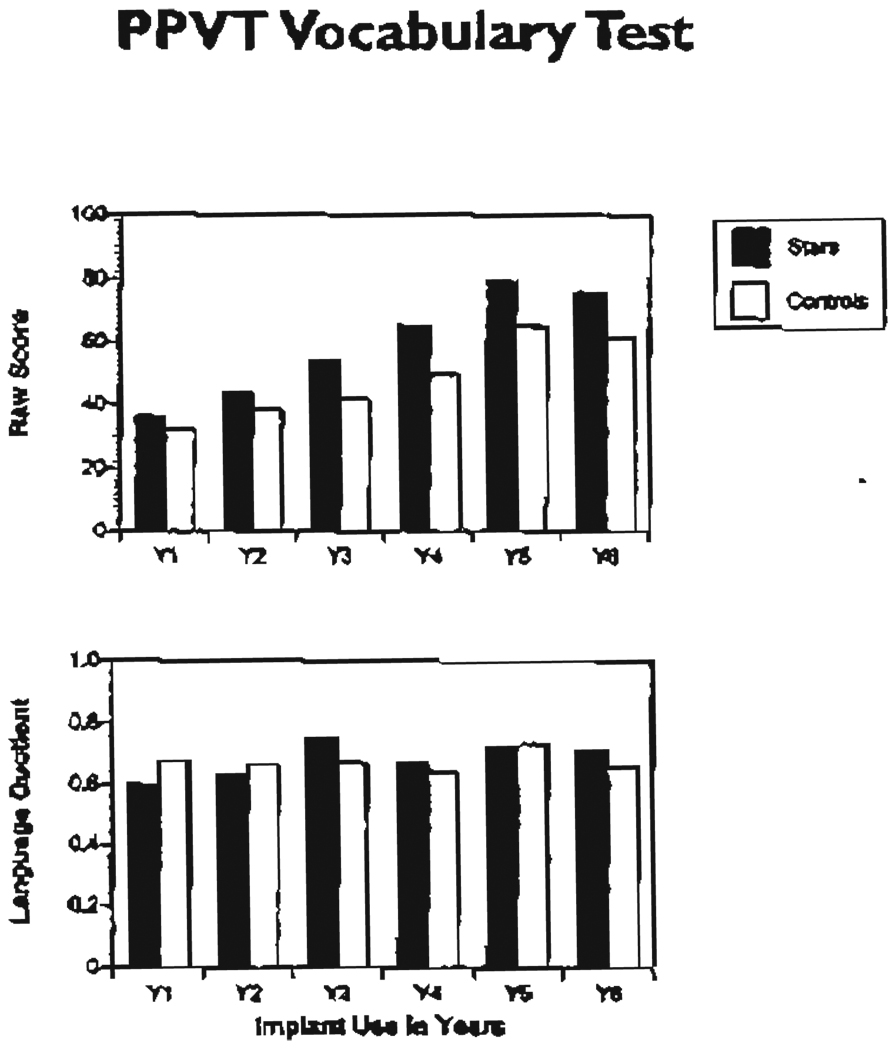

Receptive Vocabulary Knowledge

Vocabulary knowledge was assessed using the Peabody Picture Vocabulary Test (PPVT–R), a standardized test that provides a measure of receptive language development based on word knowledge (Dunn & Dunn, 1981). Test items were presented using the child’s preferred mode of communication, either speech or sign, depending on whether the child was placed in an oral-only (OC) or Total Communication (TC) environment. The scores on the PPVT–R are shown in Figure 5 for both groups of subjects as a function of implant use. The top panel of Figure 5 shows the raw scores; the bottom panel shows the same data expressed as language quotients that were obtained by dividing the child’s language age by his or her chronological age. Language age is based on norms from children with normal hearing. Children with normal hearing with typical age-appropriate language skills would be expected to achieve scores of 1.0 on this scale. Inspection of the top panel shows that when expressed in terms of raw scores, both groups improve over time with implant use. However, the “Stars” score consistently better than the “Controls,” although the differences are not as large as those observed on the previous word recognition tests. This pattern may occur because this test and the other standardized language tests are routinely administered in the child’s preferred communication mode. As shown earlier in Table I, most of the “Controls” included in this group were enrolled in TC programs, whereas most of the “Stars” were enrolled in OC programs. Examination of the language quotients shown in the bottom panel indicates that both groups of children display comparable scores on this test that remain the same over time. This is not surprising because chronological age was used to normalize the raw scores.

Figure 5.

Raw scores (top panel) and language quotients (bottom panel) for the “Stars” and “Controls” on the Peabody Picture Vocabulary Test (PPVT–R) as a function of implant use in years.
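As a minimal illustration of the language quotient described above (language age divided by chronological age), the following Python sketch computes the ratio for hypothetical children. The function name, the use of months as the unit, and the example ages are assumptions introduced here for illustration only; they are not taken from the PPVT–R materials or from the present data.

```python
def language_quotient(language_age_months: float, chronological_age_months: float) -> float:
    """Return language age divided by chronological age.

    A value of 1.0 corresponds to age-appropriate performance relative to
    norms from children with normal hearing. Units (months) are an assumption.
    """
    if chronological_age_months <= 0:
        raise ValueError("chronological age must be positive")
    return language_age_months / chronological_age_months


# Hypothetical child: language age of 36 months at a chronological age of 72 months.
print(language_quotient(36, 72))  # 0.5
# Hypothetical child performing at age level.
print(language_quotient(60, 60))  # 1.0
```

Because the denominator grows with the child's age, a quotient that stays flat over time still implies that the raw language age is increasing, which is consistent with the two panels of Figure 5 being read together.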

Measures of Language Development

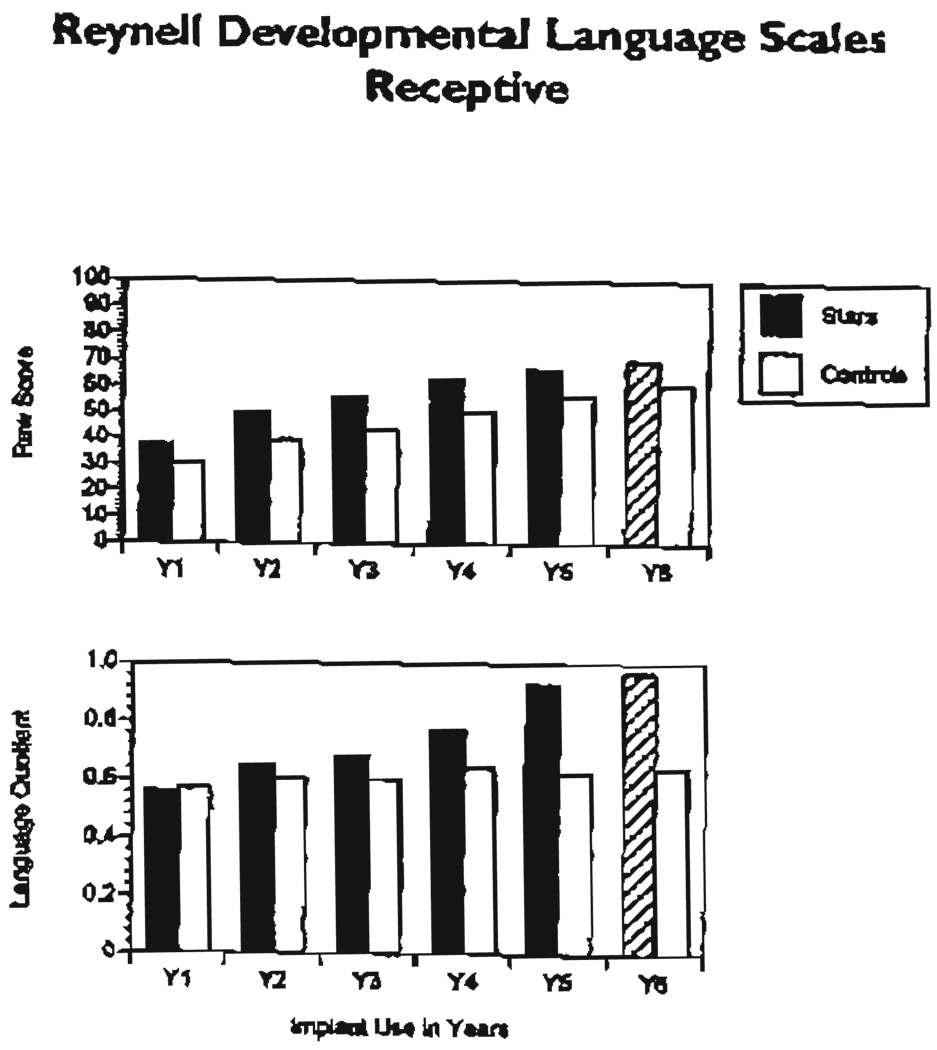

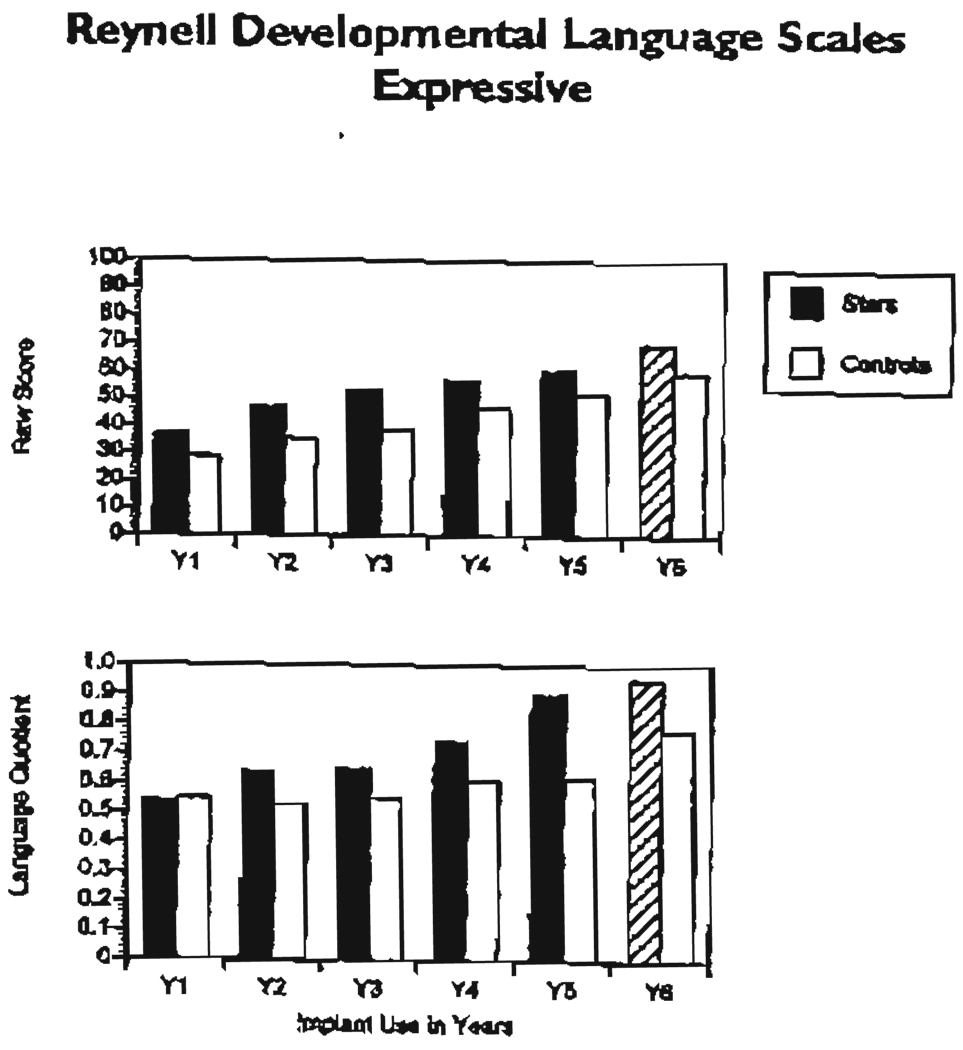

Measures of receptive and expressive language development were obtained for both groups of children using the Reynell Developmental Language Scales (Reynell & Huntley, 1985). These scales assess receptive and expressive language skills independently, using tasks involving object manipulation and description based on questions that vary in length and linguistic complexity. The Reynell tests have been used extensively with children who are deaf and are appropriate for a broad age range of children from 1 to 8 years old. Normative data have also been collected on children with normal hearing so appropriate comparisons can be drawn (Svirsky, Robbins, Kirk, Pisoni, & Miyamoto, 2000).

Figures 6 and 7 show scores for the Reynell receptive and expressive scales for both groups of children as a function of implant use. The top panel in each figure shows the raw scores for each measure; the bottom panel shows the corresponding language quotients. Scores for the “Stars” in year 6 (Y6) in each figure are based on projected estimates because these children had reached ceiling levels of performance for their age and the tests were no longer routinely administered at the yearly assessments. Both sets of data for the Reynell show gradual improvement in language over time. Once again, the differences in performance between the two groups were not very large, although the “Stars” achieved higher scores than the “Controls” on both receptive and expressive scales. In our earlier analyses of the performance of the “Stars” after the first three years of implantation (Pisoni, Svirsky, Kirk, & Miyamoto, 1997), we found a main effect for communication mode and an interaction of communication mode with group. Overall, TC children scored higher than OC children, but this was observed only for the “Control” subjects and not the “Stars.”

Figure 6.

Reynell receptive language scores for “Stars” and “Controls” as a function of implant use. The top panel shows raw scores; the bottom panel shows language quotients.

Figure 7.

Reynell expressive language scores for “Stars” and “Controls” as a function of implant use. The top panel shows raw scores; the bottom panel shows language quotients.

Taken together with the earlier PPVT–R scores, the present results suggest that communication mode does influence outcome measures on standardized tests that assess language and language-related abilities, such as vocabulary knowledge and language use. It is clear that the specific types of social and linguistic interactions that take place in the child’s language-learning environment after implantation play an important role in promoting and facilitating language development, vocabulary acquisition, and overall success with a cochlear implant. Children with cochlear implants who are placed in OC environments consistently show large gains in oral language skills on tasks that specifically require the use of phonological representations and phonological processing strategies in speech perception and speech production tasks.

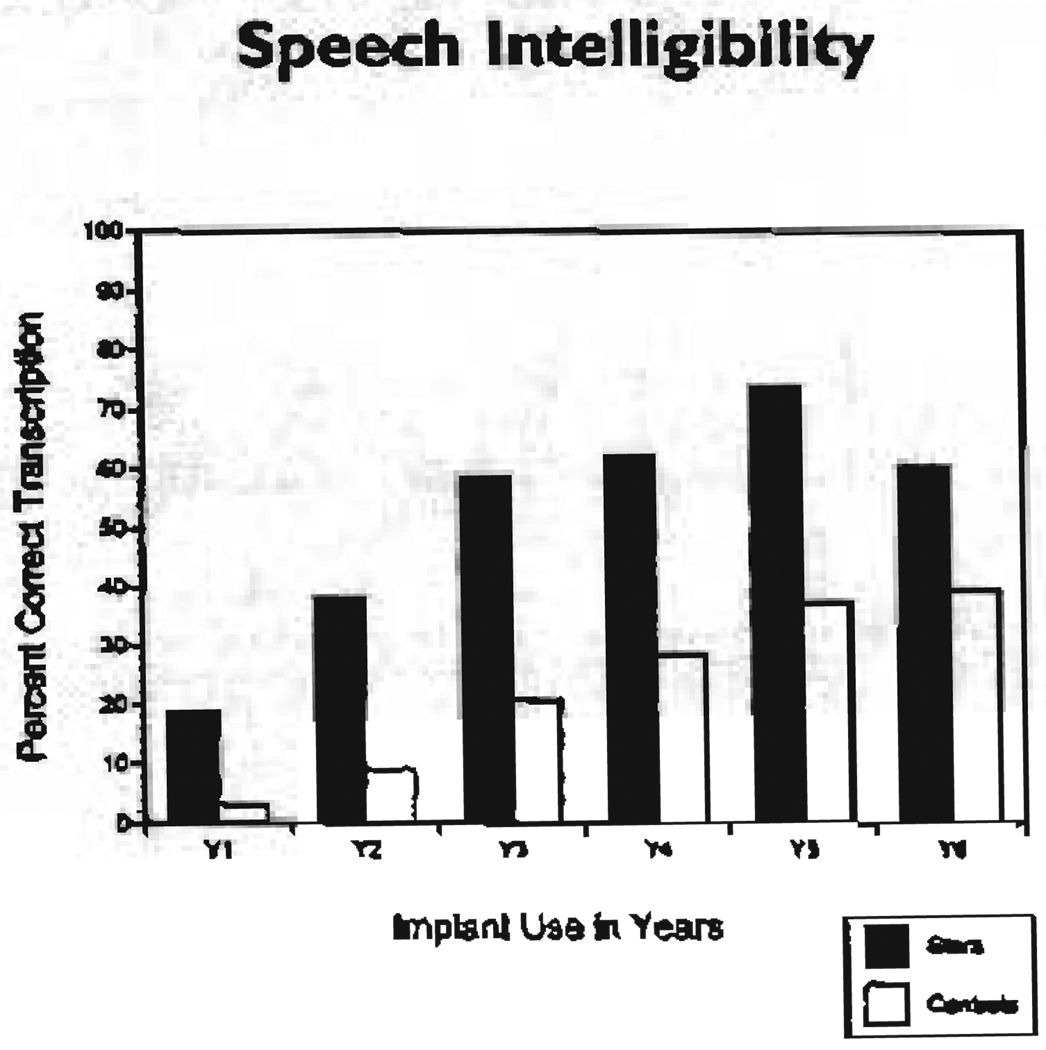

Speech Intelligibility

Measures of speech intelligibility were also obtained for both groups of subjects using a transcription task (McGarr, 1981). Speech samples were first obtained from each child using standardized elicitation materials. Each child produced 10 sentences that were repeated after an examiner’s spoken model. One list from the Beginners Intelligibility Test (BIT) was administered to obtain the speech samples from each child. This test uses objects and pictures to convey the target sentence (Osberger, Robbins, Todd, & Riley, 1994). The speech samples were then played back to small groups of adult listeners with normal hearing who were asked to listen and transcribe what the child had said. A composite score based on the number of words correctly transcribed for each child was obtained from the responses provided by three listeners who heard each child’s utterance.

The percent correct transcription for the “Stars” and “Controls” as a function of implant use is shown in Figure 8. Examination of this figure shows that the “Stars” display much better speech intelligibility than the “Controls.” Although both groups showed improvement in speech intelligibility over time, the difference in performance between the “Stars” and “Controls” remained roughly constant even after six years of implant use. The differences in speech intelligibility found here demonstrate that variation in outcome performance between the “Stars” and “Controls” is not restricted only to receptive measures of language processing such as speech perception, spoken word recognition, receptive vocabulary knowledge, or comprehension. The present findings on speech intelligibility provide evidence for transfer of knowledge from one linguistic domain to another and suggest an overlap and commonality between perception and production (O’Donoghue, Nikolopoulos, Archbold, & Tait, 1999). This overlap of receptive and expressive language function reflects knowledge of the sound/meaning contrasts in the language and a common underlying linguistic system, a grammar the child constructs from the linguistic input he or she is exposed to in the ambient environment. As we observed earlier, the “Stars” showed large and consistent improvements in both receptive and expressive measures of language, including speech feature perception, spoken word recognition, vocabulary knowledge, comprehension, and speech intelligibility. In contrast, the “Control” subjects not only showed much lower levels of performance overall on these tests, but the rate of their improvement in performance was much slower over time.

Figure 8.

Percent correct transcription scores for “Stars” and “Controls” as a function of implant use in years.

Correlations among Test Measures

Examination of these descriptive results shows that the “Stars” do well on a wide variety of outcome measures, including speech feature perception, comprehension, spoken word recognition, and receptive and expressive language, as well as measures of speech intelligibility. This pattern of findings was very encouraging because it suggested that some common source of variance may underlie the exceptionally good performance of these children on many different outcome measures. Our working hypothesis is that this particular source of variance reflects “modality-specific” fundamental information processing operations that are involved in the phonological coding of sensory inputs and the construction of phonological and lexical representations of speech (Pisoni, Svirsky, Kirk, & Miyamoto, 1997).

Until our investigation of the “Stars,” very little previous research was directed specifically at the study of individual differences among pediatric cochlear implant users or the perceptual, cognitive, and linguistic abilities of the exceptionally good subjects. The analyses of speech perception, word recognition, spoken language comprehension, vocabulary knowledge, and language development scores demonstrate that a child who displays exceptionally good performance on the PBK test also shows very good scores on a variety of other speech and language measures as well. These are theoretically and clinically important findings. The differences in performance observed here between the “Stars” and “Controls” are of substantial interest because it might now be possible to determine precisely how and why the “Stars” differ from other less successful cochlear implant users. If we have knowledge of the factors that are responsible for individual differences in performance among children who receive cochlear implants — particularly the variables that underlie the extraordinarily good performance of the “Stars” — we may be able to help those children who are not doing as well with their implant at an early point in development. Moreover, our findings on individual differences might have direct clinical relevance in terms of intervention in recommending specific changes in the child’s language-learning environment and in modifying the nature of the sensory inputs and linguistic interactions a child has with his or her parents, teachers, and speech therapists who provide the primary language model for the child. Our findings on individual differences may also help in providing clinicians and parents with a principled basis for generating realistic expectations about outcome measures, particularly measures of speech perception, comprehension, language development, and speech intelligibility in children with cochlear implants.

One of the most interesting and informative analyses we carried out on these data was a series of simple correlations among the different dependent measures summarized above. We were interested in the following questions: Does a child who performs exceptionally well on the PBK test also perform exceptionally well on other tests of speech feature discrimination, word recognition, and language? What is the relationship between performance on the PBK test and speech intelligibility? Is the extraordinarily good performance of the “Stars” restricted to only open-set word recognition tests like the PBK, or is it possible to identify a common underlying variable or process that can account for the relationships observed among several different dependent measures? To answer these questions, we examined the intercorrelations for each of the dependent variables described earlier. Simple bivariate correlations were carried out separately for the “Stars” and “Controls” using the test scores obtained after only one year of implant use. A detailed summary of these findings was reported in Pisoni et al. (1997).

In this section, we present the correlations for three of the dependent measures — open-set word recognition using the LNT; receptive and expressive language based on the Reynell test; and speech intelligibility scores using measures of transcription — to illustrate the general pattern that was found across the other dependent measures. More details are provided in the earlier report by Pisoni et al. (1997).
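The simple bivariate correlations described above can be sketched as follows. This is only an illustration under stated assumptions: the helper names, the data layout, and the scores are hypothetical and are not the values or the software used in the original analyses. The sketch computes a Pearson product-moment correlation separately for each group and returns no coefficient when one measure has no variance, as happens when every child in a group scores at the floor.

```python
import math


def pearson_r(x, y):
    """Pearson correlation of two equal-length score lists.

    Returns None when either list has zero variance (for example, when every
    score is at floor), because the coefficient is undefined in that case.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None
    return sxy / math.sqrt(sxx * syy)


def correlations_by_group(scores, measure_a, measure_b):
    """Correlate two measures separately within each group.

    `scores` maps group name -> {measure name -> list of scores}; this layout
    is an assumption made for the example.
    """
    return {
        group: pearson_r(measures[measure_a], measures[measure_b])
        for group, measures in scores.items()
    }


# Entirely hypothetical year-1 scores, for illustration only.
hypothetical = {
    "Stars": {"LNT": [20, 35, 50, 65], "Reynell": [0.5, 0.6, 0.8, 0.9]},
    "Controls": {"LNT": [0, 0, 0, 0], "Reynell": [0.4, 0.5, 0.3, 0.6]},
}
print(correlations_by_group(hypothetical, "LNT", "Reynell"))
```

In this toy data set the second group has no variance on the word recognition measure, so no coefficient is returned for it.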

Open-Set Word Recognition

Table II shows the correlations of the LNT word recognition scores with each of the other dependent measures for the “Stars.” The correlations for the lexically “easy” words are shown in the left-hand column; the correlations for the lexically “hard” words are shown in the right-hand column. Because the “Control” subjects were unable to recognize any of the words on the LNT after one year of implant use, it was not possible to compute any correlations with the other test measures. An examination of Table II shows that performance on the LNT is highly correlated with comprehension, receptive vocabulary knowledge, both receptive and expressive measures of language development, and speech intelligibility. The pattern of intercorrelations among these dependent measures strongly suggests a common underlying source of variance that is shared by all these different tasks. The extremely high correlations of the LNT word recognition scores with the Common Phrases–Auditory-Only scores and both language measures on the Reynell suggest that this common source of variance might be related in some way to the encoding, storage, retrieval, and rehearsal of spoken words, specifically the phonological representations of spoken words in lexical memory. The fundamental cognitive and linguistic processes used to recognize and repeat spoken words in an open-set format (like the PBK or LNT test, where there is no context other than the acoustic-phonetic information in the signal) are probably also used in other language-processing tasks, such as comprehension and speech production, which draw on the same sources of information about spoken words in the lexicon.

Table II.

Correlations: Word Recognition — Year 1

| Measure | LNT Easy Words: Stars (r) | LNT Easy Words: Controls (r) | LNT Hard Words: Stars (r) | LNT Hard Words: Controls (r) |
|---|---|---|---|---|
| Speech Perception: | | | | |
| Minimal Pairs — Manner | .34 | — | .51 | — |
| Minimal Pairs — Voicing | .20 | — | .58 | — |
| Minimal Pairs — Place | .16 | — | −.06 | — |
| Comprehension: | | | | |
| Common Phrases — Auditory-only | .81*** | — | .85*** | — |
| Common Phrases — Visual-only | .41 | — | .57 | — |
| Common Phrases — Auditory + Visual | .42 | — | .55 | — |
| Vocabulary: | | | | |
| PPVT–R | .62* | — | .63* | — |
| Language: | | | | |
| Reynell Receptive Language Quotient | .86*** | — | .81** | — |
| Reynell Expressive Language Quotient | .83*** | — | .82** | — |
| Speech Intelligibility: | | | | |
| Transcription | .89** | — | .80** | — |

\*p < .05; \*\*p < .01; \*\*\*p < .001

Reynell Language Scales

The correlations obtained for the receptive and expressive scales of the Reynell and the other dependent measures are shown in Table III for the “Stars” and the “Controls.” Once again, a systematic pattern of intercorrelations can be observed among nearly all of the test scores for the “Stars.” These correlations are extremely high and statistically significant given the relatively small sample sizes used here. The strong correlations of both the Reynell receptive and expressive scores with the open-set word recognition scores on the LNT suggest a common underlying factor that is related, in some way, to spoken word recognition and lexical access. The correlations between the language scores and speech intelligibility may reflect a common or shared representational system and a set of phonological processing skills that are used in both receptive and expressive language processing tasks.

Table III.

Correlations: Language — Year 1

| Measure | Reynell Receptive Language Quotient: Stars (r) | Reynell Receptive Language Quotient: Controls (r) | Reynell Expressive Language Quotient: Stars (r) | Reynell Expressive Language Quotient: Controls (r) |
|---|---|---|---|---|
| Speech Perception: | | | | |
| Minimal Pairs — Manner | .77** | .08 | .78** | −.28 |
| Minimal Pairs — Voicing | .69* | −.63 | .61* | −.49 |
| Minimal Pairs — Place | .20 | −.01 | .31 | .33 |
| Comprehension: | | | | |
| Common Phrases — Auditory-only | .82** | — | .85*** | — |
| Common Phrases — Visual-only | .64* | — | .79** | — |
| Common Phrases — Auditory+Visual | .64* | .33 | .67* | .36 |
| Word Recognition: | | | | |
| LNT — Easy words | .86*** | — | .83*** | — |
| LNT — Hard words | .81** | — | .82** | — |
| MLNT — Easy words | .84** | — | .87*** | — |
| MLNT — Hard words | .66* | — | .76 | — |
| Vocabulary: | | | | |
| PPVT–R | .81*** | .69** | .68** | .56* |
| Speech Intelligibility: | | | | |
| Transcription | .80** | −.39 | .85** | −.13 |

\*p < .05; \*\*p < .01; \*\*\*p < .001

Speech Intelligibility

The correlations between the speech intelligibility scores and the other dependent measures are shown in Table IV separately for the “Stars” and “Controls.” Examination of this table shows once again a pattern of correlations that is very similar to those observed in the previous two tables. Speech intelligibility scores are highly correlated with language comprehension, spoken word recognition, and language development, suggesting a common underlying source of variance (see also O’Donoghue et al., 1999, for recent findings on the relationship between speech perception and production in young children with cochlear implants).

Table IV.

Correlations: Speech Intelligibility — Year 1

| Measure | Transcription Scores: Stars (r) | Transcription Scores: Controls (r) |
|---|---|---|
| Speech Perception: | | |
| Minimal Pairs — Manner | .55 | .19 |
| Minimal Pairs — Voicing | .53 | −.11 |
| Minimal Pairs — Place | .41 | −.09 |
| Comprehension: | | |
| Common Phrases — Auditory-only | .65** | .04 |
| Common Phrases — Visual-only | .87** | .25 |
| Common Phrases — Auditory+Visual | .43 | .07 |
| Word Recognition: | | |
| LNT — Easy Words | .89** | — |
| LNT — Hard Words | .80* | — |
| MLNT — Easy Words | .87** | — |
| MLNT — Hard Words | .72 | — |
| Vocabulary: | | |
| PPVT–R | .45 | −.01 |
| Language: | | |
| Reynell Receptive Language Quotient | .80** | −.39 |
| Reynell Expressive Language Quotient | .85** | −.13 |

\*p < .05; \*\*p < .01; \*\*\*p < .001

The results of the present set of analyses suggest several hypotheses about the source of the differences in performance between the “Stars” and the “Controls.” We believe these accounts are worth pursuing and evaluating in much greater depth because they suggest new and unexplored areas of basic and clinical research on pediatric cochlear implant users. Our working hypothesis places the locus of the differences in performance between the “Stars” and “Controls” at central rather than peripheral processes. This account of the source of the individual differences focuses on how the initial sensory information is encoded, stored, retrieved, and manipulated in various kinds of information processing tasks such as speech feature discrimination, spoken word recognition, language comprehension, and speech production. The emphasis here is on higher-level perceptual and cognitive factors that play a critical role in how the sensory, perceptual and linguistic information input is processed, organized, and used in various psychological tasks. One of the key components that links these various processes and operations together and serves as the “interface” between the initial sensory input and stored knowledge in memory is the working memory system. The properties of this particular memory system may provide further insights into the nature and locus of the individual differences observed among users of cochlear implants (Carpenter, Miyake, & Just, 1994; Baddeley, Gathercole, & Papagno, 1998; Gupta & MacWhinney, 1997). Unfortunately, at the time these analyses were carried out, we did not have any memory data from the “Stars” and “Controls” to test this proposal. However, several new studies have been carried out recently using new measures of performance. These results are reported in the sections below.

Some New Process Measures of Performance

It is easy to say that children who “hear” better through their cochlear implant simply learn language better and subsequently recognize words better. But it is much more difficult to explain the observed differences in speech intelligibility on the basis of better hearing and language skills without a more detailed description of exactly what these underlying skills and abilities are and on which specific cognitive processes they draw. To account for the differences in speech intelligibility performance and expressive language, it is necessary to assume some underlying linguistic structure and process that mediates between speech perception and speech production. Without access to and use of a common underlying linguistic system, a “grammar,” separate receptive and expressive language abilities and skills such as these would not be so closely coordinated and mutually dependent. Reciprocal links exist between speech perception, production, and a whole range of language-related abilities, and these links reflect the child’s linguistic knowledge of phonology, morphology, and syntax. Speech perception, spoken word recognition, and language comprehension are not isolated autonomous perceptual abilities or skills that are independent of language and the child’s developing linguistic system. The same observation is true for speech production, reading, and lipreading. An account framed in terms of hearing, audibility, or sensory discrimination abilities cannot provide a satisfactory explanation of all the results or an adequate description of the “process” of how early auditory experience affects speech perception and language development in these children. Some other process or set of processes underlies the commonalities observed across these diverse tasks.

To provide a unified account of these findings, it is necessary to obtain additional performance measures that assess how children with cochlear implants actually “process” and “code” the sensory, perceptual, and linguistic information they receive through their implants and how they store, retrieve, and use this information in a variety of information-processing tasks. The outcome measures in our database were scores on traditional standardized tests that were used for assessment of specific speech and language skills believed to be important for measuring change and success postimplantation. These tests were designed and constructed many years ago when theoretical issues about individual differences and underlying processing strategies were not an important research priority. As a result, no data are available on psychological/cognitive processes such as memory, learning, attention, automaticity, or modes of processing. These are new topics that need to be studied in greater detail in children who have received cochlear implants. We also need to learn more about the role of early auditory experience on perceptual and cognitive development, especially spoken word recognition, lexical development, language comprehension, and speech intelligibility. These factors were also not important priorities in earlier research on cochlear implants in children, but they may play a substantial role in understanding variation in performance and individual differences among children.

If we were going to examine process measures (i.e., measures of what a child does with the sensory information he or she receives through the cochlear implant), where would we look first? We could explore several areas: perception, attention, learning, and memory. We could also use many different techniques and experimental procedures. For a variety of theoretical reasons, we selected “working memory.” Working memory is known to be an important component of the human information processing system; it serves as the interface between sensory input and stored knowledge in long-term memory. Working memory has also been shown to be the source of individual differences observed across a wide range of domains from perception to memory to language (Ackerman, Kyllonen, & Roberts, 1999). To obtain some initial measures of working memory, we began by collecting digit spans from children at the Central Institute for the Deaf (CID) who were 8 and 9 years old and had used their cochlear implant for at least four years. Thus, chronological age and implant use were controlled in this study.

Working Memory Span

Methods

Subjects

For this study, 43 8- and 9-year-old children with cochlear implants were recruited from a much larger ongoing project conducted at CID. All of these children had used their implant for at least four years before being tested.

Procedure

In addition to the auditory digit span measures that were collected specifically for this study, the children also received an extensive battery of speech, language, and reading tests that were part of the original large-scale project. Forward and backward digit spans were obtained using the digit-span subtests of the Wechsler Intelligence Scale for Children-III (Wechsler, 1991). In the forward span task of the WISC-III, the child is required to repeat a list of digits in the order in which the sequence was presented. In the backward span task, the child is instructed to repeat the list of digits in the reverse order. Digit spans were obtained using live voice presentation with lipreading cues available. In both parts of the WISC digit span task, the lists began with two items and then increased in length until the child recalled two lists at a given length incorrectly, at which point the procedure was terminated. Items were not repeated within any list and each list of digits was unique. To receive full credit for a trial, the child was required to recall all of the digits in a given list in the correct temporal order. One point was awarded for each correct trial. Each child was tested individually.
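The scoring rule described above can be sketched in Python as follows. This is a hedged illustration, not the WISC-III scoring software: the function name, the data layout, and the digit lists are hypothetical, and the sketch assumes that trials are supplied in order of administration with two lists at each length.

```python
def score_digit_span(trials, backward=False):
    """Return the summed point score for a digit span protocol.

    `trials` is a list of (presented, recalled) digit lists in the order they
    were administered. One point is awarded for each list recalled completely
    and in the required order; scoring stops once two lists of the same
    length have been recalled incorrectly.
    """
    points = 0
    errors_at_length = {}
    for presented, recalled in trials:
        # Backward span requires the digits in reverse order of presentation.
        target = list(reversed(presented)) if backward else list(presented)
        if list(recalled) == target:
            points += 1
        else:
            length = len(presented)
            errors_at_length[length] = errors_at_length.get(length, 0) + 1
            if errors_at_length[length] >= 2:
                break  # discontinue rule: two failures at one list length
    return points


# Hypothetical forward-span protocol, for illustration only.
protocol = [
    ([3, 8], [3, 8]),
    ([5, 1], [5, 1]),
    ([4, 9, 2], [4, 9, 2]),
    ([7, 1, 6], [7, 6, 1]),        # order error, no credit
    ([2, 8, 5, 3], [2, 8, 3, 5]),  # first failure at length 4
    ([9, 4, 1, 7], [9, 4, 7, 1]),  # second failure at length 4, stop
    ([1, 2, 3, 4, 5], [1, 2, 3, 4, 5]),  # never administered
]
print(score_digit_span(protocol))  # 3
```

Note that the resulting score counts correct lists rather than the longest list recalled, which matches the point-based definition of span used in the analyses reported here.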

Results

Several measures of memory span performance were obtained from the response protocols. For the present analysis, digit span was defined as the number of lists correctly recalled expressed as the summed total point score. This dependent measure was used in all of the analyses reported below. The forward digit spans ranged from 0 to 8 points correct with a mean span length of 5.3 points averaged for 43 subjects. Only one child failed to carry out the digit span task and his data were not included in any of the final analyses.

Shown in Table V are the correlations of the forward auditory digit spans with several measures of speech perception performance that were obtained from these children as part of the larger project at CID. These measures included scores on a closed-set word recognition test, Word Intelligibility by Picture Identification (WIPI) (Ross & Lerman, 1979); an open-set word recognition test, the Lexical Neighborhood Test (LNT); a sentence perception test, Bamford-Kowal-Bench (BKB) (Bench, Kowal, & Bamford, 1979); a test of auditory-visual integration (CHIVE) (Tye Murray & Geers, 1997); and a test of speech feature discrimination, the Videogame Speech Pattern Contrast Test (VIDSPAC) (Boothroyd, 1997). The correlations are all positive and generally moderate to quite strong, suggesting a common underlying source of variance. In interpreting these simple first-order correlations, it is possible to account for the memory span results in terms of purely sensory factors such as audibility or basic speech discrimination skills that propagate and cascade up the processing system. According to this account, children who display longer digit spans simply perceive speech better and have more detailed and robust sensory representations of the speech waveforms than children who have shorter digit spans.

Table V.

Correlations: Speech Perception (a)

| Measure | Forward Auditory Digit Span, r (n = 43) |
|---|---|
| Spoken Word Recognition: | |
| WIPI | +.71* |
| LNT | +.64* |
| BKB | +.59* |
| Auditory+Visual: | |
| CHIVE V (Lipreading) | +.52* |
| CHIVE VE (visual enhancement) | +.66* |
| Speech Feature Discrimination: | |
| VIDSPAC | +.59* |

(a) Adapted from Pisoni & Geers, 1998.

\*p < .01

To assess this explanation, a series of partial correlations was also computed using performance on the VIDSPAC, a test of speech feature discrimination, as a measure of speech discrimination performance. When the variance due to speech feature discrimination was partialed out, the correlations were reduced in size but they were still statistically significant. This suggests that the results were due not to audibility or basic sensory discrimination skills but were related in some manner to the way in which the initial sensory information is encoded, processed, and retrieved from memory. Processing differences among these children may reflect fundamental limitations on the capacity of working memory in terms of the speed and efficiency with which sensory information can be encoded and rehearsed using a cochlear implant. These differences in information processing may affect the initial encoding, rehearsal, and scanning of information in working memory. The pattern of correlations between digit span and the speech perception tests also suggests the presence of a source of variance in these tests that is associated in some way with processing operations, that is, what the child does with the initial sensory information he or she receives through the cochlear implant.
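For readers unfamiliar with partialing, the standard first-order partial correlation formula can be sketched as follows. The function name `partial_correlation` is hypothetical, and the sketch is not the analysis code used in the study. In the example, the two correlations involving digit span are taken from Table V, whereas the WIPI-VIDSPAC correlation is not reported in this section and is replaced by a purely illustrative placeholder.

```python
import math


def partial_correlation(r_xy, r_xz, r_yz):
    """First-order partial correlation between x and y, controlling for z."""
    numerator = r_xy - r_xz * r_yz
    denominator = math.sqrt((1.0 - r_xz ** 2) * (1.0 - r_yz ** 2))
    return numerator / denominator


# x = forward digit span, y = WIPI, z = VIDSPAC
r_span_wipi = 0.71      # from Table V
r_span_vidspac = 0.59   # from Table V
r_wipi_vidspac = 0.60   # HYPOTHETICAL placeholder, not a reported value

r_partial = partial_correlation(r_span_wipi, r_span_vidspac, r_wipi_vidspac)
```

With any placeholder in this range, the partial correlation is smaller than the simple correlation of .71 but remains clearly above zero, which mirrors the qualitative pattern described above.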

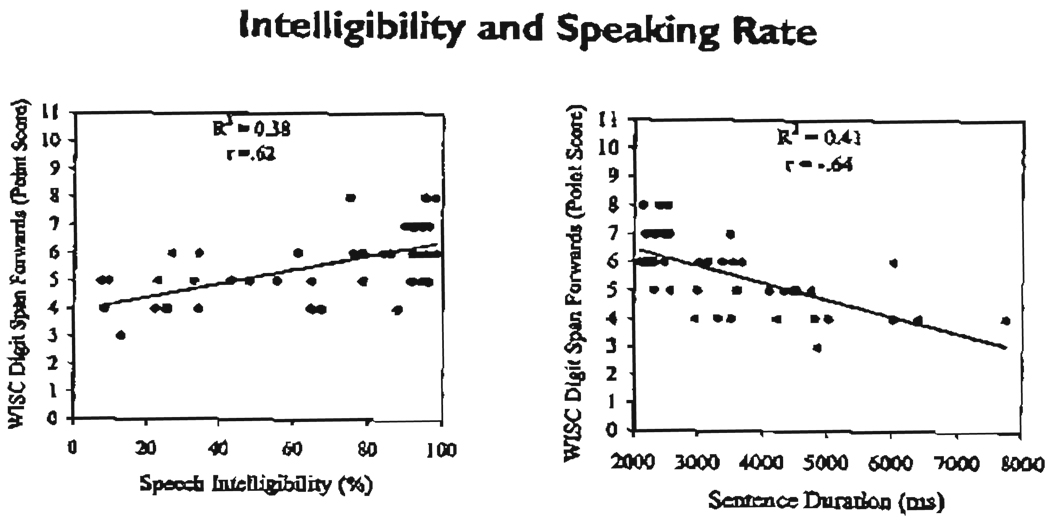

Two other theoretically important findings were also obtained in this study of working memory using the WISC digit span task. As part of the larger project at CID, measures of speech intelligibility were also obtained from these children using the elicitation materials originally developed by McGarr (1981). These methods are similar to the intelligibility study described earlier. Utterances were obtained from each child, and then played back to adults with normal hearing who were asked to transcribe the sentences. The transcription scores based on words correctly recognized from three listeners were pooled for each child and a composite measure of speech intelligibility was obtained. In addition to the speech intelligibility scores, we also obtained measures of the sentence durations for the speech samples. We then computed a correlation between the auditory digit spans from the WISC and the speech intelligibility scores for these children. A scatterplot of the individual subjects is shown in the top panel of Figure 9. The WISC digit span is represented on the ordinate and the McGarr Speech Intelligibility score is represented on the abscissa. Examination of this figure shows an orderly relationship between these two measures. Subjects with longer digit spans tend to display higher levels of speech intelligibility. The correlation was r = +.62 (p < .001), suggesting a strong association between working memory span and speech intelligibility. This particular correlation is especially important because it suggests a reciprocal relationship between speech perception and speech production, and implies that the two processes are closely linked and draw on a common set of processing resources that are related to the retrieval and maintenance of phonological representations of spoken words in working memory.

Figure 9.

Left panel shows a scatterplot of WISC forward digit spans in points as a function of speech intelligibility; right panel shows the WISC forward digit spans in points as a function of sentence duration in milliseconds.

In addition to the speech intelligibility scores, the duration measurements obtained from each child were also analyzed. Correlations were carried out between the WISC digit span measures and the average sentence durations for the utterances produced by each child. A scatterplot of the individual subject data is shown in the bottom panel of Figure 9. The WISC digit span is shown on the ordinate; the average sentence duration is shown on the abscissa. Once again, we see an orderly and systematic relationship between working memory span and sentence duration. Subjects who display longer digit spans tend to produce sentences with shorter overall durations. The correlation between these two variables was r = −.64 (p < .001). This finding suggests that children who speak faster may have faster rehearsal speeds in working memory and this may be the reason why these subjects are able to recall longer sequences of digits.

The finding on memory span and speaking rate is consistent with a large body of earlier research on verbal short-term memory which demonstrates a close relation between memory span and the fastest rate at which a person can pronounce a short list of words (Baddeley, Thomson, & Buchanan, 1975; Chase, 1977; Schweickert & Boruff, 1986). Several recent studies have suggested that this global information processing rate may actually reflect the combined effects of two independent processes, one related to speed of articulation and the other to the retrieval of words from short-term memory (Schweickert, Guentert, & Hersberger, 1990; Cowan, Wood, Wood, Keller, Nugent, & Keller, 1998). Cowan and colleagues (1998) suggest that while both of these processes affect memory span, they are actually independent of each other. Thus, memory span may depend on several component processes that are occurring simultaneously. Without additional data, it is not possible to dissociate the contribution of these two effects in the present analyses, but the results clearly demonstrate a strong relation between digit span and a specific processing mechanism related to the rate at which information is encoded, rehearsed, and subsequently output in an immediate serial recall task. These findings provide additional converging support for the proposal that the variation in the underlying component processes may account for the large differences in outcome performance on a wide range of audiological tests.

The correlations between WISC digit span and the four sets of outcome measures obtained from these children clearly demonstrate that the working memory component of the human information processing system is involved in mediating performance across a wide range of language-related tasks such as speech perception, speech production, spoken word recognition, and language comprehension. Thus, processes related to the encoding, rehearsal, and short-term storage of spoken words play an important role in the component underlying abilities and skills that are actually being measured by the four different language-based outcome measures.

The correlations observed between the WISC digit spans and the two measures obtained from the speech production task, speech intelligibility scores and sentence durations, provided some valuable new information about the specific processing mechanisms that might be responsible for the differences in working memory capacity and the correlations found with other language processing measures. Both findings suggest that some aspect of the rehearsal process may be the locus of the individual differences observed in these children. Although these are only correlational data and must be interpreted cautiously, the relationship observed between WISC digit span and rehearsal speed suggests a number of new research directions to pursue in order to test this hypothesis more directly. The next experiment was designed to investigate coding and rehearsal strategies using a new experimental procedure to measure sequence memory.

Coding and Rehearsal Processes in Working Memory

The findings obtained using the WISC auditory digit span as a measure of short-term memory capacity were informative and suggested that some processing variable related to working memory may underlie the large individual differences in outcome measures observed in children with cochlear implants. Gaining a detailed understanding of how young children encode and manipulate the phonological representations of spoken words may provide further insights into the development of their spoken language abilities and skills and might help explain the underlying basis of the variability in performance in terms of information processing variables.

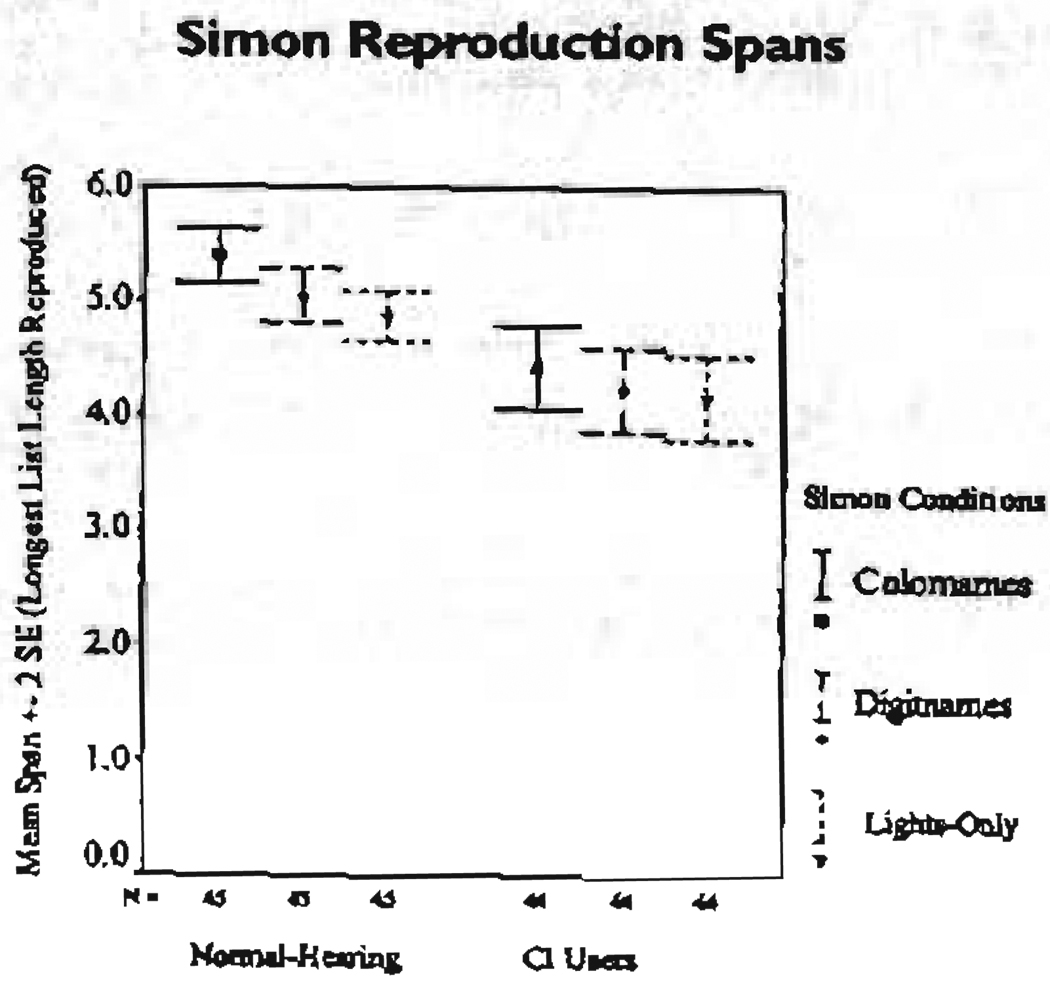

Because some children with cochlear implants may have difficulty producing intelligible speech due to differences in speaking rate and fluency of articulation, it was necessary to find an experimental procedure that did not require the child to produce an explicit verbal output response. To meet this need, we recently developed a new procedure to measure working memory span modeled after Milton Bradley’s Simon®, the popular electronic game with four large colored response buttons. Children are presented with a sequence of sounds and colored lights and are asked to immediately reproduce the sequence in the order in which it was originally presented. They enter their response by depressing the appropriate sequence of buttons on the response box.

The Simon memory game apparatus and experimental procedures we developed have a number of useful attributes that were explored in the experiment described below. First, the difficulty of the task can be adjusted by simply increasing the length of the sequence to be reproduced. Second, using an adaptive testing procedure running under computer control, it is possible to locate quickly the longest sequence a child can reproduce under a given experimental condition and use that value as a measure of the child’s memory span. Finally, it is possible, as shown below, to manipulate the properties of both the visual and auditory stimuli separately or in combination and the contingencies between them at the time a sequence is presented. This particular feature permitted us to study how redundancies between visual and auditory cues are perceived, encoded, and processed, and how correlations between these two stimulus dimensions would affect memory span for reproducing sequences of sounds or sequences of lights or sequences of sounds and lights together.

In the experiment described below, we obtained reproductive spans using the Simon memory game under three presentation conditions that were designed to manipulate the redundancies across dimensions. In the first condition, whenever one of the four colored lights was illuminated on the display, the color-name of the response button was also simultaneously output as an auditory signal. In the second condition, whenever a colored light was illuminated, a digit-name was output as an auditory signal. The digits were always consistently mapped to a response button and remained invariant for an individual child. Finally, in the third condition, visual patterns were generated using only the lights with no auditory stimuli. This last condition — the lights-only presentation — served as an important control condition to assess the extent to which a child would be able to take advantage of the cross-correlations between stimulus dimensions presented in two different modalities.
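The three presentation conditions can be summarized with a short Python sketch. It is a hypothetical illustration, not the software used in the experiment: the function names, the random assignment of digit-names to buttons, and the sampling of buttons with replacement are assumptions. The four color-names and four digit-names are the words recorded for the study.

```python
import random

COLORS = ["red", "blue", "green", "yellow"]   # the four response buttons
DIGITS = ["one", "three", "five", "eight"]    # digit-names used as sounds


def make_digit_mapping(rng):
    """Assign one digit-name to each button; fixed for an individual child."""
    digits = DIGITS[:]
    rng.shuffle(digits)
    return dict(zip(COLORS, digits))


def build_sequence(length, condition, digit_map, rng):
    """Return a list of (light, sound) events for one trial."""
    events = []
    for _ in range(length):
        light = rng.choice(COLORS)       # assumption: buttons may repeat
        if condition == "color-names":
            sound = light                # redundant color-name
        elif condition == "digit-names":
            sound = digit_map[light]     # consistently mapped digit-name
        elif condition == "lights-only":
            sound = None                 # visual pattern only
        else:
            raise ValueError(f"unknown condition: {condition}")
        events.append((light, sound))
    return events


rng = random.Random(1)                   # seeded only for reproducibility
child_map = make_digit_mapping(rng)
trial = build_sequence(4, "digit-names", child_map, rng)
```

Because `child_map` is created once per child and reused on every trial, a given button is always paired with the same digit-name, which is the consistent mapping described above.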

Methods

Subjects

Two groups of 45 children were recruited for this study. One group consisted of 45 prelingually deaf children with cochlear implants who were obtained from the large-scale project underway at CID. All of these children were between 8 and 9 years of age and all of them had used their cochlear implant for at least four years. None of the children served in the previous experiment. The second group consisted of 45 children with normal hearing who were recruited as a comparison group. These children were matched for gender and chronological age with the children from CID. The children with normal hearing were obtained from Bloomington, Indiana, using names recorded from birth announcements that were published in the local newspaper. A hearing screening was carried out on each child with normal hearing to ensure there were no hearing problems at the time of testing. Left and right ears were screened separately using a Maico audiometer (Model MA 27®) and Telephonics TDH-39® headphones.

Procedures

The auditory signals used in this experiment were obtained from recordings made by a male talker. He recorded tokens of the following eight words: “red,” “blue,” “green,” “yellow,” “one,” “three,” “five,” and “eight.” The words were spoken in a clear voice at a moderate-to-slow speaking rate and were recorded digitally in real time using a 16-bit A–D converter running at 22 kHz. The amplitudes of the digital speech files were equated using software to achieve equal loudness. All of the auditory stimuli were output using a SoundBlaster AWE64® sound card and were presented over a loudspeaker at approximately 70 dB SPL.

Forward and backward WISC digit spans were also obtained from each child using the procedures described in the experiment cited above. Digit spans were obtained using live voice presentation by the examiner with visual cues to lipreading present. Presentation of the auditory and visual sequences in the Simon memory game and collection of the child’s responses was controlled by a computer program running on a personal computer. The response box consisted of a highly modified version of the Simon game that had been rebuilt and interfaced to the computer so that the lights and sounds could be varied and controlled independently by the experimenter under program control. The computer program automatically tracked the child’s performance in a given condition using an adaptive testing procedure developed by Levitt (1970) that is frequently used in psychophysical experiments.
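The general logic of an adaptive tracking procedure of this kind can be sketched as a simple one-up/one-down staircase. The exact rule, step size, and stopping criterion used in the experiment are not specified here, so everything in this Python sketch is an assumption, including the function name, the stopping parameters, and the simulated child in the example.

```python
def run_adaptive_span(reproduces, start_length=1, max_trials=20, max_reversals=4):
    """Estimate a reproduction span with a one-up/one-down staircase.

    reproduces -- callable taking a sequence length and returning True if
                  the child reproduced a sequence of that length correctly
    Returns the longest sequence length reproduced correctly.
    """
    length = start_length
    longest_correct = 0
    last_direction = None
    reversals = 0
    for _ in range(max_trials):
        correct = reproduces(length)
        if correct:
            longest_correct = max(longest_correct, length)
        direction = 1 if correct else -1   # lengthen after success, shorten after error
        if last_direction is not None and direction != last_direction:
            reversals += 1                 # the track changed direction
            if reversals >= max_reversals:
                break
        last_direction = direction
        length = max(1, length + direction)
    return longest_correct


# Simulated child (hypothetical) who always succeeds up to four items.
estimated_span = run_adaptive_span(lambda n: n <= 4)
```

The track climbs quickly to the region where performance breaks down and then oscillates around it, which is how an adaptive rule can locate the longest reproducible sequence in relatively few trials.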

This experiment was designed to measure reproduction spans using sequences of stimuli presented under three conditions: color-names and lights, digit-names and lights, and lights-only. Both groups of subjects received all three conditions using a within-subject design. The lights-only condition was always completed last in the series; the other two conditions were counterbalanced across subjects.
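One way to realize this ordering constraint is sketched below in Python. The alternation by subject index is an assumption made for illustration, and the function name `condition_order` is hypothetical; the original assignment scheme is not described.

```python
def condition_order(subject_index):
    """Return the order of the three conditions for one subject.

    The two auditory-visual conditions alternate across subjects
    (assumed scheme); the lights-only condition is always last.
    """
    first_two = ["color-names and lights", "digit-names and lights"]
    if subject_index % 2 == 1:
        first_two.reverse()
    return first_two + ["lights-only"]


orders = [condition_order(i) for i in range(4)]
```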