Abstract

Background

Health care professionals worldwide attend courses and workshops to learn evidence-based medicine (EBM), but evidence regarding the impact of these educational interventions is conflicting, of low methodologic quality and lacking in generalizability. Furthermore, little is known about determinants of success. We sought to measure the effect of EBM short courses and workshops on knowledge and to identify course and learner characteristics associated with knowledge acquisition.

Methods

Health care professionals with varying expertise in EBM participated in an international, multicentre before–after study. The intervention consisted of short courses and workshops on EBM offered in diverse settings, formats and intensities. The primary outcome measure was the score on the Berlin Questionnaire, a validated instrument measuring EBM knowledge that the participants completed before and after the course.

Results

A total of 15 centres participated in the study and 420 learners from North America and Europe completed the study. The baseline score across courses was 7.49 points (range 3.97–10.42 points) out of a possible 15 points. The average increase in score was 1.40 points (95% confidence interval 0.48–2.31 points), which corresponded with an effect size of 0.44 standard deviation units. Greater improvement in scores was associated (in order of greatest to least magnitude) with active participation required of the learners, a separate statistics session, fewer topics, less teaching time, fewer learners per tutor, larger overall course size and smaller group size. Clinicians and learners involved in medical publishing improved their score more than other types of learners; administrators and public health professionals improved their score less. Learners who perceived themselves to have an advanced knowledge of EBM and had prior experience as an EBM tutor also showed greater improvement than those who did not.

Interpretation

EBM course organizers who wish to optimize knowledge gain should require learners to actively participate in the course and should consider focusing on a small number of topics, giving particular attention to statistical concepts.

From the time the term evidence-based medicine (EBM) was coined,1 proponents of this approach to health care delivery have offered courses and workshops to teach and disseminate it. Some observers have expressed skepticism regarding the effectiveness of teaching EBM,2 and multiple systematic reviews have not clarified which teaching approaches are optimal.3-7 Nevertheless, the demand for courses and learning opportunities for EBM continues to increase, and EBM concepts have been disseminated worldwide.

Traditionally, EBM courses have involved problem-based, self-directed learning in which learners work in small groups supported by tutor-facilitators. Although the ingenuity of medical educators has led to the development of variations on the teaching approach and new educational formats targeted to particular settings, learner characteristics and local resources,8-10 short courses and workshops remain a pillar of EBM dissemination. Empirical evidence to guide the design and delivery of short courses and workshops continues, however, to be limited. To close this gap, we undertook an international study to determine whether teaching EBM can produce changes in knowledge across a heterogeneous sample of course formats, course content and target groups and to explore which course features and learner characteristics are particularly suited to the acquisition of EBM knowledge.

Methods

Recruitment of workshops and learners

Through course announcements on the Internet, in an EBM mailing list (EVIDENCE-BASED-HEALTH@JISCMAIL.AC.UK) and in publications,11 as well as through personal contacts, we identified short courses and workshops designed to teach EBM and invited the coordinators to participate in the study. We did not restrict participation by country, course format, setting or teaching style. We targeted courses for health care professionals but did not exclude courses for students.

Design

We performed a multicentre before–after study addressing the impact of short courses and workshops on EBM knowledge.12 The primary outcome measure was the score on the Berlin Questionnaire, a validated instrument measuring EBM knowledge that the participants completed before and after the course.11 To balance any potential difference in difficulty between the 2 alternative versions of the Berlin Questionnaire (designated sets A and B), we randomly assigned the learners to complete either set A or set B immediately before the course and then the other set immediately after the course. We advised course organizers to describe the study design to the learners and to inform them that participation was voluntary and that individual results would not be disclosed to learners.
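The assignment of questionnaire order can be illustrated with a minimal sketch. It assumes simple (unrestricted) randomization, which the text does not specify; the function name, the learner identifiers and the seed are hypothetical.

```python
import random


def assign_questionnaire_order(learner_ids, seed=None):
    """Randomly assign each learner set A or set B before the course.

    The other set is then completed after the course. Simple randomization
    is an assumption; the article does not describe the exact scheme.
    """
    rng = random.Random(seed)
    assignments = {}
    for learner_id in learner_ids:
        pre = rng.choice(["A", "B"])
        post = "B" if pre == "A" else "A"
        assignments[learner_id] = {"pre": pre, "post": post}
    return assignments


# Hypothetical learner identifiers, for illustration only.
example_assignments = assign_questionnaire_order(
    ["L01", "L02", "L03", "L04"], seed=1
)
```

Because every learner completes both sets, any difference in difficulty between sets A and B affects the pre-course and post-course scores in a balanced way on average.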

Course characteristics

Coordinators of eligible courses provided information regarding the format and content of their course, including its duration, total teaching time, the number of tutors, the mix of small- and large-group activities, the number of learners in the entire course, the number of learners in each small group, and the target groups. Coordinators also indicated the learners’ expected level of engagement: compulsory engagement (e.g., case preparations or presentations), optional engagement (e.g., discussions) or no expectation of engagement (e.g., lectures). We asked about topics covered (therapy, diagnosis, prognosis, harm, meta-analysis, guidelines and study design), whether additional sessions devoted to searching the literature, statistics and teaching techniques were offered, and about the cost.

Learner characteristics

We asked learners to provide personal information regarding their age and sex, education, current position, occupation, prior exposure to EBM (e.g., had attended a session lasting less than 1 day, participated in a workshop, read a book, or taught as a tutor) and their assessment of their knowledge of EBM. We also asked them whether their participation in the course was mandatory or voluntary.

Assessment tool: the Berlin Questionnaire

The Berlin Questionnaire11 consists of 2 separate sets (A and B) of 15 multiple-choice questions built around typical clinical scenarios and focusing on therapy and diagnostics (4 questions each) and meta-analysis, prognosis and harm (1 question each), with 4 questions that cover a variety of categories. Each question has 5 response options. The questionnaire addresses the following domains of EBM: relating a clinical problem to a clinical question, identifying study design and interpreting the evidence.13 The Berlin Questionnaire has documented content validity and internal consistency. The instrument is able to discriminate different levels of knowledge and is responsive to change.11

Analysis

We recorded the number of correct answers for each questionnaire administration and calculated the difference in respondents’ scores before and after the course. To identify factors associated with degree of change in questionnaire score (the dependent variable), we constructed a 2-level linear mixed model with course as the upper (cluster) level and learner as the lower (individual) level. Potential confounders and predictors were entered into or deleted from the model step by step as described below. All models included questionnaire sequence (A or B first) and baseline score.
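The base 2-level model can be written out as follows. This is a sketch in notation introduced here for illustration, assuming a random intercept for course only; the article does not state the exact specification.

```latex
\Delta_{ij} = \beta_0 + \beta_1\,\mathrm{sequence}_{ij} + \beta_2\,\mathrm{baseline}_{ij} + u_j + \varepsilon_{ij},
\qquad u_j \sim N(0, \sigma_u^2), \quad \varepsilon_{ij} \sim N(0, \sigma_\varepsilon^2)
```

Here \Delta_{ij} is the change in score for learner i in course j, u_j is the course-level (cluster) effect and \varepsilon_{ij} is the learner-level residual. Course-level and learner-level predictors would enter as additional fixed-effect terms.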

To estimate the variance in knowledge gain between courses and between learners by restricted maximum likelihood (REML) estimates and the intraclass correlation (the variance ratio) that measures the cluster effect that results from the differences between courses, we began by constructing a model that included only sequence and baseline score. We calculated not only the main outcome (score change) but also the corresponding effect size (i.e., the difference in means divided by the square root of the pooled variance of the baseline score).14 This allows comparison with the results of other studies and reflects a recent trend in evidence-based education.15 In accordance with Cohen’s interpretation, we labelled an effect size of 0.2 as small, an effect size of 0.5 as moderate and an effect size of 0.8 as large.14
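The effect size calculation can be sketched as below. The grouping used for the pooled variance, the function names and the example scores are assumptions made for illustration; they are not the study data. Labelling an effect by the nearest of Cohen's benchmarks is likewise an assumption about how intermediate values might be handled.

```python
from statistics import variance


def pooled_variance(groups):
    """Pooled sample variance across groups, weighted by degrees of freedom."""
    numerator = sum((len(g) - 1) * variance(g) for g in groups)
    denominator = sum(len(g) - 1 for g in groups)
    return numerator / denominator


def effect_size(mean_change, baseline_groups):
    """Mean change divided by the square root of the pooled baseline variance."""
    return mean_change / pooled_variance(baseline_groups) ** 0.5


# Cohen's benchmarks as cited in the text.
COHEN_BENCHMARKS = {0.2: "small", 0.5: "moderate", 0.8: "large"}


def nearest_cohen_label(d):
    """Return the label of the benchmark closest to |d| (an assumed convention)."""
    closest = min(COHEN_BENCHMARKS, key=lambda b: abs(b - abs(d)))
    return COHEN_BENCHMARKS[closest]


# Hypothetical baseline scores for two groups of learners.
baseline_a = [5, 7, 9, 6, 8]
baseline_b = [4, 8, 10, 7, 6]
example_pooled = pooled_variance([baseline_a, baseline_b])
example_d = effect_size(1.5, [baseline_a, baseline_b])
```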

We then entered 8 additional potential predictors (degree of active participation [required/encouraged], total number of learners, group size, ratio of tutors to learners, English or German language of questionnaire, number of topics, separate statistics session and total teaching time) at the course level and 11 potential predictors at the learner level (age, sex, degree in epidemiology or public health, current occupation, current position, education, previous exposure to EBM [had attended a session lasting less than 1 day, participated in a workshop, read a book, or taught as a tutor] and self-rated EBM knowledge) to the model and reduced the model step by step by deleting non-significant predictors, starting with the predictors with the highest p value. We stopped the stepwise backward elimination when only variables with p values below 0.05 remained in the model. We report the estimated parameter contrasts or regression coefficients of the final model with confidence limits and p values. All analyses were done using SPSS 13.5 (SPSS Inc., Chicago, Ill.).
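The stepwise backward elimination described above can be sketched generically. The analysis itself was run in SPSS; the `fit_p_values` callable below is a hypothetical stand-in for refitting the mixed model, and the toy p values are invented purely to show the control flow.

```python
def backward_eliminate(candidates, fit_p_values, forced=(), alpha=0.05):
    """Stepwise backward elimination of non-significant predictors.

    candidates: predictor names eligible for removal.
    fit_p_values: callable taking a list of predictor names and returning
        {name: p_value} for the refitted model (hypothetical interface).
    forced: predictors always kept (here, sequence and baseline score).
    """
    remaining = list(candidates)
    while remaining:
        p_values = fit_p_values(list(forced) + remaining)
        # Find the removable predictor with the highest p value.
        worst = max(remaining, key=lambda name: p_values[name])
        if p_values[worst] < alpha:
            break  # every remaining candidate is below the threshold
        remaining.remove(worst)
    return list(forced) + remaining


# Toy p values (invented); a real fit would change after each removal.
TOY_P = {
    "sequence": 0.30,
    "baseline": 0.001,
    "active_participation": 0.001,
    "language": 0.62,
    "group_size": 0.04,
    "sex": 0.45,
}


def toy_fit(names):
    return {name: TOY_P[name] for name in names}


selected = backward_eliminate(
    ["active_participation", "language", "group_size", "sex"],
    toy_fit,
    forced=["sequence", "baseline"],
)
```

Keeping sequence and baseline score outside the candidate list mirrors the statement that all models included these two variables.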

Ethical approval was granted by the King’s College research ethics committee (PG) and by the McMaster University research ethics committee (HJS). At all other participating centres, waivers of review were obtained because the study involved educational research or there was no requirement for ethical approval.

Results

Selection of courses and participants

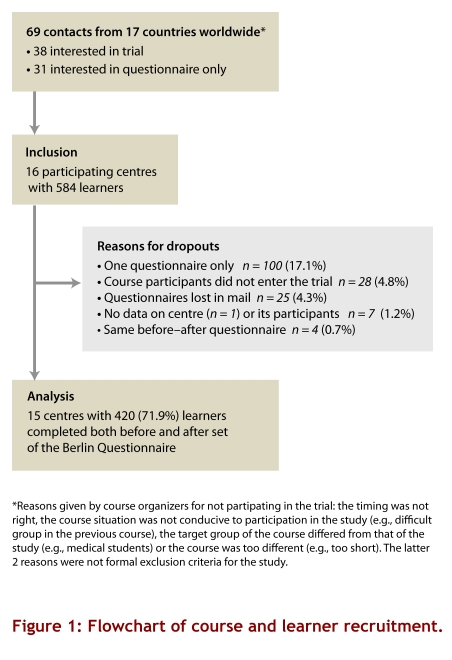

We established contacts with 69 course organizers from 17 countries; 16 of these course organizers (United States, n = 2; Canada, n = 1; New Zealand, n = 1; and Europe, n = 12) agreed to participate. In total there were 584 learners in the participating courses. Figure 1 summarizes the recruitment of courses and learners. We excluded 1 course that did not provide centre and participant information. One hundred (17.1%) participants provided only 1 questionnaire; 34 of these single questionnaires came from a course that had a tight schedule and a high proportion of participants who had to catch flights after the course. Another 25 questionnaires were lost in the mail. Our final sample included 15 courses with 420 learners (71.9%).

Figure 1.

Flowchart of course and learner recruitment

Course characteristics

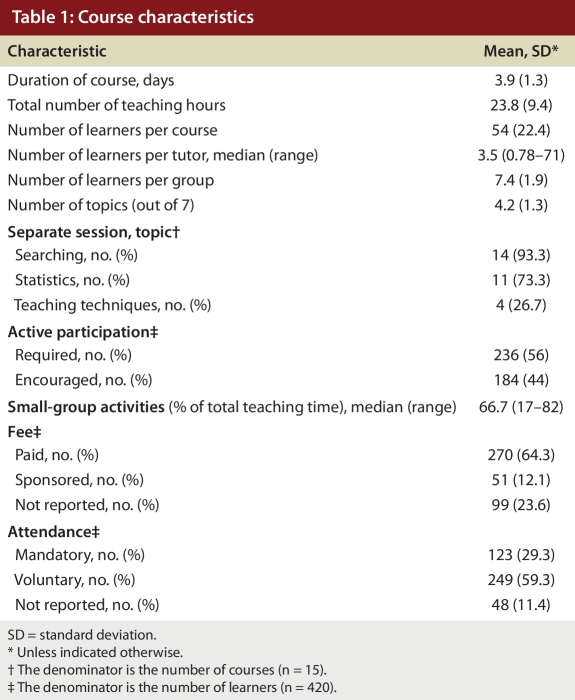

Many centres used a modified “McMaster course” format:16 there was a strong emphasis on small-group sessions (a median of 67% of total teaching time in the courses in this study), a high ratio of tutors to learners (a median of 3.5 learners per tutor) and unambiguous expectations of active participation (56% of all learners were required to participate in their course) (Table 1). The courses had between 7 and 98 participants and lasted between 1.3 and 6 days; the median teaching time was 23.8 hours. They covered an average of 4.2 (range 3–6) of 7 possible topics: therapy (n = 14; 93%); meta-analysis (n = 13; 87%); diagnosis (n = 10; 67%); study design, harm and prognosis (each n = 6; 40%); and guidelines (n = 4; 27%).

Table 1.

Course characteristics

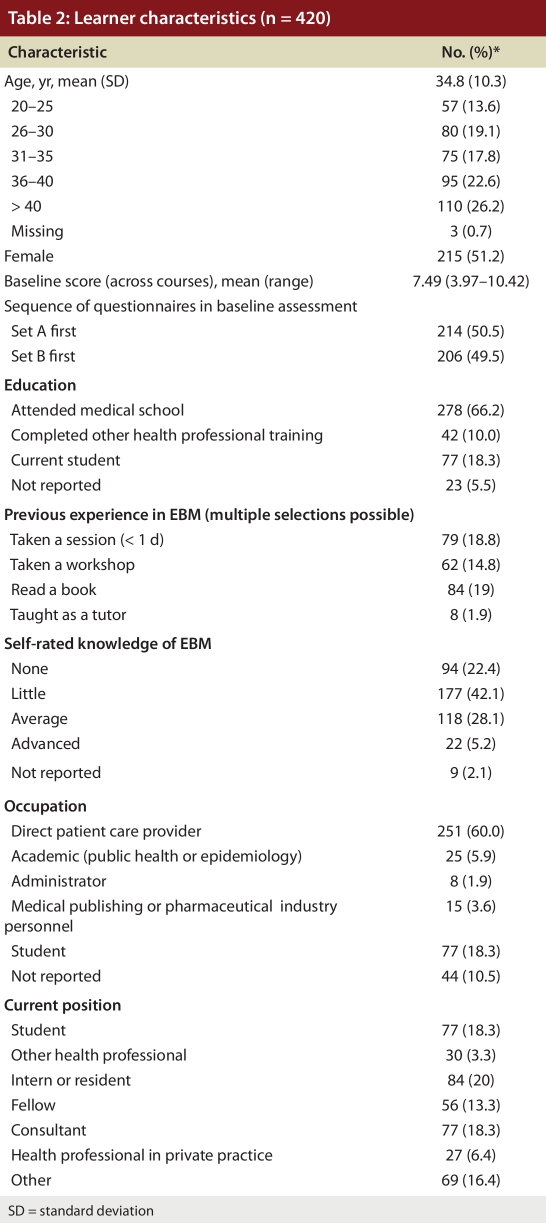

Learner characteristics

Two-thirds of the learners had completed medical school (Table 2). Few of the 420 learners had previous exposure to EBM; 42.1% (177) rated their knowledge on EBM as little and 22.4% (94) as non-existent.

Table 2.

Learner characteristics

Gain in knowledge

The mean baseline score across the 15 courses was 7.49 points (range 3.97–10.42 points). Set B (mean score 7.29 points, standard deviation [SD] 3.34) proved to be slightly more difficult than set A (mean score 7.71 points, SD 3.20; difference 0.42 points, 95% confidence interval [CI] 0.85–0.02). The mean post-test score was 8.89 points (range 4.77–10.91 points).

The average increase in score, adjusted for baseline score and questionnaire sequence, was 1.40 points (95% CI 0.48–2.31). This gain corresponds to an effect size of 0.44 of the baseline SD of the pooled sample of participants. There was significant variation in knowledge gain among courses (SD 1.50, p = 0.031) and highly significant variation among learners within courses (SD 2.14, p < 0.001).
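As a rough check, the intraclass correlation (the variance ratio described in the Analysis section) can be computed from the two SDs above. This sketch assumes that the reported SDs are the square roots of the between-course and within-course variance components; the function name is hypothetical.

```python
def intraclass_correlation(sd_between, sd_within):
    """Share of total variance attributable to differences between clusters."""
    var_between = sd_between ** 2
    var_within = sd_within ** 2
    return var_between / (var_between + var_within)


# SDs reported in the text: 1.50 among courses, 2.14 among learners within courses.
icc = intraclass_correlation(sd_between=1.50, sd_within=2.14)
print(f"{icc:.2f}")  # prints 0.33
```

Under that assumption, about one-third of the variation in knowledge gain lies between courses.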

A total of 100 learners filled in only 1 questionnaire. Of these, 81 participants from 13 courses answered the pre-course questionnaire only. Their baseline score was 7.04 points (95% CI 6.28–7.80). Another 19 participants from 9 courses completed only the post-course questionnaire. They achieved a post-course score of 8.68 points (95% CI 7.34–10.02). The scores of learners who completed only the pre- or the post-course questionnaire did not differ significantly from the corresponding scores of those who completed both (p > 0.05 in both cases).

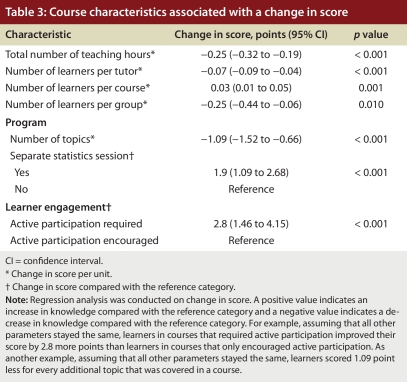

Factors associated with a change in knowledge and skills

We found 7 course variables that were independently associated with change in knowledge (Table 3). Greater improvements in knowledge were associated with (in order of decreasing magnitude) a requirement for active engagement in the learning process, a separate statistics session, fewer topics covered in a given time, less teaching time, smaller numbers of learners per tutor, more participants in the entire course and smaller groups. The 7 course variables together explain one-third of the total variation in knowledge gain (intraclass correlation coefficient 33%).

Table 3.

Course characteristics associated with a change in score

Predicting learning success on an individual level proved more difficult. Prior knowledge was the strongest learner determinant for change in knowledge: participants with a lower baseline score improved their score more than did those with higher baseline scores (Table 4). Of the other 11 learner variables, 3 independently influenced change: current occupation (we observed a gradient in scores across learners with jobs in the pharmaceutical industry or medical publishing, learners involved in patient care, and administrators and epidemiologists or public health professionals), self-rating (we observed a gradient of change from learners describing themselves as having advanced knowledge, who showed the greatest improvement, to those describing themselves as having average knowledge, who showed less improvement, to those describing themselves as having little or no knowledge, who showed the least improvement) and previous experience as a tutor (those who had previous experience improved more than those who did not). Once baseline knowledge entered the model, additional learner characteristics explained 7.2% of the residual variance.

Table 4.

Learner characteristics associated with a change in score

Interpretation

In this study of 15 EBM courses with diverse settings, formats and participants, we demonstrated an increase in EBM knowledge among most participants and identified key course features that influence knowledge acquisition.

What is the significance of the mean effect size of 0.44 (corresponding to an increase of 1.40 correct answers)? This change is greater than that achieved by most single-centre courses. A recent systematic review examining 24 randomized, non-randomized and before–after studies of postgraduate EBM interventions7 found a median effect size of 0.36 with an interquartile range of 0.31–0.42. We observed a larger effect even though the simultaneous consideration of courses (clusters) and individual learners in our model inflates the variance and therefore provides a more conservative estimate of the effect size than would a consideration of a single course. Furthermore, the outcome assessment tool we used, the Berlin Questionnaire, includes questions addressing studies of harm and prognosis that were not covered in all course programs. Thus, an outcome measure targeted specifically to course content may have shown even larger effects. However, some courses had a substantially greater impact than others. Course differences contributed one-third to the total variability in knowledge gain between learners.

Another way of thinking about the change of 1.40 is in terms of the differences found in the scores of different categories of clinicians. We previously demonstrated that clinicians without formal EBM training have a mean score of 4.2, those enrolling in EBM courses (presumably because they have a particular interest in the subject and have done some prior reading) have a mean score of 6.3 and experts have a mean score of 11.9.11 Thus, a mean change of 1.40 would take an individual without prior knowledge of EBM approximately one-fifth of the way to achieving the score that one might expect from an expert.
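The "one-fifth" figure follows from simple arithmetic on the scores quoted above, as this short sketch shows. The variable names are illustrative.

```python
# Mean scores quoted in the text.
untrained_mean = 4.2   # clinicians without formal EBM training
expert_mean = 11.9     # EBM experts
mean_gain = 1.40       # average increase observed in this study

# Fraction of the gap between untrained clinicians and experts
# that is covered by the mean gain.
fraction_of_gap = mean_gain / (expert_mean - untrained_mean)
print(f"{fraction_of_gap:.2f}")  # prints 0.18
```

A fraction of about 0.18 is close to one-fifth of the 7.7-point gap between untrained clinicians and experts.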

This study has a number of strengths. Because of the diversity in course format, content, participants and geographic location, this study provides a picture of the impact of a broad range of EBM courses. We used the same well-validated instrument, the Berlin Questionnaire, for outcome assessment in all courses, which allowed a valid comparison of the learning effect across courses. The study design, with learners randomly assigned to complete one or the other version of the Berlin Questionnaire first, minimized possible bias. Our calculation of effect size allowed comparison with the impact of courses previously reported in the literature.7 Most importantly, we collected detailed information about each course, which allowed us to construct a sophisticated statistical model that explained the variability in knowledge gain across courses. The association of knowledge gain with some variables was very strong: required active engagement of learners was associated with a difference of 2.8 items answered correctly. This is a very large effect in a questionnaire with 15 items (Table 3). The ability to explain the variability in outcomes to this extent is unusual and provides planners of subsequent courses and workshops, one of whose goals is to improve knowledge, with guidance in structuring their program.

Many of the course features that proved to be significant determinants of knowledge gain in our analysis are consistent with theories of problem-based learning.17 An exploratory matching of the characteristics of problem-based learning with similar terms from a synthesis of 302 meta-analyses on educational and psychological interventions18 found small to moderate effect sizes for the following characteristics: “individualized learning” (effect size 0.23), “cooperative learning” (effect size 0.54), “small group process” (effect size 0.31), “using problems” (effect size 0.2) and “instructions for problem-solving provided” (effect size 0.54).19

One limitation of our study is the before–after design, which produces weaker inferences than a randomized trial. Thus, one might question the extent to which courses were actually responsible for the learners’ improved questionnaire performance after they finished the course (e.g., pre-course practice with the alternate form of the questionnaire could have been responsible for the change instead). If another factor were responsible for the change, however, it is extremely implausible that course characteristics would have been so powerful in explaining variability in knowledge gain across courses.

Another potential limitation is the risk of selection bias. Only a minority of course organizers expressed interest in participating in our study, and some felt that the questionnaire was inappropriate for their course and withdrew after they reviewed the questionnaire. Non-participating courses may have been less successful than participating courses; thus, selection bias provides another potential explanation for the large size of effect we observed.

Furthermore, 100 learners filled in only 1 part of the questionnaire (pre- or post-course). Scores from individuals who completed only one part of the questionnaire were, however, similar to the scores of those who completed both the pre- and post-course versions. Although the group that completed only the pre-course questionnaire scored somewhat lower overall, the confidence interval around this score included the entire confidence interval around the mean score of the 420 participants who completed both questionnaire administrations.

The generalizability of our results is limited in that we studied only formal courses involving direct contact between educators and learners. Thus, our results do not address either courses embedded in the clinical setting (known as integrated EBM courses)6,9 or e-learning EBM courses, which represent an emerging format.20-22 Nevertheless, the course formats we studied are likely to remain both prevalent and popular. Finally, we did not assess long-term outcomes, the use of the new knowledge in clinical practice, or attitude or behaviour changes among learners. Recent evidence from a systematic review and 3 randomized trials shows that the translation from knowledge gain to change in behaviour is complex.6,23-25

Our results also provide information concerning outcome measurement for EBM courses and workshops. A number of reviews have highlighted the shortage of validated instruments for assessing the outcomes of EBM teaching.8,26,27 A recent systematic review of assessment tools noted the attractive psychometric properties of the Berlin Questionnaire.13 Our results provide further evidence that the Berlin Questionnaire is responsive across different settings, participants and course formats and can differentiate between individuals and courses.

Although incorporating some of the factors associated with higher scores (smaller group size, higher ratio of tutors to learners and larger overall course size) would require more resources, 1 factor (less teaching time) would require fewer resources, and others, including the 3 most powerful predictors (required active participation of the learners, a separate statistics session and fewer topics) would require no increase in resources. In terms of the importance of the overall effect, there may be other benefits (enthusiasm for evidence-based practice, stimulation of subsequent learning and ultimate improvement in practice) not measured here that would further justify the investment in resources.

In conclusion, our results provide guidance for EBM course organizers interested in maximizing participant gains in knowledge. If it is feasible, they should use a small-group structure with a high tutor-to-learner ratio. Course organizers may welcome the news that long courses are not necessary; indeed, shorter courses may be more effective. Organizers should focus on a small number of topics, have special sessions devoted to statistical issues and, most importantly, insist on active participation from the learners.

Biographies

Regina Kunz is nephrologist, associate professor and vice director, Basel Institute for Clinical Epidemiology and Biostatistics, University Hospital Basel, Basel, Switzerland.

Karl Wegscheider is statistician, biometrician, professor and director, Institute of Medical Biometry and Epidemiology, University Medical Center Hamburg-Eppendorf, Hamburg, Germany.

Lutz Fritsche is vice medical director, Charité, Berlin, Germany.

Holger J Schünemann is internist, professor and chair, Department of Clinical Epidemiology and Biostatistics, CLARITY research group, McMaster University, Hamilton, Ontario, Canada.

Virginia Moyer is pediatrician and professor, Department of Pediatrics, Baylor College of Medicine, Texas Children’s Hospital, Houston, Texas, US.

Donald Miller is professor and chair, Pharmacy Practice Department, College of Pharmacy, Nursing and Allied Sciences, North Dakota State University, Fargo, North Dakota, US.

Nicole Boluyt is pediatrician, Department of Pediatric Clinical Epidemiology, Academic Medical Center, Amsterdam, The Netherlands.

Yngve Falck-Ytter is gastroenterologist, assistant professor of medicine and director of hepatology, Case Western Reserve University and Louis Stokes VA Medical Center, Cleveland, Ohio, US.

Peter Griffiths is professor and director, National Nursing Research Unit, King’s College London, London, UK.

Heiner C Bucher is internist, professor and director, Basel Institute for Clinical Epidemiology and Biostatistics, University Hospital Basel, Basel.

Antje Timmer is internist and senior researcher, Helmholtz Center, German Research Center for Environmental Health, Institute for Epidemiology, Neuherberg, Germany.

Jana Meyerrose is general practitioner trainee, Practice Center Rudolf Virchow, Berlin.

Klaus Witt is general practitioner and associate professor, Department of General Practice, University of Copenhagen, Copenhagen, Denmark.

Martin Dawes is general practitioner, professor and chair, Department of Family Medicine, McGill University, Montréal, Quebec, Canada.

Trisha Greenhalgh is general practitioner and professor, Department of Primary Health Care, University College London, London.

Gordon H Guyatt is internist and professor, Department of Clinical Epidemiology and Biostatistics and CLARITY research group, McMaster University, Hamilton.

Footnotes

Competing interests: Holger J Schünemann and Gordon H Guyatt have received honoraria and consulting fees for activities related to their work on teaching evidence-based medicine and some of these payments have been from for-profit organizations. These honoraria and consulting fees have been used to support the CLARITY research group at McMaster University, of which they are members.

Funding source: Regina Kunz and Heiner C. Bucher receive funding from santésuisse and the Gottfried und Julia Bangerter-Rhyner-Stiftung, Switzerland. Holger J. Schünemann’s work is partially funded by a European Commission grant (The human factor, mobility and Marie Curie Actions scientist reintegration grant: IGR 42192–GRADE). The sponsors had no role in the collection, analysis or interpretation of the data, in the writing of the report or in the decision to submit the report for publication.

Contributors: Lutz Fritsche, Regina Kunz and Gordon Guyatt conceived and designed the study. Regina Kunz and Lutz Fritsche supervised the study. All of the authors apart from Karl Wegscheider acquired the data, and all of the authors participated in the data analysis and interpretation. Karl Wegscheider and Lutz Fritsche conducted the statistical analysis. Regina Kunz, Karl Wegscheider and Gordon Guyatt drafted the manuscript; all of the authors critically revised it for important intellectual content. Regina Kunz, Karl Wegscheider, Lutz Fritsche and Gordon Guyatt had full access to all of the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

References

- 1. Guyatt GH. Evidence-based medicine [editorial]. ACP J Club. 1991;114(2):A16.

- 2. Norman G R, Shannon S I. Effectiveness of instruction in critical appraisal (evidence-based medicine) skills: a critical appraisal. CMAJ. 1998;158(2):177–181. http://www.pubmedcentral.nih.gov/articlerender.fcgi?tool=pubmed&pubmedid=9469138.

- 3. Parkes J, Hyde C, Deeks J, Milne R. Teaching critical appraisal skills in health care settings. Cochrane Database Syst Rev. 2001;(3):CD001270. doi: 10.1002/14651858.CD001270.

- 4. Taylor R, Reeves B, Ewings P, Binns S, Keast J, Mears R. A systematic review of the effectiveness of critical appraisal skills training for clinicians. Med Educ. 2000;34(2):120–125. doi: 10.1046/j.1365-2923.2000.00574.x.

- 5. Ebbert Jon O, Montori Victor M, Schultz Henry J. The journal club in postgraduate medical education: a systematic review. Med Teach. 2001;23(5):455–461. doi: 10.1080/01421590120075670.

- 6. Coomarasamy Arri, Khan Khalid S. What is the evidence that postgraduate teaching in evidence based medicine changes anything? A systematic review. BMJ. 2004;329(7473):1017. doi: 10.1136/bmj.329.7473.1017. http://bmj.com/cgi/pmidlookup?view=long&pmid=15514348.

- 7. Flores-Mateo Gemma, Argimon Josep M. Evidence based practice in postgraduate healthcare education: a systematic review. BMC Health Serv Res. 2007 Jul 26;7:119. doi: 10.1186/1472-6963-7-119. http://www.biomedcentral.com/1472-6963/7/119.

- 8. Straus Sharon E, Green Michael L, Bell Douglas S, Badgett Robert, Davis Dave, Gerrity Martha, Ortiz Eduardo, Shaneyfelt Terrence M, Whelan Chad, Mangrulkar Rajesh, Society of General Internal Medicine Evidence-Based Medicine Task Force. Evaluating the teaching of evidence based medicine: conceptual framework. BMJ. 2004;329(7473):1029–1032. doi: 10.1136/bmj.329.7473.1029. http://bmj.com/cgi/pmidlookup?view=long&pmid=15514352.

- 9. Khan Khalid S, Coomarasamy Arri. A hierarchy of effective teaching and learning to acquire competence in evidenced-based medicine. BMC Med Educ. 2006 Dec 15;6:59. doi: 10.1186/1472-6920-6-59. http://www.biomedcentral.com/1472-6920/6/59.

- 10. Guyatt G H, Meade M O, Jaeschke R Z, Cook D J, Haynes R B. Practitioners of evidence based care. Not all clinicians need to appraise evidence from scratch but all need some skills. BMJ. 2000;320(7240):954–955. doi: 10.1136/bmj.320.7240.954. http://bmj.com/cgi/pmidlookup?view=long&pmid=10753130.

- 11. Fritsche L, Greenhalgh T, Falck-Ytter Y, Neumayer H-H, Kunz R. Do short courses in evidence based medicine improve knowledge and skills? Validation of Berlin questionnaire and before and after study of courses in evidence based medicine. BMJ. 2002;325(7376):1338–1341. doi: 10.1136/bmj.325.7376.1338. http://bmj.com/cgi/pmidlookup?view=long&pmid=12468485.

- 12. Anderson LW, Krathwohl DR, editors. A taxonomy for learning, teaching and assessing: A revision of Bloom’s Taxonomy of educational objectives. New York: Longman; 2001.

- 13. Shaneyfelt Terrence, Baum Karyn D, Bell Douglas, Feldstein David, Houston Thomas K, Kaatz Scott, Whelan Chad, Green Michael. Instruments for evaluating education in evidence-based practice: a systematic review. JAMA. 2006;296(9):1116–1127. doi: 10.1001/jama.296.9.1116. http://jama.ama-assn.org/cgi/pmidlookup?view=long&pmid=16954491.

- 14. Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. New Jersey: Lawrence Erlbaum; 1988.

- 15. Coe R. It’s the effect size, stupid. What effect size is and why it is important. British Educational Research Association Annual Conference; Exeter. 2002.

- 16. How To Teach Evidence-Based Clinical Practice Workshop [Website of McMaster University] [accessed 28 Sept 2009]. http://ebm.mcmaster.ca/

- 17. Maudsley G. Do we all mean the same thing by "problem-based learning"? A review of the concepts and a formulation of the ground rules. Acad Med. 1999;74(2):178–185. doi: 10.1097/00001888-199902000-00016.

- 18. Lipsey M W, Wilson D B. The efficacy of psychological, educational, and behavioral treatment. Confirmation from meta-analysis. Am Psychol. 1993;48(12):1181–1209. doi: 10.1037//0003-066x.48.12.1181.

- 19. Norman G R, Schmidt H G. Effectiveness of problem-based learning curricula: theory, practice and paper darts. Med Educ. 2000;34(9):721–728. doi: 10.1046/j.1365-2923.2000.00749.x.

- 20. Coppus Sjors F P J, Emparanza Jose I, Hadley Julie, Kulier Regina, Weinbrenner Susanne, Arvanitis Theodoros N, Burls Amanda, Cabello Juan B, Decsi Tamas, Horvath Andrea R, Kaczor Marcin, Zanrei Gianni, Pierer Karin, Stawiarz Katarzyna, Kunz Regina, Mol Ben W J, Khan Khalid S. A clinically integrated curriculum in evidence-based medicine for just-in-time learning through on-the-job training: the EU-EBM project. BMC Med Educ. 2007 Nov 27;7(1):46. doi: 10.1186/1472-6920-7-46. http://www.biomedcentral.com/1472-6920/7/46.

- 21. Kunz Regina, Arvanitis Theo, Burls Amanda, Gibbons Sinead, Horvath Rita, Khan Khalid, Kaczor Marcin, Mol Ben. EU EBM Unity Project: Implementing systematic reviews into patient care through an integrated European curriculum in evidence-based medicine [abstract P073]. 14th Cochrane Colloquium; Oct 22–26; Dublin. 2009. http://www.imbi.uni-freiburg.de/OJS/cca/index.php/cca/article/view/2026.

- 22. Schilling Katherine, Wiecha John, Polineni Deepika, Khalil Souad. An interactive web-based curriculum on evidence-based medicine: design and effectiveness. Fam Med. 2006;38(2):126–132. http://www.stfm.org/fmhub/fm2006/February/Katherine126.pdf.

- 23. Hugenholtz Nathalie I R, Schaafsma Frederieke G, Nieuwenhuijsen Karen, van Dijk Frank J H. Effect of an EBM course in combination with case method learning sessions: an RCT on professional performance, job satisfaction, and self-efficacy of occupational physicians. Int Arch Occup Environ Health. 2008 Apr 02;82(1):107–115. doi: 10.1007/s00420-008-0315-3. http://www.pubmedcentral.nih.gov/articlerender.fcgi?tool=pubmed&pubmedid=18386046.

- 24. Shuval Kerem, Berkovits Eldar, Netzer Doron, Hekselman Igal, Linn Shai, Brezis Mayer, Reis Shmuel. Evaluating the impact of an evidence-based medicine educational intervention on primary care doctors' attitudes, knowledge and clinical behaviour: a controlled trial and before and after study. J Eval Clin Pract. 2007;13(4):581–598. doi: 10.1111/j.1365-2753.2007.00859.x.

- 25. Kim Sarang, Willett Laura R, Murphy David J, O'Rourke Kerry, Sharma Ranita, Shea Judy A. Impact of an evidence-based medicine curriculum on resident use of electronic resources: a randomized controlled study. J Gen Intern Med. 2008 Sep 04;23(11):1804–1808. doi: 10.1007/s11606-008-0766-y. http://www.pubmedcentral.nih.gov/articlerender.fcgi?tool=pubmed&pubmedid=18769979.

- 26. Green Michael L. Evaluating evidence-based practice performance. Evid Based Med. 2006;11(4):99–101. doi: 10.1136/ebm.11.4.99.

- 27. Hatala Rose, Guyatt Gordon. Evaluating the teaching of evidence-based medicine. JAMA. 2002;288(9):1110–1112. doi: 10.1001/jama.288.9.1110.