Abstract

Past studies have found that, in adults, the acoustic properties of sound signals (such as fast versus slow temporal features) differentially activate the left and right hemispheres, and some have hypothesized that left-lateralization for speech processing may follow from left-lateralization for rapidly changing signals. Here, we tested whether newborns' brains show evidence of signal-specific lateralized responses, using near-infrared spectroscopy (NIRS) and auditory stimuli that elicit lateralized responses in adults, composed of segments that vary in duration and spectral diversity. We found significantly greater bilateral responses of oxygenated hemoglobin (oxy-Hb) in the temporal areas for stimuli with a minimum segment duration of 21 ms than for stimuli with a minimum segment duration of 667 ms. However, we found no evidence for hemispheric asymmetries dependent on the stimulus characteristics. We hypothesize that acoustically based functional brain asymmetries may develop throughout early infancy, and we discuss their possible relationship with brain asymmetries for language.

Keywords: near-infrared spectroscopy, neonates, functional lateralization, auditory cortex, hemispheric specialization, development

Introduction

There is a considerable gap between what we know about the language brain network in adulthood and what we know about its development in infancy. At the root of this gap is the lack of non-invasive, infant-friendly imaging tools. In the past decade, multi-channel near-infrared spectroscopy (NIRS) has generated a lot of interest, as it can measure hemodynamic responses in infants' cerebral cortex with a spatial resolution of about 2–3 cm and is as innocuous as electroencephalography (EEG; see Minagawa-Kawai et al., 2008, for a review). One of the issues that remain largely unsettled is the developmental origin of the left lateralization for language observed in adults (see Minagawa-Kawai et al., 2011a). At least two general hypotheses have been put forward. The domain-driven hypothesis postulates that there are brain networks specifically dedicated to language (see Dehaene-Lambertz et al., 2006, 2010). The signal-driven hypothesis holds that specialization for higher cognitive functions emerges out of interactions between sensory signals and general learning principles (see Elman et al., 1997). In particular, the left–right asymmetry for language found in adults has been tied to a structural preference of the left cortices for fast-changing signals as opposed to slow-changing ones. This preference has been supported by brain data collected on human adults (Zatorre and Belin, 2001; Boemio et al., 2005; Schonwiesner et al., 2005; Jamison et al., 2006), animals (Wetzel et al., 1998; Rybalko et al., 2006), and, more recently, infants (Homae et al., 2006). In this general instantiation, the left hemisphere is preferentially involved in processing rapid changes, such as those that distinguish phonemes, whereas the right hemisphere is more engaged in spectral processing, such as that required to discriminate prosodic changes. The fast/slow distinction by itself, however, cannot be sufficient to account for the lateralization of language in adults.
Indeed, language is a very complex signal defined at several hierarchical levels (features, phonemes, morphemes, utterances), whose acoustic correlates use the entire spectrum of cues, from very short temporal events to complex and rather slow spectral patterns (see also Minagawa-Kawai et al., 2011a). Nonetheless, the idea that signal characteristics provide an initial bias for left/right lateralization is theoretically attractive, as it would reduce the number of specialized brain networks that must be stipulated in infants, and it makes important predictions regarding, on the one hand, the development of functional specialization for speech and, on the other, the biases affecting auditory processing even in adults.

Therefore, it is not surprising that this view has inspired a great deal of research over the past 10 years. Over this period, a number of operational definitions of precisely what in the acoustic signal determines lateralization have been proposed. Acoustic candidates proposed to determine hemispheric dominance include the rate of change (Belin et al., 1998), the duration of constituent events (shorter events leading to left dominance; Bedoin et al., 2010), the type of encoding (temporal versus spectral; Zatorre and Belin, 2001), and the size of the analysis window (Poeppel et al., 2003). Among these many hypotheses, two dominant views are emerging. The multi-time-resolution model (Poeppel et al., 2003) stipulates that the left and right hemispheres are tuned to different frequencies for sampling and integrating information. Specifically, the left (or bilateral) cortices perform rapid sampling over small integration windows, whereas the right cortices perform slow sampling over rather large integration windows (Hickok and Poeppel, 2007; Poeppel et al., 2008). The temporal versus spectral hypothesis (Zatorre and Belin, 2001) emphasizes the trade-off between representations of speech with good temporal resolution and those with good spectral resolution: temporal information would be represented most accurately in the left hemisphere and spectral information in the right hemisphere. Even though these hypotheses bear a family resemblance, they are conceptually distinct and have given rise to distinct sets of stimuli, which vary in the lateralization patterns they elicit in different brain structures.

In general, signal-driven asymmetries have most frequently been observed in the superior temporal gyrus (STG), particularly the auditory cortex (Belin et al., 1998; Zatorre and Belin, 2001; Jamison et al., 2006). In addition, the anterior and posterior superior temporal sulcus (STS) (Zatorre and Belin, 2001; Boemio et al., 2005), as well as the temporo-parietal region (Telkemeyer et al., 2009), have been reported to be sensitive to the acoustic properties of stimuli. Regarding lateralization, some studies document left dominance for fast stimuli and right dominance for slow ones, whereas others report only half of this pattern (typically, right dominance for slow stimuli only; e.g., Boemio et al., 2005; Britton et al., 2009). We believe that some of this diversity could be due to the use of different stimuli and different parcellations of the regions adjacent to the auditory cortex (see Belin et al., 1998; Giraud et al., 2007). It is therefore imperative to assess the existence of signal-driven asymmetries early in development using a range of different stimuli.

The first infant studies documenting variable lateralization patterns in response to auditory stimulation typically focused on the contrast between speech and non-speech. For example, Peña et al. (2003) found that the newborn brain exhibited greater activation in the left hemisphere when presented with forward than with backward speech. Sato et al. (2006) replicated this effect for the newborns’ native language, but documented symmetrical temporal responses to forward and backward speech in a language not spoken by the newborns’ mothers. These results suggest that experience may modulate left dominance in response to speech; however, since forward and backward speech (native or non-native) are similar in terms of the fast/slow distinction, these results cannot shed light on the possible existence of signal-driven asymmetries.

In fact, only one published study has tested an implementation of the signal-driven hypothesis in newborns. Telkemeyer et al. (2009) presented newborns with stimuli previously used with adults by Boemio et al. (2005). The stimulus set used in the newborn study consisted of static tones concatenated differently across conditions so as to vary their temporal structure. There were two fast conditions, obtained by concatenating segments 12 or 25 ms long, and two slow conditions, in which segments lasted either 160 or 300 ms (the adult version had two additional conditions, with 45 and 85 ms segments, and a second set of frequency-modulated tones with the same temporal manipulations). The adult fMRI study by Boemio et al. (2005) had documented a bilateral response to temporal structure, such that activation increased linearly with segment length in both left and right STS and STG. Telkemeyer et al. (2009) measured oxy- and deoxy-Hb changes using six NIRS channels placed over temporal and parietal areas in each hemisphere. The reported results included only deoxy-Hb signals on three NIRS channels in each hemisphere; one of these channels exhibited greater activation over the right temporo-parietal area for the 160 and 300 ms conditions. This last result, however, was not particularly strong statistically, as the greater activation in the right hemisphere was significant only in a one-tailed, uncorrected t-test. In addition, the results showed an overall stronger bilateral response for stimuli with 25 ms modulations than for all other durations. Thus, in neither the adult nor the newborn data did these stimuli selectively modulate activation of the left hemisphere. Both results are in line with a recent version of the multi-time-resolution model, which proposes that temporal structure modulates activation bilaterally (Poeppel et al., 2008).

However, as mentioned above, this is not the only instantiation of the signal-based hypothesis, and different sets of stimuli have led to more or less clear-cut lateralization patterns. In the study presented here, we re-evaluate the possibility that newborns' functional lateralization can be modulated by acoustic characteristics of auditory stimulation, using a set of stimuli that vary in both temporal and spectral complexity and that have elicited a clear left–right asymmetry in adults in both PET (Zatorre and Belin, 2001) and fMRI (Jamison et al., 2006). These adult data suggested that left temporal cortices were most responsive to fast, spectrally simple stimuli, whereas slow, spectrally variable stimuli elicited greater activation in right temporal cortices. As noted above, newborn studies sometimes lack statistical power. We tried to maximize statistical power in the present study in several ways. First, we selected a small subset of 3 of the original 11 conditions used by Zatorre and Belin (2001): an intermediate case with long duration and little variability ("control"), as well as the two most extreme conditions, namely brief, 21 ms segments with little spectral variability across them (fixed at 1-octave jumps; "temporal"), and long, 667 ms segments with greater spectral variability across successive segments (any of eight different frequencies could occur; "spectral"). Fewer conditions ensured more trials per condition, and the choice of extreme temporal and spectral characteristics increased the probability of finding signal-driven asymmetries. Second, artifact rejection was designed to retain as much good data as possible (for instance, an artifact in the middle of a trial does not necessarily lead to removal of the entire trial, only of the artifacted section of the signal).
In addition, we measured signals from both short (2.5 cm) and long (5.6 cm) source–detector separations, potentially opening up the possibility of measuring "deeper" brain regions, and we provide data for a total of 14 channels per hemisphere. With this configuration, we could sample several of the candidate regions for asymmetries: STG including the auditory cortex (one shallow and one deep channel), anterior STG and STS (two channels), and the temporo-parietal region (one channel, similar to the one showing a trend toward asymmetrical activation in Telkemeyer et al., 2009). Finally, we investigated both oxygenated hemoglobin (oxy-Hb) and deoxy-Hb, whereas only deoxy-Hb was reported in the previous newborn study on this question. Although it has been argued that deoxy-Hb is less affected by extracerebral artifacts than oxy-Hb (see Obrig et al., 2010, and Obrig and Villringer, 2003, for important considerations regarding the two measures), most previous NIRS research on infants has focused on oxy-Hb, which appears to be more stable across children. In a recent review, Lloyd-Fox et al. (2010) report that, of all infant NIRS studies published up to 2009, 83% reported increases in oxy-Hb with stimulation, 6% decreases, 1% no change, and 3% did not report it; in contrast, 28% reported increases in deoxy-Hb with stimulation, 17% decreases, 19% no change, and 36% did not report it at all. Our analyses below concentrate on oxy-Hb; results with deoxy-Hb, showing the same pattern but with weaker significance levels, are reported in Table A1 in the Appendix.

We expected that through the combination of these strategies, we would be able to document signal-based asymmetries as elicited by maximally different stimuli in terms of their spectral and temporal composition. Based on the previous adult work carried out with these same stimuli, we predicted that, if temporal and spectral characteristics drove lateralization early on in development, temporal stimuli should evoke larger activations than control stimuli in the LH, and spectral stimuli should evoke larger activations in the RH when compared to control stimuli.

Materials and Methods

Participants

Thirty-eight newborns were tested; we excluded nine infants who did not provide enough data because of movement artifacts, loose probe attachment, or test interruption due to infant discomfort. The final sample consisted of 29 infants (15 boys), tested between 0 and 5 days of age (M = 2.41 days), for whom we obtained the equivalent of at least 10 full analyzable trials per condition on more than 50% of the channels. Parental report indicated that both parents were left-handed in one case (<5%), and one parent was left-handed in seven cases (<25%). All infants were born full-term without medical problems. Consent forms were obtained from the parents before the infants' participation. This study was approved by the CPP Ile de France III Committee (No. ID RCB (AFSSAPS) 2007-A01142-51).

Stimuli

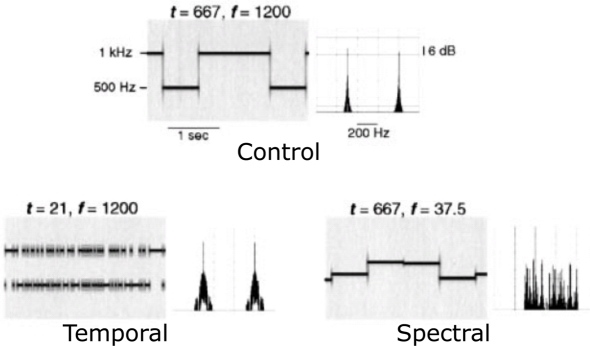

The stimuli were pure-tone patterns varying in their spectral and temporal properties, as shown in Figure 1, defined by two parameters: f, the frequency separation between two adjacent tones, and t, the base duration of a tone segment. The original stimuli in Zatorre and Belin (2001) included a five-step continuum varying in spectral complexity, a five-step continuum varying in temporal complexity, and a control condition (see Figure 1 of Zatorre and Belin, 2001, for more details). From these, we selected the two conditions that constituted the extreme exemplars of the spectral and temporal continua, respectively, plus the control condition. The acoustic parameters of the stimuli in these three conditions were as follows. The control stimuli (low spectral complexity, long duration) consisted of randomly selected pure tones of either 500 or 1000 Hz (a frequency separation of one octave, i.e., f = 1200 cents) with a long base duration (t = 667 ms). These had low spectral complexity because only two frequencies were used, as is evident in the long-term spectrum shown in Figure 1 (top panel). Spectral stimuli (high spectral complexity, long duration) had the same temporal properties as the control stimuli (t = 667 ms) but consisted of tones selected from a set of 33 frequencies between 500 and 1000 Hz, spaced by roughly 1/32 of an octave (minimum separation f = 37.5 cents; see the more complex long-term spectrum in Figure 1, bottom right). Temporal stimuli (low spectral complexity, short duration) kept the spectral properties of the control condition, with randomly selected tones of either 500 or 1000 Hz (f = 1200 cents), but used a much shorter base duration (t = 21 ms).
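The interval arithmetic above can be checked with a short Python sketch: an interval in cents is $1200 \log_2(f_2/f_1)$, so 500 to 1000 Hz gives 1200 cents and a 1/32-octave step gives 37.5 cents. The sketch assumes the 33 spectral tones are spaced exactly 1/32 octave apart (the text says "roughly"); the names `cents`, `CONTROL_FREQS`, and `SPECTRAL_FREQS` are illustrative and not taken from the original stimulus code.

```python
import math

def cents(f1_hz: float, f2_hz: float) -> float:
    """Interval from f1 to f2 in cents (1200 cents = one octave)."""
    return 1200.0 * math.log2(f2_hz / f1_hz)

# Control and Temporal conditions: two tones one octave apart.
CONTROL_FREQS = [500.0, 1000.0]

# Spectral condition (assumption): 33 tones spaced exactly 1/32 octave apart,
# from 500 Hz up to and including 1000 Hz.
SPECTRAL_FREQS = [500.0 * 2.0 ** (k / 32) for k in range(33)]

if __name__ == "__main__":
    print(cents(*CONTROL_FREQS))                                  # 1200.0
    print(round(cents(SPECTRAL_FREQS[0], SPECTRAL_FREQS[1]), 3))  # 37.5
    print(len(SPECTRAL_FREQS), SPECTRAL_FREQS[-1])                # 33 1000.0
```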
The stimuli were generated through a random sampling procedure with the following distribution: if the base duration for a condition was t, the actual duration of a tone of a given frequency was nt, where n is a positive integer drawn with probability $2^{-n}$. The most probable duration ($2^{-1} = 0.5$) is therefore the shortest one (t). The next longer tone has a duration of 2t and occurs with probability $2^{-2} = 0.25$; a duration of 3t occurs with probability $2^{-3} = 0.125$, and so forth. Consequently, stimuli with base duration t contained segments of varying duration, whose average was approximately 2t, since $\sum_{n \ge 1} n\,2^{-n} \big/ \sum_{n \ge 1} 2^{-n} = 2$. This random sampling procedure was chosen to avoid the low-frequency spectral component that would have arisen had a strict alternation been used. We truncated the original stimuli of Zatorre and Belin (2001) to 10 s (1 trial = 10 s of stimulation). Trials from the three conditions were presented in random order, separated by a jittered silence (8–14 s). A run was the concatenation of eight trials of each type (i.e., 24 trials per run). Infants were presented with 2 or 3 runs, depending on their state, for a maximum of 24 trials per condition, or 72 trials overall. Specifically, if the infant was still calm without much movement after two runs, we continued recording. Twenty-one of the 29 included infants completed all 3 runs; on average, infants completed 2.7 runs.
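A minimal sketch of the duration sampling described above, assuming that segment frequencies are drawn independently and uniformly from the allowed set (the text only says tones were randomly selected) and that the final segment is simply cut at the 10 s trial boundary. `sample_multiplier` draws n with probability $2^{-n}$, and `mean_multiplier` evaluates the truncated ratio $\sum n\,2^{-n} / \sum 2^{-n}$, which approaches 2. All function names are hypothetical.

```python
import random

def sample_multiplier(rng: random.Random) -> int:
    """Draw n >= 1 with P(n) = 2**-n (geometric distribution with p = 1/2)."""
    n = 1
    while rng.random() < 0.5:   # each extra step happens with probability 1/2
        n += 1
    return n

def make_trial(freqs, base_ms, total_ms=10_000, seed=None):
    """Return (frequency_hz, duration_ms) segments filling exactly total_ms.

    Assumption: each segment's frequency is drawn uniformly and independently
    from `freqs`; the last segment is cut so that the trial lasts total_ms."""
    rng = random.Random(seed)
    segments, elapsed = [], 0
    while elapsed < total_ms:
        dur = min(base_ms * sample_multiplier(rng), total_ms - elapsed)
        segments.append((rng.choice(freqs), dur))
        elapsed += dur
    return segments

def mean_multiplier(n_max=60):
    """E[n] under P(n) proportional to 2**-n for n = 1..n_max (tends to 2)."""
    num = sum(n * 2.0 ** -n for n in range(1, n_max + 1))
    den = sum(2.0 ** -n for n in range(1, n_max + 1))
    return num / den

if __name__ == "__main__":
    temporal = make_trial([500.0, 1000.0], base_ms=21, seed=1)
    print(len(temporal), sum(d for _, d in temporal))  # segment count, 10000
    print(round(mean_multiplier(), 6))                 # 2.0
```

With t = 21 ms the expected segment length is therefore about 42 ms, and with t = 667 ms about 1.33 s.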

Figure 1.

Schematic representation of the auditory stimuli. Each pair of panels shows a stimulus sequence represented on the left as a spectrogram (frequency as a function of time) and on the right as a Fourier spectrum (amplitude as a function of frequency). The top pair of panels shows the control stimulus: two tones with a frequency separation f of 1200 cents (one octave) with the fastest temporal change of t = 667 ms. The bottom two panels represent temporal and spectral conditions. The figure is adapted from Zatorre and Belin (2001) with permission (No. 2681761435248).

Procedure

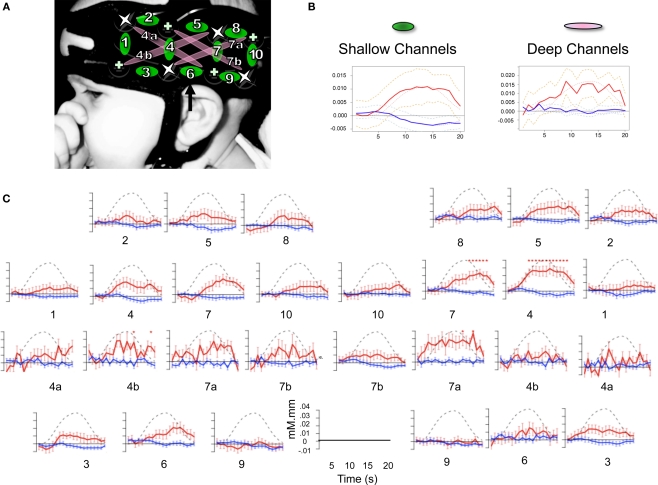

Changes in hemoglobin concentration and oxygenation in the bilateral temporal and frontal areas were recorded using NIRS. Our NIRS system (UCL-NTS, Department of Medical Physics and Bioengineering, UCL, London, UK; Everdell et al., 2005) continuously emits near-infrared laser light at two wavelengths (670 and 850 nm) from four sources on each pad, each modulated at a specific frequency so that sources can be separated. The light transmitted through scalp and brain tissues is measured by four detectors on each pad, as shown in Figure 2. This configuration provides data from 10 channels between adjacent source–detector pairs, at a distance of 25 mm, and four channels between non-adjacent pairs, at a distance of 56 mm. According to the model of light penetration in newborns by Fukui et al. (2003), NIR light remains in a shallow area of the gray matter with separations of 20–30 mm but may reach the white matter with 50 mm separations. We therefore call the first 10 channels "shallow" and the other 4 "deep." Channels are numbered from anterior to posterior; each deep channel carries the number of the shallow channel covering the same region, plus a letter (a/b).
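Recording at two wavelengths is what allows the modified Beer–Lambert law (used in preprocessing) to separate oxy- from deoxy-Hb: the change in optical density at each wavelength is modeled as a weighted sum of the two concentration changes, giving a 2 × 2 linear system per sample. The NumPy sketch below solves that system. The extinction-coefficient matrix is a parameter that would have to come from published tables; the values in the accompanying check are arbitrary placeholders, not real coefficients. Because no differential pathlength factor is applied, the output is concentration change times path length, consistent with the mM·mm units used in this paper. The function name `mbll` is hypothetical.

```python
import numpy as np

def mbll(intensity, extinction, baseline=None):
    """Convert two-wavelength intensities to (oxy-Hb, deoxy-Hb) changes.

    intensity  : (n_samples, 2) detected light at the two wavelengths
    extinction : (2, 2) matrix; rows = wavelengths, columns = (oxy-Hb, deoxy-Hb),
                 in units of 1 / (mM * mm), for log10-based optical density
    baseline   : (2,) reference intensity; defaults to the mean over time

    Returns (n_samples, 2) concentration changes times path length (mM * mm).
    """
    intensity = np.asarray(intensity, dtype=float)
    if baseline is None:
        baseline = intensity.mean(axis=0)
    delta_od = -np.log10(intensity / baseline)      # change in optical density
    # Solve extinction @ x = delta_od for every sample at once (column-wise).
    return np.linalg.solve(extinction, delta_od.T).T
```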

Figure 2.

(A) Design of the probe array showing distribution and separation of sources and detectors. Location of the 10 shallow channels (1–10) and 4 deep ones (4a,b and 7a,b) on an infant's head. Crosses indicate detectors and stars sources. Channel 6 is aligned to the T3 position in the international 10–20 system. (B) Time course of Hb changes averaged over shallow and deep channels; in red oxy-Hb, in blue deoxy-Hb; dashed lines = 95% confidence intervals, dotted lines = the canonical HRF model. (C) Time course of Hb changes in all 28 channels collapsing across the conditions. Stars indicate a significant difference from zero (FDR corrected within channels).

The NIRS recording was performed in a sound-attenuated room. In attaching the probes, we used the international 10–20 system as a reference, aligning the bottom of the pad with the T3–T5 line using anatomical landmarks. Once the cap was fit, the stimuli were presented from a loudspeaker at about 80 dB measured at the approximate location of the infant's head. The infants were inside their cots while they listened to the stimuli; all of them were asleep for most or all of the study.

Data analysis

Preprocessing

We used the modified Beer–Lambert law to convert intensity signals into oxy- and deoxy-Hb concentration changes. Movement artifacts are typically detected by applying a threshold to the signal change between successive samples in each channel (e.g., Peña et al., 2003; Gervain et al., 2008). Unfortunately, this strategy cannot be applied to deep channels, whose signal-to-noise ratio is very low because of strong attenuation along the longer path through brain tissue; applying the standard thresholds to deep channels would remove almost all of the signal. We therefore developed a probe-based artifact rejection technique, based on the consideration that movement artifacts should in principle affect probes (i.e., poor attachment, where a given source or detector is not close enough to the skin) rather than individual channels. Accordingly, we applied artifact detection not to the individual channel signals but to the average of all signals involving a given probe. Naturally, movement artifacts could affect multiple probes, but then they would also affect all the individual channels associated with each of those probes. As in Gervain et al. (2008) and Peña et al. (2003), we used total-Hb (previously band-pass filtered between 0.02 and 0.7 Hz), reasoning that a movement artifact should affect both wavelengths, and therefore both oxy- and deoxy-Hb. A segment of signal was labeled as artifacted if two successive samples (separated by 100 ms, given the 10 Hz sampling rate) differed by more than 1.5 mM·mm (as in Peña et al., 2003). This threshold assumes that hemodynamic responses normally do not change by more than 1.5 mM·mm in 100 ms. The movement artifacts for each channel were then computed as the set-theoretic union of the artifacts of the two probes (source and detector) defining that channel.
Additionally, if there were less than 20 s between two artifacted regions, the intervening signal was deemed too short for proper analysis (20 s is the duration of a typical hemodynamic response) and was also coded as artifacted. In brief, this method exploits the relatively good signal-to-noise ratio of the surface channels to detect probe-based movement artifacts, and then applies the temporal definition of these artifacts to the analysis of both surface and deep channels.
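The probe-based rejection can be sketched in Python with NumPy as follows. Assumptions beyond the text: the input for each probe is the already band-passed total-Hb averaged over that probe's channels, both samples of an over-threshold step are flagged, and only clean stretches bounded by artifacts on both sides are filled in by the 20 s rule. The function names are hypothetical.

```python
import numpy as np

FS_HZ = 10.0        # sampling rate given in the text
THRESH = 1.5        # max allowed change (mM*mm) between successive samples
MIN_CLEAN_S = 20.0  # clean stretches shorter than this are also rejected

def probe_mask(total_hb):
    """Artifact mask for one probe.

    total_hb: 1-D band-passed total-Hb, averaged over all channels using this probe.
    Both samples of any step larger than THRESH are flagged (an assumption)."""
    total_hb = np.asarray(total_hb, dtype=float)
    jumps = np.abs(np.diff(total_hb)) > THRESH
    mask = np.zeros(total_hb.size, dtype=bool)
    mask[:-1] |= jumps
    mask[1:] |= jumps
    return mask

def fill_short_gaps(mask, min_len=int(MIN_CLEAN_S * FS_HZ)):
    """Flag clean runs shorter than min_len samples lying between two artifacts."""
    mask = mask.copy()
    bad = np.flatnonzero(mask)
    for a, b in zip(bad[:-1], bad[1:]):
        clean_len = b - a - 1            # samples a+1 .. b-1 are clean
        if 0 < clean_len < min_len:
            mask[a + 1:b] = True
    return mask

def channel_mask(source_mask, detector_mask):
    """A channel is artifacted wherever its source OR its detector is."""
    return fill_short_gaps(source_mask | detector_mask)
```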

Non-linear detrending was achieved by fitting a general linear model (GLM) to the data of each channel, with sine and cosine regressors of periods between 2 min and the duration of the run (8 min), plus a boxcar regressor for each stretch of non-artifacted data (to model possible changes in baseline after a movement artifact). The artifacted regions were silenced, that is, given a weight of zero in the regression, which is mathematically equivalent to removing the artifacted samples before running the regression. The residuals of this analysis served as the primary data for subsequent analyses.
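A minimal NumPy sketch of this detrending GLM, assuming the drift regressors are harmonics of the run length (periods of run/k seconds, kept while at least 2 min long) and that zero weights are implemented by zeroing the corresponding rows of the design and the data before least squares. `nuisance_design` and `detrend` are illustrative names, not the authors' code.

```python
import numpy as np

def nuisance_design(n_samples, fs_hz, artifact_mask, min_period_s=120.0):
    """Sine/cosine drifts (periods from the run length down to 2 min) plus one
    boxcar per contiguous clean stretch (absorbs baseline jumps after artifacts)."""
    t = np.arange(n_samples) / fs_hz
    run_s = n_samples / fs_hz
    cols = []
    k = 1
    while run_s / k >= min_period_s:       # periods run_s, run_s/2, ...
        cols += [np.sin(2 * np.pi * k * t / run_s), np.cos(2 * np.pi * k * t / run_s)]
        k += 1
    clean = (~artifact_mask).astype(int)
    edges = np.flatnonzero(np.diff(np.r_[0, clean, 0]))  # starts/stops of clean runs
    for start, stop in zip(edges[::2], edges[1::2]):
        box = np.zeros(n_samples)
        box[start:stop] = 1.0
        cols.append(box)
    return np.column_stack(cols)

def detrend(y, artifact_mask, fs_hz=10.0):
    """Least squares with zero weight on artifacted samples; returns residuals,
    with artifacted samples set to NaN."""
    X = nuisance_design(y.size, fs_hz, artifact_mask)
    w = (~artifact_mask).astype(float)
    beta, *_ = np.linalg.lstsq(X * w[:, None], y * w, rcond=None)
    resid = y - X @ beta
    resid[artifact_mask] = np.nan
    return resid
```

For an 8 min run this yields four sine/cosine pairs (periods of 480, 240, 160, and 120 s) plus one boxcar per clean stretch.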

HRF reconstruction

To reconstruct the hemodynamic response function (HRF) for each infant and channel, a linear model was fitted with twenty 1-s boxcar regressors, shifted by 0, 1, …, 19 s, respectively, from stimulus onset. These reconstructed HRFs were averaged across all channels and compared to an adult HRF model at various delays using linear regression. The phase was estimated as the delay yielding the best regression coefficient within the range of −2 to 6 s. Finally, bootstrap resampling of the individual subjects' data (Westfall and Young, 1993; N = 10,000 resamples) was used to generate a 95% confidence interval for this optimal phase.
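The sketch below illustrates the three steps (FIR deconvolution, phase search, bootstrap) in NumPy under stated assumptions: the adult HRF model is taken to be the SPM-style double-gamma function (the text does not specify the model), "best regression coefficient" is read as the highest correlation between the averaged FIR estimate and the shifted model, and delays are searched on a 0.1 s grid. All names are hypothetical.

```python
import math
import numpy as np

def fir_design(onsets_s, n_samples, fs_hz=10.0, n_lags=20):
    """Column j is a 1-s boxcar starting j seconds after every stimulus onset."""
    X = np.zeros((n_samples, n_lags))
    for onset in onsets_s:
        for lag in range(n_lags):
            a = int(round((onset + lag) * fs_hz))
            b = min(int(round((onset + lag + 1) * fs_hz)), n_samples)
            X[a:b, lag] += 1.0             # empty slice if past the end
    return X

def canonical_hrf(t_s):
    """Assumed adult model: SPM-style double gamma (gamma(6) minus gamma(16)/6)."""
    t = np.asarray(t_s, dtype=float)
    out = np.zeros_like(t)
    tp = t[t > 0]
    out[t > 0] = (tp ** 5 * np.exp(-tp) / math.gamma(6)
                  - tp ** 15 * np.exp(-tp) / (6.0 * math.gamma(16)))
    return out

def best_phase(fir_hrf, delays_s=np.linspace(-2.0, 6.0, 81)):
    """Delay (s) maximizing the correlation between the FIR estimate and the
    shifted model (correlation stands in for 'best regression coefficient')."""
    centers = np.arange(len(fir_hrf)) + 0.5          # middle of each 1-s bin
    scores = [np.corrcoef(fir_hrf, canonical_hrf(centers - d))[0, 1] for d in delays_s]
    return float(delays_s[int(np.argmax(scores))])

def bootstrap_phase_ci(subject_hrfs, n_boot=10_000, seed=0):
    """95% CI of the phase, resampling subjects (rows) with replacement."""
    rng = np.random.default_rng(seed)
    n = subject_hrfs.shape[0]
    phases = [best_phase(subject_hrfs[rng.integers(0, n, n)].mean(axis=0))
              for _ in range(n_boot)]
    return np.percentile(phases, [2.5, 97.5])
```

In use, one would fit the `fir_design` columns (plus nuisance regressors) to each channel with `np.linalg.lstsq`, average the 20 coefficients across channels for each infant, and pass the resulting (infants × 20) array to `bootstrap_phase_ci`.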

Analyses of activations

Experimental effects were analyzed by introducing three regressors, one for each condition (temporal, spectral, control). Each regressor consisted of a boxcar that was on (value 1) during stimulation and off (value 0) elsewhere, convolved with the HRF shifted by the optimal phase estimated in the previous step. As before, the artifacted regions were silenced. The beta values for each channel and condition resulting from this GLM were tested against zero using t-tests. The t-test significance levels were corrected for multiple comparisons across channels using Monte Carlo resampling. This method consists of estimating the sampling distribution of the maximum t value across channels under the null hypothesis (tmax). Each null sample was obtained by randomly flipping the sign of all of a subject's beta values (across channels), computing the t-test across subjects, and taking the maximum of this statistic over channels for that simulation. This procedure was repeated 10,000 times, providing an estimate of the tmax distribution, from which we derived corrected p-values. To answer the main research question, the beta values were entered into analyses of variance (ANOVAs) testing whether the intercept differed from zero and whether there were effects of, or interactions with, Condition (Temporal, Control, Spectral) and Hemisphere (Left, Right). Three ANOVAs were carried out. The first included all channels, averaged across channel positions separately for each hemisphere and depth; it thus had three within-subject factors: Condition (Temporal, Control, Spectral), Hemisphere (Left, Right), and Depth (Deep, Shallow). Because deep and shallow channels have different light path lengths, we also carried out separate ANOVAs on shallow and deep channels, each with only two within-subject factors: Condition (Temporal, Control, Spectral) and Hemisphere (Left, Right).
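The max-t correction described above can be sketched as follows. This assumes a complete (subjects × channels) matrix of betas for one condition (in the data, N varies slightly across channels, which would need extra handling), a one-sided test for positive activation, and one random sign per subject applied to all of that subject's channels. The function names are hypothetical.

```python
import numpy as np

def one_sample_t(betas):
    """t statistic of each column against zero; betas: (n_subjects, n_channels)."""
    n = betas.shape[0]
    return betas.mean(axis=0) / (betas.std(axis=0, ddof=1) / np.sqrt(n))

def tmax_corrected_p(betas, n_perm=10_000, seed=0):
    """Channel-wise p-values corrected with the max-t sign-flipping procedure."""
    rng = np.random.default_rng(seed)
    t_obs = one_sample_t(betas)
    t_max = np.empty(n_perm)
    for i in range(n_perm):
        # One random sign per subject, shared by all of that subject's channels.
        signs = rng.choice([-1.0, 1.0], size=(betas.shape[0], 1))
        t_max[i] = one_sample_t(betas * signs).max()
    # Corrected p: share of null maxima at least as large as the observed t.
    return (t_max[None, :] >= t_obs[:, None]).mean(axis=1)
```

Sharing the sign across a subject's channels preserves the spatial correlation between channels, which is what makes the maximum statistic a valid family-wise null.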
Analyses of regions of interest (ROIs) are frequently used in research on the signal-based hypothesis, and, as noted in the Introduction, these analyses highlight the role of the STG, STS, and inferior parietal area. We therefore also report the mean and SE for each of the six channels that tap these regions (3, 6, 7, 7a, 8, 10). To estimate channel locations in the brain, we employed the virtual registration method (Tsuzuki et al., 2007) to map NIRS data onto the MNI standard brain space. Although this method was designed for adult brains, we adapted it to infants by adjusting for differences in head size and in emitter–detector separation (inter-probe distance) between adults and neonates.

Results

As shown in Figure 2, the infant HRF appears to be delayed by about 2 s relative to the adult one for the oxy-Hb signal (95% confidence interval: [0.0, 2.0]) and by about 4 s for deoxy-Hb (95% confidence interval: [1.4, 5.8]). In shallow channels, the fit between the reconstructed oxy- and deoxy-Hb responses and the adult HRF model was R = 0.97 and R = 0.96, respectively. The fit remained relatively good in deep channels for oxy-Hb (R = 0.84), but not for deoxy-Hb (R = 0.62). Given the better correlation with the adult model for oxy-Hb, the remaining analyses consider only this variable; results for deoxy-Hb showed a similar but statistically weaker pattern and are reported in Table A1 in the Appendix. As in Minagawa-Kawai et al. (2011b), we carried out the remaining oxy-Hb analyses with the adult HRF model shifted by the average phase lag (2 s). Activation results in the three conditions are represented in Figure 3 and summarized in Table 1. There were significant activations for the Temporal condition in two channels in the left STG area (channels 4 and 7b, p < 0.05 corrected for multiple comparisons), and in five channels in homologous regions on the right (channels 4, 4a, 7, 7a, and 8, p < 0.05 corrected). For the Control condition, only one channel survived correction for multiple comparisons (right channel 5, p < 0.05 corrected). No channel was reliably activated in the Spectral condition.

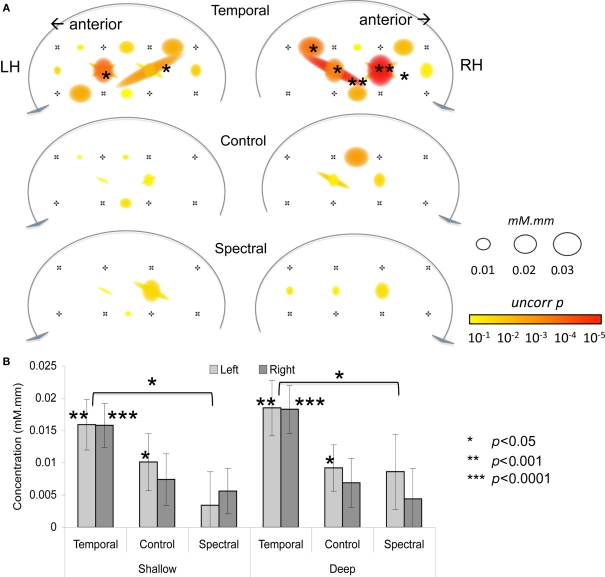

Figure 3.

(A) Activation map for the three conditions. The size of each circle/oval corresponds to the p-value and its color to the β value. (B) Average activation for each condition as a function of Hemisphere and Depth. Error bars correspond to SEs.

Table 1.

Levels of activations (oxy-Hb changes) for temporal, control, and spectral conditions.

| Channel | Temporal β | Temporal t | Temporal N | Temporal p (unc) | Temporal p (corr) | Control β | Control t | Control N | Control p (unc) | Control p (corr) | Spectral β | Spectral t | Spectral N | Spectral p (unc) | Spectral p (corr) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LEFT SHALLOW |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| 1 MFG/IFG | 0.0129 | 2.49 | 28 | 0.022 | ns |  |  |  |  |  |  |  |  |  |  |
| 2 IFG | 0.0122 | 2.11 | 29 | 0.046 | ns | 0.0111 | 1.75 | 29 | 0.088 | ns |  |  |  |  |  |
| 3 aSTG | 0.0208 | 3.20 | 28 | 0.004 | ∼ |  |  |  |  |  |  |  |  |  |  |
| 4 STG/IFG | 0.0223 | 3.99 | 29 | 0.001 | 0.008 |  |  |  |  |  |  |  |  |  |  |
| 5 Precentral | 0.0170 | 2.59 | 29 | 0.017 | ns |  |  |  |  |  |  |  |  |  |  |
| 6 STG/STS | 0.0154 | 1.86 | 29 | 0.072 | ns | 0.0117 | 2.09 | 29 | 0.048 | ns | 0.0117 | 1.95 | 29 | 0.063 | ns |
| 7 STG | 0.0165 | 2.96 | 29 | 0.008 | ns | 0.0150 | 2.37 | 29 | 0.028 | ns | 0.0208 | 2.60 | 29 | 0.017 | ns |
| 8 SMG | 0.0208 | 3.16 | 29 | 0.005 | ∼ | 0.0156 | 2.07 | 29 | 0.050 | ns |  |  |  |  |  |
| 10 SMG/pSTG | 0.0156 | 2.64 | 29 | 0.016 | ns |  |  |  |  |  |  |  |  |  |  |
| LEFT DEEP |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| 4a FG/TG | 0.0162 | 2.92 | 29 | 0.008 | ns | 0.0118 | 1.78 | 29 | 0.084 | ns | 0.0122 | 1.75 | 29 | 0.088 | ns |
| 4b FG/postcent | 0.0149 | 2.87 | 28 | 0.009 | ns | 0.0099 | 2.11 | 28 | 0.047 | ns |  |  |  |  |  |
| 7a STG/SMG postcentral | 0.0265 | 3.43 | 28 | 0.002 | 0.037 | 0.0122 | 2.41 | 29 | 0.025 | ns |  |  |  |  |  |
| 7b TG/FG | 0.0160 | 2.24 | 29 | 0.036 | ns | 0.0181 | 2.36 | 28 | 0.028 | ns |  |  |  |  |  |
| RIGHT SHALLOW |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| 1 MFG/IFG | 0.0173 | 2.18 | 29 | 0.041 | ns | −0.011 | −1.87 | 28 | 0.072 | ns |  |  |  |  |  |
| 2 IFG | 0.0200 | 2.95 | 29 | 0.008 | ns |  |  |  |  |  |  |  |  |  |  |
| 4 STG/IFG | 0.0259 | 5.27 | 29 | 0.000 | 0.000 | 0.0163 | 2.49 | 29 | 0.021 | ns | 0.0178 | 2.43 | 29 | 0.024 | ns |
| 5 Precentral | 0.0131 | 1.90 | 29 | 0.068 | ns | 0.0221 | 3.34 | 29 | 0.003 | 0.044 |  |  |  |  |  |
| 6 STG/STS | 0.0194 | 3.14 | 29 | 0.005 | ∼ |  |  |  |  |  |  |  |  |  |  |
| 7 STG | 0.0218 | 3.74 | 29 | 0.001 | 0.016 | 0.0155 | 2.14 | 29 | 0.044 | ns | 0.0135 | 2.05 | 29 | 0.052 | ns |
| 8 SMG | 0.0229 | 3.85 | 29 | 0.001 | 0.011 |  |  |  |  |  |  |  |  |  |  |
| 10 SMG/pSTG | 0.0131 | 2.30 | 28 | 0.032 | ns |  |  |  |  |  |  |  |  |  |  |
| RIGHT DEEP |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| 4a FG/TG | 0.0178 | 2.82 | 29 | 0.011 | ns |  |  |  |  |  |  |  |  |  |  |
| 4b FG/postcent | 0.0169 | 3.31 | 29 | 0.003 | 0.050 |  |  |  |  |  |  |  |  |  |  |
| 7a STG/SMG postcentral | 0.0252 | 4.84 | 29 | 0.000 | 0.001 | 0.0172 | 2.62 | 28 | 0.016 | ns |  |  |  |  |  |
| 7b TG/FG | 0.0134 | 2.93 | 29 | 0.008 | ns |  |  |  |  |  |  |  |  |  |  |

N, number of children contributing data for that channel and condition; t, t value; unc, uncorrected; corr, corrected. MFG, middle frontal gyrus; IFG, inferior frontal gyrus; a, anterior; STG, superior temporal gyrus; STS, superior temporal sulcus; SMG, supramarginal gyrus; p, posterior; MTG, middle temporal gyrus; FG, frontal gyrus; TG, temporal gyrus; postcent, postcentral gyrus.

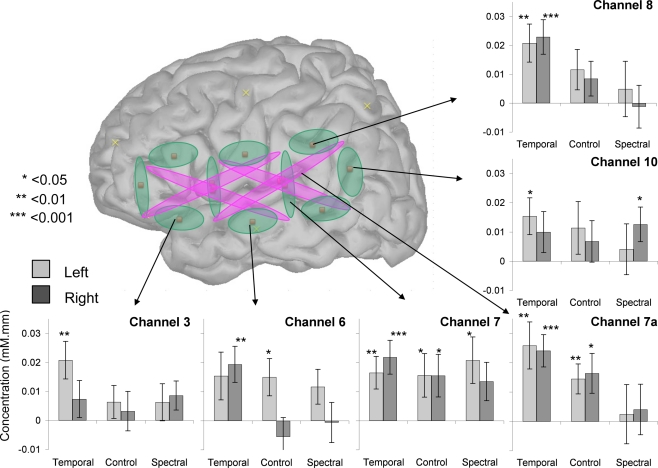

An analysis of variance with Hemisphere, Condition, and Depth as factors revealed a significant intercept [F(1, 28) = 17.4, p < 0.001] and an effect of Condition [F(2, 56) = 3.6, p = 0.03], but no other main effects or interactions [all other Fs < 1 except for Hemisphere × Condition × Depth, F(2, 56) = 1.9, p > 0.1; Figure 3]. The main effect of Condition was due to the Temporal stimuli evoking greater activation, as evident in Figure 3. In the separate ANOVAs, the effect of Condition was significant in deep channels [F(2, 56) = 4.0, p < 0.03] and marginal in shallow channels [F(2, 56) = 2.7, p = 0.08], but neither revealed any significant or marginal effects of, or interactions with, Hemisphere (all Fs < 0.6, p > 0.55). Moreover, even a preliminary inspection of channels selected on the basis of previously used ROIs reveals little evidence of an interaction between hemisphere and condition, as shown in Figure 4 (some children did not provide data for some of these comparisons: 1 for channels 3 and 4a; 2 for channels 7a, 9, and 10). Although Channel 3 appears to follow half of the prediction (greater activation in the LH), the Condition × Hemisphere interaction is also non-significant in an ANOVA specific to this channel [F(2, 54) = 1.3, p > 0.1]. The same ANOVA was applied to the deoxy-Hb data, but no channel showed significant main effects or interactions after correction for multiple comparisons.

Figure 4.

Average activation in six target channels. In each bar graph, activation amplitude is indicated as a function of hemisphere and condition.

Discussion

In this study, we tested whether newborns’ brain activation responds to the rate of change or spectral variability of the stimuli and, if so, whether briefer, spectrally simpler segments (Temporal) evoke left-dominant activations, whereas longer (Control) or spectrally more variable (Spectral) stimuli engage the RH to a greater extent. The answer was clear in both cases: activation was greater bilaterally for the Temporal stimulation than for either the Spectral or the Control stimulation, but there was no evidence of hemispheric asymmetries dependent on the stimulus characteristics.

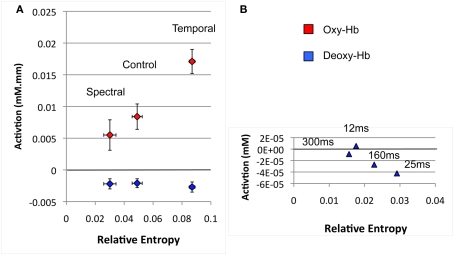

To the best of our knowledge, little attention has been devoted in adult studies to overall changes in response magnitude as a function of rate of change, although this was a robust finding in the present study. The same pattern was already apparent in the previous newborn study by Telkemeyer et al. (2009): five of their six channels exhibited greater activation in response to 25 ms segments than to any of the other stimuli tested (Figure 4, p. 14730). One may interpret this result as a predisposition of the infant brain to respond to stimuli whose rate of change approximates that of speech, estimated at 25–35 ms by previous work (e.g., Poeppel and Hickok, 2004). In Figure 5, we present average newborn brain responses as a function of rate of change, quantified as the Relative Entropy computed over adjacent 25 ms frames of a cochleogram representation of the stimuli. Both Telkemeyer et al.’s (2009) results and ours are consistent with a linear increase in brain activation as a function of Relative Entropy. Note that stimuli that change too fast, such as the 12 ms stimuli of Telkemeyer et al., actually have a lower Relative Entropy than stimuli that change at the 25 ms rate.

Figure 5.

Average newborn brain activation in the perisylvian areas as a function of rate of change, as measured by the average Relative Entropy of the stimuli. (A) Spectral, Control, and Temporal stimuli used in the present study. (B) Stimuli used by Telkemeyer et al. (2009). The average activations were calculated over the three reported temporal channels in both hemispheres. Relative Entropy was computed as $RE(t) = \sum_i p_i(t) \log \frac{p_i(t)}{p_i(t+\Delta)}$, where $p_i(t)$ is the proportion of spectral energy in band $i$ at time $t$, and $\Delta = 25$ ms.
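The Relative Entropy measure can be sketched in Python as the mean, over frames, of the Kullback-Leibler divergence between the band-energy proportions of adjacent frames, that is, the average over t of Σ_i p_i(t) log(p_i(t) / p_i(t + Δ)). This is a minimal illustration under stated assumptions, not the analysis code behind Figure 5: it assumes the cochleogram has already been computed and binned into non-negative band energies with one column per 25 ms frame, and the direction of the divergence, the `eps` smoothing term, and the function names are our own choices.

```python
import numpy as np


def band_proportions(energy: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Normalize each frame (column) of a band x frame energy matrix so the
    bands sum to 1, giving p_i(t). eps (an assumption) avoids zero bands."""
    energy = np.asarray(energy, dtype=float) + eps
    return energy / energy.sum(axis=0, keepdims=True)


def mean_relative_entropy(energy: np.ndarray, eps: float = 1e-12) -> float:
    """Average KL divergence between adjacent frames:
    mean over t of sum_i p_i(t) * log(p_i(t) / p_i(t + 1 frame))."""
    p = band_proportions(energy, eps)
    current, following = p[:, :-1], p[:, 1:]
    per_frame = np.sum(current * np.log(current / following), axis=0)
    return float(per_frame.mean())


# Toy matrices (illustrative only, not the study's stimuli): 3 bands x 8 frames.
steady = np.tile([[1.0], [0.0], [0.0]], (1, 8))  # energy always in band 0
alternating = np.tile([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]], (1, 4))  # jumps every frame

print(mean_relative_entropy(steady))           # 0.0
print(mean_relative_entropy(alternating) > 0)  # True
```

The toy matrices only check the two extremes: an unchanging spectrum gives zero, and a spectrum that jumps between bands on every frame gives a large positive value.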

Thus, these findings are in good agreement with the recently revised version of the time-resolution model proposed by Poeppel et al. (2008), which hypothesizes that fast-changing stimuli elicit larger responses that are largely symmetrical. Our data are consistent with this prediction, as newborns showed greater bilateral activation for the Temporal condition than for the other conditions. Interestingly, the same is not always found in adults. Careful inspection of previous results shows greater activation for temporally complex stimuli in some cases (Jamison et al., 2006, Table 1, p. 1270: the Temporal > Spectral contrast activates 1354 voxels in HG and STG, and the Spectral > Temporal contrast 197 voxels in STG, that is, nearly seven times as many voxels for Temporal as for Spectral), but not in others (Zatorre and Belin, 2001, Figure 3, p. 950: total average CBF in anterior STG was identical for the Temporal and Spectral conditions; Boemio et al., 2005, Figure 4, p. 392: activation in STG decreases linearly with temporal rate). This question deserves further investigation in both infant and adult populations.

As for the question of asymmetries induced by the present stimuli, the current study on newborns clearly fails to replicate the adult PET (Zatorre and Belin, 2001) and fMRI (Jamison et al., 2006) results obtained with a superset of the same stimuli. However, our results are in line with the previous newborn study, where the evidence for lateralization was scarce (one-tailed t-test on 1 channel selected among 6, 0.03 < p (uncorrected) < 0.05; Telkemeyer et al., 2009). Given that two studies on comparable populations yielded similar results, signal-driven asymmetries may initially be weak. Such a result may not be too surprising, given that the hypothesized sources of these asymmetries do indeed strengthen with development. Previous research has suggested three possible bases underlying signal-driven hemispheric specialization: myelination and column spread (Zatorre and Belin, 2001), structural connectivity between STS and STG (Boemio et al., 2005), and functional connectivity among auditory areas (Poeppel and Hickok, 2004). A good deal of research has documented dramatic developmental changes in myelination and structural connectivity (see, for example, Paus et al., 2001; Haynes et al., 2005; Dubois et al., 2006), and functional connectivity data from EEG, MRI, and NIRS studies are beginning to draw a picture of rapidly changing, increasingly complex connections (e.g., Johnson et al., 2005; Lin et al., 2008; Gao et al., 2009; Homae et al., 2010). As Homae et al. (2010) put it, “the infant brain is not a miniature version of the adult brain but a continuously self-organizing system that forms functional regions and networks among multiple regions via short-range and long-range connectivity” (p. 4877). Efficient hemispheric specialization relies on synaptic bases for rapid inter-hemispheric communication of neural signals, and this system may not be sufficiently developed in neonates. Indeed, recent work documents that long-range functional connectivity across hemispheres is not present in the temporal and parietal areas in young infants (Homae et al., 2010).

Nonetheless, there is an alternative explanation, according to which all of the necessary components for signal-based asymmetries are present and functional by full-term birth, but our study simply failed to reveal them, for example because of problems with the stimuli. To begin with, given that studies using language or music stimuli have uncovered hemispheric asymmetries in newborns (Peña et al., 2003; Gervain et al., 2008; Perani et al., 2010; Arimitsu et al., this volume), it could be that our non-speech, pure-tone stimuli fail to evoke a response strong enough to allow an assessment of stimulus type by hemisphere interactions. In line with this explanation, in Peña et al. (2003) backward speech failed to activate auditory areas (a result replicated in Sato et al., 2006), suggesting that not all stimuli evoke activations measurable with NIRS. Moreover, one previous adult study suggests that simple tones evoke activation in deeper areas of the brain (Jamison et al., 2006). Although we attempted to capture such activations by analyzing light absorption over long-distance channels, the paths traveled by this light may not have included the relevant structures.

Additionally, a reviewer pointed out that, because of limitations in infants’ frequency resolution, the stimuli should have been adapted to provide a better match with the previous adult work. Novitski et al. (2007) found that newborns’ ERPs showed no evidence of change detection in a sequence of 100 ms tones separated by a 700 ms inter-stimulus interval when the oddball deviated from the standard by only 5%, that is, 12.5 Hz away from a 250 Hz standard or 50 Hz away from a 1000 Hz standard. However, the response was reliable when the oddball differed by 20% (50 Hz from a 250 Hz standard, 200 Hz from a 1000 Hz standard). Newborns may therefore not have been able to detect some of the spectral changes in the Spectral condition, since our stimuli were sampled at 32 steps between 500 and 1000 Hz. Yet the probability of two successive segments differing by less than 5% is only 20%, so 80% of the transitions could probably be detected by the newborns. While expanding the range of variation to two octaves and sampling at larger intervals is certainly an avenue worth exploring, we remain agnostic as to the power of this strategy, given that no channel in either hemisphere was activated during either this condition or the Control condition, where the 500 Hz frequency changes were easily detectable.
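To make the frequency-resolution argument concrete, the sketch below computes the relative size of a one-step change on a 32-step grid spanning 500 to 1000 Hz and reproduces the absolute deviations corresponding to the 5% and 20% changes tested by Novitski et al. (2007). How the 32 steps were spaced is not specified here, so both linear and logarithmic (equal-ratio) spacing are shown as assumptions; the function names are hypothetical, and treating a fixed percentage as a detection threshold is a simplification.

```python
import numpy as np

LOW_HZ, HIGH_HZ, N_STEPS = 500.0, 1000.0, 32

# Two assumed ways of placing 32 frequencies between 500 and 1000 Hz.
linear_grid = np.linspace(LOW_HZ, HIGH_HZ, N_STEPS)
log_grid = np.geomspace(LOW_HZ, HIGH_HZ, N_STEPS)


def one_step_percent(grid: np.ndarray) -> np.ndarray:
    """Percent change from each frequency to the next one up,
    relative to the lower (preceding) frequency."""
    return 100.0 * np.diff(grid) / grid[:-1]


def deviation_hz(standard_hz: float, percent: float) -> float:
    """Absolute frequency deviation corresponding to a percent change."""
    return standard_hz * percent / 100.0


for name, grid in [("linear", linear_grid), ("log", log_grid)]:
    steps = one_step_percent(grid)
    print(f"{name}: one-step change {steps.min():.2f}% to {steps.max():.2f}%")

# Deviations from the 250 Hz and 1000 Hz standards described in the text.
print(deviation_hz(250, 5), deviation_hz(1000, 5))    # 12.5 50.0
print(deviation_hz(250, 20), deviation_hz(1000, 20))  # 50.0 200.0
```

Under either spacing assumption, every one-step transition changes frequency by well under 5%, so only transitions spanning several steps would exceed the deviation that newborns reliably detected.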

An underlying asymmetry could also have escaped detection because of technical limitations of NIRS. In addition to the difficulty of detecting activation below the cortical surface mentioned above, the light measured by each NIRS channel necessarily travels along a limited path, and, because of channel spacing, low-density NIRS may fail to capture particular activation foci. Indeed, as pointed out by Rutten et al. (2002), lateralization is more accurately measured when the analyses are limited to specified regions, which is not feasible with low-density NIRS as used here. Because the activation area captured with NIRS appears broader than it really is, NIRS fails to capture strong signals from discrete activation foci.
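One common way to quantify hemispheric dominance is a laterality index over homologous channels, LI = (L − R) / (|L| + |R|). The sketch below uses hypothetical channel values, not data from this study, to illustrate the point about region selection: averaging a focal left-dominant response together with symmetric neighboring channels pulls the index toward zero, which is the kind of dilution expected when coarse channels sample a broad area. The absolute values in the denominator, which keep the index within [−1, 1] when activations are negative, are our assumption.

```python
import numpy as np


def laterality_index(left: np.ndarray, right: np.ndarray) -> float:
    """LI = (L - R) / (|L| + |R|) on the mean activation of homologous channels.

    Positive values indicate left dominance, negative values right dominance.
    Absolute values in the denominator are an assumed convention.
    """
    left_mean = float(np.mean(left))
    right_mean = float(np.mean(right))
    denominator = abs(left_mean) + abs(right_mean)
    if denominator == 0.0:
        return 0.0  # no activation on either side: report no asymmetry
    return (left_mean - right_mean) / denominator


# Hypothetical activations for four homologous channel pairs: only the
# first pair carries a left-dominant focus, the others respond symmetrically.
left = np.array([0.030, 0.010, 0.010, 0.010])
right = np.array([0.010, 0.010, 0.010, 0.010])

print(round(laterality_index(left[:1], right[:1]), 2))  # focal ROI: 0.5
print(round(laterality_index(left, right), 2))          # all channels: 0.2
```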

An even larger question that also awaits further data is whether signal-driven hypotheses still stand a good chance of explaining left lateralization for language. These hypotheses face a number of challenges, the first of which, as briefly stated in the Introduction, is defining the stimulus characteristics that yield robust lateralization patterns. Further experimentation with more minimal stimuli could help disentangle the precise physical characteristics that drive hemispheric dominance.

The second challenge to the explanatory power of this class of hypotheses for language concerns the fit between these distinguishing characteristics and speech itself. As the hypothesis is implemented with greater precision, it loses explanatory power, because speech clearly has both short and long segments, fast and slow transitions, temporal and spectral contrasts, and so on. It is nonetheless possible that some combination of these factors describes language perfectly; this too is an empirical question, which could be addressed as a first approach by multiple regressions on speech with the proposed factors as regressors.
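The multiple-regression approach mentioned above could be sketched as an ordinary least-squares fit. This is a minimal illustration under assumptions, not an analysis performed in this study: we assume one outcome value per speech stimulus (for instance, a laterality index) and one column per candidate acoustic factor (for instance, segment duration, spectral variability, and rate of change); the factor names, the function name, and the synthetic data are hypothetical.

```python
import numpy as np


def fit_factors(factors: np.ndarray, response: np.ndarray) -> np.ndarray:
    """Ordinary least-squares fit of response ~ intercept + factors.

    factors: (n_stimuli, n_factors) matrix of acoustic descriptors.
    response: (n_stimuli,) outcome, e.g. a laterality index per stimulus.
    Returns [intercept, beta_1, ..., beta_k].
    """
    design = np.column_stack([np.ones(len(response)), factors])
    coefficients, *_ = np.linalg.lstsq(design, response, rcond=None)
    return coefficients


# Synthetic check: build a noise-free response from known weights and recover them.
rng = np.random.default_rng(0)
factors = rng.normal(size=(50, 3))  # hypothetical: duration, spectral variability, rate
true_weights = np.array([0.1, 0.5, -0.2, 0.0])  # intercept first
response = true_weights[0] + factors @ true_weights[1:]
print(np.round(fit_factors(factors, response), 3))
```

With real speech, the factors would likely be correlated with one another, so the stability of the individual coefficients would need to be checked before interpreting them.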

Naturally, the final challenge for these hypotheses lies not in their potential explanatory power, but in their empirical fit. On the one hand, a substantial number of researchers have abandoned signal-driven explanations for the left lateralization of speech processing, and instead propose that acoustic characteristics can only modulate overall activation or right dominance (Poeppel et al., 2008). On the other hand, we know that, at some point, acoustic characteristics give way to linguistic characteristics, such that vowel and pitch contrasts (spectral, slow, lower frequency…) elicit strong left dominance, just as consonants do, but only if they belong to the listener’s language. Zatorre and Belin (2001) have proposed that the signal-driven hypothesis only provides an initial bias, after which language takes on a life of its own. Indeed, we recently proposed a model of speech acquisition and brain development in which left dominance for language results not from a single bias, but from a combination of factors whose significance is modulated by development (Minagawa-Kawai et al., 2007, 2011a). This model proposes that signal-driven biases dominate activation patterns at an early stage of speech acquisition, such that hemispheric lateralization is determined primarily by the acoustic features of the spoken stimuli. As infants gain language experience, they begin to acquire sounds, sound patterns, word forms, and other frequent sequences, all of which involve learning mechanisms that are particularly efficient in the left hemisphere. Consequently, as linguistic categories are built, activation in response to speech is best described as domain-driven, since leftward lateralization increases exclusively for first-language categories. Although evidence on the development of lateralization remains sparse, current data on infants listening to speech or non-speech are well captured by this model.
For example, cerebral responses in young infants are bilateral for vowel contrasts (Minagawa-Kawai et al., 2007, 2009) and right-dominant or bilateral for pitch accent (Sato et al., 2010), whereas in adulthood these contrasts evoke left-dominant activations in listeners whose language uses them, and right-dominant activations otherwise. We assume that it is through the learning process that the cerebral networks processing native vowel contrasts shift from a bilateral, signal-driven pattern to left-dominant, linguistically driven processing.

Conclusion

In summary, this study aimed to provide additional evidence on the modulation of newborns’ brain activation by the physical characteristics of auditory stimuli. While these data provide further support for increased responsiveness to change rates similar to those found in speech, there was no evidence of hemispheric asymmetries. This may indicate that the functional organization responsible for such asymmetries in adults is not in place at birth, but other explanations remain open, and further data, both replications and extensions to other age groups, are needed before drawing any definite conclusions.

Conflict of Interest Statement

The authors declare that this manuscript was prepared in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported by the EU’s Sixth Framework Programme (Neuronal Origins of Language and Communication: NEUROCOM, Project no. 012738), in part by a grant from the Agence Nationale de la Recherche (ANR-09-BLAN-0327 SOCODEV), a Grant-in-Aid for Scientific Research (A) (Project no. 21682002), the Academic Frontier Project supported by the Ministry of Education, Culture, Sports, Science and Technology (MEXT), and funding from the Ecole de Neurosciences de Paris and the Fyssen Foundation. The authors thank R. Zatorre for use of the stimuli; J. Hebden and N. Everdell for their comments and help with the NIRS system; K. Friston for illuminating suggestions regarding data analysis; and H. van der Lely, A. Shestakova, E. Kushnerenko, and J. Meek for their support of an earlier version of this study. We thank L. Filippin for help with the acquisition and analysis software; A. Bachmann for designing and constructing the probe pad; and I. Brunet, A. Elgellab, J. Gervain, and S. Margules for assistance with infant recruitment and testing.

Appendix

Table A1.

Levels of activation (deoxy-Hb changes) for the Temporal, Control, and Spectral conditions.

| Channel | Temporal | Control | Spectral | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| β | T | N | p (unc) | p (corr) | β | T | N | p (unc) | p (corr) | β | T | N | p (unc) | p (corr) | |

| LEFT SHALLOW | |||||||||||||||

| 2 IFG | −0.0080 | −2.14 | 29 | 0.044 | ns | ||||||||||

| 3 aSTG | −0.0051 | −2.39 | 29 | 0.027 | ns | ||||||||||

| 4 STG/IFG | −0.0067 | −2.14 | 29 | 0.044 | ns | −0.0057 | −2.01 | 29 | 0.056 | ns | −0.0050 | −1.73 | 29 | 0.091 | ns |

| 5 precentral | −0.0061 | −1.90 | 29 | 0.068 | ns | ||||||||||

| 7 STG | −0.0064 | −2.25 | 29 | 0.035 | ns | ||||||||||

| 9 pSTG/STS | −0.0054 | −2.19 | 29 | 0.040 | ns | −0.0058 | −2.81 | 28 | 0.011 | ns | |||||

| LEFT DEEP | |||||||||||||||

| 4b FG/postcentral | −0.0047 | −2.80 | 28 | 0.011 | ns | ||||||||||

| 7b/TG/FG | −0.0032 | −1.84 | 29 | 0.076 | ns | −0.0045 | −2.69 | 28 | 0.014 | ns | |||||

| RIGHT SHALLOW | |||||||||||||||

| 1 MFG/IFG | −0.0052 | −1.72 | 28 | 0.092 | ns | ||||||||||

| 2 IFG | −0.0054 | −2.48 | 29 | 0.022 | ns | ||||||||||

| 3 aSTG | −0.0054 | −2.06 | 29 | 0.051 | ns | −0.0046 | −1.94 | 29 | 0.063 | ns | |||||

| 4 STG/IFG | −0.0068 | −2.47 | 29 | 0.023 | ns | −0.0050 | −1.92 | 29 | 0.066 | ns | |||||

| 5 Precentral | −0.0045 | −1.78 | 29 | 0.083 | ns | ||||||||||

| 6 STG/STS | −0.0054 | −2.54 | 29 | 0.019 | ns | −0.0063 | −2.39 | 29 | 0.027 | ns | −0.0081 | −3.12 | 29 | 0.005 | ∼ |

| 9 pSTG/STS | −0.0065 | −2.44 | 29 | 0.024 | ns | ||||||||||

N, number of children contributing data for that channel and condition; unc, uncorrected; corr, corrected; ns, not significant; ∼, marginally significant; MFG, middle frontal gyrus; IFG, inferior frontal gyrus; a, anterior; STG, superior temporal gyrus; STS, superior temporal sulcus; p, posterior; MTG, middle temporal gyrus; FG, frontal gyrus; TG, temporal gyrus.

References

- Bedoin N., Ferragne E., Marsico E. (2010). Hemispheric asymmetries depend on the phonetic feature: a dichotic study of place of articulation and voicing in French stops. Brain Lang. 115, 133–140. doi: 10.1016/j.bandl.2010.06.001

- Belin P., Zilbovicius M., Crozier S., Thivard L., Fontaine A., Masure M. C., Samson Y. (1998). Lateralization of speech and auditory temporal processing. J. Cogn. Neurosci. 10, 536–540.

- Boemio A., Fromm S., Braun A., Poeppel D. (2005). Hierarchical and asymmetric temporal sensitivity in human auditory cortices. Nat. Neurosci. 8, 389–395.

- Britton B., Blumstein S. E., Myers E. B., Grindrod C. (2009). The role of spectral and durational properties on hemispheric asymmetries in vowel perception. Neuropsychologia 47, 1096–1106. doi: 10.1016/j.neuropsychologia.2008.12.033

- Dehaene-Lambertz G., Hertz-Pannier L., Dubois J. (2006). Nature and nurture in language acquisition: anatomical and functional brain-imaging studies in infants. Trends Neurosci. 29, 367–373. doi: 10.1016/j.tins.2006.05.011

- Dehaene-Lambertz G., Montavont A., Jobert A., Allirol L., Dubois J., Hertz-Pannier L., Dehaene S. (2010). Language or music, mother or Mozart? Structural and environmental influences on infants’ language networks. Brain Lang. 114, 53–65. doi: 10.1016/j.bandl.2009.09.003

- Dubois J., Hertz-Pannier L., Dehaene-Lambertz G., Cointepas Y., Le Bihan D. (2006). Assessment of the early organization and maturation of infants’ cerebral white matter fiber bundles: a feasibility study using quantitative diffusion tensor imaging and tractography. Neuroimage 30, 1121–1132. doi: 10.1016/j.neuroimage.2005.11.022

- Elman J., Bates E., Johnson M., Karmiloff-Smith A., Parisi D., Plunkett K. (1997). Rethinking Innateness: A Connectionist Perspective on Development. Cambridge, MA: MIT Press.

- Everdell N. L., Gibson A. P., Tullis I. D. C., Vaithianathan T., Hebden J. C., Delpy D. T. (2005). A frequency multiplexed near-infrared topography system for imaging functional activation in the brain. Rev. Sci. Instrum. 76, 093705.

- Fukui Y., Ajichi Y., Okada E. (2003). Monte Carlo prediction of near-infrared light propagation in realistic adult and neonatal head models. Appl. Opt. 42, 2881–2887.

- Gao W., Zhu H., Giovanello K. S., Smith J. K., Shen D., Gilmore J. H., Lin W. (2009). Evidence on the emergence of the brain's default network from 2-week-old to 2-year-old healthy pediatric subjects. Proc. Natl. Acad. Sci. U.S.A. 106, 6790–6795. doi: 10.1073/pnas.0811221106

- Gervain J., Macagno F., Cogoi S., Peña M., Mehler J. (2008). The neonate brain detects speech structure. Proc. Natl. Acad. Sci. U.S.A. 105, 14222–14227.

- Giraud A. L., Kleinschmidt A., Poeppel D., Lund T. E., Frackowiak R. S., Laufs H. (2007). Endogenous cortical rhythms determine cerebral specialization for speech perception and production. Neuron 56, 1127–1134. doi: 10.1016/j.neuron.2007.09.038

- Haynes R. L., Borenstein N. S., Desilva T. M., Folkerth R. D., Liu L. G., Volpe J. J., Kinney H. C. (2005). Axonal development in the cerebral white matter of the human fetus and infant. J. Comp. Neurol. 484, 156–167.

- Hickok G., Poeppel D. (2007). The cortical organization of speech processing. Nat. Rev. Neurosci. 8, 393–402.

- Homae F., Watanabe H., Nakano T., Asakawa K., Taga G. (2006). The right hemisphere of sleeping infant perceives sentential prosody. Neurosci. Res. 54, 276–280.

- Homae F., Watanabe H., Otobe T., Nakano T., Go T., Konishi Y., Taga G. (2010). Development of global cortical networks in early infancy. J. Neurosci. 30, 4877–4882. doi: 10.1523/JNEUROSCI.5618-09.2010

- Jamison H. L., Watkins K. E., Bishop D. V., Matthews P. M. (2006). Hemispheric specialization for processing auditory nonspeech stimuli. Cereb. Cortex 16, 1266–1275.

- Johnson M. H., Griffin R., Csibra G., Halit H., Farroni T., de Haan M., Tucker L. A., Baron-Cohen S., Richards J. (2005). The emergence of the social brain network: evidence from typical and atypical development. Dev. Psychopathol. 17, 599–619.

- Lin W., Zhu Q., Gao W., Chen Y., Toh C.-H., Styner M., Gerig G., Smith J. K., Biswal B., Gilmore J. H. (2008). Functional connectivity MR imaging reveals cortical functional connectivity in the developing brain. Am. J. Neuroradiol. 29, 1883–1889. doi: 10.3174/ajnr.A1256

- Lloyd-Fox S., Blasi A., Elwell C. E. (2010). Illuminating the developing brain: the past, present and future of functional near infrared spectroscopy. Neurosci. Biobehav. Rev. 34, 269–284.

- Minagawa-Kawai Y., Cristià A., Dupoux E. (2011a). Cerebral lateralization and early speech acquisition: a developmental scenario. Dev. Cogn. Neurosci. 1, 217–232.

- Minagawa-Kawai Y., van der Lely H., Ramus F., Sato Y., Mazuka R., Dupoux E. (2011b). Optical brain imaging reveals general auditory and language-specific processing in early infant development. Cereb. Cortex 21, 254–261. doi: 10.1093/cercor/bhq082

- Minagawa-Kawai Y., Mori K., Hebden J. C., Dupoux E. (2008). Optical imaging of infants’ neurocognitive development: recent advances and perspectives. Dev. Neurobiol. 68, 712–728. doi: 10.1002/dneu.20618

- Minagawa-Kawai Y., Mori K., Naoi N., Kojima S. (2007). Neural attunement processes in infants during the acquisition of a language-specific phonemic contrast. J. Neurosci. 27, 315–321. doi: 10.1523/JNEUROSCI.1984-06.2007

- Minagawa-Kawai Y., Naoi N., Kojima S. (2009). New Approach to Functional Neuroimaging: Near Infrared Spectroscopy. Tokyo: Keio University Press.

- Novitski N., Huotilainen M., Tervaniemi M., Näätänen R., Fellman V. (2007). Neonatal frequency discrimination in 250–4000 Hz range: electrophysiological evidence. Clin. Neurophysiol. 118, 412–419. doi: 10.1016/j.clinph.2006.10.008

- Obrig H., Rossi S., Telkemeyer S., Wartenburger I. (2010). From acoustic segmentation to language processing: evidence from optical imaging. Front. Neuroenerg. 2:13. doi: 10.3389/fnene.2010.00013

- Obrig H., Villringer A. (2003). Beyond the visible – imaging the human brain with light. J. Cereb. Blood Flow Metab. 23, 1–18. doi: 10.1097/00004647-200301000-00001

- Paus T., Collins D. L., Evans A. C., Leonard G., Pike B., Zijdenbos A. (2001). Maturation of white matter in the human brain: a review of magnetic resonance studies. Brain Res. Bull. 54, 255–266.

- Peña M., Maki A., Kovacic D., Dehaene-Lambertz G., Koizumi H., Bouquet F., Mehler J. (2003). Sounds and silence: an optical topography study of language recognition at birth. Proc. Natl. Acad. Sci. U.S.A. 100, 11702–11705.

- Perani D., Saccuman M. C., Scifo P., Spada D., Andreolli G., Rovelli R., Baldoli C., Koelsch S. (2010). Functional specializations for music processing in the human newborn brain. Proc. Natl. Acad. Sci. U.S.A. 107, 4758–4763. doi: 10.1073/pnas.0909074107

- Poeppel D., Guillemin A., Thompson J., Fritz J., Bavelier D., Braun A. R. (2003). Auditory lexical decision, categorical perception, and FM direction discrimination differentially engage left and right auditory cortex. Neuropsychologia 42, 183–200. doi: 10.1016/j.neuropsychologia.2003.07.010

- Poeppel D., Hickok G. (2004). Towards a new functional anatomy of language. Cognition 92, 1–12. doi: 10.1016/j.cognition.2003.11.001

- Poeppel D., Idsardi W. J., van Wassenhove V. (2008). Speech perception at the interface of neurobiology and linguistics. Philos. Trans. R. Soc. Lond. B Biol. Sci. 363, 1071–1086.

- Rutten G. J. M., Ramsey N. F., van Rijen P. C., van Veelen C. W. M. (2002). Reproducibility of fMRI-determined language lateralization in individual subjects. Brain Lang. 80, 421–437. doi: 10.1006/brln.2001.2600

- Rybalko N., Suta D., Nwabueze-Ogbo F., Syka J. (2006). Effect of auditory cortex lesions on the discrimination of frequency-modulated tones in rats. Eur. J. Neurosci. 23, 1614–1622.

- Sato H., Hirabayashi Y., Tsubokura S., Kanai M., Ashida S., Konishi I., Uchida M., Hasegawa T., Konishi Y., Maki A. (2006). Cortical activation in newborns while listening to sounds of mother tongue and foreign language: an optical topography study. Proc. Intl. Conf. Inf. Study, 037–070.

- Sato Y., Sogabe Y., Mazuka R. (2010). Development of hemispheric specialization for lexical pitch-accent in Japanese infants. J. Cogn. Neurosci. 22, 2503–2513.

- Schonwiesner M., Rubsamen R., von Cramon D. Y. (2005). Hemispheric asymmetry for spectral and temporal processing in the human antero-lateral auditory belt cortex. Eur. J. Neurosci. 22, 1521–1528.

- Telkemeyer S., Rossi S., Koch S. P., Nierhaus T., Steinbrink J., Poeppel D., Obrig H., Wartenburger I. (2009). Sensitivity of newborn auditory cortex to the temporal structure of sounds. J. Neurosci. 29, 14726–14733.

- Tsuzuki D., Jurcak V., Singh A. K., Okamoto M., Watanabe E., Dan I. (2007). Virtual spatial registration of stand-alone fNIRS data to MNI space. Neuroimage 34, 1506–1518. doi: 10.1016/j.neuroimage.2006.10.043

- Westfall P. H., Young S. S. (1993). Resampling-Based Multiple Testing. New York, NY: John Wiley & Sons.

- Wetzel W., Ohl F. W., Wagner T., Scheich H. (1998). Right auditory cortex lesion in Mongolian gerbils impairs discrimination of rising and falling frequency-modulated tones. Neurosci. Lett. 252, 115–118.

- Zatorre R. J., Belin P. (2001). Spectral and temporal processing in human auditory cortex. Cereb. Cortex 11, 946–953. doi: 10.1093/cercor/11.10.946