Abstract

Visual imagery comprises object and spatial dimensions. Both types of imagery encode shape but a key difference is that object imagers are more likely to encode surface properties than spatial imagers. Since visual and haptic object representations share many characteristics, we investigated whether haptic and multisensory representations also share an object-spatial continuum. Experiment 1 involved two tasks in both visual and haptic within-modal conditions, one requiring discrimination of shape across changes in texture, the other discrimination of texture across changes in shape. In both modalities, spatial imagers could ignore changes in texture but not shape, whereas object imagers could ignore changes in shape but not texture. Experiment 2 re-analyzed a cross-modal version of the shape discrimination task from an earlier study. We found that spatial imagers could discriminate shape across changes in texture but object imagers could not; and that the more one preferred object imagery, the more texture changes impaired discrimination. These findings are the first evidence that object and spatial dimensions of imagery can be observed in haptic and multisensory representations.

Keywords: cross-modal, multisensory, texture, orientation, vision, touch

INTRODUCTION

Research into cognitive styles (consistent individual preferences for processing information in one way over another) has identified ‘verbalizers’, who employ verbal-analytical strategies, and ‘visualizers’, who prefer visual imagery (Kozhevnikov et al., 2002). Visualizers have been sub-divided into object and spatial imagers (Kozhevnikov et al., 2005) although it is important to note that imagery preferences tend to be relative, rather than absolute. Both encode shape, but object imagers tend to generate pictorial images that are vivid, detailed, and include information about surface properties, e.g., color; by contrast, spatial imagers tend to generate more schematic images primarily concerned with spatial relations, e.g., between component parts of objects, and spatial transformations (Kozhevnikov et al., 2005). So far, object and spatial imagery dimensions have been shown only in vision, but object properties and spatial relations are also perceived by touch, and can be transferred cross-modally. Representations derived from vision and touch share many characteristics (Lacey & Sathian, 2011) and here we examine whether individuals differ along object and spatial imagery dimensions with regard to haptic and multisensory representations.

A key difference is that object, but not spatial, imagers tend to encode surface properties, e.g., color and texture, in object representations (Kozhevnikov et al., 2005). We recently showed that texture information is integrated into both visual and haptic representations (Lacey et al., 2010) but did not examine individual differences in this respect. Here, we tested shape discrimination across changes in texture, and texture discrimination across changes in shape, in visual and haptic within-modal conditions. We predicted a task x imagery interaction in which spatial imagers would be able to discriminate shape across changes in texture (because they tend not to encode texture) but object imagers would not (because they integrate shape and texture). In discriminating texture across shape changes, we expected spatial imagers to be impaired. Object imagers might also be impaired (because shape and texture are integrated); alternatively, they might be unaffected (because they are better able than spatial imagers to focus on surface properties when necessary). Finally, we expected no effect of modality on either task or imagery type if object and spatial imagery dimensions are common to vision and touch.

EXPERIMENT 1: UNISENSORY REPRESENTATIONS

Methods

Participants

Thirty-five undergraduate students from Emory University took part (16 male; mean age 20 years, 11 months) and were remunerated for their time. All gave informed written consent and all procedures were approved by the Emory University Institutional Review Board.

Stimuli

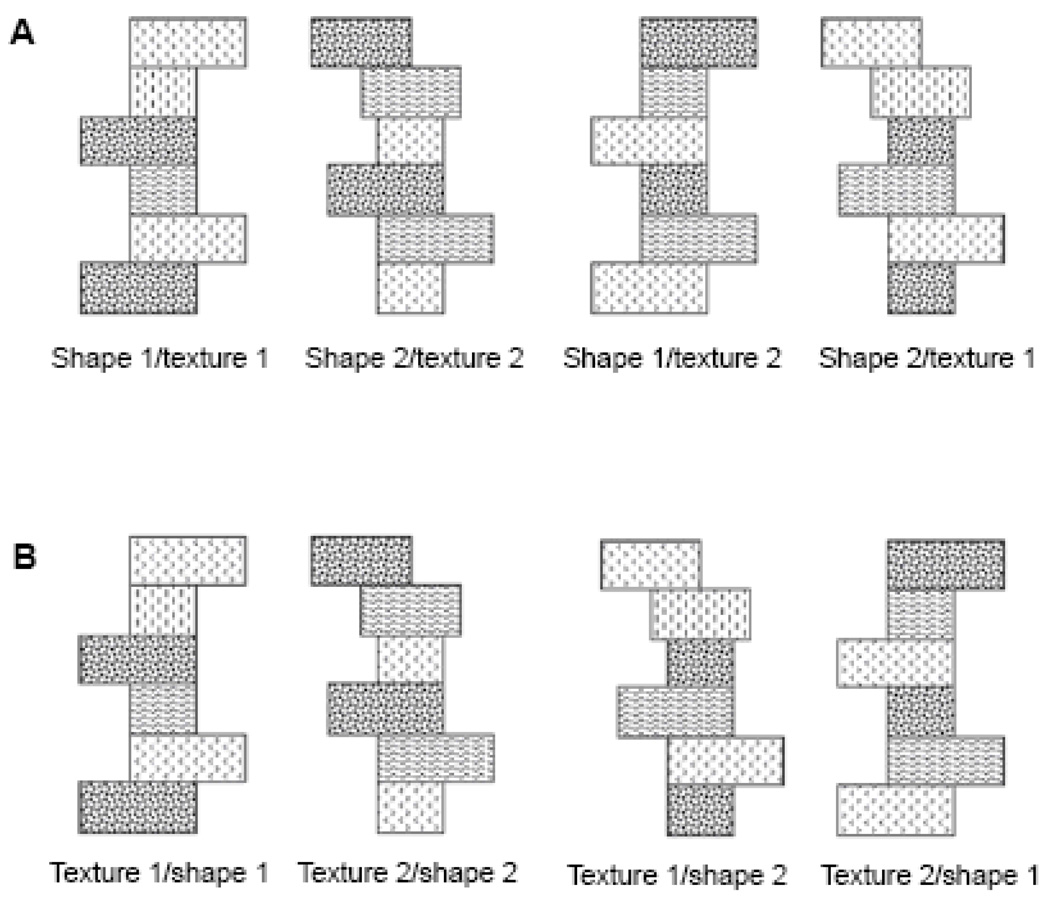

We used a total of 80 objects, each made from six smooth wooden blocks measuring 1.6 X 3.6 X 2.2 cm (Fig. 1). These objects had four-part texture schemes: each component block was covered with sandpaper (20 grit), standard Braille paper with Braille characters, velvet cloth, or was left with the original smooth surface. No two objects had the same texture scheme, no two adjacent blocks had the same texture, and all textures were rendered in matt black. Each object had a small (<1 mm) grey pencil dot on the bottom facet that the experimenter could use as a cue to ensure consistent presentation. Debriefing showed that participants were never aware of these small dots. The 80 objects were divided into 4 sets of 10 pairs (original Shape 1 and Shape 2). We then duplicated these, creating 4 sets of 10 copy pairs in which the copy of Shape 1 had the texture scheme of the original Shape 2 and vice versa (Fig. 1A). The end result was a series of object quadruplets in which shape and texture were exchanged between pairs so that, in each quadruplet, pairs could be used for either shape or texture discrimination. We used difference matrices based on the number of differences in the position and orientation of component blocks to calculate the mean difference in object shape for each of the sets (Lacey et al., 2007, 2009a, 2010). Paired t-tests showed no significant differences between these sets (all p values > .05) and they were therefore considered equally discriminable. Use of the object sets for the shape and texture discrimination tasks and for each modality condition was counterbalanced.

Figure 1.

(A) Schematic example of a trial in the shape discrimination task. Participants studied Shapes 1 and 2 with the original texture schemes (left pair). At test, these original objects were presented, one at a time in pseudorandom order, with two additional objects (right pair); these additional objects had the same shapes as Shapes 1 and 2 but the texture schemes were exchanged. (B) Schematic example of a trial in the texture discrimination task. Participants studied Textures 1 and 2 with the original shapes (left pair). At test, these original objects were presented, one at a time in pseudorandom order, with two additional objects (right pair); these additional objects had the same textures as Textures 1 and 2 but the shapes were exchanged. (Experiment 2 used the same shape discrimination task as in (A), but each of the four objects was presented twice: once in its original orientation and once rotated by 180°.)

Procedure

Before the first experimental session, participants completed the Object-Spatial Imagery Questionnaire (OSIQ) (Blajenkova et al., 2006). We subtracted the spatial imagery score from the object imagery score: negative OSIQ difference scores (OSIQd) indicated a preference for spatial imagery and positive scores indicated a preference for object imagery. During pilot-testing, we noted that some participants spontaneously reported an imagery preference at odds with their OSIQd. Therefore, after the second experimental session, but before debriefing participants, we collected self-reports (SR) about imagery preference in response to a brief explanation of object and spatial imagery (Appendix A) based on the same definitions as the OSIQ (Blajenkova et al., 2006).
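The OSIQd computation described above can be sketched in a few lines. This is only an illustration: the class and function names are hypothetical, the example scores are made up, and the handling of a difference score of exactly zero is an assumption, since the text does not say how such a case would be classified.

```python
from dataclasses import dataclass


@dataclass
class OsiqScores:
    """Hypothetical container for one participant's two OSIQ scale scores."""
    object_score: float   # score on the object imagery scale
    spatial_score: float  # score on the spatial imagery scale


def osiq_difference(scores: OsiqScores) -> float:
    """OSIQd: object imagery score minus spatial imagery score."""
    return scores.object_score - scores.spatial_score


def classify_by_osiqd(osiqd: float) -> str:
    """Positive OSIQd -> object imager; negative OSIQd -> spatial imager."""
    if osiqd > 0:
        return "object"
    if osiqd < 0:
        return "spatial"
    # Assumption: the text does not describe how a score of exactly zero
    # would be treated, so it is left unclassified here.
    return "unclassified"


# Made-up scores, for illustration only.
participant = OsiqScores(object_score=4.0, spatial_score=3.0)
print(classify_by_osiqd(osiq_difference(participant)))
```

Classification by self-report (SR) is a separate, categorical judgment by the participant and is not derived from these scores.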

Participants performed a 2-alternative, forced-choice (2AFC) shape discrimination task in both visual and haptic within-modal conditions. One or two days later, they also completed a 2AFC texture discrimination task in both visual and haptic within-modal conditions. Task order and modality were counterbalanced across participants. Due to the odd sample size, there was a small discrepancy in the object imagery group: one extra person did the shape task first; and of those who did the texture task first, one extra person did the haptic condition first. The post-experiment definition of groups by SR inevitably changed the counterbalancing. Of the 28 participants analyzed, 12 did the texture task first (7 did the visual condition first) and 16 did the shape task first (8 did the visual condition first).

In each trial of the shape task, participants sequentially studied two objects (Shape 1 and Shape 2) with different texture schemes and were instructed to remember the shape of each. These instructions were intended to draw attention away from the experimental manipulation of texture. They were then sequentially presented, in pseudorandom order, with the original objects, and two new objects that had the same shape as the originals but interchanged texture schemes as described above. The task was to decide whether each of the objects had the same shape as Shape 1 or Shape 2 (Fig. 1A). There were ten such trials in each modality condition, resulting in 20 discriminations where the texture was unchanged and 20 where it was different. The texture task was exactly the same except for the requirement to remember the sequence of textures on the objects, instead of their shape (Fig. 1B). In both tasks, 10 s was allowed for haptic study and 5 s for visual study; this 2:1 ratio is consistent with prior studies (Newell et al., 2001; Lacey & Campbell, 2006; Lacey et al., 2007, 2009a, 2010) and was designed to avoid putting haptics at a disadvantage compared to vision. Response times during the test phase were unrestricted.
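The trial structure of the shape task, and the resulting count of 20 texture-unchanged and 20 texture-changed discriminations per modality condition, can be illustrated with a minimal sketch. The function names and scheme labels are hypothetical, and a plain shuffle stands in for the pseudorandom test order, whose exact constraints are not described in the text.

```python
import random


def build_shape_trial(rng: random.Random):
    """Return the study pair and the shuffled test items for one trial.

    Each test item is (shape, texture scheme, texture_changed).
    """
    # Study phase: two objects with different texture schemes.
    study = [("Shape 1", "scheme A"), ("Shape 2", "scheme B")]
    # Copy pair: same shapes, texture schemes exchanged.
    copies = [("Shape 1", "scheme B"), ("Shape 2", "scheme A")]
    test = [(shape, scheme, False) for shape, scheme in study]
    test += [(shape, scheme, True) for shape, scheme in copies]
    # Assumption: a simple shuffle approximates the pseudorandom order.
    rng.shuffle(test)
    return study, test


def count_discriminations(n_trials: int, seed: int = 0):
    """Count texture-unchanged and texture-changed test items over n_trials."""
    rng = random.Random(seed)
    unchanged = changed = 0
    for _ in range(n_trials):
        _, test = build_shape_trial(rng)
        for _shape, _scheme, texture_changed in test:
            if texture_changed:
                changed += 1
            else:
                unchanged += 1
    return unchanged, changed


# Ten trials per modality condition, as in Experiment 1.
print(count_discriminations(10))  # (20, 20)
```

The texture task has the same structure with the roles of shape and texture reversed.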

Participants sat facing the experimenter at a table on which objects were placed for both visual and haptic exploration. The table was 86 cm high so that, for visual exploration, the viewing distance was 30–40 cm and the viewing angle was approximately 35–45° from the vertical. For visual presentation, the objects were placed on the table oriented along their vertical axes (Fig. 1). Participants were free to move their head and eyes when looking at the objects but were not allowed to get up and move around them. For haptic exploration, participants felt the objects behind an opaque screen. Each object was placed into the participants’ hands, oriented along its vertical axis (Fig. 1). Participants used both hands, moving them freely over the object, but were instructed not to re-orient the object. The experimenter monitored each participant and did not observe any non-compliance.

Results

Classification by OSIQd gave 19 object imagers (mean OSIQd .7; SD .6) and 16 spatial imagers (-.5; .3). An omnibus ANOVA (between-subject: imagery type; within-subject: task, object property change, modality) did not show the crucial imagery type x task interaction (F1,33 = .001, p = .9). Further analysis, using three-way repeated-measures ANOVA (RM-ANOVA) with factors of task (shape, texture), object property change (present, absent), and modality (visual, haptic) on each group separately, showed a main effect of object property change for both object (F1,18 = 25.8, p < .001) and spatial (F1,15 = 11.0, p = .005) imagers. Spatial imagers also showed a main effect of modality (F1,15 = 7.5, p = .01) but there were no other significant effects or interactions for either group.

Classification by SR gave 17 object imagers (8 changed classification), 11 spatial imagers (6 changed classification), and 7 undecided (3 had been classed as spatial, and 4 as object, imagers). These 7 may have been verbalizers rather than visualizers (Kozhevnikov et al., 2002). We analyzed the 28 individuals stating a clear preference with the same omnibus ANOVA as above. Here, the imagery type x task interaction was significant (F1,33 = 12.4, p = .002) and was analyzed further using the same RM-ANOVAs as above separately for each group.

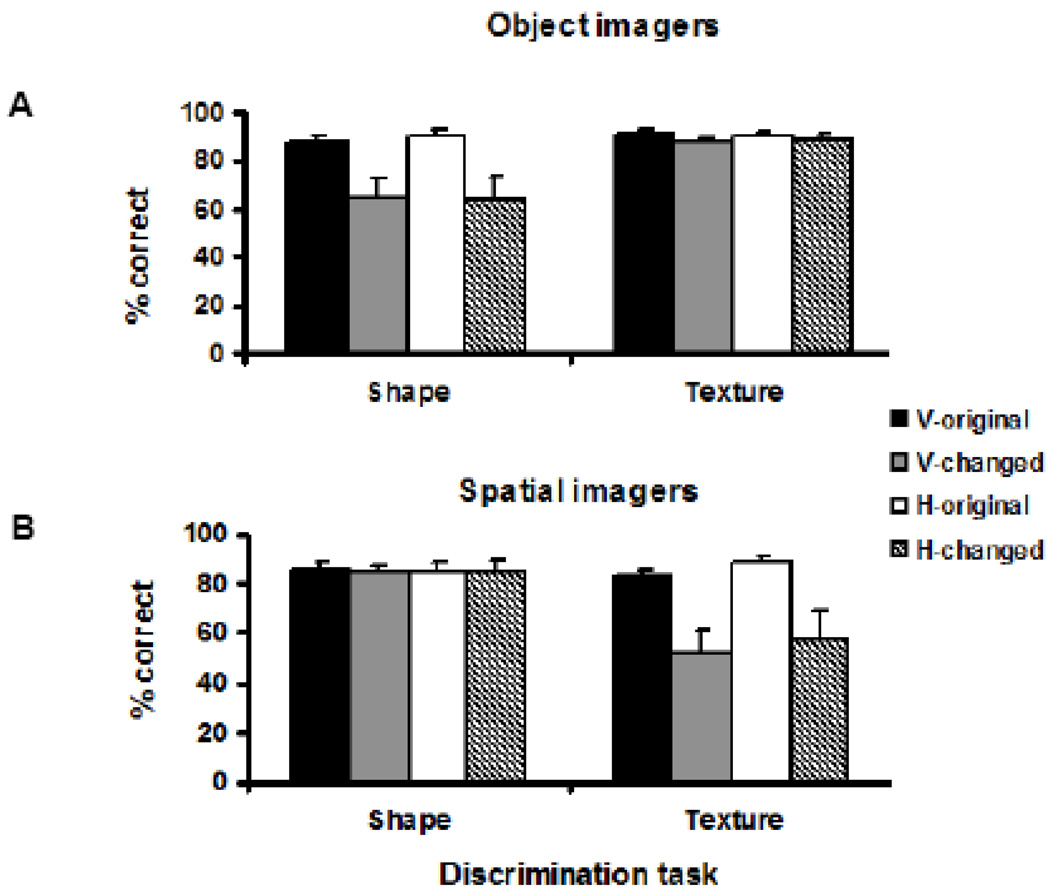

For the object imagers (Fig. 2A), there was a main effect of task such that they were better at texture than shape discrimination (F1,16 = 7.5, p = .01) and a main effect of object property change (F1,16 = 13.6, p = .002), but there was no significant effect of modality (F1,16 = .2, p = .7). A significant interaction of task x object property change (F1,16 = 8.0, p = .01) was because the effect of property change was confined to the shape task. Object imagers could discriminate texture equally well whether shape changed or not (t16 = 2.0, p = .06) but a change in texture impaired their shape discrimination (t16 = 3.3, p = .005). None of the other interactions was significant.

Figure 2.

When classified by self-report, (A) object imagers (n=17) could discriminate texture across shape changes but not shape across texture changes; (B) spatial imagers (n=11) could discriminate shape across texture changes but not texture across shape changes.

For the spatial imagers (Fig. 2B), there was a main effect of task such that they were better at shape than texture discrimination (F1,10 = 5.0, p = .049) and a main effect of object property change (F1,10 = 10.5, p = .009), but there was no significant effect of modality (F1,10 = 1.7, p = .2). There was a significant interaction of task x object property change (F1,10 = 8.6, p = .01): the effect of property change was confined to the texture task. Spatial imagers could discriminate shape equally well whether texture changed or not (t10 = .5, p = .6) but could not discriminate texture if there was a change in shape (t10 = 3.1, p = .01). None of the other interactions was significant.
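The paired comparisons reported above (for example, t16 for the 17 object imagers and t10 for the 11 spatial imagers) have n − 1 degrees of freedom because each participant contributes one difference score. A minimal sketch of the paired t statistic follows; the function name is hypothetical, the data are made up for illustration and are not the study's data, and the p value is omitted because it would need a t-distribution routine from a statistics library.

```python
import math
from statistics import mean, stdev


def paired_t(condition_a, condition_b):
    """Return (t, df) for a paired comparison of two equal-length samples."""
    if len(condition_a) != len(condition_b) or len(condition_a) < 2:
        raise ValueError("need two samples of equal length >= 2")
    # One difference score per participant.
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    n = len(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("t is undefined when all differences are identical")
    t = mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1


# Made-up scores for four hypothetical participants
# (e.g., number correct with and without a property change).
no_change = [1, 2, 3, 4]
change = [0, 0, 1, 1]
t, df = paired_t(no_change, change)
print(round(t, 3), df)  # 4.899 3
```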

Discussion

A key difference between visual object and spatial imagery is that object imagers, but not spatial imagers, tend to encode surface properties into object representations (Kozhevnikov et al., 2005). Exp 1 showed that, in both vision and touch, shape discrimination was disrupted by texture changes for object imagers but not spatial imagers, whereas texture discrimination was disrupted by shape changes for spatial imagers but not object imagers. Together, these results suggest that object and spatial dimensions exist for haptic, as well as for visual, representations. Next, we examined whether these dimensions also characterize the multisensory representation underlying visuo-haptic cross-modal recognition.

EXPERIMENT 2: MULTISENSORY REPRESENTATIONS

To examine object and spatial dimensions in visuo-haptic multisensory representations, we re-analyzed an earlier study investigating whether surface properties are integrated into these representations (Lacey et al., 2010, Exp 3). In that study, the task required cross-modal shape discrimination across changes in both texture and orientation, view-independence being a characteristic of the multisensory representation underlying cross-modal recognition (Lacey et al., 2007, 2009a; but see Newell et al., 2001; Lawson, 2009). When there was no change in texture, cross-modal shape discrimination was view-independent, indicating that participants could successfully form the multisensory representation, and replicating our previous finding (Lacey et al., 2007). However, a change in texture reduced cross-modal recognition to chance levels whether orientation changed or not. Thus, it was unclear whether the multisensory representation also encoded surface properties. Inspection of the data in the texture-change condition nevertheless revealed two distinct patterns of performance that were absent from the no-change condition. One pattern involved above-chance recognition independent of changes in both orientation and texture; clearly, here, participants were abstracting away from surface properties. In the other, a change in texture reduced performance to chance levels whether or not there was also a change in orientation; clearly, these participants were unable to abstract away from surface properties. In the light of Exp 1, we tested the idea that these patterns reflected individual differences in spatial and object imagery.

Methods

Participants

We contacted participants from Exp 3 of our earlier study (Lacey et al., 2010); twenty took part (4 were not contactable) (9 male; mean age 20 years, 9 months). All gave informed consent and all procedures were approved by the Emory University Institutional Review Board.

Stimuli & Procedure

All participants completed the OSIQ and self-reported their preference for object or spatial imagery. They had all completed the task of shape discrimination across changes in texture and orientation described in Lacey et al. (2010) in both cross-modal conditions: visual study-haptic test and vice versa. Briefly, this was the same as the shape discrimination task used in Exp 1 above, with the following exceptions. Firstly, the objects were placed into the participants’ hands so that the long axis of the object was horizontal. Secondly, in the test phase, each object was presented twice: once in the same orientation as the study phase and once rotated 180° about its long axis, i.e. rotated in depth (in Exp 1, each object was only presented once). Thirdly, there were six trials in each cross-modal condition giving 24 discriminations where the texture was the same and 24 where it was different (6 trials x 2 objects x 2 orientations for each set of 24 discriminations). Thus, there were 48 responses in total, compared to 40 in Exp 1.
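The count of test presentations in each cross-modal condition (6 trials x 2 objects x 2 orientations = 24 per texture condition, 48 in total) can be checked by enumerating the design. This is a sketch only; the constant and function names are hypothetical labels for the factors described above.

```python
from itertools import product

TRIALS = range(1, 7)                    # six trials per cross-modal condition
SHAPES = ("Shape 1", "Shape 2")         # two studied shapes per trial
TEXTURE = ("same", "changed")           # original object vs. texture-swapped copy
ORIENTATION = ("unrotated", "rotated")  # study orientation vs. rotated 180 degrees


def test_presentations():
    """Enumerate every test presentation in one cross-modal condition."""
    return list(product(TRIALS, SHAPES, TEXTURE, ORIENTATION))


presentations = test_presentations()
same_texture = [p for p in presentations if p[2] == "same"]
changed_texture = [p for p in presentations if p[2] == "changed"]
print(len(same_texture), len(changed_texture), len(presentations))  # 24 24 48
```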

Results and discussion

Classification by OSIQd gave 14 object imagers (mean OSIQd .8; SD .5) and 6 spatial imagers (-.5; .4). An omnibus ANOVA (between-subject: imagery type; within-subject: texture, orientation, modality) did not show an imagery type x texture interaction (F1,18 = 1.1, p = .3). This may reflect a lack of power but, in the light of Exp 1 and the clear indication of two types of performance in the data, three-way RM-ANOVA (modality [visual-haptic, haptic-visual], texture [change, no change], and orientation [rotated, unrotated]) was conducted on each group separately. For object imagers, there was a main effect of texture (F1,13 = 14.9, p = .002), such that objects were recognized better when the texture did not change, but there was no effect of a change in orientation (F1,13 = .3, p = .6) and no effect of modality (F1,13 = .3, p = .6). There were no significant interactions. For spatial imagers, there was no main effect for texture (F1,5 = 2.1, p = .2), orientation (F1,5 = 1.0, p = .4), or modality (F1,5 = 1.4, p = .3) and there were no significant interactions.

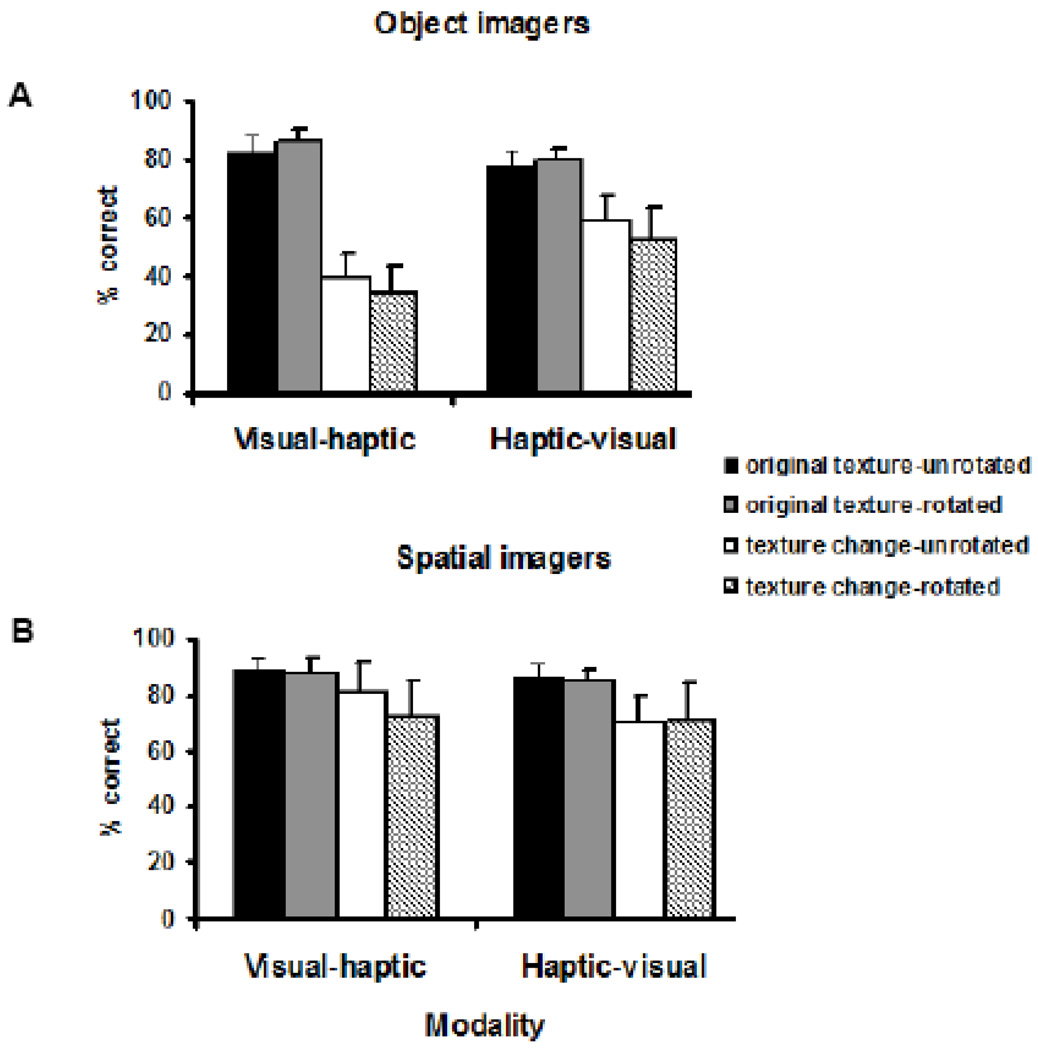

Classification by SR gave 6 spatial imagers (2 changed classification), 8 object imagers (1 changed classification), and 6 undecided (5 had been classed as object imagers and 1 as a spatial imager). These 6 may have been verbalizers rather than visualizers (Kozhevnikov et al., 2002). For the 14 individuals stating a clear preference, an omnibus ANOVA, as above, did not show an imagery type x texture interaction (F1,12 = 2.2, p = .16). Separate three-way RM-ANOVAs, as above, showed that, for object imagers, there was a main effect of texture (F1,7 = 18.0, p = .004), such that objects were recognized better when the texture did not change. But there was no effect of a change in orientation (F1,7 = 1.11, p = .33) and no effect of modality (F1,7 = 1.98, p = .2) (Fig. 3A). There were no significant interactions. For spatial imagers, there was no main effect for texture (F1,5 = 1.06, p = .35), orientation (F1,5 = .99, p = .36), or modality (F1,5 = .83, p = .4) (Fig. 3B) and there were no significant interactions.

Figure 3.

When classified by self-report, (A) object imagers (n=8) could discriminate shape across orientation changes but not if texture also changed; (B) spatial imagers (n=6) could discriminate shape across orientation changes whether texture changed or not.

Because the sample sizes were relatively small, we also conducted non-parametric analyses (Friedman’s ANOVA with Bonferroni-corrected post hoc Wilcoxon signed-rank tests). These confirmed the results of the RM-ANOVAs reported above for both object and spatial imagers whether classified by OSIQd or SR. Because of the disproportionate group sizes for OSIQd, we also pooled across object and spatial imagers to conduct correlations. OSIQd was negatively correlated with overall shape discrimination when there was a change in texture such that the more one preferred object imagery, the more discrimination was disrupted, whether there was also a change in orientation (r = -.6, p = .007) or not (r = -.5, p = .02). When there was no change in texture, OSIQd and performance were uncorrelated (unrotated r = -.4, p = .1; rotated r = .01, p = .9).
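The pooled analysis above relates each participant's OSIQd to discrimination accuracy. As a rough illustration of how such a coefficient is obtained, the sketch below computes a Pearson correlation by hand. The function name is hypothetical, the numbers are invented solely to show a negative relationship and are not the study's data, and the use of Pearson's r is an assumption, since the text does not name the coefficient.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


# Invented values for illustration only: OSIQd and proportion correct
# on texture-change trials for five hypothetical participants.
osiqd = [-1.0, -0.5, 0.0, 0.5, 1.0]
accuracy = [0.9, 0.8, 0.7, 0.6, 0.5]
print(pearson_r(osiqd, accuracy) < 0)  # True: higher OSIQd, lower accuracy
```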

Discussion

When participants were required to discriminate shape across texture and orientation changes, both object and spatial imagers were view-independent and were therefore able to form the multisensory representation underlying cross-modal object recognition. However, object imagers were impaired by a change in texture while spatial imagers were not, and greater preference for object imagery was associated with greater impairment. For the ‘original texture’ objects, note that the 180° rotation reversed the order of the different textures. Object imagers were not impaired by this (Fig. 3A), indicating that shape and texture binding was robust across changes in orientation. We conclude that the multisensory representation involved in cross-modal recognition appears to reflect object-spatial imagery dimensions (although note that Exp 2 did not include a texture discrimination task).

GENERAL DISCUSSION

Exp 1 showed that the object and spatial dimensions demonstrated for visual imagery (Kozhevnikov et al., 2005) also exist in haptic representations. A key difference between these dimensions is whether or not surface properties are encoded into the representation: object imagers are more likely to encode these than spatial imagers (Kozhevnikov et al., 2005). Here, we showed that in both vision and touch, object imagers could ignore changes in shape in order to discriminate texture but were unable to ignore texture changes when discriminating shape, while the reverse was true for spatial imagers. Haptically-derived representations therefore can be inferred to include surface property information for object, but not spatial, imagers.

The idea that object and spatial dimensions apply not only to unisensory but also to multisensory representations aids further understanding of our earlier study of the integration of structural and surface properties (Lacey et al., 2010). In cross-modal (visual-haptic and haptic-visual) discrimination of shape across changes in both texture and orientation, both object and spatial imagers were view-independent, replicating Lacey et al. (2007, 2009a). However, texture changes produced greater disruption with increasing preference for object imagery and less disruption with increasing preference for spatial imagery (Exp 2, present study). Thus, the extent to which the multisensory view-independent representation supporting cross-modal object recognition includes surface property information depends on imagery preference. It appears, then, that the multisensory representation has some features that are stable across individuals, like view-independence, and some that vary across individuals, such as integration of surface property information.

The OSIQd and SR classifications did not always agree and only SR predicted performance in both experiments. Both are based on the same definition of object and spatial imagery, and both are indirect measures. The SR measure may have resulted in classifications that better predicted performance for two reasons. Firstly, it explicitly explained the difference between object and spatial imagery while the OSIQ does not. Secondly, of the 30 OSIQ items, most relate to the pictorial vs. schematic nature of images: only 6 items mention a surface property (color) and only 3 of these refer to the inclusion of surface properties in an image, the crucial issue in the present experiments. Thus, the OSIQ is weighted more towards the format of images than their content. By contrast, the SR measure gave approximately equal weight to these two factors. SR may thus have produced better classification because it provided participants with more explicit information on which to base their introspective reports, of particular relevance to the present study. This does not imply that SR is better than the OSIQ (it was not, itself, a perfect indicator of performance and, in fact, OSIQd did predict performance in Exp 2), but that due regard should be paid to the experimental manipulation of interest and what measure will best reflect it. An important avenue for further research will be development of haptic and modality-independent versions of the OSIQ for use in multisensory research where imagery preferences are of interest (for example, see Lacey et al., 2009b; Pecher et al., 2009), or where blind populations are studied.

It is important to note that imagery preferences are relative, rather than absolute: object imagers do not completely lack spatial imagery ability, and vice versa. Spatial imagers performed as accurately on discriminating texture schemes, provided there was no change in shape, as they did on shape discrimination itself. In the same way, object imagers could perform as well on shape discrimination when there was no change in texture as they did on texture discrimination itself. In other words, spatial imagers can encode textures, as object imagers can encode shape, when required, but there is a trade-off over which aspects of an object are more salient. Individual preference for object or spatial imagery may arise early in development due to capacity limitations of visual attention (Kozhevnikov et al., 2010). Further research is required to examine the role of visual and haptic attentional resources in the development of haptic object and spatial imagery. Here, it would be instructive to compare healthy controls with the early- and late-blind in order to assess the effects of differing amounts of visual, compared to haptic, experience.

To conclude, we have shown that object and spatial dimensions occur in both visual and haptic unisensory, and visuo-haptic multisensory, representations. It remains to be explored whether object-spatial preferences are stable across modalities, i.e., that a visual object or spatial imager is also a haptic and cross-modal object or spatial imager. Exp 1 suggests that imagery dimensions may be stable across modalities, at least for within-modal tasks; but the cross-modal position is less clear, as Exp 2 involved different participants and examined only shape discrimination across texture changes. Further work, including the development of haptic and multisensory imagery scales, is required to investigate this.

ACKNOWLEDGMENTS

Support to KS from the National Eye Institute, the National Science Foundation, and the Veterans Administration is gratefully acknowledged.

APPENDIX A

Self-report instructions

Research has shown that when people use visual imagery – imagining something in their mind’s eye – they usually prefer one of two kinds of imagery, either object imagery or spatial imagery.

Object imagers tend to have detailed pictorial images of objects and scenes that tend to concentrate on the literal appearance of objects, including color, texture, patterns, brightness etc, as well as shape. By contrast, spatial imagers tend to have more schematic images that concentrate on the shape of objects, their spatial layout in a scene, the spatial relationships between parts of an object, and how these are transformed. An easy way to think of this difference is the contrast between a photograph (object imagery) and blueprint or diagram (spatial imagery).

With this in mind, which kind of imagery do you think that you typically prefer: Are you an object imager or a spatial imager?

REFERENCES

- Blajenkova O, Kozhevnikov M, Motes MA. Object-spatial imagery: a new self-report imagery questionnaire. Appl Cognit Psychol. 2006;20:239–263.

- Kozhevnikov M, Blazhenkova O, Becker M. Trade-off in object versus spatial visualization abilities: Restriction in the development of visual-processing resources. Psychon B Rev. 2010;17:29–35. doi: 10.3758/PBR.17.1.29.

- Kozhevnikov M, Hegarty M, Mayer RE. Revising the visualiser-verbaliser dimension: evidence for two types of visualisers. Cognition Instruct. 2002;20:47–77.

- Kozhevnikov M, Kosslyn SM, Shephard J. Spatial versus object visualisers: a new characterisation of cognitive style. Mem Cognition. 2005;33:710–726. doi: 10.3758/bf03195337.

- Lacey S, Campbell C. Mental representation in visual/haptic crossmodal memory: evidence from interference effects. Q J Exp Psychol. 2006;59:361–376. doi: 10.1080/17470210500173232.

- Lacey S, Hall J, Sathian K. Are surface properties integrated into visuo-haptic object representations? Eur J Neurosci. 2010;30:1882–1888. doi: 10.1111/j.1460-9568.2010.07204.x.

- Lacey S, Pappas M, Kreps A, Lee K, Sathian K. Perceptual learning of view-independence in visuo-haptic object representations. Exp Brain Res. 2009a;198:329–337. doi: 10.1007/s00221-009-1856-8.

- Lacey S, Peters A, Sathian K. Cross-modal object representation is viewpoint-independent. PLoS ONE. 2007;2:e890. doi: 10.1371/journal.pone.0000890.

- Lacey S, Sathian K. Multisensory object representation: insights from studies of vision and touch. Prog Brain Res. 2011 doi: 10.1016/B978-0-444-53752-2.00006-0. in press.

- Lacey S, Tal N, Amedi A, Sathian K. A putative model of multisensory object representation. Brain Topogr. 2009b;21:269–274. doi: 10.1007/s10548-009-0087-4.

- Lawson R. A comparison of the effects of depth rotation on visual and haptic three-dimensional object recognition. J Exp Psychol: Human. 2009;35:911–930. doi: 10.1037/a0015025.

- Newell FN, Ernst MO, Tjan BS, Bülthoff HH. View dependence in visual and haptic object recognition. Psychol Sci. 2001;12 doi: 10.1111/1467-9280.00307. 37-4.

- Pecher D, van Dantzig S, Schifferstein HNJ. Concepts are not represented by conscious imagery. Psychon B Rev. 2009;16:914–919. doi: 10.3758/PBR.16.5.914.