Abstract

The factors responsible for interindividual differences in speech-understanding ability among hearing-impaired listeners are not well understood. Although audibility has been found to account for some of this variability, other factors may play a role. This study sought to examine whether part of the large interindividual variability of speech-recognition performance in individuals with severe-to-profound high-frequency hearing loss could be accounted for by differences in hearing-loss onset type (early, progressive, or sudden), age at hearing-loss onset, or hearing-loss duration. Other potential factors including age, hearing thresholds, speech-presentation levels, and speech audibility were controlled. Percent-correct (PC) scores for syllables in disyllabic words, which were either unprocessed or lowpass filtered at cutoff frequencies ranging from 250 to 2,000 Hz, were measured in 20 subjects (40 ears) with severe-to-profound hearing losses above 1 kHz. For comparison purposes, 20 normal-hearing subjects (20 ears) were also tested using the same filtering conditions and a range of speech levels (10–80 dB SPL). Significantly higher asymptotic PCs were observed in the early (≤4 years) hearing-loss onset group than in both the progressive- and sudden-onset groups, even though the three groups did not differ significantly with respect to age, hearing thresholds, or speech audibility. In addition, significant negative correlations between PC and hearing-loss onset age, and positive correlations between PC and hearing-loss duration were observed. These variables accounted for a greater proportion of the variance in speech-intelligibility scores than, and were not significantly correlated with, speech audibility, as quantified using a variant of the articulation index. Although the lack of statistical independence between hearing-loss onset type, hearing-loss onset age, hearing-loss duration, and age complicates and limits the interpretation of the results, these findings indicate that variables other than audibility can influence speech intelligibility in listeners with severe-to-profound high-frequency hearing loss.

Keywords: high-frequency hearing loss, hearing-loss onset age, hearing-loss duration, speech intelligibility

Introduction

Hearing-impaired (HI) individuals often vary considerably in their ability to understand speech. The factors responsible for this interindividual variability are not entirely clear. While audibility plays an important role (Dubno et al. 1989b; Rankovic 1991; Hogan and Turner 1998; Rankovic 1998, 2002), listeners with severe hearing losses have often been found not to benefit from amplification, even when audibility calculations indicate that they should (Pavlovic 1984; Kamm et al. 1985; Dubno et al. 1989a; Ching et al. 1998; Hogan and Turner 1998; Baer et al. 2002).

Studies of cochlear-implant (CI) subjects indicate that, for these subjects, whether hearing-loss occurred before or after the acquisition of language, and how long the duration of deafness was prior to implantation, are two of the most significant predictors of speech-recognition performance (Tong et al. 1988; Busby et al. 1992, 1993; Dawson et al. 1992; Gantz et al. 1993; Hinderink et al. 1995; Kessler et al. 1995; Shipp and Nedzelski 1995; Blamey et al. 1996; Okazawa et al. 1996; Rubinstein et al. 1999; Van Dijk et al. 1999; Friedland et al. 2003; Leung et al. 2005; Green et al. 2007; Gantz et al. 2009). In general, the results show that individuals in whom hearing-loss occurred postlingually achieve statistically higher speech-recognition scores than individuals who became deaf prior to the acquisition of language (Tong et al. 1988; Busby et al. 1993; Hinderink et al. 1995; Okazawa et al. 1996), and that the duration of deafness correlates negatively with speech-recognition performance (Gantz et al. 1993; Blamey et al. 1996; Rubinstein et al. 1999; Gantz et al. 2009). These findings have been variously explained in terms of neural plasticity and detrimental effects of sensory deprivation versus beneficial effects of sensory stimulation on auditory pathways involved in speech recognition, and in terms of perceptual learning of speech cues.

While these findings concern specifically CI subjects, age at hearing-loss onset and hearing-loss duration may influence speech-recognition performance in non-implanted HI individuals as well. Firstly, hearing losses incurred before the acquisition of language may impede the development of speech-recognition skills, so that subjects with congenital or early-onset hearing loss are less likely to achieve as high levels of speech understanding as individuals with similar hearing thresholds but later-onset hearing loss. Conversely, individuals in whom the hearing loss is already in place before language acquisition may develop long-term memory traces (or “templates”) that are more adapted to decoding the impoverished and distorted speech signals that they receive. As a result, these individuals might be better able to recognize speech than subjects who have similar audiograms, but who incurred a hearing loss at a later age, at which point their central auditory system was perhaps less plastic. In addition, speech-recognition performance could be related statistically to the age or type (early versus late) of hearing-loss even if it does not directly depend on these variables. For instance, early hearing losses might be more frequently associated with certain types of peripheral or central damage, which impact speech-perception performance in a different way than other types of peripheral damage more frequently associated with late-onset losses. Similarly, hearing-loss duration could be a factor of speech-recognition performance if, for example, having a relatively stable or slow-changing hearing-loss allowed HI individuals to progressively adjust their listening strategies to optimally decode the impoverished and distorted speech signals that they receive (Rhodes 1966; Niemeyer 1972). 
On the other hand, progressive degeneration of neural pathways following peripheral insults could result in poorer speech-recognition performance in the individuals in whom a hearing-loss has been present for several years than in individuals in whom peripheral damage was incurred more recently, even if these individuals have similar pure-tone audiograms (Kujawa and Liberman 2009).

Accordingly, this study sought to test whether hearing-loss onset age, onset type, and hearing-loss duration can help to explain (in a statistical sense) the large variability in speech-recognition scores in individuals with severe-to-profound high-frequency hearing loss.

Methods

Subjects

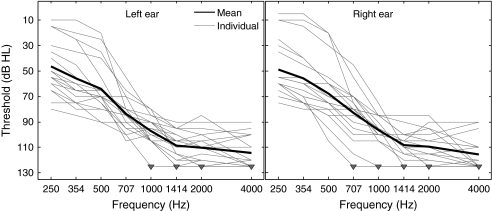

Twenty HI subjects (11 female, nine male; ages, 17–71 years; median age = 44.5 years) and 20 normal-hearing (NH) subjects (seven female, 13 male; ages, 20–32 years; median age = 23 years) took part in the study. All NH subjects had pure-tone hearing thresholds lower than 20 dB HL at octave frequencies between 250 and 8,000 Hz. The HI subjects had severe-to-profound hearing loss at and above 1 kHz. Most of them also had some degree of hearing loss (ranging from mild to profound) below 1 kHz. Figure 1 shows the mean and individual hearing thresholds of the HI listeners between 250 and 4,000 Hz, separately for the left and right ears. For the purpose of this study, thresholds were measured in half-octave steps between 250 and 2,000 Hz; these frequencies were the same as the lowpass-filter cutoff frequencies (CFs) used in the speech tests described below. The downward-pointing triangles in Figure 1 indicate “unmeasurable” thresholds. Thresholds were considered unmeasurable when the subject did not detect the pure-tone stimulus at the maximum sound level that could be produced by the audiometer at the considered test frequency, with contralateral masking to avoid cross-over effects. This maximum level varied from 105 to 120 dB HL depending on the test frequency and channel. It was equal to 120 dB HL between 600 and 2,000 Hz. For data-visualization and data-analysis purposes, unmeasurable thresholds were set arbitrarily to a value of 125 dB HL. Three of the 40 ears tested in this study had unmeasurable thresholds at 1 kHz. At 4 kHz, 17 ears had unmeasurable thresholds. Air-bone gaps were never greater than 10 dB at any of the frequencies tested, indicating that the hearing losses were sensorineural rather than conductive.

FIG. 1.

Mean and individual pure-tone hearing thresholds in HI subjects. Thick lines, mean thresholds across all HI subjects. Light lines, individual data. Downward-pointing triangles indicate unmeasurable thresholds.

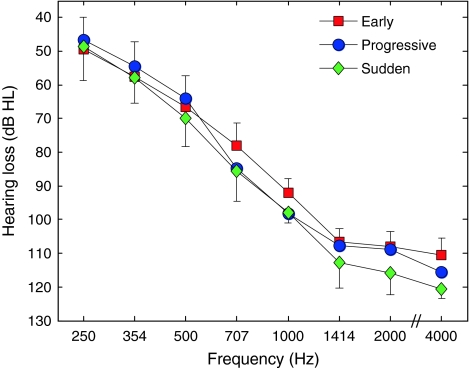

Table 1 provides additional individual information, including gender, age, and pure-tone threshold averages over two frequency ranges (250–710 Hz and 1–4 kHz). Based on the information provided by the subjects concerning the history of their hearing loss and, when available, audiometric records, ears were categorized into three groups corresponding to three types of hearing-loss onset: “early” (ten ears), which was defined as congenital hearing loss or hearing loss incurred at or before the age of 4, with little or no change in pure-tone thresholds thereafter; “progressive” (23 ears), which was defined as hearing loss having developed over the course of several years (in some cases, several decades), after the age of 4; and “sudden” hearing loss (seven ears), which was defined as abrupt hearing loss having occurred after the age of 4 (to avoid overlap with the “early” group). With one exception (subject 18), the type of hearing loss was the same for both ears in every subject; that subject exhibited a sudden and relatively stable hearing loss in the right ear, but a more progressive hearing loss in the left ear. Subjects 13 and 15 were placed into the “progressive” category even though hearing loss was diagnosed within their first year of life, because their hearing loss progressed over the course of several years, well beyond the fourth year of life. We acknowledge that these grouping criteria are somewhat arbitrary, and that any conclusion concerning the effects of hearing-loss type based on this grouping must be qualified accordingly. Importantly, the subjects in these three groups were selected in such a way that they had similar age characteristics (“early”: 17–64 years, median = 42 years; “progressive”: 30–71, median = 47 years; “sudden”: 37–63, median = 37), and similar pure-tone thresholds within the 250–4,000 Hz range (Fig. 2). 
There was no significant difference in pure-tone thresholds or age between the groups (group × ear × frequency analysis of variance (ANOVA) on thresholds with group as a between-subject factor and ear and frequency as within-subject factors: F(2, 17) = 0.386, P = 0.686; one-way ANOVA on age with group as a between-subject factor: F(2, 19) = 0.73, P = 0.496).

TABLE 1.

Individual participant information

| Subject | Gender | Age (years) | Ear | Hearing-loss onset type | Hearing-loss duration (years) | HA use (years) | Speech level (dB SPL) | PTA 250–710 Hz (dB HL) | PTA 1–4 kHz (dB HL) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | M | 17 | R | Early | 17 | 14 | 100 | 64 | 98 |
|  |  |  | L | Early | 17 | 14 | 100 | 65 | 93 |
| 2 | M | 29 | R | Early | 28 | 18 | 100 | 79 | 104 |
|  |  |  | L | Early | 28 | 18 | 100 | 76 | 101 |
| 3 | M | 42 | R | Early | 39 | 38 | 100 | 75 | 98 |
|  |  |  | L | Early | 39 | 38 | 100 | 71 | 105 |
| 4 | F | 54 | R | Early | 50 | 45 | 100 | 70 | 110 |
|  |  |  | L | Early | 50 | 45 | 100 | 68 | 109 |
| 5 | F | 64 | R | Early | 64 | 10 | 80 | 25 | 108 |
|  |  |  | L | Early | 64 | 0 | 80 | 36 | 119 |
| 6 | F | 59 | R | Progressive | 4 | 4 | 100 | 63 | 118 |
|  |  |  | L | Progressive | 4 | 4 | 90 | 73 | 120 |
| 7 | M | 69 | R | Progressive | 9 | 3 | 100 | 53 | 96 |
|  |  |  | L | Progressive | 9 | 3 | 100 | 64 | 100 |
| 8 | F | 30 | R | Progressive | 15 | 0 | 90 | 65 | 110 |
|  |  |  | L | Progressive | 15 | 5 | 90 | 65 | 118 |
| 9 | F | 34 | R | Progressive | 15 | 0 | 80 | 24 | 96 |
|  |  |  | L | Progressive | 15 | 0 | 90 | 29 | 89 |
| 10 | M | 68 | R | Progressive | 18 | 14 | 100 | 71 | 95 |
|  |  |  | L | Progressive | 18 | 14 | 100 | 78 | 95 |
| 11 | F | 30 | R | Progressive | 18 | 17 | 100 | 84 | 110 |
|  |  |  | L | Progressive | 18 | 5 | 100 | 89 | 108 |
| 12 | F | 53 | R | Progressive | 28 | 18 | 100 | 76 | 100 |
|  |  |  | L | Progressive | 28 | 18 | 100 | 83 | 100 |
| 13 | M | 31 | R | Progressive | 31 | 21 | 80 | 26 | 105 |
|  |  |  | L | Progressive | 31 | 21 | 80 | 30 | 104 |
| 14 | F | 47 | R | Progressive | 35 | 2 | 100 | 90 | 125 |
|  |  |  | L | Progressive | 35 | 2 | 90 | 46 | 118 |
| 15 | F | 38 | R | Progressive | 38 | 11 | 100 | 83 | 116 |
|  |  |  | L | Progressive | 38 | 11 | 100 | 65 | 111 |
| 16 | M | 71 | R | Progressive | 41 | 18 | 100 | 51 | 111 |
|  |  |  | L | Progressive | 41 | 18 | 100 | 60 | 116 |
| 17 | F | 37 | R | Sudden | 6 | 0 | 80 | 49 | 108 |
|  |  |  | L | Sudden | 6 | 0 | 100 | 71 | 124 |
| 18 | M | 60 | R | Sudden | 22 | 12 | 100 | 74 | 118 |
|  |  |  | L | Progressive | 22 | 12 | 95 | 71 | 115 |
| 19 | F | 37 | R | Sudden | 26 | 9 | 100 | 68 | 99 |
|  |  |  | L | Sudden | 26 | 0 | 100 | 69 | 100 |
| 20 | M | 63 | R | Sudden | 7 | 7 | 100 | 86 | 125 |
|  |  |  | L | Sudden | 44 | 3 | 100 | 43 | 110 |

The numbers in the first column refer to subject numbers. “HA use” refers to the number of years of hearing-aid use. “PTA 250–710 Hz” and “PTA 1–4 kHz” indicate pure-tone thresholds averaged over the indicated frequency range.

FIG. 2.

Mean pure-tone hearing thresholds for the three groups of hearing-loss onset type. Color is used to indicate group, as indicated in the key. The same group color coding scheme is used in all figures: red for the early-onset group; blue for the progressive-onset group; green for the sudden-onset group. Error bars show standard error of the mean across ears within each group. To avoid clutter, error bars are only shown for the lowest and highest mean at each CF.

Because the three groups of hearing-impaired listeners did not differ significantly with respect to age but did differ with respect to hearing-loss onset age (which was lower, by definition, for the early-onset group than for the other two groups), we expected them to also differ with respect to hearing-loss duration, which was equal to the listener’s age minus the hearing-loss onset age. Perhaps owing to interindividual variability, the main effect of group on hearing-loss duration in a two-way ANOVA with group as a between-subject factor and ear as a within-subject factor failed to reach statistical significance (F(2, 17) = 3.19, P = 0.067). However, a planned contrast analysis comparing hearing-loss duration in the early-onset group versus the mean hearing-loss duration across the other two groups showed a significant difference (t = 18.21, P = 0.022). Hearing-loss duration was longer, by 18 years on average, in the early-onset group than in the other two groups. Accordingly, tests were performed to determine whether differences in hearing-loss duration could account for differences in speech-recognition scores across the three groups; these tests are described in “Data analysis” and “Results”.

Table 1 additionally lists the duration of the hearing loss, which was determined by subtracting the age at which hearing-loss was diagnosed or suspected to have started, as indicated in the subject’s history, from the subject’s age at the time of testing. For 14 out of the 20 subjects, the cause of the hearing loss was unknown. For the remaining six subjects, the suspected causes of hearing loss were as follows: malformation of the vestibular aqueduct (subject 1), electrocution (subject 3), Pott’s disease (subject 5), Usher’s syndrome (subject 15), otitis media or genetic (subject 19), and head injury (subject 20).

At the time of testing, all but six of the subjects were wearing hearing aids in both ears on a regular basis in their daily life. Out of the six subjects who were not wearing hearing aids in both ears on a regular basis, one (subject 9) was never fitted, one (subject 13) had been fitted bilaterally but did not wear his hearing aids on a regular basis, one (subject 17) tried using hearing aids twice but never succeeded in wearing them for a long period of time, and the remaining three were wearing a hearing aid in one ear only (the right ear for subjects 5 and 19, the left ear for subject 8). The number of years of hearing-aid use for each ear is indicated in Table 1. The subjects did not wear their hearing aids during any of the tests performed in this study.

In accordance with the Declaration of Helsinki, written informed consent was obtained from the subjects prior to their inclusion into the study. The study was approved by the local Ethics Committee (CPP Sud-Est IV, Centre Leon Berard de Lyon, France, no. ID RCB: 2008-A01479-46).

Stimuli and procedure

Speech intelligibility was measured using 40 lists of ten disyllabic French words (Fournier 1951). These lists may be regarded as the French-language equivalent of the American Spondaic lists. The words were uttered by a male talker and recorded on a CD, sampled at 44.1 kHz with 16-bit quantization. They were lowpass filtered digitally in the frequency domain using the FFT filter of the Adobe Audition software, with a stopband attenuation of 70 dB. Seven lowpass CFs, corresponding to half-octave steps between 250 and 2,000 Hz (i.e., 250, 354, 500, 707, 1,000, 1,414, and 2,000 Hz), were used. Together with the “unprocessed” condition, this yielded eight test conditions and a total of 3,200 stimuli (eight processing conditions × 40 lists × ten words per list). To facilitate the description of the results, hereafter and in the figures, the “unprocessed” condition is referred to as another CF condition, with CF = 22,050 Hz, i.e., half of the rate at which the digitized word lists were sampled.
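As a quick check of the stimulus set described above, the following minimal Python sketch regenerates the half-octave cutoff frequencies and the stimulus count. The function name `half_octave_cutoffs` is hypothetical and is not part of the study's materials; the sketch only reproduces the arithmetic stated in the text.

```python
def half_octave_cutoffs(f_low=250.0, f_high=2000.0):
    """Return cutoff frequencies (Hz) spaced half an octave apart, from f_low to f_high."""
    cutoffs = []
    k = 0
    while True:
        f = f_low * 2 ** (k / 2)  # each step multiplies the frequency by sqrt(2)
        if f > f_high * 1.001:    # small tolerance against rounding error
            break
        cutoffs.append(f)
        k += 1
    return cutoffs


cutoffs = half_octave_cutoffs()
rounded = [round(f) for f in cutoffs]

# Seven lowpass conditions plus the "unprocessed" condition.
n_conditions = len(cutoffs) + 1
# Conditions x 40 lists x ten words per list.
n_stimuli = n_conditions * 40 * 10
```

Rounding the computed values to the nearest hertz gives the nominal CFs quoted in the text.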

The rationale for testing multiple CF conditions was as follows. Firstly, we reasoned that relationships between speech-recognition performance and other variables of interest in this study, such as hearing-loss duration or hearing-loss onset type, might be CF-dependent. Previous studies in which intelligibility has been measured for lowpass-filtered speech in listeners with high-frequency hearing losses (e.g., Murray and Byrne 1986; Hogan and Turner 1998; Vickers et al. 2001) have found that in some listeners, performance varied nonmonotonically with CF. In these listeners, surprisingly, the wideband condition did not always yield the highest performance. While the reasons for this effect are not completely known, one hypothesis is that, in frequency regions where the hearing loss is severe or profound, the speech signal received by the central auditory system is so distorted that it provides no useful information to the listener, and actually interferes with the processing of speech information contained in other (usually, lower) frequency regions, where the hearing loss is less marked. The fact that not all listeners show evidence of this effect may be due, not just to interindividual differences in hearing-loss characteristics (as reflected in pure-tone thresholds and audibility calculations), but also, to more central factors, such as differences in the ability to “ignore” peripheral channels in which information is too distorted to be useful, or a better ability to extract information from a distorted peripheral signal. To the extent that these factors are related to hearing-loss onset age or to hearing-loss duration, correlations between these variables and speech-recognition performance should be observed specifically in wideband conditions, or in conditions where the CF of the lowpass filter is sufficiently high for the speech signal to contain energy in frequency regions where peripheral information is highly distorted. 
In addition, testing multiple filtering conditions yielded a wider range of performance levels within a given listener, and increased the likelihood of capturing relationships between performance and other variables. A third reason for testing multiple lowpass-filtering conditions in HI listeners was that several of these listeners were candidates for implantation with a short-electrode implant and electro-acoustic stimulation. Therefore, it seemed both interesting and potentially important to measure the ability to understand speech for different hypothetical scenarios of preservation of low-frequency acoustic hearing in these listeners (Moore et al. 2010), and to investigate whether this ability was related to hearing-loss duration or hearing-loss onset type. For NH listeners, testing multiple CF conditions allowed us to derive frequency-importance functions specific to the speech stimuli that were used in this study. These frequency-importance functions were then used to compute articulation-index (AI) predictions in HI listeners, as explained in the next section.

For HI listeners, the “unprocessed” (CF = 22,050 Hz) condition was tested first, at three or four different sound levels: 70, 80, 90, and/or 100 dB SPL, where SPL refers to the root-mean-square (RMS) sound-pressure level computed across all stimuli. The highest level (100 dB SPL) was only tested in listeners who did not find it uncomfortably loud. In this initial test, and in all subsequent tests, the left and right ears of each subject were tested separately and in random order. During this initial phase of the test, the subjects were asked to indicate which listening level they found most comfortable. These levels are listed in Table 1. One subject described the intensity of 90 dB SPL as too low, and that of 100 dB SPL as too loud; for this listener, an intermediate level (95 dB SPL) was used. On average, the level chosen by the subjects as most comfortable was approximately equal to 95 dB SPL. Importantly, average speech-presentation levels did not differ significantly between the three hearing-loss onset groups (F(2, 17) = 0.108, P = 0.898). The average speech-presentation levels were equal to 96 dB (SD = 8.44) for the “early” group, 95 dB (SD = 7.30) for the “progressive” group, and 97 dB (SD = 6.67) for the “sudden” group.

The comfortable level chosen by the subject was kept during subsequent testing, which involved lowpass-filtered speech. However, because the bandwidth of the filtered stimuli decreased with CF, and was always smaller than for the “unprocessed” stimuli, the actual SPL of the filtered stimuli was lower than the SPL of the unprocessed (CF = 22,050 Hz) stimuli, and it decreased with CF—as was the case in previous studies (e.g., Hogan and Turner 1998; Vickers et al. 2001). The NH listeners were tested using eight speech levels, ranging from 10 to 80 dB SPL in steps of 10 dB, for each of the CF conditions.

For HI listeners, two lists of ten words were drawn at random for each stimulus condition. The lists were presented monaurally to the listener, whose task was to repeat the words as they heard them. Because the number of conditions tested in NH listeners was larger (due to the testing of eight speech levels for each CF), in these listeners, only one randomly selected list of ten words was presented in each stimulus condition, and only the right ear was tested. The different lowpass-filtering conditions were tested in the same order in all subjects, starting with the highest CF condition (22,050 Hz) first, then proceeding with the next highest CF (2,000 Hz), and so forth, until the lowest CF (250 Hz) was tested. Our decision to adopt this reverse-order testing procedure, instead of a completely randomized testing procedure, was based on the following considerations. Firstly, we reasoned that presenting all stimuli corresponding to a given filtering condition as a group, rather than interspersed randomly amidst stimuli filtered differently, would alleviate the detrimental influence of lack of familiarity with the filtered stimuli. Secondly, we reasoned that testing the conditions in order of increasing difficulty would promote higher performance in the most difficult conditions, by allowing listeners to adapt progressively to increasing task difficulty. In an attempt to avoid fatigue and attentional lapses, the subjects were encouraged to take breaks whenever they felt the need to do so during the test.

Data analysis

Speech-recognition performance was measured by counting the number of syllables that were correctly repeated by the listener in a given test condition, dividing this number by the total number of syllables presented in that condition (40 for HI listeners and 20 for NH listeners), and multiplying the result by 100 to obtain a percent-correct (PC) score. Our decision to count correctly repeated syllables, rather than correctly repeated words, was motivated by the observation that listeners could often identify correctly one of the two syllables in the word, but were not able to repeat the whole word. In addition, we reasoned that scoring syllables would result in more accurate estimates of PC than scoring whole-words since there were more syllables than words, and the joint probability of correctly identifying both syllables in a word was not equal to the product of the corresponding marginal probabilities.
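The syllable-based scoring rule can be sketched as follows. This is an illustration under the counts stated above (two lists of ten disyllabic words for HI listeners, one list for NH listeners); the function name `percent_correct` and its parameters are hypothetical.

```python
def percent_correct(n_correct_syllables, n_lists, words_per_list=10, syllables_per_word=2):
    """Percent-correct score based on syllables rather than whole words."""
    total = n_lists * words_per_list * syllables_per_word
    if not 0 <= n_correct_syllables <= total:
        raise ValueError("number of correct syllables must lie between 0 and the total")
    return 100.0 * n_correct_syllables / total


# HI listeners: two lists per condition, i.e., 40 syllables.
pc_hi = percent_correct(30, n_lists=2)
# NH listeners: one list per condition, i.e., 20 syllables.
pc_nh = percent_correct(15, n_lists=1)
```

With 40 scored items per condition instead of 20 words, each syllable contributes 2.5 percentage points, which gives a finer-grained score than whole-word scoring.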

Statistical relationships between PC and other variables, including hearing-loss duration, hearing-loss onset age, age, and pure-tone thresholds, were investigated using Pearson’s correlation coefficients. To take into account the lack of statistical independence between variables corresponding to the left and right ears in the same subject, the sampling distribution of Fisher’s z-transformed correlation coefficients (Fisher 1915) under the null hypothesis (no correlation) was computed using a statistical resampling technique (bootstrap) (Efron and Tibshirani 1994). This involved computing the test statistic repeatedly, a large number of times, with the data shuffled randomly on each trial. Measures from the left and right ears were kept in pairs at all times, so that correlations between the two ears were taken into account. Between-ear correlations were reflected in a wider distribution of the test statistic, and in larger confidence intervals, compared with the uncorrelated case. Partial-correlation coefficients (Fisher 1924) were used to test statistical relationships between two variables (e.g., hearing-loss duration and PC), taking into account correlations between these variables and a third variable (e.g., age).
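The resampling idea described above can be illustrated with a minimal Python sketch. It is not the authors' code: it assumes that one variable is shuffled across subjects while the two ears of each subject stay together, and that the p value is taken as the proportion of shuffled |z| values at least as large as the observed one. All function names are hypothetical. The last function gives the standard first-order partial-correlation formula.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fisher_z(r):
    """Fisher z-transform; r is clamped slightly inside (-1, 1) to keep atanh finite."""
    r = max(min(r, 0.999999), -0.999999)
    return math.atanh(r)


def _flatten(pairs):
    return [value for pair in pairs for value in pair]


def paired_shuffle_test(x_pairs, y_pairs, n_resamples=2000, seed=0):
    """Null distribution of z obtained by shuffling subjects, keeping ear pairs intact.

    x_pairs, y_pairs: lists of (left_ear, right_ear) tuples, one tuple per subject.
    Returns the observed z and a two-sided p value.
    """
    rng = random.Random(seed)
    x_flat = _flatten(x_pairs)
    z_obs = fisher_z(pearson_r(x_flat, _flatten(y_pairs)))
    shuffled = list(y_pairs)
    n_extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(shuffled)  # permutes subjects; each (left, right) tuple stays together
        z_null = fisher_z(pearson_r(x_flat, _flatten(shuffled)))
        if abs(z_null) >= abs(z_obs):
            n_extreme += 1
    p_value = (n_extreme + 1) / (n_resamples + 1)
    return z_obs, p_value


def partial_r(r_xy, r_xz, r_yz):
    """First-order partial correlation between x and y, controlling for z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))
```

Because whole subjects are shuffled rather than individual ears, any between-ear correlation is preserved in every resample, which is what widens the null distribution relative to treating the 40 ears as independent.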

To determine whether hearing-loss type was a significant factor in performance, we performed an ANOVA on the PC values measured in HI listeners, using hearing-loss type (early, progressive, and sudden) as an across-subject factor, and CF and ear (left–right) as within-subject factors. To determine whether hearing-loss duration contributed to the differences in PC between the groups, it was entered as a covariate in the ANOVA, a procedure commonly known as “analysis of covariance” (ANCOVA). Because hearing-loss onset type could not be used as both an across-subject factor and a within-subject (across-ear) factor, for the purpose of these two analyses (and these two analyses only), subject 18, who had different types of hearing-loss onset for the left and right ears, was placed into the “sudden” group. However, we verified that the main conclusions of these two analyses were qualitatively unchanged when that subject was placed into the “progressive” group instead. The ANOVA and ANCOVA were preceded by Mauchly’s test of sphericity, and a Huynh–Feldt correction was applied when required. Multiple post hoc comparisons following the finding of significant main effects in the ANOVA were performed according to Fisher’s protected least-significant-difference (LSD) procedure. All statistical tests were performed using untransformed PC data. However, we verified that the conclusions remained unchanged when the analyses were performed instead on arcsine-transformed data.
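For readers unfamiliar with the arcsine transformation mentioned above, a minimal sketch follows. The text does not state which variant was used; the form 2·arcsin(√p), a common variance-stabilizing choice for proportions, is assumed here, and the function name is hypothetical.

```python
import math


def arcsine_transform(pc):
    """Map a percent-correct score (0-100) to 2*arcsin(sqrt(p)), in radians.

    Assumed variant; other scalings of the arcsine transform exist.
    """
    if not 0.0 <= pc <= 100.0:
        raise ValueError("pc must lie between 0 and 100")
    return 2.0 * math.asin(math.sqrt(pc / 100.0))
```

The transform stretches scores near 0 and 100 %, where the variance of a proportion shrinks, so that floor and ceiling effects weigh less on the analysis.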

The influence of speech audibility on the results was evaluated by computing an index inspired by the AI (ANSI 1969), which we denote AI′. To this end, we first used the PC data obtained for different CFs and levels in the NH listeners to derive frequency-importance functions for the speech stimuli used in this study. Frequency-importance functions represent the relative importance of different frequency bands for speech understanding (see, e.g., French and Steinberg 1947). Deriving importance functions for the specific speech stimuli used in this study was necessary because there was no guarantee a priori that the “standard” importance functions for English, which can be found in, e.g., ANSI (1997), were the same as the importance functions for the French disyllabic word lists used here. However, we also performed similar calculations using the standard importance functions provided in ANSI (1997) and found that, although predicted AI values based on these standard functions were generally lower than those obtained using the specific importance functions measured in this study, our main conclusions regarding the relationship between speech audibility and other variables did not depend critically on which of these two functions was used.

To derive frequency-importance functions for the word lists used in this study, we first transformed the PCs measured in NH listeners into d′. This was done by solving the following equation for d′ numerically.

$$\frac{PC}{100} = \int_{-\infty}^{+\infty} \phi(x - d')\,\Phi(x)^{m-1}\,dx \tag{1}$$

In this equation, which follows from the equal-variance Gaussian signal-detection-theory model for the 1-of-m identification task (Green and Dai 1991; Macmillan and Creelman 2005), ϕ and Φ denote the standard normal and cumulative standard normal functions, respectively, and m, the number of response alternatives, was set to 288, the number of possible pairwise combinations of 16 vowels and 18 consonants. The rationale for applying this nonlinear transformation is that, unlike PC, d′ provides an additive measure of band importance. The average importance of frequency band i, 1 < i ≤ n, was estimated as

$$I_i = \frac{\bar{d'}_i - \bar{d'}_{i-1}}{\bar{d'}_n} \tag{2}$$

where $\bar{d'}_{i-1}$ and $\bar{d'}_i$ denote the mean d′s measured in the (i–1)th and ith CF conditions (e.g., 707 Hz and 1,000 Hz), respectively, across all NH listeners and speech levels, and n is the total number of frequency bands (which was equal to the number of CF conditions). For the lowest frequency band (band 1),

$$I_1 = \frac{\bar{d'}_1}{\bar{d'}_n} \tag{3}$$

The denominator in Eqs. 2 and 3 ensures that the total importance across all n frequency bands equals 1 (French and Steinberg 1947).
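The derivation of d′ and of the band-importance values can be sketched in Python as follows. This is an illustration, not the authors' implementation. It assumes the usual form of the 1-of-m equal-variance Gaussian model, in which the proportion correct is the integral of ϕ(x − d′)·Φ(x)^(m−1) over x, and it assumes that the importance of each band is the increment in mean d′ between successive CF conditions divided by the mean d′ of the highest CF condition, so that the importances sum to 1. Function names, the integration grid, and the bisection bounds are arbitrary choices.

```python
import math


def _phi(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def _Phi(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def pc_from_dprime(dprime, m=288, step=0.01, half_width=10.0):
    """Percent correct predicted by the 1-of-m model, by trapezoidal integration."""
    lo = min(0.0, dprime) - half_width
    hi = max(0.0, dprime) + half_width
    n = int(round((hi - lo) / step))
    h = (hi - lo) / n
    total = 0.0
    for k in range(n + 1):
        x = lo + k * h
        weight = 0.5 if k in (0, n) else 1.0
        total += weight * _phi(x - dprime) * _Phi(x) ** (m - 1)
    return 100.0 * total * h


def dprime_from_pc(pc, m=288, lo=-5.0, hi=15.0, n_iter=40):
    """Invert pc_from_dprime by bisection (predicted PC increases monotonically with d')."""
    if pc <= pc_from_dprime(lo, m):
        return lo
    if pc >= pc_from_dprime(hi, m):
        return hi
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if pc_from_dprime(mid, m) < pc:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def band_importance(mean_dprimes):
    """Importance of each band from mean d' values ordered by increasing CF."""
    d_top = mean_dprimes[-1]
    weights = [mean_dprimes[0] / d_top]
    for i in range(1, len(mean_dprimes)):
        weights.append((mean_dprimes[i] - mean_dprimes[i - 1]) / d_top)
    return weights
```

A useful sanity check on the model is that d′ = 0 yields chance performance, 100/m percent, which for m = 288 is about 0.35 %.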

To estimate the audibility of the speech signals presented to the HI listeners, we used a simplified version of the formulas described in ANSI (1997), which was similar to the formula originally proposed in ANSI (1969). Specifically, the mean audibility of the speech signals for band i was computed as:

$$A_i = \max\left[0,\ \min\left(1,\ \frac{L_i + L_0 - (T_i + N_i) + C}{30}\right)\right] \tag{4}$$

In this equation, $L_i$ (dB SPL/Hz) denotes the long-term average spectrum (LTAS) level of the speech in band i, assuming a 0-dB overall SPL; $L_0$ (dB SPL) denotes the actual overall SPL of the speech signals; $T_i$ denotes the absolute threshold (dB HL) at the center frequency of band i, which was estimated by interpolating the pure-tone hearing thresholds measured in the HI listeners; $N_i$ denotes the “reference internal noise spectrum level” for band i, as defined in the ANSI (1997) standard. The max and min operations, and the division by 30, ensure that the result lies between 0 and 1. Finally, the AI-like measure, AI′, was computed as

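$$\mathrm{AI}' = \sum_{i=1}^{n} I_i A_i \tag{5}$$

where $I_i$ is the importance of band i (Eqs. 2 and 3) and $A_i$ is the audibility of band i (Eq. 4).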

The value of the constant, C, in Eq. 4 was originally set to 15, as in ANSI (1997). However, a value of 25 was subsequently found to yield a better overall fit between the psychometric functions for the NH subjects, which were used to determine the specific frequency-band importance functions used in the current study, and the corresponding psychometric functions, which were predicted based on AI′ using the approach described below; therefore, the value of 25 was kept.

To derive PC predictions based on the computed AI′ values, we performed linear regression on the AI′-versus-d′ data of the NH subjects. We then used the resulting linear-regression equation to transform the AI′ values obtained in the HI subjects into d′. Finally, the resulting d′ values were transformed into PC using Eq. 1.
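The first two steps of this procedure can be sketched as follows: an ordinary least-squares line is fitted to (AI′, d′) pairs from NH listeners and then applied to an AI′ value from an HI listener. The function names and the data points are hypothetical and serve only to exercise the code; the final conversion from d′ to PC (Eq. 1) is not shown.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def ai_to_dprime(ai_value, slope, intercept):
    """Map an AI' value to d' using the line fitted on NH data."""
    return slope * ai_value + intercept


# Made-up NH data (AI', d'), chosen to lie exactly on a line.
nh_ai = [0.1, 0.3, 0.5, 0.7, 0.9]
nh_dprime = [0.4, 1.2, 2.0, 2.8, 3.6]
slope, intercept = fit_line(nh_ai, nh_dprime)
hi_dprime = ai_to_dprime(0.4, slope, intercept)
```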

Apparatus

Pure-tone audiometry was performed using a MADSEN Orbiter 922 audiometer and TDH39 earphones. For the speech tests, the signals were burned on CDs and played via a CD player (PHILIPS CD723) connected to the MADSEN audiometer. The signals were presented monaurally to the listeners via TDH39 earphones.

Results

Speech intelligibility as a function of CF and effect of hearing-loss onset type

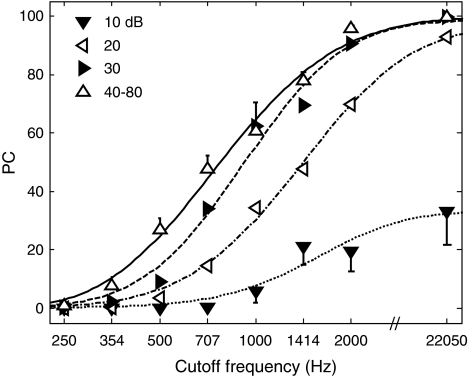

Figure 3 shows mean PC as a function of CF for NH listeners. As expected, PC increased with CF. It also increased with level up to 40 dB SPL, beyond which no consistent improvement in PC with level was observed. Accordingly, PC values for each CF were averaged across the highest five speech levels (40–80 dB SPL). The lines through the data show best-fitting psychometric functions computed as described in the Appendix. These psychometric functions are re-plotted in the next figure to facilitate comparisons between NH and HI listeners.

FIG. 3.

PC as a function of lowpass-filter CF in NH subjects. The different combinations of symbols and line styles indicate different speech levels, as indicated in the key. The curves corresponding to levels between 40 and 80 dB SPL were averaged because they were similar and largely overlapped. Error bars show standard error of the mean across subjects. To avoid clutter, error bars are only shown for the lowest and highest mean at each CF condition.

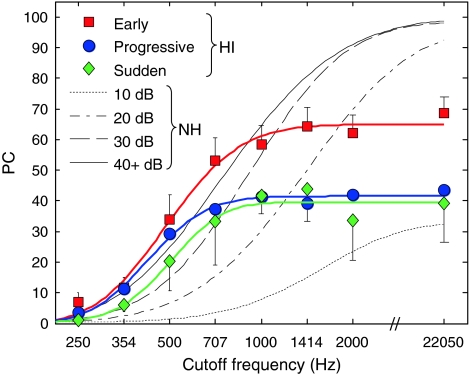

Figure 4 shows mean PC as a function of CF for HI listeners. A group × ear × CF ANOVA, with group as a between-subject factor and ear and CF as within-subject factors, showed a significant main effect of group (F(2, 17) = 4.61, P = 0.025) and a significant interaction between group and CF (Huynh–Feldt-corrected F(14, 119) = 2.10, P = 0.036). Post hoc tests (Fisher’s LSD) comparing the three groups two at a time showed significant differences in PC between the “early” and “progressive” groups (mean difference = 12.94, P = 0.032) and between the “early” and “sudden” groups (mean difference = 20.01, P = 0.010), but not between the “progressive” and “sudden” groups (mean difference = 7.07, P = 0.254). Contrast analyses comparing PC in the “early” group with mean PC across the other two groups, separately for each CF, showed significant differences for CFs of 1,000 Hz and higher (1,000 Hz: mean difference = 18.61, P = 0.038; 1,414 Hz: mean difference = 23.98, P = 0.020; 2,000 Hz: mean difference = 25.21, P = 0.005; 22,050 Hz: mean difference = 28.43, P = 0.005), but not for lower CFs (250 Hz: mean difference = 4.68, P = 0.072; 354 Hz: mean difference = 2.75, P = 0.457; 500 Hz: mean difference = 9.45, P = 0.243; 707 Hz: mean difference = 18.68, P = 0.065).

FIG. 4.

PC as a function of lowpass-filter CF in HI subjects. The data corresponding to the early-, progressive-, and sudden-onset groups are indicated by different colors, using the same scheme as in Figure 2. To facilitate comparisons, the best-fitting psychometric functions of the NH subjects are re-plotted (from Fig. 3) as black lines, with the different line styles corresponding to different speech-presentation levels (as in Fig. 3), as indicated in the key. The psychometric functions for speech-presentation levels of 40 dB SPL or higher largely overlapped and were averaged before display to avoid clutter; the averaged psychometric function is shown, labeled as 40+ dB. Error bars show standard error of the mean across ears within each group. To avoid clutter, error bars are only shown for the lowest and highest mean at each CF.

As mentioned above, the three HI groups did not differ significantly with respect to age, pure-tone thresholds, or speech-presentation levels. However, as also noted earlier, hearing-loss duration was significantly longer on average for the early-onset group than for the other two groups. To determine whether this difference in hearing-loss duration could account for the differences in PC between the groups, hearing-loss duration was entered as a covariate in a group × ear × CF ANOVA, with group as a between-subject factor and ear and CF as within-subject factors. This analysis (ANCOVA) showed no significant difference between the groups (F(2, 16) = 1.84, P = 0.192) and no significant effect of the covariate (F(1, 16) = 3.03, P = 0.101). This outcome indicates that the differences in PC between the HI groups could be accounted for by differences in hearing-loss duration. The three HI groups also differed with respect to mean age at hearing-loss onset, which was lower for the “early” group (1.6 years) than for the “progressive” and “sudden” groups (25.82 and 28.14 years, respectively). However, since hearing-loss onset age was one of the criteria used to define the groups, this variable could not be meaningfully used as a covariate in the ANOVA.

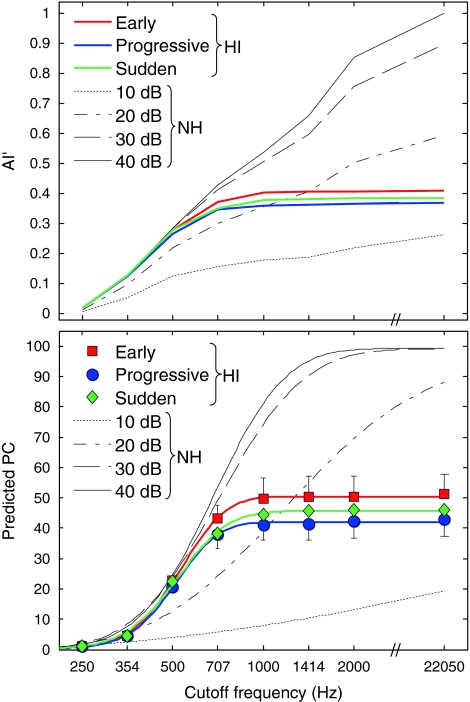

Lastly, it is interesting to consider whether speech audibility can account for differences between the three HI groups, as well as between HI and NH listeners. The upper panel in Figure 5 shows mean AI′ as a function of CF for the three HI groups and for the NH listeners. The lower panel shows the mean PC predicted based on AI′, as a function of CF, for the three HI groups and for the NH listeners. As can be seen, the mean AI′ and predicted PC functions of the three groups of HI listeners are generally close to each other. The 15- to 25-percentage-point difference between the “early” group and the two other HI groups that was observed for CFs of 1 kHz and higher in Figure 4 is not reflected in the predicted-PC functions (Fig. 5, lower panel). This suggests that the superior speech-recognition performance of the “early” group at high CFs cannot be explained simply in terms of audibility; rather, it appears that, for these CFs, the listeners of the “early” group were better able, on average, to extract useful information from the speech signals than the listeners in the other two HI groups.

FIG. 5.

AI′ and predicted PC as a function of CF for HI and NH subjects. Upper panel, AI′. Lower panel, PC predicted based on AI′. For HI subjects, AI′ (for the upper panel) or predicted PC values (for the lower panel) were averaged across ears within each hearing-loss onset group. As in Figures 2 and 4, the different hearing-loss onset groups are indicated by different colors. For NH subjects, AI′ (for the upper panel) or predicted PC values (for the lower panel) were averaged across subjects separately for each speech-presentation level. The data of the NH subjects are indicated by black lines, with different line styles indicating different speech-presentation levels. The AI′ and predicted-PC functions for speech-presentation levels higher than 40 dB SPL were essentially identical to those corresponding to 40 dB SPL.

Correlations between speech-recognition performance and other variables

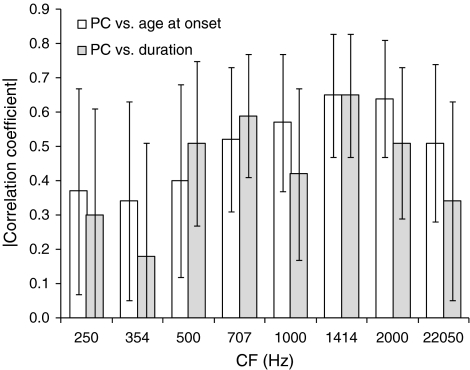

Statistically significant correlations between PC and hearing-loss onset age, and between PC and hearing loss duration, were observed for all CFs except the lowest two, i.e., 250 and 354 Hz, where PC was generally low and close to chance. The correlation coefficients are listed in Table 2 with the corresponding P values, and their magnitudes are illustrated in Figure 6, with the corresponding 95% confidence intervals. Statistically significant correlations are indicated by lower error bars terminating above zero.

TABLE 2.

Correlation coefficients between PC and hearing-loss onset age, hearing-loss duration, or age, and corresponding P values

| CF (Hz) | Onset age: r | Onset age: P | Duration: r | Duration: P | Age: r | Age: P |
|---|---|---|---|---|---|---|
| 250 | −0.37 | 0.035 | 0.30 | 0.069 | −0.17 | 0.210 |
| 354 | −0.34 | 0.038 | 0.18 | 0.177 | −0.25 | 0.097 |
| 500 | −0.40 | 0.021 | 0.51 | 0.002 | −0.00 | 0.494 |
| 707 | −0.52 | 0.001 | 0.59 | 0.000 | −0.08 | 0.332 |
| 1,000 | −0.57 | 0.000 | 0.42 | 0.006 | −0.30 | 0.044 |
| 1,414 | −0.65 | 0.000 | 0.65 | 0.000 | −0.18 | 0.190 |
| 2,000 | −0.64 | 0.000 | 0.51 | 0.001 | −0.30 | 0.051 |
| 22,050 | −0.51 | 0.002 | 0.34 | 0.034 | −0.30 | 0.058 |

Note that the same correlation coefficients can be associated with different P values because, as mentioned in the methods section, P values were computed using a statistical resampling technique (bootstrap), which took into account the correlation between left- and right-ear data, separately for each variable.
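One way a bootstrap can respect the correlation between the two ears of a listener is to resample whole subjects, so that both ears of a drawn subject always enter the sample together. The sketch below illustrates that idea with a percentile confidence interval for the correlation coefficient. It is an assumption about the general approach, not a reproduction of the procedure described in the methods section; the function names, the number of resamples, and the toy data are all hypothetical.

```python
import random


def pearson_r(x, y):
    """Pearson correlation; returns None if either variable has zero variance."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return sxy / (sxx * syy) ** 0.5


def subject_bootstrap_ci(subjects, n_boot=2000, seed=0):
    """Percentile 95% CI for r, resampling whole subjects (both ears together).

    `subjects` holds one entry per subject; each entry is a list of
    (predictor, pc) pairs, one pair per ear.
    """
    rng = random.Random(seed)
    boot_rs = []
    for _ in range(n_boot):
        sample = [rng.choice(subjects) for _ in subjects]
        x = [pred for ears in sample for pred, _ in ears]
        y = [pc for ears in sample for _, pc in ears]
        r = pearson_r(x, y)
        if r is not None:  # skip degenerate resamples
            boot_rs.append(r)
    boot_rs.sort()
    low = boot_rs[int(0.025 * len(boot_rs))]
    high = boot_rs[int(0.975 * len(boot_rs)) - 1]
    return low, high


# Made-up data: 20 subjects, two ears each, PC decreasing with onset age.
toy_subjects = [[(k, 90 - 2 * k + 1), (k, 90 - 2 * k - 1)] for k in range(20)]
ci_low, ci_high = subject_bootstrap_ci(toy_subjects)
```

Resampling ears independently would treat the 40 ears as 40 independent observations and would tend to give intervals that are too narrow when the two ears of a listener behave alike.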

FIG. 6.

Magnitudes of the correlation coefficients for PC and age at hearing-loss onset and PC and hearing-loss duration. The former are shown as empty bars; the latter are shown as filled bars. The error bars show 95% (bootstrap) confidence intervals.

The last two columns in Table 2 list correlation coefficients for PC and age, with the corresponding P values. As expected from previous studies showing decreases in speech-recognition performance with aging (for a review, see e.g., Gordon-Salant 2005), these correlation coefficients were generally negative. In fact, all eight correlation coefficients between PC and age were negative, an outcome which, according to a sign test, had a probability of P = 0.0039 of occurring by chance. However, it is important to note that these correlation coefficients for PC and age were generally smaller in magnitude than the correlation coefficients for PC and hearing-loss onset age or PC and hearing-loss duration, and unlike the latter, they only reached statistical significance (P < 0.05) for one of the CF conditions (CF = 1,000 Hz). The lack of significant correlation between PC and age for most of the CF conditions tested in the current study can be explained by considering that the variable, age, was equal to the sum of hearing-loss onset age and hearing-loss duration, and that the negative correlations between PC and hearing-loss onset age that were observed in several CF conditions approximately canceled out the positive correlations between PC and hearing-loss duration that were observed in the same conditions.
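The sign-test probability quoted above can be checked with a short calculation: if each coefficient were equally likely to be positive or negative, the chance that all eight are negative is 0.5 raised to the eighth power. The sketch below computes this as a one-tailed binomial probability; the function name is hypothetical.

```python
from math import comb


def sign_test_p(n_negative, n_total):
    """One-tailed probability of observing at least `n_negative` negative
    signs out of `n_total` when each sign is negative with probability 0.5."""
    favorable = sum(comb(n_total, k) for k in range(n_negative, n_total + 1))
    return favorable / 2 ** n_total


p_all_negative = sign_test_p(8, 8)  # 1 / 256
```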

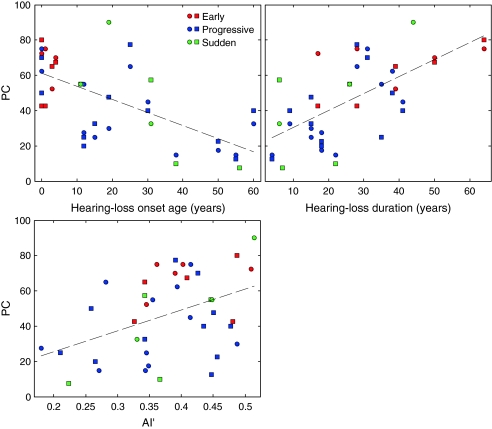

The two upper panels of Figure 7 show scatter plots of PC versus hearing-loss onset age (upper left panel) and PC versus hearing-loss duration (upper right panel) for the 1,414-Hz CF condition. This condition was chosen for illustration purposes, as it yielded the largest correlation coefficients (in absolute value). The dashed lines are regression lines. For both hearing-loss onset age and hearing-loss duration, the linear regression explained approximately 42% of the variance in PC across ears. For comparison, the bottom panel in Figure 7 illustrates the relationship between PC and AI′, for the same CF (1,414 Hz). The corresponding R² was equal to 19%. This indicates that, for the HI subjects tested in this study, hearing-loss onset age and hearing-loss duration were more informative than AI′ for predicting speech-recognition performance. While these observations are based on the results of the 1,414-Hz CF condition, qualitatively similar conclusions were reached based on the results obtained for other CFs, except the lowest two, where the PC values were usually close to chance and not sufficiently variable to yield meaningful statistical results.
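As a quick check on these figures, and assuming that each R² comes from a simple (one-predictor) linear regression so that R² equals the squared Pearson correlation, the coefficients listed for the 1,414-Hz condition in Table 2 give the values below. The correlation magnitude for AI′ is derived here from the reported R² and is not a value stated in the text; R² alone does not determine its sign.

```latex
R^2 = r^2 = (\pm 0.65)^2 \approx 0.42,
\qquad
|r_{\mathrm{AI}'}| = \sqrt{0.19} \approx 0.44
```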

FIG. 7.

Scatter plots of PC in the 1,414-Hz CF condition versus hearing-loss onset age, hearing loss duration, and AI′ across all HI ears. Each data point corresponds to a single ear, in a single subject. As indicated in the key, circles indicate the right ear, squares indicate the left ear, and the different colors indicate different hearing-loss onset groups, using the same color-group scheme as in previous figures. The dashed lines show regression lines through the data points.

Since neither hearing-loss onset age nor hearing-loss duration was significantly correlated with pure-tone thresholds, speech levels, or AI′ for any of the CF conditions tested, the above-noted correlations between PC and hearing-loss onset age or hearing-loss duration cannot be explained (statistically) by correlations between PC and AI′, pure-tone thresholds, or speech levels. This was verified by computing correlations between PC and hearing-loss onset age, and between PC and hearing-loss duration, with and without the influence of AI′, pure-tone thresholds, or speech levels partialed out. With one exception (for the CF = 22,050 Hz condition, for which the correlation between hearing-loss duration and PC became nonsignificant when AI′ was partialed out), correlations that were statistically significant when AI′, pure-tone thresholds, and speech levels were not partialed out remained significant when any of these variables was partialed out.

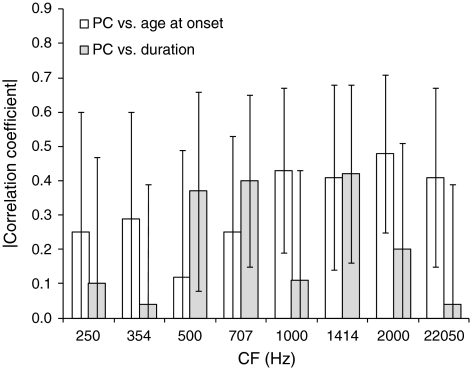

The interpretation of the simple correlations between PC and either hearing-loss onset age or hearing-loss duration, which are illustrated in Figure 6, is complicated by the existence of correlations between the latter two variables. Such correlations are expected, since hearing-loss duration was obtained by subtracting hearing-loss onset age from age. Significant correlations between PC and hearing-loss onset age could be by-products of correlations between PC and hearing-loss duration, or the other way around. To determine whether the correlations with PC were driven by hearing-loss onset age, hearing-loss duration, or both, we computed partial-correlation coefficients between PC and each of the two variables, with the influence of the other variable partialed out. Figure 8 shows the magnitude of the resulting partial-correlation coefficients for PC and hearing-loss onset age, with the effect of hearing-loss duration partialed out (empty bars), and for PC and hearing-loss duration, with the effect of hearing-loss onset age partialed out (filled bars). The partial-correlation coefficients are listed in Table 3. Significant negative partial correlations between PC and hearing-loss onset age were found for CFs higher than 707 Hz; for lower CFs, the partial correlations between these two variables were not significantly different from zero. Significant positive partial correlations between PC and hearing-loss duration were observed for CFs of 500, 707, and 1,414 Hz; for the other CFs, the correlations were not statistically significant. Although the above statistical-significance statements are based on a two-tailed critical probability level of 0.05 per comparison, we determined (using bootstrap and taking into account the correlations in PC values across CFs) that the probability of finding three or more significant correlation coefficients out of eight by chance was slightly lower than 0.05. Since at least three significant correlations were observed for each variable (i.e., age at hearing-loss onset and hearing-loss duration), the null hypothesis can be rejected.
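For readers unfamiliar with partial correlation, the standard first-order formula can be sketched as follows. It takes the three pairwise correlations among two variables of interest (x and y) and a third variable (z), and returns the correlation between x and y with z partialed out. The function name is hypothetical, and the value used below for the correlation between onset age and duration is invented for illustration, so the result is not expected to match Table 3.

```python
def partial_r(r_xy, r_xz, r_yz):
    """First-order partial correlation between x and y with z partialed out."""
    return (r_xy - r_xz * r_yz) / ((1 - r_xz ** 2) * (1 - r_yz ** 2)) ** 0.5


# x = onset age, y = PC, z = duration.
# r_xy and r_yz echo the 1,414-Hz simple correlations in Table 2;
# r_xz = -0.5 is a made-up value, not one reported in the study.
example = partial_r(r_xy=-0.65, r_xz=-0.5, r_yz=0.65)
```

When z is uncorrelated with both x and y, the formula returns the simple correlation unchanged, which is why partialing out a variable only matters when it shares variance with the other two.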

FIG. 8.

Magnitudes of the partial correlation coefficients for PC and age at hearing-loss onset, and PC and hearing-loss duration, with the effect of the remaining variable partialed out. The empty bars correspond to partial correlation coefficients between PC and age at hearing-loss onset with the effect of hearing-loss duration partialed out. The filled bars correspond to partial correlation coefficients between PC and hearing-loss duration with the effect of age at hearing-loss onset partialed out. The error bars show 95% (bootstrap) confidence intervals.

TABLE 3.

Partial correlation coefficients for PC and hearing-loss onset age with hearing-loss duration partialed out, and PC and hearing-loss duration with hearing-loss onset age partialed out, and corresponding P values

| CF (Hz) | Onset age: r (partial) | Onset age: P | Duration: r (partial) | Duration: P |
|---|---|---|---|---|
| 250 | −0.25 | 0.119 | 0.10 | 0.326 |
| 354 | −0.29 | 0.065 | −0.04 | 0.413 |
| 500 | −0.12 | 0.284 | 0.37 | 0.027 |
| 707 | −0.25 | 0.082 | 0.40 | 0.008 |
| 1,000 | −0.43 | 0.006 | 0.11 | 0.279 |
| 1,414 | −0.41 | 0.016 | 0.42 | 0.014 |
| 2,000 | −0.48 | 0.003 | 0.20 | 0.142 |
| 22,050 | −0.41 | 0.016 | 0.04 | 0.423 |

Note that the same correlation coefficients can be associated with different P values because, as mentioned in “Methods”, P values were computed using a statistical resampling technique (bootstrap), which took into account the correlation between left- and right-ear data, separately for each variable.

In the above-described analyses of correlations, the relationship between PC and other variables was considered for each CF separately. However, an interesting question, which these analyses do not address, is whether the dependence of PC on CF was related to hearing-loss onset age or duration; otherwise stated: was the rate at which PC increased with CF correlated with hearing-loss onset age or duration? To determine the answer to this question, while at the same time taking into account the influence of audibility, the following analysis was performed. Firstly, the slopes of individual “articulation functions” (i.e., PC as a function of AI′) were computed. For determining the slope, only CFs over which AI′ increased with CF were included. Secondly, correlation coefficients between these slopes and either hearing-loss onset age or hearing-loss duration were computed. The resulting correlation coefficients were equal to r = −0.38, with P = 0.008 for slope versus onset age, and r = 0.48, with P = 0.001 for slope versus hearing-loss duration. The correlation between articulation-function slope and hearing-loss onset age was no longer significant after partialing out the influence of hearing-loss duration (r = −0.12 and P = 0.246); however, the correlation between articulation-function slope and hearing-loss duration remained significant after hearing-loss onset age was partialed out (r = 0.34 and P = 0.017).
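The slope computation in the first step can be sketched as below. How the CF conditions were selected is only summarized in the text, so the rule used here (keep the lowest CF, then every CF at which AI′ exceeds its value at the preceding CF) is an assumption, as are the function name and the toy data.

```python
def articulation_slope(ai_values, pc_values):
    """Least-squares slope of PC versus AI' for one ear, using only the CF
    conditions at which AI' increased relative to the preceding CF
    (assumed selection rule). Inputs are ordered by increasing CF."""
    keep = [0] + [
        i for i in range(1, len(ai_values)) if ai_values[i] > ai_values[i - 1]
    ]
    x = [ai_values[i] for i in keep]
    y = [pc_values[i] for i in keep]
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return sxy / sxx


# Made-up ear: AI' stops increasing at the last CF, so that point is dropped.
slope_example = articulation_slope([0.1, 0.2, 0.3, 0.3], [10, 30, 50, 80])
```

The per-ear slopes obtained this way would then be correlated with hearing-loss onset age or hearing-loss duration, as described in the second step.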

Finally, we examined correlations between PC and the duration of hearing-aid use. Because the duration of hearing-aid use was significantly correlated with hearing loss duration (r = 0.46 and P = 0.017), and the latter variable was found earlier to be significantly correlated with PC, we expected significant correlations also between hearing-aid use duration and PC. In fact, significant correlations between these two variables were only observed for two of the eight CF conditions tested (CF = 1,414 Hz: r = 0.33 and P = 0.042 and CF = 22,050 Hz: r = 0.34 and P = 0.035). When hearing-loss duration was partialed out, no significant partial correlation was found between the duration of hearing-aid use and PC.

Discussion

The main findings of this study can be summarized as follows. Firstly, HI subjects with early-onset hearing loss (defined as hearing loss incurred before the age of four, with no major change thereafter) had higher mean speech-recognition scores than subjects with later, progressive or sudden hearing loss; the difference was statistically significant for CFs of 1 kHz and higher. Secondly, across all HI subjects, and in several of the CF conditions tested, speech-recognition scores were found to be negatively correlated with hearing-loss onset age, and positively correlated with hearing-loss duration. In the following, we consider possible explanations for these findings.

Higher speech intelligibility in subjects with early-onset hearing loss and correlation between speech intelligibility and hearing-loss onset age

Since the three HI groups had similar hearing thresholds, speech-presentation levels, and speech audibility (as measured by AI′) on average, and similar age characteristics, the finding of higher speech-recognition scores in the “early” group than in the “progressive” and “sudden” groups cannot be explained simply by differences in audibility or age. One variable that differed significantly between the three groups was hearing-loss onset age, which was lower (by definition) for the “early” group than for the two other groups. The groups also differed with respect to hearing-loss duration, which was longer for the “early” group, a by-product of the negative relationship between hearing-loss duration and hearing-loss onset age. When the effect of hearing-loss duration was partialed out by entering it as a covariate in the ANOVA, the three HI groups were no longer found to differ significantly with respect to speech-recognition scores. One interpretation of this outcome is that the difference in speech-recognition scores between the “early” group and the other two groups was due entirely to a difference in hearing-loss duration between the groups. However, since hearing-loss duration co-varied with hearing-loss onset age, including one of these two variables as a covariate necessarily reduced the proportion of variance in speech-recognition scores that was left for the other variable to explain. Therefore, the absence of a significant group effect in the ANCOVA with hearing-loss duration as a covariate should not necessarily be interpreted as evidence that the effect was driven entirely by differences in hearing-loss duration. Further study is needed to determine whether speech-recognition performance is significantly higher on average in subjects with “early” hearing-loss onset than in subjects with later onset even after hearing-loss duration is equated. What the current results do demonstrate, on the other hand, is a relationship between speech intelligibility and hearing-loss onset age even after the influence of hearing-loss duration is partialed out. This evidence comes from the finding of significant partial correlations between PC and hearing-loss onset age, in which correlations between each of these variables and hearing-loss duration were partialed out. Such significant partial correlations were observed for the four highest CFs (1, 1.41, 2, and 22.05 kHz).

In the current state of knowledge, we can only speculate as to the origin of these correlations between speech intelligibility and hearing-loss onset age, and as to why they were only observed for CFs of 1 kHz or higher. A possible explanation is in terms of neural plasticity, or perceptual learning. According to this explanation, the earlier the hearing loss, the more likely it is that subjects will learn—or that their central auditory systems will develop the neural circuits that are needed—to successfully decode the impoverished and distorted speech signals that they receive through their damaged peripheral auditory system. In this context, the finding of significant partial correlations between hearing-loss onset age and speech intelligibility for CFs of 1 kHz or higher can be explained by considering that at these frequencies, all of the ears tested had severe (≥70 dB HL) or profound (≥90 dB HL) hearing loss. As a result, speech cues in this frequency region were probably minimal, and strongly distorted. Being able to take advantage of such minimal cues to supplement speech-recognition performance—beyond the level that could be achieved using lower-frequency, less-distorted information only—may require special perceptual abilities; it is possible that individuals who were relatively young and had a highly plastic auditory system at the time at which they incurred a hearing loss are more able (or more likely) to develop such abilities than individuals in whom hearing loss was incurred later in life.

While plasticity provides one possible explanation for the findings of higher speech-recognition performance in subjects with early-onset hearing loss and of negative correlations between speech-recognition performance and hearing-loss onset age, alternative explanations cannot be ruled out at this stage. For instance, early and late hearing losses may involve different patterns of inner and outer hair-cell damage or different degrees of auditory-nerve degeneration. This could lead to different impacts on the neural representations of speech in early and late loss, on average, without necessarily being reflected in different pure-tone thresholds. Alternatively, or in addition, because hearing generally deteriorates with age, and the subjects of each hearing-loss onset group were selected to have approximately the same amount of hearing loss, on average, as the subjects of the other two groups, it is possible that the selection criteria used in this study created a bias, whereby subjects of the early-onset group tended to have lighter insults initially than subjects in the other two groups. Unfortunately, we did not have enough information concerning hearing-loss etiology and cochlear function in these subjects to address in detail the question of relationships between etiology and speech-understanding ability. This important question warrants further investigation.

Correlations between speech intelligibility and hearing-loss duration

The finding of significant correlations between hearing-loss duration and speech intelligibility was not completely unexpected. Previous studies in cochlear-implant listeners have shown that the duration of hearing loss prior to cochlear implantation was a strong predictor of postimplantation performance in speech-perception tasks (Gantz et al. 1993; Blamey et al. 1996; Rubinstein et al. 1999). However, in these earlier studies, the correlation between hearing-loss duration and speech-recognition performance was negative: longer durations of hearing loss were associated with worse performance. In contrast, the results of the current study show a positive relationship between hearing-loss duration and performance. How can these two apparently discrepant sets of results be reconciled? One possible explanation is that, while in the cochlear-implant studies duration of deafness represents a time when the auditory system was probably nearly totally deprived of input, in the current study, duration of hearing loss is time spent with some auditory input. Another, more speculative, explanation relates to the fact that cochlear implants, being inserted basally into the cochlea, stimulate primarily auditory-nerve fibers that normally respond to relatively high frequencies. Therefore, it is likely that cochlear-implant listeners rely primarily or exclusively on information conveyed by “high-frequency” fibers in order to understand speech. On the other hand, the listeners in the current study had severe hearing loss above 1 kHz. Therefore, they had to rely primarily or exclusively on relatively low frequencies for speech understanding. It is conceivable that those listeners who had had a hearing loss for many years progressively became more accustomed to relying solely or primarily on low-frequency information (conveyed by auditory neurons with low characteristic frequencies) than the listeners in whom the loss of high-frequency hearing was more recent. Similar ideas have been proposed by Rhodes (1966), and more recently, Vestergaard (2003) and Moore and Vinay (2009). The latter two publications reported that listeners with cochlear dead regions at high frequencies were able to make better use of low-frequency information for speech understanding than listeners who did not have such dead regions. The listeners in the present study were not tested for dead regions. It would be interesting, in a future study, to test whether longer-duration hearing losses are more likely to be associated with dead regions than shorter-duration hearing losses, and whether this is the case even when the listeners in the two groups have similar audiograms.

An enhanced ability to use low-frequency auditory channels (stimulated acoustically) at the expense of high-frequency channels (stimulated electrically by cochlear implants) could reflect tonotopic-map reorganization in the central auditory system following cochlear damage. Specifically, neurophysiological studies have shown that in animals with high-frequency hearing loss induced by mechanical or chemical damage to the basal part of the cochlea, primary auditory cortex neurons that formerly responded to high frequencies—within the frequency region where a hearing loss is now present—shift their preferred frequency downward—usually, within a frequency region that corresponds to the “edge” of the cochlear lesion (Rajan et al. 1993; for reviews, see: Irvine and Rajan 1996; Irvine et al. 2001). We can only speculate as to whether or not such central reorganization occurred in our human listeners and, if it did, whether it had significant functional consequences on speech understanding. To the best of our knowledge, the only perceptual effects that have been identified as possible consequences of tonotopic-map reorganization following cochlear damage so far are relatively small improvements in frequency-discrimination thresholds localized near the hearing-loss edge (McDermott et al. 1998; for a review, see Thai-Van et al. 2007). However, it is conceivable that central neural plasticity, which may develop over the course of several years following cochlear damage, has significant consequences on the processing of complex stimuli such as speech, which have not yet been identified. In fact, part of the large variability in speech-perception performance that has been observed across hearing-impaired individuals in this study and previous ones might stem from differences in the extent and functional success of central neural reorganization following peripheral damage.

Finally, it is worth noting that some studies have reported improvements in speech-recognition scores related to hearing-aid use, even when these scores were measured without the hearing aid, an effect known as “acclimatization” (for reviews, see Palmer et al. 1998; Turner et al. 1996; see also the note by Turner and Bentler 1998). Because 17 of the 20 subjects who took part in this study wore hearing aids on a regular basis (three monaurally and the other 14 binaurally), and the duration of hearing-aid use tended to increase with the duration of hearing loss, it is conceivable that the positive correlations between hearing-loss duration and speech-recognition performance that were observed in the current study were actually driven by long-term acclimatization effects. Since acclimatization effects have rarely been documented over time periods exceeding a few months to a year, we do not know whether such effects can account for slow improvements in speech intelligibility over the course of several decades. To gain some clarity on this issue, one could conduct a study similar to the current one in a group of patients with varied (long as well as short) hearing-loss durations who have never worn hearing aids.

Although the present results do not allow us to determine whether, and why, speech-recognition performance in HI subjects depends (in a causal way) on hearing-loss onset age and hearing-loss duration, these results demonstrate that these two variables can account for part of the large interindividual variability in speech-recognition performance among HI individuals with severe-to-profound high-frequency hearing loss.

Acknowledgments

This work was supported by Vibrant Med-El France (Doctoral Research Grant CIFRE 266/2007 to F.S.) and the French National Center for Scientific Research (CNRS). Associate Editor Robert Carlyon and three anonymous reviewers greatly helped to improve the manuscript by suggesting important supplementary analyses, including the AI calculations. Special thanks are due to Liz Anderson and Christopher Turner, who provided detailed feedback on an earlier version of the manuscript. Michel Beliaeff, Christian Berger-Vachon, Sami Labassi, Peggy Nelson, Bénédicte Philibert, and Bert Schlauch are acknowledged for their support and helpful suggestions.

Appendix

To fit the psychometric functions relating PC to CF in the groups of NH and HI subjects, we used the following mathematical function, which is based on the equal-variance Gaussian signal-detection-theory model for the 1-of-m identification task (Green and Dai 1991; Macmillan and Creelman 2005).

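$$P_{\mathrm{mafc}}(\mathrm{CF}) = (1-\lambda)\int_{-\infty}^{+\infty} \phi\bigl(z - d'(\mathrm{CF})\bigr)\,\Phi(z)^{m-1}\,dz \qquad (6)$$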

In this equation, Pmafc(CF) is the predicted PC for a given CF; λ is the asymptotic error rate; ϕ denotes the standard normal probability density function; Φ denotes the standard normal cumulative distribution function; m is the number of response alternatives (which was set to 288 here, reflecting all possible pairwise combinations of 16 vowels and 18 consonants); and d′ is the index of sensitivity. A power-law relationship between d′ and CF was assumed: d′(CF) = CF^α/σ, where α is the exponent of the power law and σ is the standard deviation of the internal noise. The function described in Eq. 6 was fitted to the PC data using a least-squares approach (implemented with Matlab’s fminsearch function, which uses the Nelder–Mead algorithm for function minimization).
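As an illustration only, the fitting procedure described above can be sketched in Python, with SciPy's Nelder–Mead minimizer standing in for Matlab's fminsearch. The sketch assumes that λ enters as a multiplicative factor (1 − λ) on the 1-of-m integral, and it expresses scores as proportions rather than percentages. The cutoff frequencies, generating parameters, starting values, and integration grid are hypothetical choices made for the example; they are not taken from the study.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.stats import norm

M = 288  # response alternatives: 16 vowels x 18 consonants (from the text)

# Fixed grid for numerical integration over the standardized variable u
# (hypothetical choice: +/- 8 standard deviations, 2001 points).
U = np.linspace(-8.0, 8.0, 2001)
DU = U[1] - U[0]
PHI_U = norm.pdf(U)


def p_mafc(cf, alpha, sigma, lam, m=M):
    """Predicted proportion correct at cutoff frequency cf (Hz).

    Assumed form: (1 - lam) * integral of phi(z - d') * Phi(z)**(m - 1) dz,
    with d' = cf**alpha / sigma. Substituting u = z - d' centres the
    integrand on the fixed grid U.
    """
    cf = np.atleast_1d(np.asarray(cf, dtype=float))
    d_prime = cf ** alpha / sigma
    integrand = PHI_U * norm.cdf(U + d_prime[:, None]) ** (m - 1)
    return (1.0 - lam) * integrand.sum(axis=1) * DU


def sum_squared_error(params, cf, pc):
    """Least-squares cost; parameters outside their valid range are penalized."""
    alpha, sigma, lam = params
    if alpha <= 0.0 or sigma <= 0.0 or not 0.0 <= lam < 1.0:
        return 1e6
    return float(np.sum((p_mafc(cf, alpha, sigma, lam) - pc) ** 2))


# Hypothetical data: noise-free proportions generated from the model itself.
cf_hz = np.array([250.0, 500.0, 750.0, 1000.0, 1500.0, 2000.0])
pc_obs = p_mafc(cf_hz, alpha=0.5, sigma=10.0, lam=0.05)

result = minimize(
    sum_squared_error,
    x0=[0.4, 8.0, 0.1],  # arbitrary starting values
    args=(cf_hz, pc_obs),
    method="Nelder-Mead",
    options={"xatol": 1e-6, "fatol": 1e-12, "maxiter": 4000},
)
alpha_hat, sigma_hat, lam_hat = result.x
print(f"alpha={alpha_hat:.3f}, sigma={sigma_hat:.3f}, lambda={lam_hat:.3f}")
print(f"sum of squared errors = {result.fun:.2e}")
```

Because the synthetic data here are noise-free, the fitted curve should reproduce them closely. With measured scores, α and σ can partly trade off against each other, so trying several starting points would be a reasonable precaution.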

Contributor Information

Fabien Seldran, Phone: +33-4-72110503, FAX: +33-4-72110504, Email: fseldran@yahoo.fr.

Stéphane Gallego, Email: sgallego@hotmail.fr.

Christophe Micheyl, Email: cmicheyl@umn.edu.

Evelyne Veuillet, Email: evelyne.veuillet@chu-lyon.fr.

Eric Truy, Email: eric.truy@chu-lyon.fr.

Hung Thai-Van, Email: hthaivan@gmail.com.

References

- American National Standard methods for the calculation of the articulation index, ANSI S3.5-1969. New York: ANSI; 1969.

- American National Standard methods for the calculation of the speech intelligibility index, ANSI S3.5-1997. New York: ANSI; 1997.

- Baer T, Moore BC, Kluk K. Effects of low pass filtering on the intelligibility of speech in noise for people with and without dead regions at high frequencies. J Acoust Soc Am. 2002;112:1133–1144. doi: 10.1121/1.1498853.

- Blamey P, Arndt P, Bergeron F, Bredberg G, Brimacombe J, Facer G, Larky J, Lindstrom B, Nedzelski J, Peterson A, Shipp D, Staller S, Whitford L. Factors affecting auditory performance of postlinguistically deaf adults using cochlear implants. Audiol Neurootol. 1996;1:293–306. doi: 10.1159/000259212.

- Busby PA, Tong YC, Clark GM. Psychophysical studies using a multiple-electrode cochlear implant in patients who were deafened early in life. Audiology. 1992;31:95–111. doi: 10.3109/00206099209072905.

- Busby PA, Tong YC, Clark GM. Electrode position, repetition rate, and speech perception by early- and late-deafened cochlear implant patients. J Acoust Soc Am. 1993;93:1058–1067. doi: 10.1121/1.405554.

- Ching TY, Dillon H, Byrne D. Speech recognition of hearing-impaired listeners: predictions from audibility and the limited role of high-frequency amplification. J Acoust Soc Am. 1998;103:1128–1140. doi: 10.1121/1.421224.

- Dawson PW, Blamey PJ, Rowland LC, Dettman SJ, Clark GM, Busby PA, Brown AM, Dowell RC, Rickards FW. Cochlear implants in children, adolescents, and prelinguistically deafened adults: speech perception. J Speech Hear Res. 1992;35:401–417. doi: 10.1044/jshr.3502.401.

- Dubno JR, Dirks DD, Ellison DE. Stop-consonant recognition for normal-hearing listeners and listeners with high-frequency hearing loss. I: the contribution of selected frequency regions. J Acoust Soc Am. 1989;85:347–354. doi: 10.1121/1.397686.

- Dubno JR, Dirks DD, Schaefer AB. Stop-consonant recognition for normal-hearing listeners and listeners with high-frequency hearing loss. II: articulation index predictions. J Acoust Soc Am. 1989;85:355–364. doi: 10.1121/1.397687.

- Efron B, Tibshirani R. An introduction to the bootstrap. New York: Chapman & Hall; 1994.

- Fisher RA. Frequency distribution of the values of the correlation coefficient in samples of an indefinitely large population. Biometrika. 1915;10:507–521.

- Fisher RA. The distribution of the partial correlation coefficient. Metron. 1924;3:329–332.

- Fournier JE. Audiométrie vocale. Paris: Maloine; 1951.

- French NR, Steinberg JC. Factors governing the intelligibility of speech sounds. J Acoust Soc Am. 1947;19:90–119. doi: 10.1121/1.1916407.

- Friedland DR, Venick HS, Niparko JK. Choice of ear for cochlear implantation: the effect of history and residual hearing on predicted postoperative performance. Otol Neurotol. 2003;24:582–589. doi: 10.1097/00129492-200307000-00009.

- Gantz BJ, Woodworth GG, Knutson JF, Abbas PJ, Tyler RS. Multivariate predictors of audiological success with multichannel cochlear implants. Ann Otol Rhinol Laryngol. 1993;102:909–916. doi: 10.1177/000348949310201201.

- Gantz BJ, Hansen MR, Turner CW, Oleson JJ, Reiss LA, Parkinson AJ. Hybrid 10 clinical trial: preliminary results. Audiol Neurootol. 2009;14(Suppl 1):32–38. doi: 10.1159/000206493.

- Gordon-Salant S. Hearing loss and aging: new research findings and clinical implications. J Rehabil Res Dev. 2005;42(Suppl 2):9–24. doi: 10.1682/JRRD.2005.01.0006.

- Green DM, Dai HP. Probability of being correct with 1 of M orthogonal signals. Percept Psychophys. 1991;49:100–101. doi: 10.3758/BF03211621.

- Green KMJ, Bhatt YM, Mawman DJ, O’Driscoll MP, Saeed SR, Ramsden RT, Green MW. Predictors of audiological outcome following cochlear implantation in adults. Cochlear Implants Int. 2007;8:1–11. doi: 10.1002/cii.326.

- Hinderink JB, Mens LH, Brokx JP, van den Broek P. Performance of prelingually and postlingually deaf patients using single-channel or multichannel cochlear implants. Laryngoscope. 1995;105:618–622. doi: 10.1288/00005537-199506000-00011.

- Hogan CA, Turner CW. High-frequency audibility: benefits for hearing-impaired listeners. J Acoust Soc Am. 1998;104:432–441. doi: 10.1121/1.423247.

- Irvine DR, Rajan R. Injury- and use-related plasticity in the primary sensory cortex of adult mammals: possible relationship to perceptual learning. Clin Exp Pharmacol Physiol. 1996;23:939–947. doi: 10.1111/j.1440-1681.1996.tb01146.x.

- Irvine DR, Rajan R, Brown M. Injury- and use-related plasticity in adult auditory cortex. Audiol Neurootol. 2001;6:192–195. doi: 10.1159/000046831.

- Kamm CA, Dirks DD, Bell TS. Speech recognition and the Articulation Index for normal and hearing-impaired listeners. J Acoust Soc Am. 1985;77:281–288. doi: 10.1121/1.392269.

- Kessler DK, Loeb GE, Barker MJ. Distribution of speech recognition results with the Clarion cochlear prosthesis. Ann Otol Rhinol Laryngol Suppl. 1995;166:283–285.

- Kujawa SG, Liberman MC. Adding insult to injury: cochlear nerve degeneration after “temporary” noise-induced hearing loss. J Neurosci. 2009;29:14077–14085. doi: 10.1523/JNEUROSCI.2845-09.2009.

- Leung J, Wang NY, Yeagle JD, Chinnici J, Bowditch S, Francis HW, Niparko JK. Predictive models for cochlear implantation in elderly candidates. Arch Otolaryngol Head Neck Surg. 2005;131:1049–1054. doi: 10.1001/archotol.131.12.1049.

- Macmillan NA, Creelman CD. Detection theory: a user’s guide. Mahwah: Erlbaum; 2005.

- McDermott HJ, Lech M, Kornblum MS, Irvine DRF. Loudness perception and frequency discrimination in subjects with steeply sloping hearing loss: possible correlates of neural plasticity. J Acoust Soc Am. 1998;104:2314–2325. doi: 10.1121/1.423744.

- Moore BCJ, Vinay SN. Enhanced discrimination of low-frequency sounds for subjects with high-frequency dead regions. Brain. 2009;132:524–536. doi: 10.1093/brain/awn308.

- Moore BCJ, Glasberg B, Schlueter A. Detection of dead regions in the cochlea: relevance for combined electric and acoustic stimulation. Adv Otorhinolaryngol. 2010;67:43–50. doi: 10.1159/000262595.

- Murray N, Byrne D. Performance of hearing-impaired and normal hearing listeners with various high-frequency cut-offs in hearing aids. Aust J Audiol. 1986;8:21–28.

- Niemeyer W. Studies on speech perception in dissociated hearing loss. In: Fant G, editor. International symposium on speech communication ability and profound deafness. The Alexander Graham Bell Association for the Deaf, Inc.; 1972. pp. 106–118.

- Okazawa H, Naito Y, Yonekura Y, Sadato N, Hirano S, Nishizawa S, Magata Y, Ishizu K, Tamaki N, Honjo I, Konishi J. Cochlear implant efficiency in pre- and postlingually deaf subjects. A study with H₂¹⁵O and PET. Brain. 1996;119:1297–1306. doi: 10.1093/brain/119.4.1297.

- Palmer CV, Nelson CT, Lindley GA. The functionally and physiologically plastic adult auditory system. J Acoust Soc Am. 1998;103:1705–1721. doi: 10.1121/1.421050.

- Pavlovic CV. Use of the articulation index for assessing residual auditory function in listeners with sensorineural hearing impairment. J Acoust Soc Am. 1984;75:1253–1258. doi: 10.1121/1.390731.

- Rajan R, Irvine DR, Wise LZ, Heil P. Effect of unilateral partial cochlear lesions in adult cats on the representation of lesioned and unlesioned cochleas in primary auditory cortex. J Comp Neurol. 1993;338:17–49. doi: 10.1002/cne.903380104.

- Rankovic CM. An application of the articulation index to hearing aid fitting. J Speech Hear Res. 1991;34:391–402. doi: 10.1044/jshr.3402.391.

- Rankovic CM. Factors governing speech reception benefits of adaptive linear filtering for listeners with sensorineural hearing loss. J Acoust Soc Am. 1998;103:1043–1057. doi: 10.1121/1.423106.

- Rankovic CM. Articulation index predictions for hearing-impaired listeners with and without cochlear dead regions. J Acoust Soc Am. 2002;111:2545–2548. doi: 10.1121/1.1476922.

- Rhodes RC. Discrimination of filtered CNC lists by normals and hypacusics. J Aud Res. 1966;6:129–133.

- Rubinstein JT, Parkinson WS, Tyler RS, Gantz BJ. Residual speech recognition and cochlear implant performance: effects of implantation criteria. Am J Otol. 1999;20:445–452.

- Shipp DB, Nedzelski J. Prognostic indicators of speech recognition performance in adult cochlear implant users: a prospective analysis. Ann Otol Rhinol Laryngol Suppl. 1995;166:194–196.

- Thai-Van H, Micheyl C, Norena A, Veuillet E, Gabriel D, Collet L. Enhanced frequency discrimination in hearing-impaired individuals: a review of perceptual correlates of central neural plasticity induced by cochlear damage. Hear Res. 2007;233:14–22. doi: 10.1016/j.heares.2007.06.003.

- Tong YC, Busby PA, Clark GM. Perceptual studies on cochlear implant patients with early onset of profound hearing impairment prior to normal development of auditory, speech, and language skills. J Acoust Soc Am. 1988;84:951–962. doi: 10.1121/1.396664.

- Turner CW, Bentler RA. Does hearing aid benefit increase over time? J Acoust Soc Am. 1998;104:3673–3674. doi: 10.1121/1.423949.

- Turner CW, Humes LE, Bentler RA, Cox RM. A review of the literature on hearing aid benefit as a function of time. Ear Hear. 1996;17:14S–28S. doi: 10.1097/00003446-199617031-00003.

- van Dijk JE, van Olphen AF, Langereis MC, Mens LHM, Brokx JPL, Smoorenburg GF. Predictors of cochlear implant performance. Audiology. 1999;38:109–116. doi: 10.3109/00206099909073010.

- Vestergaard MD. Dead regions in the cochlea: implications for speech recognition and applicability of articulation index theory. Int J Audiol. 2003;42:249–261. doi: 10.3109/14992020309078344.

- Vickers DA, Moore BC, Baer T. Effects of low-pass filtering on the intelligibility of speech in quiet for people with and without dead regions at high frequencies. J Acoust Soc Am. 2001;110:1164–1175. doi: 10.1121/1.1381534.